FDA effort focuses on approving more generic drugs

Pharmaceutical companies may be in for a more difficult time hiding behind a Risk Evaluation and Mitigation Strategy (REMS) as a way to prevent generic competition from entering the market.

The Food and Drug Administration issued two draft guidance documents on May 31 aimed at spurring on generic competition, items that are part of a broader White House strategy targeting the high price of prescription drugs.

“We have seen REMS requirements exploited in two ways,” FDA Commissioner Scott Gottlieb, MD, said in a statement. “One occurs at the front end of the drug development process, when generic drugs are being developed. The other occurs at the back end of the process, after necessary testing has been completed, when a generic drug seeks approval and market entry.”

On the front end, manufacturers use REMS to restrict the sale of drugs to keep them out of the hands of generic firms, which typically need about 5,000 doses to conduct bioequivalence and bioavailability studies, Dr. Gottlieb noted. The back-end obstacle, which arises after a generic manufacturer files an application for generic approval, is what the two guidance documents address.

The first draft guidance document, “Development of a Shared System REMS,” outlines principles and recommendations for brand and generic manufacturers to develop a single REMS program that covers both products, which Dr. Gottlieb said will “enable timelier market entry for products that are part of these REMS.”

The second draft guidance document, “Waivers of the Single Shared System REMS Requirement,” describes the two circumstances under which the generic manufacturer can waive the single, shared REMS requirements:

- If the burden of forming a single, shared system outweighs the benefits.

- If an aspect of the REMS is covered by a patent or is a trade secret and the generic company was unable to obtain a license for use.

“We believe that by making the process for developing a shared system REMS more efficient, we’ll discourage brand drug makers from using REMS as a way to block generic entry and help end some of the tactics that can delay access,” Dr. Gottlieb said. “Our safety programs shouldn’t be leveraged as a way to forestall generic entry after lawful IP has lapsed on a brand drug.”

“Today’s FDA guidance is a step in the right direction toward our common goal of lowering out-of-control drug prices,” the Campaign for Sustainable Rx Pricing said in a statement. “When generic and biosimilar competition is thwarted by these abusive tactics, brand-name manufacturers, who alone control the price of their drugs, keep those prices artificially high. The problem is the price, and more competition is a proven solution.”

Comments on each of the draft guidance documents are due July 31 at www.regulations.gov.

Chemo-free regimen appears viable in previously untreated FL

CHICAGO – Lenalidomide plus rituximab (R2) had comparable efficacy versus standard chemoimmunotherapy in patients with previously untreated follicular lymphoma, according to results from a phase 3 trial.

RELEVANCE is the first randomized, phase 3 trial to examine a chemotherapy-free regimen in this setting.

Response and progression-free survival (PFS) results were similar for patients who received R2 followed by rituximab maintenance and patients assigned to chemotherapy plus rituximab and rituximab maintenance, in study results presented at the annual meeting of the American Society of Clinical Oncology.

“These results show that lenalidomide plus rituximab, which is a novel immunomodulatory approach, is a potential first-line option for patients with follicular lymphoma that require treatment,” said investigator Nathan H. Fowler, MD, of the University of Texas MD Anderson Cancer Center, Houston.

But the study was designed as a superiority trial, rather than a noninferiority trial, and it failed to meet its primary endpoint of superior complete remission (CR) or CR unconfirmed (CRu) at 120 weeks, said Bruce D. Cheson, MD, head of hematology at Georgetown University, Washington.

R2 had a similar PFS overall and in all major patient subgroups, similar overall survival, less nonhematologic toxicity aside from rash, less neutropenia, and fewer infections despite increased use of growth factors in the chemoimmunotherapy arm, Dr. Cheson said in a presentation commenting on the results. “Therefore, I agree with Dr. Fowler’s conclusion that R2 can be considered as an option for the front-line therapy of patients with follicular lymphoma,” Dr. Cheson said.

The RELEVANCE study included 1,030 patients (median age, 59 years) with previously untreated, advanced follicular lymphoma requiring treatment. They were randomized 1:1 to either lenalidomide plus rituximab followed by rituximab maintenance, or R-chemotherapy followed by rituximab maintenance.

For patients randomly assigned to R-chemotherapy, physicians could choose among three standard regimens: rituximab plus bendamustine (R-B), rituximab plus cyclophosphamide, doxorubicin, vincristine, and prednisone (R-CHOP), or rituximab plus cyclophosphamide, vincristine, and prednisone (R-CVP).

There was no statistically significant difference between treatment approaches in CR/CRu at 120 weeks, which was 48% in the R2 arm and 53% in the R-chemotherapy arm (P = 0.13). Best CR/CRu also was not statistically different between arms (59% and 67%, respectively), nor was best overall response rate (84% and 89%). The 3-year duration of response was 77% in the R2 arm and 74% for R-chemotherapy.

With 37.9 months median follow-up, progression-free survival was “nearly identical” between the two groups, Dr. Fowler said, at 77% for R2 and 78% for R-chemotherapy (P = 0.48). The 3-year overall survival was 94% in both the R2 and R-chemotherapy arms, though survival data are still immature, Dr. Fowler noted.

Grade 3/4 neutropenia was more common in the R-chemotherapy arm, resulting in higher rates of febrile neutropenia, according to Dr. Fowler, who also noted that rash and cutaneous reactions were more common with R2. About 70% of patients in each arm were able to tolerate treatment, and reasons for discontinuation were “fairly similar” between arms, Dr. Fowler added.

Second primary malignancies occurred in 7% of patients in the R2 arm and 10% of the R-chemotherapy arm.

The study was sponsored by Celgene and the Lymphoma Academic Research Organisation. Dr. Fowler reported disclosures related to AbbVie, Celgene, Janssen, Merck, and Roche.

SOURCE: Fowler NH et al. ASCO 2018, Abstract 7500.

REPORTING FROM ASCO 2018

Key clinical point: Lenalidomide plus rituximab (R2) had comparable efficacy versus standard chemoimmunotherapy in patients with previously untreated follicular lymphoma.

Major finding: With 37.9 months’ median follow-up, progression-free survival was “nearly identical” between the two groups, at 77% for R2 and 78% for rituximab chemotherapy (P = 0.48).

Study details: RELEVANCE, a phase 3, randomized clinical trial including 1,030 patients with previously untreated, advanced follicular lymphoma requiring treatment.

Disclosures: The study was sponsored by Celgene and the Lymphoma Academic Research Organisation. Dr. Fowler reported disclosures related to AbbVie, Celgene, Janssen, Merck, and Roche.

Source: Fowler NH et al. ASCO 2018, Abstract 7500.

Prodige 7: No survival benefit with HIPEC for advanced colorectal cancer

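CHICAGO – Adding hyperthermic intraperitoneal chemotherapy (HIPEC) with oxaliplatin to cytoreductive surgery offers no survival benefit in patients with advanced colorectal cancer, according to findings from the randomized phase 3 UNICANCER Prodige 7 trial.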

At a median follow-up of 63.8 months, median overall survival – the primary endpoint of the study – was “completely comparable” at 41.7 and 41.2 months, respectively, in 133 patients randomized to receive HIPEC with oxaliplatin after cytoreductive surgery and 132 randomized to the cytoreductive surgery–only arm, François Quenet, MD, reported during a press briefing at the annual meeting of the American Society of Clinical Oncology.

The postoperative mortality rate was 1.5% at 30 days in both groups, he said, noting that no difference was seen between the groups in the rate of side effects during the first 30 days after surgery.

“However, we did find a difference between the two arms concerning late, severe complications within 60 days,” Dr. Quenet said, explaining that the 60-day complication rate was nearly double in the HIPEC group vs. the no-HIPEC group (24.1% vs. 13.6%).

Patients in the trial had stage IV colorectal cancer with isolated peritoneal carcinomatosis and a median age of 60 years. They were enrolled and randomized at 17 centers in France between February 2008 and January 2014.

The survival rate of the surgery-alone group was unexpectedly high, Dr. Quenet said, adding that all colorectal cancer patients with an isolated peritoneal carcinomatosis should therefore be considered for surgery.

The use of HIPEC with cytoreductive surgery was introduced about 15 years ago and has become an accepted treatment option – and in some centers, a standard of care; the combination has been considered an effective treatment for peritoneal carcinomatosis, a metastatic tumor of the peritoneum that occurs in about 20% of colorectal cancer patients. The role of HIPEC in the success of the approach, however, has been unclear.

The current findings suggest that cytoreductive surgery alone is as effective as surgery with HIPEC, which “does not influence the survival result,” in most patients, Dr. Quenet said, noting that about 15% of patients were cured.

Additional study is needed to determine if there are certain subsets of patients who might benefit from HIPEC, he added, explaining that a subgroup analysis in the current study suggested that those with a midrange amount of disease in the abdominal cavity (peritoneal cancer index of 11-15) might experience some benefit with HIPEC, but the numbers were too small to be conclusive.

More research also is needed to determine if chemotherapy agents other than the oxaliplatin used with HIPEC in this study might be more effective, he said.

Prodige 7 was funded by UNICANCER. Dr. Quenet has received honoraria from Sanofi/Aventis, Ethicon, and Gamida Cell, as well as travel/accommodations/expenses from Sanofi, Novartis, and Ethicon.

SOURCE: Quenet F et al. ASCO 2018, Abstract LBA3503.

REPORTING FROM ASCO 2018

Key clinical point: HIPEC with oxaliplatin after surgery offers no survival benefit in patients with advanced colorectal cancer.

Major finding: Median overall survival was comparable at 41.7 and 41.2 months, respectively, in the HIPEC and no-HIPEC groups.

Study details: The randomized phase 3 Prodige 7 trial of 265 patients.

Disclosures: Prodige 7 was funded by UNICANCER. Dr. Quenet has received honoraria from Sanofi/Aventis, Ethicon, and Gamida Cell, as well as travel/accommodations/expenses from Sanofi, Novartis, and Ethicon.

Source: Quenet F et al. ASCO 2018, Abstract LBA3503.

Tiny Tot, Big Lesion

About six months ago, the parents of this 1-year-old boy first noticed the lesion on his shoulder. It started as a pinpoint papule but has grown to its current size—at which point, it caught their full attention. Although there are no associated symptoms, the parents request referral to dermatology to clear up the matter.

The child is reportedly healthy in all other respects, maintaining weight as expected, and normally active and reactive to verbal and visual stimuli.

EXAMINATION

A distinctive orangish brown, ovoid, 8 x 4–mm nodule is located on the child’s right superior shoulder. The lesion has a smooth, soft surface, and there is no tenderness on palpation. No additional lesions are seen elsewhere.

Eye examination reveals normal and symmetrical red reflexes.

What is the diagnosis?

DISCUSSION

Juvenile xanthogranuloma (JXG) is a rare, benign variant of non-Langerhans cell histiocytosis. This patient’s lesion is typical, but JXG can vary in appearance; some patients present with darker or larger lesions—or multiple lesions.

JXGs are essentially granulomatous tumors that, on histologic examination, display multinucleated giant cells called Touton giant cells. These macrophage-derived foam cells are seen in lesions with high lipid content.

JXG tends to favor the neck, face, and trunk but can appear around or (rarely) inside the eye, typically unilaterally in the iris. Benign in all other respects, ocular JXG lesions can cause spontaneous hyphema, glaucoma, or blindness; they must therefore be dealt with by a specialist. Fortunately, only about 10% of patients display ocular involvement.

JXGs can be confused with compound nevi, warts, or Spitz tumors. Therefore, biopsy is often necessary to establish the diagnosis.

TAKE-HOME LEARNING POINTS

- Juvenile xanthogranuloma (JXG) is a rare non-Langerhans cell tumor usually seen on the neck, face, or trunk of children younger than 2 years.

- The orangish brown, soft appearance of this patient’s papule was typical.

- Although atypical JXG lesions may require shave biopsy to confirm the diagnosis, they typically resolve on their own without treatment.

- When JXG lesions appear in the eye (most commonly in the iris), there is potential for serious complications, including heterochromia, glaucoma, spontaneous hyphema, or even blindness.

Youth tobacco use shows ‘promising declines’

Tobacco use among U.S. middle and high school students declined from 2011 to 2017, according to the Centers for Disease Control and Prevention.

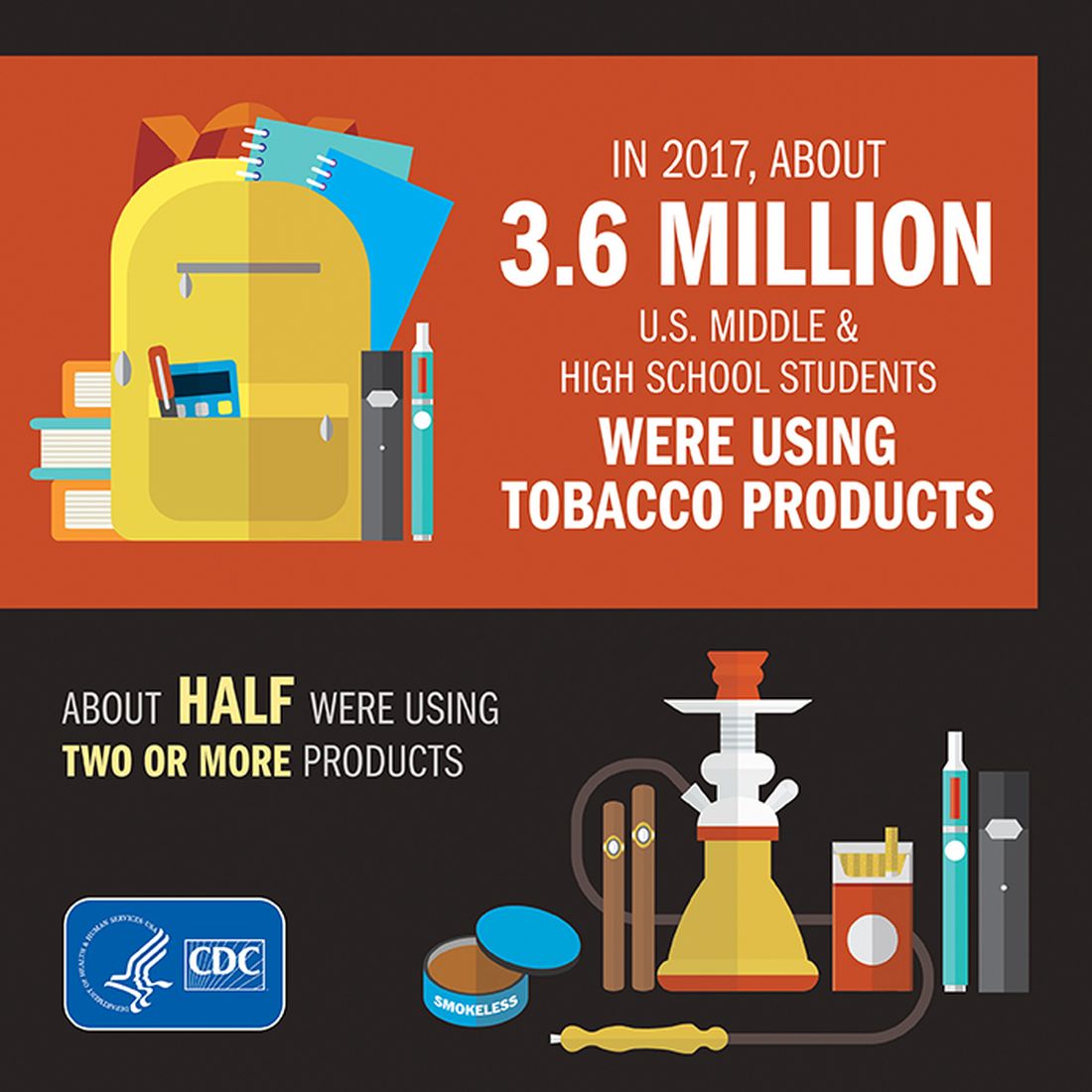

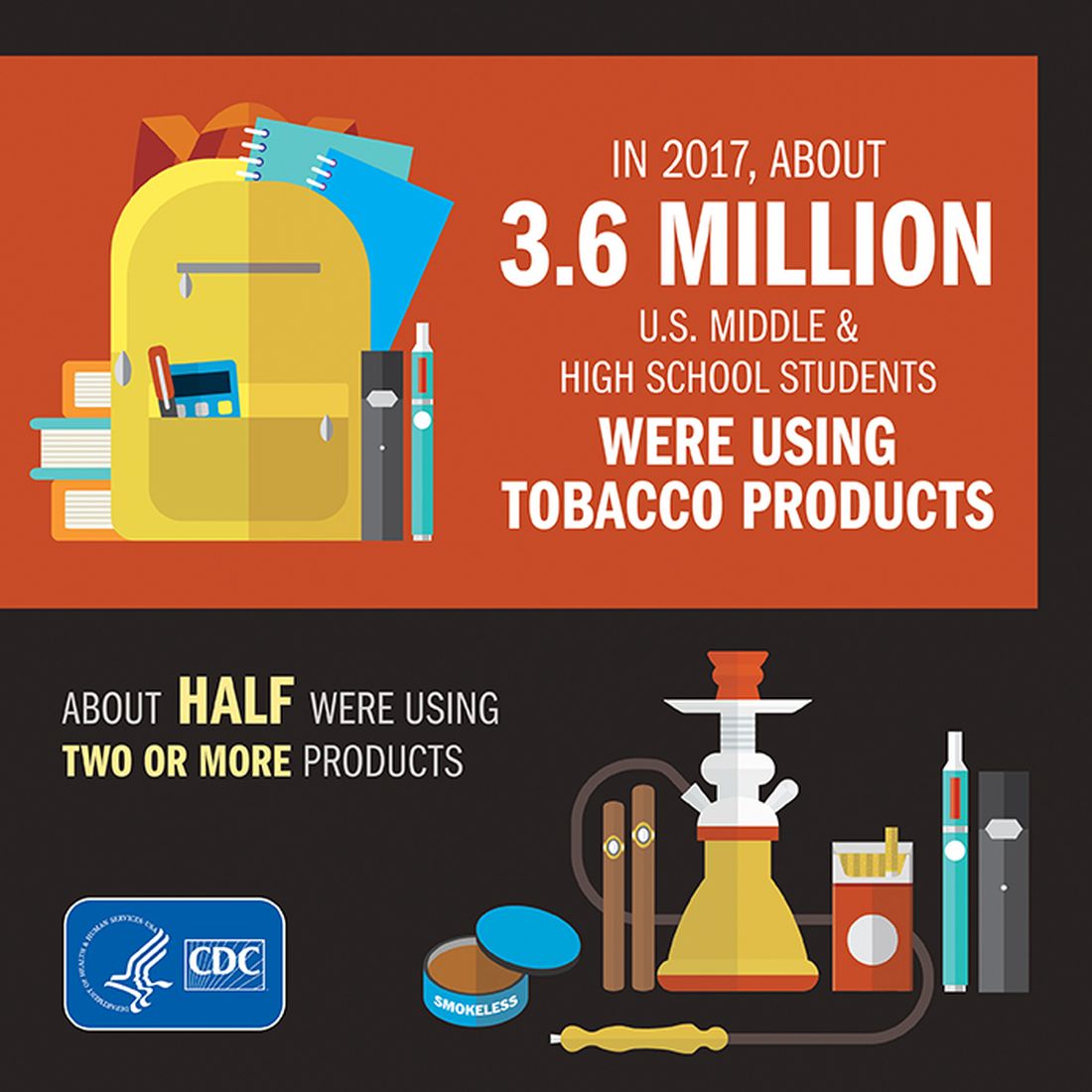

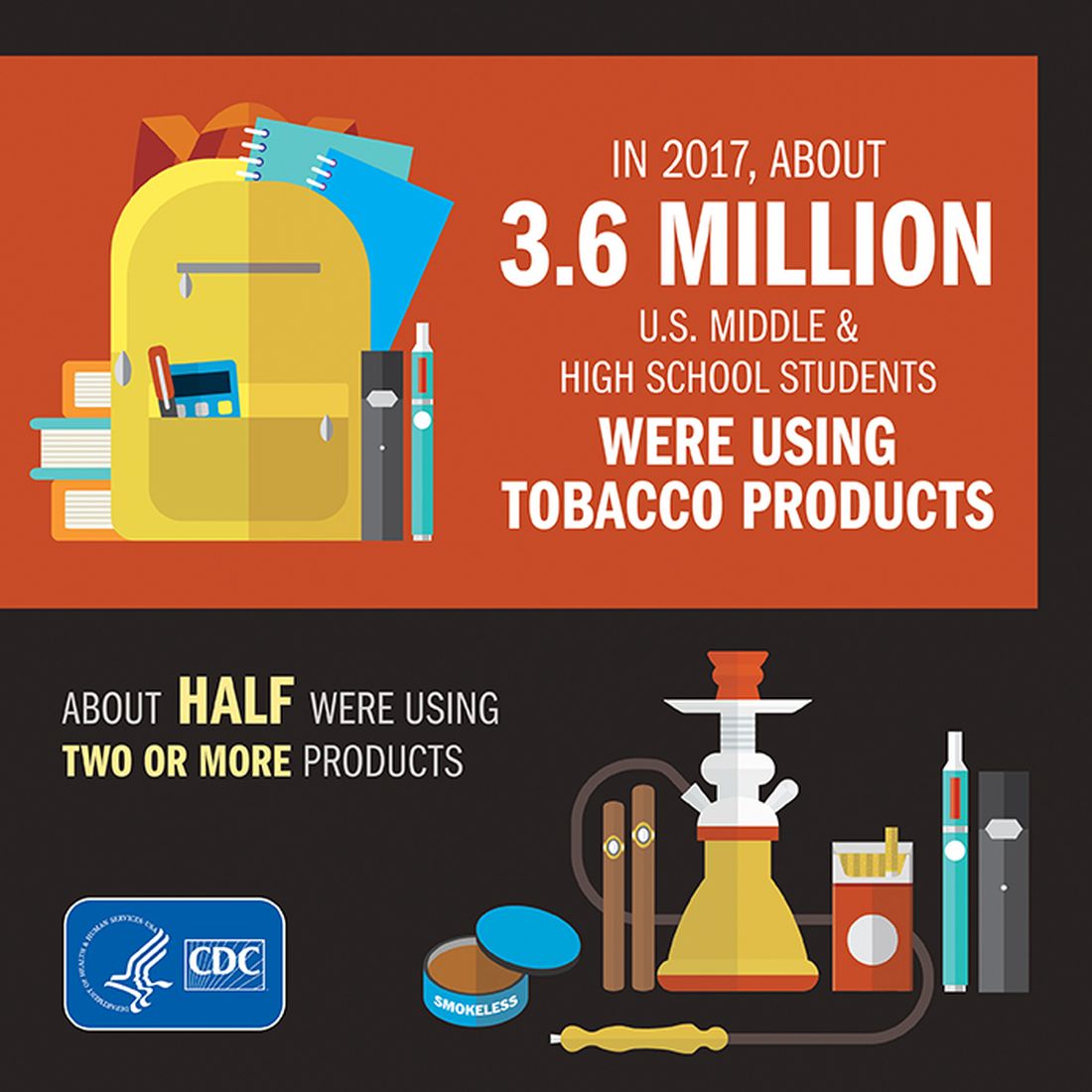

The prevalence of current tobacco use – defined as use on 1 or more days in the past 30 days – among high schoolers fell from 24.2% in 2011 to 19.6% in 2017, and middle school use decreased from 7.5% to 5.6% over that same time. That means the number of youth tobacco users went from almost 4.6 million in 2011 to slightly more than 3.6 million in 2017, Teresa W. Wang, PhD, and her associates said in the Morbidity and Mortality Weekly Report.

Almost half (47%) of the high school students who used tobacco in 2017 used two or more products, as did two out of five (42%) middle schoolers. That year, black high school students were less likely to use any tobacco product (14.2%) than were whites (22.7%) and Hispanics (16.7%). E-cigarettes were the most popular form of tobacco among white and Hispanic high schoolers, while cigars were the most commonly used form among blacks, they reported based on data from the National Youth Tobacco Surveys, which had sample sizes of 18,766 in 2011 and 17,872 in 2017.

“Despite promising declines in tobacco use, far too many young people continue to use tobacco products, including e-cigarettes,” CDC Director Robert R. Redfield, MD, said in a written statement accompanying the report. “Comprehensive, sustained strategies can help prevent and reduce tobacco use and protect our nation’s youth from this preventable health risk.”

In a separate statement, FDA Commissioner Scott Gottlieb, MD, said, “We are working hard to develop a pathway to put products like e-cigarettes through an appropriate series of regulatory gates to properly evaluate them as an alternative for adults who still want to get access to satisfying levels of nicotine, without all the risks associated with lighting tobacco on fire. And we will continue to encourage the development of potentially less harmful forms of nicotine delivery for currently addicted adult smokers. … But these public health opportunities are put at risk if all we do is hook another generation of kids on nicotine and tobacco products through alternatives like e-cigarettes.”

SOURCE: Wang TW et al. MMWR. 2018;67(22):629-33.

FROM MMWR

HHS to allow insurers’ workaround on 2019 prices

Federal officials will not block insurance companies from again using a workaround to cushion a steep rise in health premiums caused by President Donald Trump’s cancellation of a program established under the Affordable Care Act, Health and Human Services Secretary Alex Azar announced June 6.

The technique – called “silver loading” because it pushed price increases onto the silver-level plans in the ACA marketplaces – was used by many states for 2018 policies. But federal officials had hinted they might bar the practice next year.

At a hearing June 6 before the House Education and Workforce Committee, Mr. Azar said stopping this practice “would require regulations, which simply couldn’t be done in time for the 2019 plan period.”

States moved to silver loading after the president in October cut off federal reimbursement for so-called cost-sharing reduction subsidies that the ACA guaranteed to insurance companies. Those payments offset the cost of discounts that insurers are required by the law to provide to some low-income people to help cover their deductibles and other out-of-pocket costs.

States scrambled to let insurers raise rates so they would stay in the market. And many let them use this technique to recoup the lost funding by adding to the premium costs of midlevel silver plans in the health exchanges.

Because the formula for federal premium subsidies offered to people who purchase through the marketplaces is based on the prices of those silver plans, as those premiums rose so did the subsidies to help people afford them. That meant the federal government ended up paying much of the increase in prices.

At the committee hearing June 6, under questioning from Rep. Joe Courtney (D-Conn.), Mr. Azar declined to say if the department was considering a future ban.

“It’s not an easy question,” Mr. Azar said.

The fact that the federal government ended up effectively making the payments aggravated many Republicans, and there have been rumors over the past several months that HHS might require the premium increases to be applied across all plans, boosting costs for all buyers in the individual market.

Seema Verma, the administrator of the Centers for Medicare & Medicaid Services, told reporters in April that the department was examining the possibility.

Apparently that will not happen, at least not for plan year 2019.

Kaiser Health News is a nonprofit national health policy news service. It is an editorially independent program of the Henry J. Kaiser Family Foundation that is not affiliated with Kaiser Permanente.

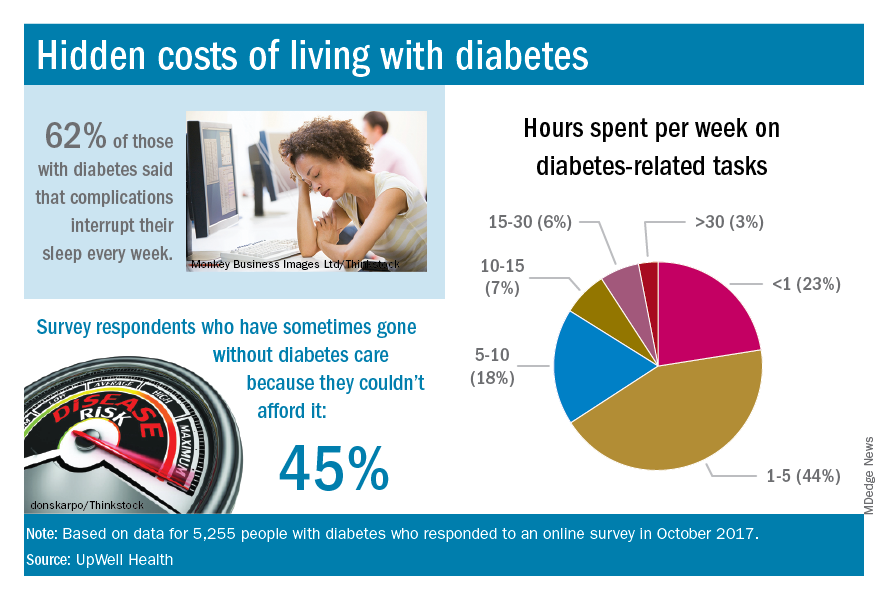

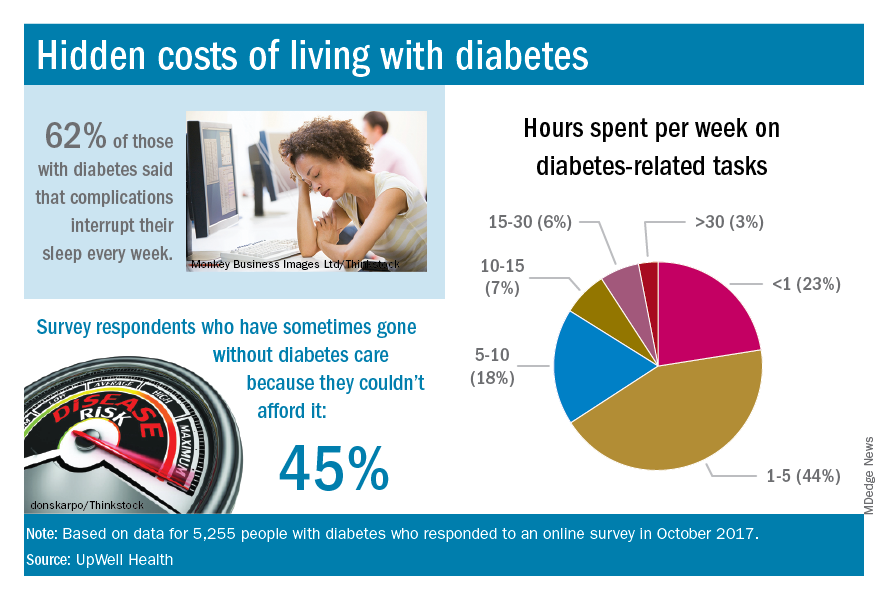

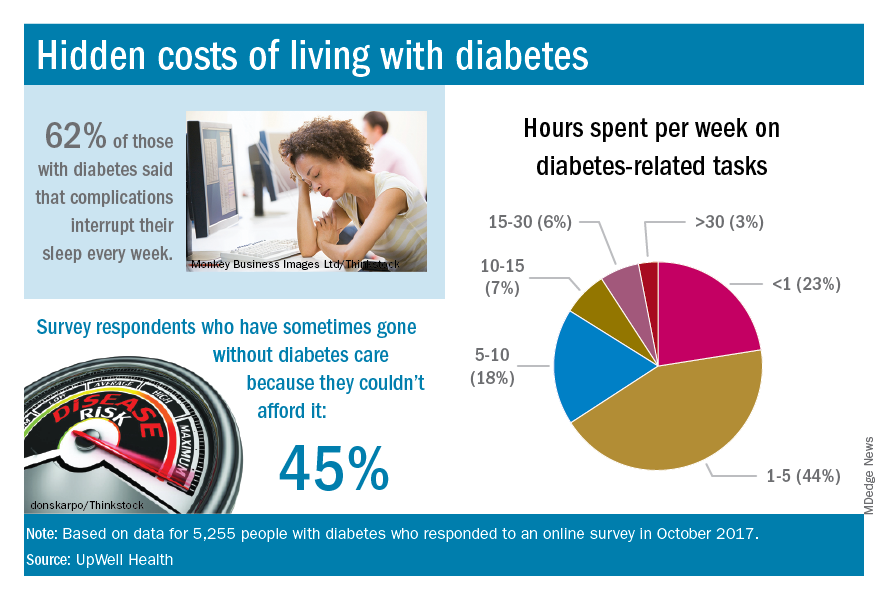

Diabetes places burden on patients

Managing diabetes places a substantial burden of time and money on patients, according to a new survey by prescription manager UpWell Health.

The time spent on activities that come with diabetes management – blood glucose monitoring, diet planning, medical appointments – can add up to several hours a week. Among the 5,255 respondents to the online survey, 18% said that such tasks took up 5-10 hours each week, 7% said it was 10-15 hours, and 9% said they spent 15 or more hours a week on diabetes-related tasks, UpWell reported.

Since medical expenses aren’t always fully covered by insurance, 43% of respondents paid up to $1,000 a year out of pocket to treat diabetes complications, 16% paid $1,000 to $2,000 a year, and 4% paid more than $5,000. Five percent also paid over $5,000 a year out of pocket for diabetes care from a physician and 45% said that they had sometimes gone without diabetes care because they couldn’t afford it, the survey data showed.

“Most people with diabetes are able to manage it successfully and live active, satisfying lives. But doing so requires constant planning, vigilance, and care. They eagerly seek trustworthy resources to help them reduce the burden of living with diabetes,” UpWell said.

A U.S. model for Italian hospitals?

In the United States, family physicians (general practitioners) used to manage their patients in the hospital, either as the primary care doctor or in consultation with specialists. Only since the 1990s has a new kind of physician gained widespread acceptance: the hospitalist (“specialist of inpatient care”).1

In Italy the process has not been the same. In our health care system, primary care physicians have always transferred the responsibility for hospital care to an inpatient team. Our hospital-based doctors dedicate all of their working time to inpatient care, and general practitioners are not expected to go to the hospital. Patients were (and are) admitted to one ward or another according to their main clinical problem.

Little by little, a huge number of organ specialty and subspecialty wards have filled Italian hospitals. In this context, the internal medicine specialty was unable to occupy its characteristic role, to the point that, a few years ago, the medical community wondered whether the specialty should continue to exist.

As a result of hyperspecialization, we have many different specialists in inpatient care who are not specialists in global inpatient care.

Nowadays, in our country we are faced with a dramatic epidemiologic change. The Italian population is aging and the majority of patients have not only one clinical problem but multiple comorbidities. When these patients reach the emergency department, it is not easy to identify the main clinical problem and assign them to an organ specialty unit. And when they eventually arrive there, a considerable number of consultants is frequently required. The vision of organ specialists is not holistic, and they are more prone to maximizing their tools than rationalizing them. So, at present, our traditional hospital model has been generating care fragmentation, overproduction of diagnoses, overprescription of drugs, and increasing costs.

It is obvious that a new model is necessary for the future, and we look with great interest at the American hospitalist model.

We need a new hospital-based clinician who has wide-ranging competencies, and is able to define priorities and appropriateness of care when a patient requires multiple specialists’ interventions; one who is autonomous in performing basic procedures and expert in perioperative medicine; prompt to communicate with primary care doctors at the time of admission and discharge; and prepared to work in managed-care organizations.

We wonder: Are Italian hospital-based internists – the only specialists in global inpatient care – suited to this role?

We think so. However, current Italian training in internal medicine is focused mainly on the scientific basis of diseases and on their pathophysiological and clinical aspects. Concepts such as complexity or the management of patients with comorbidities are quite difficult to teach to medical school students and are therefore often neglected. As a result, internal medicine physicians require prolonged practical training.

Inspired by the Core Competencies in Hospital Medicine published by the Society of Hospital Medicine, this year in Genoa (the birthplace of Christopher Columbus) we started a 2-year second-level University Master course, called “Hospitalist: Managing complexity in Internal Medicine inpatients” for 35 internal medicine specialists. It is the fruit of collaboration between the main association of Italian hospital-based internists (Federation of Associations of Hospital Doctors on Internal Medicine, or FADOI) and the University of Genoa’s Department of Internal Medicine, Academy of Health Management, and the Center of Simulation and Advanced Training.

In Italy, this is the first concrete initiative to train, and better define, this new type of physician expert in the management of inpatients.

According to SHM’s definition of a hospitalist, we think that the activities of this new physician should also include teaching and research related to hospital medicine. And as Dr. Steven Pantilat wrote, “patient safety, leadership, palliative care and quality improvement are the issues that pertain to all hospitalists.”2

Theoretically, the development of the hospitalist model should be easier in Italy than in the United States. Dr. Robert Wachter and Dr. Lee Goldman wrote in 1996 about the objections to the hospitalist model raised by American primary care physicians (“to preserve continuity”) and specialists (“fewer consultations, lower income”), but in Italy family doctors do not usually follow their patients in the hospital, and specialists have no incentive for in-hospital consultations.3 Moreover, patients with comorbidities, or pathologies on the border between medicine and surgery (e.g., cholecystitis, bowel obstruction, polytrauma), are already often assigned to internal medicine, and in the smallest hospitals the internist is usually the only specialist continuously present.

Nevertheless, the Italian hospitalist model will be a challenge. We know we have to deal with organ specialists, but we strongly believe that this is the most appropriate and the most sustainable model for the future of the Italian hospitals. Our wish is not to become the “bosses” of the hospital, but to ensure global, coordinated, and respectful care to present and future patients.

Published outcomes studies demonstrate that the U.S. hospitalist model has led to consistent and pronounced cost savings with no loss in quality.4 In the United States, the hospitalist field has grown from a few hundred physicians to more than 50,000,5 making it the fastest growing physician specialty in medical history.

Why should the same not occur in Italy?

References

1. Baudendistel TE, Wachter RM. The evolution of the hospitalist movement in USA. Clin Med JRCPL. 2002;2:327-30.

2. Pantilat S. What is a Hospitalist? The Hospitalist. 2006 February;2006(2).

3. Wachter RM, Goldman L. The emerging role of “Hospitalists” in the American Health Care System. N Engl J Med. 1996;335:514-7.

4. White HL, Glazier RH. Do hospitalist physicians improve the quality of inpatient care delivery? A systematic review of process, efficiency and outcome measures. BMC Medicine. 2011;9:58:1-22. http://www.biomedcentral.com/1741-7015/9/58.

5. Wachter RM, Goldman L. Zero to 50,000 – The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375:1009-11.

Valerio Verdiani, MD, director of internal medicine, Grosseto, Italy. Francesco Orlandini, MD, internal medicine, health administrator, ASL4 Liguria, Chiavari (GE), Italy. Micaela La Regina, MD, internal medicine, risk management and clinical governance, ASL5 Liguria, La Spezia, Italy. Giovanni Murialdo, MD, department of internal medicine and medical specialty, University of Genoa (Italy). Andrea Fontanella, MD, director of medicine department, president of the Federation of Associations of Hospital Doctors on Internal Medicine (FADOI), Naples, Italy. Mauro Silingardi, MD, director of internal medicine, director of training and refresher of FADOI, Bologna, Italy.

In the United States, family physicians (general practitioners) used to manage their patients in the hospital, either as the primary care doctor or in consultation with specialists. Only since the 1990s has a new kind of physician gained widespread acceptance: the hospitalist (“specialist of inpatient care”).1

In Italy the process has not been the same. In our health care system, primary care physicians have always transferred the responsibility of hospital care to an inpatient team. Actually, our hospital-based doctors dedicate their whole working time to inpatient care, and general practitioners are not expected to go to the hospital. The patients were (and are) admitted to one ward or another according to their main clinical problem.

Little by little, a huge number of organ specialty and subspecialty wards have filled Italian hospitals. In this context, the internal medicine specialty was unable to occupy its characteristic role, so that, a few years ago, the medical community wondered if the specialty should have continued to exist.

Anyway, as a result of hyperspecialization, we have many different specialists in inpatient care who are not specialists in global inpatient care.

Nowadays, in our country we are faced with a dramatic epidemiologic change. The Italian population is aging and the majority of patients have not only one clinical problem but multiple comorbidities. When these patients reach the emergency department, it is not easy to identify the main clinical problem and assign him/her to an organ specialty unit. And when he or she eventually arrives there, a considerable number of consultants is frequently required. The vision of organ specialists is not holistic, and they are more prone to maximizing their tools than rationalizing them. So, at present, our traditional hospital model has been generating care fragmentation, overproduction of diagnoses, overprescription of drugs, and increasing costs.

It is obvious that a new model is necessary for the future, and we look with great interest at the American hospitalist model.

We need a new hospital-based clinician who has wide-ranging competencies, and is able to define priorities and appropriateness of care when a patient requires multiple specialists’ interventions; one who is autonomous in performing basic procedures and expert in perioperative medicine; prompt to communicate with primary care doctors at the time of admission and discharge; and prepared to work in managed-care organizations.

We wonder: Are Italian hospital-based internists – the only specialists in global inpatient care – suited to this role?

We think so. However, current Italian training in internal medicine is focused mainly on the scientific bases of disease and on pathophysiological and clinical aspects. Concepts such as complexity or the management of patients with comorbidities are quite difficult to teach to medical school students and are therefore often neglected. As a result, internal medicine physicians require prolonged practical training.

Inspired by the Core Competencies in Hospital Medicine published by the Society of Hospital Medicine, this year in Genoa (the birthplace of Christopher Columbus) we started a 2-year second-level University Master course, called “Hospitalist: Managing complexity in Internal Medicine inpatients” for 35 internal medicine specialists. It is the fruit of collaboration between the main association of Italian hospital-based internists (Federation of Associations of Hospital Doctors on Internal Medicine, or FADOI) and the University of Genoa’s Department of Internal Medicine, Academy of Health Management, and the Center of Simulation and Advanced Training.

In Italy, this is the first concrete initiative to train, and better define, this new type of physician expert in the management of inpatients.

According to SHM’s definition of a hospitalist, we think that the activities of this new physician should also include teaching and research related to hospital medicine. And as Dr. Steven Pantilat wrote, “patient safety, leadership, palliative care and quality improvement are the issues that pertain to all hospitalists.”2

Theoretically, the development of the hospitalist model should be easier in Italy than in the United States. Dr. Robert Wachter and Dr. Lee Goldman wrote in 1996 about the objections to the hospitalist model of American primary care physicians (“to preserve continuity”) and specialists (“fewer consultations, lower income”), but in Italy family doctors do not usually follow their patients in the hospital, and specialists have no incentive for in-hospital consultations.3 Moreover, patients with comorbidities, or pathologies on the border between medicine and surgery (e.g., cholecystitis, bowel obstruction, polytrauma), are already often assigned to internal medicine, and in the smallest hospitals, the internist is usually the only specialist continually present.

Nevertheless, the Italian hospitalist model will be a challenge. We know we have to deal with organ specialists, but we strongly believe that this is the most appropriate and the most sustainable model for the future of Italian hospitals. Our wish is not to become the “bosses” of the hospital, but to ensure global, coordinated, and respectful care for present and future patients.

Published outcomes studies demonstrate that the U.S. hospitalist model has led to consistent and pronounced cost saving with no loss in quality.4 In the United States, the hospitalist field has grown from a few hundred physicians to more than 50,000,5 making it the fastest growing physician specialty in medical history.

Why should the same not occur in Italy?

References

1. Baudendistel TE, Wachter RM. The evolution of the hospitalist movement in USA. Clin Med JRCPL. 2002;2:327-30.

2. Pantilat S. What is a Hospitalist? The Hospitalist 2006 February;2006(2).

3. Wachter RM, Goldman L. The emerging role of “Hospitalists” in the American Health Care System. N Engl J Med. 1996;335:514-7.

4. White HL, Glazier RH. Do hospitalist physicians improve the quality of inpatient care delivery? A systematic review of process, efficiency and outcome measures. BMC Medicine. 2011;9:58:1-22. http://www.biomedcentral.com/1741-7015/9/58.

5. Wachter RM, Goldman L. Zero to 50,000 – The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375:1009-11.

Valerio Verdiani, MD, director of internal medicine, Grosseto, Italy. Francesco Orlandini, MD, internal medicine, health administrator, ASL4 Liguria, Chiavari (GE), Italy. Micaela La Regina, MD, internal medicine, risk management and clinical governance, ASL5 Liguria, La Spezia, Italy. Giovanni Murialdo, MD, department of internal medicine and medical specialty, University of Genoa (Italy). Andrea Fontanella, MD, director of medicine department, president of the Federation of Associations of Hospital Doctors on Internal Medicine (FADOI), Naples, Italy. Mauro Silingardi, MD, director of internal medicine, director of training and refresher of FADOI, Bologna, Italy.

ASH urges lawmakers to keep opioids accessible

The American Society of Hematology (ASH) has released a new policy statement in favor of safeguarding access to opioids for hematology patients with chronic, severe pain as policymakers consider restrictions on opioid prescribing.

The statement is a recognition from ASH officials of the large number of opioid overdose deaths that involve prescription medication, and an acknowledgment that hematologists need to be advocates for their patients, said Joseph Alvarnas, MD, of City of Hope, Duarte, Calif.

The scope of the opioid problem is significant and worsening. More than 200,000 people in the United States have died from overdoses related to prescription opioids. And in 2016, about 46 people were dying every day from prescription opioid overdoses, according to the Centers for Disease Control and Prevention.

In October 2017, President Trump declared that the opioid crisis was a nationwide “public health emergency,” and regulators with the Centers for Medicare &amp; Medicaid Services have already put in place restrictions on opioid dosing through the Medicare Part D program.

In a rule finalized in April 2018, the CMS placed restrictions on the dosage of opioids available for chronic opioid users and limited the days’ supply for first-time opioid users. For chronic users, the CMS set a 90-morphine-milligram-equivalent (MME) per day limit that triggers pharmacist consultation with the prescriber. The agency instructed health plans to implement an “opioid care coordination edit” that would be triggered at 90 MME per day across all opioid prescriptions and would require pharmacists to contact prescribers to override for a higher dosage. The entire exchange must be documented.

The CMS also instructed health plans in the Medicare Part D program to implement a “hard safety edit” that limits opioid prescription fills to no more than a 7-day supply for opioid-naive patients being treated for acute pain. The changes are set to take effect in January 2019.

But Diane E. Meier, MD, director of the Center to Advance Palliative Care in New York, said the most current data suggest the risk of addiction and substance use disorder among medically ill patients taking opioids is less than 10%. “That means that 90% of patients with a serious medical illness can safely take opioids for the relief of pain that is causing functional disorder,” she said.

Policymakers should not conflate the use and prescription of opioids with cases of misuse and abuse, Dr. Alvarnas said. Some patients will require a higher dose of opioids depending on their age or number of pain episodes, or because of their body’s physiological response.