
Hospitalist movers and shakers – September 2019

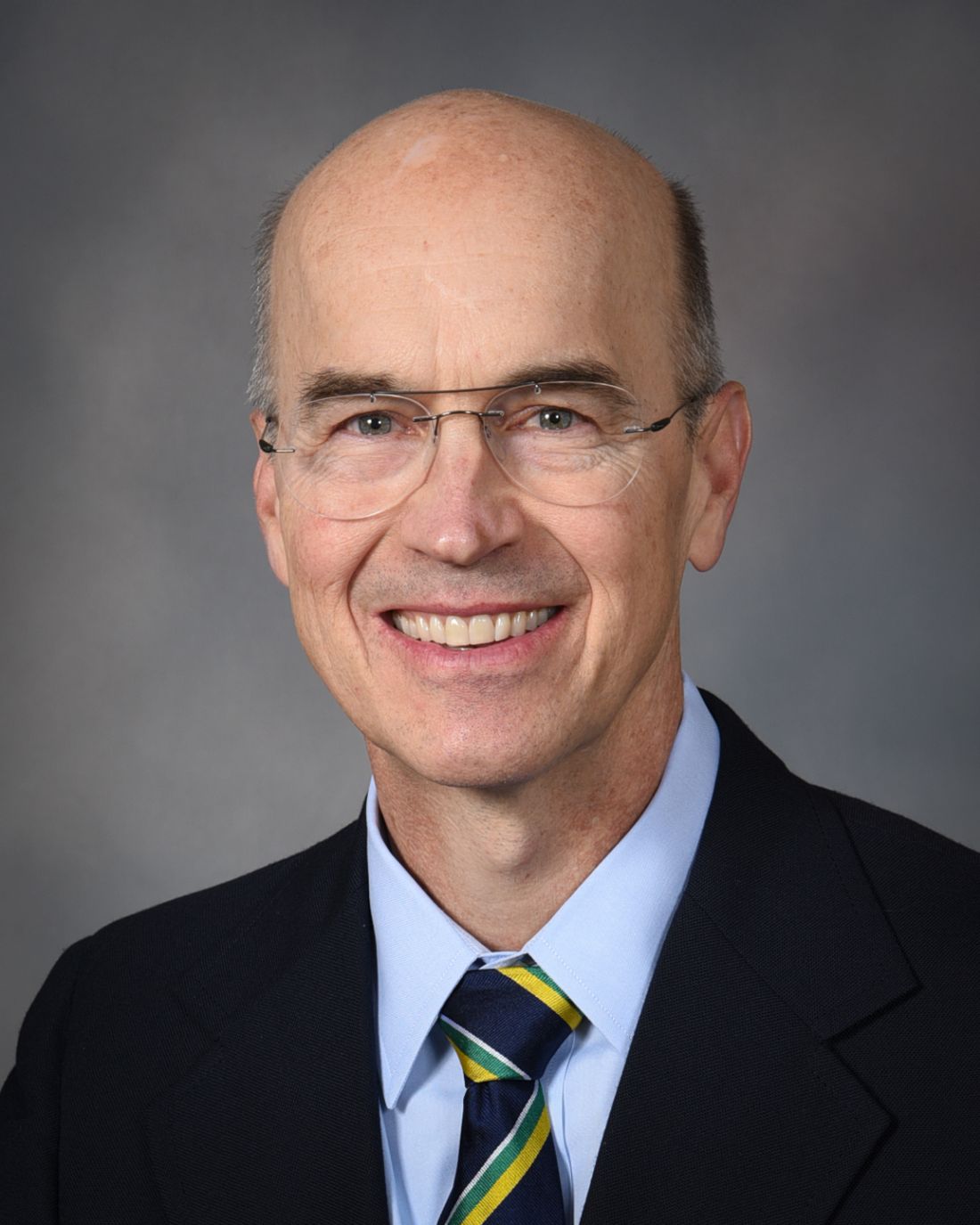

Mark Williams, MD, MHM, FACP, recently was appointed chief quality and transformation officer for the University of Kentucky’s UK HealthCare (Lexington). Dr. Williams, a tenured professor in the division of hospital medicine at the UK College of Medicine, will serve as chair of UK HealthCare’s Executive Quality Committee. Dr. Williams will lead integration of quality improvement, safety, and quality reporting with data analytics.

Dr. Williams established the first hospitalist program at a public hospital (Grady Memorial Hospital) and academic hospitalist programs at Emory University, Northwestern University, and UK HealthCare. An inaugural member of SHM, he is a past president of the society, was the founding editor-in-chief of the Journal of Hospital Medicine, and led SHM’s Project BOOST.

Also at UK HealthCare, Romil Chadha, MD, MPH, SFHM, FACP, has been named interim chief of the division of hospital medicine and medical director of Physician Information Technology Services. Previously, he was associate chief of the division of hospital medicine, and he also serves as medical director of telemetry.

Dr. Chadha is the founder of the Kentucky chapter of SHM, where he is the immediate past president. He is also the codirector of the Heartland Hospital Medicine Conference.

Amit Vashist, MD, MBA, CPE, FHM, FACP, FAPA, has been named chief clinical officer at Ballad Health, a 21-hospital health system in Northeast Tennessee, Southwest Virginia, Northwest North Carolina, and Southeast Kentucky.

In his new role, he will focus on clinical quality, value-based initiatives to improve quality while reducing the cost of care, performance improvement, and oversight of the clinical delivery of care, and he will serve as the liaison to the Ballad Health Clinical Council. Dr. Vashist is a member of The Hospitalist’s editorial advisory board.

Nagendra Gupta, MD, FACP, CPE, has been appointed to the American Board of Internal Medicine’s Internal Medicine Specialty Board. ABIM Specialty Boards are responsible for the broad definition of the discipline across Certification and Maintenance of Certification (MOC). Specialty Board members work with physicians and medical societies to develop Certification and MOC credentials to recognize physicians for their specialized knowledge and commitment to staying current in their field.

Dr. Gupta is a full-time practicing hospitalist with Apogee Physicians and currently serves as the director of the hospitalist program at Texas Health Arlington (Tex.) Memorial Hospital. He also serves as vice president for SHM’s North Central Texas Chapter.

T. Steen Trawick Jr., MD, was named the CEO of Christus Shreveport-Bossier Health System in Shreveport, La., in August 2019.

Dr. Trawick has worked for Christus as a pediatric hospitalist since 2005 and most recently has served concurrently as associate chief medical officer for Sound Physicians. Through Sound Physicians, Dr. Trawick oversees the hospitalist and emergency medical programs for Christus and other hospitals – 14 in total – in Texas and Louisiana. He has worked in that role for the past 6 years.

Scott Shepherd, DO, FACP, has been selected as chief medical officer of Verinovum, a health data enrichment and integration technology company in Tulsa, Okla. Dr. Shepherd is the medical director for hospitalist medicine and a practicing hospitalist with St. John Health System in Tulsa, and he is also medical director of the Center for Health Systems Innovation at his alma mater, Oklahoma State University in Stillwater.

Amanda Logue, MD, has been elevated to chief medical officer at Lafayette (La.) General Hospital. Dr. Logue assumed the role, which includes the title of senior vice president, in May 2019.

Dr. Logue has worked at Lafayette General since 2009. A hospitalist/internist, she has served at the facility as chair of the department of medicine, physician champion for electronic medical record implementation, medical director of the hospitalist program, and, most recently, chief medical information officer.

Rina Bansal, MD, MBA, recently was appointed full-time president of Inova Alexandria (Va.) Hospital, taking the reins officially after serving as acting president since November 2018. Dr. Bansal has been at Inova since 2008, when she started as a hospitalist at Inova Fairfax (Va.).

Dr. Bansal created and led Inova’s Clinical Nurse Services Hospitalist program through its department of neurosciences and has done stints as Inova Fairfax’s associate chief medical officer, medical director of Inova Telemedicine, and chief medical officer at Inova Alexandria.

James Napoli, MD, has been named chief medical officer for Blue Cross and Blue Shield of Arizona (BCBSAZ). He has held the CMO position on an interim basis since March, taking on those duties in addition to his role as BCBSAZ’s enterprise medical director for health care ventures and innovation.

Dr. Napoli came to BCBSAZ in 2013 after more than a decade at Abrazo Arrowhead Campus (Glendale, Ariz.). At Abrazo, he was director of hospitalist services and vice chief of staff, in addition to working as a practicing hospital medicine clinician.

Dr. Napoli was previously medical director at OptumHealth, working specifically in the medical management and quality improvement areas for the health management solutions organization’s Medicare Advantage clients.

Mercy Hospital Fort Smith (Ark.) has partnered with the Ob Hospitalist Group (Greenville, S.C.) to launch an obstetric hospitalist program. OB hospitalists deliver babies when a patient’s physician cannot be present, provide emergency care, and provide support to high-risk pregnancy patients, among other duties within the hospital.

The partnership has allowed Mercy Fort Smith to create a dedicated, four-room obstetric emergency department in its Mercy Childbirth Center. Eight OB hospitalists have been hired and will provide care 24 hours a day, 7 days a week.

Legislative Conference 2019

Unreasonable step therapy and prior authorizations, overly expensive generic drugs, opposition to a 5-year Medicare pay freeze, surprise medical billing, and the risks and benefits of sunscreen use for the prevention of skin cancer.

These were just some of the hot-button issues that 156 of my fellow dermatologists and I discussed at the 2019 AADA Legislative Conference. We also were joined by 49 patients and practice administrators for this terrific meeting, which was filled with classes and speakers. Members of Congress joined us at various sessions and at social occasions with good food, fine wine, and great conversation. Radio personality and CNN host Michael Smerconish gave a very funny dinner speech that included comments about civility in politics. The camaraderie was excellent, and the intellectual food for thought was extraordinary, right up the alley of dermatologists who are also political junkies.

Most congressional representatives are not well versed in health care topics. As a result, we spent a good deal of time on education, speaking with junior staffers who are expected to keep the members of Congress updated on what they need to know about medical and, specifically, dermatologic issues. Since many refer to Washington as “the swamp,” you can consider the House members to be the “big gators” and the staffers the “little gators.” Taking that analogy further, lobbying (or educating) logically becomes “gator wrestling.”

Most of the time we met with little gators, although this year we also met with 84 big gators. We took turns telling them true stories based on our patients’ problems with abuses within our health care system. I think these stories are effective. This is your representative government in action!

On the last day of the conference, we made in-person visits to offices on the Hill, organized by state. Some state delegations, like Ohio’s, were nine strong! Everyone gets to speak. This year’s meeting was attended by a total of 31 dermatology residents, and the residents from Ohio State and Cleveland Clinic who participated in our state meetings were terrific. In all, we covered 228 offices: 157 House and 71 Senate.

Rep. John Joyce, MD, FAAD (R-PA-13), was on hand; he is the first dermatologist elected to a full term in Congress. Anyone at the meeting could have spent as much time as they wanted with him discussing our issues. He is very knowledgeable and a great asset to our specialty. Dr. Joyce is recognized as a dermatologist by his fellow representatives, and even by Speaker Nancy Pelosi and President Trump. Dr. Joyce has been a proud member of the American Academy of Dermatology for 30 years; his wife, Dr. Alice Joyce, is also a member and a dermatologist, and she continues to run their practice, Altoona Dermatology Associates.

Dr. Joyce is a true asset to dermatology. Speaking as an individual, I encourage you to support his campaign financially if you get the chance, beyond what SkinPAC can give him.

In sum, the AADA Legislative Conference is a lot of productive fun. You get to network with all the leaders of the AAD, as well as many of the leaders of the United States. If you donate $5,000 to SkinPAC, SkinPAC will pay your way to the conference. If you contribute $1,000, you get to attend the high-donor dinner (the same goes for residents who donate $50, no typo!). What’s not to like about that? Most dermatologists like a good party. Next year’s meeting is Sept. 13-15, 2020. See you there!

Dr. Coldiron is in private practice but maintains a clinical assistant professorship at the University of Cincinnati. He cares for patients, teaches medical students and residents, and has several active clinical research projects. Dr. Coldiron is the author of more than 80 scientific letters and papers, as well as several book chapters, and he speaks frequently on a variety of topics. He is a past president of the American Academy of Dermatology. Write to him at [email protected].

Dr. Barbara J. Howard will receive the 2019 C. Anderson Aldrich Award

The award is given by the American Academy of Pediatrics (AAP) Section on Developmental and Behavioral Pediatrics to “recognize physicians who have made outstanding contributions to the field of child development,” according to the AAP. Previous recipients of the award include pediatricians such as Benjamin M. Spock, MD, and T. Berry Brazelton, MD, as well as psychoanalyst Anna Freud and child psychologist Erik H. Erikson.

Dr. Howard is a developmental-behavioral pediatrician and an assistant professor of pediatrics at Johns Hopkins University, Baltimore, where she codirected a fellowship program to train developmental and behavioral pediatricians. She is a creator of CHADIS, an innovative online system that provides previsit questionnaires and allows physicians “to collect patient-generated data that can be used to support clinical and shared decisions, track data, and create quality improvement reports,” according to the CHADIS website. For 30 years, she has given free monthly case conferences through a federal grant, initially in person and more recently through a national webcast. Over the last 2 decades, Dr. Howard has written about practical approaches to children’s developmental and behavioral problems in her Behavioral Consult column for this newspaper.

Michael S. Jellinek, MD, professor emeritus of psychiatry and pediatrics at the Harvard Medical School, Boston, said in an interview, “Barbara’s dedication to the emotional health of children has made an enormous difference. In addition to her clinical care and writing, her development of CHADIS has helped pediatricians recognize and treat thousands upon thousands of children. She is most deserving of this high honor.”

The award is given by the AAP Section on Developmental and Behavioral Pediatrics to “recognize physicians who have made outstanding contributions to the field of child development,” according to the AAP. Previous recipients of the award include pediatricians such as Benjamin M. Spock, MD, and T. Berry Brazelton, MD, as well as psychoanalyst Anna Freud and child psychologist Erik H. Erickson.

Financial Education for Health Care Providers

Health care provider (HCP) well-being has become a central topic as health care agencies increasingly recognize that stress leads to turnover and reduced efficacy.1 Financial health of HCPs is one aspect of overall well-being that has received little attention. We all work at the US Department of Veterans Affairs (VA) as psychologists and believe that there is a need to attend to financial literacy within the health care professions, a call that also has been made by physicians.2 For instance, a frequently mentioned aspect of financial literacy involves learning to effectively manage student loan debt. Another less often discussed facet is the need to save money for retirement early in one’s career to reap the benefits of compound interest: This is a particular concern for HCPs who were in graduate/medical school when they would have optimally started saving for retirement. Delaying retirement savings can have significant financial consequences, which can have a negative effect on well-being.

A few years ago, we started teaching advanced psychology trainees about financial well-being and were startled by the students’ lack of knowledge. For example, many students did not understand basic financial concepts, including the difference between a pension and a 401(k)/403(b) system of retirement savings. The authors speculate that this knowledge gap persists throughout some professionals’ careers. Research suggests that lack of knowledge in an area feels aversive and may result in procrastination or an inability to move toward a goal.3,4 Yet postponing saving is problematic because it shortens the time over which compound interest can work, making it more difficult to accrue wealth.5 To address the lack of financial training among psychologists, the authors designed a seminar to provide retirement and financial-planning information to early career psychologists. This information fits the concept of “just in time” education: disseminating knowledge when it is most likely to be useful, put into practice, and thus retained.6
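To make the cost of postponing saving concrete, the following Python sketch compares two hypothetical savers who contribute the same amount each year but start 10 years apart. The $10,000 annual contribution, the constant 6% annual return, and the retirement age of 65 are illustrative assumptions, not figures from the seminar. Real returns vary from year to year and are not guaranteed.

```python
def future_value(annual_contribution: float, annual_return: float, years: int) -> float:
    """Balance after `years` of equal end-of-year contributions, compounded annually."""
    balance = 0.0
    for _ in range(years):
        # Last year's balance grows by the return, then this year's contribution is added.
        balance = balance * (1 + annual_return) + annual_contribution
    return balance


if __name__ == "__main__":
    # Hypothetical inputs chosen only for illustration.
    contribution = 10_000
    rate = 0.06
    retirement_age = 65

    for start_age in (30, 40):
        years = retirement_age - start_age
        total_contributed = contribution * years
        balance = future_value(contribution, rate, years)
        print(f"Start at {start_age}: contributed ${total_contributed:,}, "
              f"balance ${balance:,.0f}")
```

Under these assumptions, the saver who starts at 30 contributes 40% more money ($350,000 vs $250,000). However, that saver ends with roughly twice the balance, because the earliest contributions have the longest time to compound.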

Methods

In consultation with human resources officials at the VA, the authors created a 90-minute seminar to educate psychologists about saving for retirement. The seminar was recorded so that psychologists who could not attend in person could view it later. It mainly covered the following topics:

- systems of retirement, especially the Thrift Savings Plan (TSP), the defined contribution plan for VA and other federal employees;
- basic concepts of investing;
- ways of determining how much to save for retirement; and
- the tax advantages of increased saving.

It also provided simple rules of thumb for retirement planning, because such heuristics have been shown to lead to greater behavior change than less systematic approaches.7 Key points included:

- Psychologists should aim to replace approximately their current salary during retirement;

- There is no option to borrow money for retirement; the only sources of income for the retiree are Social Security, a possible pension, and any money saved;

- Psychologists and many other HCPs were in school during their prime saving years and tend to earn lower salaries than other professional groups with similar amounts of education, so they should save aggressively early in their careers;

- Early career psychologists should ensure that money saved for retirement is invested in relatively “aggressive” options, such as stock index funds (vs bond funds); and

- Contributions to a tax-deferred savings plan such as the traditional TSP carry tax benefits. Each additional dollar saved reduces take-home pay by less than a dollar, which can make it more attractive to increase one’s savings immediately.
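Simple arithmetic illustrates the tax point in the last bullet. The Python sketch below estimates how much a traditional (pre-tax) contribution reduces take-home pay. It assumes a single hypothetical federal marginal income tax rate. It deliberately ignores state income tax and bracket changes. It also leaves out payroll taxes: Social Security and Medicare taxes still apply to traditional contributions, so they do not reduce the take-home cost.

```python
def take_home_cost(pretax_contribution: float, marginal_rate: float) -> float:
    """Reduction in take-home pay caused by a traditional (tax-deferred) contribution.

    Simplified model: only federal income tax is deferred, at one marginal rate.
    """
    if not 0 <= marginal_rate < 1:
        raise ValueError("marginal_rate must be in [0, 1)")
    # Income tax that is not withheld now because the contribution is pre-tax.
    income_tax_deferred = pretax_contribution * marginal_rate
    return pretax_contribution - income_tax_deferred


if __name__ == "__main__":
    # Hypothetical example: raise the contribution by $200 per paycheck at a 22% marginal rate.
    extra = 200.0
    rate = 0.22
    cost = take_home_cost(extra, rate)
    print(f"Extra saved per paycheck: ${extra:.2f}")
    print(f"Drop in take-home pay:    ${cost:.2f}")
```

In this example, an extra $200 goes into the retirement account while take-home pay falls by about $156. The deferred income tax is generally owed later, when the money is withdrawn in retirement.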

As with any other savings plan, there are no guarantees or one-size-fits-all solutions, and finance professionals typically advise diversifying retirement savings (eg, stocks, bonds, real estate), to include both TSP and non-TSP plans in the case of VA employees.

To assess the usefulness of this seminar, the authors conducted a process improvement case study. The institutional review board of the Milwaukee VA Medical Center (VAMC) determined the study to be exempt because it was considered process improvement rather than research. Two assessment measures were created. First, a 5-item anonymous measure of attendee satisfaction was administered immediately after the seminar. It used a 5-point Likert scale from “Needs major improvement” to “Excellent” and assessed the extent to which:

- presenters were engaging;
- material was presented clearly;
- presenters effectively used examples and illustrations;
- presenters effectively used slides/visual aids; and
- objectives were met.

Second, an internally developed anonymous pre- and postseminar survey was administered to assess changes in retirement-related knowledge, attitudes, and behaviors. The preseminar version (8 questions) was given 3 months before the seminar, and the postseminar version (9 questions) 2 months after. The survey assessed the following:

- knowledge of retirement benefits (eg, the difference between Roth and traditional retirement savings plans);
- general investment actions (eg, investing in the TSP, investing in the TSP G fund, and investing enough to earn the full employer match); and
- postseminar actions taken (eg, logging on to tsp.gov, increasing TSP contribution).

Because responses were anonymous, the authors compared average responses before and after the seminar rather than each individual’s pre- and postseminar responses.

Results

About one-third (n = 28) of the Milwaukee VAMC psychologists attended, viewed, or presented/designed the seminar. Of the 12 participants who attended the seminar in person, all rated the presentation as excellent in each domain except 1 participant, who rated it as good. Anecdotally, participants approached presenters immediately after the presentation and up to 2 years later to say that the presentation was a useful retirement planning resource. A total of 27 psychologists completed the preseminar survey. Sixteen psychologists completed the postseminar survey and indicated that they had attended or viewed the retirement seminar. Participants rated their perceived knowledge of retirement benefits using response options that included nonexistent, vague, good, and sophisticated.

There was a significant change from pre- to postseminar: at postseminar, psychologists felt they had a better understanding of their retirement benefit options (Mann-Whitney U = 65.5, n1 = 27, n2 = 16, P < .01). The modal response was “vague” preseminar (67%) and “good” postseminar (88%). Other changes were not statistically significant but may be practically meaningful. The percentage who had moved their money out of the default, low-yield fund increased from 70% preseminar to 88% postseminar (Fisher exact test, 1-sided, P = .31). In addition, fewer respondents on the postseminar survey reported being unsure whether they were invested in a Roth individual retirement account (IRA) or traditional TSP. This suggests a trend toward increased knowledge of their investments (Fisher exact test, 1-sided, P = .076).
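For readers unfamiliar with the two tests, the Python sketch below shows what each computes, using only the standard library.

- **Mann-Whitney U:** the statistic counts, across every pre/post pair of respondents, how often one group's ordinal rating exceeds the other's. Ties count one-half.
- **One-sided Fisher exact test:** the P value sums the hypergeometric probabilities of every 2 × 2 table with the same margins that is at least as extreme as the observed one.

The numeric coding of the knowledge ratings and all counts below are hypothetical toy data, not the survey results. The sketch computes U but not its P value. In practice, `scipy.stats.mannwhitneyu` and `scipy.stats.fisher_exact` provide tested implementations.

```python
from math import comb

# Hypothetical ordinal coding of the perceived-knowledge responses.
KNOWLEDGE = {"nonexistent": 0, "vague": 1, "good": 2, "sophisticated": 3}


def mann_whitney_u(x: list[float], y: list[float]) -> tuple[float, float]:
    """Return (U_x, U_y) by pairwise comparison; a tie adds 0.5 to U_x."""
    u_x = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    u_y = len(x) * len(y) - u_x  # the two statistics always sum to n_x * n_y
    return u_x, u_y


def fisher_one_sided_greater(a: int, b: int, c: int, d: int) -> float:
    """One-sided Fisher exact P value for the 2 x 2 table [[a, b], [c, d]].

    Tests whether row 1 has a higher proportion in column 1 than row 2,
    i.e., P(top-left cell >= a) with all row and column totals held fixed.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)
    # Hypergeometric probabilities of top-left values a, a+1, ..., max possible.
    return sum(comb(row1, k) * comb(n - row1, col1 - k)
               for k in range(a, min(row1, col1) + 1)) / total


if __name__ == "__main__":
    # Toy ratings before and after a seminar (not the study's data).
    pre = [KNOWLEDGE[r] for r in ["vague", "vague", "nonexistent", "good", "vague"]]
    post = [KNOWLEDGE[r] for r in ["good", "good", "vague", "good"]]
    u_post, u_pre = mann_whitney_u(post, pre)
    print("U =", min(u_post, u_pre))  # many texts report the smaller U

    # Toy 2 x 2 table: rows = post, pre; columns = moved money, did not.
    print("one-sided P =", round(fisher_one_sided_greater(3, 1, 1, 3), 4))
```

The toy table [[3, 1], [1, 3]] gives P = 17/70 (about 0.24). The only tables at least as extreme have 3 or 4 in the top-left cell, and these account for 16 and 1, respectively, of the 70 equally likely arrangements.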

Most important, respondents reported several behavior changes at follow-up. More than half (56%) had logged on to the TSP website to check their account. A substantial number (26%) had increased their contribution amount, and 6% had moved money out of the default fund. Overall, every respondent at follow-up reported having taken at least 1 of the actions assessed by the survey.

Conclusion

Based on the authors’ experience and research into financial education among HCPs, we recommend that psychology and other disciplines offer opportunities for retirement education at all levels of training. Financial education is likely to be most helpful if it is tailored to a specific discipline, workplace, and career stage (eg, early career physicians may need more information about loan repayment and may need to invest in more aggressive retirement funds).8 Although many employers provide access to general financial education from outside companies, information provided by knowledgeable members of one’s own field may be particularly helpful (eg, our seminar was curated for a psychology audience).

We found that creating such a seminar was not burdensome and was educational for presenters as well as attendees. Further, gathering information to share need not be intimidating. Especially for health care providers who have not made financial well-being a priority, learning and deploying a few targeted strategies can lead to greater peace of mind about retirement savings. Overall, we encourage a focus on financial literacy in all health care professions, including among physicians, who may often graduate with greater debt. Emphasizing early and aggressive financial literacy as an important aspect of provider well-being may help to produce healthier, wealthier, and overall better health care providers.2

Acknowledgments

This manuscript is partially the result of work supported with resources and the use of facilities at the Clement J. Zablocki VAMC, Milwaukee, Wisconsin. We thank Milwaukee VA retirement specialist, Vicki Heckman, for her invaluable advice in the preparation of these materials and the Psychology Advancement Workgroup at the Milwaukee VAMC for providing the impetus and support for this project.

1. Zhang Y, Feng X. The relationship between job satisfaction, burnout, and turnover intention among physicians from urban state-owned medical institutions in Hubei, China: a cross-sectional study. BMC Health Serv Res. 2011;11(1):235.

2. Chandrakantan A. Why is there no financial literacy 101 for doctors? https://opmed.doximity.com/an-open-call-to-residency-training-programs-and-trainees-to-facilitate-financial-literacy-bb762e585ed8. Published August 21, 2017. Accessed August 22, 2019.

3. Iyengar SS, Huberman G, Jiang W. How much choice is too much: determinants of individual contributions in 401K retirement plans. In: Mitchell OS, Utkus S, eds. Pension Design and Structure: New Lessons From Behavioral Finance. Oxford: Oxford University Press; 2004:83-95.

4. Parker AM, de Bruin WB, Yoong J, Willis R. Inappropriate confidence and retirement planning: four studies with a national sample. J Behav Decis Mak. 2012;25(4):382-389.

5. Lusardi A, Mitchell OS. Baby boomer retirement security: the roles of planning, financial literacy, and housing wealth. J Monet Econ. 2007;54(1):205-224.

6. Chub C. It’s time to teach financial literacy to young doctors. https://www.cnbc.com/2016/12/08/teaching-financial-literacy-to-young-doctors.html. Published December 8, 2016. Accessed August 22, 2019.

7. Binswanger J, Carman KG. How real people make long-term decisions: the case of retirement preparation. J Econ Behav Organ. 2012;81(1):39-60.

8. Knoll MA. The role of behavioral economics and behavioral decision making in Americans’ retirement savings decisions. Soc Secur Bull. 2010;70(4):1-23.

Health care provider (HCP) well-being has become a central topic as health care agencies increasingly recognize that stress leads to turnover and reduced efficacy.1 Financial health of HCPs is one aspect of overall well-being that has received little attention. We all work at the US Department of Veterans Affairs (VA) as psychologists and believe that there is a need to attend to financial literacy within the health care professions, a call that also has been made by physicians.2 For instance, a frequently mentioned aspect of financial literacy involves learning to effectively manage student loan debt. Another less often discussed facet is the need to save money for retirement early in one’s career to reap the benefits of compound interest: This is a particular concern for HCPs who were in graduate/medical school when they would have optimally started saving for retirement. Delaying retirement savings can have significant financial consequences, which can have a negative effect on well-being.

A few years ago, we started teaching advanced psychology trainees about financial well-being and were startled at the students’ lack of knowledge. For example, many students did not understand basic financial concepts, including the difference between a pension and a 401k/403b system of retirement savings—a knowledge gap that the authors speculate persists throughout some professionals’ careers. Research suggests that lack of knowledge in an area feels aversive and may result in procrastination or an inability to move toward a goal.3,4 Yet, postponing saving is problematic as it attenuates the effect of compound interest, thus making it difficult to accrue wealth.5 To address the lack of financial training among psychologists, the authors designed a seminar to provide retirement/financial-planning information to early career psychologists. This information fits the concept of “just in time” education: Disseminating knowledge when it is most likely to be useful, put into practice, and thus retained.6

Methods

In consultation with human resources officials at the VA, a 90-minute seminar was created to educate psychologists about saving for retirement. The seminar was recorded so that psychologists who were not able to attend in-person could view it at a later date. The seminar mainly covered systems of retirement (especially the VAspecific Thrift Savings Plan [TSP]), basic concepts of investing, ways of determining how much to save for retirement, and tax advantages of increased saving. It also provided simple retirement planning rules of thumb, as such heuristics have been shown to lead to greater behavior change than more unsystematic approaches.7 Key points included:

- Psychologists should try to approximately replace their current salary during retirement;

- There is no option to borrow money for retirement; the only sources of income for the retiree are social security, a possible pension, and any money saved;

- Psychologists and many other HCPs were in school during their prime saving years and tend to have lower salaries than that of other professional groups with similar amounts of education, so they should save aggressively earlier in their career;

- Early career psychologists should ensure that money saved for retirement is invested in relatively “aggressive” options, such as stock index funds (vs bond funds); and

- The tax benefits of allocating more income toward retirement savings in a tax-deferred savings plan such as the TSP can make it seem cheaper to invest, which can make it more attractive to immediately increase one’s savings.

As with any other savings plan, there are no guarantees or one-size-fits-all solutions, and finance professionals typically advise diversifying retirement savings (eg, stocks, bonds, real estate), to include both TSP and non-TSP plans in the case of VA employees.

To assess the usefulness of this seminar, the authors conducted a process improvement case study. The institutional review board of the Milwaukee VA Medical Center (VAMC) determined the study to be exempt as it was considered process improvement rather than research. Two assessment measures were created: a 5-item, anonymous measure of attendee satisfaction was administered immediately following the seminar, which assessed the extent to which presenters were engaging, material was presented clearly, presenters effectively used examples and illustrations, presenters effectively used slides/visual aids, and objectives were met (5-point Likert scale from “Needs major improvement” to “Excellent”).

Second, an internally developed anonymous pre- and postseminar survey was administered to assess changes in retirement- related knowledge, attitudes, and behaviors (3 months before the seminar [8 questions] and 2 months after [9 questions]). The survey assessed knowledge of retirement benefits (eg, difference between Roth and traditional retirement savings plans), general investment actions (eg, investing in TSP, investing in the TSP G fund, and investing sufficiently to earn the full employer match), and postseminar actions taken (eg, logging on to tsp.gov, increasing TSP contribution). Participants’ responses were anonymous, so the authors compared average behavior before and after the seminar rather than comparing individuals’ pre- and postseminar comments.

Results

About one-third (n = 28) of the Milwaukee VAMC psychologists attended, viewed, or presented/designed the seminar. Of the 12 participants who attended the seminar in person, all rated the presentation as excellent in each domain, with the exception of 1 participant (good). Anecdotally, participants approached presenters immediately after the presentation and up to 2 years later to indicate that the presentation was a useful retirement planning resource. A total of 27 psychologists completed the preseminar survey. Sixteen psychologists completed the postseminar survey and indicated that they attended/viewed the retirement seminar. Participants’ perceived knowledge of retirement benefits was assessed with response options, including nonexistent, vague, good, and sophisticated.

There was a significant change from preto postseminar, such that psychologists at postseminar felt that they had a better understanding of their retirement benefit options (Mann-Whitney U = 65.5, n1 = 27, n2 = 16, P < .01). The modal response preseminar was “vague” (67%) and postseminar was “good” (88%). There also were changes that were meaningful though not statistically significant: The percentage who had moved their money from the default, low-yield fund increased from 70% at preseminar to 88% at postseminar (Fisher exact test, 1-sided, P = .31). Also, fewer people reported on the postseminar survey that they were not sure whether they were invested in a Roth individual retirement account (IRA) or traditional TSP, indicating a trend toward significantly increased knowledge of their investments (Fisher exact test, 1-sided, P = .076).

Most important at follow-up, several behavior changes were reported. Most people (56%) had logged on to the TSP website to check on their account. A substantial number (26%) increased their contribution amount, and 6% moved money from the default fund. Overall, every respondent at follow-up confirmed having taken at least 1 of the actions assessed by the survey.

Conclusion

Based on the authors’ experience and research into financial education among HCPs, it is recommended that psychologists and other disciplines offer opportunities for retirement education at all levels of training. Financial education is likely to be most helpful if it is tailored toward a specific discipline, workplace, and time frame (eg, early career physicians may need more information about loan repayment and may need to invest in more aggressive retirement funds).8 Although many employers provide access to general financial education from outside companies, information provided by informed members of one’s field may be particularly helpful (eg, our seminar was curated for a psychology audience).

We found that the process of creating such a seminar was not burdensome and was educational for presenters as well as attendees. Further, it need not be intimidating to accumulate information to share; especially for those health care providers who have not made financial well-being a priority, learning and deploying a few targeted strategies can lead to increased peace of mind about retirement savings. Overall, we encourage a focus on financial literacy for all health care professions, including physicians who often may graduate with greater debts. Emphasizing early and aggressive financial literacy as an important aspect of provider well-being may help to produce healthier, wealthier, and overall better health care providers.2

Acknowledgments

This manuscript is partially the result of work supported with resources and the use of facilities at the Clement J. Zablocki VAMC, Milwaukee, Wisconsin. We thank Milwaukee VA retirement specialist, Vicki Heckman, for her invaluable advice in the preparation of these materials and the Psychology Advancement Workgroup at the Milwaukee VAMC for providing the impetus and support for this project.

Health care provider (HCP) well-being has become a central topic as health care agencies increasingly recognize that stress leads to turnover and reduced efficacy.1 Financial health of HCPs is one aspect of overall well-being that has received little attention. We all work at the US Department of Veterans Affairs (VA) as psychologists and believe that there is a need to attend to financial literacy within the health care professions, a call that also has been made by physicians.2 For instance, a frequently mentioned aspect of financial literacy involves learning to effectively manage student loan debt. Another less often discussed facet is the need to save money for retirement early in one’s career to reap the benefits of compound interest: This is a particular concern for HCPs who were in graduate/medical school when they would have optimally started saving for retirement. Delaying retirement savings can have significant financial consequences, which can have a negative effect on well-being.

A few years ago, we started teaching advanced psychology trainees about financial well-being and were startled at the students’ lack of knowledge. For example, many students did not understand basic financial concepts, including the difference between a pension and a 401k/403b system of retirement savings—a knowledge gap that the authors speculate persists throughout some professionals’ careers. Research suggests that lack of knowledge in an area feels aversive and may result in procrastination or an inability to move toward a goal.3,4 Yet, postponing saving is problematic as it attenuates the effect of compound interest, thus making it difficult to accrue wealth.5 To address the lack of financial training among psychologists, the authors designed a seminar to provide retirement/financial-planning information to early career psychologists. This information fits the concept of “just in time” education: Disseminating knowledge when it is most likely to be useful, put into practice, and thus retained.6

Methods

In consultation with human resources officials at the VA, a 90-minute seminar was created to educate psychologists about saving for retirement. The seminar was recorded so that psychologists who were not able to attend in-person could view it at a later date. The seminar mainly covered systems of retirement (especially the VAspecific Thrift Savings Plan [TSP]), basic concepts of investing, ways of determining how much to save for retirement, and tax advantages of increased saving. It also provided simple retirement planning rules of thumb, as such heuristics have been shown to lead to greater behavior change than more unsystematic approaches.7 Key points included:

- Psychologists should try to approximately replace their current salary during retirement;

- There is no option to borrow money for retirement; the only sources of income for the retiree are social security, a possible pension, and any money saved;

- Psychologists and many other HCPs were in school during their prime saving years and tend to have lower salaries than that of other professional groups with similar amounts of education, so they should save aggressively earlier in their career;

- Early career psychologists should ensure that money saved for retirement is invested in relatively “aggressive” options, such as stock index funds (vs bond funds); and

- The tax benefits of allocating more income toward retirement savings in a tax-deferred savings plan such as the TSP can make it seem cheaper to invest, which can make it more attractive to immediately increase one’s savings.

As with any other savings plan, there are no guarantees or one-size-fits-all solutions, and finance professionals typically advise diversifying retirement savings (eg, stocks, bonds, real estate), to include both TSP and non-TSP plans in the case of VA employees.

To assess the usefulness of this seminar, the authors conducted a process improvement case study. The institutional review board of the Milwaukee VA Medical Center (VAMC) determined the study to be exempt as it was considered process improvement rather than research. Two assessment measures were created: a 5-item, anonymous measure of attendee satisfaction was administered immediately following the seminar, which assessed the extent to which presenters were engaging, material was presented clearly, presenters effectively used examples and illustrations, presenters effectively used slides/visual aids, and objectives were met (5-point Likert scale from “Needs major improvement” to “Excellent”).

Second, an internally developed anonymous pre- and postseminar survey was administered to assess changes in retirement- related knowledge, attitudes, and behaviors (3 months before the seminar [8 questions] and 2 months after [9 questions]). The survey assessed knowledge of retirement benefits (eg, difference between Roth and traditional retirement savings plans), general investment actions (eg, investing in TSP, investing in the TSP G fund, and investing sufficiently to earn the full employer match), and postseminar actions taken (eg, logging on to tsp.gov, increasing TSP contribution). Participants’ responses were anonymous, so the authors compared average behavior before and after the seminar rather than comparing individuals’ pre- and postseminar comments.

Results

About one-third (n = 28) of the Milwaukee VAMC psychologists attended, viewed, or presented/designed the seminar. Of the 12 participants who attended the seminar in person, all rated the presentation as excellent in each domain, with the exception of 1 participant (good). Anecdotally, participants approached presenters immediately after the presentation and up to 2 years later to indicate that the presentation was a useful retirement planning resource. A total of 27 psychologists completed the preseminar survey. Sixteen psychologists completed the postseminar survey and indicated that they attended/viewed the retirement seminar. Participants’ perceived knowledge of retirement benefits was assessed with response options, including nonexistent, vague, good, and sophisticated.

There was a significant change from preto postseminar, such that psychologists at postseminar felt that they had a better understanding of their retirement benefit options (Mann-Whitney U = 65.5, n1 = 27, n2 = 16, P < .01). The modal response preseminar was “vague” (67%) and postseminar was “good” (88%). There also were changes that were meaningful though not statistically significant: The percentage who had moved their money from the default, low-yield fund increased from 70% at preseminar to 88% at postseminar (Fisher exact test, 1-sided, P = .31). Also, fewer people reported on the postseminar survey that they were not sure whether they were invested in a Roth individual retirement account (IRA) or traditional TSP, indicating a trend toward significantly increased knowledge of their investments (Fisher exact test, 1-sided, P = .076).

Most important at follow-up, several behavior changes were reported. Most people (56%) had logged on to the TSP website to check on their account. A substantial number (26%) increased their contribution amount, and 6% moved money from the default fund. Overall, every respondent at follow-up confirmed having taken at least 1 of the actions assessed by the survey.

Conclusion

Based on the authors’ experience and research into financial education among HCPs, it is recommended that psychologists and other disciplines offer opportunities for retirement education at all levels of training. Financial education is likely to be most helpful if it is tailored toward a specific discipline, workplace, and time frame (eg, early career physicians may need more information about loan repayment and may need to invest in more aggressive retirement funds).8 Although many employers provide access to general financial education from outside companies, information provided by informed members of one’s field may be particularly helpful (eg, our seminar was curated for a psychology audience).

We found that the process of creating such a seminar was not burdensome and was educational for presenters as well as attendees. Further, it need not be intimidating to accumulate information to share; especially for those health care providers who have not made financial well-being a priority, learning and deploying a few targeted strategies can lead to increased peace of mind about retirement savings. Overall, we encourage a focus on financial literacy for all health care professions, including physicians who often may graduate with greater debts. Emphasizing early and aggressive financial literacy as an important aspect of provider well-being may help to produce healthier, wealthier, and overall better health care providers.2

Acknowledgments

This manuscript is partially the result of work supported with resources and the use of facilities at the Clement J. Zablocki VAMC, Milwaukee, Wisconsin. We thank Milwaukee VA retirement specialist, Vicki Heckman, for her invaluable advice in the preparation of these materials and the Psychology Advancement Workgroup at the Milwaukee VAMC for providing the impetus and support for this project.

1. Zhang Y, Feng X. The relationship between job satisfaction, burnout, and turnover intention among physicians from urban state-owned medical institutions in Hubei, China: a cross-sectional study. BMC Health Serv Res. 2011;11(1):235.

2. Chandrakantan A. Why is there no financial literacy 101 for doctors? https://opmed.doximity.com/an-open -call-to-residency-training-programs-and-trainees-to -facilitate-financial-literacy-bb762e585ed8. Published August 21, 2017. Accessed August 22, 2019.

3. Iyengar SS, Huberman G, Jiang W. How much choice is too much: determinants of individual contributions in 401K retirement plans. In: Mitchell OS, Utkus S, eds. Pension Design and Structure: New Lessons From Behavioral Finance. Oxford: Oxford University Press; 2004:83-95.

4. Parker AM, de Bruin WB, Yoong J, Willis R. Inappropriate confidence and retirement planning: four studies with a national sample. J Behav Decis Mak. 2012;25(4):382-389.

5. Lusardi A, Mitchell OS. Baby boomer retirement security: the roles of planning, financial literacy, and housing wealth. J Monet Econ. 2007;54(1):205-224.

6. Chub C. It’s time to teach financial literacy to young doctors. https://www.cnbc.com/2016/12/08/teaching-financial-literacy-to-young-doctors.html. Published December 8, 2016. Accessed August 22, 2019.

7. Binswanger J, Carman KG. How real people make long-term decisions: the case of retirement preparation. J Econ Behav Org. 2012;81(1):39-60.

8. Knoll MA. The role of behavioral economics and behavioral decision making in Americans’ retirement savings decisions. Soc Secur Bull. 2010;70(4):1-23.

Talking to overweight children

You are seeing a 9-year-old for her annual health maintenance visit. A quick look at her growth chart easily confirms your first impression that she is obese. How are you going to address the weight that you know, and she probably suspects, is going to make her vulnerable to a myriad of health problems as she gets older?

If she has been your patient since she was in preschool, this is certainly not the first time that her growth chart has been concerning. When did you first start discussing her weight with her parents? What words did you use? What strategies have you suggested? What referrals have you made? Maybe you have already given up and decided to not even “go there” at this visit because your experience with overweight patients has been so disappointing.

In her op-ed in The New York Times (The Checkup, “Helping Children Learn to Eat Well,” Aug. 26, 2019), Dr. Perri Klass reconsiders these kinds of questions as she reviews an article in the journal Childhood Obesity (“Let’s Not Just Dismiss the Weight Watchers Kurbo App,” by Michelle I. Cardel, PhD, MS, RD, and Elsie M. Taveras, MD, MPH, August 2019). Its authors, a nutrition scientist and a pediatrician, are concerned about a new weight loss app for children recently released by Weight Watchers. Although they question some of the science behind the app, their primary concerns are that the app is aimed at children without a way to guarantee parental involvement and that, in their opinion, it places too much emphasis on weight loss.

Their concerns go right to the heart of what troubles me about managing obesity in children. How should I talk to a child about her weight? What words can I choose without shaming? Maybe I shouldn’t be talking to her at all. When a child is 18 months old, we don’t talk to her about her growth chart. Not because she couldn’t understand, but because the solution rests not with her but with her parents.

Does that point come when we have given up on the parents’ ability to create and maintain an environment that discourages obesity? Is that the point when we begin asking the child to unlearn a complex set of behaviors that have been enabled or at least tolerated and poorly modeled at home?

When we begin to talk to a child about his weight, do we begin by telling him that he may not have contributed to the problem when it began but that from now on he needs to be a major player in its management? Of course we don’t share that reality with an 8-year-old, but at some point during his struggle to manage his weight he will connect the dots.

If you are beginning to suspect that I have built my pediatric career around a scaffolding of parent blaming and shaming, you are wrong. I know that there are children who have inherited a suite of genes that make them vulnerable to obesity. And I know that too many children grow up in environments in which their parents are powerless to control the family diet for economic reasons. But I am sure that, like me, you mutter to yourself and your colleagues about the number of patients you see each day whose growth charts clearly reflect less than optimal parenting.

Does all of this mean we throw in the towel and stop trying to help overweight children after they turn 6 years old? Of course not. But it does mean we must redouble our efforts to help parents manage their children’s diets and activity levels in those first critical preschool years.

Dr. Wilkoff practiced primary care pediatrics in Brunswick, Maine, for nearly 40 years. He has authored several books on behavioral pediatrics, including “How to Say No to Your Toddler.” Email him at [email protected].

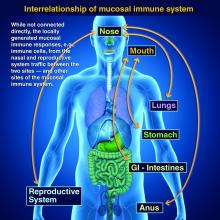

ID Blog: The story of syphilis, part II

From epidemic to endemic curse

Evolution is an amazing thing, and its more fascinating aspects are never more apparent than in the endless genetic dance between host and pathogen. And certainly, our fascination with the dance is not merely an intellectual exercise. The evolution of disease is perhaps one of the starkest examples of human misery writ large across the pages of recorded history.