Remember that preeclampsia has a ‘fourth trimester’

NEW ORLEANS – Preeclampsia does not necessarily end at delivery, according to Natalie Bello, MD, a cardiologist and assistant professor of medicine at Columbia University in New York.

In medical school, “they told me that you deliver the placenta, and the preeclampsia goes away. Not the case. Postpartum preeclampsia is a real thing. We are seeing a lot of it at our sites, which have a lot of underserved women who hadn’t had great prenatal care” elsewhere, she said.

Headache and visual changes in association with hypertension during what’s been dubbed “the fourth trimester” raise suspicions. Women can progress rapidly to eclampsia and HELLP syndrome (hemolysis, elevated liver enzymes, low platelet count), but sometimes providers don’t recognize what’s going on because they don’t know women have recently given birth. “We can do better; we should be doing better. Please always ask women if they’ve delivered recently,” Dr. Bello said at the joint scientific sessions of the American Heart Association Council on Hypertension, AHA Council on Kidney in Cardiovascular Disease, and American Society of Hypertension.

Hypertension should resolve within 6 weeks of delivery, and blood pressure should be back to baseline by 3 months. To make sure that happens, BP should be checked within a few days of birth and regularly thereafter. It can be tough to get busy and tired new moms back into the office, but “they’ll do whatever it takes” to get their baby to a pediatric appointment, so maybe having pediatricians involved in checking blood pressure would help, she said.

The cutoff point for hypertension in pregnancy is 140/90 mm Hg, and it’s considered severe when values hit 160/110 mm Hg or higher. Evidence is strong for treating severe hypertension to reduce strokes, placental abruptions, and other problems, but the data for treating nonsevere hypertension are less clear, Dr. Bello explained.
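The two cutoffs can be expressed as a simple rule. The Python sketch below is illustrative only and rests on stated assumptions: a single reading counts if either the systolic or the diastolic value meets the cutoff, and the repeat-measurement and timing rules used for formal diagnosis are ignored. The function name `classify_pregnancy_bp` is hypothetical.

```python
def classify_pregnancy_bp(systolic: int, diastolic: int) -> str:
    """Label one blood pressure reading (mm Hg) using the 140/90 and 160/110 cutoffs.

    Assumption: a reading qualifies if EITHER value meets the cutoff.
    Single reading only; repeat-measurement rules are not modeled.
    """
    # Check the severe range first so it takes precedence over nonsevere.
    if systolic >= 160 or diastolic >= 110:
        return "severe"
    if systolic >= 140 or diastolic >= 90:
        return "nonsevere"
    return "below cutoff"


for reading in [(128, 82), (146, 92), (165, 95)]:
    print(reading, classify_pregnancy_bp(*reading))
# (128, 82) below cutoff
# (146, 92) nonsevere
# (165, 95) severe
```

Checking the severe range first means a reading such as 165/95 mm Hg is labeled severe rather than merely hypertensive.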

The Chronic Hypertension and Pregnancy (CHAP) trial is expected to fill the evidence gap in a few years; women are being randomized to start treatment at either 140/90 mm Hg or 160/105 mm Hg. Meanwhile, the American College of Obstetricians and Gynecologists recently suggested that treatment of nonsevere hypertension might be appropriate in the setting of comorbidities and renal dysfunction (Obstet Gynecol. 2019 Jan;133[1]:e26-e50).

Dr. Bello prefers treating with the extended-release calcium channel blocker nifedipine over the beta-blocker labetalol. “We think it is a little more effective,” and once-daily dosing, instead of two or three times a day, helps with compliance. Thiazide diuretics and hydralazine are also in her arsenal, but hydralazine shouldn’t be used alone because of its risk of reflex tachycardia. The old standby, the antiadrenergic methyldopa, has fallen out of favor because of depression and other concerns. Angiotensin-converting enzyme inhibitors, angiotensin receptor blockers, renin inhibitors, and mineralocorticoid receptor antagonists shouldn’t be used in pregnancy, she said.

Intravenous labetalol and short-acting oral nifedipine are the mainstays for urgent, severe hypertension, along with high-dose intravenous magnesium, especially for seizure control. IV hydralazine or nitroglycerin are other options, the latter particularly for pulmonary edema. “Be careful of synergistic hypotension with magnesium and nifedipine,” Dr. Bello said.

Dr. Bello didn’t have any industry disclosures.

EXPERT ANALYSIS FROM JOINT HYPERTENSION 2019

‘Fast MRI’ may be option in TBI screening for children

“Fast MRI,” which allows scans to be taken quickly without sedation, is a “reasonable alternative” to screen certain younger children for traumatic brain injury, a new study found.

The fast MRI option has “the potential to eliminate ionizing radiation exposure for thousands of children each year,” the study authors wrote in Pediatrics. “The ability to complete imaging in about 6 minutes, without the need for anesthesia or sedation, suggests that fast MRI is appropriate even in acute settings, where patient throughput is a priority.”

Daniel M. Lindberg, MD, of the University of Colorado at Denver, Aurora, and associates wrote that children make between 600,000 and 1.6 million ED visits in the United States each year for evaluation of possible traumatic brain injury (TBI). While the incidence of clinically significant injury from TBI is low, 20%-70% of these children are exposed to potentially dangerous radiation as they undergo CT.

The new study focuses on fast MRI. Unlike traditional MRI, it doesn’t require children to remain motionless – typically with the help of sedation – to be scanned.

The researchers performed fast MRI in 223 children aged younger than 6 years (median age, 12.6 months; interquartile range, 4.7-32.6) who sought emergency care at a level 1 pediatric trauma center from 2015 to 2018. All of them had also undergone CT.

CT identified TBI in 111 (50%) of the subjects, while fast MRI identified it in 103 (sensitivity, 92.8%; 95% confidence interval, 86.3-96.8). Fast MRI missed six participants with isolated skull fractures and two with subarachnoid hemorrhage; CT missed five participants with subdural hematomas, parenchymal contusions, and subarachnoid hemorrhage.
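The sensitivity figure follows from the reported counts: fast MRI flagged 103 of the 111 children whose TBI was identified on CT. The Python sketch below reproduces that arithmetic and computes an exact (Clopper-Pearson) binomial 95% confidence interval. That the authors used the Clopper-Pearson method is an assumption, and the helper names are hypothetical. Note also that sensitivity here is measured against CT, which itself missed five injuries.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(g, target: float, increasing: bool, iters: int = 100) -> float:
    """Find p in [0, 1] with g(p) close to target, for g monotone in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        # For an increasing g, a value below target means p must go up;
        # for a decreasing g, it means p must go down.
        if (g(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided confidence interval for a binomial proportion x/n."""
    # Lower bound: the p at which P(X >= x) equals alpha/2.
    lower = 0.0 if x == 0 else _bisect(
        lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2, increasing=True)
    # Upper bound: the p at which P(X <= x) equals alpha/2.
    upper = 1.0 if x == n else _bisect(
        lambda p: binom_cdf(x, n, p), alpha / 2, increasing=False)
    return lower, upper


true_positives, ct_positive = 103, 111  # fast MRI detections among CT-positive children
sensitivity = true_positives / ct_positive
low, high = clopper_pearson(true_positives, ct_positive)
print(f"sensitivity {sensitivity:.1%} (95% CI {low:.1%}-{high:.1%})")
```

Bisection keeps the sketch within the standard library. Where SciPy is available, `scipy.stats.beta.ppf` gives the same Clopper-Pearson bounds directly.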

While the researchers hoped for a higher sensitivity level, they wrote that “we feel that the benefit of avoiding radiation exposure outweighs the concern for missed injury.”

In a commentary, Brett Burstein, MDCM, PhD, MPH, and Christine Saint-Martin, MDCM, MSc, of Montreal Children’s Hospital and McGill University Health Center, both in Montreal, wrote that the study is “well conducted.”

However, they noted that “the reported feasibility reflects a highly selected cohort of stable patients in whom fast MRI is already likely to succeed. Feasibility results in a more generalizable population of head-injured children cannot be extrapolated.”

And, they added, “fast MRI was unavailable for 65 of 299 consenting, eligible patients because of lack of overnight staffing. Although not included among the outcome definitions of imaging time, this would be an important ‘feasibility’ consideration in most centers.”

Dr. Burstein and Dr. Saint-Martin wrote that “centers migrating toward this modality for neuroimaging children with head injuries should still use clinical judgment and highly sensitive, validated clinical decision rules when determining the need for any neuroimaging for head-injured children.”

The study was funded by the Colorado Traumatic Brain Injury Trust Fund (MindSource) and the Colorado Clinical and Translational Sciences Institute. The study and commentary authors reported no relevant financial disclosures.

SOURCES: Lindberg DM et al. Pediatrics. 2019 Sep 18. doi: 10.1542/peds.2019-0419; Burstein B, Saint-Martin C. Pediatrics. 2019 Sep 18. doi: 10.1542/peds.2019-2387.

FROM PEDIATRICS

Subchorionic hematomas not associated with adverse pregnancy outcomes

Subchorionic hematomas in the first trimester were not associated with adverse pregnancy outcomes after 20 weeks’ gestation in singleton pregnancies, according to Mackenzie N. Naert of the Icahn School of Medicine at Mount Sinai, New York, and associates.

The investigators conducted a retrospective study, published in Obstetrics & Gynecology, of all women who presented for prenatal care before 14 weeks’ gestation at a single maternal-fetal medicine practice between January 2015 and December 2017. Of the 2,172 women with singleton pregnancies included in the analysis, 389 (18%) had a subchorionic hematoma.

Women with subchorionic hematomas were more likely to have their first ultrasound at an earlier gestational age (8 5/7 weeks vs. 9 6/7 weeks; P less than .001) and to have vaginal bleeding at the time of the ultrasound exam (32% vs. 8%; P less than .001). No other differences in baseline characteristics were observed, and in univariable analysis, subchorionic hematoma was not associated with any of the measured adverse outcomes, including preterm birth, low birth weight, preeclampsia, gestational hypertension, placental abruption, intrauterine fetal death, cesarean section, blood transfusion, or antepartum admission.

In a regression analysis that included subchorionic hematoma, vaginal bleeding, and gestational age at ultrasound examination, vaginal bleeding had an independent association with preterm birth at less than 37 weeks’ gestation (adjusted odds ratio, 1.8; 95% confidence interval, 1.2-2.6) and birth weight less than the 10th percentile (aOR, 1.8; 95% CI, 1.2-2.6). No independent association was found for subchorionic hematoma.

“Most subchorionic hematomas present during the first trimester resolved by the second trimester,” the investigators wrote. “Therefore, women diagnosed with a first-trimester subchorionic hematoma should be reassured that their rate of adverse pregnancy outcomes at more than 20 weeks of gestation is not affected by the presence of the subchorionic hematoma. Additionally, we have previously shown that first-trimester subchorionic hematoma is not associated with pregnancy loss at less than 20 weeks of gestation.”

The authors reported no conflicts of interest.

SOURCE: Naert MN et al. Obstet Gynecol. 2019 Sep 10. doi: 10.1097/AOG.0000000000003487.

FROM OBSTETRICS AND GYNECOLOGY

Indwelling endoscopic biliary stents reduced risk of recurrent strictures in chronic pancreatitis

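In patients with chronic pancreatitis and benign biliary strictures, a single fully covered self-expanding metal stent left in place for 10-12 months allowed more than 60% to remain symptom free for up to 5 years without additional intervention, Sundeep Lakhtakia, MD, and colleagues reported in Gastrointestinal Endoscopy.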

Patients with severe disease at baseline were more than twice as likely to develop a postprocedural stricture (odds ratio, 2.4). Longer baseline stricture length was less predictive, but it was still significantly associated with increased risk (OR, 1.2), according to Dr. Lakhtakia of the Asian Institute of Gastroenterology, Hyderabad, India, and coauthors.

The results indicate that indwelling biliary stenting is a reasonable and beneficial procedure for many of these patients, wrote Dr. Lakhtakia and coauthors.

“The major message to be taken from this study is that in patients with chronic [symptomatic] pancreatitis ... associated with benign biliary strictures, the single placement of a fully covered self-expanding metal stent for an intended indwell of 10-12 months allows more than 60% to remain free of symptoms up to 5 years later without additional intervention.”

The prospective nonrandomized study comprised 118 patients with chronic symptomatic pancreatitis and benign biliary strictures. All received a stent with removal scheduled for 10-12 months later. Patients were followed for 5 years. The primary endpoints were stricture resolution and freedom from recurrence at the end of follow-up.

Patients were a mean of 52 years old; most (83%) were male. At baseline, the mean total bilirubin was 1.4 mg/dL, and the mean alkaline phosphatase level was 338.7 IU/L. Mean stricture length was 23.7 mm, ranging from 7.2 to 40 mm. Severe disease was present in 70%.

Among the cohort, five cases (4.2%) were considered treatment failures, with four lost to follow-up and one treated surgically for chronic pancreatitis progression. Another five experienced a spontaneous complete distal stent migration. The rest of the cohort (108) had their scheduled stent removal. At that time, 95 of the 118 experienced successful stent removal, without serious adverse events or the need for immediate replacement.

At 5 years, patients were reassessed, with the primary follow-up endpoint of stricture resolution. Secondary endpoints were time to stricture recurrence and/or changes in liver function tests. Overall, 79.7% (94) of the overall cohort showed stricture resolution at 5 years.

Among the 108 who had a successful removal, a longer stent indwell time was associated with a lower likelihood of stent replacement. Among those with the longer indwell (median, 344 days), the odds of replacement were 34% lower (OR, 0.66). Of the 94 patients with stricture resolution at stent removal, 77.4% remained stent free at 5 years.
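The patient-flow figures can be cross-checked with simple arithmetic. This Python sketch uses only counts stated in the article and confirms the 108 scheduled removals, the 4.2% treatment-failure rate, and the 79.7% resolution rate. It also shows that the 34% figure equals 1 minus the odds ratio of 0.66, which makes it a reduction in odds rather than strictly in risk. The constant names and the `pct` helper are hypothetical.

```python
# Counts as reported in the article (n = 118 enrolled).
ENROLLED = 118
TREATMENT_FAILURES = 5      # 4 lost to follow-up + 1 treated surgically
SPONTANEOUS_MIGRATIONS = 5  # complete distal stent migration
RESOLVED = 94               # stricture resolution in the overall cohort
INDWELL_ODDS_RATIO = 0.66   # longer indwell vs. shorter


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


scheduled_removals = ENROLLED - TREATMENT_FAILURES - SPONTANEOUS_MIGRATIONS
odds_reduction = round(100 * (1 - INDWELL_ODDS_RATIO))

print(scheduled_removals)                 # 108
print(pct(TREATMENT_FAILURES, ENROLLED))  # 4.2
print(pct(RESOLVED, ENROLLED))            # 79.7
print(odds_reduction)                     # 34
```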

At the end of follow-up, 56 patients had symptomatic data available. Most (53) had not experienced symptoms of biliary obstruction and/or cholestasis. The other three had been symptom free at 48 months but had incomplete or missing 5-year data.

By 5 years, 19 patients needed a new stent. Of these, 13 had symptoms of biliary obstruction.

About 23% of stented patients had a stent-related serious adverse event. These included cholangitis (9.3%), abdominal pain (5%), pancreatitis (3.4%), cholecystitis (2%), and cholestasis (1.7%).

About 80% of the 19 patients who had a stricture recurrence experienced a serious adverse event in the month before recurrent stent placement. The most common were cholangitis, cholestasis, abdominal pain, and cholelithiasis.

In a univariate analysis, recurrence risk was significantly associated with severe baseline disease and longer stricture length. The associations remained significant in the multivariate model.

“Strikingly, patients with initial stricture resolution at [stent] removal ... were very likely to have long-term stricture resolution,” the authors noted.

Dr. Lakhtakia had no financial disclosures.

SOURCE: Lakhtakia S et al. Gastrointest Endosc. 2019. doi: 10.1016/j.gie.2019.08.037.

FROM GASTROINTESTINAL ENDOSCOPY

Genetic analysis highlights value of germline variants in MDS, AML

Germline DDX41 mutations were relatively prevalent and were associated with favorable outcomes in a cohort of patients with myelodysplastic syndromes or acute myeloid leukemia, according to a genetic analysis.

The results suggest that systematic genetic testing for DDX41 mutations may aid clinical decision making for adults with myelodysplastic syndromes (MDS) or acute myeloid leukemia (AML).

“We screened a large, unselected cohort of adult patients diagnosed with MDS/AML to analyze the biological and clinical features of DDX41-related myeloid malignancies,” wrote Marie Sébert, MD, PhD, of Hôpital Saint Louis in Paris and colleagues. The results were published in Blood.

The researchers used next-generation sequencing to analyze blood and bone marrow samples from 1,385 patients with MDS or AML to detect DDX41 mutations. A variant allele frequency greater than 0.4 was regarded as indicative of germline origin, and only rare variants (minor allele frequency less than 0.01) were included.
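The two thresholds in the preceding paragraph amount to a simple filter. The sketch below applies them to a list of variant calls; the `Variant` record, its field names, and the example values are hypothetical and do not come from the study, and real sequencing pipelines apply many additional quality filters before this step.

```python
from dataclasses import dataclass

# Thresholds as reported in the article; everything else is illustrative.
GERMLINE_VAF_MIN = 0.4   # VAF above this was taken as suggesting germline origin
RARE_MAF_MAX = 0.01      # population minor allele frequency below this = rare


@dataclass
class Variant:
    """Hypothetical variant call; field names are assumptions."""
    gene: str
    label: str
    vaf: float  # variant allele frequency in the patient sample (0-1)
    maf: float  # minor allele frequency in a population database (0-1)


def is_candidate_germline(v: Variant) -> bool:
    """True if the call passes both the germline-VAF and the rarity threshold."""
    return v.vaf > GERMLINE_VAF_MIN and v.maf < RARE_MAF_MAX


def filter_candidates(calls: list[Variant]) -> list[Variant]:
    """Keep only DDX41 calls that pass both thresholds."""
    return [v for v in calls if v.gene == "DDX41" and is_candidate_germline(v)]


if __name__ == "__main__":
    calls = [
        Variant("DDX41", "hypothetical_A", vaf=0.48, maf=0.0001),  # passes both
        Variant("DDX41", "hypothetical_B", vaf=0.12, maf=0.0001),  # VAF too low for the germline cutoff
        Variant("DDX41", "hypothetical_C", vaf=0.51, maf=0.05),    # too common in the population
    ]
    for v in filter_candidates(calls):
        print(v.label)
```

Note that the VAF cutoff is strict (a VAF of exactly 0.4 does not pass), mirroring the article's "greater than 0.4" wording.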

The team analyzed various parameters relevant to DDX41-related myeloid disorders, including patient demographics, karyotyping, and treatment response rates, in addition to the prevalence of DDX41-related malignancies.

A total of 28 distinct germline DDX41 variants were detected among 43 unrelated patients. The researchers classified 21 of the variants as causal, with the rest being of unknown significance.

“We focused on the 33 patients having causal variants, representing 2.4% of our cohort,” they wrote.

The majority of patients with DDX41-related MDS/AML were male, with a median age of 69 years. Only 27% had a family history of blood malignancies, and most (85%) had a normal karyotype.

With respect to treatment, most high-risk patients received either azacitidine or intensive chemotherapy, with overall response rates of 73% and 100%, respectively. The median overall survival was 5.2 years.

There are currently no consensus recommendations on genetic counseling and follow-up of asymptomatic carriers, and more studies are needed to refine clinical management and genetic counseling, the researchers wrote. “However, in our experience, DDX41-mutated patients frequently presented mild cytopenias years before overt hematological myeloid malignancy, suggesting that watchful surveillance would allow the detection of disease evolution.”

No funding sources were reported, and the authors reported having no conflicts of interest.

SOURCE: Sébert M et al. Blood. 2019 Sep 4. doi: 10.1182/blood.2019000909.

FROM BLOOD

Juvenile dermatomyositis derails growth and pubertal development

Children with juvenile dermatomyositis showed significant growth failure and pubertal delay, based on data from a longitudinal cohort study.

“Both the inflammatory activity of this severe chronic rheumatic disease and the well-known side effects of corticosteroid treatment may interfere with normal growth and pubertal development of children,” wrote Ellen Nordal, MD, of the University Hospital of Northern Norway, Tromsø, and colleagues.

The goal in treating juvenile dermatomyositis (JDM) is to achieve inactive disease and prevent permanent damage, but long-term data on growth and puberty in JDM patients are limited, they wrote.

In a study published in Arthritis Care & Research, the investigators reviewed data from 196 children and followed them for 2 years. The patients were part of the Paediatric Rheumatology International Trials Organisation (PRINTO) observational cohort study.

Overall, the researchers identified growth failure, height deflection, and/or delayed puberty in 94 children (48%) at the last study visit.

Growth failure was present at baseline in 17% of girls and 10% of boys. Over the 2-year study period, height deflection, defined as a change in the height z score of less than –0.25 per year from baseline, increased to 25% of girls and 31% of boys, but this change was not significant. In contrast, body mass index increased significantly from baseline during the study.
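The height-deflection definition reduces to an annualized change in height z score. A minimal sketch, assuming z scores have already been computed against a growth reference and that follow-up time is measured in years; the function names and example values are hypothetical, not taken from the study.

```python
HEIGHT_DEFLECTION_CUTOFF = -0.25  # z-score units per year, per the study definition


def annual_z_change(z_baseline: float, z_followup: float, years: float) -> float:
    """Change in height z score per year between two visits."""
    if years <= 0:
        raise ValueError("follow-up time must be positive")
    return (z_followup - z_baseline) / years


def has_height_deflection(z_baseline: float, z_followup: float, years: float) -> bool:
    """True if the height z score fell by more than 0.25 per year."""
    return annual_z_change(z_baseline, z_followup, years) < HEIGHT_DEFLECTION_CUTOFF


if __name__ == "__main__":
    # Hypothetical child: z score drops from -0.2 to -1.0 over 2 years (-0.4 per year).
    print(has_height_deflection(-0.2, -1.0, 2.0))
    # Hypothetical child: z score drops from 0.1 to -0.2 over 2 years (-0.15 per year).
    print(has_height_deflection(0.1, -0.2, 2.0))
```

Annualizing the change lets children with slightly different follow-up intervals (the final visit was at approximately 26 months) be compared against the same cutoff.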

Catch-up growth had occurred by the final study visit in some patients, based on parent-adjusted z scores over time. Girls with a disease duration of 12 months or more showed no catch-up growth at 2 years and had significantly lower parent-adjusted height z scores.

In addition, the researchers observed a delay in the onset of puberty (including pubertal tempo and menarche) in approximately 36% of both boys and girls. However, neither growth failure nor height deflection was significantly associated with delayed puberty in either sex.

“In follow-up, clinicians should therefore be aware of both the pubertal development and the growth of the child, assess the milestones of development, and ensure that the children reach as much as possible of their genetic potential,” the researchers wrote.

The study participants were younger than 18 years at study enrollment, and all were in an active disease phase, defined as needing to start or receive a major dose increase of corticosteroids and/or immunosuppressants. Patients were assessed at baseline, at 6 months and/or at 12 months, and during a final visit at approximately 26 months. During the study, approximately half of the participants (50.5%) received methotrexate, 30 (15.3%) received cyclosporine A, 10 (5.1%) received cyclophosphamide, and 27 (13.8%) received intravenous immunoglobulin.

The study findings were limited by several factors, including the short follow-up period for assessing pubertal development and the inability to analyze any impact of corticosteroid use prior to the study, the researchers noted. However, “the overall frequency of growth failure was not significantly higher at the final study visit 2 years after baseline, indicating that the very high doses of corticosteroid treatment given during the study period is reasonably well tolerated with regards to growth,” they wrote. But monitoring remains essential, especially for children with previous growth failure or with disease onset early in pubertal development.

The study was supported by the European Union, Helse Nord Research grants, and by IRCCS Istituto Giannina Gaslini. Five authors of the study reported financial relationships with pharmaceutical companies.

SOURCE: Nordal E et al. Arthritis Care Res. 2019 Sep 10. doi: 10.1002/acr.24065.

FROM ARTHRITIS CARE & RESEARCH

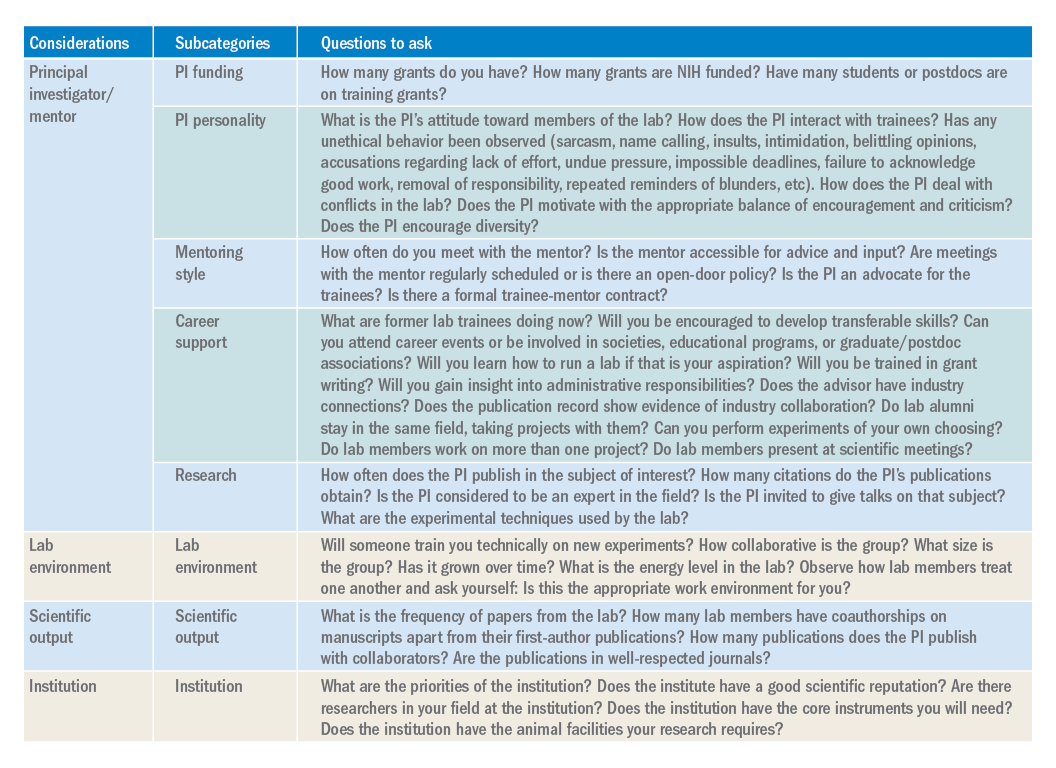

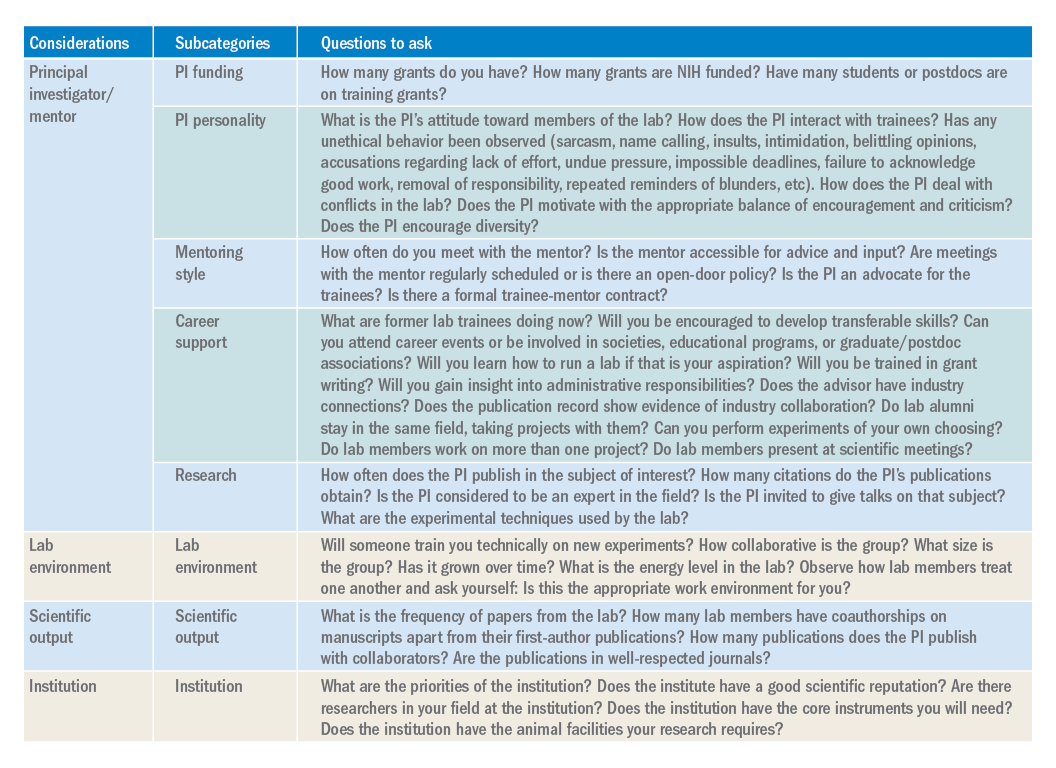

Not all labs are created equal: What to look for when searching for the right lab

Introduction

As researchers, we spend a substantial period of our careers as trainees in a lab. For graduate students and postdoctoral fellows, selecting the “right” lab can make a huge difference to your future career and your mental health. Although much of the decision rests on instinct, logic and reason can still be applied to make sure the choice is a good fit.

PI/mentor

PI funding. The history of funding and the funding of your specific project are important factors to consider. No matter how significant the scientific question, inadequate funding can be paralyzing. For both graduate students and postdoctoral fellows, it is in your best interest to inquire proactively about the funding status of the lab. You can do this by searching online databases such as NIH RePORTER or Grantome and by asking the PI directly. It is equally important to identify which grant or fellowship will fund your stipend and to make sure that the funding is secure for 4-6 years.

PI personality. For better or for worse, the PI largely sets the tone for the lab, and the PI’s personality can be a key factor in whether a lab is the right “fit” for a trainee. Ask your peers and current and past lab members what it is like to work with the PI. As you speak to lab members and alumni, don’t disregard warning signs of unethical behavior, bullying, or harassment. Even with the rise of movements such as #MeTooSTEM, academic misconduct is hard to correct, so it is far better to avoid these labs. If available, examine the PI’s social media profiles (LinkedIn, Twitter, Facebook, etc.) or read previously published interviews, and look for the PI’s attitude toward lab members.

Mentoring style. The NIH emphasizes mentorship in numerous funding mechanisms, so it isn’t surprising that selecting an appropriate mentor is paramount for a successful training experience. Much of this decision depends on knowing yourself as a trainee. Reflect on what type of scientific mentor will best fit your needs, and honestly evaluate your own communication style, expectations, and long-term career goals. Ask the PI and lab members questions that reveal the PI’s mentoring style. Find a mentor who takes his or her responsibility to train you seriously and who genuinely cares about the well-being of the lab personnel. Good mentors advocate for their trainees inside and outside of the lab, and a mentor’s vocal support of talented trainees can help propel them toward their career goals.

Career support. One of the most important questions to consider is whether your mentor can help you accomplish your career goals. Be prepared to have an honest conversation with the PI about those goals. The majority of PhD scientists will pursue careers outside of academia, so choose a mentor who supports diverse career paths. A mentor who is invested in your success will work with you to finish papers in a timely manner, encourage you to apply for awards and grants, help identify fruitful collaborations, help you develop new skills, and expand your professional network. A good mentor will also give you the freedom to pursue your own scientific interests within your project and, ultimately, allow you to carve out a project to take with you when your training is complete.

Research. The research done in the lab will also factor into your decision. Especially if you are a postdoctoral fellow, this will likely be the field, subject, or niche in which you establish your career and expertise. If there is a field you want to pursue, identify mentors who have a publication record in that subject. Examine each PI’s publications, citations, and overall contributions to the field. If the PI is early in his or her career, the papers may not yet have accumulated many citations; you can still assess the quality of the papers, the lab’s experimental techniques, and how often the PI is invited to give talks on the subject.

Lab environment

The composition of a lab can play a huge role in your overall productivity and mental well-being. Your lab mates are the people you will interact with day to day, and they can be a huge asset in mastering new techniques, collaborating on a project, or receiving helpful feedback. It is therefore to your benefit to get along with them and to choose a lab with people you want to work with, or at least don’t mind working with. The reality of postdoctoral training is that you will spend a large amount of time in the lab, so choosing an environment that fits your personality and your desired workload and schedule is important. Because lab members contribute so much to your research progress, give this factor considerable weight when choosing a lab.

Scientific output

Although labs with high-impact publications are attractive, it is more important to consider how frequently a lab publishes. Search PubMed to obtain the PI’s complete publication record; it will give you an idea of the consistency and regularity of publications and of overall lab productivity. When reviewing the lab’s publication list, check whether lab members are coauthors on manuscripts beyond their own first-author papers. Coauthorship on other manuscripts is desirable, as it will help build your publication record. In addition, look at the PI’s collaborators; these collaborations might benefit you by broadening your skill set and experience. Be intentional about reviewing the lab’s papers from the last 5 years. If you want to pursue academic research, you will want to be in a lab that publishes frequently in well-respected journals.
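One way to gauge publication frequency is to count a PI’s PubMed records year by year. The sketch below uses NCBI’s E-utilities `esearch` endpoint, which can return just a hit count in JSON. The author string, the 5-year window, and the helper names are illustrative assumptions; author-name searches can also pick up other researchers with the same name, so the counts should be checked by hand. NCBI limits unauthenticated clients to a few requests per second.

```python
import json
import time
import urllib.parse
import urllib.request

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_query_url(author: str, year: int) -> str:
    """esearch URL asking only for the number of PubMed records for author in year."""
    params = {
        "db": "pubmed",
        "term": f"{author}[Author] AND {year}[dp]",  # [dp] = publication date field
        "retmode": "json",
        "retmax": 0,  # only the count is needed, not the record IDs
    }
    return f"{ESEARCH_URL}?{urllib.parse.urlencode(params)}"


def fetch_count(author: str, year: int) -> int:
    """Query PubMed (requires network access) and return the hit count."""
    with urllib.request.urlopen(build_query_url(author, year), timeout=30) as resp:
        data = json.load(resp)
    return int(data["esearchresult"]["count"])


def summarize(counts: dict[int, int]) -> dict:
    """Mean papers per year and any years with no papers (counts must be non-empty)."""
    years = sorted(counts)
    return {
        "mean_per_year": sum(counts.values()) / len(years),
        "empty_years": [y for y in years if counts[y] == 0],
    }


if __name__ == "__main__":
    author = "Doe J"  # hypothetical PI name
    counts = {}
    for year in range(2015, 2020):  # an example 5-year window
        counts[year] = fetch_count(author, year)
        time.sleep(0.4)  # stay under NCBI's rate limit for clients without an API key
    print(counts, summarize(counts))
```

Empty years in the summary are a prompt for questions at the interview, not a verdict; a lab may have spent those years on a long project that published later.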

Institution

The institutional environment should also influence your decision. Top universities can attract some of the best researchers. Moreover, funding agencies consider the “Environment and Institutional Commitment” as one of the criteria for awarding grants. When choosing an institution, consider its priorities and make sure they align with yours (e.g., research, undergraduate education). Consider the number of PIs conducting research in your field; these other labs may benefit your career development through collaborations, scientific discussions, letters of recommendation, career support, and feedback.

Conclusion

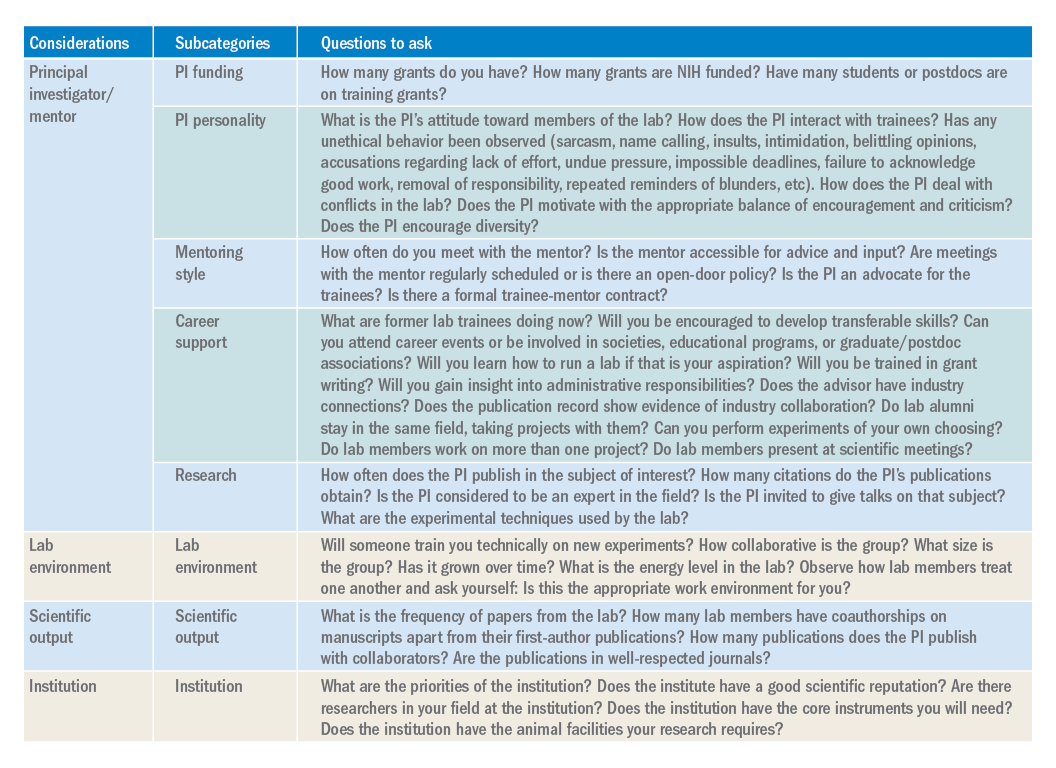

While certain key criteria should be prioritized when choosing a lab, the path to finding (and joining) the right lab will vary from person to person. Gather data, ask questions, research what you can online (lab website, publication records, LinkedIn, ResearchGate, or Twitter), and arrive at the interview with a long list of written questions for the PI and lab members. One of the best ways to begin looking for a new lab is to ask your current mentor, committee members, peers, and others in your professional network for their suggestions. Table 1 lists questions to keep in mind as you search for a lab. You will spend much of your time in the lab you choose, so choose wisely.

Dr. Engevik is an instructor in pathology and immunology, Baylor College of Medicine, Texas Children’s Hospital, Houston.