HIV-positive patients may be at greater risk of atherosclerosis

HIV-infected individuals without any traditional cardiovascular disease risk factors still show signs of vascular thickening, compared with HIV-negative controls, researchers reported in a British study.

A team led by Dr. Kathleen A.M. Rose of the cardiovascular biomedical research unit at London’s Royal Brompton Hospital performed carotid cardiovascular magnetic resonance imaging on 33 HIV-infected patients and 35 HIV-negative controls, with both groups being at low cardiovascular risk. The study showed HIV infection was associated with a significantly greater ratio of carotid wall to outer wall thickness (36.7% vs. 32.5%, P less than .0001) – an indicator of carotid intima-media thickening that has been shown in HIV-negative populations to be predictive of future cardiovascular events.

Women with HIV had an even greater increase in carotid intima-media thickening, compared with men with HIV, according to the study results (JAIDS. 2015 Nov 16. doi: 10.1097/QAI.0000000000000900).

While there were no significant differences between the cases and controls in total carotid lumen volume, carotid artery volume, and carotid wall volume, total wall volume was higher in HIV-infected individuals, and there was a nonsignificant decrease in carotid artery distensibility in the HIV-infected group.

“Although traditional cardiovascular risk factors are highly prevalent and accepted to play a role in HIV-associated cardiovascular disease, the role of long-term cART [combination antiretroviral therapy] and HIV infection itself remains controversial,” wrote Dr. Rose and her coauthors.

The researchers cited earlier studies linking the antiretroviral agents indinavir, abacavir, and lopinavir with increased cardiovascular risk, although they pointed out there was conflicting evidence of a link with type of antiretroviral therapy.

The HIV-infected participants were all stable on combination antiretroviral therapy for a median duration of 7 years (2-21 years), and all had a plasma HIV-1 RNA viral load below 50 copies/mL.

Years of antiretroviral therapy and use of nonnucleoside reverse transcriptase inhibitors or protease inhibitors did not significantly impact carotid intima-media thickness, although patients taking abacavir – which is associated with increased cardiovascular risk – had a lower wall/outer wall ratio than those on zidovudine.

“This result may also reflect a channeling bias whereby clinicians only use abacavir in subjects they consider to have very low cardiovascular risk,” the authors wrote.

Other parameters such as age, ethnicity, CD4 cell count, nadir CD4 cell count, and years since HIV diagnosis did not impact atherosclerosis in HIV-positive subjects.

“Although our study has not followed up patients or controls longitudinally, the diverging lines between the groups with increasing age suggests that HIV infection and/or its treatment may be associated with progression of vascular wall thickening beyond that normally seen with age,” the authors reported.

“As increasing C-IMT [carotid intima-media thickness] has been found to be independently predictive of future stroke and myocardial infarction in HIV-uninfected populations, the findings of this study suggest that the rate of vascular events is likely to remain elevated in HIV-patients despite aggressive treatment of cardiovascular risk factors, highlighting the need for improved patient and health care provider education to detect and manage aggressively early signs of cardiovascular disease.”

The study was supported by the National Institute for Health Research cardiovascular biomedical research unit at Royal Brompton and Harefield NHS Foundation Trust, and Imperial College London. Three authors declared honoraria, grants, sponsorship, and consultancies from the pharmaceutical industry.

Key clinical point: HIV infection and/or treatment are associated with significantly greater vascular thickening, even in the absence of other cardiovascular risk factors.

Major finding: HIV-infected patients had a significantly greater ratio of carotid wall to outer wall thickness, compared with HIV-negative controls.

Data source: An observational study of 33 HIV-infected patients and 35 HIV-negative controls.

Disclosures: The study was supported by the National Institute for Health Research cardiovascular biomedical research unit at Royal Brompton and Harefield NHS Foundation Trust, and Imperial College London. Three authors declared honoraria, grants, sponsorship, and consultancies from the pharmaceutical industry.

Resolutions

For many, the making and breaking of New Year’s resolutions has become a humorless cliché. Still, the beginning of a new year is as good a time as any for reflection and inspiration; and if you restrict your fix-it list to a few realistic promises that can actually be kept, resolution time does not have to be such an exercise in futility.

I can’t presume to know what needs improving in your practice, much less your life; but I do know the office issues I get the most questions about. Perhaps the following examples will provide inspiration for assembling a realistic list of your own:

1. Review your October, November, and December claim payments. That is, all payments since ICD-10 launched. Overall, the transition has been surprisingly smooth; the Centers for Medicare & Medicaid Services says the claim denial rate has not increased significantly, and very few rejections are due to incorrect diagnosis coding. But each private payer has its own procedure, so make sure that none of your payers has dropped the ball. The most common problem so far seems to be inconsistent handling of “Z” codes, such as skin cancer screening (Z12.83), so pay particular attention to those.

2. Do a HIPAA risk assessment. The new HIPAA rules have been in effect for more than a year. Is your office up to speed? Review every procedure that involves confidential information; make sure there are no violations. Penalties for carelessness are a lot stiffer now.

3. Encrypt your mobile devices. This is really a subset of No. 2. The biggest HIPAA vulnerability in many practices is laptops and tablets carrying confidential patient information; losing one could be a disaster. Encryption software is cheap and readily available, and a lost or stolen mobile device will probably not be treated as a HIPAA breach if it is properly encrypted.

4. Reduce your accounts receivable by keeping a credit card number on file for each patient, and charging patient-owed balances as they come in. A series of my past columns in the archive at www.edermatologynews.com explains exactly how to do this. Every hotel in the world does it; you should too.

5. Clear your “horizontal file cabinet.” That’s the mess on your desk, all the paperwork you never seem to get to (probably because you’re tweeting or answering e-mail). Set aside an hour or two and get it all done. You’ll find some interesting stuff in there. Then, for every piece of paper that arrives on your desk from now on, follow the DDD Rule: Do it, Delegate it, or Destroy it. Don’t start a new mess.

6. Keep a closer eye on your office finances. Most physicians delegate the bookkeeping, and that’s fine. But ignoring the financial side creates an atmosphere that facilitates embezzlement. Set aside a couple of hours each month to review the books personally. And make sure your employees know you’re doing it.

7. Make sure your long-range financial planning is on track. This is another task physicians tend to “set and forget,” but the Great Recession was an eye-opener for many of us. Once a year, sit down with your accountant and planner and make sure your investments are well diversified and all other aspects of your finances – budgets, credit ratings, insurance coverage, tax situations, college savings, estate plans, retirement accounts – are in the best shape possible. January is a good time.

8. Back up your data. Now is also an excellent time to verify that the information on your office and personal computers is being backed up – locally and online – on a regular schedule. Don’t wait until something crashes.

9. Take more vacations. Remember Eastern’s First Law: Your last words will NOT be, “I wish I had spent more time in the office.” This is the year to start spending more time enjoying your life, your friends and family, and the world. As John Lennon said, “Life is what happens to you while you’re busy making other plans.”

10. Look at yourself. A private practice lives or dies on the personalities of its physicians, and your staff copies your personality and style. Take a hard, honest look at yourself. Identify your negative personality traits and work to eliminate them. If you have any difficulty finding habits that need changing … ask your spouse. He or she will be happy to explain them in excruciating detail.

Dr. Eastern practices dermatology and dermatologic surgery in Belleville, N.J. He is the author of numerous articles and textbook chapters, and is a longtime monthly columnist for Dermatology News. Write to him at [email protected].

Chemo quadruples risk for myeloid cancers

ORLANDO – Patients who undergo cytotoxic chemotherapy, even in the modern era, are at increased risk for developing myeloid neoplasms, based on data from a cohort of nearly 750,000 adults who were initially treated with chemotherapy and survived at least 1 year after diagnosis.

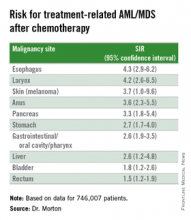

In the cohort, the standardized incidence ratio (SIR) for treatment-related acute myeloid leukemia (tAML) or myelodysplastic syndrome (MDS) was about 4, meaning that the observed incidence was roughly four times what would be expected in the general population, reported Lindsay M. Morton, Ph.D., of the division of cancer epidemiology and genetics at the National Cancer Institute in Bethesda, Md.

“We now demonstrate that there are elevated risks for treatment-related leukemia after treatment for a broad spectrum of first primary malignancies that are generally consistent with what we know about changing treatment practices,” she said at the American Society of Hematology annual meeting.

“The number of cancer survivors in the United States has increased dramatically, to reach nearly 14 million individuals today, and in just the next few years the number is expected to reach more than 18 million people, which means that the long-term health of this population is of great clinical importance as well as public health importance,” Dr. Morton emphasized.

The link between cytotoxic chemotherapy and leukemia risk has been known since the 1960s, with certain classes of agents carrying higher risks than others, including platinum-containing compounds, alkylating agents (for example, cyclophosphamide, melphalan, chlorambucil), topoisomerase II inhibitors (doxorubicin, daunorubicin, epirubicin, etc.), and antimetabolites (5-fluorouracil, capecitabine, gemcitabine, etc.).

Treatment-related leukemias are associated with higher doses of these agents, and the trend in contemporary medicine is to use more of these agents upfront for the treatment of primary malignancies. Yet estimates of the risk of tAML, MDS, or other malignancies have been hard to come by because of the relative rarity of cases and the sporadic reports in the literature, Dr. Morton said.

The investigators previously reported that risk for tAML and other myeloid neoplasms changed over time, and showed that since the 1990s there was an uptick in risk for patients treated with chemotherapy for cancers of bone, joints, and endometrium, and since 2000 for patients treated with chemotherapy for cancers of the esophagus, cervix, and prostate.

For example, risks for tAML were higher in the 1970s for patients with ovarian cancer treated with melphalan, a highly leukemogenic agent, but dropped somewhat with the switch to platinum-based agents. Similarly, women with breast cancer had a comparatively high risk with the use of melphalan, a decline in risk with the introduction of cyclophosphamide, and then an increase with the addition of anthracyclines and dose-dense regimens.

Risk update

To get a better idea of the magnitude of risk in the modern era, Dr. Morton and colleagues sifted through Surveillance, Epidemiology, and End Results (SEER) data to identify a cohort of 746,007 adults who were initially treated with chemotherapy and survived for at least 1 year following a diagnosis with a first primary malignancy from 2000 through 2012. They calculated SIRs based on variables that included age, race, sex, malignancy type and treatment period.

They looked at four categories of myeloid neoplasms as defined by World Health Organization criteria: AML/MDS, chronic myeloid leukemia, myeloproliferative neoplasms (MPN) negative for BCR-ABL (Philadelphia-negative), and chronic myelomonocytic leukemia (CMML).

They found that 2,071 patients developed treatment-related AML/MDS, translating into a fourfold incidence compared with the general population (SIR 4.1, 95% confidence interval [CI] 3.9-4.2), and 106 were diagnosed with CMML.
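As a rough illustration of how an SIR and its confidence interval relate to case counts, the sketch below divides observed cases by expected cases and uses Byar's approximation for the Poisson interval. The report does not give the expected count or the interval method the investigators used, so the expected value of 505 is a hypothetical figure back-calculated from the reported SIR of 4.1, and the function name `sir_with_ci` is invented for this example.

```python
import math


def sir_with_ci(observed: int, expected: float, z: float = 1.96):
    """Return (SIR, lower, upper) for observed vs. expected case counts.

    The interval uses Byar's approximation to the Poisson distribution,
    which is an assumption here; the study's own method is not stated.
    """
    if observed <= 0 or expected <= 0:
        raise ValueError("observed and expected must both be positive")

    sir = observed / expected

    # Byar's approximate limits for the observed Poisson count.
    lower_count = observed * (
        1 - 1 / (9 * observed) - z / (3 * math.sqrt(observed))
    ) ** 3
    shifted = observed + 1
    upper_count = shifted * (
        1 - 1 / (9 * shifted) + z / (3 * math.sqrt(shifted))
    ) ** 3

    # Dividing the count limits by the expected count gives the SIR limits.
    return sir, lower_count / expected, upper_count / expected


observed_cases = 2071    # tAML/MDS cases reported in the cohort
expected_cases = 505.0   # hypothetical: back-calculated from the reported SIR of 4.1

sir, lower, upper = sir_with_ci(observed_cases, expected_cases)
print(f"SIR {sir:.1f} (95% CI {lower:.1f}-{upper:.1f})")
```

Because the expected count is only an estimate, the interval printed here will not necessarily match the published 3.9-4.2 exactly.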

They also identified novel risk for tAML/MDS after chemotherapy by malignancy (see table).

The investigators found that breast cancer, non-Hodgkin lymphoma, and lung cancer were most commonly associated with tAML/MDS (SIRs 4.1, 7.3, and 4.1, respectively, all significant).

In addition, although the overall numbers of cases were small, the investigators noted “strikingly elevated” risks for cancers of bone (SIR 35.1, CI, 16.9-64.6), testes (15.6, CI, 9.2-24.6), and soft tissue (12.6, CI, 7.7-19.4).

Risk for tAML/MDS was more modestly elevated for cancers of the brain, ovaries, endometrium, cervix, and prostate, and for Hodgkin lymphoma, chronic lymphocytic leukemia, and multiple myeloma.

Adding radiotherapy to chemotherapy for cancers of the breast, lung, and stomach showed a trend toward heightened tAML/MDS risk, but this was not significant.

An elevated risk for CMML was also seen after chemotherapy for lung cancer (SIR 2.5, CI, 1.3-4.4), breast cancer (1.8, CI, 1.3-2.5), and non-Hodgkin lymphoma (2.1, CI, 1.2-3.4). There was elevated risk for CMML following chemotherapy for breast cancer (3.0, CI, 1.7-5.0) and non-Hodgkin lymphoma (4.2, CI, 2.4-6.9).

There were no increased risks for other myeloproliferative neoplasms after chemotherapy for any first primary cancer, however.

“This reminds us that with new uses of standard agents and introduction of new agents, it’s critical to carefully weigh the risks and benefits of systemic therapy,” Dr. Morton said.

The investigators plan to quantify risks associated with specific drugs and doses, she added.

The study was supported by the National Cancer Institute. Dr. Morton reported no relevant conflicts of interest to disclose.

AT ASH 2015

Key clinical point: This study quantifies the risks for treatment-related myeloid cancers after chemotherapy.

Major finding: Chemotherapy is associated with a fourfold risk for treatment-related AML/MDS, compared with the general population.

Data source: Retrospective review of data on 746,007 adults treated with chemotherapy for a first primary malignancy.

Disclosures: The National Cancer Institute supported the study. Dr. Morton reported having no conflicts of interest to disclose.

More complete cytogenetic responses at 12 months with radotinib than imatinib

Radotinib was associated with significantly higher rates of complete cytogenetic response and major molecular response than imatinib at a minimum of 12 months of follow-up in a randomized, open-label, phase III clinical trial of patients with newly diagnosed chronic myeloid leukemia-chronic phase (CML-CP).

Radotinib, an investigational BCR-ABL1 tyrosine kinase inhibitor developed by IL-YANG Pharmaceuticals, is approved in Korea for the treatment of CML-CP in patients who have failed prior TKIs.

Dr. Jae-Yong Kwak of Chonbuk National University Medical School and Hospital, Jeonju, South Korea, and his colleagues randomized 241 patients to either radotinib 300 mg twice daily (n = 79), radotinib 400 mg twice daily (n = 81), or imatinib 400 mg once daily (n = 81). All three study groups were balanced in regard to baseline age, gender, race, and Sokal risk score.

At a minimum follow-up of 12 months, the proportions of patients receiving a study drug were 86% (69/79) in the radotinib 300 mg twice-daily group, 72% (58/81) in the radotinib 400 mg twice-daily group, and 82% (66/81) in the imatinib 400 mg once-daily group.

The rates of major molecular response at 12 months were significantly higher in patients receiving radotinib 300 mg b.i.d. (52%, P = .0044) and radotinib 400 mg b.i.d. (46%, P = .0342), compared with imatinib (30%), Dr. Kwak reported at the annual meeting of the American Society of Hematology in Orlando.

Among responders, the median times to major molecular response were shorter on radotinib 300 mg b.i.d. (5.7 months) and radotinib 400 mg b.i.d. (5.6 months) than on imatinib (8.2 months). The MR4.5 rates by 12 months were also higher for both radotinib 300 mg b.i.d. (15%) and 400 mg b.i.d. (14%), compared with imatinib (9%). The complete cytogenetic response rate by 12 months was 91% for radotinib 300 mg b.i.d., compared with 77% for imatinib (P = .0120). None of the patients in the study had progressed to accelerated phase or blast crisis at 12 months.

Drug discontinuation due to adverse events (AEs) or laboratory abnormalities occurred in 9% of patients on radotinib 300 mg b.i.d., 20% on radotinib 400 mg b.i.d., and 6% on imatinib.

The major side effects included grade 3/4 thrombocytopenia in 16% of patients receiving radotinib 300 mg b.i.d., 14% on radotinib 400 mg b.i.d., and 20% receiving imatinib. Grade 3/4 neutropenia occurred in 19%, 24%, and 30% for radotinib 300 mg b.i.d., 400 mg b.i.d., and imatinib, respectively.

Overall, grade 3/4 nonlaboratory AEs were uncommon in all groups. The most common nonlaboratory AEs in the radotinib groups were skin rash (about 33% in both), nausea/vomiting (about 23% in both), headache (19% and 31%), and pruritus (19% and 30%). In the imatinib group, the most common adverse events were edema (35%), myalgia (28%), nausea/vomiting (27%), and skin rash (22%).

Dr. Kwak had no relevant disclosures. Some of his colleagues received research funding from IL-YANG Pharmaceutical Co. and Alexion Pharmaceuticals.

On Twitter @maryjodales

FROM ASH 2015

Key clinical point: Radotinib was associated with significantly higher rates of complete cytogenetic response and major molecular response than was imatinib at a minimum of 12 months of follow-up.

Major finding: By 12 months, the rates of major molecular response were significantly higher in patients receiving radotinib 300 mg b.i.d. (52%, P = .0044) and radotinib 400 mg b.i.d. (46%, P = .0342), compared with imatinib 400 mg/day (30%).

Data source: Randomized, open-label, phase III clinical trial involving 241 patients.

Disclosures: Dr. Kwak had no relevant disclosures. Some of his colleagues received research funding from IL-YANG Pharmaceutical Co. and Alexion Pharmaceuticals.

Nilotinib safe, effective as first-line therapy for CML-CP patients age 65 and older

ORLANDO – Age did not affect molecular response or the incidence of adverse reactions to nilotinib among patients with chronic myeloid leukemia in chronic phase (CML-CP), based on results from a subanalysis of the ENEST1st study.

The analysis of the ENEST1st study, reported by Dr. Francis J. Giles, compared outcomes for 1,089 newly diagnosed CML-CP patients, of whom 19% were aged 65 years or older and 81% were younger than age 65 years. All patients had typical transcripts and were treated for 3 months or less with nilotinib 300 mg twice daily in the open-label study.

For those 65 years and older, Sokal risk scores were low in 4.5%, intermediate in 61.2%, and high in 23.4%, with missing data for 10.9%. For younger patients, Sokal risk scores were low in 42.1%, intermediate in 32%, and high in 16.9%, with missing data for 9%.

At 18 months, there was an overall 38.4% rate (95% CI, 35.5%-41.3%) of MR4 grade molecular response, which was defined as BCR-ABL level of 0.01% or less on the International Scale or undetectable BCR-ABL in cDNA with at least 10,000 ABL transcripts.

The MR4 rate at 18 months did not significantly vary by age. For patients under age 65, the cumulative incidence of MR4 by 18 months was 48.8% (95% CI, 45.4%-52.1%); among patients aged 65 and older, the incidence of MR4 was 48.3% (95% CI, 41.4%-55.2%). The MR4.5 rate by 18 months was 32.5% in younger patients and 28.4% in older patients, reported Dr. Giles of the Institute for Drug Development, Cancer Therapy and Research Center, at the University of Texas Health Science Center at San Antonio, and his colleagues.

Based on Sokal score, the MR4 rate by 18 months in younger patients was 53.6% (low), 45.2% (intermediate), and 35.4% (high). For older patients, the MR4 rate by 18 months based on Sokal score was 44.4% (low), 49.6% (intermediate), and 44.7% (high).

Six patients (0.6%) progressed to accelerated phase/blast crisis (AP/BC) on study; 13 patients (1.2%) died by 24 months. The most common adverse events were rash (21.4%), pruritus (16.5%), and headache (15.2%).

Novartis is the sponsor of the ENEST1st study. Dr. Giles consults for and receives honoraria and research funding from Novartis.

On Twitter @maryjodales

FROM ASH 2015

Key clinical point: Age did not affect molecular response or the incidence of adverse reactions to nilotinib among patients with CML-CP.

Major finding: For patients younger than age 65 years, the cumulative incidence of MR4 by 18 months was 48.8% (95% CI, 45.4%-52.1%); among patients aged 65 years and older, the incidence of MR4 was 48.3% (95% CI, 41.4%-55.2%).

Data source: The analysis of the ENEST1st study compared outcomes for 1,089 newly diagnosed CML-CP patients.

Disclosures: Novartis is the sponsor of the ENEST1st study. Dr. Giles consults for and receives honoraria and research funding from Novartis.

Chagas disease: Neither foreign nor untreatable

Chagas disease is a vector-borne parasitic disease, endemic to the Americas, that remains as little recognized by U.S. patients and practitioners as the obscure winged insects that transmit it.

Transmission occurs when triatomine bugs, commonly called “kissing bugs,” pierce the skin to feed and leave behind parasite-infected feces that can enter the bloodstream; pregnant women can also transmit Chagas to their newborns.

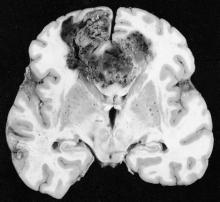

About a third of patients infected with Trypanosoma cruzi, the protozoan parasite that causes Chagas, will develop cardiac abnormalities such as cardiomyopathy, arrhythmias, and heart failure – often decades after becoming infected. In the United States, where blood banks began screening for Chagas in 2007, patients without symptoms are likely to learn they are positive only after donating blood.

Conventional wisdom has long maintained that Chagas is limited to Central and South America. But immigration from Chagas-endemic countries, such as El Salvador, Mexico, and Bolivia, means more people are living with the disease in the United States.

“One percent of the Latin American immigrant population we screen [in Los Angeles] has Chagas,” said Dr. Sheba K. Meymandi, cardiologist and director of the Center of Excellence for Chagas Disease at Olive View–UCLA Medical Center in Los Angeles, who also works with the city’s health department to detect Chagas. “That’s huge.”

Meanwhile, blood banks are discovering more cases among people without ties to Latin America, and species of kissing bugs native to the southern United States are increasingly recognized as a non-negligible source of Chagas transmission. Of 39 Chagas cases reported to Texas health authorities in 2013 and 2014, 12 were thought to be locally acquired.

Dr. Heather Yun, an infectious disease specialist at the San Antonio Military Medical Center, said risk factors for local transmission are not well established, but “we think people who are living in poverty in substandard housing, people who spend a lot of time outdoors, especially at night, and people involved with direct blood contact with wild game in Southern parts of the United States” may be at higher risk.

A U.S. disease

Evidence is amassing quickly that Chagas is a U.S. disease. But U.S. clinicians still lag in their knowledge of it, say physicians treating Chagas cases. “In medical school we get a 2-hour lecture on it, and it’s always been presented as an exotic disease and one you don’t treat,” Dr. Meymandi said.

The persistent perception of Chagas as a foreign disease means clinicians are inclined to dismiss positive results from a blood screening, particularly from someone who is not from Latin America. Yet cardiologists, ID practitioners, obstetricians, and primary care physicians all need to be aware that cases do occur in the United States and are potentially treatable.

Dr. Laila Woc-Colburn, an infectious disease and tropical medicine specialist at Baylor College of Medicine in Houston, said many people with Chagas never make it to an infectious disease specialist or cardiologist for a work-up. “When you test positive on serology [after a blood donation], you get a letter recommending you consult your physician. Most will go to their primary care doctors, who might say ‘this isn’t a disease in the United States.’ In Houston, that is often the case.”

Dr. Meymandi, who has treated hundreds of patients with Chagas with and without cardiac involvement, said any physician with a potential Chagas case must act. “If you get someone that’s positive, it’s your duty as a physician to confirm the positivity with CDC,” she said.

Dr. Yun concurred. “The most important message is, do something,” she said. “Don’t just assume it’s a false positive.”

Diagnosis is not simple and requires testing beyond the initial ELISA assay used in blood-bank screening. Confirmatory tests must be carried out in coordination with the Centers for Disease Control and Prevention. Also, with no agents approved by the Food and Drug Administration to treat Chagas, treatment is available only through the CDC’s investigational drugs protocol. Both drugs used in Chagas, benznidazole and nifurtimox, come with serious adverse effects that must be closely monitored.

“It’s time consuming, filling out the forms, getting the consent, tracking and sending back lab results to CDC in order to get drugs – it’s not like you can just write a prescription,” Dr. Meymandi said. But, “if you don’t know how to treat the patient or don’t have time, find someone like me,” she noted, adding that she is available to counsel any physician daunted by a potential Chagas case.

Treatment options

No formal clinical algorithm exists for Chagas, but Dr. Meymandi, Dr. Yun, and Dr. Woc-Colburn all pointed to a 2007 JAMA article, which describes diagnosis and treatment protocols, as an important reference for clinicians to start with. It’s “the best approximation of a clinical guideline we have,” Dr. Yun said (JAMA. 2007;298[18]:2171-81. doi:10.1001/jama.298.18.2171).

Dr. Meymandi, who has treated more Chagas patients than has any other U.S. clinician, said that treatment has changed somewhat since the JAMA article was published. In 2007, she said, nifurtimox was the main drug available through CDC, while benznidazole, which is somewhat better tolerated and has a shorter treatment duration, has since become the first-line agent.

“We’ve lowered the dose of benznidazole, maxing out at 400 mg/day to decrease the toxicity,” she said. Also, treatment is now being extended to some patients aged 60 years and older.

The decision to treat or not treat, clinicians say, depends on the patient’s age, disease progression, comorbidities and potential serious drug interactions, and willingness to tolerate side effects that, with nifurtimox especially, can include skin sloughing, rash, and psychological and neurologic symptoms including depression and peripheral neuropathy.

“If you don’t have side effects, you’re not taking the drugs,” Dr. Meymandi said. Dr. Woc-Colburn noted that polypharmacy was a major consideration when treating older adults for Chagas. “If I have a patient who has diabetes, obesity, [and] end-stage renal disease, it’s not going to be ideal to give [benznidazole].”

Recent, highly anticipated results from BENEFIT, a large randomized trial (n = 2,854), showed that benznidazole reduced parasite load but was not helpful in halting cardiac damage at 5 years’ follow-up in patients with established Chagas cardiomyopathy (N Engl J Med. 2015 Oct;373:1295-306. doi:10.1056/NEJMoa1507574).

Dr. Meymandi, whose earlier research established that Chagas cardiomyopathy carries significantly higher morbidity and mortality than does non–Chagas cardiomyopathy (Circulation. 2012;126:A18171), said that the BENEFIT results underscore the need for physicians to be bullish in their approach to treating Chagas soon after diagnosis.

“It doesn’t matter if they’re symptomatic or asymptomatic. You can’t wait till they progress to treat. If you wait for the progression of disease you’ve lost the battle. You can’t wait and follow conservatively until you see the complications, because once those complications have started the parasitic load is too high for you to have an impact,” she said.

Dr. Yun said that given the toxicity of current treatment, she hoped to see more studies show clearer evidence of clinical benefit, “either reductions in mortality or reductions in end organ disease.” Most studies “have focused on clearance of parasite, which is important, but it’s not as important [as] decreasing the risk of death or cardiomyopathy or heart failure.”

Rick Tarleton, Ph.D., a biologist at the University of Georgia, in Athens, who has worked on Chagas for more than 30 years, said that because Chagas pathology is directly tied to parasite load – and not, as people have suggested in the past, an autoimmune reaction resulting from parasite exposure – drug treatment may prove to be worthwhile even in patients with significant cardiac involvement.

“You get rid of the parasite, you get rid of the progression of the disease,” Dr. Tarleton said. Even the findings from the BENEFIT trial, he said, did not lead him to conclude that treatment in people with established cardiac disease was futile.

“If you’re treating people who are already chronically infected and showing symptoms, the question is not have you reversed the damage, it’s have you stopped accumulating damage,” he noted. “And a 5-year follow-up is probably not long enough to know whether you’ve stopped accumulating.”

“We have drugs, they’re not great, they do have side effects, they don’t always work,” Dr. Tarleton said. “But they’re better than nothing. And they ought to be more widely used.”

Dr. Meymandi said that current supplies of benznidazole at CDC are low and that a dozen patients at her clinic are awaiting treatment. Meanwhile, access may soon be complicated further by the announcement, this month, that KaloBios Pharmaceuticals had bought the rights to seek FDA approval of benznidazole and market it in the United States.

The same company’s CEO came under fire in recent months for acquiring rights to an inexpensive drug to treat toxoplasmosis in AIDS patients, then announcing a price increase from $13.50 to $750 a pill.

“Everyone’s really concerned,” Dr. Meymandi said, “because Chagas is a disease of the poor.”
