Is Posthospital Syndrome a Result of Hospitalization-Induced Allostatic Overload?

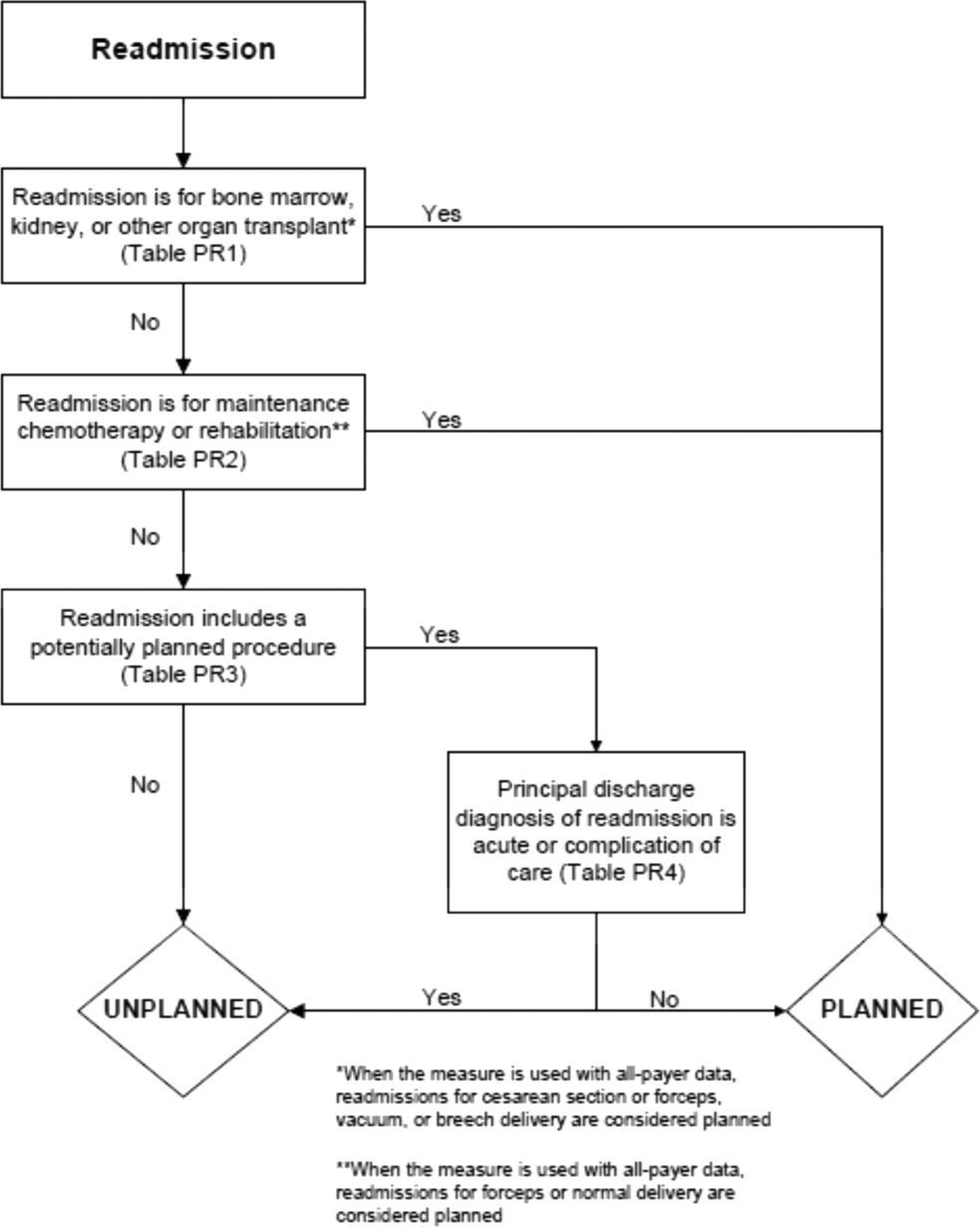

After discharge from the hospital, patients have a significantly elevated risk for adverse events, including emergency department use, hospital readmission, and death. More than 1 in 3 patients discharged from the hospital require acute care in the month after hospital discharge, and more than 1 in 6 require readmission, with readmission diagnoses frequently differing from those of the preceding hospitalization.1-4 This heightened susceptibility to adverse events persists beyond 30 days but levels off by 7 weeks after discharge, suggesting that the period of increased risk is transient and dynamic.5

The term posthospital syndrome (PHS) describes this period of vulnerability to major adverse events following hospitalization.6 In addition to increased risk for readmission and mortality, patients in this period often show evidence of generalized dysfunction with new cognitive impairment, mobility disability, or functional decline.7-12 To date, the etiology of this vulnerability is neither well understood nor effectively addressed by transitional care interventions.13

One hypothesis to explain PHS is that stressors associated with the experience of hospitalization contribute to transient multisystem dysfunction that induces susceptibility to a broad range of medical maladies. These stressors include frequent sleep disruption, noxious sounds, painful stimuli, mobility restrictions, and poor nutrition.12 The stress hypothesis as a cause of PHS is therefore based, in large part, on evidence about allostasis and the deleterious effects of allostatic overload.

Allostasis defines a system functioning within normal stress-response parameters to promote adaptation and survival.14 In allostasis, the hypothalamic-pituitary-adrenal (HPA) axis and the sympathetic and parasympathetic branches of the autonomic nervous system (ANS) exist in homeostatic balance and respond to environmental stimuli within a range of healthy physiologic parameters. The hallmark of a system in allostasis is the ability to rapidly activate, then successfully deactivate, a stress response once the stressor (ie, threat) has resolved.14,15 To promote survival and potentiate “fight or flight” mechanisms, an appropriate stress response necessarily affects multiple physiologic systems, resulting in hemodynamic augmentation and gluconeogenesis to support the anticipated action of large muscle groups, heightened vigilance and memory capabilities to improve rapid decision-making, and enhancement of innate and adaptive immune capabilities to prepare for wound repair and infection defense.14-16 The stress response is subsequently terminated by negative feedback mechanisms of glucocorticoids as well as a shift of the ANS from sympathetic to parasympathetic tone.17,18

Extended or repetitive stress exposure, however, leads to dysregulation of allostatic mechanisms responsible for stress adaptation and hinders an efficient and effective stress response. After extended stress exposure, baseline (ie, resting) HPA activity resets, causing a disruption of normal diurnal cortisol rhythm and an increase in total cortisol concentration. Moreover, in response to stress, HPA and ANS excitation becomes impaired, and negative feedback properties are undermined.14,15 This maladaptive state, known as allostatic overload, disrupts the finely tuned mechanisms that are the foundation of mind-body balance and yields pathophysiologic consequences to multiple organ systems. Downstream ramifications of allostatic overload include cognitive deterioration, cardiovascular and immune system dysfunction, and functional decline.14,15,19

Although a stress response is an expected and necessary aspect of acute illness that promotes survival, the central thesis of this work is that additional environmental and social stressors inherent in hospitalization may unnecessarily compound stress and increase the risk of HPA axis dysfunction, allostatic overload, and subsequent multisystem dysfunction, predisposing individuals to adverse outcomes after hospital discharge. Based on data from both human subjects and animal models, we present a possible pathophysiologic mechanism for the postdischarge vulnerability of PHS, encourage critical contemplation of traditional hospitalization, and suggest interventions that might improve outcomes.

POSTHOSPITAL SYNDROME

Posthospital syndrome (PHS) describes a transient period of vulnerability after hospitalization during which patients are at elevated risk for adverse events from a broad range of conditions. In support of this characterization, epidemiologic data have demonstrated high rates of adverse outcomes following hospitalization. For example, data have shown that more than 1 in 6 older adults is readmitted to the hospital within 30 days of discharge.20 Death is also common in this first month, during which rates of postdischarge mortality may exceed initial inpatient mortality.21,22 Elevated vulnerability after hospitalization is not restricted to older adults, as readmission risk among younger patients 18 to 64 years of age may be even higher for selected conditions, such as heart failure.3,23

Vulnerability after hospitalization is broad. In patients over age 65 initially admitted for heart failure or acute myocardial infarction, only 35% and 10% of readmissions are for recurrent heart failure or reinfarction, respectively.1 Nearly half of readmissions are for noncardiovascular causes.1 Similarly, following hospitalization for pneumonia, more than 60% of readmissions are for nonpulmonary etiologies. Moreover, the risk for all these causes of readmission is much higher than baseline risk, indicating an extended period of lack of resilience to many types of illness.24 These patterns of broad susceptibility also extend to younger adults hospitalized with common medical conditions.3

Accumulating evidence suggests that hospitalized patients face functional decline, debility, and risk for adverse events despite resolution of the presenting illness, implying perhaps that the hospital environment itself is hazardous to patients’ health. In 1993, Creditor hypothesized that the “hazards of hospitalization,” including enforced bed rest, sensory deprivation, social isolation, and malnutrition, lead to a “cascade of dependency” in which a collection of small insults to multiple organ systems precipitates loss of function and debility despite cure or resolution of presenting illness.12 Covinsky (2011) later defined hospitalization-associated disability as an iatrogenic hospital-related “disorder” characterized by new impairments in abilities to perform basic activities of daily living such as bathing, feeding, toileting, dressing, transferring, and walking at the time of hospital discharge.11 Others have described a postintensive-care syndrome (PICS),25 characterized by cognitive, psychiatric, and physical impairments acquired during hospitalization for critical illness that persist postdischarge and increase the long-term risk for adverse outcomes, including elevated mortality rates,26,27 readmission rates,28 and physical disabilities.29 Similar to the “hazards of hospitalization,” PICS is thought to be related to common experiences of ICU stays, including mobility restriction, sensory deprivation, sleep disruption, sedation, malnutrition, and polypharmacy.30-33

Taken together, these data suggest that adverse health consequences attributable to hospitalization extend across the spectrum of age, presenting disease severity, and hospital treatment location. As detailed below, the PHS hypothesis is rooted in a mechanistic understanding of the role of exogenous stressors in producing physiologic dysregulation and subsequent adverse health effects across multiple organ systems.

Nature of Stress in the Hospital

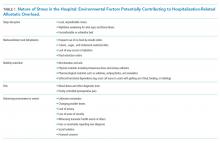

Compounding the stress of acute illness, hospitalized patients are routinely and repetitively exposed to a wide variety of environmental stressors that may have downstream adverse consequences (Table 1). In the absence of overt clinical manifestations of harm, the possible subclinical physiologic dysfunction generated by the following stress exposures may increase patients’ susceptibility to the manifestations of PHS.

Sleep Disruption

Sleep disruptions trigger potent stress responses,34,35 yet they are common occurrences during hospitalization. In surveys, about half of patients report poor sleep quality during hospitalization that persists for many months after discharge.36 In a simulated hospital setting, test subjects exposed to typical hospital sounds (paging system, machine alarms, etc.) experienced significant sleep-wake cycle abnormalities.37 Although no work has yet focused specifically on the physiologic consequences of sleep disruption and stress in hospitalized patients, in healthy humans, mild sleep disruption has clear effects on allostasis by disrupting HPA activity, raising cortisol levels, diminishing parasympathetic tone, and impairing cognitive performance.18,34,35,38,39

Malnourishment

Malnourishment in hospitalized patients is common, with one-fifth of hospitalized patients receiving nothing by mouth or clear liquid diets for more than 3 continuous days,40 and one-fifth of hospitalized elderly patients receiving less than half of their calculated nutrition requirements.41 Although the relationship between food restriction, cortisol levels, and postdischarge outcomes has not been fully explored, in healthy humans, meal anticipation, meal withdrawal (withholding an expected meal), and self-reported dietary restraint are known to generate stress responses.42,43 Furthermore, malnourishment during hospitalization is associated with increased 90-day and 1-year mortality after discharge,44 adding malnourishment to the list of plausible components of hospital-related stress.

Mobility Restriction

Physical activity counterbalances stress responses and minimizes downstream consequences of allostatic load,15 yet mobility limitations via physical and chemical restraints are common in hospitalized patients, particularly among the elderly.45-47 Many patients are tethered to devices that make ambulation hazardous, such as urinary catheters and infusion pumps. Even without physical or chemical restraints or a limited mobility order, patients may be hesitant to leave the room so as not to miss transport to a diagnostic study or an unscheduled physician’s visit. Indeed, mobility limitations of hospitalized patients increase the risk for adverse events after discharge, while interventions designed to encourage mobility are associated with improved postdischarge outcomes.47,48

Other Stressors

Other hospital-related aversive stimuli are less commonly quantified, but clearly exist. According to surveys of hospitalized patients, sources of emotional stress include social isolation; loss of autonomy and privacy; fear of serious illness; lack of control over activities of daily living; lack of clear communication between treatment team and patients; and death of a patient roommate.49,50 Furthermore, consider the physical discomfort and emotional distress of patients with urinary incontinence awaiting assistance for a diaper or bedding change or the pain of repetitive blood draws or other invasive testing. Although individualized, the subjective discomfort and emotional distress associated with these experiences undoubtedly contribute to the stress of hospitalization.

IMPACT OF ALLOSTATIC OVERLOAD ON PHYSIOLOGIC FUNCTION

Animal Models of Stress

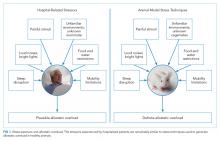

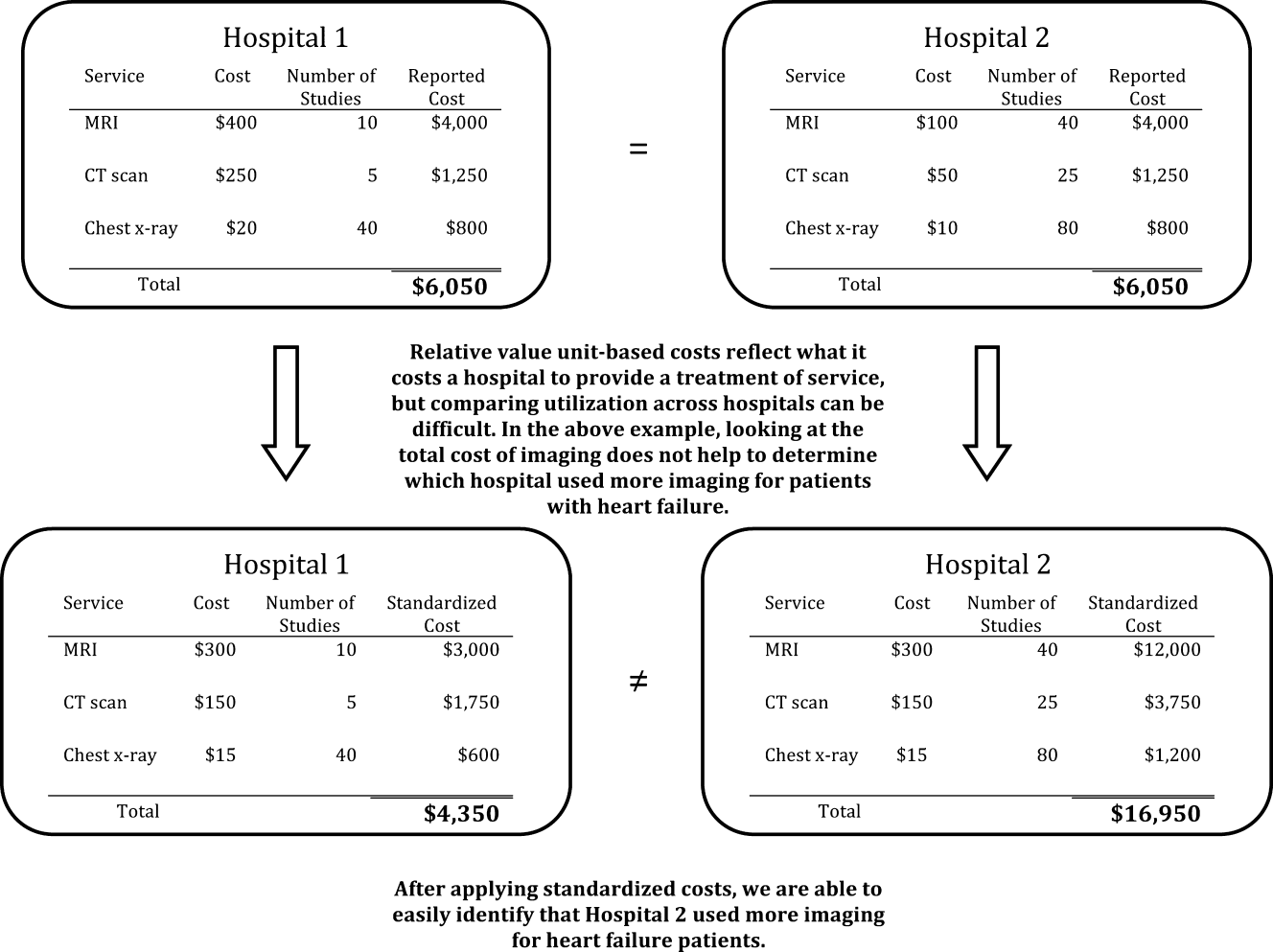

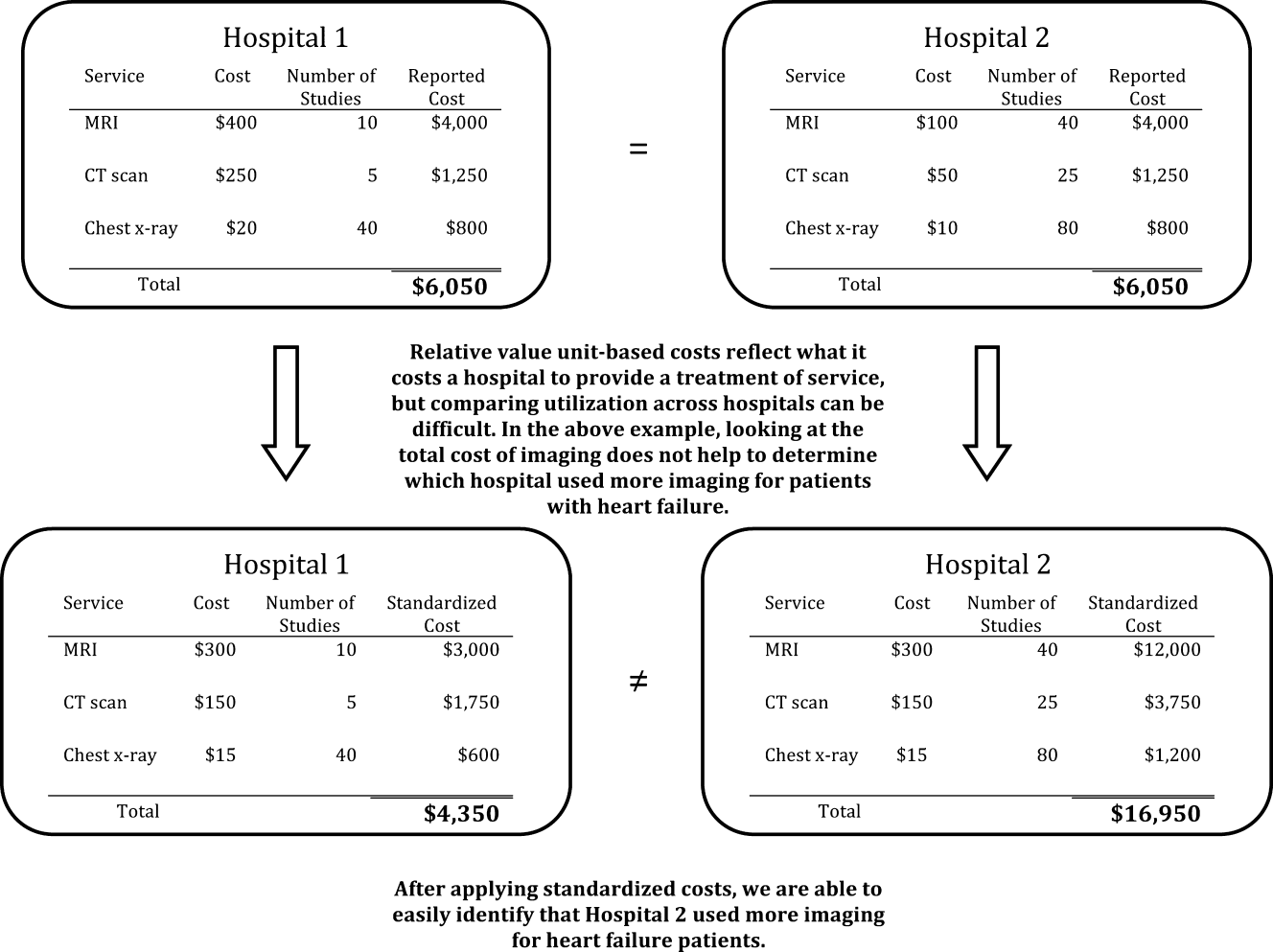

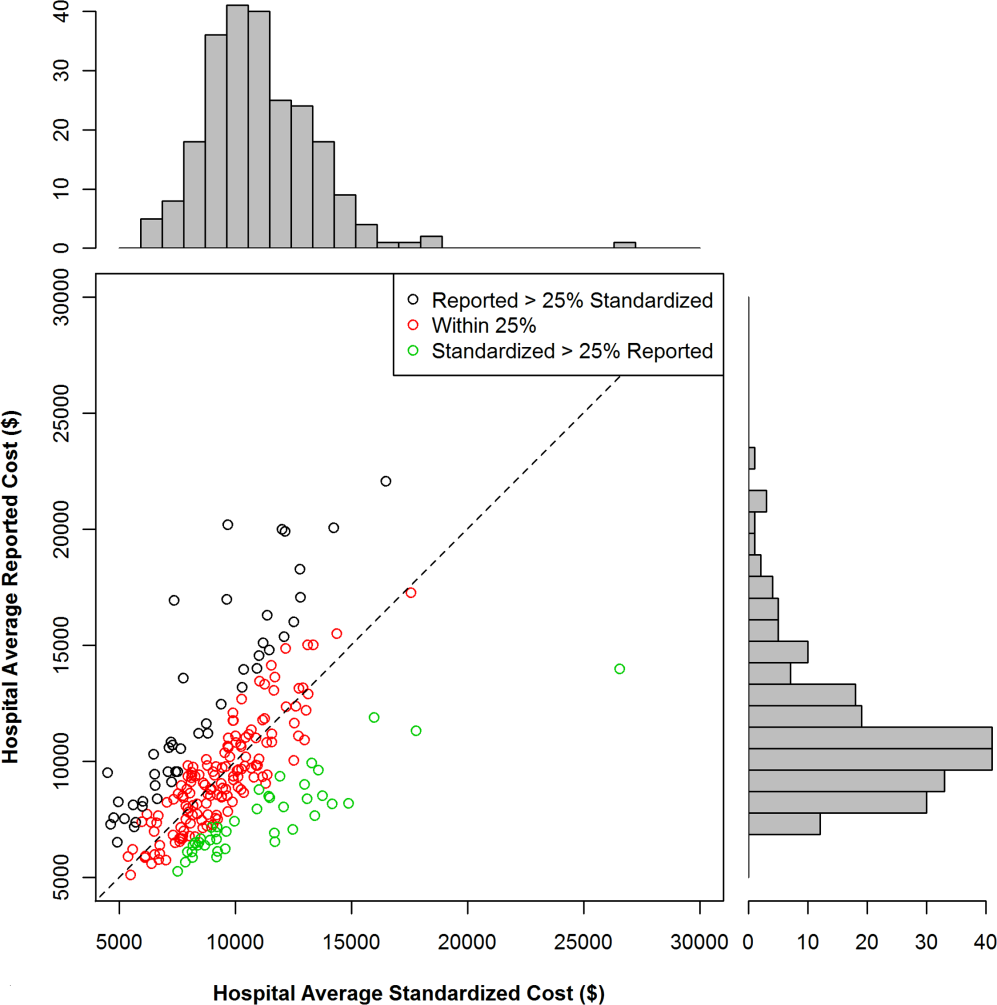

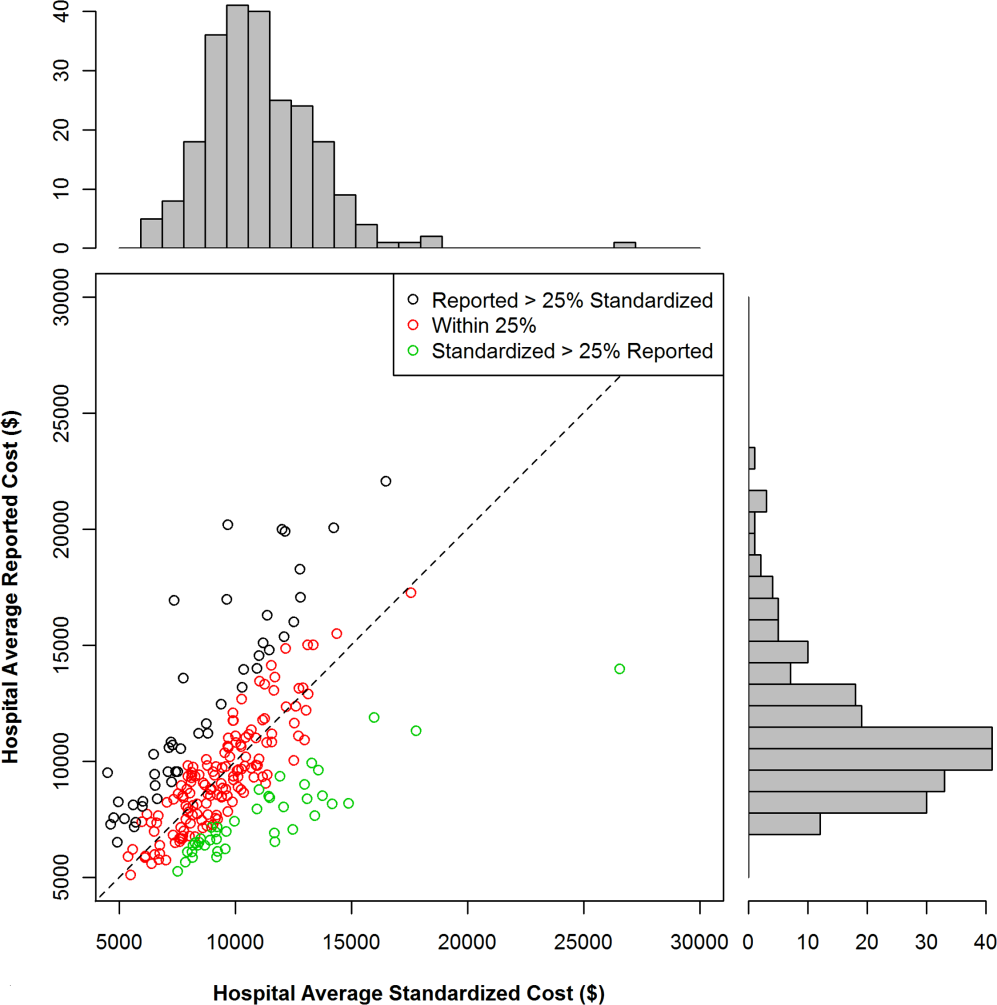

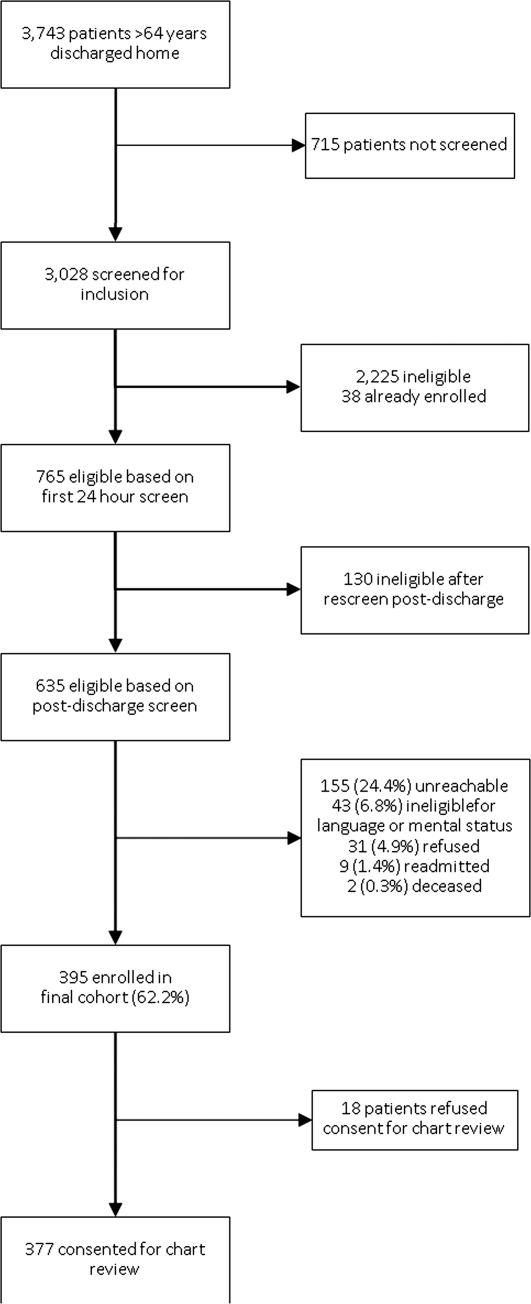

Laboratory techniques reminiscent of the numerous environmental stressors associated with hospitalization have been used to reliably trigger allostatic overload in healthy young animals.51 These techniques include sequential exposure to aversive stimuli, including food and water deprivation, continuous overnight illumination, paired housing with known and unknown cagemates, mobility restriction, soiled cage conditions, and continuous noise. All of these techniques have been shown to cause HPA axis and ANS dysfunction, allostatic overload, and subsequent stress-mediated consequences to multiple organ systems.19,52-54 Given the remarkable similarity of these protocols to common experiences during hospitalization, animal models of stress may be useful in understanding the spectrum of maladaptive consequences experienced by patients within the hospital (Figure 1).

These animal models of stress have resulted in a number of instructive findings. For example, in rodents, extended stress exposure induces structural and functional remodeling of neuronal networks that precipitate learning and memory, working memory, and attention impairments.55-57 These exposures also result in cardiovascular abnormalities, including dyslipidemia, progressive atherosclerosis,58,59 and enhanced inflammatory cytokine expression,60 all of which increase both atherosclerotic burden and susceptibility to plaque rupture, leading to elevated risk for major cardiovascular adverse events. Moreover, these extended stress exposures in animals increase susceptibility to both bacterial and viral infections and increase their severity.16,61 This outcome appears to be driven by a stress-induced elevation of glucocorticoid levels, decreased leukocyte proliferation, altered leukocyte trafficking, and a transition to a proinflammatory cytokine environment.16,61 Allostatic overload has also been shown to contribute to metabolic dysregulation involving insulin resistance, persistence of hyperglycemia, dyslipidemia, catabolism of lean muscle, and visceral adipose tissue deposition.62-64 In addition to cardiovascular, immune, and metabolic consequences of allostatic overload, the spectrum of physiologic dysfunction in animal models is broad and includes mood disorder symptoms,65 intestinal barrier abnormalities,66 airway reactivity exacerbation,67 and enhanced tumor growth.68

Although the majority of this research highlights the multisystem effects of variable stress exposure in healthy animals, preliminary evidence suggests that aged or diseased animals subjected to additional stressors display a heightened inflammatory cytokine response that contributes to exaggerated sickness behavior and greater and prolonged cognitive deficits.69 Future studies exploring the consequences of extended stress exposure in animals with existing disease or debility may therefore more closely simulate the experience of hospitalized patients and perhaps further our understanding of PHS.

Hospitalized Patients

While no intervention studies have examined the effects of potential hospital stressors on the development of allostatic overload, there is evidence from small studies that dysregulated stress responses during hospitalization are associated with adverse events. For example, high serum cortisol, catecholamine, and proinflammatory cytokine levels during hospitalization have individually been associated with the development of cognitive dysfunction,70-72 increased risk of cardiovascular events such as myocardial infarction and stroke in the year following discharge,73-76 and the development of wound infections after discharge.77 Moreover, elevated plasma glucose during admission for myocardial infarction in patients with or without diabetes has been associated with greater in-hospital and 1-year mortality,78 with a similar relationship seen between elevated plasma glucose and survival after admission for stroke79 and pneumonia.80 Furthermore, in addition to atherothrombosis, stress may contribute to the risk for venous thromboembolism,81 resulting in readmissions for deep vein thrombosis or pulmonary embolism posthospitalization. Although potentially surrogate markers of illness acuity, a handful of studies have shown that these stress biomarkers are actually only weakly correlated with,82 or independent of,72,76 disease severity. As discussed in detail below, future studies utilizing a summative measure of multisystem physiologic dysfunction as opposed to individual biomarkers may more accurately reflect the cumulative stress effects of hospitalization and subsequent risk for adverse events.

Additional Considerations

Elderly patients, in particular, may have heightened susceptibility to the consequences of allostatic overload due to common geriatric issues such as multimorbidity and frailty. Patients with chronic diseases display both baseline HPA axis abnormalities and dysregulated stress responses and may therefore be more vulnerable to hospitalization-related stress. For example, when subjected to psychosocial stress, patients with chronic conditions such as diabetes, heart failure, or atherosclerosis demonstrate elevated cortisol levels, increased circulating markers of inflammation, and prolonged hemodynamic recovery after stress resolution compared with normal controls.83-85 Additionally, frailty may affect an individual’s susceptibility to exogenous stress. Indeed, frailty identified on hospital admission increases the risk for adverse outcomes during hospitalization and postdischarge.86 Although the specific etiology of this relationship is unclear, persons with frailty are known to have elevated levels of cortisol and inflammatory markers,87,88 which may contribute to adverse outcomes in the face of additional stressors.

IMPLICATIONS AND NEXT STEPS

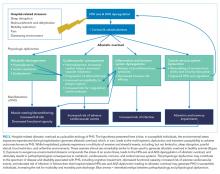

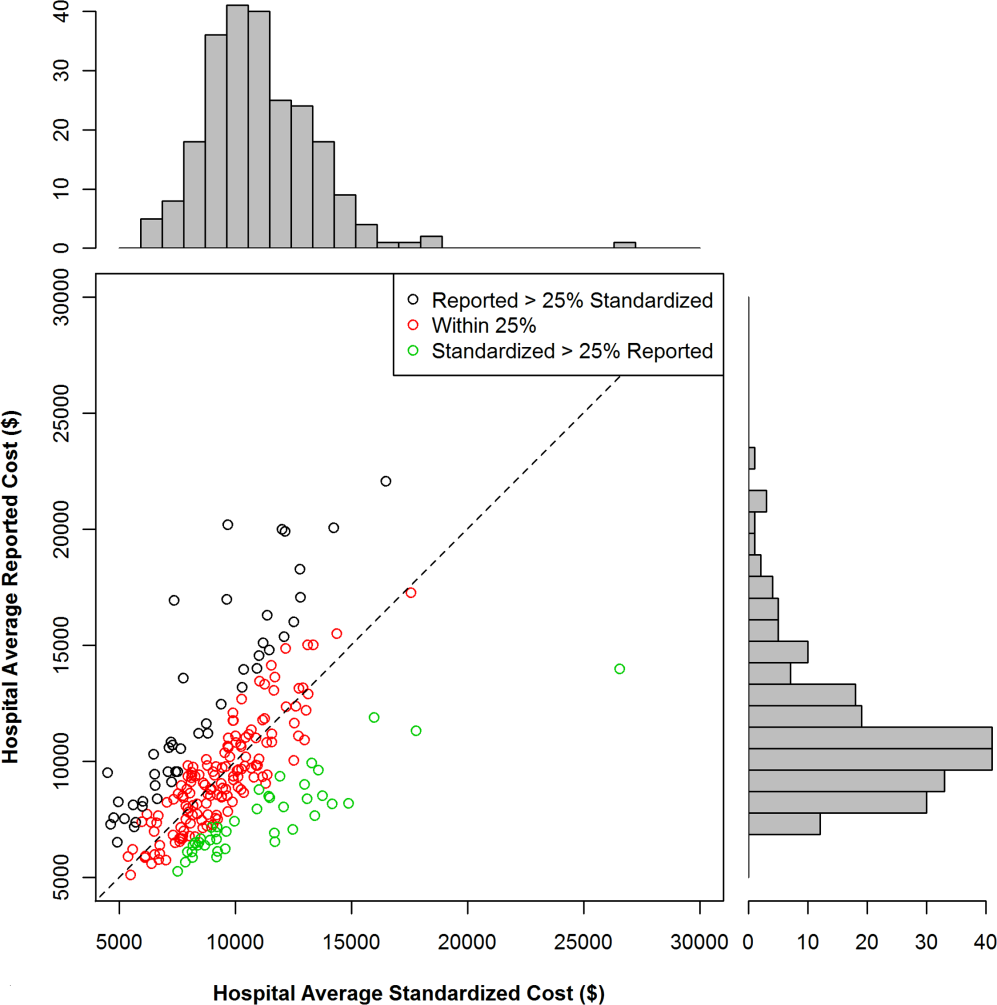

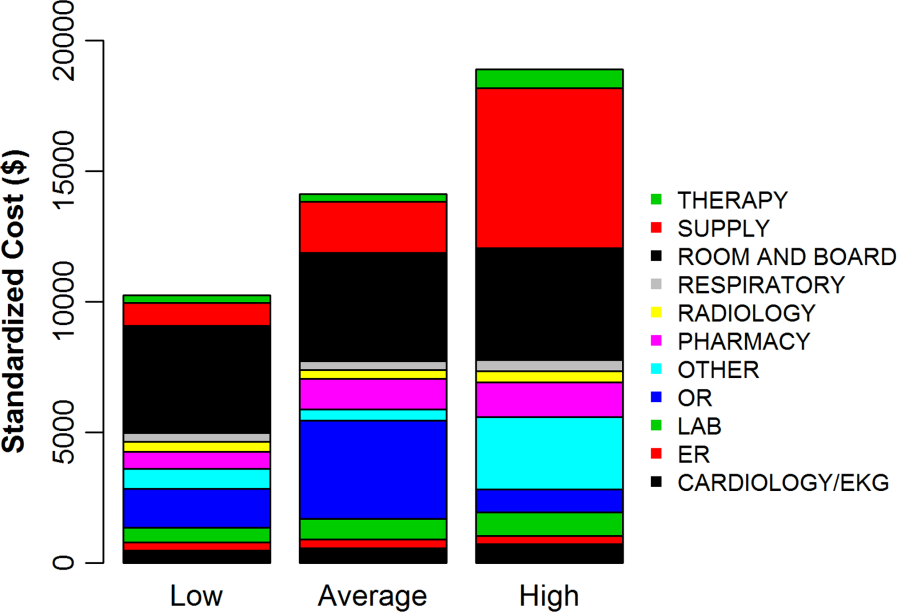

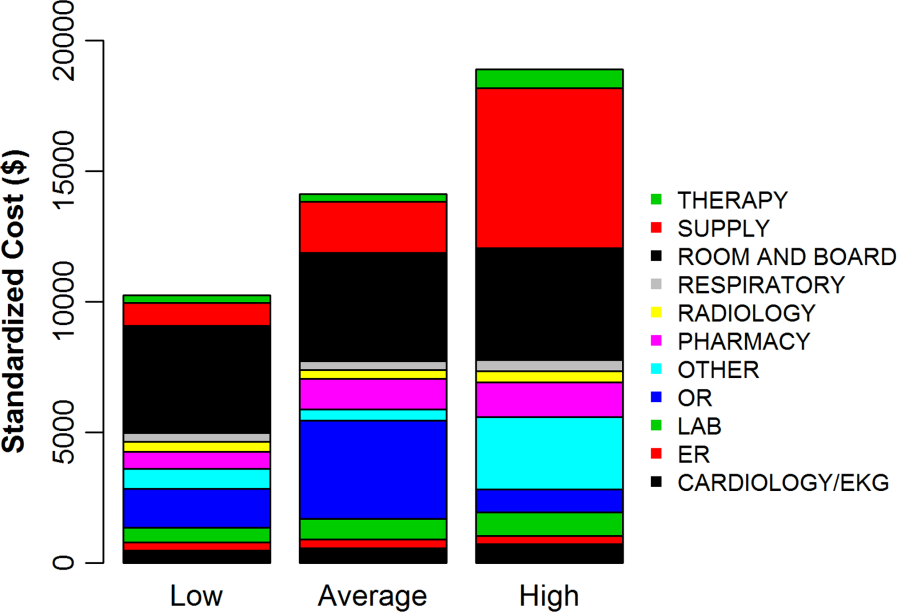

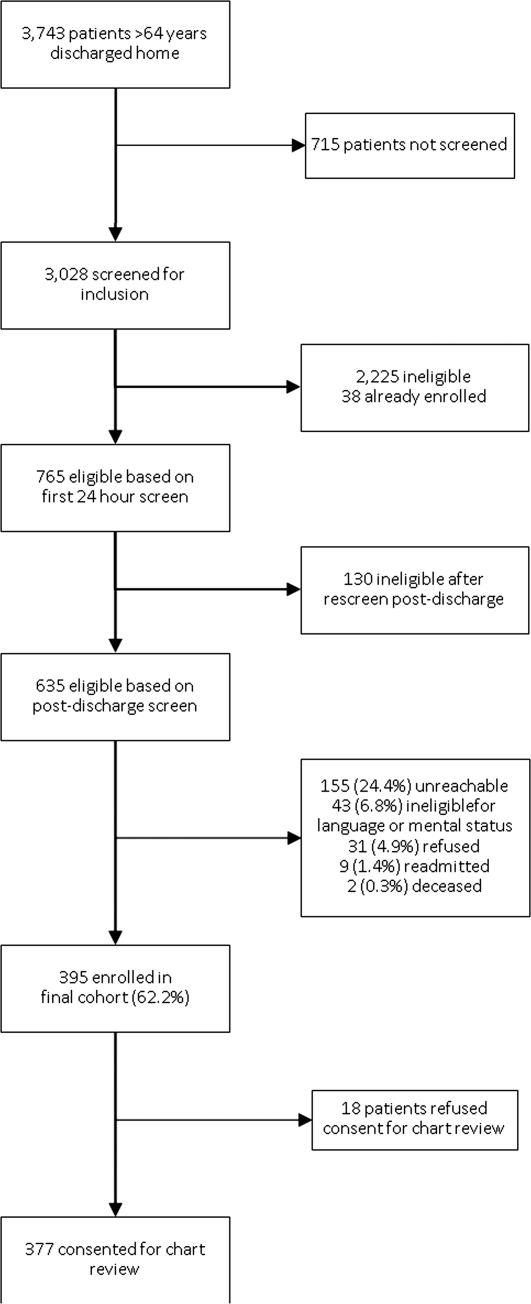

A large body of evidence stretching from bench to bedside suggests that environmental stressors associated with hospitalization are toxic. Understanding PHS within the context of hospital-induced allostatic overload presents a unifying theory for the interrelated multisystem dysfunction and increased susceptibility to adverse events that patients experience after discharge (Figure 2). Furthermore, it defines a potential pathophysiological mechanism for the cognitive impairment, elevated cardiovascular risk, immune system dysfunction, metabolic derangements, and functional decline associated with PHS. Additionally, this theory highlights environmental interventions to limit PHS development and suggests mechanisms to promote stress resilience. Although it is difficult to disentangle the consequences of the endogenous stress triggered by an acute illness from the exogenous stressors related to hospitalization, it is likely that the 2 simultaneous exposures compound risk for stress system dysregulation and allostatic overload. Moreover, hospitalized patients with preexisting HPA axis dysfunction at baseline from chronic disease or advancing age may be even more susceptible to these adverse outcomes. If this hypothesis is true, a reduction in PHS would require mitigation of the modifiable environmental stressors encountered by patients during hospitalization. Directed efforts to diminish ambient noise, limit nighttime disruptions, thoughtfully plan procedures, consider ongoing nutritional status, and promote opportunities for patients to exert some control over their environment may diminish the burden of extrinsic stressors encountered by all patients in the hospital and improve outcomes after discharge.

Hospitals are increasingly recognizing the importance of improving patients’ experience of hospitalization by reducing exposure to potential toxicities. For example, many hospitals are now attempting to reduce sleep disturbances and sleep latency through reduced nighttime noise and light levels, fewer nighttime interruptions for vital signs checks and medication administration, and commonsensical interventions like massages, herbal teas, and warm milk prior to bedtime.89 Likewise, intensive care units are targeting environmental and physical stressors with a multifaceted approach to decrease sedative use, promote healthy sleep cycles, and encourage exercise and ambulation even in those patients who are mechanically ventilated.30 Another promising development has been the increase of Hospital at Home programs. In these programs, patients who meet the criteria for inpatient admission are instead comprehensively managed at home for their acute illness through a multidisciplinary effort between physicians, nurses, social workers, physical therapists, and others. Patients hospitalized at home report higher levels of satisfaction and have modest functional gains, improved health-related quality of life, and decreased risk of mortality at 6 months compared with hospitalized patients.90,91 With some admitting diagnoses (eg, heart failure), hospitalization at home may be associated with decreased readmission risk.92 Although not yet investigated on a physiologic level, perhaps the benefits of hospital at home are partially due to the dramatic difference in exposure to environmental stressors.

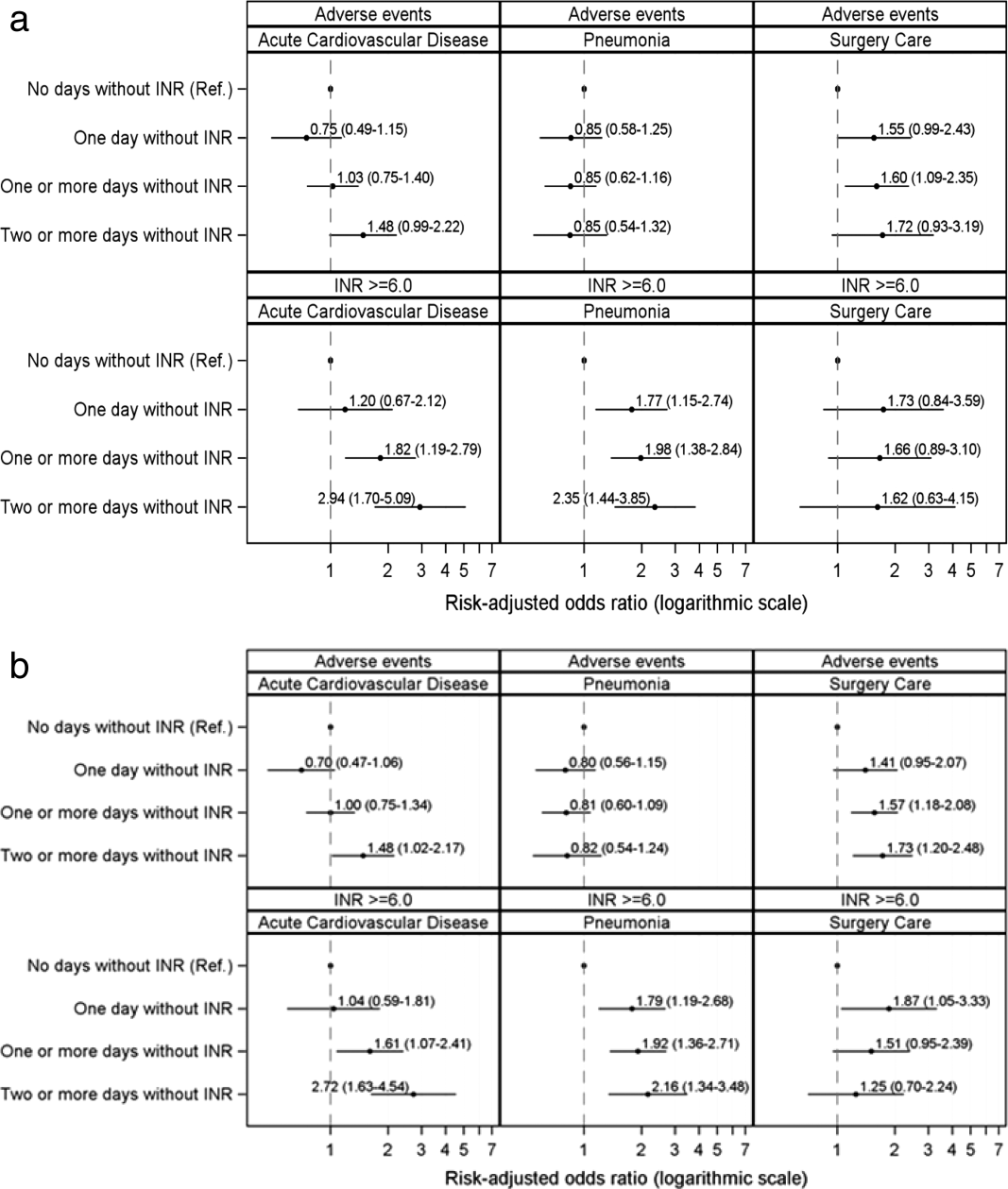

A tool that quantifies hospital-associated stress may help health providers appreciate the experience of patients and better target interventions to aspects of their structure and process that contribute to allostatic overload. Importantly, allostatic overload cannot be identified by one biomarker of stress but instead requires evidence of dysregulation across inflammatory, neuroendocrine, hormonal, and cardiometabolic systems. Future studies to address the burden of stress faced by hospitalized patients should consider a summative measure of multisystem dysregulation as opposed to isolated assessments of individual biomarkers. Allostatic load has previously been operationalized as the summation of a variety of hemodynamic, hormonal, and metabolic factors, including blood pressure, lipid profile, glycosylated hemoglobin, cortisol, catecholamine levels, and inflammatory markers.93 To develop a hospital-associated allostatic load index, models should ideally be adjusted for acute illness severity, patient-reported stress, and capacity for stress resilience. This tool could then be used to quantify hospitalization-related allostatic load and identify those at greatest risk for adverse events after discharge, as well as measure the effectiveness of strategic environmental interventions (Table 2). A natural first experiment may be a comparison of the allostatic load of hospitalized patients versus those hospitalized at home.
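As a purely illustrative sketch of what a summative measure could look like, the Python snippet below counts how many biomarkers fall in a high-risk range, which is one simple way such an index might be built. The function name, biomarker names, and cutoff values are hypothetical placeholders chosen for the example; they are not clinical thresholds and are not taken from the cited literature. The sketch also omits the adjustment for illness severity, patient-reported stress, and resilience described above.

```python
# Hypothetical cutoffs for illustration only; NOT validated clinical thresholds.
# Each entry maps a biomarker name to (cutoff, direction):
#   "high" -> values at or above the cutoff count as dysregulated
#   "low"  -> values at or below the cutoff count as dysregulated
ILLUSTRATIVE_CUTOFFS = {
    "systolic_bp_mmHg": (140.0, "high"),
    "hdl_cholesterol_mg_dL": (40.0, "low"),
    "hba1c_percent": (6.5, "high"),
    "cortisol_ug_dL": (20.0, "high"),
    "norepinephrine_pg_mL": (500.0, "high"),
    "crp_mg_L": (3.0, "high"),
}


def allostatic_load_score(measurements, cutoffs=ILLUSTRATIVE_CUTOFFS):
    """Return the number of measured biomarkers in the high-risk range.

    Biomarkers without a defined cutoff are ignored rather than guessed at.
    """
    score = 0
    for name, value in measurements.items():
        if name not in cutoffs:
            continue
        cutoff, direction = cutoffs[name]
        if direction == "high" and value >= cutoff:
            score += 1
        elif direction == "low" and value <= cutoff:
            score += 1
    return score


# Made-up example values, not patient data.
example_patient = {
    "systolic_bp_mmHg": 150.0,
    "hdl_cholesterol_mg_dL": 55.0,
    "hba1c_percent": 7.0,
    "cortisol_ug_dL": 12.0,
    "norepinephrine_pg_mL": 300.0,
    "crp_mg_L": 5.0,
}
print(allostatic_load_score(example_patient))
```

A count-based score of this kind treats every system equally and discards how far a value lies beyond its cutoff; a weighted or continuous index would be an alternative design, and the choice between them is an open question rather than something settled here.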

The risk of adverse outcomes after discharge is likely a function of the vulnerability of the patient and the degree to which the patient’s healthcare team and social support network mitigate this vulnerability. That is, there is a risk that a person struggles in the postdischarge period and, in many circumstances, a strong healthcare team and social network can identify health problems early and prevent them from progressing to the point that they require hospitalization.13,94-96 There are also hospital occurrences, outside of allostatic load, that can lead to complications that lengthen the stay, weaken the patient, and directly contribute to subsequent vulnerability.94,97 Our contention is that the allostatic load of hospitalization, which may also vary by patient depending on the circumstances of hospitalization, is just one contributor, albeit potentially an important one, to vulnerability to medical problems after discharge.

In conclusion, a plausible etiology of PHS is the maladaptive mind-body consequences of common stressors during hospitalization that compound the stress of acute illness and produce allostatic overload. This stress-induced dysfunction potentially contributes to a spectrum of generalized disease susceptibility and risk of adverse outcomes after discharge. Focused efforts to diminish patient exposure to hospital-related stressors during and after hospitalization might diminish the presence or severity of PHS. Viewing PHS from this perspective enables the development of hypothesis-driven risk-prediction models, encourages critical contemplation of traditional hospitalization, and suggests that targeted environmental interventions may significantly reduce adverse outcomes.

1. Dharmarajan K, Hsieh AF, Lin Z, et al. Diagnoses and timing of 30-day readmissions after hospitalization for heart failure, acute myocardial infarction, or pneumonia. JAMA. 2013;309(4):355-363. http://dx.doi.org/10.1001/jama.2012.216476.

2. Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418-1428. http://dx.doi.org/10.1056/NEJMsa0803563.

3. Ranasinghe I, Wang Y, Dharmarajan K, Hsieh AF, Bernheim SM, Krumholz HM. Readmissions after hospitalization for heart failure, acute myocardial infarction, or pneumonia among young and middle-aged adults: a retrospective observational cohort study. PLoS Med. 2014;11(9):e1001737. http://dx.doi.org/10.1371/journal.pmed.1001737.

4. Vashi AA, Fox JP, Carr BG, et al. Use of hospital-based acute care among patients recently discharged from the hospital. JAMA. 2013;309(4):364-371. http://dx.doi.org/10.1001/jama.2012.216219.

5. Dharmarajan K, Hsieh AF, Kulkarni VT, et al. Trajectories of risk after hospitalization for heart failure, acute myocardial infarction, or pneumonia: retrospective cohort study. BMJ. 2015;350:h411. http://dx.doi.org/10.1136/bmj.h411.

6. Krumholz HM. Post-hospital syndrome--an acquired, transient condition of generalized risk. N Engl J Med. 2013;368(2):100-102. http://dx.doi.org/10.1056/NEJMp1212324.

7. Ferrante LE, Pisani MA, Murphy TE, Gahbauer EA, Leo-Summers LS, Gill TM. Functional trajectories among older persons before and after critical illness. JAMA Intern Med. 2015;175(4):523-529. http://dx.doi.org/10.1001/jamainternmed.2014.7889.

8. Gill TM, Allore HG, Holford TR, Guo Z. Hospitalization, restricted activity, and the development of disability among older persons. JAMA. 2004;292(17):2115-2124. http://dx.doi.org/10.1001/jama.292.17.2115.

9. Inouye SK, Zhang Y, Han L, Leo-Summers L, Jones R, Marcantonio E. Recoverable cognitive dysfunction at hospital admission in older persons during acute illness. J Gen Intern Med. 2006;21(12):1276-1281. http://dx.doi.org/10.1111/j.1525-1497.2006.00613.x.

10. Lindquist LA, Go L, Fleisher J, Jain N, Baker D. Improvements in cognition following hospital discharge of community dwelling seniors. J Gen Intern Med. 2011;26(7):765-770. http://dx.doi.org/10.1007/s11606-011-1681-1.

11. Covinsky KE, Pierluissi E, Johnston CB. Hospitalization-associated disability: “She was probably able to ambulate, but I’m not sure.” JAMA. 2011;306(16):1782-1793. http://dx.doi.org/10.1001/jama.2011.1556.

12. Creditor MC. Hazards of hospitalization of the elderly. Ann Intern Med. 1993;118(3):219-223. http://dx.doi.org/10.7326/0003-4819-118-3-199302010-00011.

13. Dharmarajan K, Krumholz HM. Strategies to reduce 30-day readmissions in older patients hospitalized with heart failure and acute myocardial infarction. Curr Geriatr Rep. 2014;3(4):306-315. http://dx.doi.org/10.1007/s13670-014-0103-8.

14. McEwen BS. Protective and damaging effects of stress mediators. N Engl J Med. 1998;338(3):171-179. http://dx.doi.org/10.1056/NEJM199801153380307.

15. McEwen BS, Gianaros PJ. Stress- and allostasis-induced brain plasticity. Annu Rev Med. 2011;62:431-445. http://dx.doi.org/10.1146/annurev-med-052209-100430.

16. Dhabhar FS. Enhancing versus suppressive effects of stress on immune function: implications for immunoprotection and immunopathology. Neuroimmunomodulation. 2009;16(5):300-317. http://dx.doi.org/10.1159/000216188.

17. Thayer JF, Sternberg E. Beyond heart rate variability: vagal regulation of allostatic systems. Ann N Y Acad Sci. 2006;1088:361-372. http://dx.doi.org/10.1196/annals.1366.014.

18. Jacobson L, Akana SF, Cascio CS, Shinsako J, Dallman MF. Circadian variations in plasma corticosterone permit normal termination of adrenocorticotropin responses to stress. Endocrinology. 1988;122(4):1343-1348. http://dx.doi.org/10.1210/endo-122-4-1343.

19. McEwen BS. Physiology and neurobiology of stress and adaptation: central role of the brain. Physiol Rev. 2007;87(3):873-904. http://dx.doi.org/10.1152/physrev.00041.2006.

20. Medicare Hospital Quality Chartbook 2014: Performance Report on Outcome Measures. Prepared by Yale New Haven Health Services Corporation Center for Outcomes Research and Evaluation for Centers for Medicare & Medicaid Services. https://www.cms.gov/medicare/quality-initiatives-patient-assessment-instruments/hospitalqualityinits/downloads/medicare-hospital-quality-chartbook-2014.pdf. Accessed February 26, 2018.

21. Bueno H, Ross JS, Wang Y, et al. Trends in length of stay and short-term outcomes among Medicare patients hospitalized for heart failure, 1993-2006. JAMA. 2010;303(21):2141-2147. http://dx.doi.org/10.1001/jama.2010.748.

22. Drye EE, Normand SL, Wang Y, et al. Comparison of hospital risk-standardized mortality rates calculated by using in-hospital and 30-day models: an observational study with implications for hospital profiling. Ann Intern Med. 2012;156(1 Pt 1):19-26. http://dx.doi.org/10.7326/0003-4819-156-1-201201030-00004.

23. Dharmarajan K, Hsieh A, Dreyer RP, Welsh J, Qin L, Krumholz HM. Relationship between age and trajectories of rehospitalization risk in older adults. J Am Geriatr Soc. 2017;65(2):421-426. http://dx.doi.org/10.1111/jgs.14583.

24. Krumholz HM, Hsieh A, Dreyer RP, Welsh J, Desai NR, Dharmarajan K. Trajectories of risk for specific readmission diagnoses after hospitalization for heart failure, acute myocardial infarction, or pneumonia. PLoS One. 2016;11(10):e0160492. http://dx.doi.org/10.1371/journal.pone.0160492.

25. Needham DM, Davidson J, Cohen H, et al. Improving long-term outcomes after discharge from intensive care unit: report from a stakeholders’ conference. Crit Care Med. 2012;40(2):502-509. http://dx.doi.org/10.1097/CCM.0b013e318232da75.

26. Brinkman S, de Jonge E, Abu-Hanna A, Arbous MS, de Lange DW, de Keizer NF. Mortality after hospital discharge in ICU patients. Crit Care Med. 2013;41(5):1229-1236. http://dx.doi.org/10.1097/CCM.0b013e31827ca4e1.

27. Steenbergen S, Rijkenberg S, Adonis T, Kroeze G, van Stijn I, Endeman H. Long-term treated intensive care patients outcomes: the one-year mortality rate, quality of life, health care use and long-term complications as reported by general practitioners. BMC Anesthesiol. 2015;15:142. http://dx.doi.org/10.1186/s12871-015-0121-x.

28. Hill AD, Fowler RA, Pinto R, Herridge MS, Cuthbertson BH, Scales DC. Long-term outcomes and healthcare utilization following critical illness--a population-based study. Crit Care. 2016;20:76. http://dx.doi.org/10.1186/s13054-016-1248-y.

29. Jackson JC, Pandharipande PP, Girard TD, et al. Depression, post-traumatic stress disorder, and functional disability in survivors of critical illness in the BRAIN-ICU study: a longitudinal cohort study. Lancet Respir Med. 2014;2(5):369-379. http://dx.doi.org/10.1016/S2213-2600(14)70051-7.

30. Balas MC, Vasilevskis EE, Olsen KM, et al. Effectiveness and safety of the awakening and breathing coordination, delirium monitoring/management, and early exercise/mobility bundle. Crit Care Med. 2014;42(5):1024-1036. http://dx.doi.org/10.1097/CCM.0000000000000129.

31. Kress JP, Hall JB. ICU-acquired weakness and recovery from critical illness. N Engl J Med. 2014;370(17):1626-1635. http://dx.doi.org/10.1056/NEJMra1209390.

32. Mendez-Tellez PA, Needham DM. Early physical rehabilitation in the ICU and ventilator liberation. Respir Care. 2012;57(10):1663-1669. http://dx.doi.org/10.4187/respcare.01931.

33. Schweickert WD, Hall J. ICU-acquired weakness. Chest. 2007;131(5):1541-1549. http://dx.doi.org/10.1378/chest.06-2065.

34. Balbo M, Leproult R, Van Cauter E. Impact of sleep and its disturbances on hypothalamo-pituitary-adrenal axis activity. Int J Endocrinol. 2010;2010:759234. http://dx.doi.org/10.1155/2010/759234.

35. Spiegel K, Leproult R, Van Cauter E. Impact of sleep debt on metabolic and endocrine function. Lancet. 1999;354(9188):1435-1439. http://dx.doi.org/10.1016/S0140-6736(99)01376-8.

36. Orwelius L, Nordlund A, Nordlund P, Edell-Gustafsson U, Sjoberg F. Prevalence of sleep disturbances and long-term reduced health-related quality of life after critical care: a prospective multicenter cohort study. Crit Care. 2008;12(4):R97. http://dx.doi.org/10.1186/cc6973.

37. Buxton OM, Ellenbogen JM, Wang W, et al. Sleep disruption due to hospital noises: a prospective evaluation. Ann Intern Med. 2012;157(3):170-179. http://dx.doi.org/10.7326/0003-4819-157-3-201208070-00472.

38. Sunbul M, Kanar BG, Durmus E, Kivrak T, Sari I. Acute sleep deprivation is associated with increased arterial stiffness in healthy young adults. Sleep Breath. 2014;18(1):215-220. http://dx.doi.org/10.1007/s11325-013-0873-9.

39. Van Dongen HP, Maislin G, Mullington JM, Dinges DF. The cumulative cost of additional wakefulness: dose-response effects on neurobehavioral functions and sleep physiology from chronic sleep restriction and total sleep deprivation. Sleep. 2003;26(2):117-126. http://dx.doi.org/10.1093/sleep/26.2.117.

40. Franklin GA, McClave SA, Hurt RT, et al. Physician-delivered malnutrition: why do patients receive nothing by mouth or a clear liquid diet in a university hospital setting? JPEN J Parenter Enteral Nutr. 2011;35(3):337-342. http://dx.doi.org/10.1177/0148607110374060.

41. Sullivan DH, Sun S, Walls RC. Protein-energy undernutrition among elderly hospitalized patients: a prospective study. JAMA. 1999;281(21):2013-2019. http://dx.doi.org/10.1001/jama.281.21.2013.

42. Anderson DA, Shapiro JR, Lundgren JD, Spataro LE, Frye CA. Self-reported dietary restraint is associated with elevated levels of salivary cortisol. Appetite. 2002;38(1):13-17. http://dx.doi.org/10.1006/appe.2001.0459.

43. Ott V, Friedrich M, Prilop S, et al. Food anticipation and subsequent food withdrawal increase serum cortisol in healthy men. Physiol Behav. 2011;103(5):594-599. http://dx.doi.org/10.1016/j.physbeh.2011.04.020.

44. Covinsky KE, Martin GE, Beyth RJ, Justice AC, Sehgal AR, Landefeld CS. The relationship between clinical assessments of nutritional status and adverse outcomes in older hospitalized medical patients. J Am Geriatr Soc. 1999;47(5):532-538. http://dx.doi.org/10.1111/j.1532-5415.1999.tb02566.x.

45. Lazarus BA, Murphy JB, Coletta EM, McQuade WH, Culpepper L. The provision of physical activity to hospitalized elderly patients. Arch Intern Med. 1991;151(12):2452-2456.

46. Minnick AF, Mion LC, Johnson ME, Catrambone C, Leipzig R. Prevalence and variation of physical restraint use in acute care settings in the US. J Nurs Scholarsh. 2007;39(1):30-37. http://dx.doi.org/10.1111/j.1547-5069.2007.00140.x.

47. Zisberg A, Shadmi E, Sinoff G, Gur-Yaish N, Srulovici E, Admi H. Low mobility during hospitalization and functional decline in older adults. J Am Geriatr Soc. 2011;59(2):266-273. http://dx.doi.org/10.1111/j.1532-5415.2010.03276.x.

48. Landefeld CS, Palmer RM, Kresevic DM, Fortinsky RH, Kowal J. A randomized trial of care in a hospital medical unit especially designed to improve the functional outcomes of acutely ill older patients. N Engl J Med. 1995;332(20):1338-1344. http://dx.doi.org/10.1056/NEJM199505183322006.

49. Douglas CH, Douglas MR. Patient-friendly hospital environments: exploring the patients’ perspective. Health Expect. 2004;7(1):61-73. http://dx.doi.org/10.1046/j.1369-6513.2003.00251.x.

50. Volicer BJ. Hospital stress and patient reports of pain and physical status. J Human Stress. 1978;4(2):28-37. http://dx.doi.org/10.1080/0097840X.1978.9934984.

51. Willner P, Towell A, Sampson D, Sophokleous S, Muscat R. Reduction of sucrose preference by chronic unpredictable mild stress, and its restoration by a tricyclic antidepressant. Psychopharmacology (Berl). 1987;93(3):358-364. http://dx.doi.org/10.1007/BF00187257.

52. Grippo AJ, Francis J, Beltz TG, Felder RB, Johnson AK. Neuroendocrine and cytokine profile of chronic mild stress-induced anhedonia. Physiol Behav. 2005;84(5):697-706. http://dx.doi.org/10.1016/j.physbeh.2005.02.011.

53. Krishnan V, Nestler EJ. Animal models of depression: molecular perspectives. Curr Top Behav Neurosci. 2011;7:121-147. http://dx.doi.org/10.1007/7854_2010_108.

54. Magarinos AM, McEwen BS. Stress-induced atrophy of apical dendrites of hippocampal CA3c neurons: comparison of stressors. Neuroscience. 1995;69(1):83-88. http://dx.doi.org/10.1016/0306-4522(95)00256-I.

55. McEwen BS. Plasticity of the hippocampus: adaptation to chronic stress and allostatic load. Ann N Y Acad Sci. 2001;933:265-277. http://dx.doi.org/10.1111/j.1749-6632.2001.tb05830.x.

56. McEwen BS. The brain on stress: toward an integrative approach to brain, body, and behavior. Perspect Psychol Sci. 2013;8(6):673-675. http://dx.doi.org/10.1177/1745691613506907.

57. McEwen BS, Morrison JH. The brain on stress: vulnerability and plasticity of the prefrontal cortex over the life course. Neuron. 2013;79(1):16-29. http://dx.doi.org/10.1016/j.neuron.2013.06.028.

58. Dutta P, Courties G, Wei Y, et al. Myocardial infarction accelerates atherosclerosis. Nature. 2012;487(7407):325-329. http://dx.doi.org/10.1038/nature11260.

59. Lu XT, Liu YF, Zhang L, et al. Unpredictable chronic mild stress promotes atherosclerosis in high cholesterol-fed rabbits. Psychosom Med. 2012;74(6):604-611. http://dx.doi.org/10.1097/PSY.0b013e31825d0b71.

60. Heidt T, Sager HB, Courties G, et al. Chronic variable stress activates hematopoietic stem cells. Nat Med. 2014;20(7):754-758. http://dx.doi.org/10.1038/nm.3589.

61. Sheridan JF, Feng NG, Bonneau RH, Allen CM, Huneycutt BS, Glaser R. Restraint stress differentially affects anti-viral cellular and humoral immune responses in mice. J Neuroimmunol. 1991;31(3):245-255. http://dx.doi.org/10.1016/0165-5728(91)90046-A.

62. Kyrou I, Tsigos C. Stress hormones: physiological stress and regulation of metabolism. Curr Opin Pharmacol. 2009;9(6):787-793. http://dx.doi.org/10.1016/j.coph.2009.08.007.

63. Rosmond R. Role of stress in the pathogenesis of the metabolic syndrome. Psychoneuroendocrinology. 2005;30(1):1-10. http://dx.doi.org/10.1016/j.psyneuen.2004.05.007.

64. Tamashiro KL, Sakai RR, Shively CA, Karatsoreos IN, Reagan LP. Chronic stress, metabolism, and metabolic syndrome. Stress. 2011;14(5):468-474. http://dx.doi.org/10.3109/10253890.2011.606341.

65. McEwen BS. Mood disorders and allostatic load. Biol Psychiatry. 2003;54(3):200-207. http://dx.doi.org/10.1016/S0006-3223(03)00177-X.

66. Zareie M, Johnson-Henry K, Jury J, et al. Probiotics prevent bacterial translocation and improve intestinal barrier function in rats following chronic psychological stress. Gut. 2006;55(11):1553-1560. http://dx.doi.org/10.1136/gut.2005.080739.

67. Joachim RA, Quarcoo D, Arck PC, Herz U, Renz H, Klapp BF. Stress enhances airway reactivity and airway inflammation in an animal model of allergic bronchial asthma. Psychosom Med. 2003;65(5):811-815. http://dx.doi.org/10.1097/01.PSY.0000088582.50468.A3.

68. Thaker PH, Han LY, Kamat AA, et al. Chronic stress promotes tumor growth and angiogenesis in a mouse model of ovarian carcinoma. Nat Med. 2006;12(8):939-944. http://dx.doi.org/10.1038/nm1447.

69. Schreuder L, Eggen BJ, Biber K, Schoemaker RG, Laman JD, de Rooij SE. Pathophysiological and behavioral effects of systemic inflammation in aged and diseased rodents with relevance to delirium: A systematic review. Brain Behav Immun. 2017;62:362-381. http://dx.doi.org/10.1016/j.bbi.2017.01.010.

70. Mu DL, Li LH, Wang DX, et al. High postoperative serum cortisol level is associated with increased risk of cognitive dysfunction early after coronary artery bypass graft surgery: a prospective cohort study. PLoS One. 2013;8(10):e77637. http://dx.doi.org/10.1371/journal.pone.0077637.

71. Mu DL, Wang DX, Li LH, et al. High serum cortisol level is associated with increased risk of delirium after coronary artery bypass graft surgery: a prospective cohort study. Crit Care. 2010;14(6):R238. http://dx.doi.org/10.1186/cc9393.

72. Nguyen DN, Huyghens L, Zhang H, Schiettecatte J, Smitz J, Vincent JL. Cortisol is an associated-risk factor of brain dysfunction in patients with severe sepsis and septic shock. Biomed Res Int. 2014;2014:712742. http://dx.doi.org/10.1155/2014/712742.

73. Elkind MS, Carty CL, O’Meara ES, et al. Hospitalization for infection and risk of acute ischemic stroke: the Cardiovascular Health Study. Stroke. 2011;42(7):1851-1856. http://dx.doi.org/10.1161/STROKEAHA.110.608588.

74. Feibel JH, Hardy PM, Campbell RG, Goldstein MN, Joynt RJ. Prognostic value of the stress response following stroke. JAMA. 1977;238(13):1374-1376.

75. Jutla SK, Yuyun MF, Quinn PA, Ng LL. Plasma cortisol and prognosis of patients with acute myocardial infarction. J Cardiovasc Med (Hagerstown). 2014;15(1):33-41. http://dx.doi.org/10.2459/JCM.0b013e328364100b.

76. Yende S, D’Angelo G, Kellum JA, et al. Inflammatory markers at hospital discharge predict subsequent mortality after pneumonia and sepsis. Am J Respir Crit Care Med. 2008;177(11):1242-1247. http://dx.doi.org/10.1164/rccm.200712-1777OC.

77. Gouin JP, Kiecolt-Glaser JK. The impact of psychological stress on wound healing: methods and mechanisms. Immunol Allergy Clin North Am. 2011;31(1):81-93. http://dx.doi.org/10.1016/j.iac.2010.09.010.

78. Capes SE, Hunt D, Malmberg K, Gerstein HC. Stress hyperglycaemia and increased risk of death after myocardial infarction in patients with and without diabetes: a systematic overview. Lancet. 2000;355(9206):773-778. http://dx.doi.org/10.1016/S0140-6736(99)08415-9.

79. O’Neill PA, Davies I, Fullerton KJ, Bennett D. Stress hormone and blood glucose response following acute stroke in the elderly. Stroke. 1991;22(7):842-847. http://dx.doi.org/10.1161/01.STR.22.7.842.

80. Waterer GW, Kessler LA, Wunderink RG. Medium-term survival after hospitalization with community-acquired pneumonia. Am J Respir Crit Care Med. 2004;169(8):910-914. http://dx.doi.org/10.1164/rccm.200310-1448OC.

81. Rosengren A, Freden M, Hansson PO, Wilhelmsen L, Wedel H, Eriksson H. Psychosocial factors and venous thromboembolism: a long-term follow-up study of Swedish men. J Thromb Haemost. 2008;6(4):558-564. http://dx.doi.org/10.1111/j.1538-7836.2007.02857.x.

82. Oswald GA, Smith CC, Betteridge DJ, Yudkin JS. Determinants and importance of stress hyperglycaemia in non-diabetic patients with myocardial infarction. BMJ. 1986;293(6552):917-922. http://dx.doi.org/10.1136/bmj.293.6552.917.

83. Middlekauff HR, Nguyen AH, Negrao CE, et al. Impact of acute mental stress on sympathetic nerve activity and regional blood flow in advanced heart failure: implications for ‘triggering’ adverse cardiac events. Circulation. 1997;96(6):1835-1842. http://dx.doi.org/10.1161/01.CIR.96.6.1835.

84. Nijm J, Jonasson L. Inflammation and cortisol response in coronary artery disease. Ann Med. 2009;41(3):224-233. http://dx.doi.org/10.1080/07853890802508934.

85. Steptoe A, Hackett RA, Lazzarino AI, et al. Disruption of multisystem responses to stress in type 2 diabetes: investigating the dynamics of allostatic load. Proc Natl Acad Sci U S A. 2014;111(44):15693-15698. http://dx.doi.org/10.1073/pnas.1410401111.

86. Sepehri A, Beggs T, Hassan A, et al. The impact of frailty on outcomes after cardiac surgery: a systematic review. J Thorac Cardiovasc Surg. 2014;148(6):3110-3117. http://dx.doi.org/10.1016/j.jtcvs.2014.07.087.

87. Johar H, Emeny RT, Bidlingmaier M, et al. Blunted diurnal cortisol pattern is associated with frailty: a cross-sectional study of 745 participants aged 65 to 90 years. J Clin Endocrinol Metab. 2014;99(3):E464-468. http://dx.doi.org/10.1210/jc.2013-3079.

88. Yao X, Li H, Leng SX. Inflammation and immune system alterations in frailty. Clin Geriatr Med. 2011;27(1):79-87. http://dx.doi.org/10.1016/j.cger.2010.08.002.

89. Hospital Elder Life Program (HELP) for Prevention of Delirium. 2017; http://www.hospitalelderlifeprogram.org/. Accessed February 16, 2018.

90. Shepperd S, Doll H, Angus RM, et al. Admission avoidance hospital at home. Cochrane Database Syst Rev. 2008;(4):CD007491. http://dx.doi.org/10.1002/14651858.CD007491.pub2.

91. Leff B, Burton L, Mader SL, et al. Comparison of functional outcomes associated with hospital at home care and traditional acute hospital care. J Am Geriatr Soc. 2009;57(2):273-278. http://dx.doi.org/10.1111/j.1532-5415.2008.02103.x.

92. Qaddoura A, Yazdan-Ashoori P, Kabali C, et al. Efficacy of hospital at home in patients with heart failure: a systematic review and meta-analysis. PLoS One. 2015;10(6):e0129282. http://dx.doi.org/10.1371/journal.pone.0129282.

93. Seeman T, Gruenewald T, Karlamangla A, et al. Modeling multisystem biological risk in young adults: The Coronary Artery Risk Development in Young Adults Study. Am J Hum Biol. 2010;22(4):463-472. http://dx.doi.org/10.1002/ajhb.21018.

94. Auerbach AD, Kripalani S, Vasilevskis EE, et al. Preventability and causes of readmissions in a national cohort of general medicine patients. JAMA Intern Med. 2016;176(4):484-493. http://dx.doi.org/10.1001/jamainternmed.2015.7863.

95. Hansen LO, Young RS, Hinami K, Leung A, Williams MV. Interventions to reduce 30-day rehospitalization: a systematic review. Ann Intern Med. 2011;155(8):520-528. http://dx.doi.org/10.7326/0003-4819-155-8-201110180-00008.

96. Takahashi PY, Naessens JM, Peterson SM, et al. Short-term and long-term effectiveness of a post-hospital care transitions program in an older, medically complex population. Healthcare. 2016;4(1):30-35. http://dx.doi.org/10.1016/j.hjdsi.2015.06.006.

97. Dharmarajan K, Swami S, Gou RY, Jones RN, Inouye SK. Pathway from delirium to death: potential in-hospital mediators of excess mortality. J Am Geriatr Soc. 2017;65(5):1026-1033. http://dx.doi.org/10.1111/jgs.14743.

After discharge from the hospital, patients have a significantly elevated risk for adverse events, including emergency department use, hospital readmission, and death. More than 1 in 3 patients discharged from the hospital require acute care in the month after hospital discharge, and more than 1 in 6 require readmission, with readmission diagnoses frequently differing from those of the preceding hospitalization.1-4 This heightened susceptibility to adverse events persists beyond 30 days but levels off by 7 weeks after discharge, suggesting that the period of increased risk is transient and dynamic.5

The term posthospital syndrome (PHS) describes this period of vulnerability to major adverse events following hospitalization.6 In addition to increased risk for readmission and mortality, patients in this period often show evidence of generalized dysfunction with new cognitive impairment, mobility disability, or functional decline.7-12 To date, the etiology of this vulnerability is neither well understood nor effectively addressed by transitional care interventions.13

One hypothesis to explain PHS is that stressors associated with the experience of hospitalization contribute to transient multisystem dysfunction that induces susceptibility to a broad range of medical maladies. These stressors include frequent sleep disruption, noxious sounds, painful stimuli, mobility restrictions, and poor nutrition.12 The stress hypothesis as a cause of PHS is therefore based, in large part, on evidence about allostasis and the deleterious effects of allostatic overload.

Allostasis defines a system functioning within normal stress-response parameters to promote adaptation and survival.14 In allostasis, the hypothalamic-pituitary-adrenal (HPA) axis and the sympathetic and parasympathetic branches of the autonomic nervous system (ANS) exist in homeostatic balance and respond to environmental stimuli within a range of healthy physiologic parameters. The hallmark of a system in allostasis is the ability to rapidly activate, then successfully deactivate, a stress response once the stressor (ie, threat) has resolved.14,15 To promote survival and potentiate “fight or flight” mechanisms, an appropriate stress response necessarily impacts multiple physiologic systems that result in hemodynamic augmentation and gluconeogenesis to support the anticipated action of large muscle groups, heightened vigilance and memory capabilities to improve rapid decision-making, and enhancement of innate and adaptive immune capabilities to prepare for wound repair and infection defense.14-16 The stress response is subsequently terminated by negative feedback mechanisms of glucocorticoids as well as a shift of the ANS from sympathetic to parasympathetic tone.17,18

Extended or repetitive stress exposure, however, leads to dysregulation of allostatic mechanisms responsible for stress adaptation and hinders an efficient and effective stress response. After extended stress exposure, baseline (ie, resting) HPA activity resets, causing a disruption of normal diurnal cortisol rhythm and an increase in total cortisol concentration. Moreover, in response to stress, HPA and ANS system excitation becomes impaired, and negative feedback properties are undermined.14,15 This maladaptive state, known as allostatic overload, disrupts the finely tuned mechanisms that are the foundation of mind-body balance and yields pathophysiologic consequences to multiple organ systems. Downstream ramifications of allostatic overload include cognitive deterioration, cardiovascular and immune system dysfunction, and functional decline.14,15,19

Although a stress response is an expected and necessary aspect of acute illness that promotes survival, the central thesis of this work is that additional environmental and social stressors inherent in hospitalization may unnecessarily compound stress and increase the risk of HPA axis dysfunction, allostatic overload, and subsequent multisystem dysfunction, predisposing individuals to adverse outcomes after hospital discharge. Based on data from both human subjects and animal models, we present a possible pathophysiologic mechanism for the postdischarge vulnerability of PHS, encourage critical contemplation of traditional hospitalization, and suggest interventions that might improve outcomes.

POSTHOSPITAL SYNDROME

Posthospital syndrome (PHS) describes a transient period of vulnerability after hospitalization during which patients are at elevated risk for adverse events from a broad range of conditions. In support of this characterization, epidemiologic data have demonstrated high rates of adverse outcomes following hospitalization. For example, data have shown that more than 1 in 6 older adults is readmitted to the hospital within 30 days of discharge.20 Death is also common in this first month, during which rates of postdischarge mortality may exceed initial inpatient mortality.21,22 Elevated vulnerability after hospitalization is not restricted to older adults, as readmission risk among younger patients 18 to 64 years of age may be even higher for selected conditions, such as heart failure.3,23

Vulnerability after hospitalization is broad. In patients over age 65 initially admitted for heart failure or acute myocardial infarction, only 35% and 10% of readmissions are for recurrent heart failure or reinfarction, respectively.1 Nearly half of readmissions are for noncardiovascular causes.1 Similarly, following hospitalization for pneumonia, more than 60% of readmissions are for nonpulmonary etiologies. Moreover, the risk for all these causes of readmission is much higher than baseline risk, indicating an extended period of reduced resilience to many types of illness.24 These patterns of broad susceptibility also extend to younger adults hospitalized with common medical conditions.3

Accumulating evidence suggests that hospitalized patients face functional decline, debility, and risk for adverse events despite resolution of the presenting illness, implying perhaps that the hospital environment itself is hazardous to patients’ health. In 1993, Creditor hypothesized that the “hazards of hospitalization,” including enforced bed rest, sensory deprivation, social isolation, and malnutrition, lead to a “cascade of dependency” in which a collection of small insults to multiple organ systems precipitates loss of function and debility despite cure or resolution of the presenting illness.12 Covinsky (2011) later defined hospitalization-associated disability as an iatrogenic hospital-related “disorder” characterized by new impairments in the ability to perform basic activities of daily living, such as bathing, feeding, toileting, dressing, transferring, and walking, at the time of hospital discharge.11 Others have described a postintensive-care syndrome (PICS),25 characterized by cognitive, psychiatric, and physical impairments acquired during hospitalization for critical illness that persist postdischarge and increase the long-term risk for adverse outcomes, including elevated mortality rates,26,27 readmission rates,28 and physical disabilities.29 Similar to the “hazards of hospitalization,” PICS is thought to be related to common experiences of ICU stays, including mobility restriction, sensory deprivation, sleep disruption, sedation, malnutrition, and polypharmacy.30-33

Taken together, these data suggest that adverse health consequences attributable to hospitalization extend across the spectrum of age, presenting disease severity, and hospital treatment location. As detailed below, the PHS hypothesis is rooted in a mechanistic understanding of the role of exogenous stressors in producing physiologic dysregulation and subsequent adverse health effects across multiple organ systems.

Nature of Stress in the Hospital

Compounding the stress of acute illness, hospitalized patients are routinely and repetitively exposed to a wide variety of environmental stressors that may have downstream adverse consequences (Table 1). In the absence of overt clinical manifestations of harm, the possible subclinical physiologic dysfunction generated by the following stress exposures may increase patients’ susceptibility to the manifestations of PHS.

Sleep Disruption

Sleep disruptions trigger potent stress responses,34,35 yet they are common occurrences during hospitalization. In surveys, about half of patients report poor sleep quality during hospitalization that persists for many months after discharge.36 In a simulated hospital setting, test subjects exposed to typical hospital sounds (paging system, machine alarms, etc.) experienced significant sleep-wake cycle abnormalities.37 Although no work has yet focused specifically on the physiologic consequences of sleep disruption and stress in hospitalized patients, in healthy humans, mild sleep disruption has clear effects on allostasis by disrupting HPA activity, raising cortisol levels, diminishing parasympathetic tone, and impairing cognitive performance.18,34,35,38,39

Malnourishment

Malnourishment in hospitalized patients is common, with one-fifth of hospitalized patients receiving nothing by mouth or clear liquid diets for more than 3 continuous days,40 and one-fifth of hospitalized elderly patients receiving less than half of their calculated nutrition requirements.41 Although the relationship between food restriction, cortisol levels, and postdischarge outcomes has not been fully explored, in healthy humans, meal anticipation, meal withdrawal (withholding an expected meal), and self-reported dietary restraint are known to generate stress responses.42,43 Furthermore, malnourishment during hospitalization is associated with increased 90-day and 1-year mortality after discharge,44 adding malnourishment to the list of plausible components of hospital-related stress.

Mobility Restriction

Physical activity counterbalances stress responses and minimizes downstream consequences of allostatic load,15 yet mobility limitations via physical and chemical restraints are common in hospitalized patients, particularly among the elderly.45-47 Many patients are tethered to devices that make ambulation hazardous, such as urinary catheters and infusion pumps. Even without physical or chemical restraints or a limited mobility order, patients may be hesitant to leave the room so as not to miss transport to a diagnostic study or an unscheduled physician’s visit. Indeed, mobility limitations of hospitalized patients increase the risk for adverse events after discharge, while interventions designed to encourage mobility are associated with improved postdischarge outcomes.47,48

Other Stressors

Other hospital-related aversive stimuli are less commonly quantified, but clearly exist. According to surveys of hospitalized patients, sources of emotional stress include social isolation; loss of autonomy and privacy; fear of serious illness; lack of control over activities of daily living; lack of clear communication between treatment team and patients; and death of a patient roommate.49,50 Furthermore, consider the physical discomfort and emotional distress of patients with urinary incontinence awaiting assistance for a diaper or bedding change or the pain of repetitive blood draws or other invasive testing. Although individualized, the subjective discomfort and emotional distress associated with these experiences undoubtedly contribute to the stress of hospitalization.

IMPACT OF ALLOSTATIC OVERLOAD ON PHYSIOLOGIC FUNCTION

Animal Models of Stress

Laboratory techniques reminiscent of the numerous environmental stressors associated with hospitalization have been used to reliably trigger allostatic overload in healthy young animals.51 These techniques include sequential exposure to aversive stimuli, including food and water deprivation, continuous overnight illumination, paired housing with known and unknown cagemates, mobility restriction, soiled cage conditions, and continuous noise. All of these techniques have been shown to cause HPA axis and ANS dysfunction, allostatic overload, and subsequent stress-mediated consequences to multiple organ systems.19,52-54 Given the remarkable similarity of these protocols to common experiences during hospitalization, animal models of stress may be useful in understanding the spectrum of maladaptive consequences experienced by patients within the hospital (Figure 1).

These animal models of stress have resulted in a number of instructive findings. For example, in rodents, extended stress exposure induces structural and functional remodeling of neuronal networks that precipitates impairments in learning and memory, working memory, and attention.55-57 These exposures also result in cardiovascular abnormalities, including dyslipidemia, progressive atherosclerosis,58,59 and enhanced inflammatory cytokine expression,60 all of which increase both atherosclerotic burden and susceptibility to plaque rupture, leading to elevated risk for major cardiovascular adverse events. Moreover, these extended stress exposures in animals increase susceptibility to both bacterial and viral infections and increase their severity.16,61 This outcome appears to be driven by a stress-induced elevation of glucocorticoid levels, decreased leukocyte proliferation, altered leukocyte trafficking, and a transition to a proinflammatory cytokine environment.16,61 Allostatic overload has also been shown to contribute to metabolic dysregulation involving insulin resistance, persistence of hyperglycemia, dyslipidemia, catabolism of lean muscle, and visceral adipose tissue deposition.62-64 In addition to cardiovascular, immune, and metabolic consequences of allostatic overload, the spectrum of physiologic dysfunction in animal models is broad and includes mood disorder symptoms,65 intestinal barrier abnormalities,66 airway reactivity exacerbation,67 and enhanced tumor growth.68

Although the majority of this research highlights the multisystem effects of variable stress exposure in healthy animals, preliminary evidence suggests that aged or diseased animals subjected to additional stressors display a heightened inflammatory cytokine response that contributes to exaggerated sickness behavior and greater and prolonged cognitive deficits.69 Future studies exploring the consequences of extended stress exposure in animals with existing disease or debility may therefore more closely simulate the experience of hospitalized patients and perhaps further our understanding of PHS.

Hospitalized Patients

While no intervention studies have examined the effects of potential hospital stressors on the development of allostatic overload, there is evidence from small studies that dysregulated stress responses during hospitalization are associated with adverse events. For example, high serum cortisol, catecholamine, and proinflammatory cytokine levels during hospitalization have individually been associated with the development of cognitive dysfunction,70-72 increased risk of cardiovascular events such as myocardial infarction and stroke in the year following discharge,73-76 and the development of wound infections after discharge.77 Moreover, elevated plasma glucose during admission for myocardial infarction in patients with or without diabetes has been associated with greater in-hospital and 1-year mortality,78 with a similar relationship seen between elevated plasma glucose and survival after admission for stroke79 and pneumonia.80 Furthermore, in addition to atherothrombosis, stress may contribute to the risk for venous thromboembolism,81 resulting in readmissions for deep vein thrombosis or pulmonary embolism posthospitalization. Although these stress biomarkers could simply be surrogate markers of illness acuity, a handful of studies have shown that they are only weakly correlated with,82 or independent of,72,76 disease severity. As discussed in detail below, future studies utilizing a summative measure of multisystem physiologic dysfunction as opposed to individual biomarkers may more accurately reflect the cumulative stress effects of hospitalization and subsequent risk for adverse events.

Additional Considerations

Elderly patients, in particular, may have heightened susceptibility to the consequences of allostatic overload due to common geriatric issues such as multimorbidity and frailty. Patients with chronic diseases display both baseline HPA axis abnormalities and dysregulated stress responses and may therefore be more vulnerable to hospitalization-related stress. For example, when subjected to psychosocial stress, patients with chronic conditions such as diabetes, heart failure, or atherosclerosis demonstrate elevated cortisol levels, increased circulating markers of inflammation, and prolonged hemodynamic recovery after stress resolution compared with normal controls.83-85 Additionally, frailty may affect an individual’s susceptibility to exogenous stress. Indeed, frailty identified on hospital admission increases the risk for adverse outcomes during hospitalization and postdischarge.86 Although the specific etiology of this relationship is unclear, persons with frailty are known to have elevated levels of cortisol and inflammatory markers,87,88 which may contribute to adverse outcomes in the face of additional stressors.

IMPLICATIONS AND NEXT STEPS

A large body of evidence stretching from bench to bedside suggests that environmental stressors associated with hospitalization are toxic. Understanding PHS within the context of hospital-induced allostatic overload presents a unifying theory for the interrelated multisystem dysfunction and increased susceptibility to adverse events that patients experience after discharge (Figure 2). Furthermore, it defines a potential pathophysiological mechanism for the cognitive impairment, elevated cardiovascular risk, immune system dysfunction, metabolic derangements, and functional decline associated with PHS. Additionally, this theory highlights environmental interventions to limit PHS development and suggests mechanisms to promote stress resilience. Although it is difficult to disentangle the consequences of the endogenous stress triggered by an acute illness from the exogenous stressors related to hospitalization, it is likely that the 2 simultaneous exposures compound risk for stress system dysregulation and allostatic overload. Moreover, hospitalized patients with preexisting HPA axis dysfunction from chronic disease or advancing age may be even more susceptible to these adverse outcomes. If this hypothesis is true, a reduction in PHS would require mitigation of the modifiable environmental stressors encountered by patients during hospitalization. Directed efforts to diminish ambient noise, limit nighttime disruptions, thoughtfully plan procedures, consider ongoing nutritional status, and promote opportunities for patients to exert some control over their environment may diminish the burden of extrinsic stressors encountered by all patients in the hospital and improve outcomes after discharge.

Hospitals are increasingly recognizing the importance of improving patients’ experience of hospitalization by reducing exposure to potential toxicities. For example, many hospitals are now attempting to reduce sleep disturbances and sleep latency through reduced nighttime noise and light levels, fewer nighttime interruptions for vital signs checks and medication administration, and commonsensical interventions like massages, herbal teas, and warm milk prior to bedtime.89 Likewise, intensive care units are targeting environmental and physical stressors with a multifaceted approach to decrease sedative use, promote healthy sleep cycles, and encourage exercise and ambulation even in those patients who are mechanically ventilated.30 Another promising development has been the increase of Hospital at Home programs. In these programs, patients who meet the criteria for inpatient admission are instead comprehensively managed at home for their acute illness through a multidisciplinary effort between physicians, nurses, social workers, physical therapists, and others. Patients hospitalized at home report higher levels of satisfaction and have modest functional gains, improved health-related quality of life, and decreased risk of mortality at 6 months compared with hospitalized patients.90,91 With some admitting diagnoses (eg, heart failure), hospitalization at home may be associated with decreased readmission risk.92 Although not yet investigated on a physiologic level, perhaps the benefits of hospital at home are partially due to the dramatic difference in exposure to environmental stressors.

A tool that quantifies hospital-associated stress may help health providers appreciate the experience of patients and better target interventions to the aspects of hospital structure and process that contribute to allostatic overload. Importantly, allostatic overload cannot be identified by one biomarker of stress but instead requires evidence of dysregulation across inflammatory, neuroendocrine, hormonal, and cardiometabolic systems. Future studies to address the burden of stress faced by hospitalized patients should consider a summative measure of multisystem dysregulation as opposed to isolated assessments of individual biomarkers. Allostatic load has previously been operationalized as the summation of a variety of hemodynamic, hormonal, and metabolic factors, including blood pressure, lipid profile, glycosylated hemoglobin, cortisol, catecholamine levels, and inflammatory markers.93 To develop a hospital-associated allostatic load index, models should ideally be adjusted for acute illness severity, patient-reported stress, and capacity for stress resilience. This tool could then be used to quantify hospitalization-related allostatic load and identify those at greatest risk for adverse events after discharge, as well as measure the effectiveness of strategic environmental interventions (Table 2). A natural first experiment may be a comparison of the allostatic load of hospitalized patients versus those hospitalized at home.
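As an illustration of what a summative measure might look like, the following minimal sketch counts how many biomarkers fall in a high-risk range for a given patient. It is not the index proposed here or the method of any cited study. The biomarker names, the choice of cohort quartiles as cutoffs, and the function names `risk_cutoffs` and `allostatic_load_index` are all assumptions made for illustration, and the sketch omits the adjustments for illness severity, patient-reported stress, and resilience discussed above.

```python
from statistics import quantiles

# Hypothetical biomarker panel (illustrative only). The direction says whether
# unusually high or unusually low values are treated as the high-risk tail.
HIGH_RISK_DIRECTION = {
    "systolic_bp": "high",
    "hba1c": "high",
    "cortisol": "high",
    "crp": "high",
    "hdl_cholesterol": "low",
}


def risk_cutoffs(cohort):
    """Derive one cutoff per biomarker from the cohort's own quartiles.

    Assumption: the upper quartile marks risk for "high" markers and the
    lower quartile marks risk for "low" markers.
    """
    cutoffs = {}
    for marker, direction in HIGH_RISK_DIRECTION.items():
        values = [patient[marker] for patient in cohort]
        q1, _, q3 = quantiles(values, n=4)  # three quartile cut points
        cutoffs[marker] = q3 if direction == "high" else q1
    return cutoffs


def allostatic_load_index(patient, cutoffs):
    """Count the biomarkers that fall beyond their high-risk cutoff."""
    score = 0
    for marker, direction in HIGH_RISK_DIRECTION.items():
        value = patient[marker]
        if direction == "high" and value > cutoffs[marker]:
            score += 1
        elif direction == "low" and value < cutoffs[marker]:
            score += 1
    return score
```

In use, `risk_cutoffs` would be called once on a reference cohort (a list of dictionaries keyed by biomarker name), and `allostatic_load_index` would then be applied to each patient. The equal weighting of biomarkers is a simplification; a real index could weight systems differently.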

The risk of adverse outcomes after discharge is likely a function of the vulnerability of the patient and the degree to which the patient’s healthcare team and social support network mitigate this vulnerability. That is, there is a risk that a person struggles in the postdischarge period and, in many circumstances, a strong healthcare team and social network can identify health problems early and prevent them from progressing to the point that they require hospitalization.13,94-96 There are also hospital occurrences, outside of allostatic load, that can lead to complications that lengthen the stay, weaken the patient, and directly contribute to subsequent vulnerability.94,97 Our contention is that the allostatic load of hospitalization, which may also vary by patient depending on the circumstances of hospitalization, is just one contributor, albeit potentially an important one, to vulnerability to medical problems after discharge.

In conclusion, a plausible etiology of PHS is the maladaptive mind-body consequences of common stressors during hospitalization that compound the stress of acute illness and produce allostatic overload. This stress-induced dysfunction potentially contributes to a spectrum of generalized disease susceptibility and risk of adverse outcomes after discharge. Focused efforts to diminish patient exposure to hospital-related stressors during and after hospitalization might diminish the presence or severity of PHS. Viewing PHS from this perspective enables the development of hypothesis-driven risk-prediction models, encourages critical contemplation of traditional hospitalization, and suggests that targeted environmental interventions may significantly reduce adverse outcomes.

