Warfarin‐Associated Adverse Events

Warfarin is 1 of the most common causes of adverse drug events, with hospitalized patients being particularly at risk compared to outpatients.[1] Despite the availability of new oral anticoagulants (NOACs), physicians commonly prescribe warfarin to hospitalized patients,[2] likely in part due to the greater difficulty in reversing NOACs compared to warfarin. Furthermore, uptake of the NOACs is likely to be slow in resource‐poor countries due to the lower cost of warfarin.[3] However, the narrow therapeutic index, frequent drug‐drug interactions, and patient variability in metabolism of warfarin make management challenging.[4] Thus, warfarin remains a significant cause of adverse events in hospitalized patients, occurring in approximately 3% to 8% of exposed patients, depending on underlying condition.[2, 5]

An elevated international normalized ratio (INR) is a strong predictor of drug‐associated adverse events (patient harm). In a study employing 21 different electronic triggers to identify potential adverse events, an elevated INR had the highest yield for events associated with harm (96% of INRs >5.0 associated with harm).[6] Although pharmacist‐managed inpatient anticoagulation services have been shown to improve warfarin management,[7, 8] there are evidence gaps regarding the causes of warfarin‐related adverse events and practice changes that could decrease their frequency. Although overanticoagulation is a well‐known risk factor for warfarin‐related adverse events,[9, 10] there are few evidence‐based warfarin monitoring and dosing recommendations for hospitalized patients.[10] For example, the 2012 American College of Chest Physicians Antithrombotic Guidelines[11] provide a weak recommendation on initial dosing of warfarin, but no recommendations on how frequently to monitor the INR, or appropriate dosing responses to INR levels. Although many hospitals employ protocols that suggest daily INR monitoring until stable, there are no evidence‐based guidelines to support this practice.[12] Conversely, there are reports of flags to order an INR level that are not activated unless greater than 2[13] or 3 days[14] pass since the prior INR. Protocols from some major academic medical centers suggest that after a therapeutic INR is reached, INR levels can be measured intermittently, as infrequently as twice a week.[15, 16]

The 2015 Joint Commission anticoagulant‐focused National Patient Safety Goal[17] (initially issued in 2008) mandates the assessment of baseline coagulation status before starting warfarin, and warfarin dosing based on a current INR; however, "current" is not defined. Neither the extent to which the mandate for assessing baseline coagulation status is adhered to nor the relationship between this process of care and patient outcomes is known. The importance of adverse drug events associated with anticoagulants, including warfarin, was also recently highlighted in the 2014 federal National Action Plan for Adverse Drug Event Prevention. In this document, the prevention of adverse drug events associated with anticoagulants was 1 of the 3 areas selected for special national attention and action.[18]

The Medicare Patient Safety Monitoring System (MPSMS) is a national chart abstraction‐based system that includes 21 in‐hospital adverse event measures, including warfarin‐associated adverse drug events.[2] Because of the importance of warfarin‐associated bleeding in hospitalized patients, we analyzed MPSMS data to determine what factors related to INR monitoring practices place patients at risk for these events. We were particularly interested in determining if we could detect potentially modifiable predictors of overanticoagulation and warfarin‐associated adverse events.

METHODS

Study Sample

We combined 2009 to 2013 MPSMS all‐payer data from the Centers for Medicare & Medicaid Services Hospital Inpatient Quality Reporting program for 4 common medical conditions: (1) acute myocardial infarction, (2) heart failure, (3) pneumonia, and (4) major surgery (as defined by the national Surgical Care Improvement Project).[19] To increase the sample size for cardiac patients, we combined myocardial infarction patients and heart failure patients into 1 group: acute cardiovascular disease. Patients under 18 years of age are excluded from the MPSMS sample, and we excluded patients whose INR never exceeded 1.5 after the initiation of warfarin therapy.

Patient Characteristics

Patient characteristics included demographics (age, sex, race [white, black, and other race]) and comorbidities. Comorbidities abstracted from medical records included: histories at the time of hospital admission of heart failure, obesity, coronary artery disease, renal disease, cerebrovascular disease, chronic obstructive pulmonary disease, cancer, diabetes, and smoking. The use of anticoagulants other than warfarin was also captured.

INRs

The INR measurement period for each patient started from the initial date of warfarin administration and ended on the date the maximum INR occurred. If a patient had more than 1 INR value on any day, the highest INR value was selected. A day without an INR measurement was defined as no INR value documented for a calendar day within the INR measurement period, starting on the third day of warfarin and ending on the day of the maximum INR level.
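
The counting rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the abstraction logic used by MPSMS: the function name `days_without_inr`, the input shape (a mapping from calendar date to that day's highest INR), the tie-breaking rule for a repeated maximum, and the example values are all hypothetical.

```python
from datetime import date, timedelta


def days_without_inr(warfarin_start, inr_by_day):
    """Count calendar days with no INR, from warfarin day 3 through the day of the maximum INR.

    inr_by_day maps a date to the highest INR documented on that date (hypothetical input shape).
    """
    # Keep only measurements from the first warfarin dose onward.
    in_period = {d: v for d, v in inr_by_day.items() if d >= warfarin_start}
    if not in_period:
        return 0
    peak = max(in_period.values())
    # Assumption: if the peak value recurs, use its earliest date.
    max_day = min(d for d, v in in_period.items() if v == peak)
    day = warfarin_start + timedelta(days=2)  # third day of warfarin
    missing = 0
    while day <= max_day:
        if day not in in_period:
            missing += 1
        day += timedelta(days=1)
    return missing


# Hypothetical patient: warfarin started January 1, maximum INR on January 7.
example = {
    date(2013, 1, 1): 1.2,
    date(2013, 1, 2): 1.6,
    date(2013, 1, 4): 2.4,
    date(2013, 1, 7): 3.1,
}
missed = days_without_inr(date(2013, 1, 1), example)
```

In this made-up example, January 3, 5, and 6 fall inside the counting window and have no INR, so the patient would be classified as having 2 or more days without an INR measurement.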

Outcomes

The study was performed to assess the association between the number of days on which a patient did not have an INR measured while receiving warfarin and the occurrence of (1) an INR ≥6.0[20, 21] (intermediate outcome) and (2) a warfarin‐associated adverse event. A description of the MPSMS measure of warfarin‐associated adverse events has been previously published.[2] Warfarin‐associated adverse events must have occurred within 48 hours of predefined triggers: an INR ≥4.0, cessation of warfarin therapy, administration of vitamin K or fresh frozen plasma, or transfusion of packed red blood cells other than in the setting of a surgical procedure. Warfarin‐associated adverse events were divided into minor and major events for this analysis. Minor events were defined as bleeding, a drop in hematocrit of ≥3 points (occurring more than 48 hours after admission and not associated with surgery), or development of a hematoma. Major events were death, intracranial bleeding, or cardiac arrest. A patient who had both a major and a minor event was considered as having had a major event.
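
The precedence rule for event severity can be expressed as a small function. This is a hedged sketch only: the function name and boolean flags are hypothetical, and it assumes each flag has already been screened against the 48‐hour trigger window described above.

```python
def classify_event(bleeding=False, hematocrit_drop=False, hematoma=False,
                   death=False, intracranial_bleeding=False, cardiac_arrest=False):
    """Return 'major', 'minor', or 'none' for one patient (hypothetical flag names)."""
    # Major events take precedence when a patient has both kinds.
    if death or intracranial_bleeding or cardiac_arrest:
        return "major"
    if bleeding or hematocrit_drop or hematoma:
        return "minor"
    return "none"


# A patient with a hematoma who later has a cardiac arrest counts once, as major.
severity = classify_event(hematoma=True, cardiac_arrest=True)
```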

To assess the relationship between a rapidly rising INR and a subsequent INR ≥5.0 or ≥6.0, we determined the increase in INR between the measurement done 2 days prior to the maximum INR and 1 day prior to the maximum INR. This analysis was performed only on patients whose INR was ≥2.0 and ≤3.5 on the day prior to the maximum INR. In doing so, we sought to determine if the INR rise could predict the occurrence of a subsequent severely elevated INR in patients whose INR was within or near the therapeutic range.

Statistical Analysis

We conducted bivariate analysis to quantify the associations between lapses in measurement of the INR and subsequent warfarin‐associated adverse events, using the Mantel‐Haenszel χ² test for categorical variables. We fitted a generalized linear model with a logit link function to estimate the association of days on which an INR was not measured and the occurrence of the composite adverse event measure or the occurrence of an INR ≥6.0, adjusting for baseline patient characteristics, the number of days on warfarin, and receipt of heparin and low‐molecular‐weight heparin (LMWH). To account for potential imbalances in baseline patient characteristics and warfarin use prior to admission, we conducted a second analysis using the stabilized inverse probability weights approach. Specifically, we weighted each patient by the patient's inverse propensity scores of having only 1 day, at least 1 day, and at least 2 days without an INR measurement while receiving warfarin.[22, 23, 24, 25] To obtain the propensity scores, we fitted 3 logistic models with all variables included in the above primary mixed models except receipt of LMWH, heparin, and the number of days on warfarin as predictors, but with 3 different outcomes: 1 day without an INR measurement, 1 or more days without an INR measurement, and 2 or more days without an INR measurement. Analyses were conducted using SAS version 9.2 (SAS Institute Inc., Cary, NC). All statistical testing was 2‐sided, at a significance level of 0.05. The institutional review board at Solutions IRB (Little Rock, AR) determined that the requirement for informed consent could be waived based on the nature of the study.
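
As an illustration of the weighting step, the sketch below computes stabilized inverse probability weights in Python. It is not the authors' SAS code. It assumes the common textbook form of stabilized weights (marginal exposure probability divided by the propensity score for exposed patients, and the complementary ratio for unexposed patients); the function name, the meaning of "exposed" (for example, at least 1 day without an INR measurement), and the propensity values are hypothetical stand-ins for the output of the logistic models.

```python
def stabilized_weights(exposed, propensity):
    """Stabilized inverse probability weights.

    exposed:    list of 0/1 indicators (hypothetical: 1 = had the lapse in INR measurement)
    propensity: estimated P(exposed = 1 | baseline characteristics) for each patient
    """
    n = len(exposed)
    p_exposed = sum(exposed) / n  # marginal probability of exposure
    weights = []
    for t, ps in zip(exposed, propensity):
        if t == 1:
            weights.append(p_exposed / ps)
        else:
            weights.append((1 - p_exposed) / (1 - ps))
    return weights


# Four made-up patients; the propensity scores are illustrative only.
w = stabilized_weights([1, 0, 0, 1], [0.5, 0.25, 0.5, 0.8])
```

Stabilizing by the marginal exposure probability keeps the weights near 1 on average, which generally limits the influence of patients with extreme propensity scores compared with unstabilized weights.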

RESULTS

There were 130,828 patients included in the 2009 to 2013 MPSMS sample, of whom 19,445 (14.9%) received warfarin during their hospital stay and had at least 1 INR measurement. Among these patients, 5228 (26.9%) had no INR level above 1.5 and were excluded from further analysis, leaving 14,217 included patients. Of these patients, 1055 (7.4%) developed a warfarin‐associated adverse event. Table 1 demonstrates the baseline demographics and comorbidities of the included patients.

| Characteristics | Acute Cardiovascular Disease, No. (%), N = 6,394 | Pneumonia, No. (%), N = 3,668 | Major Surgery, No. (%), N = 4,155 | All, No. (%), N = 14,217 |
|---|---|---|---|---|
| Age, mean [SD] | 75.3 [12.4] | 74.5 [13.3] | 69.4 [11.8] | 73.4 [12.7] |
| Sex, female | 3,175 (49.7) | 1,741 (47.5) | 2,639 (63.5) | 7,555 (53.1) |
| Race | | | | |
| White | 5,388 (84.3) | 3,268 (89.1) | 3,760 (90.5) | 12,416 (87.3) |
| Other | 1,006 (15.7) | 400 (10.9) | 395 (9.5) | 1,801 (12.7) |
| Comorbidities | | | | |
| Cancer | 1,186 (18.6) | 939 (25.6) | 708 (17.0) | 2,833 (19.9) |
| Diabetes | 3,043 (47.6) | 1,536 (41.9) | 1,080 (26.0) | 5,659 (39.8) |
| Obesity | 1,938 (30.3) | 896 (24.4) | 1,260 (30.3) | 4,094 (28.8) |
| Cerebrovascular disease | 1,664 (26.0) | 910 (24.8) | 498 (12.0) | 3,072 (21.6) |
| Heart failure/pulmonary edema | 5,882 (92.0) | 2,052 (55.9) | 607 (14.6) | 8,541 (60.1) |
| Chronic obstructive pulmonary disease | 2,636 (41.2) | 1,929 (52.6) | 672 (16.2) | 5,237 (36.8) |
| Smoking | 895 (14.0) | 662 (18.1) | 623 (15.0) | 2,180 (15.3) |
| Corticosteroids | 490 (7.7) | 568 (15.5) | 147 (3.5) | 1,205 (8.5) |
| Coronary artery disease | 4,628 (72.4) | 1,875 (51.1) | 1,228 (29.6) | 7,731 (54.4) |
| Renal disease | 3,000 (46.9) | 1,320 (36.0) | 565 (13.6) | 4,885 (34.4) |
| Warfarin prior to arrival | 5,074 (79.4) | 3,020 (82.3) | 898 (21.6) | 8,992 (63.3) |
| Heparin given during hospitalization | 850 (13.3) | 282 (7.7) | 314 (7.6) | 1,446 (10.7) |
| LMWH given during hospitalization | 1,591 (24.9) | 1,070 (29.2) | 1,431 (34.4) | 4,092 (28.8) |

Warfarin was started on hospital day 1 for 6825 (48.0%) of 14,217 patients. Among these patients, 6539 (95.8%) had an INR measured within 1 calendar day. We were unable to determine how many patients who started warfarin later in their hospital stay had a baseline INR, as we did not capture INRs performed prior to the day that warfarin was initiated.

Supporting Table 1 in the online version of this article demonstrates the association between an INR ≥6.0 and the occurrence of warfarin‐associated adverse events. A maximum INR ≥6.0 occurred in 469 (3.3%) of the patients included in the study, and among those patients, 133 (28.4%) experienced a warfarin‐associated adverse event compared to 922 (6.7%) adverse events in the 13,748 patients who did not develop an INR ≥6.0 (P < 0.001).

Among 8529 patients who received warfarin for at least 3 days, beginning on the third day of warfarin, 1549 patients (18.2%) did not have INR measured at least once each day that they received warfarin. Table 2 demonstrates that patients who had 2 or more days on which the INR was not measured had higher rates of INR ≥6.0 than patients for whom the INR was measured daily. A similar association was seen for warfarin‐associated adverse events (Table 2).

| | No. of Patients, No. (%), N = 8,529 | Patients With INR on All Days, No. (%), N = 6,980 | Patients With 1 Day Without an INR, No. (%), N = 968 | Patients With 2 or More Days Without an INR, No. (%), N = 581 | P Value |
|---|---|---|---|---|---|
| Maximum INR | | | | | <0.01* |
| 1.5–5.99 | 8,183 | 6,748 (96.7) | 911 (94.1) | 524 (90.2) | |
| ≥6.0 | 346 | 232 (3.3) | 57 (5.9) | 57 (9.8) | |
| Warfarin‐associated adverse events | | | | | <0.01* |
| No adverse events | 7,689 (90.2) | 6,331 (90.7) | 872 (90.1) | 486 (83.6) | |
| Minor adverse events | 792 (9.3) | 617 (8.8) | 86 (8.9) | 89 (15.3) | |
| Major adverse events | 48 (0.6) | 32 (0.5) | 10 (1.0) | 6 (1.0) | |
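
For readers who want to see the size of the unadjusted association, the counts in Table 2 can be turned into crude odds ratios. The sketch below is an illustration only: these crude values are not reported in the article, they are not adjusted for any patient characteristics, and they should not be confused with the risk‐adjusted estimates from the regression models. The function name is hypothetical.

```python
def crude_odds_ratio(events_exposed, n_exposed, events_ref, n_ref):
    """Unadjusted odds ratio for an exposed group versus a reference group."""
    odds_exposed = events_exposed / (n_exposed - events_exposed)
    odds_ref = events_ref / (n_ref - events_ref)
    return odds_exposed / odds_ref


# Counts of maximum INR >= 6.0 taken from Table 2; reference = INR measured on all days.
or_two_plus_days = crude_odds_ratio(57, 581, 232, 6980)  # 2 or more days without an INR
or_one_day = crude_odds_ratio(57, 968, 232, 6980)        # exactly 1 day without an INR
```

With these counts, the crude odds ratio is roughly 3.2 for patients with 2 or more days without an INR and roughly 1.8 for patients with 1 day without an INR, each compared with daily measurement.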

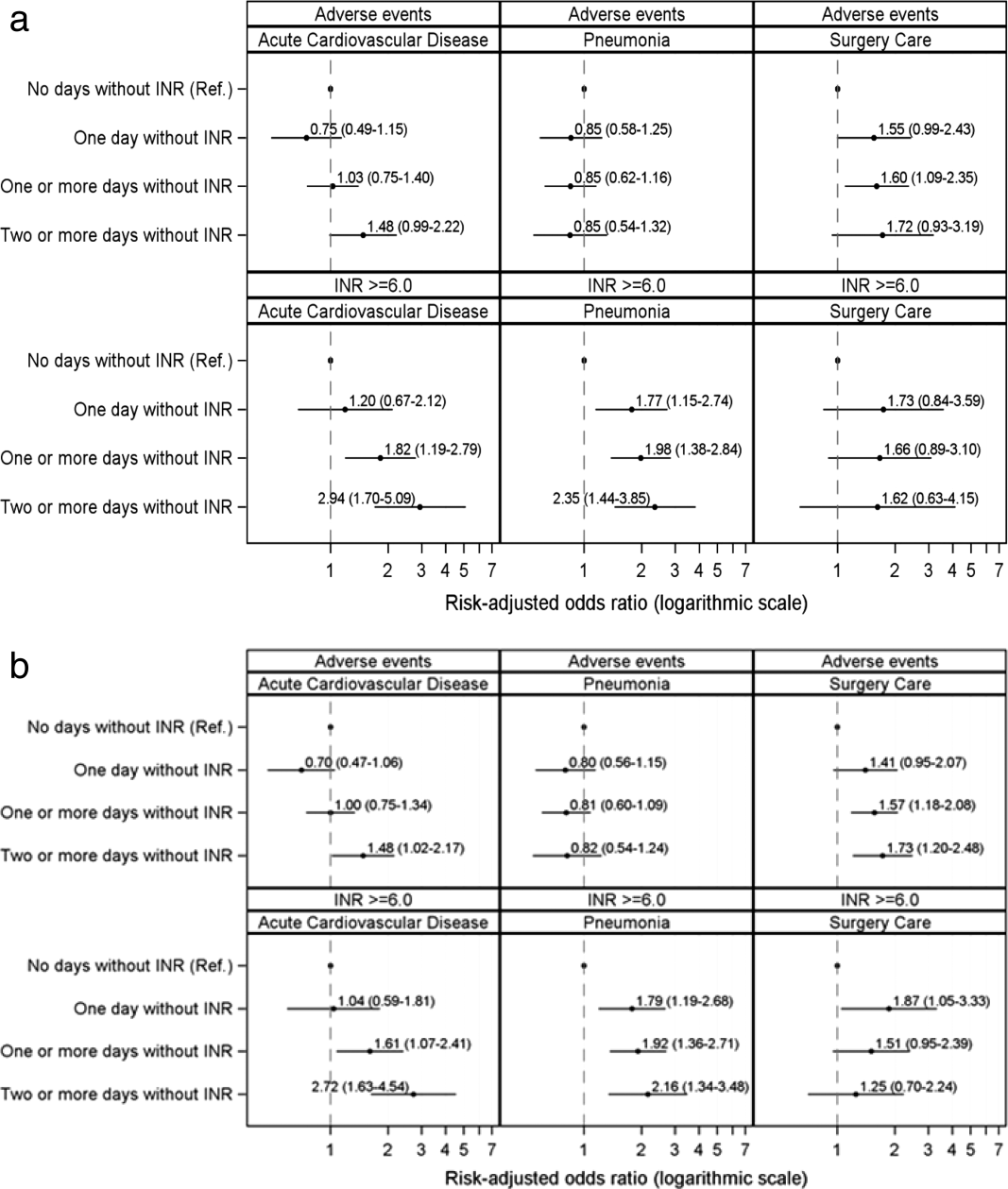

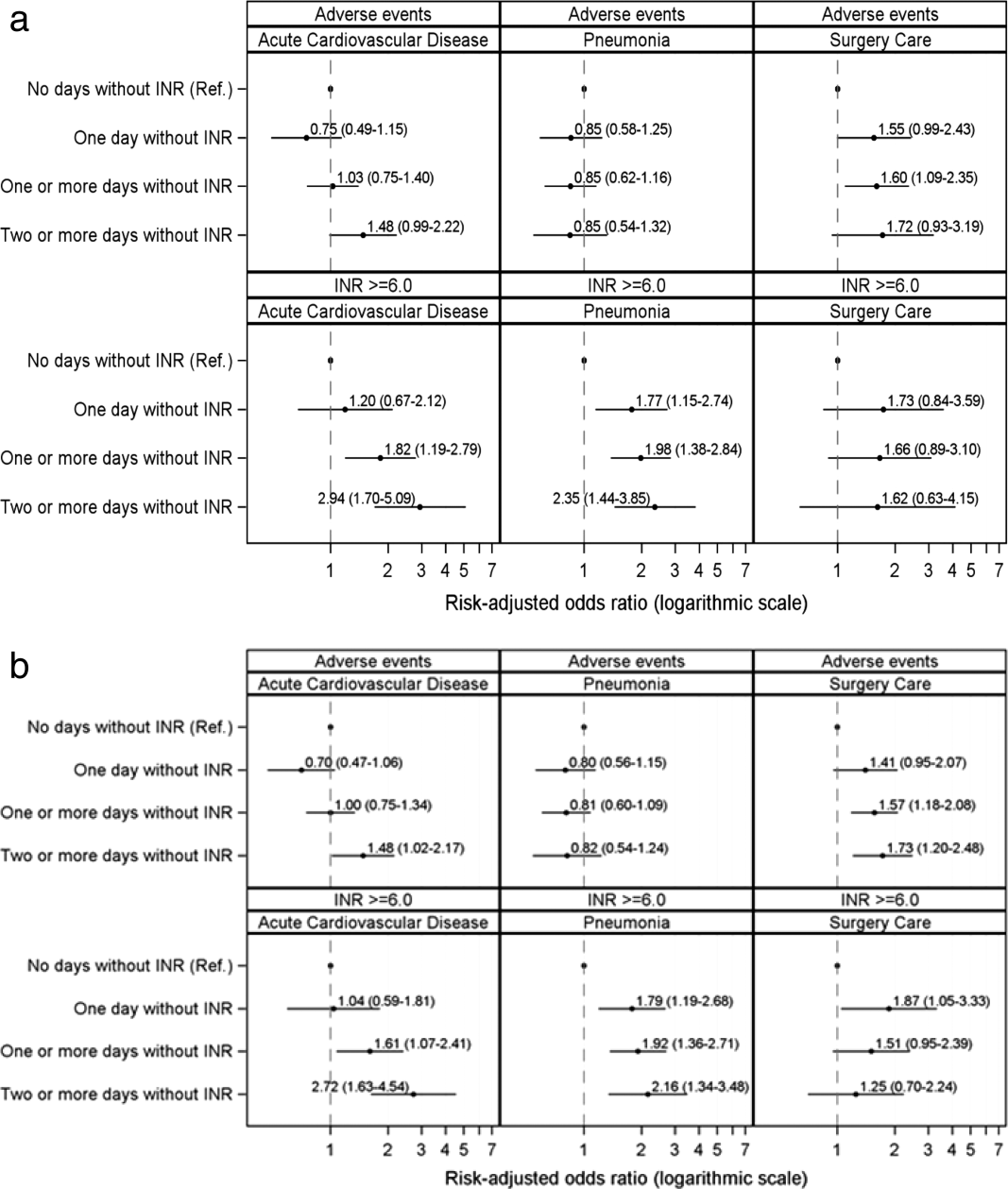

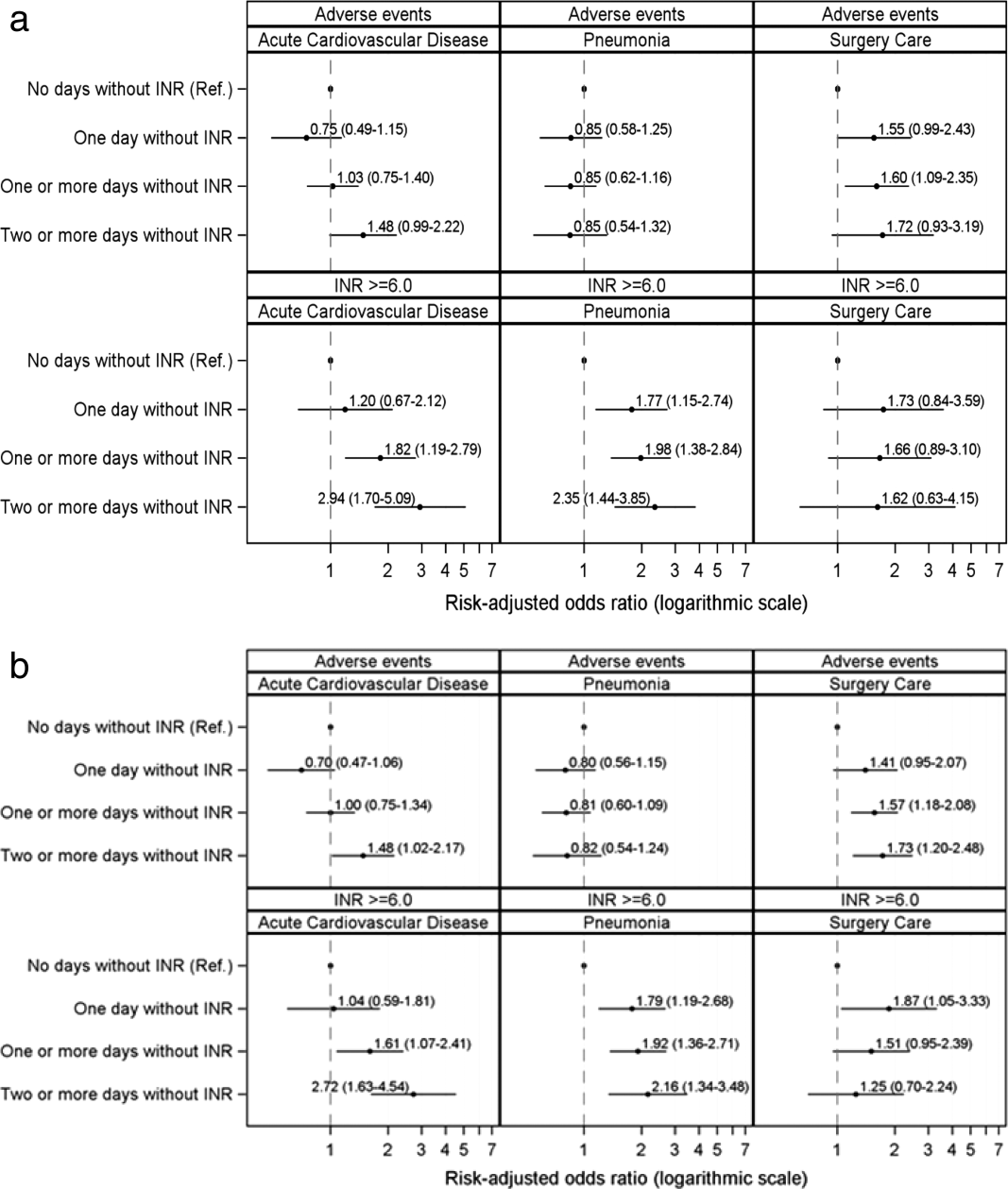

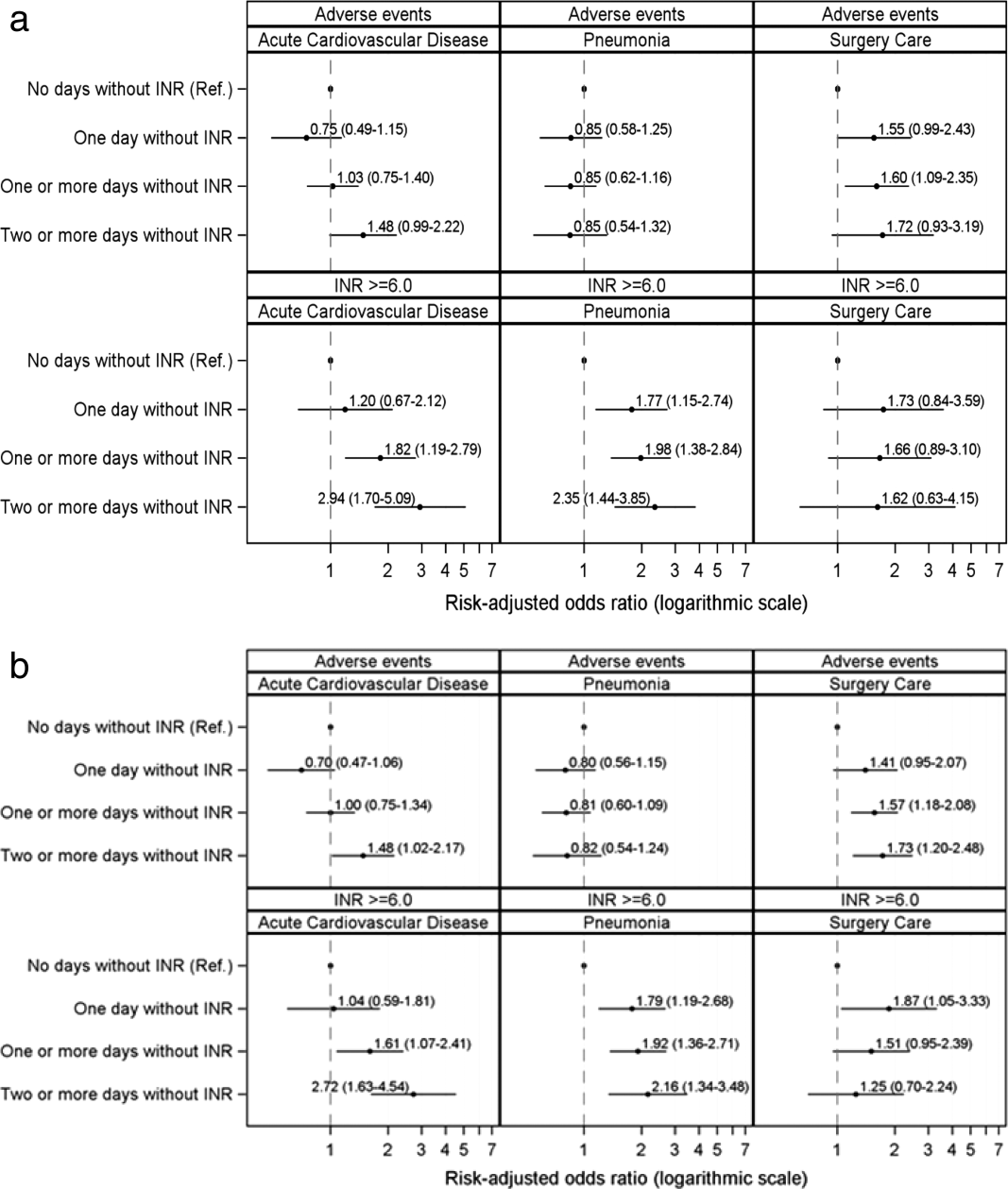

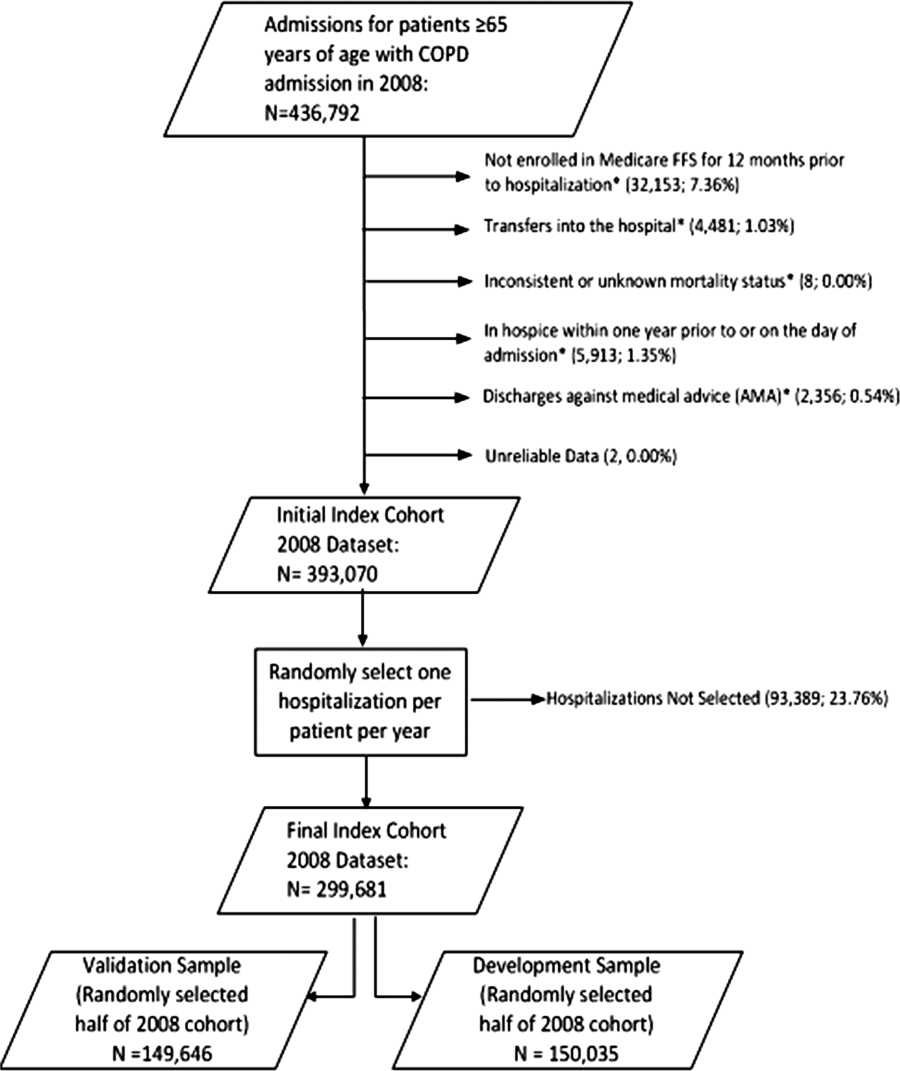

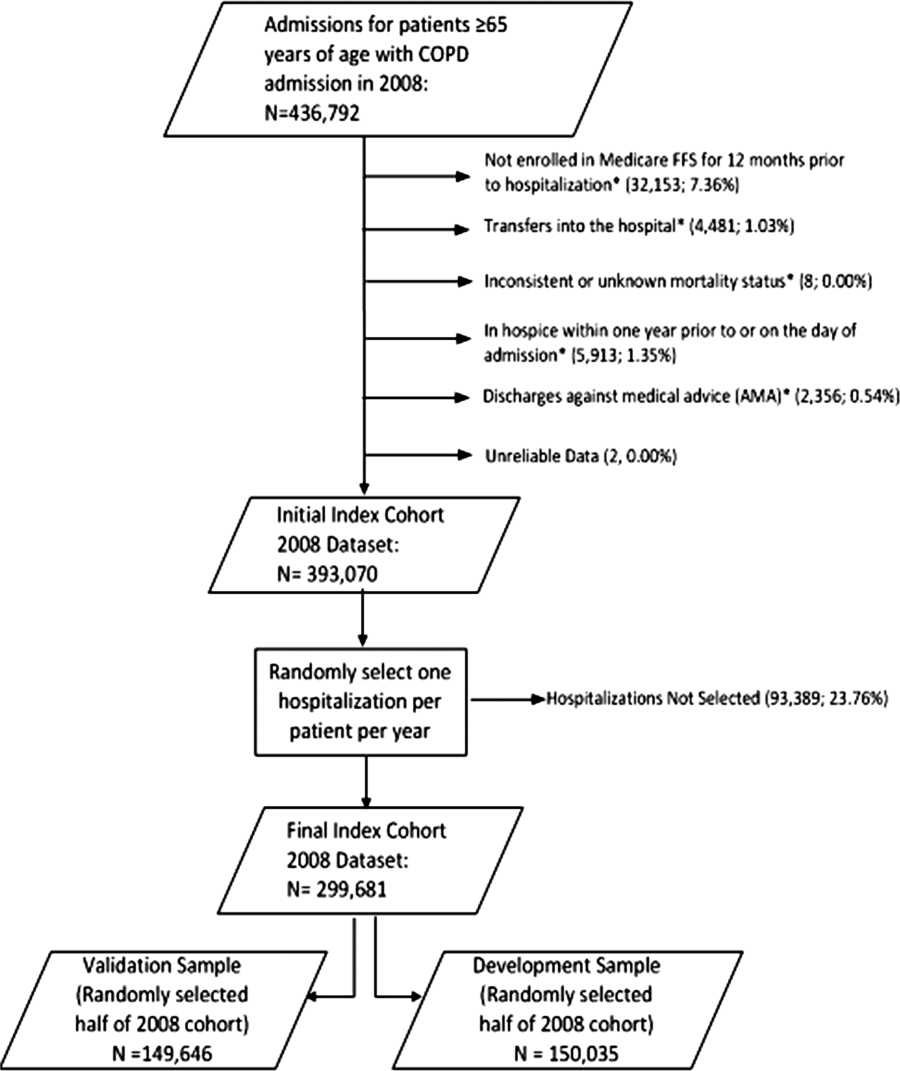

Figure 1A demonstrates the association between the number of days without an INR measurement and the subsequent development of an INR ≥6.0 or a warfarin‐associated adverse event, adjusted for baseline patient characteristics, receipt of heparin and LMWH, and number of days on warfarin. Patients with 1 or more days without an INR measurement had higher risk‐adjusted odds ratios (ORs) of a subsequent INR ≥6.0, although the difference was not statistically significant for surgical patients. The analysis results based on inverse propensity scoring are seen in Figure 1B. Cardiac and surgical patients with 2 or more days without an INR measurement were at higher risk of having a warfarin‐associated adverse event, whereas cardiac and pneumonia patients with 1 or more days without an INR measurement were at higher risk of developing an INR ≥6.0.

Supporting Table 2 in the online version of this article demonstrates the relationship between patient characteristics and the occurrence of an INR ≥6.0 or a warfarin‐related adverse event. The only characteristic that was associated with either of these outcomes for all 3 patient conditions was renal disease, which was positively associated with a warfarin‐associated adverse event. Warfarin use prior to arrival was associated with lower risks of both an INR ≥6.0 and a warfarin‐associated adverse event, except among surgical patients. Supporting Table 3 in the online version of this article demonstrates the differences in patient characteristics between patients who had daily INR measurement and those who had at least 1 day without an INR measurement.

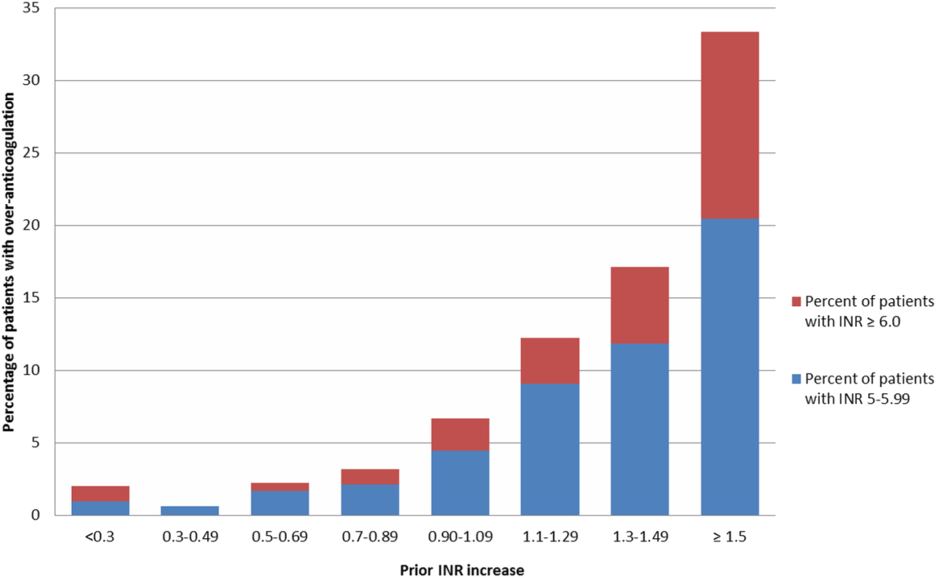

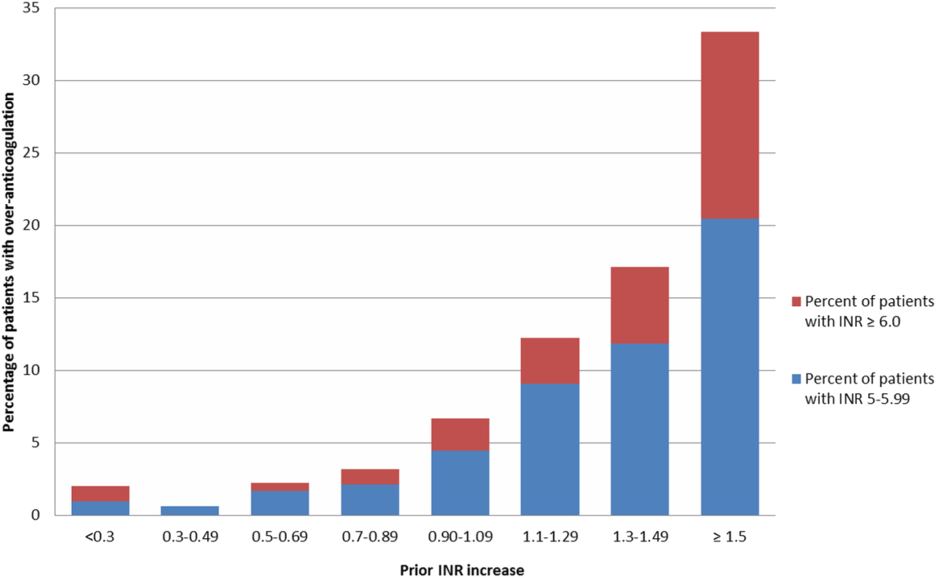

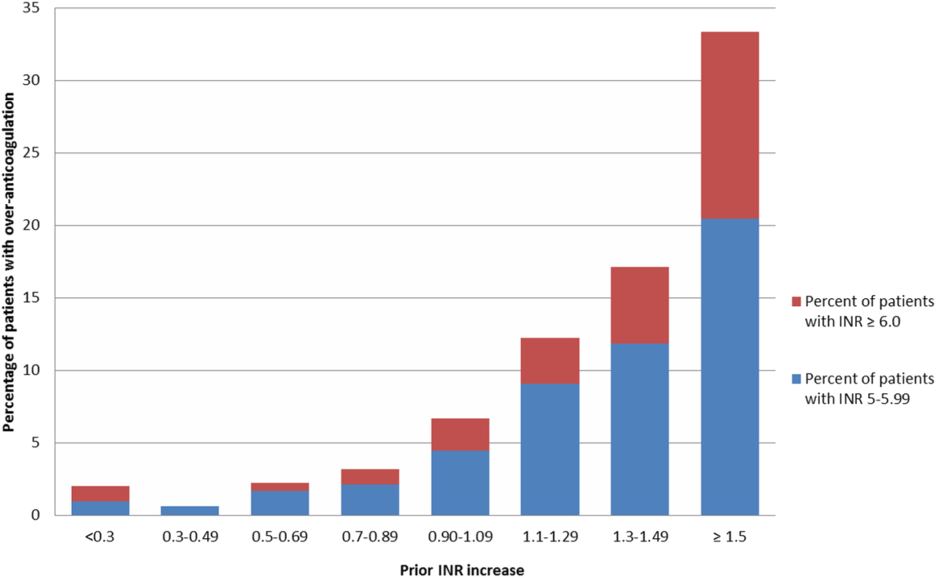

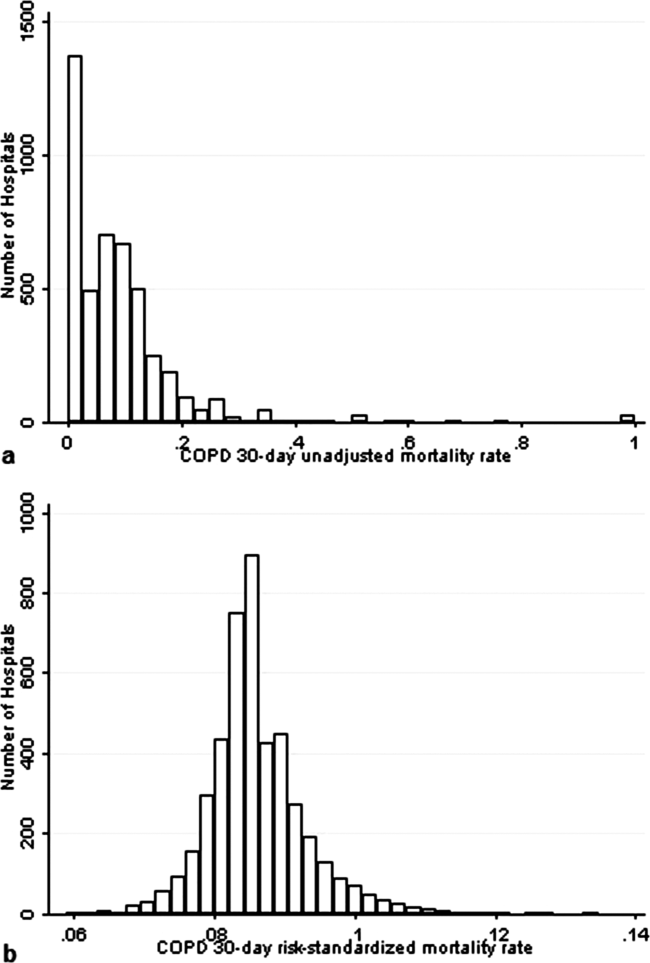

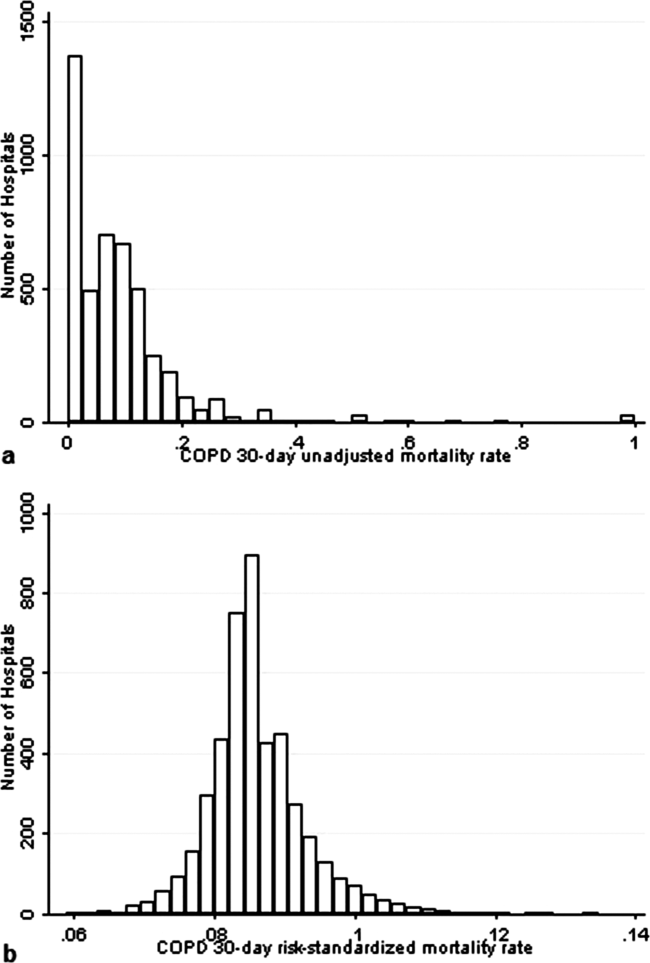

Figure 2 illustrates the relationship of the maximum INR to the prior 1‐day change in INR in 4963 patients whose INR on the day prior to the maximum INR was 2.0 to 3.5. When the increase in INR was <0.9, the risk of the next day's INR being ≥6.0 was 0.7%, and if the increase was ≥0.9, the risk was 5.2%. The risk of developing an INR ≥5.0 was 1.9% if the preceding day's INR increase was <0.9 and 15.3% if the prior day's INR rise was ≥0.9. Overall, 51% of INRs ≥6.0 and 55% of INRs ≥5.0 were immediately preceded by an INR increase of ≥0.9. The positive likelihood ratio (LR) for a ≥0.9 rise in INR predicting an INR of ≥6.0 was 4.2, and the positive LR was 4.9 for predicting an INR ≥5.0.
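
The positive likelihood ratio relates the sensitivity and specificity of the "1‐day INR rise of 0.9 or more" rule. The sketch below uses the standard definition, positive LR = sensitivity / (1 − specificity). Treating the reported 51% as the sensitivity for an INR of 6.0 or higher is an interpretation of the text; the implied specificity is back‐calculated here for illustration and is not a figure reported in the article. The function names are hypothetical.

```python
def positive_lr(sensitivity, specificity):
    """Positive likelihood ratio from sensitivity and specificity."""
    return sensitivity / (1 - specificity)


def implied_specificity(sensitivity, lr_positive):
    """Specificity that would reproduce a given positive LR at a given sensitivity."""
    return 1 - sensitivity / lr_positive


# 51% of subsequent high INRs were preceded by the rise; the reported positive LR was 4.2.
spec = implied_specificity(0.51, 4.2)
check = positive_lr(0.51, spec)
```

Under these assumptions the implied specificity is about 0.88, meaning that roughly 12% of patients who did not go on to a severely elevated INR would still have triggered the rule.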

There was no decline in the frequency of warfarin use among the patients in the MPSMS sample during the study period (16.7% in 2009 and 17.3% in 2013).

DISCUSSION

We studied warfarin‐associated adverse events in a nationally representative study of patients who received warfarin while in an acute care hospital for a primary diagnosis of cardiac disease, pneumonia, or major surgery. Several findings resulted from our analysis. First, warfarin is still commonly prescribed to hospitalized patients and remains a frequent cause of adverse events; 7.4% of the 2009 to 2013 MPSMS population who received warfarin and had at least 1 INR >1.5 developed a warfarin‐associated adverse event.

Over 95% of patients who received warfarin on the day of hospital admission had an INR performed within 1 day. This is similar to the results from a 2006 single center study in which 95% of patients had an INR measured prior to their first dose of warfarin.[10] Since 2008, The Joint Commission's National Patient Safety Goal has required the assessment of coagulation status before starting warfarin.[17] The high level of adherence to this standard suggests that further attention to this process of care is unlikely to significantly improve patient safety.

We also found that the lack of daily INR measurements was associated with an increased risk of an INR ≥6.0 and warfarin‐associated adverse events in some patient populations. There is limited evidence addressing the appropriate frequency of INR measurement in hospitalized patients receiving warfarin. The Joint Commission National Patient Safety Goal requires use of a current INR to adjust this therapy, but provides no specifics.[17] Although some experts believe that INRs should be monitored daily in hospitalized patients, this does not appear to be uniformly accepted. In some reports, 2[13] or 3[14] consecutive days without the performance of an INR was required to activate a reminder. Protocols from some major teaching hospitals specify intermittent monitoring once the INR is therapeutic.[15, 16] Because our results suggest that lapses in INR measurement lead to overanticoagulation and warfarin‐related adverse events, it may be appropriate to measure INRs daily in most hospitalized patients receiving warfarin. This would be consistent with the many known causes of INR instability in patients admitted to the hospital, including drug‐drug interactions, hepatic dysfunction, and changes in volume of distribution, such that truly stable hospitalized patients are likely rare. Indeed, hospital admission is a well‐known predictor of instability of warfarin effect.[9] Although our results suggest that daily INR measurement is associated with a lower rate of overanticoagulation, future studies might better define lower risk patients for whom daily INR measurement would not be necessary.

A prior INR increase of ≥0.9 in 1 day was associated with an increased risk of subsequent overanticoagulation. Although a rapidly rising INR is known to predict overanticoagulation,[10, 14] we could find no evidence as to what specific rate of rise confers this risk. Our results suggest that use of a warfarin dosing protocol that considers both the absolute value of the INR and the rate of rise could reduce warfarin‐related adverse events.

There are important limitations of our study. We did not abstract warfarin dosages, which precluded study of the appropriateness of both initial warfarin dosing and adjustment of the warfarin dose based on INR results. MPSMS does not reliably capture antiplatelet agents or other agents that result in drug‐drug interactions with warfarin, such as antibiotics, so this factor could theoretically have confounded our results. Antibiotic use seems unlikely to be a major confounder, because patients with acute cardiovascular disease demonstrated a similar relationship between INR measurement and an INR ≥6.0 to that seen with pneumonia and surgical patients, despite the latter patients likely having greater antibiotic exposure. Furthermore, MPSMS does not capture indices of severity of illness, so other unmeasured confounders could have influenced our results. Although we have data for patients admitted to the hospital for only 4 conditions, these are conditions that represent approximately 22% of hospital admissions in the United States.[2] Strengths of our study include the nationally representative and randomly selected cases and use of data that were obtained from chart abstraction as opposed to administrative data. Through the use of centralized data abstraction, we avoided the potential bias introduced when hospitals self‐report adverse events.

In summary, in a national sample of patients admitted to the hospital for 4 common conditions, warfarin‐associated adverse events were detected in 7.4% of patients who received warfarin. Lack of daily INR measurement was associated with an increased risk of overanticoagulation and warfarin‐associated adverse events in certain patient populations. A 1‐day increase in the INR of ≥0.9 predicted subsequent overanticoagulation. These results provide actionable opportunities to improve safety in some hospitalized patients receiving warfarin.

Acknowledgements

The authors express their appreciation to Dan Budnitz, MD, MPH, for his advice regarding study design and his review and comments on a draft of this manuscript.

Disclosures: This work was supported by contract HHSA290201200003C from the Agency for Healthcare Research and Quality, United States Department of Health and Human Services, Rockville, Maryland. Qualidigm was the contractor. The authors assume full responsibility for the accuracy and completeness of the ideas. Dr. Metersky has worked on various quality improvement and patient safety projects with Qualidigm, Centers for Medicare & Medicaid Services, and the Agency for Healthcare Research and Quality. His employer has received remuneration for this work. Dr. Krumholz works under contract with the Centers for Medicare & Medicaid Services to develop and maintain performance measures. Dr. Krumholz is the chair of a cardiac scientific advisory board for UnitedHealth and the recipient of a research grant from Medtronic, Inc. through Yale University. The other authors report no conflicts of interest.

1. Delivery of optimized inpatient anticoagulation therapy: consensus statement from the anticoagulation forum. Ann Pharmacother. 2013;47:714–724.
2. National trends in patient safety for four common conditions, 2005–2011. N Engl J Med. 2014;370:341–351.
3. Update on antithrombotic therapy: new anticoagulants. Circulation. 2010;121:1523–1532.
4. The pharmacogenetics of coumarin therapy. Pharmacogenomics. 2005;6:503–513.
5. Adverse drug events among hospitalized Medicare patients: epidemiology and national estimates from a new approach to surveillance. Jt Comm J Qual Patient Saf. 2010;36:12–21.
6. Active surveillance using electronic triggers to detect adverse events in hospitalized patients. Qual Saf Health Care. 2006;15:184–190.
7. Inpatient warfarin management: pharmacist management using a detailed dosing protocol. J Thromb Thrombolysis. 2012;33:178–184.
8. Efficacy and safety of a pharmacist‐managed inpatient anticoagulation service for warfarin initiation and titration. J Clin Pharm Ther. 2011;36:585–591.
9. Bleeding complications of oral anticoagulant treatment: an inception‐cohort, prospective collaborative study (ISCOAT). Italian Study on Complications of Oral Anticoagulant Therapy. Lancet. 1996;348:423–428.
10. Oral anticoagulation in the hospital: analysis of patients at risk. J Thromb Thrombolysis. 2011;31:22–26.
11. Evidence‐based management of anticoagulant therapy: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence‐Based Clinical Practice Guidelines. Chest. 2012;141:e152S–e184S.
12. Agency for Healthcare Research and Quality. National Guideline Clearinghouse. Available at: http://www.guideline.gov. Accessed April 30, 2015.
13. Reduction in anticoagulation‐related adverse drug events using a trigger‐based methodology. Jt Comm J Qual Patient Saf. 2005;31:313–318.
14. Use of specific indicators to detect warfarin‐related adverse events. Am J Health Syst Pharm. 2005;62:1683–1688.
15. University of Wisconsin Health. Warfarin management–adult–inpatient clinical practice guideline. Available at: http://www.uwhealth.org/files/uwhealth/docs/pdf3/Inpatient_Warfarin_Guideline.pdf. Accessed April 30, 2015.
16. Anticoagulation Guidelines ‐ LSU Health Shreveport. Available at: http://myhsc.lsuhscshreveport.edu/pharmacy/PT%20Policies/Anticoagulation_Safety.pdf. Accessed November 29, 2015.
17. The Joint Commission. National patient safety goals effective January 1, 2015. Available at: http://www.jointcommission.org/assets/1/6/2015_NPSG_HAP.pdf. Accessed November 29, 2015.
18. U.S. Department of Health and Human Services. Office of Disease Prevention and Health Promotion. Available at: http://health.gov/hcq/pdfs/ade-action-plan-508c.pdf. Accessed November 29, 2015.
19. The Joint Commission. Surgical care improvement project. Available at: http://www.jointcommission.org/surgical_care_improvement_project. Accessed May 5, 2015.
20. Optimization of inpatient warfarin therapy: Impact of daily consultation by a pharmacist‐managed anticoagulation service. Ann Pharmacother. 2000;34:567–572.
21. Effects of requiring a baseline International Normalized Ratio for inpatients treated with warfarin. Am J Health Syst Pharm. 2010;67:17–22.
22. Weighting regressions by propensity scores. Eval Rev. 2008;32:392–409.
23. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivar Behav Res. 2011;46:399–424.
24. Propensity score methods for bias reduction in the comparison of a treatment to a non‐randomized control group. Stat Med. 1998;17:2265–2281.
25. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

Warfarin is 1 of the most common causes of adverse drug events, with hospitalized patients being particularly at risk compared to outpatients.[1] Despite the availability of new oral anticoagulants (NOACs), physicians commonly prescribe warfarin to hospitalized patients,[2] likely in part due to the greater difficulty in reversing NOACs compared to warfarin. Furthermore, uptake of the NOACs is likely to be slow in resource‐poor countries due to the lower cost of warfarin.[3] However, the narrow therapeutic index, frequent drug‐drug interactions, and patient variability in metabolism of warfarin makes management challenging.[4] Thus, warfarin remains a significant cause of adverse events in hospitalized patients, occurring in approximately 3% to 8% of exposed patients, depending on underlying condition.[2, 5]

An elevated international normalized ratio (INR) is a strong predictor of drug‐associated adverse events (patient harm). In a study employing 21 different electronic triggers to identify potential adverse events, an elevated INR had the highest yield for events associated with harm (96% of INRs >5.0 associated with harm).[6] Although pharmacist‐managed inpatient anticoagulation services have been shown to improve warfarin management,[7, 8] there are evidence gaps regarding the causes of warfarin‐related adverse events and practice changes that could decrease their frequency. Although overanticoagulation is a well‐known risk factor for warfarin‐related adverse events,[9, 10] there are few evidence‐based warfarin monitoring and dosing recommendations for hospitalized patients.[10] For example, the 2012 American College of Chest Physicians Antithrombotic Guidelines[11] provide a weak recommendation on initial dosing of warfarin, but no recommendations on how frequently to monitor the INR, or appropriate dosing responses to INR levels. Although many hospitals employ protocols that suggest daily INR monitoring until stable, there are no evidence‐based guidelines to support this practice.[12] Conversely, there are reports of flags to order an INR level that are not activated unless greater than 2[13] or 3 days[14] pass since the prior INR. Protocols from some major academic medical centers suggest that after a therapeutic INR is reached, INR levels can be measured intermittently, as infrequently as twice a week.[15, 16]

The 2015 Joint Commission anticoagulant‐focused National Patient Safety Goal[17] (initially issued in 2008) mandates the assessment of baseline coagulation status before starting warfarin, and warfarin dosing based on a current INR; however, current is not defined. Neither the extent to which the mandate for assessing baseline coagulation status is adhered to nor the relationship between this process of care and patient outcomes is known. The importance of adverse drug events associated with anticoagulants, included warfarin, was also recently highlighted in the 2014 federal National Action Plan for Adverse Drug Event Prevention. In this document, the prevention of adverse drug events associated with anticoagulants was 1 of the 3 areas selected for special national attention and action.[18]

The Medicare Patient Safety Monitoring System (MPSMS) is a national chart abstraction‐based system that includes 21 in‐hospital adverse event measures, including warfarin‐associated adverse drug events.[2] Because of the importance of warfarin‐associated bleeding in hospitalized patients, we analyzed MPSMS data to determine what factors related to INR monitoring practices place patients at risk for these events. We were particularly interested in determining if we could detect potentially modifiable predictors of overanticoagulation and warfarin‐associated adverse events.

METHODS

Study Sample

We combined 2009 to 2013 MPSMS all payer data from the Centers for Medicare & Medicaid Services Hospital Inpatient Quality Reporting program for 4 common medical conditions: (1) acute myocardial infarction, (2) heart failure, (3) pneumonia, and (4) major surgery (as defined by the national Surgical Care Improvement Project).[19] To increase the sample size for cardiac patients, we combined myocardial infarction patients and heart failure patients into 1 group: acute cardiovascular disease. Patients under 18 years of age are excluded from the MPSMS sample, and we excluded patients whose INR never exceeded 1.5 after the initiation of warfarin therapy.

Patient Characteristics

Patient characteristics included demographics (age, sex, race [white, black, and other race]) and comorbidities. Comorbidities abstracted from medical records included: histories at the time of hospital admission of heart failure, obesity, coronary artery disease, renal disease, cerebrovascular disease, chronic obstructive pulmonary disease, cancer, diabetes, and smoking. The use of anticoagulants other than warfarin was also captured.

INRs

The INR measurement period for each patient started from the initial date of warfarin administration and ended on the date the maximum INR occurred. If a patient had more than 1 INR value on any day, the higher INR value was selected. A day without an INR measurement was defined as no INR value documented for a calendar day within the INR measurement period, starting on the third day of warfarin and ending on the day of the maximum INR level.

Outcomes

The study was performed to assess the association between the number of days on which a patient did not have an INR measured while receiving warfarin and the occurrence of (1) an INR 6.0[20, 21] (intermediate outcome) and (2) a warfarin‐associated adverse event. A description of the MPSMS measure of warfarin‐associated adverse events has been previously published.[2] Warfarin‐associated adverse events must have occurred within 48 hours of predefined triggers: an INR 4.0, cessation of warfarin therapy, administration of vitamin K or fresh frozen plasma, or transfusion of packed red blood cells other than in the setting of a surgical procedure. Warfarin‐associated adverse events were divided into minor and major events for this analysis. Minor events were defined as bleeding, drop in hematocrit of 3 points (occurring more than 48 hours after admission and not associated with surgery), or development of a hematoma. Major events were death, intracranial bleeding, or cardiac arrest. A patient who had both a major and a minor event was considered as having had a major event.

To assess the relationship between a rapidly rising INR and a subsequent INR 5.0 or 6.0, we determined the increase in INR between the measurement done 2 days prior to the maximum INR and 1 day prior to the maximum INR. This analysis was performed only on patients whose INR was 2.0 and 3.5 on the day prior to the maximum INR. In doing so, we sought to determine if the INR rise could predict the occurrence of a subsequent severely elevated INR in patients whose INR was within or near the therapeutic range.

Statistical Analysis

We conducted bivariate analysis to quantify the associations between lapses in measurement of the INR and subsequent warfarin‐associated adverse events, using the Mantel‐Haenszel χ² test for categorical variables. We fitted a generalized linear model with a logit link function to estimate the association of days on which an INR was not measured and the occurrence of the composite adverse event measure or the occurrence of an INR ≥6.0, adjusting for baseline patient characteristics, the number of days on warfarin, and receipt of heparin and low‐molecular‐weight heparin (LMWH). To account for potential imbalances in baseline patient characteristics and warfarin use prior to admission, we conducted a second analysis using the stabilized inverse probability weights approach. Specifically, we weighted each patient by the patient's inverse propensity scores of having only 1 day, at least 1 day, and at least 2 days without an INR measurement while receiving warfarin.[22, 23, 24, 25] To obtain the propensity scores, we fitted 3 logistic models with all variables included in the above primary mixed models except receipt of LMWH, heparin, and the number of days on warfarin as predictors, but 3 different outcomes, 1 day without an INR measurement, 1 or more days without an INR measurement, and 2 or more days without an INR measurement. Analyses were conducted using SAS version 9.2 (SAS Institute Inc., Cary, NC). All statistical testing was 2‐sided, at a significance level of 0.05. The institutional review board at Solutions IRB (Little Rock, AR) determined that the requirement for informed consent could be waived based on the nature of the study.
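For reference, stabilized inverse probability weights are commonly written in the form below. This is the standard textbook expression, offered as an assumption about the general approach rather than the exact specification used in this analysis; the symbols $A_i$ (the patient's INR‐measurement category) and $X_i$ (the patient's baseline characteristics) are our notation.

```latex
sw_i = \frac{\Pr(A = a_i)}{\Pr(A = a_i \mid X_i)}
```

The denominator is the propensity score estimated from the fitted logistic model, and the numerator is the marginal proportion of patients in the same category. The numerator keeps the weights close to 1 on average, which limits the influence of patients with very small propensity scores.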

RESULTS

There were 130,828 patients included in the 2009 to 2013 MPSMS sample, of whom 19,445 (14.9%) received warfarin during their hospital stay and had at least 1 INR measurement. Among these patients, 5228 (26.9%) had no INR level above 1.5 and were excluded from further analysis, leaving 14,217 included patients. Of these patients, 1055 (7.4%) developed a warfarin‐associated adverse event. Table 1 demonstrates the baseline demographics and comorbidities of the included patients.

| Characteristics | Acute Cardiovascular Disease, No. (%), N = 6,394 | Pneumonia, No. (%), N = 3,668 | Major Surgery, No. (%), N = 4,155 | All, No. (%), N = 14,217 |
|---|---|---|---|---|
| Age, mean [SD] | 75.3 [12.4] | 74.5 [13.3] | 69.4 [11.8] | 73.4 [12.7] |
| Sex, female | 3,175 (49.7) | 1,741 (47.5) | 2,639 (63.5) | 7,555 (53.1) |
| Race | | | | |
| White | 5,388 (84.3) | 3,268 (89.1) | 3,760 (90.5) | 12,416 (87.3) |
| Other | 1,006 (15.7) | 400 (10.9) | 395 (9.5) | 1,801 (12.7) |
| Comorbidities | | | | |
| Cancer | 1,186 (18.6) | 939 (25.6) | 708 (17.0) | 2,833 (19.9) |
| Diabetes | 3,043 (47.6) | 1,536 (41.9) | 1,080 (26.0) | 5,659 (39.8) |
| Obesity | 1,938 (30.3) | 896 (24.4) | 1,260 (30.3) | 4,094 (28.8) |
| Cerebrovascular disease | 1,664 (26.0) | 910 (24.8) | 498 (12.0) | 3,072 (21.6) |
| Heart failure/pulmonary edema | 5,882 (92.0) | 2,052 (55.9) | 607 (14.6) | 8,541 (60.1) |
| Chronic obstructive pulmonary disease | 2,636 (41.2) | 1,929 (52.6) | 672 (16.2) | 5,237 (36.8) |
| Smoking | 895 (14.0) | 662 (18.1) | 623 (15.0) | 2,180 (15.3) |
| Corticosteroids | 490 (7.7) | 568 (15.5) | 147 (3.5) | 1,205 (8.5) |
| Coronary artery disease | 4,628 (72.4) | 1,875 (51.1) | 1,228 (29.6) | 7,731 (54.4) |
| Renal disease | 3,000 (46.9) | 1,320 (36.0) | 565 (13.6) | 4,885 (34.4) |
| Warfarin prior to arrival | 5,074 (79.4) | 3,020 (82.3) | 898 (21.6) | 8,992 (63.3) |
| Heparin given during hospitalization | 850 (13.3) | 282 (7.7) | 314 (7.6) | 1,446 (10.7) |
| LMWH given during hospitalization | 1,591 (24.9) | 1,070 (29.2) | 1,431 (34.4) | 4,092 (28.8) |

Warfarin was started on hospital day 1 for 6825 (48.0%) of 14,217 patients. Among these patients, 6539 (95.8%) had an INR measured within 1 calendar day. We were unable to determine how many patients who started warfarin later in their hospital stay had a baseline INR, as we did not capture INRs performed prior to the day that warfarin was initiated.

Supporting Table 1 in the online version of this article demonstrates the association between an INR ≥6.0 and the occurrence of warfarin‐associated adverse events. A maximum INR ≥6.0 occurred in 469 (3.3%) of the patients included in the study, and among those patients, 133 (28.4%) experienced a warfarin‐associated adverse event compared to 922 (6.7%) adverse events in the 13,748 patients who did not develop an INR ≥6.0 (P < 0.001).

Among 8529 patients who received warfarin for at least 3 days, beginning on the third day of warfarin, 1549 patients (18.2%) did not have an INR measured at least once each day that they received warfarin. Table 2 demonstrates that patients who had 2 or more days on which the INR was not measured had higher rates of INR ≥6.0 than patients for whom the INR was measured daily. A similar association was seen for warfarin‐associated adverse events (Table 2).

| | No. of Patients, No. (%), N = 8,529 | Patients With INR on All Days, No. (%), N = 6,980 | Patients With 1 Day Without an INR, No. (%), N = 968 | Patients With 2 or More Days Without an INR, No. (%), N = 581 | P Value |
|---|---|---|---|---|---|
| Maximum INR | | | | | <0.01* |
| 1.5–5.99 | 8,183 | 6,748 (96.7) | 911 (94.1) | 524 (90.2) | |
| ≥6.0 | 346 | 232 (3.3) | 57 (5.9) | 57 (9.8) | |
| Warfarin‐associated adverse events | | | | | <0.01* |
| No adverse events | 7,689 (90.2) | 6,331 (90.7) | 872 (90.1) | 486 (83.6) | |
| Minor adverse events | 792 (9.3) | 617 (8.8) | 86 (8.9) | 89 (15.3) | |
| Major adverse events | 48 (0.6) | 32 (0.5) | 10 (1.0) | 6 (1.0) | |
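As a worked illustration of the counts in Table 2, crude odds ratios for a maximum INR of 6.0 or higher can be computed directly from the cell counts. These are unadjusted values calculated here for illustration only; they are not the risk‐adjusted ORs reported in Figure 1, and the helper name `odds_ratio` is ours.

```python
def odds_ratio(events_exposed, nonevents_exposed, events_ref, nonevents_ref):
    """Crude odds ratio from a 2x2 table (exposed group vs. reference group)."""
    return (events_exposed / nonevents_exposed) / (events_ref / nonevents_ref)

# Reference group: INR measured on all days (232 with max INR >= 6.0, 6,748 without).
or_one_day = odds_ratio(57, 911, 232, 6748)    # 1 day without an INR
or_two_plus = odds_ratio(57, 524, 232, 6748)   # 2 or more days without an INR

print(round(or_one_day, 2), round(or_two_plus, 2))  # prints 1.82 3.16
```

The crude odds of a severely elevated INR rise with the number of days without a measurement, in line with the percentages in the table (3.3%, 5.9%, and 9.8%).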

Figure 1A demonstrates the association between the number of days without an INR measurement and the subsequent development of an INR ≥6.0 or a warfarin‐associated adverse event, adjusted for baseline patient characteristics, receipt of heparin and LMWH, and number of days on warfarin. Patients with 1 or more days without an INR measurement had higher risk‐adjusted ORs of a subsequent INR ≥6.0, although the difference was not statistically significant for surgical patients. The analysis results based on inverse propensity scoring are seen in Figure 1B. Cardiac and surgical patients with 2 or more days without an INR measurement were at higher risk of having a warfarin‐associated adverse event, whereas cardiac and pneumonia patients with 1 or more days without an INR measurement were at higher risk of developing an INR ≥6.0.

Supporting Table 2 in the online version of this article demonstrates the relationship between patient characteristics and the occurrence of an INR ≥6.0 or a warfarin‐related adverse event. The only characteristic that was associated with either of these outcomes for all 3 patient conditions was renal disease, which was positively associated with a warfarin‐associated adverse event. Warfarin use prior to arrival was associated with lower risks of both an INR ≥6.0 and a warfarin‐associated adverse event, except among surgical patients. Supporting Table 3 in the online version of this article demonstrates the differences in patient characteristics between patients who had daily INR measurement and those who had at least 1 day without an INR measurement.

Figure 2 illustrates the relationship of the maximum INR to the prior 1‐day change in INR in 4963 patients whose INR on the day prior to the maximum INR was 2.0 to 3.5. When the increase in INR was <0.9, the risk of the next day's INR being ≥6.0 was 0.7%, and if the increase was ≥0.9, the risk was 5.2%. The risk of developing an INR ≥5.0 was 1.9% if the preceding day's INR increase was <0.9 and 15.3% if the prior day's INR rise was ≥0.9. Overall, 51% of INRs ≥6.0 and 55% of INRs ≥5.0 were immediately preceded by an INR increase of ≥0.9. The positive likelihood ratio (LR) for a ≥0.9 rise in INR predicting an INR of ≥6.0 was 4.2, and the positive LR was 4.9 for predicting an INR ≥5.0.
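For readers less familiar with likelihood ratios, the positive LR is defined as follows. The back‐calculation in the second line is ours, derived from the rounded figures quoted above (51% of INRs of 6.0 or higher preceded by a rise of 0.9 or more, positive LR of 4.2), so it should be read as approximate.

```latex
\mathrm{LR}^{+} = \frac{\text{sensitivity}}{1 - \text{specificity}}
\qquad\Longrightarrow\qquad
1 - \text{specificity} \approx \frac{0.51}{4.2} \approx 0.12
```

In other words, roughly 12% of patients whose next‐day INR stayed below 6.0 also had a 1‐day rise of 0.9 or more.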

There was no decline in the frequency of warfarin use among the patients in the MPSMS sample during the study period (16.7% in 2009 and 17.3% in 2013).

DISCUSSION

We studied warfarin‐associated adverse events in a nationally representative study of patients who received warfarin while in an acute care hospital for a primary diagnosis of cardiac disease, pneumonia, or major surgery. Several findings resulted from our analysis. First, warfarin is still commonly prescribed to hospitalized patients and remains a frequent cause of adverse events; 7.4% of the 2009 to 2013 MPSMS population who received warfarin and had at least 1 INR >1.5 developed a warfarin‐associated adverse event.

Over 95% of patients who received warfarin on the day of hospital admission had an INR performed within 1 day. This is similar to the results from a 2006 single center study in which 95% of patients had an INR measured prior to their first dose of warfarin.[10] Since 2008, The Joint Commission's National Patient Safety Goal has required the assessment of coagulation status before starting warfarin.[17] The high level of adherence to this standard suggests that further attention to this process of care is unlikely to significantly improve patient safety.

We also found that the lack of daily INR measurements was associated with an increased risk of an INR ≥6.0 and warfarin‐associated adverse events in some patient populations. There is limited evidence addressing the appropriate frequency of INR measurement in hospitalized patients receiving warfarin. The Joint Commission National Patient Safety Goal requires use of a current INR to adjust this therapy, but provides no specifics.[17] Although some experts believe that INRs should be monitored daily in hospitalized patients, this does not appear to be uniformly accepted. In some reports, 2[13] or 3[14] consecutive days without the performance of an INR were required to activate a reminder. Protocols from some major teaching hospitals specify intermittent monitoring once the INR is therapeutic.[15, 16] Because our results suggest that lapses in INR measurement are associated with overanticoagulation and warfarin‐related adverse events, it may be appropriate to measure INRs daily in most hospitalized patients receiving warfarin. This would be consistent with the many known causes of INR instability in patients admitted to the hospital, including drug‐drug interactions, hepatic dysfunction, and changes in volume of distribution, such that truly stable hospitalized patients are likely rare. Indeed, hospital admission is a well‐known predictor of instability of warfarin effect.[9] Although our results suggest that daily INR measurement is associated with a lower rate of overanticoagulation, future studies might better define lower risk patients for whom daily INR measurement would not be necessary.

A prior INR increase of ≥0.9 in 1 day was associated with an increased risk of subsequent overanticoagulation. Although a rapidly rising INR is known to predict overanticoagulation,[10, 14] we could find no evidence as to what specific rate of rise confers this risk. Our results suggest that use of a warfarin dosing protocol that considers both the absolute value of the INR and the rate of rise could reduce warfarin‐related adverse events.

There are important limitations of our study. We did not abstract warfarin dosages, which precluded study of the appropriateness of both initial warfarin dosing and adjustment of the warfarin dose based on INR results. MPSMS does not reliably capture antiplatelet agents or other agents that result in drug‐drug interactions with warfarin, such as antibiotics, so this factor could theoretically have confounded our results. Antibiotic use seems unlikely to be a major confounder, because patients with acute cardiovascular disease demonstrated a similar relationship between INR measurement and an INR ≥6.0 to that seen with pneumonia and surgical patients, despite the latter patients likely having greater antibiotic exposure. Furthermore, MPSMS does not capture indices of severity of illness, so other unmeasured confounders could have influenced our results. Although we have data for patients admitted to the hospital for only 4 conditions, these are conditions that represent approximately 22% of hospital admissions in the United States.[2] Strengths of our study include the nationally representative and randomly selected cases and use of data that were obtained from chart abstraction as opposed to administrative data. Through the use of centralized data abstraction, we avoided the potential bias introduced when hospitals self‐report adverse events.

In summary, in a national sample of patients admitted to the hospital for 4 common conditions, warfarin‐associated adverse events were detected in 7.4% of patients who received warfarin. Lack of daily INR measurement was associated with an increased risk of overanticoagulation and warfarin‐associated adverse events in certain patient populations. A 1‐day increase in the INR of ≥0.9 predicted subsequent overanticoagulation. These results provide actionable opportunities to improve safety in some hospitalized patients receiving warfarin.

Acknowledgements

The authors express their appreciation to Dan Budnitz, MD, MPH, for his advice regarding study design and his review and comments on a draft of this manuscript.

Disclosures: This work was supported by contract HHSA290201200003C from the Agency for Healthcare Research and Quality, United States Department of Health and Human Services, Rockville, Maryland. Qualidigm was the contractor. The authors assume full responsibility for the accuracy and completeness of the ideas. Dr. Metersky has worked on various quality improvement and patient safety projects with Qualidigm, Centers for Medicare & Medicaid Services, and the Agency for Healthcare Research and Quality. His employer has received remuneration for this work. Dr. Krumholz works under contract with the Centers for Medicare & Medicaid Services to develop and maintain performance measures. Dr. Krumholz is the chair of a cardiac scientific advisory board for UnitedHealth and the recipient of a research grant from Medtronic, Inc. through Yale University. The other authors report no conflicts of interest.

The INR measurement period for each patient started from the initial date of warfarin administration and ended on the date the maximum INR occurred. If a patient had more than 1 INR value on any day, the higher INR value was selected. A day without an INR measurement was defined as no INR value documented for a calendar day within the INR measurement period, starting on the third day of warfarin and ending on the day of the maximum INR level.

Outcomes

The study was performed to assess the association between the number of days on which a patient did not have an INR measured while receiving warfarin and the occurrence of (1) an INR 6.0[20, 21] (intermediate outcome) and (2) a warfarin‐associated adverse event. A description of the MPSMS measure of warfarin‐associated adverse events has been previously published.[2] Warfarin‐associated adverse events must have occurred within 48 hours of predefined triggers: an INR 4.0, cessation of warfarin therapy, administration of vitamin K or fresh frozen plasma, or transfusion of packed red blood cells other than in the setting of a surgical procedure. Warfarin‐associated adverse events were divided into minor and major events for this analysis. Minor events were defined as bleeding, drop in hematocrit of 3 points (occurring more than 48 hours after admission and not associated with surgery), or development of a hematoma. Major events were death, intracranial bleeding, or cardiac arrest. A patient who had both a major and a minor event was considered as having had a major event.

To assess the relationship between a rapidly rising INR and a subsequent INR 5.0 or 6.0, we determined the increase in INR between the measurement done 2 days prior to the maximum INR and 1 day prior to the maximum INR. This analysis was performed only on patients whose INR was 2.0 and 3.5 on the day prior to the maximum INR. In doing so, we sought to determine if the INR rise could predict the occurrence of a subsequent severely elevated INR in patients whose INR was within or near the therapeutic range.

Statistical Analysis

We conducted bivariate analysis to quantify the associations between lapses in measurement of the INR and subsequent warfarin‐associated adverse events, using the Mantel‐Haenszel 2 test for categorical variables. We fitted a generalized linear model with a logit link function to estimate the association of days on which an INR was not measured and the occurrence of the composite adverse event measure or the occurrence of an INR 6.0, adjusting for baseline patient characteristics, the number of days on warfarin, and receipt of heparin and low‐molecular‐weight heparin (LMWH). To account for potential imbalances in baseline patient characteristics and warfarin use prior to admission, we conducted a second analysis using the stabilized inverse probability weights approach. Specifically, we weighted each patient by the patient's inverse propensity scores of having only 1 day, at least 1 day, and at least 2 days without an INR measurement while receiving warfarin.[22, 23, 24, 25] To obtain the propensity scores, we fitted 3 logistic models with all variables included in the above primary mixed models except receipt of LMWH, heparin, and the number of days on warfarin as predictors, but 3 different outcomes, 1 day without an INR measurement, 1 or more days without an INR measurement, and 2 or more days without an INR measurement. Analyses were conducted using SAS version 9.2 (SAS Institute Inc., Cary, NC). All statistical testing was 2‐sided, at a significance level of 0.05. The institutional review board at Solutions IRB (Little Rock, AR) determined that the requirement for informed consent could be waived based on the nature of the study.

RESULTS

There were 130,828 patients included in the 2009 to 2013 MPSMS sample, of whom 19,445 (14.9%) received warfarin during their hospital stay and had at least 1 INR measurement. Among these patients, 5228 (26.9%) had no INR level above 1.5 and were excluded from further analysis, leaving 14,217 included patients. Of these patients, 1055 (7.4%) developed a warfarin‐associated adverse event. Table 1 demonstrates the baseline demographics and comorbidities of the included patients.

| Characteristics | Acute Cardiovascular Disease, No. (%), N = 6,394 | Pneumonia, No. (%), N = 3,668 | Major Surgery, No. (%), N = 4,155 | All, No. (%), N = 14,217 |

|---|---|---|---|---|

| ||||

| Age, mean [SD] | 75.3 [12.4] | 74.5 [13.3] | 69.4 [11.8] | 73.4 [12.7] |

| Sex, female | 3,175 (49.7) | 1,741 (47.5) | 2,639 (63.5) | 7,555 (53.1) |

| Race | ||||

| White | 5,388 (84.3) | 3,268 (89.1) | 3,760 (90.5) | 12,416 (87.3) |

| Other | 1,006 (15.7) | 400 (10.9) | 395 (9.5) | 1,801 (12.7) |

| Comorbidities | ||||

| Cancer | 1,186 (18.6) | 939 (25.6) | 708 (17.0) | 2,833 (19.9) |

| Diabetes | 3,043 (47.6) | 1,536 (41.9) | 1,080 (26.0) | 5,659 (39.8) |

| Obesity | 1,938 (30.3) | 896 (24.4) | 1,260 (30.3) | 4,094 (28.8) |

| Cerebrovascular disease | 1,664 (26.0) | 910 (24.8) | 498 (12.0) | 3,072 (21.6) |

| Heart failure/pulmonary edema | 5,882 (92.0) | 2,052 (55.9) | 607 (14.6) | 8,541 (60.1) |

| Chronic obstructive pulmonary disease | 2,636 (41.2) | 1,929 (52.6) | 672 (16.2) | 5,237 (36.8) |

| Smoking | 895 (14.0) | 662 (18.1) | 623 (15.0) | 2,180 (15.3) |

| Corticosteroids | 490 (7.7) | 568 (15.5) | 147 (3.5) | 1,205 (8.5) |

| Coronary artery disease | 4,628 (72.4) | 1,875 (51.1) | 1,228 (29.6) | 7,731 (54.4) |

| Renal disease | 3,000 (46.9) | 1,320 (36.0) | 565 (13.6) | 4,885 (34.4) |

| Warfarin prior to arrival | 5,074 (79.4) | 3,020 (82.3) | 898 (21.6) | 8,992 (63.3) |

| Heparin given during hospitalization | 850 (13.3) | 282 (7.7) | 314 (7.6) | 1,446 (10.7) |

| LMWH given during hospitalization | 1,591 (24.9) | 1,070 (29.2) | 1,431 (34.4) | 4,092 (28.8) |

Warfarin was started on hospital day 1 for 6825 (48.0%) of 14,217 patients. Among these patients, 6539 (95.8%) had an INR measured within 1 calendar day. We were unable to determine how many patients who started warfarin later in their hospital stay had a baseline INR, as we did not capture INRs performed prior to the day that warfarin was initiated.

Supporting Table 1 in the online version of this article demonstrates the association between an INR 6.0 and the occurrence of warfarin‐associated adverse events. A maximum INR 6.0 occurred in 469 (3.3%) of the patients included in the study, and among those patients, 133 (28.4%) experienced a warfarin‐associated adverse event compared to 922 (6.7%) adverse events in the 13,748 patients who did not develop an INR 6.0 (P < 0.001).

Among 8529 patients who received warfarin for at least 3 days, beginning on the third day of warfarin, 1549 patients (18.2%) did not have INR measured at least once each day that they received warfarin. Table 2 demonstrates that patients who had 2 or more days on which the INR was not measured had higher rates of INR 6.0 than patients for whom the INR was measured daily. A similar association was seen for warfarin‐associated adverse events (Table 2).

| No. of Patients, No. (%), N = 8,529 | Patients With INR on All Days, No. (%), N = 6,980 | Patients With 1 Day Without an INR, No. (%), N = 968 | Patients With 2 or More Days Without an INR, No. (%), N = 581 | P Value | |

|---|---|---|---|---|---|

| |||||

| Maximum INR | <0.01* | ||||

| 1.515.99 | 8,183 | 6,748 (96.7) | 911 (94.1) | 524 (90.2) | |

| 6.0 | 346 | 232 (3.3) | 57 (5.9) | 57 (9.8) | |

| Warfarin‐associated adverse events | <0.01* | ||||

| No adverse events | 7,689 (90.2) | 6,331 (90.7) | 872 (90.1) | 486 (83.6) | |

| Minor adverse events | 792 (9.3) | 617 (8.8) | 86 (8.9) | 89 (15.3) | |

| Major adverse events | 48 (0.6) | 32 (0.5) | 10 (1.0) | 6 (1.0) | |

Figure 1A demonstrates the association between the number of days without an INR measurement and the subsequent development of an INR 6.0 or a warfarin‐associated adverse event, adjusted for baseline patient characteristics, receipt of heparin and LMWH, and number of days on warfarin. Patients with 1 or more days without an INR measurement had higher risk‐adjusted ORs of a subsequent INR 6.0, although the difference was not statistically significant for surgical patients. The analysis results based on inverse propensity scoring are seen in Figure 1B. Cardiac and surgical patients with 2 or more days without an INR measurement were at higher risk of having a warfarin‐associated adverse event, whereas cardiac and pneumonia patients with 1 or more days without an INR measurement were at higher risk of developing an INR 6.0.

Supporting Table 2 in the online version of this article demonstrates the relationship between patient characteristics and the occurrence of an INR 6.0 or a warfarin‐related adverse event. The only characteristic that was associated with either of these outcomes for all 3 patient conditions was renal disease, which was positively associated with a warfarin‐associated adverse event. Warfarin use prior to arrival was associated with lower risks of both an INR 6.0 and a warfarin‐associated adverse event, except for among surgical patients. Supporting Table 3 in the online version of this article demonstrates the differences in patient characteristics between patients who had daily INR measurement and those who had at least 1 day without an INR measurement.

Figure 2 illustrates the relationship of the maximum INR to the prior 1‐day change in INR in 4963 patients whose INR on the day prior to the maximum INR was 2.0 to 3.5. When the increase in INR was <0.9, the risk of the next day's INR being 6.0 was 0.7%, and if the increase was 0.9, the risk was 5.2%. The risk of developing an INR 5.0 was 1.9% if the preceding day's INR increase was <0.9 and 15.3% if the prior day's INR rise was 0.9. Overall, 51% of INRs 6.0 and 55% of INRs 5.0 were immediately preceded by an INR increase of 0.9. The positive likelihood ratio (LR) for a 0.9 rise in INR predicting an INR of 6.0 was 4.2, and the positive LR was 4.9 for predicting an INR 5.0.

There was no decline in the frequency of warfarin use among the patients in the MPSMS sample during the study period (16.7% in 2009 and 17.3% in 2013).

DISCUSSION

We studied warfarin‐associated adverse events in a nationally representative study of patients who received warfarin while in an acute care hospital for a primary diagnosis of cardiac disease, pneumonia, or major surgery. Several findings resulted from our analysis. First, warfarin is still commonly prescribed to hospitalized patients and remains a frequent cause of adverse events; 7.4% of the 2009 to 2013 MPSMS population who received warfarin and had at least 1 INR >1.5 developed a warfarin‐associated adverse event.

Over 95% of patients who received warfarin on the day of hospital admission had an INR performed within 1 day. This is similar to the results from a 2006 single center study in which 95% of patients had an INR measured prior to their first dose of warfarin.[10] Since 2008, The Joint Commission's National Patient Safety Goal has required the assessment of coagulation status before starting warfarin.[17] The high level of adherence to this standard suggests that further attention to this process of care is unlikely to significantly improve patient safety.

We also found that the lack of daily INR measurements was associated with an increased risk of an INR 6.0 and warfarin‐associated adverse events in some patient populations. There is limited evidence addressing the appropriate frequency of INR measurement in hospitalized patients receiving warfarin. The Joint Commission National Patient Safety Goal requires use of a current INR to adjust this therapy, but provides no specifics.[17] Although some experts believe that INRs should be monitored daily in hospitalized patients, this does not appear to be uniformly accepted. In some reports, 2[13] or 3[14] consecutive days without the performance of an INR was required to activate a reminder. Protocols from some major teaching hospitals specify intermittent monitoring once the INR is therapeutic.[15, 16] Because our results suggest that lapses in INR measurement lead to overanticoagulation and warfarin‐related adverse events, it may be appropriate to measure INRs daily in most hospitalized patients receiving warfarin. This would be consistent with the many known causes of INR instability in patients admitted to the hospital, including drug‐drug interactions, hepatic dysfunction, and changes in volume of distribution, such that truly stable hospitalized patients are likely rare. Indeed, hospital admission is a well‐known predictor of instability of warfarin effect. [9] Although our results suggest that daily INR measurement is associated with a lower rate of overanticoagulation, future studies might better define lower risk patients for whom daily INR measurement would not be necessary.

A prior INR increase ≥0.9 in 1 day was associated with an increased risk of subsequent overanticoagulation. Although a rapidly rising INR is known to predict overanticoagulation,[10, 14] we could find no evidence as to what specific rate of rise confers this risk. Our results suggest that use of a warfarin dosing protocol that considers both the absolute value of the INR and the rate of rise could reduce warfarin‐related adverse events.
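To make the two monitoring signals discussed above concrete, the following is a minimal sketch of how a chart‐review or decision‐support script might flag them. It is illustrative only and is not a validated clinical protocol: the names `InrResult` and `review_flags` are hypothetical, and treating the study's associations (a 1‐day rise of at least 0.9, a gap of more than 1 day between INRs) as alert cut‐offs is an assumption of this sketch rather than something the study tested.

```python
from dataclasses import dataclass
from datetime import date

# Assumed alert cut-offs, borrowed from the associations reported in the text.
# Using them as thresholds is an assumption of this sketch, not a tested rule.
RAPID_RISE_PER_DAY = 0.9  # 1-day INR increase linked to later overanticoagulation
MAX_GAP_DAYS = 1          # a longer gap means at least one daily INR was missed


@dataclass
class InrResult:
    day: date
    value: float


def review_flags(results: list[InrResult]) -> list[str]:
    """Return human-readable flags for one patient's INR history."""
    flags = []
    ordered = sorted(results, key=lambda r: r.day)
    # Compare each INR with the one immediately before it.
    for prev, curr in zip(ordered, ordered[1:]):
        gap = (curr.day - prev.day).days
        # Round to avoid floating-point noise at the 0.9 boundary.
        rise = round(curr.value - prev.value, 2)
        if gap > MAX_GAP_DAYS:
            flags.append(f"{curr.day}: {gap} days since previous INR")
        elif gap == 1 and rise >= RAPID_RISE_PER_DAY:
            flags.append(f"{curr.day}: INR rose {rise:.1f} in 1 day")
    return flags


if __name__ == "__main__":
    history = [
        InrResult(date(2015, 1, 1), 2.0),
        InrResult(date(2015, 1, 2), 3.2),  # rapid 1-day rise
        InrResult(date(2015, 1, 5), 3.4),  # measured after a 3-day gap
    ]
    for flag in review_flags(history):
        print(flag)
```

A rule of this kind considers the rate of rise separately from the absolute INR value; any real protocol would also need dose information and clinical context, which the study did not abstract.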

There are important limitations of our study. We did not abstract warfarin dosages, which precluded study of the appropriateness of both initial warfarin dosing and adjustment of the warfarin dose based on INR results. MPSMS does not reliably capture antiplatelet agents or other agents that result in drug‐drug interactions with warfarin, such as antibiotics, so this factor could theoretically have confounded our results. Antibiotic use seems unlikely to be a major confounder, because patients with acute cardiovascular disease demonstrated a similar relationship between INR measurement and an INR ≥6.0 to that seen with pneumonia and surgical patients, despite the latter patients likely having greater antibiotic exposure. Furthermore, MPSMS does not capture indices of severity of illness, so other unmeasured confounders could have influenced our results. Although we have data for patients admitted to the hospital for only 4 conditions, these are conditions that represent approximately 22% of hospital admissions in the United States.[2] Strengths of our study include the nationally representative and randomly selected cases and use of data that were obtained from chart abstraction as opposed to administrative data. Through the use of centralized data abstraction, we avoided the potential bias introduced when hospitals self‐report adverse events.

In summary, in a national sample of patients admitted to the hospital for 4 common conditions, warfarin‐associated adverse events were detected in 7.4% of patients who received warfarin. Lack of daily INR measurement was associated with an increased risk of overanticoagulation and warfarin‐associated adverse events in certain patient populations. A 1‐day increase in the INR of ≥0.9 predicted subsequent overanticoagulation. These results provide actionable opportunities to improve safety in some hospitalized patients receiving warfarin.

Acknowledgements

The authors express their appreciation to Dan Budnitz, MD, MPH, for his advice regarding study design and his review and comments on a draft of this manuscript.

Disclosures: This work was supported by contract HHSA290201200003C from the Agency for Healthcare Research and Quality, United States Department of Health and Human Services, Rockville, Maryland. Qualidigm was the contractor. The authors assume full responsibility for the accuracy and completeness of the ideas. Dr. Metersky has worked on various quality improvement and patient safety projects with Qualidigm, Centers for Medicare & Medicaid Services, and the Agency for Healthcare Research and Quality. His employer has received remuneration for this work. Dr. Krumholz works under contract with the Centers for Medicare & Medicaid Services to develop and maintain performance measures. Dr. Krumholz is the chair of a cardiac scientific advisory board for UnitedHealth and the recipient of a research grant from Medtronic, Inc. through Yale University. The other authors report no conflicts of interest.

- Delivery of optimized inpatient anticoagulation therapy: consensus statement from the anticoagulation forum. Ann Pharmacother. 2013;47:714–724.

- National trends in patient safety for four common conditions, 2005–2011. N Engl J Med. 2014;370:341–351.

- Update on antithrombotic therapy: new anticoagulants. Circulation. 2010;121:1523–1532.

- The pharmacogenetics of coumarin therapy. Pharmacogenomics. 2005;6:503–513.

- Adverse drug events among hospitalized Medicare patients: epidemiology and national estimates from a new approach to surveillance. Jt Comm J Qual Patient Saf. 2010;36:12–21.

- Active surveillance using electronic triggers to detect adverse events in hospitalized patients. Qual Saf Health Care. 2006;15:184–190.

- Inpatient warfarin management: pharmacist management using a detailed dosing protocol. J Thromb Thrombolysis. 2012;33:178–184.

- Efficacy and safety of a pharmacist‐managed inpatient anticoagulation service for warfarin initiation and titration. J Clin Pharm Ther. 2011;36:585–591.

- Bleeding complications of oral anticoagulant treatment: an inception‐cohort, prospective collaborative study (ISCOAT). Italian Study on Complications of Oral Anticoagulant Therapy. Lancet. 1996;348:423–428.

- Oral anticoagulation in the hospital: analysis of patients at risk. J Thromb Thrombolysis. 2011;31:22–26.

- Evidence‐based management of anticoagulant therapy: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence‐Based Clinical Practice Guidelines. Chest. 2012;141:e152S–e184S.

- Agency for Healthcare Research and Quality. National Guideline Clearinghouse. Available at: http://www.guideline.gov. Accessed April 30, 2015.

- Reduction in anticoagulation‐related adverse drug events using a trigger‐based methodology. Jt Comm J Qual Patient Saf. 2005;31:313–318.

- Use of specific indicators to detect warfarin‐related adverse events. Am J Health Syst Pharm. 2005;62:1683–1688.

- University of Wisconsin Health. Warfarin management–adult–inpatient clinical practice guideline. Available at: http://www.uwhealth.org/files/uwhealth/docs/pdf3/Inpatient_Warfarin_Guideline.pdf. Accessed April 30, 2015.

- Anticoagulation Guidelines ‐ LSU Health Shreveport. Available at: http://myhsc.lsuhscshreveport.edu/pharmacy/PT%20Policies/Anticoagulation_Safety.pdf. Accessed November 29, 2015.

- The Joint Commission. National patient safety goals effective January 1, 2015. Available at: http://www.jointcommission.org/assets/1/6/2015_NPSG_HAP.pdf. Accessed November 29, 2015.

- U.S. Department of Health and Human Services. Office of Disease Prevention and Health Promotion. Available at: http://health.gov/hcq/pdfs/ade-action-plan-508c.pdf. Accessed November 29, 2015.

- The Joint Commission. Surgical care improvement project. Available at: http://www.jointcommission.org/surgical_care_improvement_project. Accessed May 5, 2015.

- Optimization of inpatient warfarin therapy: Impact of daily consultation by a pharmacist‐managed anticoagulation service. Ann Pharmacother. 2000;34:567–572.

- Effects of requiring a baseline International Normalized Ratio for inpatients treated with warfarin. Am J Health Syst Pharm. 2010;67:17–22.

- Weighting regressions by propensity scores. Eval Rev. 2008;32:392–409.

- An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivar Behav Res. 2011;46:399–424.

- Propensity score methods for bias reduction in the comparison of a treatment to a non‐randomized control group. Stat Med. 1998;17:2265–2281.

- The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

© 2015 Society of Hospital Medicine

Treatment and Outcomes of SPFD

Over the past decade, the use of chest computed tomography scans with pulmonary angiography (CTPA) for diagnosis of pulmonary embolism (PE) has soared due to the ease of acquisition, the desire for the additional information that CT scanning may provide, and heightened sensitivity to medical liability.[1, 2, 3, 4, 5, 6] In parallel with this shift, the incidence of PE has nearly doubled, despite no recorded increase in the pretest probability of the disease, increasing from 62 per 100,000 to 112 per 100,000 during the period of 1993 to 2006.[6] One major explanation for this increase is that the improvement in CTPA resolution has enabled radiologists to identify more small peripheral (ie, segmental and subsegmental) filling defects. When confronted with the finding of a small peripheral filling defect on CTPA, clinicians often face a management quandary. Case series and retrospective series on outcomes of these patients do not support treatment, but they are limited by having small numbers of patients; the largest examined 93 patients and provided no insight into the treatment decision.[7] Uncertainty exists, furthermore, about the pathologic meaning of small peripheral filling defects.[8] Clinicians must weigh these arguments and the risk of anticoagulation against concerns about the consequences of untreated pulmonary thromboemboli. More information is needed, therefore, on the outcomes of patients with peripheral filling defects, and on variables impacting the treatment decision, in order to help clinicians manage these patients.[9]

In this study, we analyzed cases of patients with a single peripheral filling defect (SPFD). We chose to look at patients with an SPFD because they represent the starkest decision‐making treatment dilemma and are not infrequent. We assessed the 90‐day mortality and rate of postdischarge venous thromboembolism (VTE) of treated and untreated patients and identified characteristics of treated and untreated patients with an SPFD. We wished to determine the incidence of SPFD among patients evaluated with CTPA and to determine how often the defect is called a PE by the radiologist. We also aimed to determine what role secondary studies play in helping to clarify the diagnosis and management of SPFD and to identify other factors that may influence the decision to treat patients with this finding.

METHODS

Site

This retrospective cohort study was conducted at a community hospital in Norwalk, CT. The hospital is a 328‐bed, not‐for‐profit, acute‐care community teaching hospital that serves a population of 250,000 in lower Fairfield County, Connecticut, and is affiliated with the Yale School of Medicine.

Subjects