Examining How the Body Responds to Ebola

“Unprecedented detail” was observed about how a patient’s clinical condition changes in response to Ebola virus disease and treatment. That’s what NIH researchers who analyzed daily gene activation found in a 26-day study of 1 patient.

The patient, who was admitted to the NIH Clinical Center on day 7 of illness, received intensive supportive care, including fluids and electrolytes, but did not receive any experimental Ebola drugs. The researchers took blood samples daily to measure the rise and decline of virus replication and to track the timing, intensity, and duration of expression of numerous immune system genes. They correlated changes in gene expression with subsequent alterations in the patient’s clinical condition, such as development and resolution of blood-clotting dysfunction.

The researchers pinpointed “key transition points” in the response to infection, NIH says. For example, they observed a marked decline in antiviral responses that correlated with clearance of virus from white blood cells. The researchers also found that most host responses shifted rapidly from activating genes involved in cell damage and inflammation toward those linked to promotion of cellular and organ repair—a “pivot” that came before the patient began showing signs of clinical improvement.

Although the study centered on only 1 patient, the researchers say it may help inform the development of treatments designed to boost or accelerate host factors that counter the virus and promote healing.

A Rare Case Among Rare Cancer Cases

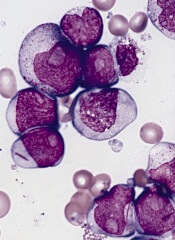

Cardiac tumors are rare and usually benign. An exception is the primary cardiac schwannoma. Only 17 cases have been reported, and most of those were malignant, says a team of doctors from McMaster University and Hamilton General Hospital, both in Ontario, Canada. Schwannomas, composed of Schwann cells, are tumors of nerve sheaths commonly found in cranial and peripheral nerves. The clinicians report an “interesting case” of a patient whose schwannoma was benign, which is unusual, and located in the heart, which makes it “exceedingly rare.”

Their patient, a 47-year-old woman who had been treated for ovarian cancer, had no symptoms of a cardiac schwannoma. During the workup for the ovarian cancer, the clinicians discovered a large mass between the right atrium and right ventricle. Because she was asymptomatic, her physicians decided to closely monitor the tumor’s growth.

The chemotherapy for ovarian cancer did not reduce the cardiac schwannoma. As the disease progressed and the tumor grew, the patient developed symptoms, including sharp chest pain, shortness of breath, and palpitations.

Related: Rare Cancer Gets Timely Right Treatment

The prognosis of a benign cardiac tumor depends on resectability, the clinicians say. After complete resection, the prognosis is excellent, and adjuvant therapy is not needed. If surgery is not indicated, chemotherapy is an option. The patient chose to have surgery to remove the tumor. During the operation, the surgeons found that the blood supply of the tumor came from a branch of the right coronary artery and the posterior descending artery (PDA).

The surgery, which included resection, ligation of the PDA, and a saphenous vein graft for a coronary bypass to the distal PDA, was successful. A definitive diagnosis of a cardiac tumor can be made only after histologic examination of samples taken at autopsy or surgical resection, the clinicians note. The gross and microscopic evidence confirmed the diagnosis of benign schwannoma of the heart.

Related: In Rare Case Colorectal Cancer Causes Thrombus

Source:

Koujanian S, Pawlowicz B, Landry D, Alexopoulou I, Nair V. Hum Pathol. 2017;(8):24-26

Group creates ‘authentic’ HSCs from endothelial cells

Researchers say they have found a way to convert adult mouse endothelial cells into “authentic” hematopoietic stem cells (HSCs).

The team says these HSCs have a transcriptome and long-term self-renewal capacity that are similar to those of adult HSCs that are produced naturally.

In addition, the lab-generated HSCs were capable of engraftment and multi-lineage reconstitution in mice.

“This is a game-changing breakthrough that brings us closer not only to treat blood disorders, but also to deciphering the complex biology of stem-cell self-renewal machinery,” said Shahin Rafii, MD, of Weill Cornell Medicine in New York, New York.

“This is exciting because it provides us with a path towards generating clinically useful quantities of normal stem cells for transplantation that may help us cure patients with genetic and acquired blood diseases,” added Joseph Scandura, MD, PhD, also of Weill Cornell Medicine.

Drs Scandura and Rafii and their colleagues described this research in Nature.

The researchers took vascular endothelial cells from adult mice and induced expression of the transcription-factor-encoding genes Fosb, Gfi1, Runx1, and Spi1.

The cells were grown and multiplied in co-culture with an engineered vascular niche.

This produced HSCs that were transplanted into irradiated mice.

The researchers said these HSCs were capable of long-term engraftment and hematopoietic reconstitution of myelopoiesis and both innate and adaptive immune function.

In addition, the HSCs were endowed with the same genetic attributes as normal adult HSCs.

If this method of generating HSCs in the lab can be scaled up and applied to humans, it could have wide-ranging clinical implications, according to the researchers.

“It might allow us to provide healthy stem cells to patients who need bone marrow donors but have no genetic match,” Dr Scandura said. “It could lead to new ways to cure leukemia and may help us correct genetic defects that cause blood diseases like sickle cell anemia.”

“More importantly, our vascular niche stem cell expansion model may be employed to clone the key unknown growth factors produced by this niche that are essential for self-perpetuation of stem cells,” Dr Rafii said. “Identification of those factors could be important for unraveling the secrets of stem cells’ longevity and translating the potential of stem cell therapy to the clinical setting.”

EC grants orphan designation to drug for CTCL

The European Commission (EC) has granted orphan designation to MRG-106 for the treatment of cutaneous T-cell lymphoma (CTCL).

MRG-106 is a locked nucleic acid-modified oligonucleotide inhibitor of miR-155-5p.

miRagen Therapeutics, Inc., the company developing MRG-106, is currently testing the drug in a phase 1 trial of CTCL patients.

Early results from this trial were presented at the 2016 ASH Annual Meeting.

Researchers presented results in 6 patients with stage I-III mycosis fungoides.

The patients received 4 or 5 intratumoral injections of MRG-106 (at 75 mg) over 2 weeks. Four patients received saline injections in a second lesion on the same schedule.

There were 3 adverse events related to MRG-106—pain during injection, burning sensation during injection, and tingling at the injection site.

Adverse events considered possibly related to MRG-106 were pruritus, erythema, skin inflammation, sore on hand, nausea, decrease in white blood cells, neutropenia, and prolonged partial thromboplastin time.

One patient was taken off the trial due to rapid disease progression. The other 5 patients completed the dosing period.

All 5 patients had a reduction in the baseline Composite Assessment of Index Lesion Severity score in MRG-106-treated and saline-treated lesions.

The average maximal reduction was 55% (range, 33% to 77%) in MRG-106-treated lesions and 39% (range, 13% to 75%) in saline-treated lesions.

About orphan designation

Orphan designation provides regulatory and financial incentives for companies to develop and market therapies that treat life-threatening or chronically debilitating conditions affecting no more than 5 in 10,000 people in the European Union, and where no satisfactory treatment is available.

Orphan designation provides a 10-year period of marketing exclusivity if the drug receives regulatory approval.

The designation also provides incentives for companies seeking protocol assistance from the European Medicines Agency during the product development phase and direct access to the centralized authorization procedure.

The European Medicines Agency adopts an opinion on the granting of orphan drug designation, and that opinion is submitted to the EC for a final decision. The EC typically makes a decision within 30 days of that submission.

Assay available to screen donated blood for Zika

Blood banks in countries that accept the CE mark can now use the Procleix Zika Virus Assay to screen blood donations for the presence of Zika virus.

CE marking means a product conforms to relevant legislation for sale in the European Economic Area.

The Procleix Zika Virus Assay, which was developed by Hologic, Inc. and Grifols, is designed to run on the Procleix Panther System, an automated, nucleic acid technology (NAT) blood screening platform.

NAT enables detection of infectious agents in blood and plasma donations.

The Procleix Panther System automates all aspects of NAT-based blood screening on a single, integrated platform.

The system has received regulatory approvals in countries around the world, and it is in development for the US market.

In the US, the Procleix Zika Virus Assay is being used under an investigational new drug protocol in response to the US Food and Drug Administration’s recommendation to screen all US blood donations for Zika virus.

“The CE marking of the Procleix Zika virus assay is a further step in our mission to support safer blood donations, the result of our passion for innovation and the role we play as market leaders in transfusion medicine,” said Grifols Diagnostic Division President Carsten Schroeder.

About Zika virus

Zika is a mosquito-borne virus that was first identified in rhesus monkeys in Uganda in 1947 and in humans in 1952. Outbreaks of Zika virus have been recorded in Africa, the Americas, Asia, and the Pacific.

Zika virus is transmitted to humans primarily through the bite of an infected mosquito from the Aedes genus, mainly Aedes aegypti, in tropical regions.

Sexual transmission of Zika virus is also possible, and reports have suggested Zika can be transmitted via transfusion of blood products. Other modes of transmission are being investigated as well.

In total, 64 countries and territories have reported transmission of Zika virus since January 1, 2007.

EMA recommends orphan designation for AML drug

The European Medicines Agency (EMA) has recommended orphan designation for Actimab-A, a product intended to treat patients with newly diagnosed acute myeloid leukemia (AML) who are over the age of 60 and are ineligible for standard induction therapy.

Actimab-A targets CD33, a protein expressed on the surface of AML cells, via the monoclonal antibody, HuM195, which carries the cytotoxic radioisotope actinium-225 to the AML cells.

Actinium Pharmaceuticals, Inc., the company developing Actimab-A, is testing the drug in a phase 2 trial.

Results from a phase 1 trial of the drug were presented at the 2016 ASH Annual Meeting.

At that time, researchers reported results in 18 patients who had been newly diagnosed with AML and were age 60 and older. Their median age was 77 (range, 68-87).

The patients received Actimab-A in combination with low-dose cytarabine. Actimab-A was given at 0.5 μCi/kg/fraction (n=3), 1 μCi/kg/fraction (n=6), 1.5 μCi/kg/fraction (n=3), or 2 μCi/kg/fraction (n=6).

Two patients experienced dose-limiting toxicities. Both had grade 4 thrombocytopenia with marrow aplasia for more than 6 weeks after therapy. One patient was in the 1 µCi/kg/fraction cohort, and the other was in the 2 µCi/kg/fraction cohort.

The maximum-tolerated dose was not reached, but 2 µCi/kg/fraction was chosen as the phase 2 dose.

Grade 3/4 toxicities included neutropenia (n=5), thrombocytopenia (n=9), febrile neutropenia (n=6), pneumonia (n=5), other infections (n=3), atrial fibrillation/syncope (n=1), transient creatinine increase (n=1), generalized fatigue (n=1), hypokalemia (n=1), mucositis (n=1), and rectal hemorrhage (n=1).

Twenty-eight percent of patients (5/18) had objective responses to treatment. Two patients achieved a complete response (CR), 1 had a CR with incomplete platelet recovery, and 2 had a CR with incomplete marrow recovery.
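As a quick arithmetic check, the response rate can be recomputed from the figures reported above. This is a minimal sketch; the variable names are illustrative and the numbers are taken only from the dose cohorts and response categories listed in this article.

```python
# Tallies taken from the phase 1 report above; names are illustrative only.
dose_cohorts = {0.5: 3, 1.0: 6, 1.5: 3, 2.0: 6}  # µCi/kg/fraction -> patients
responses = {
    "CR": 2,
    "CR with incomplete platelet recovery": 1,
    "CR with incomplete marrow recovery": 2,
}

total_patients = sum(dose_cohorts.values())    # should match the 18 patients reported
total_responders = sum(responses.values())     # should match the 5 responders reported
response_rate = total_responders / total_patients

print(f"{total_responders}/{total_patients} = {response_rate:.0%}")
```

The four dose cohorts sum to the 18 patients reported, and the three response categories sum to the 5 responders behind the 28% figure.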

The median duration of response was 9.1 months (range, 4.1-16.9).

About orphan designation

Orphan designation provides regulatory and financial incentives for companies to develop and market therapies that treat life-threatening or chronically debilitating conditions affecting no more than 5 in 10,000 people in the European Union, and where no satisfactory treatment is available.

Orphan designation provides a 10-year period of marketing exclusivity if the drug receives regulatory approval. The designation also provides incentives for companies seeking protocol assistance from the EMA during the product development phase and direct access to the centralized authorization procedure.

The EMA adopts an opinion on the granting of orphan drug designation, and that opinion is submitted to the European Commission for a final decision. The European Commission typically makes a decision within 30 days.

Annular lesions on abdomen

The FP concluded that this was a case of nummular eczema, after exploring other elements of the differential diagnosis, which included psoriasis, tinea corporis, and contact dermatitis. The FP looked at the patient's nails and scalp and saw no other signs of psoriasis. He then performed a potassium hydroxide (KOH) preparation and found no evidence of hyphae or fungal elements. (See a video on how to perform a KOH preparation here: http://www.mdedge.com/jfponline/article/100603/dermatology/koh-preparation.) He ruled out contact dermatitis because the history did not support it, and this wouldn’t have been a common location for it.

Nummular eczema is a type of eczema characterized by circular or oval-shaped scaling plaques with well-defined borders. (“Nummus” is Latin for “coin.”) Nummular eczema produces multiple lesions that are most commonly found on the dorsa of the hands, arms, and legs.

In this case, the FP offered to do a biopsy to confirm the clinical diagnosis, but noted that treatment could be started empirically and the biopsy could be reserved for the next visit only if the treatment was not successful. The patient preferred to start with topical treatment and hold off on the biopsy.

The FP prescribed 0.1% triamcinolone ointment to be applied twice daily. The FP knew that this was a mid-potency steroid and a high-potency steroid might work more rapidly and effectively, but the patient's insurance had a large deductible and the patient was going to have to pay for the medication out-of-pocket. Generic 0.1% triamcinolone is far less expensive than any of the high-potency steroids, including generic clobetasol.

Fortunately, at the one-month follow-up, the patient had improved by 95% and the only remaining problem was the hyperpigmentation, which was secondary to the inflammation. The FP suggested that the patient continue using the triamcinolone until there was a full resolution of the erythema, scaling, and itching. He explained that the post-inflammatory hyperpigmentation might take months (to a year) to resolve, and in some cases never resolves. The patient was not worried about this issue and was happy that his symptoms had improved.

Photos and text for Photo Rounds Friday courtesy of Richard P. Usatine, MD. This case was adapted from: Wah Y, Usatine R. Eczema. In: Usatine R, Smith M, Mayeaux EJ, et al, eds. Color Atlas of Family Medicine. 2nd ed. New York, NY: McGraw-Hill; 2013.

To learn more about the Color Atlas of Family Medicine, see: www.amazon.com/Color-Family-Medicine-Richard-Usatine/dp/0071769641/

You can now get the second edition of the Color Atlas of Family Medicine as an app by clicking on this link: usatinemedia.com

Mupirocin plus chlorhexidine halved Mohs surgical-site infections

SYDNEY – All patients undergoing Mohs surgery should be treated with intranasal mupirocin and a chlorhexidine body wash for 5 days before surgery, without any requirement for a nasal swab positive for Staphylococcus aureus, according to Dr. Harvey Smith.

He presented data from a randomized, controlled trial investigating the prevention of surgical-site infection in 1,002 patients undergoing Mohs surgery who had a negative nasal swab result for S. aureus. Patients were randomized to intranasal mupirocin ointment twice daily and chlorhexidine body wash daily for the 5 days before surgery, or no intervention, said Dr. Smith, a dermatologist in group practice in Perth, Australia.

The results add to earlier studies by the same group. The first study – Staph 1 – showed that swab-positive nasal carriage of S. aureus was a greater risk factor for surgical-site infections in Mohs surgery than the Wright criteria, and that decolonization with intranasal mupirocin and chlorhexidine body wash for a few days before surgery reduced the risk of infection in these patients from 12% to 4%.

The second study – Staph 2 – showed that using mupirocin and chlorhexidine before surgery was superior to the recommended treatment of stat oral cephalexin in reducing the risk of surgical-site infection.

“So, our third paper has been wondering what to do about the silent majority: These are the two-thirds of patients on whom we operate who have a negative swab for S. aureus,” Dr. Smith said.

A negative nasal swab was not significant, he said, because skin microbiome studies had already demonstrated that humans carry S. aureus in several places, particularly the feet and buttocks.

“What we’re basically saying is we don’t think you need to swab people, because they’ve got it somewhere,” Dr. Smith said in an interview. “We don’t think risk stratification is useful anymore, because we’ve shown it’s a benefit to everybody.”

The strategy of treating all patients with mupirocin and chlorhexidine, regardless of nasal carriage, rather than using the broad-spectrum cephalexin, fits with the World Health Organization’s global action plan on antimicrobial resistance, Dr. Smith explained.

While there had been cases of mupirocin resistance in the past, Dr. Smith said these had been seen in places where the drug had previously been available over the counter, such as New Zealand. However, there was no evidence of resistance developing for such a short course of use as employed in this setting, he said.

An audience member asked whether there were any side effects from the mupirocin or chlorhexidine. Dr. Smith said the main potential adverse event from the treatment was the risk of chlorhexidine toxicity to the cornea. However, he said that patients were told not to get the wash near their eyes.

Apart from one or two patients with eczema who could not tolerate the full 5 days of the chlorhexidine, Dr. Smith said they had now treated more than 4,000 patients with no other side effects observed.

The study was supported by the Australasian College of Dermatologists. No conflicts of interest were declared.

Key clinical point: Treat all patients undergoing Mohs surgery with intranasal mupirocin and a chlorhexidine body wash for 5 days before surgery, without the need for a nasal swab for Staphylococcus aureus.

Major finding: Treating patients undergoing Mohs surgery with intranasal mupirocin and a chlorhexidine body wash for 5 days before surgery halved the risk of surgical-site infections, even if the patients did not have a positive nasal swab for S. aureus.

Data source: A randomized, controlled trial in 1,002 patients with a negative nasal swab for S. aureus undergoing Mohs surgery.

Disclosures: The study was partly supported by the Australasian College of Dermatologists. No conflicts of interest were declared.

New DES hailed for smallest coronary vessels

PARIS – The first multicenter, prospective trial of a drug-eluting stent designed specifically to treat lesions in coronary vessels less than 2.25 mm in diameter showed excellent outcomes, with a 1-year target lesion failure rate of 5% for the Resolute Onyx 2.0-mm-diameter zotarolimus-eluting stent.

This result in the pivotal trial easily surpassed the prespecified performance goal of a 19% target lesion failure rate, Matthew J. Price, MD, reported at the annual congress of the European Association of Percutaneous Cardiovascular Interventions.

Hemodynamically significant lesions in such small vessels are “not uncommon, particularly in diabetic patients,” Dr. Price said in an interview. Indeed, 47% of patients in the clinical trial had diabetes.

At present, the only ways to treat coronary disease in arteries having a reference vessel diameter less than 2.25 mm are off-label placement of an oversized stent, with its attendant risk of complications; standard balloon angioplasty, which entails a particularly high restenosis rate in this setting; or medical management, the cardiologist noted.

He presented a multicenter, prospective, open-label, single-arm trial of 101 patients with obstructions in coronary arteries having a reference vessel diameter less than 2.25 mm, a lesion length less than 27 mm, and evidence of ischemia attributable to the lesion, typically via fractional flow reserve. The mean diameter by quantitative coronary angiography was 1.91 mm.

The primary endpoint was the rate of target lesion failure at 12 months, a composite comprising cardiac death, target vessel MI, and clinically driven target lesion revascularization. This endpoint occurred in 5% of patients. There was a 3% target vessel MI rate and a 2% target lesion revascularization rate. There were no cardiac deaths.

“Importantly, the stent thrombosis rate in these patients with extremely small vessels was zero,” the cardiologist emphasized.

The mean angiographic in-stent late lumen loss at 13 months was 0.26 mm, which Dr. Price characterized as “quite good.” The in-segment binary angiographic restenosis rate was 20%.

“That’s slightly higher than you would expect to see in vessels with larger reference diameters. I think that’s because of the lack of headroom. You have a very small vessel, and, even with a very small stent, even a small amount of late loss will give you a larger percent diameter restenosis over time,” he explained.
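The "lack of headroom" point can be illustrated with simple arithmetic. The sketch below is a deliberate simplification and not the trial's angiographic method: it expresses a fixed late lumen loss as a fraction of vessel diameter. The 0.26-mm late loss and 1.91-mm mean diameter come from the figures reported above; the 3.0-mm comparison vessel and the function name are hypothetical, chosen only for illustration.

```python
def percent_diameter_loss(reference_diameter_mm: float, late_loss_mm: float) -> float:
    """Return late lumen loss as a percentage of the reference vessel diameter."""
    if reference_diameter_mm <= 0:
        raise ValueError("reference diameter must be positive")
    return 100.0 * late_loss_mm / reference_diameter_mm


LATE_LOSS_MM = 0.26      # mean in-stent late lumen loss reported in the trial
SMALL_VESSEL_MM = 1.91   # mean vessel diameter reported in the trial
LARGER_VESSEL_MM = 3.0   # hypothetical comparison vessel, not from the trial

small = percent_diameter_loss(SMALL_VESSEL_MM, LATE_LOSS_MM)
larger = percent_diameter_loss(LARGER_VESSEL_MM, LATE_LOSS_MM)

print(f"{SMALL_VESSEL_MM} mm vessel: {small:.1f}% of diameter lost")
print(f"{LARGER_VESSEL_MM} mm vessel: {larger:.1f}% of diameter lost")
```

Under these assumptions, the same absolute late loss consumes a larger share of the lumen in the smaller vessel, which is consistent with the explanation Dr. Price offered for the restenosis rate.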

The 19% target lesion failure rate selected as a performance goal in the trial was set somewhat arbitrarily. It wasn’t possible to randomize patients to a comparator arm because there are no approved stents for vessels less than 2.25 mm in diameter. The 19% figure was arrived at in discussion with the Food and Drug Administration on the basis of similarity to the performance goal used in clinical trials to gain approval of 2.25-mm, drug-eluting stents. Because the Onyx 2.0-mm-diameter trial was developed in collaboration with the FDA and the stent aced its primary endpoint and showed excellent clinical outcomes, Dr. Price anticipates the device will readily gain regulatory approval. In April 2017, the FDA approved the Resolute Onyx in sizes of 2.25- to 5.0-mm diameter.

The study met with an enthusiastic reception.

“That was terrific. It’s clearly an incredibly important unmet clinical need,” commented session cochair David R. Holmes Jr., MD, of the Mayo Clinic in Rochester, Minn.

Assuming the stent is approved, how should interventionalists put it into practice? he asked.

Dr. Price replied that, first, it’s important to step back and ask if percutaneous coronary intervention of a particular lesion in a very small coronary artery is clinically indicated. The stent itself is readily manipulatable. It is a thin-strut device constructed of a single strand of a cobalt alloy with enhanced radiopacity.

Investigators in the trial used the standard approach to dual antiplatelet therapy – at least 6 months, with 12 months preferable.

The 20% in-segment binary restenosis rate at 13 months provides a clear message for interventionalists, he continued. “What this tells me is that, while this is a very good stent, we can’t forget to treat the patient aggressively with medical therapy to stop the progression of prediabetes, diabetes, and small vessel disease in addition to treating obstructive lesions with a small stent.”

Asked if the lack of headroom in these extra-small arteries warrants liberal use of intraprocedural imaging to make sure the stent is perfectly apposed, Dr. Price replied that he doesn’t think so. He noted that intravascular ultrasound and optical coherence tomography were seldom used in the trial, yet the results were reassuringly excellent.

The study results were published simultaneously with Dr. Price’s presentation (JACC Cardiovasc Interv. 2017 May 17. doi: 10.1016/j.jcin.2017.05.004). The trial was sponsored by Medtronic. Dr. Price reported serving as a consultant and paid speaker on behalf of that company, as well as AstraZeneca, Boston Scientific, St. Jude Medical, and The Medicines Company.

Paris – The first multicenter, prospective trial of a drug-eluting stent designed specifically to treat lesions in coronary vessels less than 2.25 mm in diameter showed excellent outcomes, with a 1-year target lesion failure rate of 5% for the Resolute Onyx 2.0 mm diameter zotarolimus-eluting stent.

This result in the pivotal trial easily surpassed the prespecified performance goal of a 19% target lesion failure rate, Matthew J. Price, MD, reported at the annual congress of the European Association of Percutaneous Cardiovascular Interventions.

Hemodynamically significant lesions in such small vessels are “not uncommon, particularly in diabetic patients,” Dr. Price said in an interview. Indeed, 47% of patients in the clinical trial had diabetes.

At present, the only ways to treat coronary disease in arteries having a reference vessel diameter less than 2.25 mm are off-label placement of an oversized stent, with its attendant risk of complications; standard balloon angioplasty, which entails a particularly high restenosis rate in this setting; or medical management, the cardiologist noted.

He presented a multicenter, prospective, open-label, single-arm trial of 101 patients with documented ischemia-producing obstructions in coronary arteries having a reference vessel diameter less than 2.25 mm, a lesion length less than 27 mm, and evidence of ischemia attributable to the lesion, typically via fractional flow reserve. The mean diameter by quantitative coronary angiography was 1.91 mm.

The primary endpoint was the rate of target lesion failure at 12 months, a composite comprising cardiac death, target vessel MI, or clinically driven target lesion revascularization. This endpoint occurred in 5% of patients. There was a 3% target vessel MI rate and a 2% target lesion revascularization rate. There were no cardiac deaths.

“Importantly, the stent thrombosis rate in these patients with extremely small vessels was zero,” the cardiologist emphasized.

The mean angiographic in-stent late lumen loss at 13 months was 0.26 mm, which Dr. Price characterized as “quite good.” The in-segment binary angiographic restenosis rate was 20%.

“That’s slightly higher than you would expect to see in vessels with larger reference diameters. I think that’s because of the lack of headroom. You have a very small vessel, and, even with a very small stent, even a small amount of late loss will give you a larger percent diameter restenosis over time,” he explained.
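The "lack of headroom" argument can be made concrete with a little arithmetic. The sketch below is only illustrative: it expresses the reported mean late lumen loss as a share of the reference vessel diameter, which is a simplification of how percent diameter stenosis is measured angiographically. The 3.0-mm comparison vessel and the function name are assumptions for illustration, not values from the trial.

```python
def percent_diameter_loss(late_loss_mm: float, reference_diameter_mm: float) -> float:
    """Late lumen loss expressed as a percentage of the reference vessel diameter."""
    if reference_diameter_mm <= 0:
        raise ValueError("reference diameter must be positive")
    return 100.0 * late_loss_mm / reference_diameter_mm


LATE_LOSS_MM = 0.26      # mean in-stent late lumen loss reported at 13 months
TRIAL_VESSEL_MM = 1.91   # mean diameter by quantitative coronary angiography
LARGER_VESSEL_MM = 3.0   # hypothetical larger vessel, assumed for comparison only

small = percent_diameter_loss(LATE_LOSS_MM, TRIAL_VESSEL_MM)
large = percent_diameter_loss(LATE_LOSS_MM, LARGER_VESSEL_MM)

print(f"{TRIAL_VESSEL_MM} mm vessel: {small:.1f}% of diameter lost")
print(f"{LARGER_VESSEL_MM} mm vessel: {large:.1f}% of diameter lost")
```

Under these assumptions, the same 0.26 mm of late loss takes up roughly 14% of the diameter in the trial-sized vessel but only about 9% in the larger one, which is consistent with the explanation Dr. Price offered.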

The 19% target lesion failure rate selected as a performance goal in the trial was set somewhat arbitrarily. It wasn’t possible to randomize patients to a comparator arm because there are no approved stents for vessels less than 2.25 mm in diameter. The 19% figure was arrived at in discussion with the Food and Drug Administration on the basis of similarity to the performance goal used in clinical trials to gain approval of 2.25-mm drug-eluting stents. Because the Onyx 2.0-mm-diameter trial was developed in collaboration with the FDA, and because the stent aced its primary endpoint and showed excellent clinical outcomes, Dr. Price anticipates the device will readily gain regulatory approval. In April 2017, the FDA approved the Resolute Onyx in sizes of 2.25- to 5.0-mm diameter.

The study met with an enthusiastic reception.

“That was terrific. It’s clearly an incredibly important unmet clinical need,” commented session cochair David R. Holmes Jr., MD, of the Mayo Clinic in Rochester, Minn.

Assuming the stent is approved, how should interventionalists put it into practice? he asked.

Dr. Price replied that, first, it’s important to step back and ask if percutaneous coronary intervention of a particular lesion in a very small coronary artery is clinically indicated. The stent itself is readily manipulatable. It is a thin-strut device constructed of a single strand of a cobalt alloy with enhanced radiopacity.

Investigators in the trial used the standard approach to dual antiplatelet therapy – at least 6 months, with 12 months preferable.

The 20% in-segment binary restenosis rate at 13 months provides a clear message for interventionalists, he continued. “What this tells me is that, while this is a very good stent, we can’t forget to treat the patient aggressively with medical therapy to stop the progression of prediabetes, diabetes, and small vessel disease in addition to treating obstructive lesions with a small stent.”

Asked if the lack of headroom in these extra-small arteries warrants liberal use of intraprocedural imaging to make sure the stent is perfectly apposed, Dr. Price replied that he doesn’t think so. He noted that intravascular ultrasound and optical coherence tomography were seldom used in the trial, yet the results were reassuringly excellent.

The study results were published simultaneously with Dr. Price’s presentation (JACC Cardiovasc Interv. 2017 May 17. doi: 10.1016/j.jcin.2017.05.004). The trial was sponsored by Medtronic. Dr. Price reported serving as a consultant and paid speaker on behalf of that company, as well as AstraZeneca, Boston Scientific, St. Jude Medical, and The Medicines Company.

AT EUROPCR

Key clinical point:

Major finding: At 12 months’ follow-up, the key outcomes were a 3% rate of target vessel MI, a 2% rate of clinically driven target lesion revascularization, no stent thrombosis, and no cardiac deaths.

Data source: A prospective, multicenter, open-label trial in 101 patients who underwent percutaneous coronary intervention for coronary lesions with a reference vessel diameter of less than 2.25 mm.

Disclosures: The trial was sponsored by Medtronic. Dr. Price reported serving as a consultant to and paid speaker on behalf of that company as well as AstraZeneca, Boston Scientific, St. Jude Medical, and The Medicines Company.

Fighting Clostridium difficile actively with passive immunization

Infections resulting from Clostridium difficile are a major clinical challenge. In hematology and oncology, broad-spectrum antibiotics are essential for patients with profound neutropenia and infectious complications, but their widespread use is a major risk factor for C. difficile enteritis.

C. difficile enteritis occurs in 5%-20% of cancer patients.1 With the standard-of-care antibiotics, oral metronidazole or oral vancomycin, high cure rates are possible, but up to 25% of these infections recur. Recently, oral fidaxomicin was approved for treatment of C. difficile enteritis and was associated with high cure rates and, more importantly, with significantly lower recurrence rates.2

Bezlotoxumab, a fully humanized monoclonal antibody against C. difficile toxin B, has been shown by x-ray crystallography to neutralize toxin B by blocking its ability to bind to host cells.7 Most recently, this new therapeutic approach was investigated in humans.8

Wilcox et al. used pooled data from 2655 adults treated for primary or recurrent C. difficile enteritis in two double-blind, randomized, placebo-controlled phase III clinical trials (MODIFY I and MODIFY II). These industry-sponsored trials were conducted at 322 sites in 30 countries.

In one treatment group, patients received a single infusion of bezlotoxumab (781 patients) or placebo (773 patients) and one of the three oral standard-of-care C. difficile antibiotics. Importantly, the primary end point of this trial was recurrent infection within 12 weeks. About 28% of the patients in both the bezlotoxumab group and the placebo group previously had at least one episode of C. difficile enteritis. About 20% of the patients in both groups were immunocompromised.

Pooled data showed that recurrent infection was significantly lower (P less than 0.001) in the bezlotoxumab group (17%), compared with the placebo group (27%). The difference in recurrence rate (25% vs. 41%) was even more pronounced in patients with one or more episodes of recurrent C. difficile enteritis in the past 6 months. Furthermore, a benefit for bezlotoxumab was seen in immunocompromised patients, whose recurrence rates were 15% with bezlotoxumab, vs. 28% with placebo. After the 12 weeks of follow-up, the absolute difference in the Kaplan-Meier rates of recurrent infection was 13% (absolute rate, 21% in bezlotoxumab group vs. 34% in placebo group; P less than 0.001).

The results indicate that bezlotoxumab, which was approved in 2016 by the U.S. Food and Drug Administration, might improve the outcome of patients with C. difficile enteritis. However, bezlotoxumab is not a “magic bullet.” The number needed to treat to prevent one recurrent episode of C. difficile enteritis is 10.

It is conceivable that bezlotoxumab may find its role in high-risk patients – those older than 65 years or patients with recurrent C. difficile enteritis – since the number needed to treat is only 6 in these subgroups.8
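The number-needed-to-treat figures can be checked against the recurrence rates quoted in the pooled analysis. The following is a minimal sketch, assuming the conventional definition of NNT as the reciprocal of the absolute risk reduction; the function and variable names are illustrative, and the inputs are the rounded percentages given in the text, so the results are approximate.

```python
def number_needed_to_treat(control_rate_pct: float, treated_rate_pct: float) -> float:
    """NNT = 100 / absolute risk reduction, with both rates given in percent."""
    arr = control_rate_pct - treated_rate_pct
    if arr <= 0:
        raise ValueError("no absolute risk reduction; NNT is undefined")
    return 100.0 / arr


# (placebo %, bezlotoxumab %) recurrence rates as quoted in the article
recurrence_rates = {
    "all patients": (27, 17),
    "prior recurrent episode": (41, 25),
    "immunocompromised": (28, 15),
}

nnt_by_group = {
    group: number_needed_to_treat(placebo, antibody)
    for group, (placebo, antibody) in recurrence_rates.items()
}

for group, nnt in nnt_by_group.items():
    print(f"{group}: NNT = {nnt:.1f}")
```

With these inputs, the overall comparison gives an NNT of 10, and the subgroup with a prior recurrent episode gives about 6, in line with the figures cited. The rates for patients older than 65 years are not quoted in the text, so that subgroup is not included here.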

This new agent could be an important treatment option for our high-risk patients in hematology. However, more studies concerning costs and real-life efficacy are needed.

The new approach of passive immunization for prevention of recurrent C. difficile enteritis shows the importance of toxin B – not only the bacterium per se – in the pathogenesis and virulence of C. difficile. This could mean that we have to reconsider our view of the role of antibiotics against C. difficile. However, bezlotoxumab does not affect the efficacy of standard-of-care antibiotics, since the initial cure rates were 80% in both the antibody and the placebo groups.8 Toxin B is not detectable in stool samples between days 4 and 10 of standard-of-care antibiotic treatment. Afterward, however, levels increase again.9 Most of the patients had received bezlotoxumab 3 or more days after they began standard-of-care antibiotic treatment – in the time period when toxin B is undetectable in stool – which underlines the importance of toxin B in the pathogenesis of recurrent C. difficile enteritis.8