
Friends Don't Let Friends Ignore Skin Problems

This 58-year-old woman was unaware there was a problem with her neck skin until friends took a picture and showed it to her. She was surprised and distressed, thinking the changes were new and therefore representative of serious disease.

She denies having any associated symptoms but does admit to a great deal of sun exposure over the years. Her history is significant for a basal cell carcinoma, removed from her chest many years ago. She also has a history of smoking and early COPD.

EXAMINATION

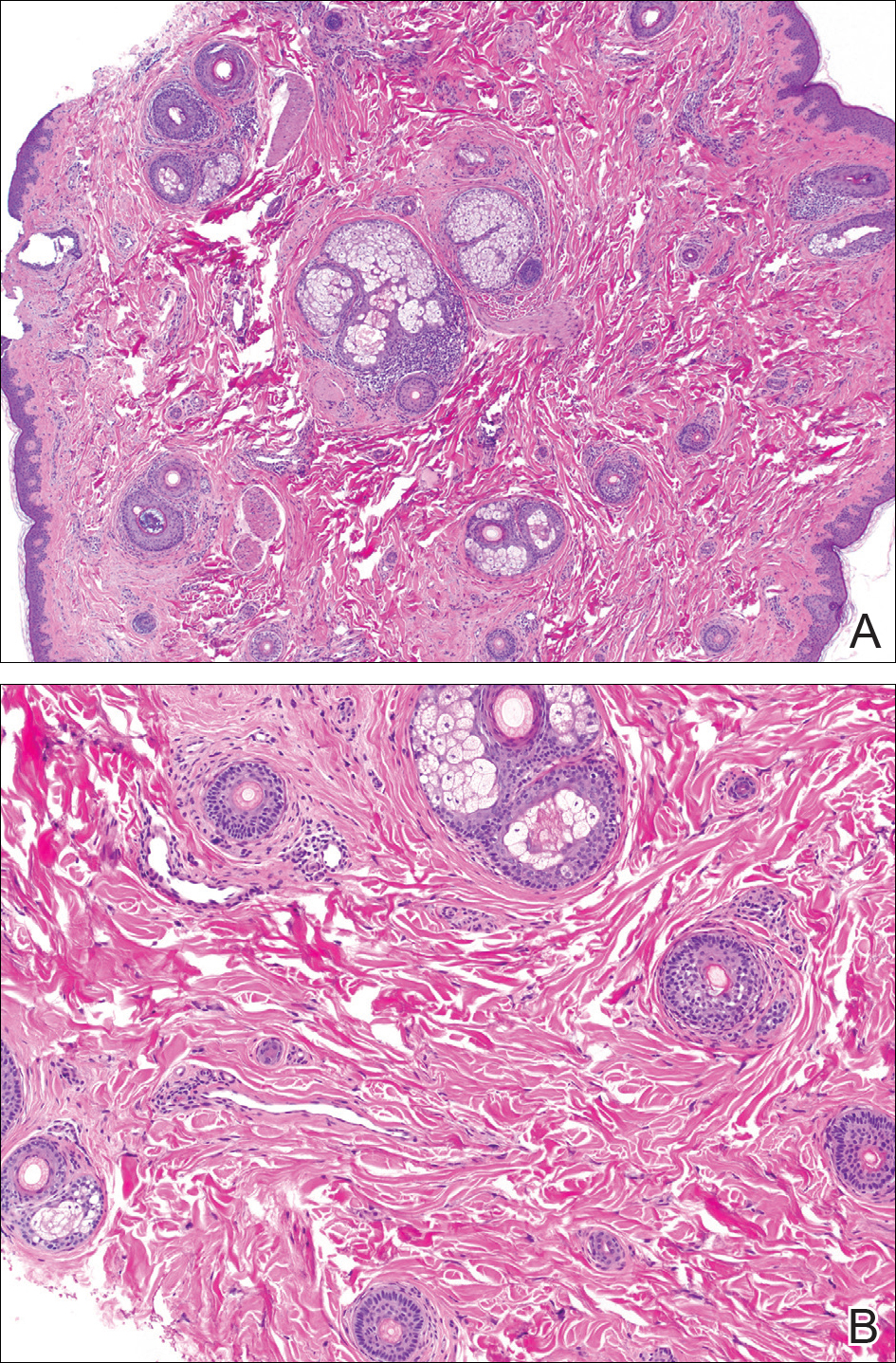

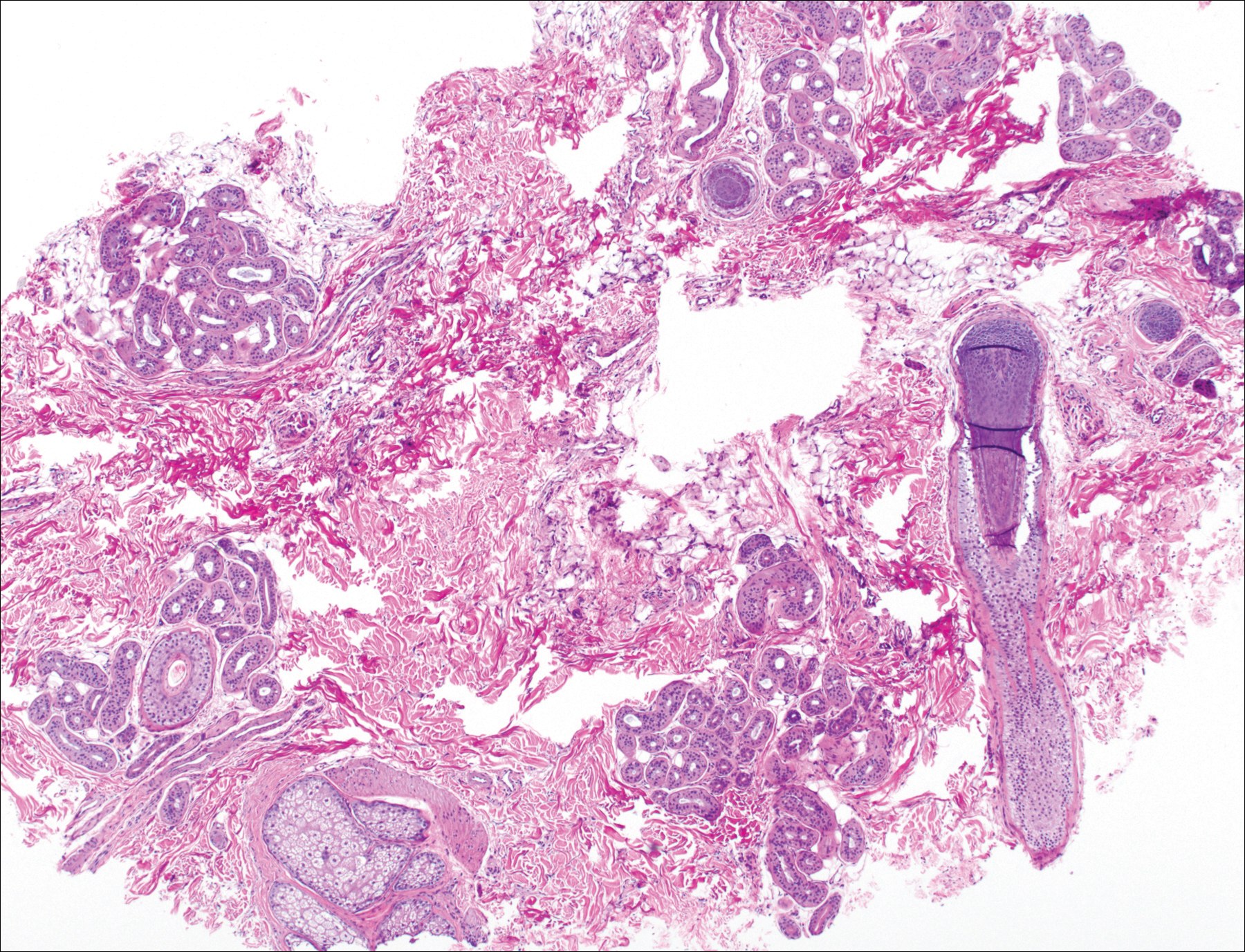

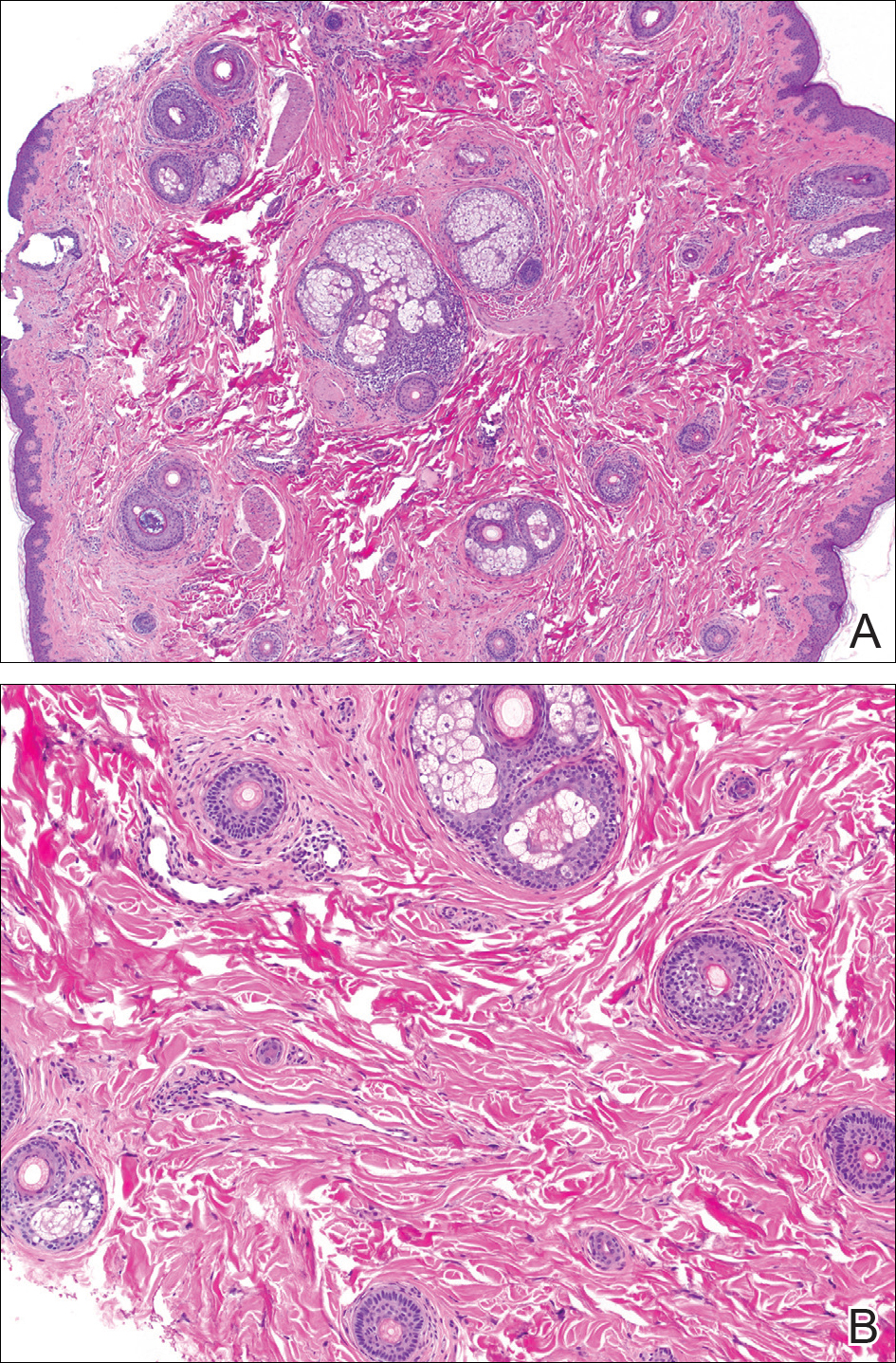

A solid sheet of fine, blanchable telangiectasias spreads across the patient’s upper anterior neck, extending down onto her chest. It spares the skin directly under her chin, leaving an unaffected white oval area.

Elsewhere, the patient has a great deal of dermatoheliosis superimposed on her type II skin, including solar lentigines, weathering, and focal solar elastosis.

What is the diagnosis?

DISCUSSION

This particular pattern of mottled hyper- and hypopigmented skin is a result of overexposure to UV light. The name for this common problem—seen far more commonly in women than in men—is poikiloderma of Civatte (PC). This case is typical in that the changes manifested and progressed so slowly that the patient didn’t notice.

PC can manifest with combinations of red, brown, and yellow discoloration around the neck. In this case, the dominant color was red. The oval area of spared skin was created by the shade of the patient’s chin.

Similar changes can be seen with other conditions, such as poikiloderma vasculare atrophicans, a manifestation of large plaque parapsoriasis. However, that condition typically affects areas below the waist and does not show areas of sparing.

Treatment with lasers and peels has been attempted, with mixed success. Because the condition is benign, this patient chose not to pursue treatment.

TAKE-HOME LEARNING POINTS

- Poikiloderma of Civatte (PC) is a permanent skin change caused by overexposure to the sun or another UV source; it is more common in women than men.

- PC manifests with mottled hyper- or hypopigmented patches of skin on the anterior neck and upper chest, which develop gradually over the course of decades. Many patients also have sheets of telangiectasias covering the affected area.

- A distinct area of sparing (usually oval) is typically seen on the upper anterior neck, due to the chin’s shading of this area.

- Laser treatment has been somewhat successful in lightening the affected skin.

Blinatumomab granted full approval to treat rel/ref BCP-ALL

The US Food and Drug Administration (FDA) has approved the supplemental biologics license application (sBLA) for blinatumomab (Blincyto®).

The aim of the sBLA was to expand the indication for blinatumomab to include all patients with relapsed or refractory B-cell precursor acute lymphoblastic leukemia (BCP-ALL) and to convert blinatumomab’s accelerated approval to a full approval.

Blinatumomab is a bispecific, CD19-directed, CD3 T-cell engager (BiTE®) antibody construct that binds to CD19 expressed on the surface of cells of B-lineage origin and CD3 expressed on the surface of T cells.

In 2014, the FDA granted blinatumomab accelerated approval to treat adults with Philadelphia chromosome-negative (Ph-) relapsed or refractory BCP-ALL.

In 2016, the FDA granted the therapy accelerated approval for pediatric patients with Ph- relapsed/refractory BCP-ALL.

Now, the FDA has granted blinatumomab full approval for pediatric and adult patients with Ph- or Ph+ relapsed/refractory BCP-ALL.

The FDA also recently approved the sBLA for blinatumomab to be infused over 7 days with preservative, adding to the previously approved administration options for infusion over 24 and 48 hours (preservative-free).

The blinatumomab intravenous bag for a 7-day infusion contains Bacteriostatic 0.9% Sodium Chloride, USP (containing 0.9% benzyl alcohol), which permits continuous intravenous infusion of blinatumomab at 28 mcg/day or 15 mcg/m²/day for a total of 7 days.

The 7-day infusion is not recommended for patients weighing less than 22 kg due to the risk of serious and sometimes fatal adverse events associated with benzyl alcohol in pediatric patients. See the full prescribing information for details.
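The daily rates quoted above determine how much drug a single 7-day bag delivers. The Python sketch below only reproduces that multiplication and the 22 kg cutoff stated in the text; the function names and the example body surface area are hypothetical, and the sketch ignores everything else in the labeled dosing schedule, so it illustrates the arithmetic rather than serving as a dosing tool.

```python
# Arithmetic illustration only; rates and the 22 kg cutoff are from the text,
# function names and the example BSA are hypothetical.

FIXED_RATE_MCG_PER_DAY = 28.0       # flat daily rate quoted in the text
BSA_RATE_MCG_PER_M2_PER_DAY = 15.0  # body-surface-area-based daily rate
INFUSION_DAYS = 7                   # duration of the preserved (benzyl alcohol) bag
MIN_WEIGHT_KG = 22.0                # 7-day bag not recommended below this weight


def seven_day_total_fixed() -> float:
    """Total mcg delivered over 7 days at the flat daily rate."""
    return FIXED_RATE_MCG_PER_DAY * INFUSION_DAYS


def seven_day_total_bsa(bsa_m2: float) -> float:
    """Total mcg over 7 days at the BSA-based rate for a given BSA in m^2."""
    if bsa_m2 <= 0:
        raise ValueError("body surface area must be positive")
    return BSA_RATE_MCG_PER_M2_PER_DAY * bsa_m2 * INFUSION_DAYS


def meets_weight_cutoff(weight_kg: float) -> bool:
    """False when the patient weighs less than 22 kg (benzyl alcohol risk)."""
    return weight_kg >= MIN_WEIGHT_KG


print(seven_day_total_fixed())   # 196.0
print(seven_day_total_bsa(1.0))  # 105.0 for a hypothetical 1.0 m^2 patient
print(meets_weight_cutoff(20))   # False
```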

The prescribing information for blinatumomab includes a boxed warning detailing the risk of cytokine release syndrome and neurologic toxicities. Blinatumomab is also under a Risk Evaluation and Mitigation Strategy program in the US intended to inform healthcare providers about these risks.

Blinatumomab is marketed by Amgen.

Trial results

With this sBLA, Amgen sought to make blinatumomab available as a treatment for patients with Ph+ relapsed/refractory BCP-ALL (as well as Ph-).

To this end, the application included data from the ALCANTARA study, which were published in the Journal of Clinical Oncology.

In this trial, researchers evaluated blinatumomab in adults with Ph+ relapsed/refractory BCP-ALL who had failed treatment with at least 1 tyrosine kinase inhibitor.

Thirty-six percent of patients achieved a complete response or complete response with partial hematologic recovery within the first 2 cycles of blinatumomab treatment. Of these patients, 88% were minimal residual disease-negative.
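Because the 88% figure applies only to responders, the share of all treated patients who were MRD-negative responders is the product of the two percentages. A quick Python check, assuming both inputs are the rounded percentages quoted above (so the result is approximate):

```python
# Both inputs are rounded percentages from the text, so the result is approximate.
cr_crh_rate = 0.36                # CR or CRh within the first 2 cycles, all treated patients
mrd_negative_if_responder = 0.88  # MRD-negative fraction among those responders

# Fraction of all treated patients who responded AND were MRD-negative.
mrd_negative_responders = cr_crh_rate * mrd_negative_if_responder
print(f"{mrd_negative_responders:.0%}")  # 32%
```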

The most frequent adverse events (AEs) in this trial were pyrexia (58%), neurologic events (47%), febrile neutropenia (40%), and headache (31%). Three patients had grade 1/2 cytokine release syndrome, and 3 patients had grade 3 neurologic AEs.

The sBLA also included overall survival (OS) data from the phase 3 TOWER trial, which was intended to support the conversion of blinatumomab’s accelerated approval to a full approval.

Results from the TOWER trial were published in NEJM.

In this study, researchers compared blinatumomab to standard of care (SOC) chemotherapy (4 different regimens) in adults with Ph- relapsed/refractory BCP-ALL.

Blinatumomab produced higher response rates and nearly doubled median OS compared with SOC. The median OS was 7.7 months in the blinatumomab arm and 4 months in the SOC arm. The hazard ratio for death was 0.71 (P=0.012).
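"Nearly doubled" refers to the ratio of the two medians, while the hazard ratio summarizes the relative hazard of death across follow-up; the two are different measures. The Python sketch below only restates both from the figures quoted above and is not a reanalysis of the trial:

```python
median_os_blinatumomab = 7.7  # months
median_os_soc = 4.0           # months
hazard_ratio = 0.71           # death, blinatumomab vs SOC

# Ratio of medians: the basis for "nearly doubled".
median_ratio = median_os_blinatumomab / median_os_soc

# 1 - HR expresses the hazard ratio as a relative reduction in the hazard of death.
hazard_reduction_pct = (1 - hazard_ratio) * 100

print(f"{median_ratio:.1f}")           # 1.9
print(f"{hazard_reduction_pct:.0f}%")  # 29%
```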

The incidence of grade 3 or higher AEs was higher in the SOC arm, but the incidence of serious AEs was higher in the blinatumomab arm.

ODAC recommends approval of CTL019 in rel/ref ALL

The US Food and Drug Administration’s (FDA) Oncologic Drugs Advisory Committee (ODAC) has unanimously recommended approval for the chimeric antigen receptor (CAR) T-cell therapy CTL019 (tisagenlecleucel).

The committee voted 10 to 0 in favor of approving CTL019 for the treatment of pediatric and young adult patients (ages 3 to 25) with relapsed or refractory B-cell acute lymphoblastic leukemia (ALL).

The FDA will consider this vote as it reviews the biologics license application (BLA) for CTL019, but the agency is not obligated to follow the ODAC’s recommendation.

The BLA for CTL019 is supported by results from 3 trials.

These include a pilot study, which was presented at the 2015 ASH Annual Meeting; the phase 2 ENSIGN trial, which was presented at the 2016 ASH Annual Meeting; and the phase 2 ELIANA study, which was recently presented at the 22nd Congress of the European Hematology Association (EHA).

ELIANA enrolled 88 patients with relapsed/refractory B-cell ALL, and 68 of them received CTL019.

Nine patients did not receive CTL019 due to death or adverse events, 7 patients were affected by manufacturing failures, and 4 patients were pending infusion at last follow-up.
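The enrollment counts above reconcile exactly. A minimal Python bookkeeping check using only the numbers in the text (the category labels are paraphrased):

```python
enrolled = 88
infused = 68
not_infused = {
    "death or adverse events": 9,
    "manufacturing failure": 7,
    "pending infusion at last follow-up": 4,
}

# Every enrolled patient is either infused or in one not-infused category.
assert infused + sum(not_infused.values()) == enrolled

print(enrolled - infused)  # 20
```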

Most of the infused patients (n=65) received lymphodepleting chemotherapy before a single dose of CTL019. The median dose was 3.0 × 10⁶ (range, 0.2-5.4 × 10⁶) transduced CTL019 cells/kg.
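The CTL019 dose is expressed per kilogram of body weight (median 3.0 × 10^6 transduced cells/kg), so the absolute number of cells scales with weight. The Python sketch below converts the reported median and range for a hypothetical 30 kg patient; the weight is an illustrative assumption, not a trial value.

```python
MEDIAN_DOSE_CELLS_PER_KG = 3.0e6
DOSE_RANGE_CELLS_PER_KG = (0.2e6, 5.4e6)
weight_kg = 30.0  # hypothetical patient weight, not a trial value

# Absolute transduced-cell counts for this hypothetical weight.
total_cells = MEDIAN_DOSE_CELLS_PER_KG * weight_kg
low, high = (dose * weight_kg for dose in DOSE_RANGE_CELLS_PER_KG)

print(f"{total_cells:.1e}")        # 9.0e+07
print(f"{low:.1e} to {high:.1e}")  # 6.0e+06 to 1.6e+08
```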

Sixty-three patients were evaluable for efficacy.

The overall response rate, defined as complete response (CR) plus CR with incomplete hematologic recovery (CRi), was 83% (52/63). All patients who achieved CR or CRi were minimal residual disease-negative in the bone marrow.
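The reported 83% is the rounded ratio of responders to efficacy-evaluable patients. In Python:

```python
responders = 52  # CR + CRi
evaluable = 63   # efficacy-evaluable patients

orr = responders / evaluable
print(f"{orr:.1%}")      # 82.5%
print(round(orr * 100))  # 83, the figure reported in the text
```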

Sixty-eight patients were evaluated for safety.

Serious adverse events occurred in 69% of patients. These included life-threatening cytokine release syndrome (CRS) and hemophagocytic lymphohistiocytosis, neurological events that occurred with CRS or after CRS was resolved, coagulopathies with CRS, and life-threatening infections.

Seventy-eight percent of patients had CRS—21% with grade 3 and 27% with grade 4 CRS. There were no deaths from CRS.

Forty-four percent of patients had neurological toxicities—15% grade 3 or higher. These included encephalopathy, delirium, hallucinations, somnolence, cognitive disorder, seizure, depressed level of consciousness, mental status changes, dysphagia, muscular weakness, and dysarthria.

Severe infectious complications occurred in 26% of patients, and 3 patients died of such complications.

Eleven patients died after receiving CTL019: 7 due to ALL, 1 from cerebral hemorrhage, 1 from encephalitis, 1 from a respiratory tract infection, and 1 from systemic mycosis.

Benefits of gemtuzumab ozogamicin outweigh risks, ODAC says

The US Food and Drug Administration’s (FDA) Oncologic Drugs Advisory Committee (ODAC) has announced a positive opinion of gemtuzumab ozogamicin (GO, Mylotarg), a drug that was withdrawn from the US market in 2010.

In a vote of 6 to 1, the ODAC concluded that trial results suggest a favorable risk-benefit profile for low-dose GO given in combination with standard chemotherapy to patients with newly diagnosed, CD33-positive acute myeloid leukemia (AML).

The ODAC’s role is to provide recommendations to the FDA. The FDA is expected to make a decision on the biologics license application (BLA) for GO by September 2017.

With this BLA, Pfizer is seeking approval for GO in 2 indications.

One is for GO in combination with standard chemotherapy (daunorubicin and cytarabine) for the treatment of previously untreated, de novo, CD33-positive AML.

The other is for GO monotherapy for CD33-positive AML patients in first relapse who are 60 years of age or older and who are not considered candidates for other cytotoxic chemotherapy.

GO is an investigational antibody-drug conjugate that consists of the cytotoxic agent calicheamicin attached to a monoclonal antibody targeting CD33.

GO was originally approved under the FDA’s accelerated approval program in 2000 for use as a single agent in patients with CD33-positive AML who had experienced their first relapse and were 60 years of age or older.

In 2010, Pfizer voluntarily withdrew GO from the US market due to the results of a confirmatory phase 3 trial, SWOG S0106.

This trial showed no clinical benefit for patients who received GO plus daunorubicin and cytarabine compared with patients who received daunorubicin and cytarabine alone.

In addition, the rate of fatal, treatment-related toxicity was significantly higher in the GO arm of the study.

However, results of subsequent trials suggested that a lower dose of GO was safer.

The current BLA for GO includes data from such a study, known as ALFA-0701.

The ODAC voted that results from ALFA-0701 demonstrated a favorable risk-benefit profile for GO when the drug was given at 3 mg/m² on days 1, 4, and 7 in combination with daunorubicin and cytarabine.
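The fractionated schedule adds up to a cumulative dose per square meter of body surface area. The Python sketch below tallies it from the values in the text; it works only in mg/m² and deliberately does not convert to an absolute dose, since any per-dose limits or adjustments in the eventual labeling are not described here.

```python
DOSE_MG_PER_M2 = 3.0     # per-dose amount from ALFA-0701, as described in the text
DOSING_DAYS = (1, 4, 7)  # fractionated schedule

# Map each dosing day to its dose, then sum for the per-course total.
schedule = {day: DOSE_MG_PER_M2 for day in DOSING_DAYS}
cumulative_mg_per_m2 = sum(schedule.values())

print(schedule)              # {1: 3.0, 4: 3.0, 7: 3.0}
print(cumulative_mg_per_m2)  # 9.0
```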

The BLA for GO also includes Pfizer-sponsored studies from the original new drug application for GO and a meta-analysis of patients in 5 randomized, phase 3 studies (including ALFA-0701). These studies span 10 years of research and include more than 4300 patients.

The US Food and Drug Administration’s (FDA) Oncologic Drug Advisory Committee (ODAC) has announced a positive opinion of gemtuzumab ozogamicin (GO, Mylotarg), a drug that was withdrawn from the US market in 2010.

In a vote of 6 to 1, the ODAC concluded that trial results suggest a favorable risk-benefit profile for low-dose GO given in combination with standard chemotherapy to patients with newly diagnosed, CD33-positive acute myeloid leukemia (AML).

The ODAC’s role is to provide recommendations to the FDA. The FDA is expected to make a decision on the biologics license application (BLA) for GO by September 2017.

With this BLA, Pfizer is seeking approval for GO in 2 indications.

One is for GO in combination with standard chemotherapy (daunorubicin and cytarabine) for the treatment of previously untreated, de novo, CD33-positive AML.

The other is for GO monotherapy for CD33-positive AML patients in first relapse who are 60 years of age or older and who are not considered candidates for other cytotoxic chemotherapy.

GO is an investigational antibody-drug conjugate that consists of the cytotoxic agent calicheamicin attached to a monoclonal antibody targeting CD33.

GO was originally approved under the FDA’s accelerated approval program in 2000 for use as a single agent in patients with CD33-positive AML who had experienced their first relapse and were 60 years of age or older.

In 2010, Pfizer voluntarily withdrew GO from the US market due to the results of a confirmatory phase 3 trial, SWOG S0106.

This trial showed there was no clinical benefit for patients who received GO plus daunorubicin and cytarabine over patients who received only daunorubicin and cytarabine.

In addition, the rate of fatal, treatment-related toxicity was significantly higher in the GO arm of the study.

However, results of subsequent trials suggested that a lower dose of GO was safer.

The current BLA for GO includes data from such a study, known as ALFA-0701.

The ODAC voted that results from ALFA-0701 demonstrated a favorable risk-benefit profile for GO when the drug was given at 3 mg/m² on days 1, 4, and 7 in combination with daunorubicin and cytarabine.

The BLA for GO also includes Pfizer-sponsored studies from the original new drug application for GO and a meta-analysis of patients in 5 randomized, phase 3 studies (including ALFA-0701). These studies span 10 years of research and include more than 4300 patients. ![]()

The US Food and Drug Administration’s (FDA) Oncologic Drug Advisory Committee (ODAC) has announced a positive opinion of gemtuzumab ozogamicin (GO, Mylotarg), a drug that was withdrawn from the US market in 2010.

In a vote of 6 to 1, the ODAC concluded that trial results suggest a favorable risk-benefit profile for low-dose GO given in combination with standard chemotherapy to patients with newly diagnosed, CD33-positive acute myeloid leukemia (AML).

The ODAC’s role is to provide recommendations to the FDA. The FDA is expected to make a decision on the biologics license application (BLA) for GO by September 2017.

With this BLA, Pfizer is seeking approval for GO in 2 indications.

One is for GO in combination with standard chemotherapy (daunorubicin and cytarabine) for the treatment of previously untreated, de novo, CD33-positive AML.

The other is for GO monotherapy for CD33-positive AML patients in first relapse who are 60 years of age or older and who are not considered candidates for other cytotoxic chemotherapy.

GO is an investigational antibody-drug conjugate that consists of the cytotoxic agent calicheamicin attached to a monoclonal antibody targeting CD33.

GO was originally approved under the FDA’s accelerated approval program in 2000 for use as a single agent in patients with CD33-positive AML who had experienced their first relapse and were 60 years of age or older.

In 2010, Pfizer voluntarily withdrew GO from the US market due to the results of a confirmatory phase 3 trial, SWOG S0106.

This trial showed no clinical benefit for patients who received GO plus daunorubicin and cytarabine compared with patients who received daunorubicin and cytarabine alone.

In addition, the rate of fatal, treatment-related toxicity was significantly higher in the GO arm of the study.

However, results of subsequent trials suggested that a lower dose of GO was safer.

The current BLA for GO includes data from such a study, known as ALFA-0701.

The ODAC voted that results from ALFA-0701 demonstrated a favorable risk-benefit profile for GO when the drug was given at 3 mg/m² on days 1, 4, and 7 in combination with daunorubicin and cytarabine.

The BLA for GO also includes Pfizer-sponsored studies from the original new drug application for GO and a meta-analysis of patients in 5 randomized, phase 3 studies (including ALFA-0701). These studies span 10 years of research and include more than 4300 patients.

Brown patch on infant

The FP diagnosed urticaria pigmentosa. If urticaria pigmentosa is suspected, it is helpful to stroke the lesion with a fingernail or the wooden end of a cotton-tipped applicator. This induces erythema and a wheal that is confined to the stroked site within the lesion—a positive Darier sign.

Urticaria pigmentosa is a form of mast cell activation syndrome (MCAS), in which there are too many mast cells in the skin. More serious forms of MCAS in adults include systemic mastocytosis and cutaneous mastocytosis. Urticaria pigmentosa is a self-limiting problem in young children that rarely causes sufficient symptoms to warrant treatment.

In this case, the FP assured the mother that this was not dangerous and would go away over time on its own. The FP wrote down the name of the condition for the mother and explained to her that if she noticed any changes or new symptoms, she should return for further evaluation and treatment. The mother was satisfied with this explanation.

Photos and text for Photo Rounds Friday courtesy of Richard P. Usatine, MD. This case was adapted from: Usatine R. Urticaria and angioedema. In: Usatine R, Smith M, Mayeaux EJ, et al, eds. Color Atlas of Family Medicine. 2nd ed. New York, NY: McGraw-Hill; 2013: 863-870.

To learn more about the Color Atlas of Family Medicine, see: www.amazon.com/Color-Family-Medicine-Richard-Usatine/dp/0071769641/

You can now get the second edition of the Color Atlas of Family Medicine as an app by clicking on this link: usatinemedia.com

All FDA panel members go thumbs up for CTL019 in relapsed/refractory childhood ALL

The answer to the billion-dollar question – Does the chimeric antigen receptor T-cell (CAR T) construct CTL019 (tisagenlecleucel-T) have a favorable risk-benefit profile for the treatment of children and young adults with relapsed/refractory B-cell precursor acute lymphoblastic leukemia? – was a unanimous “yes” at a July 12 meeting of the Food and Drug Administration’s Oncologic Drugs Advisory Committee.

“This is the most exciting thing I have seen in my lifetime, and probably since the introduction of ‘multiagent total cancer care,’ as it was called then, for treatment of childhood leukemia,” remarked Timothy P. Cripe, MD, PhD, from Nationwide Children’s Hospital in Columbus, Ohio, and a temporary voting member of the ODAC.

Catherine M. Bollard, MD, MBChB, from the Children’s National Medical Center in Washington, also a temporary ODAC member, said that she voted “yes” because “this is a very poor-risk patient population, this is an unmet need in the pediatric population, and as you saw in the data [presented to ODAC] today, the clinical responses are remarkable. I think Novartis [the maker of CTL019] has done a great job putting together a plan for mitigating risk going forward.”

CTL019 was shown in a pivotal phase 2 clinical trial to induce an overall remission rate of 83% in children and young adults with relapsed/refractory ALL for whom at least two prior lines of therapy had failed. Based on these results, the FDA accepted a biologics license application for the agent from Novartis.

At the meeting, panel members initially seemed favorably disposed toward recommending approval but heard concerns from FDA scientists about the potential for severe or fatal adverse events such as cytokine release syndrome (CRS); the possible generation of replication-competent retrovirus (RCR); and the potential for secondary malignancies from insertional mutagenesis, the incorporation of portions of the lentiviral vector into the patient’s genome.

In his opening remarks, Wilson W. Bryan, MD, from the FDA’s Office of Tissue and Advanced Therapies in the Center for Biologics Evaluation and Research, commented that “the clinical development of tisagenlecleucel suggests that this is a life-saving product.”

He went on, however, to frame the FDA’s concerns: “Clinical trials are not always a good predictor of the effectiveness and safety of a marketed product,” he said. “In particular, we are concerned that the same benefit and safety seen in clinical trials may not carry over to routine clinical use.”

The hearing was intended to focus on manufacturing issues related to product quality, including replicability of the product for commercial use, and on safety issues such as prevention of CRS and neurotoxicities.

“We are also concerned about the hypothetical risk of secondary malignancies. Therefore, we are asking for the committee’s recommendations regarding the nature and duration of follow-up of patients who would receive this product,” Dr. Bryan said.

“CTL019 is a living drug, which demonstrates activity after a single infusion,” said Samit Hirawat, MD, head of oncology global development for Novartis.

But the nature of CTL019 as a living drug also means that it is subject to variations in the ability of autologous T cells harvested via leukapheresis to be infected with the lentiviral vector and expanded into a population of CAR T cells large enough to have therapeutic value, said Xiaobin Victor Lu, PhD, a chemistry, manufacturing, and controls reviewer for the FDA.

Mitigation plan

Novartis’ proposed plan includes specific, long-term steps for mitigating the risk of CRS and of neurologic events such as cerebral edema. Cerebral edema had led the FDA to place a clinical hold on the phase 2 ROCKET trial of a different CAR T-cell construct.

Among the proposed elements of the mitigation plan are a 15-year minimum pharmacovigilance program and long-term safety follow-up for adverse events related to the therapy, efficacy, immunogenicity, transgene persistence of CD19 CAR, and the incidence of second malignancies possibly related to insertional mutagenesis.

Novartis also will train treatment center staff on processes for cell collection, cryopreservation, transport, chain of identity, safety management, and logistics for handling the CAR T-cell product. The company proposes to provide on-site training of personnel on CRS and neurotoxicity risk and management, as well as to offer information to patients and caregivers about the signs and symptoms of adverse events of concern.

Dr. Cripe expressed concern that Novartis’ proposal to initially limit the mitigation plan to 30 or 35 treatment sites would create problems of access and economic disparities among patients, and could cause inequities among treatment centers even within the same city.

David Lebwohl, MD, head of the CAR T global program for Novartis, said that the planned number of sites for the mitigation program would be expanded after 6 to 12 months if the CAR T construct receives final approval and clinical implementation goes well.

There was nearly unanimous agreement among the panel members that the planned 15-year follow-up and other mitigation measures would be adequate for detecting serious short- and long-term consequences of CAR T-cell therapy.

Patient/advocate perspective

In the public comment section of the proceedings, panel members were urged to vote in favor of CTL019 by parents of children with ALL, including Don McMahon, whose son Connor received the therapy after multiple relapses, and Tom Whitehead, father of Emily Whitehead, the first patient to receive CAR T cells for ALL.

Both children are alive and doing well.

CTL019 is produced by Novartis.

The unanimous recommendation by the Food and Drug Administration's Oncologic Drugs Advisory Committee means that the FDA is likely to approve CTL019 (tisagenlecleucel-T), and that approval may come quickly, possibly before the end of 2017. The recommendation was based on compelling data showing that 83% of children and young adults with refractory or relapsed acute lymphocytic leukemia (ALL) achieved remission with this therapy. This is exciting news for ALL patients as well as for the cell and gene therapy community. Still to be determined are the labeling for CTL019, the cost of the therapy, and whether all patients who might benefit from it will have the coverage to access it.

While the response rates in patients treated in the trials presented to the FDA are very encouraging, there are also concerns about the risks of cytokine release syndrome and neurotoxicity, which can affect up to half of treated patients. As a result, Novartis, the manufacturer of CTL019, has proposed an extensive mitigation strategy and education process for the cell therapy centers that will offer the therapy. Initially, this is likely to be limited to around 30 centers geographically distributed throughout the United States, with gradual rollout to more centers as experience with CTL019 grows.

Another issue for the centers will be operationalizing a new paradigm in which CTL019 is offered as a standard of care rather than in the context of a research study. Most of the initial pediatric centers will likely provide CTL019 within their transplant infrastructure, since procurement, initial processing, and infusion of cells will use their cell collection and processing facilities. The Foundation for the Accreditation of Cellular Therapy (FACT) has also anticipated this approval by publishing new standards for immune effector cells earlier this year to promote quality practice in immune effector cell administration.

One other question is whether CTL019 will be transplant enabling or transplant replacing. While the initial response rates are very high and some well-publicized patients remain in remission more than 5 years after CTL019 without other therapy, other responders proceeded to transplant, and there is also a significant relapse rate. It is therefore an open question whether treating physicians will be comfortable observing patients who attain remission after this therapy or will still recommend transplant, because there is not yet enough follow-up on this product to know what the long-term cure rate will be.

Another CAR T-cell product is scheduled to come before an FDA advisory committee in October. The indication for KTE-C19 (axicabtagene ciloleucel) from Kite is relapsed/refractory diffuse large B-cell lymphoma (DLBCL), a much bigger indication with a potentially much larger number of patients. The response rates for KTE-C19 in DLBCL (and indeed for CTL019 in DLBCL) are not as high as those for CTL019 in ALL, and follow-up time is shorter, so it is not yet clear how many patients will have sustained long-term responses. Nevertheless, the response rate in patients who have failed all other therapies is high enough that this product will also likely be approved.

Helen Heslop, MD, is the Dan L. Duncan Chair and Professor of Medicine and Pediatrics at Baylor College of Medicine, Houston. She also is the Director of the Center for Cell and Gene Therapy at Baylor College of Medicine, Houston Methodist Hospital and Texas Children's Hospital. Dr. Heslop is a member of the editorial advisory board of Hematology News.

The unanimous recommendation by the Food and Drug Administration's Oncologic Drugs Advisory Committee means that the FDA is likely to approve CTL019 (tisagenlecleucel-T), and that approval may come quickly, possibly before the end of 2017. This approval was based on compelling data showing that 83% of children and young adults with refractory or relapsed acute lymphocytic leukemia (ALL) achieved remission with this therapy. This is exciting news for ALL patients as well as for the cell and gene therapy community. What remains to be determined are the labelling for CTL019, the cost of the therapy, and whether all patients who might benefit from this therapy will have the coverage to be able to access it.

While the response rates in patients treated in the trials presented to the FDA are very encouraging there are also concerns with the risks for cytokine release syndrome and neurotoxicity which can affect up to half of treated patients. As a result, Novartis, the manufacturer of CTL019, has proposed an extensive mitigation strategy and education process for the cell therapy centers that will offer the therapy. Initially, this is likely to be limited to around 30 centers that will be geographically distributed throughout the United States with gradual roll out to more centers as there is more experience with the use of CTL019.

Another issue for the centers is going to be operationalizing a new paradigm where CTL019 will be offered as a standard of care rather than in the context of a research study. Most of the initial pediatric centers will likely provide CTL019, within their transplant infrastructure since procurement, initial processing and infusion of cells will utilize their cell processing and collection facilities. The Foundation for Accreditation of Cell Therapy (FACT) has also anticipated this approval by publishing new standards for Immune Effectors earlier this year to promote quality practice in immune effector cell administration.

One other question is whether CTL019 will be transplant enabling or transplant replacing. While the initial response rates are very high and there are some well publicized patients who remain in remission over 5 years after CTL019 without other therapy, other responders proceeded to transplant and there is also a significant relapse rate. It is therefore an open question whether treating physicians will be happy to watch patients who attain remission after this therapy or whether they will still recommend transplant because there is not yet enough follow up on this product to know what the long-term cure rate is going to be.

Another CAR T-cell product is scheduled to come before an FDA advisory committee in October. The indication for KTE-C19 (axicabtagene ciloleucel) from Kite is for relapsed/refractory diffuse large B-cell lymphoma, a much bigger indication with a potentially much larger number of patients. The response rates for KTE-C19 in DLBCL (and indeed for CTL019 in DLBCL) are not as high as those for CTL019 in ALL and follow-up time is shorter, so it is not yet clear how many patients will have sustained long term responses. Nevertheless the response rate in patients who have failed all other therapies is high enough that this product will also likely be approved.

Helen Heslop, MD, is the Dan L. Duncan Chair and Professor of Medicine and Pediatrics at Baylor College of Medicine, Houston. She also is the Director of the Center for Cell and Gene Therapy at Baylor College of Medicine, Houston Methodist Hospital and Texas Children's Hospital. Dr. Heslop is a member of the editorial advisory board of Hematology News.

The unanimous recommendation by the Food and Drug Administration's Oncologic Drugs Advisory Committee means that the FDA is likely to approve CTL019 (tisagenlecleucel-T), and that approval may come quickly, possibly before the end of 2017. This approval was based on compelling data showing that 83% of children and young adults with refractory or relapsed acute lymphocytic leukemia (ALL) achieved remission with this therapy. This is exciting news for ALL patients as well as for the cell and gene therapy community. What remains to be determined are the labelling for CTL019, the cost of the therapy, and whether all patients who might benefit from this therapy will have the coverage to be able to access it.

While the response rates in patients treated in the trials presented to the FDA are very encouraging there are also concerns with the risks for cytokine release syndrome and neurotoxicity which can affect up to half of treated patients. As a result, Novartis, the manufacturer of CTL019, has proposed an extensive mitigation strategy and education process for the cell therapy centers that will offer the therapy. Initially, this is likely to be limited to around 30 centers that will be geographically distributed throughout the United States with gradual roll out to more centers as there is more experience with the use of CTL019.

Another issue for the centers is going to be operationalizing a new paradigm where CTL019 will be offered as a standard of care rather than in the context of a research study. Most of the initial pediatric centers will likely provide CTL019, within their transplant infrastructure since procurement, initial processing and infusion of cells will utilize their cell processing and collection facilities. The Foundation for Accreditation of Cell Therapy (FACT) has also anticipated this approval by publishing new standards for Immune Effectors earlier this year to promote quality practice in immune effector cell administration.

One other question is whether CTL019 will be transplant enabling or transplant replacing. While the initial response rates are very high and there are some well publicized patients who remain in remission over 5 years after CTL019 without other therapy, other responders proceeded to transplant and there is also a significant relapse rate. It is therefore an open question whether treating physicians will be happy to watch patients who attain remission after this therapy or whether they will still recommend transplant because there is not yet enough follow up on this product to know what the long-term cure rate is going to be.

Another CAR T-cell product is scheduled to come before an FDA advisory committee in October. The indication for KTE-C19 (axicabtagene ciloleucel) from Kite is for relapsed/refractory diffuse large B-cell lymphoma, a much bigger indication with a potentially much larger number of patients. The response rates for KTE-C19 in DLBCL (and indeed for CTL019 in DLBCL) are not as high as those for CTL019 in ALL and follow-up time is shorter, so it is not yet clear how many patients will have sustained long term responses. Nevertheless the response rate in patients who have failed all other therapies is high enough that this product will also likely be approved.

Helen Heslop, MD, is the Dan L. Duncan Chair and Professor of Medicine and Pediatrics at Baylor College of Medicine, Houston. She also is the Director of the Center for Cell and Gene Therapy at Baylor College of Medicine, Houston Methodist Hospital and Texas Children's Hospital. Dr. Heslop is a member of the editorial advisory board of Hematology News.

The answer to the billion dollar question – Does the chimeric antigen receptor T-cell (CAR T) construct CTL019 (tisagenlecleucel-T) have a favorable risk-benefit profile for the treatment of children and young adults with relapsed/refractory B-cell precursor acute lymphoblastic leukemia? – was a unanimous “yes” at a July 12 meeting of the Food and Drug Administration’s Oncologic Drugs Advisory Committee.

“This is the most exciting thing I have seen in my lifetime, and probably since the introduction of ‘multiagent total cancer care,’ as it was called then, for treatment of childhood leukemia,” remarked Timothy P. Cripe, MD, PhD, from Nationwide Children’s Hospital in Columbus, Ohio, and a temporary voting member of the ODAC.

Catherine M. Bollard, MD, MBChB, from the Children’s National Medical Center in Washington, also a temporary ODAC member, said that she voted “yes” because “this is a very poor-risk patient population, this is an unmet need in the pediatric population, and as you saw in the data [presented to ODAC] today, the clinical responses are remarkable. I think Novartis [the maker of CTL019] has done a great job putting together a plan for mitigating risk going forward.”

CTL019 was shown in a pivotal phase 2 clinical trial to induce an overall remission rate of 83% in children and young adults with relapsed/refractory ALL for whom at least two prior lines of therapy had failed. Based on these results, the FDA accepted a biologics license application for the agent from Novartis.

At the meeting, panel members initially seemed favorably disposed toward recommending approval but heard concerns from FDA scientists about the potential for severe or fatal adverse events such as the cytokine release syndrome (CRS); the possible generation of replication competent retrovirus (RCR); and the potential for secondary malignancies from insertional mutagenesis, the incorporation of portions of the lentiviral vector into the patient’s genome.

In his opening remarks, Wilson W. Bryan, MD, from the FDA’s Office of Tissue and Advanced Therapies and Center for Biologics Evaluation and Drug Research, commented that “the clinical development of tisagenlecleucel suggests that this is a life-saving product.”

He went on, however, to frame the FDA’s concerns: “Clinical trials are not always a good predictor of the effectiveness and safety of a marketed product,” he said. “In particular, we are concerned that the same benefit and safety seen in clinical trials may not carry over to routine clinical use.”

The purpose of the hearing was to focus on manufacturing issues related to product quality, including replicability of the product for commercial use and safety issues such as prevention of CRS and neurotoxicities.

“We are also concerned about the hypothetical risk of secondary malignancies. Therefore, we are asking for the committee’s recommendations regarding the nature and duration of follow-up of patients who would receive this product,” Dr. Bryan said.

“CTL019 is a living drug, which demonstrates activity after a single infusion,” said Samit Hirawat, MD, head of oncology global development for Novartis.

But the nature of CTL019 as a living drug also means that it is subject to variations in the ability of autologous T cells harvested via leukapheresis to be infected with the lentiviral vector and expanded into a population of CAR T cells large enough to have therapeutic value, said Xiaobin Victor Lu, PhD, a chemistry, manufacturing, and controls reviewer for the FDA.

Mitigation plan

Novartis’ proposed plan includes specific, long-term steps for mitigating the risk of CRS and neurologic events, such as cerebral edema, the latter of which caused the FDA to call for a clinical hold of the phase 2 ROCKET trial for a different CAR T-cell construct.

Among the proposed elements of the mitigation plan are a 15-year minimum pharmacovigilance program and long-term safety follow-up for adverse events related to the therapy, efficacy, immunogenicity, transgene persistence of CD19 CAR, and the incidence of second malignancies possibly related to insertional mutagenesis.

Novartis also will train treatment center staff on processes for cell collection, cryopreservation, transport, chain of identity, safety management, and logistics for handling the CAR T-cell product. The company proposes to provide on-site training of personnel on CRS and neurotoxicity risk and management, as well as to offer information to patients and caregivers about the signs and symptoms of adverse events of concern.

Dr. Cripe expressed his concerns that Novartis’ proposal to initially limit the mitigation plan to 30 or 35 treatment sites would create problems of access and economic disparities among patients, and could cause inequities among treatment centers even with the same city.

David Lebwohl, MD, head of the CAR T global program for Novartis, said that the planned number of sites for the mitigation program would be expanded after 6 to 12 months if the CAR T construct receives final approval and clinical implementation goes well.

There was nearly unanimous agreement among the panel members that the planned 15-year follow-up and other mitigation measures would be adequate for detecting serious short- and long-term consequences of CAR T-cell therapy.

Patient/advocate perspective

In the public comment section of the proceedings, panel members were urged to vote in favor of CTL019 by parents of children with ALL, including Don McMahon, whose son Connor received the therapy after multiple relapses, and Tom Whitehead, father of Emily Whitehead, the first patient to receive CAR T cells for ALL.

Both children are alive and doing well.

CTL019 is produced by Novartis.

The answer to the billion dollar question – Does the chimeric antigen receptor T-cell (CAR T) construct CTL019 (tisagenlecleucel-T) have a favorable risk-benefit profile for the treatment of children and young adults with relapsed/refractory B-cell precursor acute lymphoblastic leukemia? – was a unanimous “yes” at a July 12 meeting of the Food and Drug Administration’s Oncologic Drugs Advisory Committee.

“This is the most exciting thing I have seen in my lifetime, and probably since the introduction of ‘multiagent total cancer care,’ as it was called then, for treatment of childhood leukemia,” remarked Timothy P. Cripe, MD, PhD, from Nationwide Children’s Hospital in Columbus, Ohio, and a temporary voting member of the ODAC.

Catherine M. Bollard, MD, MBChB, from the Children’s National Medical Center in Washington, also a temporary ODAC member, said that she voted “yes” because “this is a very poor-risk patient population, this is an unmet need in the pediatric population, and as you saw in the data [presented to ODAC] today, the clinical responses are remarkable. I think Novartis [the maker of CTL019] has done a great job putting together a plan for mitigating risk going forward.”

CTL019 was shown in a pivotal phase 2 clinical trial to induce an overall remission rate of 83% in children and young adults with relapsed/refractory ALL for whom at least two prior lines of therapy had failed. Based on these results, the FDA accepted a biologics license application for the agent from Novartis.

At the meeting, panel members initially seemed favorably disposed toward recommending approval but heard concerns from FDA scientists about the potential for severe or fatal adverse events such as the cytokine release syndrome (CRS); the possible generation of replication competent retrovirus (RCR); and the potential for secondary malignancies from insertional mutagenesis, the incorporation of portions of the lentiviral vector into the patient’s genome.

In his opening remarks, Wilson W. Bryan, MD, from the FDA’s Office of Tissue and Advanced Therapies and Center for Biologics Evaluation and Drug Research, commented that “the clinical development of tisagenlecleucel suggests that this is a life-saving product.”

He went on, however, to frame the FDA’s concerns: “Clinical trials are not always a good predictor of the effectiveness and safety of a marketed product,” he said. “In particular, we are concerned that the same benefit and safety seen in clinical trials may not carry over to routine clinical use.”

The purpose of the hearing was to focus on manufacturing issues related to product quality, including replicability of the product for commercial use and safety issues such as prevention of CRS and neurotoxicities.

“We are also concerned about the hypothetical risk of secondary malignancies. Therefore, we are asking for the committee’s recommendations regarding the nature and duration of follow-up of patients who would receive this product,” Dr. Bryan said.

“CTL019 is a living drug, which demonstrates activity after a single infusion,” said Samit Hirawat, MD, head of oncology global development for Novartis.

But the nature of CTL019 as a living drug also means that it is subject to variations in the ability of autologous T cells harvested via leukapheresis to be infected with the lentiviral vector and expanded into a population of CAR T cells large enough to have therapeutic value, said Xiaobin Victor Lu, PhD, a chemistry, manufacturing, and controls reviewer for the FDA.

Mitigation plan

Novartis' proposed plan includes specific, long-term steps for mitigating the risk of CRS and neurologic events such as cerebral edema. Cerebral edema prompted the FDA to place a clinical hold on the phase 2 ROCKET trial of a different CAR T-cell construct.

Among the proposed elements of the mitigation plan are a pharmacovigilance program of at least 15 years and long-term follow-up of therapy-related adverse events, efficacy, immunogenicity, persistence of the CD19 CAR transgene, and the incidence of second malignancies possibly related to insertional mutagenesis.

Novartis also will train treatment center staff on processes for cell collection, cryopreservation, transport, chain of identity, safety management, and logistics for handling the CAR T-cell product. The company proposes to provide on-site training of personnel on CRS and neurotoxicity risk and management, as well as to offer information to patients and caregivers about the signs and symptoms of adverse events of concern.

Dr. Cripe expressed concern that Novartis' proposal to initially limit the mitigation plan to 30 or 35 treatment sites would create problems of access and economic disparities among patients, and could cause inequities among treatment centers even within the same city.

David Lebwohl, MD, head of the CAR T global program for Novartis, said that the planned number of sites for the mitigation program would be expanded after 6 to 12 months if the CAR T construct receives final approval and clinical implementation goes well.

There was nearly unanimous agreement among the panel members that the planned 15-year follow-up and other mitigation measures would be adequate for detecting serious short- and long-term consequences of CAR T-cell therapy.

Patient/advocate perspective

In the public comment section of the proceedings, panel members were urged to vote in favor of CTL019 by parents of children with ALL, including Don McMahon, whose son Connor received the therapy after multiple relapses, and Tom Whitehead, father of Emily Whitehead, the first patient to receive CAR T cells for ALL.

Both children are alive and doing well.

CTL019 is produced by Novartis.

What works and what doesn’t in treating binge eating

SAN FRANCISCO – For patients who meet criteria for binge eating and who express a desire to lose weight, topiramate and lisdexamfetamine are effective treatment options, according to Judith Walsh, MD.

“Binge eating is the most common eating disorder in the United States,” Dr. Walsh said at the UCSF Annual Advances in Internal Medicine meeting. “The lifetime prevalence is 3.5% in women, compared with 2% in men, and 5%-30% of affected women are obese.”

According to the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5), the condition is characterized by binge eating episodes during which patients consume larger amounts of food than most people would under similar circumstances. "They feel a lack of control over eating, [the notion that] 'I'm eating and I can't stop,'" said Dr. Walsh of the UCSF division of internal medicine and the Women's Center for Excellence. Affected patients binge eat at least once per week over a period of at least 3 months, and the episodes typically last fewer than 2 hours.

While therapist-led cognitive-behavioral therapy (CBT) is generally considered to be the mainstay of treatment for binge eating disorder, guidelines from the American Psychiatric Association and the National Institute for Health and Care Excellence are conflicting, she said.

A recent meta-analysis funded by the Agency for Healthcare Research and Quality set out to summarize the benefits and harms of psychological and pharmacologic therapies for adults with binge eating disorder (Ann Intern Med. 2016 Sep 20;165[6]:409-20). The researchers evaluated 34 published trials with a low to medium risk of bias, including 25 placebo-controlled trials and 9 waitlist-controlled psychological trials. Most trial participants (77%) were women, their mean body mass index was 28.8 kg/m², and treatment duration ranged from 6 weeks to 6 months.

The review found that second-generation antidepressants such as citalopram, escitalopram, fluoxetine, fluvoxamine, paroxetine, and sertraline improved abstinence from binge eating (relative risk, 1.67) and depression symptoms (RR, 1.97) but had no effect on weight. Therapist-led CBT was found to strongly improve abstinence from binge eating (RR, 4.95) but had no effect on depressive symptoms or weight.

Use of lisdexamfetamine was associated with improved abstinence from binge eating (RR, 2.61) and weight loss of 5.2%-6.3%, while use of topiramate was found in two trials to have a moderate benefit on abstinence from binge eating (by 58%-64% in one trial and by 29%-30% in the other), and weight loss of about 4.9 kg.

"Harms of treatment are generally not reported in the psychological studies, nor in 20 of the 25 pharmacologic studies included in the analysis," said Dr. Walsh, who was not involved with the review. The three trials of lisdexamfetamine found an increased risk of sympathetic nervous system arousal (RR, 4.28), insomnia (RR, 2.8), and GI upset (RR, 2.71).

“So, in adults with binge eating disorder, the primary therapy to increase abstinence is cognitive-behavioral therapy, topiramate, lisdexamfetamine, and second-generation antidepressants,” Dr. Walsh concluded. If reducing weight is a priority, the best available treatment options are topiramate and lisdexamfetamine, she said.

Dr. Walsh reported having no financial disclosures.

EXPERT ANALYSIS AT THE ANNUAL ADVANCES IN INTERNAL MEDICINE

Improving diet over time reduces mortality

Improving diet over time consistently reduces all-cause and cardiovascular mortality, according to an analysis of two large U.S. databases.

Researchers examined the association between dietary change over a 12-year period (1986-1998) and subsequent mortality during a further 12 years of follow-up (1998-2010), using data for 47,994 women participating in the Nurses’ Health Study and 25,745 men participating in the Health Professionals Follow-Up Study. They calculated dietary quality using three different methods: the Alternate Healthy Eating Index-2010 score, the Alternate Mediterranean Diet score, and the Dietary Approaches to Stop Hypertension (DASH) diet score.

Through numerous analyses of the data, they found a consistent inverse relationship between dietary quality and mortality. Overall, a 20-percentile increase in dietary quality over time was associated with an 8%-17% reduction in all-cause mortality, regardless of which scoring method was used. In contrast, a similar decline in dietary quality was associated with a 6%-12% increase in mortality.