Opiate use tied to hepatitis C risk in youth

SAN FRANCISCO – A new study indicates young adults with opioid use disorder are seldom screened for hepatitis C virus infections; yet 11% of the subjects with opioid use disorder who were tested had been exposed to hepatitis C, and 6.8% had evidence of chronic hepatitis C infection.

Overall, 2.5% (6,812 subjects) of all subjects received hepatitis C testing and 122 (1.8%) tested positive. Based on health records, 23,345 had an ICD-9 code for any illicit drug use and 8.9% of those (2,090) were tested for HCV infection. Of the 933 subjects with an ICD-9 code for opioid use disorder, 35% were tested for HCV.

The results suggest that a group at significant risk of hepatitis C – those with opioid use disorder – is being overlooked in public health efforts to control the disease.

Clinicians may presume, “Oh, you just take opioids orally, you don’t inject drugs,” but oral opiate users can progress to intravenous drug use, Donna Futterman, MD, director of clinical pediatrics, Montefiore Medical Center, and professor of clinical pediatrics at Albert Einstein College of Medicine, both in New York, said during the press conference at the annual scientific meeting on infectious diseases.

Guidelines call for testing for hepatitis C only in individuals with known injected drug use, among other risk factors, but the research suggests that this significantly underestimates the population of teenagers and young adults who are at risk. Many who take opiates go on to use injectable drugs.

Another surprise finding in the study was that only 10.6% of those tested for hepatitis C had also been screened for human immunodeficiency virus (HIV).

The reasons for the low frequency of screening are likely complex, including lack of time, discomfort between the physician and patient, and concerns over privacy and stigma, according to Dr. Epstein, who emphasized the importance of communication to overcome such barriers.

“As a pediatrician, I try to be as open as possible with patients and let them know that anything they tell me is confidential. I start out discussing less private issues, things that are easier to talk about,” Dr. Epstein said.

But the results of the study also suggest that preconceived notions may be holding clinicians back from testing. “How can you test for hepatitis C and not think HIV?” Dr. Futterman said. “What is that differentiator in providers’ heads that makes them focus on one thing and not the other?”

REPORTING FROM ID WEEK 2018

Key clinical point: By focusing solely on injectable drug users, clinicians may miss many others who are at risk for hepatitis C infection.

Major finding: Among subjects with opioid use disorder who were tested, 11% had been exposed to hepatitis C.

Study details: Survey of 269,124 teenagers and young adults visiting U.S. Federally Qualified Health Centers.

Disclosures: Dr. Epstein and Dr. Futterman have reported no conflicts of interest.

Sunscreens: Misleading labels, poor performance, and hype about their risks

MONTEREY, CALIF. – Heads up! “Natural” mineral-based sunscreens don’t provide the protection of their rivals. Patients may get burned by scary hype about the supposed dangers of sunscreen. And sunscreen spray is great for the scalp of people whose hair is thinning.

In a presentation on sunscreens at the annual Coastal Dermatology Symposium, Vincent DeLeo, MD, of the University of Southern California, Los Angeles, offered the following tips on sunscreen and more.

Here’s a roundup of his pearls:

Sunscreens are getting better and are faring poorly, too.

Sunscreens are improving, Dr. DeLeo said. A 2013 comparison of sunscreens in 1997 and 2009 found that the percentage of available sunscreens with low SPF (under 15) fell from 27% to 6% during that time. (The Food and Drug Administration declared in 2011 that manufacturers must tell consumers that low SPF and/or non–broad spectrum sunscreens protect only against sunburn, not against skin cancer or skin aging.) The study also found that the percentage of sunscreens with UVA-1 filters (such as avobenzone or zinc oxide) grew from 5% to 70% (Photochem Photobiol Sci. 2013 Jan;12[1]:197-202).

But sunscreen labels may not always be accurate. Earlier this year, Consumer Reports wrote that 36 of 73 sunscreens tested failed to correctly list their SPF protection level; 23 sunscreens missed their listed SPF levels by more than half. “Natural” or “mineral-only” sunscreens, which rely on such blockers as zinc oxide or titanium dioxide, performed the worst. Some patients prefer these sunscreens because they aren’t chemical based and “may want to have a more natural sunscreen,” Dr. DeLeo said. “But they should be aware the sunscreens don’t always live up to the SPF level on the label.”

Beware of warnings about sunscreens.

Reports have warned Americans about supposed risks of sunscreen use such as low vitamin D levels from the lack of sun exposure, the exposure to titanium dioxide and zinc oxide nanoparticles, and the exposure to retinyl palmitate in sunscreen. Hawaiian officials, meanwhile, are banning some types of sunscreen chemicals in order to protect coral reefs.

Typical use of sunscreen will not dangerously lower vitamin D levels, Dr. DeLeo said, but people who use it every day may want to be cautious. He dismissed the concerns about nanoparticles and retinyl palmitate.

Dr. DeLeo said two sunscreen risks are real: Sunscreens can trigger irritation, at a rate as high as 20%, and, rarely, allergic reactions.

American sunscreens don’t stack up worldwide.

Simplicity often is a virtue. But, Dr. DeLeo said, it’s not helpful when it comes to the components of American sunscreens.

U.S. regulations only allow 16 ingredients in sunscreen while several more are allowed in Europe, he said. According to him, this helps explain why European sunscreens do a better job. European sunscreens “are much more absorbent, much better at absorbing radiation than the U.S. sunscreens,” he said. “It’s because we don’t have the same products as they have in Europe.”

The good news, he said, is that the FDA is considering expanding the number of ingredients allowed in sunscreen. The Sunscreen Innovation Act of 2014, a law passed by Congress, allows the FDA to use efficacy and safety data from Europe without requiring manufacturers to launch new, multimillion dollar tests, he said.

That’s good news for companies that want to improve U.S. sunscreens by selling a wider variety of types. “Sooner or later,” he said, “we will probably get these.”

Sunscreen sprays are tops at scalp protection.

Sunscreen sprays shouldn’t be applied to the face in children, Dr. DeLeo said, but they’re great for people who are on their own because they make it easier to protect the back when no one is around to help apply topical sunscreen.

How much spray should people use? A lot, he said. He added that sunscreen sprays are especially useful for the scalps of people with thinning hair.

Dr. DeLeo disclosed consulting work for Estée Lauder.

The meeting is jointly presented by the University of Louisville and Global Academy for Medical Education. This publication and Global Academy for Medical Education are both owned by Frontline Medical Communications.

REPORTING FROM THE COASTAL DERMATOLOGY SYMPOSIUM

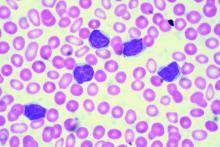

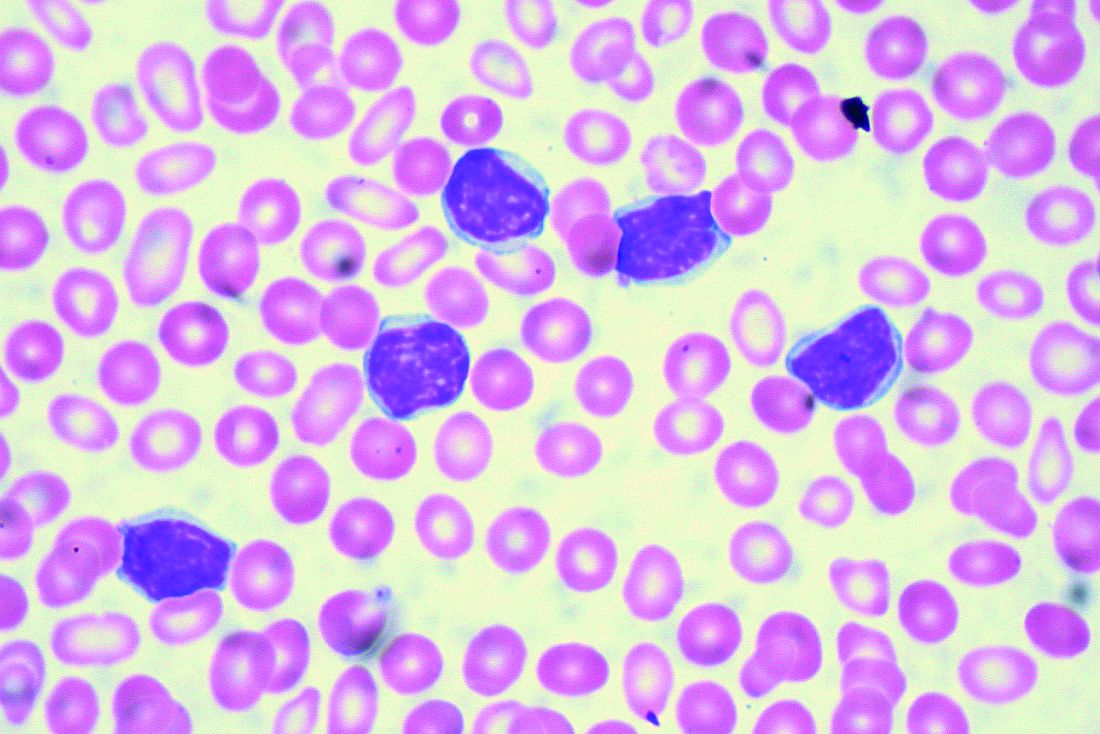

Some mutation testing can be useful at CLL diagnosis

CHICAGO – A number of mutation tests – including immunoglobulin heavy chain gene (IgVH), fluorescence in situ hybridization (FISH), and TP53 – provide useful prognostic information at the time of chronic lymphocytic leukemia (CLL) diagnosis, according to Paul M. Barr, MD.

“It’s understood that IgVH mutation status is certainly prognostic,” Dr. Barr, associate professor of hematology/oncology at the University of Rochester (N.Y.), said during a presentation at the American Society of Hematology Meeting on Hematologic Malignancies.

The B-cell receptor of the CLL cells uses IgVH genes that may or may not have undergone somatic mutations, with unmutated being defined as 98% or more sequence homology to germline.

“This is indicative of stronger signaling through the B-cell receptor and, as we all know, predicts for an inferior prognosis,” he explained, citing a study that demonstrated superior survival rates with mutated IgVH genes (Blood. 1999;94[6]:1840-7).

“It’s also well understood and accepted that we should perform a FISH panel; we should look for interphase cytogenetics based on FISH in our patients,” Dr. Barr said. “Having said that, we, as medical oncologists, do not do a very good job of using this testing appropriately. Patterns of care studies have suggested that a significant number of patients don’t get FISH testing at diagnosis or before first-line therapy.”

In fact, a typical interphase FISH panel identifies cytogenetic lesions, including del(17p), del(11q), del(13q), and trisomy 12 in more than 80% of CLL cases, with del(13q) being the most common.

Another marker that can be assessed in CLL patients and has maintained prognostic value across multiple analyses is serum beta-2 microglobulin, Dr. Barr noted.

TP53 sequencing is valuable as well and has been associated with outcomes similar to those seen in patients with del(17p), he said, citing data from a study that found similarly poor outcomes with TP53 mutations or deletions and del(17p), even when minor subclones are identified using next-generation sequencing (Blood. 2014;123:2139-47).

“One of the primary reasons for this is that the two aberrations go together. Most often, if you have del(17p) you’re also going to find a TP53 mutation, but in about 30% of patients or so, only one allele is affected, so this is why it’s still important to test for TP53 mutations when you’re looking for a 17p deletion,” he said.

Numerous other recurrent mutations in CLL have been associated with poor overall survival and/or progression-free survival, including SF3B1, ATM, NOTCH1, POT1, BIRC3, and NFKBIE.

“The gut instinct is that maybe we should start testing for all of these mutations now, but I would caution everybody that we still need further validation before we can apply these to the majority of patients,” Dr. Barr said. “We still don’t know exactly what to do with all of these mutations – when and how often we should test for them, if the novel agents are truly better – so while, again, they can predict for inferior outcomes, I would say these are not yet standard of care to be tested in all patients.”

It is likely, though, that new prognostic systems will evolve as more is learned about how to use these molecular aberrations. Attempts are already being made to incorporate novel mutations into a prognostic system. Dr. Barr pointed to a report that looked at the integration of mutations and cytogenetic lesions to improve the accuracy of survival prediction in CLL (Blood. 2013;121:1403-12).

“But this still requires prospective testing, especially in patients getting the novel agents,” he said.

Conventional karyotyping also has a potential, though limited, role in this setting, he said, noting that it can be reliably performed with stimulation of CLL cells.

“We also know additional aberrations are prognostic and that a complex karyotype predicts for a very poor outcome,” he said. The International Workshop on CLL (iwCLL) guidelines, which were recently updated for the first time in a decade, state that further validation is needed.

“I think it’s potentially useful in a very young patient you are considering taking to transplant, but again, I agree with the stance that this is not something that should be performed in every patient across the board,” he said.

The tests currently recommended by iwCLL before CLL treatment include IgVH mutation status; FISH for del(13q), del(11q), del(17p), and trisomy 12 in peripheral blood lymphocytes; and TP53.

“Some folks... don’t check a lot of these markers at diagnosis, but wait for patients to require therapy, and that’s a reasonable way to practice,” Dr. Barr said, noting, however, that he prefers knowing patients’ risk up front – especially for those patients he will see just once before they are “managed closer to home for the majority of their course.

“But if you [wait], then knowing what to repeat later is important,” he added. Namely, the FISH and TP53 tests are worth repeating as patients can acquire additional molecular aberrations over time.

Dr. Barr reported serving as a consultant for Pharmacyclics, AbbVie, Celgene, Gilead Sciences, Infinity Pharmaceuticals, Novartis, and Seattle Genetics. He also reported receiving research funding from Pharmacyclics and AbbVie.


It is likely, though, that new prognostic systems will evolve as more is learned about how to use these molecular aberrations. Attempts are already being made to incorporate novel mutations into a prognostic system. Dr. Barr pointed to a report that looked at the integration of mutations and cytogenetic lesions to improve the accuracy of survival prediction in CLL (Blood. 2013;121:1403-12).

“But this still requires prospective testing, especially in patients getting the novel agents,” he said.

Conventional karyotyping also has a potential, though limited, role in this setting, he said, noting that it can be reliably performed with stimulation of CLL cells.

“We also know additional aberrations are prognostic and that a complex karyotype predicts for a very poor outcome,” he said. The International Workshop on CLL (iwCLL) guidelines, which were recently updated for the first time in a decade, state that further validation is needed.

“I think it’s potentially useful in a very young patient you are considering taking to transplant, but again, I agree with the stance that this is not something that should be performed in every patient across the board,” he said.

The tests currently recommended by iwCLL before CLL treatment include IgVH mutation status; FISH for del(13q), del(11q), del(17p), and trisomy 12 in peripheral blood lymphocytes; and TP53.

“Some folks... don’t check a lot of these markers at diagnosis, but wait for patients to require therapy, and that’s a reasonable way to practice,” Dr. Barr said, noting, however, that he prefers knowing patients’ risk up front – especially for those patients he will see just once before they are “managed closer to home for the majority of their course.”

“But if you [wait], then knowing what to repeat later is important,” he added. Namely, the FISH and TP53 tests are worth repeating as patients can acquire additional molecular aberrations over time.

Dr. Barr reported serving as a consultant for Pharmacyclics, AbbVie, Celgene, Gilead Sciences, Infinity Pharmaceuticals, Novartis, and Seattle Genetics. He also reported receiving research funding from Pharmacyclics and AbbVie.

EXPERT ANALYSIS FROM MHM 2018

MELD sodium score tied to better transplant outcomes

Factoring hyponatremic status into liver graft allocations led to significant reductions in wait-list mortality, researchers reported in the November issue of Gastroenterology.

Hyponatremic patients with low MELD scores benefited significantly from allocation based on the Model for End-Stage Liver Disease–sodium (MELD-Na) score, while its survival benefit was less evident among patients with higher scores, said Shunji Nagai, MD, PhD, of Henry Ford Hospital, Detroit, and his associates. “Therefore, liver allocation rules such as Share 15 and Share 35 need to be revised to fulfill the Final Rule under the MELD-Na based allocation,” they wrote.

The Share 35 rule offers liver grafts locally and regionally to wait-listed patients with MELD-Na scores of at least 35. Under the Share 15 rule, livers are offered regionally or nationally before considering local candidates with MELD scores under 15. The traditional MELD scoring system excluded hyponatremia, which has since been found to independently predict death from cirrhosis. Therefore, in January 2016, a modified MELD-Na score was implemented for patients with traditional MELD scores of at least 12. The MELD-Na score assigns patients between 1 and 11 additional points, and patients with low MELD scores and severe hyponatremia receive the most points. To assess the impact of this change, Dr. Nagai and his associates compared wait-list and posttransplantation outcomes during the pre– and post–MELD-Na eras and the survival benefit of liver transplantation during the MELD-Na period. The study included all adults wait-listed for livers from June 2013, when Share 35 was implemented, through September 2017.
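The point adjustment described above can be sketched with the MELD-Na formula adopted for U.S. liver allocation in January 2016. The formula itself, the sodium bounds of 125-137 mmol/L, the cap of 40, and the function name `meld_na` do not appear in this article; they are assumptions taken from the published allocation policy, and the sketch is an illustration rather than a clinical calculator.

```python
def meld_na(meld: float, sodium: float) -> int:
    """Sketch of the 2016 MELD-Na adjustment (assumed formula, not from the article)."""
    # The sodium adjustment applies only when the traditional MELD score exceeds 11.
    if meld <= 11:
        return round(meld)
    # Serum sodium (mmol/L) is bounded to the 125-137 range before use.
    na = min(max(sodium, 125.0), 137.0)
    score = meld + 1.32 * (137 - na) - 0.033 * meld * (137 - na)
    # Allocation scores are assumed to be capped at 40.
    return min(round(score), 40)


if __name__ == "__main__":
    for meld, sodium in [(12, 125), (30, 125), (12, 137)]:
        print(meld, sodium, meld_na(meld, sodium))
```

Under these assumptions, a patient with a MELD score of 12 and a sodium level of 125 mmol/L gains 11 points (12 to 23), whereas a patient with a MELD score of 30 and the same sodium level gains 4 points (30 to 34). That is consistent with the statement that patients with low MELD scores and severe hyponatremia receive the most points.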

Mortality within 90 days on the wait list fell significantly during the MELD-Na era (hazard ratio, 0.74; P less than .001). Transplantation conferred a “definitive” survival benefit when MELD-Na scores were 21-23 (HR versus wait list, 0.34; P less than .001). During the traditional MELD period, the equivalent cutoff was 15-17 (HR, 0.36; P less than .001). “As such, the current rules for liver allocation may be suboptimal under the MELD-Na–based allocation and the criteria for Share 15 may need to be revisited,” the researchers wrote. They recommended raising the cutoff to 21.

The study also confirmed mild hyponatremia (130-134 mmol/L), moderate hyponatremia (125-129 mmol/L), and severe hyponatremia (less than 125 mmol/L) as independent predictors of wait-list mortality during the traditional MELD era. Hazard ratios were 1.4, 1.8, and 1.7, respectively (all P less than .001). The implementation of MELD-Na significantly weakened these associations, with HRs of 1.1 (P = .3), 1.3 (P = .02), and 1.4 (P = .04), respectively.

The probability of transplantation also rose significantly during the MELD-Na era (HR, 1.2; P less than .001), possibly because of the opioid epidemic, the researchers said. Although greater availability of liver grafts might have improved wait-list outcomes, all score categories would have shown a positive impact if this were the only reason, they added. Instead, MELD-Na most benefited patients with lower scores.

Finally, posttransplantation outcomes worsened during the MELD-Na era, perhaps because of transplant population aging. However, the survival benefit of transplant shifted to higher score ranges during the MELD-Na era even after the researchers controlled for this effect. “According to this analysis,” they wrote, “the survival benefit of liver transplant was definitive in patients with score category of 21-23, which could further validate our proposal to revise Share 15 rule to ‘Share 21.’ ”

The investigators reported having no external funding sources or conflicts of interest.

SOURCE: Nagai S et al. Gastroenterology. 2018 Jul 26. doi: 10.1053/j.gastro.2018.07.025.

FROM GASTROENTEROLOGY

Key clinical point: The implementation of the MELD sodium (MELD-Na) score for liver allocation was associated with significantly improved outcomes for wait-listed patients.

Major finding: During the MELD-Na era, mortality within 90 days on the liver wait list dropped significantly (HR, 0.74; P less than .001) while the probability of transplant rose significantly (HR, 1.2; P less than .001).

Study details: Comparison of 18,850 adult transplant candidates during the traditional MELD era versus 14,512 candidates during the MELD-Na era.

Disclosures: The investigators had no external funding sources or conflicts of interest.

Source: Nagai S et al. Gastroenterology. 2018 Jul 26. doi: 10.1053/j.gastro.2018.07.025.

FDA expands CoolSculpting indication to submandibular area

FDA clearance of the expanded indication was based on results of a 22-week study in which patients achieved a mean 33% reduction in fat layer thickness after two treatments. In addition, 85% of patients reported satisfaction with their treatment in that and two other studies. The data were provided in Allergan’s press release announcing the clearance for the cryolipolysis device.

Adverse events associated with the CoolSculpting treatment include temporary redness, swelling, blanching, bruising, firmness, tingling, stinging, tenderness, cramping, aching, itching, and skin sensitivity. People with cryoglobulinemia, cold agglutinin disease, or paroxysmal cold hemoglobinuria should not receive CoolSculpting treatments, according to the company.

The FDA also expanded the CoolSculpting indication to people with a body mass index up to 46.2 kg/m² when treating the submental and submandibular areas.

Frontline rituximab shows long-term success in indolent lymphoma

Patients with advanced indolent lymphoma can be treated with a rituximab-containing regimen as first-line therapy and, in some cases, skip chemotherapy altogether, a study with 10 years of follow-up data suggests.

After a median of 10.6 years’ follow-up, almost three-quarters of patients (73%) in the study were alive, and 36% never required chemotherapy.

“This [overall survival] is at least as good as that observed in modern immunochemotherapy trials,” Sandra Lockmer, MD, of Karolinska University Hospital in Stockholm and her colleagues reported in the Journal of Clinical Oncology.

The study included 321 patients who were previously untreated and had been enrolled in two randomized clinical trials performed by the Nordic Lymphoma Group. The trials randomized patients to receive either rituximab monotherapy or rituximab combined with interferon alfa-2a. Neither trial used up-front chemotherapy.

Patients included in the follow-up analysis had follicular lymphoma, marginal zone lymphoma, small lymphocytic lymphoma, or indolent lymphoma not otherwise specified.

The overall survival rate was 75% at 10 years after trial assignment and 66% at 15 years. Similarly, the lymphoma-specific survival rate was 81% at 10 years after trial assignment and 77% at 15 years, the researchers reported.

Overall, 117 patients did not require chemotherapy, although 24 of them were further treated with antibodies and/or radiation. Of the 93 patients who received no additional therapies after frontline treatment, 9 died from causes unrelated to their lymphoma.
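The counts in this paragraph can be cross-checked against the percentage quoted earlier in the article. The short sketch below uses only figures reported here; the variable names are illustrative, and it assumes the 24 patients given antibodies and/or radiation are a subset of the 117 who avoided chemotherapy, which the reported figure of 93 implies.

```python
# Figures as reported in the article.
total_patients = 321                 # previously untreated patients in the analysis
no_chemo = 117                       # never required chemotherapy
further_antibody_or_radiation = 24   # assumed to be a subset of the 117

# Share of the cohort that never required chemotherapy.
share_no_chemo = no_chemo / total_patients

# Patients who received no therapy at all after frontline treatment.
no_further_therapy = no_chemo - further_antibody_or_radiation

print(f"{share_no_chemo:.0%} never required chemotherapy")
print(f"{no_further_therapy} received no additional therapy")
```

The two results agree with the 36% and the 93 patients cited in the text.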

Among the 237 patients who failed initial treatment, the median time to treatment failure was 1.5 years.

In terms of transformation to aggressive lymphoma, the rate was 2.4%/person-year overall. The cumulative risk of transformation was 20% at 10 years after trial assignment and 24% at 15 years.

The study was funded in part by the Stockholm County Council and by the Nordic Lymphoma Group. The trials analyzed in the study were supported by Roche. Dr. Lockmer reported having no financial disclosures. Her coauthors reported relationships with Novartis, Gilead, Roche, and Takeda, among others.

SOURCE: Lockmer S et al. J Clin Oncol. 2018 Oct 4:JCO1800262. doi: 10.1200/JCO.18.00262.

FROM THE JOURNAL OF CLINICAL ONCOLOGY

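Key clinical point: Patients with advanced indolent lymphoma can receive a rituximab-containing regimen as first-line therapy and, in some cases, avoid chemotherapy altogether.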

Major finding: After a median of 10.6 years’ follow-up, 73% of patients were alive, and 36% did not require chemotherapy.

Study details: Ten-year follow-up data from two trials on 321 previously untreated patients who had follicular lymphoma, marginal zone lymphoma, small lymphocytic lymphoma, or indolent lymphoma not otherwise specified.

Disclosures: The study was funded in part by the Stockholm County Council and by the Nordic Lymphoma Group. The trials analyzed in the study were supported by Roche. Dr. Lockmer reported having no financial disclosures. Her coauthors reported relationships with Novartis, Gilead, Roche, and Takeda, among others.

Source: Lockmer S et al. J Clin Oncol. 2018 Oct 4:JCO1800262. doi: 10.1200/JCO.18.00262.

Studies reveal pregnancy trends in American women with MS

New evidence provides estimates of the pregnancy rates for American women with multiple sclerosis (MS), their complication rates, and the rates of relapse and disease-modifying drug treatment during different phases before and after pregnancy.

The two new studies, conducted by Maria K. Houtchens, MD, of Brigham and Women’s Hospital and Harvard Medical School, Boston, and her colleagues, involved retrospective mining of U.S. commercial health plan data in the IQVIA Real-World Data Adjudicated Claims–U.S. database between Jan. 1, 2006, and June 30, 2015.

The mean age of pregnant women in the nine annual cohorts during that period was just over 32 years for those with MS and just over 29 years for those without. The percentage of women without MS who had a pregnancy-related claim in the database declined from 8.83% in 2006 to 7.75% in 2014 after adjusting for age, region, payer, and Charlson Comorbidity Index score, whereas the percentage increased in women with MS during the same period, from 7.91% to 9.47%. The investigators matched 2,115 women with MS and 2,115 without MS who had live births for a variety of variables and found that women with MS had higher rates of premature labor (31.4% vs. 27.4%; P = .005), infection in pregnancy (13.3% vs. 10.9%; P = .016), maternal cardiovascular disease (3.0% vs. 1.9%; P = .028), anemia or acquired coagulation disorder (2.5% vs. 1.3%; P = .007), neurologic complications in pregnancy (1.6% vs. 0.6%; P = .005), and sexually transmitted diseases in pregnancy (0.4% vs. 0%; P = .045). During labor and delivery, women with MS who had a live birth more often had a claim for acquired damage to the fetus (27.8% vs. 23.5%; P = .002) and congenital fetal malformations (13.2% vs. 10.3%; P = .004) than did women without MS.

In the second study, Dr. Houtchens and two coauthors from the first study of the database reported on a set of 2,158 women who had a live birth during the study period and had 1 year of continuous insurance eligibility before and after pregnancy. The odds for having an MS relapse declined during pregnancy (odds ratio, 0.623; 95% confidence interval, 0.521-0.744), rose during the 6-week postpartum puerperium (OR, 1.710; 95% CI, 1.358-2.152), and leveled off during the last three postpartum quarters to remain at a higher level than before pregnancy (OR, 1.216; 95% CI, 1.052-1.406). Disease-modifying drug treatment followed the same pattern, with 20% using it before pregnancy, dropping to about 2% in the second trimester, and peaking at about a quarter of all patients 9-12 months post partum.

SOURCES: Houtchens MK et al. Neurology. 2018 Sep 28. doi: 10.1212/WNL.0000000000006382; Houtchens MK et al. Neurology. 2018 Sep 28. doi: 10.1212/WNL.0000000000006384.

New evidence provides estimates of the pregnancy rates for American women with multiple sclerosis (MS), their complication rates, and the rates of relapse and disease-modifying drug treatment during different phases before and after pregnancy.

The two new studies, conducted by Maria K. Houtchens, MD, of Brigham and Women’s Hospital and Harvard Medical School, Boston, and her colleagues involved retrospective mining of U.S. commercial health plan data in the IQVIA Real-World Data Adjudicated Claims–U.S. database between Jan. 1, 2006, and June 30, 2015.

The mean age of pregnant women in the nine annual cohorts during that period was just over 32 years for those with MS and just over 29 years for those without. The percentage of women without MS who had a pregnancy-related claim in the database declined from 8.83% in 2006 to 7.75% in 2014 after adjusting for age, region, payer, and Charlson Comorbidity Index score, whereas the percentage increased in women with MS during the same period, from 7.91% to 9.47%. The investigators matched 2,115 women with MS and 2,115 without MS who had live births for a variety of variables and found that women with MS had higher rates of premature labor (31.4% vs. 27.4%; P = .005), infection in pregnancy (13.3% vs. 10.9%; P = .016), maternal cardiovascular disease (3.0% vs. 1.9%; P = .028), anemia or acquired coagulation disorder (2.5% vs. 1.3%; P = .007), neurologic complications in pregnancy (1.6% vs. 0.6%; P = .005), and sexually transmitted diseases in pregnancy (0.4% vs. 0%; P = .045). During labor and delivery, women with MS who had a live birth more often had a claim for acquired damage to the fetus (27.8% vs. 23.5%; P = .002) and congenital fetal malformations (13.2% vs. 10.3%; P = .004) than did women without MS.

In the second study, Dr. Houtchens and two coauthors from the first study of the database reported on a set of 2,158 women who had a live birth during the study period and had 1 year of continuous insurance eligibility before and after pregnancy. The odds for having an MS relapse declined during pregnancy (odds ratio, 0.623; 95% confidence interval, 0.521-0.744), rose during the 6-week postpartum puerperium (OR, 1.710; 95% CI, 1.358-2.152), and leveled off during the last three postpartum quarters to remain at a higher level than before pregnancy (OR, 1.216; 95% CI, 1.052-1.406). Disease-modifying drug treatment followed the same pattern with 20% using it before pregnancy, dropping to about 2% in the second trimester, and peaking in about a quarter of all patients 9-12 months post partum.

SOURCES: Houtchens MK et al. Neurology. 2018 Sep 28. doi: 10.1212/WNL.0000000000006382; Houtchens MK et al. Neurology. 2018 Sep 28. doi: 10.1212/WNL.0000000000006384.

FROM NEUROLOGY

Consider ART for younger endometriosis patients

Assisted reproductive technology (ART) can be effective for younger women with endometriosis, but non-ART infertility treatment is less likely to succeed in the endometriosis population, according to data from approximately 1,800 women with infertility.

Moderate to severe endometriosis had a negative impact on the outcome of ART, but “the efficacy of non-ART treatment in patients with endometriosis remains elusive,” wrote Wataru Isono of the University of Tokyo and colleagues.

In a study published in the Journal of Obstetrics & Gynaecology Research, the investigators sought to determine the impact of endometriosis severity on the effectiveness of non-ART treatment. They calculated the cumulative live birth rates for women treated with ART and non-ART.

Overall, 49% of the 894 ART patients and 22% of the 1,358 non-ART patients gave birth after treatment. The birth rate remained more than twice as high among ART patients across all age groups, but declined among ART patients starting at 35 years of age and declined sharply as patients reached 40 years of age.

“The most important aspect of this study was that we determined the limitations of non-ART and sought to identify optimal management methods, as non-ART can be a more cost-effective treatment than ART in certain circumstances,” the researchers noted.

They then focused on 288 patients with advanced endometriosis, defined as stage III or IV according to the revised American Society for Reproductive Medicine score. Notably, the presence of moderate to severe endometriosis had a significant effect on the outcomes of non-ART patients in their 30s, but ART was effective in this age group in a multivariate analysis. The cumulative live birth rate in advanced endometriosis patients was significantly lower than in those without the condition who underwent non-ART treatment (10% vs. 25%). However, cumulative live birth rates after ART were not significantly different among advanced endometriosis patients, compared with patients without advanced endometriosis (45% vs. 51%).

The study was limited by several factors including the inability to analyze the varied types of infertility treatment, Dr. Isono and associates noted. However, “our results may provide infertility patients with accurate information regarding their expected probabilities of achieving a live birth and may help them select the optimal treatment based on their classification according to various risk factors,” they said.

The researchers had no financial conflicts to disclose. The study was supported by the Japan Society for the Promotion of Sciences; Grants-in-Aid for Scientific Research from the Ministry of Education, Science, and Culture; the Japan Agency for Medical Research and Development; and the Ministry of Health, Labor and Welfare.

SOURCE: Isono W et al. J Obstet Gynaecol Res. 2018. doi: 10.1111/jog.13826.

FROM THE JOURNAL OF OBSTETRICS AND GYNAECOLOGY RESEARCH

Key clinical point: ART was more effective than was non-ART against infertility in women with endometriosis, especially among younger women.

Major finding: The cumulative live birth rate in advanced endometriosis patients was significantly lower than in those without the condition who underwent non-ART treatment (10% vs. 25%).

Study details: A retrospective study of 1,864 infertile women in Japan, including 288 with advanced endometriosis.

Disclosures: The researchers had no financial conflicts to disclose. The study was supported by the Japan Society for the Promotion of Sciences; Grants-in-Aid for Scientific Research from the Ministry of Education, Science, and Culture; the Japan Agency for Medical Research and Development; and the Ministry of Health, Labor and Welfare.

Source: Isono W et al. J Obstet Gynaecol Res. 2018. doi: 10.1111/jog.13826.

Allergen of the year may be nearer than you think

MONTEREY, CALIF. – It’s only found in 2%-3% of allergy cases. So why is propylene glycol (PG) the allergen of the year? Because, a dermatologist told colleagues, it’s so common.

“If you’re allergic to it, it’s tough to stay away from it,” said Joseph F. Fowler Jr., MD, clinical professor of dermatology at the University of Louisville (Ky.) in a presentation about contact dermatitis at the annual Coastal Dermatology Symposium.

Indeed, the synthetic compound PG is found in skin care products and cosmetics, coated pills, topical medications such as corticosteroids, and foods (including bread, food coloring, and such flavorings as vanilla extracts). “It’s in every topical acne product I know of,” he said, and it is even in brake fluid and so-called nontoxic antifreeze. (Propylene glycol shouldn’t be confused with the toxic compound ethylene glycol, which also is found in antifreeze.)

Patients can be tested for allergy to PG, Dr. Fowler pointed out, but it’s important to understand that it can trigger an irritation reaction that can be mistaken for an allergic reaction.