Lobar vs. sublobar resection in stage 1 lung cancer

Thoracic Oncology & Chest Imaging Network

Pleural Disease Section

Lobectomy with intrathoracic nodal dissection remains the standard of care for early-stage (tumor size ≤ 3.0 cm) peripheral non–small cell lung cancer (NSCLC). This practice rests primarily on data from the mid-1990s associating limited resection (segmentectomy or wedge resection) with higher recurrence and mortality rates than lobectomy (Ginsberg et al. Ann Thorac Surg. 1995;60:615). Recent advances in video- and robot-assisted thoracic surgery, together with the implementation of lung cancer screening, improved minimally invasive diagnostic modalities, and new neoadjuvant therapies, have prompted the medical community to revisit the role of sublobar lung resection.

Two recently published large, multicenter randomized controlled trials (Saji et al. Lancet. 2022;399:1670 and Altorki et al. N Engl J Med. 2023;388:489) have challenged this well-established practice. Both compared overall and disease-free survival after sublobar vs lobar resection of early-stage NSCLC (tumor size ≤ 2.0 cm with negative intraoperative nodal assessment) and demonstrated that sublobar resection was noninferior to lobectomy with respect to overall survival and disease-free survival. In the Saji et al. trial, sublobar resection consisted strictly of segmentectomy, whereas the majority of sublobar resections in the Altorki et al. trial were wedge resections. Notably, both trials used a lower tumor-size cutoff (≤ 2.0 cm) than the Ginsberg trial (≤ 3.0 cm), which may partly account for the difference in outcomes.

Christopher Yurosko, DO – Section Fellow-in-Training

Melissa Rosas, MD – Section Member-at-Large

Labib Debiane, MD – Section Member-at-Large

Applications of ChatGPT and Large Language Models in Medicine and Health Care: Benefits and Pitfalls

The development of [artificial intelligence] is as fundamental as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone. It will change the way people work, learn, travel, get health care, and communicate with each other.

Bill Gates 1

As the world emerges from the pandemic and the health care system faces new challenges, technology has become an increasingly important tool for health care professionals (HCPs). One such technology is the large language model (LLM), which has the potential to revolutionize the health care industry. ChatGPT, a popular LLM developed by OpenAI, has drawn particular attention in the medical community for performing at or near the passing threshold on the United States Medical Licensing Examination (USMLE).2 This article explores the benefits and potential pitfalls of using LLMs such as ChatGPT in medicine and health care.

Benefits

HCP burnout is a serious issue that can lead to lower productivity, more medical errors, and decreased patient satisfaction.3 LLMs can relieve HCPs of some administrative burden: by assisting with billing, coding, insurance claims, and scheduling, LLMs such as ChatGPT can free up time for HCPs to focus on what they do best, providing quality patient care.4 ChatGPT can also assist with diagnosis by drawing on the large body of medical text in its training data. Because it has learned associations among medical conditions, symptoms, and treatment options, ChatGPT can generate a reasonable differential diagnosis (Figure 1), which the clinician must then verify.

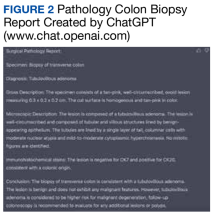

Specialists who rely on medical imaging, such as radiologists, pathologists, and dermatologists, can benefit from combining computer vision diagnostics with ChatGPT’s report-generation abilities to streamline the diagnostic workflow and improve diagnostic accuracy (Figure 2).

Although ChatGPT and other LLMs have potential benefits in mental health care, they are not a substitute for human interaction and personalized care. ChatGPT can retain information from earlier in a conversation, but it cannot provide the personalized, high-quality care that a professional therapist or HCP can. By augmenting the work of HCPs, however, ChatGPT and other LLMs could make mental health care more accessible and efficient. Beyond offering screening in underserved areas, ChatGPT technology may improve the competence of physician assistants and nurse practitioners in delivering mental health care. Given the increasing incidence of mental health problems among veterans, the relevance of ChatGPT-like tools is likely to grow over time.9

ChatGPT can also be integrated into health care organizations’ websites and mobile apps, giving patients instant access to medical information, self-care advice, and symptom checkers, and helping them schedule appointments and arrange transportation. These features can reduce the burden on health care staff and help patients stay informed and motivated to take an active role in their health. Health care organizations can also use ChatGPT to engage patients by sending reminders for medication renewals and providing assistance with self-care.4,6,10,11

The potential of artificial intelligence (AI) in medical education and research is immense. In a study by Gilson and colleagues, ChatGPT showed promise as a medical education tool.12 ChatGPT can simulate clinical scenarios, provide real-time feedback, and help learners sharpen their diagnostic skills, offering new interactive and personalized learning opportunities for medical students and HCPs.13 It can also help researchers by streamlining data analysis, administering surveys or questionnaires, facilitating data collection on preferences and experiences, and assisting in drafting scientific publications.14 Nevertheless, to fully unlock the potential of these AI models, additional tools that check for factual accuracy, plagiarism, and copyright infringement must be developed.15,16

AI Bill of Rights

To protect the American public from the harmful effects of AI models, the White House Office of Science and Technology Policy (OSTP) has released a Blueprint for an AI Bill of Rights built on 5 principles: safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation; and human alternatives, consideration, and fallback (Figure 3).17

One of the biggest challenges with LLMs like ChatGPT is the prevalence of inaccurate information, or so-called hallucinations.16 These inaccuracies arise because LLMs generate plausible-sounding text without a reliable way to distinguish accurate from inaccurate information. To reduce hallucinations, researchers have proposed several methods, including training models on more diverse data, adversarial training, and human-in-the-loop approaches.21 In addition, medicine-specific models such as GatorTron, Med-PaLM, and Almanac have been developed to improve factual accuracy.22-24 Unfortunately, only GatorTron is publicly available, through the NVIDIA developer program.25

Despite these shortcomings, the future of LLMs in health care is promising. Although these models will not replace HCPs, they can reduce unnecessary burdens on them, help prevent burnout, and enable HCPs and patients to spend more time together. Establishing an official hospital AI oversight body to promote best practices could help ensure that these new technologies are implemented in a trustworthy manner.26

Conclusions

The use of ChatGPT and other LLMs has the potential to revolutionize the health care industry. By assisting HCPs with administrative tasks, improving the accuracy and reliability of diagnoses, and engaging patients, ChatGPT can help health care organizations provide better care to their patients. Although LLMs are not a substitute for human interaction and personalized care, they can augment the work of HCPs, making health care more accessible and efficient. AI technologies like ChatGPT also offer enormous potential in medical education and research. As the health care industry continues to evolve, it will be exciting to see how ChatGPT and other LLMs are used to improve patient outcomes and quality of care. To ensure that the benefits outweigh the risks, it is essential to develop trustworthy AI health care products and to establish oversight bodies that govern their implementation. By doing so, we can help HCPs focus on what matters most: providing high-quality care to patients.

Acknowledgments

This material is the result of work supported by resources and the use of facilities at the James A. Haley Veterans’ Hospital.

1. Gates B. The age of AI has begun. GatesNotes. March 21, 2023. Accessed May 10, 2023. https://www.gatesnotes.com/the-age-of-ai-has-begun

2. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2(2):e0000198. Published 2023 Feb 9. doi:10.1371/journal.pdig.0000198

3. Shanafelt TD, West CP, Sinsky C, et al. Changes in burnout and satisfaction with work-life integration in physicians and the general US working population between 2011 and 2020. Mayo Clin Proc. 2022;97(3):491-506. doi:10.1016/j.mayocp.2021.11.021

4. Goodman RS, Patrinely JR Jr, Osterman T, Wheless L, Johnson DB. On the cusp: considering the impact of artificial intelligence language models in healthcare. Med. 2023;4(3):139-140. doi:10.1016/j.medj.2023.02.008

5. Will ChatGPT transform healthcare? Nat Med. 2023;29(3):505-506. doi:10.1038/s41591-023-02289-5

6. Hopkins AM, Logan JM, Kichenadasse G, Sorich MJ. Artificial intelligence chatbots will revolutionize how cancer patients access information: ChatGPT represents a paradigm-shift. JNCI Cancer Spectr. 2023;7(2):pkad010. doi:10.1093/jncics/pkad010

7. Babar Z, van Laarhoven T, Zanzotto FM, Marchiori E. Evaluating diagnostic content of AI-generated radiology reports of chest X-rays. Artif Intell Med. 2021;116:102075. doi:10.1016/j.artmed.2021.102075

8. Lecler A, Duron L, Soyer P. Revolutionizing radiology with GPT-based models: current applications, future possibilities and limitations of ChatGPT. Diagn Interv Imaging. 2023;S2211-5684(23)00027-X. doi:10.1016/j.diii.2023.02.003

9. Germain JM. Is ChatGPT smart enough to practice mental health therapy? March 23, 2023. Accessed May 11, 2023. https://www.technewsworld.com/story/is-chatgpt-smart-enough-to-practice-mental-health-therapy-178064.html

10. Cascella M, Montomoli J, Bellini V, Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst. 2023;47(1):33. Published 2023 Mar 4. doi:10.1007/s10916-023-01925-4

11. Jungwirth D, Haluza D. Artificial intelligence and public health: an exploratory study. Int J Environ Res Public Health. 2023;20(5):4541. Published 2023 Mar 3. doi:10.3390/ijerph20054541

12. Gilson A, Safranek CW, Huang T, et al. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2023;9:e45312. Published 2023 Feb 8. doi:10.2196/45312

13. Eysenbach G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: a conversation with ChatGPT and a call for papers. JMIR Med Educ. 2023;9:e46885. Published 2023 Mar 6. doi:10.2196/46885

14. Macdonald C, Adeloye D, Sheikh A, Rudan I. Can ChatGPT draft a research article? An example of population-level vaccine effectiveness analysis. J Glob Health. 2023;13:01003. Published 2023 Feb 17. doi:10.7189/jogh.13.01003

15. Masters K. Ethical use of artificial intelligence in health professions education: AMEE Guide No. 158. Med Teach. 2023;1-11. doi:10.1080/0142159X.2023.2186203

16. Smith CS. Hallucinations could blunt ChatGPT’s success. IEEE Spectrum. March 13, 2023. Accessed May 11, 2023. https://spectrum.ieee.org/ai-hallucination

17. Executive Office of the President, Office of Science and Technology Policy. Blueprint for an AI Bill of Rights. Accessed May 11, 2023. https://www.whitehouse.gov/ostp/ai-bill-of-rights

18. Executive Office of the President. Executive Order 13960: promoting the use of trustworthy artificial intelligence in the federal government. Fed Regist. 2020;85(236):78939-78943.

19. US Department of Commerce, National Institute of Standards and Technology. Artificial Intelligence Risk Management Framework (AI RMF 1.0). Published January 2023. doi:10.6028/NIST.AI.100-1

20. Microsoft. Azure Cognitive Search—Cloud Search Service. Accessed May 11, 2023. https://azure.microsoft.com/en-us/products/search

21. Aiyappa R, An J, Kwak H, Ahn YY. Can we trust the evaluation on ChatGPT? March 22, 2023. Accessed May 11, 2023. https://arxiv.org/abs/2303.12767v1

22. Yang X, Chen A, Pournejatian N, et al. GatorTron: a large clinical language model to unlock patient information from unstructured electronic health records. Updated December 16, 2022. Accessed May 11, 2023. https://arxiv.org/abs/2203.03540v3

23. Singhal K, Azizi S, Tu T, et al. Large language models encode clinical knowledge. December 26, 2022. Accessed May 11, 2023. https://arxiv.org/abs/2212.13138v1

24. Zakka C, Chaurasia A, Shad R, Hiesinger W. Almanac: knowledge-grounded language models for clinical medicine. March 1, 2023. Accessed May 11, 2023. https://arxiv.org/abs/2303.01229v1

25. NVIDIA. GatorTron-OG. Accessed May 11, 2023. https://catalog.ngc.nvidia.com/orgs/nvidia/teams/clara/models/gatortron_og

26. Borkowski AA, Jakey CE, Thomas LB, Viswanadhan N, Mastorides SM. Establishing a hospital artificial intelligence committee to improve patient care. Fed Pract. 2022;39(8):334-336. doi:10.12788/fp.0299


16. Smith CS. Hallucinations could blunt ChatGPT’s success. IEEE Spectrum. March 13, 2023. Accessed May 11, 2023. https://spectrum.ieee.org/ai-hallucination

17. Executive Office of the President, Office of Science and Technology Policy. Blueprint for an AI Bill of Rights. Accessed May 11, 2023. https://www.whitehouse.gov/ostp/ai-bill-of-rights

18. Executive Office of the President. Executive Order 13960: promoting the use of trustworthy artificial intelligence in the federal government. Fed Regist. 2020;85(236):78939-78943.

19. US Department of Commerce, National Institute of Standards and Technology. Artificial Intelligence Risk Management Framework (AI RMF 1.0). Published January 2023. doi:10.6028/NIST.AI.100-1

20. Microsoft. Azure Cognitive Search—Cloud Search Service. Accessed May 11, 2023. https://azure.microsoft.com/en-us/products/search

21. Aiyappa R, An J, Kwak H, Ahn YY. Can we trust the evaluation on ChatGPT? March 22, 2023. Accessed May 11, 2023. https://arxiv.org/abs/2303.12767v1

22. Yang X, Chen A, Pournejatian N, et al. GatorTron: a large clinical language model to unlock patient information from unstructured electronic health records. Updated December 16, 2022. Accessed May 11, 2023. https://arxiv.org/abs/2203.03540v3

23. Singhal K, Azizi S, Tu T, et al. Large language models encode clinical knowledge. December 26, 2022. Accessed May 11, 2023. https://arxiv.org/abs/2212.13138v1

24. Zakka C, Chaurasia A, Shad R, Hiesinger W. Almanac: knowledge-grounded language models for clinical medicine. March 1, 2023. Accessed May 11, 2023. https://arxiv.org/abs/2303.01229v1

25. NVIDIA. GatorTron-OG. Accessed May 11, 2023. https://catalog.ngc.nvidia.com/orgs/nvidia/teams/clara/models/gatortron_og

26. Borkowski AA, Jakey CE, Thomas LB, Viswanadhan N, Mastorides SM. Establishing a hospital artificial intelligence committee to improve patient care. Fed Pract. 2022;39(8):334-336. doi:10.12788/fp.0299

WOW! You spend that much time on the EHR?

Unlike many of you, maybe even most of you, I can recall when my office records were handwritten, some would say scribbled, on pieces of paper. They were decipherable by a select few. Some veteran assistants never mastered the skill. Pages were sometimes lavishly illustrated with drawings of body parts, often because I couldn’t remember or spell the correct anatomic term. When I needed to send a referral letter to another provider, I typed it myself because dictating never quite suited my personality.

When I joined a small primary care group, the computer-savvy lead physician and a programmer developed our own homegrown EHR. It relied on scanning documents, as so many of us still generated handwritten notes. Even the most vociferous Luddites among us loved the system from day 2.

However, for a variety of reasons, some defensible, some just plain bad, our beloved system needed to be replaced after 7 years. We then invested in an off-the-shelf EHR system that promised more capabilities. We were told there would be a learning curve, but the plateau would come quickly and we would enjoy our new electronic assistant.

You’ve lived the rest of the story. The learning curve was steep and long, and the plateau was a time gobbler. I was probably the most efficient provider in the group, and after 6 months I was leaving the office an hour later than I had been while seeing the same number of patients. Most of my coworkers were staying late and/or working on the computer at home for an extra 2 hours. This change could easily be documented by speaking with our spouses and children. I understand from colleagues who have stayed in the business that in the decade and a half since my first experience with the EHR, its insatiable appetite for a clinician’s time has not abated.

The authors of a recent article in Annals of Family Medicine offer some advice on how this tragic situation might be brought under control. First, the investigators point out that the phenomenon of after-hours EHR work, sometimes referred to as WOW (work outside of work), has not gone unnoticed by health system administrators and by the vendors who develop and sell EHRs. However, analyzing the voluminous data involved is not an easy task, and for the most part it has produced metrics that cannot be easily applied across a variety of practice scenarios. Many health care organizations, even large ones, have simply given up and rely on the WOW data and recommendations provided by the vendors, which lends the situation a faint odor of conflict of interest.

The bottom line is that no one has a reliable measure of how much after-hours EHR work clinicians are doing. It would seem to me that just asking the clinicians’ spouses and significant others would be sufficient. But the authors of the paper have more specific recommendations. First, they suggest that time spent working on the computer outside of scheduled time with patients should be separated from any other calculation of EHR usage. They encourage vendors and time-management researchers to develop standardized and validated methods for measuring active EHR use. And finally, they recommend that all EHR work done outside of time scheduled with patients be attributed to WOW. They feel that clearly labeling it as work outside of work gives health care organizations a better chance of developing policies that address the scourge of burnout.
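The recommendation to count every minute of EHR work done outside scheduled patient time as WOW reduces, in principle, to a simple interval calculation. The Python sketch below illustrates that arithmetic under stated assumptions: the input format (lists of start/end times for active EHR use and for scheduled patient sessions) is hypothetical, the sample day is made up, and the code does not reproduce the paper's or any vendor's actual method for deciding what counts as "active" use.

```python
from datetime import datetime, timedelta

# Hypothetical input format: each interval is a (start, end) pair of datetimes.
# How "active" EHR use is detected (e.g., from vendor audit logs) is NOT modeled here.


def overlap(a_start, a_end, b_start, b_end):
    """Length of the overlap between two time intervals (zero if they do not overlap)."""
    return max(min(a_end, b_end) - max(a_start, b_start), timedelta(0))


def work_outside_work(ehr_sessions, scheduled_blocks):
    """Sum the active EHR time that falls outside scheduled patient time (WOW).

    Assumes EHR sessions do not overlap one another and scheduled blocks
    do not overlap one another, so no minute is counted twice.
    """
    total = timedelta(0)
    for s_start, s_end in ehr_sessions:
        # Time in this session that falls inside any scheduled patient block.
        inside = sum(
            (overlap(s_start, s_end, b_start, b_end) for b_start, b_end in scheduled_blocks),
            timedelta(0),
        )
        # Everything else in the session is attributed to WOW.
        total += (s_end - s_start) - inside
    return total


# Illustrative (made-up) clinic day: patients scheduled 8:00-12:00 and 13:00-17:00.
day = datetime(2023, 5, 1)
scheduled = [
    (day.replace(hour=8), day.replace(hour=12)),
    (day.replace(hour=13), day.replace(hour=17)),
]
ehr = [
    (day.replace(hour=7, minute=30), day.replace(hour=8, minute=30)),    # 30 of 60 min before clinic
    (day.replace(hour=12, minute=15), day.replace(hour=12, minute=45)),  # 30 min over lunch
    (day.replace(hour=16, minute=30), day.replace(hour=18, minute=30)),  # 90 of 120 min after 17:00
    (day.replace(hour=21), day.replace(hour=22)),                        # 60 min at home
]
print(work_outside_work(ehr, scheduled))  # 3:30:00
```

Sessions that straddle the start or end of clinic are split, so only the out-of-schedule portion is attributed to WOW. The hard part the authors point to, a standardized and validated definition of "active" EHR use, sits entirely upstream of this arithmetic.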

This, unfortunately, is another tragic example of how we clinicians have lost control of our work environments. The fact that 20 years have passed and there is still no standardized method for determining how much time we spend on the computer is more evidence that we need to raise our voices.

Dr. Wilkoff practiced primary care pediatrics in Brunswick, Maine, for nearly 40 years. He has authored several books on behavioral pediatrics, including “How to Say No to Your Toddler.” Other than a Littmann stethoscope he accepted as a first-year medical student in 1966, Dr. Wilkoff reports having nothing to disclose. Email him at [email protected].

Could semaglutide treat addiction as well as obesity?

As demand for semaglutide for weight loss grew following approval of Wegovy by the U.S. Food and Drug Administration in 2021, anecdotal reports of unexpected potential added benefits also began to surface.

Some patients taking these drugs for type 2 diabetes or weight loss also lost interest in addictive and compulsive behaviors such as drinking alcohol, smoking, shopping, nail biting, and skin picking, as reported in articles in the New York Times and The Atlantic, among others.

There is also some preliminary research to support these observations.

This news organization invited three experts to weigh in.

Recent and upcoming studies

The senior author of a recent randomized controlled trial of 127 patients with alcohol use disorder (AUD), Anders Fink-Jensen, MD, said: “I hope that GLP-1 analogs in the future can be used against AUD, but before that can happen, several GLP-1 trials [are needed to] prove an effect on alcohol intake.”

In his study, patients received exenatide (Byetta, Bydureon, AstraZeneca), a first-generation GLP-1 agonist approved for type 2 diabetes, or placebo for 26 weeks. Compared with placebo, exenatide did not reduce the number of heavy drinking days (the primary outcome).

However, in post hoc, exploratory analyses, heavy drinking days and total alcohol intake were significantly reduced in the subgroup of patients with AUD and obesity (body mass index > 30 kg/m²).

The participants were also shown pictures of alcohol or neutral subjects while they underwent functional magnetic resonance imaging. Those who had received exenatide, compared with placebo, had significantly less activation of brain reward centers when shown the pictures of alcohol.

“Something is happening in the brain and activation of the reward center is hampered by the GLP-1 compound,” Dr. Fink-Jensen, a clinical psychologist at the Psychiatric Centre Copenhagen, remarked in an email.

“If patients with AUD already fulfill the criteria for semaglutide (or other GLP-1 analogs) by having type 2 diabetes and/or a BMI over 30 kg/m², they can of course use the compound right now,” he noted.

His team is also beginning a study in patients with AUD and a BMI ≥ 30 kg/m² to investigate the effects on alcohol intake of semaglutide up to 2.4 mg weekly, the maximum dose currently approved for obesity in the United States.

“Based on the potency of exenatide and semaglutide,” Dr. Fink-Jensen said, “we expect that semaglutide will cause a stronger reduction in alcohol intake” than exenatide.

Animal studies have also shown that GLP-1 agonists suppress alcohol-induced reward, alcohol intake, motivation to consume alcohol, alcohol seeking, and relapse drinking of alcohol, Elisabet Jerlhag Holm, PhD, noted.

Interestingly, these agents also suppress the reward, intake, and motivation to consume other addictive drugs such as cocaine, amphetamine, nicotine, and some opioids, Prof. Jerlhag Holm, of the department of pharmacology at the University of Gothenburg, Sweden, noted in an email.

In a recently published preclinical study, her group provided evidence that may help explain anecdotal reports from patients with obesity treated with semaglutide who say they also reduced their alcohol intake. In the study, semaglutide reduced alcohol intake (including relapse-like drinking) and decreased body weight in both male and female rats.

“Future research should explore the possibility of semaglutide decreasing alcohol intake in patients with AUD, particularly those who are overweight,” said Prof. Jerlhag Holm.

“AUD is a heterogeneous disorder, and one medication is most likely not helpful for all AUD patients,” she added. “Therefore, an arsenal of different medications is beneficial when treating AUD.”

Janice J. Hwang, MD, MHS, echoed these thoughts: “Anecdotally, there are a lot of reports from patients (and in the news) that this class of medication [GLP-1 agonists] impacts cravings and could impact addictive behaviors.”

As to whether anecdotal reports of GLP-1 agonists curbing addictions will be borne out in randomized controlled trials, “I would say, overall, the jury is still out,” she said.

“I think it is much too early to tell” whether these drugs might be approved for treating addictions without more solid clinical trial data, noted Dr. Hwang, who is an associate professor of medicine and chief, division of endocrinology and metabolism, at the University of North Carolina at Chapel Hill.

Meanwhile, another research group at the University of North Carolina at Chapel Hill, led by psychiatrist Christian Hendershot, PhD, is conducting a clinical trial in 48 participants with AUD who are also smokers.

They aim to determine whether patients who receive semaglutide at escalating doses (0.25 mg to 1.0 mg per week via subcutaneous injection) over 9 weeks will consume less alcohol (the primary outcome) and smoke less (a secondary outcome) than those who receive a placebo injection. Results are expected in October 2023.

Dr. Fink-Jensen has received an unrestricted research grant from Novo Nordisk to investigate the effects of GLP-1 receptor stimulation on weight gain and metabolic disturbances in patients with schizophrenia treated with an antipsychotic.

A version of this article first appeared on Medscape.com.

As demand for semaglutide for weight loss grew following approval of Wegovy by the U.S. Food and Drug Administration in 2021, anecdotal reports of unexpected potential added benefits also began to surface.

Some patients taking these drugs for type 2 diabetes or weight loss also lost interest in addictive and compulsive behaviors such as drinking alcohol, smoking, shopping, nail biting, and skin picking, as reported in articles in the New York Times and The Atlantic, among others.

There is also some preliminary research to support these observations.

This news organization invited three experts to weigh in.

Recent and upcoming studies

The senior author of a recent randomized controlled trial of 127 patients with alcohol use disorder (AUD), Anders Fink-Jensen, MD, said: “I hope that GLP-1 analogs in the future can be used against AUD, but before that can happen, several GLP-1 trials [are needed to] prove an effect on alcohol intake.”

His study involved patients who received exenatide (Byetta, Bydureon, AstraZeneca), the first-generation GLP-1 agonist approved for type 2 diabetes, over 26 weeks, but treatment did not reduce the number of heavy drinking days (the primary outcome), compared with placebo.

However, in post hoc, exploratory analyses, heavy drinking days and total alcohol intake were significantly reduced in the subgroup of patients with AUD and obesity (body mass index > 30 kg/m2).

The participants were also shown pictures of alcohol or neutral subjects while they underwent functional magnetic resonance imaging. Those who had received exenatide, compared with placebo, had significantly less activation of brain reward centers when shown the pictures of alcohol.

“Something is happening in the brain and activation of the reward center is hampered by the GLP-1 compound,” Dr. Fink-Jensen, a clinical psychologist at the Psychiatric Centre Copenhagen, remarked in an email.

“If patients with AUD already fulfill the criteria for semaglutide (or other GLP-1 analogs) by having type 2 diabetes and/or a BMI over 30 kg/m2, they can of course use the compound right now,” he noted.

His team is also beginning a study in patients with AUD and a BMI ≥ 30 kg/m2 to investigate the effects on alcohol intake of semaglutide up to 2.4 mg weekly, the maximum dose currently approved for obesity in the United States.

“Based on the potency of exenatide and semaglutide,” Dr. Fink-Jensen said, “we expect that semaglutide will cause a stronger reduction in alcohol intake” than exenatide.

Animal studies have also shown that GLP-1 agonists suppress alcohol-induced reward, alcohol intake, motivation to consume alcohol, alcohol seeking, and relapse drinking of alcohol, Elisabet Jerlhag Holm, PhD, noted.

Interestingly, these agents also suppress the reward, intake, and motivation to consume other addictive drugs like cocaine, amphetamine, nicotine, and some opioids, Jerlhag Holm, professor, department of pharmacology, University of Gothenburg, Sweden, noted in an email.

In a recently published preclinical study, her group provides evidence to help explain anecdotal reports from patients with obesity treated with semaglutide who claim they also reduced their alcohol intake. In the study, semaglutide both reduced alcohol intake (and relapse-like drinking) and decreased body weight of rats of both sexes.

“Future research should explore the possibility of semaglutide decreasing alcohol intake in patients with AUD, particularly those who are overweight,” said Prof. Holm.

“AUD is a heterogenous disorder, and one medication is most likely not helpful for all AUD patients,” she added. “Therefore, an arsenal of different medications is beneficial when treating AUD.”

Janice J. Hwang, MD, MHS, echoed these thoughts: “Anecdotally, there are a lot of reports from patients (and in the news) that this class of medication [GLP-1 agonists] impacts cravings and could impact addictive behaviors.”

“I would say, overall, the jury is still out,” as to whether anecdotal reports of GLP-1 agonists curbing addictions will be borne out in randomized controlled trials.

“I think it is much too early to tell” whether these drugs might be approved for treating addictions without more solid clinical trial data, noted Dr. Hwang, who is an associate professor of medicine and chief, division of endocrinology and metabolism, at the University of North Carolina at Chapel Hill.

Meanwhile, another research group at the University of North Carolina at Chapel Hill, led by psychiatrist Christian Hendershot, PhD, is conducting a clinical trial in 48 participants with AUD who are also smokers.

They aim to determine if patients who receive semaglutide at escalating doses (0.25 mg to 1.0 mg per week via subcutaneous injection) over 9 weeks will consume less alcohol (the primary outcome) and smoke less (a secondary outcome) than those who receive a sham placebo injection. Results are expected in October 2023.

Dr. Fink-Jensen has received an unrestricted research grant from Novo Nordisk to investigate the effects of GLP-1 receptor stimulation on weight gain and metabolic disturbances in patients with schizophrenia treated with an antipsychotic.

A version of this article first appeared on Medscape.com.

As demand for semaglutide for weight loss grew following approval of Wegovy by the U.S. Food and Drug Administration in 2021, anecdotal reports of unexpected potential added benefits also began to surface.

Some patients taking these drugs for type 2 diabetes or weight loss also lost interest in addictive and compulsive behaviors such as drinking alcohol, smoking, shopping, nail biting, and skin picking, as reported in articles in the New York Times and The Atlantic, among others.

There is also some preliminary research to support these observations.

This news organization invited three experts to weigh in.

Recent and upcoming studies

The senior author of a recent randomized controlled trial of 127 patients with alcohol use disorder (AUD), Anders Fink-Jensen, MD, said: “I hope that GLP-1 analogs in the future can be used against AUD, but before that can happen, several GLP-1 trials [are needed to] prove an effect on alcohol intake.”

In his study, patients received exenatide (Byetta, Bydureon, AstraZeneca), the first-generation GLP-1 agonist approved for type 2 diabetes, or placebo for 26 weeks. Compared with placebo, exenatide did not reduce the number of heavy drinking days (the primary outcome).

However, in post hoc, exploratory analyses, heavy drinking days and total alcohol intake were significantly reduced in the subgroup of patients with AUD and obesity (body mass index > 30 kg/m²).

The participants were also shown pictures of alcohol or neutral subjects while they underwent functional magnetic resonance imaging. Those who had received exenatide, compared with placebo, had significantly less activation of brain reward centers when shown the pictures of alcohol.

“Something is happening in the brain and activation of the reward center is hampered by the GLP-1 compound,” Dr. Fink-Jensen, a psychiatrist at the Psychiatric Centre Copenhagen, remarked in an email.

“If patients with AUD already fulfill the criteria for semaglutide (or other GLP-1 analogs) by having type 2 diabetes and/or a BMI over 30 kg/m², they can of course use the compound right now,” he noted.

His team is also beginning a study in patients with AUD and a BMI ≥ 30 kg/m² to investigate the effects on alcohol intake of semaglutide up to 2.4 mg weekly, the maximum dose currently approved for obesity in the United States.

“Based on the potency of exenatide and semaglutide,” Dr. Fink-Jensen said, “we expect that semaglutide will cause a stronger reduction in alcohol intake” than exenatide.

Animal studies have also shown that GLP-1 agonists suppress alcohol-induced reward, alcohol intake, motivation to consume alcohol, alcohol seeking, and relapse drinking, said Elisabet Jerlhag Holm, PhD.

Interestingly, these agents also suppress the reward from, intake of, and motivation to consume other addictive drugs such as cocaine, amphetamine, nicotine, and some opioids, Prof. Jerlhag Holm, of the department of pharmacology at the University of Gothenburg, Sweden, noted in an email.

In a recently published preclinical study, her group provides evidence that may help explain anecdotal reports from patients with obesity treated with semaglutide who say they have also reduced their alcohol intake. In the study, semaglutide reduced both alcohol intake and relapse-like drinking and decreased body weight in male and female rats.

“Future research should explore the possibility of semaglutide decreasing alcohol intake in patients with AUD, particularly those who are overweight,” said Prof. Jerlhag Holm.

“AUD is a heterogeneous disorder, and one medication is most likely not helpful for all AUD patients,” she added. “Therefore, an arsenal of different medications is beneficial when treating AUD.”

Janice J. Hwang, MD, MHS, echoed these thoughts: “Anecdotally, there are a lot of reports from patients (and in the news) that this class of medication [GLP-1 agonists] impacts cravings and could impact addictive behaviors.”

“I would say, overall, the jury is still out,” she said, on whether anecdotal reports of GLP-1 agonists curbing addictions will be borne out in randomized controlled trials.

“I think it is much too early to tell” whether these drugs might be approved for treating addictions without more solid clinical trial data, noted Dr. Hwang, who is an associate professor of medicine and chief of the division of endocrinology and metabolism at the University of North Carolina at Chapel Hill.

Meanwhile, another research group at the University of North Carolina at Chapel Hill, led by psychologist Christian Hendershot, PhD, is conducting a clinical trial in 48 participants with AUD who are also smokers.

They aim to determine whether patients who receive semaglutide at escalating doses (0.25 mg to 1.0 mg per week via subcutaneous injection) over 9 weeks will consume less alcohol (the primary outcome) and smoke less (a secondary outcome) than those who receive placebo injections. Results are expected in October 2023.

Dr. Fink-Jensen has received an unrestricted research grant from Novo Nordisk to investigate the effects of GLP-1 receptor stimulation on weight gain and metabolic disturbances in patients with schizophrenia treated with an antipsychotic.

A version of this article first appeared on Medscape.com.

Daily multivitamins boost memory in older adults: A randomized trial

This transcript has been edited for clarity.

This is Dr. JoAnn Manson, professor of medicine at Harvard Medical School and Brigham and Women’s Hospital. I’d like to discuss a new randomized trial of a daily multivitamin and memory in older adults from the Cocoa Supplement and Multivitamin Outcomes Study, known as COSMOS. This is the second COSMOS trial to show a benefit of multivitamins on memory and cognition. This trial was a collaboration between Brigham and Columbia University and was published in the American Journal of Clinical Nutrition. I’d like to acknowledge that I am a coauthor of this study, together with Dr. Howard Sesso, who co-leads the main COSMOS trial with me.

Preserving memory and cognitive function is of critical importance to older adults. Nutritional interventions play an important role because we know the brain requires several nutrients for optimal health, and deficiencies in one or more of these nutrients may accelerate cognitive decline. Some of the micronutrients that are known to be important for brain health include vitamin B12, thiamin, other B vitamins, lutein, magnesium, and zinc, among others.

The current trial included about 3,500 participants aged 60 or older and assessed their performance on a web-based memory test. The multivitamin group did significantly better than the placebo group on memory tests and word recall, a finding estimated to be equivalent to slowing age-related memory loss by about 3 years. The benefit was first seen at 1 year and was sustained across the 3 years of the trial.

Intriguingly, in both COSMOS-Web and the earlier COSMOS-Mind study, which was done in collaboration with Wake Forest, participants with a history of cardiovascular disease showed the greatest benefit from multivitamins, perhaps because of lower nutrient status. However, the basis for this finding needs to be explored further.

A few important caveats need to be emphasized. First, multivitamins and other dietary supplements will never be a substitute for a healthy diet and healthy lifestyle and should not distract from those goals. But multivitamins may have a role as a complementary strategy. Another caveat is that the randomized trials tested doses at about the recommended dietary allowances, not megadoses, of these micronutrients. In fact, randomized trials of high doses of isolated micronutrients have not clearly shown cognitive benefits, which suggests that more is not necessarily better and may even be worse. High doses may also be associated with toxicity or may interfere with the absorption or bioavailability of other nutrients.

In COSMOS, over an average of 3.6 years of follow-up, and in the earlier Physicians’ Health Study II, over 11 years of supplementation, multivitamins were found to be safe, with no clear risks or safety concerns. A further caveat is that although Centrum Silver was tested in this trial, we would not expect this to be a brand-specific benefit, and other high-quality multivitamin brands would be expected to confer similar benefits. Of course, it’s important to check bottles for quality-control documentation such as the seals of the U.S. Pharmacopeia, NSF International, ConsumerLab.com, and other auditors.

Overall, the finding that a daily multivitamin improved memory and slowed cognitive decline in two separate COSMOS randomized trials is exciting, suggesting that multivitamin supplementation holds promise as a safe, accessible, and affordable approach to protecting cognitive health in older adults. Further research will be needed to understand who is most likely to benefit and the biological mechanisms involved. Expert committees will have to look at the research and decide whether any changes in guidelines are indicated in the future.

Dr. Manson is Professor of Medicine and the Michael and Lee Bell Professor of Women’s Health, Harvard Medical School, and director of the Division of Preventive Medicine, Brigham and Women’s Hospital, both in Boston. She reported receiving funding/donations from Mars Symbioscience.

A version of this article first appeared on Medscape.com.
