Salpingectomy after vaginal hysterectomy: Technique, tips, and pearls

In this article, I describe my technique for a vaginal approach to right salpingectomy with ovarian preservation, as well as right salpingo-oophorectomy, in a patient lacking a left tube and ovary. This technique is fully illustrated on a cadaver in the Web-based master course in vaginal hysterectomy produced by the AAGL and co-sponsored by the American College of Obstetricians and Gynecologists and the Society of Gynecologic Surgeons. That course is available online at https://www.aagl.org/vaghystwebinar.

For a detailed description of vaginal hysterectomy technique, see the article entitled “Vaginal hysterectomy using basic instrumentation,” by Barbara S. Levy, MD, which appeared in the October 2015 issue of OBG Management. Next month, in the December 2015 issue of the journal, I will detail my strategies for managing complications associated with vaginal hysterectomy, salpingectomy, and salpingo-oophorectomy.

Right salpingectomy

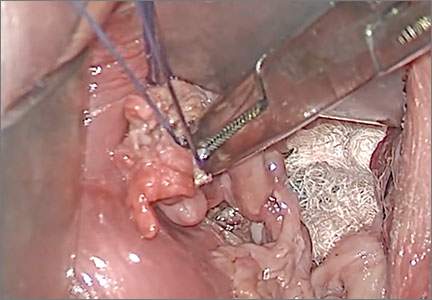

FIGURE 1 Locate the tube. The fallopian tube will almost always be found on top of the ovary.

FIGURE 2 Isolate the tube. Grasp the tube and bring it to the midline.

Start with light traction

Begin by placing an instrument on the round ligament, tube, and uterine-ovarian pedicle, exerting light traction. Note that the tube will almost always be found on top of the ovary (FIGURE 1). Take care during placement of packing material to avoid sweeping the fimbriae of the tube up and out of the surgical field. You may need to adjust the packing a bit until you are able to deliver the tube.

Once you identify the tube, isolate it by bringing it down to the midline (FIGURE 2). One thing to note if you’re accustomed to performing bilateral salpingo-oophorectomy: The gonadal pedicle is fairly substantive and can sustain a bit of tugging. However, if you’re performing salpingectomy with ovarian preservation, you need to be much more careful in your handling of the tube because the mesosalpinx is extremely delicate.

After you bring the tube to the midline, grasp it using a Heaney or Shallcross clamp. You can take this pedicle with an energy device, or you can clamp and tie it.

Make sure that the packing material is out of the way and that you have most of the tube nicely isolated. Don’t take the tube too far up in the surgical field because, if you lose it, it can be hard to control the bleeding. Ensure that you have grasped the fimbriated end of the tube.

In some cases you can leave a portion of the tube right next to the round ligament (FIGURE 3). You can go back and take that portion later, if you desire. But when it comes to the potential for the fallopian tube to generate carcinoma, most of the concern involves the mid to distal end of the tube rather than the cornual portion.

Once the Shallcross clamp has a good purchase on the pedicle, bring the suture around the clamp and then pass it under the tube so that you encircle the mesosalpinx pedicle (FIGURE 4). It is extremely important during salpingectomy to tie this suture down gently but tightly. In the process, have your assistant flash the Shallcross clamp open when you tie the suture. Otherwise, the suture will tend to tear through the mesosalpinx. Be very careful in your handling of the specimen at this point. Next, cut right along the edge of the clamp to remove the tube.

FIGURE 3 Focus on the distal tube. The cornual portion of the tube (proximal to the round ligament) can be left behind, if desired. The propensity for cancer centers on the distal end of the tube.

FIGURE 4 Clamp and tie the pedicle. Bring the suture around the clamp and then pass it under the tube so that you encircle the mesosalpinx pedicle.

If you prefer, you can stick-tie the remaining portion again, but usually one tie will suffice because there is such a small pedicle there. The distal portion of the pedicle eventually will necrose close to the tie. The next step is ensuring hemostasis.

On occasion, if you lose the pedicle high in the surgical field, you can try to oversew it. A 2-0 Vicryl suture may be used to place a figure-eight stitch to control bleeding around the mesosalpinx. Alternatively, an energy device may be used for hemostasis. Rarely, if the tissue tears and the bleeding does not respond to these measures, you may need to remove the ovary to control it.

Transvaginal technique for salpingo-oophorectomy

Once the hysterectomy is completed, grasp the round ligament, tube, and uterine-ovarian pedicle, placing slight tension on the pedicle, and free the right round ligament to ease isolation of the gonadal vessels. Using electrocautery, carefully transect the round ligament. It is critical when isolating the round ligament to transect only the ligament and not to get deep into the underlying tissue or bleeding will ensue. If you “hug” just the round ligament, you will open into the broad ligament and easily be able to isolate the gonadal pedicle.

Once the pedicle is nicely isolated, readjust your retractors or lighting to improve visualization. Now the gonadal vessels can be isolated up high much more easily (FIGURE 1).

Next, use a Heaney clamp to grab the pedicle, making sure that the ovary is medial to the clamp (FIGURE 2).

FIGURE 1: ISOLATE THE GONADAL VESSELS Once optimal visualization is achieved, the gonadal vessels can be isolated easily.

FIGURE 2: KEEP THE OVARY MEDIAL TO THE CLAMP Use a Heaney clamp to grab the pedicle, keeping the ovary medial to the clamp.

In this setting, there are a number of techniques you can use to complete the salpingo-oophorectomy. I tend to doubly ligate the pedicle. To begin, cut the tagging suture to get it out of the way. Then place a free tie lateral to the clamp, bringing it down and underneath to fully encircle the pedicle. Ligate the pedicle, then cut the free tie. Next, cut the pedicle beside the Heaney clamp and remove the specimen. Stick-tie the remaining pedicle.

Locate the free tie, which is easily identified. Place your needle between that free tie and the clamp so that you do not pierce the vessels proximal to the tie with that needle. Then doubly ligate the pedicle.

Check for hemostasis and, once confirmed, cut the pedicle tie. Because this patient does not have a left tube and ovary, the procedure is now completed.

Conclusion

The tubes are usually readily accessible for removal at the time of vaginal hysterectomy. There is evolving evidence that the tube may play a role in malignancy of the female genital tract. Thus, removal may be preventive. In addition, if there are paratubal cysts or hydrosalpinx from prior tubal ligation, it makes sense to remove the tube. There is little evidence to suggest that removal of the tubes accelerates the menopausal transition due to compromise of the blood supply to the ovaries.

You must be very gentle when handling and removing just the tubes. The mesosalpinx is delicate and easily torn or traumatized. A careful and deliberate approach is warranted.

Bilateral salpingectomy: Key take-aways

Locate the tube. The fallopian tube always lies on top of the ovary and should be found there. On occasion, the abdominal packing used to move the bowel out of the pelvis will “hide” the tube; readjusting this packing often solves the problem.

Be gentle with the mesosalpinx as it is very delicate and can easily avulse. It is very important to “flash the clamp” (open the clamp and then close it) as you free-tie the mesosalpinx to avoid cutting through the delicate pedicle.

Remove as much tube as possible. The fimbriated end of the tube usually is free and easy to identify. Often, a bit of the proximal tube is left in the utero-ovarian pedicle tie.

Clean up. You will often find peritubal cysts or “tubal clips” from a sterilization procedure. I recommend that you remove any of these you encounter to avoid problems down the road. Often, these cysts and clip-like devices are removed as part of the specimen.

Dry up. Always confirm hemostasis before concluding the procedure. If there is bleeding, be sure to assess the mesosalpinx. Occasionally, the pedicle can be torn higher up, near the gonadal vessels. Investigate this region if bleeding seems to be an issue.

Transvaginal salpingo-oophorectomy: Key take-aways

Perfect a technique. There are many approaches to transvaginal removal of the adnexae; pick one and perfect it. The better you are, the fewer complications you will have. Recognize that a different approach (use of a stapler or energy sealing device, for example) may prove useful in some settings. Be surgically versatile and recognize situations that might call for something other than your usual approach.

Optimize visualization. The tubes and ovaries are usually very accessible vaginally. Use an abdominal pack to move the bowel out of the pelvis. Adequate retraction and use of a lighted retractor or suction irrigator will facilitate exposure.

Ligate the gonadal vessels. Retraction of the tube and ovary complex medially away from the pelvic sidewall will allow you to place a clamp (or stapler or energy device) lateral to secure the gonadal vessels and ensure complete removal of the adnexae.

Release the round ligament. Although this step is usually not required, it will allow you to isolate the adnexae more precisely, especially when dealing with an adnexal mass transvaginally. It is critical that you “hug” the round ligament and refrain from penetrating deeply into the underlying tissues, or bleeding will occur. Once the round ligament is released, the tube and ovary are isolated on the gonadal pedicle and can be completely excised with this technique.

Manage bleeding. If suturing, I prefer to doubly ligate the gonadal vessels. Once I clamp the pedicle, I “free-tie” the gonadal vessels with an initial suture. This suture secures the vascular pedicle and prevents retraction. The adnexae can then be removed, followed by placement of a “stick-tie” to re-ligate the pedicle. Although this vascular pedicle is more robust than the mesosalpinx, it, too, can be avulsed, so it is important to proceed with caution. I recommend having a long clamp (uterine packing forceps or MD Anderson clamp) available in your instrument pan to facilitate specific isolation of the gonadal vessels along the pelvic sidewall in the event avulsion does occur.

Share your thoughts! Send your Letter to the Editor to [email protected]. Please include your name and the city and state in which you practice.

What you need to know (and do) to prescribe the new drug flibanserin

It was a long road to approval by the US Food and Drug Administration (FDA), but flibanserin (Addyi) got the nod on August 18, 2015. Its New Drug Application (NDA) originally was filed October 27, 2009. The drug launched October 17, 2015.

Although there has been a lot of fanfare about approval of this drug, most of the coverage has focused on its status as the “first female Viagra”—a less than accurate depiction. For a more realistic and practical assessment of the drug, OBG Management turned to Michael Krychman, MD, executive director of the Southern California Center for Sexual Health and Survivorship Medicine in Newport Beach, to determine the types of information clinicians need to know to begin prescribing flibanserin. This article highlights 11 questions (and answers) to help you get started.

1. How did the FDA arrive at its approval?

In 2012, the agency determined that female sexual dysfunction was one of 20 disease areas that warranted focused attention. In October 2014, as part of its intensified look at female sexual dysfunction, the FDA convened a 2-day meeting “to advance our understanding,” reports Andrea Fischer, FDA press officer.

“During the first day of the meeting, the FDA solicited patients’ perspectives on their condition and its impact on daily life. While this meeting did not focus on flibanserin, it provided an opportunity for the FDA to hear directly from patients about the impact of their condition,” Ms. Fischer says. During the second day of the meeting, the FDA “discussed scientific issues and challenges with experts in sexual medicine.”

As a result, by the time of the FDA’s June 4, 2015 Advisory Committee meeting on the flibanserin NDA, FDA physician-scientists were well versed in many nuances of female sexual function. That meeting included an open public hearing “that provided an opportunity for members of the public, including patients, to provide input specifically on the flibanserin application,” Ms. Fischer notes.

Nuances of the deliberations

“The FDA’s regulatory decision making on any drug product is a science-based process that carefully weighs each drug in terms of its risks and benefits to the patient population for which the drug would be indicated,” says Ms. Fischer.

The challenge in the case of flibanserin was determining whether the drug provides “clinically meaningful” improvements in sexual activity and desire.

“For many conditions and diseases, what constitutes ‘clinically meaningful’ is well known and accepted,” Ms. Fischer notes, “such as when something is cured or a severe symptom that is life-altering resolves completely. For others, this is not the case. For example, a condition that has a wide range of degree of severity can offer challenges in assessing what constitutes a clinically meaningful treatment effect. Ascertaining this requires a comprehensive knowledge of the disease, affected patient population, management strategies and the drug in question, as well as an ability to look at the clinical trial data taking this all into account.”

“In clinical trials, an important method for assessing the impact of a treatment on a patient’s symptoms, mental state, or functional status is through direct self-report using well developed and thoughtfully integrated patient-reported outcome (PRO) assessments,” Ms. Fischer says. “PROs can provide valuable information on the patient perspective when determining whether benefits outweigh risks, and they also are used to support medical product labeling claims, which are a key source of information for both health care providers and patients. PROs have been and continue to be a high priority as part of FDA’s commitment to advance patient-focused drug development, and we fully expect this to continue. The clinical trials in the flibanserin NDA all utilized PRO assessments.”

Those assessments found that patients taking flibanserin had a significant increase in “sexually satisfying events.” Three 24-week randomized controlled trials explored this endpoint for flibanserin (studies 1–3).

As for improvements in desire, the first 2 trials utilized an e-diary to assess this aspect of sexual function, while the 3rd trial utilized the Female Sexual Function Index (FSFI).

Although the e-diary reflected no statistically significant improvement in desire in the first 2 trials, the FSFI did find significant improvement in the 3rd trial. In addition, when the FSFI was considered across all 3 trials, results in the desire domain were consistent. (The FSFI was used as a secondary tool in the first 2 trials.)

In addition, sexual distress, as measured by the Female Sexual Distress Scale (FSDS), was decreased in the trials with use of flibanserin, notes Dr. Krychman. The Advisory Committee determined that these findings were sufficient to demonstrate clinically meaningful improvements with use of the drug.

Although the drug was approved by the FDA, the agency was sufficiently concerned about some of its potential risks (see questions 4 and 5) that it implemented rigorous mitigation strategies (see question 7). Additional investigations were requested by the agency, including drug-drug interaction, alcohol challenge, and driving studies.

2. What are the indications?

Flibanserin is intended for use in premenopausal women who have acquired, generalized hypoactive sexual desire disorder (HSDD). That diagnosis no longer is included in the 5th edition of the Diagnostic and Statistical Manual of Mental Disorders but is described in drug package labeling as “low sexual desire that causes marked distress or interpersonal difficulty and is not due to:

- a coexisting medical or psychiatric condition,

- problems within the relationship, or

- the effects of a medication or other drug substance.”1

Although the drug has been tested in both premenopausal and postmenopausal women, it was approved for use only in premenopausal women. Also note inclusion of the term “acquired” before the diagnosis of HSDD, indicating that the drug is inappropriate for women who have never experienced a period of normal sexual desire.

3. How is HSDD diagnosed?

One of the best screening tools is the Decreased Sexual Desire Screener, says Dr. Krychman. It is available at http://obgynalliance.com/files/fsd/DSDS_Pocketcard.pdf. This tool is a validated instrument to help clinicians identify what HSDD is and is not.

4. Does the drug carry any warnings?

Yes, it carries a black box warning about the risks of hypotension and syncope:

- when alcohol is consumed by users of the drug. (Alcohol use is contraindicated.)

- when the drug is taken in conjunction with moderate or strong CYP3A4 inhibitors or by patients with hepatic impairment. (The drug is contraindicated in both circumstances.) See question 9 for a list of drugs that are CYP3A4 inhibitors.

5. Are there any other risks worth noting?

The medication can increase the risks of hypotension and syncope even without concomitant use of alcohol. For example, in clinical trials, hypotension was reported in 0.2% of flibanserin-treated women versus less than 0.1% of placebo users. And syncope was reported in 0.4% of flibanserin users versus 0.2% of placebo-treated patients. Flibanserin is prescribed as a once-daily medication that is to be taken at bedtime; the risks of hypotension and syncope are increased if flibanserin is taken during waking hours.

The risk of adverse effects when flibanserin is taken with alcohol is highlighted by one case reported in package labeling: A 54-year-old postmenopausal woman died after taking flibanserin (100 mg daily at bedtime) for 14 days. This patient had a history of hypertension and hypercholesterolemia and consumed a baseline amount of 1 to 3 alcoholic beverages daily. She died of acute alcohol intoxication, with a blood alcohol concentration of 0.289 g/dL.1 Whether this patient’s death was related to flibanserin use is unknown.1

It is interesting to note that, in the studies of flibanserin leading up to the drug’s approval, alcohol use was not an exclusion, says Dr. Krychman. “Approximately 58% of women were self-described as mild to moderate drinkers. The clinical program was extremely large—more than 11,000 women were studied.”

Flibanserin is currently not approved for use in postmenopausal women, and concomitant alcohol consumption is contraindicated.

6. What is the dose?

The recommended dose is one tablet of 100 mg daily. The drug is to be taken at bedtime to reduce the risks of hypotension, syncope, accidental injury, and central nervous system (CNS) depression, which can occur even in the absence of alcohol.

7. Are there any requirements for clinicians who want to prescribe the drug?

Yes. Because of the risks of hypotension, syncope, and CNS depression, the drug is subject to Risk Evaluation and Mitigation Strategies (REMS), as determined by the FDA. To prescribe the drug, providers must:

- review its prescribing information

- review the Provider and Pharmacy Training Program

- complete and submit the Knowledge Assessment Form

- enroll in REMS by completing and submitting the Prescriber Enrollment Form.

Before giving a patient her initial prescription, the provider must counsel her about the risks of hypotension and syncope and the interaction with alcohol using the Patient-Provider Agreement Form. The provider must then complete that form, provide a designated portion of it to the patient, and retain the remainder for the patient’s file.

For more information and to download the relevant forms, visit https://www.addyirems.com.

8. What are the most common adverse reactions to the drug?

According to package labeling, the most common adverse reactions, with an incidence greater than 2%, are dizziness, somnolence, nausea, fatigue, insomnia, and dry mouth.

Less common reactions include anxiety, constipation, abdominal pain, rash, sedation, and vertigo.

In studies of the drug, appendicitis was reported among 0.2% of flibanserin-treated patients, compared with no reports of appendicitis among placebo-treated patients. The FDA has requested additional investigation of the association, if any, between flibanserin and appendicitis.

9. What drug interactions are notable?

As stated earlier, the concomitant use of flibanserin with alcohol or a moderate or strong CYP3A4 inhibitor can result in severe hypotension and syncope. Flibanserin also should not be prescribed for patients who use other CNS depressants such as diphenhydramine, opioids, benzodiazepines, and hypnotic agents.

Some examples of strong CYP3A4 inhibitors are ketoconazole, itraconazole, posaconazole, clarithromycin, nefazodone, ritonavir, saquinavir, nelfinavir, indinavir, boceprevir, telaprevir, telithromycin, and conivaptan.

Moderate CYP3A4 inhibitors include amprenavir, atazanavir, ciprofloxacin, diltiazem, erythromycin, fluconazole, fosamprenavir, verapamil, and grapefruit juice.

In addition, the concomitant use of flibanserin with multiple weak CYP3A4 inhibitors—which include herbal supplements such as ginkgo and resveratrol and nonprescription drugs such as cimetidine—also may increase the risks of hypotension and syncope.

The concomitant use of flibanserin with digoxin increases the digoxin concentration and may lead to toxicity.

10. Is the drug safe in pregnancy and lactation?

There are currently no data on the use of flibanserin in human pregnancy. In animals, fetal toxicity occurred only in the presence of significant maternal toxicity. Adverse effects included decreased fetal weight, structural anomalies, and increases in fetal loss when exposure exceeded 15 times the recommended human dosage.

As for the advisability of using flibanserin during lactation, it is unknown whether the drug is excreted in human milk, whether it might have adverse effects in the breastfed infant, or whether it affects milk production. Package labeling states: “Because of the potential for serious adverse reactions, including sedation in a breastfed infant, breastfeeding is not recommended during treatment with [flibanserin].”1

11. When should the drug be discontinued?

If there is no improvement in sexual desire after an 8-week trial of flibanserin, the drug should be discontinued.


Reference

1. Addyi [package insert]. Raleigh, NC: Sprout Pharmaceuticals; 2015.

It was a long road to approval by the US Food and Drug Administration (FDA), but flibanserin (Addyi) got the nod on August 18, 2015. Its New Drug Application (NDA) originally was filed October 27, 2009. The drug launched October 17, 2015.

Although there has been a lot of fanfare about approval of this drug, most of the coverage has focused on its status as the “first female Viagra”—a less than accurate depiction. For a more realistic and practical assessment of the drug, OBG Management turned to Michael Krychman, MD, executive director of the Southern California Center for Sexual Health and Survivorship Medicine in Newport Beach, to determine the types of information clinicians need to know to begin prescribing flibanserin. This article highlights 11 questions (and answers) to help you get started.

1. How did the FDA arrive

at its approval?

In 2012, the agency determined that female sexual dysfunction was one of 20 disease areas that warranted focused attention. In October 2014, as part of its intensified look at female sexual dysfunction, the FDA convened a 2-day meeting “to advance our understanding,” reports Andrea Fischer, FDA press officer.

“During the first day of the meeting, the FDA solicited patients’ perspectives on their condition and its impact on daily life. While this meeting did not focus on flibanserin, it provided an opportunity for the FDA to hear directly from patients about the impact of their condition,” Ms. Fischer says. During the second day of the meeting, the FDA “discussed scientific issues and challenges with experts in sexual medicine.”

As a result, by the time of the FDA’s June 4, 2015 Advisory Committee meeting on the flibanserin NDA, FDA physician-scientists were well versed in many nuances of female sexual function. That meeting included an open public hearing “that provided an opportunity for members of the public, including patients, to provide input specifically on the flibanserin application,” Ms. Fischer notes.

Nuances of the deliberations

“The FDA’s regulatory decision making on any drug product is a science-based process that carefully weighs each drug in terms of its risks and benefits to the patient population for which the drug would be indicated,” says Ms. Fischer.

The challenge in the case of flibanserin was determining whether the drug provides “clinically meaningful” improvements in sexual activity and desire.

“For many conditions and diseases, what constitutes ‘clinically meaningful’ is well known and accepted,” Ms. Fischer notes, “such as when something is cured or a severe symptom that is life-altering resolves completely. For others, this is not the case. For example, a condition that has a wide range of degree of severity can offer challenges in assessing what constitutes a clinically meaningful treatment effect. Ascertaining this requires a comprehensive knowledge of the disease, affected patient population, management strategies and the drug in question, as well as an ability to look at the clinical trial data taking this all into account.”

“In clinical trials, an important method for assessing the impact of a treatment on a patient’s symptoms, mental state, or functional status is through direct self-report using well developed and thoughtfully integrated patient-reported outcome (PRO) assessments,” Ms. Fischer says. “PROs can provide valuable information on the patient perspective when determining whether benefits outweigh risks, and they also are used to support medical product labeling claims, which are a key source of information for both health care providers and patients. PROs have been and continue to be a high priority as part of FDA’s commitment to advance patient-focused drug development, and we fully expect this to continue. The clinical trials in the flibanserin NDA all utilized PRO assessments.”

Those assessments found that patients taking flibanserin had a significant increase in “sexually satisfying events.” Three 24-week randomized controlled trials explored this endpoint for flibanserin (studies 1–3).

As for improvements in desire, the first 2 trials utilized an e-diary to assess this aspect of sexual function, while the 3rd trial utilized the Female Sexual Function Index (FSFI).

Although the e-diary reflected no statistically significant improvement in desire in the first 2 trials, the FSFI did find significant improvement in the 3rd trial. In addition, when the FSFI was considered across all 3 trials, results in the desire domain were consistent. (The FSFI was used as a secondary tool in the first 2 trials.)

In addition, sexual distress, as measured by the Female Sexual Distress Scale (FSDS), was decreased in the trials with use of flibanserin, notes Dr. Krychman. The Advisory Committee determined that these findings were sufficient to demonstrate clinically meaningful improvements with use of the drug.

Although the drug was approved by the FDA, the agency was sufficiently concerned about some of its potential risks (see questions 4 and 5) that it implemented rigorous mitigation strategies (see question 7). Additional investigations were requested by the agency, including drug-drug interaction, alcohol challenge, and driving studies.

2. What are the indications?

Flibanserin is intended for use in premenopausal women who have acquired, generalized hypoactive sexual desire disorder (HSDD). That diagnosis no longer is included in the 5th edition of the Diagnostic and

Statistical Manual of Mental Disorders but is described in drug package labeling as “low sexual desire that causes marked distress or interpersonal difficulty and is not due to:

- a coexisting medical or psychiatric condition,

- problems within the relationship, or

- the effects of a medication or other drug substance.”1

- the effects of a medication or other drug substance.”

Although the drug has been tested in both premenopausal and postmenopausal women, it was approved for use only in premenopausal women. Also note inclusion of the term “acquired” before the diagnosis of HSDD, indicating that the drug is inappropriate for women who have never experienced a period of normal sexual desire.

3. How is HSDD diagnosed?

One of the best screening tools is the

Decreased Sexual Desire Screener, says

Dr. Krychman. It is available at http://obgynalliance.com/files/fsd/DSDS_Pocketcard.pdf. This tool is a validated instrument to help clinicians identify what HSDD is and is not.

4. Does the drug carry

any warnings?

Yes, it carries a black box warning about the risks of hypotension and syncope:

- when alcohol is consumed by users of the drug. (Alcohol use is contraindicated.)

- when the drug is taken in conjunction with moderate or strong CYP3A4 inhibitors or by patients with hepatic impairment. (The drug is contraindicated in both circumstances.) See question 9 for a list of drugs that are CYP3A4 inhibitors.

5. Are there any other risks worth noting?

The medication can increase the risks of hypotension and syncope even without concomitant use of alcohol. For example, in clinical trials, hypotension was reported in 0.2% of flibanserin-treated women versus less than 0.1% of placebo users. And syncope was reported in 0.4% of flibanserin users versus 0.2% of placebo-treated patients. Flibanserin is prescribed as a once-daily medication that is to be taken at bedtime; the risks of hypotension and syncope are increased if flibanserin is taken during waking hours.

The risk of adverse effects when flibanserin is taken with alcohol is highlighted by one case reported in package labeling: A 54-year-old postmenopausal woman died after taking flibanserin (100 mg daily at bedtime) for 14 days. This patient had a history of hypertension and hypercholesterolemia and consumed a baseline amount of 1 to 3 alcoholic beverages daily. She died of acute alcohol intoxication, with a blood alcohol concentration of 0.289 g/dL.1 Whether this patient’s death was related to flibanserin use is unknown.1

It is interesting to note that, in the studies of flibanserin leading up to the drug’s approval, alcohol use was not an exclusion, says Dr. Krychman. “Approximately 58% of women were self-described as mild to moderate drinkers. The clinical program was extremely large—more than 11,000 women were studied.”

Flibanserin is currently not approved for use in postmenopausal women, and concomitant alcohol consumption is contraindicated.

6. What is the dose?

The recommended dose is one tablet of

100 mg daily. The drug is to be taken at

bedtime to reduce the risks of hypotension, syncope, accidental injury, and central nervous system (CNS) depression, which can occur even in the absence of alcohol.

7. Are there any requirements for clinicians who want to prescribe the drug?

Yes. Because of the risks of hypotension, syncope, and CNS depression, the drug is subject to Risk Evaluation and Mitigation Strategies (REMS), as determined by the FDA. To prescribe the drug, providers must:

- review its prescribing information

- review the Provider and Pharmacy Training Program

- complete and submit the Knowledge Assessment Form

- enroll in REMS by completing and submitting the Prescriber Enrollment Form.

Before giving a patient her initial prescription, the provider must counsel her about the risks of hypotension and syncope and the interaction with alcohol using the Patient-Provider Agreement Form. The provider must then complete that form, provide a designated portion of it to the patient, and retain the remainder for the patient’s file.

For more information and to download the relevant forms, visit https://www.addyirems.com.

8. What are the most common

adverse reactions to the drug?

According to package labeling, the most common adverse reactions, with an incidence greater than 2%, are dizziness, somnolence, nausea, fatigue, insomnia, and dry mouth.

Less common reactions include anxiety, constipation, abdominal pain, rash, sedation, and vertigo.

In studies of the drug, appendicitis was reported among 0.2% of flibanserin-treated patients, compared with no reports of appendicitis among placebo-treated patients. The FDA has requested additional investigation of the association, if any, between flibanserin and appendicitis.

9. What drug interactions are notable?

As stated earlier, the concomitant use of flibanserin with alcohol or a moderate or strong CYP3A4 inhibitor can result in severe hypotension and syncope. Flibanserin also should not be prescribed for patients who use other CNS depressants such as diphenhydramine, opioids, benzodiazepines, and hypnotic agents.

Some examples of strong CYP3A4 inhibitors are ketoconazole, itraconazole, posaconazole, clarithromycin, nefazodone, ritonavir, saquinavir, nelfinavir, indinavir, boceprevir, telaprevir, telithromycin, and conivaptan.

Moderate CYP3A4 inhibitors include amprenavir, atazanavir, ciprofloxacin, diltiazem, erythromycin, fluconazole, fosamprenavir, verapamil, and grapefruit juice.

In addition, the concomitant use of flibanserin with multiple weak CYP3A4 inhibitors—which include herbal supplements such as ginkgo and resveratrol and nonprescription drugs such as cimetidine—also may increase the risks of hypotension and syncope.

The concomitant use of flibanserin with digoxin increases the digoxin concentration and may lead to toxicity.

10. Is the drug safe in pregnancy

and lactation?

There are currently no data on the use of flibanserin in human pregnancy. In animals, fetal toxicity occurred only in the presence of significant maternal toxicity. Adverse effects included decreased fetal weight, structural anomalies, and increases in fetal loss when exposure exceeded 15 times the recommended human dosage.

As for the advisability of using flibanserin during lactation, it is unknown whether the drug is excreted in human milk, whether it might have adverse effects in the breastfed infant, or whether it affects milk production. Package labeling states: “Because of the potential for serious adverse reactions, including sedation in a breastfed infant, breastfeeding is not recommended during treatment with [flibanserin].”1

11. When should the drug

be discontinued?

If there is no improvement in sexual desire after an 8-week trial of flibanserin, the drug should be

discontinued.

Share your thoughts! Send your Letter to the Editor to [email protected]. Please include your name and the city and state in which you practice.

It was a long road to approval by the US Food and Drug Administration (FDA), but flibanserin (Addyi) got the nod on August 18, 2015. Its New Drug Application (NDA) originally was filed October 27, 2009. The drug launched October 17, 2015.

Although there has been a lot of fanfare about approval of this drug, most of the coverage has focused on its status as the “first female Viagra”—a less than accurate depiction. For a more realistic and practical assessment of the drug, OBG Management turned to Michael Krychman, MD, executive director of the Southern California Center for Sexual Health and Survivorship Medicine in Newport Beach, to determine the types of information clinicians need to know to begin prescribing flibanserin. This article highlights 11 questions (and answers) to help you get started.

1. How did the FDA arrive

at its approval?

In 2012, the agency determined that female sexual dysfunction was one of 20 disease areas that warranted focused attention. In October 2014, as part of its intensified look at female sexual dysfunction, the FDA convened a 2-day meeting “to advance our understanding,” reports Andrea Fischer, FDA press officer.

“During the first day of the meeting, the FDA solicited patients’ perspectives on their condition and its impact on daily life. While this meeting did not focus on flibanserin, it provided an opportunity for the FDA to hear directly from patients about the impact of their condition,” Ms. Fischer says. During the second day of the meeting, the FDA “discussed scientific issues and challenges with experts in sexual medicine.”

As a result, by the time of the FDA’s June 4, 2015 Advisory Committee meeting on the flibanserin NDA, FDA physician-scientists were well versed in many nuances of female sexual function. That meeting included an open public hearing “that provided an opportunity for members of the public, including patients, to provide input specifically on the flibanserin application,” Ms. Fischer notes.

Nuances of the deliberations

“The FDA’s regulatory decision making on any drug product is a science-based process that carefully weighs each drug in terms of its risks and benefits to the patient population for which the drug would be indicated,” says Ms. Fischer.

The challenge in the case of flibanserin was determining whether the drug provides “clinically meaningful” improvements in sexual activity and desire.

“For many conditions and diseases, what constitutes ‘clinically meaningful’ is well known and accepted,” Ms. Fischer notes, “such as when something is cured or a severe symptom that is life-altering resolves completely. For others, this is not the case. For example, a condition that has a wide range of degree of severity can offer challenges in assessing what constitutes a clinically meaningful treatment effect. Ascertaining this requires a comprehensive knowledge of the disease, affected patient population, management strategies and the drug in question, as well as an ability to look at the clinical trial data taking this all into account.”

“In clinical trials, an important method for assessing the impact of a treatment on a patient’s symptoms, mental state, or functional status is through direct self-report using well developed and thoughtfully integrated patient-reported outcome (PRO) assessments,” Ms. Fischer says. “PROs can provide valuable information on the patient perspective when determining whether benefits outweigh risks, and they also are used to support medical product labeling claims, which are a key source of information for both health care providers and patients. PROs have been and continue to be a high priority as part of FDA’s commitment to advance patient-focused drug development, and we fully expect this to continue. The clinical trials in the flibanserin NDA all utilized PRO assessments.”

Those assessments found that patients taking flibanserin had a significant increase in “sexually satisfying events.” Three 24-week randomized controlled trials explored this endpoint for flibanserin (studies 1–3).

As for improvements in desire, the first 2 trials utilized an e-diary to assess this aspect of sexual function, while the 3rd trial utilized the Female Sexual Function Index (FSFI).

Although the e-diary reflected no statistically significant improvement in desire in the first 2 trials, the FSFI did find significant improvement in the 3rd trial. In addition, when the FSFI was considered across all 3 trials, results in the desire domain were consistent. (The FSFI was used as a secondary tool in the first 2 trials.)

In addition, sexual distress, as measured by the Female Sexual Distress Scale (FSDS), was decreased in the trials with use of flibanserin, notes Dr. Krychman. The Advisory Committee determined that these findings were sufficient to demonstrate clinically meaningful improvements with use of the drug.

Although the drug was approved by the FDA, the agency was sufficiently concerned about some of its potential risks (see questions 4 and 5) that it implemented rigorous mitigation strategies (see question 7). Additional investigations were requested by the agency, including drug-drug interaction, alcohol challenge, and driving studies.

2. What are the indications?

Flibanserin is intended for use in premenopausal women who have acquired, generalized hypoactive sexual desire disorder (HSDD). That diagnosis no longer is included in the 5th edition of the Diagnostic and

Statistical Manual of Mental Disorders but is described in drug package labeling as “low sexual desire that causes marked distress or interpersonal difficulty and is not due to:

- a coexisting medical or psychiatric condition,

- problems within the relationship, or

- the effects of a medication or other drug substance.”1

- the effects of a medication or other drug substance.”

Although the drug has been tested in both premenopausal and postmenopausal women, it was approved for use only in premenopausal women. Also note inclusion of the term “acquired” before the diagnosis of HSDD, indicating that the drug is inappropriate for women who have never experienced a period of normal sexual desire.

3. How is HSDD diagnosed?

One of the best screening tools is the

Decreased Sexual Desire Screener, says

Dr. Krychman. It is available at http://obgynalliance.com/files/fsd/DSDS_Pocketcard.pdf. This tool is a validated instrument to help clinicians identify what HSDD is and is not.

4. Does the drug carry

any warnings?

Yes, it carries a black box warning about the risks of hypotension and syncope:

- when alcohol is consumed by users of the drug. (Alcohol use is contraindicated.)

- when the drug is taken in conjunction with moderate or strong CYP3A4 inhibitors or by patients with hepatic impairment. (The drug is contraindicated in both circumstances.) See question 9 for a list of drugs that are CYP3A4 inhibitors.

5. Are there any other risks worth noting?

The medication can increase the risks of hypotension and syncope even without concomitant use of alcohol. For example, in clinical trials, hypotension was reported in 0.2% of flibanserin-treated women versus less than 0.1% of placebo users. And syncope was reported in 0.4% of flibanserin users versus 0.2% of placebo-treated patients. Flibanserin is prescribed as a once-daily medication that is to be taken at bedtime; the risks of hypotension and syncope are increased if flibanserin is taken during waking hours.

The risk of adverse effects when flibanserin is taken with alcohol is highlighted by one case reported in package labeling: A 54-year-old postmenopausal woman died after taking flibanserin (100 mg daily at bedtime) for 14 days. This patient had a history of hypertension and hypercholesterolemia and consumed a baseline amount of 1 to 3 alcoholic beverages daily. She died of acute alcohol intoxication, with a blood alcohol concentration of 0.289 g/dL.1 Whether this patient’s death was related to flibanserin use is unknown.1

It is interesting to note that, in the studies of flibanserin leading up to the drug’s approval, alcohol use was not an exclusion, says Dr. Krychman. “Approximately 58% of women were self-described as mild to moderate drinkers. The clinical program was extremely large—more than 11,000 women were studied.”

Flibanserin is currently not approved for use in postmenopausal women, and concomitant alcohol consumption is contraindicated.

6. What is the dose?

The recommended dose is one tablet of 100 mg daily. The drug is to be taken at bedtime to reduce the risks of hypotension, syncope, accidental injury, and central nervous system (CNS) depression, which can occur even in the absence of alcohol.

7. Are there any requirements for clinicians who want to prescribe the drug?

Yes. Because of the risks of hypotension, syncope, and CNS depression, the drug is subject to Risk Evaluation and Mitigation Strategies (REMS), as determined by the FDA. To prescribe the drug, providers must:

- review its prescribing information

- review the Provider and Pharmacy Training Program

- complete and submit the Knowledge Assessment Form

- enroll in REMS by completing and submitting the Prescriber Enrollment Form.

Before giving a patient her initial prescription, the provider must counsel her about the risks of hypotension and syncope and the interaction with alcohol using the Patient-Provider Agreement Form. The provider must then complete that form, provide a designated portion of it to the patient, and retain the remainder for the patient’s file.

For more information and to download the relevant forms, visit https://www.addyirems.com.

8. What are the most common adverse reactions to the drug?

According to package labeling, the most common adverse reactions, with an incidence greater than 2%, are dizziness, somnolence, nausea, fatigue, insomnia, and dry mouth.

Less common reactions include anxiety, constipation, abdominal pain, rash, sedation, and vertigo.

In studies of the drug, appendicitis was reported among 0.2% of flibanserin-treated patients, compared with no reports of appendicitis among placebo-treated patients. The FDA has requested additional investigation of the association, if any, between flibanserin and appendicitis.

9. What drug interactions are notable?

As stated earlier, the concomitant use of flibanserin with alcohol or a moderate or strong CYP3A4 inhibitor can result in severe hypotension and syncope. Flibanserin also should not be prescribed for patients who use other CNS depressants such as diphenhydramine, opioids, benzodiazepines, and hypnotic agents.

Some examples of strong CYP3A4 inhibitors are ketoconazole, itraconazole, posaconazole, clarithromycin, nefazodone, ritonavir, saquinavir, nelfinavir, indinavir, boceprevir, telaprevir, telithromycin, and conivaptan.

Moderate CYP3A4 inhibitors include amprenavir, atazanavir, ciprofloxacin, diltiazem, erythromycin, fluconazole, fosamprenavir, verapamil, and grapefruit juice.

In addition, the concomitant use of flibanserin with multiple weak CYP3A4 inhibitors—which include herbal supplements such as ginkgo and resveratrol and nonprescription drugs such as cimetidine—also may increase the risks of hypotension and syncope.

The concomitant use of flibanserin with digoxin increases the digoxin concentration and may lead to toxicity.

10. Is the drug safe in pregnancy and lactation?

There are currently no data on the use of flibanserin in human pregnancy. In animals, fetal toxicity occurred only in the presence of significant maternal toxicity. Adverse effects included decreased fetal weight, structural anomalies, and increases in fetal loss when exposure exceeded 15 times the recommended human dosage.

As for the advisability of using flibanserin during lactation, it is unknown whether the drug is excreted in human milk, whether it might have adverse effects in the breastfed infant, or whether it affects milk production. Package labeling states: “Because of the potential for serious adverse reactions, including sedation in a breastfed infant, breastfeeding is not recommended during treatment with [flibanserin].”1

11. When should the drug be discontinued?

If there is no improvement in sexual desire after an 8-week trial of flibanserin, the drug should be discontinued.

Share your thoughts! Send your Letter to the Editor to [email protected]. Please include your name and the city and state in which you practice.

Reference

- Addyi [package insert]. Raleigh, NC: Sprout Pharmaceuticals; 2015.

Signs of chorioamnionitis ignored? $3.5M settlement

At 31 weeks’ gestation, a mother at risk for preterm labor was admitted to the hospital for 2 days. Examination and test results showed evidence of infection. She was given antenatal corticosteroids for fetal lung development in case of premature delivery. At discharge, bed rest was ordered and she complied. At 32 weeks’ gestation, she returned to the hospital with worsening symptoms, was prescribed antibiotics to treat a urinary tract infection, and was discharged. She went to the hospital a third time at almost 33 weeks’ gestation, experiencing contractions and leaking fluid. She was admitted with a plan to deliver the baby if any signs or symptoms of intra-amniotic infection (clinical chorioamnionitis) were present. Four days later, a cesarean delivery was ordered due to fetal tachycardia and decreased fetal heart rate. Imaging performed in the neonatal intensive care unit showed that the baby had sustained a brain injury. The child has physical and mental impairments including cerebral palsy, cortical blindness, and epilepsy.

Parents’ claim Hospital health care providers failed to communicate with each other or to obtain records from prior admissions, although the mother told them that she had been to the hospital twice within the past 2 weeks. Medical records from all 3 admissions showed clear signs and symptoms of a vaginal/cervical infection that had progressed to clinical chorioamnionitis 2 days before delivery. Examination of the placenta by a pathologist confirmed that the infection had spread to the umbilical cord, injuring the child.

Defendant’s defense The standard of care was met. There was no indication that an earlier delivery was needed.

Verdict A $3.5 million Michigan settlement was reached by the hospital during the trial.

Surgical approach questioned

A woman went to her ObGyn for tubal ligation and ventral hernia repair. The patient was concerned about infection and scarring. She agreed to a laparoscopic procedure, knowing that the procedure might have to be altered to laparotomy.

Patient’s claim The patient consented to laparoscopic surgery. However, surgery did not begin as laparoscopy but as an open procedure. The patient has a 6-inch scar on her abdomen. She accused both the ObGyn and the hospital of lack of informed consent for laparotomy.

Defendant’s defense The hospital claimed that their nurses’ role was to read the consent form signed by the patient in the ObGyn’s office. The ObGyn claimed that the patient signed a general consent form that permitted him to do what was reasonable. He had determined after surgery began that a laparoscopic procedure would have been more dangerous.

Verdict A $150,000 Louisiana verdict was returned against the ObGyn; the hospital was acquitted.

Macrosomic baby and mother both injured during delivery

Delivery of a mother’s fourth child was managed by a hospital-employed family physician (FP). Shoulder dystocia was encountered, and the FP made a 4th-degree extension of the episiotomy. The baby weighed 10 lb 14 oz at birth. The mother has fecal and urinary incontinence and pain as a result of the large episiotomy. The child has a right-sided brachial plexus injury.

Parents’ claim Failure to perform cesarean delivery caused injury to the mother and child. The FP should have recognized, from the mother’s history of delivering 3 macrosomic babies and the progress of this pregnancy, that the baby was large.

Defendant’s defense The case was settled during trial.

Verdict A $1.5 million Minnesota settlement was reached that included $1.2 million for the child and $300,000 for the mother.

Surgical table folds during hysterectomy: $5.3M settlement

While a woman was undergoing a hysterectomy, the surgical table she was lying on folded up into a “U” position, causing the inserted speculum to tear the patient from vagina to rectum. The fall also caused a back injury usually attributed to falls from great distances. The patient has permanent pain, recurring diarrhea, and depression as a result of the injuries.

Patient’s claim The injuries occurred because of the defendants’ failure to read, understand, and follow the warning labels on the surgical table.

Defendant’s defense The case was settled before trial.

Verdict A $5.3 million settlement was reached with the hospital.
