
CPR Prior to Defibrillation for VF/VT CPA

Cardiopulmonary arrest (CPA) is a major contributor to overall mortality in both the in‐ and out‐of‐hospital settings.[1, 2, 3] Despite advances in the field of resuscitation science, mortality from CPA remains high.[1, 4] Unlike out‐of‐hospital CPA, inpatient CPA is unique in that trained healthcare providers are the primary responders, with a range of expertise available throughout the duration of arrest.

In‐hospital cardiac arrest offers inherent opportunities, such as near‐immediate arrest detection, rapid initiation of high‐quality chest compressions, and early defibrillation if indicated. Given the association between improved rates of successful defibrillation and high‐quality chest compressions, the 2005 American Heart Association (AHA) updates changed the recommended ventricular fibrillation/ventricular tachycardia (VF/VT) defibrillation sequence from 3 stacked shocks to a single shock followed by 2 minutes of chest compressions between defibrillation attempts.[5, 6] However, the recommendations were directed primarily at cases of out‐of‐hospital VF/VT CPA, and it remains unclear whether this strategy offers any advantage to patients who suffer an in‐hospital VF/VT arrest.[7]

Despite the aforementioned findings regarding the benefit of high‐quality chest compressions, there is a paucity of evidence in the medical literature on whether delivering a period of chest compressions before a defibrillation attempt, including the initial shock and shock sequence, translates to improved outcomes. With the exception of the statement recommending early defibrillation in case of in‐hospital arrest, there are no formal AHA consensus recommendations.[5, 8, 9] Here we document our experience using the approach of expedited stacked defibrillation shocks in persons experiencing monitored in‐hospital VF/VT arrest.

METHODS

Design

This was a retrospective study of observational data from our in‐hospital resuscitation database. Waiver of informed consent was granted by our institutional investigational review board.

Setting

This study was performed in the University of California San Diego Healthcare System, which includes 2 urban academic hospitals, with a combined total of approximately 500 beds. A designated team is activated in response to code blue requests and includes: code registered nurse (RN), code doctor of medicine (MD), airway MD, respiratory therapist, pharmacist, house nursing supervisor, primary RN, and unit charge RN. Crash carts with defibrillators (ZOLL R and E series; ZOLL Medical Corp., Chelmsford, MA) are located on each inpatient unit. Defibrillator features include real‐time cardiopulmonary resuscitation (CPR) feedback, filtered electrocardiography (ECG), and continuous waveform capnography.

Resuscitation training is provided for all hospital providers as part of the novel Advanced Resuscitation Training (ART) program, which was initiated in 2007.[10] Critical care nurses and physicians receive annual training, whereas noncritical care personnel undergo biennial training. The curriculum is adaptable to institutional treatment algorithms, equipment, and code response. Content is adaptive based on provider type, unit, and opportunities for improvement as revealed by performance improvement data. Resuscitation treatment algorithms are reviewed annually by the Critical Care Committee and Code Blue Subcommittee as part of the ART program, with modifications incorporated into the institutional policies and procedures.

Subjects

All admitted patients with continuous cardiac monitoring who suffered VF/VT arrest between July 2005 and June 2013 were included in this analysis. Patients with active do not attempt resuscitation orders were excluded. Patients were identified from our institutional resuscitation database, into which all in‐hospital cardiopulmonary arrest data are entered. We did not have data on individual patient comorbidity or severity of illness. Overall patient acuity over the course of the study was monitored hospital wide through case‐mix index (CMI). The index is based upon the allocation of hospital resources used to treat a diagnosis‐related group of patients and has previously been used as a surrogate for patient acuity.[11, 12, 13] The code RN who performed the resuscitation is responsible for entering data into a protected performance improvement database. Telecommunications records and the unit log are cross‐referenced to assure complete capture.

Protocols

Specific protocol similarities and differences among the 3 study periods are presented in Table 1.

| Protocol Variable | Stack Shock Period (2005–2008) | Initial Chest Compression Period (2008–2011) | Modified Stack Shock Period (2011–2013) |
|---|---|---|---|
| Defibrillator type | Medtronic/Physio Control LifePak 12 | Zoll E Series | Zoll E Series |
| Joule increment with defibrillation | 200J‐300J‐360J, manual escalation | 120J‐150J‐200J, manual escalation | 120J‐150J‐200J, automatic escalation |
| Distinction between monitored and unmonitored in‐hospital cardiopulmonary arrest | No | Yes | Yes |
| Chest compressions prior to initial defibrillation | No | Yes | No* |
| Initial defibrillation strategy | 3 expedited stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT | 2 minutes of chest compressions prior to initial and in between attempts | 3 expedited stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT* |
| Chest compression to ventilation ratio | 15:1 | Continuous chest compressions with ventilation at ratio 10:1 | Continuous chest compressions with ventilation at ratio 10:1 |
| Vasopressors | Epinephrine 1 mg IV/IO every 3–5 minutes | Epinephrine 1 mg IV/IO or vasopressin 40 units IV/IO every 3–5 minutes | Epinephrine 1 mg IV/IO or vasopressin 40 units IV/IO every 3–5 minutes |

Stacked Shock Period (2005–2008)

Historically, our institutional cardiopulmonary arrest protocols advocated early defibrillation with administration of 3 stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT before initiating/resuming chest compressions.

Initial Chest Compression Period (2008–2011)

In 2008 the protocol was modified to reflect recommendations to perform a 2‐minute period of chest compressions prior to each defibrillation, including the initial attempt.

Modified Stacked Shock Period (2011–2013)

Finally, in 2011 the protocol was modified again, and defibrillators were configured to allow automatic advancement of defibrillation energy (120J‐150J‐200J). The defibrillation protocol included the following elements.

For an unmonitored arrest, chest compressions and ventilations were initiated upon recognition of cardiopulmonary arrest. If VF/VT was identified upon placement of defibrillator pads, immediate countershock was performed and chest compressions resumed immediately for a period of 2 minutes before considering a repeat defibrillation attempt. A dose of epinephrine (1 mg intravenous [IV]/intraosseous [IO]) or vasopressin (40 units IV/IO) was administered as close to the reinitiation of chest compressions as possible. Defibrillation attempts proceeded with a single shock at a time, each preceded by 2 minutes of chest compressions.

For a monitored arrest, defibrillation attempts were expedited. Chest compressions without ventilations were initiated only until defibrillator pads were placed. Defibrillation attempts were initiated as soon as possible, with 3 or more successive shocks administered for persistent VF/VT (stacked shocks). Compressions were performed between shocks if they did not interfere with rhythm analysis. Compressions resumed following the initial series of stacked shocks with persistent CPA, regardless of rhythm, and pressors were administered (epinephrine 1 mg IV or vasopressin 40 units IV). Persistent VF/VT received defibrillation attempts every 2 minutes following the initial series of stacked shocks, with compressions performed continuously between attempts. Persistent VF/VT triggered emergent cardiology consultation for possible emergent percutaneous intervention.

Analysis

The primary outcome measure was defined as survival to hospital discharge at baseline and following each protocol change. The χ² test was used to compare the 3 time periods, with P < 0.05 defined as statistically significant. Specific group comparisons were made with Bonferroni correction, with P < 0.017 defined as statistically significant. Secondary outcome measures included return of spontaneous circulation (ROSC) and number of shocks required. Demographic and clinical data were also presented for each of the 3 study periods.
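The Bonferroni threshold quoted above can be reproduced with a short worked equation. This is a sketch that assumes all 3 possible pairwise comparisons among the 3 study periods were counted (m = 3); the symbols α, m, and α_adj are introduced here for illustration and do not appear in the original text.

$$
\alpha_{\text{adj}} = \frac{\alpha}{m} = \frac{0.05}{3} \approx 0.0167
$$

Rounded to three decimal places, this gives the P < 0.017 cutoff used for the specific group comparisons.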

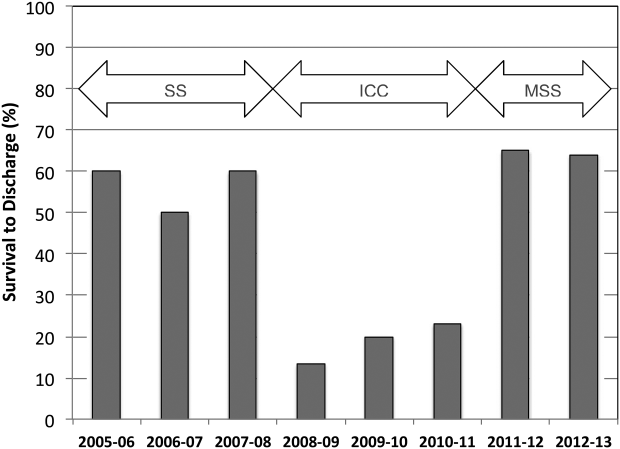

RESULTS

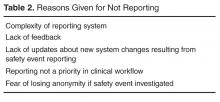

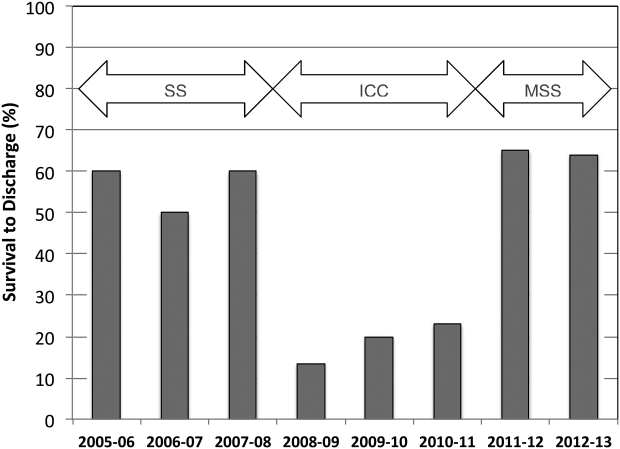

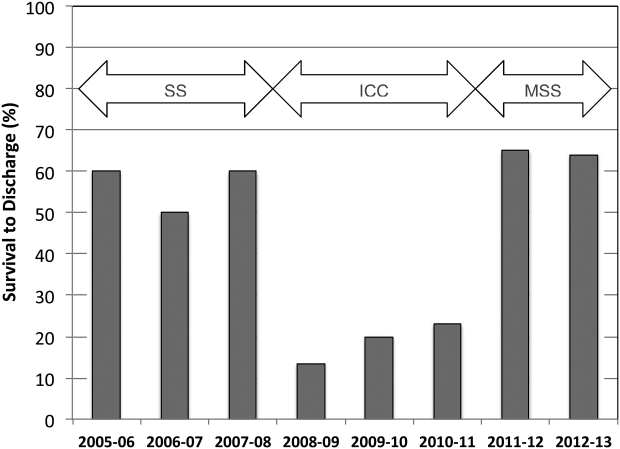

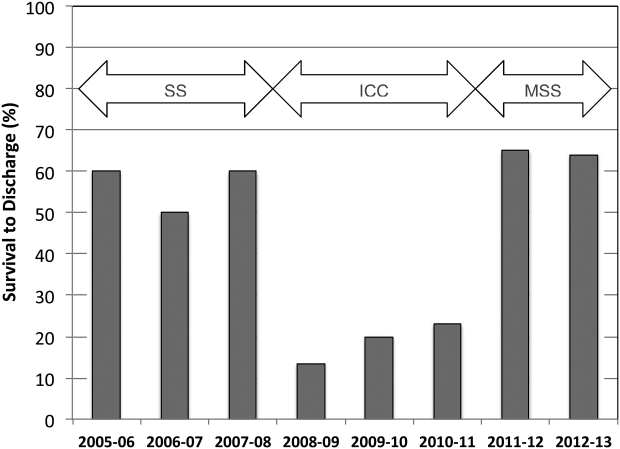

A total of 661 cardiopulmonary arrests of all rhythms were identified during the entire study period. Primary VF/VT arrest was identified in 106 patients (16%). Of these, 102 (96%) were being monitored with continuous ECG at the time of arrest. Demographic and clinical information for the entire study cohort is displayed in Table 2. There were no differences in age, gender, time of arrest, and location of arrest between study periods (all P > 0.05). The incidence of VF/VT arrest did not vary significantly between the study periods (P = 0.16). There were no differences in mean number of defibrillation attempts per arrest; however, there was a significant improvement in the rate of perfusing rhythm after the initial set of defibrillation attempts and in overall ROSC favoring stacked shocks (all P < 0.05, Table 2). Survival‐to‐hospital discharge for all VF/VT arrest victims decreased, then increased significantly from the stacked shock period to the initial chest compression period to the modified stacked shock period (58%, 18%, 71%, respectively, P < 0.01, Figure 1). After Bonferroni correction, specific group differences were significant between the stacked shock and initial chest compression groups (P < 0.01) and between the modified stacked shock and initial chest compression groups (P < 0.01, Table 2). Finally, the incidence of bystander CPR appeared to be significantly greater in the modified stacked shock period following implementation of our resuscitation program (Table 2). Overall hospital CMI differed significantly between fiscal years 2005/2006 and 2012/2013 (1.47 vs 1.71, P < 0.0001).

| Parameter | Stacked Shocks (n = 31) | Initial Chest Compressions (n = 33) | Modified Stack Shocks (n = 42) |
|---|---|---|---|
| Age (y) | 54.3 | 64.3 | 59.8 |
| Male gender (%) | 16 (52) | 21 (64) | 21 (50) |
| VF/PVT arrest incidence (per 1,000 admissions) | 0.49 | 0.70 | |
| Arrest 7 am–5 pm (%) | 15 (48) | 17 (52) | 21 (50) |
| Non‐ICU location (%) | 13 (42) | 15 (45) | 17 (40) |
| CPR prior to code team arrival (%) | 22 (71)* | 31 (94) | 42 (100) |
| Perfusing rhythm after initial set of defibrillation attempts (%) | 37 | 33 | 70 |
| Mean defibrillation attempts (no.) | 1.3 | 1.8 | 1.5 |
| ROSC (%) | 76 | 56 | 90 |
| Survival‐to‐hospital discharge (%) | 18 (58) | 6 (18) | 30 (71) |
| Case‐mix index (average coefficient by period) | 1.51 | 1.60 | 1.69∥ |
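The survival comparison can be recomputed from the counts in Table 2 as a rough check. The following is a minimal sketch, not the authors' analysis: it assumes a Pearson χ² test without continuity correction (the source does not state the software or any correction used), and the names `PERIODS`, `chi_square`, `p_value`, `overall_p`, `alpha_adjusted`, and `pairwise` are hypothetical.

```python
import math
from itertools import combinations

# (survivors, total arrests) per period, taken from Table 2.
PERIODS = {
    "stacked": (18, 31),
    "initial_cc": (6, 33),
    "modified_stacked": (30, 42),
}


def chi_square(groups):
    """Pearson chi-square statistic (no continuity correction) for a 2 x k table."""
    total = sum(n for _, n in groups)
    survived_total = sum(s for s, _ in groups)
    died_total = total - survived_total
    stat = 0.0
    for survived, n in groups:
        cells = ((survived, survived_total), (n - survived, died_total))
        for observed, column_total in cells:
            expected = n * column_total / total
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value(stat, df):
    """Upper-tail chi-square probability; closed forms exist for df = 1 and df = 2."""
    if df == 1:
        return math.erfc(math.sqrt(stat / 2))
    if df == 2:
        return math.exp(-stat / 2)
    raise ValueError("only df = 1 or df = 2 is handled in this sketch")


# Overall comparison of the 3 periods (2 x 3 table, df = 2).
overall_p = p_value(chi_square(list(PERIODS.values())), df=2)

# Pairwise comparisons (2 x 2 tables, df = 1) against the Bonferroni threshold.
alpha_adjusted = 0.05 / 3
pairwise = {
    (a, b): p_value(chi_square([PERIODS[a], PERIODS[b]]), df=1)
    for a, b in combinations(PERIODS, 2)
}

print(f"overall P = {overall_p:.2g}")
for pair, p in pairwise.items():
    verdict = "significant" if p < alpha_adjusted else "not significant"
    print(pair, f"P = {p:.2g}", verdict)
```

Under these assumptions, the overall comparison falls below P < 0.01, and the two comparisons involving the initial chest compression period fall below the Bonferroni threshold while the stacked versus modified stacked comparison does not, which matches the pattern reported in the text.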

DISCUSSION

The specific focus of this observation was to report on defibrillation strategies that have previously only been reported in an out‐of‐hospital setting. There is no current consensus regarding chest compressions for a predetermined amount of time prior to defibrillation in an inpatient setting. Here we present data suggesting improved outcomes using an approach that expedited defibrillation and included a defibrillation strategy of stacked shocks (stacked shock and modified stack shock, respectively) in monitored inpatient VF/VT arrest.

Early out‐of‐hospital studies initially demonstrated a significant survival benefit for patients who received 1.5 to 3 minutes of chest compressions preceding defibrillation with reported arrest downtimes of 4 to 5 minutes prior to emergency medical services arrival.[14, 15] However, in more recent randomized controlled trials, outcome was not improved when chest compressions were performed prior to the defibrillation attempt.[16, 17] Our findings suggest that there is no one‐size‐fits‐all approach to chest compression and defibrillation strategy. Instead, we suggest that factors such as whether the arrest was monitored should inform decision making and the timing of defibrillation.

Our findings favoring expedited defibrillation and stacked shocks in witnessed arrest are consistent with the 3‐phase model of cardiac arrest proposed by Weisfeldt and Becker suggesting that defibrillation success is related to the energy status of the heart.[18] In this model, the first 4 minutes of VF arrest (electrical phase) are characterized by a high‐energy state with higher adenosine triphosphate (ATP)/adenosine monophosphate (AMP) ratios that are associated with increased likelihood for ROSC after defibrillation attempt.[19] Further, VF appears to deplete ATP/AMP ratios after about 4 minutes, at which point the likelihood of defibrillation success is substantially diminished.[18] Between 4 and 10 minutes (circulatory phase), energy stores in the myocardium are severely depleted. However, there is evidence to suggest that high‐quality chest compressions and high chest compression fraction, particularly in conjunction with epinephrine, can replenish ATP stores and increase the likelihood of defibrillation success.[6, 20] Beyond 10 minutes (metabolic phase), survival rates are abysmal, with no therapy yet identified producing clinical utility.

The secondary analyses reveal several interesting trends. We anticipated a higher number of defibrillation attempts during phase II due to a lower likelihood of conversion with a CPR‐first approach. Instead, the number of shocks was similar across all 3 periods. Our findings are consistent with previous reports of a low single or first shock probability of successful defibrillation. However, recent reports document that approximately 80% of patients who ultimately survive to discharge are successfully defibrillated within the first 3 shocks.[21, 22, 23]

It appears that the likelihood of conversion to a perfusing rhythm is higher with expedited, stacked shocks. This underscores the importance of identifying an optimal approach to the treatment of VF/VT, as the initial series of defibrillation attempts may determine outcomes. There also appeared to be an increase in the incidence of VF/VT during the modified stack shock period, although this was not statistically significant. The modified stack shock period correlated temporally with the expansion of our institution's cardiovascular service and the opening of a dedicated inpatient facility, which likely influenced our mixture of inpatients.

These data should be interpreted with consideration of study limitations. Primarily, we did not attempt to determine arrest times prior to initial defibrillation attempts, which is likely an important variable. However, we limited our study population to individuals experiencing VF/VT arrest that was witnessed by hospital care staff or occurred while on a cardiac monitor. We are confident that these selective criteria resulted in expedited identification and response times well within the electrical phase. We did not evaluate differences or changes in individual patient‐level severity of illness that may have potentially confounded outcome analysis. The effects of individual‐level severity of illness and comorbidity are not known. Instead, we used CMI coefficients to explore hospital‐wide changes in patient acuity during the study period. We noticed an increasing case‐mix coefficient value suggesting higher patient acuity, which would predict increased mortality rather than the decrease noted between the initial chest compression and modified stacked shock periods (Table 2). In addition, we did not integrate CPR process variables, such as depth, rate, recoil, chest compression fraction, and per‐shock pauses, into this analysis. Our previous studies indicated that high‐quality CPR may account for a significant amount of improvement in outcomes following our novel resuscitation program implementation in 2007.[10, 24] Since the program's inception, we have reported continuous improvement in overall in‐hospital mortality that was sustained throughout the duration of the study period despite the significant changes reported in the 3 periods with monitored VF/VT arrest.[10] The use of medications prior to initial defibrillation attempts was not recorded. We have recently reported that during the same period of data collection, there were no significant changes in the use of epinephrine; however, there was a significant increase in the use of vasopressin.[10] It is unclear whether the increased use of vasopressin contributed to the current outcomes. However, given our cohort of witnessed in‐hospital cardiac arrests with an initial shockable rhythm, we consider vasopressor use prior to the defibrillation attempt unlikely.

Additional important limitations and potential confounding factors in this study were the use of 2 different types of defibrillators, differing escalating energy strategies, and differing defibrillator waveforms. Recent evidence supports biphasic waveforms as more effective than monophasic waveforms.[25, 26, 27] Comparison of defibrillator brand and waveform superiority is outside the scope of this study; however, it is interesting to note similar high rates of survival in the stacked shock and modified stack shock phases despite use of different defibrillator brands and waveforms during those respective phases. Regarding escalating energy of defibrillation countershocks, the most recent 2010 AHA guidelines have no position on the superiority of either manual or automatic escalation.[7] However, we noted similar high rates of survival in the stacked shock and modified stack shock periods despite use of differing escalating strategies. Finally, we used survival‐to‐hospital discharge as our main outcome measure rather than neurological status. However, prior studies from our institution suggest that most VF/VT survivors have good neurological outcomes, which are influenced heavily by preadmission functional status.[24]

CONCLUSIONS

Our data suggest that in cases of monitored VF/VT arrest, expeditious defibrillation with use of stacked shocks is associated with a higher rate of ROSC and survival to hospital discharge.

Disclosure: Nothing to report.

- Strategies for improving survival after in‐hospital cardiac arrest in the United States: 2013 consensus recommendations: a consensus statement from the American Heart Association. Circulation. 2013;127:1538–1563.

- Survival from in‐hospital cardiac arrest during nights and weekends. JAMA. 2008;299:785–792.

- Heart disease and stroke statistics–2008 update: a report from the American Heart Association Statistics Committee and Stroke Statistics Subcommittee. Circulation. 2008;117:e25–e146.

- Predictors of survival from out‐of‐hospital cardiac arrest: a systematic review and meta‐analysis. Circ Cardiovasc Qual Outcomes. 2010;3:63–81.

- Quality of cardiopulmonary resuscitation during in‐hospital cardiac arrest. JAMA. 2005;293:305–310.

- Chest compression fraction determines survival in patients with out‐of‐hospital ventricular fibrillation. Circulation. 2009;120:1241–1247.

- Part 6: Defibrillation: 2010 International Consensus on Cardiopulmonary Resuscitation and Emergency Cardiovascular Care Science With Treatment Recommendations. Circulation. 2010;122:S325–S337.

- Part 1: executive summary: 2010 American Heart Association Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2010;122:S640–S656.

- Part 8: adult advanced cardiovascular life support: 2010 American Heart Association Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2010;122:S729–S767.

- A performance improvement‐based resuscitation programme reduces arrest incidence and increases survival from in‐hospital cardiac arrest. Resuscitation. 2015;92:63–69.

- The evolution of case‐mix measurement using DRGs: past, present and future. Stud Health Technol Inform. 1994;14:75–83.

- Variability in case‐mix adjusted in‐hospital cardiac arrest rates. Med Care. 2012;50:124–130.

- Impact of socioeconomic adjustment on physicians' relative cost of care. Med Care. 2013;51:454–460.

- Influence of cardiopulmonary resuscitation prior to defibrillation in patients with out‐of‐hospital ventricular fibrillation. JAMA. 1999;281:1182–1188.

- Delaying defibrillation to give basic cardiopulmonary resuscitation to patients with out‐of‐hospital ventricular fibrillation: a randomized trial. JAMA. 2003;289:1389–1395.

- Defibrillation or cardiopulmonary resuscitation first for patients with out‐of‐hospital cardiac arrests found by paramedics to be in ventricular fibrillation? A randomised control trial. Resuscitation. 2008;79:424–431.

- CPR before defibrillation in out‐of‐hospital cardiac arrest: a randomized trial. Emerg Med Australas. 2005;17:39–45.

- Resuscitation after cardiac arrest: a 3‐phase time‐sensitive model. JAMA. 2002;288:3035–3038.

- Association of intramyocardial high energy phosphate concentrations with quantitative measures of the ventricular fibrillation electrocardiogram waveform. Resuscitation. 2009;80:946–950.

- Ventricular fibrillation median frequency may not be useful for monitoring during cardiac arrest treated with endothelin‐1 or epinephrine. Anesth Analg. 2004;99:1787–1793.

- “Probability of successful defibrillation” as a monitor during CPR in out‐of‐hospital cardiac arrested patients. Resuscitation. 2015;48:245–254.

- Shockable rhythms and defibrillation during in‐hospital pediatric cardiac arrest. Resuscitation. 2014;85:387–391.

- Beyond the pre‐shock pause: the effect of prehospital defibrillation mode on CPR interruptions and return of spontaneous circulation. Resuscitation. 2013;84:575–579.

- Implementing a “resuscitation bundle” decreases incidence and improves outcomes in inpatient cardiopulmonary arrest. Circulation. 2009;120(18 Suppl):S1441.

- Multicenter, randomized, controlled trial of 150‐J biphasic shocks compared with 200‐ to 360‐J monophasic shocks in the resuscitation of out‐of‐hospital cardiac arrest victims. Optimized Response to Cardiac Arrest (ORCA) Investigators. Circulation. 2000;102:1780–1787.

- A prospective, randomised and blinded comparison of first shock success of monophasic and biphasic waveforms in out‐of‐hospital cardiac arrest. Resuscitation. 2003;58:17–24.

- Out‐of‐hospital cardiac arrest rectilinear biphasic to monophasic damped sine defibrillation waveforms with advanced life support intervention trial (ORBIT). Resuscitation. 2005;66:149–157.

Cardiopulmonary arrest (CPA) is a major contributor to overall mortality in both the in‐ and out‐of‐hospital setting.[1, 2, 3] Despite advances in the field of resuscitation science, mortality from CPA remains high.[1, 4] Unlike the out‐of‐hospital environment, inpatient CPA is unique, as trained healthcare providers are the primary responders with a range of expertise available throughout the duration of arrest.

There are inherent opportunities of in‐hospital cardiac arrest that exist, such as the opportunity for near immediate arrest detection, rapid initiation of high‐quality chest compressions, and early defibrillation if indicated. Given the association between improved rates of successful defibrillation and high‐quality chest compressions, the 2005 American Heart Association (AHA) updates changed the recommended guideline ventricular fibrillation/ventricular tachycardia (VF/VT) defibrillation sequence from 3 stacked shocks to a single shock followed by 2 minutes of chest compressions between defibrillation attempts.[5, 6] However, the recommendations were directed primarily at cases of out‐of‐hospital VF/VT CPA, and it currently remains unclear as to whether this strategy offers any advantage to patients who suffer an in‐hospital VF/VT arrest.[7]

Despite the aforementioned findings regarding the benefit of high‐quality chest compressions, there is a paucity of evidence in the medical literature to support whether delivering a period of chest compressions before defibrillation attempt, including initial shock and shock sequence, translate to improved outcomes. With the exception of the statement recommending early defibrillation in case of in‐hospital arrest, there are no formal AHA consensus recommendations.[5, 8, 9] Here we document our experience using the approach of expedited stacked defibrillation shocks in persons experiencing monitored in‐hospital VF/VT arrest.

METHODS

Design

This was a retrospective study of observational data from our in‐hospital resuscitation database. Waiver of informed consent was granted by our institutional investigational review board.

Setting

This study was performed in the University of California San Diego Healthcare System, which includes 2 urban academic hospitals, with a combined total of approximately 500 beds. A designated team is activated in response to code blue requests and includes: code registered nurse (RN), code doctor of medicine (MD), airway MD, respiratory therapist, pharmacist, house nursing supervisor, primary RN, and unit charge RN. Crash carts with defibrillators (ZOLL R and E series; ZOLL Medical Corp., Chelmsford, MA) are located on each inpatient unit. Defibrillator features include real‐time cardiopulmonary resuscitation (CPR) feedback, filtered electrocardiography (ECG), and continuous waveform capnography.

Resuscitation training is provided for all hospital providers as part of the novel Advanced Resuscitation Training (ART) program, which was initiated in 2007.[10] Critical care nurses and physicians receive annual training, whereas noncritical care personnel undergo biennial training. The curriculum is adaptable to institutional treatment algorithms, equipment, and code response. Content is adaptive based on provider type, unit, and opportunities for improvement as revealed by performance improvement data. Resuscitation treatment algorithms are reviewed annually by the Critical Care Committee and Code Blue Subcommittee as part of the ART program, with modifications incorporated into the institutional policies and procedures.

Subjects

All admitted patients with continuous cardiac monitoring who suffered VF/VT arrest between July 2005 and June 2013 were included in this analysis. Patients with active do not attempt resuscitation orders were excluded. Patients were identified from our institutional resuscitation database, into which all in‐hospital cardiopulmonary arrest data are entered. We did not have data on individual patient comorbidity or severity of illness. Overall patient acuity over the course of the study was monitored hospital wide through case‐mix index (CMI). The index is based upon the allocation of hospital resources used to treat a diagnosis‐related group of patients and has previously been used as a surrogate for patient acuity.[11, 12, 13] The code RN who performed the resuscitation is responsible for entering data into a protected performance improvement database. Telecommunications records and the unit log are cross‐referenced to assure complete capture.

Protocols

Specific protocol similarities and differences among the 3 study periods are presented in Table 1.

| Protocol Variable | Stack Shock Period (20052008) | Initial Chest Compression Period (20082011) | Modified Stack Shock Period (20112013) |

|---|---|---|---|

| |||

| Defibrillator type | Medtronic/Physio Control LifePak 12 | Zoll E Series | Zoll E Series |

| Joule increment with defibrillation | 200J‐300J‐360J, manual escalation | 120J‐150J‐200J, manual escalation | 120J‐150J‐200J, automatic escalation |

| Distinction between monitored and unmonitored in‐hospital cardiopulmonary arrest | No | Yes | Yes |

| Chest compressions prior to initial defibrillation | No | Yes | No* |

| Initial defibrillation strategy | 3 expedited stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT | 2 minutes of chest compressions prior to initial and in between attempts | 3 expedited stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT* |

| Chest compression to ventilation ratio | 15:1 | Continuous chest compressions with ventilation at ratio 10:1 | Continuous chest compressions with ventilation at ratio 10:1 |

| Vasopressors | Epinephrine 1 mg IV/IO every 35 minutes. | Epinephrine 1 mg IV/IO or vasopressin 40 units IV/IO every 35 minutes | Epinephrine 1 mg IV/IO or vasopressin 40 units IV/IO every 35 minutes. |

Stacked Shock Period (20052008)

Historically, our institutional cardiopulmonary arrest protocols advocated early defibrillation with administration of 3 stacked shocks with a brief pause between each single defibrillation attempt to confirm sustained VF/VT before initiating/resuming chest compressions.

Initial Chest Compression Period (20082011)

In 2008 the protocol was modified to reflect recommendations to perform a 2‐minute period of chest compressions prior to each defibrillation, including the initial attempt.

Modified Stacked Shack Period (20112013)

Finally, in 2011 the protocol was modified again, and defibrillators were configured to allow automatic advancement of defibrillation energy (120J‐150J‐200J). The defibrillation protocol included the following elements.

For an unmonitored arrest, chest compressions and ventilations should be initiated upon recognition of cardiopulmonary arrest. If VF/VT was identified upon placement of defibrillator pads, immediate counter shock was performed and chest compressions resumed immediately for a period of 2 minutes before considering a repeat defibrillation attempt. A dose of epinephrine (1 mg intravenous [IV]/emntraosseous [IO]) or vasopressin (40 units IV/IO) was administered as close to the reinitiation of chest compressions as possible. Defibrillation attempts proceeded with a single shock at a time, each preceded by 2 minutes of chest compressions.

For a monitored arrest, defibrillation attempts were expedited. Chest compressions without ventilations were initiated only until defibrillator pads were placed. Defibrillation attempts were initiated as soon as possible, with at least 3 or more successive shocks administered for persistent VF/VT (stacked shocks). Compressions were performed between shocks if they did not interfere with rhythm analysis. Compressions resumed following the initial series of stacked shocks with persistent CPA, regardless of rhythm, and pressors administered (epinephrine 1 mg IV or vasopressin 40 units IV). Persistent VF/VT received defibrillation attempts every 2 minutes following the initial series of stacked shocks, with compressions performed continuously between attempts. Persistent VF/VT should trigger emergent cardiology consultation for possible emergent percutaneous intervention.

Analysis

The primary outcome measure was defined as survival to hospital discharge at baseline and following each protocol change. 2 was used to compare the 3 time periods, with P < 0.05 defined as statistically significant. Specific group comparisons were made with Bonferroni correction, with P < 0.017 defined as statistically significant. Secondary outcome measures included return of spontaneous circulation (ROSC) and number of shocks required. Demographic and clinical data were also presented for each of the 3 study periods.

RESULTS

A total of 661 cardiopulmonary arrests of all rhythms were identified during the entire study period. Primary VF/VT arrest was identified in 106 patients (16%). Of these, 102 (96%) were being monitored with continuous ECG at the time of arrest. Demographic and clinical information for the entire study cohort is displayed in Table 2. There were no differences in age, gender, time of arrest, or location of arrest between study periods (all P > 0.05). The incidence of VF/VT arrest did not vary significantly between the study periods (P = 0.16). There were no differences in the mean number of defibrillation attempts per arrest; however, the rate of perfusing rhythm after the initial set of defibrillation attempts and overall ROSC were both significantly higher with stacked shocks (all P < 0.05, Table 2). Survival to hospital discharge for all VF/VT arrest victims decreased from the stacked shock period to the initial chest compression period and then increased in the modified stacked shock period (58%, 18%, and 71%, respectively; P < 0.01, Figure 1). After Bonferroni correction, specific group differences were significant between the stacked shock and initial chest compression groups (P < 0.01) and between the modified stacked shock and initial chest compression groups (P < 0.01, Table 2). Finally, the incidence of bystander CPR appeared to be significantly greater in the modified stacked shock period following implementation of our resuscitation program (Table 2). Overall hospital CMI differed significantly across fiscal years 2005/2006 through 2012/2013 (1.47 vs 1.71, P < 0.0001).

| Parameter | Stacked Shocks (n = 31) | Initial Chest Compressions (n = 33) | Modified Stack Shocks (n = 42) |
|---|---|---|---|
| Age (y) | 54.3 | 64.3 | 59.8 |
| Male gender (%) | 16 (52) | 21 (64) | 21 (50) |
| VF/PVT arrest incidence (per 1,000 admissions) | 0.49 | 0.70 | |
| Arrest 7 am–5 pm (%) | 15 (48) | 17 (52) | 21 (50) |
| Non‐ICU location (%) | 13 (42) | 15 (45) | 17 (40) |
| CPR prior to code team arrival (%) | 22 (71)* | 31 (94) | 42 (100) |
| Perfusing rhythm after initial set of defibrillation attempts (%) | 37 | 33 | 70 |
| Mean defibrillation attempts (no.) | 1.3 | 1.8 | 1.5 |
| ROSC (%) | 76 | 56 | 90 |
| Survival‐to‐hospital discharge (%) | 18 (58) | 6 (18) | 30 (71) |
| Case‐mix index (average coefficient by period) | 1.51 | 1.60 | 1.69∥ |

DISCUSSION

The specific focus of this observation was to report on defibrillation strategies that have previously only been reported in an out‐of‐hospital setting. There is no current consensus regarding chest compressions for a predetermined amount of time prior to defibrillation in an inpatient setting. Here we present data suggesting improved outcomes using an approach that expedited defibrillation and included a defibrillation strategy of stacked shocks (the stacked shock and modified stacked shock periods) in monitored inpatient VF/VT arrest.

Early out‐of‐hospital studies initially demonstrated a significant survival benefit for patients who received 1.5 to 3 minutes of chest compressions preceding defibrillation with reported arrest downtimes of 4 to 5 minutes prior to emergency medical services arrival.[14, 15] However, in more recent randomized controlled trials, outcome was not improved when chest compressions were performed prior to defibrillation attempt.[16, 17] Our findings suggest that there is no one‐size‐fits‐all approach to chest compression and defibrillation strategy. Instead, we suggest that factors such as whether the arrest occurred while the patient was monitored should inform decision making and the timing of defibrillation.

Our findings favoring expedited defibrillation and stacked shocks in witnessed arrest are consistent with the 3‐phase model of cardiac arrest proposed by Weisfeldt and Becker suggesting that defibrillation success is related to the energy status of the heart.[18] In this model, the first 4 minutes of VF arrest (electrical phase) are characterized by a high‐energy state with higher adenosine triphosphate (ATP)/adenosine monophosphate (AMP) ratios that are associated with increased likelihood for ROSC after defibrillation attempt.[19] Further, VF appears to deplete ATP/AMP ratios after about 4 minutes, at which point the likelihood of defibrillation success is substantially diminished.[18] Between 4 and 10 minutes (circulatory phase), energy stores in the myocardium are severely depleted. However, there is evidence to suggest that high‐quality chest compressions and high chest compression fraction, particularly in conjunction with epinephrine, can replenish ATP stores and increase the likelihood of defibrillation success.[6, 20] Beyond 10 minutes (metabolic phase), survival rates are abysmal, with no therapy yet identified producing clinical utility.
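The time windows of the 3‐phase model can be written as a simple classification. This is a minimal sketch for illustration only; the function name `arrest_phase` is hypothetical, and the handling of the exact 4‐ and 10‐minute boundaries is an assumption, since the model describes approximate rather than sharp cutoffs.

```python
def arrest_phase(minutes_since_onset: float) -> str:
    """Map time since VF onset to the phase named in the 3-phase model."""
    if minutes_since_onset < 0:
        raise ValueError("time since onset cannot be negative")
    if minutes_since_onset < 4:
        return "electrical"   # first 4 minutes: high-energy state
    if minutes_since_onset <= 10:
        return "circulatory"  # 4 to 10 minutes: myocardial energy stores depleted
    return "metabolic"        # beyond 10 minutes


phases = [arrest_phase(t) for t in (2, 6, 15)]
```

For example, an arrest recognized 2 minutes after onset is classified as electrical phase, which is the window the monitored cohort in this study is presumed to fall within.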

The secondary analyses reveal several interesting trends. We anticipated a higher number of defibrillation attempts during the initial chest compression period due to a lower likelihood of conversion with a CPR‐first approach. Instead, the number of shocks was similar across all 3 periods. Our findings are consistent with previous reports of a low single or first shock probability of successful defibrillation. However, recent reports document that approximately 80% of patients who ultimately survive to discharge are successfully defibrillated within the first 3 shocks.[21, 22, 23]

It appears that the likelihood of conversion to a perfusing rhythm is higher with expedited, stacked shocks. This underscores the importance of identifying an optimal approach to the treatment of VF/VT, as the initial series of defibrillation attempts may determine outcomes. There also appeared to be an increase in the incidence of VF/VT during the modified stack shock period, although this was not statistically significant. The modified stack shock period correlated temporally with the expansion of our institution's cardiovascular service and the opening of a dedicated inpatient facility, which likely influenced our mixture of inpatients.

These data should be interpreted with consideration of study limitations. Primarily, we did not attempt to determine arrest times prior to initial defibrillation attempts, which is likely an important variable. However, we limited our study population to individuals experiencing VF/VT arrest that was witnessed by hospital care staff or occurred while on a cardiac monitor. We are confident that these selective criteria resulted in expedited identification and response times well within the electrical phase. We did not evaluate differences or changes in individual patient‐level severity of illness that may have confounded the outcome analysis, and the effects of individual‐level severity of illness and comorbidity are not known. Instead, we used CMI coefficients to explore hospital‐wide changes in patient acuity during the study period. We noticed an increasing case‐mix coefficient value suggesting higher patient acuity, which would predict increased mortality rather than the decrease noted between the initial chest compression and modified stacked shock periods (Table 2). In addition, we did not integrate CPR process variables, such as depth, rate, recoil, chest compression fraction, and peri‐shock pauses, into this analysis. Our previous studies indicated that high‐quality CPR may account for a significant amount of improvement in outcomes following our novel resuscitation program implementation in 2007.[10, 24] Since the program's inception, we have reported continuous improvement in overall in‐hospital mortality that was sustained throughout the duration of the study period despite the significant changes reported in the 3 periods with monitored VF/VT arrest.[10] The use of medications prior to initial defibrillation attempts was not recorded. We have recently reported that during the same period of data collection, there were no significant changes in the use of epinephrine; however, there was a significant increase in the use of vasopressin.[10] It is unclear whether the increased use of vasopressin contributed to the current outcomes. However, given our cohort of witnessed in‐hospital cardiac arrests with an initial shockable rhythm, we consider the use of vasopressors prior to a defibrillation attempt to be unlikely.

Additional important limitations and potential confounding factors in this study were the use of 2 different types of defibrillators, differing escalating energy strategies, and differing defibrillator waveforms. Recent evidence supports biphasic waveforms as more effective than monophasic waveforms.[25, 26, 27] Comparison of defibrillator brand and waveform superiority is outside the scope of this study; however, it is interesting to note similar high rates of survival in the stacked shock and modified stack shock phases despite use of different defibrillator brands and waveforms during those respective phases. Regarding escalating energy of defibrillation countershocks, the most recent 2010 AHA guidelines take no position on the superiority of either manual or automatic escalation.[7] However, we noted similar high rates of survival in the stacked shock and modified stack shock periods despite use of differing escalating strategies. Finally, we used survival‐to‐hospital discharge as our main outcome measure rather than neurological status. However, prior studies from our institution suggest that most VF/VT survivors have good neurological outcomes, which are influenced heavily by preadmission functional status.[24]

CONCLUSIONS

Our data suggest that in cases of monitored VF/VT arrest, expeditious defibrillation with use of stacked shocks is associated with a higher rate of ROSC and survival to hospital discharge.

Disclosure: Nothing to report.

- Strategies for improving survival after in‐hospital cardiac arrest in the United States: 2013 consensus recommendations: a consensus statement from the American Heart Association. Circulation. 2013;127:1538–1563.

- Survival from in‐hospital cardiac arrest during nights and weekends. JAMA. 2008;299:785–792.

- Heart disease and stroke statistics—2008 update: a report from the American Heart Association Statistics Committee and Stroke Statistics Subcommittee. Circulation. 2008;117:e25–e146.

- Predictors of survival from out‐of‐hospital cardiac arrest: a systematic review and meta‐analysis. Circ Cardiovasc Qual Outcomes. 2010;3:63–81.

- Quality of cardiopulmonary resuscitation during in‐hospital cardiac arrest. JAMA. 2005;293:305–310.

- Chest compression fraction determines survival in patients with out‐of‐hospital ventricular fibrillation. Circulation. 2009;120:1241–1247.

- Part 6: Defibrillation: 2010 International Consensus on Cardiopulmonary Resuscitation and Emergency Cardiovascular Care Science With Treatment Recommendations. Circulation. 2010;122:S325–S337.

- Part 1: executive summary: 2010 American Heart Association Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2010;122:S640–S656.

- Part 8: adult advanced cardiovascular life support: 2010 American Heart Association Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2010;122:S729–S767.

- A performance improvement‐based resuscitation programme reduces arrest incidence and increases survival from in‐hospital cardiac arrest. Resuscitation. 2015;92:63–69.

- The evolution of case‐mix measurement using DRGs: past, present and future. Stud Health Technol Inform. 1994;14:75–83.

- Variability in case‐mix adjusted in‐hospital cardiac arrest rates. Med Care. 2012;50:124–130.

- Impact of socioeconomic adjustment on physicians' relative cost of care. Med Care. 2013;51:454–460.

- Influence of cardiopulmonary resuscitation prior to defibrillation in patients with out‐of‐hospital ventricular fibrillation. JAMA. 1999;281:1182–1188.

- Delaying defibrillation to give basic cardiopulmonary resuscitation to patients with out‐of‐hospital ventricular fibrillation: a randomized trial. JAMA. 2003;289:1389–1395.

- Defibrillation or cardiopulmonary resuscitation first for patients with out‐of‐hospital cardiac arrests found by paramedics to be in ventricular fibrillation? A randomised control trial. Resuscitation. 2008;79:424–431.

- CPR before defibrillation in out‐of‐hospital cardiac arrest: a randomized trial. Emerg Med Australas. 2005;17:39–45.

- Resuscitation after cardiac arrest: a 3‐phase time‐sensitive model. JAMA. 2002;288:3035–3038.

- Association of intramyocardial high energy phosphate concentrations with quantitative measures of the ventricular fibrillation electrocardiogram waveform. Resuscitation. 2009;80:946–950.

- Ventricular fibrillation median frequency may not be useful for monitoring during cardiac arrest treated with endothelin‐1 or epinephrine. Anesth Analg. 2004;99:1787–1793.

- “Probability of successful defibrillation” as a monitor during CPR in out‐of‐hospital cardiac arrested patients. Resuscitation. 2015;48:245–254.

- Shockable rhythms and defibrillation during in‐hospital pediatric cardiac arrest. Resuscitation. 2014;85:387–391.

- Beyond the pre‐shock pause: the effect of prehospital defibrillation mode on CPR interruptions and return of spontaneous circulation. Resuscitation. 2013;84:575–579.

- Implementing a “resuscitation bundle” decreases incidence and improves outcomes in inpatient cardiopulmonary arrest. Circulation. 2009;120(18 Suppl):S1441.

- Multicenter, randomized, controlled trial of 150‐J biphasic shocks compared with 200‐ to 360‐J monophasic shocks in the resuscitation of out‐of‐hospital cardiac arrest victims. Optimized Response to Cardiac Arrest (ORCA) Investigators. Circulation. 2000;102:1780–1787.

- A prospective, randomised and blinded comparison of first shock success of monophasic and biphasic waveforms in out‐of‐hospital cardiac arrest. Resuscitation. 2003;58:17–24.

- Out‐of‐hospital cardiac arrest rectilinear biphasic to monophasic damped sine defibrillation waveforms with advanced life support intervention trial (ORBIT). Resuscitation. 2005;66:149–157.

© 2015 Society of Hospital Medicine

Encouraging Use of the MyFitnessPal App Does Not Lead to Weight Loss in Primary Care Patients

Study Overview

Objective. To evaluate the effectiveness and impact of using MyFitnessPal, a free, popular smartphone application (“app”), for weight loss.

Study design. 2-arm randomized controlled trial.

Setting and participants. Participants were recruited from 2 primary care clinics in the University of California, Los Angeles health system. The inclusion criteria were age ≥ 18 years, body mass index (BMI) ≥ 25 kg/m², an interest in losing weight, and ownership of a smartphone. The exclusion criteria were pregnancy, hemodialysis, life expectancy less than 6 months, lack of interest in weight loss, and current use of a smartphone app for weight loss. Out of 633 individuals assessed, 212 were eligible for the study. Participants were block randomized within 2 BMI strata (25–30 kg/m² and > 30 kg/m²) to either usual primary care (n = 107) or usual primary care plus the app (n = 105).
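The stratified block randomization described above can be illustrated with a minimal Python sketch. It is not the authors' procedure: the block size of 4, the seed, the arm labels, and the participant IDs and BMI values are all hypothetical, chosen only to show how permuted blocks keep the arms balanced within each BMI stratum.

```python
import random


def block_randomize(participant_ids, block_size=4, seed=0):
    """Assign IDs to 'control' or 'app' in permuted blocks (hypothetical block size)."""
    rng = random.Random(seed)
    assignments = {}
    for start in range(0, len(participant_ids), block_size):
        block_ids = participant_ids[start:start + block_size]
        # Each full block contains equal numbers of both arms in random order.
        arms = ["control", "app"] * (block_size // 2)
        rng.shuffle(arms)
        for pid, arm in zip(block_ids, arms):
            assignments[pid] = arm
    return assignments


def stratified_block_randomize(bmi_by_id, block_size=4, seed=0):
    """Randomize separately within the 2 BMI strata named in the study."""
    strata = {"bmi_25_30": [], "bmi_over_30": []}
    for pid, bmi in bmi_by_id.items():
        key = "bmi_25_30" if bmi <= 30 else "bmi_over_30"
        strata[key].append(pid)
    assignments = {}
    for offset, ids in enumerate(strata.values()):
        # A different seed per stratum so the strata use independent sequences.
        assignments.update(block_randomize(ids, block_size, seed + offset))
    return assignments


# Hypothetical participants (ID -> BMI), not data from the study.
example_bmi = {"p1": 26.0, "p2": 27.5, "p3": 29.0, "p4": 28.2,
               "p5": 31.0, "p6": 35.4, "p7": 40.1, "p8": 33.3}
example_assignments = stratified_block_randomize(example_bmi)
```

With complete blocks, each stratum ends up with the same number of participants in each arm; incomplete final blocks are one reason real trials can finish with slightly unequal arms.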

Intervention. MyFitnessPal (MFP) was selected for this study based on previous focus groups with overweight primary care patients. MFP is a calorie-counting app that incorporates evidence-based and theory-based approaches to weight loss. Users can enter their current weight, goal weight, and goal rate of weight loss, which allows the app to generate the user’s daily, individualized calorie goal. MFP users also input daily weight, food intake, and physical activity, which produce certain outputs, including calorie counts, weight trends, and nutritional summaries based on food consumed.

Participants in the intervention arm received help from research assistants in downloading MFP onto their smartphones and received a phone call 1 week after enrollment to assist with any technical issues with the app. Those in the control group were told to choose any preferred activity for weight loss. Both groups received usual care from their primary care provider, with an additional two follow-up visits at 3 and 6 months. At the 3-month follow-up visit, all participants received a nutrition educational handout from www.myplate.gov.

Main outcome measures. The main outcome measure was weight change at 6 months. Weight, systolic blood pressure (SBP), and 3 self-reported behavioral mediators of weight loss (exercise, diet, and self-efficacy in weight loss) were measured for all participants at baseline and at 3 and 6 months. The study also gathered data from the MyFitnessPal company to measure frequency of app usage. At the 6-month follow-up visit, research assistants asked participants in the intervention arm about their experience using MFP, while those in the control group were asked whether they had used MFP in the past 6 months to assess contamination. The authors used a linear mixed effects model (PROC MIXED in SAS) to investigate between-group differences in weight change, SBP change, and change in behavioral survey items while controlling for clinic site. In addition, they performed 2 sensitivity analyses to evaluate the impact of possible informative dropout (income, education, diet experience, treatment group, and baseline value) and the effect of excluding one control group outlier.

Results. The majority of participants were female (73%), with a mean age of 43.4 years (SD = 14.3). The mean BMI was 33.4 kg/m² (SD = 7.09), and 48% of the participants identified themselves as non-Hispanic white. At the 3-month visit, 26% of participants in the intervention arm and 21% in the control arm were lost to follow-up. At 6 months, 32% and 19% of intervention and control group participants, respectively, were lost to follow-up.

There was no significant difference in weight change between the 2 groups at 3 months (control, +0.54 lb; intervention, –0.06 lb; P = 0.53) or at 6 months (control, +0.6 lb; intervention, –0.07 lb; P = 0.63). The between-group difference was –0.6 lb at 3 months (95% confidence interval [CI], –2.5 to 1.3 lb) and –0.67 lb at 6 months (CI, –3.3 to 2.1 lb). The sensitivity analysis accounting for possible missing data pointed to the same outcome, with a between-group difference at 6 months of 0.08 lb (CI, –3.04 to 3.20 lb; P = 0.96). The difference in systolic blood pressure between the groups was not significant.
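As a quick arithmetic check on the figures above, the following Python sketch recomputes the between-group differences from the reported group means and tests whether each reported confidence interval includes zero. The function names are illustrative, and the simple subtraction is only an approximation of the study's analysis, which estimated these differences with a mixed effects model.

```python
def between_group_difference(intervention_change, control_change):
    """Difference in mean weight change (intervention minus control), in lb."""
    return intervention_change - control_change


def ci_includes_zero(lower, upper):
    """A 95% CI that spans zero is consistent with no difference at the 5% level."""
    return lower <= 0 <= upper


# Group means and confidence limits as reported in the summary above.
diff_3_months = round(between_group_difference(-0.06, 0.54), 2)
diff_6_months = round(between_group_difference(-0.07, 0.60), 2)

print(diff_3_months, ci_includes_zero(-2.5, 1.3))
print(diff_6_months, ci_includes_zero(-3.3, 2.1))
```

Both intervals span zero, which agrees with the nonsignificant P values reported for the 3-month and 6-month comparisons.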

Participants in the intervention arm used a personal calorie goal more often than those in the control group, with a mean between-group difference of 1.9 days per week at 3 months (CI, 1.0 to 2.8; P < 0.001) and 2.0 days per week at 6 months (CI, 1.1 to 2.9; P < 0.001). At the 3-month visit, individuals in the intervention group reported lower self-efficacy in achieving their weight loss goals than those in the control group (–0.85 on a 10-point scale; CI, –1.6 to –0.1; P = 0.026), but the difference in self-efficacy was not significant at the 6-month follow-up. Apart from these findings, the results suggested no between-group differences in self-reported behaviors regarding diet, exercise, and self-efficacy in weight loss.

The mean number of logins was 61 during the course of the study, and the median total logins was 19. Interestingly, the data showed that there was a rapid decline of logins after enrollment for most participants in the intervention arm. There were 94 users who logged in to the app during the first month and 34 who logged in during the last month of the study. Out of 107 participants from the control group, 14 used MFP during the trial.

Despite a sharp decline in usage, MFP users in the intervention group were satisfied with the app: 79% were somewhat to completely satisfied, 92% would recommend it to a friend, and 80% planned to continue using MFP after the study. Users reported liking several aspects of MFP, including ease of use (100%), feedback on progress (88%), and the app being fun to use (48%). Fewer participants appreciated the reminder feature (42%) and the social networking feature (13%). A common theme among MFP users was increased awareness of, and more caution about, food choices. Among those who stopped using MFP, comments included that it was tedious (84%) and not easy to use (24%).

Conclusion. While most participants were satisfied with MFP, encouraging use of the app did not lead to more weight loss in primary care patients compared to usual care. There was decreased engagement with MFP over time.

Commentary

Despite efforts by the federal government to address the obesity epidemic in the United States, there is still a high prevalence of obesity among children and adults. Approximately 17% of youth and 35% of adults in the United States have a BMI in the obese range (> 30 kg/m²) [1]. In addition to a high association between obesity and chronic diseases such as type 2 diabetes mellitus, hypertension, and hypercholesterolemia [2], the obesity epidemic carries a staggering financial burden. The annual cost of obesity in the U.S. was estimated to be $147 billion in 2008, and the medical costs for each person with obesity were $1429 higher than those for a person of normal weight [3,4]. Therefore, finding cost-effective and easily accessible methods to manage obesity is imperative. The Pew Research Center found that 64% of adults in the U.S. own a smartphone, and 62% of those have used their phone to look up health information [5]. Thus, smartphone applications that deliver weight management information and strategies may be a cost-effective and feasible means to reach a large population.

This study assessed the impact of MyFitnessPal, a free and widely popular mobile application, as an approach to reduce weight among patients in a primary care setting. The authors compared weight change between patients who received usual care from primary care providers (PCPs) and those who used MFP in addition to their usual care. They found no significant difference in weight loss between the two groups at 3 and 6 months during the trial. Despite this negative finding, this study makes important contributions to the e-health literature and highlights important considerations for similar studies.