Modafinil improves cognitive impairment in remitted depression

VIENNA – Modafinil shows potential for the treatment of episodic and working memory dysfunction in patients with remitted depression, Muzaffer Kaser, MD, reported at the annual congress of the European College of Neuropsychopharmacology.

He presented a randomized, double-blind, placebo-controlled proof-of-concept study in which 60 patients with remitted depression undertook a battery of cognitive tests before and 2 hours after either a single 200-mg dose of modafinil or placebo.

“For episodic memory we saw a medium-to-large effect size for modafinil’s effects over placebo, and a medium effect size for working memory. So for administration of a single dose we think these results are quite promising,” said Dr. Kaser, a psychiatrist and PhD candidate at the University of Cambridge, England.

Longer-term studies of modafinil are planned in light of the major unmet need for treatments to address cognitive dysfunction in depression, he added in an interview. Currently, there are none. Yet it is now recognized that cognitive dysfunction in the domains of memory, attention, and planning is a core feature of depression, and it tends to persist after mood symptoms have recovered.

“Because of the cognitive dysfunction, people have difficulty getting back to work at the same pre-illness level, which creates more stress and increases the potential for future relapse. So cognitive dysfunction in depression should be a target for treatment,” the psychiatrist said.

Modafinil’s well-established safety profile makes it an attractive candidate, he continued. In placebo-controlled studies of the drug as augmentation therapy for depression, modafinil’s side effects were at the placebo level.

In this proof-of-concept study, the payoff with a single dose of modafinil was confined to the domain of memory, where the effects were most evident at the most difficult stages of the tests. Most impressively, the modafinil group made half as many errors on the episodic memory test. However, the drug did not improve performance on tests of planning accuracy or sustained attention.

Study participants had a mean of 3.2 prior depressive episodes and were in their current remission for 8.2 months.

The tests were drawn from the Cambridge Neuropsychological Test Automated Battery. They included the Paired Associates Learning measure of episodic memory, Spatial Working Memory, the Stockings of Cambridge measure of planning and executive function, and the Rapid Visual Information Processing measure of sustained attention.

The study was funded by the U.K. Medical Research Council and the Wellcome Trust. Dr. Kaser reported having no financial conflicts of interest.

Correction, 12/15/16: An earlier version of this article misstated Dr. Kaser's name in the photo caption.

Key clinical point:

Major finding: Patients with remitted depression made half as many errors on a well-established measure of episodic memory 2 hours after taking a single dose of modafinil, compared with placebo.

Data source: This double-blind, randomized, placebo-controlled study included 60 adults with remitted depression who underwent cognitive testing before and 2 hours after taking a single 200-mg dose of modafinil or placebo.

Disclosures: The study was funded by the U.K. Medical Research Council and the Wellcome Trust. The presenter reported having no financial conflicts of interest.

Talking to military service members and veterans

The following opinions are my own and not those of the Veterans Health Administration.

A recurring question at many of the talks I give on posttraumatic stress and related topics is: “How can civilian providers relate to military service members and veterans?”

There are now online and in-person courses on this topic. The Center for Deployment Psychology in Bethesda, Md., and Massachusetts General Hospital in Boston are two of the better sources for these. Here, I would like to offer my condensed version.

Frequently, service members and veterans are brought in by a spouse or girlfriend who says some version of: “If you do not get help, I am getting a divorce.”

So start with neutral subjects and their strengths.

I usually ask first where they live and who lives with them (which usually tells you a lot about socioeconomic status and relationships).

Then I ask about military history: their branch of service, when they were or are in the military, their job (known in the Army as the MOS, or military occupational specialty), and what rank they held when they left the service.

The answers reveal a lot. The Air Force and part of the Navy have more technical occupations. Marines and some Army specialties are “ground pounders,” often serving in the infantry, who were heavily exposed to combat in the wars in Afghanistan and Iraq.

Which conflicts the veterans served in, if any, is very important. Those who have served in Afghanistan (Operation Enduring Freedom) and Iraq (Operation Iraqi Freedom, Operation New Dawn, etc.) are usually very proud of their military service. However, those veterans may be internally conflicted, usually over friends who were wounded or who died, while they survived.

This latter subject often touches on the theme of moral injury, feelings of shame or guilt that they have returned home, while others have not.

Service members may not want to talk about these issues, as they reawaken those feelings. They often are reluctant to describe traumatic combat events to “someone who has not been there.” So, in my current VA practice, I touch very lightly on these issues, especially in the first meeting.

Usually, the service member or veteran is relieved about not having to talk about having “their friend’s head blown off.” I do say, “We can return to these events when and if you are ready.”

Service members may be reluctant to start medication, especially if they are worried about sexual dysfunction or addiction. They also may be avoidant of traditional trauma therapies, because revisiting the traumas is too painful. Be patient with their reluctance.

So again, focus on strengths. “What did you do in the military that you are most proud of?”

A very practical approach about other issues, such as housing and employment, will go a long way.

Many veterans, unfortunately, have blown through relationships, perhaps because of their posttraumatic stress disorder and related depression and substance abuse. They may be housed, couch surfing, or sleeping in their car. Another practical question is, “Where are you sleeping tonight?” (The VA, by the way, is very good at getting housing for homeless veterans.)

I have often said: “A good job is the best intervention for better mental health.” It adds structure, a paycheck, and a purpose for life.

Finally, I also talk about integrative or complementary medicine. Yoga, meditation, canine or equine therapy, and acupuncture are some of the alternatives that may allow them to modulate their hyperarousal and reconnect with their loved ones.

So, this is the quick version of “how to talk to veterans.” Delve into other resources as needed.

Dr. Ritchie is a forensic psychiatrist with expertise in military and veterans’ issues. She retired from the U.S. Army in 2010 after serving for 24 years and holding many leadership positions, including chief of psychiatry. Currently, Dr. Ritchie is chief of mental health for the community-based outpatient clinics at the Washington, D.C., VA Medical Center. She also serves as professor of psychiatry at the Uniformed Services University of the Health Sciences in Bethesda, Md., and at Georgetown University and Howard University, both in Washington. Her recent books include “Forensic and Ethical Issues in Military Behavioral Health” (Department of the Army, 2015) and “Posttraumatic Stress Disorder and Related Diseases in Combat Veterans” (Springer, 2015). Dr. Ritchie’s forthcoming books include “Intimacy Post-Injury: Combat Trauma and Sexual Health” (Oxford University Press, 2016) and “Psychiatrists in Combat: Mental Health Clinicians’ Experiences in the War Zone” (Springer, 2017 ed.).

SVS Recommends Coverage for Supervised Exercise Therapy for PAD

SVS and colleagues from ACC, ACR, AHA, SCAI, SIR, SVM and VIVA recently submitted a letter to the Centers for Medicare & Medicaid Services (CMS) supporting a proposal to grant coverage for Supervised Exercise Therapy (SET) for symptomatic peripheral artery disease (PAD). Additionally, on behalf of its 5,600 members, SVS submitted a separate letter to CMS strongly supporting this coverage, which could potentially help the 8-12 million Americans affected by PAD.

CMS is considering the AHA’s recommendation to expand coverage and asked the public for comment on this proposal. A proposed decision memo is expected in March 2017.

SVS Offers Digital Pocket Guidelines

The SVS now offers its most recent practice guidelines as digital pocket cards through Guideline Central. These quick reference guideline tools contain everything you need to know from the full text guidelines, only in a more condensed and user-friendly format.

Members are eligible for free access to the SVS digital pocket guidelines by following the link below. Printed pocket cards are also available for purchase (discounted for SVS members). Non-members must purchase the guidelines individually, starting at $8.99.

Click here for info.

How to write a manuscript for publication

Writing should be fun! While some may view writing as painful (i.e., something you would rather put off until all your household work, taxes, and even changing the litter boxes are done), writing can and will become more enjoyable the more you do it. Over the years that I have been writing with students, residents, and faculty, I have found that writing the discussion section of a manuscript remains the most daunting aspect of writing a paper and the No. 1 reason people put off writing. Thus, I have developed a strategy that distills this process into a very simple task. When followed, manuscript writing won’t seem so intimidating.

I like to consider the writing process in three phases: preparing to write, writing, and then revising. Let’s address each one of these.

Preparing to write

In preparing to write, it is important to know what audience you want to reach when selecting a journal. I recommend that you peruse the table of contents of the journals you have in mind to determine if that journal is publishing papers similar to yours. You also may want to consider the impact factor of the journal, as the journals you publish in can have an effect on your promotion and tenure process. Once you have decided upon a journal, retrieve the Instructions for Authors (IFA). This section will contain very important information about how the journal would like for you to format the manuscript. Follow these instructions!

If you do not follow these instructions, the journal may reject your manuscript without ever sending it out for review (that is, the managing editor will reject it). Think of it this way: If an editor takes the time to develop the IFA, you’d better believe that the requirements are important to that editor.

Once you have decided upon the journal and read the IFA, it is time to make an OUTLINE. Yes – I said it – an outline. So often we skip this simple task that we were taught in grade school. For a manuscript, the outline I start with includes the figures and/or tables. Your figures and/or tables should tell the story. If they don’t tell a cohesive story, something is wrong. I like to draw out a storyboard on 8.5” x 11” plain paper. Each sheet of paper represents one figure (or table), and I literally draw out each panel. Then I spread the pieces of paper out on a desk to see if they tell the story I want. Once the story is determined, the writing begins.

Writing

The main structure of a manuscript is simple: introduction, methods, results, and discussion. The introduction section should tell the reader why you did the study. The methods section should tell the reader how you did the study. The results section should tell the reader what you found when you did the study. The discussion section should tell the reader what it all means. The introduction should spark the reader’s interest and provide enough information to understand why you conducted the study. It should consist of two to three paragraphs. The first paragraph should state the problem. The second paragraph should state what is known and unknown about the problem. The third paragraph should logically follow with your aim and hypothesis. All manuscripts can and should have a hypothesis.

The methods section should be presented in a straightforward and logical manner and include enough information for others to reproduce the experiments. The information should be presented with subheadings for each different section. For example, a clinical manuscript may have the following subheadings: study design, study population, primary outcome, secondary outcomes, and statistical analysis.

The results section should also be presented in a logical manner, with subheadings that make sense. Be sure to refer to the IFA on the type of subheadings to use (descriptive versus simple, etc.) and remember to tell a story. The story can be told with data ordered from most to least important, in chronological order, from in vitro to in vivo, etc. The main point is to tell a good story that the reader will want to read! Be sure to cite your figures and tables, but don’t duplicate the information in the figures and tables.

A nice trick is to present the data in each paragraph, and end with a sentence summarizing the results. Remember, data are the facts obtained from the experiments while results are statements that interpret the data.

The discussion section should be seen as a straightforward section to write instead of an intimidating discourse. The discussion section is where you tell the reader what the data might mean, how else the data could be interpreted, if other studies had similar or dissimilar results, the limitations of the study, and what should be done next. I propose that all discussion sections can be written in five paragraphs.

Paragraph 1 should summarize the findings with respect to the hypothesis. Paragraphs 2 and 3 should compare and contrast your data with published literature. Paragraph 4 should address limitations of the study. Paragraph 5 should conclude what it all means and what should happen next. If you start by outlining these five paragraphs, the discussion section becomes simple to write.

Revising

The most important aspect of writing a manuscript is revising. The importance of this is often overlooked. We all make mistakes in writing. The more you reread and revise your own work, the better it gets. Aim for writing simple sentences that are easy for your reader to read. Choose words carefully and precisely. Write well-designed sentences and structured paragraphs. The Internet has many short online tutorials to remind one how to do this. Use abbreviations sparingly and avoid wordiness. Avoid writing flaws, especially with the subject and verb. For example, “Controls were performed” should read “Control experiments were performed.”

In summary, writing should be an enjoyable process in which one can communicate exciting ideas to others. In this short article, I have presented a few tips and tricks on how to write and revise a manuscript. For a more in-depth resource, I refer the reader to “How to write a paper,” published in 2013 (ANZ J Surg. Jan;83[1-2]:90-2).

Dr. Kibbe is the Zack D. Owens Professor and Chair, department of surgery, the University of North Carolina at Chapel Hill.

Age of blood did not affect mortality in transfused patients

In-hospital mortality did not differ between patients who received transfusions of blood that had been stored for 2 weeks and those who received blood that had been stored for 4 weeks, based on results from 20,858 hospitalized patients in the randomized, controlled INFORM (Informing Fresh versus Old Red Cell Management) trial conducted at six hospitals in four countries.

While previous trials have concluded that the storage time of blood did not affect patient mortality, those studies largely included high-risk patients and were not statistically powered to detect small mortality differences, Nancy M. Heddle, professor of medicine and director of the McMaster (University) transfusion research program, Hamilton, Ont., and colleagues reported in an article published online in the New England Journal of Medicine (doi: 10.1056/NEJMoa1609014). Standard practice is to transfuse with the oldest available blood, which can be stored up to 42 days.

Their study included general hospitalized patients who required a red cell transfusion. From April 2012 through October 2015, patients were randomly assigned in a 1:2 ratio to receive blood that had been stored for the shortest duration (mean duration 13 days, 6,936 patients) or the longest duration (mean duration 23.6 days, 13,922 patients).

Only patients with type A or O blood were included in the study’s primary analysis, because of the difficulty of achieving a difference of at least 10 days in the mean duration of blood storage with other blood types.

There were 634 deaths (9.1% mortality) among patients in the short-term blood storage group and 1,213 deaths (8.7% mortality) in the long-term blood storage group. The difference was not statistically significant. Similar results were seen when the analysis was expanded to include all 24,736 patients with any blood type; the mortality rates were 9.1% and 8.8%, respectively.
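The reported percentages can be checked from the counts given above. The following is a minimal sketch, not the trial's own analysis: it recomputes the crude mortality rates and, purely as an illustration, applies an unadjusted two-proportion z-test. The choice of test and the function names are assumptions made here; the published study used its own prespecified statistical methods.

```python
import math


def mortality_rate(deaths: int, patients: int) -> float:
    """Crude mortality as a fraction of patients in the group."""
    return deaths / patients


def two_proportion_z_test(d1: int, n1: int, d2: int, n2: int) -> tuple[float, float]:
    """Unadjusted two-sided z-test for a difference in proportions.

    Illustrative only (an assumption of this sketch); it is not the
    analysis reported by the INFORM investigators.
    """
    p1, p2 = d1 / n1, d2 / n2
    pooled = (d1 + d2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Counts as reported for the primary analysis (type A or O blood).
short_deaths, short_n = 634, 6936
long_deaths, long_n = 1213, 13922

short_rate = mortality_rate(short_deaths, short_n)
long_rate = mortality_rate(long_deaths, long_n)
z, p_value = two_proportion_z_test(short_deaths, short_n, long_deaths, long_n)

print(f"Short-term storage: {short_rate:.1%}")
print(f"Long-term storage:  {long_rate:.1%}")
print(f"z = {z:.2f}, two-sided p = {p_value:.2f}")
```

Under these assumptions, the crude rates round to the reported 9.1% and 8.7%, and the unadjusted comparison does not reach the conventional 0.05 threshold, which is consistent with the article's statement that the difference was not statistically significant.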

An additional analysis found similar results in three prespecified high-risk subgroups – patients undergoing cardiovascular surgery, those admitted to intensive care, and those with cancer.

INFORM, Current Controlled Trials number ISRCTN08118744, was funded by the Canadian Institutes of Health Research, Canadian Blood Services, and Health Canada. Ms. Heddle had no relevant financial disclosures.

On Twitter @maryjodales

The results of the INFORM trial should end the debate regarding whether short-term or long-term storage of blood is advantageous. However, questions remain about whether red cells transfused during the last allowed week of storage (35-42 days) pose more risk. Observational studies continue to raise concerns about the use of the oldest blood.

The INFORM trial, with its large numbers of patients, should permit researchers to analyze enough data to address this remaining issue. The transfusion medicine community needs to know whether the storage period should be reduced to less than 35 days and whether new preservative solutions should be sought.

Aaron A.R. Tobian, MD, PhD, and Paul M. Ness, MD, are with the division of transfusion medicine, department of pathology, Johns Hopkins University, Baltimore. They had no relevant financial conflicts of interest and made their remarks in an editorial (10.1056/NEJMe1612444) that accompanied the published study.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point:

Major finding: There were 634 deaths (9.1% mortality) among patients in the short-term blood storage group and 1,213 deaths (8.7% mortality) in the long-term blood storage group.

Data source: The randomized, controlled INFORM (Informing Fresh versus Old Red Cell Management) trial.

Disclosures: INFORM, Current Controlled Trials number ISRCTN08118744, was funded by the Canadian Institutes of Health Research, Canadian Blood Services, and Health Canada. Ms. Heddle had no relevant financial disclosures.

Sepsis mortality linked to concentration of critical care fellowships

LOS ANGELES – Survival rates for sepsis were higher in the Northeast and in metropolitan areas of the Western United States than in other parts of the country, a pattern that mirrors the concentration of critical care fellowship programs, according to results from a descriptive analysis.

“There must be consideration to redistribute the critical care work force based on the spread of the malady that they are trained to deal with,” lead study author Aditya Shah, MD, said in an interview in advance of the annual meeting of the American College of Chest Physicians. “This could be linked to better reimbursements in the underserved areas.”

Dr. Shah has conducted similar projects in patient populations with HIV and hepatitis, but to his knowledge, this is the first such analysis using National Center for Health Statistics (NCHS) data. “What is unique about this is that we can make real time presentations to see how the work force and the pathology is evolving with regards to an epidemiological stand point with real time data, which can be easily accessed,” he explained. “Depending on what we see, interventions and redistributions could be made with regards to better distributing providers based on where they are needed the most.”

Of 150 critical care fellowship programs identified in the analysis, the majority were concentrated in the Northeast and in metropolitan areas of the Western United States, which parallels patterns noted in other specialties. Survival rates for sepsis were also higher in these locations. Dr. Shah said that the findings support previous studies, which indicated that physicians often tend to practice in geographic areas close to their training sites. However, the fact that such variation existed in mortality from sepsis – one of the most common diagnoses in medical and surgical intensive care units – surprised him. “You would have thought that there would be a work force to deal with this malady,” he said.

He acknowledged certain limitations of the study, including the fact that the NCHS data do not enable researchers to break down mortality from particular causes of sepsis. “Also, the most current data will always lag behind as it is entered retrospectively and needs time to be uploaded online,” he said. “I am still in search of a more real-time database. However, that would require much more intensive time, money, and resources.”

Dr. Shah reported having no financial disclosures.

AT CHEST 2016

Key clinical point:

Major finding: Higher survival rates for sepsis were more concentrated in the Northeast and metropolitan areas in the Western regions of the United States, compared with other areas of the country.

Data source: A descriptive analysis that evaluated sepsis mortality data linked to 150 critical care fellowship programs in the United States.

Disclosures: Dr. Shah reported having no financial disclosures.

Resveratrol may reduce androgen levels in PCOS

The naturally occurring polyphenol resveratrol may have beneficial hormonal effects in women with polycystic ovary syndrome (PCOS), according to the results of a new study.

Previous in vitro research by the lead author, Beata Banaszewska, MD, from the division of infertility and reproductive endocrinology, in the department of gynecology, obstetrics, and gynecological oncology, Poznan University of Medical Sciences, Poznan, Poland, and her colleagues, suggested that resveratrol may inhibit cell growth and reduce androgen production in rat theca-interstitial cells, which are implicated in excessive androgen production in PCOS in humans.

They conducted a placebo-controlled trial, randomizing 34 women with PCOS to 1,500 mg oral resveratrol or placebo daily, with clinical, endocrine, and metabolic assessments performed at baseline and three months after initiating treatment. The mean age of the women was 27 years; their mean body mass index (BMI) was 27.1 to 27.6 kg/m2.

At three months, serum total testosterone, the primary outcome, had declined significantly by 23.1% in the resveratrol group (P = .01), but increased by a non-significant 2.9% in the placebo group. The reduction in serum testosterone was even greater among individuals with a lower body mass index (J Clin Endocrinol Metab. 101: 3575–81, 2016. October 18. doi: 10.1210/jc.2016-1858).

Similarly, levels of dehydroepiandrosterone sulfate declined by a significant 22.2% in the resveratrol group (P = .01), but increased by a non-significant 10.5% in the placebo group, suggesting an effect on ovarian as well as adrenal androgen production.

“The magnitude of improvement of hyperandrogenemia observed in response to resveratrol is comparable to or greater than that found in response to OC [oral contraceptive] pills or metformin, with the exception of preparations containing cyproterone acetate, which are not available in the United States,” the authors wrote. They cited another study showing a 19% reduction in testosterone either with 12 months of treatment with the oral contraceptive pill or with metformin.

They also noted that while reductions in testosterone with metformin occur gradually over 3 to 6 months, their study showed a marked reduction in just 3 months.

Researchers also saw a significant 31.8% decline in fasting insulin (P = .007) and a 66.3% increase (P = .04) in the Insulin Sensitivity Index among patients treated with resveratrol.

Resveratrol was not associated with significant effects on BMI, ovarian volume, gonadotropins, lipid profile, or markers of inflammation and endothelial function. The women on placebo did show a significant reduction in ovarian volume, and increases in total and high-density lipoprotein cholesterol levels, prompting the authors to suggest that resveratrol may have prevented an increase in cholesterol that would otherwise have occurred.

Other than two patients on resveratrol reporting transient numbness, no other adverse events were noted.

While resveratrol, which is found in grapes, nuts and berries, is known to have anti-inflammatory, antioxidant, and cardioprotective properties, the authors said this was the first clinical study examining its effects in PCOS.

“Although identification of the mechanisms of action of resveratrol is not possible in this clinical trial, several possible mechanisms may be considered,” including a reduction of growth of theca cells, “and the improvement of insulin sensitivity with consequent reduction of insulin levels,” they wrote.

“Furthermore, given that insulin is known to stimulate androgen production in both ovarian and adrenal tissues, it is likely that the resveratrol-induced reduction of insulin observed in the present study may have contributed to a decrease of androgen levels,” they added.

RevGenetics provided the resveratrol for the study. The study was supported by the authors’ own institutions, and no conflicts of interest were declared.

FROM THE JOURNAL OF CLINICAL ENDOCRINOLOGY & METABOLISM

Key clinical point: The naturally occurring polyphenol resveratrol may reduce androgen and DHEAS levels in women with polycystic ovary syndrome.

Major finding: Treatment with resveratrol reduced total testosterone by 23.1% and dehydroepiandrosterone sulfate by 22.2%, while these levels increased in the placebo group.

Data source: A randomized, placebo-controlled trial in which 34 women with polycystic ovary syndrome were assigned to 1,500 mg of oral resveratrol daily or placebo.

Disclosures: The study was supported by the authors’ own institutions, and no conflicts of interest were declared. RevGenetics provided the resveratrol for the study.

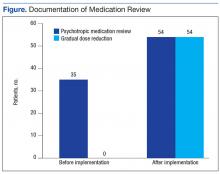

An Electronic Template to Improve Psychotropic Medication Review and Gradual Dose-Reduction Documentation

One in 5 Americans takes at least 1 psychotropic medication.1 Elderly dementia patients in extended care facilities are the most likely population to be prescribed psychotropic medication: 87% of these patients are on at least 1 psychotropic medication, 66% on at least 2, 36% on at least 3, and 11% on 4 or more.2 Psychotropic medications alleviate the symptoms of mental illness, such as depression, anxiety, and psychosis. Unfortunately, these medications often have adverse effects (AEs), including, but not limited to, excessive sedation, cardiac abnormalities, and tardive dyskinesia.

In 1987, Congress passed the Nursing Home Reform Act (NHRA) as part of the Omnibus Budget Reconciliation Act. The NHRA mandated that residents must remain free of “physical or chemical restraints imposed for the purpose of discipline or convenience.”3

In 1991, in order to meet the NHRA requirements, the Centers for Medicare and Medicaid Services (CMS) issued a guideline that nursing homes should use antipsychotic drug therapy only to treat a specific condition as diagnosed and documented in the clinical record. In 2006, CMS published guidance on the reduction of psychotropic medication usage. These CMS guidelines are the community-accepted standards for prescribing psychotropic medications, and accrediting bodies expect compliance with the guidelines. The guidelines also recommend that antipsychotics should be prescribed at the lowest possible dose, used for the shortest period, and continually undergo gradual dose reduction (GDR).4 To ensure these standards are met, a review of the use of psychotropic medications should be performed regularly.

The purpose of this project was to improve documentation of GDR and review of psychotropic medication based on CMS guidelines in the community living centers (CLCs) at the Carl Vinson Veterans Affairs Medical Center (CVVAMC) in Dublin, Georgia.

Background