Clinical benefit persists for some with mRCC after stopping immune checkpoint blockade

ORLANDO – Some people with advanced kidney cancer who respond to immune checkpoint inhibitor therapy and subsequently stop because of immune-related adverse events may continue to see a clinical benefit for 6 months or longer, a retrospective, multicenter study reveals.

In fact, investigators labeled 42% of 19 patients with metastatic renal cell carcinoma (mRCC) “durable responders” to checkpoint inhibitor blockade. “What we’ve demonstrated is that, despite patients stopping their treatment, there is a subset who continue to have disease in check and controlled despite [their] not being on any therapy,” Rana R. McKay, MD, of the University of California, San Diego, said.

“Our subset was small, only 19 patients, so the next step is to validate our findings in [a] larger study, and actually conduct a prospective trial to assess if discontinuation of therapy is worthwhile to investigate in this population,” Dr. McKay said during a press briefing prior to the Genitourinary Cancers Symposium sponsored by the American Society of Clinical Oncology, ASTRO, and the Society of Urologic Oncology.

PD-1/PD-L1 inhibitors demonstrate efficacy against an expanding list of malignancies, Dr. McKay said. The current standard is to administer these agents on a continuous basis until cancer progression or toxicity occurs. However, the study raises the possibility of intentionally discontinuing their use in some patients in the future, primarily because PD-1/PD-L1 inhibitors are associated with a wide range of side effects. Most concerning are immune-related adverse events “which are thought to be due to immune system activation,” she said.

“These drugs work to reinvigorate the immune response, and one of the unintended consequences is … they may also elicit an autoimmune response against one or more organs in the body,” said Sumanta Pal, MD, of City of Hope Medical Center in Duarte, Calif. and moderator of the press briefing.

“There was a wide spectrum of adverse events affecting different organ systems,” Dr. McKay said, “including pneumonitis, myositis, nephritis, hepatitis, pericarditis, and myocarditis, just to name a few.” A total of 84% of patients required steroids to treat immune-related adverse events, 11% needed additional immunosuppressant agents to treat their symptoms, and 53% have ongoing toxicity.

“If a patient has immune-related side effects, the impact can be serious,” Dr. Pal said. “This [study] certainly supports the premise that those individuals who experience immune-related side effects could have a tangible benefit from the drug nonetheless.”

Median patient age was 68 years, 74% were male, and 26% had aggressive disease. In the durable responders group, the median time on treatment was 11 months and median time off treatment was 20 months. In contrast, the patients whose cancer worsened immediately after therapy cessation were treated for a median of 4 months and were off treatment for a median of only 2 months. A total of 63% received either PD-1 or PD-L1 monotherapy, and the remainder received one of these inhibitors in combination with other systemic therapies.

Prospective studies are warranted to determine approaches to customize immunotherapy based on response, Dr. McKay said. A phase II study is planned to assess optimized management of nivolumab therapy based on response in patients with mRCC, she added.

Key clinical point: A subset of patients with metastatic renal cell carcinoma see a durable benefit after stopping therapy with immune checkpoint inhibitors due to immune-related adverse events.

Major finding: Just over 40% of patients experienced a durable response to therapy of 6 months or longer after stopping therapy with an immune checkpoint inhibitor.

Data source: Retrospective study of 19 patients conducted at five academic medical centers.

Disclosures: The Dana-Farber/Harvard Cancer Center Kidney SPORE, and the Trust Family, Michael Brigham, and Loker Pin funded this study. Rana R. McKay, MD, receives institutional research funding from Pfizer and Bayer.

CMS proposal seeks to stabilize individual insurance market

Proposed regulations from the Centers for Medicare & Medicaid Services aim to provide short-term stabilization to the individual and small group insurance markets under the Affordable Care Act.

The proposal issued Feb. 15 would make changes to special enrollment periods, open enrollment, guaranteed availability, network adequacy rules, essential community providers, and actuarial value requirements. It also changes the timeline for when insurers would need to get their qualified health plan certification. It represents a first step toward fulfilling President Trump’s Inauguration Day executive order to “minimize the unwarranted economic and regulatory burdens of the [ACA], and prepare to afford the states more flexibility and control to create a more free and open health care market.”

However, the proposed rule, if finalized as is, may not have any dramatic effect on the decision by insurers to serve the individual and small group markets.

“A plan that was going to stay is probably going to stay and be a little bit happier about these regs and a plan that was going to decide to leave, like Humana, would have left anyway,” Caroline Pearson, senior vice president at Avalere Health, said in an interview. “I don’t know if it is going to materially change plan participation.”

The proposed rule would shorten open enrollment for plans purchased in the ACA marketplace. Currently, plans can be purchased from Nov. 1 to Jan. 31; the proposal would move the deadline up to Dec. 15.

“We anticipate this change could improve the risk pool because it would reduce opportunities for adverse selection by those who learn they will need services in late December and January; and will encourage healthier individuals who might have previously enrolled in partial year coverage after Dec. 15th to instead enroll in coverage for the full year,” according to the proposed rule.

CMS also proposes to tighten special enrollment by requiring preverification of special enrollment period status for all applicants. Currently, only 50% of those seeking coverage through special enrollment are verified. The agency also is proposing to limit the plan choices available to individuals who are enrolling via a special enrollment period as a way of minimizing adverse selection.

Another proposal aimed at keeping people covered is one that allows insurers to collect unpaid premiums from the prior coverage year before enrolling a patient in the next year’s plan with the same insurer.

CMS noted in the rule that a recent survey “concluded that approximately 21% of consumers stopped premium payments in 2015. Approximately 87% of those individuals repurchased plans in 2016, while 49% of these consumers purchased the same plan they had previously stopped payment on.”

On the network adequacy front, CMS is shifting the conduct of network adequacy reviews to states, or to an accrediting entity recognized by the Department of Health & Human Services in the case of states that do not have sufficient resources to conduct adequacy reviews. Further, the proposal reduces the minimum percentage of essential community providers (those who serve predominantly low-income and medically underserved populations) in a network to 20% from the 30% instituted in 2015.

CMS said in the proposal that if these rules are finalized, it will issue separate guidance on changes to the timeline for plans to submit their bids for 2018.

Avalere’s Ms. Pearson said that she sees these proposed changes merely as a stopgap measure.

“This reg is intended to stand up the exchange markets and keep them functional while the ACA replacement plan is approved and implemented,” she said. “This is meant to prevent there from being a total loss of coverage before the ACA replacement can be put into effect.”

She added that while insurers will welcome the changes, consumers and patient advocates could push back on the proposal, particularly the actuarial flexibility that could result in smaller networks and shrinking benefits.

Indeed, America’s Health Insurance Plans offered its support of the regulation. “We commend the Administration for proposing these regulatory actions as Congress considers other critical actions necessary to help stabilize and improve the individual market for 2018,” AHIP President and CEO Marilyn Tavenner said in a statement.

The proposed changes were released online Feb. 15 and are scheduled for publication in the Federal Register on Feb. 17. Comments on the proposed changes are due to CMS by March 7.

Opinions vary considerably on withdrawing drugs in clinically inactive JIA

A wide range of attitudes and practices for the process of withdrawing medications in pediatric patients with clinically inactive juvenile idiopathic arthritis (JIA) exist among clinician members of the Childhood Arthritis and Rheumatology Research Alliance (CARRA), according to findings from an anonymous survey.

The cross-sectional, electronic survey found that respondents varied in the amount of time they thought was necessary to spend in clinically inactive disease before beginning withdrawal of medications and in the amount of time to spend during tapering or stopping medications, for both methotrexate and biologics.

To better understand how clinicians care for patients with clinically inactive disease, the investigators emailed the survey to 388 clinician members of the CARRA in the United States and Canada over a 4-week period during November-December 2015 (J Rheumatol. 2017 Feb 1. doi: 10.3899/jrheum.161078).

The survey, which the investigators thought to be “the first comprehensive evaluation of influential factors and approaches for the clinical management of children with clinically inactive JIA,” did not include questions about systemic JIA, inflammatory bowel disease, psoriasis, and uveitis in order “to simplify responses and encourage participation, because in practice, the manifestations and outcomes of these diseases could substantially influence treatment decisions for children with JIA.”

They received complete responses from 124 of the 132 clinicians who responded to the survey email. Of the 121 respondents who reported providing clinical care for patients with JIA, 87% were physicians, and the same percentage reported taking care of pediatric patients only. About three-quarters spent half of their professional time in clinical care, and about half had more than 10 years of post-training clinical experience.

When deciding about withdrawing JIA medications, more than one-half of respondents said that the time that a patient spent in clinically inactive disease and a history of drug toxicity are very important factors. Most participants ranked those two factors most highly and most often among their top five factors for decision making.

Respondents also commonly ranked these factors as important:

- JIA duration before attaining clinically inactive disease.

- Patient/family preferences.

- Presence of JIA-related damage.

- JIA category.

The factors that consistently appeared in responses fit into three clusters that included JIA features and time spent in clinically inactive disease (JIA category and total disease duration), JIA severity and resistance to treatment (disease duration before clinically inactive disease, number of drugs needed to attain inactivity, joint damage, and a history of sacroiliac or temporomandibular disease), and the patient’s experience (drug toxicity and family preference).

The respondents indicated that they would be least likely to stop medications for children with rheumatoid factor (RF)–positive polyarthritis (85%), which is “consistent with prior studies showing that RF-positive polyarthritis is associated with higher rates of flares than other JIA categories,” the investigators wrote. However, respondents said they would be most likely to stop medications for children with persistent oligoarthritis (87%) “even though rates of flares in this category appear similar to other JIA types. This method may reflect a belief that flares in children with persistent oligoarticular JIA will be less severe and easier to control.”

When patients met all criteria for clinically inactive disease for a “sufficient amount of time” and families were interested in stopping medications, some factors continued to make respondents reluctant to withdraw medications. These factors were most often a history of erosions (81%), asymptomatic joint abnormalities on ultrasound or MRI (72%), and failure of multiple prior disease-modifying antirheumatic drugs or biologics to control disease (64%). The definition of clinically inactive disease is a composite of no active arthritis, uveitis, or systemic JIA symptoms; the best possible clinical global assessment; inflammatory markers normal or elevated for reasons other than JIA; and no more than 15 minutes of joint stiffness.

A little over half of respondents said they would wait until clinically inactive disease had lasted 12 months before considering stopping or tapering methotrexate or biologic monotherapy, but a substantial minority said they would wait for only 6 months for methotrexate (31%) or biologic monotherapy (23%). A smaller number would wait for 18 months for methotrexate (13%) or biologics (18%), and another 3%-5% said they could not give a time frame.

Strategies for how medications were actually withdrawn also varied. Most methotrexate monotherapy withdrawals involved tapering over 2-6 months, one-third over longer periods, and the fewest reported tapering for less than 2 months (7%) or immediate withdrawal (17%).

Withdrawal of biologics was generally said to occur more gradually than with methotrexate, with one-third of respondents citing over 2-6 months, a quarter more slowly, and another 29% in less than 2 months or immediately. Some wrote that they preferred spacing out the interval between doses, but none decreased the dose. When children took combination therapy with methotrexate plus a biologic, 63% said that they began tapering or stopping methotrexate first, but a quarter said that the order was strongly context dependent, and the most commonly cited reason for deciding was history of toxicity or intolerance.

Imaging played a role in less than half of the decisions to withdraw medications, with it being used often by 9% and sometimes by 36%. And while it’s assumed that patients and family consideration played an important role in decision making, only 25% of respondents reported using specific patient-reported outcomes in deciding to withdraw medications.

The study was funded by grants from Rutgers Biomedical and Health Sciences and the National Institute of Arthritis and Musculoskeletal and Skin Diseases.

Key clinical point:

Major finding: A little over half of respondents said they would wait until clinically inactive disease had lasted 12 months before considering stopping or tapering methotrexate or biologic monotherapy.

Data source: A survey of 121 of 388 CARRA members involved in clinical care of JIA patients.

Disclosures: The study was funded by grants from Rutgers Biomedical and Health Sciences and the National Institute of Arthritis and Musculoskeletal and Skin Diseases.

Bundled payment for gastrointestinal hemorrhage

The Medicare Access and CHIP Reauthorization Act (MACRA) is now law; it passed with bipartisan, virtually unanimous support in both chambers of Congress. MACRA repealed the Sustainable Growth Rate formula for physician reimbursement and replaced it with a pathway to value-based payment. This law will alter our practices more than the Affordable Care Act and to an extent not seen since the passage of the original Medicare Act. Practices that continue to hang on to our traditional colonoscopy-based fee-for-service reimbursement model will increasingly be marginalized (or discounted) by Medicare, commercial payers, and regional health systems. To thrive in the coming decade, innovative practices will move toward alternative payment models. Many practices have risk-linked bundled payments for colonoscopy, but this step is only for the interim. Long-term success will come to practices that understand the implications of episode payments, specialty medical homes, and total cost of care. Do not wait for the finances to magically appear – start now to build infrastructure. In this month’s article, Dr. Mehta provides a detailed description of how a practice might construct a bundled payment for a common inpatient disorder. No one is paying for this yet, but it will come. Now is not the time to be a “WIMP” (Gastroenterology. 2016;150:295-9).

John I. Allen, MD, MBA, AGAF

Editor in Chief

In January 2016, the Centers for Medicare & Medicaid Services (CMS) launched the Comprehensive Care for Joint Replacement (CJR) model. This payment model aims to improve the value of care provided to Medicare beneficiaries for hip and knee replacement surgery during the inpatient stay and 90-day period after discharge by holding hospitals accountable for cost and quality (1). It includes hospitals in 67 geographic areas across the United States and marks the first time that a postacute bundled payment model is mandatory for traditional Medicare patients. Although this may not seem to be relevant for gastroenterology, it marks an important signal by CMS that there will likely be more episode-payment models in the future.

Gastroenterologists have not been primary drivers or participants in these models, but gastrointestinal hemorrhage is included as 1 of the 48 clinical conditions for the postacute bundled payment program. In addition, CMS recently announced that clinical episode-based payment for GI hemorrhage will be included in hospital inpatient quality reporting (IQR) for fiscal year 2019 (4). This is an opportunity for the field of gastroenterology to take a leadership role in an alternative payment model as it has for colonoscopy bundled payment (5), but it requires an understanding of the history of postacute bundled payments and the opportunities for and challenges to applying this model to GI hemorrhage. In this article, I will describe insights from our health system’s experience in evaluating different postacute bundled payment programs and participating in a GI bundled payment program.

Inpatient and postacute bundled payments

A bundled payment refers to a situation in which hospitals and physicians are incentivized to coordinate care for an episode of care across the continuum and eliminate unnecessary spending. In 1983, Medicare initiated a type of bundled payment for Part A spending on inpatient hospital care by creating prospective payment that is based on diagnosis-related groups (DRGs). This was a response to the rising cost of inpatient care resulting from retrospective payment that is based on hospital charges. Because hospitals would get paid the same amount for similar conditions, it resulted in shortened length of stay and reduction in the rise of inpatient costs, along with no measurable impact on quality of care (6). This was followed by prospective payment for outpatient hospital fees and skilled nursing facility (SNF) care as a result of the Balanced Budget Act of 1997. Medicare built on this by bundling physician and hospital fees through demonstration projects in coronary artery bypass graft surgery from 1991 to 1996 and orthopedic and cardiovascular surgery from 2009 to 2012, both resulting in reduced costs and no measurable impact on quality.

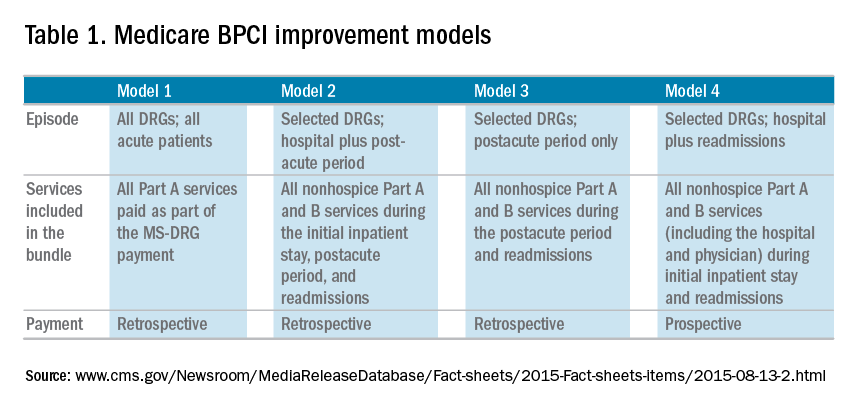

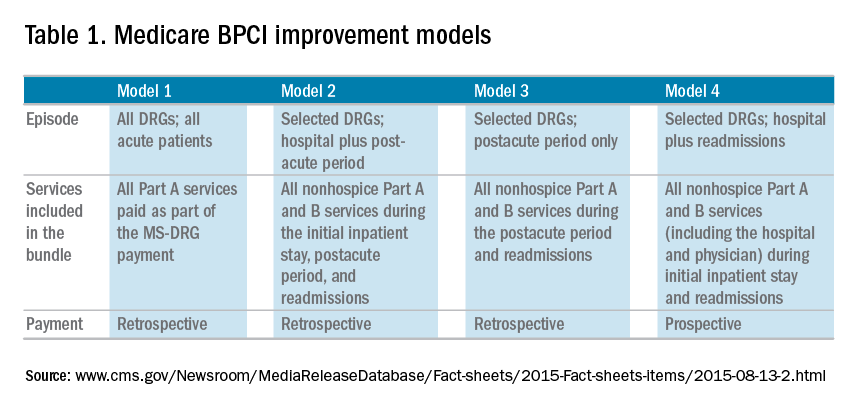

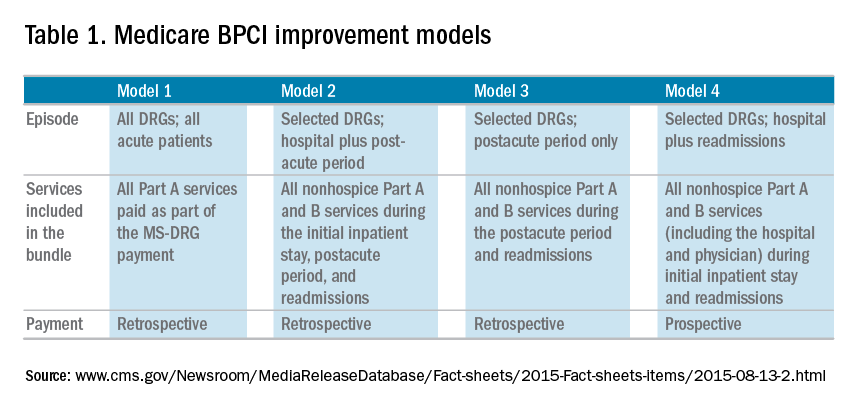

The Bundled Payments for Care Improvement (BPCI) program built on these results in 2013 by expanding to include Part A and B services rendered up to 90 days after discharge, and as of January 2016, it includes 1,574 participants across the country. On a voluntary basis, hospitals, physician groups, and postacute providers and conveners were able to participate in 1 of 4 bundled payment models that were anchored on an inpatient admission for any of 48 clinical conditions that were based on MS-DRG (Table 1).

• Model 1 defined the episode as the inpatient hospital stay and bundled the facility and physician fees, similar to prior demonstration projects.

• Model 2 is a retrospective bundled payment for Part A and B services in the inpatient hospital stay and up to 90 days after discharge.

• Model 3 is a retrospective model that starts after hospital discharge and includes up to 90 days. (Models 1-3 maintain the current payment structure and retrospectively compare the actual reimbursement with target values that are based on historical data for that hospital with a 2%-3% payment reduction.)

• Model 4 makes a single, prospectively determined global payment to a hospital that encompasses all services during the hospital stay.
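The retrospective comparison used in models 1-3 comes down to simple arithmetic, which the following Python sketch illustrates. It is a simplified illustration only, not the CMS methodology: the function name, the dollar amounts, and the flat discount are hypothetical, and actual target prices involve adjustments that are not described in this article.

```python
def reconcile_episode(historical_mean_cost, actual_cost, discount=0.02):
    """Compare actual episode spending with a discounted historical target.

    historical_mean_cost: the hospital's own historical average cost for
        this type of episode (hypothetical input).
    actual_cost: what was actually reimbursed for the episode.
    discount: the payment reduction applied to the historical figure,
        e.g. 0.02-0.03 for the 2%-3% reduction mentioned in the text.
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be a fraction between 0 and 1")
    # Target value = historical cost reduced by the discount.
    target = historical_mean_cost * (1 - discount)
    # Positive difference: spending came in under the target.
    # Negative difference: spending exceeded the target.
    difference = target - actual_cost
    return target, difference


# Hypothetical example: $20,000 historical average, $18,500 actual spending.
target, difference = reconcile_episode(20_000.0, 18_500.0, discount=0.02)
print(f"Target value: ${target:,.2f}")
print(f"Difference vs. target: ${difference:,.2f}")
```

A positive difference means that the episode cost less than the discounted target, and a negative difference means that it cost more. How that difference is shared or repaid depends on the terms of the specific program.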

Opportunities in inpatient and postacute bundled payments

Participation in bundled payments requires a new set of analytic and organizational capabilities.

• The first step is to identify the patient population on the basis of inclusion and exclusion criteria and to measure the current cost of care through external claims data and internal hospital data. This includes payments for hospital inpatient services, physician fees, postacute care, readmissions, other Part B services, and home health services. The biggest opportunity for postacute bundles is shifting site of service from postacute care to lower-cost settings and reducing readmission rates.

• Subsequently, the team needs to identify areas of opportunity to reduce expenditure, while also demonstrating consistent or improved quality and outcomes.

• On the basis of this, the team can identify variation in care within the cohort and in comparison with benchmarks across the country.

• After identifying areas of opportunity, the team needs to develop strategies to improve value such as care pathways, information technology tools, care coordination, and remote services.
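As a rough illustration of the first analytic steps above, the Python sketch below totals the cost of each episode across the payment categories listed in the first step and compares the cohort average with an external benchmark. The field names, dollar amounts, and benchmark value are hypothetical; a real analysis would draw on claims data and internal hospital data with appropriate inclusion and exclusion criteria.

```python
from statistics import mean

# Payment categories named in the text; the key names are illustrative.
COMPONENTS = (
    "inpatient",
    "physician",
    "postacute",
    "readmission",
    "other_part_b",
    "home_health",
)


def episode_total(episode):
    """Sum all payment categories for one episode (missing ones count as 0)."""
    return sum(episode.get(name, 0.0) for name in COMPONENTS)


def summarize_cohort(episodes, benchmark_mean):
    """Return the cohort mean cost, its gap to a benchmark, and cost shares."""
    if not episodes:
        raise ValueError("at least one episode is required")
    totals = [episode_total(e) for e in episodes]
    grand_total = sum(totals)
    cohort_mean = mean(totals)
    # Share of total spending by category, to show where the money goes.
    shares = {
        name: (sum(e.get(name, 0.0) for e in episodes) / grand_total
               if grand_total else 0.0)
        for name in COMPONENTS
    }
    return {
        "cohort_mean": cohort_mean,
        "gap_vs_benchmark": cohort_mean - benchmark_mean,
        "component_share": shares,
    }


# Two hypothetical episodes and a hypothetical national benchmark.
episodes = [
    {"inpatient": 9000.0, "physician": 1500.0, "postacute": 6000.0},
    {"inpatient": 8000.0, "physician": 1200.0, "readmission": 7000.0,
     "home_health": 800.0},
]
summary = summarize_cohort(episodes, benchmark_mean=15000.0)
print(summary["cohort_mean"], summary["gap_vs_benchmark"])
```

Breaking spending down by category in this way makes it easier to see whether postacute care or readmissions account for most of the gap, which are the two areas the text identifies as the main opportunities.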

Of the 48 clinical conditions in BPCI, 4 could be described as related to GI: esophagitis, gastroenteritis, and other digestive disorders (Medicare Severity–Diagnosis Related Group [MS-DRG] 391, 392); gastrointestinal hemorrhage (MS-DRG 377, 378, 379); gastrointestinal obstruction (MS-DRG 388, 389, 390); and major bowel procedure (MS-DRG 329, 330, 331). After evaluating the GI bundles, it was apparent that these were created for billing purposes and were not clinically intuitive, which is why our institution immediately excluded the broad category of esophagitis, gastroenteritis, and other digestive disorders. GI obstruction and major bowel surgery relate to the care of gastroenterologists, but surgeons are typically primary drivers of care for these patients. Thus, we believed that GI hemorrhage was most appropriate because gastroenterologists drive care for this condition, and there is substantial evidence about established guidelines and pathways during this episode.

Bundled payment for gastrointestinal hemorrhage

We built a multidisciplinary team of physicians, data analysts, clinical documentation specialists, and care managers to start developing a plan for improving the value of care in this population. This included data about readmissions and site of postacute care for this population, which were supplemented by chart review of financial outliers and readmissions. We quickly learned about some of the challenges to medical bundles and the GI hemorrhage bundle in particular. It is difficult to identify these patients early in the hospital stay because inclusion is based on a billing code. Many of these patients also have cardiovascular disease, cancer, or cirrhosis, which makes it hard to identify which patients will end up with primary GI hemorrhage coding until after the patient is discharged. They are also on many different inpatient services; in our hospital, there were at least 12 different admitting services. In addition, almost one-third of the patients actually had an admission before this hospitalization, often for different clinical conditions.

Most importantly, it was very challenging to develop protocols to improve the value of care in this population. Most of the patients had many comorbid conditions, so a GI hemorrhage pathway alone would not be sufficient to alter care. The two main areas of opportunity for cost savings in postacute bundled payments are postacute site of service and readmissions, both of which are hard to change for medical GI patients. Medical patients have many comorbidities before admission, so their postacute site of service is typically driven by the site from which they were admitted. This is different from surgical patients, who are in SNF or rehabilitation facilities for limited time frames and for whom there may be more discretion to shift to lower-cost settings. In addition, readmissions have not been studied much in GI hemorrhage, so it is not clear how to reduce them. On the basis of these factors and the limited sample size for this condition, our health system opted to stop taking financial risk for this population.

Future opportunities for gastroenterology

However, the latest CMS Inpatient Prospective Payment System rule describes the implementation of a new quality metric for hospital IQR called the Gastrointestinal Hemorrhage Clinical Episode-Based Payment. This would hold hospitals accountable for the cost of care for GI hemorrhage admissions plus the 90 days after discharge, similar to model 2 of BPCI. This announcement, as well as the launch of mandatory orthopedic bundles, demonstrates that hospital reimbursement is shifting toward an expansion of bundled payments to include the postacute time frame. This is manifested in postacute bundles, episode-based payment, and readmission penalties. This reignited our GI hemorrhage episode team’s efforts, but with a broader purpose.

Gastroenterologists can take a leadership role in responding to episode-based payments as a way for us to demonstrate value in our collaboration with hospitals, health systems, and payers. The focus on cardiovascular disease as part of readmission penalties and core measures has allowed our cardiology colleagues to partner closely with service lines, learn about episode-based care, and garner resources to build and lead disease and episode teams. Because patients do not fit into the different clinical areas in mutually exclusive categories, we will need to collaborate with other specialties to care for the overlap with other conditions. Many heart failure and myocardial infarction patients will get readmitted for GI hemorrhage, and many GI hemorrhage patients will have concomitant cardiovascular disease or cancer. This suggests that future strategies need to integrate efforts of service lines and that there is greater opportunity for gastroenterologists than just the GI bundles.

Gastroenterologists should also participate in a proactive way. Any new payment mechanism will have some flaws in implementation, so it is more important to do what is right from a clinical standpoint than to focus too much on the specific billing code or payment model. These models are evolving, and we have an opportunity to have an impact on future implementation. This starts with identifying and including patients from a clinical perspective rather than focusing on specific insurance types that participate in bundled payments. Some examples to improve the value of care in GI hemorrhage include creating evidence-based care pathways that span the episode of care, structured documentation after endoscopy for risk stratification, integrating pathways into the workflow of providers through the electronic health record, and increased coordination between specialties across the continuum of care. Other diagnoses that might be included in future bundles include cirrhosis, bowel obstruction, and inflammatory bowel disease. We can also learn from successful efforts in other clinical specialties that have identified variations in care and implemented a multimodal strategy for improving care and measuring impact.

References

1. Mechanic, R.E. Mandatory Medicare bundled payment: Is it ready for prime time? N Engl J Med. 2015;373[14]:1291-3.

2. U.S. Department of Health and Human Services. Better, smarter, healthier: In historic announcement, HHS sets clear goals and timeline for shifting Medicare reimbursements from volume to value. January 26, 2015. Available from: http://www.hhs.gov/about/news/2015/01/26/better-smarter-healthier-in-historic-announcement-hhs-sets-clear-goals-and-timeline-for-shifting-medicare-reimbursements-from-volume-to-value.html. Accessed June 28, 2016.

3. Patel, K., Presser, E., George, M., et al. Shifting away from fee-for-service: Alternative approaches to payment in gastroenterology. Clin Gastroenterol Hepatol. 2016;14[4]:497-506.

4. Medicare FY 2016 IPPS final rule. Available from: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/FY2016-IPPS-Final-Rule-Home-Page.html. Accessed June 28, 2016.

5. Ketover, S.R. Bundled payment for colonoscopy. Clin Gastroenterol Hepatol. 2013;11[5]:454-7.

6. Coulam, R.F., Gaumer, G.L. Medicare’s prospective payment system: a critical appraisal. Health Care Financ Rev Annu Suppl. 1991:45-77.

Dr. Mehta is in the division of gastroenterology, Perelman School of Medicine, University of Pennsylvania, and Penn Medicine Center for Health Care Innovation, University of Pennsylvania, Philadelphia. The author discloses no conflicts of interest.

The Medicare Access and Chips Reauthorization Act (MACRA) is now law; it passed with bipartisan, virtually unanimous support in both chambers of Congress. MACRA replaced the Sustainable Growth Rate formula for physician reimbursement and replaced it with a pathway to value-based payment. This law will alter our practices more than the Affordable Care Act and to an extent not seen since the passage of the original Medicare Act. Practices that continue to hang on to our traditional colonoscopy-based fee-for-service reimbursement model will increasingly be marginalized (or discounted) by Medicare, commercial payers, and regional health systems. To thrive in the coming decade, innovative practices will move toward alternative payment models. Many practices have risk-linked bundled payments for colonoscopy, but this step is only for the interim. Long-term success will come to practices that understand the implications of episode payments, specialty medical homes, and total cost of care. Do not wait for the finances to magically appear – start now to build infrastructure. In this month’s article, Dr. Mehta provides a detailed description of how a practice might construct a bundled payment for a common inpatient disorder. No one is paying for this yet, but it will come. Now is not the time to be a “WIMP” (Gastroenterology. 2016;150:295-9).

John I. Allen, MD, MBA, AGAF

Editor in Chief

In January 2016, the Centers for Medicare & Medicaid Services (CMS) launched the Comprehensive Care for Joint Replacement (CJR) model. This payment model aims to improve the value of care provided to Medicare beneficiaries for hip and knee replacement surgery during the inpatient stay and 90-day period after discharge by holding hospitals accountable for cost and quality.1 It includes hospitals in 67 geographic areas across the United States and marks the first time that a postacute bundled payment model is mandatory for traditional Medicare patients. Although this may not seem to be relevant for gastroenterology, it marks an important signal by CMS that there will likely be more episode-payment models in the future.

Gastroenterologists have not been primary drivers or participants in these models, but gastrointestinal hemorrhage is included as 1 of the 48 clinical conditions for the postacute bundled payment program. In addition, CMS recently announced that clinical episode-based payment for GI hemorrhage will be included in hospital inpatient quality reporting (IQR) for fiscal year 2019.4 This is an opportunity for the field of gastroenterology to take a leadership role in an alternate payment model as it has for colonoscopy bundled payment,5 but it requires an understanding of the history of postacute bundled payments and the opportunities for and challenges to applying this model to GI hemorrhage. In this article, I will describe insights from our health system’s experience in evaluating different postacute bundled payment programs and participating in a GI bundled payment program.

Inpatient and postacute bundled payments

A bundled payment refers to a situation in which hospitals and physicians are incentivized to coordinate care for an episode of care across the continuum and eliminate unnecessary spending. In 1983, Medicare initiated a type of bundled payment for Part A spending on inpatient hospital care by creating prospective payment that is based on diagnosis-related groups (DRGs). This was a response to the rising cost of inpatient care resulting from retrospective payment that is based on hospital charges. Because hospitals would get paid the same amount for similar conditions, it resulted in shortened length of stay and reduction in the rise of inpatient costs, along with no measurable impact on quality of care.6 This was followed by prospective payment for outpatient hospital fees and skilled nursing facility (SNF) care as a result of the Balanced Budget Act of 1997. Medicare built on this by bundling physician and hospital fees through demonstration projects in coronary artery bypass graft surgery from 1991 to 1996 and orthopedic and cardiovascular surgery from 2009 to 2012, both resulting in reduced costs and no measurable impact on quality.

The Bundled Payment for Care Improvement (BPCI) program built on these results in 2013 by expanding to include Part A and B services rendered up to 90 days after discharge, and as of January 2016, it includes 1,574 participants across the country. On a voluntary basis, hospitals, physician groups, and postacute providers and conveners were able to participate in 1 of 4 bundled payment models that were anchored on an inpatient for any of 48 clinical conditions that were based on MS-DRG (Table 1).

• Model 1 defined the episode as the inpatient hospital stay and bundled the facility and physician fees, similar to prior demonstration projects.

• Model 2 is a retrospective bundled payment for Part A and B services in the inpatient hospital stay and up to 90 days after discharge.

• Model 3 is a retrospective model that starts after hospital discharge and includes up to 90 days. (Models 1-3 maintain the current payment structure and retrospectively compare the actual reimbursement with target values that are based on historical data for that hospital with a 2%-3% payment reduction.)

• Model 4 makes a single, prospectively determined global payment to a hospital that encompasses all services during the hospital stay.

Opportunities in inpatient and postacute bundled payments

Participation in bundled payments requires a new set of analytic and organizational capabilities.

• The first step is to identify the patient population on the basis of inclusion and exclusion criteria and to measure the current cost of care through external claims data and internal hospital data. This includes payments for hospital inpatient services, physician fees, postacute care, readmissions, other Part B services, and home health services. The biggest opportunity for postacute bundles is shifting site of service from postacute care to lower-cost settings and reducing readmission rates.

• Subsequently, they need to identify areas of opportunity to reduce expenditure, while also demonstrating consistent or improved quality and outcomes.

• On the basis of this, the team can identify variation in care within the cohort and in comparison with benchmarks across the country.

• After identifying areas of opportunity, the team needs to develop strategies to improve value such as care pathways, information technology tools, care coordination, and remote services.

Of the 48 clinical conditions in BPCI, 4 could be described as related to GI: esophagitis, gastroenteritis, and other digestive disorders (Medicare Severity–Diagnosis Related Group [MS-DRG] 391, 392); gastrointestinal hemorrhage (MS-DRG 377, 378, 379); gastrointestinal obstruction (MS-DRG 388, 389, 390); and major bowel procedure (MS-DRG 329, 330, 331). After evaluating the GI bundles, it was apparent that these were created for billing purposes and were not clinically intuitive, which is why our institution immediately excluded the broad category of esophagitis, gastroenteritis, and other digestive disorders. GI obstruction and major bowel surgery relate to the care of gastroenterologists, but surgeons are typically primary drivers of care for these patients. Thus, we believed that GI hemorrhage was most appropriate because gastroenterologists drive care for this condition, and there is substantial evidence about established guidelines and pathways during this episode.

Bundled payment for gastrointestinal hemorrhage

We built a multidisciplinary team of physicians, data analysts, clinical documentation specialists, and care managers to start developing a plan for improving the value of care in this population. The work drew on data about readmissions and site of postacute care for this population, supplemented by chart review of financial outliers and readmissions. We quickly learned about some of the challenges to medical bundles and the GI hemorrhage bundle in particular. It is difficult to identify these patients early in the hospital stay because inclusion is based on a billing code. Many of these patients also have cardiovascular disease, cancer, or cirrhosis, which makes it hard to know which patients will end up with primary GI hemorrhage coding until after discharge. These patients are also spread across many different inpatient services; in our hospital, there were at least 12 different admitting services. In addition, almost one-third of the patients had an admission before this hospitalization, often for different clinical conditions.

Most importantly, it was very challenging to develop protocols to improve the value of care in this population. Most of the patients had many comorbid conditions, so a GI hemorrhage pathway alone would not be sufficient to alter care. The two main areas of opportunity for cost savings in postacute bundled payments are postacute site of service and readmissions, both of which are hard to change for medical GI patients. Medical patients have many comorbidities before admission, so postacute site of service is typically driven by the site from which they were admitted. This is different from surgical patients, who are in SNF or rehabilitation facilities for limited time frames and for whom there may be more discretion to shift to lower-cost settings. In addition, readmissions have not been studied much in GI hemorrhage, so it is not clear how to reduce them. On the basis of these factors and the limited sample size for this condition, our health system opted to stop taking financial risk for this population.

Future opportunities for gastroenterology

However, the latest CMS Inpatient Prospective Payment System rule describes the implementation of a new quality metric for hospital IQR called the Gastrointestinal Hemorrhage Clinical Episode-Based Payment. This would hold hospitals accountable for the cost of care for GI hemorrhage admissions plus the 90 days after discharge, similar to model 2 of BPCI. This announcement, as well as the launch of mandatory orthopedic bundles, demonstrates that hospital reimbursement is shifting toward an expansion of bundled payments to include the postacute time frame. This is manifested in postacute bundles, episode-based payment, and readmission penalties. This reignited our GI hemorrhage episode team’s efforts, but with a broader purpose.

Gastroenterologists can take a leadership role in responding to episode-based payments as a way for us to demonstrate value in our collaboration with hospitals, health systems, and payers. The focus on cardiovascular disease as part of readmission penalties and core measures has allowed our cardiology colleagues to partner closely with service lines, learn about episode-based care, and garner resources to build and lead disease and episode teams. Because patients do not fit into the different clinical areas in mutually exclusive categories, we will need to collaborate with other specialties to care for the overlap with other conditions. Many heart failure and myocardial infarction patients will get readmitted for GI hemorrhage, and many GI hemorrhage patients will have concomitant cardiovascular disease or cancer. This suggests that future strategies need to integrate efforts of service lines and that there is greater opportunity for gastroenterologists than just the GI bundles.

Gastroenterologists should also participate in a proactive way. Any new payment mechanism will have some flaws in implementation, so it is more important to do what is right from a clinical standpoint than to focus too much on the specific billing code or payment model. These models are evolving, and we have an opportunity to have an impact on future implementation. This starts with identifying and including patients from a clinical perspective rather than focusing on specific insurance types that participate in bundled payments. Some examples to improve the value of care in GI hemorrhage include creating evidence-based care pathways that span the episode of care, structured documentation after endoscopy for risk stratification, integrating pathways into the workflow of providers through the electronic health record, and increased coordination between specialties across the continuum of care. Other diagnoses that might be included in future bundles include cirrhosis, bowel obstruction, and inflammatory bowel disease. We can also learn from successful efforts in other clinical specialties that have identified variations in care and implemented a multimodal strategy for improving care and measuring impact.

References

1. Mechanic, R.E. Mandatory Medicare bundled payment: Is it ready for prime time? N Engl J Med. 2015;373[14]:1291-3.

2. U.S. Department of Health and Human Services. Better, smarter, healthier: In historic announcement, HHS sets clear goals and timeline for shifting Medicare reimbursements from volume to value. January 26, 2015. Available from: http://www.hhs.gov/about/news/2015/01/26/better-smarter-healthier-in-historic-announcement-hhs-sets-clear-goals-and-timeline-for-shifting-medicare-reimbursements-from-volume-to-value.html. Accessed June 28, 2016.

3. Patel, K., Presser, E., George, M., et al. Shifting away from fee-for-service: Alternative approaches to payment in gastroenterology. Clin Gastroenterol Hepatol. 2016;14[4]:497-506.

4. Medicare FY 2016 IPPS final rule. Available from: https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/FY2016-IPPS-Final-Rule-Home-Page.html. Accessed June 28, 2016.

5. Ketover, S.R. Bundled payment for colonoscopy. Clin Gastroenterol Hepatol. 2013;11[5]:454-7.

6. Coulam, R.F., Gaumer, G.L. Medicare’s prospective payment system: a critical appraisal. Health Care Financ Rev Annu Suppl. 1991:45-77.

Dr. Mehta is in the division of gastroenterology, Perelman School of Medicine, University of Pennsylvania, and Penn Medicine Center for Health Care Innovation, University of Pennsylvania, Philadelphia. The author discloses no conflicts of interest.

The Medicare Access and CHIP Reauthorization Act (MACRA) is now law; it passed with bipartisan, virtually unanimous support in both chambers of Congress. MACRA repealed the Sustainable Growth Rate formula for physician reimbursement and replaced it with a pathway to value-based payment. This law will alter our practices more than the Affordable Care Act and to an extent not seen since the passage of the original Medicare Act. Practices that continue to hang on to our traditional colonoscopy-based fee-for-service reimbursement model will increasingly be marginalized (or discounted) by Medicare, commercial payers, and regional health systems. To thrive in the coming decade, innovative practices will move toward alternative payment models. Many practices have risk-linked bundled payments for colonoscopy, but this step is only for the interim. Long-term success will come to practices that understand the implications of episode payments, specialty medical homes, and total cost of care. Do not wait for the finances to magically appear – start now to build infrastructure. In this month’s article, Dr. Mehta provides a detailed description of how a practice might construct a bundled payment for a common inpatient disorder. No one is paying for this yet, but it will come. Now is not the time to be a “WIMP” (Gastroenterology. 2016;150:295-9).

John I. Allen, MD, MBA, AGAF

Editor in Chief

In January 2016, the Centers for Medicare & Medicaid Services (CMS) launched the Comprehensive Care for Joint Replacement (CJR) model. This payment model aims to improve the value of care provided to Medicare beneficiaries for hip and knee replacement surgery during the inpatient stay and 90-day period after discharge by holding hospitals accountable for cost and quality (1). It includes hospitals in 67 geographic areas across the United States and marks the first time that a postacute bundled payment model is mandatory for traditional Medicare patients. Although this may not seem to be relevant for gastroenterology, it marks an important signal by CMS that there will likely be more episode-payment models in the future.

Gastroenterologists have not been primary drivers or participants in these models, but gastrointestinal hemorrhage is included as 1 of the 48 clinical conditions for the postacute bundled payment program. In addition, CMS recently announced that clinical episode-based payment for GI hemorrhage will be included in hospital inpatient quality reporting (IQR) for fiscal year 2019 (4). This is an opportunity for the field of gastroenterology to take a leadership role in an alternative payment model as it has for colonoscopy bundled payment (5), but it requires an understanding of the history of postacute bundled payments and the opportunities for and challenges to applying this model to GI hemorrhage. In this article, I will describe insights from our health system’s experience in evaluating different postacute bundled payment programs and participating in a GI bundled payment program.

Inpatient and postacute bundled payments

A bundled payment refers to a situation in which hospitals and physicians are incentivized to coordinate care for an episode of care across the continuum and eliminate unnecessary spending. In 1983, Medicare initiated a type of bundled payment for Part A spending on inpatient hospital care by creating prospective payment that is based on diagnosis-related groups (DRGs). This was a response to the rising cost of inpatient care resulting from retrospective payment that is based on hospital charges. Because hospitals would get paid the same amount for similar conditions, it resulted in shortened length of stay and reduction in the rise of inpatient costs, along with no measurable impact on quality of care (6). This was followed by prospective payment for outpatient hospital fees and skilled nursing facility (SNF) care as a result of the Balanced Budget Act of 1997. Medicare built on this by bundling physician and hospital fees through demonstration projects in coronary artery bypass graft surgery from 1991 to 1996 and orthopedic and cardiovascular surgery from 2009 to 2012, both resulting in reduced costs and no measurable impact on quality.
