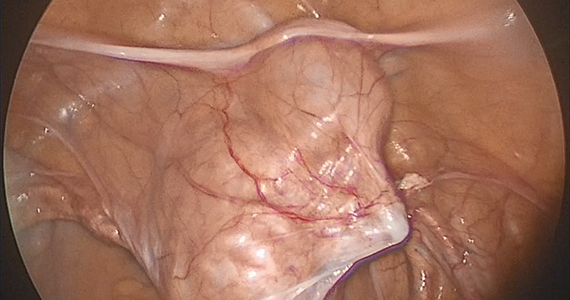

Novel method to demarcate bladder dissection during posthysterectomy sacrocolpopexy

Additional videos from SGS are available here, including these recent offerings:

• Instructional video for fourth-degree obstetric laceration repair using modified beef tongue model

• The art of manipulation: Simplifying hysterectomy by preparing the learner

• Vaginal and bilateral thigh removal of a transobturator sling

In memoriam: Dr. Henry T. Lynch

Henry T. Lynch, MD, an eminent researcher and trailblazer in the field of hereditary cancers, died June 2, 2019, at age 91.

Born in 1928, Dr. Lynch joined the military at age 16, becoming a gunner in the U.S. Navy. After a stint as a professional boxer, he obtained his high school equivalency, then attended the University of Oklahoma as an undergraduate. After receiving his master’s degree from the University of Denver, he earned a PhD in human genetics from the University of Texas, Austin, and his medical degree from the University of Texas, Galveston.

In 1967, Dr. Lynch accepted a position at Creighton University, Omaha, Neb., where he would spend the rest of his career. He was the founder and director of the Hereditary Cancer Center at Creighton, served as chair of the institution’s Department of Preventive Medicine and Public Health, and was named the inaugural holder of the Charles F. and Mary C. Heider Endowed Chair in Cancer Research.

It was at Creighton that Dr. Lynch began his work on genetic causes of and familial susceptibility to certain cancers. Dr. Lynch created a hereditary cancer registry, and definitively identified several genetic cancer syndromes that persist through multiple familial generations.

Dr. Lynch is credited with identifying a form of hereditary nonpolyposis colon cancer that was named after him – Lynch syndrome. He is also credited with the discovery of hereditary breast-ovarian cancer syndrome, which would eventually lead to the discovery of the BRCA gene.

Find the full remembrance on the ASCO website.

Pembrolizumab improves 5-year OS in advanced NSCLC

CHICAGO – New data suggest pembrolizumab can increase 5-year overall survival (OS) for patients with advanced non–small cell lung cancer (NSCLC).

In the phase 1b KEYNOTE-001 trial, the 5-year OS rate was 23.2% in treatment-naive patients and 15.5% in previously treated patients. By comparison, the average 5-year OS rate observed in NSCLC patients who receive standard chemotherapy is 5.5% (Noone AM et al. SEER Cancer Statistics Review, 1975-2015).

“In total, the data confirm that pembrolizumab has the potential to improve long-term outcomes for both treatment-naive and previously treated patients with advanced non–small cell lung cancer,” said Edward B. Garon, MD, of the University of California, Los Angeles.

Dr. Garon and colleagues presented these results in a poster at the annual meeting of the American Society of Clinical Oncology, and the data were simultaneously published in the Journal of Clinical Oncology.

KEYNOTE-001 (NCT01295827) enrolled 550 patients with advanced NSCLC who had received no prior therapy (n = 101) or at least one prior line of therapy (n = 449). Initially, patients received pembrolizumab at varying doses depending on body weight, but the protocol was changed to a fixed dose of pembrolizumab at 200 mg every 3 weeks.

At a median follow-up of 60.6 months, 100 patients were still alive. Sixty patients had received at least 2 years of pembrolizumab, 14 of whom were treatment-naive at baseline, and 46 of whom were previously treated at baseline.

Five-year OS rates were best among patients who had high PD-L1 expression, which was defined as 50% or greater.

Among treatment-naive patients, the 5-year OS rate was 29.6% in PD-L1–high patients and 15.7% in PD-L1–low patients (expression of 1% to 49%). The median OS was 35.4 months and 19.5 months, respectively.

Among previously treated patients, the 5-year OS rate was 25.0% in PD-L1–high patients, 12.6% in patients with PD-L1 expression of 1%-49%, and 3.5% in patients with PD-L1 expression less than 1%. The median OS was 15.4 months, 8.5 months, and 8.6 months, respectively.

Among patients who received at least 2 years of pembrolizumab, the 5-year OS rate was 78.6% in the treatment-naive group and 75.8% in the previously treated group. The objective response rate was 86% and 91%, respectively. The rate of ongoing response at the data cutoff was 58% and 71%, respectively.

“The safety data did not show any unanticipated late toxicity, which I consider encouraging,” Dr. Garon noted.

He said rates of immune-mediated adverse events were similar at 3 years and 5 years of follow-up. At 5 years, 17% of patients (n = 92) had experienced an immune-related adverse event, the most common of which were hypothyroidism (9%), pneumonitis (5%), and hyperthyroidism (2%).

Dr. Garon disclosed relationships with AstraZeneca, Bristol-Myers Squibb, Dracen, Dynavax, Genentech, Iovance Biotherapeutics, Lilly, Merck, Mirati Therapeutics, Neon Therapeutics, and Novartis. KEYNOTE-001 was sponsored by Merck Sharp & Dohme Corp.

SOURCES: Garon E. et al. ASCO 2019, Abstract LBA9015; J Clin Oncol. 2019 June 2. doi: 10.1200/JCO.19.00934

REPORTING FROM ASCO 2019

Second-line chemo sets benchmark in biliary tract cancers

CHICAGO – Oxaliplatin/5-fluorouracil is the new benchmark for previously treated advanced biliary tract cancers, though more research is needed to improve outcomes, an investigator said at the annual meeting of the American Society of Clinical Oncology.

Modified FOLFOX (mFOLFOX) added to active symptom control reduced the risk of death by 31% versus active symptom control alone in patients who had progressed following standard cisplatin/gemcitabine chemotherapy, according to Angela Lamarca, MD, PhD, of the University of Manchester, England, who presented the results in an oral session at the meeting.

While the chemotherapy did improve survival versus no chemotherapy, the overall benefit was modest and the toxicity was moderate, William P. Harris, MD, said in a discussion of the study results.

“At the moment, based on this study, I think that FOLFOX is the benchmark moving forward in the second-line setting, but certainly clinical trials are reasonable in this space,” said Dr. Harris, who is with the University of Washington and Fred Hutchinson Cancer Research Center, Seattle.

Novel therapies seem particularly “appealing” in biliary tract cancers, he said, based on results so far from studies that include inhibitors targeting PD-1, FGFR1-3, and IDH1, among others.

The present study, known as ABC-06, was the first-ever prospective phase 3 trial to evaluate the benefit of additional chemotherapy after cisplatin/gemcitabine in patients with biliary tract cancers, Dr. Lamarca said.

While cisplatin plus gemcitabine is established as first-line therapy for advanced biliary tract cancers, the role of second-line chemotherapy has been unclear, she said in her presentation.

In the trial initiated by Dr. Lamarca and colleagues, 162 patients were randomized to active symptom control alone, or active symptom control plus modified FOLFOX chemotherapy given every 14 days for up to 12 cycles.

The rate of grade 3-4 adverse events was 59% in the modified FOLFOX arm versus 40% in the active symptom control arm. There were three chemotherapy-related deaths, due respectively to renal failure, febrile neutropenia, and acute kidney injury, according to the report.

Grade 3-4 fatigue, infections, and decreased neutrophil count were more common in the chemotherapy arm, while anorexia and thromboembolic events were more often seen in the group of patients receiving active symptom control alone, Dr. Lamarca said.

The study’s primary endpoint of overall survival was met, with a hazard ratio of 0.69 (95% CI, 0.50-0.97; P = .031) favoring the chemotherapy arm after adjusting for platinum sensitivity, albumin, and stage.

The difference in median overall survival was “modest,” Dr. Lamarca said, at 6.2 months in the modified FOLFOX arm versus a higher-than-expected 5.3 months in the active symptom control arm (investigators had anticipated a median survival of 4 months).

However, “clinically meaningful” increases were seen with FOLFOX in both the 6-month overall survival rate, at 50.6% versus 35.5%, and the 12-month overall survival rate, at 25.9% versus 11.4%, she said in her presentation.

“Modified FOLFOX chemotherapy combined with active symptom control should therefore become the standard of care in the second-line setting for patients with advanced biliary tract cancers,” she said.

Dr. Lamarca reported disclosures related to Eisai, Nutricia, Ipsen, Merck, Novartis, Pfizer, Abbott Nutrition, Advanced Accelerator Applications, Bayer, Celgene, Delcath Systems, Mylan, NanoString Technologies, and Sirtex Medical.

SOURCE: Lamarca A et al. ASCO 2019, Abstract 4003.

REPORTING FROM ASCO 2019

Following the path of leadership

VA Hospitalist Dr. Matthew Tuck

For Matthew Tuck, MD, MEd, FACP, associate section chief for hospital medicine at the Veterans Affairs Medical Center (VAMC) in Washington, leadership is something that hospitalists can and should be learning at every opportunity.

Some of the best insights about effective leadership, teamwork, and process improvement come from the business world and have been slow to reach hospital settings and hospitalist groups, he says. But Dr. Tuck has tried to take advantage of numerous opportunities for leadership development in his own career.

He has been a hospitalist since 2010 and is part of a group of 13 physicians, all of whom carry clinical, teaching, and research responsibilities while pursuing a variety of education, quality improvement, and performance improvement topics.

“My chair has been generous about giving me time to do teaching and research and to pursue opportunities for career development,” he said. The Washington VAMC works with four affiliate medical schools in the area, and its six daily hospital medicine services are all 100% teaching services with assigned residents and interns.

Dr. Tuck divides his professional time into roughly equal thirds: clinical work, seeing patients 5 months a year on a consultative or inpatient basis with resident teams; administrative work in a variety of roles; and research. He has academic appointments at the George Washington University (GWU) School of Medicine and at the Uniformed Services University of the Health Sciences in Bethesda, Md. He developed the coursework for teaching evidence-based medicine to first- and second-year medical students at GWU.

He is also part of a large research consortium with five sites and $7.5 million in funding over 5 years from NIH’s National Institute on Minority Health and Health Disparities to study how genetic information from African American patients can predict their response to cardiovascular medications. He serves as the study’s site Principal Investigator at the VAMC.

Opportunities to advance his leadership skills have included the VA’s Aspiring Leaders Program and Leadership Development Mentoring Program, which teach leadership skills on topical subjects such as teaching, communications skills, and finance. The Master Teacher Leadership Development Program for medical faculty at GWU, where he attended medical school and did his internship and residency, offers six intensive, classroom-based 8-week courses over a 1-year period. They cover various topical subjects with faculty from the business world teaching principles of leadership. The program includes a mentoring action plan for participants and leads to a graduate certificate in leadership development from GWU’s Graduate School of Education and Human Development at the end of the year’s studies.

Dr. Tuck credits this kind of coursework for his current leadership position in the VA, and he tries to share what he has learned with the medical students he teaches.

“When I was starting out as a physician, I never received training in how to lead a team. I found myself trying to get everything done for my patients while teaching my learners, and I really struggled for the first couple of years to manage these competing demands on my time,” he said.

Now, on the first day of a new clinical rotation, he meets one-on-one with his residents to set out goals and expectations. “I say: ‘This is how I want rounds to be run. What are your expectations?’ That way we make sure we’re collaborating as a team. I don’t know that medical school prepares you for this kind of teamwork. Unless you bring a background in business, you can really struggle.”

Interest in hospital medicine

“Throughout our medical training we do a variety of rotations and clerkships. I found myself falling in love with all of them – surgery, psychiatry, obstetrics, and gynecology,” Dr. Tuck explained, as he reflected on how he ended up in hospital medicine. “As someone who was interested in all of these different fields of medicine, I considered myself a true medical generalist. And in hospitalized patients, who struggle with all of the different issues that bring them to the hospital, I saw a compilation of all my experiences in residency training combined in one setting.”

Hospital medicine was a relatively young field at that time, with few academic hospitalists, he said. “But I had good mentors who encouraged me to pursue my educational, research, and administrative interests. My affinity for the VA was also largely due to my training. We worked in multiple settings – academic, community-based, National Institutes of Health, and at the VA.”

Dr. Tuck said that, of all the settings in which he practiced, he felt the VA truly trained him best to be a doctor. “The experience made me feel like a holistic practitioner,” he said. “The system allowed me to take the best care of my patients, since I didn’t have to worry about whether I could make needed referrals to specialists. Very early in my internship year we were seeing very sick patients with multiple comorbidities, but it was easy to get a social worker or case manager involved, compared to other settings, which can be more difficult to navigate.”

While the VA is a “great health system,” Dr. Tuck said, the challenge is learning how to work with its bureaucracy. “If you don’t know how the system works, it can seem to get in your way.” But overall, he said, the VA functions well and compares favorably with private sector hospitals and health systems. That was also the conclusion of a recent study in the Journal of General Internal Medicine, which compared the quality of outpatient and inpatient care in VA and non-VA settings using recent performance measure data.1 The authors concluded that the VA system performed similarly to or better than non-VA health care on most nationally recognized measures of inpatient and outpatient care quality, although there is wide variation between VA facilities.

Working with the team

Another major interest for Dr. Tuck is team-based learning, which also grew out of his GWU leadership certificate coursework on teaching teams and team development. He is working on a draft paper for publication with coauthor Patrick Rendon, MD, associate program director for the University of New Mexico’s internal medicine residency program, building on the group development stage theory – “Forming/Storming/Norming/Performing” – developed by Tuckman and Jensen.2

The theory offers 12 tips for optimizing inpatient ward team performance, such as getting the learners to buy in at an early stage of a project. “Everyone I talk to about our research is eager to learn how to apply these principles. I don’t think we’re unique at this center. We’re constantly rotating learners through the program. If you apply these principles, you can get learners to be more efficient starting from the first day,” he said.

The current inpatient team model at the Washington VAMC involves a broadly representative team from nursing, case management, social work, the business office, medical coding, utilization management, and administration that convenes every morning to discuss patient navigation and difficult discharges. “Everyone sits around a big table, and the six hospital medicine teams rotate through every fifteen minutes to review their patients’ admitting diagnoses, barriers to discharge and plans of care.”

At the patient’s bedside, a Focused Interdisciplinary Team (FIT) model, which Dr. Tuck helped to implement, incorporates a four-step process with clearly defined roles for the attending, nurse, pharmacist, and case manager or social worker. “Since implementation, our data show overall reductions in lengths of stay,” he said.

Dr. Tuck urges other hospitalists to pursue opportunities available to them to develop their leadership skills. “Look to your professional societies such as the Society of General Internal Medicine (SGIM) or SHM.” For example, SGIM’s Academic Hospitalist Commission, which he cochairs, provides a voice on the national stage for academic hospitalists and cosponsors with SHM an annual Academic Hospitalist Academy to support career development for junior academic hospitalists as educational leaders. Since 2016, the commission has also named a Distinguished Professor of Hospital Medicine, who gives a plenary address at the SGIM national meeting.

SGIM’s SCHOLAR Project, a subgroup of its Academic Hospitalist Commission, has worked to identify features of successful academic hospitalist programs, with the results published in the Journal of Hospital Medicine.3

“We learned that what sets successful programs apart is their leadership – as well as protected time for scholarly pursuits,” he said. “We’re all leaders in this field, whether we view ourselves that way or not.”

References

1. Price RA et al. Comparing quality of care in Veterans Affairs and Non–Veterans Affairs settings. J Gen Intern Med. 2018 Oct;33(10):1631-38.

2. Tuckman B, Jensen M. Stages of small group development revisited. Group & Organization Studies. 1977;2:419-27.

3. Seymann GB et al. Features of successful academic hospitalist programs: Insights from the SCHOLAR (Successful hospitalists in academics and research) project. J Hosp Med. 2016 Oct;11(10):708-13.

Pediatric MS often goes untreated in the year after diagnosis

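SEATTLE – Most patients with pediatric-onset multiple sclerosis (MS) do not receive disease-modifying therapy (DMT) in the year after diagnosis, and females may be more likely than males to receive DMT during this time, said Chinmay Deshpande, PhD, at the annual meeting of the Consortium of Multiple Sclerosis Centers.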

Pediatric onset of MS occurs in 3-5% of patients with the disease, and the median age of pediatric onset is 15 years. This population tends to have a high relapse rate and may develop disability at a younger age, said Dr. Deshpande, associate director of health economics and outcomes research at Novartis. “There have been very few studies done on this population, especially in the clinical trial setting. ... Physicians face considerable uncertainty in how to treat these patients.”

Observational data

To assess the proportion of patients with pediatric MS who receive DMT in the year after diagnosis, Dr. Deshpande and colleagues analyzed retrospective observational data from the Truven Health Marketscan Commercial and Encounters administrative claims databases. They studied patients who received an MS diagnosis between Jan. 1, 2010, and Dec. 31, 2016. In addition, they examined which DMTs were used as first-line therapies, whether prescribing patterns changed between 2010 and 2017, and time to treatment discontinuation or switch.

The databases included data from more than 182,000 patients with two or more claims of MS diagnosis. After including only patients age 17 years or younger at the index diagnosis date who had insurance during the 6 months prior to the index date and 12 months after the index date and who did not use DMT during the 6 months prior to the index date, 288 patients remained in the analysis. Patients had an average age of about 14 years.
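As an illustration only, the selection criteria described above can be written as a filter over claims records. The Python sketch below is not the investigators' code: the `Patient` fields, the `eligible` helper, and the sample records are all hypothetical and are not drawn from the study data.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    # All field names are hypothetical; the study's actual data layout is not described.
    age_at_index: int            # age in years at the index MS diagnosis
    ms_claims: int               # number of claims carrying an MS diagnosis
    insured_months_before: int   # months of coverage before the index date
    insured_months_after: int    # months of coverage after the index date
    dmt_in_prior_6_months: bool  # any DMT use in the 6 months before the index date


def eligible(p: Patient) -> bool:
    """Apply the inclusion criteria as reported in the article."""
    return (
        p.ms_claims >= 2
        and p.age_at_index <= 17
        and p.insured_months_before >= 6
        and p.insured_months_after >= 12
        and not p.dmt_in_prior_6_months
    )


# Made-up records, one per way of passing or failing the filter.
patients = [
    Patient(14, 3, 12, 12, False),  # meets every criterion
    Patient(19, 2, 12, 24, False),  # older than 17 at the index date
    Patient(15, 2, 6, 12, True),    # used DMT before the index date
    Patient(16, 1, 6, 12, False),   # only one MS claim
]

cohort = [p for p in patients if eligible(p)]
print(f"{len(cohort)} of {len(patients)} sample records remain in the analysis")
```

Applied to the real databases, a filter of this kind is what narrowed more than 182,000 patients to the 288 in the analysis.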

The primary outcome was the proportion of patients who started DMT in the year after MS diagnosis. “The proportion of untreated patients within their first year of diagnosis was around 65%,” said Dr. Deshpande. On average, treated patients were slightly older than untreated patients (15.0 years vs. 13.3 years). Among treated patients, 75% were female, and 25% were male. Overall, however, 61% were female and 39% were male. The difference in treatment by gender was surprising and the reason for it is not understood, Dr. Deshpande said. One possibility is that the difference relates to earlier maturation in females, but that is only a hypothesis, he said.
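The size of the sex difference can be estimated with a back-of-the-envelope calculation. The Python sketch below assumes that the reported percentages are exact and that all of them apply to the 288-patient cohort (the untreated share was reported only as "around 65%"), so the derived rates are approximations, not results reported by the investigators.

```python
# Figures reported in the article; treated as exact for this sketch (an assumption).
cohort_size = 288
untreated_share = 0.65
female_share_overall = 0.61
female_share_treated = 0.75

treated_share = 1 - untreated_share
approx_treated = round(cohort_size * treated_share)

# Share of each sex that started DMT:
# (treated share x that sex's share of treated patients) / that sex's share of the cohort
female_rate = treated_share * female_share_treated / female_share_overall
male_rate = treated_share * (1 - female_share_treated) / (1 - female_share_overall)

print(f"Approximate number treated: {approx_treated}")
print(f"Estimated share of females treated: {female_rate:.0%}")
print(f"Estimated share of males treated: {male_rate:.0%}")
```

Under those assumptions, roughly 43% of females and 22% of males started DMT within the year, which makes females about twice as likely as males to be treated.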

Glatiramer acetate and interferon beta-1a were first-line DMTs for 48% and 30.6% of the treated patients, respectively. Dimethyl fumarate (7.1%), natalizumab (5.1%), fingolimod (4.1%), interferon beta-1b (4.1%), and peginterferon beta-1a (1%) also were used as first-line therapy.

Twenty percent of patients who received DMT switched to another medication during the follow-up period. The median time to switching was within 6 months of starting first-line therapy. Most patients who discontinued DMT – that is, they did not have any DMT for 60 days after stopping their first DMT – did so within 10 months of diagnosis.

Use of newer medications

Overall, the use of glatiramer acetate and interferons has decreased over time, and while the use of newer DMTs has increased, the trend is not consistent. “With the growing uptake of newer oral and infusible DMTs over the recent years, there is a need to increase treatment awareness in the pediatric MS population and to inform currently approved treatment options to the prescribers,” Dr. Deshpande and colleagues said.

The claims database is generalizable and nationally representative, but it does not include clinical or MRI data. “It’s hard to understand the reasoning why they discontinued or why they are switching,” Dr. Deshpande said. In addition, the sample size was relatively small, and the results should be interpreted accordingly, he said.

Novartis funded the study, and Dr. Deshpande and a coauthor are employees of Novartis. Other coauthors reported consulting fees from Novartis, as well as consulting fees and grant funding from other pharmaceutical companies.

SOURCE: Greenberg B et al. CMSC 2019, Abstract DXM02.

SEATTLE – Females may be more likely than males to receive DMT during this time, said Chinmay Deshpande, PhD, at the annual meeting of the Consortium of Multiple Sclerosis Centers.

Pediatric onset of MS occurs in 3-5% of patients with the disease, and the median age of pediatric onset is 15 years. This population tends to have a high relapse rate and may develop disability at a younger age, said Dr. Deshpande, associate director of health economics and outcomes research at Novartis. “There have been very few studies done on this population, especially in the clinical trial setting. ... Physicians face considerable uncertainty in how to treat these patients.”

Observational data

To assess the proportion of patients with pediatric MS who receive DMT in the year after diagnosis, Dr. Deshpande and colleagues analyzed retrospective observational data from the Truven Health Marketscan Commercial and Encounters administrative claims databases. They studied patients who received an MS diagnosis between Jan. 1, 2010, and Dec. 31, 2016. In addition, they examined which DMTs were used as first-line therapies, whether prescribing patterns changed between 2010 and 2017, and time to treatment discontinuation or switch.

The databases included data from more than 182,000 patients with two or more claims of MS diagnosis. After including only patients age 17 years or younger at the index diagnosis date who had insurance during the 6 months prior to the index date and 12 months after the index date and who did not use DMT during the 6 months prior to the index date, 288 patients remained in the analysis. Patients had an average age of about 14 years.

The primary outcome was the proportion of patients who started DMT in the year after MS diagnosis. “The proportion of untreated patients within their first year of diagnosis was around 65%,” said Dr. Deshpande. On average, treated patients were slightly older than untreated patients (15.0 years vs. 13.3 years). Among treated patients, 75% were female, and 25% were male. Overall, however, 61% were female and 39% were male. The difference in treatment by gender was surprising and the reason for it is not understood, Dr. Deshpande said. One possibility is that the difference relates to earlier maturation in females, but that is only a hypothesis, he said.

Glatiramer acetate and interferon beta-1a were first-line DMTs for 48% and 30.6% of the treated patients, respectively. Dimethyl fumarate (7.1%), natalizumab (5.1%), fingolimod (4.1%), interferon beta-1b (4.1%), and peginterferon beta-1a (1%) also were used as first-line therapy.

Twenty percent of patients who received DMT switched to another medication during the follow-up period. The median time of switching was within 6 months of starting first-line therapy. Most patients who discontinued DMT – that is, they did not have any DMT for 60 days after stopping their first DMT – did so within 10 months of diagnosis.

Use of newer medications

Overall, the use of glatiramer acetate and interferons has decreased over time, and while the use of newer DMTs has increased, the trend is not consistent. “With the growing uptake of newer oral and infusible DMTs over the recent years, there is a need to increase treatment awareness in the pediatric MS population and to inform currently approved treatment options to the prescribers,” Dr. Deshpande and colleagues said.

The claims database is generalizable and nationally representative, but it does not include clinical or MRI data. “It’s hard to understand the reasoning why they discontinued or why they are switching,” Dr. Deshpande said. In addition, the sample size was relatively small, and the results should be interpreted accordingly, he said.

Novartis funded the study, and Dr. Deshpande and a coauthor are employees of Novartis. Other coauthors reported consulting fees from Novartis, as well as consulting fees and grant funding from other pharmaceutical companies.

SOURCE: Greenberg B et al. CMSC 2019, Abstract DXM02.

SEATTLE – Females may be more likely than males to receive DMT during this time, said Chinmay Deshpande, PhD, at the annual meeting of the Consortium of Multiple Sclerosis Centers.

Pediatric onset of MS occurs in 3-5% of patients with the disease, and the median age of pediatric onset is 15 years. This population tends to have a high relapse rate and may develop disability at a younger age, said Dr. Deshpande, associate director of health economics and outcomes research at Novartis. “There have been very few studies done on this population, especially in the clinical trial setting. ... Physicians face considerable uncertainty in how to treat these patients.”

Observational data

To assess the proportion of patients with pediatric MS who receive DMT in the year after diagnosis, Dr. Deshpande and colleagues analyzed retrospective observational data from the Truven Health Marketscan Commercial and Encounters administrative claims databases. They studied patients who received an MS diagnosis between Jan. 1, 2010, and Dec. 31, 2016. In addition, they examined which DMTs were used as first-line therapies, whether prescribing patterns changed between 2010 and 2017, and time to treatment discontinuation or switch.

The databases included data from more than 182,000 patients with two or more claims of MS diagnosis. After including only patients age 17 years or younger at the index diagnosis date who had insurance during the 6 months prior to the index date and 12 months after the index date and who did not use DMT during the 6 months prior to the index date, 288 patients remained in the analysis. Patients had an average age of about 14 years.

The primary outcome was the proportion of patients who started DMT in the year after MS diagnosis. “The proportion of untreated patients within their first year of diagnosis was around 65%,” said Dr. Deshpande. On average, treated patients were slightly older than untreated patients (15.0 years vs. 13.3 years). Among treated patients, 75% were female, and 25% were male. Overall, however, 61% were female and 39% were male. The difference in treatment by gender was surprising and the reason for it is not understood, Dr. Deshpande said. One possibility is that the difference relates to earlier maturation in females, but that is only a hypothesis, he said.

Glatiramer acetate and interferon beta-1a were first-line DMTs for 48% and 30.6% of the treated patients, respectively. Dimethyl fumarate (7.1%), natalizumab (5.1%), fingolimod (4.1%), interferon beta-1b (4.1%), and peginterferon beta-1a (1%) also were used as first-line therapy.

Twenty percent of patients who received DMT switched to another medication during the follow-up period. The median time to switching was within 6 months of starting first-line therapy. Most patients who discontinued DMT (defined as having no DMT for 60 days after stopping their first DMT) did so within 10 months of diagnosis.

Use of newer medications

Overall, the use of glatiramer acetate and interferons has decreased over time, and while the use of newer DMTs has increased, the trend is not consistent. “With the growing uptake of newer oral and infusible DMTs over the recent years, there is a need to increase treatment awareness in the pediatric MS population and to inform currently approved treatment options to the prescribers,” Dr. Deshpande and colleagues said.

The claims database is generalizable and nationally representative, but it does not include clinical or MRI data. “It’s hard to understand the reasoning why they discontinued or why they are switching,” Dr. Deshpande said. In addition, the sample size was relatively small, and the results should be interpreted accordingly, he said.

Novartis funded the study, and Dr. Deshpande and a coauthor are employees of Novartis. Other coauthors reported consulting fees from Novartis, as well as consulting fees and grant funding from other pharmaceutical companies.

SOURCE: Greenberg B et al. CMSC 2019, Abstract DXM02.

REPORTING FROM CMSC 2019

Treatment for hepatitis C reduces risk of Parkinson’s disease

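Antiviral treatment for hepatitis C virus infection is associated with a reduced risk of Parkinson’s disease, according to a cohort study published online June 5 in JAMA Neurology. The results provide evidence that hepatitis C virus is a risk factor for Parkinson’s disease.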

In the past several years, epidemiologic studies have suggested an association between hepatitis C virus infection and Parkinson’s disease. A study published in 2017, however, found no association between the two. In addition, these investigations did not consider antiviral therapy as a potential modifying factor.

Wey-Yil Lin, MD, a neurologist at Landseed International Hospital in Taoyuan, Taiwan, and colleagues examined claims data from the Taiwan National Health Insurance Research Database to identify the risk of incident Parkinson’s disease in patients with hepatitis C virus infection who received antiviral treatment, compared with those who did not receive treatment.

The investigators selected all patients with a new diagnosis of hepatitis C virus infection with or without hepatitis from January 1, 2003, to December 31, 2013. They excluded patients who were aged 20 years or younger; had Parkinson’s disease, dementia, or stroke; or had had major hepatic diseases on the index date. To ensure that treated patients had had an effective course of therapy, the researchers excluded patients who were lost to follow-up within 6 months of the index date, received antiviral therapy for fewer than 16 weeks, or developed Parkinson’s disease within 6 months of the index date.

The primary outcome was incident Parkinson’s disease. Dr. Lin and colleagues excluded participants with a diagnosis of stroke and dementia before the index date to reduce the possibility of enrolling participants with secondary and atypical parkinsonism.

To minimize the potential selection bias to which observational studies are subject, the investigators performed propensity score matching with sex, age, comorbidities, and medication as covariates. This method was intended to create treated and untreated cohorts with comparable characteristics.
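As a rough illustration of the matching idea, the sketch below pairs treated and untreated patients on precomputed propensity scores. The article does not say which matching algorithm, caliper, or software the investigators used; the greedy 1:1 nearest-neighbor approach, the 0.05 caliper, the function name `greedy_match`, and all patient IDs and scores here are hypothetical.

```python
def greedy_match(treated, untreated, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on propensity scores.

    treated, untreated: dicts mapping a patient ID to a propensity score
    (the estimated probability of receiving treatment given the covariates).
    caliper: largest score difference accepted for a pair (assumed value).
    """
    available = dict(untreated)  # untreated patients not yet matched
    pairs = []
    # Process treated patients in order of increasing score.
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            break
        # Closest remaining untreated patient by score.
        u_id = min(available, key=lambda k: abs(available[k] - t_score))
        if abs(available[u_id] - t_score) <= caliper:
            pairs.append((t_id, u_id))
            del available[u_id]  # match without replacement
    return pairs


# Made-up scores for illustration only.
treated = {"T1": 0.30, "T2": 0.62, "T3": 0.90}
untreated = {"U1": 0.28, "U2": 0.33, "U3": 0.60, "U4": 0.10}

pairs = greedy_match(treated, untreated)
print(pairs)
```

In this toy example, T3 is left unmatched because no untreated patient has a score within the caliper, which is how matching can shrink a cohort to comparable groups of equal size.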

Dr. Lin and colleagues included 188,152 patients in their analysis. After matching, each group included 39,936 participants. In the group that received antiviral treatment, 45.0% of participants were female, and mean age was 52.8 years. In the untreated group, 44.4% of participants were female, and mean age was 52.5 years.

The incidence density of Parkinson’s disease per 1,000 person-years was 1.00 in the treated group and 1.39 in the untreated group. The difference in risk of Parkinson’s disease between the treated and untreated groups was statistically significant at year 5 of follow-up (hazard ratio [HR], 0.75) and at the end of the cohort (HR, 0.71). The risk did not differ significantly at year 1 and year 3, however. A subgroup analysis found a greater benefit of antiviral therapy among patients who concurrently used dihydropyridine calcium channel blockers.
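The two reported incidence densities can be compared directly with simple arithmetic. The sketch below computes a crude rate ratio and rate difference from the figures above; the variable names are illustrative, and the crude ratio is not the same quantity as the hazard ratios the investigators reported, which come from their own time-to-event analysis.

```python
# Incidence densities reported in the article, per 1,000 person-years.
treated_rate = 1.00
untreated_rate = 1.39

# Crude comparison of the two rates (unadjusted, for illustration only).
rate_ratio = treated_rate / untreated_rate
rate_difference = untreated_rate - treated_rate

print(f"Crude rate ratio (treated vs. untreated): {rate_ratio:.2f}")
print(f"Crude rate difference: {rate_difference:.2f} per 1,000 person-years")
```

The crude ratio of about 0.72 is close to the reported hazard ratio of 0.71 at the end of the cohort.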

“To our knowledge, this is the first cohort study to investigate the association between antiviral therapy and risk of Parkinson’s disease in patients with chronic hepatitis C viral infection,” said Dr. Lin and colleagues. Although it is possible that interferon-based antiviral therapy directly protected against the development of Parkinson’s disease, the short time of exposure to the antiviral agent “makes protecting against Parkinson’s disease development in 5 years less likely,” they added.

Among the study limitations that the authors acknowledged was the lack of data about hepatic function profile, serum virologic response, viral genotype, and hepatitis C virus RNA level. The database that the investigators used also lacked data about behavioral factors (e.g., smoking status, coffee consumption, and alcohol consumption) that may have affected the incidence of Parkinson’s disease in the cohort. Investigations with longer follow-up periods will be needed to provide clearer information, they concluded.

The authors reported no conflicts of interest. The study was funded by grants from Chang Gung Medical Research Fund and from Chang Gung Memorial Hospital.

SOURCE: Lin W-Y et al. JAMA Neurol. 2019 Jun 5. doi: 10.1001/jamaneurol.2019.1368.

The findings of Lin et al. suggest a potentially modifiable hepatologic risk factor for Parkinson’s disease, Adolfo Ramirez-Zamora, MD, associate professor of neurology; Christopher W. Hess, MD, assistant professor of neurology; and David R. Nelson, MD, senior vice president for health affairs, all at the University of Florida in Gainesville, wrote in an accompanying editorial. Hepatitis C virus might enter the brain through the microvasculature and might induce microglial and macrophage-related inflammatory changes (JAMA Neurol. 2019 June 5. doi: 10.1001/jamaneurol.2019.1377).

Lin et al. estimated high diagnostic accuracy for Parkinson’s disease in their study. Nevertheless, clinical, neuroimaging, and pathological confirmation was unavailable, which is a limitation of their investigation, said Dr. Ramirez-Zamora and colleagues. “The diagnosis of Parkinson’s disease in early stages can be challenging, as other related conditions can mimic Parkinson’s disease, including cirrhosis-related parkinsonism. Moreover, using record-linkage systems excludes patients who did not seek medical advice or those who were misdiagnosed by symptoms alone, which may also underestimate the prevalence of Parkinson’s disease. Using population-based studies would be a more accurate method.”

Because interferon, which was the antiviral therapy used in this study, greatly affects the immune system and has a modest rate of eradicating viral hepatitis C infection, future research should examine the association between Parkinson’s disease and patients who cleared the virus, as well as patients who did not, said Dr. Ramirez-Zamora and colleagues. Such research could shed light on potential mechanisms of treatment response. Lin et al. did not examine the newer direct-acting antiviral therapies for hepatitis C virus infection, which cure more than 90% of patients. Nor did they analyze other well-established lifestyle and demographic risk factors for developing the disease. In addition, “the authors could not generalize the results to those aged 75 years or older because of the substantially smaller number of patients in this age group,” said Dr. Ramirez-Zamora and colleagues.

Still, “identification of potentially treatable Parkinson’s disease risk factors presents a unique opportunity for treatment. Additional studies with detailed viral analysis and exposure are needed, including in other geographic and ethnic distributions,” they concluded.
