FDA aims to improve review of generic drugs

The US Food and Drug Administration (FDA) has announced that it is taking new steps to facilitate efficient review of generic drugs.

The agency has released 2 documents intended to help streamline and improve the submission and review of generic drug applications, or Abbreviated New Drug Applications (ANDAs).

The first document is a draft guidance for industry, “Good ANDA Submission Practices,” which highlights common, recurring deficiencies the FDA sees in generic drug applications that may lead to a delay in their approval.

The FDA’s goal in releasing this document is to reduce the number of review cycles for ANDAs by helping applicants avoid these deficiencies.

The second document is a Manual of Policies and Procedures (MAPP), “Good ANDA Assessment Practices,” which outlines ANDA assessment practices for FDA staff.

This document formalizes a more streamlined generic review process, including the introduction of new templates designed to make each cycle of the review process more efficient and complete.

Releasing these new documents is part of the FDA’s Drug Competition Action Plan, which has 3 main goals:

- To reduce actions by branded pharmaceutical companies that can delay generic drug entry into the marketplace

- To resolve scientific and regulatory obstacles that can make it difficult to win approval of generic versions of certain complex drugs

- To improve the efficiency and predictability of the FDA’s generic review process to reduce the time it takes to get a generic drug approved and lessen the number of review cycles needed for generic applications.

The new MAPP and draft guidance documents for ANDAs are intended to help the FDA meet the third goal of the Drug Competition Action Plan.

The FDA is hoping to address the second goal of the plan later this year, building upon its initiatives to accelerate review and approval of complex generics.

The agency is also planning to develop guidance documents during the first quarter of 2018 that will address 3 issues related to the first goal of the plan: potential abuses of the citizen petition process, companies that restrict access to testing samples of branded drugs, and abuses of the single, shared system REMS (risk evaluation and mitigation strategy) negotiation process.

Stressed for Success

As I write this column, the holiday season has just begun, and its incumbent demands lie ahead. By the time this article reaches you, we will have outlasted the season and its associated stress. But it’s not just the holiday baking, gift-wrapping, and decorating that overwhelm us; we also face enormous professional stress during this time of year, with its emphasis on home, family, good health, and harmony.

Stress is simply a part of human nature. And despite its bad rap, not all stress is problematic; it’s what motivates people to prepare or perform. Routine, “normal” stress that is temporary or short-lived can actually be beneficial. When placed in danger, the body prepares to either face the threat or flee to safety. During these times, your pulse quickens, you breathe faster, your muscles tense, your brain uses more oxygen and increases activity—all functions that aid in survival.1

But not every situation we encounter necessitates an increase in endorphin levels and blood pressure. Tell that to our stress levels, which are often persistently elevated! Chronic stress can cause the self-protective responses your body activates when threatened to suppress immune, digestive, sleep, and reproductive systems, leading them to cease normal functioning over time.1 This “bad” stress—or distress—can contribute to health problems such as hypertension, cardiovascular disease, obesity, and diabetes.

Stress can elicit a variety of responses: behavioral, psychologic/emotional, physical, cognitive, and social.2 For many, consumption (of tobacco, alcohol, drugs, sugar, fat, or caffeine) is a coping mechanism. While many people look to food for comfort and stress relief, research suggests it may have undesired effects. Eating a high-fat meal when under stress can slow your metabolism and result in significant weight gain.3 Stress can also influence whether people undereat or overeat, and it can affect neurohormonal activity, increasing production of cortisol, which in turn leads to weight gain (particularly in women).4 Let’s be honest: Gaining weight seldom lowers someone’s stress level.

Everyone has different triggers that cause their stress levels to spike, but the workplace has been found to top the list. Within the “work” category, commonly reported stressors include

- Heavy workload or too much responsibility

- Long hours

- Poor management, unclear expectations, or having no say in decision-making.5

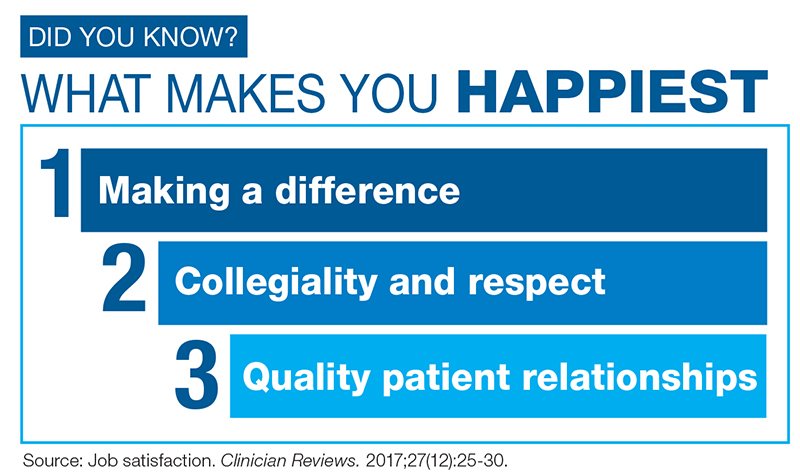

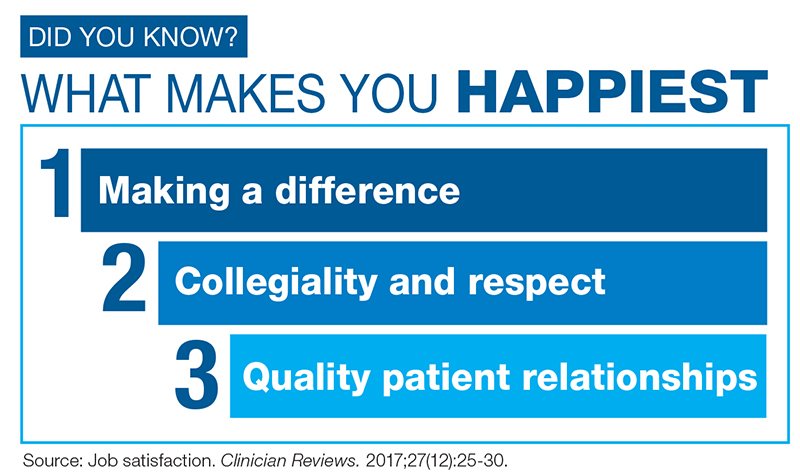

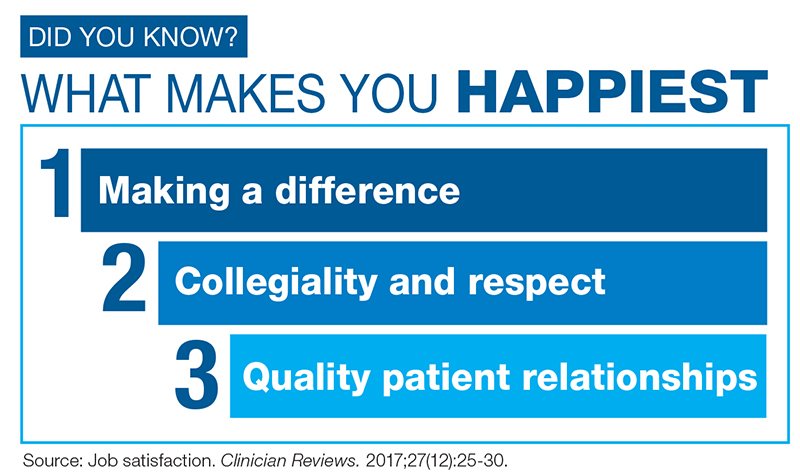

For health care providers, day-to-day stress is a chronic problem; responses to the Clinician Reviews annual job satisfaction survey have demonstrated that.6,7 Many of our readers report ongoing issues related to scheduling, work/life balance, compensation, and working conditions. That tension has a direct negative effect, not only on us, but on our families and our patients as well. A missed soccer game and a holiday on call are obvious stressors, but our inability to help patients achieve optimal health is a source of distress whose ramifications we may not recognize. How often has a clinician felt caught in what feels like an unattainable quest?

Mitigating this workplace stress is the challenge. Changing jobs is another high-stress event, so up and quitting is probably not the answer. Identifying the problem is the first essential step.

If workload is the issue, focus on setting realistic goals for your day. Goal-setting provides purposeful direction and helps you feel in control. There will undeniably be days in which your plan must be completely abandoned. When this happens, don’t fret—reassess! Decide what must get done and what can wait. If possible, avoid scheduling patient appointments that will put you into overload. Learn to say “no” without feeling as though you are not a team player. And when you feel swamped, put a positive spin on the day by noting what you have accomplished, rather than what you have been unable to achieve.

If you find that your voice is but a whisper in the decision-making process, look for ways to become more involved. How can you provide direction on issues relating to organizational structure and clinical efficiency? Don’t suppress your feelings or concerns about the work environment. Pulling up a chair at the management table is crucial to improving the workplace and reducing stress for everyone (including the management!). Discuss key frustration points. Clear documentation of the issues that impede patient satisfaction (eg, long wait times) will aid in your dialogue.

Literature has identified common professional frustrations related to base pay rates, on-call pay, overtime pay, individual productivity compensation, and general incentive payments, which are further supported by our job satisfaction surveys.6-8 Knowing what’s included in the typical compensation packages in your region can reduce not only your own stress, but your employer’s as well. While this may seem a futile exercise, the investment in evaluating your own value, and the value your employer places on you, is well worth the return.

Previous experience dictates our ability to handle stress. If you have confidence in yourself, your contribution to your patients, and your ability to influence events and persevere through challenges, you are better equipped to handle the stress. If you can put the stressors in perspective by knowing the time frame of the stress, how long it will last, and what to expect, it will be easier to cope with the situation.

While trying to write this column, I was initially so stressed that I could barely compose a sentence! I knew that the stress of meeting my editorial deadline was “good” stress, though, so I kept taking short walks, and (as you can read) I got through it. Whether you turn to exercise or music or (as one friend does!) closet purging—managing your stress is key to maintaining good health.

1. National Institute of Mental Health. 5 things you should know about stress. www.nimh.nih.gov/health/publications/stress/index.shtml. Accessed December 4, 2017.

2. New York State Office of Mental Health. Common stress reactions: a self-assessment. www.omh.ny.gov/omhweb/disaster_resources/pandemic_influenza/doctors_nurses/Common_Stress_Reactions.html. Accessed December 7, 2017.

3. Smith M. Stress, high fat, and your metabolism [video]. WebMD. www.webmd.com/balance/stress-management/video/stress-high-fat-and-your-metabolism. Accessed December 7, 2017.

4. Slachta A. Stressed women more likely to develop obesity. Cardiovascular Business. November 15, 2017. www.cardiovascularbusiness.com/topics/lipid-metabolic/stressed-women-more-likely-develop-obesity-study-finds. Accessed December 7, 2017.

5. WebMD. Causes of stress. www.webmd.com/balance/guide/causes-of-stress#1. Accessed December 7, 2017.

6. Job satisfaction. Clinician Reviews. 2017;27(12):25-30.

7. Beyond salary: are you happy with your work? Clinician Reviews. 2016;26(12):23-26.

8. O’Hare S, Young AF. The advancing role of advanced practice clinicians: compensation, development, & leadership trends (2016). HealthLeaders Media. www.healthleadersmedia.com/whitepaper/advancing-role-advanced-practice-clinicians-compensation-development-leadership-trends. Accessed December 7, 2017.

Model validates use of HCV+ livers for transplant

As the evidence supporting the idea of transplanting livers infected with hepatitis C into patients who do not have the disease continues to mount, a multi-institutional team of researchers has developed a mathematical model that shows when hepatitis C–positive-to-negative transplant may improve survival for patients who might otherwise die awaiting a disease-free liver.

In a report published in the journal Hepatology (doi: 10.1002/hep.29723), the researchers noted how direct-acting antivirals (DAAs) have changed the calculus of hepatitis C (HCV) status in liver transplant by reducing the number of HCV-positive patients on the wait list and providing treatment for HCV-negative patients who receive HCV-positive livers. “It is important that further research in this area continues, as we expect that the supply of HCV-positive organs may continue to increase in light of the growing opioid epidemic,” said lead author Jagpreet Chhatwal, PhD, of Massachusetts General Hospital Institute for Technology Assessment in Boston.

Dr. Chhatwal and coauthors claimed their study provides some of the first empirical data for transplanting livers from patients with HCV into patients who do not have the disease.

The researchers performed their analysis using a Markov-based mathematical model known as Simulation of Liver Transplant Candidates (SIM-LT). The model had been validated in previous studies that Dr. Chhatwal and some coauthors had published (Hepatology. 2017;65:777-88; Clin Gastroenterol Hepatol. 2018;16:115-22). Dr. Chhatwal and coauthors revised the SIM-LT model to simulate a virtual trial of HCV-negative patients on the liver transplant waiting list, comparing outcomes in patients willing to accept any liver with outcomes in those willing to accept only HCV-negative livers.

The patients willing to receive HCV-positive livers were given 12 weeks of DAA therapy preemptively and had a higher risk of graft failure. The model incorporated data from published studies using the United Network for Organ Sharing (UNOS) and used reported outcomes of the Organ Procurement and Transplantation Network to validate the findings.
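For readers unfamiliar with Markov cohort models, the general idea behind this kind of comparison can be illustrated with a toy sketch. This is not SIM-LT and does not reproduce the study: the three states (waiting, post-transplant, dead), the monthly cycle length, the function name `life_years`, and every probability below are hypothetical values chosen only for illustration.

```python
def life_years(p_transplant, p_death_waiting, p_death_post, cycles=120):
    """Expected life-years per patient over `cycles` monthly steps.

    Toy three-state Markov cohort: waiting -> post-transplant -> dead.
    All inputs are hypothetical monthly probabilities, not study data.
    """
    waiting, post = 1.0, 0.0      # whole cohort starts on the waiting list
    months_alive = 0.0
    for _ in range(cycles):
        # Everyone alive at the start of the cycle contributes one month.
        months_alive += waiting + post
        died_waiting = waiting * p_death_waiting
        transplanted = (waiting - died_waiting) * p_transplant
        # Post-transplant survivors plus this cycle's new recipients.
        post = post * (1.0 - p_death_post) + transplanted
        waiting = waiting - died_waiting - transplanted
    return months_alive / 12.0


# Hypothetical strategies: accepting any liver shortens the wait but
# carries a slightly higher post-transplant death risk (illustrative only).
any_liver = life_years(p_transplant=0.10, p_death_waiting=0.05, p_death_post=0.006)
negative_only = life_years(p_transplant=0.07, p_death_waiting=0.05, p_death_post=0.005)
print(f"Gain from accepting any liver: {any_liver - negative_only:.3f} life-years")
```

In a sketch like this, the sign of the gain depends on how risky waiting is relative to the added post-transplant risk, which is the same kind of trade-off the authors evaluated by MELD score and by region.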

The study showed that the clinical benefits of an HCV-negative patient receiving an HCV-positive liver depend on the patient’s Model for End-Stage Liver Disease (MELD) score. Using the measured change in life-years, the researchers found that patients with a MELD score below 20 actually saw a reduction in life-years when accepting any liver, but that the benefits of accepting any liver started to accrue at a MELD score of 20. The benefit peaked at MELD 28, with 0.172 life-years gained, and persisted at MELD 40, with 0.06 life-years gained.

The effectiveness of using HCV-positive livers may also depend on region. UNOS Region 1 – essentially New England minus western Vermont – has the highest rate of HCV-positive organs, and a patient there with MELD 28 would gain 0.36 life-years by accepting any liver regardless of HCV status. However, Region 7 – the Dakotas and upper Midwest plus Illinois – has the lowest HCV-positive organ rate, and a MELD 28 patient there would gain only 0.1 life-year accepting any liver.

“Transplanting HCV-positive livers into HCV-negative patients receiving preemptive DAA therapy could be a viable option for improving patient survival on the LT waiting list, especially in UNOS regions with high HCV-positive donor organ rates,” said Dr. Chhatwal and coauthors. They concluded that their analysis could help direct future clinical trials evaluating the effectiveness of DAA therapy in liver transplant by recognizing patients who could benefit most from accepting HCV-positive donor organs.

The study authors reported having no financial disclosures. The study was supported by grants from the American Cancer Society, Health Resources and Services Administration, National Institutes of Health, National Science Foundation, and Massachusetts General Hospital Research Scholars Program. Coauthor Fasiha Kanwal, MD, received support from the Veterans Administration Health Services, Research & Development Center for Innovations in Quality, Effectiveness and Safety and Public Health Service.

SOURCE: Chhatwal J et al. Hepatology. doi:10.1002/hep.29723.

FROM HEPATOLOGY

Key clinical point: Making hepatitis C virus–positive livers available to HCV-negative patients awaiting liver transplant could improve survival of patients on the liver transplant waiting list.

Major finding: Patients with a Model for End-Stage Liver Disease score of 28 willing to receive any liver gained 0.172 life-years.

Data source: Simulated trial using Markov-based mathematical model and data from published studies and the United Network for Organ Sharing.

Disclosures: Dr. Chhatwal and coauthors reported having no financial disclosures. The study was supported by grants from the American Cancer Society, Health Resources and Services Administration, National Institutes of Health, National Science Foundation, and Massachusetts General Hospital Research Scholars Program. Coauthor Fasiha Kanwal, MD, received support from the Veterans Administration Health Services, Research & Development Center for Innovations in Quality, Effectiveness and Safety and Public Health Service.

Source: Chhatwal J et al. Hepatology. doi:10.1002/hep.29723.

Rheumatology News Best of 2017: Top News Highlights

Best of 2017: Top News Highlights is a supplement to Rheumatology News that presents some of the top stories published in the newspaper in 2017.

The ideas and opinions expressed in Best of 2017: Top News Highlights do not necessarily reflect those of the Publisher. Frontline Medical Communications Inc. will not assume responsibility for damages, loss, or claims of any kind arising from or related to the information contained in this publication, including any claims related to the products, drugs, or services mentioned herein.

FDA grants breakthrough therapy designation for severe aplastic anemia drug

The Food and Drug Administration has granted breakthrough therapy designation to eltrombopag (Promacta) for use in combination with standard immunosuppressive therapy as a first-line treatment for patients with severe aplastic anemia (SAA).

Eltrombopag already is approved as a second-line therapy in patients with refractory SAA and is approved for adults and children with refractory chronic immune thrombocytopenia (ITP).

“Promacta is a promising medicine that, if approved for first-line use in severe aplastic anemia, may redefine the standard of care for patients with this rare and serious bone marrow condition,” Samit Hirawat, MD, head of Novartis Oncology Global Drug Development, said in a statement.

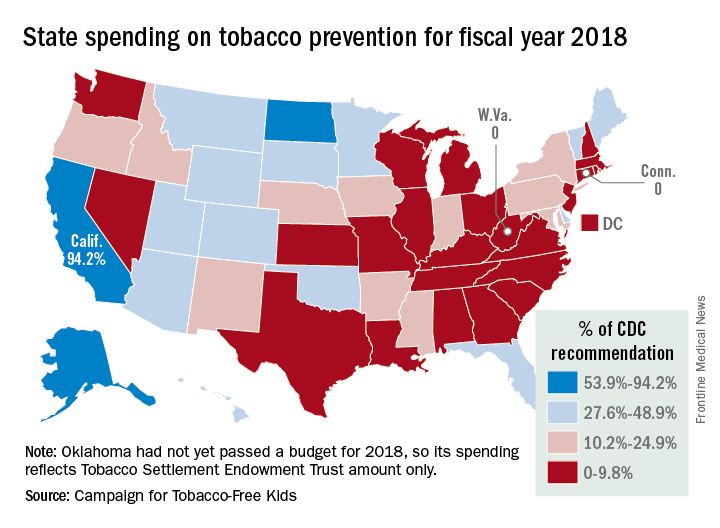

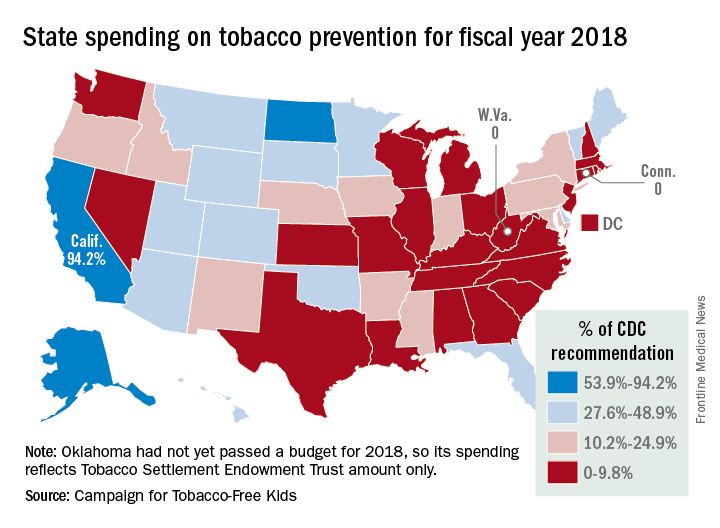

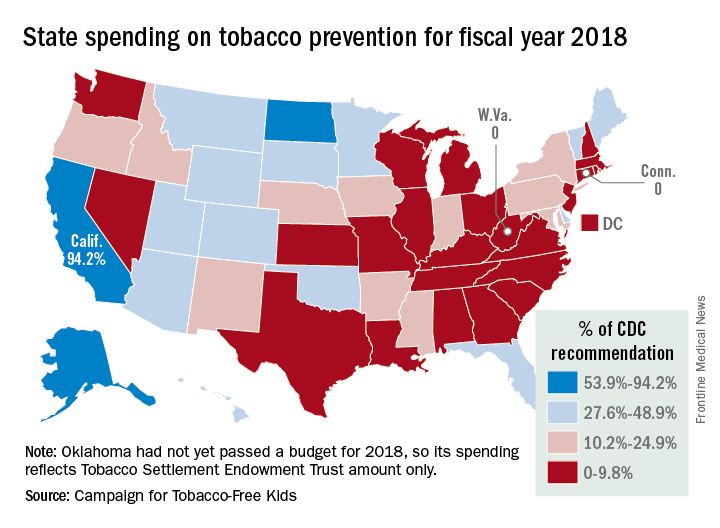

California tops state tobacco prevention spending

California will spend almost as much money on tobacco prevention and smoking cessation as the other states combined in 2018, putting it closest to the spending level recommended for each state by the Centers for Disease Control and Prevention, according to a report on the effects of the 1998 tobacco settlement.

The Golden State has budgeted almost $328 million for tobacco prevention and cessation this year, which amounts to just over 45% of all states’ total spending of $722 million and 94% of the CDC’s recommendation of $348 million for California. Alaska is the only state that comes close by that measure, reaching 93% of its CDC-recommended spending target of $10.2 million. Third in share of recommended spending is North Dakota, which has budgeted $5.3 million for 2018, or 54% of its CDC target, the report said.

“Broken Promises to Our Children: A State-by-State Look at the 1998 Tobacco Settlement 19 Years Later” was released by the Campaign for Tobacco-Free Kids, American Cancer Society Cancer Action Network, American Heart Association, American Lung Association, Robert Wood Johnson Foundation, Americans for Nonsmokers’ Rights, and Truth Initiative.

As for actual spending, Florida is second behind California with almost $69 million – 35% of its CDC-recommended level – budgeted for tobacco prevention and smoking cessation in 2018, and New York is third at just over $39 million, which is 19.4% of the CDC recommendation. Two states – Connecticut and West Virginia – will spend no money on such programs this year, the report noted.

The CDC has said that all states combined should be spending $3.3 billion for the year on prevention and cessation efforts, which is about 4.5 times the actual budgeted spending. The report also pointed out that the $722 million the states will spend this year amounts to just 2.6% of the $27.5 billion they will collect from the 1998 tobacco settlement and tobacco taxes. By comparison, the report cited data from the Federal Trade Commission showing that tobacco companies spent $8.9 billion on marketing in 2015.
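
For readers who want to check the arithmetic behind these comparisons, the short Python sketch below recomputes the quoted percentages from the dollar figures cited in the report. The variable names are illustrative only, and the inputs are the rounded figures quoted above, so results may differ slightly from the report's own calculations.

```python
# Rounded figures quoted from the report, in millions of US dollars.
california_budget = 328        # "almost $328 million"
all_states_budget = 722        # total budgeted by all states for 2018
california_cdc_target = 348    # CDC-recommended level for California
cdc_total_target = 3_300       # CDC-recommended level, all states combined
settlement_and_taxes = 27_500  # settlement payments plus tobacco taxes


def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


# California's share of all state spending ("just over 45%").
california_share = percent(california_budget, all_states_budget)

# California's budget relative to its own CDC target ("94%").
california_vs_target = percent(california_budget, california_cdc_target)

# How many times larger the CDC recommendation is than actual spending.
shortfall_ratio = cdc_total_target / all_states_budget

# State spending as a share of settlement and tax revenue ("2.6%").
share_of_revenue = percent(all_states_budget, settlement_and_taxes)

print(f"California share of state spending: {california_share:.1f}%")
print(f"California vs. its CDC target: {california_vs_target:.1f}%")
print(f"CDC recommendation vs. actual spending: {shortfall_ratio:.2f}x")
print(f"Spending as share of tobacco revenue: {share_of_revenue:.1f}%")
```

Each result rounds to the figure given in the article, which suggests the quoted percentages are internally consistent.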

Government insurance linked with lower likelihood of radiation therapy for limited-stage small cell lung cancer

Researchers looked at utilization rates and factors associated with chemotherapy and radiation therapy delivery for limited-stage small cell lung cancer cases from 2004 through 2013 in the National Cancer Database.

Researchers suggest that programs such as 340B and the Medicaid Drug Discount Program allow for improved access to chemotherapy.

“However, these programs provide no financial assistance for radiation therapy delivered to this high-risk population, which may partially explain why patients with government insurance were significantly less likely to receive radiation therapy,” Dr. Pezzi and his colleagues noted.

“Our findings suggest the need for targeted access improvement to radiation therapy for this population,” they added.

SOURCE: Todd Pezzi, MD, et al. JAMA Oncol. doi: 10.1001/jamaoncol.2017.4504.

FROM JAMA ONCOLOGY

A mismatched haplotype may improve outcomes in second BMT

Undergoing a second allogeneic blood or marrow transplant (BMT) is a feasible strategy for patients who have relapsed after their first transplantation, according to findings reported in Biology of Blood and Marrow Transplantation.

Specifically, there may be an advantage to selecting a donor with a new mismatched haplotype after a failed HLA-matched allograft. Among patients whose second graft did not harbor a new mismatched haplotype, median survival was 552 days (95% confidence interval [CI], 376-2,950+). Median survival was not reached in the cohort whose second allograft contained a new mismatched haplotype (hazard ratio [HR], 0.36; 95% CI, 0.14-0.9; P = .02).

“In the case of a failed HLA-matched transplantation, when haplotype loss would not be favored through selective pressure and thus would not provide a mechanism of relapse, switching to a different haploidentical donor may be beneficial by increasing major histocompatibility mismatch,” Philip H. Imus, MD, of the Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins, Baltimore, and colleagues wrote.

For this retrospective review, the researchers examined 40 consecutive patients who underwent a second BMT for a relapsed hematologic malignancy at a single institution between 2005 and 2014. In all, 21 patients had their first allograft from a haploidentical donor, with 14 of these patients receiving a second haploidentical transplant (8 from a donor sharing the same haplotype as the first donor and 6 from a second donor sharing the other haplotype).

The other 19 patients received their first allograft from a fully matched donor, with 14 patients receiving a haploidentical allograft at the second transplantation and 5 receiving a second matched allograft. Overall, 20 patients had a second allograft with a new mismatched haplotype.

The median overall survival had not been reached for the six patients who were retransplanted from an HLA-haploidentical donor sharing a different haplotype than the first haploidentical donor, compared with 502 days (95% CI, 317-2,950+) for the eight patients whose second haploidentical allograft shared the same haplotype (HR, 0.37; 95% CI, 0.08-1.7; P = .16).

The median event-free survival was also superior among those retransplanted with a new mismatched haplotype (not reached vs. 401 days; HR, 0.50; 95% CI, 0.22-1.14; P = .09).

A higher risk of mortality was observed in patients who had relapsed within 6 months of their first transplantation (HR, 2.49; 95% CI, 1.0-6.2; P = .07), as well as in those who had progressive or refractory disease prior to their second allogeneic BMT (HR, 2.56; 95% CI, 1.03-6.25; P = .06).

“For patients who relapse more than 6 months after a BMT and who achieve remission with salvage therapy, results are very encouraging,” the researchers wrote. “Although a randomized trial to study selecting donors with a new mismatched haplotype may be impractical, larger numbers should provide additional information on the effectiveness of such an approach.”

The researchers reported having no financial disclosures.

SOURCE: Imus P et al. Biol Blood Marrow Transplant. 2017;23:1887-94.

FROM BIOLOGY OF BLOOD AND MARROW TRANSPLANTATION

Key clinical point:

Major finding: Median survival was 552 days (95% CI, 376-2,950+) for those without a new mismatched haplotype vs. not being reached for patients with a new mismatched haplotype on second transplant (HR, 0.36; 95% CI, 0.14-0.9; P = .02).

Study details: Retrospective review in a single institution that included 40 patients who underwent a second BMT between 2005 and 2014.

Disclosures: The researchers reported having no financial disclosures.

Source: Imus P et al. Biol Blood Marrow Transplant. 2017;23:1887-94.

Acne evaluations using photos with digital tool comparable to in-person exams

Dermatologist evaluation of photographs of acne taken by patients using a telemedicine and mobile data collection tool produced similar Investigator’s Global Assessment (IGA) findings and lesion counts, compared with traditional in-person examinations, in a pilot study sponsored by the tool’s manufacturer.

The tool is the Network Oriented Research Assistant (NORA), reported Hannah Singer, a medical student at Columbia University, New York, and her coinvestigators. The study was published online Dec. 20 in JAMA Dermatology.

To determine the effectiveness of the tool, investigators compared in-person evaluations with digital evaluations in 60 acne patients at one dermatology practice. The intraclass correlation coefficient (ICC) was used to compare the in-person exam and the digital photo assessments of the IGA score and acne lesion counts; ICC scores range from 0 to 1, with 0 indicating no agreement and 1 indicating perfect agreement.
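As a rough illustration of how an ICC summarizes agreement between two assessment methods, here is a minimal Python sketch. The article does not say which form of the ICC the investigators used, so the two-way random-effects, absolute-agreement, single-rater form, often written ICC(2,1), is an assumption here. The function name `icc_2_1` and the toy scores are hypothetical and are not data from the study.

```python
from statistics import mean


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one per patient; each row holds one score
    per assessment method (for example, [in_person, photo]).
    This ICC form is an assumption; the article does not specify one.
    """
    n = len(ratings)      # number of patients
    k = len(ratings[0])   # number of assessment methods
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Sums of squares for patients, methods, and residual error
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = sum(
        (ratings[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n)
        for j in range(k)
    )

    # Mean squares from the two-way layout
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


if __name__ == "__main__":
    # Hypothetical IGA scores (0-4) for six patients: [in_person, photo]
    toy_scores = [[2, 2], [3, 3], [1, 2], [4, 4], [0, 1], [3, 2]]
    print(f"ICC(2,1) for the toy data: {icc_2_1(toy_scores):.2f}")
```

When the two columns are identical for every patient, the error and method terms vanish and the function returns 1, matching the "perfect agreement" end of the scale described above.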

Study participants, who were aged 12-54 years (mean age, 23 years), were trained to use NORA on an iPhone 6 and were instructed to take five photographs of different facial regions: the forehead, chin, right cheek, left cheek, and the whole face. The evaluation of patient-photographed acne and the in-person evaluation were separated by 1 week.

The ICC for IGA scores was 0.75, and the ICC for total lesion count was 0.81. Inflammatory lesion count and noninflammatory lesion count both had an ICC score of 0.72, while cyst count had the highest ICC score of 0.82. The ICC results demonstrated “strong agreement” between assessment scores from in-person examinations and the patient-taken digital photos, the authors wrote.

The study had several limitations, including the small patient sample size and limited geographic area, the authors added. Although there was a 1- to 2-week gap between the digital and in-person evaluations, there may have been recall bias because the same physicians conducted both evaluations.

Future studies should collect data from multiple centers, with longer follow-up, and should include cost analysis and patient-reported measures, the researchers wrote.

The NORA technology platform is currently being evaluated in studies of patients with diagnoses including atopic dermatitis, pemphigus vulgaris, and liver disease. “Use of the NORA platform in telemedicine-based clinical trials will allow for increased access, a broader diversity of patients, and improved adherence among participants in trials for acne vulgaris and other therapeutic areas,” the researchers noted.

The study was sponsored by Science 37, a mobile technology and clinical trial company that developed NORA, the software used in this study. Other than Ms. Singer, who had no disclosures, the remaining 10 authors are Science 37 employees and have stock options in the company.

SOURCE: Singer H et al. JAMA Dermatol. 2017 Dec 20. doi: 10.1001/jamadermatol.2017.5141.

FROM JAMA DERMATOLOGY

Key clinical point: In-person evaluations and digital evaluations of self-photographed acne produced comparable results.

Major finding: There was “strong agreement” in the Investigator’s Global Assessment scores and acne lesion counts between assessments of digital photos and in-person exams.

Study details: A pilot study of 60 acne patients at a dermatology office compared assessments with digital photos and in-person exams.

Disclosures: The study was sponsored by Science 37, a mobile technology and clinical trial company that developed NORA, the software used in this study. Other than the lead author, who had no disclosures, the 10 remaining authors are Science 37 employees and have stock options in the company.

Source: Singer H et al. JAMA Dermatol. 2017 Dec 20. doi: 10.1001/jamadermatol.2017.5141.

Study: Test carcinoembryonic antigen in colon cancer after surgery

A new study challenges the practice of measuring carcinoembryonic antigen (CEA) in patients with colon cancer prior to surgery, as is currently recommended by some guidelines. Researchers found that CEA levels do have predictive value for the risk of recurrence when measured after surgery, but they question the value of measuring them beforehand.

“Consistent with the literature, our data show that postoperative CEA is more informative than preoperative CEA,” the study authors wrote. “Emphasis should be placed on postoperative CEA, and in the setting of modern high-quality imaging, we question the utility of measuring preoperative CEA.”

The study, which was published online in JAMA Oncology, noted that testing for CEA – a colorectal cancer tumor marker – had more value prior to the current era of imaging because it could indicate the need to widen a search for metastases.

“In the era of high-quality computed tomography (CT), the utility of measuring preoperative CEA is less obvious because an elevated preoperative CEA with a normal CT scan does not preclude surgery with curative intent,” wrote Tsuyoshi Konishi, MD, of Memorial Sloan-Kettering Cancer Center in New York and his coauthors.

CEA levels can return to normal after colon cancer surgery – but not always. According to the study, the National Comprehensive Cancer Network, the American Society of Clinical Oncology, and the European Group on Tumor Markers recommend its measurement prior to surgery in patients with nonmetastatic cancer.

For the new study, the authors sought to understand the predictive value of various CEA levels both before and after surgery.

The researchers retrospectively tracked 1,027 patients at a single center with stage I-III colon cancer (50.4% male; median age, 64 years). (Another 221 patients had been removed because of exclusion criteria, such as recent malignancy treatment and preoperative chemotherapy or radiation treatment.)

Nearly 70% of the 1,027 patients had normal preoperative CEA levels, and the rest had elevated levels.

Of the 312 patients with elevated preoperative CEA levels, 113 were lost to follow-up postoperative CEA tests. In the remaining patients, CEA levels returned to normal in 71%.
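The cohort figures above can be cross-checked with a few lines of arithmetic. This is only a sketch: the function name `cea_cohort_breakdown` is hypothetical, and the count of patients whose CEA normalized is an approximation derived from the reported 71%, not a number stated in the article.

```python
def cea_cohort_breakdown(total=1027, elevated=312, lost=113, normalized_share=0.71):
    """Re-derive the cohort counts from the figures reported in the article."""
    normal = total - elevated    # patients with normal preoperative CEA
    retested = elevated - lost   # elevated patients with a postoperative CEA test
    return {
        "normal_preop": normal,
        "normal_preop_pct": round(100 * normal / total, 1),
        "retested": retested,
        # Approximation only: 71% is itself a rounded figure
        "approx_normalized": round(normalized_share * retested),
    }


if __name__ == "__main__":
    for name, value in cea_cohort_breakdown().items():
        print(name, value)
```

The result is consistent with the text: 715 of 1,027 patients (69.6%, or "nearly 70%") had normal preoperative levels, and 199 patients with elevated levels had postoperative tests, of whom roughly 141 normalized.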

Patients were followed for a median of 38 months; 10.3% had recurrences, and 4.6% died. The 3-year recurrence-free survival rate was 88.4%.

Researchers found that those with normal preoperative CEA levels were more likely to be recurrence free at 3 years (89.7%) than were those who had elevated preoperative CEA levels (82.3%; P = .05). The rate in patients with normal preoperative levels was not significantly different from the rate in those whose CEA levels had normalized after the operation (87.9%; P = .86).

Those with elevated CEA levels after surgery had a lower likelihood of reaching recurrence-free survival at 3 years (74.5%) than did those who had normal postoperative CEA levels (89.4%).

The study also linked abnormal postoperative CEA levels to shorter recurrence-free survival (hazard ratio, 2.0; 95% confidence interval, 1.1-3.5). Normalized postoperative CEA levels, however, were not linked to shorter recurrence-free survival (HR, 0.77; 95% CI, 0.45-1.30).

The researchers noted that they didn’t control for factors such as smoking (which increases CEA levels) and diseases such as gastritis and diabetes, which can produce misleading CEA levels. “Rather, this study is pragmatic,” the researchers wrote, “and represents what is likely to be seen in real-world colorectal cancer care.”