Extramedullary plasmacytoma of the thyroid, refractory to radiation therapy and treated with bortezomib

Plasma cell neoplasms involving tissues other than the bone marrow are known as extramedullary plasmacytoma (EMP).1 EMPs mostly involve the head and neck region.2 Solitary EMP involving only the thyroid gland is very rare.3,4 Because the condition is rare and knowledge about it is limited, its management can be challenging and is often extrapolated from that of plasma cell myeloma.5,6 In general, surgery or radiation is considered front-line therapy.3,5 EMPs usually respond well to radiotherapy, with almost complete remission. No definite guidelines outlining the treatment of radio-resistant EMP of the thyroid have yet been published. Data supporting the use of chemotherapy are particularly limited.4,7,8

Here, we describe the case of a 53-year-old woman with a long history of thyroiditis who presented with rapidly worsening symptomatic thyroid enlargement. She was diagnosed with EMP of the thyroid gland that was not amenable to surgery and was refractory to radiotherapy but responded to adjuvant chemotherapy with bortezomib. This report highlights 2 unique aspects of this condition: it focuses on a rare case of EMP and, as far as we know, it reports for the first time on EMP that was resistant to radiotherapy. It also highlights the need for guidelines for the treatment of EMPs.

Case presentation and summary

A 53-year-old woman presented to the emergency department with difficulty swallowing, hoarseness, and neck pain over the previous month. She had a known history of Hashimoto’s thyroiditis, and an ultrasound scan of her neck 6 years previously had demonstrated diffuse thyromegaly without discrete nodules. On presentation, the patient’s vital signs were stable, and a neck examination revealed a firm and enlarged thyroid without any cervical adenopathy. Laboratory investigations revealed a normal complete blood count and comprehensive metabolic panel. She had an elevated thyroid-stimulating hormone level of 13.40 mIU/L (reference range, 0.47-4.68 mIU/L) and normal thyroxine level of 4.5 pmol/L (reference range, 4.5-12.0 pmol/L). A computed tomography (CT) scan of the neck revealed an enlarged thyroid gland (right lobe length, 10.3 cm; isthmus, 2 cm; left lobe, 8 cm) with a focal area of increased echogenicity in the midpole of the left lobe measuring 9.5 mm × 5.5 mm. The patient was discharged home with pain medications, and urgent follow-up with an otolaryngologist was arranged. Flexible laryngoscopy in the otolaryngology clinic revealed retropharyngeal bulging that correlated with the thyromegaly evident on the CT scan.

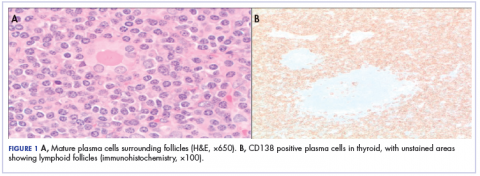

Because of the patient’s significant symptoms, we decided to proceed with surgery, with a clinical diagnosis of likely thyroiditis. A left subtotal thyroidectomy with extension to the superior mediastinum was performed, but a right thyroidectomy could not be done safely. On gross examination, the left lobe was well encapsulated, with a tan-white, lobulated, soft cut surface. Microscopic examination revealed replacement of thyroid parenchyma by sheets of mature-appearing plasma cells with eccentric round nuclei and abundant eosinophilic cytoplasm without atypia, and a few scattered thyroid follicles with lymphoepithelial lesions (Figure 1A). Immunohistochemistry confirmed plasma cells with expression of CD138 (Figure 1B).

Fluorescence in situ hybridization (FISH) showed that the neoplastic plasma cells contained monotypic kappa immunoglobulin light chain messenger RNA. Clonal immunoglobulin gene rearrangement was detected on polymerase chain reaction. A diagnosis of plasmacytoma of the thyroid gland in a background of thyroiditis was made on the basis of the aforementioned observations.

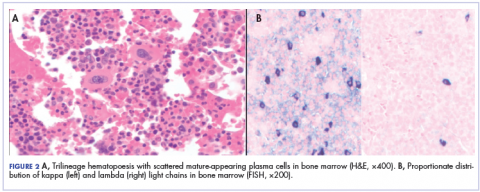

After that diagnosis, we performed an extensive work-up for plasma cell myeloma. Bone marrow biopsy showed normal maturing trilineage hematopoiesis with scattered mature-appearing plasma cells (Figure 2A). Flow cytometry showed a rare (0.2%) population of polytypic plasma cells, a finding confirmed by CD138 immunohistochemistry. FISH showed proportionate distribution (2-5:1) of kappa and lambda light chains in plasma cells (Figure 2B).

Serum protein electrophoresis showed normal levels of serum proteins with no M spike. Serum total protein was 7.9 g/dL, albumin 5.0 g/dL, α1-globulin 0.3 g/dL, α2-globulin 0.8 g/dL, β-globulin 0.7 g/dL, and γ-globulin 1.6 g/dL, with an albumin–globulin ratio of 1.47. Calcium and β2-microglobulin were also in the normal ranges. The serum free kappa light chain level was elevated (20.9 mg/L; reference range, 3.3-19.4 mg/L). The immunoglobulin G level was also elevated at 3,104 mg/dL (reference range, 700-1,600 mg/dL).

A positron-emission tomographic (PET) scan done 1 month after the surgery showed no sites of disease other than the thyroid. No lytic bone lesions were present. The patient was treated with 50.4 Gy of external beam radiotherapy to the thyroid in 28 fractions as definitive therapy. Despite treatment with surgery and radiation, she continued to have pain around the neck, and a repeat PET scan 3 months after completion of radiation showed persistent uptake in the thyroid. Because her disease was refractory to radiotherapy, she was started on systemic therapy with a weekly regimen of bortezomib and dexamethasone for 9 cycles. Her symptoms began to resolve, and a repeat PET scan done after completion of chemotherapy showed no evidence of uptake, suggesting an adequate response, so chemotherapy was stopped. The patient was scheduled for regular follow-up in 3 months. Because of continued local symptoms in this follow-up period, the decision was made to perform surgical gland removal, and she underwent completion of

Discussion

Plasma cells are well-differentiated B-lymphocytes that secrete antibodies and provide protective immunity to the human body.9 Plasma cell neoplasms are clonal proliferations of plasma cells that produce monoclonal immunoglobulins. They comprise the following types: plasma cell myeloma, monoclonal gammopathy of undetermined significance, immunoglobulin deposition disease, POEMS (polyneuropathy, organomegaly, endocrinopathy, monoclonal protein, skin changes) syndrome, and plasmacytomas, which are divided into 2 types: solitary plasmacytoma of the bone and extramedullary plasmacytoma (EMP).10 EMP is a rare condition and accounts for 3% to 5% of all plasma cell neoplasms, depending on the study.1,2,5 It is more common in men than in women (2.6:1), with equal incidence among black and white patients. Median age at diagnosis is 62 years, and it is more common among those aged 40 to 70 years.2,11 The most common sites of occurrence are the respiratory tract, the mouth, and the pharynx, but other sites such as the eyes, brain, skin, and lymph nodes may also be involved.2

EMP involving the thyroid gland is a very rare occurrence, but plasma cell myeloma has been shown to secondarily involve the thyroid.4 Similar to our report, EMP of the thyroid in the setting of thyroiditis has been reported by other authors.3,4 The incidence of EMP occurring in the thyroid varies according to different authors. Wiltshaw found 7 cases involving the thyroid out of 272 cases of EMP.1 Galieni and colleagues reported only 1 case that involved the thyroid out of 46 described cases of EMP.12

Siemińska and colleagues showed that levels of interleukin (IL)-6 are elevated in thyroiditis.13 IL-6 promotes monoclonal as well as polyclonal proliferation of plasma cells. Kovalchuk and colleagues showed an increase in EMP in IL-6 transgenic mice, suggesting a pathophysiologic explanation.14

The diagnostic requirements of EMP include the following: histology showing monoclonal plasma cell infiltration in tissue; normal bone marrow aspirate and biopsy (plasma cells, <5%); no lytic lesions on skeletal survey; no anemia, renal impairment, or hypercalcemia; and absent or low serum M protein.12

Our case fulfilled those criteria.

The treatment options for EMP include surgery, radiotherapy, or a combination of the two. EMPs are usually very sensitive to radiotherapy, and complete remission can be achieved by radiotherapy alone in 80% to 100% of cases.6,11,15 Surgery is considered if the tumor is diffuse or is causing symptoms secondary to pressure on surrounding structures. A combined approach is recommended in cases with incomplete surgical margin or lymph node involvement.5,6

There is limited evidence about and experience with the use of chemotherapy in the treatment of EMP. It has been recommended that chemotherapy be considered in patients with refractory or relapsed disease using the same regimen used in plasma cell myeloma.5 Katodritou and colleagues have reported using bortezomib and dexamethasone without surgery in a solitary gastric plasmacytoma to avoid the toxicity of gastrointestinal irradiation.7 Wei and colleagues treated a patient with EMP in the pancreas with bortezomib and achieved a near-complete remission.8 To our knowledge, there is no documented literature about the treatment of EMP of the thyroid with chemotherapy. In our patient, despite the treatment with surgery and radiation, there was persistent uptake on the PET scan, so we treated her with bortezomib and dexamethasone. Because there is an 11% to 30% risk of progression to multiple myeloma, long-term follow-up is recommended in EMP.11

Conclusions

Solitary EMP of the thyroid gland is a rare condition. Plasma cell myeloma must be ruled out to make a diagnosis. Data on the incidence of EMP and its clinicopathological features are sparse, and literature describing proper guidelines on treatment is limited. It can be treated with radiotherapy, surgery, or a combined approach. There are limited data on the role of chemotherapy; our case adds to the available literature on using myeloma-based therapy in refractory disease and, to our knowledge, is the only reported case of EMP of the thyroid treated this way. Regular follow-up, even after the disease is in remission, is necessary because of the high risk of progression to plasma cell myeloma.

1. Wiltshaw E. The natural history of extramedullary plasmacytoma and its relation to solitary myeloma of bone and myelomatosis. Medicine (Baltimore). 1976;55(3):217-238.

2. Dores GM, Landgren O, McGlynn KA, Curtis RE, Linet MS, Devesa SS. Plasmacytoma of bone, extramedullary plasmacytoma, and multiple myeloma: incidence and survival in the United States, 1992-2004. Br J Haematol. 2009;144(1):86-94.

3. Kovacs CS, Mant MJ, Nguyen GK, Ginsberg J. Plasma cell lesions of the thyroid: report of a case of solitary plasmacytoma and a review of the literature. Thyroid. 1994;4(1):65-71.

4. Avila A, Villalpando A, Montoya G, Luna MA. Clinical features and differential diagnoses of solitary extramedullary plasmacytoma of the thyroid: a case report. Ann Diagn Pathol. 2009;13(2):119-123.

5. Hughes M, Soutar R, Lucraft H, Owen R, Bird J. Guidelines on the diagnosis and management of solitary plasmacytoma of bone, extramedullary plasmacytoma and multiple solitary plasmacytomas: 2009 update. London, United Kingdom: British Committee for Standards in Haematology; 2009.

6. Weber DM. Solitary bone and extramedullary plasmacytoma. Hematology Am Soc Hematol Educ Program. 2005;373-376.

7. Katodritou E, Kartsios C, Gastari V, et al. Successful treatment of extramedullary gastric plasmacytoma with the combination of bortezomib and dexamethasone: first reported case. Leuk Res. 2008;32(2):339-341.

8. Wei JY, Tong HY, Zhu WF, et al. Bortezomib in treatment of extramedullary plasmacytoma of the pancreas. Hepatobiliary Pancreat Dis Int. 2009;8(3):329-331.

9. Roth K, Oehme L, Zehentmeier S, Zhang Y, Niesner R, Hauser AE. Tracking plasma cell differentiation and survival. Cytometry A. 2014;85(1):15-24.

10. Swerdlow SH, Campo E, Harris NL, et al. WHO classification of tumours of haematopoietic and lymphoid tissues. 4th ed. Lyon, France: International Agency for Research on Cancer; 2008.

11. Alexiou C, Kau RJ, Dietzfelbinger H, et al. Extramedullary plasmacytoma: tumor occurrence and therapeutic concepts. Cancer. 1999;85(11):2305-2314.

12. Galieni P, Cavo M, Pulsoni A, et al. Clinical outcome of extramedullary plasmacytoma. Haematologica. 2000;85(1):47-51.

13. Siemińska L, Wojciechowska C, Kos-Kudła B, et al. Serum concentrations of leptin, adiponectin, and interleukin-6 in postmenopausal women with Hashimoto’s thyroiditis. Endokrynol Pol. 2010;61(1):112-116.

14. Kovalchuk AL, Kim JS, Park SS, et al. IL-6 transgenic mouse model for extraosseous plasmacytoma. Proc Natl Acad Sci US

15. Chao MW, Gibbs P, Wirth A, Quong G, Guiney MJ, Liew KH. Radiotherapy in the management of solitary extramedullary plasmacytoma. Intern Med J. 2005;35(4):211-215.

Plasma cell neoplasms involving tissues other than the bone marrow are known as extramedullary plasmacytoma (EMP).1 EMPs mostly involve the head and neck region.2 Solitary EMP involving only the thyroid gland is very rare.3,4 Because of the limited knowledge about this condition and its rarity, its management can be challenging and is often extrapolated from plasma cell myeloma.5,6 In general, surgery or radiation are considered as front-line therapy.3,5 EMPs usually respond well to radiotherapy with almost complete remission. No definite guidelines outlining the treatment of radio-resistant EMP of the thyroid have yet been published. Data supporting the use of chemotherapy is particularly limited.4,7,8

Here, we describe the case of a 53-year-old woman with a long history of thyroiditis who presented with rapidly worsening symptomatic thyroid enlargement. She was diagnosed with EMP of the thyroid gland that was not amenable to surgery and was refractory to radiotherapy but responded to adjuvant chemotherapy with bortezomib. This report highlights 2 unique aspects of this condition: it focuses on a rare case of EMP and, as far as we know, it reports for the first time on EMP that was resistant to radiotherapy. It also highlights the need for guidelines for the treatment of EMPs.

Case presentation and summary

A 53-year-old woman presented to the emergency department with complaints of difficulty swallowing, hoarseness, and neck pain during the previous 1 month. She had a known history of Hashimoto’s thyroiditis, and an ultrasound scan of her neck 6 years previously had demonstrated diffuse thyromegaly without discrete nodules. On presentation, the patient’s vitals were stable, and a neck examination revealed a firm and enlarged thyroid without any cervical adenopathy. Laboratory investigations revealed a normal complete blood count and comprehensive metabolic panel. She had an elevated thyroid-stimulating hormone level of 13.40 mIU/L (reference range, 0.47-4.68 mIU/L) and normal thyroxine level of 4.5 pmol/L (reference range, 4.5-12.0 pmol/L). A computerized tomography (CT) scan of the neck revealed an enlarged thyroid gland (right lobe length, 10.3 cm; isthmus, 2 cm; left lobe, 8 cm) with a focal area of increased echogenicity in the midpole of the left lobe measuring 9.5 mm × 5.5 mm. The patient was discharged to home with pain medications, and urgent follow-up with an otolaryngologist was arranged. A flexible laryngoscopy was done in the otolaryngology clinic, which revealed retropharyngeal bulging that correlated with the thyromegaly evident on the CT scan.

Because of the patient’s significant symptoms, we decided to proceed with surgery with a clinical diagnosis of likely thyroiditis. A left subtotal thyroidectomy with extension to the superior mediastinum was performed, but a right thyroidectomy could not be done safely. On gross examination, a well-capsulated left lobe with a tan-white, lobulated, soft cut surface was seen. Microscopic examination revealed replacement of thyroid parenchyma with sheets of mature-appearing plasma cells with eccentric round nuclei, abundant eosinophilic cytoplasm without atypia, and few scattered thyroid follicles with lymphoepithelial lesions (Figure 1A). Immunohistochemistry confirmed plasma cells with expression of CD138 (Figure 1B).

Fluorescence in situ hybridization (FISH) showed that the neoplastic plasma cells contained monotypic kappa immunoglobulin light chain messenger RNA. Clonal immunoglobulin gene rearrangement was detected on polymerase chain reaction. A diagnosis of plasmacytoma of the thyroid gland in a background of thyroiditis was made on the basis of the aforementioned observations.

After that diagnosis, we performed an extensive work-up for plasma cell myeloma. Bone marrow biopsy showed normal maturing trilineage hematopoiesis with scattered mature-appearing plasma cells Figure 2A. Flow cytometry showed a rare (0.2%) population of polytypic plasma cells and was confirmed by CD138 immunohistochemistry. FISH showed proportionate distribution (2-5:1) of kappa and lambda light chains in plasma cells (Figure 2B).

Serum protein electrophoresis showed normal levels of serum proteins with no M spike. Serum total protein was 7.9 g/dL, albumin 5.0 g/dL, α1-globulin 0.3 g/dL, α2-globulin 0.8 g/dL, β-globulin 0.7 g/dL, and γ-globulin 1.6 g/dL, with an albumin–globulin ratio of 1.47. Calcium and β2-microglobulin were also in the normal ranges. Serum-free kappa light chain was found to be elevated (20.9 mg/L; reference range, 3.3-19.4 mg/L). The immunoglobulin G level was also elevated at 3,104 mg/dL (reference range, 700-1,600 mg/dL).

A positron-emission tomographic (PET) scan done 1 month after the surgery showed no other sites of disease except the thyroid. No lytic bone lesions were present. The patient was treated with 50.4 Gy of radiation by external beam radiotherapy to the thyroid in 28 fractions as definitive therapy. Despite treatment with surgery and radiation, she continued to have pain around the neck, and a repeat PET scan 3 months after completion of radiation showed persistent uptake in the thyroid. Because of her refractoriness to radiotherapy, she was started on systemic therapy with a weekly regimen of bortezomib and dexamethasone for 9 cycles. Her symptoms began to resolve, and a repeat PET scan done after completion of chemotherapy showed no evidence of uptake, suggesting adequate response to chemotherapy, and chemotherapy was therefore stopped. The patient was scheduled a regular follow-up in 3 months. Because of continued local symptoms in this follow-up period, the decision was made to perform surgical gland removal, and she underwent completion of

Discussion

Plasma cells are well-differentiated B-lymphocytes that secrete antibodies and provide protective immunity to the human body.9 Plasma cell neoplasms are clonal proliferation of plasma cells, producing monoclonal immunoglobulins. They are of the following different types: plasma cell myeloma, monoclonal gammopathy of unknown significance, immunoglobulin deposition disease, POEMS (polyneuropathy, organomegaly, endocrinopathy, monoclonal protein, skin changes) syndrome, and plasmacytomas, which are divided into 2 types – solitary plasmacytoma of the bone, and extramedullary plasmacytoma (EMP).10 EMP is a rare condition and encompasses 3% to 5% of all plasma cell neoplasms, depending on the study.1,2,5 It is more common in men than in women (2.6:1, respectively), with equal incidence among black and white patients. Median age at diagnosis is 62 years, and it is more common among those aged 40 to 70 years.2,11 The most common sites of occurrence are the respiratory tract, the mouth, and the pharynx, but other sites such as the eyes, brain, skin, and lymph nodes may also be involved.2

EMP involving the thyroid gland is a very rare occurrence, but plasma cell myeloma has been shown to secondarily involve the thyroid.4 Similar to our report, EMP of the thyroid in the setting of thyroiditis has been reported by other authors.3,4 The incidence of EMP occurring in the thyroid varies according to different authors. Wiltshaw found 7 cases involving the thyroid out of 272 cases of EMP.1 Galieni and colleagues reported only 1 case that involved the thyroid out of 46 described cases of EMP.12

El- Siemińska and colleagues showed that levels of interleukin (IL)-6 are elevated in thyroiditis.13 IL-6 promotes monoclonal as well as polyclonal proliferation of plasma cells. Kovalchuk and colleagues showed an increase in EMP in IL-6 transgenic mice, suggesting a pathophysiologic explanation.14

The diagnostic requirements of EMP include the following: histology showing monoclonal plasma cell infiltration in tissue; bone marrow biopsy with normal plasma cell aspirate and biopsy (plasma cells, <5%); no lytic lesions on skeletal survey; no anemia, renal impairment, or hypercalcemia; and absent or low serum M protein.12

Our case fulfilled those criteria.

The treatment options for EMP include surgery, radiotherapy, or a combined approach including both. Usually, EMPs are very sensitive to radiotherapy, and complete remission can be achieved by radiotherapy alone in 80% to 100% of cases.6,11,15 Surgery is considered if the tumor is diffuse or is causing symptoms secondary to pressure on surrounding structures. A combined approach is recommended in cases with incomplete surgical margin or lymph node involvement.5,6

There is limited evidence about and experience with the use of chemotherapy in the treatment of EMP. It has been recommended that chemotherapy be considered in patients with refractory or relapsed disease using the same regimen used in plasma cell myeloma.5 Katodritou and colleagues have reported using bortezomib and dexamethasone without surgery in a solitary gastric plasmacytoma to avoid the toxicity of gastrointestinal irradiation.7 Wei and colleagues treated a patient with EMP in the pancreas with bortezomib and achieved a near-complete remission.8 To our knowledge, there is no documented literature about the treatment of EMP of the thyroid with chemotherapy. In our patient, despite the treatment with surgery and radiation, there was persistent uptake on the PET scan, so we treated her with bortezomib and dexamethasone. Because there is an 11% to 30% risk of progression to multiple myeloma, long-term follow-up is recommended in EMP.11

Conclusions

Solitary EMP of the thyroid gland is a rare condition. Plasma cell myeloma must be ruled out to make a diagnosis. Data on the incidence of EMP and its clinicopathological features are sparse, and literature describing proper guidelines on treatment is limited. It can be treated with radiotherapy, surgery, or a combined approach. There is limited data on the role of chemotherapy; our case adds to the available literature on using myeloma-based therapy in refractory disease and, to our knowledge, is the only case report using this in the literature on cases of EMP of the thyroid. Regular follow-up, even after the disease is in remission, is necessary because of the high risk of progression to plasma cell myeloma.

Plasma cell neoplasms involving tissues other than the bone marrow are known as extramedullary plasmacytoma (EMP).1 EMPs mostly involve the head and neck region.2 Solitary EMP involving only the thyroid gland is very rare.3,4 Because of the limited knowledge about this condition and its rarity, its management can be challenging and is often extrapolated from plasma cell myeloma.5,6 In general, surgery or radiation are considered as front-line therapy.3,5 EMPs usually respond well to radiotherapy with almost complete remission. No definite guidelines outlining the treatment of radio-resistant EMP of the thyroid have yet been published. Data supporting the use of chemotherapy is particularly limited.4,7,8

Here, we describe the case of a 53-year-old woman with a long history of thyroiditis who presented with rapidly worsening symptomatic thyroid enlargement. She was diagnosed with EMP of the thyroid gland that was not amenable to surgery and was refractory to radiotherapy but responded to adjuvant chemotherapy with bortezomib. This report highlights 2 unique aspects of this condition: it focuses on a rare case of EMP and, as far as we know, it reports for the first time on EMP that was resistant to radiotherapy. It also highlights the need for guidelines for the treatment of EMPs.

Case presentation and summary

A 53-year-old woman presented to the emergency department with complaints of difficulty swallowing, hoarseness, and neck pain during the previous 1 month. She had a known history of Hashimoto’s thyroiditis, and an ultrasound scan of her neck 6 years previously had demonstrated diffuse thyromegaly without discrete nodules. On presentation, the patient’s vitals were stable, and a neck examination revealed a firm and enlarged thyroid without any cervical adenopathy. Laboratory investigations revealed a normal complete blood count and comprehensive metabolic panel. She had an elevated thyroid-stimulating hormone level of 13.40 mIU/L (reference range, 0.47-4.68 mIU/L) and normal thyroxine level of 4.5 pmol/L (reference range, 4.5-12.0 pmol/L). A computerized tomography (CT) scan of the neck revealed an enlarged thyroid gland (right lobe length, 10.3 cm; isthmus, 2 cm; left lobe, 8 cm) with a focal area of increased echogenicity in the midpole of the left lobe measuring 9.5 mm × 5.5 mm. The patient was discharged to home with pain medications, and urgent follow-up with an otolaryngologist was arranged. A flexible laryngoscopy was done in the otolaryngology clinic, which revealed retropharyngeal bulging that correlated with the thyromegaly evident on the CT scan.

Because of the patient’s significant symptoms, we decided to proceed with surgery with a clinical diagnosis of likely thyroiditis. A left subtotal thyroidectomy with extension to the superior mediastinum was performed, but a right thyroidectomy could not be done safely. On gross examination, a well-capsulated left lobe with a tan-white, lobulated, soft cut surface was seen. Microscopic examination revealed replacement of thyroid parenchyma with sheets of mature-appearing plasma cells with eccentric round nuclei, abundant eosinophilic cytoplasm without atypia, and few scattered thyroid follicles with lymphoepithelial lesions (Figure 1A). Immunohistochemistry confirmed plasma cells with expression of CD138 (Figure 1B).

Fluorescence in situ hybridization (FISH) showed that the neoplastic plasma cells contained monotypic kappa immunoglobulin light chain messenger RNA. Clonal immunoglobulin gene rearrangement was detected on polymerase chain reaction. A diagnosis of plasmacytoma of the thyroid gland in a background of thyroiditis was made on the basis of the aforementioned observations.

After that diagnosis, we performed an extensive work-up for plasma cell myeloma. Bone marrow biopsy showed normal maturing trilineage hematopoiesis with scattered mature-appearing plasma cells Figure 2A. Flow cytometry showed a rare (0.2%) population of polytypic plasma cells and was confirmed by CD138 immunohistochemistry. FISH showed proportionate distribution (2-5:1) of kappa and lambda light chains in plasma cells (Figure 2B).

Serum protein electrophoresis showed normal levels of serum proteins with no M spike. Serum total protein was 7.9 g/dL, albumin 5.0 g/dL, α1-globulin 0.3 g/dL, α2-globulin 0.8 g/dL, β-globulin 0.7 g/dL, and γ-globulin 1.6 g/dL, with an albumin–globulin ratio of 1.47. Calcium and β2-microglobulin were also in the normal ranges. Serum-free kappa light chain was found to be elevated (20.9 mg/L; reference range, 3.3-19.4 mg/L). The immunoglobulin G level was also elevated at 3,104 mg/dL (reference range, 700-1,600 mg/dL).

A positron-emission tomographic (PET) scan done 1 month after the surgery showed no other sites of disease except the thyroid. No lytic bone lesions were present. The patient was treated with 50.4 Gy of radiation by external beam radiotherapy to the thyroid in 28 fractions as definitive therapy. Despite treatment with surgery and radiation, she continued to have pain around the neck, and a repeat PET scan 3 months after completion of radiation showed persistent uptake in the thyroid. Because of her refractoriness to radiotherapy, she was started on systemic therapy with a weekly regimen of bortezomib and dexamethasone for 9 cycles. Her symptoms began to resolve, and a repeat PET scan done after completion of chemotherapy showed no evidence of uptake, suggesting adequate response to chemotherapy, and chemotherapy was therefore stopped. The patient was scheduled a regular follow-up in 3 months. Because of continued local symptoms in this follow-up period, the decision was made to perform surgical gland removal, and she underwent completion of

Discussion

Plasma cells are well-differentiated B-lymphocytes that secrete antibodies and provide protective immunity to the human body.9 Plasma cell neoplasms are clonal proliferation of plasma cells, producing monoclonal immunoglobulins. They are of the following different types: plasma cell myeloma, monoclonal gammopathy of unknown significance, immunoglobulin deposition disease, POEMS (polyneuropathy, organomegaly, endocrinopathy, monoclonal protein, skin changes) syndrome, and plasmacytomas, which are divided into 2 types – solitary plasmacytoma of the bone, and extramedullary plasmacytoma (EMP).10 EMP is a rare condition and encompasses 3% to 5% of all plasma cell neoplasms, depending on the study.1,2,5 It is more common in men than in women (2.6:1, respectively), with equal incidence among black and white patients. Median age at diagnosis is 62 years, and it is more common among those aged 40 to 70 years.2,11 The most common sites of occurrence are the respiratory tract, the mouth, and the pharynx, but other sites such as the eyes, brain, skin, and lymph nodes may also be involved.2

EMP involving the thyroid gland is a very rare occurrence, but plasma cell myeloma has been shown to secondarily involve the thyroid.4 Similar to our report, EMP of the thyroid in the setting of thyroiditis has been reported by other authors.3,4 The incidence of EMP occurring in the thyroid varies according to different authors. Wiltshaw found 7 cases involving the thyroid out of 272 cases of EMP.1 Galieni and colleagues reported only 1 case that involved the thyroid out of 46 described cases of EMP.12

El- Siemińska and colleagues showed that levels of interleukin (IL)-6 are elevated in thyroiditis.13 IL-6 promotes monoclonal as well as polyclonal proliferation of plasma cells. Kovalchuk and colleagues showed an increase in EMP in IL-6 transgenic mice, suggesting a pathophysiologic explanation.14

The diagnostic requirements of EMP include the following: histology showing monoclonal plasma cell infiltration in tissue; bone marrow biopsy with normal plasma cell aspirate and biopsy (plasma cells, <5%); no lytic lesions on skeletal survey; no anemia, renal impairment, or hypercalcemia; and absent or low serum M protein.12

Our case fulfilled those criteria.

The treatment options for EMP include surgery, radiotherapy, or a combined approach including both. Usually, EMPs are very sensitive to radiotherapy, and complete remission can be achieved by radiotherapy alone in 80% to 100% of cases.6,11,15 Surgery is considered if the tumor is diffuse or is causing symptoms secondary to pressure on surrounding structures. A combined approach is recommended in cases with incomplete surgical margin or lymph node involvement.5,6

There is limited evidence about and experience with the use of chemotherapy in the treatment of EMP. It has been recommended that chemotherapy be considered in patients with refractory or relapsed disease using the same regimen used in plasma cell myeloma.5 Katodritou and colleagues have reported using bortezomib and dexamethasone without surgery in a solitary gastric plasmacytoma to avoid the toxicity of gastrointestinal irradiation.7 Wei and colleagues treated a patient with EMP in the pancreas with bortezomib and achieved a near-complete remission.8 To our knowledge, there is no documented literature about the treatment of EMP of the thyroid with chemotherapy. In our patient, despite the treatment with surgery and radiation, there was persistent uptake on the PET scan, so we treated her with bortezomib and dexamethasone. Because there is an 11% to 30% risk of progression to multiple myeloma, long-term follow-up is recommended in EMP.11

Conclusions

Solitary EMP of the thyroid gland is a rare condition. Plasma cell myeloma must be ruled out to make the diagnosis. Data on the incidence of EMP and its clinicopathological features are sparse, and literature describing proper guidelines on treatment is limited. Solitary EMP can be treated with radiotherapy, surgery, or a combined approach. Data on the role of chemotherapy are limited; our case adds to the available literature on using myeloma-based therapy in refractory disease and, to our knowledge, is the only reported case of EMP of the thyroid treated in this way. Regular follow-up, even after the disease is in remission, is necessary because of the high risk of progression to plasma cell myeloma.

1. Wiltshaw E. The natural history of extramedullary plasmacytoma and its relation to solitary myeloma of bone and myelomatosis. Medicine (Baltimore). 1976;55(3):217-238.

2. Dores GM, Landgren O, McGlynn KA, Curtis RE, Linet MS, Devesa SS. Plasmacytoma of bone, extramedullary plasmacytoma, and multiple myeloma: incidence and survival in the United States, 1992-2004. Br J Haematol. 2009;144(1):86-94.

3. Kovacs CS, Mant MJ, Nguyen GK, Ginsberg J. Plasma cell lesions of the thyroid: report of a case of solitary plasmacytoma and a review of the literature. Thyroid. 1994;4(1):65-71.

4. Avila A, Villalpando A, Montoya G, Luna MA. Clinical features and differential diagnoses of solitary extramedullary plasmacytoma of the thyroid: a case report. Ann Diagn Pathol. 2009;13(2):119-123.

5. Hughes M, Soutar R, Lucraft H, Owen R, Bird J. Guidelines on the diagnosis and management of solitary plasmacytoma of bone, extramedullary plasmacytoma and multiple solitary plasmacytomas: 2009 update. London, United Kingdom: British Committee for Standards in Haematology; 2009.

6. Weber DM. Solitary bone and extramedullary plasmacytoma. Hematology Am Soc Hematol Educ Program. 2005;373-376.

7. Katodritou E, Kartsios C, Gastari V, et al. Successful treatment of extramedullary gastric plasmacytoma with the combination of bortezomib and dexamethasone: first reported case. Leuk Res. 2008;32(2):339-341.

8. Wei JY, Tong HY, Zhu WF, et al. Bortezomib in treatment of extramedullary plasmacytoma of the pancreas. Hepatobiliary Pancreat Dis Int. 2009;8(3):329-331.

9. Roth K, Oehme L, Zehentmeier S, Zhang Y, Niesner R, Hauser AE. Tracking plasma cell differentiation and survival. Cytometry A. 2014;85(1):15-24.

10. Swerdlow SH, Campo E, Harris NL, et al. WHO classification of tumours of haematopoietic and lymphoid tissues. 4th ed. Lyon, France: International Agency for Research on Cancer; 2008.

11. Alexiou C, Kau RJ, Dietzfelbinger H, et al. Extramedullary plasmacytoma: tumor occurrence and therapeutic concepts. Cancer. 1999;85(11):2305-2314.

12. Galieni P, Cavo M, Pulsoni A, et al. Clinical outcome of extramedullary plasmacytoma. Haematologica. 2000;85(1):47-51.

13. Siemińska L, Wojciechowska C, Kos-Kudła B, et al. Serum concentrations of leptin, adiponectin, and interleukin-6 in postmenopausal women with Hashimoto’s thyroiditis. Endokrynol Pol. 2010;61(1):112-116.

14. Kovalchuk AL, Kim JS, Park SS, et al. IL-6 transgenic mouse model for extraosseous plasmacytoma. Proc Natl Acad Sci US

15. Chao MW, Gibbs P, Wirth A, Quong G, Guiney MJ, Liew KH. Radiotherapy in the management of solitary extramedullary plasmacytoma. Intern Med J. 2005;35(4):211-215.

Salivary ductal adenocarcinoma with complete response to androgen blockade

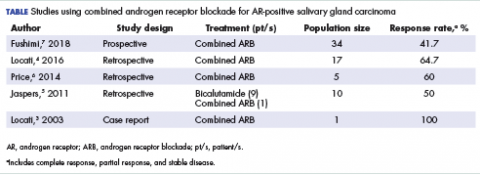

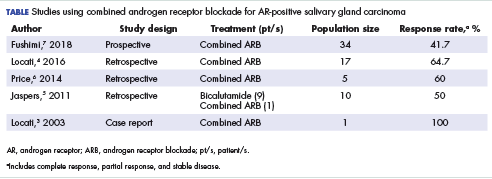

Salivary ductal adenocarcinoma makes up about 9% of malignant salivary gland tumors and occurs mostly in men older than 50 years, with a peak incidence in the sixth and seventh decades. It is the most aggressive of the salivary gland tumors and is histologically similar to high-grade, invasive ductal carcinoma of the breast. In all, 65% of patients will die of the disease, and most will experience skin ulceration and nerve palsy.1 With such an aggressive clinical picture, the temptation for many oncologists and patients is to use aggressive cytotoxic chemotherapies. Considering the lack of large trials exploring treatment options in this less-common subtype of salivary gland carcinoma, practice guidelines also recommend the use of aggressive chemotherapies. Unlike other types of malignant cancers of the salivary glands, 70% to 90% of ductal adenocarcinomas express the androgen receptor (AR) by immunohistochemistry.2 There are reported cases of androgen deprivation therapy (ADT) as a successful treatment for salivary ductal adenocarcinomas that express the AR (Table). In 2003, Locati and colleagues reported the case of a man with salivary ductal adenocarcinoma who had a complete response with ADT.3 In 2016, the same group of authors published a retrospective analysis of 17 patients with recurrent or metastatic AR-positive salivary gland cancers who were treated with ADT and reported a 64.7% overall response rate among the patients.4 A 10-patient case series in the Netherlands demonstrated a 50% response rate to ADT plus bicalutamide, including a palliative effect in the form of pain relief.5 A retrospective analysis by Price and colleagues of 5 patients with AR-positive metastatic salivary duct adenocarcinoma showed a 60% response rate to a combination of leuprolide and bicalutamide.6

Case presentation and summary

A 91-year-old man was diagnosed with salivary ductal adenocarcinoma of the left parotid gland in September 2013 and underwent left parotidectomy and lymph node dissection, which revealed AJCC stage IVA (pT2 pN3 M0) disease. The following year, in December 2014, he had an enlarging left neck mass that was pathologically confirmed to be recurrent disease, and he underwent left level V neck dissection in February 2015. Five months after surgery, in July 2015, he presented with left neck fullness and new skin nodules, and the results of a biopsy confirmed recurrent disease. Given his relatively asymptomatic state and advanced age, the oncology care team decided to follow the patient without any pharmacologic therapy.

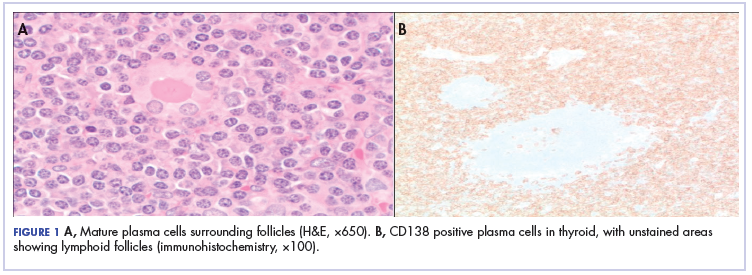

The patient felt relatively well for 11 months but slowly developed increasing pain in the left neck in June 2016. The skin nodules also began to spread inferiorly from his left neck to his upper chest with the development of open sores that wept serous fluid with scab formation (Figure 1). He and his wife lived independently and managed all their own instrumental activities of daily living (IADL). Eventually, the pain in his neck became so severe that it began to interfere with his ability to drive. He declined radiation therapy because of side effects and transportation issues, but he desired something to alleviate the burden of the disease. During a multidisciplinary cancer conference, the staff pathologist and oncologist discussed AR immunohistochemistry to assist with management. In June 2016, the patient’s tumor was found to have AR immunostaining (nuclear pattern) in 100% of cells, and he was treated with combined androgen blockade, consisting of monthly 3.6 mg goserelin injections and daily bicalutamide 50 mg orally.

Within a week, the patient noticed that the skin lesions stopped weeping fluid. Within 2 weeks, the pain had begun to resolve. At his formal follow-up visit 11 weeks after starting treatment, he was not taking any pain medications and reported no pain. In addition, his visually apparent disease had almost completely resolved (Figure 2). He was fully able to manage his own IADL and reported a marked increase in satisfaction with the quality of his life.

Discussion

The oncology care team clearly defined the goal of care for this patient as palliative and conveyed this to the patient. The team considered the risks and side effects of cytotoxic chemotherapy agents to be contrary to the patient’s stated primary goal of independence. We selected combined androgen blockade because it has a low toxicity rate and thus met the primary goals of therapy.

The European Organization for Research and Treatment of Cancer is presently conducting a trial in which cytotoxic chemotherapy is being compared with ADT in AR-positive salivary duct tumors. Findings from a recent prospective, phase-2 trial conducted in Japan suggested that combined AR blockade has similar efficacy and less toxicity than conventional cytotoxic chemotherapy for recurrent and/or metastatic and unresectable locally advanced AR-positive salivary gland carcinoma.7 As more data become available from other studies, it is possible that practice guidelines will be revised to recommend this treatment approach for these cancers.

1. Eveson JW, Thompson LDR. Malignant neoplasms of the salivary glands. In: Thompson LDR, ed. Head and neck pathology. 2nd ed. Philadelphia, PA: Elsevier Inc; 2013:304-305.

2. Luk PP, Weston JD, Yu B, et al. Salivary duct carcinoma: clinicopathologic features, morphologic spectrum, and somatic mutations. Head Neck. 2016;38(suppl 1):E1838-E1847.

3. Locati LD, Quattrone P, Bossi P, Marchianò AV, Cantù G, Licitra L. A complete remission with androgen-deprivation therapy in a recurrent androgen receptor-expressing adenocarcinoma of the parotid gland. Ann Oncol. 2003;14(8):1327-1328.

4. Locati LD, Perrone F, Cortelazzi B, et al. Clinical activity of androgen deprivation therapy in patients with metastatic/relapsed androgen receptor-positive salivary gland cancers. Head Neck. 2016;38(5):724-731.

5. Jaspers HC, Verbist BM, Schoffelen R, et al. Androgen receptor-positive salivary duct carcinoma: a disease entity with promising new treatment options. J Clin Oncol. 2011;29(16):e473-e476.

6. Price KAR, Okuno SH, Molina JR, Garcia JJ. Treatment of metastatic salivary duct carcinoma with combined androgen blockade (CAB) with leuprolide acetate and bicalutamide. Int J Radiat Oncol Biol Phys. 2014;88(2):521-522.

7. Fushimi C, Tada Y, Takahashi H, et al. A prospective phase II study of combined androgen blockade in patients with androgen receptor-positive metastatic or locally advanced unresectable salivary gland carcinoma. Ann Oncol. 2018;29(4):979-984.

Characteristics of urgent palliative cancer care consultations encountered by radiation oncologists

Palliative radiation therapy (PRT) plays a major role in the management of incurable cancers. Study findings have demonstrated the efficacy of using PRT in treating tumor-related bone pain,1 brain metastases and related symptoms,2 thoracic disease-causing hemoptysis or obstruction,3 gastrointestinal involvement causing bleeding and/or obstruction,4 and genitourinary and/or gynecologic involvement causing bleeding.5,6

PRT accounts for between 30% and 50% of courses of radiotherapy delivered.3 These courses of RT typically require urgent evaluation since patients are seen because of new and/or progressive symptoms that give cause for concern. The urgency of presentation requires radiation oncologists and the departments receiving these patients to be equipped to manage these cases efficiently and effectively. Furthermore, the types of cases seen, including PRT indications and related symptoms requiring management, inform the training of radiation oncology physicians as well as nursing and other clinical staff. Finally, characterizing the types of urgent PRT cases that are seen can also guide research and quality improvement endeavors for advanced cancer care in radiation oncology settings.

There is currently a paucity of data characterizing the types and frequencies of urgent PRT indications in patients who present to radiation oncology departments, as well as a lack of data detailing the related symptoms radiation oncology clinicians are managing. The aim of this study was to characterize the types and frequencies of urgent PRT consultations and the related symptoms that radiation oncologists are managing as part of patient care.

Methods

Based on national palliative care practice and national oncology care practice guidelines,7,8 we designed a survey to categorize the cancer-related palliative care issues seen by radiation oncologists. Physical symptoms, psychosocial issues, cultural consideration, spiritual needs, care coordination, advanced-care planning, goals of care, and ethical and legal issues comprised the 8 palliative care domains that we evaluated. A survey was developed and critically evaluated by 3 investigators (MK, VL, TB). Each palliative care domain was ranked by clinicians by its relevance (5-point Likert scale [range, 1-5]: 1, Not Relevant, to 5, Extremely Relevant) to the patient’s care point in radiation oncology. In addition, 31 palliative care subissues related to the primary domains were identified by clinicians based on their presence (Yes, No, Not Assessed). Clinicians were also asked whether the consulted patient’s metastatic cancer diagnosis was established (longer than 1 month) or new (within the last 1 month). In addition, clinicians noted whether the patient was returning to active oncologic care (eg, chemotherapy) or to no further anticancer therapies (eg, hospice care) after RT consultation and intervention (if deemed necessary).

The survey’s face and content validity, ease of completion, and time of completion were assessed by a panel of 7 clinicians with expertise in medical oncology, radiation oncology, palliative care, and/or survey construction. The survey was sent sequentially to 1 member of the panel at a time, after the previous panel member’s comments had been incorporated. After each panel member’s review, the survey was edited until 2 consecutive panel members had no further suggestions for improvement.

After receiving approval from the institutional review boards of participating radiation oncology centers, we electronically surveyed radiation oncology clinicians who were conducting PRT consultations. From May 19 to September 26, 2014, all consultations for consideration of PRT performed by a dedicated PRT service at 3 centers (a large academic cancer center and 2 participating clinicians at affiliated regional hospitals) were evaluated prospectively. Consultations for patients aged 18 years or older with incurable, metastatic cancers were considered eligible. Immediately after each PRT consult, the consulting clinician was e-mailed a survey for completion within 5 business days. Three reminders to complete the survey were sent during the 5–business-day interval. Over the entire study period, 162 consecutive patients were identified, resulting in 162 surveys being sent to 15 radiation oncology clinicians, including nurse practitioners, resident physicians, and attending physicians. Each clinician received a $25 gift card for participating, regardless of the number of surveys completed. In total, 140 of the 162 surveys were returned, resulting in a response rate of 86%.

The investigators then collected patient demographics (age, gender, race, marital status) and disease characteristics (primary cancer type, Eastern Cooperative Oncology Group Performance Status, reasons for urgent RT consult, physical symptoms requiring management at presentation, patient’s place in illness trajectory, and RT recommendation) pertaining to each completed survey from the electronic medical record. Urgent consultations were defined as any patients who needed to be seen on the same day or within a few days of the consult request.

The descriptive statistics of all these data were calculated in terms of frequencies and percentage of categorical variables. Chi-squared statistics, Fisher exact test, and nonparametric rank sum tests were applied to various categories to determine any statistically significant differences between groups.
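As an illustration of one of the tests named above, the following is a minimal sketch of a two-sided Fisher exact test for a 2 × 2 table, written with the Python standard library only. The function name and the example counts are hypothetical and are not taken from the study data; the two-sided convention used here (summing all tables no more probable than the observed one) is one common choice, and the study's own software and settings are not stated.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Small relative tolerance so ties survive floating-point rounding.
    return sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# Hypothetical counts, for illustration only (not study data):
# rows = two patient groups, columns = symptom present / absent.
p_value = fisher_exact_two_sided(3, 1, 1, 3)
print(f"two-sided p = {p_value:.4f}")
```

The exact test is usually preferred over the chi-squared test when expected cell counts are small, which is plausible for some of the less common consult categories.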

Results

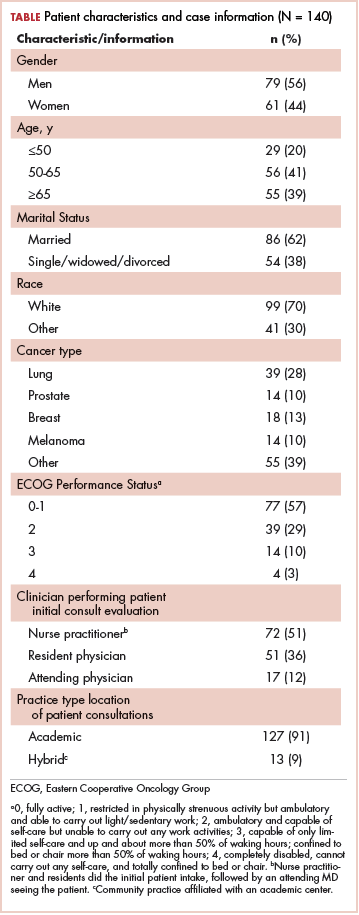

In total, 162 patients were seen in consultation for PRT during the 19-week enrollment period, or an average of 8.7 consults a week. Of that total, surveys for 140 patients were returned (Table).
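The weekly consult rate and the survey response rate follow directly from the dates and counts reported in the Methods. The short sketch below reproduces that arithmetic; the variable names are illustrative, and the enrollment period is taken as the span between the two reported dates.

```python
from datetime import date

# Figures reported in the Methods and Results sections.
start, end = date(2014, 5, 19), date(2014, 9, 26)
consults_seen = 162
surveys_returned = 140

weeks = (end - start).days / 7            # length of the enrollment period
consults_per_week = consults_seen / weeks
response_rate = 100 * surveys_returned / consults_seen

print(f"{weeks:.1f} weeks, {consults_per_week:.1f} consults/week, "
      f"{response_rate:.0f}% response")
# prints "18.6 weeks, 8.7 consults/week, 86% response"
```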

The median patient age was 63 years (range, 29-89 years). A sizeable minority (20%) was 50 years or younger. The most common cancer diagnosis was lung cancer (28%), followed by breast (13%) and prostate (10%) cancers, melanoma (10%), and sarcoma (7%). Other diagnoses accounted for the remaining 32%.

Timing of PRT consult in cancer trajectory

The points in the advanced cancer illness trajectory at which patients were seen for PRT evaluation are shown in Figure 1. Most patients (63%) were seen for a PRT evaluation at the time of an established diagnosis (>1 month after diagnosis of metastatic cancer) and went on to further cancer therapies. An additional 19% of patients had an established diagnosis and proceeded to hospice or end-of-life care after the PRT evaluation. A notable minority of patients (18%) were seen for a PRT evaluation at the time of a new diagnosis (<1 month after diagnosis of metastatic cancer): 17% of all patients went on to receive anticancer therapy after the PRT evaluation, and 1% proceeded to hospice or end-of-life care.

Characteristics of PRT consults and symptoms at presentation

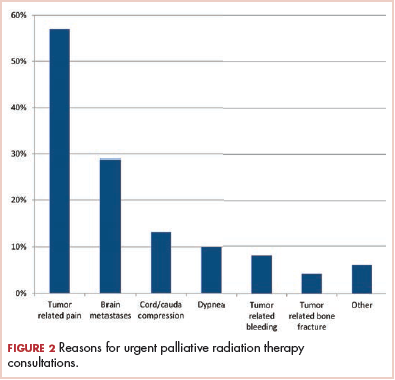

The primary reasons for urgent consultation for PRT are shown in Figure 2. Cancer-related pain (57%), brain metastases (29%), and malignant spinal cord or cauda equina compression (13%) were the predominant reasons for consults. Notable minorities were seen for tumor-related dyspnea (10%), bleeding (8%), and bone fractures (4%).

PRT recommendations and targets

Recommendations regarding PRT are shown in Figure 4A. Of the total 140 patients, 18 (13%) were not recommended for RT. Of the 122 patients for whom PRT was recommended, 11 (9%) received RT at more than 1 site.
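The percentages in this paragraph use two different denominators: all 140 consults for the share not recommended for RT, and the 122 recommended patients for the share treated at more than 1 site. A minimal Python sketch of that calculation, using only the counts reported above (the names are illustrative), is:

```python
# Counts reported in the text
TOTAL_CONSULTS = 140
NOT_RECOMMENDED = 18
MULTI_SITE = 11  # patients who received RT at more than 1 site


def pct(part: int, whole: int) -> int:
    """Percentage of `whole` represented by `part`, rounded to a whole number."""
    return round(100 * part / whole)


recommended = TOTAL_CONSULTS - NOT_RECOMMENDED

print(f"Recommended for PRT: {recommended} ({pct(recommended, TOTAL_CONSULTS)}%)")
print(f"Not recommended: {NOT_RECOMMENDED} ({pct(NOT_RECOMMENDED, TOTAL_CONSULTS)}%)")
print(f"More than 1 site: {MULTI_SITE} ({pct(MULTI_SITE, recommended)}% of recommended)")
```

Keeping the denominator explicit in each call makes it clear that the 9% figure refers to recommended patients only, not to the full cohort.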

Discussion and conclusions

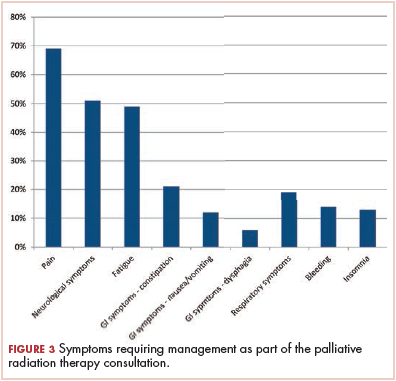

The present study provides a descriptive overview of urgent metastatic cancer patient presentations to radiation oncology clinicians through a comprehensive evaluation of 140 consults for PRT. The most common reason for urgent evaluation was cancer-related pain (57%), but brain metastases (29%), spinal cord compression (13%), and respiratory symptoms (10%) were also common. Other less-common indications included cancer-related dysphagia, bleeding, and poststabilization management of bone fractures. The most common symptoms requiring management by radiation oncology clinicians were pain (69%), neurologic symptoms (51%), and fatigue (49%). The study also provides a comprehensive characterization of the timeframe of PRT consultation and the treatment recommendations in this cohort. Though most PRT consults occurred at the time of an established metastatic cancer diagnosis and before further anticancer therapies, sizeable minorities occurred at the time of a new diagnosis of metastatic cancer (18%) and before comfort-focused, end-of-life care and no further anticancer therapies (20%). Most patients (87%) were recommended PRT, and of those recommended RT, 11 (9%) received RT to more than 1 site. The most common PRT sites were bone (46%), followed by brain (29%), nonlung soft-tissue sites (17%), and lung (8%). This comprehensive description of the day-to-day urgent, advanced cancer care issues seen and managed in radiation oncology practice can help guide PRT clinical structures, education, research, and quality improvement measures in clinical practice.

Our study provides insight into the urgent symptoms encountered by radiation oncology practitioners during their routine practice. Cancer-related pain remains the most common symptom requiring management. Given the frequency with which pain management is needed among PRT patients, this study highlights the need for radiation oncologists to be well trained in symptom management, particularly as the pain response to RT can often take several days. However, studies suggest that cancer-related pain is not frequently managed by radiation oncologists.9 For example, findings from an Italian study showed that the involvement of radiation oncologists in cancer pain management remains minimal compared with other medical professionals; during the treatment course, only half of the radiation oncologists implemented specific treatment for breakthrough pain.10 A nationwide survey in the United States implicated a number of barriers to adequate pain management, including poor assessment by the physician, reluctance in prescribing opioid analgesics, perceived excessive regulation, and patient reluctance to report pain.11 Notably, in a survey of the Radiation Therapy Oncology Group study physicians, 83% believed cancer patients with pain were undermedicated, and 40% reported that pain relief in their own practice setting was suboptimal. Furthermore, in the treatment plan, adjuvants and prophylactic side effect management were frequently not used properly.12 Education of radiation oncologists in pain assessment and management is key to overcoming these barriers and to ensuring adequate pain management and quality of life for patients in radiation oncology.

The next most common reason for which patients presented for palliative radiation oncology consultation was central nervous system (CNS) metastatic disease, including brain metastases and spinal cord compression. Correspondingly, the next most common issue requiring management was neurologic symptoms. Management of CNS disease is becoming increasingly complex, and it benefits from multidisciplinary evaluation to guide optimal and personalized care for each patient, including medical oncology, radiation oncology, neurosurgery and/or orthopedic spine surgery, and palliative medicine. Treatment options include supportive care or corticosteroids alone, surgical resection, whole-brain RT, and/or radiosurgery or stereotactic RT alone. These treatment options are considered on the basis of global patient factors, such as prognosis, together with metastatic-site–specific factors, such as site-related symptoms and the number of metastases or the burden of disease.13 For example, the use of the diagnosis-specific Graded Prognostic Assessment index to predict life expectancy can help tailor management of brain metastases based on performance status, age, number of brain metastases, extracranial metastases, and cancer type. Highlighting the complexity of this common PRT presentation, Tsao and colleagues showed that there was a lack of uniform agreement among radiation oncologists for common management issues in patients with brain metastatic disease.14

For metastatic spinal cord or cauda equina compression and the associated neurologic symptoms, initiation of immediate corticosteroids and implementation of local therapy within 24 hours of presentation is paramount,15 highlighting the need for rapid, comprehensive care decision-making for these patients. Treatment options that must be weighed include the potential benefit of upfront decompressive surgery, as supported by a randomized controlled trial by Patchell and colleagues16 for patients who are surgical candidates with true cord or cauda compression and have at least 1 neurologic symptom, a prognosis of ≥3 months, paraplegia of no longer than 48 hours, and no previous RT to the site or brain metastases. Compared with patients receiving RT alone, those receiving surgery before RT had improved ambulatory status and overall survival. Hence, neurosurgical or orthopedic consultation should be standard in the evaluation of patients with metastatic spinal cord or cauda equina compression. However, patients frequently do not meet these criteria, and corticosteroids and RT alone are considered. In addition to playing a role in surgical decision-making, prognosis also has a key role in decision-making about the RT fractionation. Short-course RT (8 Gy × 1) is as effective as longer-course regimens (3 Gy × 10) in terms of motor function.17,18 However, more dose-intense or longer-course regimens, such as 3 Gy × 10, have been shown to have more durability beyond about 6 months and are therefore considered for patients with an intermediate to good prognosis.18 The common urgent presentation of CNS metastatic disease to radiation oncology clinics, together with the complexity of management and urgency of care decision-making, points to the need for dedicated structures of care for these patients in radiation oncology settings. For example, dedicated PRT programs, such as the Rapid Response Radiotherapy Program in Toronto and the Supportive and Palliative Radiation Oncology service in Boston, have demonstrated improved quality of care for patients being urgently evaluated for PRT.19

Following management of pain and neurologic symptoms, clinicians were faced with managing fatigue in nearly half of the patients (49%). The prevalence of fatigue among cancer patients and its impact on quality of life20 highlight the need for this key symptom to be addressed throughout the continuum of cancer care. National Comprehensive Cancer Network guidelines provide a comprehensive framework for addressing cancer-related fatigue.7 However, cancer-related fatigue is a largely underreported, underestimated, and thus undertreated problem.20 In a nationwide survey of members of the American Society for Radiation Oncology, radiation oncologists reported being significantly less confident in managing fatigue compared with managing other common symptoms.21 Furthermore, in a national survey of radiation oncology trainees, 67% of respondents indicated that they were not at all, minimally, or somewhat confident in their ability to manage fatigue symptoms. The frequency of this symptom, together with the demonstrated need for improved education in fatigue management, points to a need for radiation oncology palliative educational structures to include dedicated emphasis on managing fatigue in addition to other commonly encountered symptoms, such as pain.

Patients evaluated for PRT are seen across the trajectory of their metastatic cancer diagnosis. In our study, patients presented at all stages in their advanced cancers. These include patients seen at the time of initial diagnosis of cancer as well as those seen near the end of life when end-of-life care planning was underway. The broad spectrum of timing of PRT care underscores that radiation oncologists must be prepared to handle generalist palliative care issues encountered throughout the trajectory of advanced cancer care and hence need comprehensive education in generalist palliative care competencies. These include symptom management, end-of-life care coordination, and communication or goals-of-care discussions. Notably, a recent national survey of radiation oncology residents indicated that most residents, 77% on average, perceived their educational training as suboptimal across domains of generalist palliative care competencies needed in oncology practice.22 Furthermore, a majority (81%) desired greater palliative care education within training.

The most common sites treated in this study were bone, brain, and lung sites. These data provide guidance to both education and research initiatives aiming to advance PRT curriculum and care structures within departments. For example, a same-day simulation and radiation treatment program developed at Princess Margaret Hospital Palliative Radiation Oncology Program (Ontario, Canada) aids in providing streamlined care for patients with bone metastases, the most common presentation for PRT.23 Furthermore, education and research in the application of PRT techniques to bone, brain, and thoracic disease cover the majority of PRT presentations. It is notable, however, that 17% of treated sites were other soft-tissue sites.

Limitations

There are a few limitations to this study. First, this is a survey-based study conducted at a single academic center within an urban setting and surrounding community regions, which affects its generalizability. Second, this study presents the perspectives of radiation oncology practitioners evaluating patients and does not directly reflect patients’ perceptions or reports of symptoms. Third, the data provided by this study are solely descriptive in nature. However, these data can guide hypothesis-driven research regarding the evaluation and management of urgent palliative care issues encountered by radiation oncology clinicians and can suggest educational objectives to address the needs of these patients.

Conclusions

Radiation oncologists are involved throughout the trajectory of care for advanced cancer patients. Furthermore, they manage a variety of urgent oncologic issues, most commonly metastases causing pain, brain metastases, and spinal cord or cauda equina compression. Radiation oncologists also manage many cancer-related symptoms, mostly pain, neurologic symptoms, fatigue, and gastrointestinal symptoms. These findings point toward the need for palliative care to be well integrated into radiation oncology training curricula and the need for dedicated care structures that enable rapid and multidisciplinary palliative oncology care within radiation oncology departments.

1. Chow E, Harris K, Fan G, Tsao M, Sze WM. Palliative radiotherapy trials for bone metastases: a systematic review. J Clin Oncol. 2007;25(11):1423-1436.

2. van Oorschot B, Rades D, Schulze W, Beckmann G, Feyer P. Palliative radiotherapy--new approaches. Semin Oncol. 2011;38(3):443-449.

3. Simone CB II, Jones JA. Palliative care for patients with locally advanced and metastatic non-small cell lung cancer. Ann Palliat Med. 2013;2(4):178-188.

4. Cihoric N, Crowe S, Eychmüller S, Aebersold DM, Ghadjar P. Clinically significant bleeding in incurable cancer patients: effectiveness of hemostatic radiotherapy. Radiat Oncol. 2012;7:132.

5. Duchesne GM, Bolger JJ, Griffiths GO, et al. A randomized trial of hypofractionated schedules of palliative radiotherapy in the management of bladder carcinoma: results of medical research council trial BA09. Int J Radiat Oncol Biol Phys. 2000;47(2):379-388.

6. Onsrud M, Hagen B, Strickert T. 10-Gy single-fraction pelvic irradiation for palliation and life prolongation in patients with cancer of the cervix and corpus uteri. Gynecol Oncol. 2001;82(1):167-171.

7. NCCN Guidelines® Updates. J Natl Compr Canc Netw. 2013;11(9):xxxii-xxxvi.

8. Colby WH, Dahlin C, Lantos J, Carney J, Christopher M. The National Consensus Project for Quality Palliative Care Clinical Practice Guidelines Domain 8: ethical and legal aspects of care. HEC Forum. 2010;22(2):117-131.

9. Stockler MR, Wilcken NR. Why is management of cancer pain still a problem? J Clin Oncol. 2012;30(16):1907-1908.

10. Caravatta L, Ramella S, Melano A, et al. Breakthrough pain management in patients undergoing radiotherapy: a national survey on behalf of the Palliative and Supportive Care Study Group. Tumori. 2015;101(6):603-608.