With site-neutral payments, the devil is in the details

Physician groups are pushing back against a proposal to implement site-neutral payments, even though they generally support the concept.

In the proposed update to the Hospital Outpatient Prospective Payment System (OPPS) for 2019, the Centers for Medicare & Medicaid Services proposed a physician fee schedule–equivalent payment for clinic visit services provided at an off-campus, provider-based department that is paid under the OPPS.

The American Medical Association said in a letter to the CMS that, while it “generally supports site-neutral payments, we do not believe that it is possible to sustain a high-quality health care system if site neutrality is defined as shrinking all payments to the lowest amount paid in any setting.” The AMA said that the current proposed rule is “complex, confusing, and is not truly site neutral because the policies do not apply equally to all hospital outpatient clinics,” adding that one contributor to the payment differential between private practices and hospital outpatient departments is that physicians are underpaid under the physician fee schedule.

The American Academy of Family Physicians stated in a letter to CMS Administrator Seema Verma that while it supports the idea of site-neutral payments, “we note that the payment methodology for 2019 will not assure equal payments for the same service, regardless of site of service.” The AAFP noted that the proposal may not achieve its goal of curbing hospital acquisition of independent physician practices and that “hospitals may still be incentivized to buy physician practices based on the mix of services they provide and bill them as PBDs [provider-based departments] at Medicare rates higher than would have been paid had the practice not been bought by the hospital.”

The American College of Cardiology offered support for site-neutral payments and, while it did not come out against the CMS’ proposal, it offered a series of recommendations, including ensuring that payments reflect “the resources required to provide patient care in each setting” and that “payment differences across sites should be related to documented differences in the resources needed to ensure patient access and high-quality care.”

The American Academy of Dermatology Association told the agency that it supports the proposal.

In a joint letter, the American College of Gastroenterology, the American Gastroenterological Association, and the American Society for Gastrointestinal Endoscopy wrote that reimbursement for services provided in ambulatory surgical centers and hospital outpatient departments “should be the same. Our societies support payment rates appropriate for each site of service and using appropriate policy and payment levers that result in patients receiving care in the most cost-efficient site of service.”

Genes more important than food in hyperuricemia

Also today, time reveals a benefit of CABG over PCI for left main artery disease, lower-limb atherosclerosis predicts long-term mortality in patients with PAD, and TV ads could be required to display drug prices.

Anti-inflammatory Drug Could Help Prevent MS Brain Tissue Loss

Findings from a recent study of ibudilast, an anti-inflammatory drug, “provide a glimmer of hope” for people with progressive multiple sclerosis, according to researchers at the National Institute of Neurological Disorders and Stroke.

Ibudilast is a phosphodiesterase inhibitor with bronchodilator, vasodilator, and neuroprotective effects, mainly used in the treatment of asthma and stroke.

In the placebo-controlled study, 255 participants were assigned to take up to 10 capsules of ibudilast or placebo per day for 96 weeks. Every 6 months, they underwent magnetic resonance imaging (MRI) of the brain. The researchers observed a between-group difference in brain shrinkage of about 2.5 mL of brain tissue per year. (The human brain has a volume of about 1,350 mL.) It is unknown whether the difference affected symptoms or loss of function.
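To put the 2.5 mL figure in scale, the short calculation below expresses the yearly between-group difference as a share of the approximate 1,350 mL total brain volume quoted above. It is only a back-of-the-envelope sketch using the article's two rounded numbers; it is not an analysis the researchers reported.

```python
# Scale check: yearly between-group difference in brain tissue loss
# as a percentage of approximate total brain volume (both figures from the article).
BRAIN_VOLUME_ML = 1350.0        # approximate total human brain volume
DIFFERENCE_ML_PER_YEAR = 2.5    # ibudilast vs placebo, per year

def percent_of_brain(diff_ml: float, total_ml: float) -> float:
    """Return diff_ml as a percentage of total_ml."""
    return 100.0 * diff_ml / total_ml

pct = percent_of_brain(DIFFERENCE_ML_PER_YEAR, BRAIN_VOLUME_ML)
print(f"About {pct:.2f}% of total brain volume per year")
```

The difference works out to a little under two-tenths of a percent of total brain volume per year, which helps explain why it can only be detected with serial MRI.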

Reported adverse events were similar in both groups. The most common with ibudilast were gastrointestinal symptoms, headache, and depression.

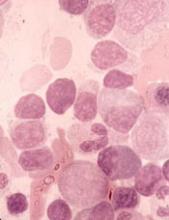

Dataset could reveal better therapies for AML

Researchers have released a dataset detailing the molecular makeup of tumor cells from more than 500 patients with acute myeloid leukemia (AML).

The team discovered mutations not previously observed in AML and found associations between mutations and responses to certain therapies.

For instance, AML cases with FLT3, NPM1, and DNMT3A mutations proved sensitive to the BTK inhibitor ibrutinib.

The researchers described their findings in Nature.

The team also made their dataset available via Vizome, an online data viewer. Other researchers can use Vizome to find out which targeted therapies might be most effective against specific subsets of AML cells.

“People can get online, search our database, and very quickly get answers to ‘Is this a good drug?’ or ‘Is there a patient population my drug can work in?’” said study author Brian Druker, MD, of Oregon Health & Science University (OHSU) in Portland, Oregon.

Newly identified mutations

For this study, part of the Beat AML initiative, Dr. Druker and his colleagues performed whole-exome and RNA sequencing on 672 samples from 562 AML patients.

The team identified mutations in 11 genes that were called in 1% or more of patients in this dataset but had not been observed in previous AML sequencing studies. The genes were:

- CUB and Sushi multiple domains 2 (CSMD2)

- NAC alpha domain containing (NACAD)

- Teneurin transmembrane protein 2 (TENM2)

- Aggrecan (ACAN)

- ADAM metallopeptidase with thrombospondin type 1 motif 7 (ADAMTS7)

- Immunoglobulin-like and fibronectin type III domain containing 1 (IGFN1)

- Neurobeachin-like 2 (NBEAL2)

- Poly(U) binding splicing factor 60 (PUF60)

- Zinc-finger protein 687 (ZNF687)

- Cadherin EGF LAG sevenpass G-type receptor 2 (CELSR2)

- Glutamate ionotropic receptor NMDA type subunit 2B (GRIN2B).
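The selection rule described above (a gene mutated in at least 1% of patients but absent from earlier AML sequencing studies) can be sketched as a simple filter. This is a hypothetical illustration, not the Beat AML pipeline: it assumes mutations have already been called per patient, and the function name, toy patient IDs, and placeholder genes `GENE_X`/`GENE_Y` are invented for the example.

```python
import math
from collections import Counter

def novel_recurrent_genes(patient_mutations, previously_reported, min_fraction=0.01):
    """Hypothetical filter: genes mutated in >= min_fraction of patients
    that do not appear in a set of previously reported AML genes."""
    n_patients = len(patient_mutations)
    # Count each gene at most once per patient, even if it carries several variants.
    counts = Counter(g for genes in patient_mutations.values() for g in set(genes))
    return sorted(
        gene for gene, count in counts.items()
        if count / n_patients >= min_fraction and gene not in previously_reported
    )

# Toy data (invented); a 50% threshold is used so that 4 patients suffice.
patients = {
    "P1": ["FLT3", "NPM1", "GENE_X"],
    "P2": ["GENE_X", "DNMT3A"],
    "P3": ["GENE_Y"],
    "P4": ["NPM1"],
}
known = {"FLT3", "NPM1", "DNMT3A"}
print(novel_recurrent_genes(patients, known, min_fraction=0.5))

# With 562 patients, a 1% threshold means a gene must be mutated in at least 6 of them.
print(math.ceil(0.01 * 562))
```

Counting genes once per patient matters because the threshold is defined over patients, not over individual variants.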

Testing therapies

The researchers also assessed how AML cells from 409 of the patient samples responded to each of 122 targeted therapies.

The team found that mutations in TP53, ASXL1, NRAS, and KRAS were associated with “a broad pattern of drug resistance.”

However, cases with TP53 mutations were sensitive to elesclomol (a drug that targets cancer cell metabolism), cases with ASXL1 mutations were sensitive to the HDAC inhibitor panobinostat, and cases with KRAS/NRAS mutations were sensitive to MAPK inhibitors (with NRAS-mutated cases demonstrating greater sensitivity).

The researchers also found that IDH2 mutations “conferred sensitivity to a broad spectrum of drugs,” but IDH1 mutations were associated with resistance to most drugs.

As previously mentioned, the researchers found a significant association between mutations in FLT3, NPM1, and DNMT3A and sensitivity to ibrutinib. However, cases with DNMT3A mutations alone or with mutations in both DNMT3A and FLT3 did not differ significantly in ibrutinib sensitivity from cases with wild-type genes.

On the other hand, cases with FLT3-ITD alone or any combination with a mutation in NPM1 (including mutations in all three genes) were significantly more sensitive to ibrutinib than cases with wild-type genes.

Cases with FLT3-ITD and mutations in NPM1 were sensitive to another kinase inhibitor, entospletinib, as well.

The researchers also found that mutations in both BCOR and RUNX1 correlated with increased sensitivity to four JAK inhibitors—momelotinib, ruxolitinib, tofacitinib, and JAK inhibitor I.

However, cases with BCOR mutations alone or with mutations in BCOR and DNMT3A or SRSF2 showed no difference in sensitivity to the JAK inhibitors compared with cases with wild-type genes.

Next steps

“We’re just starting to scratch the surface of what we can do when we analyze the data,” Dr. Druker said. “The real power comes when you start to integrate all that data. You can analyze what drug worked and why it worked.”

In fact, the researchers are already developing and initiating clinical trials to test hypotheses generated by this study.

“You can start to sense some momentum building with new, better therapeutics for AML patients, and, hopefully, this dataset will help fuel that momentum even further,” said study author Jeff Tyner, PhD, of the OHSU School of Medicine.

“We want to parlay this information into clinical trials as much as we can, and we also want the broader community to use this dataset to accelerate their own work.”

Funding for the current study was provided by grants from The Leukemia & Lymphoma Society, the National Cancer Institute, the National Library of Medicine, and other groups.

BTK inhibitor shows early promise for WM

NEW YORK—The BTK inhibitor zanubrutinib has demonstrated “robust activity” and “good tolerability” in patients with Waldenström’s macroglobulinemia (WM), according to an investigator.

In a phase 1 trial, zanubrutinib produced an overall response rate (ORR) of 92%, and the estimated 12-month progression-free survival (PFS) rate was 89%.

Most adverse events (AEs) in this trial were grade 1 or 2 in severity, although the incidence of serious AEs was 42%.

Constantine Tam, MD, of the Peter MacCallum Cancer Center in Victoria, Australia, presented these results at the 10th International Workshop on Waldenström’s Macroglobulinemia.

The trial is sponsored by BeiGene, Ltd., the company developing zanubrutinib.

The trial (NCT02343120) includes patients with WM and other B-cell malignancies. As of July 24, 2018, 77 patients with treatment-naïve or relapsed/refractory WM had been enrolled.

Seventy-three patients were evaluable for efficacy in this analysis, and the median follow-up time was 22.5 months (range, 4.1-43.9).

At the time of the data cutoff, 62 patients remained on study treatment. Four patients (3%) discontinued treatment due to disease progression, and one patient remained on treatment after progression.

Efficacy

The median time to response was 85 days (range, 55-749).

The ORR was 92% (67/73), and the major response rate (MRR) was 82%. Forty-one percent of patients achieved a very good partial response (VGPR), defined as a greater than 90% reduction in baseline immunoglobulin M (IgM) levels and improvement of extramedullary disease by computed tomography.
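The ORR above is a simple proportion of evaluable patients, 67 of 73. The sketch below recomputes it and, purely as an illustration, attaches a Wilson score interval (a standard interval for a binomial proportion). The interval is not something the investigators reported; it only shows how much uncertainty a cohort of 73 carries.

```python
import math

def response_rate(responders: int, evaluable: int) -> float:
    """Fraction of evaluable patients who responded."""
    return responders / evaluable

def wilson_interval(k: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% when z = 1.96)."""
    p = k / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

orr = response_rate(67, 73)
low, high = wilson_interval(67, 73)
print(f"ORR {orr:.1%} (illustrative Wilson 95% interval {low:.1%} to {high:.1%})")
```

The Wilson form is used here rather than the simple normal approximation because it behaves better when the proportion is close to 100%, as it is here.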

The median IgM decreased from 32.7 g/L (range, 5.3-91.9) at baseline to 8.2 g/L (range, 0.3-57.8). The median hemoglobin increased from 8.85 g/dL (range, 6.3-9.8) to 13.4 g/dL (range, 7.7-17.0) among 32 patients with hemoglobin less than 10 g/dL at baseline.
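The laboratory changes above can be summarized as a relative drop in median IgM and an absolute rise in median hemoglobin. The sketch below does that arithmetic with the article's numbers. One caveat: a change computed from two medians is not the same as the median of each patient's own change, which the article does not report.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from before to after (negative means a decrease)."""
    return (after - before) / before

# Medians from the article; IgM in g/L, hemoglobin in g/dL.
igm_change = relative_change(32.7, 8.2)
# Hemoglobin figures apply only to the 32 patients with baseline Hb < 10 g/dL.
hgb_rise = 13.4 - 8.85

print(f"Median IgM change: {igm_change:.0%}")
print(f"Median hemoglobin rise: {hgb_rise:.2f} g/dL")
```

On these figures, median IgM fell by roughly three-quarters and median hemoglobin in the anemic subgroup rose by about 4.5 g/dL.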

MYD88 genotype was known in 63 patients. In the subset known to have the MYD88 L265P mutation (n=54), the ORR was 94%, the MRR was 89%, and the VGPR rate was 46%.

In the nine patients known to have wild-type MYD88 (a genotype that has historically responded suboptimally to BTK inhibition), the ORR was 89%, the MRR was 67%, and the VGPR rate was 22%.
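Because the genotype subgroups are small (54 + 9 = 63 patients with known MYD88 status), it can help to see roughly how many patients each rounded percentage represents. The sketch below back-calculates the nearest whole-number count consistent with each reported rate. These counts are inferred from the percentages, not reported by the investigators, and the helper name is hypothetical.

```python
def implied_count(rate_pct: float, n: int) -> int:
    """Nearest whole number of patients consistent with a rounded percentage."""
    return round(rate_pct / 100 * n)

subgroup_sizes = {"MYD88 L265P": 54, "MYD88 wild-type": 9}
reported_rates = {
    "MYD88 L265P": {"ORR": 94, "MRR": 89, "VGPR": 46},
    "MYD88 wild-type": {"ORR": 89, "MRR": 67, "VGPR": 22},
}

for name, n in subgroup_sizes.items():
    for endpoint, pct in reported_rates[name].items():
        k = implied_count(pct, n)
        # Show the back-calculated count and the exact percentage it implies.
        print(f"{name} {endpoint}: ~{k}/{n} ({100 * k / n:.1f}%)")
```

In the wild-type group, each patient accounts for about 11 percentage points, so a single additional or missing response would shift those rates substantially.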

The 12-month PFS was estimated to be 89%, and the median PFS had not been reached.

Safety

The most frequent AEs of any attribution were petechiae/purpura/contusion (43%), upper respiratory tract infection (42%), cough (17%), diarrhea (17%), constipation (16%), back pain (16%), and headache (16%).

Grade 3-4 AEs of any attribution reported in three or more patients included neutropenia (9%), anemia (7%), hypertension (5%), basal cell carcinoma (5%), renal and urinary disorders (4%), and pneumonia (4%).

Serious AEs were seen in 32 patients (42%). Events in five patients (7%) were considered possibly related to zanubrutinib treatment—febrile neutropenia, colitis, atrial fibrillation, hemothorax, and pneumonia.

Nine patients (12%) discontinued study treatment due to AEs, but all of these events were considered unrelated to treatment. The AEs (n=1 for each) included abdominal sepsis (fatal), gastric adenocarcinoma (fatal), septic shoulder, worsening bronchiectasis, Scedosporium infection, prostate adenocarcinoma, metastatic neuroendocrine carcinoma, acute myeloid leukemia, and breast cancer.

Atrial fibrillation/flutter occurred in four patients (5%), and major hemorrhage was observed in two patients (3%).

“We are encouraged that additional data on zanubrutinib in patients with WM confirms the initially reported experience, with consistent demonstration of robust activity and good tolerability,” Dr. Tam said.

“We are hopeful that zanubrutinib, if approved, could potentially provide an important new treatment option to patients with WM and other hematologic malignancies.”

Dr. Tam reported financial relationships with BeiGene and other companies.

NEW YORK—The BTK inhibitor zanubrutinib has demonstrated “robust activity” and “good tolerability” in patients with Waldenström’s macroglobulinemia (WM), according to an investigator.

In a phase 1 trial, zanubrutinib produced an overall response rate (ORR) of 92%, and the estimated 12-month progression-free survival (PFS) rate was 89%.

Most adverse events (AEs) in this trial were grade 1 or 2 in severity, although the incidence of serious AEs was 42%.

Constantine Tam, MD, of the Peter MacCallum Cancer Center in Victoria, Australia, presented these results at the 10th International Workshop on Waldenström’s Macroglobulinemia.

The trial is sponsored by BeiGene, Ltd., the company developing zanubrutinib.

The trial (NCT02343120) includes patients with WM and other B-cell malignancies. As of July 24, 2018, 77 patients with treatment-naïve or relapsed/refractory WM had been enrolled.

Seventy-three patients were evaluable for efficacy in this analysis, and the median follow-up time was 22.5 months (range, 4.1-43.9).

At the time of the data cutoff, 62 patients remained on study treatment. Four patients (3%) discontinued treatment due to disease progression, and one patient remains on treatment post-progression.

Efficacy

The median time to response was 85 days (range, 55-749).

The ORR was 92% (67/73), and the major response rate (MRR) was 82%. Forty-one percent of patients achieved a very good partial response (VGPR), defined as a greater than 90% reduction in baseline immunoglobulin M (IgM) levels and improvement of extramedullary disease by computed tomography.

The median IgM decreased from 32.7 g/L (range, 5.3-91.9) at baseline to 8.2 g/L (range, 0.3-57.8). The median hemoglobin increased from 8.85 g/dL (range, 6.3-9.8) to 13.4 g/dL (range, 7.7-17.0) among 32 patients with hemoglobin less than 10 g/dL at baseline.

MYD88 genotype was known in 63 patients. In the subset known to have the MYD88L265P mutation (n=54), the ORR was 94%, the MRR was 89%, and the VGPR rate was 46%.

In the nine patients known to have wild-type MYD88 (a genotype that has historically shown a suboptimal response to BTK inhibition), the ORR was 89%, the MRR was 67%, and the VGPR rate was 22%.

The 12-month PFS was estimated to be 89%, and the median PFS had not been reached.

Safety

The most frequent AEs of any attribution were petechiae/purpura/contusion (43%), upper respiratory tract infection (42%), cough (17%), diarrhea (17%), constipation (16%), back pain (16%), and headache (16%).

Grade 3-4 AEs of any attribution reported in three or more patients included neutropenia (9%), anemia (7%), hypertension (5%), basal cell carcinoma (5%), renal and urinary disorders (4%), and pneumonia (4%).

Serious AEs were seen in 32 patients (42%). Events in five patients (7%) were considered possibly related to zanubrutinib treatment—febrile neutropenia, colitis, atrial fibrillation, hemothorax, and pneumonia.

Nine patients (12%) discontinued study treatment due to AEs, but all of these events were considered unrelated to treatment. The AEs (n=1 for each) included abdominal sepsis (fatal), gastric adenocarcinoma (fatal), septic shoulder, worsening bronchiectasis, Scedosporium infection, prostate adenocarcinoma, metastatic neuroendocrine carcinoma, acute myeloid leukemia, and breast cancer.

Atrial fibrillation/flutter occurred in four patients (5%), and major hemorrhage was observed in two patients (3%).

“We are encouraged that additional data on zanubrutinib in patients with WM confirms the initially reported experience, with consistent demonstration of robust activity and good tolerability,” Dr. Tam said.

“We are hopeful that zanubrutinib, if approved, could potentially provide an important new treatment option to patients with WM and other hematologic malignancies.”

Dr. Tam reported financial relationships with BeiGene and other companies.

sNDA gets priority review for CLL/SLL

The U.S. Food and Drug Administration (FDA) has accepted for priority review a supplemental new drug application (sNDA) for ibrutinib (Imbruvica®).

With this sNDA, Pharmacyclics LLC (an AbbVie company) and Janssen Biotech, Inc., are seeking approval for ibrutinib in combination with obinutuzumab (Gazyva®) in previously untreated adults with chronic lymphocytic leukemia (CLL) or small lymphocytic lymphoma (SLL).

The FDA grants priority review to applications for products that may provide significant improvements in the treatment, diagnosis, or prevention of serious conditions.

The agency intends to take action on a priority review application within 6 months of receiving it rather than the standard 10 months.

Ibrutinib is already FDA-approved as monotherapy for adults with CLL/SLL (previously treated or untreated), with and without 17p deletion. Ibrutinib is also approved in combination with bendamustine and rituximab for adults with previously treated CLL/SLL.

Obinutuzumab is FDA-approved for use in combination with chlorambucil to treat previously untreated CLL.

The sNDA for ibrutinib in combination with obinutuzumab is based on results from the phase 3 iLLUMINATE trial (NCT02264574).

The trial is a comparison of ibrutinib plus obinutuzumab and chlorambucil plus obinutuzumab in patients with previously untreated CLL/SLL.

In May, AbbVie announced that the trial’s primary endpoint was met. Specifically, ibrutinib plus obinutuzumab was associated with significantly longer progression-free survival than chlorambucil plus obinutuzumab.

Data from the trial have not been released. Pharmacyclics and Janssen said they plan to present the data in a future publication or at a medical congress.

Growth on forehead

The FP was concerned about a possible melanoma due to the dark pigmentation and the positive “ABCDE criteria” of melanoma. The FP used his dermatoscope to determine whether this was a melanoma or a pigmented basal cell carcinoma (BCC).

The multiple leaf-like structures and blue-gray ovoid nests seen with dermoscopy suggested that this was a pigmented BCC. (The ulceration could be seen in either melanoma or BCC.) The FP told the patient that this was almost certainly a skin cancer and that she needed a biopsy that day. The patient consented, and local anesthesia was achieved with 1% lidocaine with epinephrine. The physician used a DermaBlade to perform a deep shave (saucerization) under the pigmentation. (See the Watch & Learn video on “Shave biopsy.”)

The pathology confirmed pigmented BCC. The physician recommended an elliptical excision and scheduled it for the following week.

Photos and text for Photo Rounds Friday courtesy of Richard P. Usatine, MD. This case was adapted from: Karnes J, Usatine R. Basal cell carcinoma. In: Usatine R, Smith M, Mayeaux EJ, et al. Color Atlas of Family Medicine. 2nd ed. New York, NY: McGraw-Hill; 2013:989-998.

To learn more about the Color Atlas of Family Medicine, see: www.amazon.com/Color-Family-Medicine-Richard-Usatine/dp/0071769641/.

You can now get the second edition of the Color Atlas of Family Medicine as an app by clicking on this link: usatinemedia.com.

Inflammatory arthritis a common rheumatic adverse event with ICIs

Inflammatory arthritis (IA) is the most common rheumatic adverse event seen in people on immune checkpoint inhibitor (ICI) therapy and can largely be managed by rheumatologists without the need to halt cancer treatment, according to Michael D. Richter, MD, of the Mayo School of Graduate Medical Education, Rochester, Minn., and his colleagues.

The investigators’ observations of patients seen at the Mayo Clinic over a 7-year period suggested that “IA should not be seen as a contraindication to continuing or restarting ICIs,” although continuing ICI therapy concurrently with high-dose steroids is “generally not recommended.” Previous estimates of rheumatic adverse events with ICIs had ranged from 1% to 10%, and management had largely been based on expert experience and findings from retrospective studies, they noted.

“This study aims to add granularity to the existing literature by describing the prevalence, clinical characteristics, and treatment outcomes of a large, single-institution cohort of patients with rheumatic immune-related adverse events,” Dr. Richter and his associates wrote in an article published in Arthritis & Rheumatology.

The analysis included 1,293 patients who had received treatment with any ICI at the Mayo Clinic between January 2011 and March 2018. Patients who had received treatment at other centers but were seen at the Mayo Clinic for their rheumatic adverse event were also included.

Overall, 61 patients experienced a rheumatic immune-related adverse event (Rh-irAE) related to checkpoint inhibitor treatment; 43 were from the Mayo cohort and 18 were from other centers. The average age of the patients was 62.6 years, and almost half (49%) were female.

New-onset inflammatory arthritis was the most common Rh-irAE reported (n = 34), with the majority of patients presenting with polyarticular symptoms (n = 22; 65%).

Most of these patients were managed by a rheumatologist and were treated with systemic glucocorticoids (n = 26; 76%) for an average duration of 18 weeks. Five of the patients also required disease-modifying antirheumatic drugs, and eight needed NSAIDs or intra-articular steroids.

The investigators noted that almost half of the patients (47%) experienced a complete resolution of symptoms during the study period, although few achieved this while they were still on the cancer treatment.

According to the investigators, the clinical characteristics of IA in their cohort support the existing literature: “Time of symptom onset is generally 4-8 weeks after starting ICI therapy. … The majority of patients require long courses of systemic glucocorticoids. And very few achieve complete symptom resolution while continuing ICI therapy,” they wrote.

The database analysis also revealed that a further 10 patients developed myopathy, all of whom presented with myalgias and weakness. Five had bulbar myopathy, and four patients were diagnosed with concomitant myocarditis. All of these patients were treated with glucocorticoids for an average treatment duration of 15 weeks.

Complete resolution of the myopathy was observed in 70%, but two patients died from complications related to myocarditis and bulbar myopathy.

Other rheumatic events included four cases of vasculitis, four cases of polymyalgia rheumatica, and six cases of diffuse systemic sclerosis or sicca syndromes.

“In lieu of controlled prospective studies, this cohort may serve as a guide for rheumatologists managing and counseling patients with Rh-irAEs,” the investigators concluded.

“It remains unclear why some patients develop Rh-irAEs, and so far no genomic risk factors have been identified, though the varied clinical phenotypes of Rh-irAEs certainly suggest multiple underlying immunopathogenic mechanisms. Further prospective studies that distinguish between subsets of Rh-irAEs are necessary to better understand their pathophysiology and improve clinical care,” they added.

They noted that a limitation of the study was its reliance on retrospective data and clinician diagnoses.

None of the authors declared any financial or other conflicts of interest.

SOURCE: Richter MD et al. Arthritis Rheumatol. 2018 Oct 3. doi: 10.1002/art.40745.

FROM ARTHRITIS & RHEUMATOLOGY

Key clinical point: Inflammatory arthritis (IA) is the most common form of rheumatic immune-related adverse event (Rh-irAE) related to ICI treatment. The majority of patients with an Rh-irAE (90% in the current cohort) remained on ICI treatment, which suggests that IA should not be seen as a contraindication to continuing or restarting ICI treatment.

Major finding: Among 1,293 patients treated with ICIs at the Mayo Clinic and 18 patients who received ICI therapy at other centers, 61 cases of Rh-irAE were identified. Over half of these patients (n = 34) presented with IA.

Study details: A retrospective analysis of a database of all patients who received any ICI at the Mayo Clinic between January 2011 and March 2018.

Disclosures: None of the authors declared any financial or other conflicts of interest.

Source: Richter MD et al. Arthritis Rheumatol. 2018 Oct 3. doi: 10.1002/art.40745.

High-Dose Biotin for Progressive MS: Real-World Experience

Benefit was seen in primary and secondary progressive MS.

BERLIN—MD1003, a high-dose pharmaceutical grade biotin, is effective in the treatment of patients with progressive multiple sclerosis (MS), according to a report presented at ECTRIMS 2018. “This real-world study supports the growing body of evidence that MD1003 is an effective and safe treatment for progressive MS,” said lead author Jonathan Ciron, MD, of the Department of Neurology, CHU Toulouse, France, and colleagues.

In the 2016 MS-SPI study, MD1003 treatment was shown to be effective and well tolerated in patients with progressive MS. Notably, MD1003 reversed MS-related disability in 13% of patients with progressive MS. Based on these findings, MD1003 is currently being prescribed to patients with progressive MS in France under an expanded access program.

In the present study, Dr. Ciron and colleagues sought to assess the effectiveness and safety of MD1003 in patients with primary progressive MS (PPMS) or secondary progressive MS (SPMS) treated in routine practice at a single clinical center.

MD1003 300 mg/day (100 mg tid) was prescribed to patients with PPMS or SPMS receiving care at a single center in France (CHU Toulouse) starting in January 2016. Effectiveness and safety were assessed using the following measures: Expanded Disability Status Scale (EDSS), timed 25-foot walk (T25W), nine-hole peg test (9-HPT), number of relapses, and gadolinium-enhancing lesions on T1-weighted images.

As of May 2018, a total of 220 patients had received MD1003. The research team presented results for the first 91 patients to complete one year of follow-up. At baseline, mean age was 59.5 years, 61.5% of patients were female, 70.3% had SPMS, mean EDSS was 5.9, mean T25W was 50.7 seconds, 9-HPT in the dominant hand was 35.1 seconds, and the mean number of previous relapses was 5.1. After one year of treatment with MD1003, 19 of 83 patients with available data (23%) showed improvement in EDSS, and 15 of 66 patients with available data (23%) showed an improvement of 20% or more in T25W. Active disease (a clinically defined relapse or a gadolinium-enhancing T1 lesion) was observed in nine of 79 patients with available data (11%). MD1003 was also well tolerated, the researchers noted.

Benefit was seen in primary and secondary progressive MS.

Benefit was seen in primary and secondary progressive MS.

Kymriah appears cost effective in analysis

The high price of chimeric antigen receptor (CAR) T-cell therapy for pediatric leukemia may prove cost effective if long-term survival benefits are realized, researchers reported.

A cost-effectiveness analysis of the CAR T-cell therapy tisagenlecleucel suggests that its $475,000 price tag is aligned with the treatment's lifetime benefits. The findings were published in JAMA Pediatrics.

Tisagenlecleucel (marketed as Kymriah) is a single-dose treatment for relapsed or refractory pediatric B-cell acute lymphoblastic leukemia (ALL) and was the first CAR T-cell therapy approved by the Food and Drug Administration.

In this cost-effectiveness analysis, researchers used a decision analytic model that extrapolated the evidence from clinical trials over a patient’s lifetime to assess life-years gained, quality-adjusted life-years (QALYs) gained, and incremental costs per life-year and QALY gained. The comparator was the chemoimmunotherapeutic agent clofarabine.

While tisagenlecleucel has a list price of $475,000, the researchers applied a 3% annual discount rate and added further costs, such as hospital administration, pretreatment, and potential adverse events, arriving at a total discounted cost of about $667,000. They estimated that 42.6% of patients treated with tisagenlecleucel would be long-term survivors and that 10.34 life-years and 9.28 QALYs would be gained.
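Lifetime cost-effectiveness models typically convert costs and QALYs that accrue in future years into present values using an annual discount rate. Assuming the 3% figure is such a rate (the article does not spell out the discounting scheme), the standard present-value formula PV = C / (1 + r)^t can be sketched in Python as follows; the $10,000 cost and the chosen years are hypothetical placeholders, not figures from the study.

```python
def present_value(amount: float, year: float, rate: float = 0.03) -> float:
    """Discount an amount incurred `year` years from now back to today's value.

    The 3% default is an assumption matching the figure quoted in the article.
    """
    return amount / (1 + rate) ** year

# Hypothetical: the same $10,000 cost incurred at different points in a lifetime horizon.
for year in (0, 1, 5, 20):
    print(f"year {year:>2}: ${present_value(10_000, year):,.0f}")
```

Because the list price is paid near the start of treatment, discounting barely reduces it, whereas costs and QALYs accrued decades later shrink considerably.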

In comparison, clofarabine had a total discounted cost of approximately $337,000 (an initial discounted price of $164,000 plus additional treatment and administrative costs). In the model, 10.8% of clofarabine-treated patients were long-term survivors, and 2.43 life-years and 2.10 QALYs were gained.

Overall, the mean incremental cost-effectiveness ratio was about $46,000 per QALY gained in this base-case model.
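The incremental cost-effectiveness ratio (ICER) is the difference in cost between two treatments divided by the difference in health outcome. Plugging the rounded base-case totals reported above into that formula gives roughly the same figure; this Python sketch is a back-of-the-envelope check, not a reproduction of the authors' decision analytic model. The per-life-year value is derived here from the reported totals and is not a figure stated in the article.

```python
# Base-case totals as reported (rounded), so the results are approximate.
tisagenlecleucel = {"cost": 667_000, "life_years": 10.34, "qalys": 9.28}
clofarabine = {"cost": 337_000, "life_years": 2.43, "qalys": 2.10}

def icer(new: dict, comparator: dict, outcome: str) -> float:
    """Extra cost per extra unit of `outcome` for `new` versus `comparator`."""
    delta_cost = new["cost"] - comparator["cost"]
    delta_outcome = new[outcome] - comparator[outcome]
    return delta_cost / delta_outcome

per_qaly = icer(tisagenlecleucel, clofarabine, "qalys")
per_life_year = icer(tisagenlecleucel, clofarabine, "life_years")
print(f"about ${per_qaly:,.0f} per QALY gained")
print(f"about ${per_life_year:,.0f} per life-year gained")
```

An extra $330,000 spread over about 7.18 additional QALYs lands close to the reported $46,000 per QALY.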

In scenario analyses that varied assumptions such as a deeper discount, a different treatment start, or a different calculation of future treatment costs, the cost-effectiveness ratio ranged from $37,000 to $78,000 per QALY gained.

“We acknowledge that considerable uncertainty remains around the long-term benefit of tisagenlecleucel owing to limited available evidence; however, with current evidence and assumptions, tisagenlecleucel meets commonly cited value thresholds over a patient lifetime horizon, assuming payment for treatment acquisition for responders at 1 month,” wrote Melanie D. Whittington, PhD, from the University of Colorado at Denver, Aurora, and her colleagues.

The authors noted that the clinical trial evidence for tisagenlecleucel came from single-arm trials, which made selection of a comparator challenging. Clofarabine was chosen because it had the most similar baseline population characteristics, but they acknowledged that blinatumomab was also frequently used as a treatment for these patients.

“We suspect that tisagenlecleucel would remain cost effective, compared with blinatumomab,” they wrote. “A study conducted by other researchers found the incremental cost-effectiveness ratio of tisagenlecleucel versus blinatumomab was similar to the incremental cost-effectiveness ratio of tisagenlecleucel versus clofarabine [i.e., $3,000 more per QALY].”

The authors suggested that payers take these uncertainties in the evidence into account when negotiating coverage and payment for tisagenlecleucel.

“Novel payment models consistent with the present evidence may reduce the risk and uncertainty in long-term value and be more closely aligned with ensuring high-value care,” they wrote. “Financing cures in the United States is challenging, owing to the high up-front price, rapid uptake, and uncertainty in long-term outcomes; however, innovative payment models are an opportunity to address some of these challenges and to promote patient access to novel and promising therapies.”

The study was funded by the Institute for Clinical and Economic Review, which receives some funding from the pharmaceutical industry. Four authors are employees of the Institute for Clinical and Economic Review.

SOURCE: Whittington MD et al. JAMA Pediatr. 2018 Oct 8. doi: 10.1001/jamapediatrics.2018.2530.
