
On Second Thought: The Truth About Beta-Blockers

This transcript has been edited for clarity.

Giving patients a beta-blocker after a myocardial infarction is standard of care. It’s in the guidelines. It’s one of the performance measures used by the American College of Cardiology (ACC) and the American Heart Association (AHA). If you aren’t putting your post–acute coronary syndrome (ACS) patients on a beta-blocker, the ACC and the AHA both think you suck.

They are very disappointed in you, just like your mother was when you told her you didn’t want to become a surgeon because you don’t like waking up early, your hands shake when you get nervous, it’s not your fault, there’s nothing you can do about it, so just leave me alone!

The data on beta-blockers are decades old. In the time before stents, statins, angiotensin-converting enzyme inhibitors, and dual antiplatelet therapy, when patients either died or got better on their own, beta-blockers showed major benefits. Studies like the Norwegian Multicenter Study Group, the BHAT trial, and the ISIS-1 trial proved the benefits of beta blockade. These studies date back to the 1980s, when you could call a study ISIS without controversy.

It was a simpler time, when all you had to worry about was the Cold War, apartheid, and the global AIDS pandemic. It was a time when doctors smoked in their offices, and patients had bigger infarcts that caused large scars and systolic dysfunction. That world is no longer our world, except for the war, the global pandemic, and the out-of-control gas prices.

The reality is that, before troponins, we probably missed most small heart attacks. Now, most infarcts are small, and most patients walk away from their heart attacks with essentially normal hearts. Do beta-blockers still matter? If you’re a fan of Cochrane reviews, the answer is yes.

In 2021, Cochrane published a review of beta-blockers in patients without heart failure after myocardial infarction (MI). The authors of that analysis concluded, after the usual caveats about heterogeneity, potential bias, and the whims of a random universe, that, yes, beta-blockers do reduce mortality. The risk ratio for all-cause mortality at maximum follow-up was 0.81.

What does that mean practically? The absolute risk was reduced from 10.9% to 8.7%, a 2.2–percentage point absolute decrease and about a 20% relative drop. A little math gives us a third number: 46. That’s the number needed to treat. If you think about how many patients with an MI you admit to the critical care unit in a typical week, a number needed to treat of 46 is a pretty good trade-off for a fairly inexpensive medication with fairly minimal side effects.
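
That “little math” can be written out explicitly. The sketch below is illustrative only; the helper name `risk_summary` is hypothetical and not from the source. It takes the two event rates quoted above as percentages and returns the absolute risk reduction (control minus treated), the relative risk reduction (absolute reduction divided by control risk), and the number needed to treat (1 divided by the absolute reduction, rounded up as is conventional). It uses exact fractions so that small differences such as 4.1% minus 3.9% are not distorted by floating-point rounding.

```python
from fractions import Fraction
import math


def risk_summary(control_pct: str, treated_pct: str):
    """Return (ARR, RRR, NNT) from two event rates given as percent strings.

    Fraction keeps the arithmetic exact, so a difference such as 4.1 - 3.9
    is exactly 0.2 percentage points and the NNT is not nudged by rounding.
    """
    control = Fraction(control_pct) / 100   # event rate without treatment
    treated = Fraction(treated_pct) / 100   # event rate with treatment
    arr = control - treated                 # absolute risk reduction
    rrr = arr / control                     # relative risk reduction
    nnt = math.ceil(1 / arr)                # NNT is conventionally rounded up
    return arr, rrr, nnt


# Mortality figures quoted above for the 2021 Cochrane review
arr, rrr, nnt = risk_summary("10.9", "8.7")
print(f"ARR = {float(arr):.1%}, RRR = {float(rrr):.0%}, NNT = {nnt}")
# → ARR = 2.2%, RRR = 20%, NNT = 46
```

Rounding the NNT up reflects the convention that you cannot treat a fraction of a patient: 1 / 0.022 is about 45.5, which becomes 46.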

Of course, these are the same people who claim that masks don’t stop the spread of COVID-19. Were they the only people who thought that handwashing was the best way to stop a respiratory virus? No. We all believed that fantasy for far longer than we should have. Not everybody can bat a thousand, if by batting a thousand you mean reflecting on how your words will affect a broader population primed to believe misinformation because of an increasingly toxic social media environment and the worsening polarization and radicalization of our politics.

By the way, if any of you want to come to Canada, you can stay with me. Things are incrementally better here. In this day and age, incrementally better is the best we can hope for.

Here’s the wrinkle with the Cochrane beta-blocker review: Many of the studies took place before early revascularization became the norm and before our current armamentarium of drugs became standard of care.

Back in the day, bed rest and the power of positive thinking were the mainstays of cardiac treatment. Also, many of these studies mixed together ST-segment elevation MI (STEMI) and non-STEMI patients, so you’re obviously going to see more benefits in STEMI patients, who are at higher risk. Some of them used intravenous (IV) beta-blockers right away, whereas some looked only at oral beta-blockers started days after the infarct.

We don’t use IV beta-blockers that much anymore because of the risk for cardiogenic shock.

Also, some studies had short-term follow-up where the benefits were less pronounced, and some studies used doses and types of beta-blockers rarely used today. Some of the studies had a mix of coronary and heart failure patients, which muddies the water because the heart failure patients would clearly benefit from being on a beta-blocker.

Basically, the data are not definitive because they are old and don’t reflect our current standard of care. The data contain a heterogeneous mix of patients, many of whom aren’t really relevant to the question we’re asking. And that question is: Should you put all your post-MI patients on a beta-blocker routinely, even if they don’t have heart failure?

The REDUCE-AMI trial is the first of a few trials testing, or, to be more accurate, retesting, whether beta-blockers are useful after an MI. BETAMI, REBOOT, DANBLOCK: You’ll be hearing these names in the next few years, either because the studies get published or because they’re the Twitter handles of people harassing you online. Either/or. (By the way, I’ll be cold in my grave before I call it X.)

For now, REDUCE-AMI is the first across the finish line, and at least in cardiology, finishing first is a good thing. This study enrolled patients with ACS, both STEMI and non-STEMI, with a post-MI ejection fraction ≥ 50%, and the result was nothing. The risk ratio for all-cause mortality was 0.94 and was not statistically significant.

In absolute terms, that’s a reduction from 4.1% to 3.9%, or a 0.2–percentage point decrease; this translates into a number needed to treat of 500, roughly 10 times higher than what the Cochrane review found. That’s if you assume that there is, in fact, a small benefit amid all the statistical noise, which there probably isn’t.
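
Part of that gap is simple arithmetic about baseline risk. The absolute risk reduction equals the baseline risk multiplied by (1 - relative risk), so a lower-risk population pushes the number needed to treat up even if the relative effect of the drug were unchanged. The sketch below uses a hypothetical helper, `nnt_for_relative_risk`, to check the “10 times” comparison and then, purely as an illustration rather than a result reported by either source, applies the Cochrane relative risk of 0.81 to the 4.1% control-group mortality quoted for REDUCE-AMI.

```python
from fractions import Fraction
import math


def nnt_for_relative_risk(baseline_pct: str, relative_risk: str) -> int:
    """NNT implied by applying a relative risk to a baseline event rate (percent)."""
    baseline = Fraction(baseline_pct) / 100
    arr = baseline * (1 - Fraction(relative_risk))  # absolute risk reduction
    return math.ceil(1 / arr)                       # rounded up, as is conventional


# NNTs quoted in the text
cochrane_nnt, reduce_ami_nnt = 46, 500
print(f"REDUCE-AMI NNT / Cochrane NNT = {reduce_ami_nnt / cochrane_nnt:.1f}")
# → REDUCE-AMI NNT / Cochrane NNT = 10.9

# Hypothetical: keep the Cochrane relative risk (0.81) but apply it to the
# 4.1% control-group mortality reported for REDUCE-AMI
print(nnt_for_relative_risk("4.1", "0.81"))
# → 129
```

Even if the old relative benefit still held, the lower baseline risk alone would nearly triple the number needed to treat; the REDUCE-AMI point estimate implies a benefit much smaller than that.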

Now, studies like this can never rule out small effects, either positive or negative, so maybe there is a small benefit from using beta-blockers. If it’s there, it’s really small. Do beta-blockers work? Well, yes, obviously, for heart failure and atrial fibrillation — which, let’s face it, are not exactly rare and often coexist in patients with heart disease. They probably aren’t that great as blood pressure pills, but that’s a story for another day and another video.

Yes, beta-blockers are useful pills, and they are standard of care, just maybe not for post-MI patients with normal ejection fractions because they probably don’t really need them. They worked in the pre-stent, pre-aspirin, pre-anything era.

That’s not our world anymore. Things change. It’s not the 1980s. That’s why I don’t have a mullet, and that’s why you need to update your kitchen.

Dr. Labos, a cardiologist at Kirkland Medical Center, Montreal, Quebec, Canada, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Navigating Election Anxiety: How Worry Affects the Brain

Once again, America is deeply divided before a national election, with people on each side convinced of the horrors that will be visited upon us if the other side wins.

’Tis the season — and regrettably, not to be jolly but to be worried.

As a neuroscientist, I am especially aware of the deleterious mental and physical impact of chronic worry on our citizenry. That’s because worry is not “all in your head.” We modern humans live in a world of worry that appears to be steadily growing.

Fight or Flight

Worry stems from the brain’s rather remarkable ability to foresee and reflexively respond to threat. Our “fight or flight” brain machinery probably arose in our vertebrate ancestors more than 300 million years ago. The fact that we have machinery akin to that possessed by lizards or tigers or shrews is testimony to its crucial contribution to our species’ survival.

As the phrase “fight or flight” suggests, a brain that senses trouble immediately biases certain body and brain functions. As it shifts into a higher-alert mode, it increases the energy supplies in our blood and supports other changes that facilitate faster and stronger reactions, while it shuts down less essential processes that do not contribute to hiding, fighting, or running like hell.

This hyperreactive response is initiated in the amygdala, deep in the medial temporal lobe, which identifies “what’s happening” as immediately or potentially threatening. The now-activated amygdala generates a response in the hypothalamus that provokes an immediate increase of adrenaline and cortisol in the body, and of cortisol and noradrenaline in the brain (much of the brain’s noradrenaline comes from a small brainstem nucleus, the locus coeruleus). These surges sharply speed up our physical and neurologic reactivity. In the brain, that is achieved by increasing the excitability of neurons across the forebrain. Depending on the perceived level of threat, an excitable brain will be just a little or a lot more “on alert,” just a little or a lot faster to respond, and just a little or a lot better at remembering the specific “warning” events that trigger this lizard-brain response.

Alas, this machinery was designed to be engaged every so often, when a potentially dangerous surprise arises in life. When the worry and stress are persistent, the brain experiences a kind of neurologic “burn-out” of its fight-or-flight machinery.

Dangers of Nonstop Anxiety and Stress

A consistently stressed-out brain turns down its production and release of noradrenaline, and the brain becomes less attentive, less engaged. This sets the brain on the path to an anxiety (and then a depressive) disorder, and, in the longer term, to cognitive losses in memory and executive control systems, and to emotional distortions that can lead to substance abuse or other addictions.

Our political distress is but one source of persistent worry and stress. Worry is a modern plague. The number of individuals seeking psychiatric or psychological care is at an all-time high in the United States. Near-universal low-level stressors, such as 2 years of COVID, insecurities about the changing demands of our professional and private lives, and a deeply divided body politic are unequivocally affecting American brain health.

The brain also collaborates in our body’s response to stress. Its regulation of hormonal and autonomic nervous system responses contributes to elevated blood sugar levels, cravings for high-sugar foods, elevated blood pressure, and weaker immune responses. All of this contributes to higher risks for cardiovascular and other diet- and immune system–related diseases and, ultimately, to shorter lifespans.

Strategies to Address Neurologic Changes Arising From Chronic Stress

There are many things you can try to bring your worry back to a manageable (and even productive) level.

- Engage in a “reset” strategy several times a day to bring your amygdala and locus coeruleus back under control. It takes a minute (or five) of calm, positive meditation to take your brain to a happy, optimistic place. Or use a mindfulness exercise to quiet down that overactive amygdala.

- Talk to people. Keeping your worries to yourself can compound them. Hashing through your concerns with a family member, friend, professional coach, or therapist can help put them in perspective and may allow you to come up with strategies to identify and neurologically respond to your sources of stress.

- Exercise, both physically and mentally. Do what works for you, whether it’s a run, a long walk, pumping iron, playing racquetball — anything that promotes physical release. Exercise your brain too. Engage in a project or activity that is mentally demanding. Personally, I like to garden and do online brain exercises. There’s nothing quite like yanking out weeds or hitting a new personal best at a cognitive exercise for me to notch a sense of accomplishment to counterbalance the unresolved issues driving my worry.

- Accept the uncertainty. Life is full of uncertainty. To paraphrase theologian Reinhold Niebuhr’s “Serenity Prayer”: Have the serenity to accept what you cannot change, the courage to change what you can, and the wisdom to tell one from the other.

And, please, be assured that you’ll make it through this election season.

Dr. Merzenich, professor emeritus, Department of Neuroscience, University of California San Francisco, disclosed ties with Posit Science. He is often credited with discovering lifelong plasticity, with being the first to harness plasticity for human benefit (in his co-invention of the cochlear implant), and with pioneering the field of plasticity-based computerized brain exercise. He is a Kavli Laureate in Neuroscience, and he has been honored by each of the US National Academies of Sciences, Engineering, and Medicine. He may be most widely known for a series of specials on the brain on public television. His current focus is BrainHQ, a brain exercise app.

A version of this article appeared on Medscape.com.

Once again, America is deeply divided before a national election, with people on each side convinced of the horrors that will be visited upon us if the other side wins.

’Tis the season — and regrettably, not to be jolly but to be worried.

As a neuroscientist, I am especially aware of the deleterious mental and physical impact of chronic worry on our citizenry. That’s because worry is not “all in your head.” We modern humans live in a world of worry which appears to be progressively growing.

Flight or Fight

Worry stems from the brain’s rather remarkable ability to foresee and reflexively respond to threat. Our “fight or flight” brain machinery probably arose in our vertebrate ancestors more than 300 million years ago. The fact that we have machinery akin to that possessed by lizards or tigers or shrews is testimony to its crucial contribution to our species’ survival.

As the phrase “fight or flight” suggests, a brain that senses trouble immediately biases certain body and brain functions. As it shifts into a higher-alert mode, it increases the energy supplies in our blood and supports other changes that facilitate faster and stronger reactions, while it shuts down less essential processes which do not contribute to hiding, fighting, or running like hell.

This hyperreactive response is initiated in the amygdala in the anterior brain, which identifies “what’s happening” as immediately or potentially threatening. The now-activated amygdala generates a response in the hypothalamus that provokes an immediate increase of adrenaline and cortisol in the body, and cortisol and noradrenaline in the brain. Both sharply speed up our physical and neurologic reactivity. In the brain, that is achieved by increasing the level of excitability of neurons across the forebrain. Depending on the perceived level of threat, an excitable brain will be just a little or a lot more “on alert,” just a little or a lot faster to respond, and just a little or a lot better at remembering the specific “warning” events that trigger this lizard-brain response.

Alas, this machinery was designed to be engaged every so often when a potentially dangerous surprise arises in life. When the worry and stress are persistent, the brain experiences a kind of neurologic “burn-out” of its fight versus flight machinery.

Dangers of Nonstop Anxiety and Stress

A consistently stressed-out brain turns down its production and release of noradrenaline, and the brain becomes less attentive, less engaged. This sets the brain on the path to an anxiety (and then a depressive) disorder, and, in the longer term, to cognitive losses in memory and executive control systems, and to emotional distortions that can lead to substance abuse or other addictions.

Our political distress is but one source of persistent worry and stress. Worry is a modern plague. The head counts of individuals seeking psychiatric or psychological health are at an all-time high in the United States. Near-universal low-level stressors, such as 2 years of COVID, insecurities about the changing demands of our professional and private lives, and a deeply divided body politic are unequivocally affecting American brain health.

The brain also collaborates in our body’s response to stress. Its regulation of hormonal responses and its autonomic nervous system’s mediated responses contribute to elevated blood sugar levels, to craving high-sugar foods, to elevated blood pressure, and to weaker immune responses. This all contributes to higher risks for cardiovascular and other dietary- and immune system–related disease. And ultimately, to shorter lifespans.

Strategies to Address Neurologic Changes Arising From Chronic Stress

There are many things you can try to bring your worry back to a manageable (and even productive) level.

- Engage in a “reset” strategy several times a day to bring your amygdala and locus coeruleus back under control. It takes a minute (or five) of calm, positive meditation to take your brain to a happy, optimistic place. Or use a mindfulness exercise to quiet down that overactive amygdala.

- Talk to people. Keeping your worries to yourself can compound them. Hashing through your concerns with a family member, friend, professional coach, or therapist can help put them in perspective and may allow you to come up with strategies to identify and neurologically respond to your sources of stress.

- Exercise, both physically and mentally. Do what works for you, whether it’s a run, a long walk, pumping iron, playing racquetball — anything that promotes physical release. Exercise your brain too. Engage in a project or activity that is mentally demanding. Personally, I like to garden and do online brain exercises. There’s nothing quite like yanking out weeds or hitting a new personal best at a cognitive exercise for me to notch a sense of accomplishment to counterbalance the unresolved issues driving my worry.

- Accept the uncertainty. Life is full of uncertainty. To paraphrase from Yale theologian Reinhold Niebuhr’s “Serenity Prayer”: Have the serenity to accept what you cannot help, the courage to change what you can, and the wisdom to recognize one from the other.

And, please, be assured that you’ll make it through this election season.

Dr. Merzenich, professor emeritus, Department of Neuroscience, University of California San Francisco, disclosed ties with Posit Science. He is often credited with discovering lifelong plasticity, with being the first to harness plasticity for human benefit (through his co-invention of the cochlear implant), and with pioneering the field of plasticity-based computerized brain exercise. He is a Kavli Laureate in Neuroscience, and he has been honored by each of the US National Academies of Sciences, Engineering, and Medicine. He may be most widely known for a series of public television specials on the brain. His current focus is BrainHQ, a brain exercise app.

A version of this article appeared on Medscape.com.

Customized Dermal Curette: An Alternative and Effective Shaving Tool in Nail Surgery

Practice Gap

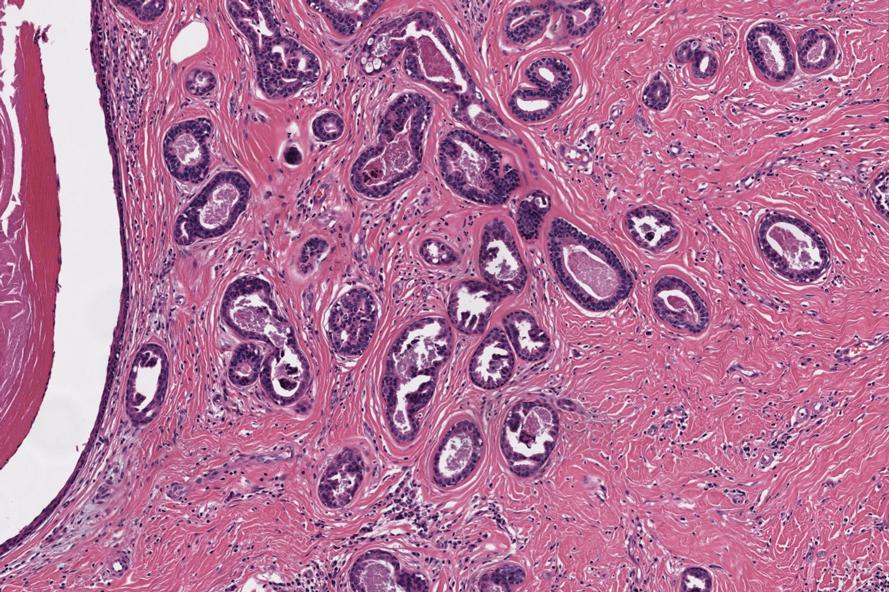

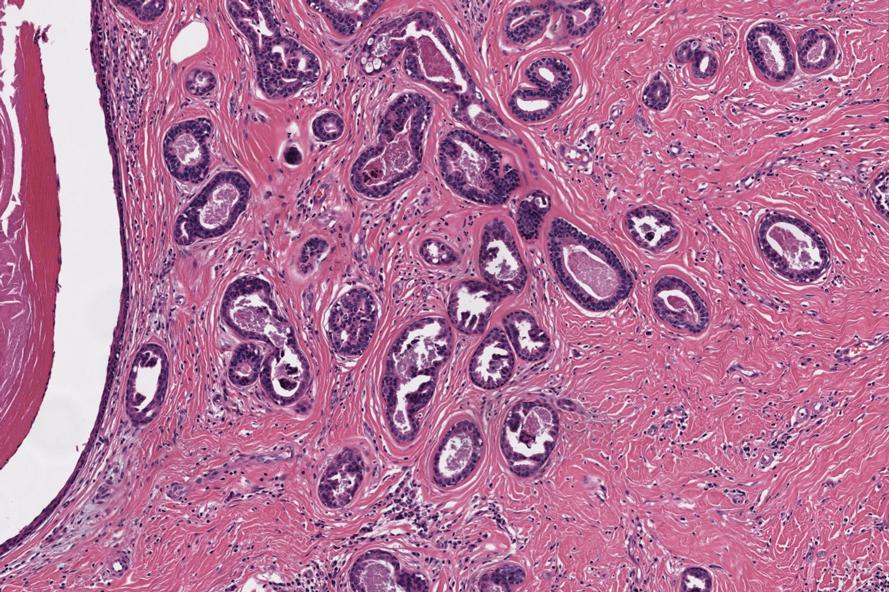

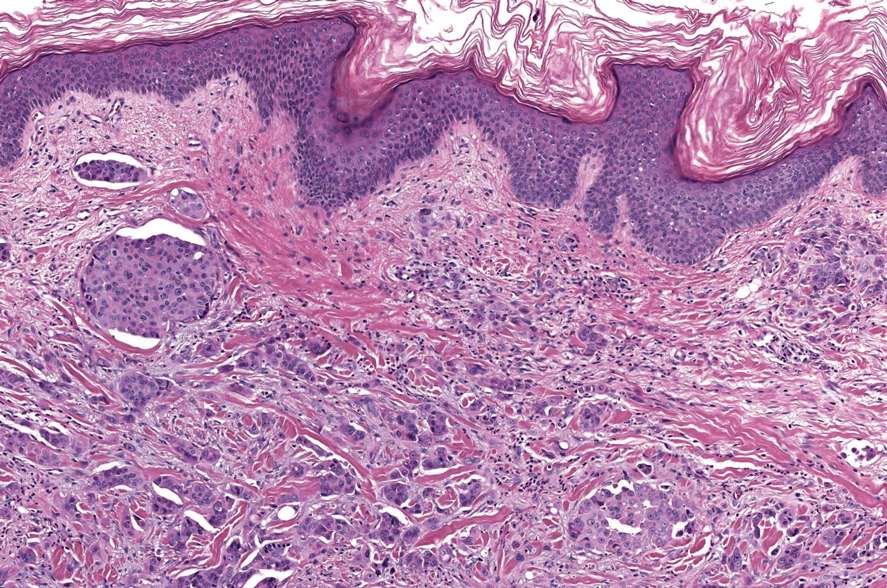

Longitudinal melanonychia (LM) is characterized by a dark brown, longitudinal, pigmented band on the nail unit, often caused by melanocytic activation or melanocytic hyperplasia in the nail matrix. Distinguishing benign from early malignant LM is crucial, but the two have similar clinical presentations.1 Hence, surgical excision of the pigmented nail matrix followed by histopathologic examination is a common procedure for managing LM and reducing the risk for delayed diagnosis of subungual melanoma.

Tangential matrix excision combined with the nail window technique has become a common and favored surgical strategy for managing LM.2 It is valued for minimizing the risk for severe permanent nail dystrophy and for effectively reducing postsurgical recurrence of pigmentation.

The procedure begins with the creation of a matrix window along the lateral edge of the pigmented band, followed by a careful lateral incision on each side of the nail fold. This approach allows complete exposure of the pigmented lesion. Next, the nail fold is separated from the dorsal surface of the nail plate to provide access to the pigmented nail matrix. Finally, the target pigmented area is excised with a scalpel.

Despite the recognized efficacy of this procedure, challenges arise, particularly when the pigmented matrix lesion is narrow. Holding the scalpel horizontally to achieve a precise excision can be demanding, making it difficult to remove the lesion completely and obtain the desired cosmetic outcome. Alternative tools are therefore needed that address these challenges while ensuring optimal surgical outcomes for patients with LM. We propose the customized dermal curette.

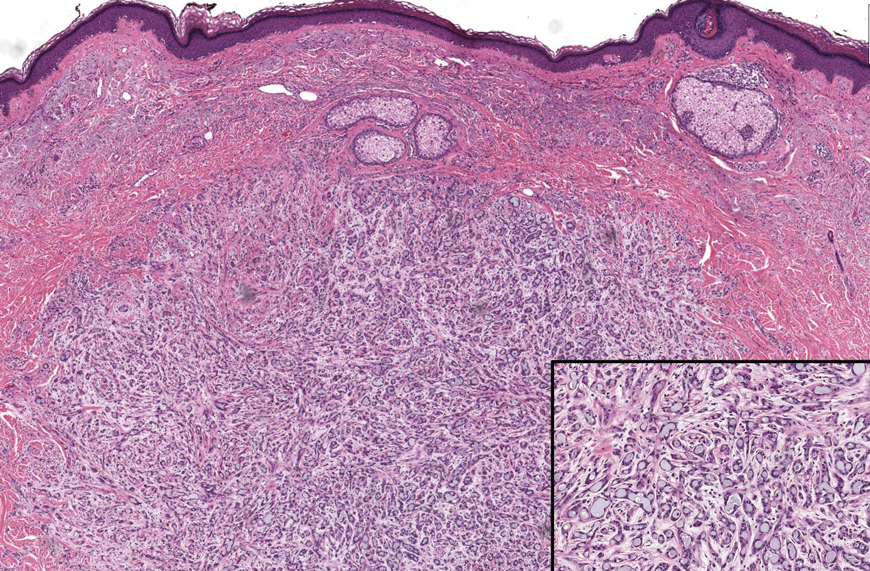

The Technique

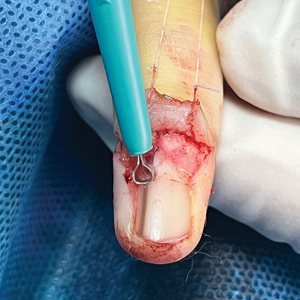

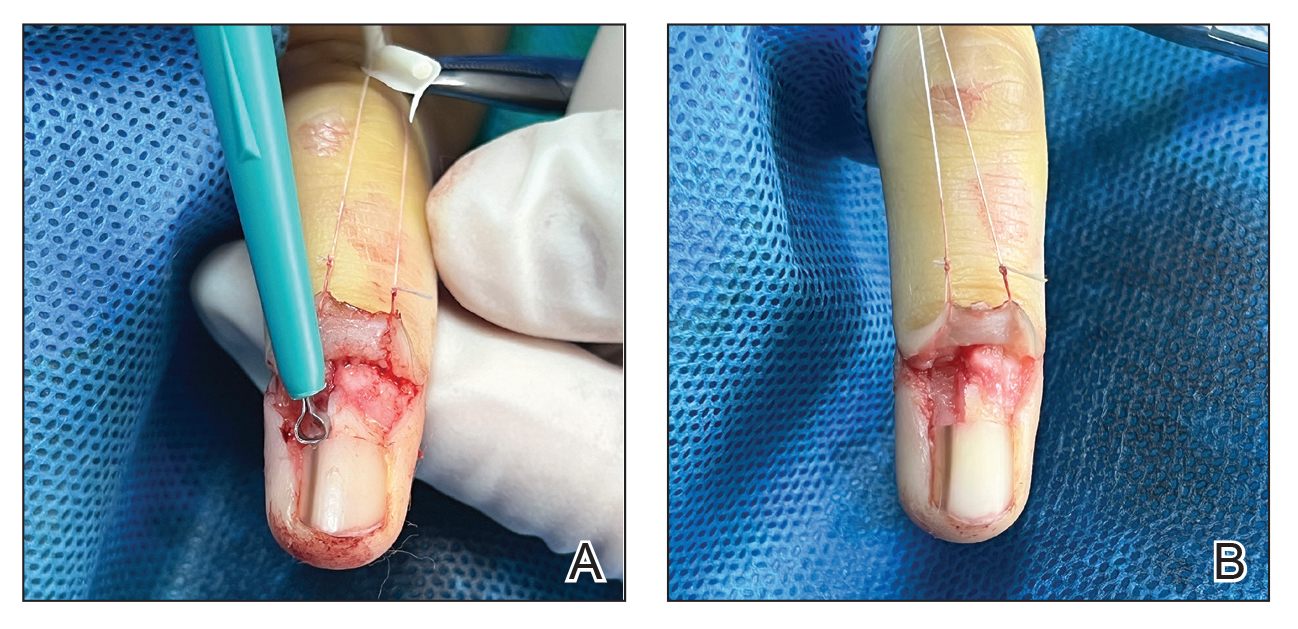

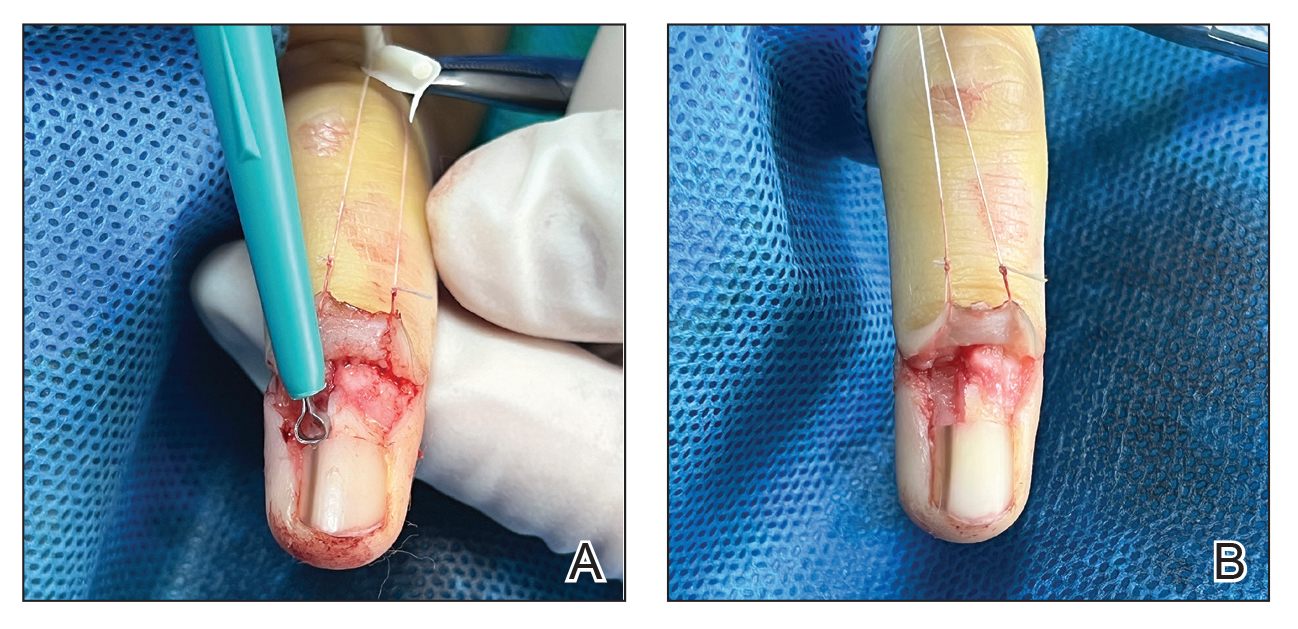

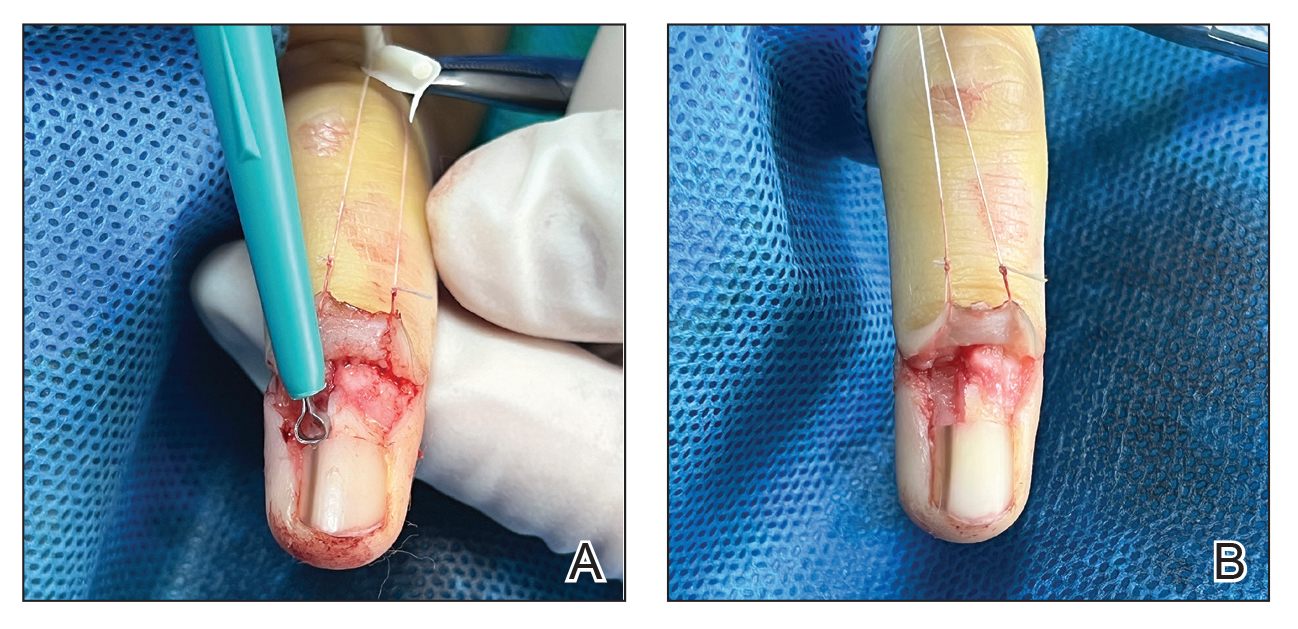

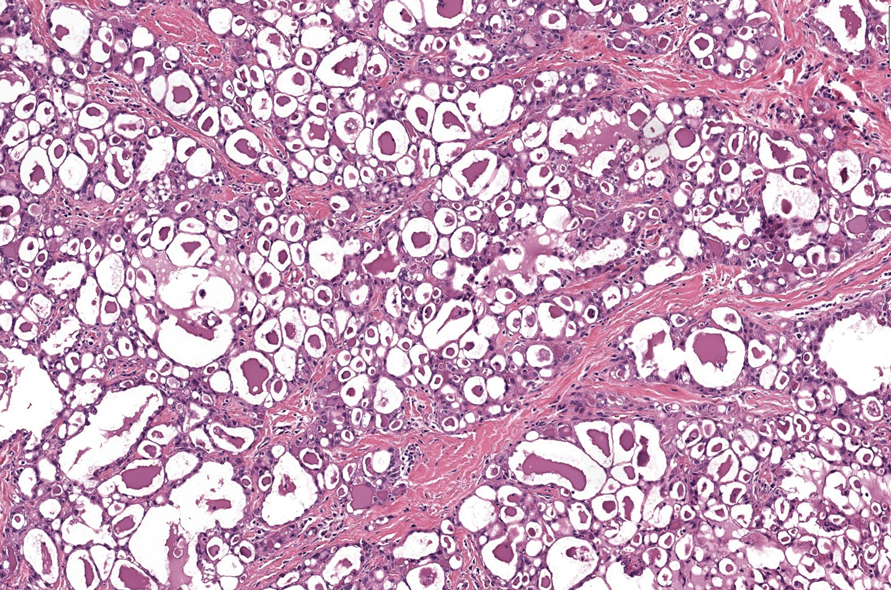

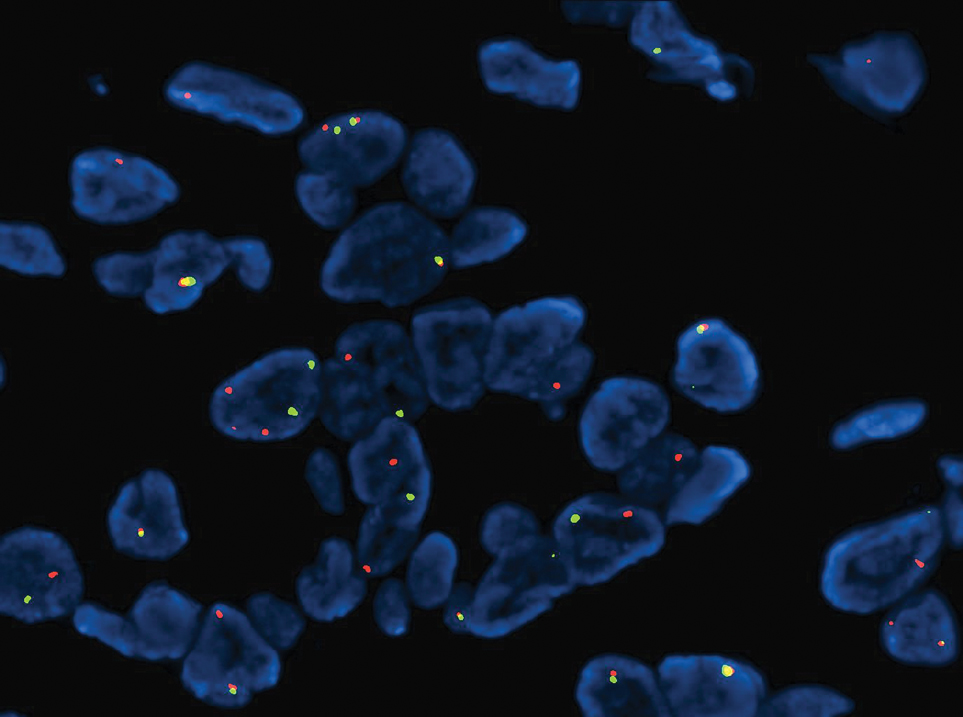

An improved curette is a practical solution for complete removal of the pigmented nail matrix. The instrument is made from a sterile disposable dermal curette with its top flattened using a needle holder (Figure 1). Termed the customized dermal curette, this device is a simple yet accurate tool for precise excision of pigmented lesions within the nail matrix. Because dermal curettes are available in various sizes, it can also be matched to pigmented lesions of different widths (Figure 2).

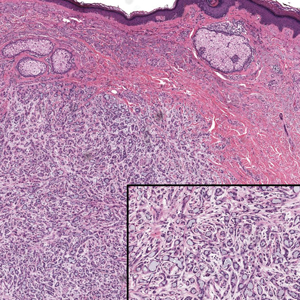

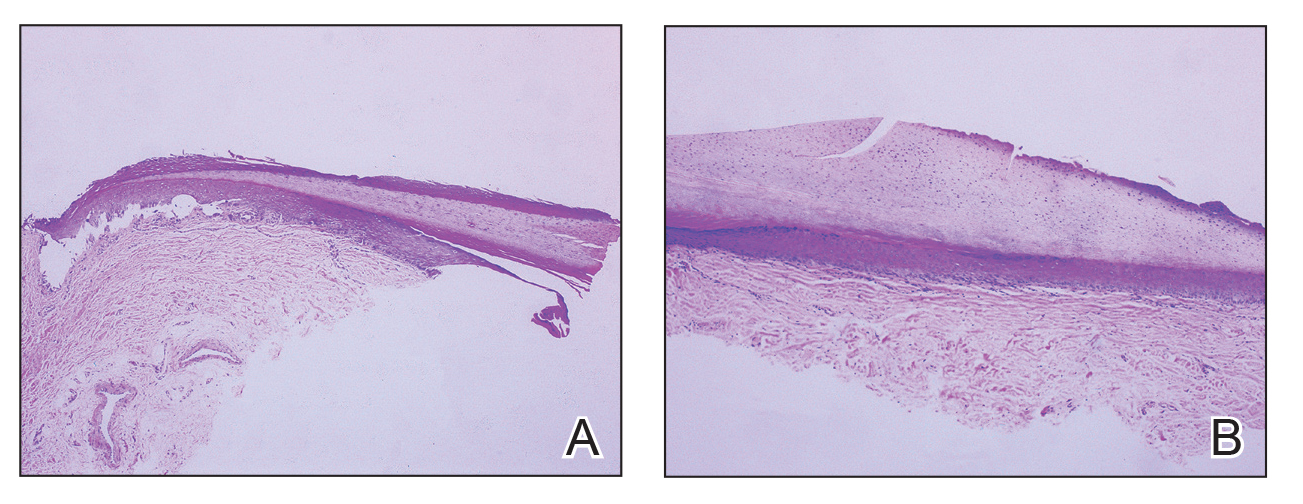

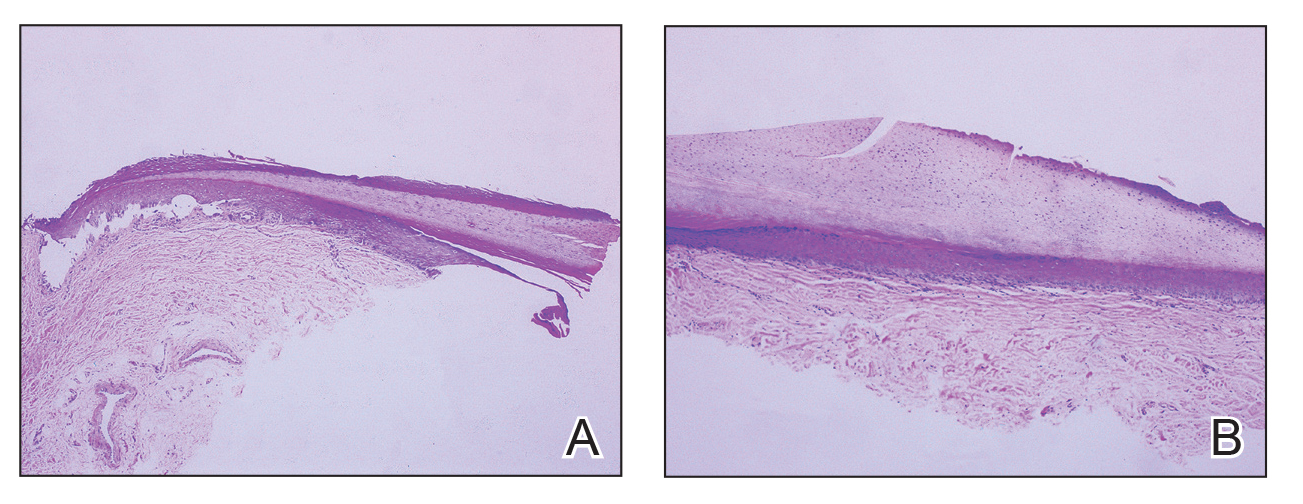

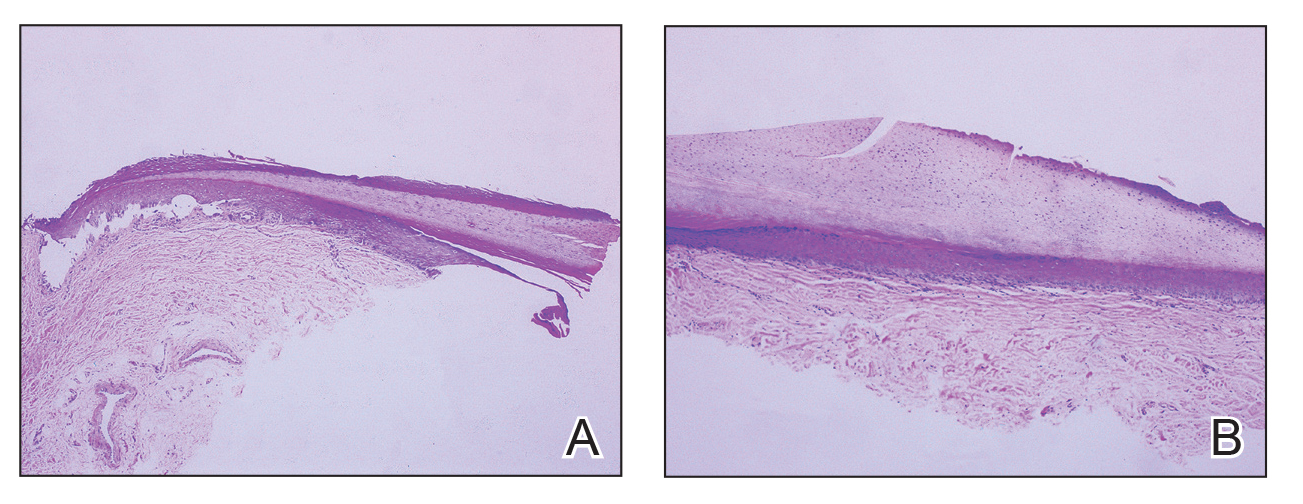

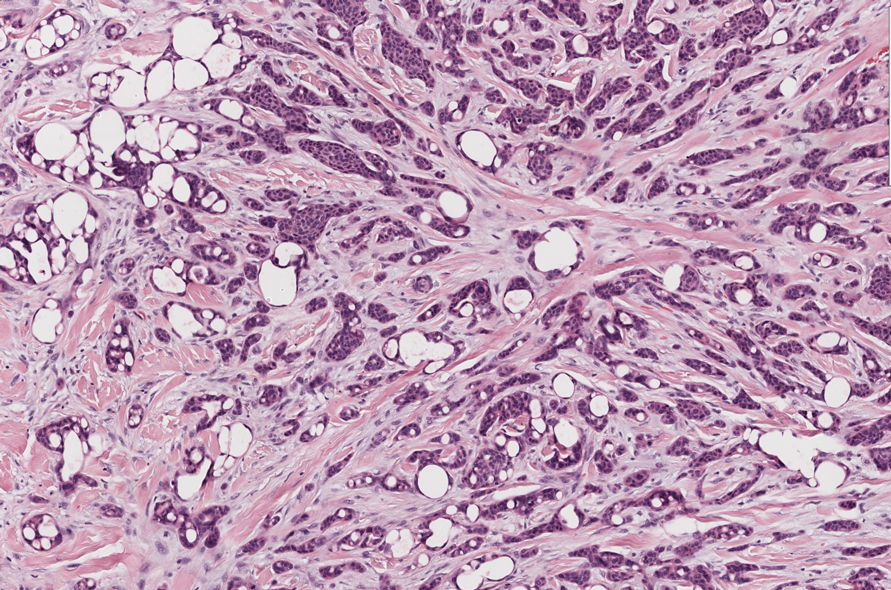

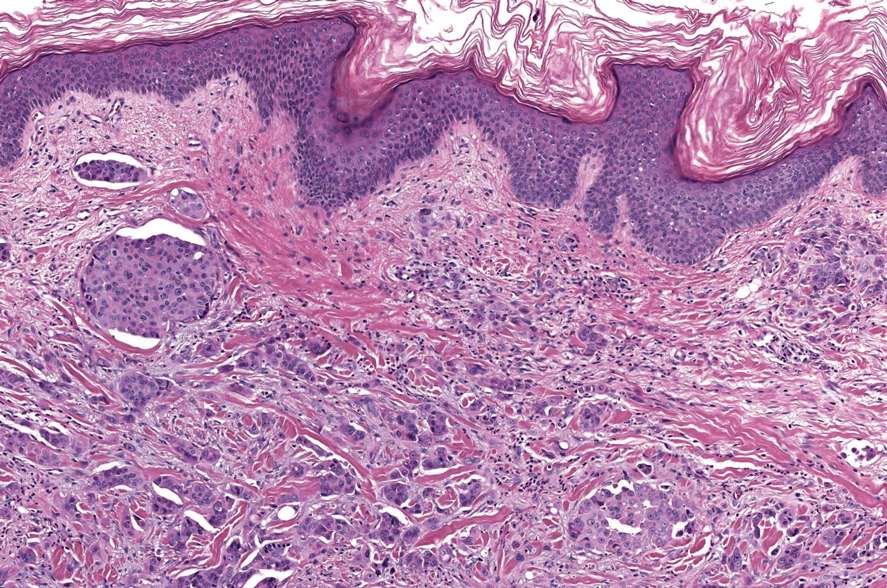

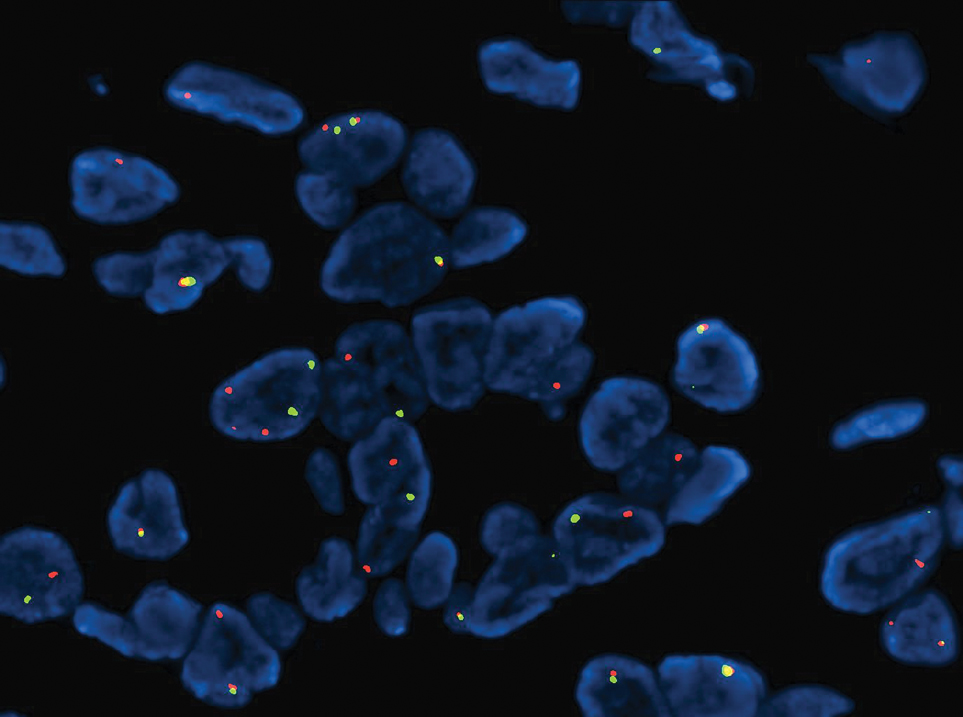

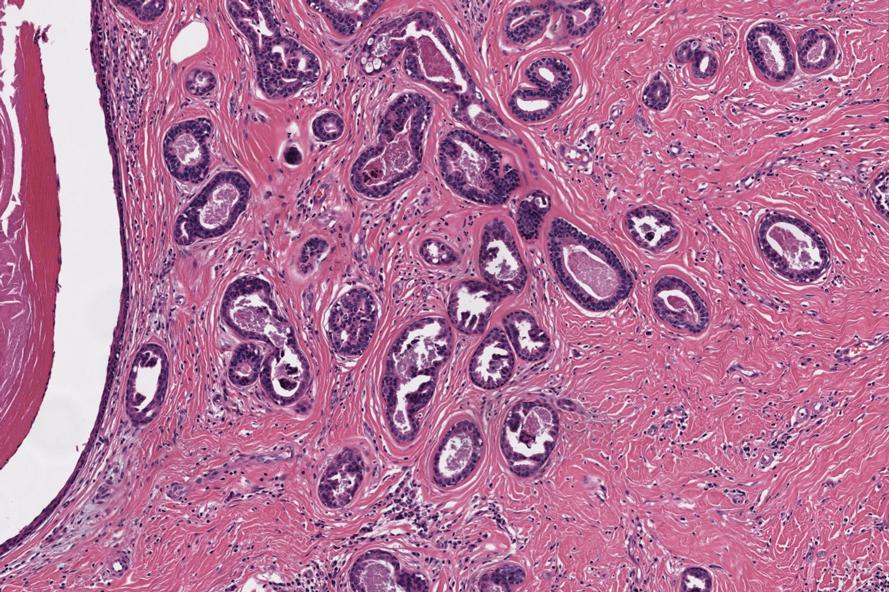

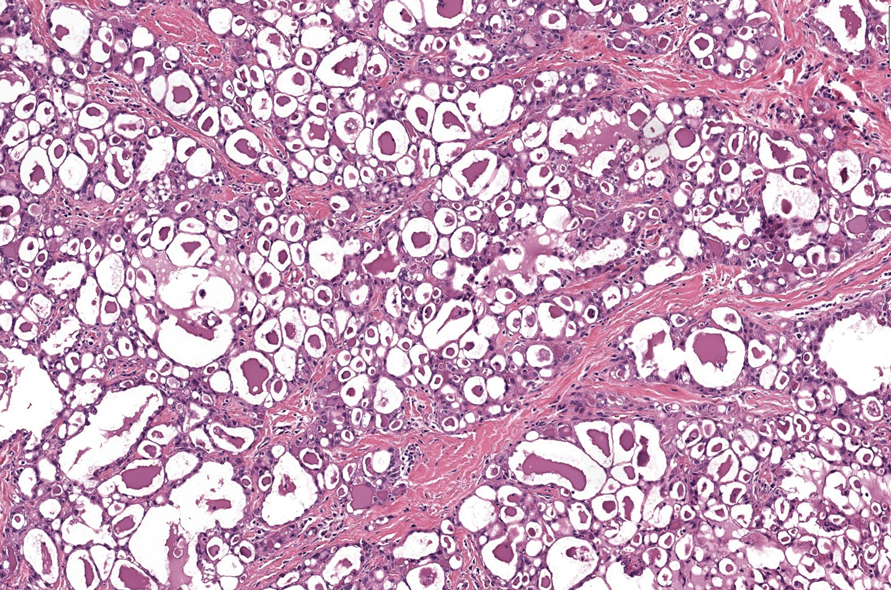

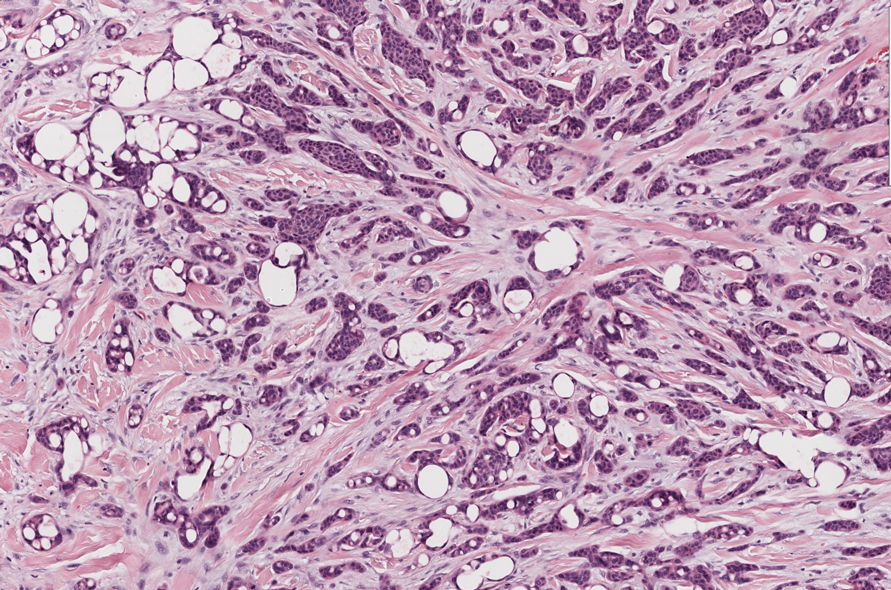

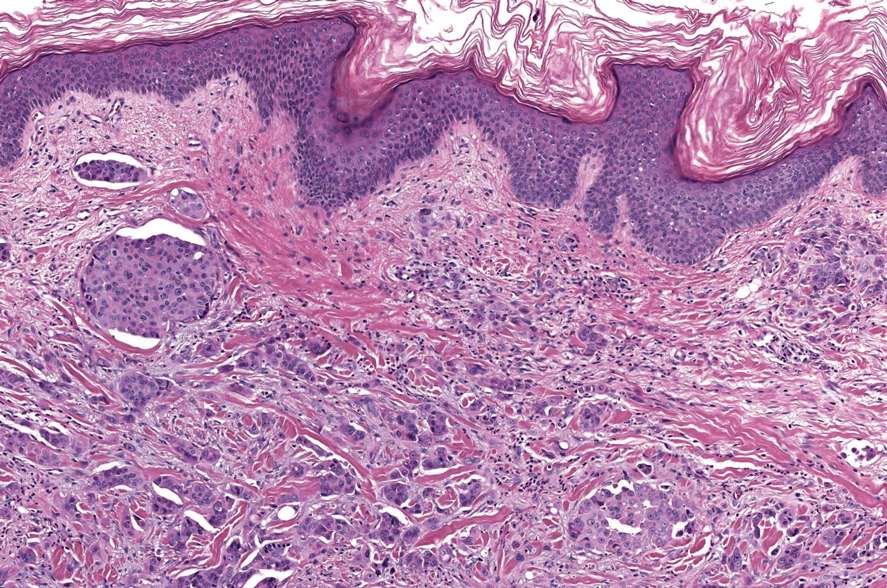

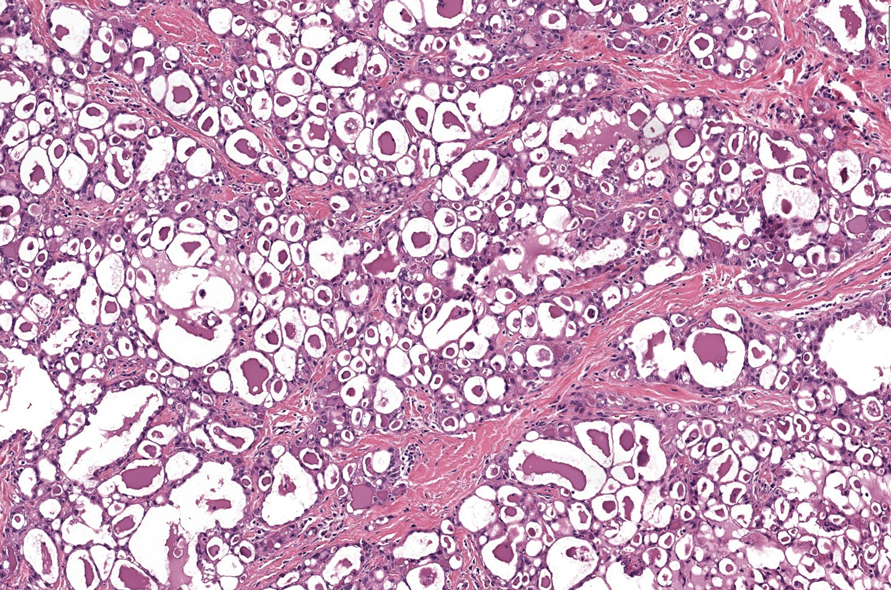

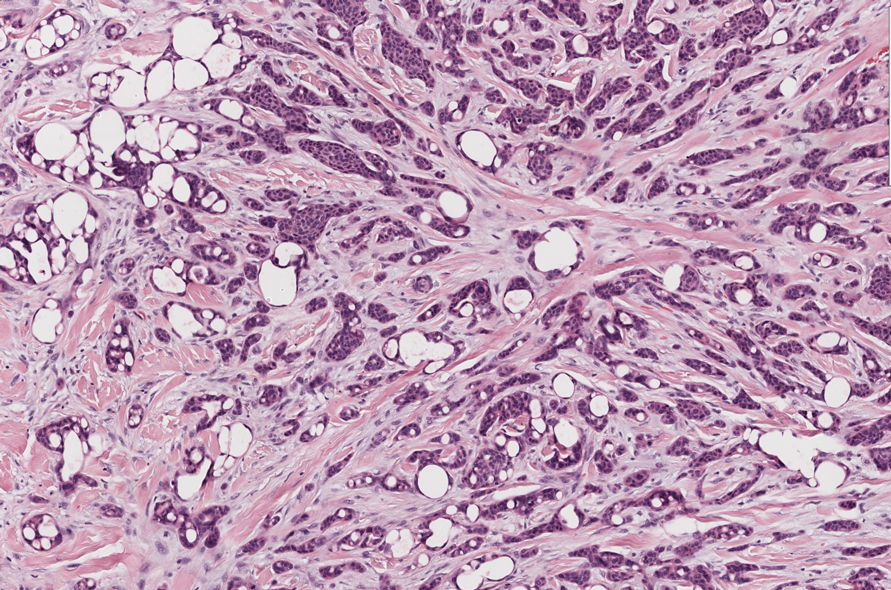

Histopathologically, we have found that the scalpel technique may lead to variable tissue removal, resulting in differences in tissue thickness, fragility, and completeness (Figure 3A). Conversely, the customized dermal curette consistently provides more accurate tissue excision, resulting in uniform tissue thickness and integrity (Figure 3B).

Practice Implications

Compared with the traditional scalpel, this modified tool offers distinct advantages. The customized dermal curette provides enhanced maneuverability and control during the procedure, improving the overall efficacy of the excision. It also allows more accurate and complete removal of pigmented bands, which reduces the risk for postoperative recurrence. Its simplicity, affordability, and ease of use make the customized dermal curette a promising alternative for tissue shaving, especially for narrow pigmented matrix lesions, thereby addressing a notable practice gap and enhancing patient care.

- Tan WC, Wang DY, Seghers AC, et al. Should we biopsy melanonychia striata in Asian children? a retrospective observational study. Pediatr Dermatol. 2019;36:864-868. doi:10.1111/pde.13934

- Zhou Y, Chen W, Liu ZR, et al. Modified shave surgery combined with nail window technique for the treatment of longitudinal melanonychia: evaluation of the method on a series of 67 cases. J Am Acad Dermatol. 2019;81:717-722. doi:10.1016/j.jaad.2019.03.065

FDA Approves Lymphir for R/R Cutaneous T-Cell Lymphoma

The immunotherapy is a reformulation of denileukin diftitox (Ontak), initially approved in 1999 for certain patients with persistent or recurrent cutaneous T-cell lymphoma. In 2014, the original formulation was voluntarily withdrawn from the US market. Citius acquired rights to market a reformulated product outside of Asia in 2021.

This is the first indication for Lymphir, which targets interleukin-2 receptors on malignant T cells.

This approval marks “a significant milestone” for patients with cutaneous T-cell lymphoma, a rare cancer, company CEO Leonard Mazur said in a press release announcing the approval. “The introduction of Lymphir, with its potential to rapidly reduce skin disease and control symptomatic itching without cumulative toxicity, is expected to expand the [cutaneous T-cell lymphoma] treatment landscape and grow the overall market, currently estimated to be $300-$400 million.”

Approval was based on the single-arm, open-label Study 302, which enrolled 69 patients who had received a median of four prior anticancer therapies. Patients received 9 mcg/kg daily on days 1 through 5 of 21-day cycles until disease progression or unacceptable toxicity.
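
The dosing schedule reduces to simple arithmetic: five daily doses of 9 mcg/kg give 45 mcg/kg per 21-day cycle. A minimal Python sketch for illustration only, not dosing guidance, assuming the dose scales linearly with body weight (as the mcg/kg unit implies) and that cycles run back to back with no delays; the 70 kg weight is a hypothetical example, and the function names are invented for this sketch.

```python
# Illustrative schedule arithmetic only; not dosing guidance.
DOSE_MCG_PER_KG_PER_DAY = 9      # daily dose reported for Study 302
DOSING_DAYS = range(1, 6)        # days 1 through 5 of each cycle
CYCLE_LENGTH_DAYS = 21


def dose_per_cycle_mcg_per_kg() -> int:
    """Weight-normalized dose accumulated over one 21-day cycle."""
    return DOSE_MCG_PER_KG_PER_DAY * len(DOSING_DAYS)


def dose_per_cycle_mcg(weight_kg: float) -> float:
    """Total micrograms per cycle, assuming the dose scales linearly with weight."""
    return dose_per_cycle_mcg_per_kg() * weight_kg


def is_dosing_day(treatment_day: int) -> bool:
    """True if a 1-based treatment day falls on days 1-5 of its cycle,
    assuming cycles run back to back with no delays (hypothetical)."""
    if treatment_day < 1:
        raise ValueError("treatment days are numbered from 1")
    day_in_cycle = (treatment_day - 1) % CYCLE_LENGTH_DAYS + 1
    return day_in_cycle in DOSING_DAYS


if __name__ == "__main__":
    print(dose_per_cycle_mcg_per_kg())   # 45 (mcg/kg per cycle)
    print(dose_per_cycle_mcg(70.0))      # 3150.0 for a hypothetical 70 kg patient
    print([d for d in range(1, 43) if is_dosing_day(d)])  # days 1-5 and 22-26
```

Real-world dosing depends on the product labeling, dose modifications, and treatment delays, none of which this sketch models.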

The objective response rate was 36.2%, including complete responses in 8.7% of patients. Responses lasted 6 months or longer in 52% of responders. More than 80% of patients had a decrease in skin tumor burden, and almost a third had clinically significant improvement in pruritus.
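
Because the trial was small, these percentages correspond to a handful of patients. A minimal Python sketch that backs out the whole-patient counts implied by the rounded percentages, assuming the response and complete-response rates use all 69 enrolled patients as the denominator: 36.2% corresponds to 25 patients and 8.7% to 6. The same arithmetic shows that the 52% duration figure cannot be a share of all 69 patients, since that would be about 36 durable responses among only 25 responders; it works out as 13 of 25 responders. The helper names are hypothetical.

```python
N_ENROLLED = 69  # patients in Study 302


def implied_count(percent: float, n: int = N_ENROLLED) -> int:
    """Whole number of patients closest to a reported percentage of n."""
    return round(percent / 100 * n)


def as_percent(count: int, n: int = N_ENROLLED) -> float:
    """Percentage rounded to one decimal place, as the article reports it."""
    return round(100 * count / n, 1)


if __name__ == "__main__":
    responders = implied_count(36.2)   # 25 (25/69 = 36.2%)
    complete = implied_count(8.7)      # 6 (6/69 = 8.7%)
    print(responders, as_percent(responders))
    print(complete, as_percent(complete))
    # 52% of all 69 patients would be about 36 durable responses, more than
    # the 25 responders, so the figure only works with responders as denominator.
    print(implied_count(52), implied_count(52, n=responders))  # 36 13
```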

Adverse events occurring in 20% or more of patients included increased transaminases, decreased albumin, decreased hemoglobin, nausea, edema, fatigue, musculoskeletal pain, rash, chills, constipation, pyrexia, and capillary leak syndrome.

Labeling carries a boxed warning for capillary leak syndrome. Other warnings include visual impairment, infusion reactions, hepatotoxicity, and embryo-fetal toxicity. Citius is under a postmarketing requirement to characterize the risk for visual impairment.

The company expects to launch the agent within 5 months.

A version of this article first appeared on Medscape.com.

Thyroid Hormone Balance Crucial for Liver Fat Reduction

TOPLINE:

Greater availability of peripheral tri-iodothyronine (T3), indicated by higher concentrations of free T3, total T3, and the T3/thyroxine (T4) ratio, is associated with higher liver fat content at baseline and a greater reduction in liver fat after a dietary intervention known to reduce liver fat.

METHODOLOGY:

- Systemic hypothyroidism and subclinical hypothyroidism have been proposed as independent risk factors for steatotic liver disease, but results in euthyroid individuals (those with normal thyroid function) have been conflicting.

- Researchers investigated the association between thyroid function and intrahepatic lipids in 332 euthyroid individuals aged 50-80 years who reported limited alcohol consumption and had at least one condition associated with unhealthy aging (eg, cardiovascular disease).

- The analysis drew on a sub-cohort from the NutriAct trial, in which participants were randomly assigned to either an intervention group (diet rich in unsaturated fatty acids, plant protein, and fiber) or a control group (following the German Nutrition Society recommendations).

- The relationship between changes in intrahepatic lipid content and thyroid hormone parameters was evaluated in 243 individuals with data available at 12 months.

TAKEAWAY:

- Higher levels of free T3 and T3/T4 ratio were associated with increased liver fat content at baseline (P = .03 and P = .01, respectively).

- After 12 months, both the intervention and control groups showed reductions in liver fat content, along with similar reductions in free T3, total T3, T3/T4 ratio, and free T3/free T4 ratio (all P < .01).

- Thyroid-stimulating hormone, T4, and free T4 levels remained stable in both groups during the intervention.

- Participants who maintained higher T3 levels during the dietary intervention had a greater reduction in liver fat content over 12 months, although the correlation was weak (Rho = −0.133; P = .039).
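
The 12-month association is reported as a Spearman rank correlation (Rho). As an illustration only, a minimal Python sketch of how such a coefficient is computed from paired measurements, using made-up numbers rather than study data, and of roughly how a Rho of −0.133 with the 243 analyzed participants maps to a P value. The normal approximation in the hypothetical `approx_two_sided_p` helper is an assumption: the article does not say which test the authors used, and the sketch gives about .037 rather than the reported .039, a small gap that plausibly reflects rounding of Rho and the choice of reference distribution.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho computed as the Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length sequences of at least 3 values")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sx * sy)


def approx_two_sided_p(rho: float, n: int) -> float:
    """Large-sample P value: t = rho*sqrt((n-2)/(1-rho^2)) referred to a
    standard normal. Using a t distribution with n-2 degrees of freedom
    instead would give a slightly larger P."""
    if not -1 < rho < 1:
        raise ValueError("rho must lie strictly between -1 and 1")
    t = rho * math.sqrt((n - 2) / (1 - rho ** 2))
    return math.erfc(abs(t) / math.sqrt(2))


if __name__ == "__main__":
    # Made-up pairs (not study data): T3 level vs 12-month change in liver fat.
    t3 = [4.1, 4.6, 5.0, 5.2, 5.9]
    delta_fat = [-0.5, -1.0, -0.8, -1.6, -2.0]
    print(spearman_rho(t3, delta_fat))      # about -0.9
    print(approx_two_sided_p(-0.133, 243))  # about 0.037
```

A rank-based coefficient is robust to outliers and to nonlinear but monotonic relationships, which is one common reason to prefer it for skewed laboratory and imaging measures.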

IN PRACTICE:

“A higher peripheral concentration of active THs [thyroid hormones] might reflect a compensatory mechanism in subjects with mildly increased IHL [intrahepatic lipid] content and early stages of MASLD [metabolic dysfunction–associated steatotic liver disease],” the authors wrote.

SOURCE:

The study was led by Miriam Sommer-Ballarini, Charité–Universitätsmedizin Berlin, Berlin, Germany. It was published online in the European Journal of Endocrinology.

LIMITATIONS:

Individuals younger than 50 years and those with severe hepatic disease, severe substance abuse, or active cancer were excluded, which may limit the generalizability of the findings. Because median intrahepatic lipid content in the cohort was only mildly elevated at baseline, the results may not apply to advanced stages of metabolic dysfunction–associated steatotic liver disease. The findings are also based on a specific dietary intervention and may not apply to other dietary patterns or populations.

DISCLOSURES:

The Deutsche Forschungsgemeinschaft and German Federal Ministry for Education and Research funded this study. Some authors declared receiving funding, serving as consultants, or being employed by relevant private companies.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

TOPLINE:

Greater availability of peripheral tri-iodothyronine (T3), indicated by higher concentrations of free T3, T3, and T3/thyroxine (T4) ratio, is associated with increased liver fat content at baseline and a greater liver fat reduction following a dietary intervention known to reduce liver fat.

METHODOLOGY:

- Systemic hypothyroidism and subclinical hypothyroidism are proposed as independent risk factors for steatotic liver disease, but there are conflicting results in euthyroid individuals with normal thyroid function.

- Researchers investigated the association between thyroid function and intrahepatic lipids in 332 euthyroid individuals aged 50-80 years who reported limited alcohol consumption and had at least one condition for unhealthy aging (eg, cardiovascular disease).

- The analysis drew on a sub-cohort from the NutriAct trial, in which participants were randomly assigned to either an intervention group (diet rich in unsaturated fatty acids, plant protein, and fiber) or a control group (following the German Nutrition Society recommendations).

- The relationship between changes in intrahepatic lipid content and thyroid hormone parameters was evaluated in 243 individuals with data available at 12 months.

TAKEAWAY:

- Higher levels of free T3 and T3/T4 ratio were associated with increased liver fat content at baseline (P = .03 and P = .01, respectively).

- After 12 months, both the intervention and control groups showed reductions in liver fat content, along with similar reductions in free T3, total T3, T3/T4 ratio, and free T3/free T4 ratio (all P < .01).

- Thyroid stimulating hormone, T4, and free T4 levels remained stable in either group during the intervention.

- Participants who maintained higher T3 levels during the dietary intervention experienced a greater reduction in liver fat content over 12 months (Rho = −0.133; P = .039).

IN PRACTICE:

“A higher peripheral concentration of active THs [thyroid hormones] might reflect a compensatory mechanism in subjects with mildly increased IHL [intrahepatic lipid] content and early stages of MASLD [metabolic dysfunction–associated steatotic liver disease],” the authors wrote.

SOURCE:

The study was led by Miriam Sommer-Ballarini, Charité–Universitätsmedizin Berlin, Berlin, Germany. It was published online in the European Journal of Endocrinology.

LIMITATIONS:

Participants younger than 50 years of age and with severe hepatic disease, severe substance abuse, or active cancer were excluded, which may limit the generalizability of the findings. Because the study cohort had only mildly elevated median intrahepatic lipid content at baseline, it may not be suited to address the advanced stages of metabolic dysfunction–associated steatotic liver disease. The study’s findings are based on a specific dietary intervention, which may not be applicable to other dietary patterns or populations.

DISCLOSURES:

The Deutsche Forschungsgemeinschaft and German Federal Ministry for Education and Research funded this study. Some authors declared receiving funding, serving as consultants, or being employed by relevant private companies.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

AHS White Paper Guides Treatment of Posttraumatic Headache in Youth

The American Headache Society (AHS) guidance document, the first of its kind, covers risk factors for prolonged recovery, along with pharmacologic and nonpharmacologic management strategies, and supports an emphasis on multidisciplinary care, lead author Carlyn Patterson Gentile, MD, PhD, attending physician in the Division of Neurology at Children’s Hospital of Philadelphia in Pennsylvania, and colleagues reported.

“There are no guidelines to inform the management of posttraumatic headache in youth, but multiple studies have been conducted over the past 2 decades,” the authors wrote in Headache. “This white paper aims to provide a thorough review of the current literature, identify gaps in knowledge, and provide a road map for [posttraumatic headache] management in youth based on available evidence and expert opinion.”

Clarity for an Underrecognized Issue

According to Russell Lonser, MD, professor and chair of neurological surgery at Ohio State University, Columbus, the white paper is important because it offers concrete guidance for health care providers who may be less familiar with posttraumatic headache in youth.

“It brings together all of the previous literature ... in a very well-written way,” Dr. Lonser said in an interview. “More than anything, it could reassure [providers] that they shouldn’t be hunting down potentially magical cures, and reassure them in symptomatic management.”

Meeryo C. Choe, MD, associate clinical professor of pediatric neurology at UCLA Health in Calabasas, California, said the paper also helps shine a light on what may be a more common condition than the public suspects.

“While the media focuses on the effects of concussion in professional sports athletes, the biggest population of athletes is in our youth population,” Dr. Choe said in a written comment. “Almost 25 million children participate in sports throughout the country, and yet we lack guidelines on how to treat posttraumatic headache which can often develop into persistent postconcussive symptoms.”

This white paper, she noted, builds on Dr. Gentile’s 2021 systematic review, introduces new management recommendations, and aligns with the latest consensus statement from the Concussion in Sport Group.

Risk Factors

The white paper first emphasizes the importance of early identification of youth at high risk for prolonged recovery from posttraumatic headache. Risk factors include female sex, adolescent age, a high number of acute symptoms following the initial injury, and social determinants of health.

“I agree that it is important to identify these patients early to improve the recovery trajectory,” Dr. Choe said.

Identifying these individuals quickly allows timely pharmacologic and nonpharmacologic intervention, which may mitigate persistent symptoms, Dr. Gentile and colleagues noted. Clinicians are encouraged to perform thorough initial assessments to identify these risk factors and to initiate early, personalized management plans.

Initial Management of Acute Posttraumatic Headache

For the initial management of acute posttraumatic headache, the white paper recommends a scheduled dosing regimen of simple analgesics. Ibuprofen at 10 mg/kg every 6-8 hours (maximum 600 mg per dose) combined with acetaminophen has the strongest evidence of efficacy. Provided the patient is clinically stable, this regimen should be started within 48 hours of the injury and continued on a scheduled basis for 3-10 days.

If effective, these medications can then be used as needed. The white paper cautions that analgesics must be used carefully, because overuse can lead to medication-overuse headache and complicate recovery.
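The ibuprofen dose in this regimen is a capped multiplication: 10 mg per kilogram of body weight per dose, up to 600 mg. The Python sketch below shows only that arithmetic. The function names are hypothetical, and the sketch does not model the 6- to 8-hour interval, the acetaminophen component, rounding to available formulations, or any clinical judgment.

```python
def ibuprofen_dose_mg(weight_kg: float, mg_per_kg: float = 10.0, max_dose_mg: float = 600.0) -> float:
    """Single-dose ibuprofen amount in mg: weight-based, capped at max_dose_mg."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    # 10 mg per kg of body weight, but never more than the per-dose ceiling.
    return min(weight_kg * mg_per_kg, max_dose_mg)


def weight_at_cap_kg(mg_per_kg: float = 10.0, max_dose_mg: float = 600.0) -> float:
    """Body weight at and above which the per-dose cap, not the mg/kg rate, applies."""
    return max_dose_mg / mg_per_kg
```

With the stated parameters, a 25-kg child works out to 250 mg per dose. The 600-mg ceiling takes over at 60 kg, so a 75-kg adolescent would also receive 600 mg rather than 750 mg.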

Secondary Treatment Options

In cases where first-line oral medications are ineffective, the AHS white paper outlines several secondary treatment options. These include acute intravenous therapies such as ketorolac, dopamine receptor antagonists, and intravenous fluids. Nerve blocks and oral corticosteroid bridges may also be considered.

The white paper stresses the importance of individualized treatment plans that consider the specific needs and responses of each patient, noting that the evidence supporting these approaches is primarily derived from retrospective studies and case reports.

“Patient preferences should be factored in,” said Sean Rose, MD, pediatric neurologist and codirector of the Complex Concussion Clinic at Nationwide Children’s Hospital, Columbus, Ohio.

Supplements and Preventive Measures

For adolescents and young adults at high risk of prolonged posttraumatic headache, the white paper suggests riboflavin and magnesium supplements. Small randomized clinical trials suggest that these supplements may speed recovery when started within 48 hours of injury and given for 1-2 weeks.

If significant headache persists after 2 weeks, a regimen of riboflavin 400 mg daily and magnesium 400-500 mg nightly can be trialed for 6-8 weeks, in line with recommendations for migraine prevention. Additionally, melatonin at a dose of 3-5 mg nightly for an 8-week course may be considered for patients experiencing comorbid sleep disturbances.

Targeted Preventive Therapy

The white paper emphasizes matching preventive therapy to the primary headache phenotype.

For instance, patients presenting with a migraine phenotype, or those with a personal or family history of migraines, may be most likely to respond to medications proven effective in migraine prevention, such as amitriptyline, topiramate, and propranolol.

“Most research evidence [for treating posttraumatic headache in youth] is still based on the treatment of migraine,” Dr. Rose pointed out in a written comment.

Dr. Gentile and colleagues recommend initiating preventive therapies 4-6 weeks post injury if headaches are not improving, occur more than 1-2 days per week, or significantly impact daily functioning.

Specialist Referrals and Physical Activity

Referral to a headache specialist is advised for patients who do not respond to first-line acute and preventive therapies. Specialists can offer advanced diagnostic and therapeutic options, the authors noted, ensuring a comprehensive approach to managing posttraumatic headache.

The white paper also recommends noncontact, sub–symptom threshold aerobic physical activity and activities of daily living after an initial 24- to 48-hour period of symptom-limited cognitive and physical rest. These activities may promote faster recovery and help patients gradually return to their normal routines.

“This has been a shift in the concussion treatment approach over the last decade, and is one of the most important interventions we can recommend as physicians,” Dr. Choe noted. “This is where pediatricians and emergency department physicians seeing children acutely can really make a difference in the recovery trajectory for a child after a concussion. ‘Cocoon therapy’ has been proven not only to not work, but be detrimental to recovery.”

Nonpharmacologic Interventions

Based on clinical assessment, nonpharmacologic interventions may also be considered, according to the white paper. These interventions include cervico-vestibular therapy, which addresses neck and balance issues, and cognitive-behavioral therapy, which helps manage the psychological aspects of chronic headache. Dr. Gentile and colleagues highlighted the potential benefits of a collaborative care model that incorporates these nonpharmacologic interventions alongside pharmacologic treatments, providing a holistic approach to posttraumatic headache management.

“Persisting headaches after concussion are often driven by multiple factors,” Dr. Rose said. “Multidisciplinary concussion clinics can offer multiple treatment approaches such as behavioral, physical therapy, exercise, and medication options.”

Unmet Needs

The white paper concludes by calling for high-quality prospective cohort studies and randomized, placebo-controlled trials to advance the understanding and treatment of posttraumatic headache in children.

Dr. Lonser, Dr. Choe, and Dr. Rose all agreed.

“More focused treatment trials are needed to gauge efficacy in children with headache after concussion,” Dr. Rose said.

Specifically, Dr. Gentile and colleagues underscored the need to standardize data collection using common data elements, which would make results easier to compare across studies and help in developing more effective treatments. In addition, research into the underlying pathophysiology of posttraumatic headache is crucial for identifying new therapeutic targets, as well as clinical and biological markers that can help personalize patient care.

They also stressed the importance of exploring how health disparities and social determinants of health affect posttraumatic headache outcomes, with the aim of developing interventions that are equitable and accessible to all patient populations.

The white paper was approved by the AHS and supported by National Institutes of Health/National Institute of Neurological Disorders and Stroke grant K23 NS124986. The authors disclosed relationships with Eli Lilly, Pfizer, Amgen, and others. The interviewees disclosed no conflicts of interest.
