Patients at risk for Barrett’s esophagus rarely screened

Adults with chronic gastroesophageal reflux disease (GERD) are at increased risk for Barrett’s esophagus (BE) – the precursor to esophageal adenocarcinoma (EAC) – but most don’t undergo recommended screening, new research shows.

Jennifer Kolb, MD, with the University of California, Los Angeles, and colleagues surveyed 472 adults with chronic GERD who qualify for BE screening and had a recent visit with their primary care provider.

In this diverse population of people at risk for BE and EAC, only 13% had ever been advised to undergo endoscopy to screen for BE and only 5% actually had a prior screening, the study notes.

“These results make it clear that screening is rarely done,” Dr. Kolb told this news organization.

The results of the survey are published online in the American Journal of Gastroenterology.

Concern high, understanding low

Esophageal cancer and BE have increased among middle-aged adults over roughly the past 5 years, and this increase is not because of better or more frequent screening, as reported previously by this news organization.

In fact, the majority of patients who develop EAC do not have a prior diagnosis of BE, which highlights the failure of current BE screening practices, Dr. Kolb and colleagues point out.

Professional gastroenterology society guidelines recommend screening for BE using upper endoscopy for at-risk individuals, which includes those with chronic GERD, along with other risk factors such as age older than 50 years, male sex, white race, smoking, obesity, and family history of BE or EAC.

In the current survey, most individuals said early detection of BE/EAC is important and leads to better outcomes, but most had poor overall knowledge of risk factors and indications for screening.

Only about two-thirds of respondents correctly identified BE risk factors, and only about 20% believed BE screening was necessary with GERD, the researchers note.

Roughly two-thirds of individuals wanted to prioritize BE screening and felt that getting an upper endoscopy would ease their concern.

Yet, 40% had no prior esophagogastroduodenoscopy. These individuals were less knowledgeable about BE/EAC risk and screening recommendations and identified more barriers to completing endoscopy.

“While minorities were most concerned about developing Barrett’s esophagus and cancer, they reported more barriers to screening compared to White participants,” Dr. Kolb said.

Addressing knowledge gaps

The primary care clinician is often the first line for patients with symptomatic acid reflux and the gateway for preventive cancer screening.

Yet, research has shown that primary care clinicians often have trouble identifying who should be screened for BE, and competing clinical issues make it challenging to implement BE screening.

“As gastroenterologists, we must partner with our primary care colleagues to help increase awareness of this lethal disease and improve recognition of risk factors so that eligible patients can be identified and referred for screening,” Dr. Kolb said.

Reached for comment, Seth Gross, MD, clinical chief of the division of gastroenterology and hepatology at New York University Langone Health, said the results “shed light on the fact that patients with GERD don’t have the knowledge of when they should get medical attention and possibly endoscopy.”

“We may need to do a better job of educating our colleagues and patients to know when to seek specialists to potentially get an endoscopy,” Dr. Gross said.

About 90% of esophageal cancers are diagnosed outside of surveillance programs, noted Prasad G. Iyer, MD, a gastroenterologist at Mayo Clinic in Rochester, Minn.

“Patients didn’t even know that they had Barrett’s [esophagus], so they were never under surveillance. They only come to attention after they have trouble with food sticking, and they can’t swallow solid food,” said Dr. Iyer, who wasn’t involved in the survey.

“Unfortunately, there are just so many cancers and so many issues that primary care providers have to deal with that I think this may not be getting the attention it deserves,” he said.

Access to endoscopy is also likely a barrier, Dr. Iyer noted.

“The waiting list may be several months, and I think providers may focus on other things,” he said.

Less-invasive screening options

Fear of endoscopy may be another issue.

In their survey, Dr. Kolb and colleagues found that 20% of respondents reported fear of discomfort with endoscopy as a barrier to completing screening.

But less-invasive screening options are increasingly available or in development.

This includes Cytosponge, a swallowable capsule containing a compressed sponge attached to a string. When withdrawn, the sponge contains esophageal cytology samples that can be used to identify biomarkers for BE.

In a guideline released last spring, the American College of Gastroenterology endorsed Cytosponge as a nonendoscopic BE screening modality, as published in the American Journal of Gastroenterology.

“The strength of the recommendation is conditional, but it’s the first time where [the ACG] is saying that this may be an option for people,” Dr. Gross said.

This research was funded by the American College of Gastroenterology. Dr. Kolb, Dr. Gross, and Dr. Iyer report no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM AMERICAN JOURNAL OF GASTROENTEROLOGY

FDA rejects bulevirtide for hepatitis D

The U.S. Food and Drug Administration (FDA) has declined to approve bulevirtide, Gilead Sciences’ drug for the treatment of hepatitis delta virus (HDV) infection and compensated liver disease.

In a complete response letter, the FDA voiced concerns over the production and delivery of bulevirtide, an investigational, first-in-class HDV entry inhibitor that received conditional approval in Europe in 2020.

The FDA did not request new studies to evaluate the safety and efficacy of bulevirtide.

As reported previously by this news organization, data from an ongoing phase 3 trial showed that after 48 weeks of treatment, almost half of those treated with bulevirtide achieved the combined primary endpoint of reduced or undetectable HDV RNA levels and normalized alanine aminotransferase levels.

Chronic HDV infection is the most severe form of viral hepatitis. It is associated with a poor prognosis and high mortality rates.

There are currently no approved treatments for HDV in the United States. Bulevirtide was granted breakthrough therapy and orphan drug designations by the FDA.

Merdad Parsey, MD, PhD, chief medical officer, Gilead Sciences, wrote in a news release that the company looks forward to “continuing our active discussions with FDA so that we may bring bulevirtide to people living with HDV in the U.S. as soon as possible.”

This is the second manufacturing-related complete response letter Gilead has received in the past 8 months. In March, the FDA rejected the long-acting HIV drug lenacapavir. That drug was approved in Europe and the United Kingdom in September.

A version of this article first appeared on Medscape.com.

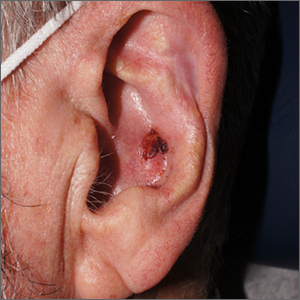

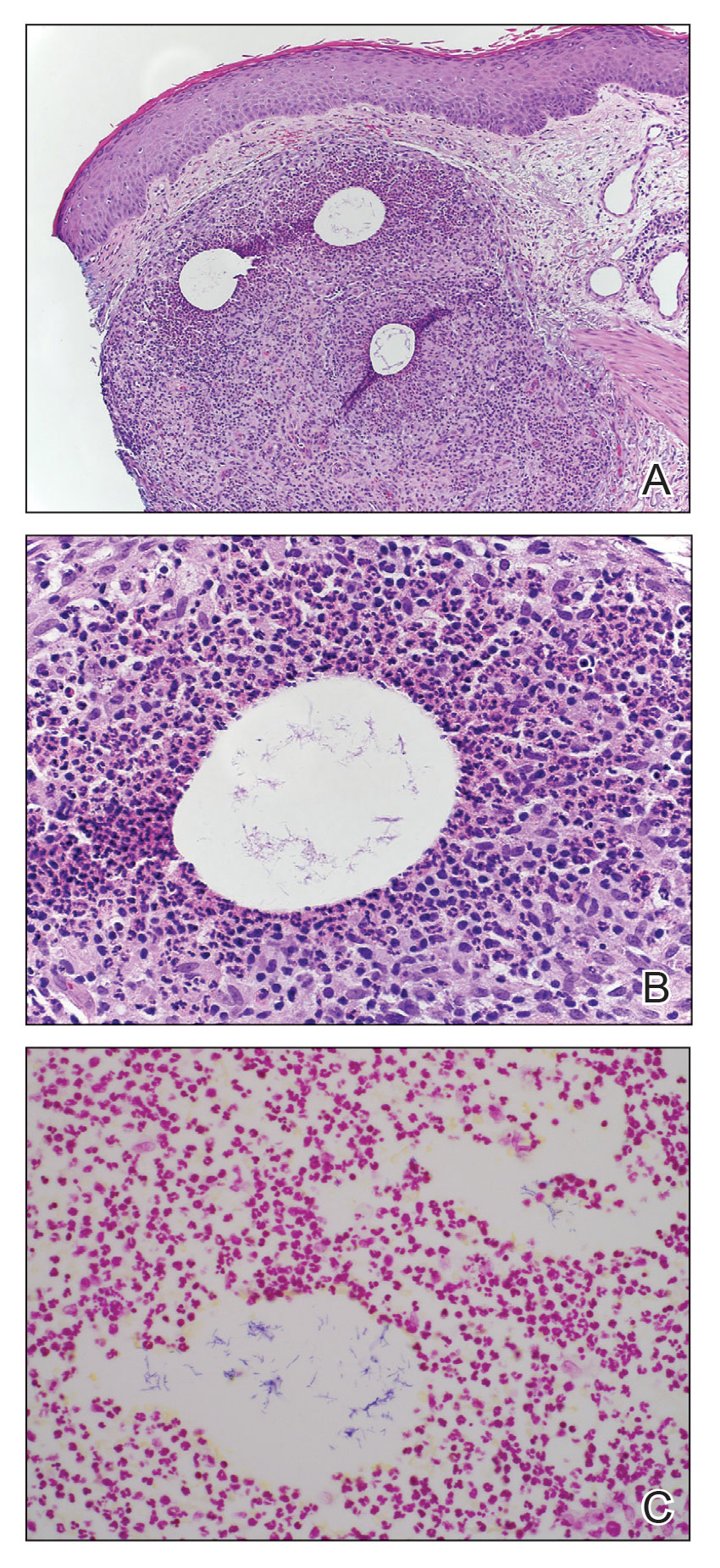

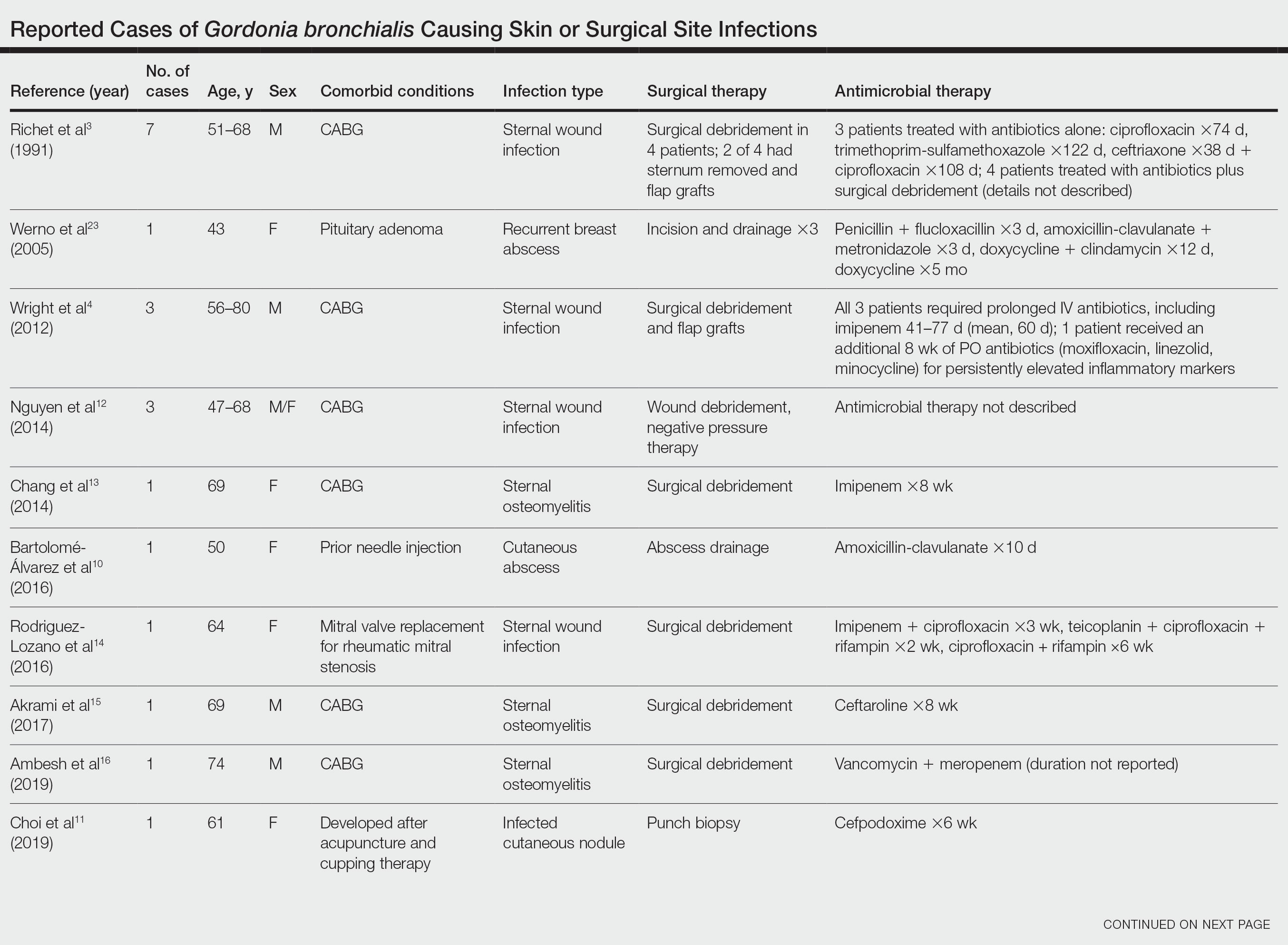

Crusty ear

The physician used a curette to perform a shave biopsy; pathology results indicated this was a poorly differentiated squamous cell carcinoma (SCC). Cutaneous SCC is the second most common skin cancer in the United States (after basal cell carcinoma) and increases in frequency with age and cumulative sun damage. It is the most common skin cancer in patients who are Black.

SCC is frequently found on the head and neck, including the ear, but is less commonly found within the conchal bowl (as seen here). Often, SCC manifests as a rough plaque or dome-shaped papule in a sun-damaged location, but it may occasionally manifest as an ulcer. While most patients are cured with outpatient surgery, an estimated 8000 patients will develop nodal metastasis and 3000 patients will die from the disease in the United States annually.1 Chronically immunosuppressed patients, such as organ transplant recipients, are at high risk.

This patient underwent Mohs microsurgery (MMS) and clear margins were achieved after 2 stages. The resulting defect was repaired with a full-thickness graft from the postauricular fold. MMS is an excellent technique for keratinocyte carcinomas (SCC and basal cell carcinomas) of the head and neck, recurrent skin cancers on the trunk and extremities, high-risk cancer subtypes, and tumors with indistinct clinical borders. Follow-up for patients with SCCs includes full skin exams every 6 months for 2 years.

The American Academy of Dermatology offers a complimentary Mohs Surgery Appropriate Use Criteria App that assists in determining when Mohs surgery is appropriate, based on multiple tumor characteristics.

Photos and text for Photo Rounds Friday courtesy of Jonathan Karnes, MD (copyright retained). Dr. Karnes is the medical director of MDFMR Dermatology Services, Augusta, ME.

1. Waldman A, Schmults C. Cutaneous squamous cell carcinoma. Hematol Oncol Clin North Am. 2019;33:1-12. doi:10.1016/j.hoc.2018.08.001

Major depression treatments boost brain connectivity

VIENNA – Treatment for major depressive disorder (MDD) boosts brain connectivity, new research suggests.

In a “repeat” MRI study, adult participants with MDD had significantly lower brain connectivity compared with their healthy peers at baseline – but showed significant improvement at the 6-week follow-up. These improvements were associated with decreases in symptom severity, independent of whether they received electroconvulsive therapy (ECT) or other treatment modalities.

“This means that the brain structure of patients with serious clinical depression is not as fixed as we thought, and we can improve brain structure within a short time frame [of] around 6 weeks,” lead author Jonathan Repple, MD, now professor of predictive psychiatry at the University of Frankfurt, Germany, said in a release.

“This gives hope to patients who believe nothing can change and they have to live with a disease forever because it is ‘set in stone’ in their brain,” he added.

The findings were presented at the 35th European College of Neuropsychopharmacology (ECNP) Congress.

‘Easily understandable picture’

Dr. Repple said in an interview that the investigators “were surprised to see how plastic” the brain could be.

“I’ve done a lot of imaging studies in the past where we looked at differences in depression vs. healthy controls, and then maybe had tiny effects. But we’ve never seen such a clear and easily understandable picture, where we see a deficit at the beginning and then a significant increase in whatever biomarker we were looking at, that even correlated with how successful the treatment was,” he said.

Dr. Repple noted that “this is the thing everyone is looking for when we’re talking about a biomarker: That we see this exact pattern” – and it is why they are so excited about the results.

However, he cautioned that the study included a “small sample” and the results need to be independently replicated.

“If this can be replicated, this might be a very good target for future intervention studies,” Dr. Repple said.

The investigators noted that altered brain structural connectivity has been implicated before in the pathophysiology of MDD.

However, it is not clear whether these changes are stable over time and indicate a biological predisposition, or are markers of current disease severity and can be altered by effective treatment.

To investigate further, the researchers used gray matter T1-weighted MRI to define nodes in the brain and diffusion-weighted imaging (DWI)-based tractography to determine connections between the nodes, to create a structural connectome or white matter network.

They performed assessments at baseline and at 6 weeks’ follow-up in 123 participants diagnosed with current MDD and receiving inpatient treatment, and 55 participants who acted as the healthy controls group.

Among the patients with MDD, 56 were treated with ECT and 67 received other antidepressant care, including psychological therapy or medications. Some patients had received all three treatment modalities.

Significant interactions

Results showed a significant interaction by group and time between the baseline and 6-week follow-up assessments (P < .05).

This was partly driven by the MDD group having a significantly lower connectivity strength at baseline than the healthy controls group (P < .05).

It was also partly driven by patients showing a significant improvement in connectivity strength between the baseline and follow-up assessments (P < .05), a pattern that was not seen in the nonpatients.

This increase in connectivity strength was associated with a significant decrease in depression symptom severity (P < .05). This was independent of the treatment modality, indicating that it was not linked to the use of ECT.

Dr. Repple acknowledged the relatively short follow-up period of the study, and added that he is not aware of longitudinal studies of the structural connectome with a longer follow-up.

He pointed out that the structural connectivity of the brain decreases with age, but there have been no studies that have assessed patients with depression and “measured the same person again after 2, 4, 6, or 8 years.”

Dr. Repple reported that the investigators will be following up with their participants, “so hopefully in a few years we’ll have more information on that.

“One thing I also need to stress is that, when we’re looking at the MRI brain scans, we see an increase in connectivity strength, but we really can’t say what the molecular mechanisms behind it are,” he said. “This is a black box for us.”

Several unanswered questions

Commenting in the release, Eric Ruhe, MD, PhD, Radboud University Medical Center, Nijmegen, the Netherlands, said this was a “very interesting and difficult study to perform.”

However, Dr. Ruhe, who was not involved in the research, told this news organization that it is “very difficult to connect the lack of brain connectivity to the patient symptomatology because there is a huge gap between them.”

The problem is that, despite “lots of evidence” that they are effective, “we currently don’t know how antidepressant therapies work” in terms of their underlying mechanisms of action, he said.

“We think that these types of therapies all modulate the plasticity of the brain,” said Dr. Ruhe. “What this study showed is there are changes that you can detect even in 6 weeks,” although they may have been observed even sooner with a shorter follow-up.

He noted that big questions are whether the change is specific to the treatment given, and “can you modulate different brain network dysfunctions with different treatments?”

Moreover, he wondered if a brain scan could indicate which type of treatment should be used. “This is, of course, very new and very challenging, and we don’t know yet, but we should be pursuing this,” Dr. Ruhe said.

Another question is whether or not the brain connectivity changes shown in the study represent a persistent change – “and whether this is a persistent change that is associated with a consistent and persistent relief of depression.

“Again, this is something that needs to be followed up,” said Dr. Ruhe.

No funding was declared. The study authors and Dr. Ruhe report no relevant financial relationships.

A version of this article first appeared on Medscape.com.

AT ECNP 2022

Studies provide compelling momentum for mucosal origins hypothesis of rheumatoid arthritis

A newly discovered strain of bacteria could play a role in the development of rheumatoid arthritis, according to findings recently published in Science Translational Medicine.

Mice colonized with a strain of Subdoligranulum bacteria in their gut – a strain previously unidentified but now named Subdoligranulum didolesgii – developed joint swelling and inflammation as well as antibodies and T-cell responses similar to what is seen in RA, researchers reported.

“This was the first time that anyone has observed arthritis developing in a mouse that was not otherwise immunologically stimulated with an adjuvant of some kind, or genetically manipulated,” said Kristine Kuhn, MD, PhD, associate professor of rheumatology at the University of Colorado at Denver, Aurora, who led a team of researchers that also included investigators from Stanford (Calif.) University and Benaroya Research Institute in Seattle.

The findings offer the latest evidence – and perhaps the most compelling evidence – for the mucosal origins hypothesis, the idea that rheumatoid arthritis can start with an immune response somewhere in the mucosa because of environmental interactions and then become systemic, resulting in symptoms in the joints. Anti-citrullinated protein antibodies (ACPA), hallmarks of RA, have been found at mucosal surfaces in the periodontium and the lungs, and there have been reports of them in the intestine and cervicovaginal mucosa as well.

The latest findings that implicate the new bacterium build on previous findings in which people at risk of RA, but without symptoms yet, had an expansion of B cells producing immunoglobulin A (IgA), an antibody found in the mucosa. A closer look at these B cells, using variable region sequencing, found that they arose from a family that includes both IgA and IgG members. Because IgG antibodies are systemic, this suggested a kind of evolution from an IgA-based, mucosal immune response to one that is systemic and could target the joints.

Researchers mixed monoclonal antibodies from these B cells with a pool of bacteria from the stool of a broad population of people, and then pulled out the bacteria bound by these antibodies, and sequenced them. They found that the antibodies had bound almost exclusively to Ruminococcaceae and Lachnospiraceae.

They then cultured the stool of an individual at risk of developing RA and ended up with five isolates within Ruminococcaceae – “all of which belonged to the Subdoligranulum genus,” Dr. Kuhn said. When they sequenced these, they found that they had a new strain, which was named by Meagan Chriswell, an MD-PhD candidate and member of the Cherokee Nation of Oklahoma, who chose a term based on the Cherokee word for rheumatism.

Researchers at Benaroya then mixed this strain with T cells of people with RA, and those of controls, and only the T cells of those with RA were stimulated by the bacterium, they found.

“When intestines of germ-free mice were colonized with the strain, we found that they were getting arthritis,” Dr. Kuhn said. Photos of the joints show a striking contrast between the swollen joints of the mice given Subdoligranulum didolesgii and those injected with Prevotella copri, another strain suspected of having a link to RA, as well as with another Subdoligranulum strain and a sterile medium. Dr. Kuhn noted that the P. copri strain did not come from an RA-affected individual.

“We thought that our results closed the loop nicely to show that these immune responses truly were toward the Subdoligranulum, and also stimulating arthritis,” she said.

The researchers then assessed the prevalence of the strain in people at risk for RA or with RA, and in controls. They found it in 17% of those with or at risk for RA but didn’t see it at all in the healthy control population.

Dr. Kuhn said that she and her research team are now examining the prevalence of the strain in a larger population and investigating its link with RA further.

“Does it really associate with the development of immune responses and the development of rheumatoid arthritis?” she said.

Potential etiologic role of P. copri

Another paper, published in Arthritis & Rheumatology by some of the same investigators a week before the study describing the Subdoligranulum findings, tried to ascertain the point at which individuals might develop antibodies to P. copri, which for about a decade has been suspected of having a link to the development of RA.

They found that those with early RA had higher median values of IgG anti–P. copri (Pc) antibodies, compared with matched controls. People with established RA also had higher values of IgA anti-Pc antibodies. Those with ACPA, but not rheumatoid factor (RF), showed a trend toward higher IgG anti-Pc antibodies. Those who were ACPA-positive and RF-positive had significantly increased levels of IgA anti-Pc antibodies and a trend toward higher levels of IgG anti-Pc antibodies, compared with matched controls.

The findings, according to the researchers, “support a potential etiologic role for this microorganism in both RA preclinical evolution and the subsequent pathogenesis of synovitis.”

Dr. Kuhn and others in the field say it’s likely that many microbes play a role in the development of RA, and that the P. copri findings only add evidence of that relationship.

“Maybe the bacteria are involved at different parts of the pathway, and maybe they’re involved in triggering different parts of the immune responses,” she said. “Those are all to be determined.”

Dan Littman, MD, PhD, professor of rheumatology at New York University, who wrote a commentary reflecting on the findings of the Subdoligranulum study, said the results are “another piece of data” adding to the evidence base for the mucosal origins hypothesis.

“It’s by no means proven that this is the way pathogenesis in RA can occur, but it’s certainly a very solid study,” said Dr. Littman, who with colleagues published findings in 2013 linking P. copri to RA. “What makes it most compelling is that they seem to be able to show some evidence of causality in the mouse model.”

Before the findings could lead to therapy, he said, more evidence is needed to establish a causal link and to clarify the mechanism at work, such as whether this is something that occurs at the outset of disease or is something that “fuels the disease” by continually activating immune cells contributing to RA.

“If it’s only something that’s involved in the initiation of the disease, you need to catch it very early,” he said. “But if it’s something that continues to provide fuel for the disease, you may be able to catch it later and still be effective. Those are really critical items.”

Eventually, if these questions are answered, bacteriophages could be developed to snuff out problematic strains, or the regulatory response could be targeted to prevent the activation of the B cells that give rise to autoimmunity, he suggested.

“There are multiple steps to get to a therapeutic here, and I think we’re still a long ways from that,” he said. Still, he said, “I think it’s an important paper because it will encourage more people to look at this mechanism more closely and determine whether it really is representative of what happens in a lot of RA patients.”

The study in Science Translational Medicine was supported by grants from the National Institutes of Health, a Pfizer ASPIRE grant, and a grant from the Rheumatology Research Foundation. The Arthritis & Rheumatology study was supported in part by grants from the National Institutes of Health; the American College of Rheumatology Innovative Grant Program; the Ounsworth-Fitzgerald Foundation; Mathers Foundation; English, Bonter, Mitchell Foundation; Littauer Foundation; Lillian B. Davey Foundation; and the Eshe Fund. None of the researchers in either study had relevant financial disclosures. Dr. Littman is scientific cofounder and member of the scientific advisory board of Vedanta Biosciences, which studies microbiota therapeutics.

A newly discovered strain of bacteria could play a role in the development of rheumatoid arthritis, according to findings recently published in Science Translational Medicine.

Mice colonized with a strain of Subdoligranulum bacteria in their gut – a strain previously unidentified but now named Subdoligranulum didolesgii – developed joint swelling and inflammation as well as antibodies and T-cell responses similar to what is seen in RA, researchers reported.

“This was the first time that anyone has observed arthritis developing in a mouse that was not otherwise immunologically stimulated with an adjuvant of some kind, or genetically manipulated,” said Kristine Kuhn, MD, PhD, associate professor of rheumatology at the University of Colorado at Denver, Aurora, who led a team of researchers that also included investigators from Stanford (Calif.) University and Benaroya Research Institute in Seattle.

The findings offer the latest evidence – and perhaps the most compelling evidence – for the mucosal origins hypothesis, the idea that rheumatoid arthritis can start with an immune response somewhere in the mucosa because of environmental interactions, and then becomes systemic, resulting in symptoms in the joints. Anti-citrullinated protein antibodies (ACPA), hallmarks of RA, have been found at mucosal surfaces in the periodontium and the lungs, and there have been reports of them in the intestine and cervicovaginal mucosa as well.

The latest findings that implicate the new bacterium build on previous findings in which people at risk of RA, but without symptoms yet, had an expansion of B cells producing immunoglobulin A (IgA), an antibody found in the mucosa. A closer look at these B cells, using variable region sequencing, found that they arose from a family that includes both IgA and IgG members. Because IgG antibodies are systemic, this suggested a kind of evolution from an IgA-based, mucosal immune response to one that is systemic and could target the joints.

Researchers mixed monoclonal antibodies from these B cells with a pool of bacteria from the stool of a broad population of people, and then pulled out the bacteria bound by these antibodies, and sequenced them. They found that the antibodies had bound almost exclusively to Ruminococcaceae and Lachnospiraceae.

They then cultured the stool of an individual at risk of developing RA and ended up with five isolates within Ruminococcaceae – “all of which belonged to the Subdoligranulum genus,” Dr. Kuhn said. When they sequenced these, they found that they had a new strain, which was named by Meagan Chriswell, an MD-PhD candidate and member of the Cherokee Nation of Oklahoma, who chose a term based on the Cherokee word for rheumatism.

Researchers at Benaroya then mixed this strain with T cells of people with RA, and those of controls, and only the T cells of those with RA were stimulated by the bacterium, they found.

“When intestines of germ-free mice were colonized with the strain, we found that they were getting arthritis,” Dr. Kuhn said. Photos of the joints show a striking contrast between the swollen joints of the mice given Subdoligranulum didolesgii and the joints of those injected with Prevotella copri, another strain suspected of having a link to RA, as well as those given another Subdoligranulum strain or sterile media. Dr. Kuhn noted that the P. copri strain did not come from an RA-affected individual.

“We thought that our results closed the loop nicely to show that these immune responses truly were toward the Subdoligranulum, and also stimulating arthritis,” she said.

The researchers then assessed the prevalence of the strain in people at risk for RA or with RA, and in controls. They found it in 17% of those with or at risk for RA but didn’t see it at all in the healthy control population.

Dr. Kuhn and her research team, she said, are now looking at the prevalence of the strain in a larger population and doing more investigating into the link with RA.

“Does it really associate with the development of immune responses and the development of rheumatoid arthritis?” she said.

Potential etiologic role of P. copri

Another paper, published in Arthritis & Rheumatology by some of the same investigators a week before the study describing the Subdoligranulum findings, tried to ascertain the point at which individuals might develop antibodies to P. copri, which for about a decade has been suspected of having a link to the development of RA.

They found that those with early RA had higher median values of IgG anti–P. copri (Pc) antibodies, compared with matched controls. People with established RA also had higher values of IgA anti-Pc antibodies. Those with ACPA, but not rheumatoid factor (RF), showed a trend toward higher IgG anti-Pc antibodies. Those who were ACPA-positive and RF-positive had significantly increased levels of IgA anti-Pc antibodies and a trend toward higher levels of IgG anti-Pc antibodies, compared with matched controls.

The findings, according to the researchers, “support a potential etiologic role for this microorganism in both RA preclinical evolution and the subsequent pathogenesis of synovitis.”

Dr. Kuhn and others in the field say it’s likely that many microbes play a role in the development of RA, and that the P. copri findings only add evidence of that relationship.

“Maybe the bacteria are involved at different parts of the pathway, and maybe they’re involved in triggering different parts of the immune responses,” she said. “Those are all to be determined.”

Dan Littman, MD, PhD, professor of rheumatology at New York University, who wrote a commentary reflecting on the findings of the Subdoligranulum study, said the results are “another piece of data” adding to the evidence base for the mucosal origins hypothesis.

“It’s by no means proven that this is the way pathogenesis in RA can occur, but it’s certainly a very solid study,” said Dr. Littman, who with colleagues published findings in 2013 linking P. copri to RA. “What makes it most compelling is that they seem to be able to show some evidence of causality in the mouse model.”

Before the findings could lead to therapy, he said, more evidence is needed to show that there is a causal link and to clarify the mechanism at work, such as whether this is something that occurs at the outset of disease or is something that “fuels the disease” by continually activating immune cells contributing to RA.

“If it’s only something that’s involved in the initiation of the disease, you need to catch it very early,” he said. “But if it’s something that continues to provide fuel for the disease, you may be able to catch it later and still be effective. Those are really critical items.”

Eventually, if these questions are answered, bacteriophages could be developed to snuff out problematic strains, or the regulatory response could be targeted to prevent the activation of the B cells that give rise to autoimmunity, he suggested.

“There are multiple steps to get to a therapeutic here, and I think we’re still a long ways from that,” he said. Still, he said, “I think it’s an important paper because it will encourage more people to look at this mechanism more closely and determine whether it really is representative of what happens in a lot of RA patients.”

The study in Science Translational Medicine was supported by grants from the National Institutes of Health, a Pfizer ASPIRE grant, and a grant from the Rheumatology Research Foundation. The Arthritis & Rheumatology study was supported in part by grants from the National Institutes of Health; the American College of Rheumatology Innovative Grant Program; the Ounsworth-Fitzgerald Foundation; Mathers Foundation; English, Bonter, Mitchell Foundation; Littauer Foundation; Lillian B. Davey Foundation; and the Eshe Fund. None of the researchers in either study had relevant financial disclosures. Dr. Littman is scientific cofounder and member of the scientific advisory board of Vedanta Biosciences, which studies microbiota therapeutics.

FROM SCIENCE TRANSLATIONAL MEDICINE AND ARTHRITIS & RHEUMATOLOGY

Locked-in syndrome malpractice case ends with $75 million verdict against docs

The patient, Jonathan Buckelew, was taken to North Fulton Regional Hospital in Roswell, Ga., where imaging revealed that he had suffered a brain stem stroke.

The patient’s attorney, Laura Shamp, alleged in the legal complaint that a series of miscommunications and negligence by multiple providers delayed the diagnosis and treatment of the stroke until the next day, which led to catastrophic brain damage for the patient, who developed locked-in syndrome. The rare neurologic syndrome causes complete paralysis except for the muscles that control eye movements.

“Mr. Buckelew has expended millions of dollars for medical expenses for his care and he will need 24 hour a day care for the rest of his life,” his lawyer said in court documents.

Both physicians’ attorneys denied the claims and said their clients met the standard of care.

The jury attributed 60% fault to ED physician Matthew Womack, MD, and 40% to radiologist James Waldschmidt, MD.

Ms. Shamp alleged that Dr. Womack failed to inform the consulting neurologist of the chiropractic neck adjustment – a known stroke risk factor – and did not adequately communicate the results from CT angiography and lumbar puncture.

In addition, she said Dr. Womack failed to rule out a vertebral artery dissection and that Dr. Waldschmidt did not “[appreciate] an indisputable acute or subacute vertebral-basilar artery occlusion.”

Further allegations were levied against several other members of the patient’s care team, including the neurologist, a critical care physician, a physician assistant, and intensive care unit nurses, but they were not found liable by the jury.

The chiropractor, Michael Axt, DC, was named in the original complaint, but court documents filed earlier in 2022 requested that he be dismissed from the lawsuit, stating that he and the patient had reached an amicable resolution.

“This is a very large verdict,” James B. Edwards, JD, a medical malpractice attorney based in Texas who was not involved in the case, said in an interview. The diagnosis of locked-in syndrome likely contributed to the substantial monetary award.

“The more sympathetic the plaintiff and the situation, the greater risk of a verdict [against] the defendants,” Mr. Edwards said. “Cases that elicit significant and sometimes decisive sympathy include locked-in syndrome, permanent vegetative state, injury to the sexual or reproductive organs, burns, and blindness.”

The effectiveness and safety of chiropractic adjustments often come under fire. Although postmanipulation injuries are not common, they can have near-fatal consequences when they do occur.

In August, a healthy 28-year-old college student experienced four artery dissections after a chiropractic visit for low-back pain. She subsequently had a stroke and went into cardiac arrest. The patient survived but remains paralyzed.

Mr. Buckelew’s legal team said in a statement that his injuries would have been completely avoided had “the slew of health care providers ... acted according to the standard of care, caught and treated his stroke earlier, and communicated more effectively.”

On the day of the chiropractic adjustment, Ms. Shamp said Mr. Axt had documented that Mr. Buckelew’s primary complaints – neck pain, a headache, and bouts of blurred vision and ringing in the ears – began after exercise and had continued for several days.

In his closing statement, Dr. Womack’s attorney said the “chiropractor is solely responsible” for the patient’s injuries because he performed a manipulation despite the patient having a 2-week history of headaches.

Very few medical malpractice verdicts are appealed, though the sizable award in this suit may increase the likelihood that the defense will do so, Mr. Edwards said.

A version of this article first appeared on Medscape.com.
