Risk of recurrence outweighs risk of contralateral breast cancer for DCIS patients

LAS VEGAS – The risk of ipsilateral breast tumor recurrence (IBTR) was greater than the risk of contralateral breast cancer (CBC) at both 5 and 10 years following diagnosis of ductal carcinoma in situ (DCIS), investigators reported at a press conference in advance of the annual meeting of the American Society of Breast Surgeons.

“A rapidly growing number of women are choosing double mastectomies for DCIS, perhaps because they misperceive their risk of future cancer. Our research provides important data for treatment decision-making,” said Megan Miller, MD, of Memorial Sloan Kettering Cancer Center. “It suggests patients and their doctors should focus on risk factors and appropriate therapy for the diseased breast, not the opposite breast, and that ipsilateral DCIS should not prompt a bilateral mastectomy.”

The investigators also found that CBC did not correlate with age, family history, or initial DCIS characteristics, although these factors did correlate with the risk of IBTR.

Dr. Miller and her colleagues found that radiation had no significant impact on the risk of CBC (4.9% vs. 6.3%; P = .1), though it significantly reduced the risk of IBTR (10.3% vs. 19.3%; P less than .0001).

More research is needed on risk factors for patients with a preinvasive condition, Dr. Miller said.

“Most studies examining the benefits of bilateral mastectomy focus on invasive cancer,” she said. “This study is unique in providing hard data for women with preinvasive disease. For these patients, examining risk factors for recurrence and the benefits of radiation and endocrine therapy to treat the existing cancer are important.”

On Twitter @eaztweets

FROM ASBS 2017

Key clinical point:

Major finding: Among 2,759 patients, the incidence of contralateral breast cancer was 2.8% at 5 years and 5.6% at 10 years, while the risk of ipsilateral breast tumor recurrence was 7.8% and 14.3% at 5 and 10 years, respectively.

Data source: Study of 2,759 DCIS patients who underwent breast-conserving surgery between 1978 and 2011 and were followed for a median of 6.8 years.

Disclosures: The investigators reported no relevant disclosures.

The EHR Report: Communication, social media, and legal vulnerability

Social media is now a part of everyday life. From Twitter, with its 140-character limit, to Facebook to LinkedIn, there is a world of possibilities for communicating with friends, family, colleagues, and others online. Communication is good, but electronic media is a minefield for medical professionals who do not think carefully before they post.

The stories in the news about health care professionals who have posted obviously inflammatory material online, perhaps in a fit of rage, and have had their careers damaged or ended are just the tip of the iceberg. HIPAA violations have received a good deal of attention, with a well-known example being the doctor who was accused of posting a selfie with Joan Rivers while Rivers was unconscious on the operating table. These examples, however, are obvious HIPAA violations that most physicians would readily identify. Other examples may not be as obvious.

We know of one case where a nurse on the staff of a physicians’ office posted on Facebook that work was grueling that day because he felt under the weather with suspected flu. At first, this may seem to be an innocuous communication. And that’s all it was, until the son of an immunosuppressed man who had an appointment at that doctor’s office was flabbergasted to hear from a mutual friend that one of the nurses in the office was at work despite having the flu. He demanded to speak with the office manager and made sure that his father was not seen by that nurse. It may seem like an unlikely coincidence, but, in medical-legal circles, unlikely events occur all the time.

Many people who use social media will check in or post when they are out with friends or colleagues blowing off steam. For example, you might post something about a holiday party you are attending. But consider what happens if, at work the next day, something goes wrong, your care is called into question, and a lawsuit ensues. Your post may be innocent, but it now falls into the hands of the patient’s attorney. During your deposition, the lawyer pulls your social media post out of a stack of papers and grills you about where you were, what you were doing, how late you stayed out, whether you were drinking, how much, and so on. Maybe you explain that you were at the holiday party for only an hour and did not have a single drink. The attorney, however, is not required to take your word for it and can ask you who you were with. All of a sudden, your friends and colleagues are being served with subpoenas for depositions and examined about what you did that night. The lawyer may even subpoena your credit card receipt and the restaurant’s billing records to determine what you ordered that night.

You should never rely on the false assumption that even “private” messages sent directly to other people will truly remain private. One of us was recently involved in a case where this worked to our advantage. A 30-year-old woman claimed that her family doctor never recommended that she see a gastroenterologist. A friend of the patient testified in a deposition that the two of them had discussed her medical care in private messages on Facebook. After the court ordered that the patient turn over her private Facebook messages, we learned that she told her friend that the doctor had indeed made the recommendation for her to see that specialist.

This cautionary tale doesn’t apply just to social media. Keep in mind that, if you are involved in litigation, attorneys can subpoena the records from your cellular phone provider. All cell phone text messages are archived by the cellular provider and can be retrieved under subpoena. You may innocently be blowing off steam to a spouse or friend about a difficult patient or bad outcome but later have those text messages used against you in litigation.

The various social media platforms can be great tools for professionals of all kinds to share interesting information and further their professional development. However, everybody, especially the medical professional, needs to think before posting or sending a message. We must also remember that, once information is out in cyberspace, it remains there and can never be truly erased. In other words, you can never unring the proverbial bell. Before posting or sending any electronic communication, consider its potential impact. Only communicate something that you would be comfortable defending in court.

Dr. Skolnik is professor of family and community medicine at Jefferson Medical College, Philadelphia, and associate director of the family medicine residency program at Abington (Pa.) Jefferson Health. Mr. Shear is an associate attorney in the health care department at Marshall Dennehey Warner Coleman & Goggin in Pittsburgh. He represents physicians, medical professionals, and hospitals in medical malpractice actions.

Small study: Vitamin D repletion may decrease insulin resistance

WASHINGTON – Normalizing vitamin D levels correlated with lower insulin resistance and decreased adipose fibrosis in obese patients, according to a study presented at the Eastern regional meeting of the American Federation for Medical Research.

Approximately 86 million U.S. adults have prediabetes, according to the Diabetes Report Card 2014, the most recent estimates from the Centers for Disease Control and Prevention. Vitamin D therapy may be able to help lower that number and prevent diabetes in some patients, Jee Young You, MD, a research fellow at Albert Einstein College of Medicine, New York, said at the meeting.

“When there’s increased adiposity, there is reduction of the blood flow which will further lead to inflammation, macrophage infiltration, and fibrosis, which all together leads to insulin resistance,” Dr. You said. “It is shown that there are vitamin D receptors present on adipocytes, so we hypothesize repleting vitamin D will help in reducing this inflammation.”

In a double-blind study, Dr. You and her colleagues randomized 11 obese patients with insulin resistance and vitamin D deficiency (average body mass index, 34 kg/m²) to vitamin D repletion therapy. Eight similar patients served as controls. The average age was 43 years.

Patients in the treatment group followed a stepped schedule of vitamin D supplementation. For 3 months, they received 40,000 IU of vitamin D3 weekly, with a target 25-hydroxyvitamin D level of greater than 30 ng/mL. They then received another 3 months of the same supplementation, aiming for a target level of greater than 50 ng/mL.

“We wanted to see if there was a dose-dependent effect for vitamin D in patients,” Dr. You said.

Endogenous glucose production decreased by 24% (P = .04) after normalization of vitamin D levels. In patients who received placebo, endogenous glucose production increased, pointing to lower hepatic insulin sensitivity, Dr. You said.

Activated vitamin D receptors inhibit profibrotic pathways such as TGF-β1, thereby decreasing fibrosis, Dr. You explained.

The researchers also found decreased expression of the profibrotic genes TGF-β1, HIF-1, MMP7, and collagen I, V, and VI.

However, when the investigators measured profibrotic gene expression in whole fat, they found no additional improvement after the first round of vitamin D therapy, leading them to conclude that raising vitamin D levels above the normal range offers no additional benefit.

On Twitter @eaztweets

AT THE AFMR EASTERN REGIONAL MEETING

Key clinical point:

Major finding: Of the 19 patients studied, expression of the profibrotic genes TGF-β1, HIF-1, MMP7, and collagen I, V, and VI in those given vitamin D therapy decreased 0.81, 0.72, 0.62, 0.56, 0.56, and 0.43 times, respectively (P less than .05).

Data source: Randomized, double-blind, placebo-controlled study of 19 obese, insulin-resistant, vitamin D-deficient patients.

Disclosures: The investigators reported no relevant conflicts of interest.

Modifying CAR-T with IL-15 improved activity in glioma models

MONTREAL – Adding an immunostimulatory cytokine to chimeric antigen receptor–T cells (CAR-T) improved the adoptive immunotherapy’s activity against aggressive pediatric brain malignancies both in vitro and in animal models, an investigator reported.

CAR-T cells engineered to express interleukin-15 (IL-15), an inducer of T-cell proliferation and survival, were associated with significantly longer progression-free survival (PFS) and overall survival in mouse models of glioma, compared with regular CAR-T cells, said Giedre Krenciute, PhD, of Baylor College of Medicine in Houston.

Despite the improved T-cell persistence, however, tumors eventually lost both targeted and nontargeted tumor-associated antigens and recurred, Dr. Krenciute noted.

The results suggest that T-cell persistence is critical for antitumor activity, and that it will be necessary to perform antigen profiling of recurrent tumors in order to develop follow-on therapies targeting multiple tumor-associated antigens, she said.

Her team had previously reported on the development of a CAR-T cell directed specifically against the IL-13 receptor alpha-2, which is expressed at high frequency in diffuse intrinsic pontine glioma and glioblastoma tumors but not in normal brain tissues.

In preclinical models, the construct had potent antiglioblastoma activity. However, the T cells had only limited persistence, and tumors positive for IL-13 receptor alpha-2 recurred.

To see whether they could improve on T-cell persistence, they took their CARs back to the shop and modified them with a retroviral vector to express IL-15 transgenes. They then tested the altered cells in vitro using standard assays and found that the addition of IL-15 did not change the T-cell phenotype or affect the cells’ cytotoxicity.

The addition of IL-15 significantly improved the persistence of the T cells when they were injected into the tumors of mice with human glioblastoma xenografts (P less than .05), and this persistence translated into a near-doubling of PFS, compared with regular CAR-T cells (median, 84 days vs. 49 days; P = .008), as well as improved overall survival (P = .02).

Of 10 mice that received the IL-15–expressing CAR-Ts, 4 were free of glioma through at least 80 days of follow-up.

Of five mice with recurring gliomas, three had down-regulated expression of the IL-13 receptor alpha-2 target, indicating immune escape, and all recurring tumors had reduced expression of the human epidermal growth factor receptor-2 antigen, which is associated with gliomas.

The investigators are currently test-driving a new CD20-targeted CAR, transduced to express IL-15, and have seen good expansion and persistence of the T cells for up to 15 days in culture, with in vivo tests in the planning stage, Dr. Krenciute said.

The work is supported by the National Institutes of Health, Alex’s Lemonade Stand Foundation, the James S. McDonnell Foundation, and the American Brain Tumor Association. The laboratory, the Center for Cell and Gene Therapy at Baylor, has or had research collaborations with Celgene, Bluebird Bio, and Tessa Therapeutics, and investigators at the center hold or have applied for patents in T-cell and gene-modified T-cell therapies for cancer.

MONTREAL – Adding an immunostimulatory cytokine to chimeric antigen receptor–T cells (CAR-T) improved the adoptive immunotherapy’s activity against aggressive pediatric brain malignancies both in vitro and in animal models, an investigator reported.

CAR-T cells engineered to express interleukin-15 (IL-15), an inducer of T-cell proliferation and survival, were associated with significantly longer progression-free survival (PFS) and overall survival in mouse models of glioma, compared with regular CAR-T cells, said Giedre Krenciute, PhD, of Baylor College of Medicine in Houston.

Despite the improved T-cell persistence, however, tumors eventually lost both targeted and nontargeted tumor-associated antigens and recurred, Dr. Krenciute noted.

The results suggest that T-cell persistence is critical for antitumor activity, and that it will be necessary to perform antigen profiling of recurrent tumors in order to develop follow-on therapies targeting multiple tumor-associated antigens, she said.

FROM ASPHO

Key clinical point: CAR-T cells modified to express IL-15 had improved activity against aggressive pediatric gliomas in preclinical models.

Major finding: IL-15–expressing CAR-T cells were associated with improved progression-free and overall survival in animal models of glioblastoma.

Data source: In vitro and in vivo experiments with CAR-T cells modified to improve T-cell persistence and clinical efficacy.

Disclosures: The work is supported by the National Institutes of Health, Alex’s Lemonade Stand Foundation, the James S. McDonnell Foundation, and the American Brain Tumor Association. The laboratory, the Center for Cell and Gene Therapy at Baylor, has or had research collaborations with Celgene, Bluebird Bio, and Tessa Therapeutics, and investigators at the center hold or have applied for patents in T-cell and gene-modified T-cell therapies for cancer.

Medicaid acceptance at 33% for dermatologists

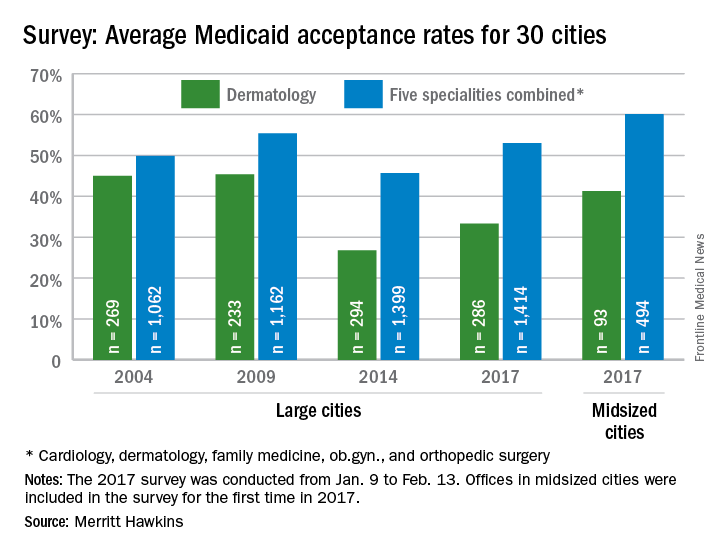

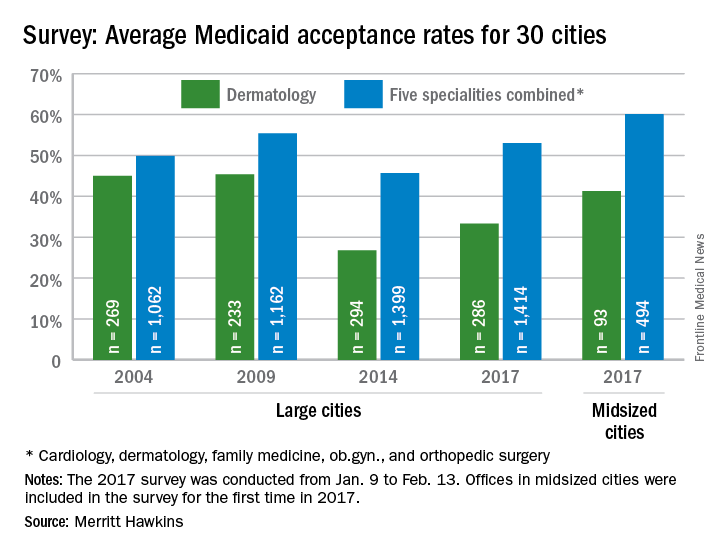

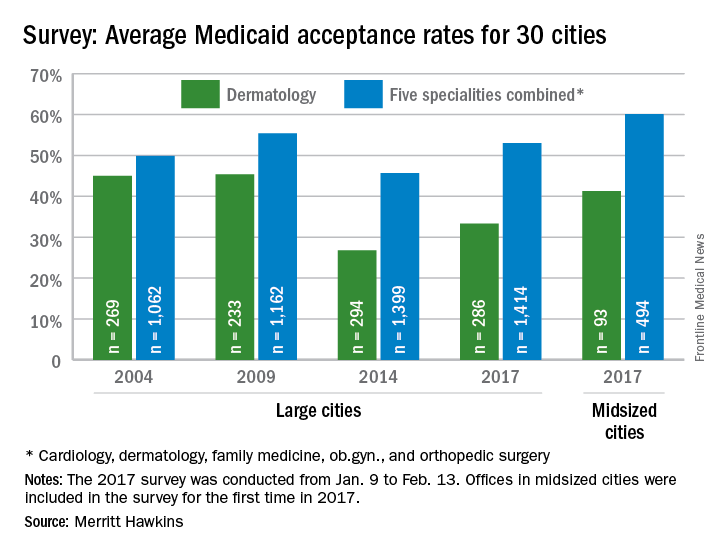

Medicaid acceptance was just over 33% among dermatologists in the 2017 edition of an ongoing survey conducted in 15 large cities by physician recruitment firm Merritt Hawkins.

That was up from 27% in the previous survey, conducted in 2014, but lower than the average of 41% for dermatologists in 15 midsized cities that were included for the first time in 2017, the company reported.

Investigators called 286 randomly selected dermatologists in the large cities and 93 dermatologists in the midsized cities in January and February. It was the fourth such survey the company has conducted since 2004.

The survey also included four other specialties – cardiology, family medicine, ob.gyn., and orthopedic surgery. The Medicaid acceptance rate for all 1,414 physicians in all five specialties in the 15 large cities was 53%, and the average rate for all specialties in the midsized cities was 60% for the 494 offices surveyed. Cardiology had the highest rates by specialty and dermatology the lowest in both the large and midsized cities, the company said.

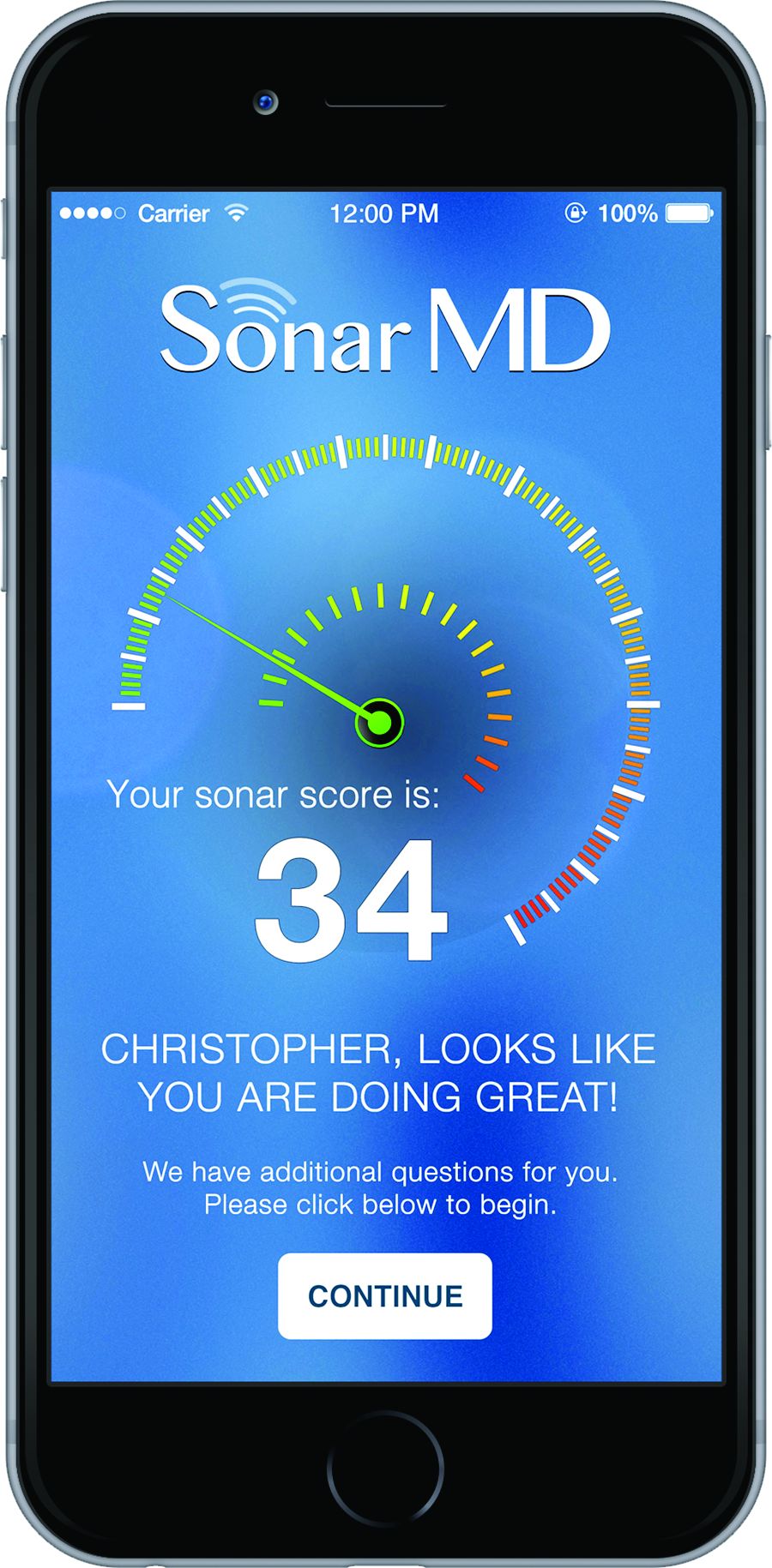

Project Sonar brings gastroenterologists one step closer to a specialty-specific APM

Gastroenterologists wanting to participate in the advanced alternative payment model track of the new Quality Payment Program developed under the Medicare Access and CHIP Reauthorization Act (MACRA) may have an option as early as 2018.

Project Sonar, a program that helps gastroenterologists and their patients manage inflammatory bowel diseases such as Crohn’s disease and ulcerative colitis, was the first physician-focused gastroenterology-specific payment model to receive a recommendation from the Physician-Focused Payment Model Technical Advisory Committee (PTAC). The proposal was supported by public comments from AGA and the American Medical Association. It now heads to the U.S. Department of Health & Human Services for final approval.

“The intensive medical home Blue Cross initiated with us because of the success of the pilot pays us a supplemental management fee for every patient that is enrolled,” Lawrence Kosinski, MD, MBA, managing partner at Illinois Gastroenterology Group (IGG) and a Practice Councillor for the AGA Institute Governing Board, said in an interview. “That management fee is adjusted based on how much savings we produce for the payer. It ultimately winds up being a shared savings program based on our ability to control the cost of care, but we get a management fee upfront in order to make it happen.”

Getting those savings was simply a matter of engaging patients in their care. Under Project Sonar, patients are “pinged” once a month to report on their symptoms. They generally receive a text alert with a link to a secure website where they answer five questions derived from the Crohn’s Disease Activity Index. The first asks how many bowel movements they have had per day over the last 7 days. The second asks for a rating of abdominal pain, and the third for a rating of the patient’s general well-being. The fourth asks whether they are taking antidiarrheal drugs. Finally, check boxes ask whether they have any eye symptoms, skin rashes, or joint pain.

The answers are then weighted, and a score is given to both the patient and the doctor. Doctors are alerted to patients who might require more focused attention, as opposed to those whose symptoms are well managed.
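The article does not say how the answers are weighted. As a rough illustration only, the Python sketch below borrows the weights that the Crohn’s Disease Activity Index (CDAI) gives its matching patient-reported items (2 per stool, 5 per pain point, 7 per well-being point, 20 per extraintestinal complication, 30 for antidiarrheal use) and flags patients at or above 150, the conventional CDAI boundary between remission and active disease. This is an assumption, not Project Sonar’s actual algorithm: the class and function names are hypothetical, and where the CDAI counts only liquid or very soft stools and sums daily ratings over 7 days, this sketch simply multiplies one reported daily value by 7.

```python
from dataclasses import dataclass

# Hypothetical weights borrowed from the matching CDAI items.
# NOT Project Sonar's actual weighting, which the article does not describe.
STOOL_WEIGHT = 2
PAIN_WEIGHT = 5
WELL_BEING_WEIGHT = 7
ANTIDIARRHEAL_WEIGHT = 30
EXTRAINTESTINAL_WEIGHT = 20

ALERT_THRESHOLD = 150  # illustrative cut-off; CDAI >= 150 is conventionally "active"
DAYS = 7               # the questions cover the last 7 days


@dataclass
class PingResponse:
    """One patient's answers to a monthly ping (hypothetical structure)."""
    stools_per_day: float      # average bowel movements per day
    abdominal_pain: int        # 0 = none ... 3 = severe
    well_being: int            # 0 = generally well ... 4 = terrible
    takes_antidiarrheal: bool
    eye_symptoms: bool = False
    skin_rash: bool = False
    joint_pain: bool = False


def activity_score(r: PingResponse) -> float:
    """Weighted sum of the answers; daily items are scaled to a 7-day total (assumption)."""
    extraintestinal = sum([r.eye_symptoms, r.skin_rash, r.joint_pain])  # count of checked boxes
    return (
        STOOL_WEIGHT * r.stools_per_day * DAYS
        + PAIN_WEIGHT * r.abdominal_pain * DAYS
        + WELL_BEING_WEIGHT * r.well_being * DAYS
        + ANTIDIARRHEAL_WEIGHT * int(r.takes_antidiarrheal)
        + EXTRAINTESTINAL_WEIGHT * extraintestinal
    )


def triage(responses: dict[str, PingResponse]) -> list[tuple[str, float]]:
    """Return (patient_id, score) for patients at or above the threshold, highest score first."""
    scored = [(pid, activity_score(r)) for pid, r in responses.items()]
    flagged = [(pid, s) for pid, s in scored if s >= ALERT_THRESHOLD]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    patients = {
        "A": PingResponse(3, 1, 1, False, joint_pain=True),   # 42 + 35 + 49 + 0 + 20 = 146
        "B": PingResponse(6, 2, 2, True, eye_symptoms=True),  # 84 + 70 + 98 + 30 + 20 = 302
    }
    print(triage(patients))  # [('B', 302)]
```

Sorting flagged patients by score reflects the idea of steering a doctor’s attention toward those most likely to need it; a real system would also need validated cut-offs and a way to handle missed pings.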

What makes the program work, however, is that IGG has been able to achieve a sustained 80% response rate from patients using it.

“The entire savings that we are generating is coming solely from the patients who participate in the platform,” Dr. Kosinski said.

From an analysis of Blue Cross Blue Shield (BCBS) of Illinois claims data, Dr. Kosinski and his colleagues determined that “it costs about $24,000 a year to take care of a Crohn’s patient, and over half the money that is spent is spent for inpatient care, complications, bad things happening to these patients.”

He continued: “The difference in annual costs between a pinger and a nonpinger is $6,300. That is a 25% drop in the cost of care.” He added that Project Sonar is leading to a 10% savings in the overall cost of care for these patients when total costs are factored in.

One surprise for Dr. Kosinski was that patients needed no additional incentive to respond to their pings.

“We had thought we were going to have to get into some gamification and we actually ran a few lotteries to reward people,” he said. “We haven’t had to do it. The patients have participated because I think they feel like they are getting excellent care this way, and I’m very impressed by the fact that the patients have not had to be stimulated to do it.”

At the end of the day, it’s all about improving patients’ lives.

“Patients are doing better,” he noted. “They are staying out of the hospital. They are staying out of the operating room. They are staying at work. They are staying with their families. We have to realize, we look at these as patients with diseases. These are human beings that just happen to have a disease, and they have a life, they have jobs, family, responsibility, and if our Sonar system is allowing them to have a more normal life, there is a lot of traction there.”

Dr. Kosinski is also an Associate Editor for GI & Hepatology News.

Shark Tank returns: Which GI innovations will make it to market?

BOSTON – A group of aspiring innovators appeared before a panel of experts at the 2017 AGA Tech Summit Shark Tank event to make the case for how their novel device or technology could help advance the field of gastroenterology.

“The conversation that occurs here is really the start of other conversations we hope will continue out of this room and into the future to help everyone move their device or concept forward,” Michael L. Kochman, MD, AGAF, chair of the AGA Center for GI Innovation and Technology (CGIT), which sponsors the summit, told the assembled audience of potential investors, regulatory officials, and other innovators. Dr. Kochman also served as one of the “sharks,” who asked fundamental questions and sought to identify gaps in each business plan, with the ultimate aim of making each product better and market ready. He was joined by V. Raman Muthusamy, MD, CGIT’s incoming chair and director of interventional endoscopy and general GI endoscopy at the University of California, Los Angeles, where he is also clinical professor of medicine in the division of digestive diseases. The panel also included Jeffrey M. Marks, MD, director of surgical endoscopy at University Hospitals Cleveland Medical Center, and Dennis McWilliams, president and chief commercial officer of Apollo Endosurgery, Inc.

Analyzing RNA biomarkers in stool samples

First to face the sharks was Erica Barnell, an MD/PhD candidate at the Washington University School of Medicine in St. Louis and the chief science officer of Geneoscopy, LLC. She and her collaborators have created a series of patent-pending methods to isolate and preserve neoplasia-associated RNA biomarkers in stool samples. The technology is expected to improve screening for colorectal cancer, the second leading cause of cancer-related deaths in the United States, with more than 50,000 deaths annually. According to Ms. Barnell, the high mortality rate of colorectal cancer is due in part to flaws in existing screening methods and in part to high rates of patient noncompliance with screening guidelines.

Clinical data presented by Ms. Barnell showed that, in a test population of 65 people, the company’s predictive algorithms had 81% sensitivity and 59% specificity for detecting adenomas of all sizes. Ms. Barnell acknowledged that the specificity was not impressive, so she and her colleagues plan to add a fecal immunochemical test in their next round of clinical trial testing.
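For context, sensitivity and specificity are standard diagnostic-accuracy measures, defined from a test’s true-positive (TP), false-negative (FN), true-negative (TN), and false-positive (FP) counts:

$$
\text{sensitivity} = \frac{TP}{TP + FN}, \qquad \text{specificity} = \frac{TN}{TN + FP}
$$

Read this way, 81% sensitivity means roughly 19 of every 100 people with adenomas would be missed, while 59% specificity means roughly 41 of every 100 people without adenomas would get a false-positive result (1 − 0.59 = 0.41), which is the figure Ms. Barnell acknowledged was weak.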

Among the feedback Ms. Barnell received was Dr. Kochman’s suggestion that Geneoscopy would benefit from strong head-to-head data comparing its novel screening test with current procedures to present to the U.S. Food and Drug Administration, something Ms. Barnell said she and her colleagues were already taking into account.

Reducing risk of repetitive motion injuries

Prosper Abitbol, MD, a gastroenterologist in private practice in Boca Raton, Fla., seeks to limit the potential for harm to clinicians who perform hundreds – or more – of colorectal screening procedures annually. According to Dr. Abitbol, the EndoFeed™ device he and his team brought to the Shark Tank panel practically eliminates the need for repetitive, coordinated wrist and thumb movements when performing a colonoscopy.

The EndoFeed™ drive mechanism moves instruments through the channel of the endoscope’s insertion tube, making it easy to complete the insertion and extraction motions needed for biopsies without risking repetitive motion injury.

Dr. Abitbol and his team also presented the EndoReVu™, which he said increases polyp detection during screening by means of a mirror that is inserted through the instrument channel of the scope and provides reflected images using existing endoscope optics. The mirror is flexible to avoid damaging surrounding tissue. Both devices presented by Dr. Abitbol are single use and disposable.

He concluded his presentation to the panel by saying, “I spend most of my day in the endoscopy suite every day, and I know what I need to do a better job ... I see [what] the reactions of other doctors and nurses are to this product, and I have no doubt these two products will play a major role in the endoscopy suite.”

However, while Dr. Abitbol was commended for addressing operator fatigue in colonoscopy – something at least one audience member said is too often overlooked – the panel unanimously agreed that to move his products forward, he would need to conduct rigorous studies showing conclusively that they reduce fatigue.

Identifying, classifying small polyps during a procedure

A vexing problem for clinicians is what to do after detecting a colonic polyp, since the majority of those found are not precancerous. Now, Rizkullah “Ray” Dogum, an MS/MBA candidate, and his colleagues are seeking to market a technology that reads the information gathered by elastic scattering spectroscopy to determine whether a sample is dysplastic, essentially creating the first definitive in vivo polyp classification method.

“Using elastic scattering spectroscopy technology, polyps with negligible malignant potential are detected and classified during the procedure, circumventing the routine track to pathology labs,” Mr. Dogum explained in an interview, noting that this adds up to hundreds of millions of dollars in savings per year, an important point, he said, because an aging population requires more screening. “As much as $1 billion could be saved annually if diminutive polyps could simply be diagnosed, resected, and discarded in lieu of histopathological processing,” Mr. Dogum said.

The patented technology, currently being tested in several clinical trials that Mr. Dogum says are so far yielding “promising” results, especially when compared with chromoendoscopy and narrow-band imaging, transmits white light through fiber optic cables integrated into both the snare and forceps tools; a computer detects the back-reflection, making polyp classification possible.

A practical advantage of the technology is that it does not require any significant change to standard colonoscopy practice or disrupt the usual workflow, so less training is required, according to Mr. Dogum.

An audience member who identified himself as a regulator with the FDA told Mr. Dogum that over the years the agency has “struggled” with how to classify imaging technologies, because they are tools that do not actually change practice, noting that whether to resect and discard is a clinical decision made at the physician’s discretion, not one determined by a device. He expressed skepticism that the product would qualify as a Class II device but suggested that it might qualify as a low-risk, de novo product, an option Mr. Dogum said in an interview following the presentation that he and his collaborators would be interested in pursuing.

Preventing endoscopic retrograde infections

Susan Hutfless, PhD, an epidemiologist and assistant professor in the division of gastroenterology and hepatology at Johns Hopkins University in Baltimore, told the shark panel that she and her collaborators want to mine Big Data, in the form of Medicare and Medicaid claims, for algorithms that can detect clusters of endoscopic infections.

“Using Medicare and Medicaid billing data, our technology identifies endoscopic errors in a succinct and quantitative way,” Dr. Hutfless said in an interview. She explained that this benefits device manufacturers, health care facilities, and insurers. The as-yet unnamed software program’s dashboard includes a range of metrics that allow continuous monitoring of care, risk factors, and sources of infection, all to prevent serious outbreaks and the spread of illness.

Dr. Hutfless and her team came upon the idea after a string of deadly endoscope-related infection outbreaks was linked to closed-channel duodenoscopes.

“We think that our technology helps move the field forward, because it provides a solution to contain endoscopically transmitted infections without re-engineering the endoscope,” Dr. Hutfless said. “For instance, there was an outbreak despite the use of a redesigned scope. Our technology is able to identify gaps in the care pathway that include the endoscope itself as well as the possible human factors that may be playing a role.”

Another plus, she told the panel, is that the product would not require FDA approval.

Dr. Hutfless said she and her collaborators will present data later this year at the annual Digestive Disease Week® showing a tight correlation between relevant claims data and data collected by the FDA during the infection outbreaks. Even so, the panel concluded that the product’s stronger marketing potential would be as a monitoring system, not a predictive one, used in combination with an engineering solution.

Simplifying LED incorporation in scopes

LED is only the fourth lighting technology ever invented, according to Thomas V. Root, president and CEO of Acera Inc. In an interview, Mr. Root said that while LEDs are “overtaking all major lighting markets,” medical device manufacturers have been slow to catch on.

“Acera moves the industry ahead with its elliptical optic that significantly improves the collection efficiency and distribution of the LED light. This optic, provided in a modular assembly, simplifies incorporation of LEDs in the practice of scope design and manufacture,” he said.

By eliminating the light-guide tether, LEDs increase mobility and open the door to low-cost or even single-use devices, Mr. Root said. “The result is that engineers are liberated to reimagine how endoscopes are designed.”

Noting that half of the fires in the endoscopy setting are caused by malfunctioning light sources, one of the sharks suggested that Mr. Root and his colleagues highlight two unique safety features in their marketing materials: the Acera product does not use infrared wavelengths in its light, and its electrical cord remains cool to the touch during use.

On Twitter @whitneymcknight

BOSTON – A group of aspiring innovators appeared before a panel of experts at the 2017 AGA Tech Summit Shark Tank event to make the case for how their novel device or technology could help advance the field of gastroenterology.

“The conversation that occurs here is really the start of other conversations we hope will continue out of this room and into the future to help everyone move their device or concept forward,” Michael L. Kochman, MD, AGAF, chair of the AGA Center for GI Innovation and Technology (CGIT), which sponsors the summit, told the assembled audience of potential investors, regulatory officials, and other innovators. Dr. Kochman also served as one of the “sharks,” who asked fundamental questions and probed each business plan for gaps, with the ultimate aim of making each product better and market ready. He was joined by V. Raman Muthusamy, MD, CGIT’s incoming chair and director of interventional endoscopy and general GI endoscopy at the University of California, Los Angeles, where he is also clinical professor of medicine in the division of digestive diseases. The panel also included Jeffrey M. Marks, MD, director of surgical endoscopy at University Hospitals Cleveland Medical Center, and Dennis McWilliams, president and chief commercial officer of Apollo Endosurgery, Inc.

Analyzing RNA biomarkers in stool samples

First to face the sharks was Erica Barnell, an MD/PhD candidate at the Washington University School of Medicine in St. Louis and the chief science officer of Geneoscopy, LLC. She and her collaborators have created a series of patent-pending methods to isolate and preserve neoplasia-associated RNA biomarkers in stool samples. The technology is expected to improve screening for colorectal cancer, which is the second leading cause of cancer-related deaths in the United States, with more than 50,000 deaths annually. The high mortality rate of colorectal cancer is due in part to flaws in existing screening methods and to a high rate of patient noncompliance with screening guidelines, according to Ms. Barnell.

Clinical data presented by Ms. Barnell showed that their predictive algorithms in a test population of 65 persons had 81% sensitivity and 59% specificity for detecting adenomas of all sizes. Ms. Barnell acknowledged that the specificity rate was not impressive, so she and her colleagues plan to add a fecal immunochemical test in their next round of clinical trial testing.
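As a reminder of what these figures measure: sensitivity is the share of people with adenomas whom a test flags, and specificity is the share of people without adenomas whom it correctly clears. The sketch below computes both from confusion-matrix counts. It then shows, as an illustration only, how pairing two tests changes the trade-off, under the simplifying assumption that the tests err independently given true disease status. Only the 81%/59% figures come from the presentation; the second test's values in the usage note are placeholders, not reported FIT performance, and the source does not say how Geneoscopy intends to combine the results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningPerformance:
    sensitivity: float  # P(test positive | adenoma present)
    specificity: float  # P(test negative | adenoma absent)


def from_counts(tp: int, fn: int, tn: int, fp: int) -> ScreeningPerformance:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    return ScreeningPerformance(tp / (tp + fn), tn / (tn + fp))


def combine_both_positive(a: ScreeningPerformance, b: ScreeningPerformance) -> ScreeningPerformance:
    """Call a patient positive only if BOTH tests are positive.
    Assumes conditionally independent errors (a simplification)."""
    sens = a.sensitivity * b.sensitivity
    # A disease-free patient is misclassified only if both tests are falsely positive.
    spec = 1 - (1 - a.specificity) * (1 - b.specificity)
    return ScreeningPerformance(sens, spec)


def combine_either_positive(a: ScreeningPerformance, b: ScreeningPerformance) -> ScreeningPerformance:
    """Call a patient positive if EITHER test is positive (same independence assumption)."""
    sens = 1 - (1 - a.sensitivity) * (1 - b.sensitivity)
    spec = a.specificity * b.specificity
    return ScreeningPerformance(sens, spec)
```

For example, with `rna = ScreeningPerformance(0.81, 0.59)` and a placeholder second test of `ScreeningPerformance(0.5, 0.9)`, the "both positive" rule gives specificity 0.959 but sensitivity 0.405, while the "either positive" rule gives sensitivity 0.905 but specificity 0.531. Which way a combined assay leans depends on how the results are combined.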

Among the feedback Ms. Barnell received was Dr. Kochman’s suggestion that Geneoscopy would benefit from strong head-to-head data comparing its novel screening procedure with current procedures to present to the U.S. Food and Drug Administration, something Ms. Barnell said she and her colleagues were already taking into account.

Reducing risk of repetitive motion injuries

Prosper Abitbol, MD, a gastroenterologist in private practice in Boca Raton, Fla., seeks to limit the potential for harm to the clinician who performs hundreds – or more – of colorectal screening procedures annually. The EndoFeed™ device Dr. Abitbol and his team brought to the Shark Tank panel practically eliminates the need for repetitive, coordinated wrist and thumb movements when performing a colonoscopy.

The EndoFeed™ drive mechanism moves instruments through the channel of the endoscope’s insertion tube, making it easy to complete the insertion and extraction motions needed for biopsies without risking repetitive-motion injury.

Dr. Abitbol and his team also presented the EndoReVu™, which he said increases polyp detection during screening through the use of a mirror that is inserted through the instrument channel of the scope, and then provides reflective images with existing endoscope optics. The mirror is flexible to ensure there is no damage to surrounding tissue. Both devices presented by Dr. Abitbol are single use and disposable.

He concluded his presentation to the panel by saying, “I spend most of my day in the endoscopy suite every day, and I know what I need to do a better job ... I see [what] the reactions of other doctors and nurses are to this product, and I have no doubt these two products will play a major role in the endoscopy suite.”

However, while Dr. Abitbol was commended for addressing operator fatigue during colonoscopy, a problem at least one audience member said is too often overlooked, the panel unanimously agreed that to move his products forward he would need to conduct rigorous studies showing conclusive evidence that they reduce fatigue.

Identifying, classifying small polyps during a procedure

A vexing problem for clinicians is what to do after detecting a colonic polyp, since the majority of those found are not precancerous. Now, Rizkullah “Ray” Dogum, an MS/MBA candidate, and his colleagues are seeking to market a technology that reads the information gathered by elastic scattering spectroscopy to determine whether a sample is dysplastic, essentially creating the first in vivo definitive polyp classification method.

“Using elastic scattering spectroscopy technology, polyps with negligible malignant potential are detected and classified during the procedure, circumventing the routine track to pathology labs,” Mr. Dogum explained in an interview, noting that this adds up to hundreds of millions of dollars in savings per year. That matters, he said, because an aging population requires more screening. “As much as $1 billion could be saved annually if diminutive polyps could simply be diagnosed, resected, and discarded in lieu of histopathological processing,” Mr. Dogum said.

The patented technology is currently being tested in several clinical trials that, according to Mr. Dogum, are so far yielding “promising” results, especially when compared with chromoendoscopy and narrow-band imaging. It transmits white light through fiber-optic cables integrated into both the snare and the forceps; a computer detects the back-reflected light, making polyp classification possible.
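The article does not say which algorithm turns the back-reflected spectrum into a classification. As a rough illustration of the general idea only, the sketch below normalizes a measured reflectance spectrum so that its shape, rather than its overall brightness, drives the decision. It then assigns the spectrum to whichever reference class it lies closest to (a nearest-centroid rule). The class labels, the wavelength sampling, and the reference spectra in the usage note are hypothetical, and this is not the patented method.

```python
import math
from typing import Dict, List, Sequence


def normalize(spectrum: Sequence[float]) -> List[float]:
    """Scale a reflectance spectrum to unit Euclidean norm so overall intensity
    (e.g. probe-to-tissue distance) matters less than spectral shape."""
    norm = math.sqrt(sum(x * x for x in spectrum))
    if norm == 0:
        raise ValueError("spectrum has no signal")
    return [x / norm for x in spectrum]


def centroid(spectra: Sequence[Sequence[float]]) -> List[float]:
    """Average of normalized reference spectra sampled at the same wavelengths."""
    normed = [normalize(s) for s in spectra]
    n = len(normed)
    return [sum(col) / n for col in zip(*normed)]


def classify(spectrum: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the label whose centroid is nearest (Euclidean) to the normalized spectrum."""
    s = normalize(spectrum)

    def dist(c: List[float]) -> float:
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(s, c)))

    return min(centroids, key=lambda label: dist(centroids[label]))
```

For instance, with reference centroids built from made-up spectra such as `centroid([[1.0, 0.8, 0.6, 0.4], [1.1, 0.9, 0.6, 0.45]])` for one class and `centroid([[0.4, 0.6, 0.8, 1.0], [0.45, 0.6, 0.9, 1.1]])` for the other, a new reading of `[2.0, 1.6, 1.2, 0.8]` is assigned to the first class because it has the same shape at twice the brightness. A real system would be trained and validated on clinical spectra.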

A practical advantage of the technology, according to Mr. Dogum, is that it requires no significant change to standard colonoscopy practice and does not disrupt the usual workflow, so less training is needed.