EVATAR model: Endocrinology on a chip

into how female reproductive organs communicate with each other and the liver via the endocrine system.

The project was first funded in 2012 by a National Institutes of Health grant that aimed to spur development of experimental hardware, and now researchers at Northwestern University, Chicago, headed by Teresa K. Woodruff, PhD, have bioengineered a 3-D model of the female reproductive tract and the liver using living cells and tissues. The resulting model is small enough to fit in the palm of your hand.

“Cells in aggregate organs are in constant communication with each other and the conversation is provided by the endocrine system. This is a completely new era in biology helping us illuminate disease systems we could not otherwise, such as polycystic ovary disease [PCOS],” said Dr. Woodruff, who is the Thomas J. Watkins Memorial professor of obstetrics and gynecology, the vice chair of research for the department of obstetrics and gynecology, and the chief of the division of reproductive science and medicine at Northwestern University.

Until the development of EVATAR, there was no good animal or other model of female reproductive diseases, such as cancer, PCOS, and infertility.

The EVATAR 3-D system is composed of plastic cubes, each representing the target organs of the female reproductive tract. The tissue lining each cube is assembled from multiple cell lineages to create individual follicles that enclose and support oocytes, oviductal/fallopian tubes, uterine myometrium and endometrium, the cervix, and the vagina. The cubes are then connected with tiny tubes, so that the fluid representing human blood and the hormones contained therein can flow between each of the cube compartments.

The EVATAR also contains cells from the liver. Using this system, researchers can study the effects of drugs on the female reproductive system and examine the drug metabolites in the liver.

Variations on EVATAR currently in development include 3-D models of the male reproductive system, dubbed “Guy in a Cube,” she quipped.

Get Ready for CHEST 2018 in San Antonio

Have you been thinking about how great of a time you had at CHEST 2017? Or, perhaps you weren’t able to make it to CHEST 2017 and are looking forward to attending CHEST 2018? Well, we’d be happy to have you attend the annual meeting in sunny San Antonio, Texas, this fall. CHEST 2018 will occur earlier this year, from October 6-10, and we’ve got a few ways you can get involved leading up to the meeting.

CHEST 2018 Moderators

If you do not have original research to share, but believe you are qualified to moderate sessions, we have an opportunity for you! Moderating will take place on-site in San Antonio, and moderators will be recognized in the CHEST 2018 program and will receive a reduced registration rate to the meeting. See chestmeeting.chestnet.org.

CHEST Challenge 2018

Are you a US-based CHEST fellow-in-training? Compete with other programs across the country in CHEST Challenge 2018 for honor and prizes! The first round of the competition this year will consist of two parts; in addition to the traditional online quiz, there will be a number of social media challenges. The aggregate score for both of these components will be used to identify the three top-scoring teams. These top three teams will then be invited to send three fellows each to the CHEST Challenge Championship, a Jeopardy-style game show that takes place live during the CHEST Annual Meeting. http://www.chestnet.org/Hidden-Pages/CHEST-Challenge-US

CHEST Foundation Grants

We have had many talented and passionate people win our CHEST Foundation grants in research and community service. Each year, the CHEST Foundation offers grants to worthy research candidates, generous community service volunteers, and distinguished scholars in a field of expertise. Nearly 800 recipients worldwide have received more than $10 million in support and recognition of outstanding contributions to chest medicine.

How are you helping to champion lung health? The CHEST Foundation is accepting grant applications February 1 through April 9, 2018, in the following areas:

• CHEST Foundation Research Grant in Lung Cancer – $50,000 - $100,000 2-year grant*

• CHEST Foundation Research Grant in Asthma – $15,000 - $30,000 1-year grant*

• CHEST Foundation Research Grant in Pulmonary Arterial Hypertension – $25,000 - $50,000 1-year grant*

• CHEST Foundation and the Alpha-1 Foundation Research Grant in Alpha-1 Antitrypsin Deficiency – $25,000 - $50,000 1-year grant*

• CHEST Foundation Research Grant in Pulmonary Fibrosis – $25,000 - $50,000 1-year grant*

• CHEST Foundation Research Grant in Chronic Obstructive Pulmonary Disease – $30,000 1-year grant (multiple recipients selected)

• CHEST Foundation Research Grant in Venous Thromboembolism – $15,000 - $30,000 1-year grant*

• CHEST Foundation Research Grant in Nontuberculous Mycobacteria Disease – $25,000 - $50,000 1-year grant*

• CHEST Foundation Research Grant in Women’s Lung Health – $10,000 1-year grant

• CHEST Foundation Research Grant in Cystic Fibrosis – $30,000 1-year grant

• The Eli Lilly and Company Distinguished Scholar in Critical Care Medicine – $150,000 over 3 years

• CHEST Foundation Community Service Grant Honoring D. Robert McCaffree, MD, Master FCCP – $2,500 - $15,000 1-year grant*

*Amount contingent on funding.

Learn more on how to apply now at chestfoundation.org/apply.

Things Happening in April

Don’t forget to look out for CHEST 2018 registration, opening April 5. And, if you missed the first round of abstract submissions, submissions for late-breaking abstracts will open April 30. Stay updated on all things CHEST 2018 at chestmeeting.chestnet.org.

NAMDRC Update Collaboration: Now More Than Ever

Ongoing hospital mergers, acquisitions, and closings demonstrate that reimbursement maximization and cost reduction are the twin sisters of health-care system reform. This focus is not going to change in the foreseeable future, as most experts now view “health system reform” as “health financing reform.”

Reports document that more than 50% of acute care hospitals in the United States experienced negative operating margins for the federal fiscal year ending September 30, 2017. Equally alarming is the increasing number of organizations either reducing or eliminating the roles of medical directors for clinical departments. Hospital and health system executives are increasingly engaging external consultants to find ways to decrease operating costs, with the caveat of maintaining or improving quality, safety, and patient satisfaction and engagement.

Given these cost reduction pressures, what can respiratory therapy medical directors and administrative directors do to ensure that quality and safety are maintained?

We believe that quality and safety can be maintained and improved in this bottom-line-focused environment if we collaborate with stakeholders and communicate the value of respiratory care services. Following are some examples of how to reinvigorate this collaboration. The list is far from complete, but we believe it is a good starting point for making a significant difference.

Science

We recognize that much of our practice is based on levels of evidence, and we must use this evidence as a basis for our services.

In talking and working with RT administrative directors across the country, we continue to see non-value-added “treatments” being provided, such as incentive spirometry and aerosolized acetylcysteine. Not only is this a waste of resources, but, because of it, our clinical RTs are not providing therapy.

One of the best opportunities to decrease cost is to eliminate waste. These services must be eliminated. For those patients who require secretion clearance/lung expansion, we can provide evidence-based services such as oscillating positive expiratory pressure.

Protocols

Respiratory care protocols have been around for decades, but surveys indicate that only half of all RT departments utilize them. Under the guidance of NAMDRC, the AARC has been educating RTs to transition from “treatments” to evidence-based protocols. Various barriers remain, and our challenge is to implement proven care plans in every department.

Quality Assurance

The health-care industry made the transition from “Quality Control” to “Quality Assurance” several decades ago. However, many RT administrative directors lack the knowledge and/or resources necessary to create a comprehensive QA program, much less participate in clinical research. We suggest creating a standardized model to be adopted by RT departments across the country that would measure and communicate the value of respiratory care services.

Productivity/Staffing

An area where consultants and executives often focus their cost-saving efforts is staffing. Given that 50% to 60% of operating costs are personnel, this is to be expected.

Many organizations, however, are using the wrong metrics—such as procedures, CPT codes, and billables—to project staffing FTEs. Physicians and RTs understand that these metrics are not useful and must convince consultants and executives of this. The AARC Uniform Reporting Manual, which is currently being updated, is the best guide for determining appropriate staffing.

Education

Another common step in cost control has been the significant reduction or total elimination of education budgets.

During the past 5 years, RT leaders attending the AARC Summer Forum have been polled regarding whether they received financial assistance to attend the Management Section program. Sadly, the number attending on their own dime far surpasses those receiving financial assistance.

Additionally, the RT profession is witnessing more department-based education, which, in some cases, is not education at all, but marketing, cleverly packaged in the form of CEUs.

We fully understand these changes and recognize why they have occurred. However, we suggest working together to differentiate marketing from education and to ensure that clinical staff receive what they need to deliver quality care.

It is vital for us to educate our physician leaders and pulmonary and critical care fellows on the science of respiratory care. There is a significant knowledge gap, and we have a great opportunity to improve the training of fellows. It is difficult to attract active medical directors if they don’t understand the science. We believe NAMDRC can play an important role by addressing these knowledge deficits.

Office staff cohesiveness remains important

Recently, a lawyer and I were on the phone about a case, and he mentioned how lucky he was to have had the same staff over the course of a 25-year career, and he was afraid they’d retire before he did.

I feel the same way. My medical assistant has been with me since day one 18 years ago, my secretary since 2004. I hope they keep putting up with me until I hang up my reflex hammer.

It’s impossible to put a great team together just by talent alone. The chemistry and ability to work together are as critical as talent, if not more so, and are far more intangible and unpredictable.

I’ve been lucky that way. The three of us are cohesive. We try to keep some degree of fun in our work routine as each day goes on. My secretary’s 2-year-old daughter, who comes with her every day, adds to the family atmosphere that many patients notice. It’s not uncommon to be asked if the group of us are related. (We’re not.)

Back when I started out, it was with a large group that saw its people as replaceable, and treated them as such. As a result, there was a high turnover rate of medical assistants and front office and clerical staff. This led to problems with workflow and patient care, as there was always someone new being trained.

I can’t imagine having a better team than I have now, and will share this advice with anyone starting a practice: When you get the right people, count yourself lucky and do what you have to do to keep them. They’re worth it.

It’s a lot nicer to work with friends than strangers. It makes the drudgery of the job more interesting, the low points better, and the highs fun.

I wouldn’t have it any other way.

Dr. Block has a solo neurology practice in Scottsdale, Ariz.

Radial access PCI best for acute coronary syndrome patients

WASHINGTON – Radial access should be the preferred approach to percutaneous interventions in acute coronary syndromes in the United States as it is now in Europe, according to an investigator whose data from a randomized trial substudy were the topic of a late-breaker presentation at CRT 2018 sponsored by the Cardiovascular Research Institute at Washington Hospital Center.

It was not just these data, but “the totality of the evidence supports radial access as the first choice in PCI [percutaneous coronary intervention] for acute coronary events,” asserted Elmir Omerovic, MD, professor of cardiology at Sahlgrenska University Hospital, Gothenburg, Sweden.

The data for the prespecified substudy were drawn from the VALIDATE-SWEDEHEART trial, published 6 months ago (N Engl J Med 2017;377:1132-42). In that trial, which randomized patients with ACS undergoing PCI to heparin or bivalirudin, there was no difference between anticoagulation strategies for the primary composite endpoint of death from any cause, myocardial infarction, or bleeding from any cause.

Of the 6,006 randomized patients in that study, of whom about half had an ST-elevation MI and half had a non–ST-elevation MI event, 5,424 (90%) underwent PCI by the radial route and the remainder by the femoral route. The goal of the prespecified subgroup analysis presented by Dr. Omerovic was to evaluate whether choice of access site had an impact on the primary endpoint or its components.
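As a quick check on the split described above, the reported counts can be turned into a femoral count and a radial percentage. This is only an illustrative sketch: the function name `access_split` is hypothetical, and the only inputs are the two figures quoted in the article (6,006 randomized patients, 5,424 treated by the radial route).

```python
def access_split(total_patients: int, radial_patients: int) -> tuple[int, float]:
    """Return (femoral count, radial share in percent, rounded to 1 decimal).

    Assumes every patient who was not treated by the radial route
    was treated by the femoral route, as the article states.
    """
    femoral_patients = total_patients - radial_patients
    radial_percent = round(100 * radial_patients / total_patients, 1)
    return femoral_patients, radial_percent


# Figures quoted in the article.
femoral, radial_pct = access_split(6006, 5424)
print(femoral, radial_pct)  # 582 90.3
```

The radial share of 90.3% matches the rounded 90% in the text and leaves 582 patients in the femoral group, which is why the comparison rests on a much smaller femoral arm.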

There was a 34% relative risk reduction (P less than .001) in the primary composite endpoint at the end of 180 days of follow-up “after adjusting basically for all of the important differences that might affect risk,” Dr. Omerovic reported. The long list included type of acute coronary syndrome, age, hypertension, diabetes, and history of vascular events or prior procedures.

Radial access was also linked with a 59% lower risk of mortality and a 43% lower risk of major bleeding (both P less than .001) when compared with femoral access, according to Dr. Omerovic. He attributed most of the difference in bleeding to bleeding events at the access site.

Baseline differences between the two groups indicated that patients undergoing PCI with femoral access were a higher-risk group. Femoral patients had higher rates of diabetes, hyperlipidemia, and hypertension (all P less than .001). They were also older, more likely to be in Killip class II or above, and more likely to have history of prior MI, prior stroke, and prior coronary artery bypass grafting (also all P less than .001).

Dr. Omerovic maintained that proper adjustment was made for these confounders. He also defended his conclusion that radial access should be preferred over femoral access by stressing that the data regarding this question, including those from other studies and from registries, are uniformly consistent. “They all go in the same direction,” he said.

Dr. Omerovic claimed that the key message is that “there should be more radial access performed in the United States.” The 90% radial access in this study is reflective of current practice in Sweden and most other European countries, according to Dr. Omerovic. In contrast, the U.S. rate is closer to 60% by some estimates.

“I would always be concerned that if you are performing radial access 90% of the time, you are going to lose your femoral skills,” Dr. Grines said. Like other U.S. interventionalists who commented during an animated discussion, she insisted that a prospective randomized trial, not a subgroup analysis, is needed before declaring radial access preferred.

Dr. Omerovic reports financial relationships with AstraZeneca, Bayer, and Boston Scientific.

SOURCE: Omerovic E. CRT 2018.

REPORTING FROM THE 2018 CRT MEETING

Key clinical point:

Major finding: At 180 days, the risk of the primary composite endpoint (death from any cause, myocardial infarction, or bleeding) was 34% lower for PCI performed with radial access than with femoral access after risk adjustment.

Data source: Prespecified subgroup analysis of a randomized trial.

Disclosures: Dr. Omerovic reports financial relationships with AstraZeneca, Bayer, and Boston Scientific.

Source: Omerovic E. CRT 2018.

Ultrasound study supports deep Koebner mechanism in PsA pathology

An ultrasonographic study describing the greater presence of thicker digital accessory pulleys in patients with psoriatic arthritis (PsA) than in those with only psoriatic skin disease, rheumatoid arthritis, or no pathology has lent more evidence for a “deep Koebner” response mechanism in the development of joint disease in people with psoriasis.

“The thicker pulleys in RA compared with HCs [healthy controls] might point towards a nonspecific effect of a chronic autoimmune tenosynovitis on the adjacent pulleys,” Ilaria Tinazzi, PhD, of the Sacro Cuore-Don Calabria Hospital, Verona, Italy, and her colleagues wrote in Annals of the Rheumatic Diseases. “However, the greater magnitude of pulley thickening in PsA, especially in the setting of dactylitis, suggests an intrinsic pathology in the pulley contributing to PsA-related tenosynovitis.”

Dr. Tinazzi and her research team found that the pulleys in patients with PsA were thicker in every digit than they were in patients with RA and healthy controls, but pulley thickness also differed for each group of patients when compared with one another. Patients with RA had thicker pulleys than did healthy controls, particularly in the A1 pulley, but showed no differences in the A2 and A4 pulleys of the second and fourth digits. Even patients with only psoriasis had thicker pulleys than did the healthy controls. Patients with PsA exhibited thicker A1 and A4 pulleys, compared with patients with only psoriasis, while A2 pulleys displayed no statistical difference. Among PsA patients, 165 of 243 (68%) pulleys were thickened, whereas only 41 of 243 (17%) were thickened among RA patients (P less than .001). A multiple-regression analysis revealed that only PsA was associated with the number of thickened pulleys (odds ratio, 4.8; 95% confidence interval, 3.3-6.3; P = .001).
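The two proportions quoted above can be reproduced from the raw counts. The sketch below is illustrative only: the helper name `thickened_percent` is hypothetical, and the crude ratio it prints is a simple unadjusted comparison of the two proportions, not the adjusted odds ratio of 4.8 reported from the multiple-regression analysis.

```python
def thickened_percent(thickened: int, examined: int) -> float:
    """Percentage of examined pulleys that were thickened, to 1 decimal."""
    return round(100 * thickened / examined, 1)


# Counts quoted in the article: 243 pulleys examined in each group.
psa_pct = thickened_percent(165, 243)
ra_pct = thickened_percent(41, 243)

# Unadjusted ratio of the two proportions (denominators are equal,
# so this reduces to 165 / 41). Not comparable to the adjusted odds ratio.
crude_ratio = round((165 / 243) / (41 / 243), 2)

print(psa_pct, ra_pct, crude_ratio)  # 67.9 16.9 4.02
```

Rounded to whole numbers, these give the 68% and 17% reported in the study summary.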

The size of the study population and the method for defining pulley thickness limited the study. Measurements were taken only in three digits of the dominant hand of each patient. Among PsA patients, only those with hand involvement participated in the study, which may limit the translation of the results to all PsA patients. Additionally, whether pulley changes trigger PsA tenosynovitis or are themselves a reactive process needs additional investigation.

The results of the study suggest that accessory pulleys are subjected to high physical stress and that they exhibit deep Koebner effects and thicken in response, Dr. Tinazzi and her associates wrote.

“Indeed, these findings suggest that such a mechanism is possible given the frequency of thickening of these structures in PsA,” wrote Dr. Tinazzi and her associates. “It will be important to show that such changes are present in early PsA where their presence would support the idea of early biomechanical alterations in tendon function that could contribute to dactylitic tenosynovitis.”

This study received no external funding. Several of the researchers have received grants or honoraria from pharmaceutical companies.

SOURCE: Tinazzi I et al. Ann Rheum Dis. 2018 Mar 6. doi: 10.1136/annrheumdis-2017-212681.

FROM ANNALS OF THE RHEUMATIC DISEASES

Key clinical point: Patients with psoriatic arthritis have thicker accessory pulleys in their digits than do those with rheumatoid arthritis, psoriasis only, or no inflammatory rheumatologic disease.

Major finding: More accessory pulleys were thickened in the PsA group than in the rheumatoid arthritis group (68% vs. 17%; P less than .001).

Study details: An ultrasonographic study of digital pulley thickness in 96 patients with PsA, RA, psoriasis only, or no inflammatory rheumatologic disease.

Disclosures: This study received no external funding. Several of the researchers have received grants or honoraria from pharmaceutical companies.

Source: Tinazzi I et al. Ann Rheum Dis. 2018 Mar 6. doi: 10.1136/annrheumdis-2017-212681.

Turning Up the Heat on ICU Burnout

The work of critical care clinicians can create a perfect storm for emotional exhaustion, depersonalization, and reduced self-efficacy – widely known as burnout. Burnout is occurring in record numbers among physicians in general – more than twice as frequently as among non-health-care workers – and intensivists top the chart. Clinicians from all specialties in medicine today experience the frustrations of workplace chaos and loss of control, displacement of meaningful work with menial work, and ever-increasing documentation requirements and electronic health record challenges – all contributing to burnout. Intensivists and other ICU professionals, such as advanced practice providers and nurses, however, experience the added challenge of working in a highly stressful environment characterized by fast-paced, high-stakes decision making, long and irregular hours, and end-of-life scenarios often clouded by moral distress. These and other drivers contribute to high rates of burnout.

Being burned out takes its toll on health-care workers, contributing to psychological and physical manifestations, alcohol or substance abuse, posttraumatic stress disorder, and even suicidal ideation. Additionally, burnout carries important negative consequences for the organization and for the patient directly, including higher rates of employee turnover, lower quality of work, more medical errors, and reduced patient satisfaction. Unfortunately, burnout rates continue to rise with alarming speed.

Fortunately, there is increasing attention paid to the magnitude and potential impact of burnout, compelling important organizations to highlight the problem and assist clinicians in combating burnout and its consequences. For example, the National Academy of Medicine (NAM) has convened an Action Collaborative on Clinician Well-Being and Resilience and invited more than 100 organizations to publish their statement of commitment to improve clinician well-being and reduce clinician burnout (https://nam.edu/initiatives/clinician-resilience-and-well-being/). The American Medical Association (AMA) has developed modules and tools to assist clinicians and administrators in taking important steps to prevent burnout (https://www.stepsforward.org/modules/physician-burnout).

CHEST has been an active participant in addressing burnout in ICU professionals, including in an important partnership with the American Association of Critical-Care Nurses (AACN), the American Thoracic Society (ATS), and the Society of Critical Care Medicine (SCCM), which together form the Critical Care Societies Collaborative (CCSC). The CCSC, whose members include more than 150,000 critical care professionals in the United States, has established a principal goal of mitigating ICU burnout (#StopICUBurnout). One of the first CCSC efforts was to publish a white paper simultaneously in all four journals of the CCSC professional societies that provides the rationale and direction for a “call for action” to tackle ICU burnout (Moss M, Good VS, Gozal D, Kleinpell R, Sessler CN. Burnout syndrome in critical care health care professionals: A call for action. Chest. 2016;150[1]:17). Recently, the CCSC sponsored a National Summit on the Prevention and Management of Burnout in the ICU (http://ccsconline.org/optimizing-the-workforce/burnout). Fifty-five invited participants brought wide-ranging expertise and substantial enthusiasm to the task of deconstructing ICU burnout and identifying knowledge gaps and future directions. Areas of focused discussion included factors influencing burnout, identifying individuals with burnout, the value of organizational and individual interventions to prevent and manage burnout, and translation of these discussions into a research agenda. CHEST and the CCSC are committed to the goals of enhancing clinician well-being and eliminating burnout in the ICU.

The work of critical care clinicians can create a perfect storm for emotional exhaustion, depersonalization, and reduced self-efficacy – widely known as burnout. Burnout is occurring in record numbers among physicians in general – more than twice as frequently as for non-health-care workers – and intensivists top the chart. Clinicians from all specialties in medicine today experience the frustrations of workplace chaos and loss of control, displacement of meaningful work with menial work, and ever increasing documentation requirements and electronic health record challenges – all contributing to burnout. Intensivists and other ICU professionals, such as advanced practice providers and nurses, however, experience the added challenge of working in a highly stressful environment characterized by fast-paced high-stakes decision making, long and irregular hours, and end-of-life scenarios often clouded by moral distress. These and other drivers contribute to high rates of burnout.

Being burned out takes its toll on health-care workers, contributing to psychological and physical manifestations, alcohol or substance abuse, posttraumatic stress disorder, and even suicidal ideation. Additionally, burnout carries important negative consequences for the organization and directly to the patient, including higher rates of employee turnover, lower quality of work, more medical errors, and reduced patient satisfaction. Unfortunately, burnout rates continue to rise with alarming speed.

Fortunately, there is increasing attention paid to the magnitude and potential impact of burnout, compelling important organizations to highlight the problem and assist clinicians in combating burnout and its consequences. For example, the National Academy of Medicine (NAM) has convened an Action Collaborative on Clinician Well-Being and Resilience and invited more than 100 organizations to publish their statement of commitment to improve clinician well-being and reduce clinician burnout (https://nam.edu/initiatives/clinician-resilience-and-well-being/). The American Medical Association (AMA) has developed modules and tools to assist clinicians and administrators in taking important steps to prevent burnout (https://www.stepsforward.org/modules/physician-burnout).

CHEST has been an active participant in addressing burnout in ICU professionals, including through an important partnership with the American Association of Critical-Care Nurses (AACN), the American Thoracic Society (ATS), and the Society of Critical Care Medicine (SCCM) – the Critical Care Societies Collaborative (CCSC). The CCSC, whose members include more than 150,000 critical care professionals in the United States, has established a principal goal of mitigating ICU burnout (#StopICUBurnout). One of the first CCSC efforts was to publish a white paper simultaneously in all four journals of the CCSC professional societies that provides the rationale and direction for a “call for action” to tackle ICU burnout (Moss M, Good VS, Gozal D, Kleinpell R, Sessler CN. Burnout syndrome in critical care health care professionals: A call for action. Chest. 2016;150[1]:17). Recently, the CCSC sponsored a National Summit on the Prevention and Management of Burnout in the ICU (http://ccsconline.org/optimizing-the-workforce/burnout). Fifty-five invited participants brought wide-ranging expertise and substantial enthusiasm to the task of deconstructing ICU burnout and identifying knowledge gaps and future directions. Areas of focused discussion included factors influencing burnout, identifying individuals with burnout, the value of organizational and individual interventions to prevent and manage burnout, and translation of these discussions into a research agenda. CHEST and the CCSC are committed to the goals of enhancing clinician well-being and eliminating burnout in the ICU.

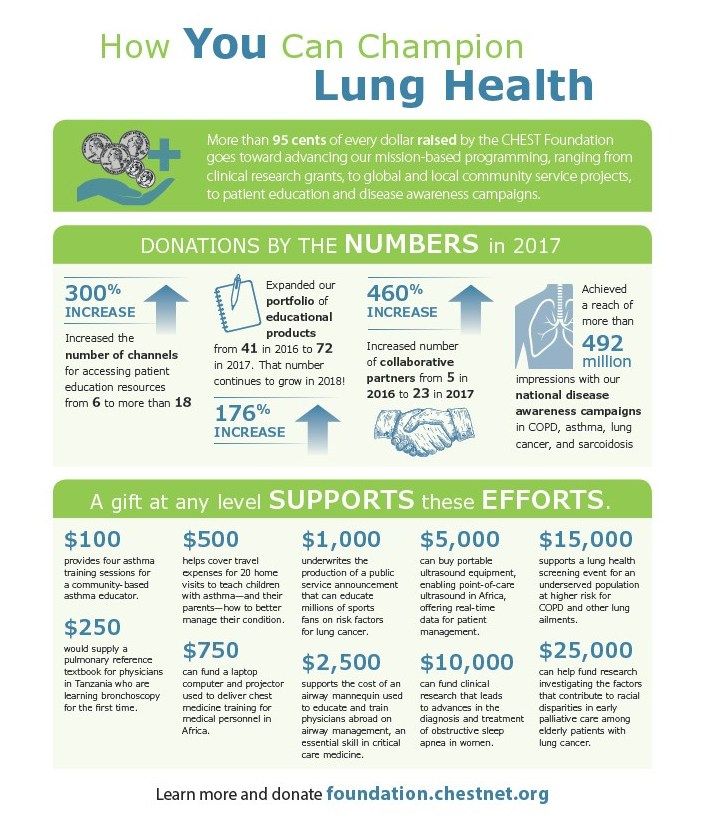

How You Can Champion Lung Health

More than 95 cents of every dollar raised by the CHEST Foundation goes toward advancing our mission-based programming, ranging from clinical research grants, to global and local community service projects, to patient education and disease awareness campaigns.

DONATIONS BY THE NUMBERS in 2017

300% INCREASE

176% INCREASE

Expanded our portfolio of educational products from 41 in 2016 to 72 in 2017. That number continues to grow in 2018!

460% INCREASE

Increased number of collaborative partners from 5 in 2016 to 23 in 2017.

Achieved a reach of more than 492 million impressions with our national disease awareness campaigns in COPD, asthma, lung cancer, and sarcoidosis

A gift at any level SUPPORTS these EFFORTS.

$100

provides four asthma training sessions for a community-based asthma educator.

$250

would supply a pulmonary reference textbook for physicians in Tanzania who are learning bronchoscopy for the first time.

$500

helps cover travel expenses for 20 home visits to teach children with asthma—and their parents—how to better manage their condition.

$750

can fund a laptop computer and projector used to deliver chest medicine training for medical personnel in Africa.

$1,000

underwrites the production of a public service announcement that can educate millions of sports fans on risk factors for lung cancer.

$2,500

supports the cost of an airway mannequin used to educate and train physicians abroad on airway management, an essential skill in critical care medicine.

$5,000

can buy portable ultrasound equipment, enabling point-of-care ultrasound in Africa, offering real-time data for patient management.

$10,000

can fund clinical research that leads to advances in the diagnosis and treatment of obstructive sleep apnea in women.

$15,000

supports a lung health screening event for an underserved population at higher risk for COPD and other lung ailments.

$25,000

can help fund research investigating the factors that contribute to racial disparities in early palliative care among elderly patients with lung cancer.

Learn more and donate at foundation.chestnet.org.

In Memoriam

W. Gerald Rainer, MD, FCCP, died November 14, 2017, one day after his 90th birthday. Dr. Rainer was President of the American College of Chest Physicians in 1982-1983. He practiced thoracic and cardiovascular surgery for 50 years with St. Joseph Hospital in Denver as his professional home. He was a respected leader, researcher, and educator, helping and mentoring countless residents, fellows, and many other health-care professionals. Dr. Rainer was a distinguished clinical professor of surgery at the University of Colorado School of Medicine and served on many University boards and committees. He published prolifically in many respected surgical journals and was able to masterfully blend his private practice with strong academic involvement. As President of the American College of Chest Physicians and many other respected medical and surgical organizations, he was also actively involved in international professional societies. CHEST extends its condolences to Dr. Rainer’s wife of 67 years, Lois, and to his family and friends.