AJO Awards Molly C. Meadows, MD, Second-Place Resident Writer's Award

2017 AJO Resident Writer's Awards

Second-Place Award

An Original Study

Effects of Platelet-Rich Plasma and Indomethacin on Biomechanics of Rotator Cuff Repair

Molly C. Meadows, MD, David M. Levy, MD, Christopher M. Ferry, MS, Thomas R. Gardner, MCE, Takeshi Teratani, MD, and Christopher S. Ahmad, MD

Dr. Meadows is currently in her chief year of orthopedic surgery residency training at Rush University Medical Center. Prior to residency, she completed undergraduate education at Brown University and medical school at Columbia University. Dr. Meadows is beginning a sports medicine fellowship at Stanford University in August 2018, and she plans to pursue a pediatric orthopedic fellowship thereafter.

Her research interests include osteochondritis dissecans lesions, patellofemoral disorders, and other sports injuries in the skeletally immature population.

Read the full version of Dr. Meadows' original study.

AJO Awards Joseph T. Patterson, MD, Third-Place Resident Writer's Award

2017 AJO Resident Writer's Awards

Third-Place Award

An Original Study

Does Preoperative Pneumonia Affect Complications of Geriatric Hip Fracture Surgery?

Joseph T. Patterson, MD, Daniel D. Bohl, MD, MPH, Bryce A. Basques, MD, Alexander H. Arzeno, MD, and Jonathan Grauer, MD

Dr. Patterson is completing his orthopedic surgery residency at the University of California San Francisco, and will continue training with a fellowship in orthopedic trauma at Harborview Medical Center. Prior to residency, he completed undergraduate education at the University of California Los Angeles and medical school at Yale University.

His research interests include geriatric hip fracture care, interdisciplinary trauma care performance improvement, and outcome assessment in orthopedic trauma.

Read the full version of Dr. Patterson's original study.

Ketamine formulation study is ‘groundbreaking’

It is remarkable to consider that we now have more than 30 medications approved by the Food and Drug Administration as monotherapy or augmentation for the treatment of major depressive disorder. And yet, we know very little about how these medications perform for patients at high risk for suicide.

Historically, suicidal patients have been excluded from phase 3 antidepressant trials, which provide the basis for regulatory approval. Even studies in treatment-resistant depression (TRD) have tended to exclude patients with the highest risk of suicide. Further, the FDA does not mandate that a new antidepressant medication demonstrate any benefit for suicidal ideation.

Focus on high-risk patients

The recent report by Canuso et al. [3] in the American Journal of Psychiatry is a groundbreaking study: Previous placebo-controlled trials of intravenous ketamine in depressed patients with clinically significant suicidal ideation have used only one-time dose administrations [4-6].

This phase 2, proof-of-concept trial randomized 68 adults with MDD at 11 U.S. sites, which were primarily academic medical centers. In contrast to previous ketamine studies, which recruited patients via advertisement or clinician referral, patients were identified and screened in an emergency department or an inpatient psychiatric unit. Participants had to voluntarily agree to hospitalization for 5 days following randomization, with the remainder of the study conducted on an outpatient basis. Intranasal esketamine (84 mg) or placebo was administered twice per week over 4 weeks, in addition to standard-of-care antidepressant treatment. The primary outcome was the change in the Montgomery-Åsberg Depression Rating Scale (MADRS) score from baseline to 4 hours after the first dose of study medication.

For the primary MADRS outcome, esketamine statistically separated from placebo at 4 hours and 24 hours, with moderate effect sizes (0.61 to 0.65). There were no significant differences at the end of the double-blind period at day 25 or at posttreatment follow-up at day 81. For the suicidal thoughts item of the MADRS, esketamine’s efficacy was greater than that of placebo at 4 hours, but not at 24 hours or at day 25. Clinician global judgment of suicide risk was not statistically different between groups at any time point, although the esketamine group had numerically greater improvements at 4 hours and 24 hours. There were no group differences in self-report measures (Beck Scale for Suicidal Ideation or Beck Hopelessness Scale) at any time point.

Regarding safety and tolerability, adverse events led to early termination for 5 patients in the esketamine group, compared with one in the placebo group. The most common adverse events were nausea, dizziness, dissociation, unpleasant taste, and headache, which were more frequent in the esketamine group. Transient elevations in blood pressure and dissociative symptoms generally peaked at 40 minutes after dosing and returned to baseline by 2 hours.

Putting findings in perspective

Several aspects of the trial are noteworthy. First, enrolled patients were markedly depressed, and half required suicide precautions in addition to hospitalization. Three patients (all in the placebo group) made suicide attempts during the follow-up period, further evidence that these patients were at extremely high risk. Second, the sample was significantly more racially diverse (38% black or African American) than most previous ketamine studies. Third, psychiatric hospitalization plus the initiation of standard antidepressant medication resulted in substantial improvements for many patients randomized to intranasal placebo spray. Inflated short-term placebo responses are commonly seen even in severely depressed patients, making signal detection especially challenging for new drugs. Finally, it is difficult to compare the results of this study with the few placebo-controlled trials of intravenous ketamine for patients with MDD and significant suicidal ideation, because of differences in outcome measures, patient populations, doses, and route of administration. This study used the Suicide Ideation and Behavior Assessment Tool, a computerized, modular instrument with patient-reported and clinician-reported assessments, which was developed specifically to measure rapid changes in suicidality and awaits further validation in ongoing studies.

Limitations of this study include the absence of reported plasma esketamine levels. Is it possible that higher doses of esketamine, or a different dosing schedule, would have resulted in greater efficacy? The 84-mg dose used in this trial recently was found to be safe and effective in patients with TRD [2], and was reported to produce plasma levels similar to those of IV esketamine 0.2 mg/kg [2]. This dose, in turn, corresponds to a racemic ketamine dose of approximately 0.31 mg/kg [1]. Future studies will need to examine the antisuicidal and antidepressant effects of the most commonly used racemic ketamine dose (0.5 mg/kg), compared with 84 mg intranasal esketamine. The twice-weekly dosing schedule was supported empirically by a previous study of intravenous ketamine showing that twice-weekly infusions were as effective as thrice-weekly administrations [7]. It is unknown, however, whether even less-frequent administrations (such as once weekly) would have been more effective than twice-weekly dosing over the 4-week, double-blind period. Finally, the authors raise the possibility of functional unblinding, which always is a concern in ketamine studies. Although the placebo solution contained a bittering agent to simulate the taste of esketamine intranasal solution, the integrity of the blind was not reported.

Conclusion

Overall, this study is a promising start. In my view, the risk-benefit ratio for this approach is acceptable, given the morbidity and mortality associated with suicidal depression. The fact that esketamine nasal spray would be administered only under the observation of a clinician in a medical setting, and not be dispensed for at-home use, is reassuring and would mitigate the potential for abuse. In the meantime, our field awaits the results of larger phase 3 studies for patients with MDD at imminent risk for suicide.

Dr. Mathew is affiliated with the Michael E. DeBakey VA Medical Center and the Menninger Department of Psychiatry and Behavioral Sciences at the Baylor College of Medicine in Houston. Over the last 12 months, he has served as a paid consultant to Alkermes and Fortress Biotech. He also has served as an investigator on clinical trials sponsored by Janssen Research and Development, the manufacturer of intranasal esketamine, and as an investigator on a trial sponsored by NeuroRx.

References

1. Biol Psychiatry. 2016 Sep 15;80(6):424-31.

2. JAMA Psychiatry. 2018 Feb 1;75(2):139-48.

3. Am J Psychiatry. 2018 Apr 16. doi: 10.1176/appi.ajp.2018.17060720.

4. Am J Psychiatry. 2018 Apr 1;175(4):327-35.

5. Psychol Med. 2015 Dec;45(16):3571-80.

6. Am J Psychiatry. 2018 Feb 1;175(2):150-8.

7. Am J Psychiatry. 2016 Aug 1;173(8):816-26.

Therapeutic endoscopy expands reach to deep GI lesions

BOSTON –

“The main difficulty with these procedures is closure,” Mouen A. Khashab, MD, said at the AGA Tech Summit, which was sponsored by the AGA Center for GI Innovation and Technology. “Sometimes you can create a large defect that you’re not sure you can close. You must have experience with large defect closure.”

In experienced hands, the endoscopic approaches spare adjacent large organs, have a complete resection rate approaching 95%, and an acceptable rate of adverse events. They can provide excellent surgical specimens that are more than adequate for histologic studies, although some cannot provide any information on margins, said Dr. Khashab, director of therapeutic endoscopy at Johns Hopkins University, Baltimore. “When doing a full-thickness endoscopic resection, you can’t comment on the margins. You’re not getting any normal tissue around the tumor, and this can create an issue with some patients.”

Dr. Khashab briefly described the following three endoscopic resection techniques, which are suitable for deep GI tumors:

- Submucosal tunneling endoscopic resection: STER is most suitable for smaller tumors (4 cm or less). Tumors of this size are easily removed en bloc via the endoscopic tunnel. Larger tumors can also be resected this way, but need to be removed piecemeal after dissection off the muscle layer. This is an acceptable alternative in leiomyomas but not in gastrointestinal stromal tumors. “For this technique, you introduce the scope into the submucosal layer and create a space with tunneling,” Dr. Khashab said. “We then expose the tumor, dissect it off the wall of the muscularis propria, and pull it out of the tunnel.” A 2017 meta-analysis examined outcomes in 28 studies with data on 1,085 lesions. The pooled complete resection and en bloc resection rates were 97.5% and 94.6%, respectively. Common complications associated with STER were air leakage symptoms (15%) and perforation (5.6%). “The perforation rate is reasonable, I think. A lot of these complications can be managed intraoperatively with clipping or suturing,” Dr. Khashab noted.

- Endoscopic submucosal dissection: ESD is now being used to resect tumors that originate from the muscularis propria. “This is something I didn’t used to think was even possible,” Dr. Khashab said. “But we are seeing some literature on this now. A lot of these tumors originate from the superficial MP [muscularis propria], so we can dissect off the muscle without needing a full-thickness resection.” He presented findings from a large study comprising 143 patients with submucosal gastric or esophageal tumors that arose from the muscularis propria at the esophagogastric junction. These were large lesions, with a mean size of 17.6 cm and a range of up to 50 cm. The en bloc resection rate was 94%. There were six perforations (4%), along with four cases of pneumoperitoneum and two of pneumothorax, all of which resolved without further surgery. There were no local recurrences or metastases when the 2012 study appeared, with a mean follow-up of 2 years.

- Endoscopic full-thickness resection: EFTR “is a technically demanding procedure, and we frequently have to work on these tumors in retroflexion,” Dr. Khashab said. He referred to a 2011 paper, which described the EFTR technique used in 26 patients with gastric cancers. The tumors (mean size, 2.8 cm) were located in the gastric corpus and in the gastric fundus. Although the procedures were lengthy, ranging from 60 to 145 minutes, they were highly successful, with a 100% complete resection rate. Nevertheless, there was also a 100% perforation rate, although all of these perforations were closed intraoperatively. There was no postoperative gastric bleeding, sign of peritonitis, or abdominal abscess. No residual lesion or recurrence was found during the 6- to 24-month follow-up period.

Dr. Khashab is a consultant and medical advisory board member for Boston Scientific and Olympus.

BOSTON –

“The main difficulty with these procedures is closure,” Mouen A. Khashab, MD, said at the AGA Tech Summit, which was sponsored by the AGA Center for GI Innovation and Technology. “Sometimes you can create a large defect that you’re not sure you can close. You must have experience with large defect closure.”

In experienced hands, the endoscopic approaches spare adjacent large organs, have a complete resection rate approaching 95%, and an acceptable rate of adverse events. They can provide excellent surgical specimens that are more than adequate for histologic studies, although some cannot provide any information on margins, said Dr. Khashab, director of therapeutic endoscopy at Johns Hopkins University, Baltimore. “When doing a full-thickness endoscopic resection, you can’t comment on the margins. You’re not getting any normal tissue around the tumor, and this can create an issue with some patients.”

Dr. Khashab briefly described three endoscopic resection techniques that are suitable for the following deep GI tumors:

- Submucosal tunneling endoscopic resection. STER is most suitable for smaller tumors (4 cm or less). Tumors of this size are easily removed en bloc via the endoscopic tunnel. Larger tumors can also be resected this way, but need to be removed piecemeal after dissection off the muscle layer. This is an acceptable alternative in leiomyomas but not in gastrointestinal stromal tumors. “For this technique, you introduce the scope into the submucosal layer and create a space with tunneling,” Dr. Khashab said. “We then expose the tumor, dissect it off the wall of the muscularis propria, and pull it out of the tunnel.” A 2017 meta-analysis examined outcomes in 28 studies with data on 1,085 lesions. The pooled complete resection and en bloc resection rates were 97.5% and 94.6%, respectively. Common complications associated with STER were air leakage symptoms (15%) and perforation (5.6%). “The perforation rate is reasonable, I think. A lot of these complications can be managed intraoperatively with clipping or suturing,” Dr. Khashab noted.

- Endoscopic submucosal dissection: ESD is now being used to resect tumors that originate from the muscularis propria. “This is something I didn’t used to think was even possible,” Dr. Khashab said. “But we are seeing some literature on this now. A lot of these tumors originate from the superficial MP [muscularis propria], so we can dissect off the muscle without needing a full-thickness resection.” He presented findings from a large study comprising 143 patients with submucosal gastric or esophageal tumors that arose from the muscularis propria at the esophagogastric junction. The lesions had a mean size of 17.6 mm and ranged up to 50 mm. The en bloc resection rate was 94%. There were six perforations (4%), along with four cases of pneumoperitoneum and two of pneumothorax, all of which resolved without further surgery. There were no local recurrences or metastases when the 2012 study appeared, with a mean follow-up of 2 years.

- Endoscopic full-thickness resection: EFTR “is a technically demanding procedure, and we frequently have to work on these tumors in retroflexion,” Dr. Khashab said. He referred to a 2011 paper that described the EFTR technique used in 26 patients with gastric cancers. The tumors (mean size, 2.8 cm) were located in the gastric corpus and in the gastric fundus. Although the procedures were lengthy, ranging from 60 to 145 minutes, they were highly successful, with a 100% complete resection rate. Nevertheless, there was also a 100% perforation rate, although all of these perforations were closed intraoperatively. There were no cases of postoperative gastric bleeding, signs of peritonitis, or abdominal abscess. No residual lesion or recurrence was found during the 6- to 24-month follow-up period.

Dr. Khashab is a consultant and medical advisory board member for Boston Scientific and Olympus.

BOSTON –

EXPERT ANALYSIS FROM THE 2018 AGA TECH SUMMIT

AJO Awards Joseph T. O'Neil, MD, First-Place Resident Writer's Award

2017 AJO Resident Writer's Awards

First-Place Award

An Original Study

Prospective Evaluation of Opioid Consumption After Distal Radius Fracture Repair Surgery

Joseph T. O’Neil, MD, Mark L. Wang, MD, PhD, Nayoung Kim, BS, Mitchell Maltenfort, PhD, and Asif M. Ilyas, MD

Dr. O'Neil completed his orthopedic surgery residency training at Thomas Jefferson University Hospital in Philadelphia, Pennsylvania. Prior to residency, he completed undergraduate education at the University of Notre Dame and medical school at Thomas Jefferson University. He was born and raised in the Philadelphia area.

Dr. O'Neil is currently an orthopedic foot and ankle surgery fellow at Union Memorial Hospital in Baltimore, Maryland.

His research interests include total ankle arthroplasty; the diagnosis, treatment, and prevention of periprosthetic joint infection in the ankle; and helping to combat the opioid epidemic in the United States by better understanding patterns of prescribing and use following common orthopedic surgical procedures.

Read the full version of Dr. O'Neil's original study.

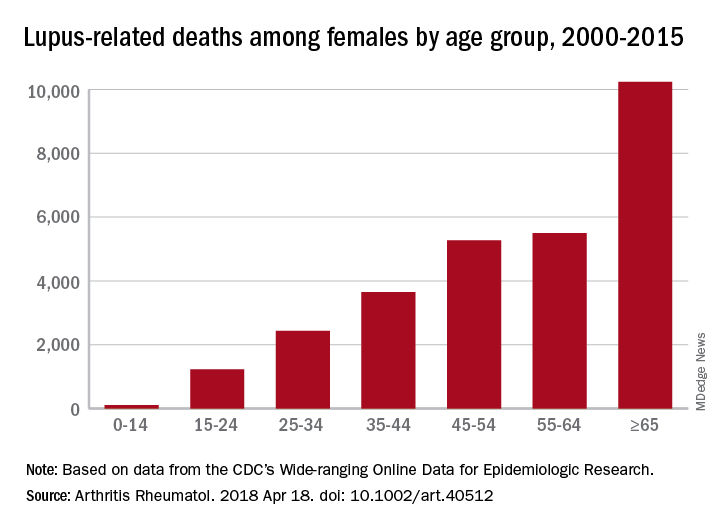

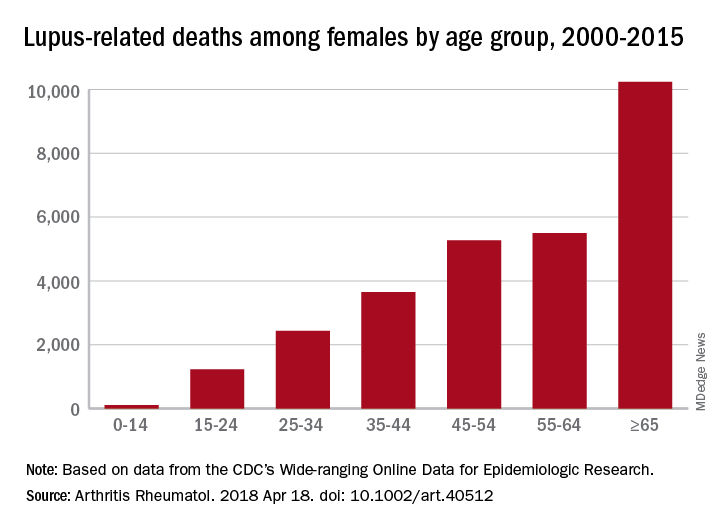

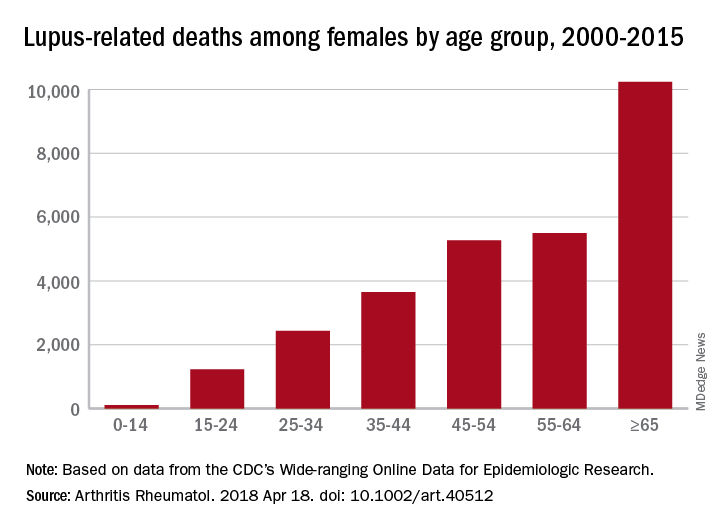

Lupus is quietly killing young women

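Systemic lupus erythematosus (SLE) is one of the leading causes of death among young women in the United States, according to researchers at the University of California, Los Angeles.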

SLE was either the underlying or a contributing cause of death for 28,411 females from 2000 to 2015, when it was one of the 20 most common causes of death among females aged 5-64 years in the United States, based on an analysis of the Centers for Disease Control and Prevention’s Wide-ranging Online Data for Epidemiologic Research multiple cause-of-death database, the researchers said.

SLE was the 10th-leading cause of death in the 15- to 24-year age group (1,226 deaths), the 14th-leading cause among women aged 25-34 (2,431 deaths) and 35-44 (3,646 deaths), and the 16th-leading cause in those aged 45-54 (5,271 deaths), Eric Y. Yen, MD, and Ram R. Singh, MD, reported in Arthritis & Rheumatology.

The numbers for SLE could be higher, though, since it is underreported on death certificates – by as much as 40% in one study – and other causes may be overreported. “Patients with SLE die prematurely of complications such as cardiovascular events, infections, renal failure, and respiratory diseases [and] these proximate causes of death may be perceived to be unrelated to SLE, when in fact the disease or the medications used for it predispose to them,” the investigators said.

Dr. Yen was supported by the National Institutes of Health and UCLA Children’s Discovery and Innovation Institute. Dr. Singh was supported by the NIH, the Lupus Foundation of America, and the Rheumatology Research Foundation.

SOURCE: Yen EY and Singh RR. Arthritis Rheumatol. 2018 Apr 18. doi: 10.1002/art.40512.

FROM ARTHRITIS & RHEUMATOLOGY

2018 Resident Writer’s Award Information

The 2018 Resident Writer’s Award competition is sponsored by Johnson & Johnson. Orthopedic residents are invited to submit original studies, review papers, or case reports for publication. Papers published in 2018 will be judged by The American Journal of Orthopedics Editorial Board. Honoraria will be presented to the winners at the 2019 AAOS annual meeting.

- $1,500 for the First-Place Award

- $1,000 for the Second-Place Award

- $500 for the Third-Place Award

To qualify for consideration, papers must have the resident as the first-listed author and must be accepted through the journal’s standard blinded-review process. Papers submitted in 2018 but not published until 2019 will automatically qualify for the 2019 competition. Manuscripts should be prepared according to our Information for the Authors and submitted via our online submission system, Editorial Manager®, at www.editorialmanager.com/AmJOrthop.

Read more about this year's RWA winners.

Supported by Johnson & Johnson

VIDEOS: High-priced drugs, out-of-pocket costs raise challenges for neurologists

LOS ANGELES – Neurologists can play an important role in helping patients gain access to high-cost, breakthrough drugs, while at the same time guiding patients to lower-cost options whenever possible, speakers said at the annual meeting of the American Academy of Neurology.

The use of the Orphan Drug approval pathway established in 1983 has gained a great deal of steam for rare neurologic diseases in recent years with the approval of a number of drugs, such as nusinersen (Spinraza) for spinal muscular atrophy, eteplirsen (Exondys 51) for Duchenne muscular dystrophy, and edaravone (Radicava) for amyotrophic lateral sclerosis, said Nicholas Johnson, MD, a pediatric neuromuscular disease specialist at the University of Utah, Salt Lake City.

But given that only 2% of U.S. physicians are neurologists, yet 18% of rare diseases are neurologic and 11% of drugs in development overall are for neurologic diseases, neurologists face many challenges in getting access to these new high-priced drugs for their patients, said Dr. Johnson, who leads the AAN’s Neurology Drug Pricing Task Force and is also chair of the AAN Government Relations Committee.

These challenges include an increased administrative burden on staff, obtaining insurance approval, finding administration sites, and being able to perform special patient assessments, he said in an interview.

Many high-priced drugs commonly prescribed for chronic neurologic conditions and diagnostic tests also have high out-of-pocket costs for patients, but it is remarkably hard even for well-informed experts to find the actual costs that patients will pay out of pocket for such drugs and tests, according to Brian Callaghan, MD, a neuromuscular disease specialist at the University of Michigan, Ann Arbor.

Neurologists can seek more affordable alternatives to drugs when the out-of-pocket expenses are too great, said Dr. Callaghan, who also serves on the Neurology Drug Pricing Task Force. It may be advisable to put certain drugs lower on a list of potential treatment options than others for a chronic condition such as epilepsy because of their out-of-pocket costs, but it can be frustratingly hard to determine these costs in advance.

The University of Michigan Health System is unique in having drug cost data provided as part of information presented to physicians in electronic health records, but this is not the case in most other clinics. Until doctors can regularly access patient-specific drug and diagnostic testing out-of-pocket costs through EHRs, finding the best affordable medications for patients will remain a costly and time-consuming process, he said in an interview.

REPORTING FROM AAN 2018

Speakers at FDA advisory meetings have hidden conflicts

Nearly a quarter of public speakers at meetings of a Food and Drug Administration advisory committee had conflicts of interest, about 20% of which were undisclosed, a study finds.

Matthew S. McCoy, PhD, of the University of Pennsylvania, Philadelphia, and his colleagues analyzed the public speakers at 15 meetings of the FDA Anesthetic and Analgesic Drug Products Advisory Committee from September 2009 to April 2017 related to the approval of drug products. (The committee advises the FDA on anesthesiology and pain management drug products.)

Investigators evaluated meeting transcripts to learn whether speakers reported chronic pain; had received the drug under review; reported an organization affiliation; reported a conflict of interest; and supported, opposed, or were neutral toward drug approval. Of the 112 speakers studied, about 20% disclosed a conflict of interest, 5% had an undisclosed financial association with the sponsor that existed prior to the meeting, and 13% had an undisclosed financial association of indeterminate date, the researchers reported in JAMA Internal Medicine on April 23.

Of those 112 speakers, 20 reported having experienced chronic pain and 11 reported receiving the drug under review. Overall, about 70% of speakers (76 out of 112) supported drug approval, the study found. Speakers who disclosed a conflict of interest were significantly more likely to support drug approval. When financial associations of indeterminate date were classified as conflicts of interest, speakers with a conflict were more than eight times as likely to support drug approval.

Dr. McCoy and his colleagues noted that the findings raise concerns about pro-sponsor bias among speakers at advisory committee meetings. They propose that the FDA require – rather than only encourage – speakers to disclose conflicts of interest at all of the agency’s advisory committee meetings.

SOURCE: McCoy MS et al. JAMA Intern Med. 2018 Apr 23. doi:10.1001/jamainternmed.2018.1324

The influence of pharmaceutical companies permeates the open public hearing, even though these firms already have highlighted their products during the sponsor presentation.

Currently, pharmaceutical and medical device companies publicly report specified types of payments to physicians through the federal Open Payments program. In my view, these companies also should be required to publicly report payments to professional associations, patient groups, and other nongovernmental organizations.

Dr. McCoy and his colleagues recommend that the Food and Drug Administration require – not merely encourage – public speakers to disclose their conflicts of interest. This is a recommendation with which I disagree. Advisory committee members fill out forms related to conflicts of interest under penalty of perjury. In contrast, speakers at the open public hearing often sign up to speak on the day of the advisory committee meeting, and no documentation is provided, giving FDA staff no opportunity to corroborate any disclosures (or lack thereof). The chair of the FDA advisory committee simply encourages public speakers to disclose any conflicts. It does not seem consistent with the spirit of inclusiveness that should characterize the open public hearing to exclude a speaker who does not disclose whether he or she has conflicts. Nonetheless, the chair should directly query any speaker who fails to disclose whether he or she has conflicts, and the subsequent testimony should be considered in the light of any refusal to disclose.

Peter Lurie, MD, is president of the Center for Science in the Public Interest, Washington. Dr. Lurie reports that he worked at the FDA during 2009-2017, including as associate commissioner for public health strategy and analysis from 2014 to 2017. His comments were made in an editorial accompanying Dr. McCoy’s study (JAMA Intern Med. doi:10.1001/jamainternmed.2018.1324).

The influence of pharmaceutical companies permeates the open public hearing, even though these firms already have highlighted their products during the sponsor presentation.

Currently, pharmaceutical and medical device companies publicly report specified types of payments to physicians through the federal Open Payments program. In my view, these companies also should be required to publicly report payments to professional associations, patient groups, and other nongovernmental organizations.

Dr. McCoy and his colleagues recommend that the Food and Drug Administration require – not merely encourage – public speakers to disclose their conflicts of interest. This is a recommendation with which I disagree. Advisory committee members fill out forms related to conflicts of interest under penalty of perjury. In contrast, speakers at the open public hearing often sign up to speak on the day of the advisory committee meeting, and no documentation is provided, giving FDA staff no opportunity to corroborate any disclosures (or lack thereof). The chair of the FDA advisory committee simply encourages public speakers to disclose any conflicts. It does not seem consistent with the spirit of inclusiveness that should characterize the open public hearing to exclude a speaker who does not disclose whether he or she has conflicts. Nonetheless, the chair should directly query any speaker who fails to disclose whether he or she has conflicts and the subsequent testimony be should be considered in the light of any refusal to disclose.

Peter Lurie, MD, is president of the Center for Science in the Public Interest, Washington. Dr Lurie reports that he worked at the FDA during 2009-2017, including as associate commissioner for public health strategy and analysis from 2014 to 2017. His comments were made in an editorial accompanying Dr. McCoy’s study (JAMA Intern Med. doi:10.1001/jamainternmed.2018.1324).

Nearly a quarter of public speakers at meetings of a Food and Drug Administration advisory committee had conflicts of interest, about 20% of which were undisclosed, a study finds.

Matthew S. McCoy, PhD, of the University of Pennsylvania, Philadelphia, and his colleagues analyzed the public speakers at 15 meetings of the FDA Anesthetic and Analgesic Drug Products Advisory Committee from September 2009 to April 2017 related to the approval of drug products. (The committee advises the FDA on anesthesiology and pain management drug products.)

Investigators evaluated meeting transcripts to learn whether speakers reported chronic pain; had received the drug under review; reported an organization affiliation; reported a conflict of interest; and expressed support, opposition, or neutrality with respect to drug approval. Of the 112 speakers studied, about 20% disclosed a conflict of interest, 5% had an undisclosed financial association with the sponsor that existed prior to the meeting, and 13% had an undisclosed financial association of indeterminate date, the researchers reported in JAMA Internal Medicine on April 23.

Of those 112 speakers, 20 reported having experienced chronic pain and 11 reported receiving the drug under review. Overall, about 70% of speakers (76 out of 112) supported drug approval, the study found. Speakers who disclosed a conflict of interest were significantly more likely to support drug approval. When financial associations of indeterminate date were classified as conflicts of interest, speakers with a conflict were more than eight times as likely to support drug approval.

Dr. McCoy and his colleagues noted that the findings raise concerns about pro-sponsor bias among speakers at advisory committee meetings. They propose that the FDA require – rather than only encourage – speakers to disclose conflicts of interest at all of the agency’s advisory committee meetings.

SOURCE: McCoy MS et al. JAMA Intern Med. 2018 Apr 23. doi:10.1001/jamainternmed.2018.1324

Key clinical point: A significant portion of public speakers at certain FDA advisory committee meetings have conflicts of interest.

Major finding: Of 112 speakers, about 25% had a conflict of interest, of which about 20% were undisclosed.

Study details: A study of public speakers at 15 Anesthetic and Analgesic Drug Products Advisory Committee (AADPAC) meetings from September 2009 through April 2017 related to the approval of drug products.

Disclosures: Dr. McCoy reports that his spouse is employed by a cancer patient advocacy organization. Dr. Litman reports that he is a member of AADPAC. No other disclosures were reported.

Source: McCoy et al. JAMA Intern Med. 2018 Apr 23. doi:10.1001/jamainternmed.2018.1324.

Self-harm

Nonsuicidal self-injury (NSSI) has become more prevalent in youth over recent years and has many inherent risks. In the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5), NSSI is a diagnosis suggested for further study. The proposed criteria include engaging in self-injury on 5 or more days in the past year without suicidal intent, with the self-injury associated with at least one of the following: obtaining relief from negative thoughts or feelings, resolving interpersonal challenges, or inducing positive feelings. The self-injury is associated with interpersonal difficulties or negative thoughts or feelings. The behavior causes significant impairment in functioning and is not better explained by another condition.1

Estimates of lifetime prevalence in community-based samples of youth range from 15% to 20%. Self-injury often begins during early adolescence. It can pose many risks, including infection, permanent scarring or disfigurement, decreased self-esteem, interpersonal conflict, severe injury, or death. Reasons for engaging in self-harm vary and include attempts to regulate negative affect, to manage feelings of emptiness or numbness, to regain a sense of control over one's body or feelings, or to provide a consequence for perceived faults. Youth often start to engage in self-harm covertly, and it may first become apparent in emergency or primary care settings. The response they receive upon discovery may also affect future behavior.

Efforts also have been underway to distinguish between youth who engage in self-harm with and without suicidal ideation. Girls are more likely than boys to report NSSI, although NSSI in boys may present differently. In addition to cutting or other more stereotypical self-injury, boys may punch walls or engage in fights or other risky behaviors as a proxy for self-harm. Risk factors for suicide attempts among boys include hopelessness and a history of sexual abuse. Maladaptive eating patterns and hopelessness were the two most significant factors for girls.4

With regard to confidentiality, it is important to carefully gauge the level of safety and to clearly communicate the limits of confidentiality to the patient (and family). This may mean working within shades of gray to help maintain the therapeutic relationship and the patient’s comfort in disclosing potentially sensitive information.

Families can struggle with how to manage self-harm, and it can generate fear as well as other strong emotions.

Tips for parents and guardians

- Validate the underlying emotions while not validating the behavior. Self-injury is a coping strategy. Focus on the driving forces for the actions rather than the actions themselves.

- Approach your child from a nonjudgmental stance.

- Recognize that change may not happen overnight, and that there may be periods of regression.

- Acknowledge successes when they occur.

- Make yourself available for open communication. Open-ended questions may facilitate more dialogue.

- Take care of yourself as well. Ensure you use your supports and are engaging in healthy self-care.

- Take the behavior seriously. While this behavior is relatively common, do not assume it is “just a phase.”

- While remaining supportive, it is important to maintain a parental role and to keep expectations rather than “walking on eggshells.”

- Involve the child in identifying what can be of support.

- Become aware of local crisis resources in your community. National resources include the national suicide hotline (call 1-800-273-TALK) and a text line (text 741741 to connect with a crisis counselor).

Things to avoid

- Avoid taking a punitive stance. While the behavior can be provocative, its primary purpose is most likely not to gain attention.

- Avoid engaging in power struggles.

- Avoid creating increased isolation for the child. This can be a delicate balance with regard to peer groups, but encouraging healthy social interactions and activities is a way to help build resilience.

- Avoid taking the behavior personally.5

In working with youth who engage in self-harm, it is important to work within a team, which may include family, primary care, mental health support, school, and potentially other community supports. Treatment evidence is relatively limited, but there is some evidence to support use of cognitive behavioral therapy, dialectical behavior therapy, and mentalization-based therapy. Regardless, work will likely be long term and at times intensive in addressing the problems leading to self-harm behavior.6

Dr. Strange is an assistant professor in the department of psychiatry at the University of Vermont Medical Center and University of Vermont Robert Larner College of Medicine, both in Burlington. She works with children and adolescents. She has no relevant financial disclosures.

References

1. Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (Arlington, Va.: American Psychiatric Association Publishing, 2013).

2. J Adolesc. 2014 Dec;37(8):1335-44.

3. Behav Ther. 2017 May;48(3):366-79.

4. Acad Pediatr. 2012 May-Jun;12(3):205-13.

5. “Information for parents: What you need to know about self-injury.” The Fact Sheet Series, Cornell Research Program on Self-Injury and Recovery. 2009.

6. Clin Pediatr. 2016 Sep 13;55(11):1012-9.
