Dialysis decision in elderly needs to factor in comorbidities

SAN DIEGO – The wider picture of the patient’s health and prognosis, not just chronologic age, should enter into the clinical decision to initiate dialysis, according to Bjorg Thorsteinsdottir, MD, a palliative care physician at the Mayo Clinic in Rochester, Minn.

“People perceive they have no choice [but treatment], and we perceive we have to do things to them until everything is lost, then we expect them to do a 180 [degree turn],” she said in a presentation at the meeting sponsored by the American Society of Nephrology.

“A 90-year-old fit individual, with minimal comorbidity living independently, would absolutely be a good candidate for dialysis, while a 75-year-old patient with bad peripheral vascular disease and dementia, living in a nursing home, would be unlikely to live longer on dialysis than off dialysis,” she said. “We need to weigh the risks and benefits for each individual patient against their goals and values. We need to be honest about the lack of benefit for certain subgroups of patients and the heavy treatment burdens of dialysis. Age, comorbidity, and frailty all factor into these deliberations and prognosis.”

More than 107,000 people over age 75 in the United States received dialysis in 2015, according to statistics gathered by the National Kidney Foundation. Yet the survival advantage of dialysis is more limited in elderly patients with multiple comorbidities, Dr. Thorsteinsdottir said. “It becomes important to think about the harms of treatment.”

A 2016 study from the Netherlands found no survival advantage of dialysis over conservative management among kidney failure patients aged 80 and older. Among patients aged 70 and older who also had multiple comorbidities, the survival advantage of dialysis was limited. (Clin J Am Soc Nephrol. 2016 Apr;11(4):633-40)

In an interview, Dr. Thorsteinsdottir acknowledged that “determining who is unlikely to benefit from dialysis is complicated.” However, she said, “we know that the following comorbidities are the worst: dementia and peripheral vascular disease.”

“No one that I know of currently has an age cutoff for dialysis,” Dr. Thorsteinsdottir said in the interview, “and I do not believe the U.S. is ready for any kind of explicit limit setting by the government on dialysis treatment.”

“We must respond to legitimate concerns raised by recent studies that suggest that strong moral imperatives – to treat anyone we can treat – have created a situation where we are not pausing and asking hard questions about whether the patient in front of us is likely to benefit from dialysis,” she said in the interview. “Patients sense this and do not feel that they are given any alternatives to dialysis treatment. This needs to change.”

Dr. Thorsteinsdottir reported no relevant financial disclosures.

REPORTING FROM KIDNEY WEEK 2018

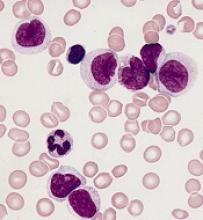

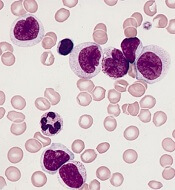

Prognostic features could improve ALL outcomes

CHICAGO – New recognition of the prognostic value of cytogenetic factors, minimal residual disease activity, and Philadelphia chromosome–like signature could improve the treatment and outcomes of acute lymphoblastic leukemia (ALL), according to Anjali Advani, MD.

CD20

About 80% of ALL is B-cell ALL, and the majority of patients have pre–B-cell ALL, Dr. Advani, a hematologist and director of the inpatient leukemia program at the Cleveland Clinic, said at the American Society of Hematology Meeting on Hematologic Malignancies.

“And on the B lymphoblast, many antigens are expressed, including CD19, CD20, and CD52,” she said.

Rituximab, a drug often used for the treatment of lymphoma, is a chimeric monoclonal antibody against the protein CD20, which is expressed in 41% of ALL patients.

“Interestingly, CD20 expression in ALL has been associated with an adverse prognostic impact, which suggests that targeting this may potentially improve outcomes in these patients,” Dr. Advani said.

In fact, a recent randomized study by Sébastien Maury, MD, of the University of Paris-Est, and his colleagues demonstrated that adding 16-18 doses of rituximab to Berlin-Frankfurt-Münster (BFM)–based chemotherapy in Philadelphia chromosome (Ph)–negative patients aged 18-59 years with CD20-positive pre–B-cell ALL improved 2-year event-free survival from 52% to 65% (N Engl J Med 2016;375:1044-53). The data are consistent with those from prior studies, including a German study that showed a higher rate of minimal residual disease (MRD) negativity in patients treated with rituximab, she noted.

Although Dr. Maury and his colleagues did not assess MRD, a higher rate of MRD negativity may explain the improved event-free survival in their study, Dr. Advani suggested.

MRD

MRD assessment has become a standard part of practice in ALL. Pediatric ALL led the way in using early MRD measurement to risk-stratify therapy, and it has taken somewhat longer for the approach to be incorporated into the treatment of adult disease, Dr. Advani said.

Either flow cytometry or polymerase chain reaction (PCR) amplification can be used to measure MRD, she noted.

In one of the larger studies in adults, researchers used PCR to measure MRD at two time points and stratified patients into low-, intermediate-, and high-risk groups (Blood. 2006 Feb 1;107:1116-23).

Measuring MRD at those two time points “clearly separated the prognosis of patients not only in terms of disease-free survival but [in] overall survival,” she said. “So that’s why, for ALL, this has really become very important.”

In the United States, where flow cytometry is more commonly used, it is necessary to find a properly equipped laboratory that can provide reliable results, she added. “For example, at our center we actually send our MRD to Fred Hutchinson [Cancer Center in Seattle].”

Johns Hopkins [Baltimore] also has such a lab, and both accept send-out samples, she said.

The other “really exciting thing” in regard to MRD in ALL is the recent approval of blinatumomab for MRD-positive ALL, she said.

In a study of 113 evaluable patients treated with blinatumomab, a bispecific CD19/CD3 T-cell engager, 78% achieved a complete molecular response (Blood. 2018 Apr 5;131:1522-31).

“And probably most importantly, when they looked at those patients who responded to blinatumomab in terms of MRD, these patients had, again, not only improved relapse-free survival but also increased overall survival,” she said. “I think this really explains why in ALL, we are measuring MRD and how it can really impact these patients.”

One of the remaining questions that will be important to address going forward is whether patients with MRD-positive ALL should continue to be considered for transplant; some of these studies have shown “very, very good outcomes” in patients who have not been transplanted, she noted.

Ph-like signature

Another important new prognostic feature in ALL is the Ph-like signature, a gene expression signature that was initially described in children with poor-risk ALL and that closely resembles the expression profile of Ph-positive disease.

“When they delved in further, they identified that this signature actually correlated with multiple different [kinase] fusions ... and it turns out that 20%-25% of young adults have this signature,” she said.

Since event-free survival in young adults with ALL is usually in the 65%-70% range, most of the remaining 30%-35% likely have this signature, she explained.

The kinase fusions associated with the Ph-like signature retain intact tyrosine kinase domains, and the spectrum of the fusions changes across age groups.

Treatments targeting some of these fusions, such as dasatinib for patients with a dasatinib-sensitive Ph-like kinase fusion, are being investigated.

Additionally, the Children’s Oncology Group has developed a clinically adaptable screening assay to identify the signature, she noted.

“So I would say that, and there is probably some difference in opinion, it is now becoming fairly standard that at diagnosis in an adult with ALL we’re sending the Ph-like signature,” she said. “And again, usually you’re going to have to send this out, and at our center we send it out to [Nationwide Children’s Hospital] in Columbus [Ohio].”

As important as it is to identify the Ph-like signature, given its association with poor prognosis, a number of questions remain, including whether transplant improves outcomes.

“The hope is it probably does, and that’s something that’s being evaluated in studies,” she said, noting that clinical studies are also specifically targeting these patients.

“So these patients should probably be enrolled on a clinical trial, because their outcome is clearly inferior,” she said.

Dr. Advani reported consultancy for Pfizer; research funding from Genzyme, Novartis, Pfizer, and Sigma Tau; and honoraria from Genzyme, Pfizer, and Sigma Tau. She is also on the speakers bureau for Sigma Tau.

EXPERT ANALYSIS FROM MHM 2018

Heart failure and sacubitril/valsartan

Also today, concussion or TBI early in life is linked to suicide risk later, revised cholesterol guidelines veer toward personalized risk assessment, and sibling violence is the most common form of family violence.

Study supports PBM program for HSCT recipients

BOSTON – A blood management program for patients undergoing hematopoietic stem cell transplant (HSCT) can reduce inappropriate transfusions and costs without compromising patient outcomes, a new study suggests.

Researchers retrospectively compared outcomes before and after implementation of a patient blood management (PBM) program at a single institution.

After the program was implemented, the number of transfusions and the number of units transfused declined with no increase in mortality, intensive care unit (ICU) admission rates, or transfusion-related complications.

In addition, the program saved the hospital more than $600,000 over a year.

Nilesh Jambhekar, MD, of the Mayo Clinic in Rochester, Minnesota, reported these results in a presentation at AABB 2018 (abstract PBM3-ST4-22*).

Study design

Dr. Jambhekar and his colleagues looked at blood product use both before and after the Mayo Clinic started a PBM program that included emphasis on AABB best practice guidelines and electronic clinical decision support for transfusion orders.

The researchers evaluated the frequency and proportion of red blood cell (RBC) and platelet transfusions, total transfusion quantities, transfusions that occurred outside of the clinical guidelines, and the activity-based costs of transfusions.

Dr. Jambhekar acknowledged that the study used rigid hemoglobin and platelet thresholds to classify transfusions as outside the guidelines: RBC transfusions given at hemoglobin values greater than 7 g/dL and platelet transfusions given at platelet counts greater than 10 × 10⁹/L.

He noted, however, that the researchers conducted sensitivity analyses to account for exceptions such as patients with coronary disease or neutropenic fever.
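The primary classification rule can be sketched in a few lines of Python. This is a minimal illustration, assuming only the two thresholds stated in the article (hemoglobin above 7 g/dL for RBCs, platelet count above 10 × 10⁹/L for platelets); the `Transfusion` record, its "RBC"/"PLT" labels, and the example values are hypothetical, not the study's data or software. The sketch deliberately does not model the sensitivity-analysis exceptions such as coronary disease or neutropenic fever.

```python
from dataclasses import dataclass

# Thresholds as described in the article: a transfusion given ABOVE these
# pretransfusion values was counted as outside the guidelines.
RBC_HGB_THRESHOLD_G_DL = 7.0       # hemoglobin, g/dL
PLT_COUNT_THRESHOLD_10E9_L = 10.0  # platelet count, x 10^9/L


@dataclass
class Transfusion:
    product: str      # hypothetical labels: "RBC" or "PLT"
    pre_value: float  # pretransfusion hemoglobin (g/dL) or platelet count (x 10^9/L)


def outside_guidelines(t: Transfusion) -> bool:
    """Return True if the transfusion exceeds the rigid threshold for its product."""
    if t.product == "RBC":
        return t.pre_value > RBC_HGB_THRESHOLD_G_DL
    if t.product == "PLT":
        return t.pre_value > PLT_COUNT_THRESHOLD_10E9_L
    raise ValueError(f"unknown product: {t.product}")


def proportion_outside(transfusions: list[Transfusion], product: str) -> float:
    """Share of one product's transfusions that fall outside the thresholds."""
    subset = [t for t in transfusions if t.product == product]
    if not subset:
        return 0.0
    return sum(outside_guidelines(t) for t in subset) / len(subset)


# Hypothetical example records (not study data)
example_records = [
    Transfusion("RBC", 6.8),
    Transfusion("RBC", 7.5),
    Transfusion("PLT", 8.0),
    Transfusion("PLT", 15.0),
    Transfusion("PLT", 22.0),
]

for product in ("RBC", "PLT"):
    share = proportion_outside(example_records, product)
    print(f"{product} transfusions outside thresholds: {share:.0%}")
```

Because the comparison is strict, a transfusion given at exactly 7 g/dL or exactly 10 × 10⁹/L counts as within the guidelines under this reading.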

The patient-centered outcomes the researchers evaluated included mortality, hospital and ICU admission rates, transfusion reactions, cerebrovascular and coronary ischemic events, and infections.

The study included data on 360 adults older than 18 who underwent HSCT in 2013, before the PBM program was implemented, and 368 transplanted in 2015, after implementation.

In each cohort, patients were followed out to 90 days after transplant.

Results

The total number of platelet units transfused dropped from 1,660 before the PBM program to 1,417 after its implementation. The total number of RBC units dropped from 1,158 to 826.
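The relative size of these declines follows from simple arithmetic on the reported totals. The sketch below computes the percentage reduction in total units and, because the two cohorts differ slightly in size (360 vs. 368 patients), also in units per patient. These are unadjusted figures derived from the article's numbers, not results reported by the investigators; the function name is illustrative.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative decline from `before` to `after`, expressed as a percentage."""
    return 100.0 * (before - after) / before


# Totals reported in the article: 2013 (pre-PBM) and 2015 (post-PBM) cohorts
PATIENTS = (360, 368)
UNITS = {"Platelet": (1660, 1417), "RBC": (1158, 826)}

for product, (pre, post) in UNITS.items():
    # Normalize by cohort size, since the two cohorts are not the same size
    pre_rate, post_rate = pre / PATIENTS[0], post / PATIENTS[1]
    print(
        f"{product}: {pre} -> {post} units "
        f"({percent_reduction(pre, post):.1f}% fewer in total; "
        f"{pre_rate:.2f} -> {post_rate:.2f} units per patient, "
        f"{percent_reduction(pre_rate, post_rate):.1f}% fewer)"
    )
```

On these totals, platelet units fell by roughly 15% and RBC units by roughly 29%.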

The researchers also saw changes in the proportions of inappropriate (outside guidelines) transfusions between the two time periods.

In 2013, 94.2% of RBC transfusions occurred outside the guidelines, compared with 35.4% in 2015 (P<0.0001). Similarly, the proportion of inappropriate platelet transfusions declined from 73.4% to 48.7% over the same time period (P<0.0001).

In addition, all-cause mortality at 3 months was significantly lower after the PBM program was introduced. The 3-month mortality rate was 30.7% for the 2013 cohort and 20.2% for the 2015 cohort (P=0.001).

Dr. Jambhekar noted that, in a multivariable analysis accounting for baseline differences between the groups, mortality for patients treated before the PBM program remained significantly higher, with an odds ratio of 1.85 (P=0.0008).
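For orientation, an unadjusted (crude) odds ratio can be computed directly from the two reported 3-month mortality rates. This minimal sketch assumes only the 30.7% and 20.2% figures; it does not reproduce the investigators' multivariable model, which is why its result (about 1.75) differs from the adjusted odds ratio of 1.85.

```python
def odds(p: float) -> float:
    """Convert a proportion between 0 and 1 to odds."""
    return p / (1.0 - p)


def crude_odds_ratio(p_group: float, p_reference: float) -> float:
    """Unadjusted odds ratio of the outcome in one group vs. a reference group."""
    return odds(p_group) / odds(p_reference)


# 3-month all-cause mortality as reported: 2013 (pre-PBM) vs. 2015 (post-PBM)
mortality_2013 = 0.307
mortality_2015 = 0.202

crude_or = crude_odds_ratio(mortality_2013, mortality_2015)
print(f"Crude OR, pre- vs. post-PBM: {crude_or:.2f}")
```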

Neither hospital nor ICU admission within 30 days differed significantly between the groups, and there were no significant between-group differences in hospital or ICU lengths of stay.

Likewise, there were no significant between-group differences in myocardial infarction, cerebrovascular events, sepsis, or febrile or allergic transfusion reactions.

Dr. Jambhekar noted that the study was retrospective and therefore could not fully account for potential confounders. It is also unclear whether the results would generalize to other institutions.

“In general, PBM implementation is probably helpful in reducing both platelet and PRBC [packed red blood cell] utilization, but it’s not an easy thing to do,” Dr. Jambhekar said.

“It requires institutional buy-in and key players to make it happen. Ongoing PBM-related activities like surveillance, education, and clinical decision feedback are critical to maintaining success that we’ve had.”

This study was internally funded. Dr. Jambhekar reported having nothing to disclose.

*Data presented differ from the abstract.

AP-1 plays key role in various AML subtypes, team says

The AP-1 transcription factor family is of “major importance” in acute myeloid leukemia (AML), according to researchers.

The team said they identified transcription factor networks specific to AML subtypes, showing that leukemic growth depends on certain transcription factors and that “the global activation of signaling pathways parallels a growth dependence on AP-1 activity in multiple types of AML.”

Constanze Bonifer, PhD, of the University of Birmingham in the U.K., and her colleagues conducted this research and detailed their findings in Nature Genetics.

The researchers noted that previous work revealed the existence of gene regulatory networks in different types of AML classified by gene expression and DNA methylation patterns.

“Our work now defines these networks in detail and shows that leukemic drivers determine the regulatory phenotype by establishing and maintaining specific gene regulatory and signaling networks that are distinct from those in normal cells,” Dr. Bonifer and her colleagues wrote.

The researchers combined data obtained via several analytic techniques to construct transcription factor networks in normal CD34+ cells and cells from AML patients with defined mutations, including RUNX1 mutations, t(8;21) translocations, mutations of both alleles of the CEBPA gene, and FLT3-ITD with or without NPM1 mutation.

The AP-1 family network was of “high regulatory relevance” for all AML subtypes evaluated, the team reported.

Follow-up in vitro and in vivo studies confirmed the importance of AP-1 for different AML subtypes.

In the in vitro study, the researchers transduced AML cells with a doxycycline-inducible version of a dominant-negative (dn) FOS protein.

“AP-1 is a heterodimer formed by members of the FOS, JUN, ATF, CREB, and JDP families of transcription factors,” the researchers wrote. “[T]hus, it is challenging to target by defined RNA interference approaches.”

Results of the in vitro study showed that induction of dnFOS, mediated by doxycycline, inhibited proliferation of t(8;21)+ Kasumi-1 cells and FLT3-ITD-expressing MV4-11 cells.

Induction of dnFOS also inhibited the colony-forming ability of primary CD34+ FLT3-ITD cells but not CD34+ hematopoietic stem and progenitor cells.

To evaluate the relevance of AP-1 for leukemia propagation in vivo, the researchers transplanted Kasumi-1 or MV4-11 cells expressing inducible dnFOS into immunodeficient mice.

With Kasumi-1, granulosarcomas developed in six of seven untreated control mice but in only two doxycycline-treated mice, neither of which expressed the inducible protein.

With MV4-11, doxycycline treatment inhibited leukemia development, whereas untreated mice rapidly developed tumors.

The researchers declared no competing interests related to this work, which was funded by Bloodwise, Cancer Research UK, a Kay Kendall Clinical Training Fellowship, and an MRC/Leuka Clinical Training Fellowship.

FDA clears instrument for blood typing, screening

The U.S. Food and Drug Administration has granted marketing clearance for the immunohematology instrument NEO Iris™.

NEO Iris is a fully automated blood bank instrument designed for the mid- to high-volume laboratory, according to Immucor, Inc., the company marketing the device.

Immucor says NEO Iris provides the highest type and screen throughput on the market—up to 60 types and screens per hour.

NEO Iris performs ABO/Rh D typing, weak D testing, donor confirmation, cytomegalovirus screening, immunoglobulin G direct antiglobulin testing and crossmatching, and antibody identification and screening.

According to Immucor, the workflow management tool on NEO Iris supports STAT prioritization and allows operators to run tests in any order at any time.

The company says NEO Iris can hold up to 224 samples, and “modules can pipette, incubate, centrifuge, and read simultaneously.”
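Taking the vendor's two figures at face value, a back-of-envelope lower bound on run time for a full load follows directly. The sketch below assumes that every sample needs exactly one type and screen and that the stated peak rate of 60 per hour is sustained. Runs that include antibody identification, crossmatches, or STAT interruptions would likely take longer. The names `min_hours` and the constants are hypothetical and are not Immucor specifications beyond the two stated numbers.

```python
MAX_TYPE_SCREENS_PER_HOUR = 60  # vendor-stated peak throughput
ON_BOARD_CAPACITY = 224         # vendor-stated sample capacity

def min_hours(samples: int, rate_per_hour: float = MAX_TYPE_SCREENS_PER_HOUR) -> float:
    """Lower bound on run time, assuming one type and screen per sample at peak rate."""
    if not 0 < samples <= ON_BOARD_CAPACITY:
        raise ValueError(f"samples must be between 1 and {ON_BOARD_CAPACITY}")
    return samples / rate_per_hour

print(f"Full load: at least {min_hours(ON_BOARD_CAPACITY):.1f} h")
print(f"60 samples: at least {min_hours(60):.1f} h")
```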

NEO Iris integrates with Immucor’s data management software, ImmuLINK®, to aggregate test results and produce reports with complete testing history.

Another feature of NEO Iris is blud_direct℠, which lets operators reach technical support from an Immucor representative directly on the NEO Iris monitor.

For more information on the instrument, see the NEO Iris page on the Immucor website.

Chronic liver disease independently linked to increased risk of falls

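SAN FRANCISCO – Chronic liver disease (CLD) of any subtype is independently associated with an increased risk of falls and fall-related injuries in adults aged 60 years and older, results from a nationally representative analysis suggest.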

“We have previously known that having cirrhosis, for example, is associated with the risk of falling, but we didn’t have any data from a nationally representative sample,” lead study author Maria Camila Pérez-Matos, MD, said in an interview at the annual meeting of the American Association for the Study of Liver Diseases. “What surprised us is that just by having chronic liver disease – any subtype – you’re more likely to fall, and also to have a fracture after you have fallen.”

In an effort to define the association between CLD and fall history and related injuries, Dr. Pérez-Matos of the division of gastroenterology and hepatology at Beth Israel Deaconess Medical Center, Boston, and her associates examined data from 5,363 subjects aged 60 years and older in the Third National Health and Nutrition Examination Survey, which represents the noninstitutionalized civilian population in the United States. Their outcomes of interest were one or more falls in the previous 12 months and fall-related injuries. The main exposure was definitive CLD, defined by chronic viral hepatitis (hepatitis C RNA or hepatitis B surface antigen), nonalcoholic steatohepatitis (NASH; hepatosteatosis by ultrasound with abnormal transaminases), or alcohol-related liver disease (females consuming more than 7 drinks/week or males consuming more than 14 drinks/week, plus abnormal transaminases). Suspected CLD was defined as an abnormal alanine aminotransferase level (males greater than 30 IU/L, females greater than 19 IU/L), an aspartate aminotransferase level above 33 IU/L, or an alkaline phosphatase level above 100 IU/L. The researchers used univariate and multivariate logistic regression to examine associations.
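The lab-based definition of suspected CLD is a simple rule: any one abnormal value qualifies. Below is a minimal sketch of that rule as stated in the article, treating "greater than" and "above" as strict inequalities. The `Labs` record and `suspected_cld` function are hypothetical illustrations, not the investigators' code, and the sketch does not cover the definitive-CLD criteria, which also require serology, ultrasound, or alcohol-intake data.

```python
from dataclasses import dataclass

# Sex-specific ALT upper limits used for "abnormal" ALT, in IU/L.
ALT_CUTOFF = {"M": 30.0, "F": 19.0}

@dataclass
class Labs:
    sex: str    # "M" or "F"
    alt: float  # alanine aminotransferase, IU/L
    ast: float  # aspartate aminotransferase, IU/L
    alp: float  # alkaline phosphatase, IU/L

def suspected_cld(labs: Labs) -> bool:
    """Return True if any one lab value meets the suspected-CLD threshold."""
    alt_cutoff = ALT_CUTOFF[labs.sex]  # raises KeyError for an unrecognized sex code
    return labs.alt > alt_cutoff or labs.ast > 33.0 or labs.alp > 100.0

print(suspected_cld(Labs(sex="F", alt=25, ast=20, alp=80)))  # ALT above the female cutoff
print(suspected_cld(Labs(sex="M", alt=25, ast=20, alp=80)))  # all values within range
```

The same ALT value of 25 IU/L is abnormal for a woman but not for a man. The sex-specific cutoff is the only part of the rule that depends on anything other than the lab value itself.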

The average age of subjects was 70 years, and 59% were female. Of the 5,363 subjects, 340 had definitive CLD. Of these, 234 (69%) had NASH, 85 (25%) had viral hepatitis, and 21 (6%) had alcoholic liver disease. Subjects with definitive CLD were more likely to be female and have diabetes mellitus, a higher body mass index, and physical/functional impairment. Dr. Pérez-Matos and her colleagues found that definitive CLD was associated with a 52% increase in the odds of having a history of falls (odds ratio, 1.52; P = .01). The association remained significant after controlling for age, sex, smoking, race, physical or functional impairment, impaired vision, polypharmacy, and body mass index. The degree of excess falling risk posed by CLD was similar to that of having impaired vision (OR, 1.48; P less than .001).
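The subtype percentages and the "52% increase in the odds" both follow from the reported numbers. A short check, using only the counts and the odds ratio given above, is shown below. When an outcome is common, an odds ratio lies further from 1 than the corresponding risk ratio. For that reason, "52% higher odds" should not be read as "52% higher risk" of falling.

```python
# Subtype counts among subjects with definitive CLD, as reported.
subtypes = {"NASH": 234, "viral hepatitis": 85, "alcohol-related": 21}
total = sum(subtypes.values())  # should equal the 340 subjects with definitive CLD

for name, n in subtypes.items():
    print(f"{name}: {n}/{total} = {100 * n / total:.0f}%")

# An odds ratio expressed as a percent change in odds (not in absolute risk).
odds_ratio = 1.52
pct_increase_in_odds = (odds_ratio - 1) * 100
print(f"OR {odds_ratio} -> {pct_increase_in_odds:.0f}% higher odds of a fall history")
```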

Of the CLD subtypes, viral hepatitis had the strongest association with a history of falls (OR, 2.2; P = .001). In addition, definitive CLD was significantly associated with any physical impairment, even after adjustment for relevant covariates (OR, 1.63; P = .001).

Finally, multivariate logistic regression revealed that both suspected and definitive CLD were associated with injurious falls (ORs, 1.40 and 1.67, respectively).

“Everyone is interested in preventing falls because of its public health impact, and predictors of falls are relatively limited,” said Elliott B. Tapper, MD, the study’s principal investigator, who is with the division of gastroenterology and hepatology at the University of Michigan, Ann Arbor. “Because chronic liver disease is increasingly common, our data is speaking to a hitherto unknown risk factor: one which if you apply it to other data sets might help figure out why more people are falling. The lesson is, there’s something about chronic liver disease; it’s a sign. If it’s fatty liver disease, it’s a sign that diabetes has taken its toll on the body – its nerves and muscles. There’s something about what’s going on in that person that’s worse than it is for other people. We don’t know cause or effect, but the association is strong and deserves further study, particularly when it comes to determining [which patients] in our clinics are at higher risk and making sure they’re doing physical therapy to prevent falls in the future.”

Dr. Tapper disclosed that he has a career development award from the National Institutes of Health. Dr. Pérez-Matos reported having no monetary conflicts.

Source: Hepatology. 2018;68[S1], Abstract 756.

SAN FRANCISCO – .

“We have previously known that having cirrhosis, for example, is associated with the risk of falling, but we didn’t have any data from a nationally representative sample,” lead study author Maria Camila Pérez-Matos, MD, said in an interview at the annual meeting of the American Association for the Study of Liver Diseases. “What surprised us is that just by having chronic liver disease – any subtype – you’re more likely to fall, and also to have a fracture after you have fallen.”

In an effort to define the association between CLD and fall history and its related injuries, Dr. Pérez-Matos of the division of gastroenterology and hepatology at Beth Israel Deaconess Medical Center, Boston, and her associates examined data from 5,363 subjects aged 60 years and older in the Third National Health and Nutrition Examination Survey, which represents the noninstitutionalized civilian population in the United States. Their outcomes of interest were one or more falls occurring in the previous 12 months and fall-related injuries. The main exposure was definitive CLD, defined by chronic viral hepatitis (hepatitis C RNA or hepatitis B surface antigen), nonalcoholic steatohepatitis (NASH; hepatosteatosis by ultrasound with abnormal transaminases), and alcohol-related liver disease (females consuming more than 7 drinks/week and males consuming 14 drinks/week among, plus having abnormal transaminases). Suspected CLD was defined as having abnormal alanine aminotransferase levels (males greater than 30 IU/L, females greater than 19 IU/L), aspartate aminotransferase levels above 33 IU/L, or alkaline phosphatase levels above 100 IU/L. The researchers used univariate and multivariate logistic regression to examine associations.

The average age of subjects was 70 years, and 59% were female. Of the 5,363 subjects, 340 had definitive CLD. Of these, 234 (69%) had NASH, 85 (25%) had viral hepatitis, and 21 (6%) had alcoholic liver disease. Subjects with definitive CLD were more likely to be female and have diabetes mellitus, a higher body mass index, and physical/functional impairment. Dr. Pérez-Matos and her colleagues found that definitive CLD was associated with a 52% increase in the odds of having a history of falls (odds ratio, 1.52; P = .01). The association remained significant after controlling for age, sex, smoking, race, physical or functional impairment, impaired vision, polypharmacy, and body mass index. The degree of excess falling risk posed by CLD was similar to that of having impaired vision (OR, 1.48; P less than .001).

Of the CLD subtypes, subjects with viral hepatitis had the strongest association with a history of falls (OR, 2.2; P = .001). In addition, definitive CLD was found to have significant association with any physical impairment, even after adjusting for relevant covariates (OR, 1.63; P = .001).

Finally, multivariate logistic regression revealed that both suspected and definitive CLD were associated with injurious falls (OR, 1.40 and OR of 1.67, respectively). “Everyone is interested in preventing falls because of its public health impact, and predictors of falls are relatively limited,” said Elliott B. Tapper, MD, the study’s principal investigator, who is with the division of gastroenterology and hepatology at the University of Michigan, Ann Arbor. “Because chronic liver disease is increasingly common, our data is speaking to a hitherto unknown risk factor: one which if you apply it to other data sets might help figure out why more people are falling. The lesson is, there’s something about chronic liver disease; it’s a sign. If it’s fatty liver disease, it’s a sign that diabetes has taken its toll on the body – its nerves and muscles. There’s something about what’s going on in that person that’s worse than it is for other people. We don’t know cause or effect, but the association is strong and deserves further study, particularly when it comes to determining [which patients] in our clinics are at higher risk and making sure they’re doing physical therapy to prevent falls in the future.”

Dr. Tapper disclosed that she has a career development award from the National Institutes of Health. Dr. Pérez-Matos reported having no monetary conflicts.

Source: Hepatol. 2018;68[S1], Abstract 756.

SAN FRANCISCO – .

“We have previously known that having cirrhosis, for example, is associated with the risk of falling, but we didn’t have any data from a nationally representative sample,” lead study author Maria Camila Pérez-Matos, MD, said in an interview at the annual meeting of the American Association for the Study of Liver Diseases. “What surprised us is that just by having chronic liver disease – any subtype – you’re more likely to fall, and also to have a fracture after you have fallen.”

In an effort to define the association between CLD and fall history and its related injuries, Dr. Pérez-Matos of the division of gastroenterology and hepatology at Beth Israel Deaconess Medical Center, Boston, and her associates examined data from 5,363 subjects aged 60 years and older in the Third National Health and Nutrition Examination Survey, which represents the noninstitutionalized civilian population in the United States. Their outcomes of interest were one or more falls occurring in the previous 12 months and fall-related injuries. The main exposure was definitive CLD, defined by chronic viral hepatitis (hepatitis C RNA or hepatitis B surface antigen), nonalcoholic steatohepatitis (NASH; hepatosteatosis by ultrasound with abnormal transaminases), and alcohol-related liver disease (females consuming more than 7 drinks/week and males consuming 14 drinks/week among, plus having abnormal transaminases). Suspected CLD was defined as having abnormal alanine aminotransferase levels (males greater than 30 IU/L, females greater than 19 IU/L), aspartate aminotransferase levels above 33 IU/L, or alkaline phosphatase levels above 100 IU/L. The researchers used univariate and multivariate logistic regression to examine associations.

The average age of subjects was 70 years, and 59% were female. Of the 5,363 subjects, 340 had definitive CLD. Of these, 234 (69%) had NASH, 85 (25%) had viral hepatitis, and 21 (6%) had alcoholic liver disease. Subjects with definitive CLD were more likely to be female and have diabetes mellitus, a higher body mass index, and physical/functional impairment. Dr. Pérez-Matos and her colleagues found that definitive CLD was associated with a 52% increase in the odds of having a history of falls (odds ratio, 1.52; P = .01). The association remained significant after controlling for age, sex, smoking, race, physical or functional impairment, impaired vision, polypharmacy, and body mass index. The degree of excess falling risk posed by CLD was similar to that of having impaired vision (OR, 1.48; P less than .001).

Of the CLD subtypes, viral hepatitis had the strongest association with a history of falls (OR, 2.2; P = .001). In addition, definitive CLD was significantly associated with any physical impairment, even after adjustment for relevant covariates (OR, 1.63; P = .001).

Finally, multivariate logistic regression revealed that both suspected and definitive CLD were associated with injurious falls (OR, 1.40 and 1.67, respectively).

“Everyone is interested in preventing falls because of its public health impact, and predictors of falls are relatively limited,” said Elliott B. Tapper, MD, the study’s principal investigator, who is with the division of gastroenterology and hepatology at the University of Michigan, Ann Arbor. “Because chronic liver disease is increasingly common, our data is speaking to a hitherto unknown risk factor: one which if you apply it to other data sets might help figure out why more people are falling. The lesson is, there’s something about chronic liver disease; it’s a sign. If it’s fatty liver disease, it’s a sign that diabetes has taken its toll on the body – its nerves and muscles. There’s something about what’s going on in that person that’s worse than it is for other people. We don’t know cause or effect, but the association is strong and deserves further study, particularly when it comes to determining [which patients] in our clinics are at higher risk and making sure they’re doing physical therapy to prevent falls in the future.”

Dr. Tapper disclosed that he has a career development award from the National Institutes of Health. Dr. Pérez-Matos reported having no monetary conflicts.

Source: Hepatol. 2018;68[S1], Abstract 756.

REPORTING FROM THE LIVER MEETING 2018

Key clinical point: Attention to falls is warranted in chronic liver disease (CLD) patients at all stages of disease.

Major finding: Having definitive CLD was associated with a 52% increase in the odds of having a history of falls (OR 1.52; P = .01).

Study details: A cross-sectional analysis of 5,363 subjects in the Third National Health and Nutrition Examination Survey.

PBM saves blood, costs in HSCT unit

BOSTON – Implementing a patient blood management (PBM) program for patients undergoing hematopoietic stem cell transplantation (HSCT) resulted in significant reductions in blood product use with an attendant reduction in costs, but without negative effects on patient-centered outcomes, investigators reported.

After the PBM program began, the number of transfusions and the number of units transfused declined without adversely affecting mortality, ICU admission rates, or other transfusion-related complications. The program saved the hospital more than $600,000 over 1 year, reported Nilesh Jambhekar, MD, an anesthesiology resident at the Mayo Clinic in Rochester, Minn.

“In general, PBM implementation is probably helpful in reducing both platelet and [packed red blood cell] utilization, but it’s not an easy thing to do. It requires institutional buy-in and key players to make it happen,” he said at AABB 2018, the annual meeting of the group formerly known as the American Association of Blood Banks.

“Ongoing PBM-related activities [such as] surveillance, education, and clinical decision feedback are critical to maintaining success that we’ve had,” he added.

The investigators looked at blood-product use both before and after the Mayo Clinic started a PBM program that included emphasis on AABB best practice guidelines and electronic clinical decision support for transfusion orders.

They analyzed the frequency and proportion of red blood cell (RBC) and platelet transfusions, total transfusion quantities, transfusions that occurred outside of the clinical guidelines, and the activity-based costs of transfusions.

Dr. Jambhekar acknowledged that the study relied on rigid hemoglobin and platelet thresholds to classify transfusions as outside the guidelines: RBC transfusions given at hemoglobin values greater than 7 g/dL and platelet transfusions given at platelet counts greater than 10 × 10⁹/L. He noted, however, that they conducted sensitivity analyses to account for exceptions, such as patients with coronary disease or neutropenic fever.
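
The rigid thresholds described above amount to a one-line test per blood product. The Python sketch below shows that classification; the function and constant names are hypothetical, and the clinical exceptions handled in the sensitivity analyses (such as coronary disease or neutropenic fever) are deliberately not modeled.

```python
RBC_HB_THRESHOLD_G_DL = 7.0       # pretransfusion hemoglobin, g/dL
PLATELET_THRESHOLD_10E9_L = 10.0  # pretransfusion platelet count, x 10^9/L


def outside_guidelines(product: str, pretransfusion_value: float) -> bool:
    """Flag a transfusion given above the rigid study threshold.

    product: "rbc" (value = hemoglobin in g/dL) or
             "platelets" (value = platelet count in 10^9/L).
    Exceptions such as coronary disease or neutropenic fever are ignored.
    """
    if product == "rbc":
        return pretransfusion_value > RBC_HB_THRESHOLD_G_DL
    if product == "platelets":
        return pretransfusion_value > PLATELET_THRESHOLD_10E9_L
    raise ValueError(f"unknown product: {product!r}")


print(outside_guidelines("rbc", 7.8))        # True
print(outside_guidelines("platelets", 8.0))  # False
```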

The patient-centered outcomes they evaluated included mortality, hospital and ICU admission rates, transfusion reactions, cerebrovascular and coronary ischemic events, and infections.

The study included data on 360 adults who underwent HSCT in 2013, before the PBM program was implemented, and 368 transplanted in 2015, after implementation. In each cohort, patients were followed out to 90 days after transplant.

The investigators found that the total number of units transfused dropped from 1,660 units of platelets and 1,158 U of RBCs before implementation, to 1,417 U and 826 U, respectively, after PBM implementation.
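
Because the two cohorts differed slightly in size (360 vs. 368 patients), it can help to express the drop both as a percentage of total units and per transplanted patient. The Python sketch below uses only the totals and cohort sizes reported in this story; the derived figures are simple arithmetic, not results reported by the investigators, and the helper names are illustrative.

```python
def relative_reduction(before: int, after: int) -> float:
    """Percentage drop from before to after, to one decimal place."""
    return round(100 * (before - after) / before, 1)


def units_per_patient(units: int, patients: int) -> float:
    """Average units transfused per patient in a cohort."""
    return round(units / patients, 2)


# Reported totals: 2013 (pre-PBM, n = 360) vs 2015 (post-PBM, n = 368)
print(relative_reduction(1660, 1417))  # platelets: 14.6
print(relative_reduction(1158, 826))   # RBCs: 28.7
print(units_per_patient(1660, 360), units_per_patient(1417, 368))  # 4.61 3.85
print(units_per_patient(1158, 360), units_per_patient(826, 368))   # 3.22 2.24
```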

Significantly, in addition to an overall reduction in units transfused, the investigators saw substantial changes in the proportions of inappropriate (outside guidelines) transfusions of red blood cells between the two time periods, with 94.2% of RBC transfusions occurring outside the guidelines in 2013, compared with 35.4% in 2015 (P less than .0001). Similarly, the proportion of inappropriate platelet transfusions declined from 73.4% to 48.7% over the same time period (P less than .0001).

All-cause mortality at 3 months was also significantly lower after the PBM program was introduced: 30.7% for the 2013 cohort, compared with 20.2% for the 2015 cohort (P = .001). Neither hospital or ICU admission within 30 days nor hospital or ICU length of stay differed significantly between the groups.

In a multivariable analysis adjusting for baseline differences between the groups, which might have explained the higher mortality in the 2013 cohort, mortality among patients treated before the PBM program remained significantly higher (odds ratio, 1.85; P = .0008), Dr. Jambhekar noted.
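
For comparison, an unadjusted odds ratio can be computed directly from the two reported 3-month mortality rates. The Python sketch below does only that back-of-the-envelope calculation; it is not the investigators' multivariable model, which adjusted for baseline differences, and the helper names are illustrative.

```python
def odds(p: float) -> float:
    """Convert a proportion into odds."""
    return p / (1 - p)


def crude_odds_ratio(p_group: float, p_reference: float) -> float:
    """Unadjusted odds ratio of one group relative to a reference group."""
    return round(odds(p_group) / odds(p_reference), 2)


# 3-month mortality: 30.7% (2013, pre-PBM) vs 20.2% (2015, post-PBM)
print(crude_odds_ratio(0.307, 0.202))  # 1.75
```

The crude figure sits close to the adjusted OR of 1.85, which is consistent with the report that adjusting for baseline differences did not explain away the mortality gap.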

There were also no significant differences in rates of MI, cerebrovascular events, sepsis, or febrile or allergic transfusion reactions.

He noted that, in addition to the rigid thresholds, the study was retrospective in design – and therefore could not fully account for potential confounders – and that it is unclear whether the results would generalize to other institutions.

The study was internally funded. Dr. Jambhekar reported having no financial disclosures.

SOURCE: Jambhekar N et al. AABB 2018, Abstract PBM3-ST4-22.
