Why did you choose to become a hospitalist? (VIDEO)

HM19 attendees explain why they became hospitalists.

SHM honors extraordinary leader, editor

When Andrew Auerbach, MD, MPH, SFHM, started as a hospitalist, his specialty didn’t have a name. His title was simply “medical director.” Now, 2 decades later, he is a professor of medicine at the University of California, San Francisco, and one of the most experienced and influential hospitalists in the field.

SHM will honor Dr. Auerbach and celebrate his achievements today at HM19, at the Awards plenary following the Chapter Awards of Excellence ceremony that begins at 8:30 a.m. SHM president Nasim Afsar, MD, SFHM, will present him with a plaque and review his contributions to the growth of the Journal of Hospital Medicine.

Throughout his career, even going back to the days he helped to found the Society of Hospital Medicine, Dr. Auerbach has played a crucial role in defining how a hospitalist works and thinks. Over the last 7 years, he led the Journal of Hospital Medicine through an extraordinary period of growth that has secured its reputation as an essential resource for hospitalists and beyond.

“Andy Auerbach transformed the Journal of Hospital Medicine from the status of a ‘start-up’ Version 1.0 to a polished, efficient machine – Version 2.0. His efforts garnered the national respect that JHM deserves,” said Mark Williams, MD, MHM, University of Kentucky HealthCare hospital medicine division chief and tenured professor of medicine. Dr. Williams served as editor in chief of the journal immediately prior to Dr. Auerbach. “I hope Andy will be known as the editor who transformed an acceptable journal into a stellar example of what a medical journal can become.”

Samir S. Shah, MD, MSCE, MHM, who has succeeded Dr. Auerbach as editor in chief of the journal, also has praise for his predecessor. “Andy has really invested in advancing scholarship in hospital medicine and ensuring that great work is broadly disseminated,” said Dr. Shah, chief of hospital medicine at Cincinnati Children’s Hospital Medical Center.

Dr. Auerbach said his interest in inpatient and perioperative care sparked his focus on hospital medicine. “My initial research was foundational for the field. I wanted to understand, refine, and improve our role: Do hospitalists improve care and outcomes? Do they affect patient perceptions of their doctors?”

At the time, hospital medicine felt like a 1990s dot-com startup, he recalled, but one that was destined to last. “It was clear that hospital medicine was going to take off, but the academic pursuits were taking longer to get going. We were starting from zero.”

Enter the Journal of Hospital Medicine. The publication received about 200 submissions a year when Dr. Auerbach took over as editor in chief. Now, it receives more than 800.

The higher number of submissions allows editors to be more selective about the papers that are published. At the same time, the growth in the journal’s profile and influence has allowed it to evolve into a more wide-ranging publication, Dr. Auerbach said.

“Geriatricians and nephrologists are sending us papers,” he said. “They believe our work is important, and they understand that we’re publishing research about topics such as acute kidney injury, delirium, inpatient safety issues, and transfer of care.”

According to Dr. Williams, his successor has played a central role in the journal’s success. “Andy improved the response rate of JHM, dramatically shortening the time for reviews while maintaining and even improving the quality of reviews,” he said. “This single act profoundly impacted author satisfaction and drove the increased number of article submissions.”

Dr. Auerbach also revolutionized the journal’s approach to technology. “Under his leadership, the journal pioneered the use of social media to engage readers in ways that were fundamentally different from established processes at the time,” said new editor in chief Dr. Shah. “For example, the journal has created roles for social media editors, and it routinely publishes visual abstracts to provide readers with a quick overview of journal research. We also hold regular dialogues with readers via our #JHMChat Twitter journal club to engage them in discussing the latest research published in JHM.”

Dr. Shah also noted that Dr. Auerbach boosted hospital medicine and the journal in other ways during his tenure. “He encouraged the team of editors to engage with our authors in meaningful and substantive ways. That meant encouraging thoughtful feedback and also reaching out to authors directly to provide additional guidance as they revised their manuscript and, oftentimes, as they prepared to submit their manuscript elsewhere,” he said.

In addition, Dr. Shah said that his colleague “also created the JHM editorial fellowship as a way to help develop the pipeline for academic leadership. This fellowship provides chief residents, academic hospital medicine fellows, and junior faculty an opportunity to learn about medical publishing, hone their skills in evaluating research and writing, and network with leaders in the field.”

For his part, Dr. Auerbach hopes his legacy at the journal will include an expansion, perhaps within a year or 2. “I’d love to see the journal come out twice a month,” he said. “There’s enough potential science out there, and I think it could be in that position soon.”

Awards of Excellence

Tuesday, 8:30 – 9:10 a.m.

Potomac ABCD

Cancer researchers take home AACR honors

The American Association for Cancer Research (AACR) has granted 14 awards and lectureships to cancer researchers and plans to recognize the recipients at its annual meeting.

Emil J. Freireich, MD, of the University of Texas MD Anderson Cancer Center in Houston, has won the 16th AACR Award for Lifetime Achievement in Cancer Research. AACR cited Dr. Freireich’s contributions related to leukocyte and allogeneic platelet transfusions, engraftment of peripheral blood stem cells, and combination chemotherapy approaches in the treatment of childhood leukemia. Dr. Freireich will receive the award during the opening ceremony of the meeting on March 31.

Another award to be given at the opening ceremony is the 13th Margaret Foti Award for Leadership and Extraordinary Achievements in Cancer Research. Raymond N. DuBois, MD, PhD, of the Medical University of South Carolina in Charleston, will receive the award for his contributions to the “early detection, interception, and prevention of colorectal cancer.” His contributions include elucidating the role of prostaglandins and cyclooxygenase in colon cancer tumorigenesis and pioneering the use of nonsteroidal anti-inflammatory drugs for cancer prevention. Dr. DuBois will deliver the lecture “Inflammation and Inflammatory Mediators as Potential Targets for Cancer Prevention or Interception” on April 1.

Jennifer R. Grandis, MD, of the University of California, San Francisco, has won the 22nd AACR–Women in Cancer Research Charlotte Friend Memorial Lectureship. Dr. Grandis characterized the role of EGFR, STAT3, and other signaling pathways in head and neck squamous cell carcinoma and used the findings to uncover new treatment options. Dr. Grandis will deliver her award lecture, “Leveraging Biologic Insights to Prevent and Treat Head and Neck Cancer,” on March 30.

John M. Carethers, MD, of the University of Michigan in Ann Arbor, has won the 14th AACR–Minorities in Cancer Research Jane Cooke Wright Memorial Lectureship. Dr. Carethers is being recognized for his work related to DNA mismatch repair, tumor resistance, inflammation, and health disparities in colorectal cancer patients. He will deliver his award lecture, “A Role for Inflammation-Induced DNA Mismatch Repair Deficits in Racial Outcomes from Advanced Colorectal Cancer,” on March 31.

Susan L. Cohn, MD, of the University of Chicago, has won the 24th AACR–Joseph H. Burchenal Memorial Award for Outstanding Achievement in Clinical Cancer Research (supported by Bristol-Myers Squibb). She won this award for her work in refining pediatric cancer risk-group classification, making discoveries that changed treatment strategies, and creating computational frameworks that enabled data collection and sharing. Dr. Cohn will deliver her award lecture, “Advancing Treatment Through Collaboration: A Pediatric Oncology Paradigm,” on April 2.

Another awardee to be recognized at the meeting is Alberto Mantovani, MD, a professor at Humanitas University in Milan, Italy, who won the 22nd Pezcoller Foundation–AACR International Award for Extraordinary Achievement in Cancer Research (supported by the Pezcoller Foundation).

Jeffrey A. Bluestone, PhD, of the University of California, San Francisco, won the 15th AACR–Irving Weinstein Foundation Distinguished Lecture (supported by the Irving Weinstein Foundation).

Andrew T. Chan, MD, of Massachusetts General Hospital in Boston, won the Third AACR–Waun Ki Hong Award for Outstanding Achievement in Translational and Clinical Cancer Research.

Elaine Fuchs, PhD, of Rockefeller University in New York, won the 59th AACR G.H.A. Clowes Memorial Award (supported by Lilly Oncology).

Charles L. Sawyers, MD, of the Memorial Sloan Kettering Cancer Center in New York, won the 13th AACR Princess Takamatsu Memorial Lectureship (supported by the Princess Takamatsu Cancer Research Fund).

Michael E. Jung, PhD, of the University of California, Los Angeles, won the 13th AACR Award for Outstanding Achievement in Chemistry in Cancer Research.

Cornelis J.M. Melief, MD, PhD, of Leiden (the Netherlands) University Medical Center and ISA Pharmaceuticals, also in Leiden, won the Seventh AACR–Cancer Research Institute Lloyd J. Old Award in Cancer Immunology (supported by the Cancer Research Institute).

Edward L. Giovannucci, MD, of the Harvard T.H. Chan School of Public Health in Boston, won the 28th AACR–American Cancer Society Award for Research Excellence in Cancer Epidemiology and Prevention (supported by the American Cancer Society).

Melissa M. Hudson, MD, and 15 other researchers from St. Jude Children’s Research Hospital in Memphis won the 13th AACR Team Science Award (supported by Lilly Oncology).

Movers in Medicine highlights career moves and personal achievements by hematologists and oncologists. Did you switch jobs, take on a new role, climb a mountain? Tell us all about it at [email protected], and you could be featured in Movers in Medicine.

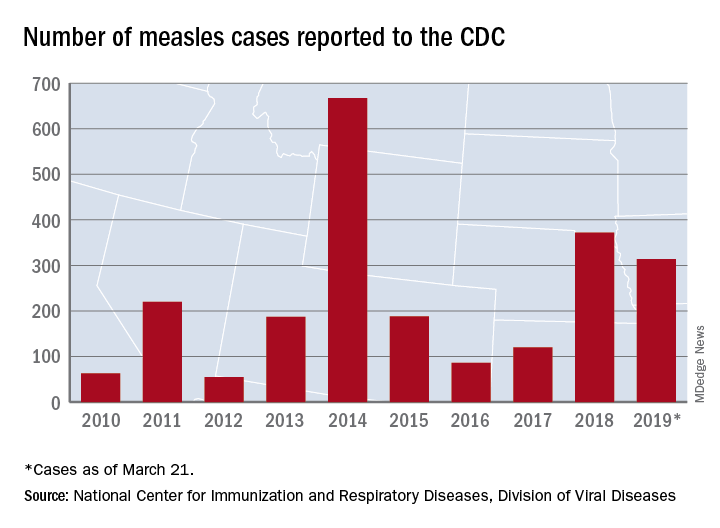

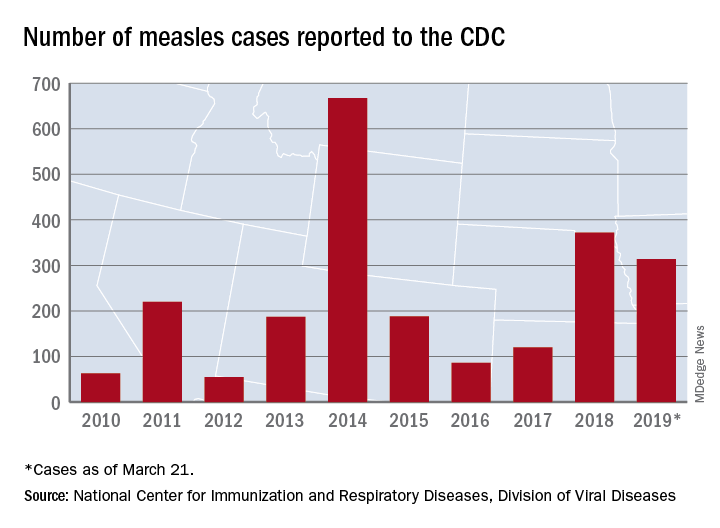

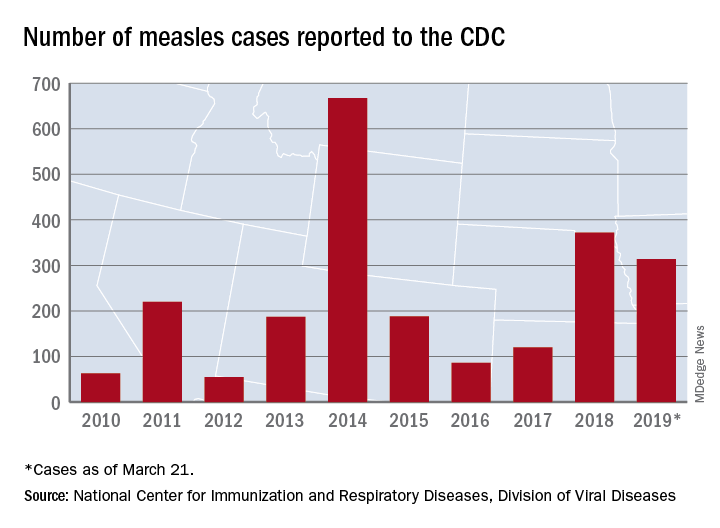

United States now over 300 measles cases for the year

Despite 46 new cases in the latest weekly report, the number of states with reported cases remains at 15, the CDC reported March 25.

For the fifth consecutive week, the busiest outbreak was in Brooklyn, N.Y., which added 23 new cases. New York’s Rockland County, which is just north of New York City and has 46 confirmed cases for the year, is home to another of the six current outbreaks in the country, with the other four located in Washington (74 total cases for the state), Texas (14 cases), California (7 cases), and Illinois (6 cases). Other states with cases are Arizona, Colorado, Connecticut, Georgia, Kentucky, Missouri, New Hampshire, New Jersey, and Oregon, reported the CDC.

With less than 3 months of the year elapsed, this year’s case total is nearing the 372 cases reported in 2018, the second-worst year for measles in the last decade, but it is still well below the 10-year high of 667 reported in 2014, the CDC said.

Hospitalists ‘in exactly the right place’ to move to value-based care

Hospitalists are uniquely positioned to be agents of change in the health care system, helping to care for very sick patients as well as identifying cost-efficient ways to keep them well, Marc Harrison, MD, said in his keynote presentation Monday at HM19.

“You’re in exactly the right place in exactly the right specialty to make an enormous difference for our country, which desperately needs an upgrade in terms of how we approach keeping people well and then taking care of people when they’re sick in a superefficient as well as independent fashion,” said Dr. Harrison, president and CEO of Intermountain Healthcare, based in Salt Lake City.

Hospital medicine, hospitalists, and the health care system in general are at an inflection point, and leading change in a positive fashion is the “single core competency” attendees need as health care transitions toward value-based care. This means moving away from a volume-based care model and asking hard questions about whether providers are doing the right things for patients, said Dr. Harrison at the Annual Conference of the Society of Hospital Medicine.

“It’s going to require humility, risk taking, and a desire to truly serve others if we’re going to change the paradigm for health care delivery,” he added.

About one-third of health care that providers deliver is redundant, which costs the United States approximately $1 trillion per year, Dr. Harrison noted. “There’s plenty of money in American health care; it’s just being misspent.”

Health care is “expensive, not consumer centric, and provides uneven quality,” which has spurred businesses such as Google and Amazon to try to change the model. Other trends in the health care industry include an increased focus on mergers and acquisitions, a negative growth outlook for hospitals, rural hospitals facing closure, and consumers increasingly demanding transparency, such as in their hospital bills.

“We need to be on our front foot” to adapt to these changes, said Dr. Harrison, which means changing how money is spent in U.S. health care. There is currently a disparity: only about 10% of a person’s health is determined by care delivered in hospitals and clinics, yet 90% of money spent in U.S. health care goes to that 10%. Spending should instead be inverted and focused on population-based health initiatives, such as addressing social issues surrounding housing, transportation, and food security.

A crisis is coming for providers and health systems that continue to focus on volume-based care models instead of making hard changes to value-based care, said Dr. Harrison. Population-based health initiatives that should be implemented include lowering the cost of care by shifting to outpatient care models and lowering insurance premiums.

“It doesn’t matter how technically good you are if people can’t afford what you’re doing,” he said.

Population-based health initiatives also should move upstream, fixing the causes of health problems rather than treating the symptoms. The goal, said Dr. Harrison, is a system with end-to-end seamless care, in which providers understand patients’ goals, are intentional about keeping their patients well, and can “ensure seamless handoff” to other caregivers.

Dr. Harrison said the future of hospital medicine is providing acute care in this way, and attendees are uniquely suited to take care of patients not only in the hospital but also at home, as well as providing consultations with colleagues in care environments without hospitalist programs.

“You’re in the absolute perfect place to keep patients in the least-restrictive, least-expensive environment where they can receive superb care,” he said.

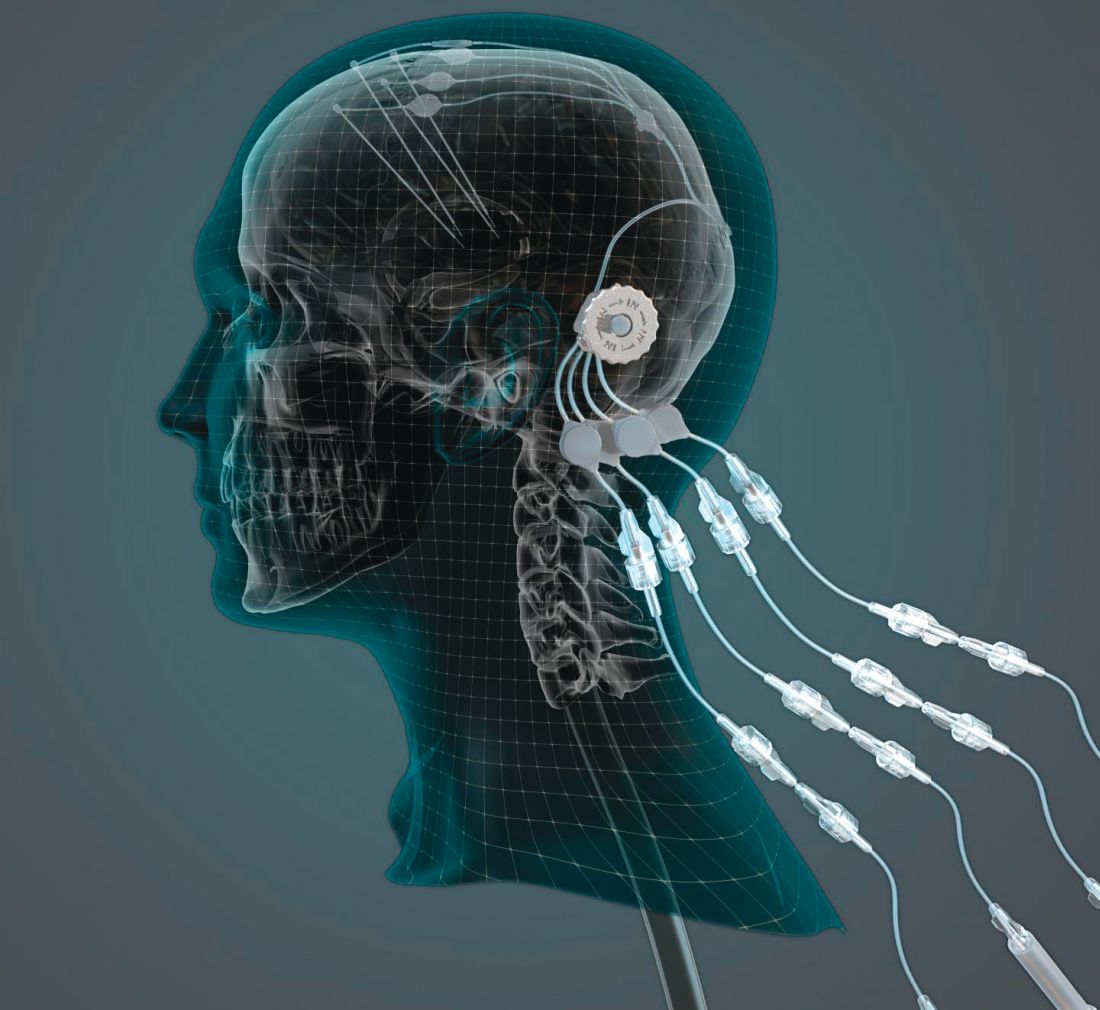

Can intraputamenal infusions of GDNF treat Parkinson’s disease?

researchers reported. The investigational therapy, delivered through a skull-mounted port, was well tolerated in a 40-week, randomized, controlled trial and a 40-week, open-label extension.

Neither study met its primary endpoint, but post hoc analyses suggest possible clinical benefits. In addition, PET imaging after the 40-week, randomized trial found significantly increased 18F-DOPA uptake in patients who received GDNF. The randomized trial was published in the March 2019 issue of Brain; data from the open-label extension were published online ahead of print Feb. 26, 2019, in the Journal of Parkinson’s Disease.

“The spatial and relative magnitude of the improvement in the brain scans is beyond anything seen previously in trials of surgically delivered growth-factor treatments for Parkinson’s [disease],” said principal investigator Alan L. Whone, MBChB, PhD, of the University of Bristol (England) and North Bristol National Health Service Trust. “This represents some of the most compelling evidence yet that we may have a means to possibly reawaken and restore the dopamine brain cells that are gradually destroyed in Parkinson’s [disease].”

Nevertheless, the trial did not confirm clinical benefits. The hypothesis that growth factors can benefit patients with Parkinson’s disease may be incorrect, the researchers acknowledged. It also is possible that the hypothesis is valid and that a trial with a higher GDNF dose, longer treatment duration, patients with an earlier disease stage, or different outcome measures would yield positive results. GDNF warrants further study, they wrote.

The findings could have implications for other neurologic disorders as well.

“This trial has shown that we can safely and repeatedly infuse drugs directly into patients’ brains over months or years. This is a significant breakthrough in our ability to treat neurologic conditions ... because most drugs that might work cannot cross from the bloodstream into the brain,” said Steven Gill, MB, MS. Mr. Gill, of the North Bristol NHS Trust and the U.K.-based engineering firm Renishaw, designed the convection-enhanced delivery system used in the studies.

A neurotrophic protein

GDNF has neurorestorative and neuroprotective effects in animal models of Parkinson’s disease. In open-label studies, continuous, low-rate intraputamenal administration of GDNF has shown signs of potential efficacy, but a placebo-controlled trial did not replicate clinical benefits. In the present studies, the researchers assessed intermittent GDNF administration using convection-enhanced delivery, which can achieve wider and more even distribution of GDNF, compared with the previous approach.

The researchers conducted a single-center, randomized, double-blind, placebo-controlled trial to study this novel administration approach. Patients were aged 35-75 years, had motor symptoms for at least 5 years, and had moderate disease severity in the off state (that is, Hoehn and Yahr stage 2-3 and Unified Parkinson’s Disease Rating Scale motor score–part III [UPDRS-III] of 25-45).

In a pilot stage of the trial, six patients were randomized 2:1 to receive GDNF (120 mcg per putamen) or placebo. In the primary stage, another 35 patients were randomized 1:1 to GDNF or placebo. The primary outcome was the percentage change from baseline to week 40 in the off-state UPDRS-III among patients from the primary stage of the trial. Further analyses included all 41 patients from the pilot and primary stages.

Patients in the primary analysis had a mean age of 56.4 years and mean disease duration of 10.9 years. About half were female.

Results on primary and secondary clinical endpoints did not significantly differ between the groups. The average off-state UPDRS motor score decreased by 17.3% in the active-treatment group, compared with 11.8% in the placebo group.

A post hoc analysis, however, found that nine patients (43%) in the active-treatment group had a large, clinically important motor improvement of 10 or more points in the off state, whereas no placebo patients did. These “10-point responders in the GDNF group are a potential focus of interest; however, as this is a post hoc finding we would not wish to overinterpret its meaning,” Dr. Whone and his colleagues wrote. Among patients who received GDNF, PET imaging demonstrated significantly increased 18F-DOPA uptake throughout the putamen, ranging from a 25% increase in the left anterior putamen to a 100% increase in both posterior putamina, whereas patients who received placebo did not have significantly increased uptake.

No drug-related serious adverse events were reported. “The majority of device-related adverse events were port site associated, most commonly local hypertrophic scarring or infections, amenable to antibiotics,” the investigators wrote. “The frequency of these declined during the trial as surgical and device handling experience improved.”

Open-label extension

By week 80, when all participants had received GDNF, both groups showed moderate to large improvement in symptoms, compared with baseline. From baseline to week 80, percentage change in UPDRS motor score in the off state did not significantly differ between patients who received GDNF for 80 weeks and patients who received placebo followed by GDNF (26.7% vs. 27.6%). Secondary endpoints also did not differ between the groups. Treatment compliance was 97.8%; no patients discontinued the study.

The trials were funded by Parkinson’s UK with support from the Cure Parkinson’s Trust and in association with the North Bristol NHS Trust. GDNF and additional resources and funding were provided by MedGenesis Therapeutix, which owns the license for GDNF and received funding from the Michael J. Fox Foundation for Parkinson’s Research. Renishaw manufactured the convection-enhanced delivery device on behalf of North Bristol NHS Trust. The Gatsby Foundation provided a 3T MRI scanner. Some study authors are employed by and have shares or share options with MedGenesis Therapeutix. Other authors are employees of Renishaw. Mr. Gill is Renishaw’s medical director and may have a future royalty share from the drug delivery system that he invented.

SOURCES: Whone AL et al. Brain. 2019 Feb 26. doi: 10.1093/brain/awz023; Whone AL et al. J Parkinsons Dis. 2019 Feb 26. doi: 10.3233/JPD-191576.

Hospital medicine grows globally

Hospital medicine is growing in popularity in some foreign countries, speakers said during Monday afternoon’s session, “International Hospital Medicine in the United Arab Emirates, Brazil and Holland.” The presenters discussed some of the history of hospital medicine in each of those countries as well as some current challenges.

Hospital medicine in the Netherlands started in about 2012, said Marjolein de Boom, MD, a hospitalist at Haaglanden Medical Centre. The country has its own 3-year training program for hospitalists, who began working in Dutch hospitals in 2015. “It’s a relatively new and young specialty,” said Dr. de Boom. The country has 39 hospitalists working in 8 of its 80 hospitals, and another 25 or so are in training, “so it’s a growing profession,” she said. A Dutch chapter of SHM has been in place since 2017.

Hospitals in the Netherlands permit physicians to serve as hospitalists in different specialties, depending on the hospitals’ needs. For example, Dr. de Boom works in the oncology department as well as the surgical and trauma surgery units. One challenge has been attracting more physicians to the hospitalist program, which is newer and less well known, she said.

Hospital medicine in the United Arab Emirates also is a newer concept. The American model of hospital medicine was first introduced to the region in 2014 by the Cleveland Clinic in Abu Dhabi, said Mahmoud Al-Hawamdeh, MD, MBA, SFHM, FACP, chair of hospital medicine at the medical center. “Before that, inpatient hospital care was done by traditional family and internal medicine physicians, general practitioners, and residents,” he said.

There are 43 hospitalists at Cleveland Clinic, Abu Dhabi, said Dr. Al-Hawamdeh. They cover about 50%-60% of inpatient services, as well as handle admissions for vascular surgery, ophthalmology, and some general services; they also comanage postcardiac surgery care, he said. “It has been a tremendous success to implement hospital medicine in the care for the inpatient with improved quality metrics, reduced length of stay, and improved patient satisfaction.”

However, there are some challenges, such as educating patients and families about the role of hospitalists, cultural barriers, and the lack of a postdischarge follow-up network and institutions such as skilled nursing facilities. Dr. Al-Hawamdeh worked with physicians from Johns Hopkins Aramco Healthcare and Hamad Medical Corporation to establish an SHM Middle East chapter in 2016.

In Brazil, hospital medicine started to take hold in 2004, said Guilherme Barcellos, MD, SFHM. At that time, just a few doctors were true hospitalists. Dr. Barcellos helped create two hospitalist societies in the country. It is still very common for hospitalists to balance multiple jobs, although the practice is decreasing, he said, while hospital employment and medical group participation are increasing.

“It was a high-pressure environment, crying out for efficiency, that drove forward Brazilian hospital medicine,” Dr. Barcellos said, “together with new reimbursement models, surgical redesigns, primary care recognition and structure.”

Some challenges remain in Brazil as well, he said. Some upscale private hospitals announce that they have hospitalists when they may not. In addition, the roles of generalists, subspecialists, and certifications are not always clear. But hospitalists are gaining a foothold, participating in a Choosing Wisely initiative in the country and organizing several conferences.

In transgender care, questions are the answer

New York OBGYN Zoe I. Rodriguez, MD, a pioneer in the care of transgender people, has witnessed a remarkable evolution in medicine.

Years ago, providers knew little to nothing about the unique needs of transgender patients. Now, Dr. Rodriguez said, “there’s tremendous interest in being able to competently treat and address transgender individuals.”

But increased awareness has come with a dose of worry. Providers are often afraid they’ll say or do the wrong thing.

Dr. Rodriguez, who is an assistant professor at the Icahn School of Medicine at Mount Sinai, New York, will help hospitalists gain confidence in treating transgender patients at an HM19 session on Tuesday. “I hope to eliminate this element of fear,” she said. “It’s just really about treating people with respect and dignity and having the knowledge to care for them appropriately.”

The United States is home to an estimated 1.4 million transgender people, and every one has a preferred name and preferred pronouns. It’s crucial for physicians to understand name and pronoun preferences and use them, Dr. Rodriguez said.

At her practice, an intake form asks patients how they wish to be addressed. “I know this information by the time I walk into the exam room,” she said.

Hospitalists may not be able to get this information beforehand, she said. In that case, they should ask the patient and not be afraid of getting it wrong.

“Mistakes happen all the time,” Dr. Rodriguez said. “People will correct you if you misgender them or call them something other than their preferred name. As long as the mistakes are not willful, apologize and move on.”

It’s also important to understand the special needs that transgender patients may – or may not – have. For example, not every transgender patient takes hormones. Even if a patient does, the hormones may not affect as many body processes as you might assume, Dr. Rodriguez said.

Also, not every transgender person has had surgery. However, it can be helpful to understand what surgery entails. “If they get their surgery done in Thailand, a popular destination, and they need treatment in Topeka for an issue related to their surgery, it would be good for the hospitalist to understand what’s done during the surgery,” she said.

In her session, Dr. Rodriguez will also talk about creating an LGBT-friendly environment. “These patients are already feeling very vulnerable and marginalized within these vast health systems,” she said. “It makes a big difference to know that someone is there and gets it.”

Dr. Rodriguez also plans to emphasize the importance of staying aware and up to date about transgender issues. “It’s a continuum,” she said. “There will be more evolution as people come up with new terminologies and words to describe their gender expression and identity. It will be crucially important for physicians to be aware and respectful.”

What Hospitalists Need to Know About Caring for Transgender Patients

Tuesday, 3:50 - 4:30 p.m.

Maryland A/1-3