CF patients live longer in Canada than in U.S.

People with cystic fibrosis (CF) survive an average of 10 years longer if they live in Canada than if they live in the United States, according to a report published online March 14 in Annals of Internal Medicine.

Differences between the two nations’ health care systems, including access to insurance, “may, in part, explain the Canadian survival advantage,” said Anne L. Stephenson, MD, PhD, of St. Michael’s Hospital, Toronto, and her associates.

Overall there were 9,654 U.S. deaths and 1,288 Canadian deaths during the study period, for nearly identical overall mortality between the two countries (21.2% and 21.7%, respectively). However, the median survival was 10 years longer in Canada (50.9 years) than in the United States (40.6 years), a gap that persisted across numerous analyses that adjusted for patient characteristics and clinical factors, including CF severity.

One particular difference between the two study populations was found to be key: Canada has single-payer universal health insurance, while the United States does not. When U.S. patients were categorized according to their insurance status, Canadians had a 44% lower risk of death than did U.S. patients receiving continuous Medicaid or Medicare (95% confidence interval, 0.45-0.71; P less than .001), a 36% lower risk than for U.S. patients receiving intermittent Medicaid or Medicare (95% CI, 0.51-0.80; P = .002), and a 77% lower risk of death than U.S. patients with no or unknown health insurance (95% CI, 0.14-0.37; P less than .001), the investigators said (Ann. Intern. Med. 2017 Mar 14. doi: 10.7326/M16-0858). In contrast, there was no survival advantage for Canadian patients when compared with U.S. patients who had private health insurance. This “[raises] the question of whether a disparity exists in access to therapeutic approaches or health care delivery,” the researchers noted.

This study was supported by the U.S. Cystic Fibrosis Foundation, Cystic Fibrosis Canada, the National Institutes of Health, and the U.S. Food and Drug Administration. Dr. Stephenson reported grants from the Cystic Fibrosis Foundation and fees from Cystic Fibrosis Canada. Several of the study’s other authors reported receiving fees from various sources and one of those authors reported serving on the boards of pharmaceutical companies.

Stephenson et al. confirmed that there is a “marked” [survival] “advantage” for CF patients in Canada, compared with those in the United States.

A key finding of this study was that the survival difference between the two countries disappeared when U.S. patients insured by Medicaid or Medicare and those with no health insurance were excluded from the analysis. The fundamental differences between the two nations’ health care systems seem to be driving this disparity in survival.

Median predicted survival for all Canadians is higher than that of U.S. citizens, and this difference has increased over the last 2 decades.

Patrick A. Flume, MD, is at the Medical University of South Carolina in Charleston. Donald R. VanDevanter, PhD, is at Case Western Reserve University in Cleveland. They both reported ties to the Cystic Fibrosis Foundation. Dr. Flume and Dr. VanDevanter made these remarks in an editorial accompanying Dr. Stephenson’s report (Ann. Intern. Med. 2017 Mar 14. doi: 10.7326/M17-0564).

FROM ANNALS OF INTERNAL MEDICINE

Key clinical point: People with cystic fibrosis survive an average of 10 years longer if they live in Canada than if they live in the United States.

Major finding: Canadians with CF had a 44% lower risk of death than U.S. patients receiving Medicaid or Medicare and a striking 77% lower risk of death than U.S. patients with no health insurance, but the same risk as U.S. patients with private insurance.

Data source: A population-based cohort study involving 45,448 patients in a U.S. registry and 5,941 in a Canadian registry in 1990-2013.

Disclosures: This study was supported by the U.S. Cystic Fibrosis Foundation, Cystic Fibrosis Canada, the National Institutes of Health, and the Food and Drug Administration. The authors’ financial disclosures are available at www.acponline.org

No rise in CV events seen with tocilizumab

Patients with refractory rheumatoid arthritis who switched to tocilizumab showed no increased cardiovascular risk when compared with those who switched to a tumor necrosis factor inhibitor in a large cohort study.

Rheumatoid arthritis is known to approximately double the risk of cardiovascular morbidity and mortality, partly because of its associated chronic systemic inflammation. Several small trials and observational studies have reported that tocilizumab, an interleukin-6 receptor antagonist typically used as a second-line treatment for RA, elevates serum lipids, including LDL cholesterol. These lipid elevations have raised concerns that the drug may further heighten CV risk in people with RA, said Seoyoung C. Kim, MD, ScD, of the division of pharmacoepidemiology and pharmacoeconomics and the division of rheumatology, immunology, and allergy at Brigham and Women’s Hospital, Boston, and her associates.

To minimize the effect of confounding by the severity of RA and the baseline CV risk, the researchers adjusted the data to account for more than 90 variables related to CV events and to RA severity.

The primary outcome — a composite of MI and stroke — occurred in 125 patients, 36 taking tocilizumab and 89 taking TNF inhibitors. The rate of this composite outcome was 0.52 per 100 person-years with tocilizumab and 0.59 per 100 person-years for TNF inhibitors, a nonsignificant difference, Dr. Kim and her associates reported (Arthritis Rheumatol. 2017 Feb 28. doi: 10.1002/art.40084).

There also were no significant differences between the two study groups in secondary endpoints, including rates of coronary revascularization, acute coronary syndrome, heart failure, and all-cause mortality. In addition, all subgroup analyses confirmed that tocilizumab did not raise CV risk, regardless of patient age (younger than or older than 60 years), the presence of cardiovascular disease at baseline, the presence of diabetes, the use of methotrexate, the use of oral steroids, or the use of statins.

These “reassuring” findings show that even though tocilizumab appears to raise LDL levels, “such increases do not appear to be associated with an increased risk of clinical CV events,” the investigators said.

The results confirm those reported at the 2016 American College of Rheumatology annual meeting for the 5-year, randomized, postmarketing ENTRACTE trial in which the lipid changes induced by tocilizumab did not translate into an increased risk of heart attack or stroke in RA patients.

This cohort study was sponsored by Genentech, which markets tocilizumab (Actemra). Dr. Kim reported ties to Genentech, Lilly, Pfizer, Bristol-Myers Squibb, and AstraZeneca. Her associates reported ties to Genentech, Lilly, Pfizer, AstraZeneca, Amgen, Corrona, Whiscon, Aetion, and Boehringer Ingelheim. Three of the seven authors were employees of Genentech.

FROM ARTHRITIS & RHEUMATOLOGY

Key clinical point: Patients with refractory RA who switch to tocilizumab show no increased cardiovascular risk, compared with those who switch to a TNF inhibitor.

Major finding: The primary outcome – the rate of a composite of MI and stroke – was 0.52 per 100 person-years with tocilizumab and 0.59 per 100 person-years for TNF inhibitors, a nonsignificant difference.

Data source: A cohort study involving 28,028 adults with RA enrolled in three large health care claims databases from all 50 states who were followed for a median of 1 year.

Disclosures: This study was sponsored by Genentech, which markets tocilizumab (Actemra). Dr. Kim reported ties to Genentech, Lilly, Pfizer, Bristol-Myers Squibb, and AstraZeneca. Her associates reported ties to Genentech, Lilly, Pfizer, AstraZeneca, Amgen, Corrona, Whiscon, Aetion, and Boehringer Ingelheim. Three of the seven authors were employees of Genentech.

Norovirus reporting tool yields real-time outbreak data

NoroSTAT, the Centers for Disease Control and Prevention’s new program with which states can report norovirus outbreaks, yields more timely and more complete epidemiologic and laboratory data, which allows a faster and better-informed public health response to such outbreaks, according to a report published in the Morbidity and Mortality Weekly Report.

The CDC launched NoroSTAT (Norovirus Sentinel Testing and Tracking) in 2012 to permit the health departments in selected states to report specific epidemiologic and laboratory data regarding norovirus outbreaks more rapidly than usual – within 7 business days, said Minesh P. Shah, MD, of the Epidemic Intelligence Service and the division of viral diseases, CDC, Atlanta, and his associates.

NoroSTAT significantly reduced the median interval in reporting epidemiologic data concerning norovirus from 22 days to 2 days and significantly reduced the median interval in reporting relevant laboratory data from 21 days to 3 days. The percentage of reports submitted within 7 business days increased from 26% to 95% among the states participating in NoroSTAT, while remaining low – only 12%-13% – in nonparticipating states. The percentage of complete reports also increased substantially, from 87% to 99.9%, among the participating states.

These improvements likely result from NoroSTAT’s stringent reporting requirements and from the program’s ability “to enhance communication between epidemiologists and laboratorians in both state health departments and at CDC,” Dr. Shah and his associates said (MMWR Morbidity and Mortality Weekly Report. 2017 Feb 24;66:185-9).

NoroSTAT represents a key advancement in norovirus outbreak surveillance and has proved valuable in early identification and better characterization of outbreaks. It was expanded to include nine states in August 2016, the investigators added.

Key clinical point: NoroSTAT, the CDC’s new program with which states can report norovirus outbreaks, yields more timely and complete epidemiologic data.

Major finding: NoroSTAT significantly reduced the median interval in reporting epidemiologic data concerning norovirus from 22 days to 2 days and significantly reduced the median interval in reporting relevant laboratory data from 21 days to 3 days.

Data source: A comparison of epidemiologic and laboratory data reported by all 50 states for the 3 years before and the 3 years after NoroSTAT was implemented in 5 states.

Disclosures: This study was sponsored by the Centers for Disease Control and Prevention. No financial disclosures were provided.

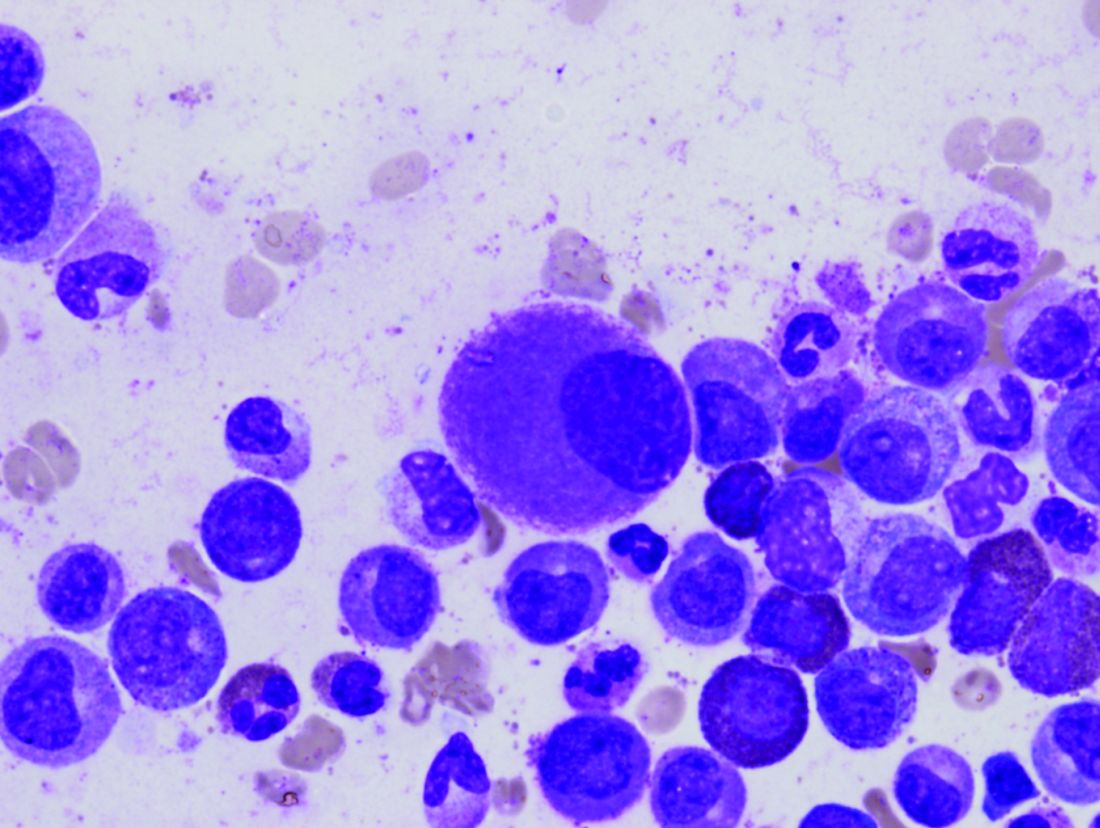

In CML, imatinib benefits persist at 10 years

In patients with chronic myeloid leukemia (CML), the safety and benefits of imatinib persisted over the course of 10 years in a follow-up study reported online March 9 in the New England Journal of Medicine.

The initial results of the phase III International Randomized Study of Interferon and STI571 (IRIS) trial “fundamentally changed CML treatment and led to marked improvements in prognosis for patients” when imatinib was shown to be more effective than interferon plus cytarabine among newly diagnosed patients in the chronic phase of the disease, said Andreas Hochhaus, MD, of the Klinik für Innere Medizin II, Universitätsklinikum Jena (Germany), and his associates. The researchers are now reporting the final follow-up results after a median of 10.9 years for the 553 patients who had been randomly assigned to receive daily oral imatinib in the IRIS trial.

The high rate of crossover to the imatinib group among IRIS study participants precluded a direct comparison of overall survival between the two study groups at 10 years. However, the estimated overall 10-year survival in the imatinib group alone was 83.3%. “A total of 260 patients (47.0%) were alive and still receiving study treatment at 10 years, 96 patients (17.4%) were alive and not receiving study treatment, 86 known deaths (15.6% of patients) had occurred, and 111 patients (20.1%) had unknown survival status,” Dr. Hochhaus and his associates reported (N Engl J Med. 2017 Mar 9. doi: 10.1056/NEJMoa1609324).

Approximately 9% of those in the imatinib group had a serious adverse event during follow-up, including 4 patients (0.7%) in whom the event was considered to be related to the drug. Most of these events occurred during the first year of treatment, and their frequency declined over time. No new safety signals were observed after the 5-year follow-up.

“These results highlight the safety and efficacy of imatinib therapy, with a clear improvement over the outcomes that were expected in patients who received a diagnosis of CML before the introduction of tyrosine kinase inhibitor therapy, when interferon alfa and hematopoietic stem-cell transplantation were the standard therapies,” the investigators said.

Second-generation tyrosine kinase inhibitors have been developed since the IRIS trial was begun, and “it remains to be seen whether they will have similarly favorable long-term safety. Given the long-term safety and efficacy results with imatinib and the increasing availability of generic imatinib, comparative analyses evaluating the available tyrosine kinase inhibitors for first-line therapy are likely to be forthcoming,” they noted.

In patients with chronic myeloid leukemia (CML), the safety and benefits of imatinib persisted over the course of 10 years in a follow-up study reported online March 9 in the New England Journal of Medicine.

The initial results of the phase III International Randomized Study of Interferon and ST1571 (IRIS) trial “fundamentally changed CML treatment and led to marked improvements in prognosis for patients” when imatinib was shown more effective than interferon plus cytarabine among newly diagnosed patients in the chronic phase of the disease, said Andreas Hochhaus, MD, of the Klinik für Innere Medizin II, Universitätsklinikum Jena (Germany), and his associates. The researchers are now reporting the final follow-up results after a median of 10.9 years for the 553 patients who had been randomly assigned to receive daily oral imatinib in the IRIS trial.

The high rate of crossover to the imatinib group among IRIS study participants precluded a direct comparison of overall survival between the two study groups at 10 years. However, the estimated overall 10-year survival in the imatinib group alone was 83.3%. “A total of 260 patients (47.0%) were alive and still receiving study treatment at 10 years, 96 patients (17.4%) were alive and not receiving study treatment, 86 known deaths (15.6% of patients) had occurred, and 11 patients (20.1%) had unknown survival status,” Dr. Hochhaus and his associates reported (N Engl J Med. 2017 Mar 9. doi: 10.1056/NEJMoa1609324).

Approximately 9% of those in the imatinib group had a serious adverse event during follow-up, including 4 patients (0.7%) in whom the event was considered to be related to the drug. Most occurred during the first year of treatment and declined over time. No new safety signals were observed after the 5-year follow-up.

“These results highlight the safety and efficacy of imatinib therapy, with a clear improvement over the outcomes that were expected n patients who received a diagnosis of CML before the introduction of tyrosine kinase inhibitor therapy, when interferon alfa and hematopoietic stem-cell transplantation were the standard therapies,” the investigators said.

Second-generation tyrosine kinase inhibitors have been developed since the IRIS trial was begun, and “it remains to be seen whether they will have similarly favorable long-term safety. Given the long-term safety and efficacy results with imatinib and the increasing availability of generic imatinib, comparative analyses evaluating the available tyrosine kinase inhibitors for first-line therapy are likely to be forthcoming,” they noted.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Imatinib’s benefits in chronic myeloid leukemia and its safety profile persisted over the long term in a 10-year follow-up study.

Major finding: The estimated overall 10-year survival was 83.3%, and no new safety signals were observed after 5-year follow-up.

Data source: Extended follow-up of an open-label international randomized trial involving 553 adults with CML.

Disclosures: This study was funded by Novartis. Dr. Hochhaus reported ties to Novartis and other drug companies.

Nemolizumab improves pruritus in atopic dermatitis

Monthly subcutaneous injections of nemolizumab, a humanized monoclonal antibody that inhibits interleukin-31 signaling, significantly improved pruritus associated with atopic dermatitis (AD) in a small, 3-month phase II trial. The results were published online March 2 in the New England Journal of Medicine.

“Although this trial has limitations, most notably the small number of patients and short duration, it provides evidence supporting the role of interleukin-31 in the pathobiologic mechanism of atopic dermatitis,” said Thomas Ruzicka, MD, of the department of dermatology and allergology, Ludwig Maximilian University, Munich, and his associates.

Pruritus aggravates atopic dermatitis and has been linked to loss of sleep, depression, aggressiveness, body disfiguration, and suicidal thoughts. Existing treatments, including emollients, topical glucocorticoids, calcineurin inhibitors, and oral antihistamines, have limited efficacy and can cause adverse effects when used long term, the investigators noted.

They assessed nemolizumab in a manufacturer-funded multiple-dose trial involving 264 adults in the United States, Europe, and Japan who had refractory moderate to severe atopic dermatitis that was inadequately controlled with topical treatments. Study participants were randomly assigned in a double-blind fashion to receive 12 weeks of 0.1 mg/kg nemolizumab (53 patients), 0.5 mg/kg nemolizumab (54 patients), 2.0 mg/kg nemolizumab (52 patients), or placebo (53 control subjects) every 4 weeks. Another 52 participants were given 2.0 mg/kg nemolizumab every 8 weeks in an exploratory analysis. All the study participants were permitted to use emollients and localized treatments, and some were permitted by the investigators to use a potent topical glucocorticoid as rescue therapy after week 4.

A total of 216 patients (82%) completed the trial.

The primary efficacy endpoint was the percentage improvement at week 12 in scores on a pruritus visual analogue scale, which patients recorded electronically every day. These scores improved significantly in a dose-dependent manner for active treatment, compared with placebo. Pruritus declined by 43.7% with the 0.1 mg/kg dose (P = .002), 59.8% with the 0.5 mg/kg dose (P less than .001), and 63.1% with the 2.0 mg/kg dose (P less than .001), compared with 20.9% with placebo.
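To make the dose-response pattern easier to see, the week-12 figures above can be set against the placebo result. This is only an illustrative subtraction of the reported percentages, expressed in percentage points; it is not a statistic reported by the investigators, and the names used are hypothetical.

```python
# Week-12 percentage improvement in pruritus score, as reported for the
# every-4-weeks dosing groups.
PLACEBO_IMPROVEMENT = 20.9

improvement_by_dose = {
    "0.1 mg/kg": 43.7,
    "0.5 mg/kg": 59.8,
    "2.0 mg/kg": 63.1,
}

# Difference from placebo in percentage points (plain subtraction, rounded
# to one decimal to avoid floating-point noise).
difference_vs_placebo = {
    dose: round(value - PLACEBO_IMPROVEMENT, 1)
    for dose, value in improvement_by_dose.items()
}

for dose, diff in difference_vs_placebo.items():
    print(f"{dose}: {diff} percentage points more improvement than placebo")
```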

Nemolizumab also bested placebo in several secondary endpoints including scores on a verbal rating of pruritus, the Eczema Area and Severity Index, and the static Investigator’s Global Assessment, the investigators said (N Engl J Med 2017;376:826-35. doi: 10.1056/NEJMoa1606490).

The study population was too small to allow the investigators to draw conclusions regarding adverse events, even before a relatively high number of participants dropped out. However, patients who received active treatment had a higher rate of dermatitis exacerbations and peripheral edema than did those who received placebo.

The group given 0.5 mg/kg nemolizumab every month showed the greatest treatment benefit and the best benefit-to-risk profile, Dr. Ruzicka and his associates said.

This trial was funded by Chugai Pharmaceutical, which also participated in the study design, data collection and analysis, and preparation of the manuscript. Dr. Ruzicka reported receiving research grants and personal fees from Chugai and honoraria from Astellas; his associates reported ties to numerous industry sources.

In addition to the benefits cited by Ruzicka et al., nemolizumab appeared to work quickly, reducing pruritus by nearly 30% within the first week, compared with a slight placebo effect.

Data from larger and longer-term studies, as well as pediatric trials, are needed to fully understand how nemolizumab and other new agents should be incorporated into the management of AD.

It will be important to assess how quickly disease flares occur when these agents are stopped, and whether the concomitant use of other treatments may enhance their effectiveness or induce longer remissions.

Lynda C. Schneider, MD, is in the division of immunology at Boston Children’s Hospital. She disclosed having received grant support from Astellas, personal fees from Anacor Pharmaceuticals, and other support from the National Eczema Association outside the submitted work. Dr. Schneider made these remarks in an editorial accompanying the study (N Engl J Med. 2017 Mar 2. doi: 10.1056/NEJMe1616072).

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Monthly nemolizumab injections significantly improved pruritus in adults with moderate to severe atopic dermatitis.

Major finding: Pruritus declined by 43.7% with the 0.1-mg/kg dose, 59.8% with the 0.5-mg/kg dose, and 63.1% with the 2.0-mg/kg dose, compared with 20.9% with placebo.

Data source: A manufacturer-funded international randomized double-blind placebo-controlled phase II trial involving 264 adults with moderate to severe AD treated for 12 weeks, 216 of whom completed the trial.

Disclosures: This trial was funded by Chugai Pharmaceutical, which also participated in the study design, data collection and analysis, and preparation of the manuscript. Dr. Ruzicka reported receiving research grants and personal fees from Chugai and honoraria from Astellas; his associates reported ties to numerous industry sources.

Blinatumomab superior to chemotherapy for refractory ALL

Blinatumomab proved superior to standard chemotherapy for relapsed or refractory acute lymphoblastic leukemia (ALL), based on results of an international phase III trial reported online March 2 in the New England Journal of Medicine.

The trial was halted early when an interim analysis revealed the clear benefit with blinatumomab, Hagop Kantarjian, MD, chair of the department of leukemia, University of Texas MD Anderson Cancer Center, Houston, and his associates wrote.

The manufacturer-sponsored open-label study included 376 adults with Ph-negative B-cell precursor ALL that was refractory to primary induction therapy or to salvage with intensive combination chemotherapy, in first relapse with a first remission lasting less than 12 months, in second or greater relapse, or in relapse at any time after allogeneic stem cell transplantation.

Study participants were randomly assigned to receive either blinatumomab (267 patients) or the investigator’s choice of one of four protocol-defined regimens of standard chemotherapy (109 patients) and were followed at 101 medical centers in 21 countries for a median of 11 months.

For each 6-week cycle of blinatumomab therapy, patients received treatment for 4 weeks (9 mcg blinatumomab per day during week 1 of induction cycle one and 28 mcg/day thereafter, by continuous infusion) and then no treatment for 2 weeks.

Maintenance treatment with blinatumomab was given as a 4-week continuous infusion every 12 weeks.

At the interim analysis – when 75% of the total number of planned deaths for the final analysis had occurred – the monitoring committee recommended that the trial be stopped early because of the benefit observed with blinatumomab therapy. Median overall survival was significantly longer with blinatumomab (7.7 months) than with chemotherapy (4 months), with a hazard ratio for death of 0.71. The estimated survival at 6 months was 54% with blinatumomab and 39% with chemotherapy.

Remission rates also favored blinatumomab: Rates of complete remission with full hematologic recovery were 34% vs. 16% and rates of complete remission with full, partial, or incomplete hematologic recovery were 44% vs. 25%.

In addition, the median duration of remission was 7.3 months with blinatumomab and 4.6 months with chemotherapy, and 6-month estimates of event-free survival were 31% vs. 12%. These survival and remission benefits were consistent across all subgroups of patients and persisted in several sensitivity analyses, the investigators said (N Engl J Med. 2017 Mar 2. doi: 10.1056/NEJMoa1609783).

A total of 24% of the patients in the blinatumomab group and 24% of the patients in the chemotherapy group underwent allogeneic stem cell transplantation, with comparable outcomes and death rates.

Serious adverse events occurred in 62% of patients receiving blinatumomab and in 45% of those receiving chemotherapy, including fatal adverse events in 19% and 17%, respectively. The fatal events were considered to be related to treatment in 3% of the blinatumomab group and in 7% of the chemotherapy group. Rates of treatment discontinuation from an adverse event were 12% and 8%, respectively.

Patient-reported health status and quality of life improved with blinatumomab but worsened with chemotherapy.

“Given the previous exposure of these patients to myelosuppressive and immunosuppressive treatments, the activity of an immune-based therapy such as blinatumomab, which depends on functioning T cells for its activity, provides encouragement that responses may be further enhanced and made durable with additional immune activation strategies,” Dr. Kantarjian and his associates noted.

Dr. Kantarjian reported receiving research support from Amgen, Pfizer, Bristol-Myers Squibb, Novartis, and ARIAD; his associates reported ties to numerous industry sources.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Blinatumomab proved superior to standard chemotherapy for relapsed or refractory ALL in a phase III trial.

Major finding: Median overall survival with blinatumomab (7.7 months) was significantly longer than with chemotherapy (4.0 months), and rates of complete remission with full hematologic recovery were 34% vs. 16%, respectively.

Data source: A manufacturer-sponsored international randomized open-label phase III trial involving 376 adults followed for a median of 11 months.

Disclosures: This trial was funded by Amgen, which also participated in the study design, data analysis, and report writing. Dr. Kantarjian reported receiving research support from Amgen, Pfizer, Bristol-Myers Squibb, Novartis, and ARIAD; his associates reported ties to numerous industry sources.

Sirukumab found effective, safe for highly refractory RA

The investigational interleukin-6 inhibitor sirukumab proved effective and safe for rheumatoid arthritis patients who failed to respond to or were intolerant of multiple previous therapies in a phase III trial reported online in The Lancet.

These patients are “exceptionally treatment-resistant” and have “substantial clinical needs with the lowest chance of treatment success,” said first author Daniel Aletaha, MD, of the division of rheumatology at the Medical University of Vienna, and his associates.

Two different doses of sirukumab provided rapid and sustained improvements in rheumatoid arthritis (RA) signs and symptoms, physical function, and health status. These were accompanied by improvements in physical and mental well-being, a result that is particularly relevant for RA patients, who have a high prevalence of mood disorders. It has been estimated that 39% of RA patients have moderate to severe depressive symptoms, the investigators noted.

They assessed sirukumab in the manufacturer-funded trial involving 878 adults treated and followed at 183 centers in 20 countries across North America, Europe, Latin America, and Asia. These patients included 59% who had failed to respond to or had been intolerant of two or more biologic disease-modifying antirheumatic drugs (39% to two or more tumor necrosis factor inhibitors) and numerous other RA treatments.

The patients in the study, called SIRROUND-T, were randomly assigned to receive 50 mg sirukumab every 4 weeks (292 patients in the low-dose group), 100 mg sirukumab every 2 weeks (292 in the high-dose group), or matching placebo (294 patients in the control group) via subcutaneous injections for 52 weeks. Patients receiving placebo whose condition worsened were allowed an “early escape,” a blinded switch to either dose of the active drug for the remainder of the trial. At week 24, patients remaining in the placebo group also were randomly assigned in a blinded manner to switch to active treatment (Lancet. 2017 Feb 15. doi: 10.1016/S0140-6736[17]30401-4). All patients were allowed to continue using any concomitant disease-modifying antirheumatic drugs (DMARDs). At baseline, only 80% were using a conventional synthetic DMARD, and about 25% of patients in each group were not taking methotrexate.

The primary efficacy endpoint was the proportion of patients achieving an ACR20 response (a 20% or greater improvement in swollen joint count and tender joint count plus a 20% or greater improvement in at least three factors: pain, patient-assessed disease activity, physician-assessed disease activity, patient-assessed physical function, and C-reactive protein levels) at week 16. Sirukumab achieved higher levels of response in both the low-dose (40% of patients) and high-dose (45%) groups than did placebo (24%).

In addition, significantly higher proportions of patients receiving active treatment achieved the secondary endpoints of ACR20, ACR50, and ACR70 at week 24. Greater improvements, including remission, also were noted with sirukumab than with placebo according to the Simplified Disease Activity Index, ACR/EULAR criteria, and the Health Assessment Questionnaire–Disability Index. Significantly greater improvements also were seen in the Functional Assessment of Chronic Illness Therapy–Fatigue score, the 36-Item Short Form Health Survey, the Work Limitations Questionnaire, the EuroQol-5 Dimension questionnaire, and the EuroQol Health State Visual Analogue Scale.

In both active-treatment groups, all of these improvements were noted as early as 2 weeks after starting treatment and persisted through follow-ups at week 24 and week 52.

Rates of adverse events, serious adverse events, and adverse events leading to treatment discontinuation were similar across the three study groups. Infections (primarily pneumonia) and infestations were the most frequent adverse events leading to discontinuation. No cases of tuberculosis or serious opportunistic infections were reported. Both doses of sirukumab induced declines in neutrophil, platelet, and white blood cell counts, as well as increases in alanine aminotransferase, aspartate aminotransferase, bilirubin, cholesterol, and triglycerides. Nine patients had hypersensitivity reactions, including one classified as serious dermatitis; all of these patients were taking the high dose of sirukumab. One of the five patient deaths that occurred, a fatal case of pneumonia, was considered possibly related to sirukumab.

This trial was funded by Janssen and GlaxoSmithKline, which also participated in the study design, data collection and analysis, and writing the results. Dr. Aletaha reported serving as a consultant for or receiving research support from AbbVie, Pfizer, Grünenthal, Merck, Medac, UCB, Mitsubishi/Tanabe, Janssen, and Roche. His associates reported ties to numerous industry sources.

It would be useful to compare sirukumab’s efficacy against that of two other inhibitors of the interleukin-6 pathway, tocilizumab (Actemra) and sarilumab.

Roy Fleischmann, MD, is with the University of Texas Southwestern Medical Center and Metroplex Clinical Research Center, both in Dallas. He reported receiving research grants and consulting fees from Genentech-Roche, Sanofi-Aventis, and GlaxoSmithKline. Dr. Fleischmann made these remarks in an editorial accompanying Dr. Aletaha and colleagues’ report (Lancet. 2017 Feb 15. doi: 10.1016/S0140-6736[17]30405-1).

FROM THE LANCET

Key clinical point: Sirukumab proved effective and safe for RA patients who failed to respond to or were intolerant of multiple previous therapies.

Key numerical finding: The primary efficacy endpoint – the proportion of patients achieving an ACR20 response at week 16 – was 40% for low-dose and 45% for high-dose sirukumab, compared with 24% for placebo.

Data source: A manufacturer-sponsored, international, randomized, double-blind, placebo-controlled, phase III trial involving 878 adults with refractory RA.