Assessment of IV Edaravone Use in the Management of Amyotrophic Lateral Sclerosis

Amyotrophic lateral sclerosis (ALS) is an incurable neurodegenerative disorder that causes progressive deterioration of motor neurons in the ventral horn of the spinal cord, resulting in loss of voluntary muscle movement.1 Eventually, typical daily tasks become difficult to perform, and as the disease progresses, the ability to eat and breathe is impaired.2 In 2015, the estimated prevalence of ALS in the United States was 5 cases per 100,000 people, with more than 16,000 total cases reported.3 In clinical practice, disease progression is routinely assessed with the Revised Amyotrophic Lateral Sclerosis Functional Rating Scale (ALSFRS-R), on which the typical decline is about 1 point per month.4

At present, ALS care focuses on symptom management, including prevention of weight loss; implementation of communication strategies; and management of pain, constipation, excess secretions, cramping, and breathing. Despite extensive research, few treatment options exist. Riluzole, an oral medication administered twice daily, has been on the market since 1995.5-7 Its efficacy was demonstrated in a trial showing a statistically significant improvement in 12-month survival compared with controls (74% vs 58%, respectively; P = .014).6 Since its approval, riluzole has become part of standard-of-care ALS management.

In 2017, the US Food and Drug Administration (FDA) approved edaravone, an IV medication found to slow the progression of ALS in some patients.8-12 Oxidative stress caused by free radicals is hypothesized to accelerate ALS progression by promoting motor neuron degeneration.13 Edaravone acts as a scavenger of free radicals and peroxynitrite and has been shown to eliminate lipid peroxides and hydroxyl radicals known to damage endothelial and neuronal cells.12

Given its mechanism of action, edaravone seemed a promising option for slowing ALS progression. A 2019 systematic review of 3 randomized studies (367 patients) found a statistically significant difference in the change in ALSFRS-R scores between patients treated with edaravone for 24 weeks and those treated with placebo (mean difference, 1.63; 95% CI, 0.26-3.00; P = .02).12 Secondary endpoints, including percent forced vital capacity (%FVC), grip strength, and pinch strength, showed no significant difference between IV edaravone and placebo.
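As a rough consistency check on the meta-analysis figures above, a 95% CI can be converted back into a standard error and a 2-sided P value. The sketch below assumes the estimate is approximately normally distributed with a symmetric CI; the function name `p_from_ci` is hypothetical, and the review itself may have derived its P value differently.

```python
from statistics import NormalDist

def p_from_ci(estimate: float, lower: float, upper: float, level: float = 0.95) -> float:
    """Two-sided P value implied by a symmetric CI (normal approximation)."""
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% CI
    se = (upper - lower) / (2 * z_crit)       # CI half-width divided by z_crit
    z = estimate / se
    return 2 * (1 - nd.cdf(abs(z)))

# Mean difference in ALSFRS-R change, edaravone vs placebo (values from the review above)
p = p_from_ci(1.63, 0.26, 3.00)
print(f"implied P = {p:.3f}")
```

Under this approximation the implied P value is about .02, in line with the reported value.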

A 2022 postmarketing study of 324 patients with ALS evaluated the safety and efficacy of long-term edaravone treatment. IV edaravone therapy for > 24 weeks was well tolerated but was not associated with any disease-modifying benefit: over a median of 13.9 months, the rate of ALSFRS-R decline was similar to that of patients not receiving edaravone (-0.91 vs -0.85 points/month; P = .37).13 A third ALS medication, sodium phenylbutyrate/taurursodiol, was approved in 2022; it was not available during our study period and is not included here.14,15

Studies have shown an increased incidence of ALS in the veteran population; veterans who served in the Gulf War were nearly twice as likely to develop ALS as those who did not serve in the Gulf.16 However, existing literature on the effectiveness of edaravone does not specifically examine this population. The objective of this study was to assess the effect of IV edaravone on ALS progression in veterans compared with veterans who received standard of care.

Methods

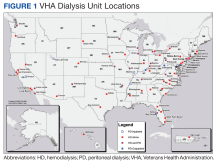

This study was conducted at a large, academic US Department of Veterans Affairs (VA) medical center. Patients with ALS are followed by a multidisciplinary clinic composed of a neurologist, pulmonologist, clinical pharmacist, social worker, speech therapist, physical therapist, occupational therapist, dietitian, clinical psychologist, wheelchair clinic representative, and benefits representative. Patients are typically seen for a half-day appointment about every 3 months. At each visit, disease progression is comprehensively reviewed through completion of the ALSFRS-R, physical examination, and pulmonary function testing. A speech therapist also assesses speech intelligibility stage (SIS), which is scored from 1 (no detectable speech disorder) to 5 (no functional speech). All patients followed in this clinic receive standard-of-care treatment, which includes discussion of treatment options, provided when appropriate, for the wide range of complications associated with the disease (eg, pain, cramping, constipation, excessive secretions, weight loss, dysphagia). As part of these discussions, riluzole is offered as a standard-of-care pharmacologic option.

Study Design

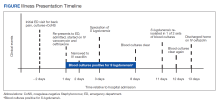

This retrospective case-control study used electronic health record data to compare ALS progression in patients receiving IV edaravone with that in patients receiving standard of care. The Indiana University/Purdue University, Indianapolis Institutional Review Board and the VA Research and Development Committee approved the study. The control cohort received standard of care. Patients in the case cohort received standard of care plus edaravone 60 mg infusions daily for an initial cycle of 14 days on treatment followed by 14 days off. Each subsequent cycle consisted of 10 treatment days within a 14-day period followed by 14 days off. The first 2 doses were administered in the outpatient infusion clinic to monitor for hypersensitivity reactions. Patients then had a peripherally inserted central catheter (PICC) placed and received doses on days 3 through 14 at home. A port was placed for subsequent cycles, which were also completed at home. The neurologist assessed the appropriateness of edaravone therapy at each follow-up appointment, and therapy was discontinued if warranted by disease progression or patient preference.
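The cycle structure described above can be laid out as a dosing calendar. This is a minimal sketch: the start date and the function name are hypothetical, and because the text does not say which 10 of the 14 days are treatment days in later cycles, the code assumes the first 10 consecutive days.

```python
from datetime import date, timedelta

DOSE_MG = 60  # daily edaravone dose described in the Study Design

def infusion_dates(start: date, n_cycles: int) -> list[date]:
    """Dosing dates for n_cycles 28-day edaravone cycles.

    Cycle 1: 14 consecutive dosing days, then 14 days off.
    Later cycles: 10 dosing days within a 14-day window, then 14 days off.
    ASSUMPTION: the 10 dosing days are taken as the first 10 days of the window.
    """
    dates: list[date] = []
    for cycle in range(n_cycles):
        cycle_start = start + timedelta(days=28 * cycle)  # 14-day window + 14 days off
        dosing_days = 14 if cycle == 0 else 10
        dates.extend(cycle_start + timedelta(days=i) for i in range(dosing_days))
    return dates

# Hypothetical start date; six 28-day cycles span 24 weeks
schedule = infusion_dates(date(2018, 1, 1), n_cycles=6)
# First 2 doses in the outpatient infusion clinic, the remainder at home
settings = ["clinic" if i < 2 else "home" for i in range(len(schedule))]

print(len(schedule), "doses;", len(schedule) * DOSE_MG, "mg total")
```

Six cycles give 14 + 5 × 10 = 64 infusions (3,840 mg), of which only the first 2 are given in clinic.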

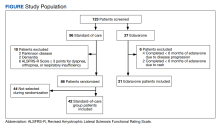

Study Population

Patients aged 18 to 75 years with diagnosed ALS were included. Patients with comorbidities that might influence evaluation of medication efficacy (eg, Parkinson disease, schizophrenia, significant dementia, other major medical morbidity) were excluded. Patients were also excluded if they were on continuous bilevel positive airway pressure and/or had a total score of ≤ 3 points on the ALSFRS-R items for dyspnea, orthopnea, or respiratory insufficiency. Because of our small sample size, patients who received < 6 months of treatment, the standard treatment duration established by clinical trials, were excluded as well.9,11,12

The standard-of-care cohort included patients enrolled in the multidisciplinary clinic from September 1, 2014, to August 31, 2017. These patients were compared in a 2:1 ratio with patients who received IV edaravone. The edaravone cohort included patients who initiated IV edaravone between September 1, 2017, and August 31, 2020. The control date range, prior to the approval of edaravone, was chosen to compare patients at similar stages of disease progression and to obtain the largest possible sample size.

Data Collection

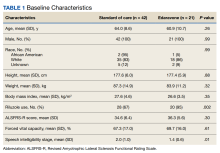

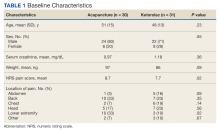

Data were obtained for eligible patients using the VA Computerized Patient Record System. Demographic data gathered for each patient included age, sex, weight, height, body mass index (BMI), race, and riluzole use.

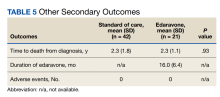

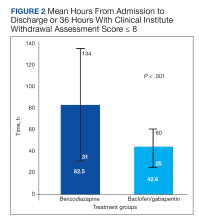

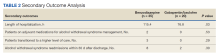

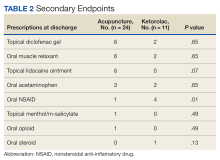

The primary endpoint was the change in ALSFRS-R score after 6 months of IV edaravone compared with standard-of-care ALS management. Secondary outcomes included change in ALSFRS-R score at 3, 12, 18, and 24 months after therapy initiation; change in %FVC and SIS at 3, 6, 12, 18, and 24 months after therapy initiation; duration of edaravone therapy completed (months); time to death (months); and adverse events.
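The ALSFRS-R has 12 items, each scored 0 to 4, for a total of 0 to 48, with lower scores indicating worse function. The sketch below shows how change from baseline at each time point, and optionally a least-squares rate in points per month (the unit used in the postmarketing study cited earlier), might be computed for one patient. The scores and function names are hypothetical; the article does not describe its calculation beyond comparing changes in score.

```python
# Hypothetical ALSFRS-R totals for one patient, keyed by months since therapy initiation
scores = {0: 38, 3: 35, 6: 32, 12: 27}

def change_from_baseline(scores: dict[int, int]) -> dict[int, int]:
    """Change in ALSFRS-R total at each follow-up (negative = decline)."""
    if not all(0 <= s <= 48 for s in scores.values()):
        raise ValueError("ALSFRS-R totals must lie between 0 and 48")
    baseline = scores[0]
    return {month: s - baseline for month, s in scores.items() if month != 0}

def decline_rate(scores: dict[int, int]) -> float:
    """Least-squares slope of ALSFRS-R total vs time, in points per month."""
    months = list(scores)
    values = list(scores.values())
    n = len(months)
    mean_m = sum(months) / n
    mean_v = sum(values) / n
    sxy = sum((m - mean_m) * (v - mean_v) for m, v in zip(months, values))
    sxx = sum((m - mean_m) ** 2 for m in months)
    return sxy / sxx

print(change_from_baseline(scores))    # {3: -3, 6: -6, 12: -11}
print(round(decline_rate(scores), 2))  # -0.91
```

A slope uses every visit rather than only the endpoint, which can make it less sensitive to a single noisy assessment.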

Statistical Analysis

Patient characteristics were compared between the edaravone and control groups using χ2 tests for categorical variables and 2-sample t tests for continuous variables. Study outcomes (ALSFRS-R scores, %FVC, SIS) were compared between groups at each time point using 2-sample t tests. Adverse events and adverse drug reactions were compared between groups using χ2 tests. Statistical significance was set at P < .05.
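The tests named above can be reproduced outside Excel. The standard-library sketch below assumes the pooled-variance (Student) form of the 2-sample t test, which corresponds to Excel's `T.TEST` with type 2, and a Pearson χ2 test without continuity correction for 2 × 2 tables; the article does not state which variants were used. The t-test P value is obtained from the regularized incomplete beta function (continued-fraction evaluation), and the 1-degree-of-freedom χ2 P value from `math.erfc`. All data and helper names are hypothetical.

```python
import math
from statistics import mean, variance

def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 1e-12) -> float:
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))  # even step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))  # odd step
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b

def student_t_test(x: list[float], y: list[float]) -> tuple[float, int, float]:
    """Pooled-variance 2-sample t test: returns (t, df, two-sided P)."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    p = reg_inc_beta(df / 2, 0.5, df / (df + t * t))  # P(|T| >= |t|)
    return t, df, p

def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]: (chi2, P)."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p

# Hypothetical change-in-ALSFRS-R values for two small groups
t, df, p = student_t_test([-6, -4, -7, -5, -3], [-5, -6, -8, -4, -7, -6])
print(f"t = {t:.2f}, df = {df}, P = {p:.3f}")
# Hypothetical 2 x 2 table: riluzole use (yes/no) by group
chi2, p = chi_square_2x2(15, 6, 20, 22)
print(f"chi2 = {chi2:.2f}, P = {p:.3f}")
```

A Welch (unequal-variance) test would change only the standard error and degrees of freedom; which form is appropriate depends on how similar the 2 groups' variances are.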

We estimated that a sample size of 21 patients in the edaravone (case) group and 42 in the standard-of-care (control) group would provide 80% power to detect a difference of 6.5 points between groups in the change in ALSFRS-R score. This calculation was based on a 2-sample t test, assuming a 2-sided 5% significance level and a within-group SD of 8.5 points.9 Statistical analysis was conducted using Microsoft Excel.
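The reported sample size can be roughly reproduced with the normal-approximation formula for comparing 2 means with unequal allocation: n_case = (1 + 1/k) × (z_(1-α/2) + z_(1-β))² × SD² / Δ², where k is the control-to-case ratio. This is a sketch, not the authors' calculation: the text describes a t-test-based calculation, which can give slightly larger numbers, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_two_means(diff: float, sd: float, alpha: float = 0.05,
                          power: float = 0.80, ratio: float = 2.0) -> tuple[int, int]:
    """Normal-approximation sample size for a 2-sided, 2-sample comparison of means.

    ratio = n_control / n_case (2.0 for the 2:1 design). Returns (n_case, n_control).
    """
    z = NormalDist().inv_cdf
    z_total = z(1 - alpha / 2) + z(power)  # about 1.96 + 0.84
    n_case = math.ceil((1 + 1 / ratio) * (z_total * sd / diff) ** 2)
    return n_case, math.ceil(ratio * n_case)

print(sample_size_two_means(diff=6.5, sd=8.5))  # (21, 42)
```

The unrounded case-group requirement is about 20.1, so rounding up gives 21 cases and, at 2:1, 42 controls.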

Results

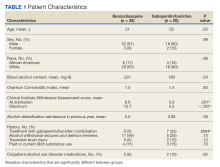

Of the 96 patients considered for the standard-of-care group, 10 met exclusion criteria; from the remaining 86, 42 were randomly selected. A total of 27 patients seen in the multidisciplinary ALS clinic between September 1, 2017, and August 31, 2020, received at least 1 dose of IV edaravone. Of these, 6 were excluded for not completing 6 months of edaravone, leaving 21 patients in the edaravone group. Two of the 6 excluded patients developed a rash, which resolved within 1 week of discontinuing edaravone; the other 4 discontinued edaravone before 6 months because of disease progression.
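The Results do not describe how the random selection was performed. One reproducible approach, sketched here with Python's `random.sample` (sampling without replacement), is to record a fixed seed so the draw can be audited later. The patient identifiers and seed are hypothetical; only the counts come from the text.

```python
import random

# Counts reported in the Results
control_screened, control_excluded = 96, 10
edaravone_started, edaravone_excluded = 27, 6

control_eligible = control_screened - control_excluded        # 86
edaravone_analyzed = edaravone_started - edaravone_excluded   # 21

# Hypothetical identifiers standing in for the eligible control patients
eligible_ids = [f"pt{i:03d}" for i in range(control_eligible)]

rng = random.Random(2020)  # hypothetical fixed seed so the draw is reproducible
control_group = rng.sample(eligible_ids, k=2 * edaravone_analyzed)  # 2:1 ratio, no replacement

print(control_eligible, edaravone_analyzed, len(control_group))  # 86 21 42
```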

Baseline Characteristics

Efficacy

Discussion

This 24-month retrospective case-control study assessed the efficacy and safety of IV edaravone for the management of ALS. Although the landmark edaravone study showed slowed progression of ALS at 6 and 12 months, the effectiveness of edaravone outside the clinical trial setting has been less compelling.9-11,13 A later study showed no difference in change in ALSFRS-R score at 6 months compared with placebo.7 Similarly, our study found no statistically significant difference in change in ALSFRS-R score at 6 months.

Our study was unique in that it evaluated a veteran population. The link between military service and ALS is largely unknown, although studies have shown a higher incidence of ALS in people with a military history than in the general population.16-18 Our study was also unique in its single-center design, which allowed outcome assessments, including ALSFRS-R scores, SIS, and %FVC measurements, to be conducted by the same practitioner to limit variability. Unfortunately, our sample size left the analysis underpowered at 12, 18, and 24 months. In addition, chart review yielded little SIS and %FVC data at 24 months. As ALS approaches end stage, SIS and %FVC measurements can become difficult and burdensome for the patient, and the multidisciplinary team may decide not to obtain them. As a result, changes in SIS and %FVC at 24 months could not be reported for the edaravone group. Given the cost and administration burden of edaravone, disease progression should be assessed regularly to determine the benefit and appropriateness of continued therapy. An oral formulation of edaravone was approved in 2022, shortly after data collection for this study was completed.19,20 Although our study did not analyze oral edaravone, the oral formulation would reduce the administration burden of treatment, and we hypothesize that patients will show greater interest in oral than in IV edaravone. The efficacy and safety of treatment beyond 24 months have not been evaluated. Future studies should continue to evaluate edaravone use in a larger veteran population.

Limitations

One limitation, noted earlier, was sample size. Although this study was adequately powered at the 6-month mark, it was limited by the number of patients who received more than 6 months of edaravone (n = 21). As a result, comparisons between treatment groups were underpowered at 12, 18, and 24 months. Moreover, our study had 80% power to detect a 6.5-point difference between groups in the change in ALSFRS-R score, whereas previous studies reported a statistically significant between-group difference of 2.49 points,8 so a true effect of that smaller size may have gone undetected. Future studies should evaluate a larger sample of patients prescribed edaravone.
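To illustrate the power gap described above, the approximate power of the achieved design (21 vs 42 patients, within-group SD of 8.5 points, 2-sided α = .05) can be computed for both differences with a normal approximation. This is a hypothetical sketch under those assumptions, not an analysis from the study; under it, power is roughly 80% for a 6.5-point difference but only about 20% for a 2.49-point difference.

```python
import math
from statistics import NormalDist

def approx_power(diff: float, sd: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate power of a 2-sided, 2-sample z test for a true mean difference `diff`."""
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    z_effect = abs(diff) / (sd * math.sqrt(1 / n1 + 1 / n2))
    # Probability that the test statistic falls in either rejection region
    return nd.cdf(z_effect - z_crit) + nd.cdf(-z_effect - z_crit)

for diff in (6.5, 2.49):
    power = approx_power(diff, sd=8.5, n1=21, n2=42)
    print(f"difference {diff}: power {power:.2f}")
```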

Another limitation was that data for the edaravone and standard-of-care groups were gathered from different time periods. Two time frames were used to increase sample size by collecting data over a longer period and to account for patients who may have qualified for IV edaravone but could not receive it because it was not yet on the market. There were no known changes to the standard of care between the time periods that would affect results. As noted previously, fewer patients in the standard-of-care group than in the edaravone group were taking riluzole, which may have confounded our results. We concluded that patients opting for edaravone were more likely to have tried riluzole, taken by mouth twice daily, before starting edaravone, a once-daily IV infusion.

Conclusions

No significant difference in the rate of ALS progression was found at 6 months between patients who received IV edaravone and those who received standard of care. Likewise, no difference was noted in other objective measures of disease progression, including %FVC, SIS, and time to death. Therefore, the decision to initiate and continue edaravone therapy should be individualized according to the prescriber’s clinical judgment and the patient’s goals. Edaravone therapy should be discontinued when disease progression occurs or when medication administration becomes a burden.

Acknowledgments

This material is the result of work supported with resources and the use of facilities at Veteran Health Indiana.

1. Kiernan MC, Vucic S, Cheah BC, et al. Amyotrophic lateral sclerosis. Lancet. 2011;377(9769):942-955. doi:10.1016/S0140-6736(10)61156-7

2. Rowland LP, Shneider NA. Amyotrophic lateral sclerosis. N Engl J Med. 2001;344(22):1688-1700. doi:10.1056/NEJM200105313442207

3. Mehta P, Kaye W, Raymond J, et al. Prevalence of amyotrophic lateral sclerosis–United States, 2015. MMWR Morb Mortal Wkly Rep. 2018;67(46):1285-1289. doi:10.15585/mmwr.mm6746a1

4. Castrillo-Viguera C, Grasso DL, Simpson E, Shefner J, Cudkowicz ME. Clinical significance in the change of decline in ALSFRS-R. Amyotroph Lateral Scler. 2010;11(1-2):178-180. doi:10.3109/17482960903093710

5. Rilutek. Package insert. Covis Pharmaceuticals; 1995.

6. Bensimon G, Lacomblez L, Meininger V. A controlled trial of riluzole in amyotrophic lateral sclerosis. ALS/Riluzole Study Group. N Engl J Med. 1994;330(9):585-591. doi:10.1056/NEJM199403033300901

7. Lacomblez L, Bensimon G, Leigh PN, Guillet P, Meininger V. Dose-ranging study of riluzole in amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis/Riluzole Study Group II. Lancet. 1996;347(9013):1425-1431. doi:10.1016/S0140-6736(96)91680-3

8. Radicava. Package insert. MT Pharma America Inc; 2017.

9. Abe K, Itoyama Y, Sobue G, et al. Confirmatory double-blind, parallel-group, placebo-controlled study of efficacy and safety of edaravone (MCI-186) in amyotrophic lateral sclerosis patients. Amyotroph Lateral Scler Frontotemporal Degener. 2014;15(7-8):610-617. doi:10.3109/21678421.2014.959024

10. Writing Group; Edaravone (MCI-186) ALS 19 Study Group. Safety and efficacy of edaravone in well defined patients with amyotrophic lateral sclerosis: a randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2017;16(7):505-512. doi:10.1016/S1474-4422(17)30115-1

11. Writing Group; Edaravone (MCI-186) ALS 19 Study Group. Exploratory double-blind, parallel-group, placebo-controlled study of edaravone (MCI-186) in amyotrophic lateral sclerosis (Japan ALS severity classification: Grade 3, requiring assistance for eating, excretion or ambulation). Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(suppl 1):40-48. doi:10.1080/21678421.2017.1361441

12. Luo L, Song Z, Li X, et al. Efficacy and safety of edaravone in treatment of amyotrophic lateral sclerosis–a systematic review and meta-analysis. Neurol Sci. 2019;40(2):235-241. doi:10.1007/s10072-018-3653-2

13. Witzel S, Maier A, Steinbach R, et al; German Motor Neuron Disease Network (MND-NET). Safety and effectiveness of long-term intravenous administration of edaravone for treatment of patients with amyotrophic lateral sclerosis. JAMA Neurol. 2022;79(2):121-130. doi:10.1001/jamaneurol.2021.4893

14. Paganoni S, Macklin EA, Hendrix S, et al. Trial of sodium phenylbutyrate-taurursodiol for amyotrophic lateral sclerosis. N Engl J Med. 2020;383(10):919-930. doi:10.1056/NEJMoa1916945

15. Relyvrio. Package insert. Amylyx Pharmaceuticals Inc; 2022.

16. McKay KA, Smith KA, Smertinaite L, Fang F, Ingre C, Taube F. Military service and related risk factors for amyotrophic lateral sclerosis. Acta Neurol Scand. 2021;143(1):39-50. doi:10.1111/ane.13345

17. Watanabe K, Tanaka M, Yuki S, Hirai M, Yamamoto Y. How is edaravone effective against acute ischemic stroke and amyotrophic lateral sclerosis? J Clin Biochem Nutr. 2018;62(1):20-38. doi:10.3164/jcbn.17-62

18. Horner RD, Kamins KG, Feussner JR, et al. Occurrence of amyotrophic lateral sclerosis among Gulf War veterans. Neurology. 2003;61(6):742-749. doi:10.1212/01.wnl.0000069922.32557.ca

19. Radicava ORS. Package insert. Mitsubishi Tanabe Pharma America Inc; 2022.

20. Shimizu H, Nishimura Y, Shiide Y, et al. Bioequivalence study of oral suspension and intravenous formulation of edaravone in healthy adult subjects. Clin Pharmacol Drug Dev. 2021;10(10):1188-1197. doi:10.1002/cpdd.952

Amyotrophic lateral sclerosis (ALS) is an incurable neurodegenerative disorder that results in progressive deterioration of motor neurons in the ventral horn of the spinal cord, which results in loss of voluntary muscle movements.1 Eventually, typical daily tasks become difficult to perform, and as the disease progresses, the ability to eat and breathe is impaired.2 Reports from 2015 show the annual incidence of ALS is 5 cases per 100,000 people, with the total number of cases reported at more than 16,000 in the United States.3 In clinical practice, disease progression is routinely assessed by the Revised Amyotrophic Lateral Sclerosis Functional Rating Scale (ALSFRS-R). Typical decline is 1 point per month.4

Unfortunately, at this time, ALS care focuses on symptom management, including prevention of weight loss; implementation of communication strategies; and management of pain, constipation, excess secretions, cramping, and breathing. Despite copious research into treatment options, few exist. Riluzole is an oral medication administered twice daily and has been on the market since 1995.5-7 Efficacy was demonstrated in a study showing statistically significant survival at 12 months compared with controls (74% vs 58%, respectively; P = .014).6 Since its approval, riluzole has become part of standard-of-care ALS management.

In 2017, the US Food and Drug Administration (FDA) approved edaravone, an IV medication that was found to slow the progression of ALS in some patients.8-12 Oxidative stress caused by free radicals is hypothesized to increase the progression of ALS by motor neuron degradation.13 Edaravone works as a free radical and peroxynitrite scavenger and has been shown to eliminate lipid peroxides and hydroxyl radicals known to damage endothelial and neuronal cells.12

Given the mechanism of action of edaravone, it seemed to be a promising option to slow the progression of ALS. A 2019 systematic review analyzed 3 randomized studies with 367 patients and found a statistically significant difference in change in ALSFRS-R scores between patients treated with edaravone for 24 weeks compared with patients treated with the placebo (mean difference, 1.63; 95% CI, 0.26-3.00; P = .02).12 Secondary endpoints evaluated included percent forced vital capacity (%FVC), grip strength, and pinch strength: All showing no significant difference when comparing IV edaravone with placebo.

A 2022 postmarketing study of 324 patients with ALS evaluated the safety and efficacy of long-term edaravone treatment. IV edaravone therapy for > 24 weeks was well tolerated, although it was not associated with any disease-modifying benefit when comparing ALSFRS-R scores with patients not receiving edaravone over a median 13.9 months (ALSFRS-R points/month, -0.91 vs -0.85; P = .37).13 A third ALS treatment medication, sodium phenylbutyrate/taurursodiol was approved in 2022 but not available during our study period and not included here.14,15

Studies have shown an increased incidence of ALS in the veteran population. Veterans serving in the Gulf War were nearly twice as likely to develop ALS as those not serving in the Gulf.16 However, existing literature regarding the effectiveness of edaravone does not specifically examine the effect on this unique population. The objective of this study was to assess the effect of IV edaravone on ALS progression in veterans compared with veterans who received standard of care.

Methods

This study was conducted at a large, academic US Department of Veterans Affairs (VA) medical center. Patients with ALS are followed by a multidisciplinary clinic composed of a neurologist, pulmonologist, clinical pharmacist, social worker, speech therapist, physical therapist, occupational therapist, dietician, clinical psychologist, wheelchair clinic representative, and benefits representative. Patients are typically seen for a half-day appointment about every 3 months. During these visits, a comprehensive review of disease progression is performed. This review entails completion of the ALSFRS-R, physical examination, and pulmonary function testing. Speech intelligibility stage (SIS) is assessed by a speech therapist as well. SIS is scored from 1 (no detectable speech disorder) to 5 (no functional speech). All patients followed in this multidisciplinary ALS clinic receive standard-of-care treatment. This includes the discussion of treatment options that if appropriate are provided to help manage a wide range of complications associated with this disease (eg, pain, cramping, constipation, excessive secretions, weight loss, dysphagia). As a part of these personal discussions, treatment with riluzole is also offered as a standard-of-care pharmacologic option.

Study Design

This retrospective case-control study was conducted using electronic health record data to compare ALS progression in patients on IV edaravone therapy with standard of care. The Indiana University/Purdue University, Indianapolis Institutional Review Board and the VA Research and Development Committee approved the study. The control cohort received the standard of care. Patients in the case cohort received standard of care and edaravone 60 mg infusions daily for an initial cycle of 14 days on treatment, followed by 14 days off. All subsequent cycles were 10 of 14 days on treatment followed by 14 days off. The initial 2 doses were administered in the outpatient infusion clinic to monitor for a hypersensitivity reaction. Patients then had a peripherally inserted central catheter line placed and received doses on days 3 through 14 at home. A port was placed for subsequent cycles, which were also completed at home. Appropriateness of edaravone therapy was assessed by the neurologist at each follow-up appointment. Therapy was then discontinued if warranted based on disease progression or patient preference.

Study Population

Patients included were aged 18 to 75 years with diagnosed ALS. Patients with complications that might influence evaluation of medication efficacy (eg, Parkinson disease, schizophrenia, significant dementia, other major medical morbidity) were excluded. Patients were also excluded if they were on continuous bilevel positive airway pressure and/or had a total score of ≤ 3 points on ALSFRS-R items for dyspnea, orthopnea, or respiratory insufficiency. Due to our small sample size, patients were excluded if treatment was < 6 months, which is the gold standard of therapy duration established by clinical trials.9,11,12

The standard-of-care cohort included patients enrolled in the multidisciplinary clinic September 1, 2014 to August 31, 2017. These patients were compared in a 2:1 ratio with patients who received IV edaravone. The edaravone cohort included patients who initiated treatment with IV edaravone between September 1, 2017, and August 31, 2020. This date range prior to the approval of edaravone was chosen to compare patients at similar stages of disease progression and to have the largest sample size possible.

Data Collection

Data were obtained for eligible patients using the VA Computerized Patient Record System. Demographic data gathered for each patient included age, sex, weight, height, body mass index (BMI), race, and riluzole use.

The primary endpoint was the change in ALSFRS-R score after 6 months of IV edaravone compared with standard-of-care ALS management. Secondary outcomes included change in ALSFRS-R scores 3, 12, 18, and 24 months after therapy initiation, change in %FVC and SIS 3, 6, 12, 18, and 24 months after therapy initiation, duration of edaravone completed (months), time to death (months), and adverse events.

Statistical Analysis

Comparisons between the edaravone and control groups for differences in patient characteristics were made using χ2 and 2-sample t tests for categorical and continuous variables, respectively. Comparisons between the 2 groups for differences in study outcomes (ALSFRS-R scores, %FVC, SIS) at each time point were evaluated using 2-sample t tests. Adverse events and adverse drug reactions were compared between groups using χ2 tests. Statistical significance was set at 0.05.

We estimated that a sample size of 21 subjects in the edaravone (case) group and 42 in the standard-of-care (control) group would be needed to achieve 80% power to detect a difference of 6.5 between the 2 groups for the change in ALSFRS-R scores. This 80% power was calculated based on a 2-sample t test, and assuming a 2-sided 5% significance level and a within-group SD of 8.5.9 Statistical analysis was conducted using Microsoft Excel.

Results

Of the 96 patients, 10 met exclusion criteria. From the remaining 86, 42 were randomly selected for the standard-of-care group. A total of 27 patients seen in multidisciplinary ALS clinic between September 1, 2017, and August 31, 2020, received at least 1 dose of IV edaravone. Of the 27 edaravone patients, 6 were excluded for not completing a total of 6 months of edaravone. Two of the 6 excluded developed a rash, which resolved within 1 week after discontinuing edaravone. The other 4 discontinued edaravone before 6 months because of disease progression.

Baseline Characteristics

Efficacy

Discussion

This 24-month, case-control retrospective study assessed efficacy and safety of IV edaravone for the management of ALS. Although the landmark edaravone study showed slowed progression of ALS at 6 and 12 months, the effectiveness of edaravone outside the clinical trial setting has been less compelling.9-11,13 A later study showed no difference in change in ALSFRS-R score at 6 months compared with that of the placebo group.7 In our study, no statistically significant difference was found for change in ALSFRS-R scores at 6 months.

Our study was unique given we evaluated a veteran population. The link between the military and ALS is largely unknown, although studies have shown increased incidence of ALS in people with a military history compared with that of the general population.16-18 Our study was also unique because it was single-centered in design and allowed for outcome assessments, including ALSFRS-R scores, SIS, and %FVC measurements, to all be conducted by the same practitioner to limit variability. Unfortunately, our sample size resulted in a cohort that was underpowered at 12, 18, and 24 months. In addition, there was a lack of data on chart review for SIS and %FVC measurements at 24 months. As ALS progresses toward end stage, SIS and %FVC measurements can become difficult and burdensome on the patient to obtain, and the ALS multidisciplinary team may decide not to gather these data points as ALS progresses. As a result, change in SIS and %FVC measurements were unable to be reported due to lack of gathering this information at the 24-month mark in the edaravone group. Due to the cost and administration burden associated with edaravone, it is important that assessment of disease progression is performed regularly to assess benefit and appropriateness of continued therapy. The oral formulation of edaravone was approved in 2022, shortly after the completion of data collection for this study.19,20 Although our study did not analyze oral edaravone, the administration burden of treatment would be reduced with the oral formulation, and we hypothesize there will be increased patient interest in ALS management with oral vs IV edaravone. Evaluation of long-term treatment for efficacy and safety beyond 24 months has not been evaluated. Future studies should continue to evaluate edaravone use in a larger veteran population.

Limitations

One limitation for our study alluded to earlier in the discussion was sample size. Although this study met power at the 6-month mark, it was limited by the number of patients who received more than 6 months of edaravone (n = 21). As a result, statistical analyses between treatment groups were underpowered at 12, 18, and 24 months. Our study had 80% power to detect a difference of 6.5 between the groups for the change in ALSFRS-R scores. Previous studies detected a statistically significant difference in ALSFRS-R scores, with a difference in ALSFRS-R scores of 2.49 between groups.8 Future studies should evaluate a larger sample size of patients who are prescribed edaravone.

Another limitation was that the edaravone and standard-of-care group data were gathered from different time periods. Two different time frames were selected to increase sample size by gathering data over a longer period and to account for patients who may have qualified for IV edaravone but could not receive it as it was not yet available on the market. There were no known changes to the standard of care between the time periods that would affect results. As noted previously, the standard-of-care group had fewer patients taking riluzole compared with the edaravone group, which may have confounded our results. We concluded patients opting for edaravone were more likely to trial riluzole, taken by mouth twice daily, before starting edaravone, a once-daily IV infusion.

Conclusions

No difference in the rate of ALS progression was noted between patients who received IV edaravone vs standard of care at 6 months. In addition, no difference was noted in other objective measures of disease progression, including %FVC, SIS, and time to death. As a result, the decision to initiate and continue edaravone therapy should be made on an individualized basis according to a prescriber’s clinical judgment and a patient’s goals. Edaravone therapy should be discontinued when disease progression occurs or when medication administration becomes a burden.

Acknowledgments

This material is the result of work supported with resources and the use of facilities at Veteran Health Indiana.

1. Kiernan MC, Vucic S, Cheah BC, et al. Amyotrophic lateral sclerosis. Lancet. 2011;377(9769):942-955. doi:10.1016/S0140-6736(10)61156-7

2. Rowland LP, Shneider NA. Amyotrophic lateral sclerosis. N Engl J Med. 2001;344(22):1688-1700. doi:10.1056/NEJM200105313442207

3. Mehta P, Kaye W, Raymond J, et al. Prevalence of amyotrophic lateral sclerosis–United States, 2015. MMWR Morb Mortal Wkly Rep. 2018;67(46):1285-1289. doi:10.15585/mmwr.mm6746a1

4. Castrillo-Viguera C, Grasso DL, Simpson E, Shefner J, Cudkowicz ME. Clinical significance in the change of decline in ALSFRS-R. Amyotroph Lateral Scler. 2010;11(1-2):178-180. doi:10.3109/17482960903093710

5. Rilutek. Package insert. Covis Pharmaceuticals; 1995.

6. Bensimon G, Lacomblez L, Meininger V. A controlled trial of riluzole in amyotrophic lateral sclerosis. ALS/Riluzole Study Group. N Engl J Med. 1994;330(9):585-591. doi:10.1056/NEJM199403033300901

7. Lacomblez L, Bensimon G, Leigh PN, Guillet P, Meininger V. Dose-ranging study of riluzole in amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis/Riluzole Study Group II. Lancet. 1996;347(9013):1425-1431. doi:10.1016/s0140-6736(96)91680-3

8. Radicava. Package insert. MT Pharma America Inc; 2017.

9. Abe K, Itoyama Y, Sobue G, et al. Confirmatory double-blind, parallel-group, placebo-controlled study of efficacy and safety of edaravone (MCI-186) in amyotrophic lateral sclerosis patients. Amyotroph Lateral Scler Frontotemporal Degener. 2014;15(7-8):610-617. doi:10.3109/21678421.2014.959024

10. Writing Group; Edaravone (MCI-186) ALS 19 Study Group. Safety and efficacy of edaravone in well defined patients with amyotrophic lateral sclerosis: a randomised, double-blind, placebo-controlled trial. Lancet Neurol. 2017;16(7):505-512. doi:10.1016/S1474-4422(17)30115-1

11. Writing Group; Edaravone (MCI-186) ALS 19 Study Group. Exploratory double-blind, parallel-group, placebo-controlled study of edaravone (MCI-186) in amyotrophic lateral sclerosis (Japan ALS severity classification: Grade 3, requiring assistance for eating, excretion or ambulation). Amyotroph Lateral Scler Frontotemporal Degener. 2017;18(suppl 1):40-48. doi:10.1080/21678421.2017.1361441

12. Luo L, Song Z, Li X, et al. Efficacy and safety of edaravone in treatment of amyotrophic lateral sclerosis–a systematic review and meta-analysis. Neurol Sci. 2019;40(2):235-241. doi:10.1007/s10072-018-3653-2

13. Witzel S, Maier A, Steinbach R, et al; German Motor Neuron Disease Network (MND-NET). Safety and effectiveness of long-term intravenous administration of edaravone for treatment of patients with amyotrophic lateral sclerosis. JAMA Neurol. 2022;79(2):121-130. doi:10.1001/jamaneurol.2021.4893

14. Paganoni S, Macklin EA, Hendrix S, et al. Trial of sodium phenylbutyrate-taurursodiol for amyotrophic lateral sclerosis. N Engl J Med. 2020;383(10):919-930. doi:10.1056/NEJMoa1916945

15. Relyvrio. Package insert. Amylyx Pharmaceuticals Inc; 2022.

16. McKay KA, Smith KA, Smertinaite L, Fang F, Ingre C, Taube F. Military service and related risk factors for amyotrophic lateral sclerosis. Acta Neurol Scand. 2021;143(1):39-50. doi:10.1111/ane.13345

17. Watanabe K, Tanaka M, Yuki S, Hirai M, Yamamoto Y. How is edaravone effective against acute ischemic stroke and amyotrophic lateral sclerosis? J Clin Biochem Nutr. 2018;62(1):20-38. doi:10.3164/jcbn.17-62

18. Horner RD, Kamins KG, Feussner JR, et al. Occurrence of amyotrophic lateral sclerosis among Gulf War veterans. Neurology. 2003;61(6):742-749. doi:10.1212/01.wnl.0000069922.32557.ca

19. Radicava ORS. Package insert. Mitsubishi Tanabe Pharma America Inc; 2022.

20. Shimizu H, Nishimura Y, Shiide Y, et al. Bioequivalence study of oral suspension and intravenous formulation of edaravone in healthy adult subjects. Clin Pharmacol Drug Dev. 2021;10(10):1188-1197. doi:10.1002/cpdd.952

Acute Painful Horner Syndrome as the First Presenting Sign of Carotid Artery Dissection

Horner syndrome is a rare condition that has no sex or race predilection. It is characterized by the clinical triad of miosis, anhidrosis, and a small, unilateral ptosis. Prompt diagnosis and determination of the etiology of Horner syndrome are of utmost importance, as the condition can result from many life-threatening systemic conditions. Horner syndrome is often asymptomatic but has distinct, easily identified characteristics that can be seen on ophthalmic examination. This report describes a patient who presented with Horner syndrome resulting from an internal carotid artery dissection.

Case Presentation

A 61-year-old woman presented with periorbital pain that had begun 3 days earlier. She rated the pain as 7 of 10 and reported that it had been worsening. She localized it around and behind the right eye. She reported new-onset right-sided headaches over the past week, with associated intermittent blurred vision in the right eye. She had a history of mobility issues and had fallen backward about 1 week before, hitting the back of her head on the floor without direct trauma to the eye. She reported light sensitivity, syncope, and dizziness. She also reported a recent history of transient ischemic attacks (TIAs) of unknown etiology in the months preceding her examination. She reported no jaw claudication, scalp tenderness, or neck or shoulder pain. She was unaware of any changes in her perspiration pattern on the right side of her face but mentioned that she had noticed her right upper eyelid drooping while looking in the mirror.

This patient had a routine eye examination 2 months before. It was remarkable for stable adult-onset vitelliform dystrophy not involving the fovea in the left eye, and for nuclear sclerotic cataracts and mild refractive error in both eyes. No iris heterochromia was noted, and her pupils were equal, round, and reactive to light. Her medical history was remarkable for chest pain, obesity, bipolar disorder, vertigo, transient cerebral ischemia, hypertension, hypercholesterolemia, alcohol use disorder, cocaine use disorder, and asthma. A carotid ultrasound was performed 1 month before symptom onset because of her history of TIAs. It showed no hemodynamically significant stenosis (> 50%) of either carotid artery. Her medications included oxybutynin chloride, amlodipine, acetaminophen, sertraline hydrochloride, lidocaine, albuterol, risperidone, hydroxyzine hydrochloride, lisinopril, omeprazole, once-daily low-dose aspirin, atorvastatin, and calcium.

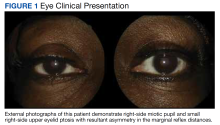

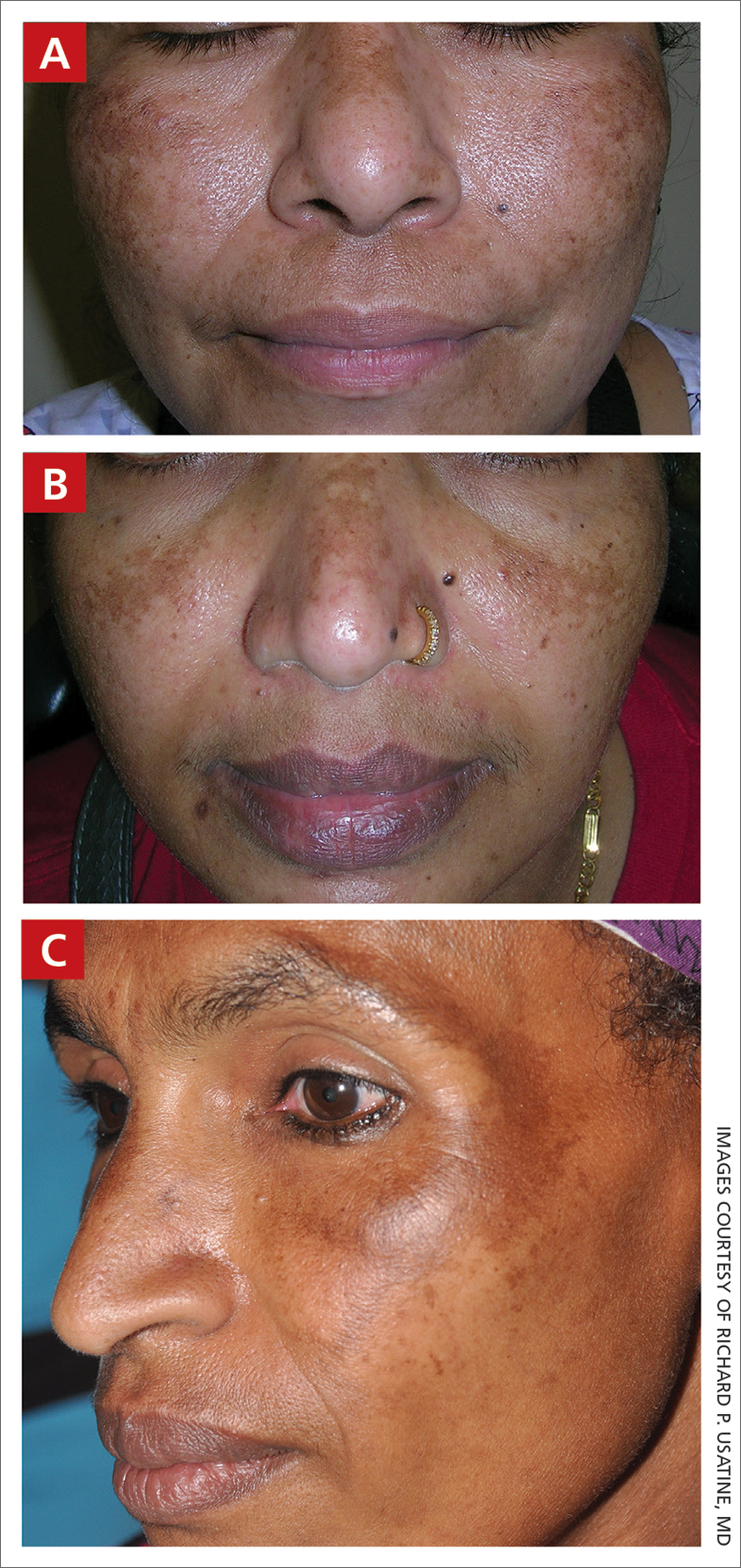

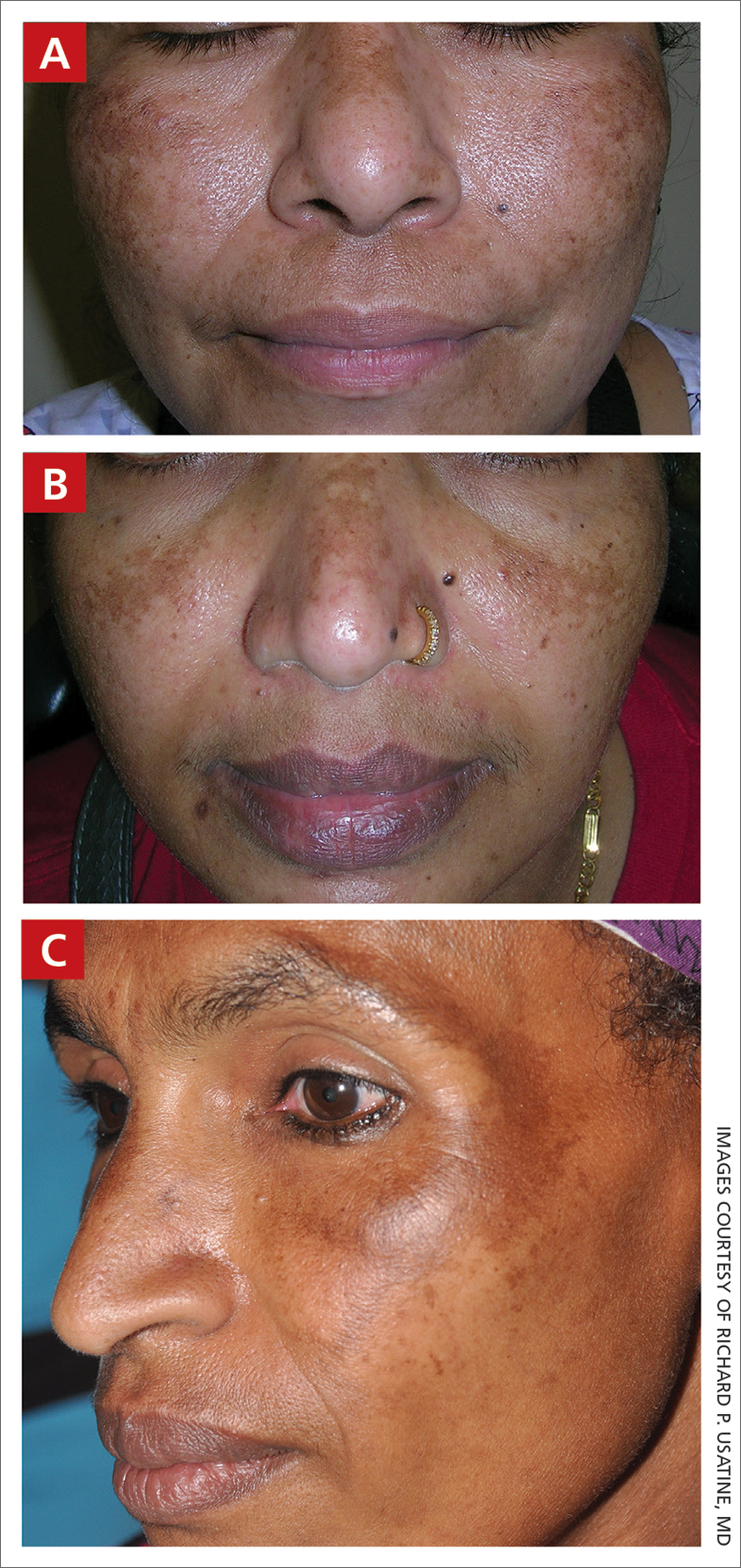

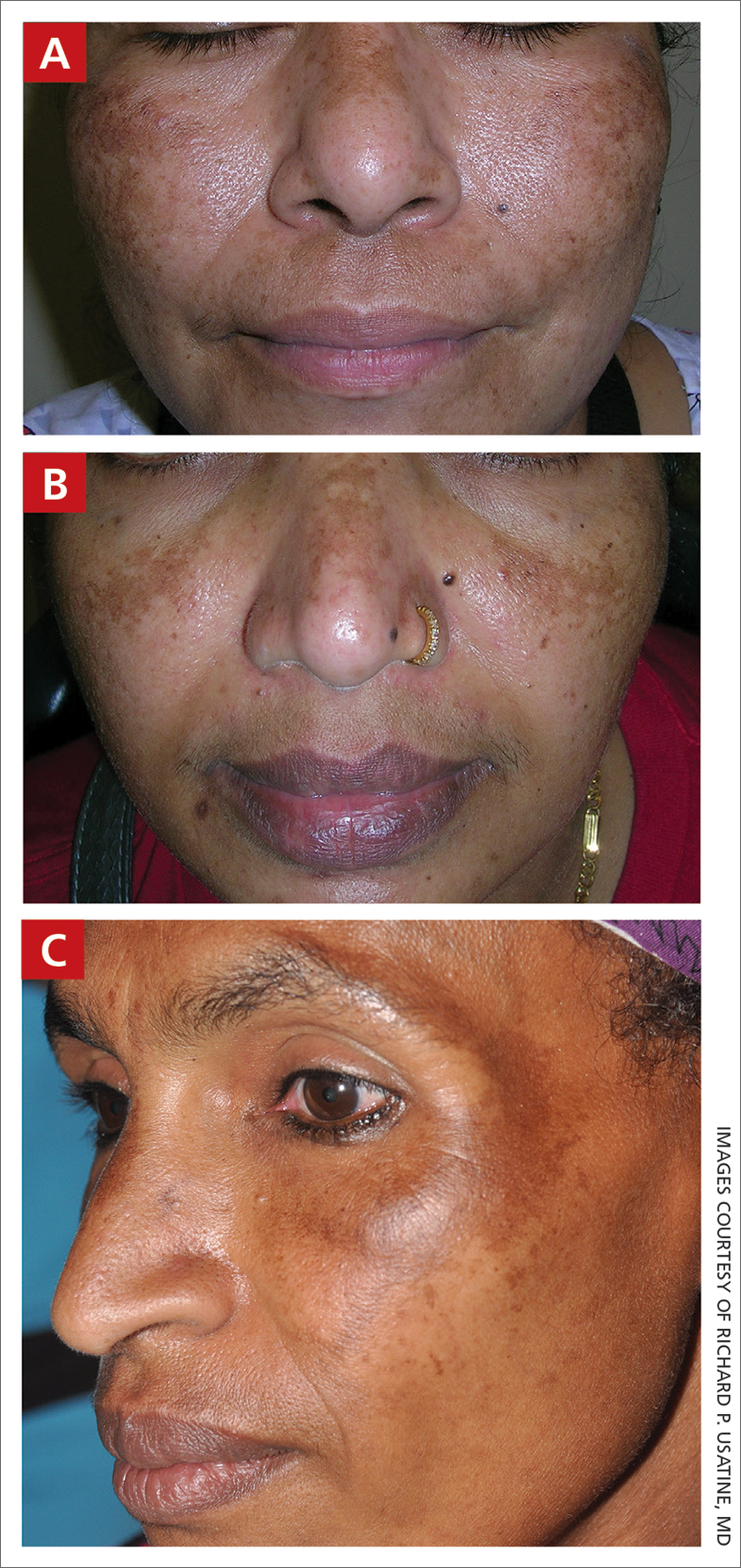

At the time of presentation, ophthalmic examination revealed no decrease in visual acuity, with a best-corrected visual acuity of 20/20 in each eye. The pupils were unequal. The right pupil was smaller (more miotic), and the difference in pupil size was greater in dim illumination (Figure 1).

Because the patient had pathologic miosis, conditions causing pathologic mydriasis, such as Adie tonic pupil and cranial nerve III palsy, were ruled out. She had an acute, slight ptosis accompanying pathologic miosis and ipsilateral eye pain. She reported no exposure to miotic pharmaceutical agents and had no history of trauma to the globe or orbit. These findings eliminated other differentials and led to a diagnosis of right-sided Horner syndrome. Because of concern about the acute onset of periorbital and retrobulbar pain, she was referred to the emergency department to rule out a carotid artery dissection. The referral recommended computed tomography angiography (CTA), magnetic resonance imaging (MRI), and magnetic resonance angiography (MRA) of the head and neck.

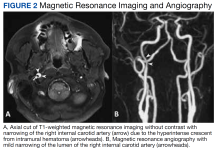

CTA revealed a focal linear filling defect in the mid right internal carotid artery, likely representing a vascular flap. There was no evidence of proximal intracranial occlusive disease. MRI revealed a linear area of high-intensity signal projecting over the lumen of the mid and distal right internal carotid artery (Figure 2A).

Imaging suggested an internal carotid artery dissection, and the patient was admitted to the hospital for observation for 4 days. During this time, she was instructed to continue taking aspirin 81 mg daily and to begin taking clopidogrel bisulfate 75 mg daily to prevent a cerebrovascular accident. Once she was stable, she was discharged with instructions to follow up with neurology and neuro-ophthalmology.

Discussion

Anisocoria is defined as a difference in pupil size between the eyes.1 This difference can be physiologic, with no underlying pathology. When anisocoria is pathologic, it can result from impaired dilation (mydriasis), which leaves the affected pupil more miotic. It can also result from impaired constriction (miosis), which leaves the affected pupil more mydriatic.1

To determine whether anisocoria is physiologic or pathologic, the clinician must assess the patient’s pupil sizes in dim and bright illumination. The difference in pupil size may be the same in both illuminations (eg, 2 mm in both bright and dim light). In that case, pupillary constriction and dilation are functioning normally, and the patient has physiologic anisocoria.1 If the anisocoria differs between bright and dim illumination, the condition is related to pathology. Examples are 1 mm in bright and 3 mm in dim light, or 3 mm in bright and 1 mm in dim light. To determine the underlying pathology of nonphysiologic anisocoria, it is important to first determine whether the anisocoria reflects miotic or mydriatic dysfunction.1

If the anisocoria is greater in dim illumination, this suggests mydriatic dysfunction and could be a result of damage to the sympathetic pupillary pathway.1 The smaller or more miotic pupil in this instance is the pathologic pupil. If the anisocoria is greater in bright illumination, this suggests miotic dysfunction and could be a result of damage to the parasympathetic pathway.1 The larger or more mydriatic pupil in this instance is the pathologic pupil. Congenital abnormalities, such as iris colobomas, aniridia, and ectopic pupils, can result in a wide range of pupil sizes and shapes, including miotic or mydriatic pupils.1
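The bright/dim comparison above amounts to a small decision procedure. The following Python sketch encodes it for illustration only and is not a clinical tool. The `PupilMeasurement` record, the `classify_anisocoria` function, and the 0.5 mm measurement tolerance are hypothetical choices that do not come from the source.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PupilMeasurement:
    """Hypothetical record of pupil diameters in millimeters."""
    right_bright: float
    left_bright: float
    right_dim: float
    left_dim: float


def classify_anisocoria(
    m: PupilMeasurement, tolerance_mm: float = 0.5
) -> Tuple[str, Optional[str]]:
    """Return (category, affected eye) using the bright/dim rule described above.

    tolerance_mm is an assumed allowance for measurement error, not a clinical cutoff.
    """
    bright_diff = abs(m.right_bright - m.left_bright)
    dim_diff = abs(m.right_dim - m.left_dim)

    # Pupils effectively equal in both lighting conditions.
    if bright_diff <= tolerance_mm and dim_diff <= tolerance_mm:
        return ("no anisocoria", None)

    # Same size difference in bright and dim light: physiologic anisocoria.
    if abs(dim_diff - bright_diff) <= tolerance_mm:
        return ("physiologic", None)

    # Greater in dim light: dilation fails, so the sympathetic pathway is suspected.
    # The smaller (more miotic) pupil is the abnormal one.
    if dim_diff > bright_diff:
        eye = "right" if m.right_dim < m.left_dim else "left"
        return ("sympathetic (mydriatic) dysfunction", eye)

    # Greater in bright light: constriction fails, so the parasympathetic pathway is suspected.
    # The larger (more mydriatic) pupil is the abnormal one.
    eye = "right" if m.right_bright > m.left_bright else "left"
    return ("parasympathetic (miotic) dysfunction", eye)
```

Consider made-up measurements in which the right pupil is smaller and the difference grows from 1 mm in bright light to 3 mm in dim light. For these, `classify_anisocoria(PupilMeasurement(3, 4, 4, 7))` returns `("sympathetic (mydriatic) dysfunction", "right")`, the pattern described for a Horner pupil.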

Pathologic Mydriasis

Pathologic mydriatic pupils can result from dysfunction in the parasympathetic nervous system, which leaves the pupil unable to constrict adequately in response to a light stimulus. Mydriatic pupils can be related to Adie tonic pupil, Argyll Robertson pupil, third nerve palsy, trauma, surgery, or pharmacologic mydriasis.2 These conditions can be readily differentiated from one another on clinical examination.

Adie tonic pupil results from damage to the ciliary ganglion.2 While pupillary constriction in response to light will be absent or sluggish in an Adie pupil, the patient will have an intact but sluggish accommodative pupillary response; therefore, the pupil will still constrict with accommodation and convergence to focus on near objects, although slowly. This is known as light-near dissociation.2

Argyll Robertson pupils are caused by damage to the Edinger-Westphal nucleus in the rostral midbrain.3 Lesions in this area of the brain are typically associated with neurosyphilis. They also can result from Lyme disease, multiple sclerosis, encephalitis, neurosarcoidosis, herpes zoster, diabetes mellitus, and chronic alcohol misuse.3 Argyll Robertson pupils can resemble a tonic pupil in that they also show light-near dissociation.3 They differ in that they tend to be irregular in shape (dyscoria). They also constrict briskly when focusing on near objects and redilate briskly when focusing on distant objects, rather than sluggishly, as in Adie tonic pupil.3

Mydriasis due to a third nerve palsy presents with ptosis and extraocular muscle dysfunction, including deficits of the superior rectus, medial rectus, inferior oblique, and inferior rectus. In the classic presentation of a complete palsy, the affected eye is positioned “down and out,” and the patient cannot look medially or superiorly with it.2

In cases of pathologic mydriasis, a thorough history can help identify traumatic, surgical, and pharmacologic etiologies. It should be determined whether the patient has had any previous trauma or surgery to the eye or has been in contact with any of the following: acetylcholine receptor antagonists (atropine, scopolamine, homatropine, cyclopentolate, and tropicamide), motion sickness patches (scopolamine), nasal vasoconstrictors, glycopyrrolate deodorants, and/or various plants (Jimson weed or plants belonging to the digitalis family, such as foxglove).2
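The distinguishing features above can be summarized as an ordered checklist. The following Python sketch is a deliberately simplified illustration, not a diagnostic algorithm. The `MydriasisFindings` fields, the order of the checks, and the fallback message are assumptions made for this example, and a real examination involves findings the sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class MydriasisFindings:
    """Hypothetical summary of examination findings in a mydriatic pupil."""
    light_near_dissociation: bool  # poor light response, preserved near response
    brisk_near_response: bool      # near constriction brisk rather than sluggish (tonic)
    irregular_pupil: bool          # dyscoria
    ptosis: bool
    extraocular_deficit: bool      # eg, limited elevation or adduction


def suggest_mydriasis_cause(f: MydriasisFindings) -> str:
    """Map findings to the differential discussed in the text (illustrative only)."""
    # Ptosis with extraocular muscle dysfunction points to a third nerve palsy.
    if f.ptosis and f.extraocular_deficit:
        return "third nerve palsy"
    if f.light_near_dissociation:
        # Sluggish, tonic near response: Adie tonic pupil.
        if not f.brisk_near_response:
            return "Adie tonic pupil"
        # Brisk near response with an irregular pupil: Argyll Robertson pupil.
        if f.irregular_pupil:
            return "Argyll Robertson pupil"
    # Otherwise, rely on the history for traumatic, surgical, or pharmacologic causes.
    return "review history for trauma, surgery, or pharmacologic exposure"
```

Checking the third nerve palsy first is a design choice of this sketch. Its motility and lid findings are the most specific signs in the list.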

Pathologic Miosis

Pathologic miotic pupils can result from dysfunction in the sympathetic nervous system and can be related to blunt or penetrating trauma to the orbit, Horner syndrome, and pharmacologic miosis.2 Horner syndrome will be accompanied by a slight ptosis and sometimes anhidrosis on the ipsilateral side of the face. To differentiate between traumatic and pharmacologic miosis, a detailed history should be obtained, paying close attention to injuries to the eyes or head and/or possible exposure to chemical or pharmaceutical agents, including prostaglandins, pilocarpine, organophosphates, and opiates.2

Horner Syndrome

Horner syndrome is a neurologic condition that results from damage to the oculosympathetic pathway.4 The oculosympathetic pathway is a 3-neuron pathway that begins in the hypothalamus and follows a circuitous route to ultimately innervate the facial sweat glands, the smooth muscles of the blood vessels in the orbit and face, the iris dilator muscle, and the Müller muscles of the superior and inferior eyelids.1,5 Therefore, this pathway’s functions include vasoconstriction of facial blood vessels, facial diaphoresis (sweating), pupillary dilation, and maintaining an open position of the eyelids.1

Oculosympathetic pathway anatomy. To understand the findings associated with Horner syndrome, it is necessary to understand the anatomy of this 3-neuron pathway.5 First-order, or central, neurons arise in the posterolateral hypothalamus. They descend through the midbrain, pons, medulla, and cervical spinal cord to the intermediolateral gray column.6 There the fibers synapse in the ciliospinal center of Budge at spinal cord levels C8 to T2, which gives rise to the preganglionic, or second-order, neurons.6

Second-order neurons begin at the ciliospinal center of Budge and exit the spinal cord via the ventral roots. Most exit at spinal level T1, and the remainder leave at levels C8 and T2.7 After exiting the spinal cord, the second-order neurons loop around the subclavian artery and ascend close to the apex of the lung. They then synapse with the cell bodies of the third-order neurons at the superior cervical ganglion, near cervical vertebrae C2 and C3.7

After arising at the superior cervical ganglion, third-order neurons diverge to follow 2 different courses.7 A portion of the neurons travels along the external carotid artery to ultimately innervate the facial sweat glands, while the other portion of the neurons combines with the carotid plexus and travels within the walls of the internal carotid artery and through the cavernous sinus.7 The fibers then briefly join the abducens nerve before anastomosing with the ophthalmic division of the trigeminal nerve.7 After coursing through the superior orbital fissure, the fibers innervate the iris dilator and Müller muscles via the long ciliary nerves.7

Symptoms and signs. Patients with Horner syndrome can present with a variety of symptoms and signs. Patients may be largely asymptomatic, or they may complain of a droopy eyelid and blurry vision. The full Horner syndrome triad consists of ipsilateral miosis, facial anhidrosis, and mild ptosis of the upper eyelid with reverse ptosis of the lower eyelid.8 The difference in pupil size is greatest 4 to 5 seconds after the room illumination is switched from bright to dim, because the poorly innervated miotic pupil dilates more slowly (dilation lag).1

Although the classic triad of ptosis, miosis, and anhidrosis is emphasized in the literature, the full triad may not always be present.4 This variation reflects the anatomy of the oculosympathetic pathway: the fibers separate at the superior cervical ganglion, with those to the facial sweat glands following the external carotid artery and those to the eye following the internal carotid artery, so facial anhidrosis occurs only when the lesion involves the first- or second-order neurons.4,5 Because of this divergence of the nerve fibers, miosis and a slight ptosis in the absence of anhidrosis should still strongly suggest Horner syndrome.

In addition to the classic triad, Horner syndrome can present with other ophthalmic findings, including conjunctival injection, changes in accommodation, and a small decrease in intraocular pressure, usually no more than 1 to 2 mm Hg.4 Congenital Horner syndrome is unique in that it can cause iris heterochromia, with the lighter iris in the affected eye.4

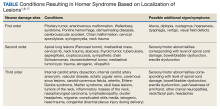

Due to the long and circuitous nature of the oculosympathetic pathway, damage can occur due to a wide variety of conditions (Table) and can present with many neurologic findings.7

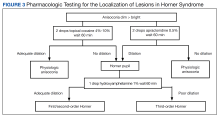

Localization of lesions. Of the lesions causing Horner syndrome, 13% involve the first-order neurons, 44% the second-order neurons, and 43% the third-order neurons.7 Although lesions at each level produce similar clinical presentations that can be difficult to differentiate, localizing the lesion within the oculosympathetic pathway is important for determining the underlying cause. Localization can be readily achieved in the office with pharmacologic pupil testing (Figure 3).

Management. All acute Horner syndrome presentations should be referred for same-day evaluation to rule out potentially life-threatening conditions, such as a cerebrovascular accident, carotid artery dissection or aneurysm, and giant cell arteritis.10 The urgent evaluation should include CTA and MRI/MRA of the head and neck.5 If giant cell arteritis is suspected, it is also recommended to obtain urgent bloodwork, which should include complete blood count with differential, erythrocyte sedimentation rate, and C-reactive protein.5 Carotid angiography and CT of the chest also are indicated if the aforementioned tests are noncontributory, but these are less urgent and can be deferred for evaluation within 1 to 2 days after the initial diagnosis.10

In this patient’s case, an immediate neurologic evaluation was appropriate because of the acute and painful nature of her presentation. Her Horner syndrome was ultimately attributed to an internal carotid artery dissection. As indicated by Schievink, every acute Horner syndrome should be considered the result of a carotid artery dissection until proven otherwise, regardless of the presence or absence of other signs or symptoms.11 This is not only because of the potentially life-threatening sequelae of carotid dissections, but also because dissections are an important cause of ischemic stroke in young and middle-aged patients, accounting for 10% to 25% of ischemic strokes in this age group.4,11

Carotid Artery Dissection

An artery dissection is typically the result of a tear of the

There are many causes of carotid artery dissections, such as structural defects of the arterial wall, fibromuscular dysplasia, cystic medial necrosis, and connective tissue disorders, including Ehlers-Danlos syndrome type IV, Marfan syndrome, autosomal dominant polycystic kidney disease, and osteogenesis imperfecta type I.13 Many environmental factors also can induce a carotid artery dissection, such as a history of anesthesia use, resuscitation with classic cardiopulmonary resuscitation techniques, head or neck trauma, chiropractic manipulation of the neck, and hyperextension or rotation of the neck, which can occur in activities such as yoga, painting a ceiling, coughing, vomiting, or sneezing.11

Patients with an internal carotid artery dissection typically present with pain on one side of the neck, face, or head, which can be accompanied by a partial Horner syndrome resulting from damage to the oculosympathetic neurons that travel with the carotid plexus in the internal carotid artery wall.9,10 Unilateral facial or orbital pain occurs in about half of patients and is usually accompanied by an ipsilateral headache.9 These symptoms are typically followed within hours or days by cerebral or retinal ischemia. Other sight-threatening ophthalmic complications, such as ischemic optic neuropathy or retinal artery occlusion, can also occur, although they are rare.9

Because of the potential complications, carotid artery dissections require prompt antithrombotic therapy for 3 to 6 months to prevent carotid artery occlusion, which can result in a hemispheric cerebrovascular accident or TIAs.15 Options include anticoagulants, such as warfarin, and antiplatelet agents, such as aspirin. Studies have found similar rates of recurrent ischemic stroke with anticoagulant and antiplatelet therapy, so both are reasonable options.15,16 After a carotid artery dissection is diagnosed, patients should be evaluated by neurology to address other cardiovascular risk factors and prevent further complications.

Conclusions

Because the conditions that cause Horner syndrome can have life-threatening complications, clinicians must have a thorough understanding of the condition and its appropriate treatment and management. Recognizing the need for immediate testing to determine the underlying etiology of Horner syndrome can help prevent loss of vision or decreased quality of life and, in some cases, prevent death.

Acknowledgments

The author recognizes and thanks Kyle Stuard for his invaluable assistance in the editing of this manuscript.

1. Yanoff M, Duker J. Ophthalmology. 5th ed. Elsevier; 2019.

2. Payne WN, Blair K, Barrett MJ. Anisocoria. StatPearls Publishing; 2022. Accessed February 1, 2023. https://www.ncbi.nlm.nih.gov/books/NBK470384

3. Lee A, Bindiganavile SH, Fan J, Al-Zubidi N, Bhatti MT. Argyll Robertson pupils. Accessed February 1, 2023. https://eyewiki.aao.org/Argyll_Robertson_Pupils

4. Kedar S, Prakalapakorn G, Yen M, et al. Horner syndrome. EyeWiki. American Academy of Ophthalmology; 2021. Accessed February 1, 2023. https://eyewiki.aao.org/Horner_Syndrome

5. Daroff R, Bradley W, Jankovic J. Bradley and Daroff’s Neurology in Clinical Practice. 8th ed. Elsevier; 2022.

6. Kanagalingam S, Miller NR. Horner syndrome: clinical perspectives. Eye Brain. 2015;7:35-46. doi:10.2147/EB.S63633

7. Lykstad J, Reddy V, Hanna A. Neuroanatomy, Pupillary Dilation Pathway. StatPearls Publishing; 2022. Updated August 11, 2021. Accessed February 1, 2023. https://www.ncbi.nlm.nih.gov/books/NBK535421

8. Friedman N, Kaiser P, Pineda R. The Massachusetts Eye and Ear Infirmary Illustrated Manual of Ophthalmology. 5th ed. Elsevier; 2020.

9. Silbert PL, Mokri B, Schievink WI. Headache and neck pain in spontaneous internal carotid and vertebral artery dissections. Neurology. 1995;45(8):1517-1522. doi:10.1212/wnl.45.8.1517

10. Gervasio K, Peck T. The Wills Eye Manual. 8th ed. Wolters Kluwer; 2022.

11. Schievink WI. Spontaneous dissection of the carotid and vertebral arteries. N Engl J Med. 2001;344(12):898-906. doi:10.1056/NEJM200103223441206

12. Hart RG, Easton JD. Dissections of cervical and cerebral arteries. Neurol Clin. 1983;1(1):155-182.

13. Goodfriend SD, Tadi P, Koury R. Carotid Artery Dissection. StatPearls Publishing; 2022. Updated December 24, 2021. Accessed February 1, 2023. https://www.ncbi.nlm.nih.gov/books/NBK430835

14. Blum CA, Yaghi S. Cervical artery dissection: a review of the epidemiology, pathophysiology, treatment, and outcome. Arch Neurosci. 2015;2(4):e26670. doi:10.5812/archneurosci.26670

15. Furie KL, Kasner SE, Adams RJ, et al. Guidelines for the prevention of stroke in patients with stroke or transient ischemic attack: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2011;42(1):227-276. doi:10.1161/STR.0b013e3181f7d043

16. Mohr JP, Thompson JL, Lazar RM, et al; Warfarin-Aspirin Recurrent Stroke Study Group. A comparison of warfarin and aspirin for the prevention of recurrent ischemic stroke. N Engl J Med. 2001;345(20):1444-1451. doi:10.1056/NEJMoa011258

17. Davagnanam I, Fraser CL, Miszkiel K, Daniel CS, Plant GT. Adult Horner’s syndrome: a combined clinical, pharmacological, and imaging algorithm. Eye (Lond). 2013;27(3):291-298. doi:10.1038/eye.2012.281

Horner syndrome is a rare condition that has no sex or race predilection and is characterized by the clinical triad of miosis, anhidrosis, and a slight unilateral ptosis. Prompt diagnosis and determination of the etiology of Horner syndrome are of utmost importance, as the condition can result from many life-threatening systemic disorders. Horner syndrome is often asymptomatic but has distinct characteristics that are easily identified on ophthalmic examination. This report describes a patient who presented with Horner syndrome resulting from an internal carotid artery dissection.

Case Presentation

A 61-year-old woman presented with periorbital pain that had begun 3 days earlier. She rated the pain as 7 of 10, reported that it had been worsening, and localized it around and behind the right eye. She reported new-onset right-sided headaches over the past week with associated intermittent blurry vision in the right eye. She had a history of mobility issues and had fallen backward about 1 week before, hitting the back of her head on the floor without direct trauma to the eye. She reported light sensitivity, syncope, and dizziness, as well as transient ischemic attacks (TIAs) of unknown etiology in the months preceding her examination. She denied jaw claudication, scalp tenderness, and neck or shoulder pain. She was unaware of any change in her perspiration pattern on the right side of her face but had noticed her right upper eyelid drooping while looking in the mirror.

This patient had a routine eye examination 2 months before, which was remarkable for stable, non-foveal-involving adult-onset vitelliform dystrophy in the left eye and for nuclear sclerotic cataracts and mild refractive error in both eyes. No iris heterochromia was noted, and her pupils were equal, round, and reactive to light. Her medical history was remarkable for chest pain, obesity, bipolar disorder, vertigo, transient cerebral ischemia, hypertension, hypercholesterolemia, alcohol use disorder, cocaine use disorder, and asthma. Because of her history of TIAs, a carotid ultrasound had been performed 1 month before symptom onset; it showed no hemodynamically significant stenosis (> 50%) of either carotid artery. Her medications included oxybutynin chloride, amlodipine, acetaminophen, sertraline hydrochloride, lidocaine, albuterol, risperidone, hydroxyzine hydrochloride, lisinopril, omeprazole, low-dose (baby) aspirin once daily, atorvastatin, and calcium.

At presentation, ophthalmic examination revealed no decrease in visual acuity, with best-corrected visual acuity of 20/20 in each eye. The pupils were unequal: the right pupil was smaller, and the difference in pupil size was greater in dim illumination (Figure 1).

Because the abnormal pupil was the miotic one, conditions causing pathologic mydriasis, such as Adie tonic pupil and cranial nerve III palsy, were ruled out. The combination of an acute, slight ptosis, pathologic miosis, and pain in the ipsilateral eye, with no reported exposure to miotic pharmaceutical agents and no history of trauma to the globe or orbit, eliminated the other differentials and led to a diagnosis of right-sided Horner syndrome. Because of the acute-onset periorbital and retrobulbar pain, she was referred to the emergency department with recommendations for computed tomography angiography (CTA), magnetic resonance imaging (MRI), and magnetic resonance angiography (MRA) of the head and neck to rule out a carotid artery dissection.

CTA revealed a focal linear filling defect in the mid portion of the right internal carotid artery, likely representing a vascular flap. There was no evidence of proximal intracranial occlusive disease. MRI revealed a linear area of high-intensity signal projecting over the mid and distal right internal carotid artery lumen (Figure 2A).

Imaging suggested an internal carotid artery dissection, and the patient was admitted to the hospital for 4 days of observation. During this time, she was instructed to continue taking 81 mg aspirin daily and to begin taking 75 mg clopidogrel bisulfate daily to prevent a cerebrovascular accident. Once she was stable, she was discharged with instructions to follow up with neurology and neuro-ophthalmology.

Discussion

Anisocoria is defined as a difference in pupil size between the eyes.1 This difference can be physiologic, with no underlying pathology. When anisocoria is pathologic, it can result either from impaired dilation (mydriasis), which leaves the affected pupil abnormally small, or from impaired constriction (miosis), which leaves the affected pupil abnormally large.1