Reflections on My VA Experience and Why I See the Proverbial Glass as Half Full

Veterans Health Administration (VA) hospitals have gained notoriety because of episodes of misdiagnosis, poor management, and negligent care described in many recent reports and news articles.1-3 While veterans are appropriately the primary focus of these investigative reports, physicians are also challenged in this setting, as they often meet resistance when advocating for patients and attempting to improve a flawed system.2 Although my residency training includes 6 months at a VA hospital mired in controversy, the hospital has played a critical role in my training.3

Despite my many frustrations with the VA and the daily stresses incurred because of barriers impeding the timing and quality of care, I have several reasons to see the glass as “half full” when reflecting on my experiences as an orthopedic surgery resident at a VA medical center. This editorial will focus on the most important of these reasons—the special opportunity and pride associated with caring for veterans and these patients’ extremely appreciative nature.

The VA is one of the largest integrated health care systems in the United States, offering both inpatient and outpatient care to eligible veterans. Although eligibility has historically been based on military service–related medical conditions, disability, and financial need, reforms from 1996 to 2002 expanded enrollment to veteran populations previously deemed ineligible for VA care.4,5 Despite this, studies suggest that some uninsured veterans do not seek VA care, even when eligible for VA coverage. This troubling notion is further complicated by research suggesting that veterans who use the VA for all of their health care are more likely to be from poor, less-educated, and minority populations, and are more likely to report fair or poor health and seek more disability days.6

Such disheartening realities can mask the most important attributes of VA patients, which pertain to their selfless commitment to our country. Orthopedic surgery residents must appreciate these attributes as well as the tremendous need for musculoskeletal care in this setting, as musculoskeletal conditions are some of the most common reasons for patient visits at the VA.7 Although combat-related high-energy blast injuries and the reconstructive procedures used to treat them have received considerable attention, it is the more common musculoskeletal disorders that are most responsible for the burden of musculoskeletal disease in the VA. In a study by Dominick and colleagues,8 veterans had significantly greater odds of reporting doctor-diagnosed arthritis compared with nonveterans. Furthermore, veterans are more vulnerable to overuse injuries, a finding attributed to the intense physical activity associated with military training and service.9

Working in the busy orthopedic surgery clinic at my VA hospital is fulfilling and a reminder of the large demand for musculoskeletal care. However, it is the patient population that makes it most gratifying. Most of the veterans seeking care are appreciative, regularly expressing their gratitude. They view me and the other residents as their physicians, not simply as doctors in training, as so many non-VA patients do. Although VA patients sometimes have to wait several hours to be seen in clinic and several months for surgery, I have never been subjected to the disdain or frustration that might seem inevitable. This is true in even the most trying and infuriating times, such as when an operation is cancelled on the day of surgery for reasons that many surgeons in non-VA hospitals would consider trivial. Even when they witness my visible irritation with the VA system, the veterans remain respectful and understanding; if they ever share similar feelings, they most certainly never voice them to me.

I cannot refute the notion that the VA must change and that the veterans deserve an improved health care system. However, this editorial is not written as a call to action. Instead, I hope it helps to humanize the patients of the VA, serving as a reminder to residents and other providers that the VA is a unique and extraordinary opportunity to give back and say thank you to veterans.

This editorial is dedicated to CPT David Huskie, USAR (Ret.), a veteran of Operation Desert Storm and orthopedic nurse at my VA hospital. It was he who first reminded me, and the other orthopedic residents, of the importance of our time at the VA. The Figure depicts the letter he gives to orthopedic residents at our program, along with a pewter coin, after their first VA rotation.

1. Pearson M. The VA’s troubled history. Cable News Network (CNN) website. http://www.cnn.com/2014/05/23/politics/va-scandals-timeline. Updated May 30, 2014. Accessed August 28, 2015.

2. Scherz H. Doctors’ war stories from VA hospitals. The Wall Street Journal website. http://www.wsj.com/articles/hal-scherz-doctors-war-stories-from-va-hospitals-1401233147. Published May 27, 2014. Accessed August 28, 2015.

3. Riviello V. Nurse exposes VA hospital: stolen drugs, tortured veterans. New York Post website. http://nypost.com/2014/07/12/nurse-exposes-va-hospital-stolen-drugs-tortured-veterans. Published July 12, 2014. Accessed August 28, 2015.

4. Enrollment—provision of hospital and outpatient care to veterans—VA. Proposed rule. Fed Regist. 1998;63(132):37299-37307.

5. US Department of Veterans Affairs, Veterans Health Administration, Office of Assistant Deputy Under Secretary for Health for Policy and Planning. 2003 Survey of Veteran Enrollees’ Health and Reliance Upon VA With Selected Comparisons to the 1999 and 2002 Surveys. US Department of Veterans Affairs website. www.va.gov/healthpolicyplanning/Docs/SOE2003_Report.pdf. Published December 2004. Accessed August 28, 2015.

6. Nelson KM, Starkebaum GA, Reiber GE. Veterans using and uninsured veterans not using Veterans Affairs (VA) health care. Public Health Rep. 2007;122(1):93-100.

7. Wasserman GM, Martin BL, Hyams KC, Merrill BR, Oaks HG, McAdoo HA. A survey of outpatient visits in a United States Army forward unit during Operation Desert Shield. Mil Med. 1997;162(6):374-379.

8. Dominick KL, Golightly YM, Jackson GL. Arthritis prevalence and symptoms among US non-veterans, veterans, and veterans receiving Department of Veterans Affairs Healthcare. J Rheumatol. 2006;33(2):348-354.

9. West SG. Rheumatic disorders during Operation Desert Storm. Arthritis Rheum. 1993;36(10):1487-1488.

Is Skin Tenting Secondary to Displaced Clavicle Fracture More Than a Theoretical Risk? A Report of 2 Adolescent Cases

Fractures of the clavicle, which account for 2.6% of all fractures, are displaced in 70% of cases and are mid-diaphyseal in 80% of cases.1-3 Historically, both displaced and nondisplaced fractures were treated nonoperatively, with excellent outcomes reported in the majority of patients.1-3 Traditionally, the indications for surgical fixation of a clavicular fracture have included open fractures, which occur infrequently, accounting for only 3.2% of clavicle fractures.4 Other indications include floating shoulder girdle or scapulothoracic dissociation, neurovascular injury, and skin “tenting” by the fracture fragments.3,5 Recently, both meta-analyses and randomized clinical trials have reported reduced malunion rates and improved patient outcomes with open reduction and internal fixation (ORIF).6-9 Consequently, operative fixation could be considered in patients with 100% displacement or greater than 1.5 cm shortening.6-9 Open reduction and internal fixation of the clavicle has been demonstrated to have excellent outcomes in pediatric populations as well.10

The clavicle is subcutaneous for much of its length and, thus, displaced clavicular fractures often result in a visible deformity with stretching of the soft-tissue envelope over the fracture. While this has been suggested as an operative indication, several recent sources indicate that this concern may only be theoretical. According to the fourth edition of Skeletal Trauma, “It is often stated that open reduction and internal fixation should be considered if the skin is threatened by pressure from a prominent clavicle fracture fragment; however, it is extremely rare for the skin to be perforated from within.”5 The most recent Journal of Bone and Joint Surgery Current Concepts Review on the subject stated that “open fractures or soft-tissue tenting sufficient to produce skin necrosis is uncommon.”3 To the best of our knowledge, there is no reported case of a displaced midshaft clavicle fracture with secondary skin necrosis and conversion into an open fracture, which has supported the view that this complication may be only theoretical. Given that surgical fixation carries a risk of complications including wound complications, infection, nonunion, malunion, and damage to the nearby neurovascular structures and pleural apices,11 some surgeons may be uncertain how to proceed in cases at risk for disturbance of the soft tissues.

We report 2 adolescent cases of displaced, comminuted clavicle fractures in which the skin was initially intact. Both were managed nonoperatively and both secondarily presented with open lesions at the fracture site requiring urgent irrigation and débridement (I&D) and ORIF. The patients and their guardians provided written informed consent for print and electronic publication of these case reports.

Case Reports

Case 1

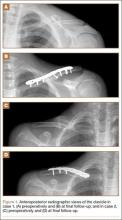

A 15-year-old boy with no significant medical or surgical history flipped over the handlebars of his bicycle the day prior to presentation and sustained a clavicle fracture on his left nondominant upper extremity. This was an isolated injury. On examination, his skin was intact with an area of tender mild osseous protuberance at the midclavicle with associated surrounding edema. He was neurovascularly intact. Radiographs showed a displaced fracture of the midshaft of the clavicle with 20% shortening and a vertically angulated piece of comminution (Figure 1A). After a discussion of the treatment options with the family, the decision was made to pursue nonoperative treatment with sling immobilization as needed and restriction from gym and sports.

Two and a half weeks later, the patient presented at follow-up with significantly reduced but persistent pain and a new complaint of drainage from the area of the fracture. On examination, he was found to have a puncture wound of the skin with exposed clavicle protruding through the wound and a 1-cm circumferential area of erythema without purulence present or expressible. The patient denied reinjury and endorsed compliance with sling immobilization. He was taken for urgent I&D and ORIF. After excision of the eschar surrounding the open lesion and full I&D of the soft tissues, the protruding spike was partially excised and the fracture site was débrided. The fracture was reduced and fixed with a lag screw and neutralization plate technique using an anatomically contoured locking clavicle plate (Synthes). Vancomycin powder was sprinkled into the wound at the completion of the procedure to reduce the chance of infection.12

Postoperatively, the patient was prescribed oral clindamycin but was subsequently switched to oral cephalexin because of mild signs of an allergic reaction, for a total course of antibiotics of 1 week. The patient was immobilized in a sling for comfort for the first 9 weeks postoperatively until radiographic union occurred. The patient’s wound healed uneventfully and with acceptable cosmesis. He was released to full activities at 10 weeks postoperatively. At final follow-up 6 months after surgery, the patient had returned to all of his regular activities without pain, and with full range of motion and no demonstrable deficits with radiographic union (Figure 1B).

Case 2

An 11-year-old boy with no significant medical or surgical history fell onto his right dominant upper extremity while doing a jump on his dirt bike 1 week prior to presentation, sustaining a clavicle fracture. This was an isolated injury. He was seen and evaluated by an outside orthopedist who noted that the soft-tissue envelope was intact and the patient was neurovascularly intact. Radiographs showed a displaced fracture of the midshaft of the clavicle with 15% shortening and with a vertically angulated piece of comminution (Figure 1C). Nonoperative treatment with a figure-of-8 brace was recommended. The patient’s discomfort completely resolved.

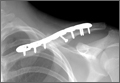

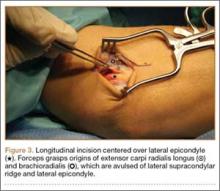

One week later, when he presented to the outside orthopedist for follow-up, the development of a wound overlying the fracture site was noted, and the patient was started on oral trimethoprim/sulfamethoxazole and referred to our office for treatment (Figure 1D). The patient denied reinjury and endorsed compliance with brace immobilization. On examination, the patient was afebrile and was noted to have a puncture wound at the fracture site with a protruding spike of bone and surrounding erythema but without present or expressible discharge (Figure 2). The patient was taken urgently for I&D and ORIF, using a technique similar to that used in case 1, except that no lag screw was employed.

Postoperatively, the patient did well with no complications; he was prescribed oral cephalexin for 1 week. The patient was immobilized in a sling for the first 5 weeks after surgery until radiographic union had occurred, after which the sling was discontinued. The patient’s wound healed uneventfully and with acceptable cosmesis. The patient was released from activity restrictions at 6 weeks postoperatively. At final follow-up 5 weeks after surgery, the patient had full painless range of motion, no tenderness at the fracture site, no signs of infection on examination, and radiographic union (Figure 1D).

Discussion

Optimal treatment of displaced clavicle fractures is controversial. While nonoperative treatment has been recommended,1-3 especially in skeletally immature populations with a capacity for remodeling,7-9 2 recent randomized clinical trials have demonstrated improved patient outcomes with ORIF.6,8,9 Traditionally, ORIF was recommended with tenting of the skin because of concern for an impending open fracture. However, recent review materials have implied that this complication may only be theoretical.3,5 Indeed, in 2 randomized trials, sufficient displacement to cause concern for impending violation of the skin envelope was not listed as an exclusion criterion.8,9 We report 2 cases of displaced comminuted clavicle fractures that were initially managed nonoperatively but developed open lesions at the fracture site. This complication, while rare, is possible, and surgeons must consider it when assessing patients with displaced clavicle fractures. To the best of the authors’ knowledge, no guidelines exist to direct antibiotic choice and duration in secondarily open fractures.

These 2 cases have several features in common that may serve as risk factors for impending violation of the skin envelope. Both fractures had a vertically angulated segmental piece of comminution with a sharp spike. This feature has been identified as a potential risk factor for subsequent development of an open fracture in a case report of fragment excision without reduction or fixation to allow rapid return to play in a professional jockey.13 Both patients in these cases presented with high-velocity mechanisms of injury and significant displacement, both of which may serve as risk factors. In the only similar case the authors could identify, Strauss and colleagues14 described a distal clavicle fracture with significant displacement and with secondary ulceration of the skin complicated by infection presenting with purulent discharge, cultured positive for methicillin-sensitive Staphylococcus aureus, requiring management with an external fixator and 6 weeks of intravenous antibiotics. Because both cases presented here occurred in healthy adolescent patients who were taken urgently for I&D and ORIF as soon as the wound was discovered, deep infection was avoided in these cases. Finally, in 1 case, a figure-of-8 brace was employed, which may also have placed pressure on the skin overlying the fracture and may have predisposed this patient to this complication.

Conclusion

In displaced midshaft clavicle fractures, tenting of the skin sufficient to cause subsequent violation of the soft-tissue envelope is possible and is more than a theoretical risk. At-risk patients, ie, those with a vertically angulated sharp fragment of comminution, should be counseled appropriately and observed closely or considered for primary ORIF.

1. Neer CS 2nd. Nonunion of the clavicle. J Am Med Assoc. 1960;172:1006-1011.

2. Robinson CM. Fractures of the clavicle in the adult. Epidemiology and classification. J Bone Joint Surg Br. 1998;80(3):476-484.

3. Khan LA, Bradnock TJ, Scott C, Robinson CM. Fractures of the clavicle. J Bone Joint Surg Am. 2009;91(2):447-460.

4. Gottschalk HP, Dumont G, Khanani S, Browne RH, Starr AJ. Open clavicle fractures: patterns of trauma and associated injuries. J Orthop Trauma. 2012;26(2):107-109.

5. Ring D, Jupiter JB. Injuries to the shoulder girdle. In: Browner BD, Jupiter JB, eds. Skeletal Trauma. 4th ed. New York, NY: Elsevier; 2009:1755-1778.

6. McKee RC, Whelan DB, Schemitsch EH, McKee MD. Operative versus nonoperative care of displaced midshaft clavicular fractures: a meta-analysis of randomized clinical trials. J Bone Joint Surg Am. 2012;94(8):675-684.

7. Zlowodzki M, Zelle BA, Cole PA, Jeray K, McKee MD; Evidence-Based Orthopaedic Trauma Working Group. Treatment of acute midshaft clavicle fractures: systematic review of 2144 fractures: on behalf of the Evidence-Based Orthopaedic Trauma Working Group. J Orthop Trauma. 2005;19(7):504-507.

8. Robinson CM, Goudie EB, Murray IR, et al. Open reduction and plate fixation versus nonoperative treatment for displaced midshaft clavicular fractures: a multicenter, randomized, controlled trial. J Bone Joint Surg Am. 2013;95(17):1576-1584.

9. Canadian Orthopaedic Trauma Society. Nonoperative treatment compared with plate fixation of displaced midshaft clavicular fractures. A multicenter, randomized clinical trial. J Bone Joint Surg Am. 2007;89(1):1-10.

10. Mehlman CT, Yihua G, Bochang C, Zhigang W. Operative treatment of completely displaced clavicle shaft fractures in children. J Pediatr Orthop. 2009;29(8):851-855.

11. Gross CE, Chalmers PN, Ellman M, Fernandez JJ, Verma NN. Acute brachial plexopathy after clavicular open reduction and internal fixation. J Shoulder Elbow Surg. 2013;22(5):e6-e9.

12. Pahys JM, Pahys JR, Cho SK, et al. Methods to decrease postoperative infections following posterior cervical spine surgery. J Bone Joint Surg Am. 2013;95(6):549-554.

13. Mandalia V, Shivshanker V, Foy MA. Excision of a bony spike without fixation of the fractured clavicle in a jockey. Clin Orthop Relat Res. 2003;(409):275-277.

14. Strauss EJ, Kaplan KM, Paksima N, Bosco JA. Treatment of an open infected type IIB distal clavicle fracture: case report and review of the literature. Bull NYU Hosp Jt Dis. 2008;66(2):129-133.

Fractures of the clavicle, which account for 2.6% of all fractures, are displaced in 70% of cases and are mid-diaphyseal in 80% of cases.1-3 Historically, both displaced and nondisplaced fractures were treated nonoperatively with excellent outcomes reported in the majority of patients.1-3 Traditionally, the indications for surgical fixation of a clavicular fracture include open fractures, which occur infrequently, accounting for only 3.2% of clavicle fractures.4 Other indications include floating shoulder girdle or scapulothoracic dissociation, neurovascular injury, and skin “tenting” by the fracture fragments.3,5 Recently, both meta-analyses and randomized clinical trials have reported reduced malunion rates and improved patient outcomes with open reduction and internal fixation (ORIF).6-9 Consequently, operative fixation could be considered in patients with 100% displacement or greater than 1.5 cm shortening.6-9 Open reduction and internal fixation of the clavicle has been demonstrated to have excellent outcomes in pediatric populations as well.10

The clavicle is subcutaneous for much of its length and, thus, displaced clavicular fractures often result in a visible deformity with a stretch of the soft-tissue envelope over the fracture. While this has been suggested as an operative indication, several recent sources indicate that this concern may only be theoretical. According to the fourth edition of Skeletal Trauma, “It is often stated that open reduction and internal fixation should be considered if the skin is threatened by pressure from a prominent clavicle fracture fragment; however, it is extremely rare of the skin to be perforated from within.”5 The most recent Journal of Bone and Joint Surgery Current Concepts Review on the subject stated that “open fractures or soft-tissue tenting sufficient to produce skin necrosis is uncommon.”3 To the best of our knowledge, there is no reported case of a displaced midshaft clavicle fracture with secondary skin necrosis and conversion into an open fracture, validating the conclusion that this complication may be only theoretical. Given that surgical fixation carries a risk of complications including wound complications, infection, nonunion, malunion, and damage to the nearby neurovascular structures and pleural apices,11 some surgeons may be uncertain how to proceed in cases at risk for disturbance of the soft tissues.

We report 2 adolescent cases of displaced, comminuted clavicle fractures in which the skin was initially intact. Both were managed nonoperatively and both secondarily presented with open lesions at the fracture site requiring urgent irrigation and débridement (I&D) and ORIF. The patients and their guardians provided written informed consent for print and electronic publication of these case reports.

Case Reports

Case 1

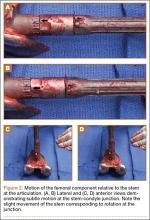

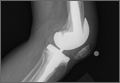

A 15-year-old boy with no significant medical or surgical history flipped over the handlebars of his bicycle the day prior to presentation and sustained a clavicle fracture on his left nondominant upper extremity. This was an isolated injury. On examination, his skin was intact with an area of tender mild osseous protuberance at the midclavicle with associated surrounding edema. He was neurovascularly intact. Radiographs showed a displaced fracture of the midshaft of the clavicle with 20% shortening with a vertically angulated piece of comminution (Figure 1A). After a discussion of the treatment options with the family, the decision was made to pursue nonoperative treatment with sling immobilization as needed and restriction from gym and sports.

Two and a half weeks later, the patient presented at follow-up with pain that was significantly reduced but persistent and a new complaint of drainage from the area of the fracture. On examination, he was found to have a puncture wound of the skin with exposed clavicle protruding through the wound and a 1-cm circumferential area of erythema, without purulence present or expressible. The patient denied reinjury and endorsed compliance with sling immobilization. He was taken for urgent I&D and ORIF. After excision of the eschar surrounding the open lesion and full I&D of the soft tissues, the protruding spike was partially excised and the fracture site was débrided. The fracture was reduced and fixed with a lag screw and neutralization plate technique using an anatomically contoured locking clavicle plate (Synthes). Vancomycin powder was sprinkled into the wound at the completion of the procedure to reduce the chance of infection.12

Postoperatively, the patient was prescribed oral clindamycin but was subsequently switched to oral cephalexin because of mild signs of an allergic reaction, for a total course of antibiotics of 1 week. The patient was immobilized in a sling for comfort for the first 9 weeks postoperatively until radiographic union occurred. The patient’s wound healed uneventfully and with acceptable cosmesis. He was released to full activities at 10 weeks postoperatively. At final follow-up 6 months after surgery, the patient had returned to all of his regular activities without pain, and with full range of motion and no demonstrable deficits with radiographic union (Figure 1B).

Case 2

An 11-year-old boy with no significant medical or surgical history fell onto his right dominant upper extremity while doing a jump on his dirt bike 1 week prior to presentation, sustaining a clavicle fracture. This was an isolated injury. He was seen and evaluated by an outside orthopedist who noted that the soft-tissue envelope was intact and the patient was neurovascularly intact. Radiographs showed a displaced fracture of the midshaft of the clavicle with 15% shortening and with a vertically angulated piece of comminution (Figure 1C). Nonoperative treatment with a figure-of-8 brace was recommended. The patient’s discomfort completely resolved.

One week later, when he presented to the outside orthopedist for follow-up, the development of a wound overlying the fracture site was noted, and the patient was started on oral trimethoprim/sulfamethoxazole and referred to our office for treatment (Figure 1D). The patient denied reinjury and endorsed compliance with brace immobilization. On examination, the patient was afebrile and was noted to have a puncture wound at the fracture site with a protruding spike of bone and surrounding erythema but without present or expressible discharge (Figure 2). The patient was taken urgently for I&D and ORIF, using a similar technique to case 1, except that no lag screw was employed.

Postoperatively, the patient did well with no complications; he was prescribed oral cephalexin for 1 week. The patient was immobilized in a sling for the first 5 weeks after surgery until radiographic union had occurred, after which the sling was discontinued. The patient’s wound healed uneventfully and with acceptable cosmesis. The patient was released from activity restrictions at 6 weeks postoperatively. At final follow-up 5 weeks after surgery, the patient had full painless range of motion, no tenderness at the fracture site, no signs of infection on examination, and radiographic union (Figure 1D).

Discussion

Optimal treatment of displaced clavicle fractures is controversial. While nonoperative treatment has been recommended,1-3 especially in skeletally immature populations with a capacity for remodeling,7-9 2 recent randomized clinical trials have demonstrated improved patient outcomes with ORIF.6,8,9 Traditionally, ORIF was recommended for tenting of the skin because of concern for an impending open fracture. However, recent review materials have implied that this complication may only be theoretical.3,5 Indeed, in 2 randomized trials, sufficient displacement to cause concern for impending violation of the skin envelope was not listed as an exclusion criterion.8,9 We report 2 cases of displaced comminuted clavicle fractures that were initially managed nonoperatively but developed open lesions at the fracture site. This complication, while rare, is possible, and surgeons must consider it when assessing patients with displaced clavicle fractures. To the best of the authors’ knowledge, no guidelines exist to direct antibiotic choice and duration in secondarily open fractures.

These 2 cases have several features in common that may serve as risk factors for impending violation of the skin envelope. Both fractures had a vertically angulated segmental piece of comminution with a sharp spike. This feature has been identified as a potential risk factor for subsequent development of an open fracture in a case report of fragment excision without reduction or fixation to allow rapid return to play in a professional jockey.13 Both patients in these cases presented with high-velocity mechanisms of injury and significant displacement, both of which may serve as risk factors. In the only similar case the authors could identify, Strauss and colleagues14 described a distal clavicle fracture with significant displacement and with secondary ulceration of the skin complicated by infection presenting with purulent discharge, cultured positive for methicillin-sensitive Staphylococcus aureus, requiring management with an external fixator and 6 weeks of intravenous antibiotics. Because both cases presented here occurred in healthy adolescent patients who were taken urgently for I&D and ORIF as soon as the wound was discovered, deep infection was avoided in these cases. Finally, in 1 case, a figure-of-8 brace was employed, which may also have placed pressure on the skin overlying the fracture and may have predisposed this patient to this complication.

Conclusion

In displaced midshaft clavicle fractures, tenting of the skin sufficient to cause subsequent violation of the soft-tissue envelope is possible and is more than a theoretical risk. At-risk patients, ie, those with a vertically angulated sharp fragment of comminution, should be counseled appropriately and observed closely or considered for primary ORIF.

1. Neer CS 2nd. Nonunion of the clavicle. J Am Med Assoc. 1960;172:1006-1011.

2. Robinson CM. Fractures of the clavicle in the adult. Epidemiology and classification. J Bone Joint Surg Br. 1998;80(3):476-484.

3. Khan LA, Bradnock TJ, Scott C, Robinson CM. Fractures of the clavicle. J Bone Joint Surg Am. 2009;91(2):447-460.

4. Gottschalk HP, Dumont G, Khanani S, Browne RH, Starr AJ. Open clavicle fractures: patterns of trauma and associated injuries. J Orthop Trauma. 2012;26(2):107-109.

5. Ring D, Jupiter JB. Injuries to the shoulder girdle. In: Browner BD, Jupiter JB, eds. Skeletal Trauma. 4th ed. New York, NY: Elsevier; 2009:1755–1778.

6. McKee RC, Whelan DB, Schemitsch EH, McKee MD. Operative versus nonoperative care of displaced midshaft clavicular fractures: a meta-analysis of randomized clinical trials. J Bone Joint Surg Am. 2012;94(8):675-684.

7. Zlowodzki M, Zelle BA, Cole PA, Jeray K, McKee MD; Evidence-Based Orthopaedic Trauma Working Group. Treatment of acute midshaft clavicle fractures: systematic review of 2144 fractures: on behalf of the Evidence-Based Orthopaedic Trauma Working Group. J Orthop Trauma. 2005;19(7):504-507.

8. Robinson CM, Goudie EB, Murray IR, et al. Open reduction and plate fixation versus nonoperative treatment for displaced midshaft clavicular fractures: a multicenter, randomized, controlled trial. J Bone Joint Surg Am. 2013;95(17):1576-1584.

9. Canadian Orthopaedic Trauma Society. Nonoperative treatment compared with plate fixation of displaced midshaft clavicular fractures. A multicenter, randomized clinical trial. J Bone Joint Surg Am. 2007;89(1):1-10.

10. Mehlman CT, Yihua G, Bochang C, Zhigang W. Operative treatment of completely displaced clavicle shaft fractures in children. J Pediatr Orthop. 2009;29(8):851-855.

11. Gross CE, Chalmers PN, Ellman M, Fernandez JJ, Verma NN. Acute brachial plexopathy after clavicular open reduction and internal fixation. J Shoulder Elbow Surg. 2013;22(5):e6-e9.

12. Pahys JM, Pahys JR, Cho SK, et al. Methods to decrease postoperative infections following posterior cervical spine surgery. J Bone Joint Surg Am. 2013;95(6):549-554.

13. Mandalia V, Shivshanker V, Foy MA. Excision of a bony spike without fixation of the fractured clavicle in a jockey. Clin Orthop Relat Res. 2003;(409):275-277.

14. Strauss EJ, Kaplan KM, Paksima N, Bosco JA. Treatment of an open infected type IIB distal clavicle fracture: case report and review of the literature. Bull NYU Hosp Jt Dis. 2008;66(2):129-133.

Osteofibrous Dysplasia–like Adamantinoma of the Tibia in a 15-Year-Old Girl

Adamantinomas are rare primary malignant bone tumors (less than 1% of all bone tumors) that arise most commonly in the tibia.1 There is a predilection for adult men aged 20 to 50 years, with rare occurrences in children. These tumors are malignant, highly invasive, and have significant metastatic potential.2 A rarely seen, benign variant, known as osteofibrous dysplasia–like adamantinoma, is described in the literature, with fewer than 135 cases reported.3-5 This variant predominantly has benign characteristics of an osteofibrous dysplasia lesion but has the potential to transform into an adamantinoma.6 Osteofibrous dysplasia–like adamantinoma has been observed to regress with age and is also referred to as a regressing adamantinoma or differentiated adamantinoma.7

We report an uncommon case of an osteofibrous dysplasia–like adamantinoma of the tibia in a 15-year-old girl. We decided to observe the tumor with regular 3- to 6-month follow-ups. Osteofibrous dysplasia–like adamantinoma in our patient has remained stable for 2 years and has an excellent prognosis.8 We report this case for its rarity, its short-term stability, and significant treatment implications due to its potential to regress or develop into a malignant form. The patient and the patient’s guardian provided written informed consent for print and electronic publication of this case report.

Case Report

A healthy 15-year-old girl was referred to our institution for evaluation of anterior left knee pain. She had sustained a fall while playing basketball 3 months earlier and had been having left knee pain since that time. She did not have any swelling, catching, or locking in her left knee. She denied any recent fever, chills, night sweats, weight loss, nausea, vomiting, or diarrhea. On physical examination, her gait was normal and no swelling, erythema, or tenderness was noticed around the left knee.

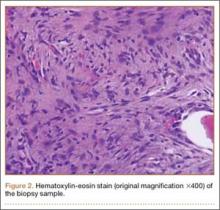

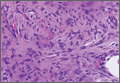

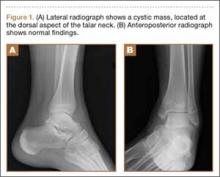

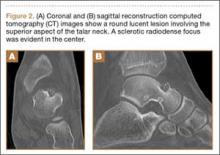

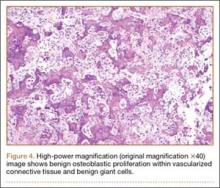

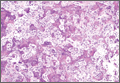

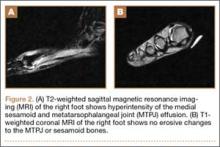

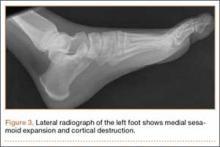

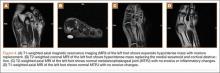

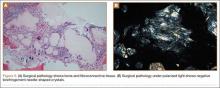

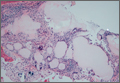

Plain radiographs revealed a heterogeneous lesion with sclerosis and thickening of the anteromedial cortex of the proximal left tibia (Figures 1A, 1B). A computed tomography (CT) scan of the abdomen, pelvis, and chest showed no osseous abnormalities. A whole-body bone scan showed activity in the anterior aspect of the left proximal tibia. No other areas of activity were noted. Magnetic resonance imaging of the left leg showed an elongated, multiloculated, enhancing mass arising from the anterolateral cortex and extending from the tibial tuberosity to the mid-diaphysis of the left tibia. Histologic examination of the CT-guided core needle biopsy specimen showed that the lesion was composed of dense fibrocollagenous tissue separating irregular bony trabeculae with osteoblastic and osteoclastic activity. There was no evidence of any atypical cells, necrosis, or significant mitotic activity. No epithelial cells were identified on hematoxylin-eosin (H&E) stain (Figure 2). However, immunohistochemical staining was positive for focal cytokeratin-positive epithelial cells (Figure 3). The lesion was diagnosed as an osteofibrous dysplasia–like adamantinoma on the basis of the radiographic and histologic findings. We elected nonoperative intervention given the benign nature of the lesion and its potential to regress. Given the possibility of sampling error and potential for progression, the patient was followed regularly at 3- to 6-month intervals over a 2-year period without disease progression.

Discussion

Osteofibrous dysplasia, osteofibrous dysplasia–like adamantinoma, and adamantinoma are rare fibro-osseous lesions that largely involve the midshaft of the tibia. Osteofibrous dysplasia accounts for 0.2% of primary bone tumors, whereas adamantinoma accounts for 0.1% to 0.5% of malignant bone tumors.9 Osteofibrous dysplasia is a benign lesion composed primarily of fibro-osseous tissue. Adamantinoma, however, is a slow-growing, low-grade, malignant biphasic tumor with intermingled epithelial and fibro-osseous components. It is an aggressive tumor that is locally invasive and can metastasize.2 Osteofibrous dysplasia–like adamantinoma (also known as differentiated or regressing adamantinoma) is a benign lesion like osteofibrous dysplasia but has features of both osteofibrous dysplasia and adamantinoma. Osteofibrous dysplasia–like adamantinoma may progress and become a malignant adamantinoma.6,10

The radiologic features of the 3 lesions are quite similar. It is not possible to distinguish between osteofibrous dysplasia and osteofibrous dysplasia–like adamantinoma based on imaging alone.9 Adamantinoma, being highly invasive, can be distinguished from osteofibrous dysplasia and osteofibrous dysplasia–like adamantinoma according to the extent of involvement of the medullary cavity seen on magnetic resonance imaging.9 Complete involvement of the medullary cavity is almost always seen in an adamantinoma. Involvement of the medullary cavity is minimal or absent in osteofibrous dysplasia and osteofibrous dysplasia–like adamantinoma lesions.

Tissue confirmation through biopsy is crucial for accurate diagnosis. A biopsy should always be performed on any suspicious lesion,3,6 and the fibro-osseous lesion should be treated as an adamantinoma if findings are equivocal. A biopsy also clearly distinguishes these lesions from benign fibrous cortical defects, which have a similar radiographic appearance. While open biopsy is the gold standard, minimally invasive techniques such as core needle biopsy and fine needle biopsy are increasingly used.6 Because of the higher risk of misdiagnosis with minimally invasive techniques, radiographic confirmation is highly recommended.5

Histologically, neither osteofibrous dysplasia nor osteofibrous dysplasia–like adamantinoma shows identifiable epithelial cells on H&E stain. However, the 2 lesions can be differentiated by immunohistochemical staining for cytokeratin. Osteofibrous dysplasia lesions exhibit diffuse staining, whereas osteofibrous dysplasia–like adamantinoma lesions show focal staining of small nests of epithelial cells. Adamantinoma, in comparison, exhibits a biphasic pattern on H&E stain, representing areas of epithelial and osteofibrous cells. Immunohistochemical staining for cytokeratin of an adamantinoma reveals large nests of epithelial cells.

The association between osteofibrous dysplasia, osteofibrous dysplasia–like adamantinoma, and adamantinoma is not clearly established. However, it is widely believed that these 3 lesions represent a spectrum of the same disease and are linearly related in disease progression, with osteofibrous dysplasia at the benign end of the spectrum, osteofibrous dysplasia–like adamantinoma as the intermediate form, and adamantinoma at the malignant end of the spectrum.11

Hazelbag and colleagues6 and Springfield and colleagues10 point out that osteofibrous dysplasia and osteofibrous dysplasia–like adamantinoma could be precursor lesions of adamantinoma. We found several studies in the literature that support and document progression from osteofibrous dysplasia and osteofibrous dysplasia–like adamantinoma to an adamantinoma.4,6,10,12 Other studies, however, showed no progression from either a benign osteofibrous dysplasia or an osteofibrous dysplasia–like adamantinoma lesion to a malignant adamantinoma. Park and colleagues13 described 41 cases of osteofibrous dysplasia that did not progress to adamantinoma. Kuruvilla and Steiner8 described 5 cases of osteofibrous dysplasia–like adamantinoma that showed no progression to adamantinoma. Additionally, our case has not progressed and has remained radiographically stable over a 2-year follow-up. Czerniak and colleagues7 and Ueda and colleagues14 postulated, based on histologic and immunohistochemical studies, that osteofibrous dysplasia–like adamantinoma might be a regressing form of an adamantinoma that is undergoing reparative processes that could result in complete elimination of all tumor cells.

In general, any lesion with absent to low malignant potential could be managed nonoperatively with periodic observation and without the need for surgical intervention. Thus, identification of a stable or nonprogressing osteofibrous dysplasia–like adamantinoma lesion has significant treatment implications. Campanacci and Laus15 at the Rizzoli Institute in Bologna, through long-term follow-up of their patients with osteofibrous dysplasia, found that most lesions had a tendency to regress spontaneously by puberty. They recommended that nonextensive osteofibrous dysplasia lesions should be observed, and surgery should be delayed until puberty. Gleason and colleagues16 also recommended nonoperative management of osteofibrous dysplasia lesions, with surgery used only for large, deforming, and highly invasive lesions. We recommend a similar treatment approach for osteofibrous dysplasia–like adamantinoma lesions.

Adamantinomas, however, are usually symptomatic, are highly invasive, have a high recurrence rate, and can metastasize.9 In these patients, a wide en bloc resection or amputation should be performed as soon as possible.11 Our case highlights that osteofibrous dysplasia–like adamantinoma lesions can occur in children and can remain stable, especially over the short term. Such lesions can be observed without surgical intervention.

1. Kanakaraddi SV, Nagaraj G, Ravinath TM. Adamantinoma of the tibia with late skeletal metastasis: an unusual presentation. J Bone Joint Surg Br. 2007;89(3):388-389.

2. Van Geel AN, Hazelbag HM, Slingerland R, Vermeulen MI. Disseminating adamantinoma of the tibia. Sarcoma. 1997;1(2):109-111.

3. Povysil C, Kohout A, Urban K, Horak M. Differentiated adamantinoma of the fibula: a rhabdoid variant. Skeletal Radiol. 2004;33(8):488-492.

4. Hatori M, Watanabe M, Hosaka M, Sasano H, Narita M, Kokubun S. A classic adamantinoma arising from osteofibrous dysplasia-like adamantinoma in the lower leg: a case report and review of the literature. Tohoku J Exp Med. 2006;209(1):53-59.

5. Khanna M, Delaney D, Tirabosco R, Saifuddin A. Osteofibrous dysplasia, osteofibrous dysplasia-like adamantinoma and adamantinoma: correlation of radiological imaging features with surgical histology and assessment of the use of radiology in contributing to needle biopsy diagnosis. Skeletal Radiol. 2008;37(12):1077-1084.

6. Hazelbag HM, Taminiau AH, Fleuren GJ, Hogendoorn PC. Adamantinoma of the long bones. A clinicopathological study of thirty-two patients with emphasis on histological subtype, precursor lesion, and biological behavior. J Bone Joint Surg Am. 1994;76(10):1482-1499.

7. Czerniak B, Rojas-Corona RR, Dorfman HD. Morphologic diversity of long bone adamantinoma. The concept of differentiated (regressing) adamantinoma and its relationship to osteofibrous dysplasia. Cancer. 1989;64(11):2319-2334.

8. Kuruvilla G, Steiner GC. Osteofibrous dysplasia-like adamantinoma of bone: a report of five cases with immunohistochemical and ultrastructural studies. Hum Pathol. 1998;29(8):809-814.

9. Bethapudi S, Ritchie DA, Macduff E, Straiton J. Imaging in osteofibrous dysplasia, osteofibrous dysplasia-like adamantinoma, and classic adamantinoma. Clin Radiol. 2014;69(2):200-208.

10. Springfield DS, Rosenberg AE, Mankin HJ, Mindell ER. Relationship between osteofibrous dysplasia and adamantinoma. Clin Orthop Relat Res. 1994;(309):234-244.

11. Most MJ, Sim FH, Inwards CY. Osteofibrous dysplasia and adamantinoma. J Am Acad Orthop Surg. 2010;18(6):358-366.

12. Lee RS, Weitzel S, Eastwood DM, et al. Osteofibrous dysplasia of the tibia. Is there a need for a radical surgical approach? J Bone Joint Surg Br. 2006;88(5):658-664.

13. Park YK, Unni KK, McLeod RA, Pritchard DJ. Osteofibrous dysplasia: clinicopathologic study of 80 cases. Hum Pathol. 1993;24(12):1339-1347.

14. Ueda Y, Roessner A, Bosse A, Edel G, Bocker W, Wuisman P. Juvenile intracortical adamantinoma of the tibia with predominant osteofibrous dysplasia-like features. Pathol Res Pract. 1991;187(8):1039-1043; discussion 1043-1044.

15. Campanacci M, Laus M. Osteofibrous dysplasia of the tibia and fibula. J Bone Joint Surg Am. 1981;63(3):367-375.

16. Gleason BC, Liegl-Atzwanger B, Kozakewich HP, et al. Osteofibrous dysplasia and adamantinoma in children and adolescents: a clinicopathologic reappraisal. Am J Surg Pathol. 2008;32(3):363-376.

Adamantinomas are rare primary malignant bone tumors (less than 1% of all bone tumors) that arise most commonly in the tibia.1 There is a predilection for adult men aged 20 to 50 years, with rare occurrences in children. These tumors are malignant, highly invasive, and have significant metastatic potential.2 A rarely seen, benign variant, known as osteofibrous dysplasia–like adamantinoma, is described in the literature, with fewer than 135 cases reported.3-5 This variant predominantly has benign characteristics of an osteofibrous dysplasia lesion but has the potential to transform into an adamantinoma.6 Osteofibrous dysplasia–like adamantinoma has been observed to regress with age and is also referred to as a regressing adamantinoma or differentiated adamantinoma.7

We report an uncommon case of an osteofibrous dysplasia–like adamantinoma of the tibia in a 15-year-old girl. We decided to observe the tumor with regular 3- to 6-month follow-ups. The lesion in our patient has remained stable for 2 years, and osteofibrous dysplasia–like adamantinoma has an excellent prognosis.8 We report this case for its rarity, its short-term stability, and its significant treatment implications, given the lesion's potential to regress or develop into a malignant form. The patient and the patient’s guardian provided written informed consent for print and electronic publication of this case report.

Case Report

A healthy 15-year-old girl was referred to our institution for evaluation of anterior left knee pain. She had sustained a fall while playing basketball 3 months earlier and had been having left knee pain since that time. She did not have any swelling, catching, or locking in her left knee. She denied any recent fever, chills, night sweats, weight loss, nausea, vomiting, or diarrhea. On physical examination, her gait was normal and no swelling, erythema, or tenderness was noticed around the left knee.

Plain radiographs revealed a heterogeneous lesion with sclerosis and thickening of the anteromedial cortex of the proximal left tibia (Figures 1A, 1B). A computed tomography (CT) scan of the abdomen, pelvis, and chest showed no osseous abnormalities. A whole-body bone scan showed activity in the anterior aspect of the left proximal tibia. No other areas of activity were noted. Magnetic resonance imaging of the left leg showed an elongated, multiloculated, enhancing mass arising from the anterolateral cortex and extending from the tibial tuberosity to the mid-diaphysis of the left tibia. Histologic examination of the CT-guided core needle biopsy specimen showed that the lesion was composed of dense fibrocollagenous tissue separating irregular bony trabeculae with osteoblastic and osteoclastic activity. There was no evidence of any atypical cells, necrosis, or significant mitotic activity. No epithelial cells were identified on hematoxylin-eosin (H&E) stain (Figure 2). However, immunohistochemical staining was positive for focal cytokeratin-positive epithelial cells (Figure 3). The lesion was diagnosed as an osteofibrous dysplasia–like adamantinoma on the basis of the radiographic and histologic findings. We elected nonoperative management given the benign nature of the lesion and its potential to regress. Given the possibility of sampling error and the potential for progression, the patient was followed regularly at 3- to 6-month intervals over a 2-year period, with no disease progression.

Discussion

Osteofibrous dysplasia, osteofibrous dysplasia–like adamantinoma, and adamantinoma are rare fibro-osseous lesions that largely involve the midshaft of the tibia. Osteofibrous dysplasia accounts for 0.2% of primary bone tumors, whereas adamantinoma accounts for 0.1% to 0.5% of malignant bone tumors.9 Osteofibrous dysplasia is a benign lesion composed primarily of fibro-osseous tissue. Adamantinoma, however, is a slow-growing, low-grade, malignant biphasic tumor with intermingled epithelial and fibro-osseous components. It is an aggressive tumor that is locally invasive and can metastasize.2 Osteofibrous dysplasia–like adamantinoma (also known as differentiated or regressing adamantinoma) is a benign lesion like osteofibrous dysplasia but has features of both osteofibrous dysplasia and adamantinoma. Osteofibrous dysplasia–like adamantinoma may progress and become a malignant adamantinoma.6,10
