FDA approves implantable device for central sleep apnea

The U.S. Food and Drug Administration on Oct. 6 approved an implantable device for the treatment of moderate to severe central sleep apnea.

The remedē System consists of a battery pack and small, thin wires placed under the skin in the upper chest area. The wires are inserted into the blood vessels in the chest to stimulate the phrenic nerve. The system monitors respiratory signals and, when it stimulates the nerve, the diaphragm moves to restore normal breathing.

The agency’s approval comes on the basis of study results showing that the system reduced the apnea–hypopnea index scores by 50% or more in 51% of patients studied. Control patients in the study saw an 11% reduction in their score.

Adverse events reported in the study included concomitant device interaction, implant site infection, and swelling and local tissue damage or pocket erosion. The remedē System is contraindicated for patients with active infection or who are known to require an MRI.

Spotting Sepsis Sooner

More than 1.5 million Americans develop sepsis each year, and at least 250,000 die of it. “Detecting sepsis early and starting immediate treatment is often the difference between life and death. It starts with preventing the infections that lead to sepsis,” said CDC Director Brenda Fitzgerald, MD, introducing the CDC’s Get Ahead of Sepsis campaign, which launched in August. “We created Get Ahead of Sepsis to give people the resources they need to help stop this medical emergency in its tracks.”

The campaign is an educational initiative for both the public and health care professionals in hospitals, home care, long-term care, and urgent care. For many patients, the CDC says, sepsis develops from an infection that begins outside the hospital. Health care professionals are not only in prime positions to monitor for signs and symptoms of sepsis in the health care setting—they can also help educate patients about things they can do to prevent sepsis. For instance, people with chronic conditions can take good care to avoid infections that could lead to sepsis.

The campaign website, www.cdc.gov/sepsis, provides fact sheets, infographics, brochures, and other materials to help spread the word.

Nutrition Index Helps Identify High-Risk Elderly Heart Patients

A “wealth of evidence” suggests that nutrition and immunologic status on admission are closely associated with the outcome of patients with cardiovascular disease, especially high-risk elderly patients, say researchers from Chinese People’s Liberation Army General Hospital, Beijing. The researchers note that malnutrition is an independent factor influencing post-myocardial infarction complications and mortality in geriatric patients with coronary artery disease (CAD). According to their study of 336 hospitalized patients with hypertension, the Controlling Nutritional Status (CONUT) score can help predict who is at highest risk.

Nutrition indexes are widely used. CONUT scores, which are calculated based on serum albumin concentration, total peripheral lymphocyte count, and total cholesterol concentration, have been found useful in a variety of areas, including cancer. The Geriatric Nutritional Risk Index (GNRI), although a relatively new index for nutrition assessment in the elderly, is the most-used tool to evaluate patients with chronic kidney disease, the researchers say. Both indexes are “widely applied” in evaluation of patients with tumors who are also undergoing dialysis. Some studies also have reported on GNRI as a prognostic factor in cardiovascular diseases.

The researchers conducted their study to assess the effect of nutrition status on survival in patients aged ≥ 80 years, with hypertension, measuring outcomes at 90 days postadmission. All patients had a history of CAD, 167 had type 2 diabetes, and 124 had anemia. Of the enrolled patients, 192 were admitted for respiratory tract infection, with a significantly high proportion of poor nutrition status. Five patients scored > 9 on the CONUT scale. A score of ≥ 5 indicated moderate to severe malnutrition.

During the 90-day follow-up, 27 patients died. No differences in systolic blood pressure were found. The surviving patients, however, showed increased body mass index, hemoglobin, and albumin levels, as well as lower diastolic blood pressure and fasting blood glucose. Surviving patients had improved GNRI scores and reduced CONUT scores, both of which indicated improved nutrition status. Respiratory tract infection, CONUT, and albumin were independent predictors of all-cause mortality.

However, only CONUT accurately predicted all-cause mortality among patients with hypertension during the 90-day follow-up. A CONUT score above 3.0 at admission predicted all-cause mortality with a sensitivity of 77.8% and specificity of 64.7%.
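To put the reported sensitivity and specificity in context, the sketch below backs out approximate patient counts from the figures given above (336 patients, 27 deaths, sensitivity 77.8%, specificity 64.7%). It assumes every enrolled patient had an admission CONUT score and that both rates were computed on the full cohort; the article does not state this, and the function names are illustrative only. The resulting counts and predictive values are a reader's estimate, not results reported by the researchers.

```python
def confusion_counts(n_total, n_events, sensitivity, specificity):
    """Estimate confusion-matrix counts from reported rates.

    Assumption: the rates apply to the whole cohort, so counts are
    recovered by rounding rate * group size to the nearest patient.
    """
    n_non_events = n_total - n_events
    true_pos = round(sensitivity * n_events)       # deaths flagged by the cutoff
    false_neg = n_events - true_pos                # deaths missed by the cutoff
    true_neg = round(specificity * n_non_events)   # survivors below the cutoff
    false_pos = n_non_events - true_neg            # survivors flagged anyway
    return true_pos, false_neg, true_neg, false_pos


def predictive_values(true_pos, false_neg, true_neg, false_pos):
    """Return (positive predictive value, negative predictive value)."""
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Figures taken from the article: 336 patients, 27 deaths at 90 days,
# sensitivity 77.8% and specificity 64.7% for a CONUT score above 3.0.
tp, fn, tn, fp = confusion_counts(336, 27, 0.778, 0.647)
ppv, npv = predictive_values(tp, fn, tn, fp)

print(f"TP={tp} FN={fn} TN={tn} FP={fp}")
print(f"PPV={ppv:.1%} NPV={npv:.1%}")
```

Under these assumptions, roughly 21 of the 27 patients who died and about 109 of the 309 survivors would have scored above the cutoff. Because deaths were uncommon in this cohort, a score above 3.0 would still correspond to a fairly low probability of death, while a score at or below 3.0 would make death within 90 days unlikely.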

Source:

Sun X, Luo L, Zhao X, Ye P. BMJ Open. 2017;7(9):e015649.

doi: 10.1136/bmjopen-2016-015649.

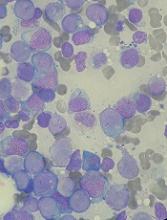

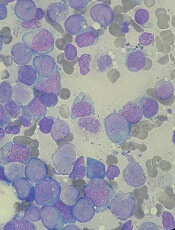

Team identifies genetic drivers of DLBCL

Research published in Cell has revealed 150 genetic drivers of diffuse large B-cell lymphoma (DLBCL).

Among these drivers are 27 genes newly implicated in DLBCL, 35 functional oncogenes, and 9 genes that can be targeted with existing drugs.

Researchers used these findings to create a prognostic model that, they say, outperformed existing risk predictors in DLBCL.

“This work provides a comprehensive road map in terms of research and clinical priorities,” said study author Sandeep Dave, MD, of Duke University in Durham, North Carolina.

“We have very good data now to pursue new and existing therapies that might target the genetic mutations we identified. Additionally, this data could also be used to develop genetic markers that steer patients to therapies that would be most effective.”

Dr Dave and his colleagues began this research by analyzing tumor samples from 1001 patients who had been diagnosed with DLBCL over the past decade and were treated at 12 institutions around the world. Of these, 313 patients had ABC DLBCL, 331 had GCB DLBCL, and the remaining 357 had unclassified DLBCL.

Using whole-exome sequencing, the researchers pinpointed 150 driver genes that were recurrently mutated in the DLBCL patients. This included 27 genes that, the researchers believe, had never before been implicated in DLBCL.

The team also found that ABC and GCB DLBCLs “shared the vast majority of driver genes at statistically indistinguishable frequencies.”

However, there were 20 genes that were differentially mutated between the 2 groups. For instance, EZH2, SGK1, GNA13, SOCS1, STAT6, and TNFRSF14 were more frequently mutated in GCB DLBCLs. And ETV6, MYD88, PIM1, and TBL1XR1 were more frequently mutated in ABC DLBCLs.

Essential genes

To identify genes essential to the development and maintenance of DLBCL, the researchers used CRISPR. The team knocked out genes in 6 cell lines—3 ABC DLBCLs (LY3, TMD8, and HBL1), 2 GCB DLBCLs (SUDHL4 and Pfeiffer), and 1 Burkitt lymphoma (BJAB) that phenotypically resembles GCB DLBCL.

This revealed 1956 essential genes. Knocking out these genes resulted in a significant decrease in cell fitness in at least 1 cell line.

The work also revealed 35 driver genes that, when knocked out, resulted in decreased viability of DLBCL cells, which classified them as functional oncogenes.

The researchers found that knockout of EBF1, IRF4, CARD11, MYD88, and IKBKB was selectively lethal in ABC DLBCL. And knockout of ZBTB7A, XPO1, TGFBR2, and PTPN6 was selectively lethal in GCB DLBCL.

In addition, the team noted that 9 of the driver genes are direct targets of drugs that are already approved or under investigation in clinical trials—MTOR, BCL2, SF3B1, SYK, PIM2, PIK3R1, XPO1, MCL1, and BTK.

Patient outcomes

The researchers also looked at the driver genes in the context of patient outcomes. The team found that mutations in MYC, CD79B, and ZFAT were strongly associated with poorer survival, while mutations in NF1 and SGK1 were associated with more favorable survival.

Mutations in KLHL14, BTG1, PAX5, and CDKN2A were associated with significantly poorer survival in ABC DLBCL, while mutations in CREBBP were associated with favorable survival in ABC DLBCL.

In GCB DLBCL, mutations in NFKBIA and NCOR1 were associated with poorer prognosis, while mutations in EZH2, MYD88, and ARID5B were associated with better prognosis.

Finally, the researchers developed a prognostic model based on combinations of genetic markers (the 150 driver genes) and gene expression markers (cell of origin, MYC, and BCL2).

The team found their prognostic model could predict survival more effectively than the International Prognostic Index, cell of origin alone, and MYC and BCL2 expression alone or together.

FDA grants drug orphan designation for AML

The US Food and Drug Administration (FDA) has granted orphan drug designation to PCM-075 for the treatment of patients with acute myeloid leukemia (AML).

PCM-075 is an oral adenosine triphosphate competitive inhibitor of the serine/threonine Polo-like kinase 1 (PLK1) enzyme, which appears to be overexpressed in several hematologic and solid tumor malignancies.

PCM-075 is being developed by Trovagene, Inc.

The company is initiating a phase 1b/2 trial of PCM-075 in combination with standard care (low-dose cytarabine or decitabine) in patients with AML (NCT03303339).

Trovagene has already completed a phase 1 dose-escalation study of PCM-075 in patients with advanced metastatic solid tumor malignancies. This study was recently published in Investigational New Drugs.

According to Trovagene, preclinical studies have shown that PCM-075 synergizes with more than 10 drugs used to treat hematologic and solid tumor malignancies, including FLT3 and HDAC inhibitors, taxanes, and cytotoxins.

Trovagene believes the combination of PCM-075 with other compounds has the potential for improved clinical efficacy in AML, non-Hodgkin lymphoma, castration-resistant prostate cancer, triple-negative breast cancer, and adrenocortical carcinoma.

About orphan designation

The FDA grants orphan designation to products intended to treat, diagnose, or prevent diseases/disorders that affect fewer than 200,000 people in the US.

The designation provides incentives for sponsors to develop products for rare diseases. This may include tax credits toward the cost of clinical trials, prescription drug user fee waivers, and 7 years of market exclusivity if the product is approved.

Rivaroxaban trial stopped early due to futility

The companies developing rivaroxaban have announced an early end to the phase 3 NAVIGATE ESUS study.

In this trial, researchers were evaluating the efficacy and safety of rivaroxaban for the secondary prevention of stroke and systemic embolism in patients with a recent embolic stroke of undetermined source (ESUS).

The study was stopped early after it became evident that rivaroxaban was unlikely to provide a benefit over aspirin.

Results showed comparable efficacy between rivaroxaban and aspirin and an increase in bleeding in the rivaroxaban arm, although bleeding rates were considered low overall.

The NAVIGATE ESUS trial enrolled 7214 patients from 459 sites across 31 countries. Patients were randomized to receive either rivaroxaban at 15 mg once daily or aspirin at 100 mg once daily.

The primary efficacy endpoint was a composite of stroke and systemic embolism. The primary safety endpoint was major bleeding according to the criteria of the International Society on Thrombosis and Haemostasis.

A complete analysis of NAVIGATE ESUS data is expected to be presented in 2018.

About rivaroxaban

Rivaroxaban was discovered by Bayer, which is developing the drug with Janssen Research & Development, LLC.

Rivaroxaban is approved for use in more than 130 countries for a total of 7 indications:

- The prevention of stroke and systemic embolism in adults with non-valvular atrial fibrillation and 1 or more risk factors

- The treatment of pulmonary embolism (PE) in adults

- The treatment of deep vein thrombosis (DVT) in adults

- The prevention of recurrent PE and DVT in adults

- The prevention of venous thromboembolism (VTE) in adults undergoing elective hip replacement surgery

- The prevention of VTE in adults undergoing elective knee replacement surgery

- The prevention of atherothrombotic events (cardiovascular death, myocardial infarction, or stroke) after an acute coronary syndrome in adults with elevated cardiac biomarkers and no prior stroke or transient ischemic attack when co-administered with acetylsalicylic acid alone or with acetylsalicylic acid plus clopidogrel or ticlopidine.

How to Interpret Positive Troponin Tests in CKD

Q) Recently, when I have sent my patients with chronic kidney disease (CKD) to the emergency department (ED) for complaints of chest pain or shortness of breath, their troponin levels are high. I know CKD increases risk for cardiovascular disease, but I find it hard to believe that every CKD patient is having an MI. What gives?

Cardiovascular disease remains the most common cause of death in patients with CKD, accounting for 45% to 50% of all deaths. Therefore, accurate diagnosis of acute myocardial infarction (AMI) in this patient population is vital to ensure prompt identification and treatment.1,2

Cardiac troponins are the gold standard for detecting myocardial injury in patients presenting to the ED with suggestive symptoms.1 But the chronic baseline elevation in serum troponin levels among patients with CKD often results in a false-positive reading, making the detection of AMI difficult.1

With the recent introduction of high-sensitivity troponin assays, as many as 97% of patients on hemodialysis exhibit elevated troponin levels; this is also true for patients with CKD, on a sliding scale (lower kidney function = higher baseline troponins).2 The use of high-sensitivity testing has increased substantially in the past 15 years, and it is expected to become the benchmark for troponin evaluation. While older troponin tests had a false-positive rate of 30% to 85% in patients with stage 5 CKD, the newer troponin tests display elevated troponins in almost 100% of these patients.1,2

Numerous studies have been conducted to determine the best way to interpret positive troponin tests in patients with CKD to ensure an accurate diagnosis of AMI.2 One study determined that a 20% increase in troponin levels was a more accurate determinant of AMI in patients with CKD than one isolated positive level.3 Another study demonstrated that serial troponin measurements conducted over time yielded higher diagnostic accuracy than one measurement above the 99th percentile.4

The American College of Cardiology Foundation task force found that monitoring changes in troponin concentration over time (3-6 h) is more accurate than a single elevated troponin when diagnosing AMI in symptomatic patients.3 Correlation between elevated troponin levels and clinical suspicion proved helpful in determining the significance of troponin results and the probability of AMI in patients with CKD.2
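
The serial-measurement approach described above comes down to simple arithmetic on two values drawn a few hours apart. The following is a minimal Python sketch under stated assumptions: the troponin values are hypothetical, the function names are illustrative, and the 20% threshold is taken only from the study mentioned above. It shows the calculation and is not a validated diagnostic rule.

```python
def relative_change(baseline, follow_up):
    """Return the fractional change from baseline to follow_up."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (follow_up - baseline) / baseline


def exceeds_delta(baseline, follow_up, threshold=0.20):
    """True when the rise between two serial values meets the threshold.

    The 0.20 default mirrors the 20% increase mentioned in the text;
    it is an illustrative assumption, not a validated cutoff.
    """
    return relative_change(baseline, follow_up) >= threshold


# Hypothetical values (arbitrary units), drawn 3 hours apart.
stable = exceeds_delta(50.0, 52.0)  # 4% rise: elevated but essentially flat
rising = exceeds_delta(50.0, 65.0)  # 30% rise: meets the 20% threshold
print(stable, rising)
```

In this sketch both patients start with the same elevated baseline, and only the second shows the kind of change over time that the serial approach looks for.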

The significance and interpretation of elevated troponin levels in patients with CKD remains an important topic for further study, as cardiovascular disease continues to be the leading cause of mortality in patients with kidney dysfunction.1,2 More definitive studies need to be conducted on patients with CKD as high-sensitivity troponin assay testing becomes standard for diagnosing AMI.

So, the reason you see more positive troponin results in your CKD population is due to both the increased accuracy of the newer tests and the fact that CKD often causes a false-positive result. Monitoring your patients with serial troponins for at least three hours is essential to confirm or rule out an AMI. —MS-G

Marlene Shaw-Gallagher, MS, PA-C

University of Detroit Mercy, Michigan

Division of Nephrology, University of Michigan, Ann Arbor

1. Robitaille R, Lafrance JP, Leblanc M. Altered laboratory findings associated with end-stage renal disease. Semin Dial. 2006;19(5):373.

2. Howard CE, McCullough PA. Decoding acute myocardial infarction among patients on dialysis. J Am Soc Nephrol. 2017;28(5):1337-1339.

3. Newby LK, Jesse RL, Babb JD, et al. ACCF 2012 expert consensus document on practical clinical considerations in the interpretation of troponin elevations: a report of the American College of Cardiology Foundation task force on Clinical Expert Consensus Documents. J Am Coll Cardiol. 2012;60(23):2427-2463.

4. Mahajan VS, Jarolim P. How to interpret elevated cardiac troponin levels. Circulation. 2011;124:2350-2354.

U.S. measles incidence since 2001 is low but increasing

Measles incidence in the United States since 2001 has been low but is increasing, especially in the very young.

Maintaining high vaccine coverage therefore remains essential, concluded the authors of an analysis of confirmed U.S. measles cases reported from 2001 to 2015, after endemic measles was eliminated nationwide in 2000.

Incidence of measles was highest in infants aged 6-11 months, followed by toddlers aged 12-15 months. Measles rates fell with age, starting at 16 months, Nakia S. Clemmons and her associates of the division of viral diseases at the Centers for Disease Control and Prevention, Atlanta, said in a research letter in JAMA (2017 Oct 3;318[13]:1279-81).

Incidence per million population rose over time, from 0.28 in 2001 to 0.56 in 2015. The proportion of imported cases decreased from 47% in 2001 to 15% in 2015, and the proportion of U.S. measles cases in vaccinated patients decreased from 30% in 2001 to 20% in 2015.

“The concurrent increase in incidence and declines in the proportion of imported and vaccinated cases (signifying relative increases in U.S.-acquired and unvaccinated cases) may suggest increased susceptibility and transmission after introductions in certain subpopulations,” the investigators said.

“The declining incidence with age, the high proportion of unvaccinated cases, and the decline in the proportion of vaccinated cases despite rate increases suggest that failure to vaccinate, rather than failure of vaccine performance, may be the main driver of measles transmission, emphasizing the importance of maintaining high vaccine coverage,” they added.

FROM JAMA

Treatment of hemangioma with brand-name propranolol tied to fewer dosing errors

CHICAGO – Among physicians using generic propranolol to treat infantile hemangioma, 30% reported at least one patient experienced a miscalculation dosing error, a new survey showed. Among respondents who prescribed Hemangeol (Pierre Fabre Pharmaceuticals), 10% reported a similar error. The errors were made by either a provider or caregiver.

Confusion may have contributed to a second source of errors, said Elaine Siegfried, MD, professor of pediatrics and dermatology at St. Louis University in Missouri. Generic propranolol is supplied as a 20-mg/5-mL oral solution and a 40-mg/5-mL oral solution. “Any time you have more than one formulation, it’s a nidus for dispensing error by the pharmacy.”

“So if a doctor prescribes [the lower dose], which is what we always do for safety, and they get 40 [mg], the [patient] can become hypoglycemic, hypotensive, or bradycardic,” Dr. Siegfried said. “That’s not good.”

Dr. Siegfried and colleagues assessed survey responses from 223 physicians. The majority, 90%, reported prescribing generic propranolol to treat infantile hemangioma in the past. Sixty percent reported also prescribing the brand-name formulation approved by the Food and Drug Administration in 2014. Most of those who completed the survey, 70%, were pediatric dermatologists; general dermatologists, pediatric otolaryngologists, and other specialists also participated.

A total of 18% of physicians surveyed reported a dispensing error associated with use of generic propranolol. Dr. Siegfried said such errors are not possible with the branded formulation because it is available only in a single concentration, a 4.28 mg/mL oral solution. She added that one central specialty pharmacy dispenses Hemangeol, further reducing the likelihood of errors.
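
The dispensing problem described above is a matter of concentration arithmetic. The following is a minimal Python sketch, assuming a hypothetical prescribed dose of 4 mg and illustrative function names. It only works through the numbers quoted in the article and is not dosing guidance.

```python
def mg_per_ml(total_mg, total_ml):
    """Convert a labeled strength (mg per volume) to mg per mL."""
    return total_mg / total_ml


def volume_for_dose(dose_mg, concentration):
    """Volume in mL needed to deliver dose_mg at a given mg/mL."""
    return dose_mg / concentration


def dose_delivered(volume_ml, concentration):
    """Dose in mg actually delivered by volume_ml at a given mg/mL."""
    return volume_ml * concentration


# The two generic strengths quoted in the article.
low = mg_per_ml(20, 5)   # 4.0 mg/mL
high = mg_per_ml(40, 5)  # 8.0 mg/mL

# Hypothetical prescription: 4 mg, measured for the lower strength.
intended_mg = 4.0
volume = volume_for_dose(intended_mg, low)  # 1.0 mL

# If the pharmacy dispenses the higher strength and the caregiver
# draws up the same volume, the delivered dose doubles.
actual_mg = dose_delivered(volume, high)    # 8.0 mg
print(low, high, volume, actual_mg)
```

With only one concentration on the market, as with the 4.28 mg/mL branded solution, the same measured volume cannot map to two different doses.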

Addressing cost concerns

“When this [branded] drug became available, I wondered why everyone was not prescribing it,” Dr. Siegfried said.

“One of the pushbacks with this drug is that people didn’t want to prescribe it because they thought it was too expensive.” She acknowledged the higher cost, but added that the manufacturer has a program to provide the agent free of charge to families without health insurance who cannot afford the medicine. She added, “People with private insurance do have higher copays, but insurance generally pays for most of it, depending on the plan.”

Dr. Siegfried also emphasized that the manufacturer invested considerable time and money to bring the agent and its specific pediatric indication to market, generating scientific data on its safety and efficacy along the way. In contrast, generic propranolol has been available in the United States for decades as a beta-blocker. The discovery that the agent also could effectively treat infantile hemangioma was serendipitous, not based on preclinical efficacy, safety, or dosing studies.

An additional benefit of the single-concentration branded formulation is that pediatric clinicians might be more comfortable using this agent, Dr. Siegfried said. She described the package insert instructions as straightforward and easy to follow. Also, because a shortage of pediatric dermatologists in certain parts of the country can mean a longer wait to see one, having general pediatricians or family physicians gain proficiency in administering the medication could mean earlier treatment of hemangioma. “If you have to wait to get into a specialist, that can delay treatment. The caveat about hemangiomas is the earlier you treat them, the more effective the treatment is.”

“Using propranolol to treat hemangiomas is probably one of the biggest positive changes in my practice. It was very difficult to treat hemangiomas with steroids, vincristine, or other alternatives. Now treatment is ‘cookbook’ and well-tolerated. It’s amazing,” Dr. Siegfried said. “I only prescribe the branded propranolol because of the specialty pharmacy issue, because of the formulation issue, and because we have data that Pierre Fabre paid for,” she added.

Dr. Siegfried is a consultant for Pierre Fabre and served as a principal investigator on phase 3 research. The company did not sponsor the current study, but a coauthor and employee of Pierre Fabre assisted with the logistics of the survey.

CHICAGO – Among physicians using generic propranolol to treat infantile hemangioma, 30% reported at least one patient experienced a miscalculation dosing error, a new survey showed. Among respondents who prescribed Hemangeol (Pierre Fabre Pharmaceuticals), 10% reported a similar error. The errors were made by either a provider or caregiver.

Confusion may have contributed to a second source of errors, said Elaine Siegfried, MD, professor of pediatrics and dermatology at St. Louis University in Missouri. Generic propranolol is supplied as a 20-mg/5-mL oral solution and a 40-mg/5-mL oral solution. “Any time you have more than one formulation, it’s a nidus for dispensing error by the pharmacy.”

“So if a doctor prescribes [the lower dose], which is what we always do for safety, and they get 40 [mg], the [patient] can become hypoglycemic, hypotensive, or bradycardic,” Dr. Siegfried said. “That’s not good.”

Dr. Siegfried and colleagues assessed survey responses from 223 physicians. The majority, 90%, reported prescribing generic propranolol to treat infantile hemangioma in the past. Sixty-percent reported also prescribing the brand name formulation approved by the Food and Drug Administration in 2014. Most of those who completed the survey, 70%, were pediatric dermatologists; general dermatologists, pediatric otolaryngologists, and other specialists also participated.

A total of 18% of physicians surveyed reported a dispensing error associated with use of generic propranolol. Dr. Siegfried said such errors are not possible with the branded formulation because it is available only in a single concentration, a 4.28 mg/mL oral solution. She added that one central specialty pharmacy dispenses Hemangeol, further reducing the likelihood of errors.

Addressing cost concerns

“When this [branded] drug became available, I wondered why everyone was not prescribing it,” Dr. Siegfried said.

“One of the pushbacks with this drug is that people didn’t want to prescribe it because they thought it was too expensive.” She acknowledged the higher cost, but added the manufacturer has a program to provide the agent free-of-charge to families without health insurance who cannot afford the medicine. She added, “People with private insurance do have higher copays, but insurance generally pays for most of it, depending on the plan.”

Dr. Siegfried also emphasized that the manufacturer invested considerable time and money to bring the agent and its specific pediatric indication to market, generating scientific data on its safety and efficacy along the way. In contrast, generic propranolol has been available in the United States for decades as a beta-blocker. The discovery that the agent also could effectively treat infantile hemangioma was serendipitous, not based on preclinical efficacy, safety, or dosing studies.

An additional benefit of the single-concentration branded formulation is pediatric clinicians might be more comfortable using this agent, Dr. Siegfried said. She described the package insert instructions as straightforward and easy to follow. Also, given a sometimes longer wait to see a pediatric dermatologist because of their shortage in certain parts of the country, having general pediatricians or family physicians gain proficiency in administering the medication could mean earlier treatment of hemangioma. “If you have to wait to get into a specialist, that can delay treatment. The caveat about hemangiomas is the earlier you treat them, the more effective the treatment is.”

“Using propranolol to treat hemangiomas is probably one of the biggest positive changes in my practice. It was very difficult to treat hemangiomas with steroids, vincristine, or other alternatives. Now treatment is ‘cookbook’ and well-tolerated. It’s amazing,” Dr. Siegfried said. “I only prescribe the branded propranolol because of the specialty pharmacy issue, because of the formulation issue, and because we have data that Pierre Fabre paid for,” she added.

Dr. Siegfried is a consultant for Pierre Fabre and served as a principle investigator on phase 3 research. The company did not sponsor the current study, but a coauthor and employee of Pierre Fabre assisted with the logistics of the survey.

CHICAGO – Among physicians using generic propranolol to treat infantile hemangioma, 30% reported at least one patient experienced a miscalculation dosing error, a new survey showed. Among respondents who prescribed Hemangeol (Pierre Fabre Pharmaceuticals), 10% reported a similar error. The errors were made by either a provider or caregiver.

Confusion may have contributed to a second source of errors, said Elaine Siegfried, MD, professor of pediatrics and dermatology at St. Louis University in Missouri. Generic propranolol is supplied as a 20-mg/5-mL oral solution and a 40-mg/5-mL oral solution. “Any time you have more than one formulation, it’s a nidus for dispensing error by the pharmacy.”

“So if a doctor prescribes [the lower dose], which is what we always do for safety, and they get 40 [mg], the [patient] can become hypoglycemic, hypotensive, or bradycardic,” Dr. Siegfried said. “That’s not good.”

Dr. Siegfried and colleagues assessed survey responses from 223 physicians. The majority, 90%, reported prescribing generic propranolol to treat infantile hemangioma in the past. Sixty-percent reported also prescribing the brand name formulation approved by the Food and Drug Administration in 2014. Most of those who completed the survey, 70%, were pediatric dermatologists; general dermatologists, pediatric otolaryngologists, and other specialists also participated.

A total of 18% of physicians surveyed reported a dispensing error associated with use of generic propranolol. Dr. Siegfried said such errors are not possible with the branded formulation because it is available only in a single concentration, a 4.28 mg/mL oral solution. She added that one central specialty pharmacy dispenses Hemangeol, further reducing the likelihood of errors.

Addressing cost concerns

“When this [branded] drug became available, I wondered why everyone was not prescribing it,” Dr. Siegfried said.

“One of the pushbacks with this drug is that people didn’t want to prescribe it because they thought it was too expensive.” She acknowledged the higher cost, but added that the manufacturer has a program to provide the agent free of charge to families without health insurance who cannot afford the medicine. She added, “People with private insurance do have higher copays, but insurance generally pays for most of it, depending on the plan.”

Dr. Siegfried also emphasized that the manufacturer invested considerable time and money to bring the agent and its specific pediatric indication to market, generating scientific data on its safety and efficacy along the way. In contrast, generic propranolol has been available in the United States for decades as a beta-blocker. The discovery that the agent also could effectively treat infantile hemangioma was serendipitous, not based on preclinical efficacy, safety, or dosing studies.

An additional benefit of the single-concentration branded formulation is that pediatric clinicians might be more comfortable using this agent, Dr. Siegfried said. She described the package insert instructions as straightforward and easy to follow. Also, because a shortage of pediatric dermatologists in certain parts of the country can mean a longer wait for an appointment, having general pediatricians or family physicians gain proficiency in administering the medication could mean earlier treatment of hemangioma. “If you have to wait to get into a specialist, that can delay treatment. The caveat about hemangiomas is the earlier you treat them, the more effective the treatment is.”

“Using propranolol to treat hemangiomas is probably one of the biggest positive changes in my practice. It was very difficult to treat hemangiomas with steroids, vincristine, or other alternatives. Now treatment is ‘cookbook’ and well-tolerated. It’s amazing,” Dr. Siegfried said. “I only prescribe the branded propranolol because of the specialty pharmacy issue, because of the formulation issue, and because we have data that Pierre Fabre paid for,” she added.

AT AAP 2017

Key clinical point: Although more costly than generics, Hemangeol (Pierre Fabre) could reduce safety concerns for treating infantile hemangioma.

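Major finding: Among physicians using generic propranolol, 30% reported at least one patient experienced a miscalculation dosing error, compared with 10% of those who prescribed Hemangeol.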

Data source: Based on survey responses from 223 physicians, 70% of whom were pediatric dermatologists.

Disclosures: Dr. Siegfried is a consultant for Pierre Fabre and served as a principal investigator on phase 3 research. The company did not sponsor the current study, but a coauthor and employee of Pierre Fabre assisted with the logistics of the survey.

Should we stop administering the influenza vaccine to pregnant women?

EXPERT COMMENTARY

Influenza can be a serious, even life-threatening infection, especially in pregnant women and their newborn infants.1 For that reason, the Centers for Disease Control and Prevention (CDC) and the American College of Obstetricians and Gynecologists (ACOG) strongly recommend that all pregnant women receive the inactivated influenza vaccine at the start of each flu season, regardless of trimester of exposure.

The most widely used vaccine in the United States is the inactivated quadrivalent vaccine, which is intended for intramuscular administration in a single dose. The 2017-2018 version of this vaccine includes 2 influenza A antigens and 2 influenza B antigens. The first of the A antigens differs from last year's vaccine. The other 3 antigens are the same as in the 2016-2017 vaccine:

- A/Michigan/45/2015 (H1N1) pdm09-like virus

- A/Hong Kong/4801/2014 (H3N2)-like virus

- B/Brisbane/60/2008-like virus

- B/Phuket/3073/2013-like virus (B/Yamagata)

Several recent reports in large, diverse populations2-5 have demonstrated that the vaccine does not increase the risk of spontaneous abortion, stillbirth, preterm delivery, or congenital anomalies. Therefore, a recent report by Donahue and colleagues6 is surprising and is certainly worthy of our attention.

Details of the study

Donahue and colleagues, experienced investigators, were tasked by the CDC with using information from the Vaccine Safety Datalink to specifically assess the safety of the influenza vaccine when administered early in pregnancy. They evaluated 485 women who had experienced a spontaneous abortion in 1 of 2 time periods: September 1, 2010 to April 28, 2011 and September 1, 2011 to April 28, 2012. These women were matched by last menstrual period with controls who subsequently had a liveborn infant or stillbirth at greater than 20 weeks of gestation. The exposure of interest was receipt of the monovalent H1N1 vaccine (H1N1pdm09), the inactivated trivalent vaccine (A/California/7/2009 H1N1 pdm09-like, A/Perth/16/2009 H3N2-like, and B/Brisbane/60/2008-like), or both in the 28 days immediately preceding the spontaneous abortion. The investigators also considered 2 other windows of exposure: 29 to 56 days and greater than 56 days. In addition, they controlled for the following potential confounding variables: maternal age, smoking history, presence of type 1 or 2 diabetes, prepregnancy BMI, and previous health care utilization.

Cases were significantly older than controls. They also were more likely to be African-American, to have had a history of greater than or equal to 2 spontaneous abortions, and to have smoked during pregnancy. The median gestational age at the time of spontaneous abortion was 7 weeks. Overall, the adjusted odds ratio (aOR) of spontaneous abortion within the 1- to 28-day window was 2.0 (95% confidence interval [CI], 1.1-3.6). There was not even a weak association in the other 2 windows of exposure. However, in women who received the pH1N1 vaccine in the previous flu season, the aOR was 7.7 (95% CI, 2.2-27.3). When women who had experienced 2 or more spontaneous abortions were excluded, the aOR remained significantly elevated at 6.5 (95% CI, 1.7-24.3). The aOR was 1.3 (95% CI, 0.7-2.7) in women who were not vaccinated with the pH1N1 vaccine in the previous flu season.
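One way to read the figures above is to check whether each 95% CI excludes 1.0 and to recover the approximate standard error on the log-odds scale. The sketch below uses only the values reported in this commentary; the function names are illustrative, and the standard-error calculation assumes the interval is symmetric on the log scale (the usual construction for odds ratios, though not something the commentary states).

```python
import math


def ci_excludes_one(lower: float, upper: float) -> bool:
    """True when the confidence interval lies entirely above or below 1.0."""
    return lower > 1.0 or upper < 1.0


def log_scale_se(lower: float, upper: float, z: float = 1.96) -> float:
    """Approximate standard error of ln(OR), assuming the 95% CI was built
    as ln(OR) +/- z * SE (an assumption, not stated in the source)."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# (aOR, lower bound, upper bound) as reported in the commentary.
reported = {
    "overall, 1- to 28-day window": (2.0, 1.1, 3.6),
    "vaccinated in previous season": (7.7, 2.2, 27.3),
    "previous season, excluding >=2 prior losses": (6.5, 1.7, 24.3),
    "not vaccinated in previous season": (1.3, 0.7, 2.7),
}

for label, (aor, lower, upper) in reported.items():
    flag = "excludes 1.0" if ci_excludes_one(lower, upper) else "includes 1.0"
    se = log_scale_se(lower, upper)
    print(f"{label}: aOR {aor} ({lower}-{upper}), CI {flag}, SE(ln OR) ~ {se:.2f}")
```

The wide intervals around the 7.7 and 6.5 estimates correspond to large standard errors, which is consistent with the small number of women vaccinated in consecutive seasons.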

Donahue and colleagues6 offered several possible explanations for their observations. They noted that the pH1N1 vaccine seemed to cause at least mild increases in pro-inflammatory cytokines, particularly in pregnant compared with nonpregnant women. In addition, infection with the pH1N1 virus or vaccination with the pH1N1 vaccine induces an increase in T helper type-1 cells, which exert a pro-inflammatory effect. Excessive inflammation, in turn, may cause spontaneous abortion.

Study limitations

This study has several important limitations. First, the vaccine used in the investigation is not identical to the one used most commonly today. Second, although the number of women with spontaneous abortions is relatively large, the number who received the pH1N1-containing vaccine in consecutive years was relatively small. Third, this case-control study was able to estimate an odds ratio for the adverse outcome, but it could not prove causation, nor could it provide a precise estimate of absolute risk for a spontaneous abortion following the influenza vaccine. Finally, the authors, understandably, were unable to control for all the myriad factors that may increase the risk for spontaneous abortion.

With these limitations in mind, I certainly concur with the recommendations of the CDC and ACOG7 to continue our practice of routinely offering the influenza vaccine to virtually all pregnant women at the beginning of the flu season. For the vast majority of patients, the benefit of vaccination for both mother and baby outweighs the risk of an adverse effect. Traditionally, allergy to any of the vaccine components, principally egg protein and/or mercury, was considered a contraindication to vaccination. However, one trivalent vaccine preparation (Flublok, Protein Sciences Corporation) is now available that does not contain egg protein, and 3 trivalent preparations and at least 6 quadrivalent preparations are available that do not contain thimerosal (mercury). Therefore, allergy should rarely be a contraindication to vaccination.

In order to exercise an abundance of caution, I will not offer this vaccination in the first trimester to women who appear to be at increased risk for early pregnancy loss (women with spontaneous bleeding or a prior history of early loss). In these individuals, I will defer vaccination until the second trimester. I will also eagerly await the results of another CDC-sponsored investigation designed to evaluate the risks of spontaneous abortion in women who were vaccinated consecutively in the 2012–2013, 2013–2014, and 2014–2015 influenza seasons.6

-- Patrick Duff, MD

1. Louie JK, Acosta M, Jamieson DJ, Honein MA; California Pandemic (H1N1) Working Group. Severe 2009 H1N1 influenza in pregnant and postpartum women in California. N Engl J Med. 2010;362(1):27–35.

2. Polyzos KA, Konstantelias AA, Pitsa CE, Falagas ME. Maternal influenza vaccination and risk for congenital malformations: a systematic review and meta-analysis. Obstet Gynecol. 2015;126(5):1075–1084.

3. Kharbanda EO, Vazquez-Benitez G, Lipkind H, Naleway A, Lee G, Nordin JD; Vaccine Safety Datalink Team. Inactivated influenza vaccine during pregnancy and risks for adverse obstetric events. Obstet Gynecol. 2013;122(3):659–667.

4. Sheffield JS, Greer LG, Rogers VL, et al. Effect of influenza vaccination in the first trimester of pregnancy. Obstet Gynecol. 2012;120(3):532–537.

5. American College of Obstetricians and Gynecologists Committee on Obstetric Practice. ACOG Committee Opinion No. 468: influenza vaccination during pregnancy. Obstet Gynecol. 2010;116(4):1006–1007.

6. Donahue JG, Kieke BA, King JP, et al. Association of spontaneous abortion with receipt of inactivated influenza vaccine containing H1N1pdm09 in 2010-11 and 2011-12. Vaccine. 2017;35(40):5314–5322.

7. Flu vaccination and possible safety signal. CDC website. https://www.cdc.gov/flu/professionals/vaccination/vaccination-possible-safety-signal.html. Updated September 13, 2017. Accessed October 2, 2017.
