Product Update

LUTECH LT-300 HD FOR COLPOSCOPY

Background. In March 1924, the colposcope was introduced to evaluate the portio of the cervix by Hans Hinselmann in Germany after years of work with the famous lens manufacturer Leitz.1 Although its adoption as a standard tool for evaluating lower genital tract neoplasia was protracted, today it sits as a cornerstone technology in gynecology, and every ObGyn provider has been trained to perform colposcopic exams that include visualizing the cervix, vagina, and vulva as well as taking biopsies. In December 2000, after 75 years of glass lens technology, Welch-Allyn (Skaneateles Falls, New York) introduced the first video colposcope, shepherding the field into the 21st century with only limited traction. Now, Lutech is entering the fray hoping to further nudge traditionalists into the digital age.

Design/Functionality. The Lutech LT-300 HD is built around a 2.13-megapixel Sony Exmor CMOS (complementary metal-oxide semiconductor) camera that provides high-definition optical magnification of 1-30X at working distances between 5.1 and 15.7 inches. Illumination comes from a circular cool LED array that offers 3000 lx of white light, with an adjustable green filter to allow for contrast. The colposcope comes with either a vertical stand or a swing arm stand and has both HDMI and USB 3.0 video output so that the system can be attached to either a stand-alone monitor or a computer (not included). The colposcope also comes in a standard definition configuration (LT-300 SD), but I did not trial that model because the price difference did not seem to justify the potentially lower resolution.

In my experience with its use, the Lutech LT-300 HD was pretty excellent. Being a man and a doctor, I refused the online training session that comes free with the colposcope, assuming I could figure it out on my own. My assumption was mostly true, but there were definitely some tips and tricks that would have made my life easier had I not been so stiff-necked. That said, the biggest adjustment is getting used to looking at a screen and not having to look through eyepieces. The picture output is great and, as a patient (or student) teaching tool, it is phenomenal. Also, because it is digital, the image capture features allow for image importation into notes (although the process is clunky and requires workarounds when using Epic).

Innovation. From an innovation point of view, I am not sure that Lutech re-invented fire since, in essence, the LT-300 HD is a modified CMOS video camera. But the company did do a nice job bringing together a lot of existing technologies into a highly functional product. I would love to see better integration with some of the larger electronic medical records (EMRs), but I suspect the barriers lie with the EMR companies rather than with Lutech, so I am giving them a pass on that front.

Summary. At its core, a colposcope is simply a tool with which to obtain a magnified view of the cervix, vagina, and/or vulva. Prior to the advent and proliferation of CMOS camera technology, the most readily available means of accomplishing this was to employ glass lenses. But that was then, and this is now; CMOS technology is just better, cheaper, and more versatile. I no longer turn my head to look over my shoulder while backing up my car; I use the back-up camera. My Kodak Instamatic has given way to my iPhone. And now, my incredibly heavy, unwieldy Leisegang colposcope has been replaced by a lightweight camera on a stand that I can easily move from room to room. I won’t lie, though: it still seems weird to not look through eyepieces and work the focus knobs, but I am happy with the change. My patients can now see what I am looking at and better understand their diagnosis (if they want), and my notes are prettier. Onward march of progress.

Reference

1. Fusco E, Padula F, Mancini E, et al. History of colposcopy: a brief biography of Hinselmann. J Prenat Med. 2008;2:19-23.

DTR MEDICAL CERVICAL ROTATING BIOPSY PUNCH

The single-use DTR Medical Cervical Rotating Biopsy Punch from Innovia Medical (Swansea, United Kingdom) “works great” and “is reasonably cost-effective to replace reusables.”

Background. Integral to every colposcopic examination is the potential need to biopsy abnormal-appearing tissues. To accomplish this task, numerous punch-style biopsy devices have been developed in a variety of jaw shapes and styles, crafted from materials ranging from stainless steel to titanium to ceramic, all with the same ultimate goal: get a piece of tissue from the cervix as easily as possible.

Design/Functionality. The DTR Medical Cervical Rotating Biopsy Punch is a single-use sterile device that comes packaged as 10 per box. It features Kevorkian-style “stronger than Titanium” jaws that yield a 3.0 mm x 7.5 mm sample; the jaws are attached to a metal shaft that can rotate 360°. The shaft inserts into a lightweight plastic pistol-grip style handle. From tip to handle, the device measures 36.5 cm (14.125 in).

In my experience with its use, the DTR Medical Cervical Rotating Biopsy Punch performed flawlessly. Its relatively low-profile jaws allowed for unobstructed access to biopsy sites and the ability to rotate the jaws was a big plus. The “stronger than Titanium” jaws consistently yielded the exact biopsies I wanted, like a knife going through butter.

Innovation. From an innovation standpoint, the DTR Medical Cervical Rotating Biopsy Punch is more of an engineering “duh” than “wow,” but it works great so who cares that it’s not a fusion reactor. That said, the innovative part from Innovia Medical is their ability to make such a high-quality biopsy device and sell it at a price that makes it reasonably cost-effective to replace reusables.

Summary. Whether it is a Tischler, Kevorkian, or Burke tip, the real question before any gynecologist uses the cervical biopsy device she/he/they has in her/his/their hand is, will it cut? Because all reusable surgical instruments are in fact reusable, those edges that are designed to cut invariably become dull with reuse. And, unless they are meticulously maintained and routinely sharpened (spoiler alert, they never are), providers are not infrequently chagrined by the gnawing rather than cutting that these instruments deliver. Thinking back, I could not remember the last time I had made an incision with a surgical scalpel blade that had previously been used then sharpened and re-sterilized. Then I did remember…never. Reflecting on this, I wondered why I was doing this with my cervical biopsy devices. While I really do not like the environmental waste created by single-use devices, reusable instruments that require re-processing do have an environmental impact and a significant cost. Considering this, I do not think that environmental reasons are enough of a barrier to justify using dull biopsy tools if the switch to single-use devices can be done cost-effectively with a minimal carbon footprint. All-in-all, I like this product, and I plan to use it. ●

Norgestrel for nonprescription contraception: What you and your patients need to know

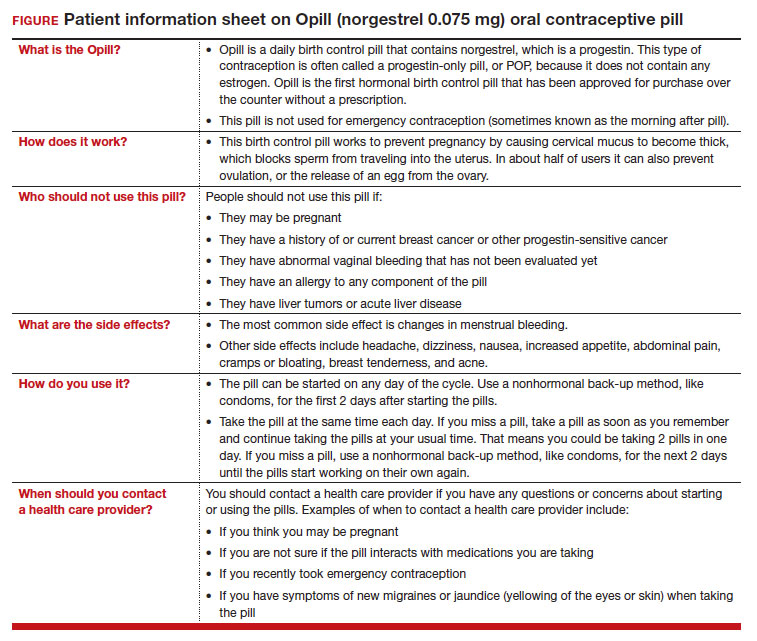

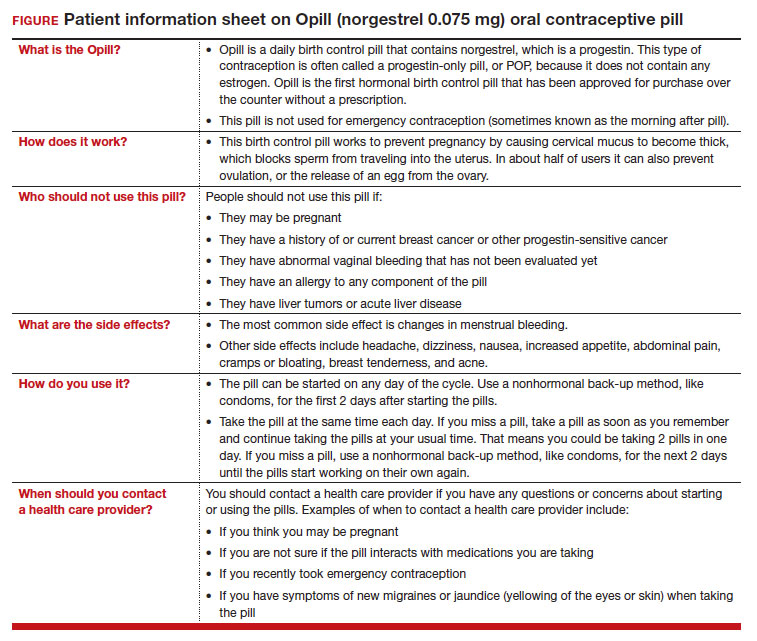

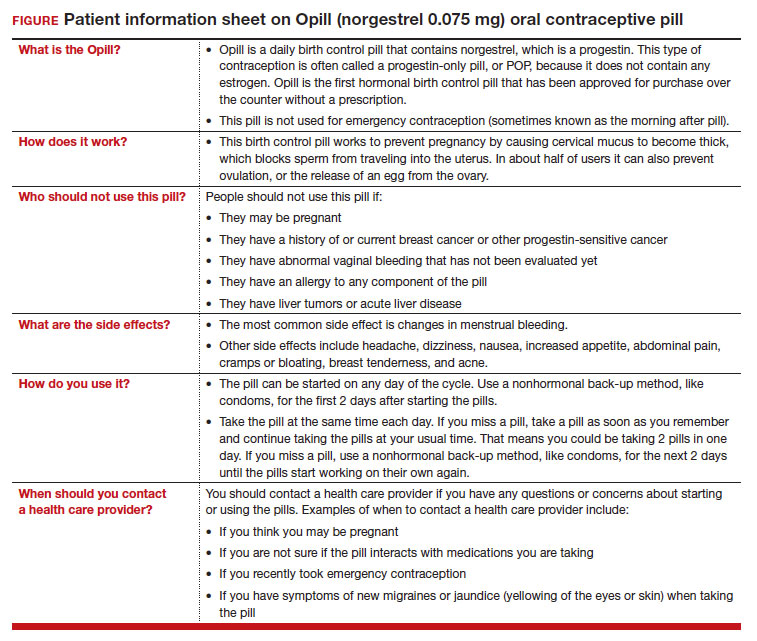

On July 13, 2023, the US Food and Drug Administration (FDA) approved norgestrel 0.075 mg (Opill, HRA Pharma, Paris, France) as the first nonprescription oral contraceptive pill (FIGURE). This progestin-only pill was originally FDA approved in 1973, with prescription required, and was available as Ovrette until 2005, when product distribution ceased for marketing reasons and not for safety or effectiveness concerns.1 In recent years, studies have been conducted to support converting the approval from prescription to nonprescription status to increase access to safe and effective contraception. Overall, norgestrel is more effective than other currently available nonprescription contraceptive options when used as directed, and widespread accessibility to this method has the potential to decrease the risk of unintended pregnancies. This product is expected to be available in drugstores, convenience stores, grocery stores, and online in 2024.

How it works

The indication for norgestrel 0.075 mg is pregnancy prevention in people with the capacity to become pregnant; this product is not intended for emergency contraception. Norgestrel is a racemic mixture of 2 isomers, of which only levonorgestrel is bioactive. The mechanism of action for contraception is primarily through cervical mucus thickening, which inhibits sperm movement through the cervix. About 50% of users also have an additional contraceptive effect of ovulation suppression.2

Instructions for use. In the package label, users are instructed to take the norgestrel 0.075 mg pill daily, preferably at the same time each day and no more than 3 hours from the time taken on the previous day. This method can be started on any day of the cycle, and backup contraception (a barrier method) should be used for the first 48 hours after starting the method if it has been more than 5 days since menstrual bleeding started.3 Product instructions indicate that, if users miss a dose, they should take the next dose as soon as possible. If a pill is taken 3 hours or more later than the usual time, they should take a pill immediately and then resume the next pill at the usual time. In addition, backup contraception is recommended for 48 hours.2
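The late-dose rule described above can be written out as a small sketch. This is only an illustration of the label wording as summarized in this article (a pill taken 3 hours or more after the usual time counts as late, and backup contraception is then recommended for 48 hours); the function name, the constants, and the choice to count the 48 hours from the time the late pill is taken are assumptions made for the example.

```python
from datetime import datetime, timedelta
from typing import Optional

# Thresholds taken from the label summary above.
LATE_THRESHOLD = timedelta(hours=3)
BACKUP_DURATION = timedelta(hours=48)


def backup_recommended_until(usual_time: datetime, taken_time: datetime) -> Optional[datetime]:
    """Return when the backup window ends, or None if the pill was on time.

    Assumption: the 48-hour window is counted from the moment the late
    pill is actually taken (hypothetical reading for this sketch).
    """
    if taken_time - usual_time >= LATE_THRESHOLD:
        return taken_time + BACKUP_DURATION
    return None


usual = datetime(2024, 1, 1, 8, 0)
on_time = backup_recommended_until(usual, datetime(2024, 1, 1, 10, 59))
late = backup_recommended_until(usual, datetime(2024, 1, 1, 11, 0))
print(on_time, late)
```

In this example, a pill taken at 10:59 for a usual time of 8:00 falls inside the window, while one taken at 11:00 triggers the 48-hour backup recommendation.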

Based on the Centers for Disease Control and Prevention (CDC) Selected Practice Recommendations for Contraceptive Use, no examinations or tests are required prior to initiation of progestin-only pills for safe and effective use.3

Efficacy

The product label indicates that the pregnancy rate is approximately 2 per 100 woman-years based on over 21,000 28-day exposure cycles from 8 US clinical studies.2 In a recent review by Glasier and colleagues, the authors identified 13 trials that assessed the efficacy of the norgestrel 0.075 mg pill, all published several decades ago.4 Given that breastfeeding can have contraceptive impact through ovulation inhibition, studies that included breastfeeding participants were evaluated separately. Six studies without breastfeeding participants included 3,184 women who provided more than 35,000 months of use. The overall failure rates ranged from 0 to 2.4 per 100 woman-years with typical use; an aggregate Pearl Index was calculated to be 2.2 based on the total numbers of pregnancies and cycles. The remaining 7 studies included individuals who were breastfeeding for at least part of their study participation. These studies included 5,445 women, and the 12-month life table cumulative pregnancy rates in this group ranged from 0.0% to 3.4%. This review noted that the available studies are limited by incomplete descriptions of study participant information and differences in reporting of failure rates; however, the overall data support the effectiveness of the norgestrel 0.075 mg pill for pregnancy prevention.
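For readers less familiar with the Pearl Index, the sketch below shows the standard arithmetic: pregnancies per 100 woman-years of exposure, using 12 months or thirteen 28-day cycles per year. The function names are made up for this example, and the inputs are hypothetical; the review's pregnancy counts are not given in this article, so the code does not attempt to reproduce the reported value of 2.2.

```python
def pearl_index_from_months(pregnancies: int, woman_months: float) -> float:
    """Pregnancies per 100 woman-years, with exposure counted in calendar months."""
    if woman_months <= 0:
        raise ValueError("exposure must be positive")
    # 100 woman-years = 1,200 woman-months
    return pregnancies * 1200 / woman_months


def pearl_index_from_cycles(pregnancies: int, cycles_28_day: float) -> float:
    """Pregnancies per 100 woman-years, with exposure counted in 28-day cycles."""
    if cycles_28_day <= 0:
        raise ValueError("exposure must be positive")
    # 100 woman-years = 1,300 cycles of 28 days
    return pregnancies * 1300 / cycles_28_day


# Hypothetical inputs for illustration only; these are not data from the review.
print(pearl_index_from_months(pregnancies=10, woman_months=6000))
print(pearl_index_from_cycles(pregnancies=10, cycles_28_day=6500))
```

Both hypothetical inputs describe the same exposure (500 woman-years), so the two functions give the same index.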

Norgestrel’s mechanism of action on ovarian activity and cervical mucus

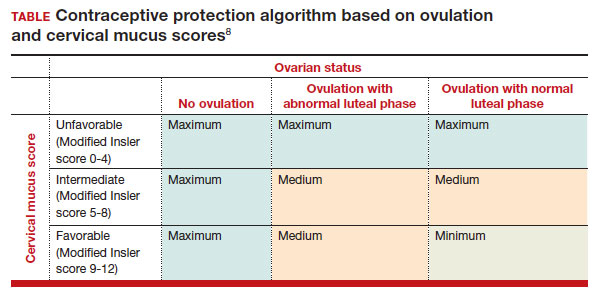

More recently, a prospective, multicenter, randomized, crossover study was performed to better understand this pill’s impact on cervical mucus and ovulation during preparation for nonprescription approval. In this study, participants were evaluated with frequent transvaginal ultrasonography, cervical mucus assessments, and blood assessments (including levels of follicle-stimulating hormone, luteinizing hormone, progesterone, and estradiol) for three 28-day cycles. Cervical mucus was scored on a modified Insler scale to indicate if the mucus was favorable (Insler score ≥9), intermediate (Insler score 5-8), or unfavorable to fertility (Insler score ≤4).5

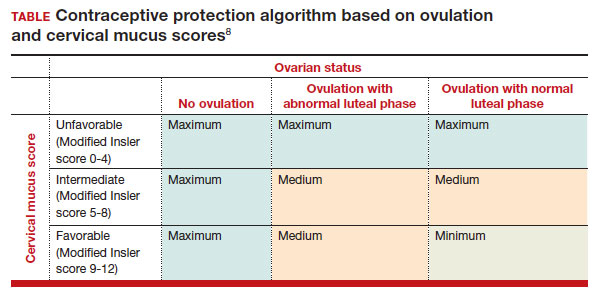

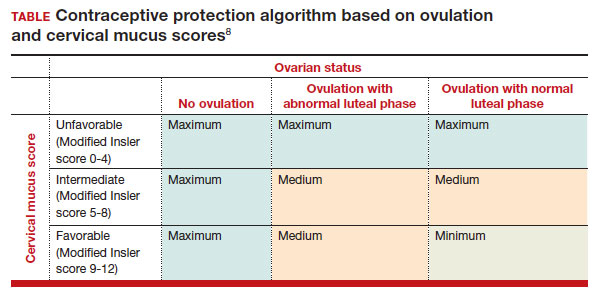

In the first cycle, participants were instructed to use the pills as prescribed (described as “correct use”). During this cycle, most participants (n = 34/51; 67%) did not ovulate, confirming that norgestrel 0.075 mg does impact ovulation.6 Most participants also had unfavorable cervical mucus (n = 39/51; 76%).6 Overall, 94% had full protection against pregnancy, either through lack of ovulation (n = 9), unfavorable mucus (n = 14), or both (n = 25). The remaining 3 participants ovulated and had intermediate mucus scores; ultimately, these participants were considered to have medium protection against pregnancy.7,8 (See the contraceptive protection algorithm [TABLE]).8

In the second and third cycles, the investigators evaluated ovulation and cervical mucus changes in the setting of either a delayed (by 6 hours) or missed dose midcycle.8 Of the 46 participants with evaluable data during the intervention cycles, 32 (70%) did not ovulate in each of the delayed- and missed-dose cycles. Most participants (n = 27; 59%) also demonstrated unfavorable mucus scores (modified Insler score ≤4) over the entire cycle despite delaying or missing a pill. There was no significant change to the cervical mucus score when comparing the scores on the days before, during, and after the delayed or missed pills (P = .26), nor when comparing between delayed pill use and missed pill use (P = .45). With the delayed pill intervention, 4 (9%) had reduced contraceptive protection (ie, medium protection) based on ovulation with intermediate mucus scores. With the missed pill intervention, 5 (11%) had reduced protection, of whom 3 had medium protection and 2 had minimum protection with ovulation and favorable mucus scores. Overall, this study shows that delaying or missing one pill may not impact contraceptive efficacy as much as previously thought given the strict 3-hour window for progestin-only pills. However, these findings are theoretical, as information about pregnancy outcomes with delaying or missing pills is lacking.
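The protection categories used in this study can be sketched as a small classifier. The rules below are inferred from the prose only (full protection with no ovulation or unfavorable mucus, medium with ovulation plus intermediate mucus, minimum with ovulation plus favorable mucus); the published TABLE is not reproduced here, so treat this reading, and the function names, as assumptions. The last lines recheck the first-cycle counts reported above.

```python
def mucus_category(insler_score: int) -> str:
    """Map a modified Insler score to the categories described in the text."""
    if insler_score >= 9:
        return "favorable"
    if insler_score >= 5:
        return "intermediate"
    return "unfavorable"


def protection_level(ovulated: bool, insler_score: int) -> str:
    """Classify contraceptive protection from ovulation status and mucus score."""
    mucus = mucus_category(insler_score)
    if not ovulated or mucus == "unfavorable":
        return "full"
    if mucus == "intermediate":
        return "medium"
    return "minimum"


# First-cycle counts as reported in the text (51 participants in total).
cycle1_full = {"no ovulation only": 9, "unfavorable mucus only": 14, "both": 25}
full_total = sum(cycle1_full.values())
percent_full = round(100 * full_total / 51)
print(full_total, percent_full)  # 48 participants, 94%
```

The counts are internally consistent: 9 + 25 gives the 34 participants who did not ovulate, and 14 + 25 gives the 39 with unfavorable mucus.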

Safety

Progestin-only methods are one of the safest options for contraception, with few contraindications to use; those listed include known or suspected pregnancy, known or suspected carcinoma of the breast or other progestin-sensitive cancer, undiagnosed abnormal uterine bleeding, hypersensitivity to any component of the product, benign or malignant liver tumors, and acute liver disease.2

The CDC Medical Eligibility Criteria for Contraceptive Use guidelines offer guidance for progestin-only pills, indicating a category 3 (theoretical or proven risks usually outweigh the advantages) or category 4 (unacceptable health risk, method not to be used) for only a select number of additional conditions. These conditions include a history of malabsorptive bariatric surgery (category 3) and concurrent use of medications that induce hepatic enzyme activity (category 3), such as phenytoin, carbamazepine, barbiturates, primidone, topiramate, oxcarbazepine, rifampin, and rifabutin.9 These conditions are included primarily due to concerns of decreased effectiveness of the contraception and not necessarily because of evidence of harm with use.

The prevalence of consumers with contraindications to progestin-only pills appears to be low. In a large database study, only 4.36% seeking preventive care and 2.29% seeking both preventive and contraceptive services had a contraindication to progestin-only pills.10 Therefore, candidates for norgestrel use include individuals who have commonly encountered conditions, including those who9:

- have recently given birth

- are breastfeeding

- have a history of venous thromboembolism

- smoke

- have cardiovascular disease, hypertension, migraines with aura, or longstanding diabetes.

Adverse effects

The most common adverse effects (AEs) related to norgestrel use are bleeding changes.2 In the initial clinical studies for FDA approval, about half of enrolled participants reported a change in bleeding; about 9% discontinued the contraceptive due to bleeding. Breakthrough bleeding and spotting were reported by 48.6% and 47.3% of participants, respectively. About 6.1% had amenorrhea in their first cycle; 28.7% of participants had amenorrhea overall. Other reported AEs were headache, dizziness, nausea, increased appetite, abdominal pain, cramps or bloating, breast tenderness, and acne.

- Brand name: Opill

- Class: Progestin-only contraception

- Indication: Pregnancy prevention

- Approval date: Initial approval in 1973, nonprescription approval on July 13, 2023

- Availability date: 2024

- Manufacturer: Perrigo Company, HRA Pharma, Paris, France

- Dosage forms: 0.075 mg tablet

FDA approval required determining appropriate direct-to-patient classification

To obtain nonprescription approval, studies needed to determine that patients can safely and effectively use norgestrel without talking to a health care provider first. Label comprehension, self-selection, and actual-use studies were required to demonstrate that consumers can use the package information to determine their eligibility and take the medication appropriately.

The ACCESS study

Research Q: Do patients appropriately determine if the contraceptive is right for them?

Study A: Yes, 99% of the time. In the Adherence with Continuous-dose Oral Contraceptive: Evaluation of Self-Selection and Use (ACCESS) pivotal study, which evaluated prescription to nonprescription approval, participants were asked to review the label and determine whether the product was appropriate for them to use based on their health history.11 Approximately 99% of participants (n = 1,234/1,246) were able to correctly self-select whether norgestrel was appropriate for their own use.12

Research Q: After beginning the contraceptive, do patients adhere to correct use?

Study A: Yes, more than 90% of the time (and that remained true for subpopulations).

In the next phase of the ACCESS study, eligible participants from the self-selection population who purchased norgestrel and reported using the product at least once in their e-diary over a 6-month study period comprised the “User Population.”12 The overall adherence to daily pill intake was 92.5% (95% confidence interval [CI], 92.3–92.6%) among the 883 participants who contributed more than 90,000 days of study participation, and adherence was similarly high in subpopulations of individuals with low health literacy (92.6%; 95% CI, 92.1–93.0%), adolescents aged 12–14 years (91.8%; 95% CI, 91.0–92.5%), and adolescents aged 15–17 years (91.9%; 95% CI, 91.4–92.3%).

Research Q: When a pill was missed, did patients use backup contraception?

Study A: Yes, 97% of the time.

When including whether participants followed label instructions for mitigating behaviors when the pill was missed (eg, take a pill as soon as they remember, use backup contraception for 2 days after restarting the pill), adherence was 97.1% (95% CI, 97.0–97.2%). Most participants missed a single day of taking pills, and the most common reported reason for missing pills was issues with resupply as participants needed to get new packs from their enrolled research site, which should be less of a barrier when these pills are available over the counter.

Clinical implications of expanded access

Opportunities to expand access to effective contraception have become more critical in the increasingly restrictive environment for abortion care in the post-Dobbs era, and the availability of norgestrel to patients without prescription can advance contraceptive equity. Patients encounter many barriers to accessing prescription contraception, such as lack of insurance; difficulty with scheduling an appointment or getting to a clinic; not having a regular clinician or clinic; or health care providers requiring a visit, exam, or test prior to prescribing contraception.13,14 For patients who face these challenges, an alternative option is to use a nonprescription contraceptive, such as barrier or fertility awareness–based methods, which are typically associated with higher failure rates. With the introduction of norgestrel as a nonprescription contraceptive product, people can have direct access to a more effective contraceptive option.

A follow-up study of participants in the ACCESS actual-use study demonstrated that most (83%) would be likely to use the nonprescription method if available in the future for many reasons, including convenience, ease of access, ability to save time and money, not needing to visit a clinic, and flexibility of accessing the pills while traveling or having someone else get their pills for them.14 Furthermore, a nonprescription method could be beneficial for people who have concerns about privacy, such as adolescents or individuals affected by contraception sabotage (an act that can intentionally limit or prohibit a person’s contraception access or use, eg, damaging condoms or hiding a person’s contraception method). This expansion of access can ultimately lead to a decrease in unintended pregnancies. In a model using the ACCESS actual-use data, about 1,500 to 34,000 unintended pregnancies would be prevented per year based on varying model parameters, with all scenarios demonstrating a benefit to nonprescription access to norgestrel.15

After norgestrel is available, where will patients be able to seek more information?

Patients who have questions or concerns about starting or taking norgestrel should talk to their clinician or a pharmacist for additional information (FIGURE 2). Examples of situations when additional clinical evaluation or counseling are recommended include:

- when a person is taking any medications with possible drug-drug interactions

- if a person is starting norgestrel after taking an emergency contraceptive in the last 5 days

- if there is a concern about pregnancy

- when there are any questions about adverse effects while taking norgestrel.

Bottom line

The nonprescription approval of norgestrel, a progestin-only pill, has the potential to greatly expand patient access to a safe and effective contraceptive method and advance contraceptive equity. The availability of informational materials for consumers about potential issues that may arise (for instance, changes in bleeding) will be important for initiation and continuation of this method. As this product is not yet available for purchase, several unknown factors remain, such as the cost and ease of accessibility in stores or online, that will ultimately determine its public health impact on unintended pregnancies. ●

- US Food and Drug Administration. 82 FR 49380. Determination that Ovrette (norgestrel) tablet, 0.075 milligrams, was not withdrawn from sale for reasons of safety or effectiveness. October 25, 2017. Accessed December 5, 2023. https://www.federalregister.gov/d/2017-23125

- US Food and Drug Administration. Opill tablets (norgestrel tablets) package label. August 2017. Accessed December 5, 2023. https://www.accessdata.fda.gov/drugsatfda_docs/label/2017/017031s035s036lbl.pdf

- Curtis KM, Jatlaoui TC, Tepper NK, et al. US selected practice recommendations for contraceptive use, 2016. MMWR Recomm Rep. 2016;65(No. RR-4):1-66.

- Glasier A, Sober S, Gasloli R, et al. A review of the effectiveness of a progestogen-only pill containing norgestrel 75 µg/day. Contraception. 2022;105:1-6.

- Edelman A, Hemon A, Creinin M, et al. Assessing the pregnancy protective impact of scheduled nonadherence to a novel progestin-only pill: protocol for a prospective, multicenter, randomized, crossover study. JMIR Res Protoc. 2021;10:e29208.

- Glasier A, Edelman A, Creinin MD, et al. Mechanism of action of norgestrel 0.075 mg a progestogen-only pill. I. Effect on ovarian activity. Contraception. 2022;112:37-42.

- Han L, Creinin MD, Hemon A, et al. Mechanism of action of a 0.075 mg norgestrel progestogen-only pill 2. Effect on cervical mucus and theoretical risk of conception. Contraception. 2022;112:43-47.

- Glasier A, Edelman A, Creinin MD, et al. The effect of deliberate non-adherence to a norgestrel progestin-only pill: a randomized, crossover study. Contraception. 2023;117:1-6.

- Curtis KM, Tepper NK, Jatlaoui TC, et al. U.S. medical eligibility criteria for contraceptive use, 2016. MMWR Recomm Rep. 2016;65(No RR-3):1-104.

- Dutton C, Kim R, Janiak E. Prevalence of contraindications to progestin-only contraceptive pills in a multi-institution patient database. Contraception. 2021;103:367-370.

- Clinicaltrials.gov. Adherence with Continuous-dose Oral Contraceptive Evaluation of Self-Selection and Use (ACCESS). Accessed December 5, 2023. https://clinicaltrials.gov/study/NCT04112095

- HRA Pharma. Opill (norgestrel 0.075 mg tablets) for Rx-to-OTC switch. Sponsor Briefing Documents. Joint Meeting of the Nonprescription Drugs Advisory Committee and the Obstetrics, Reproductive, and Urology Drugs Advisory Committee. Meeting dates: 9-10 May 2023. Accessed December 5, 2023. https://www.fda.gov/media/167893/download

- American College of Obstetricians and Gynecologists. Committee Opinion No. 788: Over-the-counter access to hormonal contraception. Obstet Gynecol. 2019;134:e96-105.

- Grindlay K, Key K, Zuniga C, et al. Interest in continued use after participation in a study of over-the-counter progestin-only pills in the United States. Womens Health Rep. 2022;3:904-914.

- Guillard H, Laurora I, Sober S, et al. Modeling the potential benefit of an over-the-counter progestin-only pill in preventing unintended pregnancies in the U.S. Contraception. 2023;117:7-12.

On July 13, 2023, the US Food and Drug Administration (FDA) approved norgestrel 0.075 mg (Opill, HRA Pharma, Paris, France) as the first nonprescription oral contraceptive pill (FIGURE). This progestin-only pill was originally FDA approved in 1973, with prescription required, and was available as Ovrette until 2005, when product distribution ceased for marketing reasons and not for safety or effectiveness concerns.1 In recent years, studies have been conducted to support converting its approval from prescription to nonprescription status in order to increase access to safe and effective contraception. Overall, norgestrel is more effective than other currently available nonprescription contraceptive options when used as directed, and widespread accessibility to this method has the potential to decrease the risk of unintended pregnancies. This product is expected to be available in drugstores, convenience stores, grocery stores, and online in 2024.

How it works

The indication for norgestrel 0.075 mg is pregnancy prevention in people with the capacity to become pregnant; this product is not intended for emergency contraception. Norgestrel is a racemic mixture of 2 isomers, of which only levonorgestrel is bioactive. The mechanism of action for contraception is primarily through cervical mucus thickening, which inhibits sperm movement through the cervix. About 50% of users also have an additional contraceptive effect of ovulation suppression.2

Instructions for use. In the package label, users are instructed to take the norgestrel 0.075 mg pill daily, preferably at the same time each day and no more than 3 hours from the time taken on the previous day. This method can be started on any day of the cycle, and backup contraception (a barrier method) should be used for the first 48 hours after starting the method if it has been more than 5 days since menstrual bleeding started.3 Product instructions indicate that, if users miss a dose, they should take the next dose as soon as possible. If a pill is taken 3 hours or more later than the usual time, they should take a pill immediately and then resume the next pill at the usual time. In addition, backup contraception is recommended for 48 hours.2

Based on the Centers for Disease Control and Prevention (CDC) Selected Practice Recommendations for Contraceptive Use, no examinations or tests are required prior to initiation of progestin-only pills for safe and effective use.3

Efficacy

The product label indicates that the pregnancy rate is approximately 2 per 100 woman-years based on over 21,000 28-day exposure cycles from 8 US clinical studies.2 In a recent review by Glasier and colleagues, the authors identified 13 trials that assessed the efficacy of the norgestrel 0.075 mg pill, all published several decades ago.4 Given that breastfeeding can have contraceptive impact through ovulation inhibition, studies that included breastfeeding participants were evaluated separately. Six studies without breastfeeding participants included 3,184 women who provided more than 35,000 months of use. The overall failure rates ranged from 0 to 2.4 per 100 woman-years with typical use; an aggregate Pearl Index was calculated to be 2.2 based on the total numbers of pregnancies and cycles. The remaining 7 studies included individuals who were breastfeeding for at least part of their study participation. These studies included 5,445 women, and the 12-month life table cumulative pregnancy rates in this group ranged from 0.0% to 3.4%. This review noted that the available studies are limited by incomplete descriptions of study participant information and differences in reporting of failure rates; however, the overall data support the effectiveness of the norgestrel 0.075 mg pill for pregnancy prevention.
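For readers less familiar with the Pearl Index, the following is a minimal sketch of how such a rate is conventionally computed: pregnancies per 100 woman-years of exposure. The function name, its parameters, and the example figures are illustrative assumptions; they are not taken from the review, which does not report its pregnancy and cycle totals here.

```python
def pearl_index(pregnancies: int, exposure_periods: float, periods_per_year: int = 12) -> float:
    """Pregnancies per 100 woman-years of exposure.

    exposure_periods is the total exposure summed over all participants,
    counted in calendar months (periods_per_year=12) or in 28-day cycles
    (periods_per_year=13).
    """
    if exposure_periods <= 0:
        raise ValueError("exposure_periods must be positive")
    woman_years = exposure_periods / periods_per_year
    return 100 * pregnancies / woman_years


# Made-up figures for illustration only: 10 pregnancies over 6,000 woman-months.
example_rate = pearl_index(10, 6000)
print(f"Pearl Index: {example_rate:.1f} per 100 woman-years")
```

When exposure is counted in 28-day cycles rather than calendar months, passing `periods_per_year=13` keeps the result on the same per-100-woman-years scale.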

Norgestrel’s mechanism of action on ovarian activity and cervical mucus

More recently, a prospective, multicenter, randomized, crossover study was performed to better understand this pill’s impact on cervical mucus and ovulation during preparation for nonprescription approval. In this study, participants were evaluated with frequent transvaginal ultrasonography, cervical mucus, and blood assessments (including levels of follicle-stimulating hormone, luteinizing hormone, progesterone, and estradiol) for three 28-day cycles. Cervical mucus was scored on a modified Insler scale to indicate if the mucus was favorable (Insler score ≥9), intermediate (Insler score 5-8), or unfavorable to fertility (Insler score ≤4).5

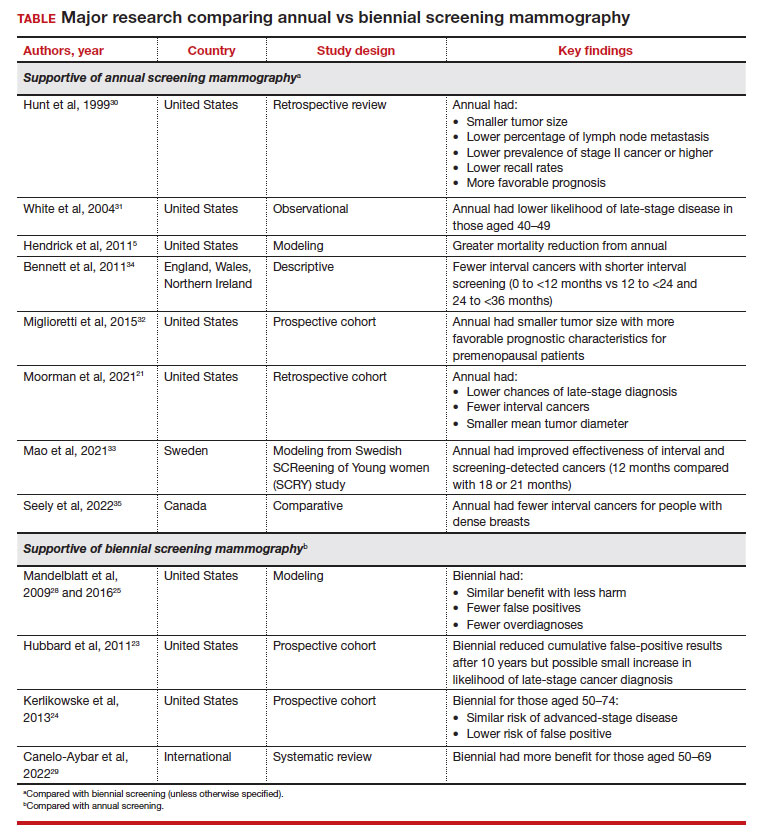

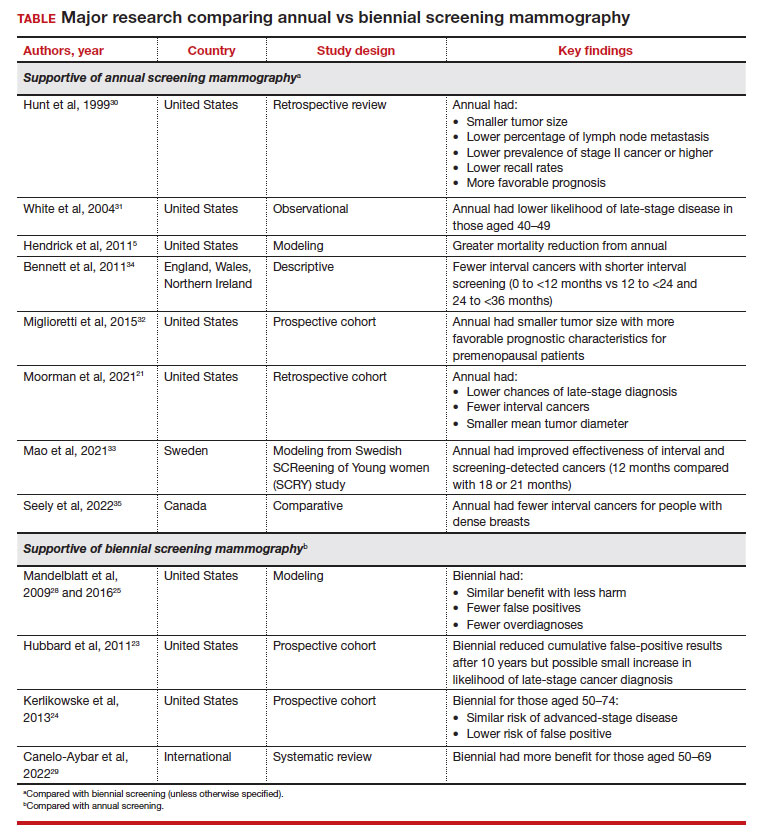

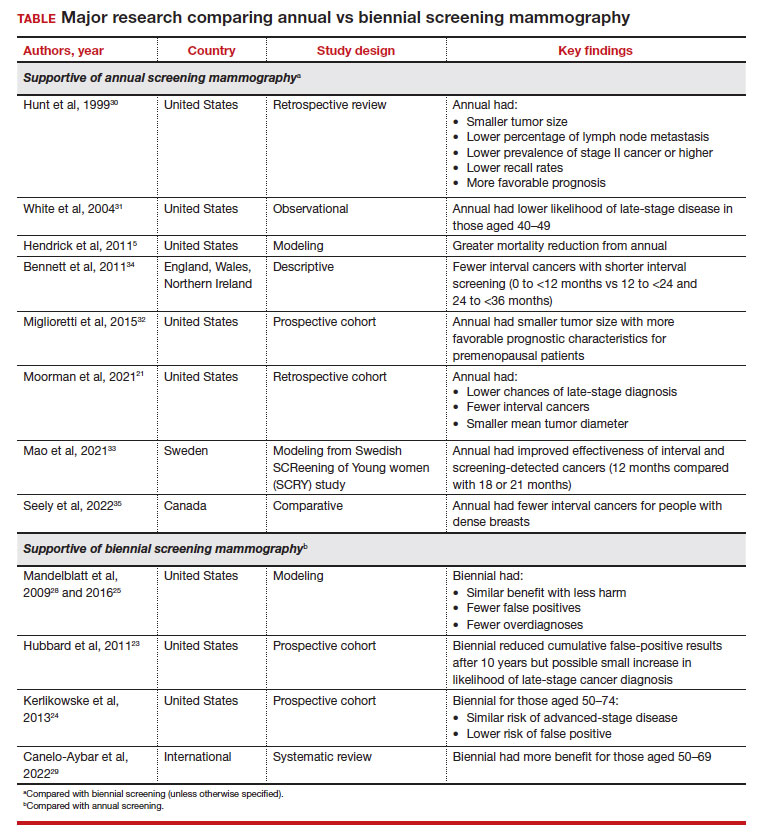

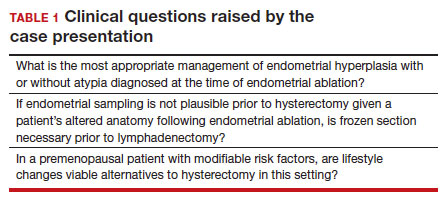

In the first cycle, participants were instructed to use the pills as prescribed (described as “correct use”). During this cycle, most participants (n = 34/51; 67%) did not ovulate, confirming that norgestrel 0.075 mg does impact ovulation.6 Most participants also had unfavorable cervical mucus (n = 39/51; 76%).6 Overall, 94% had full protection against pregnancy, either through lack of ovulation (n = 9), unfavorable mucus (n = 14), or both (n = 25). The remaining 3 participants ovulated and had intermediate mucus scores; ultimately, these participants were considered to have medium protection against pregnancy.7,8 (See the contraceptive protection algorithm [TABLE]).8

In the second and third cycles, the investigators evaluated ovulation and cervical mucus changes in the setting of either a delayed (by 6 hours) or missed dose midcycle.8 Of the 46 participants with evaluable data during the intervention cycles, 32 (70%) did not ovulate in each of the delayed- and missed-dose cycles. Most participants (n = 27; 59%) also demonstrated unfavorable mucus scores (modified Insler score ≤4) over the entire cycle despite delaying or missing a pill. There was no significant change to the cervical mucus score when comparing the scores on the days before, during, and after the delayed or missed pills (P = .26), nor when comparing between delayed pill use and missed pill use (P = .45). With the delayed pill intervention, 4 (9%) had reduced contraceptive protection (ie, medium protection) based on ovulation with intermediate mucus scores. With the missed pill intervention, 5 (11%) had reduced protection, of whom 3 had medium protection and 2 had minimum protection with ovulation and favorable mucus scores. Overall, this study shows that delaying or missing one pill may not impact contraceptive efficacy as much as previously thought given the strict 3-hour window for progestin-only pills. However, these findings are theoretical, as information about pregnancy outcomes with delaying or missing pills is lacking.
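The protection categories described above can be sketched as a small decision rule. This is only an illustration assembled from the thresholds and outcomes stated in the text (modified Insler score ≤4 unfavorable, 5-8 intermediate, ≥9 favorable); the function and variable names are hypothetical, and the published algorithm (TABLE) may include details not captured here.

```python
def protection_level(ovulated: bool, insler_score: int) -> str:
    """Theoretical contraceptive protection from ovulation status and mucus score."""
    if not ovulated or insler_score <= 4:
        # No ovulation, unfavorable mucus, or both
        return "full"
    if insler_score <= 8:
        # Ovulation with intermediate mucus
        return "medium"
    # Ovulation with favorable mucus
    return "minimum"


# Correct-use cycle counts reported in the text (51 participants)
no_ovulation_only = 9
unfavorable_mucus_only = 14
both = 25
full_cycle1 = no_ovulation_only + unfavorable_mucus_only + both
print(f"Full protection: {full_cycle1}/51 ({round(100 * full_cycle1 / 51)}%)")
```

Summing the three reported groups gives 48 of 51 participants with full protection, which matches the 94% stated for the correct-use cycle.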

Safety

Progestin-only methods are among the safest options for contraception, with few contraindications to use; those listed include known or suspected pregnancy, known or suspected carcinoma of the breast or other progestin-sensitive cancer, undiagnosed abnormal uterine bleeding, hypersensitivity to any component of the product, benign or malignant liver tumors, and acute liver disease.2

The CDC Medical Eligibility Criteria for Contraceptive Use guidelines offer guidance for progestin-only pills, indicating a category 3 (theoretical or proven risks usually outweigh the advantages) or category 4 (unacceptable health risk, method not to be used) for only a select number of additional conditions. These conditions include a history of malabsorptive bariatric surgery (category 3) and concurrent use of medications that induce hepatic enzyme activity (category 3), such as phenytoin, carbamazepine, barbiturates, primidone, topiramate, oxcarbazepine, rifampin, and rifabutin.9 These conditions are included primarily due to concerns of decreased effectiveness of the contraception and not necessarily because of evidence of harm with use.

The prevalence of consumers with contraindications to progestin-only pills appears to be low. In a large database study, only 4.36% seeking preventive care and 2.29% seeking both preventive and contraceptive services had a contraindication to progestin-only pills.10 Therefore, candidates for norgestrel use include individuals who have commonly encountered conditions, including those who9:

- have recently given birth

- are breastfeeding

- have a history of venous thromboembolism

- smoke

- have cardiovascular disease, hypertension, migraines with aura, or longstanding diabetes.

On July 13, 2023, the US Food and Drug Administration (FDA) approved norgestrel 0.075 mg (Opill, HRA Pharma, Paris, France) as the first nonprescription oral contraceptive pill (FIGURE). This progestin-only pill was originally FDA approved in 1973, with prescription required, and was available as Ovrette until 2005, when product distribution ceased for marketing reasons and not for safety or effectiveness concerns.1 In recent years, studies have been conducted to support converted approval from prescription to nonprescription to increase access to safe and effective contraception. Overall, norgestrel is more effective than other currently available nonprescription contraceptive options when used as directed, and widespread accessibility to this method has the potential to decrease the risk of unintended pregnancies. This product is expected to be available in drugstores, convenience stores, grocery stores, and online in 2024.

How it works

The indication for norgestrel 0.075 mg is pregnancy prevention in people with the capacity to become pregnant; this product is not intended for emergency contraception. Norgestrel is a racemic mixture of 2 isomers, of which only levonorgestrel is bioactive. The mechanism of action for contraception is primarily through cervical mucus thickening, which inhibits sperm movement through the cervix. About 50% of users also have an additional contraceptive effect of ovulation suppression.2

Instructions for use. In the package label, users are instructed to take the norgestrel 0.075 mg pill daily, preferably at the same time each day and no more than 3 hours from the time taken on the previous day. This method can be started on any day of the cycle, and backup contraception (a barrier method) should be used for the first 48 hours after starting the method if it has been more than 5 days since menstrual bleeding started.3 Product instructions indicate that, if users miss a dose, they should take the next dose as soon as possible. If a pill is taken 3 hours or more later than the usual time, they should take a pill immediately and then resume the next pill at the usual time. In addition, backup contraception is recommended for 48 hours.2

Based on the Centers for Disease Control and Prevention (CDC) Selected Practice Recommendations for Contraceptive Use, no examinations or tests are required prior to initiation of progestin-only pills for safe and effective use.3

Efficacy

The product label indicates that the pregnancy rate is approximately 2 per 100 women-years based on over 21,000 28-day exposure cycles from 8 US clinical studies.2 In a recent review by Glasier and colleagues, the authors identified 13 trials that assessed the efficacy of the norgestrel 0.075 mg pill, all published several decades ago.4 Given that breastfeeding can have contraceptive impact through ovulation inhibition, studies that included breastfeeding participants were evaluated separately. Six studies without breastfeeding participants included 3,184 women who provided more than 35,000 months of use. The overall failure rates ranged from 0 to 2.4 per hundred woman-years with typical use; an aggregate Pearl Index was calculated to be 2.2 based on the total numbers of pregnancies and cycles. The remaining 7 studies included individuals who were breastfeeding for at least part of their study participation. These studies included 5,445 women, and the 12-month life table cumulative pregnancy rates in this group ranged from 0.0% to 3.4%. This review noted that the available studies are limited by incomplete descriptions of study participant information and differences in reporting of failure rates; however, the overall data support the effectiveness of the norgestrel 0.075 mg pill for pregnancy prevention.

Continue to: Norgestrel’s mechanism of action on ovarian activity and cervical mucus...

Norgestrel’s mechanism of action on ovarian activity and cervical mucus

More recently, a prospective, multicenter randomized, crossover study was performed to better understand this pill’s impact on cervical mucus and ovulation during preparation for nonprescription approval. In this study, participants were evaluated with frequent transvaginal ultrasonography, cervical mucus, and blood assessments (including levels of follicular-stimulating hormone, luteinizing hormone, progesterone, and estradiol) for three 28-day cycles. Cervical mucus was scored on a modified Insler scale to indicate if the mucus was favorable (Insler score ≥9), intermediate (Insler score 5-8), or unfavorable to fertility (Insler score ≤4).5

In the first cycle, participants were instructed to use the pills as prescribed (described as “correct use”). During this cycle, most participants (n = 34/51; 67%) did not ovulate, confirming that norgestrel 0.075 mg does impact ovulation.6 Most participants also had unfavorable cervical mucus (n = 39/51; 76%).6 Overall, 94% had full protection against pregnancy, either through lack of ovulation (n = 9), unfavorable mucus (n = 14), or both (n = 25). The remaining 3 participants ovulated and had intermediate mucus scores; ultimately, these participants were considered to have medium protection against pregnancy.7,8 (See the contraceptive protection algorithm [TABLE]).8

In the second and third cycles, the investigators evaluated ovulation and cervical mucus changes in the setting of either a delayed (by 6 hours) or missed dose midcycle.8 Of the 46 participants with evaluable data during the intervention cycles, 32 (70%) did not ovulate in each of the delayed- and missed-dose cycles. Most participants (n = 27; 59%) also demonstrated unfavorable mucus scores (modified Insler score ≤4) over the entire cycle despite delaying or missing a pill. There was no significant change to the cervical mucus score when comparing the scores on the days before, during, and after the delayed or missed pills (P = .26), nor when comparing between delayed pill use and missed pill use (P = .45). With the delayed pill intervention, 4 (9%) had reduced contraceptive protection (ie, medium protection) based on ovulation with intermediate mucus scores. With the missed pill intervention, 5 (11%) had reduced protection, of whom 3 had medium protection and 2 had minimum protection with ovulation and favorable mucus scores. Overall, this study shows that delaying or missing one pill may not impact contraceptive efficacy as much as previously thought given the strict 3-hour window for progestin-only pills. However, these findings are theoretical as information about pregnancy outcomes with delaying or missing pills are lacking.

Safety

Progestin-only methods are one of the safest options for contraception, with few contraindications to use; those listed include known or suspected pregnancy, known or suspected carcinoma of the breast or other progestinsensitive cancer, undiagnosed abnormal uterine bleeding, hypersensitivity to any component of the product, benign or malignant liver tumors, and acute liver disease.2

The CDC Medical Eligibility Criteria for Contraceptive Use guidelines offer guidance for progestin-only pills, indicating a category 3 (theoretical or proven risks usually outweigh the advantages) or category 4 (unacceptable health risk, method not to be used) for only a select number of additional conditions. These conditions include a history of malabsorptive bariatric surgery (category 3) and concurrent use of medications that induce hepatic enzyme activity (category 3)— such as phenytoin, carbamazepine, barbiturates, primidone, topiramate, oxcarbazepine, rifampin, and rifabutin.9 These conditions are included primarily due to concerns of decreased effectivenessof the contraception and not necessarily because of evidence of harm with use.

The prevalence of consumers with contraindications to progestin-only pills appears to be low. In a large database study, only 4.36% seeking preventive care and 2.29% seeking both preventive and contraceptive services had a contraindication to progestin-only pills.10 Therefore, candidates for norgestrel use include individuals who have commonly encountered conditions, including those who9:

- have recently given birth

- are breastfeeding

- have a history of venous thromboembolism

- smoke

- have cardiovascular disease, hypertension, migraines with aura, or longstanding diabetes.

Adverse effects

The most common adverse effects (AEs) related to norgestrel use are bleeding changes.2 In the initial clinical studies for FDA approval, about half of enrolled participants reported a change in bleeding; about 9% discontinued the contraceptive due to bleeding. Breakthrough bleeding and spotting were reported by 48.6% and 47.3% of participants, respectively. About 6.1% had amenorrhea in their first cycle; 28.7% of participants had amenorrhea overall. Other reported AEs were headache, dizziness, nausea, increased appetite, abdominal pain, cramps or bloating, breast tenderness, and acne.

- Brand name: Opill

- Class: Progestin-only contraception

- Indication: Pregnancy prevention

- Approval date: Initial approval in 1973, nonprescription approval on July 13, 2023

- Availability date: 2024

- Manufacturer: Perrigo Company, HRA Pharma, Paris, France

- Dosage forms: 0.075 mg tablet

FDA approval required determining appropriate direct-to-patient classification

To obtain nonprescription approval, studies needed to show that patients can safely and effectively use norgestrel without talking to a health care provider first. Label comprehension, self-selection, and actual-use studies were required to demonstrate that consumers can use the package information to determine their eligibility and take the medication appropriately.

The ACCESS study

Research Q: Do patients appropriately determine if the contraceptive is right for them?

Study A: Yes, 99% of the time. In the Adherence with Continuous-dose Oral Contraceptive: Evaluation of Self-Selection and Use (ACCESS) pivotal study, which evaluated prescription to nonprescription approval, participants were asked to review the label and determine whether the product was appropriate for them to use based on their health history.11 Approximately 99% of participants (n = 1,234/1,246) were able to correctly self-select whether norgestrel was appropriate for their own use.12
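The arithmetic behind the 99% figure can be checked directly from the reported counts. The sketch below is illustrative only: the Wilson score interval is an added assumption for showing how precise an estimate from 1,246 participants is, not a statistic reported by the ACCESS investigators, and the function and variable names are hypothetical.

```python
from math import sqrt


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for roughly 95%)."""
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width


# Counts reported in the text for the self-selection phase.
correct, assessed = 1234, 1246

proportion = correct / assessed
# Assumption: a Wilson interval is used here purely for illustration.
low, high = wilson_interval(correct, assessed)

print(f"Correct self-selection: {proportion:.1%}")
print(f"Illustrative 95% interval: {low:.1%} to {high:.1%}")
```

Because only 12 of 1,246 participants selected incorrectly, the interval is narrow, which is consistent with the article's summary of "99% of the time."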

Research Q: After beginning the contraceptive, do patients adhere to correct use?

Study A: Yes, more than 90% of the time (and that remained true for subpopulations).

In the next phase of the ACCESS study, eligible participants from the self-selection population who purchased norgestrel and reported using the product at least once in their e-diary over a 6-month study period comprised the “User Population.”12 Overall adherence to daily pill intake was 92.5% (95% confidence interval [CI], 92.3%–92.6%) among the 883 participants, who contributed more than 90,000 days of study participation. Adherence was similarly high in subpopulations: individuals with low health literacy (92.6%; 95% CI, 92.1%–93.0%), adolescents aged 12–14 years (91.8%; 95% CI, 91.0%–92.5%), and adolescents aged 15–17 years (91.9%; 95% CI, 91.4%–92.3%).

Research Q: When a pill was missed, did patients use backup contraception?

Study A: Yes, 97% of the time.

When including whether participants followed label instructions for mitigating behaviors when the pill was missed (eg, take a pill as soon as they remember, use backup contraception for 2 days after restarting the pill), adherence was 97.1% (95% CI, 97.0–97.2%). Most participants missed a single day of taking pills, and the most common reported reason for missing pills was issues with resupply as participants needed to get new packs from their enrolled research site, which should be less of a barrier when these pills are available over the counter.

Clinical implications of expanded access

Opportunities to expand access to effective contraception have become more critical in the increasingly restrictive environment for abortion care in the post-Dobbs era, and the availability of norgestrel to patients without prescription can advance contraceptive equity. Patients encounter many barriers to accessing prescription contraception, such as lack of insurance; difficulty with scheduling an appointment or getting to a clinic; not having a regular clinician or clinic; or health care providers requiring a visit, exam, or test prior to prescribing contraception.13,14 For patients who face these challenges, an alternative option is to use a nonprescription contraceptive, such as barrier or fertility awareness–based methods, which are typically associated with higher failure rates. With the introduction of norgestrel as a nonprescription contraceptive product, people can have direct access to a more effective contraceptive option.

A follow-up study of participants in the ACCESS actual-use study demonstrated that most (83%) would be likely to use the nonprescription method if available in the future for many reasons, including convenience, ease of access, ability to save time and money, not needing to visit a clinic, and flexibility of accessing the pills while traveling or having someone else get their pills for them.14 Furthermore, a nonprescription method could be beneficial for people who have concerns about privacy, such as adolescents or individuals affected by contraception sabotage (an act that intentionally limits or prohibits a person’s contraception access or use, eg, damaging condoms or hiding a person’s contraception method). This expansion of access can ultimately lead to a decrease in unintended pregnancies. In a model using the ACCESS actual-use data, about 1,500 to 34,000 unintended pregnancies would be prevented per year based on varying model parameters, with all scenarios demonstrating a benefit to nonprescription access to norgestrel.15

After norgestrel is available, where will patients be able to seek more information?

Patients who have questions or concerns about starting or taking norgestrel should talk to their clinician or a pharmacist for additional information (FIGURE 2). Examples of situations in which additional clinical evaluation or counseling is recommended include:

- when a person is taking any medications with possible drug-drug interactions

- if a person is starting norgestrel after taking an emergency contraceptive in the last 5 days

- if there is a concern about pregnancy

- when there are any questions about adverse effects while taking norgestrel.

Bottom line

The nonprescription approval of norgestrel, a progestin-only pill, has the potential to greatly expand patient access to a safe and effective contraceptive method and advance contraceptive equity. The availability of informational materials for consumers about potential issues that may arise (for instance, changes in bleeding) will be important for initiation and continuation of this method. As this product is not yet available for purchase, several unknown factors remain, such as the cost and ease of accessibility in stores or online, that will ultimately determine its public health impact on unintended pregnancies. ●

- US Food and Drug Administration. 82 FR 49380. Determination that Ovrette (norgestrel) tablet, 0.075 milligrams, was not withdrawn from sale for reasons of safety or effectiveness. October 25, 2017. Accessed December 5, 2023. https://www.federalregister.gov/d/2017-23125

- US Food and Drug Administration. Opill tablets (norgestrel tablets) package label. August 2017. Accessed December 5, 2023. https://www.accessdata.fda.gov/drugsatfda_docs/label/2017/017031s035s036lbl.pdf

- Curtis KM, Jatlaoui TC, Tepper NK, et al. US selected practice recommendations for contraceptive use, 2016. MMWR Recomm Rep. 2016;65(No. RR-4):1-66.

- Glasier A, Sober S, Gasloli R, et al. A review of the effectiveness of a progestogen-only pill containing norgestrel 75 µg/day. Contraception. 2022;105:1-6.

- Edelman A, Hemon A, Creinin M, et al. Assessing the pregnancy protective impact of scheduled nonadherence to a novel progestin-only pill: protocol for a prospective, multicenter, randomized, crossover study. JMIR Res Protoc. 2021;10:e292208.

- Glasier A, Edelman A, Creinin MD, et al. Mechanism of action of norgestrel 0.075 mg a progestogen-only pill. I. Effect on ovarian activity. Contraception. 2022;112:37-42.

- Han L, Creinin MD, Hemon A, et al. Mechanism of action of a 0.075 mg norgestrel progestogen-only pill 2. Effect on cervical mucus and theoretical risk of conception. Contraception. 2022;112:43-47.

- Glasier A, Edelman A, Creinin MD, et al. The effect of deliberate non-adherence to a norgestrel progestin-only pill: a randomized, crossover study. Contraception. 2023;117:1-6.

- Curtis KM, Tepper NK, Jatlaoui TC, et al. U.S. medical eligibility criteria for contraceptive use, 2016. MMWR Recomm Rep. 2016;65(No RR-3):1-104.

- Dutton C, Kim R, Janiak E. Prevalence of contraindications to progestin-only contraceptive pills in a multi-institution patient database. Contraception. 2021;103:367-370.

- Clinicaltrials.gov. Adherence with Continuous-dose Oral Contraceptive Evaluation of Self-Selection and Use (ACCESS). Accessed December 5, 2023. https://clinicaltrials.gov/study/NCT04112095

- HRA Pharma. Opill (norgestrel 0.075 mg tablets) for Rx-to-OTC switch. Sponsor Briefing Documents. Joint Meeting of the Nonprescription Drugs Advisory Committee and the Obstetrics, Reproductive, and Urology Drugs Advisory Committee. Meeting dates: 9-10 May 2023. Accessed December 5, 2023. https://www.fda.gov/media/167893/download

- American College of Obstetricians and Gynecologists. Committee Opinion No. 788: Over-the-counter access to hormonal contraception. Obstet Gynecol. 2019;134:e96-105.

- Grindlay K, Key K, Zuniga C, et al. Interest in continued use after participation in a study of over-the-counter progestin-only pills in the United States. Womens Health Rep. 2022;3:904-914.

- Guillard H, Laurora I, Sober S, et al. Modeling the potential benefit of an over-the-counter progestin-only pill in preventing unintended pregnancies in the U.S. Contraception. 2023;117:7-12.

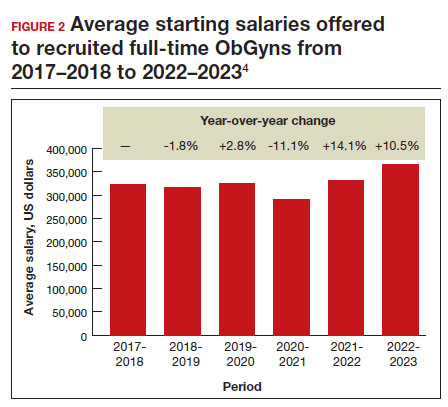

Recruiting ObGyns: Starting salary considerations

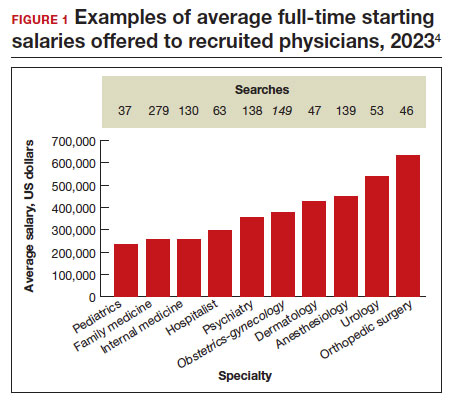

Evidence continues to show that the number of practicing ObGyns lags the growing and diverse US population of women.1 Furthermore, approximately 1 in 3 ObGyns will move, usually once or twice every 10 years.2 Knowing what to expect when being recruited requires a clear understanding of your needs and capabilities and what they may be worth in real time. Some ObGyns elect to use a recruitment firm to begin their search to more objectively assess what is fair and equitable.

Understanding physician compensation involves many factors, such as patient composition, sources of reimbursement, impact of health care systems, and geography.3 Several sources report trends in annual physician compensation, most notably the American Medical Association, medical specialty organizations, and recruitment firms. Sources such as the Medical Group Management Association (MGMA), the American Medical Group Association (AMGA), and Medscape report total compensation.

Determining salaries for new positions

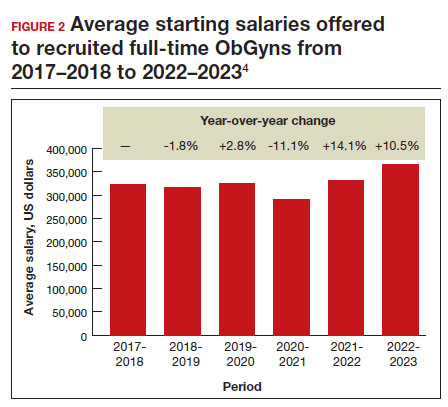

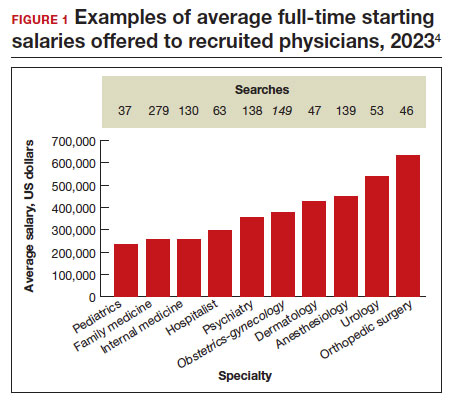

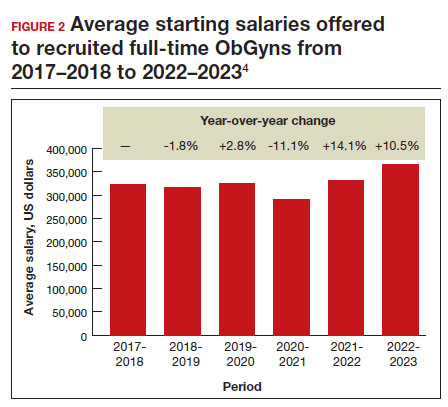

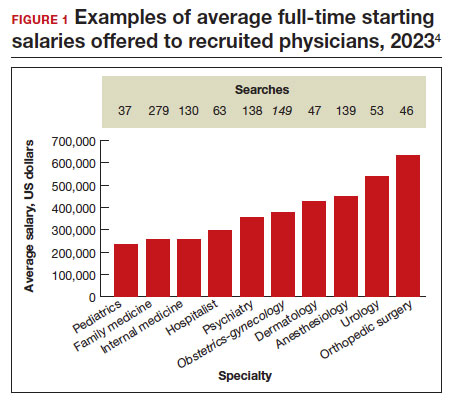

A standard and comprehensive benchmarking resource for salaries in new positions has been the annual review of physician and advanced practitioner recruiting incentives by AMN Healthcare (formerly Merritt Hawkins) Physician Solutions.4 This resource is used by hospitals, medical groups, academics, other health care systems, and others who track trends in physician supply, demand, and compensation. Their 2023 report considered starting salaries for more than 20 medical or surgical specialties.

Specialists’ revenue-generating potential is tracked by annual billings to commercial payers. The average annual billing by a full-time ObGyn ($3.8 million) is about the same as that of other specialties combined.5 As in the past, ObGyns are among the most consistently requested specialists in searches. In 2023, ObGyns were ranked the third most common physician specialists being recruited and tenth as the percentage of physicians per specialty (TABLE).4