Elagolix is effective second-tier treatment for endometriosis-associated dysmenorrhea

PHILADELPHIA – Elagolix is an effective second-tier treatment for endometriosis-associated dysmenorrhea, Charles E. Miller, MD, said at the annual meeting of the American Society for Reproductive Medicine.

Although clinicians need prior authorization and evidence of treatment failure before prescribing elagolix, the drug is a viable option as a second-tier treatment for patients with endometriosis-associated dysmenorrhea, said Dr. Miller, director of minimally invasive gynecologic surgery at Advocate Lutheran General Hospital in Park Ridge, Ill. “We have a drug that is very effective, that has a very low adverse event profile, and is tolerated by the vast majority of our patients.”

First-line options

NSAIDs are first-line treatment for endometriosis-related dysmenorrhea, with acetaminophen used in cases where NSAIDs are contraindicated or cause side effects such as gastrointestinal issues. Hormonal contraceptives also can be used as first-line treatment, divided into estrogen/progestin and progestin-only options that can be combined. Evidence from the literature has shown oral pills decrease pain, compared with placebo, but the decrease is not dose dependent, said Dr. Miller.

“We also know that if you use it continuously or prolonged, we find that there is going to be greater success with dysmenorrhea, and that ultimately you would use a higher-dose pill because of the greater risk of breakthrough when using a lesser dose in a continuous fashion,” he said. “Obviously if you’re not having menses, you’re not going to have dysmenorrhea.”

Other estrogen/progestin hormonal contraceptives, such as the vaginal ring or transdermal patch, also have been shown to decrease dysmenorrhea from endometriosis, with one study showing a reduction from 17% to 6% in moderate to severe dysmenorrhea in patients using the vaginal ring, compared with patients receiving oral contraceptives. In a separate randomized, controlled trial, “dysmenorrhea was more common in patch users, so it doesn’t appear that the patch is quite as effective in terms of reducing dysmenorrhea,” said Dr. Miller (JAMA. 2001 May 9. doi: 10.1001/jama.285.18.2347).

Compared with combination hormone therapy, there has been less research on progestin-only hormonal contraceptives for reducing dysmenorrhea from endometriosis. For example, there is little evidence that depot medroxyprogesterone acetate reduces dysmenorrhea; the evidence instead concerns its causing amenorrhea, with one study showing a 50% amenorrhea rate at 1 year. “The disadvantage, however, in our infertile population is ultimately getting the menses back,” said Dr. Miller.

IUDs using levonorgestrel appear comparable with gonadotropin-releasing hormone (GnRH) agonists in reducing endometriosis-related pain; in one study, most women treated with either of these had visual analogue scores of less than 3 at 6 months of treatment. Between 68% and 75% of women with dysmenorrhea who receive an implantable contraceptive device with etonogestrel report decreased pain, and one meta-analysis reported 75% of women had “complete resolution of dysmenorrhea.” Concerning progestin-only pills, they can be used for endometriosis-related dysmenorrhea, but they are “problematic in that there’s a lot of breakthrough bleeding, and often times that is associated with pain,” said Dr. Miller.

Second-tier options

Injectable GnRH agonists are effective options as second-tier treatments for endometriosis-related dysmenorrhea, but patients are at risk of developing postmenopausal symptoms such as hot flashes, insomnia, spotting, and decreased libido. “One advantage to that is, over the years and particularly something that I’ve done with my endometriosis-related dysmenorrhea, is to utilize add-back with these patients,” said Dr. Miller, who noted that patients on 2.5 mg of norethindrone acetate and 0.5 mg of ethinyl estradiol “do very well” with that combination of add-back therapy.

Elagolix is the most recent second-tier treatment option for these patients, and was studied in the Elaris EM-I and Elaris EM-II trials in a once-daily dose of 150 mg and a twice-daily dose of 200 mg. In Elaris EM-I, 76% of patients in the 200-mg elagolix group had a clinical response, compared with 46% in the 150-mg group and 20% in the placebo group (N Engl J Med. 2017 Jul 6. doi: 10.1056/NEJMoa1700089). However, patients should not be on elagolix at 200 mg for more than 6 months, while patients receiving elagolix at 150 mg can stay on the treatment for up to 2 years.

Patients taking elagolix also showed postmenopausal symptoms, with 24% in the 150-mg group and 46% in the 200-mg group experiencing hot flashes, compared with 9% of patients in the placebo group. While 6% of patients in the 150-mg group and 10% in the 200-mg group discontinued because of adverse events, 1% and 3% of patients in the 150-mg and 200-mg groups, respectively, discontinued because of hot flashes or night sweats. “Symptoms are well tolerated, far different than in comparison with leuprolide acetate and GnRH agonists,” said Dr. Miller.

There also is a benefit in how patients recover from bone mineral density (BMD) changes after remaining on elagolix, Dr. Miller noted. In patients who received elagolix for 12 months at doses of 150 mg and 200 mg, lumbar spine BMD had increased 6 months after discontinuation, with patients in the 150-mg group recovering close to baseline BMD levels. Among patients who discontinued treatment, there also was a quick resumption of menses in both groups: 87% of patients in the 150-mg group and 88% of patients in the 200-mg group who discontinued treatment after 6 months had resumed menses by 2 months after discontinuation, while 95% of patients in the 150-mg group and 91% in the 200-mg group who discontinued after 12 months resumed menses by 2 months after discontinuation.

Dr. Miller reported relationships with AbbVie, Allergan, Blue Seas Med Spa, Espiner Medical, Gynesonics, Halt Medical, Hologic, Karl Storz, Medtronic, and Richard Wolf in the form of consultancies, grants, speakers’ bureau appointments, stock options, royalties, and ownership interests.

EXPERT ANALYSIS FROM ASRM 2019

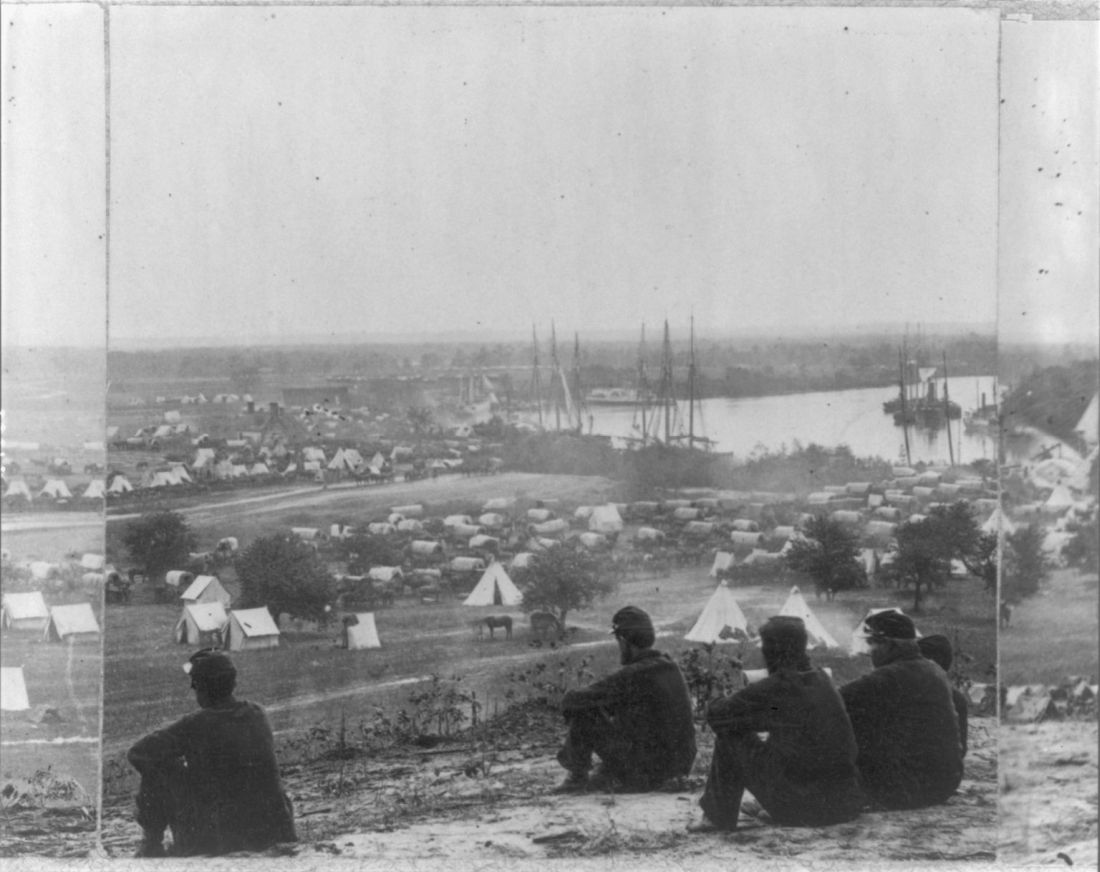

ID Blog: The waters of death

Diseases of the American Civil War, Part I

If cleanliness is next to godliness, then the average soldier in the American Civil War lived just down the street from hell. In a land at war, before the formal tenets of germ theory had spread beyond the confines of Louis Pasteur’s laboratory in France, the lack of basic hygiene, both cultural and situational, coupled with an almost complete lack of curative therapies, created an appalling death toll. Waterborne diseases in particular spared neither general nor private, and neither doctor nor nurse.

“Of all the adversities that Union and Confederate soldiers confronted, none was more deadly or more prevalent than contaminated water,” according to Jeffrey S. Sartin, MD, in his survey of Civil War diseases.1

The Union Army records list 1,765,000 cases of diarrhea or dysentery with 45,000 deaths and 149,000 cases of typhoid fever with 35,000 deaths. Add to these the 1,316,000 cases of malaria (borne by mosquitoes breeding in the waters) with its 10,000 deaths, and it is easy to see how the battlefield itself took second place in service to the grim reaper. (Overall, there were roughly two deaths from disease for every one from wounds.)

The chief waterborne plague, infectious diarrhea – including bacterial, amoebic, and other parasites – as well as cholera and typhoid, was an all-year-long problem, and, with the typical wry humor of soldiers, these maladies were given popular names, including the “Tennessee trots” and the “Virginia quick-step.”

Unsanitary conditions in the camps were primarily to blame, and this problem of sanitation was obvious to many observers at the time.

Despite a lack of knowledge of germ theory, doctors were fully aware of the relationship of unsanitary conditions to disease, even if they ascribed the link to miasmas or particles of filth.

Hospitals, which were under more strict control than the regular army camps, were meticulous about the placement of latrines and about keeping high standards of cleanliness among the patients, including routine washing. However, this was insufficient for complete protection, because what passed for clean in the absence of the knowledge of bacterial contamination was often totally ineffective. As one Civil War surgeon stated: “We operated in old bloodstained and often pus-stained coats, we used undisinfected instruments from undisinfected plush-lined cases. If a sponge (if they had sponges) or instrument fell on the floor it was washed and squeezed in a basin of water and used as if it was clean.”2

Overall, efforts at what passed for sanitation remained a constant goal and constant struggle in the field.

After the First Battle of Bull Run, Women’s Central Association of Relief President Henry W. Bellows met with Secretary of War Simon Cameron to discuss the abysmal sanitary conditions witnessed by WCAR volunteers. This meeting led to the creation of what would become the U.S. Sanitary Commission, which was approved by President Abraham Lincoln on June 13, 1861.

The U.S. Sanitary Commission served as a means for funneling civilian assistance to the military, with volunteers providing assistance in the organization of military hospitals and camps and aiding in the transportation of the wounded. However, despite these efforts, the setup of army camps and the behavior of the soldiers were not often directed toward proper sanitation. “The principal causes of disease, however, in our camps were the same that we have always to deplore and find it so difficult to remedy, simply because citizens suddenly called to the field cannot comprehend that men in masses require the attention of their officers to enforce certain hygienic conditions without which health cannot be preserved.”3

Breaches of sanitation were common in the confines of the camps, despite regulations designed to protect the soldiers. According to one U.S. Army surgeon of the time: “Especially [needed] was policing of the latrines. The trench is generally too shallow, the daily covering ... with dirt is entirely neglected. Large numbers of the men will not use the sinks [latrines], ... but instead every clump of bushes, every fence border in the vicinity.” Another pointed out that, after the Battle of Seven Pines, “the only water was infiltrated with the decaying animal matter of the battlefield.” Commenting on the placement of latrines in one encampment, another surgeon described how “the sink [latrine] is the ground in the vicinity, which slopes down to the stream, from which all water from the camp is obtained.”4

Treatment for diarrhea and dysentery was varied. Opiates were one of the most common treatments for diarrhea, whether in an alcohol solution as laudanum or in pill form, with belladonna being used to treat intestinal cramps, according to Glenna R. Schroeder-Lein in her book “The Encyclopedia of Civil War Medicine.” However, useless or damaging treatments were also prescribed, including the use of calomel (a mercury compound), turpentine, castor oil, and quinine.5

Acute diarrhea and dysentery illnesses occurred in at least 641/1,000 troops per year in the Union army. And even though the death rate was comparatively low (20/1,000 cases), it frequently led to chronic diarrhea, which was responsible for 288 deaths per 1,000 cases, and was the third highest cause of medical discharge after gunshot wounds and tuberculosis, according to Ms. Schroeder-Lein.

Although the American Civil War was the last major conflict before the spread of the knowledge of germ theory, the struggle to prevent the spread of waterborne diseases under wartime conditions remains ongoing. Hygiene is difficult under conditions of abject poverty and especially under conditions of armed conflict, and until the era of curative antibiotics there was no recourse.

Antibiotics are not the final solution, for antibiotic resistance in intestinal disease pathogens, as outlined in a recent CDC report, is an increasing problem.6 For example, nontyphoidal Salmonella causes an estimated 1.35 million infections, 26,500 hospitalizations, and 420 deaths each year in the United States, with 16% of strains being resistant to at least one essential antibiotic. On a global scale, according to the World Health Organization, poor sanitation causes up to 432,000 diarrheal deaths annually and is linked to the transmission of other diseases like cholera, dysentery, typhoid, hepatitis A, and polio.7

With regard to actual epidemics, the world is only a hygienic crisis away from a major outbreak of dysentery (the last occurring between 1969 and 1972, when 20,000 people in Central America died), according to researchers who have detected antibiotic resistance in all but 1% of circulating Shigella dysenteriae strains surveyed since the 1990s. “This bacterium is still in circulation, and could be responsible for future epidemics if conditions should prove favorable – such as a large gathering of people without access to drinking water or treatment of human waste,” wrote François-Xavier Weill of the Pasteur Institute’s Enteric Bacterial Pathogens Unit.8

References

1. Sartin JS. Clin Infect Dis. 1993;16:580-4. (Correction published in 2002).

2. Civil War Battlefield Surgery. eHistory. The Ohio State University.

3. “Myths About Antiseptics and Camp Life – George Wunderlich,” published online Oct. 11, 2011. http://civilwarscholars.com/2011/10/myths-about-antiseptics-and-camp-life-george-wunderlich/

4. Dorwart BB. “Death is in the Breeze: Disease during the American Civil War” (The National Museum of the American Civil War Press, 2009).

5. Schroeder-Lein GR. “The Encyclopedia of Civil War Medicine” (New York: M. E. Sharpe, 2008).

6. “Antibiotic resistance threats in the United States 2019” Centers for Disease Control and Prevention.

7. “New report exposes horror of working conditions for millions of sanitation workers in the developing world,” World Health Organization. 2019 Nov 14.

8. Grant B. Origins of Dysentery. The Scientist. Published online March 22, 2016.

Mark Lesney is the managing editor of MDedge.com/IDPractioner. He has a PhD in plant virology and a PhD in the history of science, with a focus on the history of biotechnology and medicine. He has served as an adjunct assistant professor of the department of biochemistry and molecular & cellular biology at Georgetown University, Washington.

Diseases of the American Civil War, Part I

Diseases of the American Civil War, Part I

If cleanliness is next to godliness, then the average soldier in the American Civil War lived just down the street from hell. In a land at war, before the formal tenets of germ theory had spread beyond the confines of Louis Pasteur’s laboratory in France, the lack of basic hygiene, both cultural and situational, coupled to an almost complete lack of curative therapies created an appalling death toll. Waterborne diseases in particular spared neither general nor private, and neither doctor nor nurse.

“Of all the adversities that Union and Confederate soldiers confronted, none was more deadly or more prevalent than contaminated water,” according to Jeffrey S. Sartin, MD, in his survey of Civil War diseases.1

The Union Army records list 1,765,000 cases of diarrhea or dysentery with 45,000 deaths and 149,000 cases of typhoid fever with 35,000 deaths. Add to these the 1,316,000 cases of malaria (borne by mosquitoes breeding in the waters) with its 10,000 deaths, and it is easy to see how the battlefield itself took second place in service to the grim reaper. (Overall, there were roughly two deaths from disease for every one from wounds.)

The chief waterborne plague, infectious diarrhea – including bacterial, amoebic, and other parasites – as well as cholera and typhoid, was an all-year-long problem, and, with the typical wry humor of soldiers, these maladies were given popular names, including the “Tennessee trots” and the “Virginia quick-step.”

Unsanitary conditions in the camps were primarily to blame, and this problem of sanitation was obvious to many observers at the time.

Despite a lack of knowledge of germ theory, doctors were fully aware of the relationship of unsanitary conditions to disease, even if they ascribed the link to miasmas or particles of filth.

Hospitals, which were under more strict control than the regular army camps, were meticulous about the placement of latrines and about keeping high standards of cleanliness among the patients, including routine washing. However, this was insufficient for complete protection, because what passed for clean in the absence of the knowledge of bacterial contamination was often totally ineffective. As one Civil War surgeon stated: “We operated in old bloodstained and often pus-stained coats, we used undisinfected instruments from undisinfected plush-lined cases. If a sponge (if they had sponges) or instrument fell on the floor it was washed and squeezed in a basin of water and used as if it was clean.”2

Overall, efforts at what passed for sanitation remained a constant goal and constant struggle in the field.

After the First Battle of Bull Run, Women’s Central Association of Relief President Henry W. Bellows met with Secretary of War Simon Cameron to discuss the abysmal sanitary conditions witnessed by WCAR volunteers. This meeting led to the creation of what would become the U.S. Sanitary Commission, which was approved by President Abraham Lincoln on June 13, 1861.

The U.S. Sanitary Commission served as a means for funneling civilian assistance to the military, with volunteers providing assistance in the organization of military hospitals and camps and aiding in the transportation of the wounded. However, despite these efforts, the setup of army camps and the behavior of the soldiers were not often directed toward proper sanitation. “The principal causes of disease, however, in our camps were the same that we have always to deplore and find it so difficult to remedy, simply because citizens suddenly called to the field cannot comprehend that men in masses require the attention of their officers to enforce certain hygienic conditions without which health cannot be preserved.”3

Breaches of sanitation were common in the confines of the camps, despite regulations designed to protect the soldiers. According to one U.S. Army surgeon of the time: “Especially [needed] was policing of the latrines. The trench is generally too shallow, the daily covering ... with dirt is entirely neglected. Large numbers of the men will not use the sinks [latrines], ... but instead every clump of bushes, every fence border in the vicinity.” Another pointed out that, after the Battle of Seven Pines, “the only water was infiltrated with the decaying animal matter of the battlefield.” Commenting on the placement of latrines in one encampment, another surgeon described how “the sink [latrine] is the ground in the vicinity, which slopes down to the stream, from which all water from the camp is obtained.”4

Treatment for diarrhea and dysentery was varied. Opiates were one of the most common treatments for diarrhea, whether in an alcohol solution as laudanum or in pill form, with belladonna being used to treat intestinal cramps, according to Glenna R. Schroeder-Lein in her book “The Encyclopedia of Civil War Medicine.” However, useless or damaging treatments were also prescribed, including the use of calomel (a mercury compound), turpentine, castor oil, and quinine.5

Acute diarrhea and dysentery illnesses occurred in at least 641/1,000 troops per year in the Union army. And even though the death rate was comparatively low (20/1,000 cases), it frequently led to chronic diarrhea, which was responsible for 288 deaths per 1,000 cases, and was the third highest cause of medical discharge after gunshot wounds and tuberculosis, according to Ms. Schroeder-Lein.

Although the American Civil War was the last major conflict before the spread of the knowledge of germ theory, the struggle to prevent the spread of waterborne diseases under wartime conditions remains ongoing. Hygiene is difficult under conditions of abject poverty and especially under conditions of armed conflict, and until the era of curative antibiotics there was no recourse.

Antibiotics are not the final solution, however, because antibiotic resistance in intestinal disease pathogens is an increasing problem, as outlined in a recent CDC report.6 For example, nontyphoidal Salmonella causes an estimated 1.35 million infections, 26,500 hospitalizations, and 420 deaths each year in the United States, with 16% of strains being resistant to at least one essential antibiotic. On a global scale, according to the World Health Organization, poor sanitation causes up to 432,000 diarrheal deaths annually and is linked to the transmission of other diseases like cholera, dysentery, typhoid, hepatitis A, and polio.7

With regard to actual epidemics, the world is only a hygienic crisis away from a major outbreak of dysentery (the last occurring between 1969 and 1972, when 20,000 people in Central America died), according to researchers who have detected antibiotic resistance in all but 1% of circulating Shigella dysenteriae strains surveyed since the 1990s. “This bacterium is still in circulation, and could be responsible for future epidemics if conditions should prove favorable – such as a large gathering of people without access to drinking water or treatment of human waste,” wrote François-Xavier Weill of the Pasteur Institute’s Enteric Bacterial Pathogens Unit.8

References

1. Sartin JS. Clin Infect Dis. 1993;16:580-4. (Correction published in 2002).

2. Civil War Battlefield Surgery. eHistory. The Ohio State University.

3. “Myths About Antiseptics and Camp Life – George Wunderlich,” published online Oct. 11, 2011. http://civilwarscholars.com/2011/10/myths-about-antiseptics-and-camp-life-george-wunderlich/

4. Dorwart BB. “Death is in the Breeze: Disease during the American Civil War” (The National Museum of the American Civil War Press, 2009).

5. Schroeder-Lein GR. “The Encyclopedia of Civil War Medicine” (New York: M. E. Sharpe, 2008).

6. “Antibiotic resistance threats in the United States 2019” Centers for Disease Control and Prevention.

7. “New report exposes horror of working conditions for millions of sanitation workers in the developing world,” World Health Organization. 2019 Nov 14.

8. Grant B. Origins of Dysentery. The Scientist. Published online March 22, 2016.

Mark Lesney is the managing editor of MDedge.com/IDPractioner. He has a PhD in plant virology and a PhD in the history of science, with a focus on the history of biotechnology and medicine. He has served as an adjunct assistant professor of the department of biochemistry and molecular & cellular biology at Georgetown University, Washington.

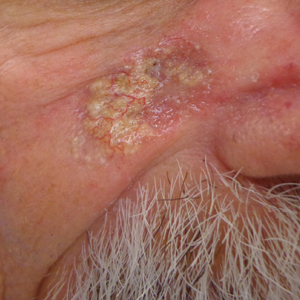

SPIRIT-H2H results confirm superiority of ixekizumab over adalimumab for PsA

ATLANTA – Ixekizumab (Taltz) provided significantly greater improvement in joint and skin symptoms, compared with adalimumab (Humira), in biologic-naive patients with active psoriatic arthritis (PsA), according to final 52-week safety and efficacy results from the randomized SPIRIT-H2H study.

The high-affinity monoclonal antibody against interleukin-17A also performed at least as well as the tumor necrosis factor (TNF)–inhibitor adalimumab across multiple PsA domains and regardless of methotrexate use, Josef Smolen, MD, reported during a late-breaking abstract session at the annual meeting of the American College of Rheumatology.

Multiple biologic disease-modifying antirheumatic drugs (bDMARDs) are available for the treatment of PsA, but few studies have directly compared their efficacy and safety, said Dr. Smolen of the Medical University of Vienna. He noted that the SPIRIT-H2H study aimed to compare ixekizumab and adalimumab and also to address “one of the most clinically relevant questions for clinicians,” which relates to the efficacy of bDMARDs with and without concomitant methotrexate.

Ixekizumab is approved for adults with active PsA and moderate to severe plaque psoriasis, but TNF inhibitors like adalimumab have long been considered the gold standard for PsA treatment, he explained.

Of 283 patients with PsA randomized to receive ixekizumab and 283 randomized to receive adalimumab, 87% and 84%, respectively, completed week 52 of the head-to-head, open-label study comparing the bDMARDs. Treatment with ixekizumab achieved the primary endpoint of simultaneous improvement of 50% on ACR response criteria (ACR50) and 100% on the Psoriasis Area and Severity Index (PASI100) in 39% of patients, which was significantly higher than the rate of 26% with adalimumab, Dr. Smolen said.
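As a rough check on the size of that difference, the sketch below runs an unadjusted two-proportion z-test on the rounded percentages reported above. It assumes all 283 patients in each arm are in the denominator and is not the trial's prespecified analysis; the function name is illustrative only.

```python
import math

def two_proportion_z(p1, n1, p2, n2):
    """Unadjusted two-sided z-test for a difference between two proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value

# Reported simultaneous ACR50 + PASI100 response: 39% vs. 26%, 283 patients per arm (assumed denominators)
z, p_value = two_proportion_z(0.39, 283, 0.26, 283)
risk_difference = 0.39 - 0.26
print(f"difference = {risk_difference:.0%}, z = {z:.2f}, p = {p_value:.4f}")
```

Because the inputs are rounded percentages rather than patient counts, the result is only an approximation of what the investigators would have calculated.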

Ixekizumab also performed at least as well as adalimumab for the secondary outcome measures of ACR50 response (50% in both groups) and PASI100 response (64% vs. 41%), as well as for all other outcome measures, including multiple musculoskeletal PsA domains, he said.

“Remarkably ... at 1 year, more than one-third of the patients achieved an ACR70 in both groups, and half of the patients achieved an ACR50,” he added, noting that the ACR100 responses were in line with previous investigations.

Stratification by methotrexate use showed that the simultaneous ACR50 and PASI100 response rates were improved with ixekizumab versus adalimumab both in users and nonusers of methotrexate (39% vs. 30% and 40% vs. 20%, respectively). This finding highlights the ongoing debate about whether TNF inhibitors should or should not be used with methotrexate for PsA.

“This study was not adequately powered to say that, but there is some indication, and I think that this is food for thought for future further analysis because the data in the literature are discrepant in this respect,” Dr. Smolen said.

In non-methotrexate users in SPIRIT-H2H, the ACR20 responses were 72% with ixekizumab vs. 60% with adalimumab, ACR50 responses were 53% vs. 40%, and ACR70 responses were 41% vs. 27%, respectively, he said, noting that the difference for ACR70 was statistically significant and that the ACR70 response with ixekizumab was about the same as the ACR50 for adalimumab.

As for ACR20, ACR50, and ACR70 responses in methotrexate users, “the lines criss-crossed” early on, he said, but all were “slightly superior” with adalimumab, compared with ixekizumab, at 52 weeks (75% vs. 68%, 56% vs. 48%, and 39% vs. 32%, respectively).

Study participants had a mean age of 48 years and had active PsA with at least 3/68 tender joints, at least 3/66 swollen joints, at least 3% psoriasis body surface area involvement, no prior treatment with bDMARDs, and prior inadequate response to one or more conventional synthetic DMARDs. Treatment was dosed according to drug labeling through 52 weeks.

The safety profiles of both agents were consistent with previous reports; treatment-emergent adverse events occurred in 73.9% of ixekizumab and 68.6% of adalimumab patients, and serious adverse events occurred in 4.2% and 12.4%, respectively.

“On the other hand, ixekizumab had more injection site reactions: 11% vs. close to 4%,” he said, noting that 4.2% of the ixekizumab patients and 7.4% of the adalimumab patients discontinued treatment because of adverse events. No deaths occurred in either group.

As reported previously in Annals of the Rheumatic Diseases, ixekizumab was superior to adalimumab for simultaneous achievement of ACR50 and PASI100 at 24 weeks, and these final 52-week results confirm those findings, he said.

The study was funded by Eli Lilly, which markets ixekizumab. Dr. Smolen reported research grants and/or honoraria from Eli Lilly and AbbVie, which markets adalimumab, as well as many other pharmaceutical companies.

SOURCE: Smolen J et al. Arthritis Rheumatol. 2019;71(suppl 10), Abstract L20.

REPORTING FROM ACR 2019

Chronic pain more common in women with ADHD or ASD

Women with ADHD, autism spectrum disorder (ASD), or both report higher rates of chronic pain, which should be accounted for in the treatment received, new research shows.

In some cases, treating the ADHD might lower the pain, reported Karin Asztély of the Sahlgrenska Academy Institute of Neuroscience, Göteborg, Sweden, and associates. The study was published in the Journal of Pain Research.

The research included 77 Swedish women with ADHD, ASD, or both from a larger prospective longitudinal study. From 2015 to 2018, when the women were aged 19-37 years, they were contacted by mail and phone, and interviewed about symptoms of pain. This included chronic widespread or regional symptoms of pain; widespread pain was pain that lasted more than 3 months and was described both above and below the waist, on both sides of the body, and in the axial skeleton. Any pain that lasted more than 3 months but did not meet those other requirements was listed as chronic regional pain.

Chronic pain of any kind was reported by 59 participants (76.6%). Chronic widespread pain was reported by 25 participants (32.5%), and chronic regional pain by 34 (44.2%). Both rates were higher than the prevalences of 11.9% and 23.9%, respectively, reported in a cross-sectional survey of Swedish participants (J Rheumatol. 2001 Jun;28[6]:1369-77).
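The percentages above follow directly from the reported counts, and a short sketch can reproduce them along with the ratio of each rate to the earlier survey figure. The variable names are illustrative, and the ratios are simple unadjusted comparisons between two different samples, not results reported by the investigators.

```python
TOTAL = 77  # women in the study sample

counts = {
    "any chronic pain": 59,
    "chronic widespread pain": 25,
    "chronic regional pain": 34,
}
# Prevalences (%) from the earlier cross-sectional survey cited in the article
survey_pct = {"chronic widespread pain": 11.9, "chronic regional pain": 23.9}

# Percentage of the 77 participants reporting each type of pain
study_pct = {name: round(100 * n / TOTAL, 1) for name, n in counts.items()}

# Unadjusted ratio of the study rate to the survey rate
ratios = {name: round(study_pct[name] / survey_pct[name], 1) for name in survey_pct}

for name, pct in study_pct.items():
    print(f"{name}: {pct}%")
for name, ratio in ratios.items():
    print(f"{name}: {ratio} times the survey prevalence")
```

The widespread and regional counts (25 and 34) sum to the 59 participants with any chronic pain, which matches the article's definition of regional pain as any chronic pain not meeting the widespread criteria.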

Among the limitations of the latest study are the small sample size and the absence of healthy controls; however, the researchers thought this was compensated for by the comparisons with previous research.

“... and possible unrecognized ASD and/or ADHD in women with chronic pain,” they concluded.

The investigators reported no conflicts of interest.

SOURCE: Asztély et al. J Pain Res. 2019 Oct 18;12:2925-32.

FROM THE JOURNAL OF PAIN RESEARCH

Geriatric IBD hospitalization carries steep inpatient mortality

SAN ANTONIO – Jeffrey Schwartz, MD, reported at the annual meeting of the American College of Gastroenterology.

The magnitude of the age-related increased risk highlighted in this large national study was strikingly larger than the differential inpatient mortality between geriatric and nongeriatric patients hospitalized for conditions other than inflammatory bowel disease (IBD). It’s a finding that reveals a major unmet need for improved systems of care for elderly hospitalized IBD patients, according to Dr. Schwartz, an internal medicine resident at Beth Israel Deaconess Medical Center, Boston.

“Given the high prevalence of IBD patients that require inpatient admission, as well as the rapidly aging nature of the U.S. population, it’s our hope that this study will provide some insight to drive efforts to improve standardized guideline-directed therapy and propose interventions to help close what I think is a very important gap in clinical care,” he said.

It’s well established that a second peak of IBD diagnoses occurs in 50- to 70-year-olds. At present, roughly 30% of all individuals carrying the diagnosis of IBD are over age 65, and with the graying of the baby-boomer population, this proportion is climbing.

Dr. Schwartz presented a study of the National Inpatient Sample for 2016, which is a representative sample comprising 20% of all U.S. hospital discharges for that year, the most recent year for which the data are available. The study population included all 71,040 patients hospitalized for acute management of Crohn’s disease or its immediate complications, of whom 10,095 were older than 75 years, as well as the 35,950 patients hospitalized for ulcerative colitis, 8,285 of whom were over 75.

Inpatient mortality occurred in 1.5% of the geriatric admissions, compared with 0.2% of nongeriatric admissions for Crohn’s disease. Similarly, the inpatient mortality rate in geriatric patients with ulcerative colitis was 1.0% versus 0.1% in patients under age 75 hospitalized for ulcerative colitis.

There are lots of reasons why the management of geriatric patients with IBD is particularly challenging, Dr. Schwartz noted. They have a higher burden of comorbid conditions, worse nutritional status, and increased risks of infection and cancer. In a regression analysis that attempted to control for such confounders using the Elixhauser mortality index, the nongeriatric Crohn’s disease patients were an adjusted 75% less likely to die in the hospital than those who were older. Nongeriatric ulcerative colitis patients were 81% less likely to die than geriatric patients with the disease. In contrast, nongeriatric patients admitted for reasons other than IBD had only an adjusted 50% lower risk of inpatient mortality than those who were older than 75.
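
One way to read these adjusted figures is to convert each "X% less likely" statement into a fold difference for the older group. The sketch below assumes the percentages can be treated as one minus a risk (or odds) ratio, which the presentation does not state explicitly; the function name is hypothetical.

```python
def fold_higher_risk(percent_lower: float) -> float:
    """Convert 'group A is X% less likely than group B' into
    'group B has N times the risk of group A'.

    Assumes the percentage equals (1 - ratio) * 100, where ratio is the
    risk or odds ratio of A relative to B.
    """
    ratio = 1 - percent_lower / 100
    if ratio <= 0:
        raise ValueError("percent_lower must be below 100")
    return 1 / ratio


# Adjusted figures quoted in the article (nongeriatric vs. geriatric).
adjusted = {
    "Crohn's disease": 75,
    "ulcerative colitis": 81,
    "non-IBD admissions": 50,
}

for condition, pct in adjusted.items():
    print(f"{condition}: about {fold_higher_risk(pct):.1f}x in patients over 75")
```

Under that assumption, the adjusted risk in geriatric patients works out to roughly four to five times that of younger patients for IBD admissions, compared with about two times for admissions unrelated to IBD.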

Of note, in this analysis adjusted for confounders, there was no difference between geriatric and nongeriatric IBD patients in terms of resource utilization as reflected in average length of stay and hospital charges, Dr. Schwartz continued.

Asked if he could shed light on any specific complications that drove the age-related disparity in inpatient mortality in the IBD population, the physician replied that he and his coinvestigators were thwarted in their effort to do so because the inpatient mortality of 1.0%-1.5% was so low that further breakdown as to causes of death would have been statistically unreliable. It might be possible to do so successfully by combining several years of National Inpatient Sample data. That being said, it’s reasonable to hypothesize that cardiovascular complications are an important contributor, he added.

Dr. Schwartz reported having no financial conflicts regarding his study, conducted free of commercial support.

REPORTING FROM ACG 2019

Key clinical point: A major unmet need exists for better guideline-directed management of geriatric patients hospitalized for inflammatory bowel disease.

Major finding: The inpatient mortality rate among patients older than 75 years hospitalized for management of inflammatory bowel disease is four to five times higher than in those who are younger.

Study details: This was a retrospective analysis of all 106,990 hospital admissions for management of inflammatory bowel disease included in the 2016 National Inpatient Sample.

Disclosures: The presenter reported having no financial conflicts regarding his study, conducted free of commercial support.

Source: Schwartz J. ACG 2019, Abstract 42.

Two national analyses confirm safety of 9vHPV vaccine

most of which cannot be definitively tied to the vaccine, according to two large studies published simultaneously in Pediatrics.

“The body of evidence on the safety of 9vHPV now includes prelicensure clinical trial data on 15,000 study subjects, reassuring results from postlicensure near real-time sequential monitoring by the Centers for Disease Control and Prevention’s Vaccine Safety Datalink, on approximately 839,000 doses administered, and our review of VAERS [Vaccine Adverse Event Reporting System] reports over a 3-year period, during which time approximately 28 million doses were distributed in the United States,” Tom T. Shimabukuro, MD, and colleagues reported in Pediatrics.

James G. Donahue, PhD, and colleagues, authors of the Vaccine Safety Datalink study published in the same issue, concluded much the same thing.

The new numbers bolster extant safety data on the vaccine, which was approved in 2015, wrote Dr. Donahue, an epidemiologist at the Marshfield (Wis.) Clinic Research Institute, and coauthors. “With this large observational study, we contribute reassuring postlicensure data that will help bolster the safety profile of 9vHPV. Although we detected several unexpected potential safety signals, none were confirmed after further evaluation.”

The Vaccine Safety Datalink study of 838,991 doses looked for safety signals in a prespecified group of potential events, including anaphylaxis, appendicitis, Guillain-Barré syndrome, chronic inflammatory demyelinating polyneuropathy, pancreatitis, seizures, stroke, and venous thromboembolism.

Dr. Donahue and coauthors used real-time vaccination data and time-matched historical controls to evaluate any changes in expected disease rates, compared with those occurring in vaccine recipients.

Most doses in the study (76%) were given to children aged 9-17 years, with 48% going to girls. The remaining 24% of doses were given to persons aged 18-26 years, with 64% going to women.

The analysis found potential safety signals in allergic reactions (43 cases), appendicitis (30 cases), pancreatitis (8 cases), and syncope (67 cases). None of these were confirmed after further investigation.

“The safety profile of 9vHPV is favorable and comparable to that of its predecessor, 4vHPV,” Dr. Donahue and associates concluded.

The VAERS analysis was similarly reassuring. It examined all reported adverse events, not predetermined events.

Among approximately 28 million doses distributed, there were 7,244 adverse event reports, a crude rate of about 259 reports per million doses. Of these, 97% were nonserious, wrote Dr. Shimabukuro, deputy director of the CDC’s Immunization Safety Office, and colleagues.
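
The crude reporting rate follows directly from the two counts quoted above. A minimal sketch of that arithmetic is below; it assumes the dose figure is exactly 28 million, although the article gives it only as an approximation, and the variable names are illustrative.

```python
# Figures quoted in the article; the dose count is approximate.
reports = 7_244
doses_distributed = 28_000_000  # "approximately 28 million" (assumed exact here)

# Crude reporting rate expressed two ways.
reports_per_million_doses = reports / doses_distributed * 1_000_000
doses_per_report = doses_distributed / reports

print(f"{reports_per_million_doses:.0f} reports per million doses")
print(f"about 1 report per {doses_per_report:,.0f} doses")
```

Because VAERS is a passive reporting system, a rate computed this way describes reports received per dose distributed, not the incidence of events caused by the vaccine.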