Ultraprocessed Foods May Be an Independent Risk Factor for Poor Brain Health

Ultraprocessed foods may be an independent risk factor for poor brain health, new research suggests.

Observations from a large cohort of adults followed for more than 10 years suggested that eating more ultraprocessed foods (UPFs) may increase the risk for cognitive decline and stroke, while eating more unprocessed or minimally processed foods may lower the risk.

“The first key takeaway is that the type of food that we eat matters for brain health, but it’s equally important to think about how it’s made and handled when thinking about brain health,” said study investigator W. Taylor Kimberly, MD, PhD, with Massachusetts General Hospital in Boston.

“The second is that it’s not just all a bad news story because while increased consumption of ultra-processed foods is associated with a higher risk of cognitive impairment and stroke, unprocessed foods appear to be protective,” Dr. Kimberly added.

The study was published online on May 22 in Neurology.

Food Processing Matters

UPFs are highly manipulated, low in protein and fiber, and packed with added ingredients, including sugar, fat, and salt. Examples of UPFs are soft drinks, chips, chocolate, candy, ice cream, sweetened breakfast cereals, packaged soups, chicken nuggets, hot dogs, and fries.

Unprocessed or minimally processed foods include simple cuts of meat such as beef, pork, and chicken, as well as vegetables and fruits.

Research has shown associations between high UPF consumption and increased risk for metabolic and neurologic disorders.

As reported previously, in the ELSA-Brasil study, higher intake of UPFs was significantly associated with a faster rate of decline in executive and global cognitive function.

Yet, it’s unclear whether the extent of food processing contributes to the risk of adverse neurologic outcomes independent of dietary patterns.

Dr. Kimberly and colleagues examined the association of food processing levels with the risk for cognitive impairment and stroke in the long-running REGARDS study, a large prospective US cohort of Black and White adults aged 45 years and older.

Food processing levels were defined by the NOVA food classification system, which ranges from unprocessed or minimally processed foods (NOVA1) to UPFs (NOVA4). Dietary patterns were characterized based on food frequency questionnaires.

In the cognitive impairment cohort, 768 of 14,175 adults who had no evidence of impairment at baseline and who underwent follow-up testing developed cognitive impairment.

Diet an Opportunity to Protect Brain Health

In multivariable Cox proportional hazards models adjusting for age, sex, high blood pressure, and other factors, a 10% increase in relative intake of UPFs was associated with a 16% higher risk for cognitive impairment (hazard ratio [HR], 1.16). Conversely, a higher intake of unprocessed or minimally processed foods correlated with a 12% lower risk for cognitive impairment (HR, 0.88).

In the stroke cohort, 1108 of 20,243 adults without a history of stroke had a stroke during follow-up.

In multivariable Cox models, greater intake of UPFs was associated with an 8% increased risk for stroke (HR, 1.08), while greater intake of unprocessed or minimally processed foods correlated with a 9% lower risk for stroke (HR, 0.91).

The association between UPF intake and stroke risk was stronger among Black adults than among White adults (UPF-by-race interaction HR, 1.15).

The associations between UPFs and both cognitive impairment and stroke were independent of adherence to the Mediterranean diet, the Dietary Approaches to Stop Hypertension (DASH) diet, and the Mediterranean-DASH Intervention for Neurodegenerative Delay diet.

These results “highlight the possibility that we have the capacity to maintain our brain health and prevent poor brain health outcomes by focusing on unprocessed foods in the long term,” Dr. Kimberly said.

He cautioned, however, that this was “an observational study and not an interventional study, so we can’t say with certainty that substituting ultra-processed foods with unprocessed foods will definitively improve brain health,” adding, “That’s a clinical trial question that has not been done but our results certainly are provocative.”

Consider UPFs in National Guidelines?

The coauthors of an accompanying editorial said the “robust” results from Kimberly and colleagues highlight the “significant role of food processing levels and their relationship with adverse neurologic outcomes, independent of conventional dietary patterns.”

Peipei Gao, MS, with Harvard T.H. Chan School of Public Health, and Zhendong Mei, PhD, with Harvard Medical School, both in Boston, noted that the mechanisms underlying the impact of UPFs on adverse neurologic outcomes “can be attributed not only to their nutritional profiles,” including poor nutrient composition and high glycemic load, “but also to the presence of additives including emulsifiers, colorants, sweeteners, and nitrates/nitrites, which have been associated with disruptions in the gut microbial ecosystem and inflammation.”

“Understanding how food processing levels are associated with human health offers a fresh take on the saying ‘you are what you eat,’ ” the editorialists wrote.

This new study, they noted, adds to the evidence by highlighting the link between UPFs and brain health independent of traditional dietary patterns, and it “raises questions about whether considerations of UPFs should be included in dietary guidelines, as well as national and global public health policies for improving brain health.”

The editorialists called for large prospective population studies and randomized controlled trials to better understand the link between UPF consumption and brain health. “In addition, mechanistic studies are warranted to identify specific foods, detrimental processes, and additives that play a role in UPFs and their association with neurologic disorders,” they concluded.

Funding for the study was provided by the National Institute of Neurological Disorders and Stroke, the National Institute on Aging, National Institutes of Health, and Department of Health and Human Services. The authors and editorial writers had no relevant disclosures.

A version of this article appeared on Medscape.com.

FROM NEUROLOGY

Will the Federal Non-Compete Ban Take Effect?

The Federal Trade Commission (FTC) has issued a final rule banning non-compete agreements nationwide (with very limited exceptions). The final rule will not go into effect until 120 days after its publication in the Federal Register, which took place on May 7, and numerous legal challenges appear to be on the horizon.

The principal components of the rule are as follows:

- After the effective date, most non-compete agreements (which prevent departing employees from signing with a new employer for a defined period within a specific geographic area) are banned nationwide.

- The rule exempts certain “senior executives,” ie, individuals who earn more than $151,164 annually and serve in policy-making positions.

- There is another major exception for non-competes connected with a sale of a business.

- While not explicitly stated, the rule arguably exempts nonprofits, tax-exempt hospitals, and other tax-exempt entities.

- Employers must provide verbal and written notice to employees regarding existing agreements, which would be voided under the rule.

The final rule is the latest skirmish in an ongoing, years-long debate. Twelve states have already put non-compete bans in place, according to a recent paper, and they may serve as a harbinger of things to come should the federal ban go into effect. State rules vary in their specifics as states respond to local market conditions: some ban all non-compete agreements outright, while others limit them based on variables such as income and employment circumstances. Of course, should the federal ban take effect, it would take precedence over any state rules that conflict with it.

In drafting the rule, the FTC reasoned that non-compete clauses constitute a restraint of trade and that eliminating them could increase worker earnings and lower health care costs by billions of dollars. In its statements on the proposed ban, the FTC claimed that the rule could lower health spending across the board by almost $150 billion per year and increase workers’ earnings by nearly $300 billion per year. The agency cited a large body of research showing that non-competes make it harder for workers to move between jobs and can raise prices for goods and services, while suppressing wages and inhibiting the creation of new businesses.

Most physicians bound by non-compete agreements strongly favor the new rule, because it would give them more control over their careers and expand their practice and income opportunities. It would allow them to take a new job with a competing organization, reversing a long-standing practice that hospitals and health care systems have relied on heavily to keep staff in place.

The rule would, however, leave intact the “non-solicitation” agreements that many health care organizations use. That means that if a physician leaves an employer, he or she cannot reach out to former patients and colleagues to bring them along or invite them to join him or her at the new practice.

The FTC has specified, however, that if a non-solicitation agreement has the “equivalent effect” of a non-compete, the agency would treat it as one. So even if the non-solicitation carve-out stands, a given agreement could be contested as violating the non-compete ban. It is worth reading all the fine print should the rule move forward.

Physicians in independent practices who employ physician assistants and nurse practitioners have expressed concern that their expensively trained employees might be tempted to accept a nearby, higher-paying position. The non-solicitation clause would theoretically prevent those employees from taking patients and co-workers with them, unless it were successfully contested. Many questions remain.

Further complicating the non-compete ban is its possible impact on nonprofit institutions. Most hospitals structured as nonprofits would theoretically be exempt from the rule, because the FTC Act gives the Commission jurisdiction over for-profit companies only, although the rule itself does not say so explicitly. This would create an unfair advantage for nonprofits, which could continue writing non-compete clauses with impunity.

All of these questions may be moot, of course, because a number of powerful entities with deep pockets have lined up in opposition to the rule. Some have even questioned the FTC’s authority to issue the rule at all, on the grounds that Section 5 of the FTC Act does not give it the authority to police labor markets. The US Chamber of Commerce has already filed a lawsuit. Other large groups in opposition include the American Medical Group Association, the American Hospital Association, and numerous large hospital and health care networks.

Only time will tell whether this issue will be regulated on a national level or remain the purview of each individual state.

Dr. Eastern practices dermatology and dermatologic surgery in Belleville, N.J. He is the author of numerous articles and textbook chapters, and is a longtime monthly columnist for Dermatology News.

(with very limited exceptions). The final rule will not go into effect until 120 days after its publication in the Federal Register, which took place on May 7, and numerous legal challenges appear to be on the horizon.

The principal components of the rule are as follows:

- After the effective date, most non-compete agreements (which prevent departing employees from signing with a new employer for a defined period within a specific geographic area) are banned nationwide.

- The rule exempts certain “senior executives,” ie individuals who earn more than $151,164 annually and serve in policy-making positions.

- There is another major exception for non-competes connected with a sale of a business.

- While not explicitly stated, the rule arguably exempts non-profits, tax-exempt hospitals, and other tax-exempt entities.

- Employers must provide verbal and written notice to employees regarding existing agreements, which would be voided under the rule.

The final rule is the latest skirmish in an ongoing, years-long debate. Twelve states have already put non-compete bans in place, according to a recent paper, and they may serve as a harbinger of things to come should the federal ban go into effect. Each state rule varies in its specifics as states respond to local market conditions. While some states ban all non-compete agreements outright, others limit them based on variables, such as income and employment circumstances. Of course, should the federal ban take effect, it will supersede whatever rules the individual states have in place.

In drafting the rule, the FTC reasoned that non-compete clauses constitute restraint of trade, and eliminating them could potentially increase worker earnings as well as lower health care costs by billions of dollars. In its statements on the proposed ban, the FTC claimed that it could lower health spending across the board by almost $150 billion per year and return $300 million to workers each year in earnings. The agency cited a large body of research that non-competes make it harder for workers to move between jobs and can raise prices for goods and services, while suppressing wages for workers and inhibiting the creation of new businesses.

Most physicians affected by non-compete agreements heavily favor the new rule, because it would give them more control over their careers and expand their practice and income opportunities. It would allow them to get a new job with a competing organization, bucking a long-standing trend that hospitals and health care systems have heavily relied on to keep staff in place.

The rule would, however, keep in place “non-solicitation” rules that many health care organizations have put in place. That means that if a physician leaves an employer, he or she cannot reach out to former patients and colleagues to bring them along or invite them to join him or her at the new employment venue.

Within that clause, however, the FTC has specified that if such non-solicitation agreement has the “equivalent effect” of a non-compete, the agency would deem it such. That means, even if that rule stands, it could be contested and may be interpreted as violating the non-compete provision. So, there is value in reading all the fine print should the rule move forward.

Physicians in independent practices who employ physician assistants and nurse practitioners have expressed concerns that their expensively trained employees might be tempted to accept a nearby, higher-paying position. The “non-solicitation” clause would theoretically prevent them from taking patients and co-workers with them — unless it were successfully contested. Many questions remain.

Further complicating the non-compete ban issue is how it might impact nonprofit institutions. Most hospitals structured as nonprofits would theoretically be exempt from the rule, although it is not specifically stated in the rule itself, because the FTC Act gives the Commission jurisdiction over for-profit companies only. This would obviously create an unfair advantage for nonprofits, who could continue writing non-compete clauses with impunity.

All of these questions may be moot, of course, because a number of powerful entities with deep pockets have lined up in opposition to the rule. Some of them have even questioned the FTC’s authority to pass the rule at all, on the grounds that Section 5 of the FTC Act does not give it the authority to police labor markets. A lawsuit has already been filed by the US Chamber of Commerce. Other large groups in opposition are the American Medical Group Association, the American Hospital Association, and numerous large hospital and healthcare networks.

Only time will tell whether this issue will be regulated on a national level or remain the purview of each individual state.

Dr. Eastern practices dermatology and dermatologic surgery in Belleville, N.J. He is the author of numerous articles and textbook chapters, and is a longtime monthly columnist for Dermatology News. Write to him at [email protected].

(with very limited exceptions). The final rule will not go into effect until 120 days after its publication in the Federal Register, which took place on May 7, and numerous legal challenges appear to be on the horizon.

The principal components of the rule are as follows:

- After the effective date, most non-compete agreements (which prevent departing employees from signing with a new employer for a defined period within a specific geographic area) are banned nationwide.

- The rule exempts certain “senior executives,” ie individuals who earn more than $151,164 annually and serve in policy-making positions.

- There is another major exception for non-competes connected with a sale of a business.

- While not explicitly stated, the rule arguably exempts non-profits, tax-exempt hospitals, and other tax-exempt entities.

- Employers must provide verbal and written notice to employees regarding existing agreements, which would be voided under the rule.

The final rule is the latest skirmish in an ongoing, years-long debate. Twelve states have already put non-compete bans in place, according to a recent paper, and they may serve as a harbinger of things to come should the federal ban go into effect. Each state rule varies in its specifics as states respond to local market conditions. While some states ban all non-compete agreements outright, others limit them based on variables, such as income and employment circumstances. Of course, should the federal ban take effect, it will supersede whatever rules the individual states have in place.

In drafting the rule, the FTC reasoned that non-compete clauses constitute restraint of trade, and eliminating them could potentially increase worker earnings as well as lower health care costs by billions of dollars. In its statements on the proposed ban, the FTC claimed that it could lower health spending across the board by almost $150 billion per year and return $300 million to workers each year in earnings. The agency cited a large body of research that non-competes make it harder for workers to move between jobs and can raise prices for goods and services, while suppressing wages for workers and inhibiting the creation of new businesses.

Most physicians affected by non-compete agreements heavily favor the new rule, because it would give them more control over their careers and expand their practice and income opportunities. It would allow them to get a new job with a competing organization, bucking a long-standing trend that hospitals and health care systems have heavily relied on to keep staff in place.

The rule would, however, leave intact the “non-solicitation” agreements that many health care organizations use. That means that if a physician leaves an employer, he or she cannot reach out to former patients and colleagues to bring them along or invite them to join the new practice.

Within that provision, however, the FTC has specified that if a non-solicitation agreement has the “equivalent effect” of a non-compete, the agency would treat it as one. That means that even a non-solicitation agreement could be contested and interpreted as violating the non-compete ban. So there is value in reading all the fine print should the rule move forward.

Physicians in independent practices who employ physician assistants and nurse practitioners have expressed concerns that their expensively trained employees might be tempted to accept a nearby, higher-paying position. The “non-solicitation” clause would theoretically prevent them from taking patients and co-workers with them — unless it were successfully contested. Many questions remain.

Further complicating the non-compete ban is how it might affect nonprofit institutions. Because the FTC Act gives the Commission jurisdiction over for-profit companies only, most hospitals structured as nonprofits would theoretically be exempt from the rule, although the rule itself does not specifically say so. This would create an unfair advantage for nonprofits, which could continue writing non-compete clauses with impunity.

All of these questions may be moot, of course, because a number of powerful entities with deep pockets have lined up in opposition to the rule. Some of them have even questioned the FTC’s authority to issue the rule at all, on the grounds that Section 5 of the FTC Act does not give it the authority to police labor markets. The US Chamber of Commerce has already filed a lawsuit. Other large groups in opposition include the American Medical Group Association, the American Hospital Association, and numerous large hospital and health care networks.

Only time will tell whether this issue will be regulated on a national level or remain the purview of each individual state.

Dr. Eastern practices dermatology and dermatologic surgery in Belleville, N.J. He is the author of numerous articles and textbook chapters, and is a longtime monthly columnist for Dermatology News. Write to him at [email protected].

Fluoride, Water, and Kids’ Brains: It’s Complicated

This transcript has been edited for clarity.

I recently looked back at my folder full of these medical study commentaries, this weekly video series we call Impact Factor, and realized that I’ve been doing this for a long time. More than 400 articles, believe it or not.

I’ve learned a lot in that time — about medicine, of course — but also about how people react to certain topics. If you’ve been with me this whole time, or even for just a chunk of it, you’ll know that I tend to take a measured approach to most topics. No one study is ever truly definitive, after all. But regardless of how even-keeled I may be, there are some topics that I just know in advance are going to be a bit divisive: studies about gun control; studies about vitamin D; and, of course, studies about fluoride.

Shall We Shake This Hornet’s Nest?

The fluoridation of the US water system began in 1945 with the goal of reducing cavities in the population. The CDC named water fluoridation one of the 10 great public health achievements of the 20th century, along with such inarguable achievements as the recognition of tobacco as a health hazard.

But fluoridation has never been without its detractors. One problem is that the spectrum of beliefs about the potential harm of fluoridation is huge. On one end, you have science-based concerns such as the recognition that excessive fluoride intake can cause fluorosis and stain tooth enamel. I’ll note that the EPA regulates fluoride levels — there is a fair amount of naturally occurring fluoride in water tables around the world — to prevent this. And, of course, on the other end of the spectrum, you have beliefs that are essentially conspiracy theories: “They” add fluoride to the water supply to control us.

The challenge for me is that when one “side” of a scientific debate includes the crazy theories, it can be hard to discuss that whole spectrum, since there are those who will see evidence of any adverse fluoride effect as confirmation that the conspiracy theory is true.

I can’t help this. So I’ll just say this up front: I am about to tell you about a study that shows some potential risk from fluoride exposure. I will tell you up front that there are some significant caveats to the study that call the results into question. And I will tell you up front that no one is controlling your mind, or my mind, with fluoride; they do it with social media.

Let’s Dive Into These Shark-Infested, Fluoridated Waters

We’re talking about the study, “Maternal Urinary Fluoride and Child Neurobehavior at Age 36 Months,” which appears in JAMA Network Open.

It’s a study of 229 mother-child pairs from the Los Angeles area. The moms had their urinary fluoride level measured once before 30 weeks of gestation. A neurobehavioral battery called the Preschool Child Behavior Checklist was administered to the children at age 36 months.

The main thing you’ll hear about this study — in headlines, Facebook posts, and manifestos locked in drawers somewhere — is the primary result: A 0.68-mg/L increase in urinary fluoride in the mothers, about 25 percentile points, was associated with a doubling of the risk for neurobehavioral problems in their kids when they were 3 years old.

Yikes.

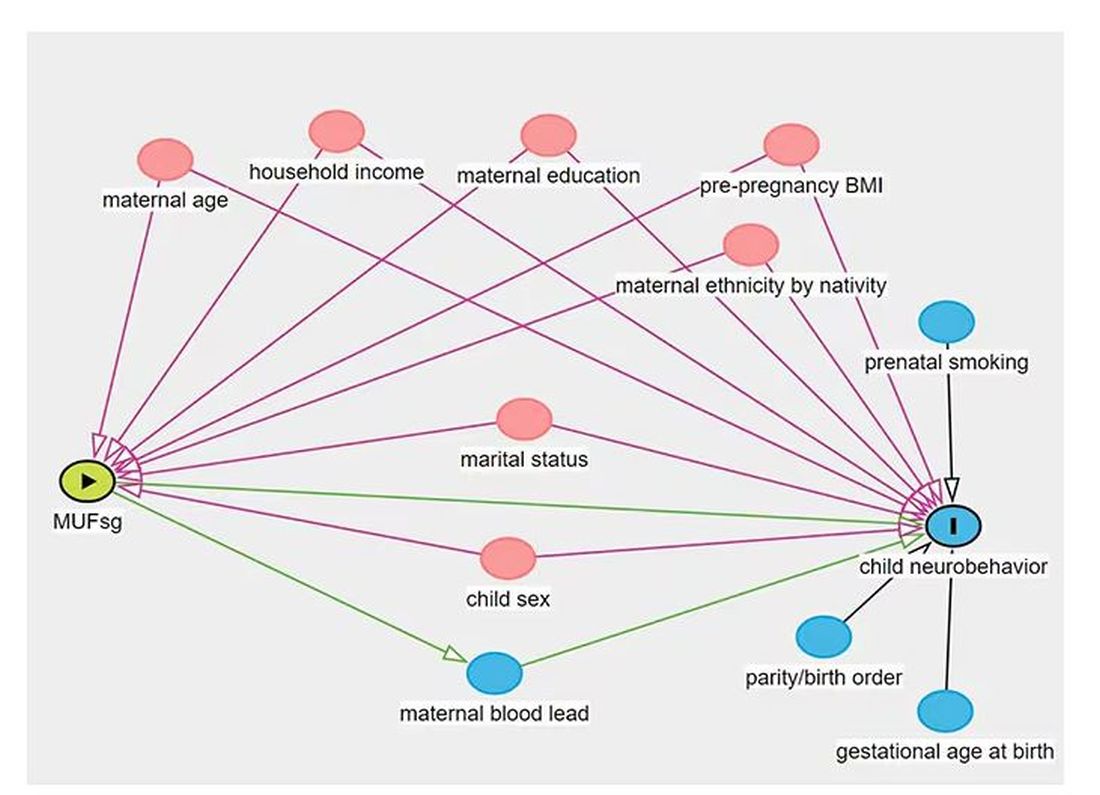

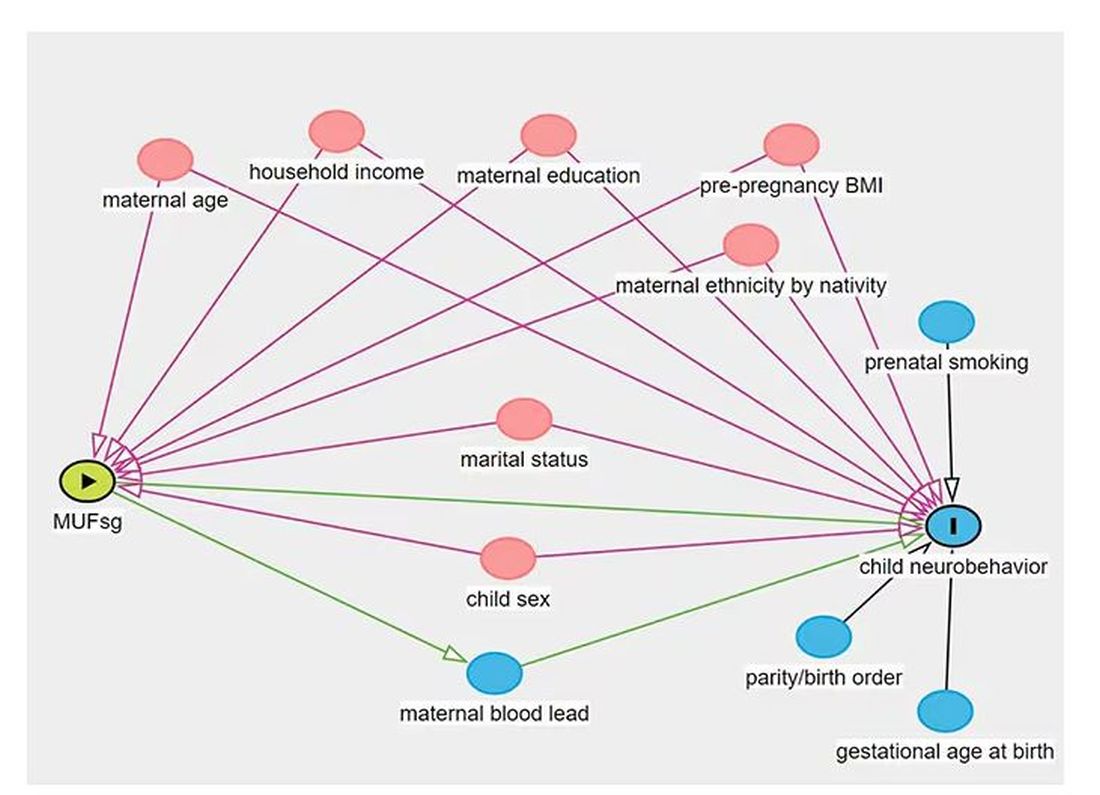

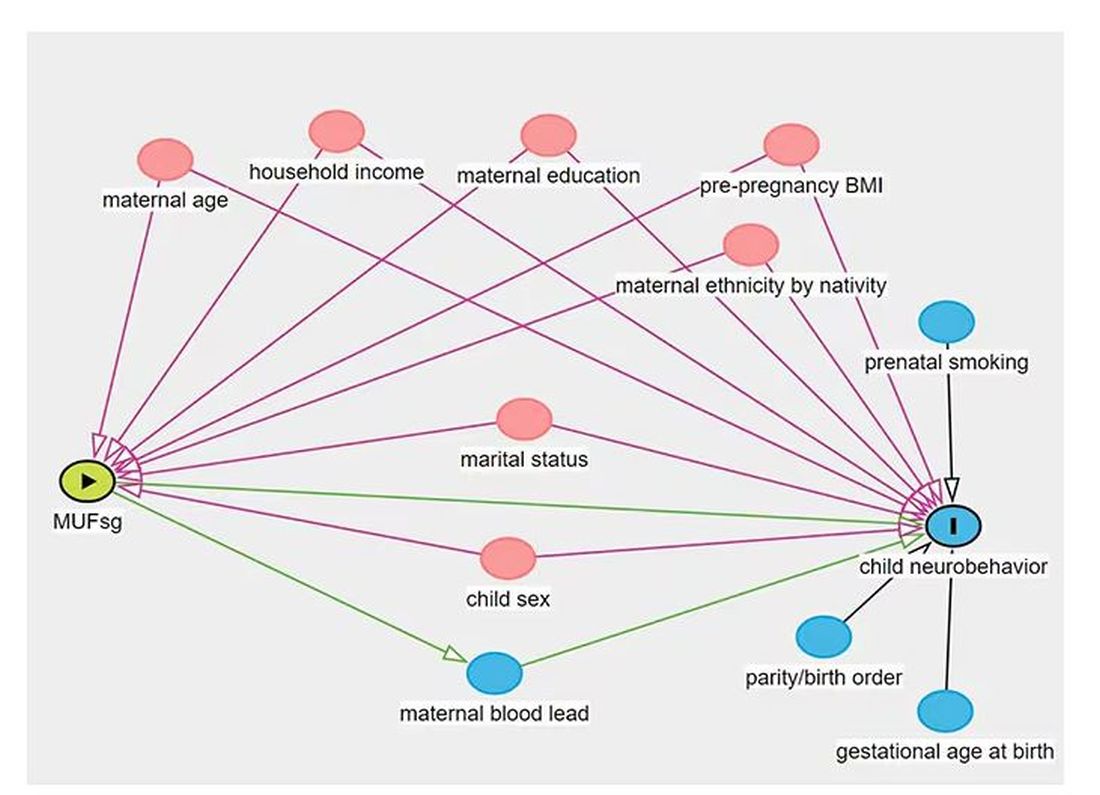

But this is not a randomized trial. Researchers didn’t randomly assign some women to have high fluoride intake and some women to have low fluoride intake. They knew that other factors that might lead to neurobehavioral problems could also lead to higher fluoride intake. They represent these factors in what’s known as a directed acyclic graph, as seen here, and account for them statistically using a regression equation.

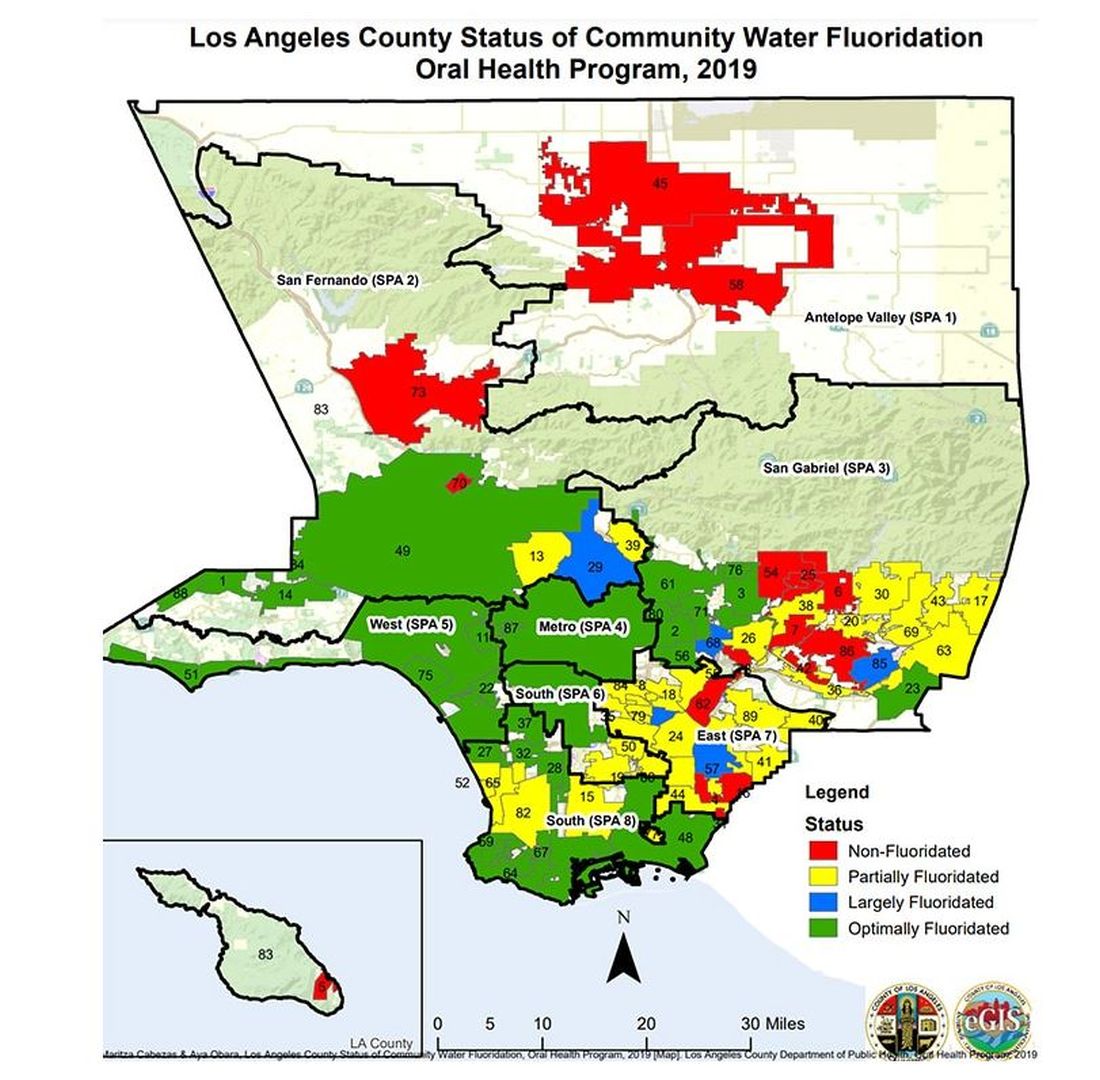

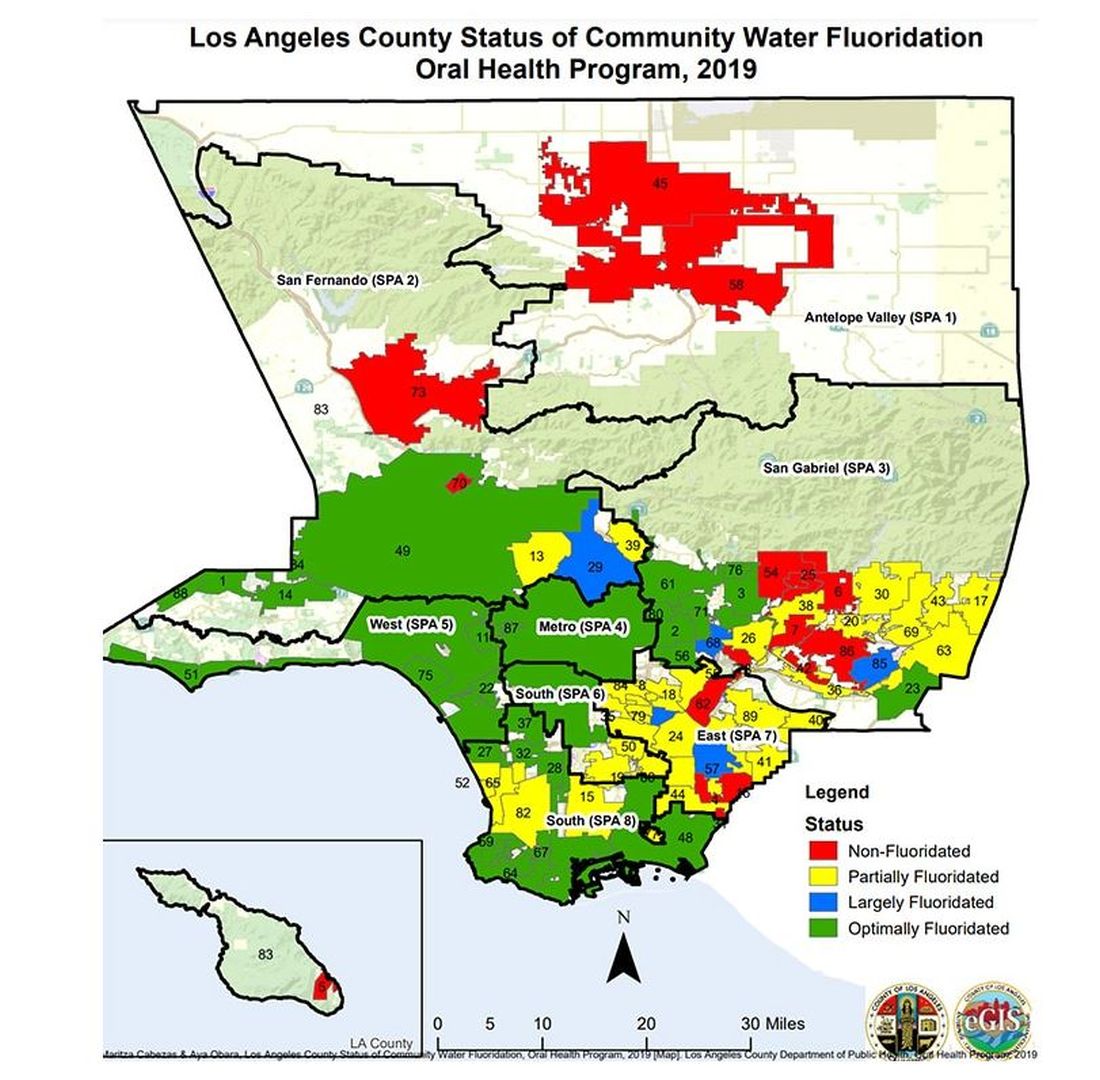

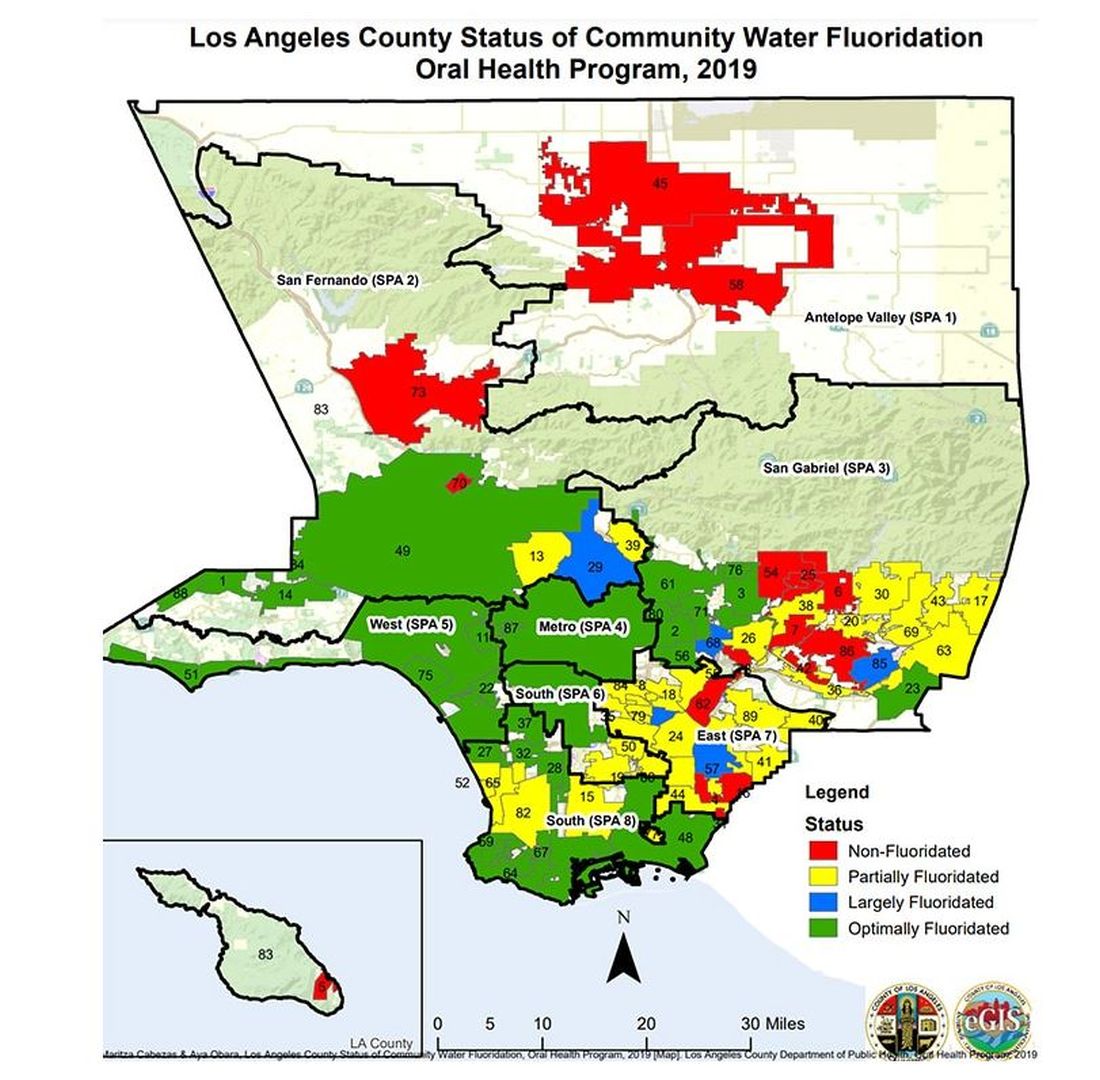

Not represented here are neighborhood characteristics. Los Angeles does not have uniformly fluoridated water, and neurobehavioral problems in kids are strongly linked to stressors in their environments. Fluoride level could be an innocent bystander.
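To make the “innocent bystander” possibility concrete, here is a small simulation with entirely made-up numbers (nothing here comes from the study). A hypothetical neighborhood-stress variable raises both the chance of high fluoride exposure and the chance of a neurobehavioral problem, while fluoride itself is given no effect at all. The crude comparison still shows a risk ratio of roughly 1.6, but within each stress stratum the ratio sits close to 1. Stratifying is used here as a simple stand-in for the regression adjustment the authors describe, and, like regression, it only helps for confounders that were actually measured.

```python
import random


def simulate(n: int = 100_000, seed: int = 1) -> list[tuple[bool, bool, bool]]:
    """Generate hypothetical (stressed, high_fluoride, problem) records.

    'stressed' is a made-up neighborhood confounder: it raises the chance of
    high fluoride exposure AND of a neurobehavioral problem. By construction,
    fluoride has no effect on 'problem'.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        stressed = rng.random() < 0.5
        high_fluoride = rng.random() < (0.7 if stressed else 0.3)
        problem = rng.random() < (0.20 if stressed else 0.05)  # ignores fluoride
        rows.append((stressed, high_fluoride, problem))
    return rows


def risk(rows, high_fluoride: bool, stressed=None) -> float:
    """Proportion with a problem among records with the given exposure,
    optionally restricted to one stress stratum."""
    outcomes = [
        problem
        for s, f, problem in rows
        if f == high_fluoride and (stressed is None or s == stressed)
    ]
    return sum(outcomes) / len(outcomes)


rows = simulate()
crude = risk(rows, True) / risk(rows, False)
print(f"crude risk ratio (high vs low fluoride): {crude:.2f}")
for stratum in (False, True):
    adjusted = risk(rows, True, stratum) / risk(rows, False, stratum)
    print(f"risk ratio within stressed={stratum}: {adjusted:.2f}")
```

The apparent effect of fluoride in the crude comparison comes entirely from the hypothetical confounder, which is exactly the pattern an unmeasured neighborhood factor could produce.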

I’m really just describing the classic issue of correlation versus causation here, the bane of all observational research and — let’s be honest — a bit of a crutch that allows us to disregard the results of studies we don’t like, provided the study wasn’t a randomized trial.

But I have a deeper issue with this study than the old “failure to adjust for relevant confounders” thing, as important as that is.

The exposure of interest in this study is maternal urinary fluoride, as measured in a spot sample. It’s not often that I get to go deep on nephrology in this space, but let’s think about that for a second. Let’s assume for a moment that fluoride is toxic to the developing fetal brain, the main concern raised by the results of the study. How would that work? Presumably, mom would be ingesting fluoride from various sources (like the water supply), and that fluoride would get into her blood, and from her blood across the placenta to the baby’s blood, and into the baby’s brain.

Is Urinary Fluoride a Good Measure of Blood Fluoride?

It’s not great. Empirically, we have data that tell us that levels of urine fluoride are not all that similar to levels of serum fluoride. In 2014, a study investigated the correlation between urine and serum fluoride in a cohort of 60 schoolchildren and found a correlation coefficient of around 0.5.
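For a sense of scale: under a simple linear model, squaring a correlation coefficient gives the share of variation in one measure that is accounted for by the other.

```latex
r \approx 0.5 \quad\Longrightarrow\quad r^{2} \approx 0.25
```

In that cohort, then, urine fluoride would linearly account for only about a quarter of the variation in serum fluoride, leaving roughly three-quarters unexplained.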

Why isn’t urine fluoride a great proxy for serum fluoride? The most obvious reason is urine concentration. Human urine osmolality can range from about 50 to 1200 mOsm/kg (a 24-fold difference), depending on hydration status. Over the course of 24 hours, for example, the amount of fluoride you excrete in your urine may be fairly stable in relation to intake, but its concentration in a spot urine sample would be wildly variable. The authors know this, of course, and so they adjust the measured urine fluoride for the specific gravity of the urine to give a sort of “dilution-adjusted” value. That’s what is actually used in this study. But specific gravity is, itself, an imperfect measure of how dilute the urine is.
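One common form of specific-gravity (SG) correction scales the measured concentration by (SG_ref − 1)/(SG − 1), where SG_ref is a reference value. The sketch below assumes that form and an illustrative SG_ref of 1.020; whether this study used exactly this formula or reference value is an assumption, not something stated here. The two hypothetical samples differ threefold in raw fluoride concentration yet agree after adjustment, but only because the example assumes concentration scales perfectly with (SG − 1). Real urine does not behave that neatly, which is the point of the paragraph above.

```python
def sg_adjust(conc_mg_per_l: float, sg_sample: float, sg_ref: float = 1.020) -> float:
    """Return a specific-gravity-adjusted urinary concentration.

    Uses the common form C * (SG_ref - 1) / (SG_sample - 1). Subtracting 1
    isolates the dissolved-solute part of specific gravity (pure water is
    1.000). sg_ref = 1.020 is an illustrative assumption; studies often use
    their cohort median instead.
    """
    if sg_sample <= 1.0:
        raise ValueError("specific gravity of urine must exceed 1.000")
    return conc_mg_per_l * (sg_ref - 1.0) / (sg_sample - 1.0)


# Hypothetical spot samples from one person with identical fluoride output:
dilute = sg_adjust(0.5, sg_sample=1.010)        # well hydrated
concentrated = sg_adjust(1.5, sg_sample=1.030)  # dehydrated
print(f"{dilute:.2f} mg/L vs {concentrated:.2f} mg/L")  # prints "1.00 mg/L vs 1.00 mg/L"
```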

This is something that comes up a lot in urinary biomarker research, and it’s not that hard to get around. The best thing would be to just measure blood levels of fluoride. The second-best option would be to measure 24-hour fluoride excretion. After that, the next best thing would be to adjust the spot concentration by other markers of urinary dilution, such as creatinine or osmolality, as sensitivity analyses. Any of these approaches would lend credence to the results of the study.

Urinary fluoride excretion is pH dependent. The more acidic the urine, the less fluoride is excreted. Many things — including, importantly, diet — affect urine pH. And it is not a stretch to think that diet may also affect the developing fetus. Neither urine pH nor dietary habits were accounted for in this study.

So, here we are. We have an observational study suggesting a harm that may be associated with fluoride. There may be a causal link here, in which case we need further studies to weigh the harm against the more well-established public health benefit. Or, this is all correlation — an illusion created by the limitations of observational data, and the unique challenges of estimating intake from a single urine sample. In other words, this study has something for everyone, fluoride boosters and skeptics alike. Let the arguments begin. But, if possible, leave me out of it.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Does More Systemic Treatment for Advanced Cancer Improve Survival?

A new study published online May 16 in JAMA Oncology found no survival benefit for patients treated at practices that give more systemic anticancer therapy (SACT) at the most advanced stages of cancer. That conclusion may help reassure oncologists that giving SACT at this stage will not extend the patient’s life, the authors wrote. It also may encourage them to focus instead on honest communication with patients about their choices, Maureen E. Canavan, PhD, at the Cancer and Outcomes, Public Policy and Effectiveness Research (COPPER) Center at the Yale School of Medicine in New Haven, Connecticut, and colleagues wrote in their paper.

How Was the Study Conducted?

Researchers used data from Flatiron Health, a nationwide electronic health record database of academic and community practices throughout the United States. They identified 78,446 adults with advanced or metastatic stages of one of six common cancers (breast, colorectal, urothelial, non–small cell lung cancer [NSCLC], pancreatic, and renal cell carcinoma) who were treated at healthcare practices from 2015 to 2019. They then stratified practices into quintiles based on how often the practices treated patients with any systemic therapy, including chemotherapy and immunotherapy, in their last 14 days of life, and compared whether patients in practices with greater use of systemic treatment at very advanced stages had longer overall survival.
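The practice-level stratification described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions: the practice names and rates are hypothetical, each practice already has a single end-of-life SACT rate, and quintiles are formed by simple rank order. The study’s actual binning and tie handling may differ.

```python
def assign_quintiles(rates: dict[str, float]) -> dict[str, int]:
    """Rank practices by end-of-life SACT rate and split them into five
    roughly equal groups: 1 = lowest use, 5 = highest use.

    Ties keep their input order because Python's sort is stable.
    """
    if not rates:
        return {}
    ordered = sorted(rates, key=lambda practice: rates[practice])
    n = len(ordered)
    return {practice: (rank * 5) // n + 1 for rank, practice in enumerate(ordered)}


# Hypothetical practice-level end-of-life SACT rates
example_rates = {"A": 3.0, "B": 40.0, "C": 8.0, "D": 20.0, "E": 12.0,
                 "F": 6.0, "G": 25.0, "H": 15.0, "I": 30.0, "J": 10.0}
quintiles = assign_quintiles(example_rates)
print(quintiles)
```

With 10 practices, each quintile receives two. Survival would then be compared across the five groups, a step this sketch does not attempt.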

What Were the Main Findings?

“We saw that there were absolutely no survival differences between the practices that used more systemic therapy for very advanced cancer than the practices that use less,” said senior author Kerin Adelson, MD, chief quality and value officer at MD Anderson Cancer Center in Houston, Texas. In some cancers, patients in the lowest quintile (practices with the lowest rates of end-of-life systemic therapy) had shorter survival than those in the highest quintiles; in other cancers, they had longer survival.

“What’s important is that none of those differences, after you control for other factors, was statistically significant,” Dr. Adelson said. “That was the same in every cancer type we looked at.”

An example is advanced urothelial cancer. Practices in the first quintile (lowest rates of end-of-life systemic therapy) had SACT rates ranging from 4.0 to 9.1, while those in the highest quintile ranged from 19.8 to 42.6. Yet median overall survival (OS) in the lowest quintile was 12.7 months, not statistically different from the median OS of 11 months in the highest quintile.

How Does This Study Add to the Literature?

The American Society of Clinical Oncology (ASCO) and the National Quality Forum (NQF) developed a cancer quality metric to reduce SACT at the end of life. NQF 0210 is the ratio of patients who received systemic treatment within 14 days of death to all patients who died of cancer. The metric has been widely adopted and used in value-based care reporting.
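The ratio behind the metric can be sketched as below. This is an illustration, not the official measure specification: the record layout and names are hypothetical, and counting a dose given exactly 14 days before death as “within 14 days” is an assumption. Note that the denominator contains only patients who died, so patients who are still alive never enter the calculation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decedent:
    """One patient who died of cancer (hypothetical record layout)."""
    days_from_last_sact_to_death: Optional[int]  # None = no SACT recorded


def end_of_life_sact_rate(decedents: list[Decedent], window_days: int = 14) -> float:
    """Share of decedents whose last SACT dose fell within `window_days` of death.

    Numerator: decedents treated within the window.
    Denominator: all decedents; living patients are not counted at all.
    """
    if not decedents:
        raise ValueError("need at least one decedent")
    treated = sum(
        1
        for d in decedents
        if d.days_from_last_sact_to_death is not None
        and d.days_from_last_sact_to_death <= window_days
    )
    return treated / len(decedents)


cohort = [Decedent(3), Decedent(20), Decedent(None), Decedent(14)]
print(end_of_life_sact_rate(cohort))  # prints 0.5 (2 of 4 decedents)
```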

But the metric has been criticized because it focuses only on people who died and not people who lived longer because they benefited from the systemic therapy, the authors wrote.

Dr. Canavan’s team instead looked at all patients treated in each practice, not just those who died, which may put that criticism to rest, Dr. Adelson said.

“I personally believed the ASCO and NQF metric was appropriate and the criticisms were off base,” said Otis Brawley, MD, associate director of community outreach and engagement at the Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins University School of Medicine in Baltimore. “Canavan’s study is evidence suggesting the metrics were appropriate.”

This study included not just chemotherapy, as some other studies have, but targeted therapies and immunotherapies as well. Dr. Adelson said some think that the newer drugs might change the prognosis at end of life. But this study shows “even those drugs are not helping patients to survive with very advanced cancer,” she said.

Could This Change Practice?

The authors noted that end-of-life SACT has been linked with more acute care use, delays in conversations about care goals, late enrollment in hospice, higher costs, and potentially shorter and poorer quality life.

Dr. Adelson said she hopes that knowing there is no survival benefit from SACT for patients with advanced solid tumors who are nearing the end of life will instead lead to more conversations with patients about prognosis and to transitions to palliative care.

“Palliative care has actually been shown to improve quality of life and, in some studies, even survival,” she said.

“I doubt it will change practice, but it should,” Dr. Brawley said. “The study suggests that doctors and patients have too much hope for chemotherapy as patients’ disease progresses. In the US especially, there is a tendency to believe we have better therapies than we truly do and we have difficulty accepting that the patient is dying. Many patients get third- and fourth-line chemotherapy that is highly likely to increase suffering without realistic hope of prolonging life and especially no hope of prolonging life with good quality.”

Dr. Adelson disclosed ties with AbbVie, Quantum Health, Gilead, ParetoHealth, and Carrum Health. Various coauthors disclosed ties with Roche, AbbVie, Johnson & Johnson, Genentech, the National Comprehensive Cancer Network, and AstraZeneca. The study was funded by Flatiron Health, an independent member of the Roche group. Dr. Brawley reports no relevant financial disclosures.

This conclusion of a new study published online May 16 in JAMA Oncology may help reassure oncologists that giving systemic anticancer therapy (SACT) at the most advanced stages of cancer will not improve the patient’s life, the authors wrote. It also may encourage them to instead focus more on honest communication with patients about their choices, Maureen E. Canavan, PhD, at the Cancer and Outcomes, Public Policy and Effectiveness Research (COPPER) Center at the Yale School of Medicine in New Haven, Connecticut, and colleagues, wrote in their paper.

How Was the Study Conducted?

Researchers used Flatiron Health, a nationwide electronic health records database of academic and community practices throughout the United State. They identified 78,446 adults with advanced or metastatic stages of one of six common cancers (breast, colorectal, urothelial, non–small cell lung cancer [NSCLC], pancreatic and renal cell carcinoma) who were treated at healthcare practices from 2015 to 2019. They then stratified practices into quintiles based on how often the practices treated patients with any systemic therapy, including chemotherapy and immunotherapy, in their last 14 days of life. They compared whether patients in practices with greater use of systemic treatment at very advanced stages had longer overall survival.

What Were the Main Findings?

“We saw that there were absolutely no survival differences between the practices that used more systemic therapy for very advanced cancer than the practices that use less,” said senior author Kerin Adelson, MD, chief quality and value officer at MD Anderson Cancer Center in Houston, Texas. In some cancers, patients in the lowest quintile (practices with the lowest rates of systemic end-of-life care) had shorter survival than those in the highest quintiles. In other cancers, patients in the lowest quintiles survived longer than those in the highest quintiles.

“What’s important is that none of those differences, after you control for other factors, was statistically significant,” Dr. Adelson said. “That was the same in every cancer type we looked at.”

An example is seen in advanced urothelial cancer. Practices in the first quintile (lowest rates of systemic care at end of life) had SACT rates ranging from 4.0 to 9.1, whereas SACT rates in the highest quintile ranged from 19.8 to 42.6. Yet median overall survival (OS) in the lowest quintile was 12.7 months, not statistically different from the median OS in the highest quintile (11 months).

How Does This Study Add to the Literature?

The American Society of Clinical Oncology (ASCO) and the National Quality Forum (NQF) developed a cancer quality metric to reduce SACT at the end of life. The NQF 0210 is a ratio of patients who get systemic treatment within 14 days of death over all patients who die of cancer. The quality metric has been widely adopted and used in value-based care reporting.
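As a minimal sketch of the ratio described above, the NQF 0210 calculation can be written as a numerator (decedents who received systemic therapy within 14 days of death) over a denominator (all patients who died of cancer). The `Decedent` record and its field name are hypothetical, and treating “within 14 days” as inclusive of day 14 is an assumption, not the official measure specification.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Decedent:
    # Hypothetical record for one patient who died of cancer:
    # days between the last systemic therapy dose and death,
    # or None if no systemic therapy was recorded.
    days_from_last_sact_to_death: Optional[int]


def nqf_0210_proportion(decedents: List[Decedent], window_days: int = 14) -> float:
    """Share of cancer decedents who received systemic therapy within
    `window_days` of death (window assumed inclusive)."""
    if not decedents:
        raise ValueError("No decedents: the denominator is empty.")
    # Numerator: decedents whose last systemic therapy fell inside the window.
    numerator = sum(
        1
        for d in decedents
        if d.days_from_last_sact_to_death is not None
        and d.days_from_last_sact_to_death <= window_days
    )
    # Denominator: every patient in the list died of cancer.
    return numerator / len(decedents)


example = [Decedent(3), Decedent(20), Decedent(None), Decedent(14)]
rate = nqf_0210_proportion(example)  # 2 of 4 decedents -> 0.5
```

Because the denominator includes only patients who died, a patient who responded to treatment and lived longer never enters the calculation.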

But the metric has been criticized because it focuses only on people who died and not people who lived longer because they benefited from the systemic therapy, the authors wrote.

Dr. Canavan’s team looked at all patients treated in each practice, not just those who died, which may put that criticism to rest, Dr. Adelson said.

“I personally believed the ASCO and NQF metric was appropriate and the criticisms were off base,” said Otis Brawley, MD, associate director of community outreach and engagement at the Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins University School of Medicine in Baltimore. “Canavan’s study is evidence suggesting the metrics were appropriate.”

Unlike some earlier studies that looked only at chemotherapy, this study also included targeted therapies and immunotherapies. Dr. Adelson said some think that the newer drugs might change the prognosis at end of life. But this study shows “even those drugs are not helping patients to survive with very advanced cancer,” she said.

Could This Change Practice?

The authors noted that end-of-life SACT has been linked with more acute care use, delays in conversations about care goals, late enrollment in hospice, higher costs, and potentially shorter and poorer-quality life.

Dr. Adelson said she hopes that knowing SACT offers no survival benefit to patients with advanced solid tumors who are nearing the end of life will instead lead to more conversations with patients about prognosis and to transitions to palliative care.

“Palliative care has actually been shown to improve quality of life and, in some studies, even survival,” she said.

“I doubt it will change practice, but it should,” Dr. Brawley said. “The study suggests that doctors and patients have too much hope for chemotherapy as patients’ disease progresses. In the US especially, there is a tendency to believe we have better therapies than we truly do and we have difficulty accepting that the patient is dying. Many patients get third- and fourth-line chemotherapy that is highly likely to increase suffering without realistic hope of prolonging life and especially no hope of prolonging life with good quality.”

Dr. Adelson disclosed ties with AbbVie, Quantum Health, Gilead, ParetoHealth, and Carrum Health. Various coauthors disclosed ties with Roche, AbbVie, Johnson & Johnson, Genentech, the National Comprehensive Cancer Network, and AstraZeneca. The study was funded by Flatiron Health, an independent member of the Roche group. Dr. Brawley reports no relevant financial disclosures.

FROM JAMA ONCOLOGY

Urine Tests Could Be ‘Enormous Step’ in Diagnosing Cancer

Emerging science suggests that the body’s “liquid gold” could be particularly useful for liquid biopsies, offering a convenient, pain-free, and cost-effective way to spot otherwise hard-to-detect cancers.

“The search for cancer biomarkers that can be detected in urine could provide an enormous step forward to decrease cancer patient mortality,” said Kenneth R. Shroyer, MD, PhD, a pathologist at Stony Brook University, Stony Brook, New York, who studies cancer biomarkers.

Physicians have long known that urine can reveal a lot about our health — that’s why urinalysis has been part of medicine for 6000 years. Urine tests can detect diabetes, pregnancy, drug use, and urinary or kidney conditions.

But other conditions leave clues in urine, too, and cancer may be one of the most promising. “Urine testing could detect biomarkers of early-stage cancers, not only from local but also distant sites,” Dr. Shroyer said. It could also help flag recurrence in cancer survivors who have undergone treatment.

Granted, cancer biomarkers in urine are not nearly as widely studied as those in the blood, Dr. Shroyer noted. But a new wave of urine tests suggests research is gaining pace.

“The recent availability of high-throughput screening technologies has enabled researchers to investigate cancer from a top-down, comprehensive approach,” said Pak Kin Wong, PhD, professor of mechanical engineering, biomedical engineering, and surgery at The Pennsylvania State University. “We are starting to understand the rich information that can be obtained from urine.”

Urine is mostly water (about 95%) and urea, a metabolic byproduct (about 2%). The other 3% is a mix of waste products, minerals, and other compounds the kidneys have removed from the blood, including urochrome, the pigment that gives urine its signature yellow color. Even in trace amounts, these substances say a lot.

Among them are “exfoliated cancer cells, cell-free DNA, hormones, and the urine microbiota — the collection of microbes in our urinary tract system,” Dr. Wong said.

“It is highly promising to be one of the major biological fluids used for screening, diagnosis, prognosis, and monitoring treatment efficiency in the era of precision medicine,” Dr. Wong said.

How Urine Testing Could Reveal Cancer

Still, as exciting as the prospect is, there’s a lot to consider in the hunt for cancer biomarkers in urine. These biomarkers must be able to pass through the renal nephrons (filtering units), remain stable in urine, and be detectable with high sensitivity, Dr. Shroyer said. They should also have high specificity for cancer vs benign conditions and be expressed at early stages, before the primary tumor has spread.
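Sensitivity and specificity, the two properties Dr. Shroyer describes, have standard definitions that can be computed from a test’s results. The sketch below uses hypothetical counts, not data from any of the studies mentioned here.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of people with cancer whom the test correctly flags."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of people without cancer (e.g., with benign conditions)
    whom the test correctly clears."""
    return true_neg / (true_neg + false_pos)


# Hypothetical example: 100 patients with early-stage cancer, 80 test positive;
# 200 patients with benign conditions, 190 test negative.
sens = sensitivity(true_pos=80, false_neg=20)   # 0.8
spec = specificity(true_neg=190, false_pos=10)  # 0.95
```

A biomarker with high sensitivity but low specificity would flag many benign conditions as cancer, which is why both properties matter for screening.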

“At this stage, few circulating biomarkers have been found that are both sensitive and specific for early-stage disease,” said Dr. Shroyer.

But there are a few promising examples under investigation in humans:

Prostate cancer. Researchers at the University of Michigan have developed a urine test that detects high-grade prostate cancer more accurately than existing tests, including PHI, SelectMDx, 4Kscore, EPI, MPS, and IsoPSA.

The MyProstateScore 2.0 (MPS2) test, which looks for 18 genes associated with high-grade tumors, could reduce unnecessary biopsies in men with elevated prostate-specific antigen levels, according to a paper published in JAMA Oncology.

It makes sense. The prostate gland secretes fluid that becomes part of the semen, traces of which enter urine. After a digital rectal exam, even more prostate fluid enters the urine. If a patient has prostate cancer, genetic material from the cancer cells will make its way into the urine.

In the MPS2 test, researchers used polymerase chain reaction (PCR) testing in urine. “The technology used for COVID PCR is essentially the same as the PCR used to detect transcripts associated with high-grade prostate cancer in urine,” said study author Arul Chinnaiyan, MD, PhD, director of the Michigan Center for Translational Pathology at the University of Michigan, Ann Arbor. “In the case of the MPS2 test, we are doing PCR on 18 genes simultaneously on urine samples.”