Enterovirus in at-risk children associated with later celiac disease

“We found a significant association between exposure to enterovirus and subsequent risk of celiac disease,” wrote lead author Christian R. Kahrs of the University of Oslo and his coauthors, adding that “adenovirus was not associated with celiac disease.” The study was published in the BMJ.

From 2001 to 2007, 46,939 newborns in Norway were screened for the HLA-DQ2/DQ8 genotype, which is associated with an increased risk of celiac disease. The genotype was identified in 912 children, from whom blood and stool samples were collected beginning at age 3 months. Children who were still contributing blood samples in 2014-2016 were invited to be screened for celiac disease.

Of the 220 children screened, 25 were diagnosed with celiac disease. Enterovirus was detected in 370 (17%) of the 2,135 stool samples and was more frequent in children who developed celiac disease antibodies than in matched controls (adjusted odds ratio, 1.49; 95% confidence interval, 1.07-2.06; P = .02). There was a significant association between later development of celiac disease and the commonly identified enterovirus A (aOR, 1.62; 95% CI, 1.04-2.53; P = .03) and enterovirus B (aOR, 2.27; 95% CI, 1.33-3.88; P = .003). No adenovirus types were associated with development of celiac disease.

The authors acknowledged their study’s limitations, including the possibility that children might be diagnosed with celiac disease later than the study’s roughly 10-year follow-up and the limited number of children with the disease despite a large number of analyzed samples. They noted that, “given the limited number of cases, we call for corroboration in similar studies and preferably interventional studies to reach conclusions about causality.”

The study was funded by the Research Council of Norway, the Project for the Conceptual Development of Research Organization, and the Norwegian Coeliac Society. Two authors reported grant support from trusts and foundations in Norway and Switzerland; no conflicts of interest were reported.

SOURCE: Kahrs CR et al. BMJ. 2019 Feb 13. doi: 10.1136/bmj.l231.

FROM THE BMJ

Lower prices drive OTC insulin sales at Walmart

than the OTC insulin sold elsewhere, according to a national survey of pharmacy employees.

The survey was conducted by Jennifer N. Goldstein, MD, of Christiana Care Health System in Newark, Del., and her colleagues, and the results were published online Feb. 18 in JAMA Internal Medicine.

Because Walmart does not make its sales data public, Dr. Goldstein and her colleagues conducted a national telephone survey of Walmart and chain pharmacies in the 49 states where OTC insulin is available. They administered a five-item questionnaire designed to determine how often OTC insulin was sold.

Of the 561 pharmacies that completed the questionnaire, 500 (89.1%) responded that they did sell OTC insulin; this included 284 of 292 Walmart pharmacies. Among those Walmart pharmacies, 247 (87%) said sales of OTC insulin occurred daily; 31 (10.9%) sold it weekly; and 3 (1.1%) sold it monthly.

The chains (CVS, Walgreens, Rite Aid) reported far less frequent sales of OTC insulin; 100 out of 216 (46.3%) pharmacies said they made sales only “a few times a year,” and none reported daily sales.
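To make the denominators explicit, the short sketch below recomputes the survey percentages from the counts reported above. One assumption is labeled in the code: the article does not say what the 216 chain pharmacies represent, but 500 selling pharmacies minus 284 selling Walmart pharmacies gives 216, so the sketch treats that figure as the chain pharmacies that sold OTC insulin.

```python
# Arithmetic check of the survey proportions reported by Goldstein et al.
# ASSUMPTION: the 216 chain pharmacies are those that sold OTC insulin
# (500 selling pharmacies minus 284 selling Walmart pharmacies); the
# article does not state this explicitly.

def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

responding = 561          # pharmacies that completed the questionnaire
selling = 500             # pharmacies that said they sell OTC insulin
walmart_selling = 284     # Walmart pharmacies that sell OTC insulin
chain_selling = selling - walmart_selling  # assumed chain denominator

# Daily + weekly + monthly = 281 of 284; frequency for the other 3 is not given.
shares = {
    "sold OTC insulin (all respondents)": pct(selling, responding),
    "Walmart, daily sales": pct(247, walmart_selling),
    "Walmart, weekly sales": pct(31, walmart_selling),
    "Walmart, monthly sales": pct(3, walmart_selling),
    "chains, a few times a year": pct(100, chain_selling),
}

for label, value in shares.items():
    print(f"{label}: {value}%")
```

Each recomputed value matches the rounded percentage quoted in the article, which supports reading the 216 as the chain pharmacies that sell OTC insulin.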

A majority of respondents (54.9%) also answered yes when asked if they believed patients purchased OTC insulin because they could not afford the copayment on prescription insulin; 70.1% of those positive responses came from Walmart pharmacies.

The coauthors acknowledged that their survey represents the impressions of pharmacy employees as opposed to actual sales data. The numbers, however, do “support an estimate of daily sales of more than 18,000 vials of over-the-counter insulin at Walmart pharmacies.” As for next steps, they noted that “further studies should explore clinical and safety outcomes related to the use of over-the-counter insulin.”

One author was supported by a grant from the National Institutes of Health. No conflicts of interest were reported.

SOURCE: Goldstein JN et al. JAMA Intern Med. 2019 Feb 18. doi: 10.1001/jamainternmed.2018.7279

FROM JAMA INTERNAL MEDICINE

Key clinical point: Patients who purchase over-the-counter insulin typically do so at Walmart, likely because it charges much less than chain pharmacies do.

Major finding: 87% of Walmart pharmacies report daily sales of over-the-counter insulin, compared with 0% of chain pharmacies.

Study details: A national telephone-based survey of Walmart and chain pharmacies in the 49 states where over-the-counter insulin is sold.

Disclosures: One author was supported by a grant from the National Institutes of Health. No conflicts of interest were reported.

Source: Goldstein JN et al. JAMA Intern Med. 2019 Feb 18. doi: 10.1001/jamainternmed.2018.7279

Consider hysterectomy in patients with post-irradiated residual cervical cancer

according to a study of Bangladeshi patients with cervical cancer.

“Surgical intervention by either extrafascial or radical hysterectomy should be strongly considered for women with biopsy-confirmed residual disease after chemoradiation,” wrote lead authors Shahana Pervin, MBBS, and Farzana Islam Ruma, MBBS, of the National Institute of Cancer Research and Hospital and of the Railway General Hospital, respectively, in Dhaka, Bangladesh, and their associates. The study was published in the Journal of Global Oncology.

From 2009 to June 2013, this prospective longitudinal study collected data from 40 patients with biopsy-confirmed persistence of cervical cancer. The patients, who were being treated at one of two hospitals in Dhaka, Bangladesh, underwent either radical or extrafascial hysterectomy at least 12 weeks after initial radiation therapy.

At 5 years of follow-up, 36 patients (90%) had no evidence of disease. Of the 29 women who underwent extrafascial hysterectomy, 4 (14%) developed recurrent disease and 1 died. None of the 11 women who underwent radical hysterectomy had recurrences during the study period; that group, however, experienced “intraoperative, postoperative, and long-term complications.”

The investigators acknowledged several limitations of their study, including a lack of standardized preoperative therapy, incomplete records of radiation dosing, and a small number of patients. A larger prospective trial comparing the two types of hysterectomy would “help guide what should be the standard of care for salvage therapy,” they wrote.

Still, their findings underscore the need for physicians in limited-resource areas to maintain “a strong index of suspicion” when evaluating patients with locally advanced cervical cancer for residual disease. “Close clinical follow-up is crucial to identify these women in a timely manner,” the investigators added.

The Massachusetts General Hospital Gynecologic Oncology Global Health Fund supported the study. The authors reported no conflicts of interest.

SOURCE: Pervin S et al. J Glob Oncol. 2019 Feb 1. doi: 10.1200/JGO.18.00157.

FROM THE JOURNAL OF GLOBAL ONCOLOGY

Key clinical point: Hysterectomy should be strongly considered for women with biopsy-confirmed residual cervical cancer after radiation.

Major finding: At 5 years of follow-up, 90% of the patients who underwent hysterectomy had no evidence of disease.

Study details: A prospective longitudinal study of 40 patients with locally advanced cervical cancer who underwent hysterectomy after radiation at one of two hospitals in Dhaka, Bangladesh.

Disclosures: The Massachusetts General Hospital Gynecologic Oncology Global Health Fund supported the study. The authors reported no conflicts of interest.

Source: Pervin S et al. J Glob Oncol. 2019 Feb 1. doi: 10.1200/JGO.18.00157.

Consider timing and technique before hernia repair in patients with cirrhosis

but only when accompanied by careful selection of patients, operative timing, and technique, according to a literature review of studies on hernia management in cirrhosis.

“Hernia management in cirrhosis continues to be restricted by lack of high-quality evidence and heterogeneity in expert opinion,” wrote lead author Sara P. Myers, MD, of the University of Pittsburgh, and her coauthors, adding that “there is, however, convincing evidence to advocate for elective ventral, umbilical, or inguinal hernia repair in compensated cirrhosis.” The study was published in the Journal of Surgical Research.

After reviewing 51 articles – including 7 prospective observational studies, 26 retrospective observational studies, 2 randomized controlled trials, 15 review articles, and 1 case report – Dr. Myers and her colleagues organized their data into three categories: preoperative, intraoperative, and postoperative considerations.

From a preoperative standpoint, decompensated cirrhosis was recognized as a “harbinger of poor outcomes.” Signs of decompensated cirrhosis include ascites, variceal bleeding, spontaneous bacterial peritonitis, hepatic encephalopathy, and hepatorenal syndrome. A 2006 study found that patients with decompensated cirrhosis survive for a median of less than 2 years, while those with compensated cirrhosis have a median survival of more than 12 years.

Intraoperative considerations drew on studies of cholecystectomy, which suggested that patients with mild to moderate cirrhosis can undergo laparoscopic surgery and may even have a decreased risk of mortality, pneumonia, sepsis, and reoperation as a result. And although most laparoscopic procedures involve an intraperitoneal onlay mesh repair and the “use of mesh for hernia repair in patients with cirrhosis has been debated,” a 2007 study found synthetic mesh to be safe for elective herniorrhaphy.

Finally, they outlined specific postoperative risks, including encephalopathy and ascites, and noted that cirrhosis itself “precipitates immune dysfunction and deficiency and promotes systemic inflammation.” At the same time, they highlighted a 2008 study in which 32 patients with cirrhosis underwent elective Lichtenstein repair with no major complications and an overall improvement in quality of life.

The coauthors noted that, although many surgeons avoid hernia repair in patients with severe liver disease because of its association with high morbidity and mortality, that reluctance fades in emergencies, when forgoing surgery would lead to a worse prognosis than intervening. That said, when it comes to preemptive elective repair, there are also “no clear guidelines with regard to severity of cirrhosis and thresholds that would preclude herniorrhaphy.” Regardless of the choices made, “recognizing and managing complications in cirrhotic patients who have undergone hernia repair is crucial,” they wrote.

The authors reported no conflicts of interest.

SOURCE: Myers SP et al. J Surg Res. 2018 Oct 23. doi: 10.1016/j.jss.2018.09.052.

FROM THE JOURNAL OF SURGICAL RESEARCH

Key clinical point: With careful selection of patients, operative timing, and technique, herniorrhaphy may be appropriate in patients with cirrhosis.

Major finding: Patients with decompensated cirrhosis survived for a median of less than 2 years while those with compensated cirrhosis had a median survival of more than 12 years.

Study details: A literature review of 51 articles, including observational studies, randomized controlled trials, review articles, and a case report.

Disclosures: The authors reported no conflicts of interest.

Source: Myers SP et al. J Surg Res. 2018 Oct 23. doi: 10.1016/j.jss.2018.09.052.

For CABG, multiple and single arterial grafts show no survival difference

No significant difference in the rate of death was found between patients who underwent bilateral internal thoracic artery grafting and those who underwent single internal thoracic artery grafting during coronary artery bypass grafting (CABG) surgery, according to a randomized trial of patients scheduled to undergo CABG.

“At 10 years, in intention-to-treat analyses, there were no significant between-group differences in all-cause mortality,” wrote lead author David P. Taggart, MD, PhD, of the University of Oxford (England), and his coauthors, adding that “the results of this trial are not consistent with data from previous, nonrandomized studies.” The study was published in the New England Journal of Medicine.

In the multicenter, unblinded Arterial Revascularization Trial (ART), 3,102 patients with multivessel coronary artery disease were randomly assigned to receive either bilateral internal thoracic artery grafts (bilateral-graft group, 1,548 patients) or a standard single left internal thoracic artery graft (single-graft group, 1,554 patients) during CABG. However, 13.9% of the patients in the bilateral-graft group received only a single internal thoracic artery graft, while 21.8% of those in the single-graft group also received a radial artery graft.

At 10-year follow-up, 644 patients (20.8%) had died: 315 (20.3%) in the bilateral-graft group and 329 (21.2%) in the single-graft group. The composite of death, MI, or stroke occurred in 385 patients (24.9%) in the bilateral-graft group, compared with 425 (27.3%) in the single-graft group (hazard ratio, 0.90; 95% confidence interval, 0.79-1.03).
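As an arithmetic check on the 10-year figures, the sketch below recomputes each rate from its raw count and denominator. It shows that the overall mortality figure is taken over all 3,102 randomized patients, while each group's rates use that group's own size. This is plain proportion arithmetic under that reading of the numbers, not a reanalysis: the hazard ratio and confidence interval come from the trial's time-to-event analysis and cannot be reproduced from these counts.

```python
# Arithmetic check of the 10-year ART event rates quoted above.
# Plain proportions only; this does not reproduce the trial's hazard ratio.

def pct(events: int, n: int) -> float:
    """Return events/n as a percentage rounded to one decimal place."""
    return round(100 * events / n, 1)

group_size = {"bilateral": 1548, "single": 1554}
deaths = {"bilateral": 315, "single": 329}
composite = {"bilateral": 385, "single": 425}  # death, MI, or stroke

total_n = sum(group_size.values())      # all randomized patients
total_deaths = sum(deaths.values())     # deaths in both groups combined

overall_mortality = pct(total_deaths, total_n)
group_mortality = {g: pct(deaths[g], group_size[g]) for g in group_size}
group_composite = {g: pct(composite[g], group_size[g]) for g in group_size}

print(f"All patients: {total_deaths}/{total_n} died ({overall_mortality}%)")
for g in group_size:
    print(f"{g}: mortality {group_mortality[g]}%, "
          f"death/MI/stroke {group_composite[g]}%")
```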

The coauthors noted several reasons why their results may not have matched previous data, including conflicting evidence about the clinical effect of vein graft failure on survival and the patients noted above who received grafting other than what they were assigned. In addition, they acknowledged that ART was unblinded and that “biases may be introduced in the treatment of patients, depending on their randomization assignment.”

The study was supported by grants from the British Heart Foundation, the U.K. Medical Research Council, and the National Institute for Health Research Efficacy and Mechanism Evaluation Program. No relevant conflicts of interest were reported.

SOURCE: Taggart DP et al. N Engl J Med. 2019 Jan 31. doi: 10.1056/NEJMoa1808783.

Do the results of the Arterial Revascularization Trial undercut observational studies that favored bilateral internal thoracic artery grafting? Not just yet, according to Stuart J. Head, MD, PhD, and Arie Pieter Kappetein, MD, PhD, of Erasmus Medical Center, the Netherlands.

They also recognized the study’s limitations, including patients who received grafts other than those assigned and potential selection bias. Until another ongoing study of multiple arterial grafting is completed, the authors recommended that “CABG [coronary artery bypass grafting] with both internal thoracic arteries should not be abandoned. It should still be performed in patients with a low risk of sternal wound complications and a good long-term survival prognosis and by surgeons who are experienced in performing multiarterial CABG procedures.”

These comments are adapted from an accompanying editorial (N Engl J Med. 2019 Jan 31. doi: 10.1056/NEJMe1814681). No conflicts of interest were reported.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point:

Major finding: At 10-year follow-up, there were 315 deaths (20.3% of patients) in the bilateral-graft group and 329 deaths (21.2%) in the single-graft group.

Study details: A two-group, multicenter, randomized, unblinded trial of 3,102 patients who were scheduled to undergo coronary artery bypass grafting.

Disclosures: The study was supported by grants from the British Heart Foundation, the U.K. Medical Research Council, and the National Institute for Health Research Efficacy and Mechanism Evaluation Program. No relevant conflicts of interest were reported.

Source: Taggart DP et al. N Engl J Med. 2019 Jan 31. doi: 10.1056/NEJMoa1808783.

New study determines factors that can send flu patients to the ICU

Several independent factors, including a history of obstructive/central sleep apnea syndrome (OSAS/CSAS) or myocardial infarction and a body mass index greater than 30 kg/m2, may be associated with ICU admission and the high mortality that follows in influenza patients, according to an analysis of patients in the Netherlands who were treated during the 2015-2016 influenza epidemic.

Along with identifying these factors, lead author M.C. Beumer, of Radboud University Medical Center, the Netherlands, and his coauthors found that “coinfections with bacterial, fungal, and viral pathogens developed more often in patients who were admitted to the ICU.” The study was published in the Journal of Critical Care.

The coauthors reviewed 199 influenza patients admitted to two medical centers in the Netherlands between October 2015 and April 2016. Of those patients, 45 (23%) were admitted to the ICU, primarily because of respiratory failure. Mortality among ICU patients was 38% (17 of 45), compared with 9% (18 of 199) overall.
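The rates in this cohort are simple proportions, so they can be rechecked directly. The Python sketch below is a reader's arithmetic aid, not the authors' analysis; it assumes (as "overall" implies) that the 18 deaths include the 17 ICU deaths, and the non-ICU figure it prints is derived under that assumption rather than reported in the study.

```python
total_patients = 199
icu_patients = 45
icu_deaths = 17
all_deaths = 18  # assumption: this overall count includes the 17 ICU deaths


def as_pct(num: int, den: int) -> int:
    """Percentage rounded to a whole number, as in the article."""
    return round(100 * num / den)


print("ICU admission %:", as_pct(icu_patients, total_patients))
print("ICU mortality %:", as_pct(icu_deaths, icu_patients))
print("overall mortality %:", as_pct(all_deaths, total_patients))

# Derived, not reported: deaths among patients who stayed on the ward.
ward_patients = total_patients - icu_patients
ward_deaths = all_deaths - icu_deaths
print("ward deaths:", ward_deaths, "of", ward_patients)
```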

Compared with patients on the general ward, patients admitted to the ICU more often had a history of OSAS/CSAS (11% vs. 3%; P = .03) or MI (20% vs. 6%; P = .007), a BMI greater than 30 kg/m2 (30% vs. 15%; P = .04), and dyspnea as a symptom (77% vs. 48%; P = .001). ICU patients were also more likely to have influenza A rather than influenza B (87% vs. 66%; P = .009).

Pulmonary coinfections with bacterial, fungal, or viral pathogens were also more common among the 45 ICU patients (56% vs. 20%; P less than .0001). The most common bacterial pathogens were Staphylococcus aureus (11%) and Streptococcus pneumoniae (7%), while Aspergillus fumigatus (18%) and Pneumocystis jirovecii (7%) were the most common fungal pathogens.

Mr. Beumer and his colleagues noted potential limitations of their work, including the selection of patients from among the “most severely ill,” which contributed to an ICU admission rate higher than the 5%-10% reported elsewhere. They also acknowledged that their study relied on a “relatively small sample size” and covered a single seasonal influenza outbreak. However, “despite the limited validity,” they reiterated that “the identified factors may contribute to a complicated disease course and could represent a tool for early recognition of the influenza patients at risk for a complicated disease course.”

The authors reported no conflicts of interest.

SOURCE: Beumer MC et al. J Crit Care. 2019;50:59-65.

FROM THE JOURNAL OF CRITICAL CARE

Key clinical point:

Major finding: Flu patients in the ICU more frequently had a history of obstructive/central sleep apnea syndrome (11% vs. 3%; P = .03) and MI (20% vs. 6%; P = .007), compared with non-ICU flu patients.

Study details: A retrospective cohort study of 199 flu patients who were admitted to two academic hospitals in the Netherlands.

Disclosures: The authors reported no conflicts of interest.

Source: Beumer MC et al. J Crit Care. 2019;50:59-65.

Study hints at lacosamide’s efficacy for small fiber neuropathy

As a treatment for small fiber neuropathy (SFN), lacosamide reduced pain, appeared to improve sleep quality, and was well tolerated in patients with mutations in SCN9A, the gene encoding the voltage-gated sodium channel Nav1.7, according to a randomized, placebo-controlled, double-blind, crossover study published in Brain.

“This is the first study that investigated the efficacy of lacosamide [Vimpat] in patients with SFN,” wrote lead author Bianca T.A. de Greef, MD, of Maastricht University Medical Center, the Netherlands, and her coauthors. “Compared with placebo, lacosamide appeared to be safe to use and well tolerated in this cohort of patients.”

Lacosamide, which is approved in the United States to treat partial-onset seizures in people aged 4 years and older, has been shown to bind to and inhibit Nav1.7.

The investigators randomized 25 Dutch patients with Nav1.7-related SFN in the Lacosamide-Efficacy-’N’-Safety in SFN (LENSS) study to receive lacosamide followed by placebo, or vice versa. Patients were recruited between November 2014 and July 2016. One patient dropped out before treatment and another after the first treatment period, so 24 patients received lacosamide and 23 received placebo. In the first period, patients completed a 3-week titration phase, an 8-week treatment phase, a 2-week tapering phase, and a washout of at least 2 weeks; they then switched to the other treatment and repeated the same schedule.

The investigators used the daily pain intensity numerical rating scale, the daily sleep interference scale (DSIS), and other questionnaires to determine whether lacosamide reduced pain and thereby improved sleep quality. Mean average pain decreased by at least 1 point in 50.0% of patients while taking lacosamide, compared with 21.7% while taking placebo (odds ratio, 4.45; 95% confidence interval, 1.38-14.36; P = .0213). A decrease of at least 2 points occurred in 25.0% of patients with lacosamide versus 8.7% with placebo. Pain also interfered less with sleep during the lacosamide period, with a median DSIS score of 5.3, compared with 5.7 during the placebo period.

On the patients’ global impression of change questionnaire, 33.3% felt better while taking lacosamide, compared with 4.3% while taking placebo (P = .0156). Six serious adverse events occurred during the study, two of them during the lacosamide period. The most common adverse events with lacosamide were dizziness, headache, and nausea, all of which were comparable with those seen with placebo.
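Because the crossover groups are small, each percentage corresponds to only a handful of patients. The Python sketch below is a reader's back-calculation, not the investigators' method: it assumes the denominators are the 24 patients who received lacosamide and the 23 who received placebo, and it does not attempt to reproduce the reported odds ratio, which comes from the authors' own model.

```python
# Assumed denominators, taken from the dropout description in the text.
N_LACOSAMIDE = 24
N_PLACEBO = 23

# Reported percentages: (lacosamide, placebo).
reported = {
    "pain drop >= 1 point": (50.0, 21.7),
    "pain drop >= 2 points": (25.0, 8.7),
    "felt better (global impression)": (33.3, 4.3),
}


def implied_count(pct: float, n: int) -> int:
    """Nearest whole number of patients consistent with a reported percentage."""
    return round(pct / 100 * n)


for outcome, (pct_lac, pct_plc) in reported.items():
    lac = implied_count(pct_lac, N_LACOSAMIDE)
    plc = implied_count(pct_plc, N_PLACEBO)
    # Round-trip check: the count must reproduce the reported percentage.
    assert round(100 * lac / N_LACOSAMIDE, 1) == pct_lac
    assert round(100 * plc / N_PLACEBO, 1) == pct_plc
    print(f"{outcome}: {lac}/{N_LACOSAMIDE} vs {plc}/{N_PLACEBO}")
```

Every reported percentage round-trips cleanly, which is consistent with the stated denominators of 24 and 23.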

Dr. de Greef and her colleagues noted the study’s potential limitations, including a possible carryover effect that could have confounded direct treatment effects (which they tried to mitigate with a longer washout period) and a small cohort limited to a very specific group of patients. However, the authors chose this cohort because “our aim was to demonstrate proof-of-concept, which can be used for future studies involving larger groups of patients diagnosed with SFN.” Although their response rates were slightly lower than expected, they noted that “lacosamide appears to be as effective as currently available neuropathic pain treatment.”

The study was funded by the Prinses Beatrix Spierfonds. Some of the authors reported receiving grants, personal fees, funding for research, and/or honoraria from foundations, pharmaceutical companies, life sciences companies, and the European Commission.

SOURCE: de Greef BTA et al. Brain. 2019 Jan 14. doi: 10.1093/brain/awy329.

FROM BRAIN

Key clinical point:

Major finding: In the lacosamide group, 50.0% of patients reported mean average pain decreasing by at least 1 point, compared with 21.7% in the placebo group (odds ratio, 4.45; 95% confidence interval, 1.38-14.36; P = .0213).

Study details: A randomized, placebo-controlled, double-blind, crossover-design study of 25 patients with Nav1.7-related small fiber neuropathy who received lacosamide followed by placebo, or vice versa.

Disclosures: The study was funded by the Prinses Beatrix Spierfonds. Some of the authors reported receiving grants, personal fees, funding for research, and/or honoraria from foundations, pharmaceutical companies, life sciences companies, and the European Commission.

Source: de Greef BTA et al. Brain. 2019 Jan 14. doi: 10.1093/brain/awy329.

Brazilian study finds oral tranexamic acid effective for facial melasma

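Oral tranexamic acid (TA) improved facial melasma, compared with placebo, in a randomized, double-blind, controlled trial of patients from a public dermatology clinic in Brazil.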

“Our study was one of the few to compare the use of oral TA isolated against a control group using a placebo and to evaluate the results by four different methods,” wrote lead author Mariana Morais Tavares Colferai, MD, of the Universidade de Mogi das Cruzes (Brazil), and her coauthors. The study was published online in the Journal of Cosmetic Dermatology.

TA is a plasmin inhibitor, first described as a treatment for melasma in 1979. It is approved in the United States for treating menorrhagia.

In the randomized, double-blind, placebo-controlled trial, 47 patients with facial melasma were assigned to one of two groups, and 37 completed the study: group A (n = 20) received 250 mg of tranexamic acid twice daily, and group B (n = 17) received placebo twice daily. All patients were advised to use sunscreen. Before treatment and after 12 weeks, the researchers evaluated patients with four methods: the Melasma Area Severity Index (MASI), photographic records, a patient quality-of-life questionnaire (MELASQoL), and colorimetry.

Considering all four evaluation methods, melasma improved in 50% of patients in group A, compared with 5.9% of patients in group B (P less than .005). In group A, the mean MASI score fell from 20.9 before treatment to 10.8 after treatment (P less than .001), the mean MELASQoL score fell from 55.4 to 38.2 (P less than .001; lower scores indicate less impact on quality of life), and colorimetry values rose from 55.0 to 56.1 (P = .033; higher values indicate lighter pigmentation).
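For a sense of scale, the Python sketch below turns the reported melasma figures into patient counts and a relative change. It is a reader's arithmetic aid under stated assumptions, not part of the study: it assumes the percentages refer to the 20 and 17 patients who completed the study, and the relative MASI reduction is computed from group means, so it does not describe any individual patient's response.

```python
# Figures from the text; denominators assumed to be the 37 completers.
n_a, n_b = 20, 17
enrolled, completed = 47, 37

improved_a = round(0.50 * n_a)   # 50% of group A (tranexamic acid)
improved_b = round(0.059 * n_b)  # 5.9% of group B (placebo)

masi_before, masi_after = 20.9, 10.8
relative_masi_drop = (masi_before - masi_after) / masi_before  # based on group means

print("did not complete:", enrolled - completed)
print("improved:", improved_a, "of", n_a, "vs", improved_b, "of", n_b)
print("relative drop in mean MASI, group A (%):", round(100 * relative_masi_drop, 1))
```

On these assumptions, the 5.9% in the placebo group corresponds to a single patient, which is worth keeping in mind when reading the between-group comparison.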

The most common side effects among those who received TA were gastrointestinal effects, such as nausea and diarrhea (5%); changes in menstrual flow (10%); and headache (14%). No serious side effects were reported.

The authors acknowledged several potential limitations in their study, including a lack of intermediate evaluations between pretreatment and 12 weeks. They also noted that the photographs were dichotomously classified as either “yes for improvement” or “no for the lack of improvement or worsening,” which may “limit the accuracy of our results” for that particular method.

The tranexamic acid and placebo for the study were provided by U.SK Dermatology/Brazil. No conflicts of interest were reported.

SOURCE: Colferai MMT et al. J Cosmet Dermatol. 2018 Dec 9. doi: 10.1111/jocd.12830.

FROM THE JOURNAL OF COSMETIC DERMATOLOGY

Key clinical point: After 12 weeks and according to four methods of evaluation, oral tranexamic acid was effective in 50% of patients with melasma.

Major finding: Melasma improved in 50% of patients who received oral tranexamic acid, compared with 5.9% of patients who received placebo (P less than .005).

Study details: A randomized, double-blind, controlled trial of 47 patients with facial melasma, 37 of whom completed 12 weeks of oral tranexamic acid or placebo twice a day.

Disclosures: The tranexamic acid and placebo were provided by U.SK Dermatology/Brazil. No conflicts of interest were reported.

Source: Colferai MMT et al. J Cosmet Dermatol. 2018 Dec 9. doi: 10.1111/jocd.12830.

Blood test shows potential as an early biomarker of methotrexate inefficacy

A newly developed gene expression classifier may be able to identify rheumatoid arthritis patients who are unlikely to benefit from methotrexate, according to an analysis of blood samples from patients who had just begun the drug.

“This study highlights the potential of early treatment biomarker monitoring in RA and raises important questions regarding acceptable levels of performance for complementary diagnostic testing,” wrote lead author Darren Plant, PhD, of the University of Manchester (England), and his coauthors. The study was published in Arthritis & Rheumatology.

The study analyzed 85 participants in the Rheumatoid Arthritis Medication Study, a U.K.-based longitudinal observational study of RA patients taking methotrexate for the first time, and classified them as either good responders (n = 42) or nonresponders (n = 43) after 6 months on the drug, based on European League Against Rheumatism response criteria. Dr. Plant and his colleagues then performed gene expression profiling on whole-blood samples from those participants, collected before treatment and after 4 weeks on methotrexate.
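
Patients were grouped using European League Against Rheumatism (EULAR) response criteria. A minimal sketch of the standard EULAR DAS28 response table follows; it is an illustration, not code from the study, the function name `eular_response` is hypothetical, and the cutoffs should be checked against the EULAR definition before any real use. The baseline values in the example echo the group mean DAS28 scores reported in the article, while the follow-up values are invented.

```python
def eular_response(das28_baseline, das28_followup):
    """Classify EULAR response from DAS28 at baseline and at follow-up.

    Standard EULAR DAS28 response table:
      improvement > 1.2:         good if follow-up DAS28 <= 3.2, else moderate
      0.6 < improvement <= 1.2:  moderate if follow-up DAS28 <= 5.1, else none
      improvement <= 0.6:        none
    """
    # Round to 2 decimals so float noise (e.g. 4.4 - 3.2) cannot cross a cutoff.
    improvement = round(das28_baseline - das28_followup, 2)
    if improvement > 1.2:
        return "good" if das28_followup <= 3.2 else "moderate"
    if improvement > 0.6:
        return "moderate" if das28_followup <= 5.1 else "none"
    return "none"


# Hypothetical follow-up scores for two patients.
print(eular_response(4.8, 3.0))  # good
print(eular_response(4.0, 3.7))  # none
```

The article reports only the good responder and nonresponder groups, so the "moderate" category is shown here only for completeness.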

Pathway analysis showed an overrepresentation of type 1 interferon signaling pathway genes in nonresponders both before treatment (P = 2.8 × 10⁻²⁵) and at 4 weeks (P = 4.9 × 10⁻²⁸). On that basis, the coauthors “developed a gene expression classifier that could potentially provide an early response biomarker of methotrexate inefficacy” at 6 months, using the ratio of gene expression at 4 weeks to that before treatment. The classifier yielded an area under the receiver operating characteristic curve (ROC AUC) of 0.78, remained stable in cross-validation, and outperformed models including clinical covariates (ROC AUC, 0.65).
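
ROC AUC can be read as the probability that a randomly chosen nonresponder receives a higher classifier score than a randomly chosen good responder, so 0.5 is chance and 1.0 is perfect separation. The sketch below computes it directly from that pairwise (Mann-Whitney) definition; the function name `roc_auc` and the scores are hypothetical, and this is not the authors' classifier.

```python
from itertools import product


def roc_auc(nonresponder_scores, responder_scores):
    """ROC AUC from its pairwise (Mann-Whitney) definition.

    Returns the fraction of (nonresponder, responder) pairs in which the
    nonresponder has the higher score; tied pairs count as half.
    """
    if not nonresponder_scores or not responder_scores:
        raise ValueError("each group needs at least one score")
    wins = 0.0
    for pos, neg in product(nonresponder_scores, responder_scores):
        if pos > neg:
            wins += 1.0
        elif pos == neg:
            wins += 0.5
    return wins / (len(nonresponder_scores) * len(responder_scores))


# Hypothetical scores, where higher means "more likely to be a nonresponder".
nonresponders = [0.9, 0.8, 0.6, 0.4]
responders = [0.7, 0.5, 0.3, 0.2]
print(roc_auc(nonresponders, responders))  # 0.8125 (13 of 16 pairs ranked correctly)
```

The pairwise loop is O(n·m), which is adequate for groups the size of this study's (42 and 43 patients); rank-based formulas give the same value faster for large samples.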

The results were not replicated in an independent cohort, and the authors noted that the “utility of a gene expression classifier of methotrexate nonresponse now requires validation, not only in independent samples but also using independent technology.”

The study may also have been limited by an atypical baseline population, with long median disease durations of 6-9 years but relatively low disease activity, based on a mean 28-joint Disease Activity Score (DAS28) of 4.8 in good responders and 4.0 in nonresponders. The authors also acknowledged that the DAS28 and its components draw on both objective and subjective measures in approximating disease activity. In addition, the analysis did not include a negative predictive value, which would have been useful in judging the value of the test.
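
Negative predictive value (NPV) is the probability that a patient the test does not flag will in fact respond, and unlike ROC AUC it depends on how common nonresponse is. A minimal sketch using Bayes' theorem follows; the function name `predictive_values` and the sensitivity and specificity inputs are hypothetical, since the article reports neither. The 43/85 figure is the nonresponder share in the study sample, and 0.30 is an arbitrary comparison value.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) of a binary test via Bayes' theorem.

    "Positive" means the test flags a patient as a likely methotrexate
    nonresponder; prevalence is the share of true nonresponders.
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    true_pos = sensitivity * prevalence               # nonresponders correctly flagged
    false_neg = (1 - sensitivity) * prevalence        # nonresponders missed
    true_neg = specificity * (1 - prevalence)         # responders correctly cleared
    false_pos = (1 - specificity) * (1 - prevalence)  # responders wrongly flagged
    flagged = true_pos + false_pos
    cleared = true_neg + false_neg
    # Undefined (NaN) if the test never returns that result.
    ppv = true_pos / flagged if flagged > 0 else float("nan")
    npv = true_neg / cleared if cleared > 0 else float("nan")
    return ppv, npv


# Hypothetical operating point: sensitivity 0.75, specificity 0.70.
for prevalence in (43 / 85, 0.30):
    ppv, npv = predictive_values(0.75, 0.70, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

With these assumed inputs, NPV rises and PPV falls as nonresponders become less common, which is why a reported NPV is only meaningful alongside the prevalence at which it was computed.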

The study was jointly funded by Pfizer, the Medical Research Council, and Arthritis Research UK. No conflicts of interest were reported.

SOURCE: Plant D et al. Arthritis Rheumatol. 2019 Jan 7. doi: 10.1002/art.40810.

FROM ARTHRITIS & RHEUMATOLOGY

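Key clinical point: A gene expression classifier may help identify patients with rheumatoid arthritis who are unlikely to benefit from methotrexate.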

Major finding: A blood test that uses a gene expression ratio between 4 weeks and pretreatment yielded a ROC AUC of 0.78 for predicting methotrexate inefficacy at 6 months.

Study details: An analysis of blood samples from 85 participants in a U.K.-based longitudinal observational study of RA patients starting methotrexate for the first time.

Disclosures: The study was jointly funded by Pfizer, the Medical Research Council, and Arthritis Research UK. No conflicts of interest were reported.

Source: Plant D et al. Arthritis Rheumatol. 2019 Jan 7. doi: 10.1002/art.40810.

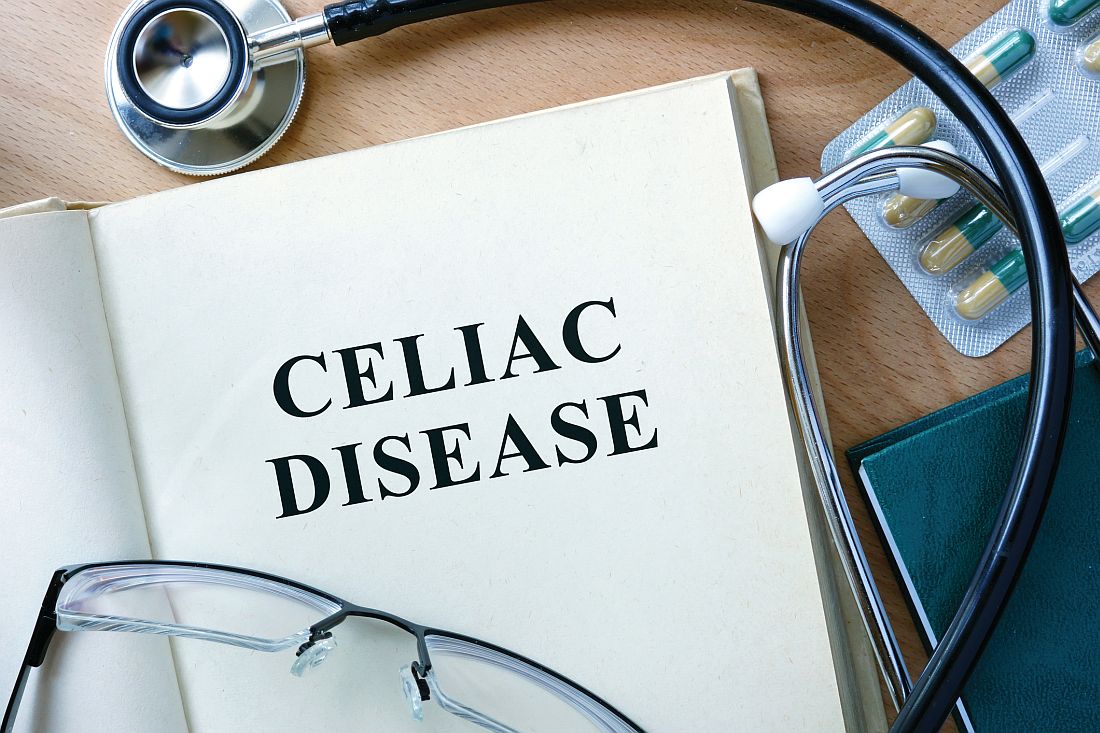

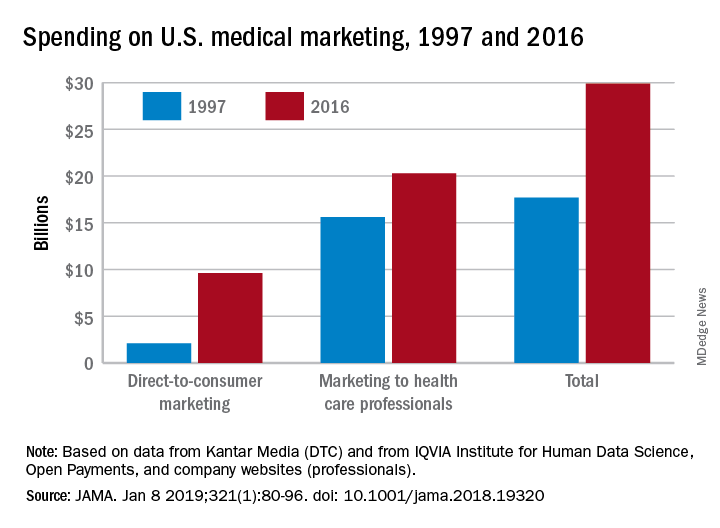

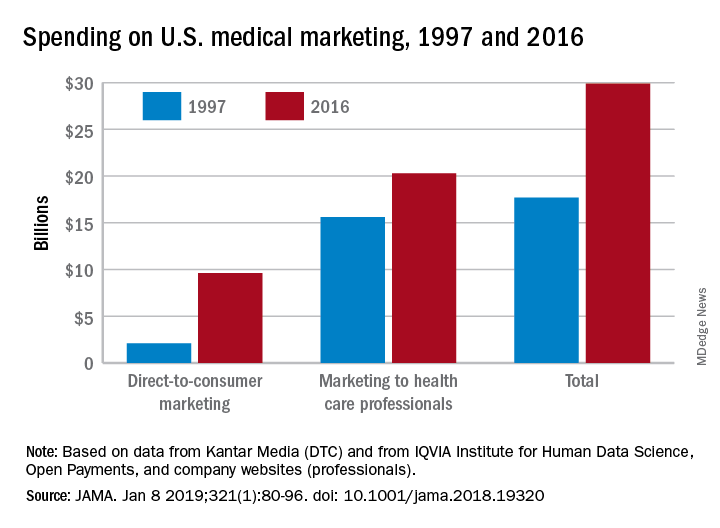

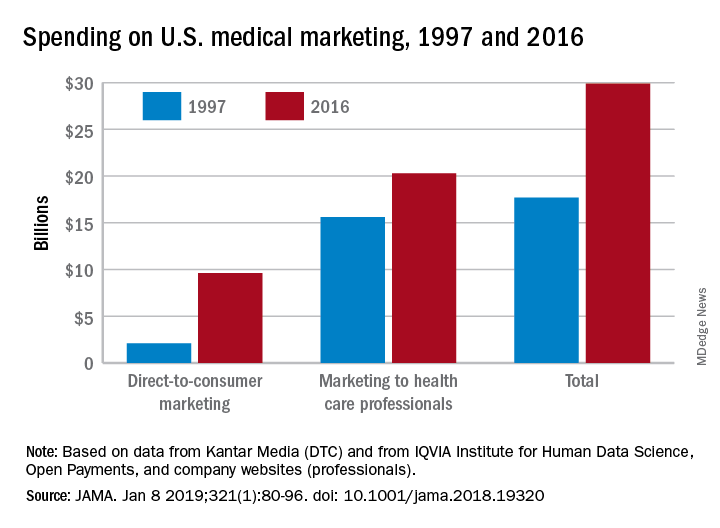

Spending on medical marketing increased by $12.2 billion over the last 2 decades

Total spending on medical marketing in the United States increased from $17.7 billion in 1997 to $29.9 billion in 2016, according to an analysis of direct-to-consumer (DTC) and professional marketing for prescription drugs, disease awareness campaigns, health services, and laboratory tests.
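
The headline change follows directly from the two totals; the relative increase below is simple arithmetic on those figures, not a number quoted from the analysis.

$$\$29.9\text{ billion} - \$17.7\text{ billion} = \$12.2\text{ billion}, \qquad \frac{12.2}{17.7} \approx 0.69$$

That is, total spending rose by roughly 69% between 1997 and 2016.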