Negative nasal swabs reliably predicted no MRSA infection

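SAN DIEGO – Negative surveillance nasal swabs reliably predicted that ICU patients would not develop MRSA infection, said Darunee Chotiprasitsakul, MD, of Johns Hopkins Medicine in Baltimore.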

But physicians often prescribed vancomycin anyway, accumulating nearly 7,400 potentially avoidable treatment days over a 19-month period, she said during an oral presentation at an annual meeting on infectious diseases.

Current guidelines recommend empiric vancomycin to cover MRSA infection when ill patients have a history of MRSA colonization or recent hospitalization or exposure to antibiotics. Patients whose nasal screening swabs are negative for MRSA have been shown to be at low risk of subsequent infection, but guidelines don’t address how to use swab results to guide decisions about empiric vancomycin, Dr. Chotiprasitsakul said.

Therefore, she and her associates studied 11,882 adults without historical MRSA infection or colonization who received nasal swabs for routine surveillance in adult ICUs at Johns Hopkins. A total of 441 patients (4%) had positive swabs, while 96% tested negative.

Among patients with negative swabs, only 25 (0.22%) developed MRSA infection requiring treatment. Thus, the negative predictive value of a nasal swab for MRSA was 99%, making the probability of infection despite a negative swab “exceedingly low,” Dr. Chotiprasitsakul said.
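The arithmetic behind that figure can be checked from the counts reported above. The following is a minimal sketch, assuming the number of negative swabs is simply the total screened minus the positives; the function and variable names are illustrative and do not come from the study.

```python
def negative_predictive_value(negative_tests: int, infections_after_negative: int) -> float:
    """Fraction of negative tests that were NOT followed by infection."""
    if negative_tests <= 0:
        raise ValueError("negative_tests must be positive")
    true_negatives = negative_tests - infections_after_negative
    return true_negatives / negative_tests


# Counts reported in the article.
total_screened = 11_882
positive_swabs = 441
infections_after_negative = 25

# Assumption: every screened patient had either a positive or a negative swab.
negative_swabs = total_screened - positive_swabs

npv = negative_predictive_value(negative_swabs, infections_after_negative)
infection_rate = infections_after_negative / negative_swabs

print(f"Negative swabs: {negative_swabs}")
print(f"Infection rate after a negative swab: {infection_rate:.2%}")
print(f"Negative predictive value: {npv:.2%}")
```

Under that assumption the infection rate after a negative swab works out to about 0.22%, which matches the reported figure and is consistent with a negative predictive value of at least 99%.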

But clinicians seemed not to use negative swab results to curtail vancomycin therapy, she found. Rates of empiric vancomycin use were 36% among patients with positive swabs and 39% among those with negative swabs. Over 19 months, ICU patients received 7,371 avoidable days of vancomycin, a median of 3 days per patient.

Matching patients by ICU and days at risk identified no significant predictors of MRSA infection, Dr. Chotiprasitsakul said. Johns Hopkins Medicine has robust infection control practices, high compliance with hand hygiene and contact precautions, and low rates of nosocomial MRSA transmission, she noted. The predictive value of a negative MRSA nasal swab could be lower at institutions where that isn’t the case, she said.

Johns Hopkins is working to curtail unnecessary use of vancomycin, said senior author Sara Cosgrove, MD, professor of medicine in infectious diseases and director of the department of antimicrobial stewardship. The team has added the findings to its guidelines for antibiotic use, which are available in an app for Johns Hopkins providers, she said in an interview.

The stewardship team also highlights the data when discussing starting and stopping vancomycin in patients at very low risk for MRSA infections, she said. “In general, providers have responded favorably to acting upon this new information,” Dr. Cosgrove noted.

Johns Hopkins continues to track median days of vancomycin use per patient and per 1,000 days in its units. “[We] will assess if there is an impact on vancomycin use over the coming year,” said Dr. Cosgrove.

The investigators had no conflicts of interest. The event marked the combined annual meetings of the Infectious Diseases Society of America, the Society for Healthcare Epidemiology of America, the HIV Medicine Association, and the Pediatric Infectious Diseases Society.

AT IDWEEK 2017

Key clinical point: Only 0.2% of ICU patients with negative surveillance nasal swabs developed MRSA infections during the same hospitalization.

Major finding: The predictive value of a negative swab was 99%.

Data source: A study of 11,882 adults without historical MRSA infection or colonization who received nasal swabs for routine surveillance.

Disclosures: The investigators had no conflicts of interest.

Statins linked to lower death rates in COPD

Receiving a statin prescription within a year after diagnosis of chronic obstructive pulmonary disease was associated with a 21% decrease in the subsequent risk of all-cause mortality and a 45% drop in risk of pulmonary mortality, according to the results of a large retrospective administrative database study.

The findings belie those of the recent Simvastatin in the Prevention of COPD Exacerbation (STATCOPE) trial, in which daily simvastatin (40 mg) did not affect exacerbation rates or time to first exacerbation in high-risk COPD patients, wrote Larry D. Lynd, PhD, a professor at the University of British Columbia, Vancouver, and his associates. Their study was observational, but the association between statin use and decreased mortality “persisted across several measures of statin exposure,” they wrote. “Our findings, in conjunction with previously reported evidence, suggest that there may be a specific subtype of COPD patients that may benefit from statin use.” The study appears in the September issue of CHEST (2017;152:486-93).

To further explore the question, the researchers analyzed linked health databases from nearly 40,000 patients aged 50 years and older who had received at least three prescriptions for an anticholinergic or a short-acting beta agonist in 12 months some time between 1998 and 2007. The first prescription was considered the date of COPD “diagnosis.” The average age of the patients was 71 years; 55% were female.

A total of 7,775 patients (19.6%) who met this definition of incident COPD were prescribed a statin at least once during the subsequent year. These patients had a significantly reduced risk of subsequent all-cause mortality in univariate and multivariate analyses, with hazard ratios of 0.79 (95% confidence intervals, 0.68-0.91; P less than .002). Statins also showed a protective effect against pulmonary mortality, with univariate and multivariate hazard ratios of 0.52 (P = .01) and 0.55 (P = .03), respectively.
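The percentage reductions quoted at the top of the article follow from these hazard ratios. As a minimal sketch, assuming the usual reading of a hazard ratio below 1 as a relative reduction in the hazard rate (not in cumulative risk), the conversion is (1 − HR) × 100; the function name is illustrative.

```python
def relative_hazard_reduction_pct(hazard_ratio: float) -> float:
    """Convert a hazard ratio into a percent reduction in hazard."""
    if hazard_ratio < 0:
        raise ValueError("hazard_ratio must be non-negative")
    return (1.0 - hazard_ratio) * 100.0


# Hazard ratios reported in the article.
reported = {
    "all-cause mortality": 0.79,
    "pulmonary mortality (multivariate)": 0.55,
}

for outcome, hr in reported.items():
    reduction = relative_hazard_reduction_pct(hr)
    print(f"{outcome}: HR {hr:.2f} -> about {reduction:.0f}% lower hazard")
```

The 21% and 45% figures therefore correspond to the hazard ratios of 0.79 and 0.55, respectively.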

The protective effect of statins held up when the investigators narrowed the exposure period to 6 months after COPD diagnosis and when they expanded it to 18 months. Exposure to statins for 80% of the 1-year window after COPD diagnosis, a proxy for statin adherence, was also associated with a reduced risk of all-cause mortality, but the association did not reach statistical significance (95% confidence interval for the hazard ratio, 0.71-1.01; P = .06).

The most common prescription was for atorvastatin (49%), usually for 90 days (23%), 100 days (20%), or 30 days (15%), the researchers said. While the “possibility of the ‘healthy user’ or the ‘healthy adherer’ cannot be ignored,” they adjusted for other prescriptions, comorbidities, and income level, which should have helped eliminate this effect, they added. However, they lacked data on smoking and lung function assessments, both of which are “important confounders and contributors to mortality,” they acknowledged.

Despite [its] limitations, the study results are intriguing and in line with findings from other retrospective cohorts, noted Or Kalchiem-Dekel, MD, and Robert M. Reed, MD, in an editorial published in CHEST (2017;152:456-7. doi: 10.1016/j.chest.2017.04.156).

How then can we reconcile the apparent benefits observed in retrospective studies with the lack of clinical effect seen in prospective trials, particularly in the STATCOPE study? Could it be that both negative and positive studies are “correct”? Prospective studies have thus far not been adequately powered for mortality as an endpoint, said the editorialists, who are both at the pulmonary and critical care medicine division, University of Maryland, Baltimore. This most recent study reinforces the idea that statins may play a beneficial role in COPD, but it isn’t clear which patients to target for therapy. It is unlikely that the findings will reverse recent recommendations by the American College of Chest Physicians and Canadian Thoracic Society against the use of statins for the purpose of prevention of COPD exacerbations, but the suggestion of survival advantage related to statins certainly may breathe new life into an enthusiasm greatly tempered by STATCOPE, they said.

Canadian Institutes of Health Research supported the study. One coinvestigator disclosed consulting relationships with Teva, Pfizer, and Novartis; the others had no conflicts of interest. Neither editorialist had conflicts of interest.

FROM CHEST

Study supports routine rapid HCV testing for at-risk youth

Routine finger-stick testing for hepatitis C virus infection is the best screening strategy for 15- to 30-year-olds, provided that at least 6 in every 1,000 have injected drugs, according to the results of a modeling study.

“Currently, nearly all hepatitis C virus (HCV) transmission in the United States occurs among young persons who inject drugs,” wrote Sabrina A. Assoumou, MD, of Boston Medical Center and Boston University and her associates. “We show that routine testing provides the most clinical benefit and best value for money in an urban community health setting where HCV prevalence is high.”

Rapid routine testing consistently yielded more quality-adjusted life years (QALYs) at a lower cost than did the current practice of reflexive, risk-based venipuncture testing, the researchers said. They recommended that urban health centers either replace venipuncture diagnostics with routine finger-stick testing or that they ensure follow-up RNA testing when needed so they can link HCV-positive patients to treatment (Clin Infect Dis. 2017 Sep 9. doi: 10.1093/cid/cix798).

The Centers for Disease Control and Prevention has recommended risk-based HCV testing, but studies indicate that primary care providers often miss the chance to test and have trouble identifying high-risk patients, Dr. Assoumou and her associates said. The standard HCV test is a blood draw for antibody testing followed by confirmatory RNA testing, but a two-step process complicates follow-up.

To compare one-time HCV screening strategies in high-risk settings, the researchers created a decision analytic model using TreeAge Pro 2014 software and input data on prevalence, mortality, treatment costs, and efficacy from an extensive literature review.

Compared with targeted risk-based HCV testing, routine rapid testing performed by dedicated counselors yielded an incremental cost-effectiveness ratio of less than $100,000 per quality-adjusted life year unless the prevalence of injection drug use was less than 0.59%, the prevalence of HCV infection among injection drug users was less than 16%, the reinfection rate exceeded 26 cases per 100 person-years, or all venipuncture antibody tests were followed by confirmatory testing. Routine rapid testing identified 20% of HCV infections in the model, which is four times the rate under current practice. Rates of sustained virologic response were 18% with routine rapid testing and 2% with standard practice.
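For readers unfamiliar with the metric, an incremental cost-effectiveness ratio (ICER) is the extra cost of a strategy divided by the extra QALYs it yields, compared against a willingness-to-pay threshold such as the $100,000 per QALY cited above. The sketch below illustrates only the calculation; the per-person costs and QALYs are hypothetical placeholders, not values from the study, and the function name is an assumption.

```python
def icer(cost_new: float, cost_old: float, qaly_new: float, qaly_old: float) -> float:
    """Incremental cost per QALY gained for the new strategy versus the old one."""
    delta_cost = cost_new - cost_old
    delta_qaly = qaly_new - qaly_old
    if delta_qaly == 0:
        raise ValueError("strategies yield identical QALYs; ICER is undefined")
    return delta_cost / delta_qaly


# Threshold cited in the article (dollars per QALY).
WILLINGNESS_TO_PAY = 100_000

# HYPOTHETICAL inputs for illustration only; not taken from the study.
example = icer(cost_new=6_000.0, cost_old=1_000.0, qaly_new=20.25, qaly_old=20.0)

print(f"ICER: ${example:,.0f} per QALY")
print("Below threshold" if example < WILLINGNESS_TO_PAY else "Above threshold")
```

The ratio is meaningful in this simple form only when the new strategy both costs more and yields more QALYs; a strategy that is cheaper and more effective is preferred outright without computing a ratio.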

Routine rapid testing did not dramatically boost QALYs at a population level, the researchers acknowledged, but diagnosing and treating an injection drug user increased life span by an average of 2 years and saved $214,000 per patient in additional costs.

“Rapid testing always provided greater life expectancy than venipuncture testing at either a lower lifetime medical cost or a lower cost/QALY gained,” the investigators concluded. “Future studies are needed to define the programmatic effectiveness of HCV treatment among youth, and testing and treatment acceptability in this population.”

The National Institute on Drug Abuse and the National Institute of Allergy and Infectious Diseases provided funding. The researchers reported having no conflicts of interest.

FROM CLINICAL INFECTIOUS DISEASES

Key clinical point: Routine finger-stick testing was the most cost-effective way to screen urban adolescents and young adults for hepatitis C virus infection.

Major finding: The incremental cost-effectiveness ratio was less than $100,000 per quality-adjusted life year unless prevalence of injection drug use was less than 0.59%, less than 16% of injection drug users had HCV infection, the reinfection rate exceeded 26 cases per 100 person-years, or all venipuncture antibody tests were followed by confirmatory testing.

Data source: A decision analytic model created with TreeAge Pro 2014 and data from an extensive literature review.

Disclosures: The National Institute on Drug Abuse and the National Institute of Allergy and Infectious Diseases provided funding. The researchers reported having no conflicts of interest.

Household MRSA contamination predicted human recolonization

SAN DIEGO – Patients who were successfully treated for methicillin-resistant Staphylococcus aureus infections were about four times more likely to become recolonized if their homes contained MRSA, according to the results of a longitudinal household study.

“Many of these homes were contaminated with a classic community strain,” Meghan Frost Davis, DVM, PhD, MPH, said during an oral presentation at an annual meeting on infectious disease. “We need to think about interventions in the home environment to improve our ability to achieve successful decolonization.”

Importantly, Dr. Davis and her associates recently published another study in which biocidal disinfectants failed to eliminate MRSA from homes and appeared to increase the risk of multi-drug resistance (Appl Environ Microbiol. online 22 September 2017, doi: 10.1128/AEM.01369-17). Her team is testing MRSA isolates for resistance to disinfectants and hopes to have more information in about a year, she said. Until then, Dr. Davis suggests advising patients with MRSA to clean sheets and pillowcases frequently.

S. aureus can survive in the environment for long periods. In one case, a MRSA strain tied to an outbreak was cultured from a dry mop that had been locked in a closet for 79 months, Dr. Davis said. “This is concerning because the home is a place that receives bacteria from us,” she said. “A person who was originally colonized or infected with MRSA may clear naturally or through treatment, but the environment may become a reservoir for recolonization and infection.”

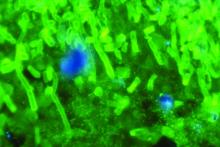

To better understand the role of this reservoir, she and her associates recruited 88 index outpatients with MRSA skin and soft tissue infections who were part of a randomized trial of MRSA decolonization strategies. At baseline and 3 months later, the researchers sampled multiple sites in each patient’s home and all household pets. Patients and household members also swabbed themselves in multiple body sites every 2 weeks for up to 3 months. Swabs were cultured in enrichment broth, and positive results were confirmed by PCR (Infect Control Hosp Epidemiol. 2016 Oct;37[10]:1226-33. doi: 10.1017/ice.2016.138. Epub 2016 Jul 28).

Even after accounting for potential confounders, household contamination with MRSA was associated with about a three- to five-fold increase in the odds of human colonization, which was statistically significant. Seventy percent of households had at least one pet, but only 10% had a pet colonized with MRSA. Having such a pet increased the risk of human carriage slightly, but not significantly. However, having more than one pet did predict human colonization, Dr. Davis said. Even if pets aren’t colonized, they still can carry MRSA on “the petting zone” – the top of the head and back, she explained. Thus, pets can serve as reservoirs for MRSA without being colonized.

In all, 53 index patients had at least two consecutive negative cultures and thus were considered decolonized. However, 43% of these individuals were subsequently recolonized, and those whose homes contained MRSA at baseline were about 4.3 times more likely to become recolonized than those whose households cultured negative (hazard ratio, 4.3; 95% CI, 1.2-16; P less than .03).

A total of six patients were persistently colonized with MRSA, and 62% of contaminated homes tested positive for MRSA Staph protein A (spa) type t008, a common community-onset strain. Living in one of these households significantly increased the chances of persistent colonization (odds ratio, 12.7; 95% CI, 1.33-122; P less than .03).

Pets testing positive for MRSA always came from homes that also tested positive, so these factors couldn’t be disentangled, Dr. Davis said. Repository surfaces in homes – such as the top of a refrigerator – were just as likely to be contaminated with MRSA as high-touch surfaces. However, pillowcases often were most contaminated of all. “If I can give you one take-home message, when you treat people with MRSA, you may want to tell them to clean their sheets and pillowcases a lot.”

Dr. Davis and her associates had no disclosures.

IDWEEK 2017

Key clinical point: Patients who were successfully decolonized of MRSA were significantly more likely to become recolonized if their homes were contaminated with MRSA.

Major finding: The risk of recolonization was about four-fold higher if a home was contaminated at baseline (hazard ratio, 4.3; 95% CI, 1.2-16).

Data source: A nested household study that enrolled 88 index patients with MRSA skin and soft tissue infections.

Disclosures: Dr. Davis and her associates had no disclosures.

Focal cultures, PCR upped Kingella detection in pediatric hematogenous osteomyelitis

SAN DIEGO – Early focal cultures and strategic use of polymerase chain reaction (PCR) testing helped a hospital detect Kingella kingae seven times more often in a study of young children with acute non-complex hematogenous osteomyelitis, Rachel Quick, MSN, CNS, said at an annual meeting on infectious diseases.

Kingella kingae turned out to be the leading culprit in these cases, although the new approach also enhanced detection of Staphylococcus aureus and other bacteria, said Ms. Quick of Seton Healthcare Family in Austin, Texas. Children also transitioned to oral antibiotics a median of 22 days sooner and needed peripherally inserted central catheters (PICC) two-thirds less often after the guideline was implemented, she said during her oral presentation.

After implementing the guideline, Ms. Quick and her associates compared 25 children treated beforehand with 24 children treated afterward. Patients were 6 months to 5 years old, had physical signs and symptoms of acute hematogenous osteomyelitis or septic joint, and had been symptomatic for less than 14 days. The study was conducted between 2009 and 2016.

Kingella kingae was identified in one patient (4%) from the baseline cohort and in seven patients (29%) after the guideline was rolled out (P = .02), Ms. Quick said. Kingella was cultured from focal samples only, not from blood. Detection of methicillin-sensitive Staphylococcus aureus (MSSA) jumped from 8% to 17%, while cases with no detectable pathogen dropped from 80% to 46%. Lengths of stay and readmission rates did not change significantly.
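The before-and-after comparison of Kingella detection can be checked with a short calculation. The presentation as reported here does not name the statistical test behind the P value, so the sketch below assumes a two-sided Fisher exact test on the reported counts (1 of 25 children before the guideline, 7 of 24 afterward). The function name `fisher_exact_two_sided` is illustrative and is not taken from the study.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Assumption: this is an illustrative choice of test; the article does not
    say which test the investigators used.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x positives in the first row.
        return comb(row1, x) * comb(row2, col1 - x) / total

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # Sum every table that is no more likely than the observed one.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Counts reported in the article: Kingella found in 1 of 25 children at
# baseline and in 7 of 24 children after the guideline.
p_value = fisher_exact_two_sided(1, 24, 7, 17)
print(f"P = {p_value:.3f}")
```

Under that assumption, the result falls between .02 and .03, which is in line with the reported value of .02.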

Taken together, the findings show how early focal cultures and PCR can facilitate targeted therapy in acute pediatric bone and joint infections, prevent unnecessary antibiotic use, and expedite a targeted transition to oral antibiotics, said Ms. Quick. “We recognize that we have a small sample and that these are not complicated cases,” she said. “Our findings do not suggest it’s more important to look for Kingella than Staphylococcus aureus, but that Kingella should be up there in the ranks of what we’re looking for.”

The investigators reported having no conflicts of interest.

IDWEEK 2017

Key clinical point: Early focal cultures and strategic use of polymerase chain reaction (PCR) enhanced detection of Kingella kingae and other bacteria in young children with acute non-complex hematogenous osteomyelitis.

Major finding: Detection of Kingella surged from 4% to 29%.

Data source: A retrospective cohort study of 49 children with non-complex acute hematogenous osteomyelitis or septic arthritis.

Disclosures: The investigators reported having no conflicts of interest.

Faster multiplex PCR improved management of pediatric acute respiratory illness

SAN DIEGO – Switching to a faster, more comprehensive multiplex PCR viral respiratory assay enabled a hospital to discharge young children with acute respiratory illnesses sooner, prescribe oseltamivir more often, and curtail the use of antibiotics and thoracic radiography, Rangaraj Selvarangan, PhD, reported at an annual meeting on infectious disease.

The study shows how rapid multiplex PCR testing can facilitate antimicrobial stewardship, said Dr. Selvarangan, who is a professor at the University of Missouri Kansas City School of Medicine and director of the microbiology laboratory at Children’s Mercy Kansas City. “Our antimicrobial stewardship programs monitor these test results daily, add notes, and make recommendations on antibiotic choices,” he said.

For the study, the researchers compared hospital records from December 2008 through May 2012, when Children’s Mercy Hospital used the Luminex xTAG Respiratory Viral Panel, with records from August 2012 through June 2015, after the hospital had switched over to the BioFire FilmArray Respiratory Panel. FilmArray targets the same 17 viral pathogens as the Luminex panel but also targets Bordetella pertussis, Chlamydia pneumoniae, Mycoplasma pneumoniae, seasonal influenza A, parainfluenza type 4, and four coronaviruses.

The study included children aged up to 2 years who were not on immunosuppressive medications, in the NICU, or hospitalized for more than 7 days. For this population, the two panels yielded similar rates of positivity overall (about 60%) and for individual viruses, Dr. Selvarangan said. A total of 810 patients tested positive for at least one virus on the Luminex panel, and 2,096 patients tested positive on FilmArray. Results for FilmArray were available within a median of 4 hours, versus 29 hours for Luminex (P less than .001). The prevalence of empiric antibiotic therapy was 44% during the Luminex era and 28% after the hospital switched to FilmArray (P less than .001). Rates of antibiotic discontinuation rose from 16% with Luminex to 23% with FilmArray (P less than .01). Strikingly, oseltamivir prescriptions rose five-fold (from 17% to 85%; P less than .001) after the hospital began using FilmArray, which covers seasonal influenza. Finally, use of chest radiography fell significantly in both infants and older children after the hospital began using FilmArray instead of Luminex.

Clinicians might have become more comfortable interpreting molecular test results over time, which could have altered their clinical decision-making and thereby biased the study results, Dr. Selvarangan noted. Although at least four other single-center retrospective studies have linked multiplex PCR assays to better antibiotic stewardship, this study is one of the first to directly compare clinical outcomes between two assays, he added. More studies are needed to help guide choice of multiplex assays, whose cost remains a major barrier to widespread use, he concluded.

Dr. Selvarangan disclosed grant support from both BioFire Diagnostics and Luminex Corporation, and an advisory relationship with BioFire.

AT IDWEEK 2017

Key clinical point: Faster multiplex PCR respiratory panel results helped guide management of young children hospitalized with acute respiratory illness.

Major finding: Empiric antibiotic therapy, thoracic radiography, and median length of stay all decreased.

Data source: A descriptive single-center retrospective study of 2,905 children up to 24 months old who were hospitalized with acute respiratory illness and tested positive for at least one virus on a multiplex PCR.

Disclosures: Dr. Selvarangan disclosed grant support from both BioFire Diagnostics and Luminex Corporation, and an advisory relationship with BioFire.

PrEP is not main driver in STI epidemic, says expert

SAN DIEGO – Use of pre-exposure prophylaxis (PrEP) to prevent HIV transmission has accelerated, but it is not the main reason for surging rates of sexually transmitted infections (STIs) in the United States, Kenneth Mayer, MD, said during an oral presentation at an annual scientific meeting on infectious diseases.

Public health officials are seeing unprecedented rises in STIs such as syphilis and gonorrhea in both HIV-negative and HIV-positive individuals, and these trends predate the advent of PrEP, said Dr. Mayer of the Fenway Institute and Harvard University in Boston, Mass. “An overall level of behavioral disinhibition is fueling this epidemic and is not necessarily associated with PrEP,” he said.

Several studies suggest that being on PrEP does not increase the likelihood of acquiring or transmitting an STI, Dr. Mayer emphasized. In the open-label PROUD trial of PrEP in men who have sex with men (MSM), “rates of STI were extremely high and remained so, but did not go up after PrEP was initiated,” he noted. Importantly, the incidence of HIV infection was only 1.6 per 100 person-years when MSM received PrEP immediately but was 9.4 cases per 100 person-years when MSM were randomly assigned to a 1-year wait list (rate ratio, 6.0). In the randomized ANRS IPERGAY trial, 70% of high-risk MSM prescribed PrEP reported engaging in condomless anal intercourse during their most recent sexual encounter, but that proportion remained stable over 24 subsequent months of follow-up.

Providers also should understand that oral PrEP is just as effective at preventing HIV transmission when patients have STIs, Dr. Mayer said. In five recent studies, PrEP was equally efficacious among MSM regardless of whether they had syphilis or other STIs, and bacterial vaginosis in women also did not decrease the efficacy of oral PrEP. “There is no evidence to indicate that the efficacy of PrEP is lower among persons with STIs,” Dr. Mayer said.

Finally, providers should consider screening high-risk individuals for STIs more frequently than every 6 months as recommended by the Centers for Disease Control and Prevention, said Dr. Mayer. “For men who have sex with men, who are sexually active, and are on PrEP, quarterly screening makes exceedingly good sense from a cost-effectiveness standpoint,” he said.

“Screening less frequently than quarterly means that these individuals are having STIs for a longer period of time. When they are sexually active, we have a better chance of interrupting the transmission chain if we detect closer to the time of infection.”

Dr. Mayer disclosed support from the National Institutes of Health and Gilead Sciences, which makes some of the medications used in PrEP regimens.

AT IDWEEK 2017

Anidulafungin effectively treated invasive pediatric candidiasis in open-label trial

SAN DIEGO – The intravenous echinocandin anidulafungin effectively treated invasive candidiasis in a single-arm, multicenter, open-label trial of 47 children aged 2-17 years.

The overall global response rate of 72% resembled that from the prior adult registry study (76%), Emmanuel Roilides, MD, PhD, and his associates reported in a poster presented at an annual scientific meeting on infectious diseases.

At 6-week follow-up, two patients (4%) had relapsed, both with Candida parapsilosis, which was more resistant to treatment with anidulafungin (Eraxis) than other Candida species, the researchers reported. Treating the children with 3.0 mg/kg anidulafungin on day 1, followed by 1.5 mg/kg every 24 hours, yielded pharmacokinetics similar to those of the 200/100 mg regimen used in adults. The most common treatment-emergent adverse effects included diarrhea (23%), vomiting (23%), and fever (19%), which also reflected findings in adults, the investigators said. Five patients (10%) developed at least one severe treatment-emergent adverse event, including neutropenia, gastrointestinal hemorrhage, increased hepatic transaminases, hyponatremia, and myalgia. The study (NCT00761267) is ongoing and continues to recruit patients in 11 states in the United States and nine other countries, with final top-line results expected in 2019.

Although rates of invasive candidiasis appear to be decreasing in children overall, the population at risk is expanding, experts have noted. Relevant guidelines from the Infectious Diseases Society of America and the European Society of Clinical Microbiology and Infectious Diseases list amphotericin B, echinocandins, and azoles as treatment options, but these recommendations are extrapolated mainly from adult studies, noted Dr. Roilides, who is a pediatric infectious disease specialist at Aristotle University School of Health Sciences and Hippokration General Hospital in Thessaloniki, Greece.

To better characterize the safety and efficacy of anidulafungin in children, the researchers enrolled patients up to 17 years of age who had signs and symptoms of invasive candidiasis and Candida cultured from a normally sterile site. Patients received intravenous anidulafungin (3 mg/kg on day 1, followed by 1.5 mg/kg every 24 hours) for at least 10 days, after which they could switch to oral fluconazole. Treatment continued for at least 14 days after blood cultures were negative and signs and symptoms resolved.

At interim data cutoff in October 2016, patients had been exposed to anidulafungin for a median of 11.5 days (range, 1-28 days). Among 47 patients who received at least one dose of anidulafungin, about two-thirds were male, about 70% were white, and the average age was 8 years (standard deviation, 4.7 years). Rates of global success – a combination of clinical and microbiological response – were 82% in patients up to 5 years old and 67% in older children. Children whose baseline neutrophil count was at least 500 per mm3 had a 78% global response rate versus 50% among those with more severe neutropenia. C. parapsilosis had higher minimum inhibitory concentrations than other Candida species, and its in vitro susceptibility rate was 85%, compared with 100% for other species.

All patients experienced at least one treatment-emergent adverse effect. In addition to diarrhea, vomiting, and pyrexia, adverse events affecting more than 10% of patients included epistaxis (17%), headache (15%), and abdominal pain (13%). Half of patients switched to oral fluconazole. Four patients stopped treatment because of vomiting, generalized pruritus, or increased transaminases. A total of 15% of patients died, although no deaths were considered treatment related. The patient who stopped treatment because of pruritus later died of septic shock secondary to invasive candidiasis, despite having started treatment with fluconazole and micafungin, the investigators reported at the combined annual meetings of the Infectious Diseases Society of America, the Society for Healthcare Epidemiology of America, the HIV Medicine Association, and the Pediatric Infectious Diseases Society.

Nearly all patients had bloodstream infections, and catheters also cultured positive in more than two-thirds of cases, the researchers said. Many patients had multiple risk factors for infection such as central venous catheters, broad-spectrum antibiotic therapy, total parenteral nutrition, and chemotherapy. Cultures were most often positive for Candida albicans (38%), followed by C. parapsilosis (26%) and C. tropicalis (13%).

Pfizer makes anidulafungin and sponsored the study. Dr. Roilides disclosed research grants and advisory relationships with Pfizer, Astellas, Gilead, and Merck.
