Geriatric-Centered Interdisciplinary Care Pathway Reduces Delirium in Hospitalized Older Adults With Traumatic Injury

Study 1 Overview (Park et al)

Objective: To examine whether implementation of a geriatric trauma clinical pathway is associated with reduced rates of delirium in older adults with traumatic injury.

Design: Retrospective case-control study of electronic health records.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and did not undergo an operation. A Geriatric Trauma Care Pathway was developed by a multidisciplinary Stanford Quality Pathways team and formally launched on November 1, 2018. The clinical pathway was designed to incorporate geriatric best practices, which included order sets (eg, age-appropriate nonpharmacological interventions and pharmacological dosages), guidelines (eg, Institute for Healthcare Improvement Age-Friendly Health Systems 4M framework), automated consultations (comprehensive geriatric assessment), and escalation pathways executed by a multidisciplinary team (eg, pain, bowel, and sleep regulation). The clinical pathway began with admission to the emergency department (ED) (ie, automatic trigger of the geriatric trauma care admission order set) and continued with daily multidisciplinary team meetings during acute hospitalization and a transitional care team consultation for postdischarge follow-up or home visit.

Main outcome measures: The primary outcome was delirium as determined by a positive Confusion Assessment Method (CAM) score or a diagnosis of delirium by the clinical team. The secondary outcome was hospital length of stay (LOS). Process measures for pathway compliance (eg, achieving adequate pain control, early mobilization, advance care planning) were assessed. Outcome measures were compared between patients who underwent the Geriatric Trauma Care Pathway intervention (postimplementation group) vs patients who were treated prior to pathway implementation (baseline pre-implementation group).

Main results: Of the 859 eligible patients, 712 were included in the analysis (442 [62.1%] in the baseline pre-implementation group and 270 [37.9%] in the postimplementation group); mean (SD) age was 81.4 (9.1) years, and 394 (55.3%) were women. The injury mechanism was similar between groups, with falls being the most common cause of injury (247 [55.9%] in the baseline group vs 162 [60.0%] in the postimplementation group; P = .43). Injuries as measured by Injury Severity Score (ISS) were minor or moderate in both groups (261 [59.0%] in baseline group vs 168 [62.2%] in postimplementation group; P = .87). The adjusted odds ratio (OR) for delirium in the postimplementation group was lower compared to the baseline pre-implementation group (OR, 0.54; 95% CI, 0.37-0.80; P < .001). Measures of advance care planning in the postimplementation group improved, including more frequent goals-of-care documentation (53.7% in postimplementation group vs 16.7% in baseline group; P < .001) and a shortened time to first goals-of-care discussion upon presenting to the ED (36 hours in postimplementation group vs 50 hours in baseline group; P = .03).

Conclusion: Implementation of a multidisciplinary geriatric trauma clinical pathway for older adults with traumatic injury at a single level I trauma center was associated with reduced rates of delirium.

Study 2 Overview (Bryant et al)

Objective: To determine whether an interdisciplinary care pathway for frail trauma patients can improve in-hospital mortality, complications, and 30-day readmissions.

Design: Retrospective cohort study of frail patients.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and survived more than 24 hours; admitted to and discharged from the trauma unit; and determined to be pre-frail or frail by a geriatrician’s assessment. A Frailty Identification and Care Pathway designed to reduce delirium and complications in frail older trauma patients was developed by a multidisciplinary team and implemented in 2016. The standardized evidence-based interdisciplinary care pathway included utilization of order sets and interventions for delirium prevention, early ambulation, bowel and pain regimens, nutrition and physical therapy consults, medication management, care-goal setting, and geriatric assessments.

Main outcome measures: The main outcomes were delirium as determined by a positive CAM score, major complications as defined by the Trauma Quality Improvement Project, in-hospital mortality, and 30-day hospital readmission. Outcome measures were compared between patients who underwent Frailty Identification and Care Pathway intervention (postintervention group) vs patients who were treated prior to pathway implementation (pre-intervention group).

Main results: A total of 269 frail patients were included in the analysis (125 in pre-intervention group vs 144 in postintervention group). Patient demographic and admission characteristics were similar between the 2 groups: mean (SD) age was 83.5 (7.1) years, 60.6% were women, and median ISS was 10 (interquartile range [IQR], 9-14). The injury mechanism was similar between groups, with falls accounting for 92.8% and 86.1% of injuries in the pre-intervention and postintervention groups, respectively (P = .07). In univariate analysis, the Frailty Identification and Care Pathway intervention was associated with a significant reduction in delirium (12.5% vs 21.6%, P = .04) and 30-day hospital readmission (2.7% vs 9.6%, P = .01) compared to patients in the pre-intervention group. However, rates of major complications (28.5% vs 28.0%, P = .93) and in-hospital mortality (4.2% vs 7.2%, P = .28) were similar between the pre-intervention and postintervention groups. In multivariate logistic regression models adjusted for patient characteristics (age, sex, race, ISS), patients in the postintervention group had lower delirium (OR, 0.44; 95% CI, 0.22-0.88; P = .02) and 30-day hospital readmission (OR, 0.25; 95% CI, 0.07-0.84; P = .02) rates compared to those in the pre-intervention group.
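As a rough check on how the univariate percentages relate to the adjusted odds ratios, the unadjusted odds ratios can be recomputed from the reported proportions alone. The sketch below is illustrative and is not the authors' analysis: the function name `odds_ratio` is hypothetical, and the only inputs are the postintervention and pre-intervention percentages quoted above.

```python
def odds_ratio(p_post: float, p_pre: float) -> float:
    """Unadjusted odds ratio from two event proportions (post vs pre)."""
    odds_post = p_post / (1 - p_post)  # odds of the event after the pathway
    odds_pre = p_pre / (1 - p_pre)     # odds of the event before the pathway
    return odds_post / odds_pre


# Proportions as reported in the univariate analysis (post vs pre).
delirium_or = odds_ratio(0.125, 0.216)
readmission_or = odds_ratio(0.027, 0.096)

print(f"Unadjusted delirium OR: {delirium_or:.2f}")
print(f"Unadjusted 30-day readmission OR: {readmission_or:.2f}")
```

Under these assumptions the unadjusted values come out at about 0.52 for delirium and 0.26 for readmission. They need not match the adjusted estimates (0.44 and 0.25), because the regression models also account for age, sex, race, and ISS.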

Conclusion: Implementation of an interdisciplinary care protocol for frail geriatric trauma patients significantly decreased their risks for in-hospital delirium and 30-day hospital readmission.

Commentary

Traumatic injuries in older adults are associated with higher morbidity and mortality compared to younger patients, with falls and motor vehicle accidents accounting for a majority of these injuries. Astoundingly, up to one-third of this vulnerable population presenting to hospitals with an ISS greater than 15 may die during hospitalization.1 As a result, a large number of studies and clinical trials have focused on interventions that are designed to reduce fall risks, and hence reduce adverse consequences of traumatic injuries that may arise after falls.2 However, this emphasis on falls prevention has overshadowed a need to develop effective geriatric-centered clinical interventions that aim to improve outcomes in older adults who present to hospitals with traumatic injuries. Furthermore, frailty—a geriatric syndrome indicative of an increased state of vulnerability and predictive of adverse outcomes such as delirium—is highly prevalent in older patients with traumatic injury.3 Thus, there is an urgent need to develop novel, hospital-based, traumatic injury–targeting strategies that incorporate a thoughtful redesign of the care framework that includes evidence-based interventions for geriatric syndromes such as delirium and frailty.

The study reported by Park et al (Study 1) represents the latest effort to evaluate inpatient management strategies designed to improve outcomes in hospitalized older adults who have sustained traumatic injury. Through the implementation of a novel multidisciplinary Geriatric Trauma Care Pathway that incorporates geriatric best practices, this intervention was found to be associated with 46% lower odds of in-hospital delirium. Because of the inclusion of all age-eligible patients across all strata of traumatic injuries, rather than preselecting for those at the highest risk for poor clinical outcomes, the benefits of this intervention extend to those with minor or moderate injury severity. Furthermore, the improvement in delirium (ie, the primary outcome) is particularly meaningful given that delirium is one of the most common hospital-associated complications that increase hospital LOS, discharge to an institution, and mortality in older adults. Finally, the study’s observed reduced time to a first goals-of-care discussion and increased frequency of goals-of-care documentation after intervention should not be overlooked. The improvements in these 2 process measures are highly significant given that advance care planning, an intervention that helps to align patients’ values, goals, and treatments, is completed at substantially lower rates in older adults in the acute hospital setting.4
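The 46% figure cited for Study 1 follows directly from the adjusted odds ratio reported in the main results. Because an odds ratio compares odds rather than probabilities, the reduction is expressed on the odds scale. The same arithmetic applied to the confidence limits gives a plausible range:

```latex
\[
(1 - \mathrm{OR}) \times 100\% = (1 - 0.54) \times 100\% = 46\%
\]
\[
\text{95\% CI: } (1 - 0.80) \times 100\% = 20\%
\quad \text{to} \quad (1 - 0.37) \times 100\% = 63\%
\]
```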

Similarly, in an earlier published study, Bryant and colleagues (Study 2) also show that a geriatric-focused interdisciplinary trauma care pathway is associated with delirium risk reduction in hospitalized older trauma patients. Much like Study 1, the Frailty Identification and Care Pathway utilized in Study 2 is an evidence-based interdisciplinary care pathway that includes the use of geriatric assessments, order sets, and geriatric best practices. Moreover, its exclusive inclusion of pre-frail and frail older patients (ie, those at higher risk for poor outcomes) with moderate injury severity (median ISS of 10 [IQR, 9-14]) suggests that this type of care pathway benefits hospitalized older trauma patients, who are particularly vulnerable to adverse complications such as delirium. In addition, the successful utilization of the FRAIL questionnaire, a validated frailty screening tool, by surgical residents in the ED to initiate this care pathway demonstrates the feasibility of its use in expediting frailty screening in older patients in trauma care.

Application for Clinical Practice and System Implementation

Findings from the 2 studies discussed in this review indicate that implementation of interdisciplinary clinical care pathways predicated on evidence-based geriatric principles and best practices is a promising approach to reduce delirium in hospitalized older trauma patients. These studies have helped to lay the groundwork in outlining the roadmaps (eg, processes and infrastructures) needed to create such clinical pathways. Key elements of these roadmaps include: (1) integration of a multidisciplinary committee (eg, representation from trauma, emergency, and geriatric medicine, nursing, physical and occupational therapy, pharmacy, social work) in pathway design and implementation; (2) adaptation of evidence-based geriatric best practices (eg, the Institute for Healthcare Improvement Age-Friendly Health Systems 4M framework [medication, mentation, mobility, what matters]) to prioritize interventions and to design a pathway that incorporates these features; (3) incorporation of comprehensive geriatric assessment by interdisciplinary providers; (4) utilization of validated clinical instruments to assess physical and cognitive functions, frailty, delirium, and social determinants of health; (5) modification of electronic health record systems to encompass order sets that incorporate evidence-based, nonpharmacological and pharmacological interventions to manage symptoms (eg, delirium, pain, bowel movement, sleep, immobility, polypharmacy) essential to quality geriatric care; and (6) integration of patient and caregiver preferences via goals-of-care discussions and corresponding documentation and communication of these goals.

Additionally, these 2 studies imparted some strategies that may facilitate the implementation of interdisciplinary clinical care pathways in trauma care. Examples of such facilitators include: (1) collaboration with champions within each specialty to reinforce education and buy-in; (2) creation of automatically triggered order sets upon patient presentation to the ED that unite distinct features of clinical pathways; (3) adaptation and reorganization of existing hospital infrastructures and resources to meet the needs of clinical pathways implementation (eg, utilizing information technology resources to develop electronic health record order sets; using the quality department to develop clinical pathway guidelines and electronic outcome dashboards); and (4) development of individualized patient and caregiver education materials based on care needs (eg, principles of delirium prevention and preservation of mobility during hospitalization) to prepare and engage these stakeholders in patient care and recovery.

Practice Points

- A geriatric interdisciplinary care model can be effectively applied to the management of acute trauma in older patients.

- Interdisciplinary clinical pathways should incorporate geriatric best practices and guidelines and age-appropriate order sets to prioritize and integrate care.

—Fred Ko, MD, MS

1. Hashmi A, Ibrahim-Zada I, Rhee P, et al. Predictors of mortality in geriatric trauma patients: a systematic review and meta-analysis. J Trauma Acute Care Surg. 2014;76(3):894-901. doi:10.1097/TA.0b013e3182ab0763

2. Hopewell S, Adedire O, Copsey BJ, et al. Multifactorial and multiple component interventions for preventing falls in older people living in the community. Cochrane Database Syst Rev. 2018;7(7):CD012221. doi:10.1002/14651858.CD012221.pub2

3. Joseph B, Pandit V, Zangbar B, et al. Superiority of frailty over age in predicting outcomes among geriatric trauma patients: a prospective analysis. JAMA Surg. 2014;149(8):766-772. doi:10.1001/jamasurg.2014.296

4. Hopkins SA, Bentley A, Phillips V, Barclay S. Advance care plans and hospitalized frail older adults: a systematic review. BMJ Support Palliat Care. 2020;10(2):164-174. doi:10.1136/bmjspcare-2019-002093

Study 1 Overview (Park et al)

Objective: To examine whether implementation of a geriatric trauma clinical pathway is associated with reduced rates of delirium in older adults with traumatic injury.

Design: Retrospective case-control study of electronic health records.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and did not undergo an operation. A Geriatric Trauma Care Pathway was developed by a multidisciplinary Stanford Quality Pathways team and formally launched on November 1, 2018. The clinical pathway was designed to incorporate geriatric best practices, which included order sets (eg, age-appropriate nonpharmacological interventions and pharmacological dosages), guidelines (eg, Institute for Healthcare Improvement Age-Friendly Health systems 4M framework), automated consultations (comprehensive geriatric assessment), and escalation pathways executed by a multidisciplinary team (eg, pain, bowel, and sleep regulation). The clinical pathway began with admission to the emergency department (ED) (ie, automatic trigger of geriatric trauma care admission order set), daily multidisciplinary team meetings during acute hospitalization, and a transitional care team consultation for postdischarge follow-up or home visit.

Main outcome measures: The primary outcome was delirium as determined by a positive Confusion Assessment Method (CAM) score or a diagnosis of delirium by the clinical team. The secondary outcome was hospital length of stay (LOS). Process measures for pathway compliance (eg, achieving adequate pain control, early mobilization, advance care planning) were assessed. Outcome measures were compared between patients who underwent the Geriatric Trauma Care Pathway intervention (postimplementation group) vs patients who were treated prior to pathway implementation (baseline pre-implementation group).

Main results: Of the 859 eligible patients, 712 were included in the analysis (442 [62.1%] in the baseline pre-implementation group and 270 [37.9%] in the postimplementation group); mean (SD) age was 81.4 (9.1) years, and 394 (55.3%) were women. The injury mechanism was similar between groups, with falls being the most common cause of injury (247 [55.9%] in the baseline group vs 162 [60.0%] in the postimplementation group; P = .43). Injuries as measured by Injury Severity Score (ISS) were minor or moderate in both groups (261 [59.0%] in baseline group vs 168 [62.2%] in postimplementation group; P = .87). The adjusted odds ratio (OR) for delirium in the postimplementation group was lower compared to the baseline pre-implementation group (OR, 0.54; 95% CI, 0.37-0.80; P < .001). Measures of advance care planning in the postimplementation group improved, including more frequent goals-of-care documentation (53.7% in postimplementation group vs 16.7% in baseline group; P < .001) and a shortened time to first goals-of-care discussion upon presenting to the ED (36 hours in postimplementation group vs 50 hours in baseline group; P = .03).

Conclusion: Implementation of a multidisciplinary geriatric trauma clinical pathway for older adults with traumatic injury at a single level I trauma center was associated with reduced rates of delirium.

Study 2 Overview (Bryant et al)

Objective: To determine whether an interdisciplinary care pathway for frail trauma patients can improve in-hospital mortality, complications, and 30-day readmissions.

Design: Retrospective cohort study of frail patients.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and survived more than 24 hours; admitted to and discharged from the trauma unit; and determined to be pre-frail or frail by a geriatrician’s assessment. A Frailty Identification and Care Pathway designed to reduce delirium and complications in frail older trauma patients was developed by a multidisciplinary team and implemented in 2016. The standardized evidence-based interdisciplinary care pathway included utilization of order sets and interventions for delirium prevention, early ambulation, bowel and pain regimens, nutrition and physical therapy consults, medication management, care-goal setting, and geriatric assessments.

Main outcome measures: The main outcomes were delirium as determined by a positive CAM score, major complications as defined by the Trauma Quality Improvement Project, in-hospital mortality, and 30-day hospital readmission. Outcome measures were compared between patients who underwent Frailty Identification and Care Pathway intervention (postintervention group) vs patients who were treated prior to pathway implementation (pre-intervention group).

Main results: A total of 269 frail patients were included in the analysis (125 in pre-intervention group vs 144 in postintervention group). Patient demographic and admission characteristics were similar between the 2 groups: mean age was 83.5 (7.1) years, 60.6% were women, and median ISS was 10 (interquartile range [IQR], 9-14). The injury mechanism was similar between groups, with falls accounting for 92.8% and 86.1% of injuries in the pre-intervention and postintervention groups, respectively (P = .07). In univariate analysis, the Frailty Identification and Care Pathway intervention was associated with a significant reduction in delirium (12.5% vs 21.6%, P = .04) and 30-day hospital readmission (2.7% vs 9.6%, P = .01) compared to patients in the pre-intervention group. However, rates of major complications (28.5% vs 28.0%, P = 0.93) and in-hospital mortality (4.2% vs 7.2%, P = .28) were similar between the pre-intervention and postintervention groups. In multivariate logistic regression models adjusted for patient characteristics (age, sex, race, ISS), patients in the postintervention group had lower delirium (OR, 0.44; 95% CI, 0.22-0.88; P = .02) and 30-day hospital readmission (OR, 0.25; 95% CI, 0.07-0.84; P = .02) rates compared to those in the pre-intervention group.

Conclusion: Implementation of an interdisciplinary care protocol for frail geriatric trauma patients significantly decreased their risks for in-hospital delirium and 30-day hospital readmission.

Commentary

Traumatic injuries in older adults are associated with higher morbidity and mortality compared to younger patients, with falls and motor vehicle accidents accounting for a majority of these injuries. Astoundingly, up to one-third of this vulnerable population presenting to hospitals with an ISS greater than 15 may die during hospitalization.1 As a result, a large number of studies and clinical trials have focused on interventions that are designed to reduce fall risks, and hence reduce adverse consequences of traumatic injuries that may arise after falls.2 However, this emphasis on falls prevention has overshadowed a need to develop effective geriatric-centered clinical interventions that aim to improve outcomes in older adults who present to hospitals with traumatic injuries. Furthermore, frailty—a geriatric syndrome indicative of an increased state of vulnerability and predictive of adverse outcomes such as delirium—is highly prevalent in older patients with traumatic injury.3 Thus, there is an urgent need to develop novel, hospital-based, traumatic injury–targeting strategies that incorporate a thoughtful redesign of the care framework that includes evidence-based interventions for geriatric syndromes such as delirium and frailty.

The study reported by Park et al (Study 1) represents the latest effort to evaluate inpatient management strategies designed to improve outcomes in hospitalized older adults who have sustained traumatic injury. Through the implementation of a novel multidisciplinary Geriatric Trauma Care Pathway that incorporates geriatric best practices, this intervention was found to be associated with a 46% lower risk of in-hospital delirium. Because of the inclusion of all age-eligible patients across all strata of traumatic injuries, rather than preselecting for those at the highest risk for poor clinical outcomes, the benefits of this intervention extend to those with minor or moderate injury severity. Furthermore, the improvement in delirium (ie, the primary outcome) is particularly meaningful given that delirium is one of the most common hospital-associated complications that increase hospital LOS, discharge to an institution, and mortality in older adults. Finally, the study’s observed reduced time to a first goals-of-care discussion and increased frequency of goals-of-care documentation after intervention should not be overlooked. The improvements in these 2 process measures are highly significant given that advanced care planning, an intervention that helps to align patients’ values, goals, and treatments, is completed at substantially lower rates in older adults in the acute hospital setting.4

Similarly, in an earlier published study, Bryant and colleagues (Study 2) also show that a geriatric-focused interdisciplinary trauma care pathway is associated with delirium risk reduction in hospitalized older trauma patients. Much like Study 1, the Frailty Identification and Care Pathway utilized in Study 2 is an evidence-based interdisciplinary care pathway that includes the use of geriatric assessments, order sets, and geriatric best practices. Moreover, its exclusive inclusion of pre-frail and frail older patients (ie, those at higher risk for poor outcomes) with moderate injury severity (median ISS of 10 [IQR, 9-14]) suggests that this type of care pathway benefits hospitalized older trauma patients, who are particularly vulnerable to adverse complications such as delirium. Moreover, the successful utilization of the FRAIL questionnaire, a validated frailty screening tool, by surgical residents in the ED to initiate this care pathway demonstrates the feasibility of its use in expediting frailty screening in older patients in trauma care.

Application for Clinical Practice and System Implementation

Findings from the 2 studies discussed in this review indicate that implementation of interdisciplinary clinical care pathways predicated on evidence-based geriatric principles and best practices is a promising approach to reduce delirium in hospitalized older trauma patients. These studies have helped to lay the groundwork in outlining the roadmaps (eg, processes and infrastructures) needed to create such clinical pathways. These key elements include: (1) integration of a multidisciplinary committee (eg, representation from trauma, emergency, and geriatric medicine, nursing, physical and occupational therapy, pharmacy, social work) in pathway design and implementation; (2) adaption of evidence-based geriatric best practices (eg, the Institute for Healthcare Improvement Age-Friendly Health System 4M framework [medication, mentation, mobility, what matters]) to prioritize interventions and to design a pathway that incorporates these features; (3) incorporation of comprehensive geriatric assessment by interdisciplinary providers; (4) utilization of validated clinical instruments to assess physical and cognitive functions, frailty, delirium, and social determinants of health; (5) modification of electronic health record systems to encompass order sets that incorporate evidence-based, nonpharmacological and pharmacological interventions to manage symptoms (eg, delirium, pain, bowel movement, sleep, immobility, polypharmacy) essential to quality geriatric care; and (6) integration of patient and caregiver preferences via goals-of-care discussions and corresponding documentation and communication of these goals.

Additionally, these 2 studies imparted some strategies that may facilitate the implementation of interdisciplinary clinical care pathways in trauma care. Examples of such facilitators include: (1) collaboration with champions within each specialty to reinforce education and buy-in; (2) creation of automatically triggered order sets upon patient presentation to the ED that unites distinct features of clinical pathways; (3) adaption and reorganization of existing hospital infrastructures and resources to meet the needs of clinical pathways implementation (eg, utilizing information technology resources to develop electronic health record order sets; using quality department to develop clinical pathway guidelines and electronic outcome dashboards); and (4) development of individualized patient and caregiver education materials based on care needs (eg, principles of delirium prevention and preservation of mobility during hospitalization) to prepare and engage these stakeholders in patient care and recovery.

Practice Points

- A geriatric interdisciplinary care model can be effectively applied to the management of acute trauma in older patients.

- Interdisciplinary clinical pathways should incorporate geriatric best practices and guidelines and age-appropriate order sets to prioritize and integrate care.

—Fred Ko, MD, MS

Study 1 Overview (Park et al)

Objective: To examine whether implementation of a geriatric trauma clinical pathway is associated with reduced rates of delirium in older adults with traumatic injury.

Design: Retrospective case-control study of electronic health records.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and did not undergo an operation. A Geriatric Trauma Care Pathway was developed by a multidisciplinary Stanford Quality Pathways team and formally launched on November 1, 2018. The clinical pathway was designed to incorporate geriatric best practices, which included order sets (eg, age-appropriate nonpharmacological interventions and pharmacological dosages), guidelines (eg, Institute for Healthcare Improvement Age-Friendly Health systems 4M framework), automated consultations (comprehensive geriatric assessment), and escalation pathways executed by a multidisciplinary team (eg, pain, bowel, and sleep regulation). The clinical pathway began with admission to the emergency department (ED) (ie, automatic trigger of geriatric trauma care admission order set), daily multidisciplinary team meetings during acute hospitalization, and a transitional care team consultation for postdischarge follow-up or home visit.

Main outcome measures: The primary outcome was delirium as determined by a positive Confusion Assessment Method (CAM) score or a diagnosis of delirium by the clinical team. The secondary outcome was hospital length of stay (LOS). Process measures for pathway compliance (eg, achieving adequate pain control, early mobilization, advance care planning) were assessed. Outcome measures were compared between patients who underwent the Geriatric Trauma Care Pathway intervention (postimplementation group) vs patients who were treated prior to pathway implementation (baseline pre-implementation group).

Main results: Of the 859 eligible patients, 712 were included in the analysis (442 [62.1%] in the baseline pre-implementation group and 270 [37.9%] in the postimplementation group); mean (SD) age was 81.4 (9.1) years, and 394 (55.3%) were women. The injury mechanism was similar between groups, with falls being the most common cause of injury (247 [55.9%] in the baseline group vs 162 [60.0%] in the postimplementation group; P = .43). Injuries as measured by Injury Severity Score (ISS) were minor or moderate in both groups (261 [59.0%] in baseline group vs 168 [62.2%] in postimplementation group; P = .87). The adjusted odds ratio (OR) for delirium in the postimplementation group was lower compared to the baseline pre-implementation group (OR, 0.54; 95% CI, 0.37-0.80; P < .001). Measures of advance care planning in the postimplementation group improved, including more frequent goals-of-care documentation (53.7% in postimplementation group vs 16.7% in baseline group; P < .001) and a shortened time to first goals-of-care discussion upon presenting to the ED (36 hours in postimplementation group vs 50 hours in baseline group; P = .03).

Conclusion: Implementation of a multidisciplinary geriatric trauma clinical pathway for older adults with traumatic injury at a single level I trauma center was associated with reduced rates of delirium.

Study 2 Overview (Bryant et al)

Objective: To determine whether an interdisciplinary care pathway for frail trauma patients can improve in-hospital mortality, complications, and 30-day readmissions.

Design: Retrospective cohort study of frail patients.

Setting and participants: Eligible patients were persons aged 65 years or older who were admitted to the trauma service and survived more than 24 hours; admitted to and discharged from the trauma unit; and determined to be pre-frail or frail by a geriatrician’s assessment. A Frailty Identification and Care Pathway designed to reduce delirium and complications in frail older trauma patients was developed by a multidisciplinary team and implemented in 2016. The standardized evidence-based interdisciplinary care pathway included utilization of order sets and interventions for delirium prevention, early ambulation, bowel and pain regimens, nutrition and physical therapy consults, medication management, care-goal setting, and geriatric assessments.

Main outcome measures: The main outcomes were delirium as determined by a positive CAM score, major complications as defined by the Trauma Quality Improvement Project, in-hospital mortality, and 30-day hospital readmission. Outcome measures were compared between patients who underwent Frailty Identification and Care Pathway intervention (postintervention group) vs patients who were treated prior to pathway implementation (pre-intervention group).

Main results: A total of 269 frail patients were included in the analysis (125 in pre-intervention group vs 144 in postintervention group). Patient demographic and admission characteristics were similar between the 2 groups: mean age was 83.5 (7.1) years, 60.6% were women, and median ISS was 10 (interquartile range [IQR], 9-14). The injury mechanism was similar between groups, with falls accounting for 92.8% and 86.1% of injuries in the pre-intervention and postintervention groups, respectively (P = .07). In univariate analysis, the Frailty Identification and Care Pathway intervention was associated with a significant reduction in delirium (12.5% vs 21.6%, P = .04) and 30-day hospital readmission (2.7% vs 9.6%, P = .01) compared to patients in the pre-intervention group. However, rates of major complications (28.5% vs 28.0%, P = 0.93) and in-hospital mortality (4.2% vs 7.2%, P = .28) were similar between the pre-intervention and postintervention groups. In multivariate logistic regression models adjusted for patient characteristics (age, sex, race, ISS), patients in the postintervention group had lower delirium (OR, 0.44; 95% CI, 0.22-0.88; P = .02) and 30-day hospital readmission (OR, 0.25; 95% CI, 0.07-0.84; P = .02) rates compared to those in the pre-intervention group.

Conclusion: Implementation of an interdisciplinary care protocol for frail geriatric trauma patients significantly decreased their risks for in-hospital delirium and 30-day hospital readmission.

Commentary

Traumatic injuries in older adults are associated with higher morbidity and mortality than in younger patients, with falls and motor vehicle accidents accounting for a majority of these injuries. Astoundingly, up to one-third of this vulnerable population presenting to hospitals with an ISS greater than 15 may die during hospitalization.1 As a result, a large number of studies and clinical trials have focused on interventions designed to reduce fall risk, and hence the adverse consequences of traumatic injuries that follow falls.2 However, this emphasis on falls prevention has overshadowed the need to develop effective geriatric-centered clinical interventions that improve outcomes in older adults who present to hospitals with traumatic injuries. Furthermore, frailty, a geriatric syndrome indicative of an increased state of vulnerability and predictive of adverse outcomes such as delirium, is highly prevalent in older patients with traumatic injury.3 Thus, there is an urgent need for novel hospital-based strategies that redesign the care framework for traumatic injury around evidence-based interventions for geriatric syndromes such as delirium and frailty.

The study reported by Park et al (Study 1) represents the latest effort to evaluate inpatient management strategies designed to improve outcomes in hospitalized older adults who have sustained traumatic injury. Implementation of a novel multidisciplinary Geriatric Trauma Care Pathway that incorporates geriatric best practices was associated with a 46% lower risk of in-hospital delirium. Because the study included all age-eligible patients across all strata of traumatic injury, rather than preselecting those at the highest risk for poor clinical outcomes, the benefits of this intervention appear to extend to those with minor or moderate injury severity. Furthermore, the improvement in delirium (ie, the primary outcome) is particularly meaningful given that delirium is one of the most common hospital-associated complications and increases hospital LOS, discharge to an institution, and mortality in older adults. Finally, the study’s observed reduction in time to a first goals-of-care discussion and increase in the frequency of goals-of-care documentation after the intervention should not be overlooked. The improvements in these 2 process measures are particularly important given that advance care planning, an intervention that helps to align patients’ values, goals, and treatments, is completed at substantially lower rates in older adults in the acute hospital setting.4

Similarly, in an earlier published study, Bryant and colleagues (Study 2) showed that a geriatric-focused interdisciplinary trauma care pathway is associated with delirium risk reduction in hospitalized older trauma patients. Much like the pathway in Study 1, the Frailty Identification and Care Pathway utilized in Study 2 is an evidence-based interdisciplinary care pathway that includes the use of geriatric assessments, order sets, and geriatric best practices. Moreover, its restriction to pre-frail and frail older patients (ie, those at higher risk for poor outcomes) with moderate injury severity (median ISS of 10 [IQR, 9-14]) suggests that this type of care pathway benefits the hospitalized older trauma patients who are particularly vulnerable to complications such as delirium. In addition, the successful use of the FRAIL questionnaire, a validated frailty screening tool, by surgical residents in the ED to initiate this care pathway demonstrates that frailty screening of older patients can feasibly be expedited in trauma care.

Application for Clinical Practice and System Implementation

Findings from the 2 studies discussed in this review indicate that implementation of interdisciplinary clinical care pathways predicated on evidence-based geriatric principles and best practices is a promising approach to reduce delirium in hospitalized older trauma patients. These studies have helped to lay the groundwork by outlining the processes and infrastructure needed to create such clinical pathways. Key elements include: (1) integration of a multidisciplinary committee (eg, representation from trauma, emergency, and geriatric medicine, nursing, physical and occupational therapy, pharmacy, social work) in pathway design and implementation; (2) adaptation of evidence-based geriatric best practices (eg, the Institute for Healthcare Improvement Age-Friendly Health Systems 4Ms framework [medication, mentation, mobility, what matters]) to prioritize interventions and to design a pathway that incorporates these features; (3) incorporation of comprehensive geriatric assessment by interdisciplinary providers; (4) utilization of validated clinical instruments to assess physical and cognitive functions, frailty, delirium, and social determinants of health; (5) modification of electronic health record systems to encompass order sets that incorporate evidence-based, nonpharmacological and pharmacological interventions to manage symptoms (eg, delirium, pain, bowel movement, sleep, immobility, polypharmacy) essential to quality geriatric care; and (6) integration of patient and caregiver preferences via goals-of-care discussions and corresponding documentation and communication of these goals.

Additionally, these 2 studies identified strategies that may facilitate the implementation of interdisciplinary clinical care pathways in trauma care. Examples of such facilitators include: (1) collaboration with champions within each specialty to reinforce education and buy-in; (2) creation of order sets that are automatically triggered upon patient presentation to the ED and that unite distinct features of clinical pathways; (3) adaptation and reorganization of existing hospital infrastructure and resources to meet the needs of clinical pathway implementation (eg, utilizing information technology resources to develop electronic health record order sets; using the quality department to develop clinical pathway guidelines and electronic outcome dashboards); and (4) development of individualized patient and caregiver education materials based on care needs (eg, principles of delirium prevention and preservation of mobility during hospitalization) to prepare and engage these stakeholders in patient care and recovery.

Practice Points

- A geriatric interdisciplinary care model can be effectively applied to the management of acute trauma in older patients.

- Interdisciplinary clinical pathways should incorporate geriatric best practices and guidelines and age-appropriate order sets to prioritize and integrate care.

—Fred Ko, MD, MS

1. Hashmi A, Ibrahim-Zada I, Rhee P, et al. Predictors of mortality in geriatric trauma patients: a systematic review and meta-analysis. J Trauma Acute Care Surg. 2014;76(3):894-901. doi:10.1097/TA.0b013e3182ab0763

2. Hopewell S, Adedire O, Copsey BJ, et al. Multifactorial and multiple component interventions for preventing falls in older people living in the community. Cochrane Database Syst Rev. 2018;7(7):CD012221. doi:10.1002/14651858.CD012221.pub2

3. Joseph B, Pandit V, Zangbar B, et al. Superiority of frailty over age in predicting outcomes among geriatric trauma patients: a prospective analysis. JAMA Surg. 2014;149(8):766-772. doi:10.1001/jamasurg.2014.296

4. Hopkins SA, Bentley A, Phillips V, Barclay S. Advance care plans and hospitalized frail older adults: a systematic review. BMJ Support Palliat Care. 2020;10(2):164-174. doi:10.1136/bmjspcare-2019-002093

Hospital-acquired pneumonia is killing patients, yet there is a simple way to stop it

Four years ago, when Dr. Karen Giuliano went to a Boston hospital for hip replacement surgery, she was given a pale-pink bucket of toiletries issued to patients in many hospitals. Inside were tissues, bar soap, deodorant, toothpaste, and, without a doubt, the worst toothbrush she’d ever seen.

“I couldn’t believe it. I got a toothbrush with no bristles,” she said. “It must have not gone through the bristle machine. It was just a stick.”

To most patients, a useless hospital toothbrush would be a mild inconvenience. But to Dr. Giuliano, a nursing professor at the University of Massachusetts, Amherst, it was a reminder of a pervasive “blind spot” in U.S. hospitals: the stunning consequences of unbrushed teeth.

Hospital patients not getting their teeth brushed, or not brushing their teeth themselves, is believed to be a leading cause of hundreds of thousands of cases of pneumonia a year in patients who have not been put on a ventilator. Pneumonia is among the most common infections that occur in health care facilities, and a majority of cases are nonventilator hospital-acquired pneumonia, or NVHAP, which kills up to 30% of those infected, Dr. Giuliano and other experts said.

But unlike many infections that strike within hospitals, the federal government doesn’t require hospitals to report cases of NVHAP. As a result, few hospitals understand the origin of the illness, track its occurrence, or actively work to prevent it, the experts said.

Many cases of NVHAP could be avoided if hospital staff more consistently brushed patients’ teeth, according to a growing body of peer-reviewed research papers. Instead, many hospitals often skip teeth brushing to prioritize other tasks and provide only cheap, ineffective toothbrushes, often unaware of the consequences, said Dr. Dian Baker, a Sacramento (Calif.) State nursing professor who has spent more than a decade studying NVHAP.

“I’ll tell you that today the vast majority of the tens of thousands of nurses in hospitals have no idea that pneumonia comes from germs in the mouth,” Dr. Baker said.

Pneumonia occurs when germs trigger an infection in the lungs. Although NVHAP accounts for most of the cases that occur in hospitals, it historically has not received the same attention as pneumonia tied to ventilators, which is easier to identify and study because it occurs among a narrow subset of patients.

NVHAP, a risk for virtually all hospital patients, is often caused by bacteria from the mouth that gather in the scummy biofilm on unbrushed teeth and are aspirated into the lungs. Patients face a higher risk if they lie flat or remain immobile for long periods, so NVHAP can also be prevented by elevating their heads and getting them out of bed more often.

According to the National Organization for NV-HAP Prevention, which was founded in 2020, this pneumonia infects about 1 in every 100 hospital patients and kills 15%-30% of them. For those who survive, the illness often extends their hospital stay by up to 15 days and makes it much more likely they will be readmitted within a month or transferred to an intensive care unit.

John McCleary, 83, of Millinocket, Maine, contracted a likely case of NVHAP in 2008 after he fractured his ankle in a fall and spent 12 days in rehabilitation at a hospital, said his daughter, Kathy Day, a retired nurse and advocate with the Patient Safety Action Network.

Mr. McCleary recovered from the fracture but not from pneumonia. Two days after he returned home, the infection in his lungs caused him to be rushed back to the hospital, where he went into sepsis and spent weeks in treatment before moving to an isolation unit in a nursing home.

He died weeks later, emaciated, largely deaf, unable to eat, and often “too weak to get water through a straw,” his daughter said. After contracting pneumonia, he never walked again.

“It was an astounding assault on his body, from him being here visiting me the week before his fall, to his death just a few months later,” Ms. Day said. “And the whole thing was avoidable.”

While experts describe NVHAP as a largely ignored threat, that appears to be changing.

Last year, a group of researchers – including Dr. Giuliano and Dr. Baker, plus officials from the Centers for Disease Control and Prevention, the Veterans Health Administration, and the Joint Commission – published a “call-to-action” research paper hoping to launch “a national health care conversation about NVHAP prevention.”

The Joint Commission, a nonprofit organization whose accreditation can make or break hospitals, is considering broadening the infection control standards to include more ailments, including NVHAP, said Sylvia Garcia-Houchins, its director of infection prevention and control.

Separately, ECRI, a nonprofit focused on health care safety, this year pinpointed NVHAP as one of its top patient safety concerns.

James Davis, an ECRI infection expert, said the prevalence of NVHAP, while already alarming, is likely “underestimated” and probably worsened as hospitals swelled with patients during the coronavirus pandemic.

“We only know what’s reported,” Mr. Davis said. “Could this be the tip of the iceberg? I would say, in my opinion, probably.”

To better measure the condition, some researchers call for a standardized surveillance definition of NVHAP, which could in time open the door for the federal government to mandate reporting of cases or incentivize prevention. With increasing urgency, researchers are pushing for hospitals not to wait for the federal government to act against NVHAP.

Dr. Baker said she has spoken with hundreds of hospitals about how to prevent NVHAP, but thousands more have yet to take up the cause.

“We are not asking for some big, $300,000 piece of equipment,” Dr. Baker said. “The two things that show the best evidence of preventing this harm are things that should be happening in standard care anyway – brushing teeth and getting patients mobilized.”

That evidence comes from a smattering of studies that show those two strategies can lead to sharp reductions in infection rates.

In California, a study at 21 Kaiser Permanente hospitals used a reprioritization of oral care and getting patients out of bed to reduce rates of hospital-acquired pneumonia by around 70%. At Sutter Medical Center in Sacramento, better oral care reduced NVHAP cases by a yearly average of 35%.

At Orlando Regional Medical Center in Florida, a medical unit and a surgical unit where patients received enhanced oral care reduced NVHAP rates by 85% and 56%, respectively, when compared with similar units that received normal care. A similar study is underway at two hospitals in Illinois.

And the most compelling results come from a veterans’ hospital in Salem, Va., where a 2016 oral care pilot program reduced rates of NVHAP by 92% – saving an estimated 13 lives in just 19 months. The program, the HAPPEN Initiative, has been expanded across the Veterans Health Administration, and experts say it could serve as a model for all U.S. hospitals.

Dr. Michelle Lucatorto, a nursing official who leads HAPPEN, said the program trains nurses to most effectively brush patients’ teeth and educates patients and families on the link between oral care and preventing NVHAP. While teeth brushing may not seem to require training, Dr. Lucatorto made comparisons to how the coronavirus revealed many Americans were doing a lackluster job of another routine hygienic practice: washing their hands.

“Sometimes we are searching for the most complicated intervention,” she said. “We are always looking for that new bypass surgery, or some new technical equipment. And sometimes I think we fail to look at the simple things we can do in our practice to save people’s lives.”

KHN (Kaiser Health News) is a national newsroom that produces in-depth journalism about health issues. Together with Policy Analysis and Polling, KHN is one of the three major operating programs at KFF (Kaiser Family Foundation). KFF is an endowed nonprofit organization providing information on health issues to the nation.

Effect of Pharmacist Interventions on Hospital Readmissions for Home-Based Primary Care Veterans

Following hospital discharge, patients are often in a vulnerable state due to new medical diagnoses, changes in medications, lack of understanding, and concerns about medical costs. In addition, the discharge process is complex and encompasses decisions regarding the postdischarge site of care, conveying patient instructions, and obtaining supplies and medications. Several disciplines are involved in the transitions of care process, and all are essential for ensuring a successful transition and reducing the risk of hospital readmissions. Pharmacists play an integral role in the process.

When pharmacists are provided the opportunity to make therapeutic interventions, medication errors and hospital readmissions decrease and quality of life improves.1 Studies have shown that many older patients return home from the hospital with a limited understanding of their discharge instructions and oftentimes are unable to recall their discharge diagnoses and treatment plan, leaving opportunities for error when patients transition from one level of care to another.2,3 Additionally, high-quality transitional care is especially important for older adults with multiple comorbidities and complex therapeutic regimens as well as for their families and caregivers.4 To prevent hospital readmissions, pharmacists and other health care professionals (HCPs) should work diligently to prevent gaps in care as patients transition between settings. Common factors that lead to increased readmissions include premature discharge, inadequate follow-up, therapeutic errors, and medication-related problems. Furthermore, unintended hospital readmissions are common within the first 30 days following hospital discharge and lead to increased health care costs.2 For these reasons, many health care institutions have developed comprehensive models to improve the discharge process, decrease hospital readmissions, and reduce incidence of adverse events in general medical patients and high-risk populations.5

A study evaluating 693 hospital discharges found that 27.6% of patients were recommended for outpatient workups; however, only 9% were actually completed.6 Due to lack of communication regarding discharge summaries, primary care practitioners (PCPs) were unaware of the need for outpatient workups; thus, these patients were lost to follow-up, and appropriate care was not received. Future studies should focus on interventions to improve the quality and dissemination of discharge information to PCPs.6 Fosnight and colleagues assessed a new transitions process focusing on the role of pharmacists. They evaluated medication reconciliations performed and discussed medication adherence barriers, medication recommendations, and time spent performing the interventions.7 After patients received a pharmacy intervention, Fosnight and colleagues reported that readmission rates decreased from 21.0% to 15.3% and mean length of stay decreased from 5.3 to 4.4 days. They also observed greater improvements in patients who received the full pharmacy intervention vs those receiving only parts of the intervention. This study concluded that adding a comprehensive pharmacy intervention to transitions of care resulted in an average of nearly 10 medication recommendations per patient, improved length of stay, and reduced readmission rates. After a review of similar studies, we concluded that a comprehensive discharge model is imperative to improve patient outcomes, along with HCP monitoring of the process to ensure appropriate follow-up.8

At Michael E. DeBakey Veterans Affairs Medical Center (MEDVAMC) in Houston, Texas, 30-day readmissions data were reviewed for veterans 6 months before and 12 months after enrollment in the Home-Based Primary Care (HBPC) service. HBPC is an in-home health care service provided to home-bound veterans with complex health care needs or when routine clinic-based care is not feasible. HBPC programs may differ among various US Department of Veterans Affairs (VA) medical centers. Currently, there are 9 HBPC teams at MEDVAMC, and nearly 540 veterans are enrolled in the program. HBPC teams typically consist of PCPs, pharmacists, nurses, psychologists, occupational/physical therapists, social workers, medical support assistants, and dietitians.

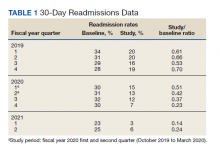

Readmissions data are reviewed quarterly by fiscal year (FY) (Table 1). In FY 2019 quarter (Q) 2, the readmission rate before HBPC enrollment was 31% and decreased to 20% after enrollment. In FY 2019 Q3, the readmission rate was 29% before enrollment and decreased to 16% afterward. In FY 2019 Q4, the readmission rate before HBPC enrollment was 28% and decreased to 19% afterward. Although readmission rates were consistently lower after enrollment than before, the postenrollment rate rose slightly from 16% in Q3 to 19% in Q4, so improvements were needed to decrease these rates further and to ensure readmissions did not continue to rise. After reviewing these data, the HBPC service implemented a streamlined hospital discharge process to lower readmission rates and improve patient outcomes.
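The quarterly figures above can be read either as absolute reductions (percentage points) or as relative reductions. The following is a minimal Python sketch of that arithmetic, using only the rates reported in the text; the variable and function names are illustrative and are not part of the project.

```python
# Readmission rates (%) before and after HBPC enrollment, as reported in the text.
rates = {
    "FY2019 Q2": (31, 20),
    "FY2019 Q3": (29, 16),
    "FY2019 Q4": (28, 19),
}

def reductions(before, after):
    """Return (absolute reduction in percentage points, relative reduction in %)."""
    absolute = before - after
    relative = round(100 * absolute / before, 1)
    return absolute, relative

# Hypothetical summary structure, keyed by quarter.
summary = {quarter: reductions(b, a) for quarter, (b, a) in rates.items()}

for quarter, (abs_pp, rel_pct) in summary.items():
    print(f"{quarter}: {abs_pp} percentage points ({rel_pct}% relative)")
```

Distinguishing the two measures matters when comparing quarters: Q3 shows the largest drop on both scales, whereas Q4 shows the smallest.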

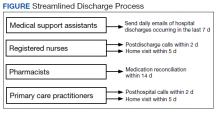

HBPC at MEDVAMC incorporates a team-based approach, and the new streamlined discharge process implemented in 2019 highlights the role of each team member (Figure). Medical support assistants send daily emails of hospital discharges occurring in the last 7 days. Registered nurses are responsible for postdischarge calls within 2 days and home visits within 5 days. Pharmacists perform medication reconciliation within 14 days of discharge, review and/or educate on new medications, and change medications. The PCP is responsible for posthospital calls within 2 days and conducts a home visit within 5 days. Because HBPC programs vary among VA medical centers, the streamlined discharge process discussed may be applicable only to MEDVAMC. The primary objective of this quality improvement project was to identify specific pharmacist interventions to improve the HBPC discharge process and reduce hospital readmission rates.
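The follow-up windows described above can be expressed as due dates relative to the discharge date. The sketch below is illustrative only: the role and task labels are hypothetical, and it assumes the windows are counted in calendar days, which the text does not specify.

```python
from datetime import date, timedelta

# Follow-up windows in days after discharge, taken from the process described above.
# Keys are hypothetical (role, task) labels; calendar days are an assumption.
FOLLOW_UP_WINDOWS = {
    ("registered nurse", "postdischarge call"): 2,
    ("registered nurse", "home visit"): 5,
    ("pharmacist", "medication reconciliation"): 14,
    ("PCP", "posthospital call"): 2,
    ("PCP", "home visit"): 5,
}

def follow_up_due_dates(discharge_date):
    """Map each (role, task) pair to the latest date it should be completed."""
    return {
        key: discharge_date + timedelta(days=days)
        for key, days in FOLLOW_UP_WINDOWS.items()
    }

# Example with an arbitrary discharge date inside the study period.
due = follow_up_due_dates(date(2019, 10, 1))
for (role, task), deadline in due.items():
    print(f"{role}: {task} due by {deadline.isoformat()}")
```

A lookup of this kind could support the daily discharge emails by flagging tasks that are approaching their window.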

Methods

We conducted a Plan-Do-Study-Act quality improvement project. The first step was to conduct a review of veterans enrolled in HBPC at MEDVAMC.9 Patients included were enrolled in HBPC at MEDVAMC from October 2019 to March 2020 (FY 2020 Q1 and Q2). The Computerized Patient Record System was used to access the patients’ electronic health records. Patient information collected included race, age, sex, admission diagnosis, date of discharge, HBPC pharmacist name, PCP notification on the discharge summary, and 30-day readmission rates. Unplanned return to the hospital within 30 days, which was counted as a readmission, was defined as any admission for acute clinical events that required urgent hospital management.10

Next, we identified specific pharmacist interventions, including medication reconciliation completed by an HBPC pharmacist postdischarge; mean time to contact patients postdischarge; correct medications and supplies on discharge; incorrect dose; incorrect medication frequency or route of administration; therapeutic duplications; discontinuation of medications; additional drug therapy recommendations; laboratory test recommendations; maintenance medications not restarted or omitted; new medication education; and medication or formulation changes.

In the third step, we reviewed discharge summaries and clinical pharmacy notes to collect pharmacist intervention data. These data were analyzed to develop a standardized discharge process. Descriptive statistics were used to represent the results of the study.

Results

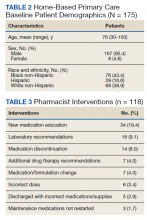

Medication reconciliation was completed postdischarge by an HBPC pharmacist in 118 of 175 study patients (67.4%). The mean age of patients was 76 years, and about 95% were male (Table 2). Admission diagnoses varied widely, but sepsis, chronic obstructive pulmonary disease, and chronic kidney disease were the most common. The PCP was notified on the discharge note for 68 (38.9%) patients. The mean time for HBPC pharmacists to contact patients postdischarge was about 3 days, well within the 14 days allowed in the streamlined discharge process.

Pharmacists made the following interventions during medication reconciliation. New medication education was provided for 34 (19.4%) patients and was the most common intervention completed by HBPC pharmacists. Laboratory tests were recommended for 16 (9.1%) patients, medications were discontinued in 14 (8.0%) patients, and additional drug therapy recommendations were made for 7 (4.0%) patients. Medication or formulation changes were completed in 7 (4.0%) patients, incorrect doses were identified in 6 (3.4%) patients, 5 (2.9%) patients were not discharged with the correct medications or supplies, maintenance medications were not restarted in 3 (1.7%) patients, and no therapeutic duplications were identified. In total, 92 interventions were made across the 118 medication reconciliations completed, or about 78% (Table 3).
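The intervention counts above can be cross-checked with a short tally. This is a minimal Python sketch using only the counts reported in the text; the category labels are paraphrased, and the 175-patient denominator is the one that reproduces the reported percentages.

```python
# Intervention counts as reported in the text (labels paraphrased for illustration).
interventions = {
    "new medication education": 34,
    "laboratory test recommendations": 16,
    "medications discontinued": 14,
    "additional drug therapy recommendations": 7,
    "medication or formulation changes": 7,
    "incorrect dose": 6,
    "incorrect medications or supplies on discharge": 5,
    "maintenance medications not restarted": 3,
    "therapeutic duplications": 0,
}

STUDY_PATIENTS = 175    # all study patients
RECONCILIATIONS = 118   # reconciliations completed by an HBPC pharmacist

total = sum(interventions.values())

# Percentage of all study patients for each intervention type.
pct_of_patients = {
    name: round(100 * count / STUDY_PATIENTS, 1)
    for name, count in interventions.items()
}

# Total interventions relative to completed reconciliations.
share_of_reconciliations = 100 * total / RECONCILIATIONS

print(f"Total interventions: {total}")
print(f"Relative to reconciliations: {share_of_reconciliations:.2f}%")
```

The individual counts sum to 92, which matches the reported total, and 92 of 118 works out to roughly 78%.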

Process Improvement

As this was a new streamlined discharge process, it was important to assess the progress of the pharmacist role over time. We evaluated the number of medication reconciliations completed by quarter to determine whether more interventions were completed as the streamlined discharge process was being fully implemented. In FY 2020 Q1, medication reconciliation was completed by an HBPC pharmacist at a rate of 35%, and in FY 2020 Q2, at a rate of 65%.

In addition to assessing interventions completed by an HBPC pharmacist, we noted how many medication reconciliations were completed by an inpatient pharmacist, as this may have affected the results of this study. Of the 175 patients in this study, 49 (28%) received a medication reconciliation by an inpatient clinical pharmacy specialist before discharge. Last, readmissions data for the study period indicated that outcomes were improving under the streamlined discharge process. In FY 2020 Q1, the readmissions rate was 30% before HBPC enrollment and 15% after; in FY 2020 Q2, it was 31% before and 13% after. In FY 2019 Q4, before the study period, the readmissions rate after HBPC enrollment was 19%. Therefore, the postenrollment readmissions rate decreased from 19% before the study period to 13% by the end of the study period.

Discussion

A comparison of the readmissions data from FYs 2019, 2020, and 2021 revealed that the newly implemented discharge process at MEDVAMC had been more effective than the process it replaced.

There were 92 interventions made during the study period, equivalent to about 78% of the 118 medication reconciliations completed. Medication doses were changed based on patients’ renal function. Additional laboratory tests were recommended after discharge to ensure safety of therapy. Medications that were inappropriate or that patients were no longer taking were discontinued to simplify medication lists and limit polypharmacy. New medication education was provided, including drug name, dose, route of administration, time of administration, frequency, indication, mechanism of action, adverse effect profile, and monitoring parameters. The HBPC pharmacists were able to make suitable interventions in a timely fashion, as the average time to contact patients postdischarge was 3 days.

Areas for Improvement

The PCP was notified on the discharge note for only 68 (38.9%) patients. This could lead to gaps in care if other mechanisms are not in place to notify the PCP of the patient’s discharge. For this reason, it is imperative not only to implement a streamlined discharge process but also to review it and determine methods for continued improvement.9 The streamlined discharge process implemented by the HBPC team highlights when each team member should contact the patient postdischarge. However, it may be beneficial for each team member to have a list of vital information that should be communicated to the patient postdischarge and to other HCPs. For pharmacists, a standardized discharge note template may aid in the consistency of the medication reconciliation process postdischarge and may also increase interventions from pharmacists. For example, only some HBPC pharmacists inserted a new medication template in their discharge follow-up note. In addition, 23 (13.1%) patients were unreachable, and although a complete medication reconciliation was not feasible, a standardized note to review inpatient and outpatient medications along with the discharge plan may still serve as an asset for HCPs.