The value of using ultrasound to rule out deep vein thrombosis in cases of cellulitis

The “Things We Do for No Reason” series reviews practices which have become common parts of hospital care but which may provide little value to our patients. Practices reviewed in the TWDFNR series do not represent “black and white” conclusions or clinical practice standards, but are meant as a starting place for research and active discussions among hospitalists and patients. We invite you to be part of that discussion. https://www.choosingwisely.org/

Because of overlapping clinical manifestations, clinicians often order ultrasound to rule out deep vein thrombosis (DVT) in cases of cellulitis. Ultrasound testing is performed for 16% to 73% of patients diagnosed with cellulitis. Although testing is common, the pooled incidence of DVT is low (3.1%). Few data clarify which patients with cellulitis are more likely to have concurrent DVT and therefore require further testing. The Wells clinical prediction rule with D-dimer testing likely overestimates the risk of DVT in patients with cellulitis.

CASE REPORT

A 50-year-old man presented to the emergency department with a 3-day-old cut on his anterior right shin. Associated redness, warmth, pain, and swelling had progressed. The patient had no history of DVT or pulmonary embolism (PE). His temperature was 38.5°C, and his white blood cell count was 18,000/µL. On review of systems, he denied shortness of breath and chest pain. He was diagnosed with cellulitis and given intravenous fluids and cefazolin. The clinician wondered whether to perform lower extremity ultrasound to rule out concurrent DVT.

WHY YOU MIGHT THINK ULTRASOUND IS HELPFUL IN RULING OUT DVT IN CELLULITIS

Lower extremity cellulitis, a common infection of the skin and subcutaneous tissues, is characterized by unilateral erythema, pain, warmth, and swelling. The infection usually follows a break in the skin that allows bacteria to enter. DVT may present similarly, and its findings can include mild leukocytosis and elevated temperature. Because of these clinical similarities, clinicians often order compression ultrasound of the extremity to rule out concurrent DVT in cellulitis. Further impetus for testing stems from fear of the potential complications of untreated DVT, including post-thrombotic syndrome, chronic venous insufficiency, and venous ulceration. A subsequent PE can be fatal or can cause significant morbidity, including chronic thromboembolic pulmonary hypertension. An estimated one-quarter of PEs present as sudden death.1

WHY ULTRASOUND IS NOT HELPFUL IN THIS SETTING

Studies have shown that ultrasound is ordered for 16% to 73% of patients with a cellulitis diagnosis.2,3 Although testing is commonly performed, a meta-analysis of 9 studies of cellulitis patients who underwent ultrasound testing for concurrent DVT revealed a low pooled incidence of total DVT (3.1%) and proximal DVT (2.1%).4 Maze et al.2 retrospectively reviewed 1515 cellulitis cases (identified by International Classification of Diseases, Ninth Revision codes) at a single center in New Zealand over 3 years. Of the 1515 patients, 240 (16%) had ultrasound performed, and only 3 (1.3%) were found to have DVT. Two of the 3 had active malignancy, and the third had injected battery acid into the area. In a 5-year retrospective cohort study at a Veterans Administration hospital in Connecticut, Gunderson and Chang3 reviewed the cases of 183 patients with cellulitis and found ultrasound testing commonly performed (73% of cases) to assess for DVT. Only 1 patient (<1%) was diagnosed with new DVT in the ipsilateral leg, and acute DVT was diagnosed in the contralateral leg of 2 other patients. Overall, these studies indicate the incidence of concurrent DVT in cellulitis is low, regardless of the frequency of ultrasound testing.
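The incidence figures above are proportions of scanned patients, and their reciprocal gives a rough count of ultrasounds needed to find one DVT. The short Python sketch below redoes that arithmetic as an illustration. The number of patients actually scanned in the Gunderson and Chang cohort is not given in the text, so it is approximated here as 73% of 183 (an assumption), and the function name is our own.

```python
from fractions import Fraction
import math

def scans_per_dvt(rate: Fraction) -> int:
    """Rough number of ultrasounds needed to detect one DVT: 1 / incidence, rounded up."""
    return math.ceil(1 / rate)

# Maze et al.: 3 DVTs among 240 scanned patients.
maze = Fraction(3, 240)

# Gunderson and Chang: 1 new ipsilateral DVT. The scanned count is approximated
# as 73% of 183 patients (assumption; the exact number is not stated here).
gunderson_tested = round(0.73 * 183)
gunderson = Fraction(1, gunderson_tested)

# Pooled incidence of total DVT from the meta-analysis (3.1%).
pooled = Fraction("0.031")

for label, rate in [("Maze", maze), ("Gunderson (approx.)", gunderson), ("Pooled", pooled)]:
    print(f"{label}: {float(rate):.2%} positive, about {scans_per_dvt(rate)} scans per DVT")
```

At the pooled incidence of 3.1%, roughly 33 ultrasounds are performed for each DVT detected; in the Maze et al. cohort the figure is 80.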

Although the cost of a single ultrasound test is not prohibitive, the aggregate annual cost, hospital-wide and nationally, is substantial. In the United States, the charge for a unilateral duplex ultrasound of the extremity ranges from $260 to $1300, with an additional charge for interpretation by a radiologist.5 In a retrospective study spanning 3.5 years at 2 community hospitals in Michigan, an estimated $290,000 was spent on ultrasound tests deemed unnecessary for patients with cellulitis.6 A limitation of that study was that it classified a test as unnecessary solely because its result was negative.
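To show how modest per-test charges accumulate, the sketch below combines the quoted charge range ($260 to $1300, excluding the radiologist's interpretation fee) with the pooled 3.1% incidence to estimate the charges incurred per DVT detected. It assumes every patient is scanned and that the pooled incidence equals the yield of scanning; both are simplifying assumptions, and the function name is hypothetical.

```python
from fractions import Fraction

def charge_per_dvt_detected(charge_per_scan: int, yield_rate: Fraction) -> Fraction:
    """Total scan charges divided by DVTs found, i.e. charge / yield (every patient scanned)."""
    return charge_per_scan / yield_rate

pooled_yield = Fraction("0.031")  # pooled incidence of total DVT (3.1%)

for charge in (260, 1300):  # per-scan charge range in US dollars, interpretation fee excluded
    cost = charge_per_dvt_detected(charge, pooled_yield)
    print(f"${charge} per scan -> about ${round(cost):,} in charges per DVT detected")
```

Under these assumptions, each DVT found corresponds to roughly $8,387 to $41,935 in scan charges alone.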

DOES WELLS SCORE WITH D-DIMER HELP DEFINE A LOW-RISK POPULATION?

The Wells clinical prediction rule is commonly used to assess the pretest probability of DVT in patients presenting with unilateral leg symptoms. The Wells score is often combined with D-dimer testing.
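One plausible reason the rule performs poorly in cellulitis, offered as an illustration rather than a finding of the cited studies, is that several Wells items (calf swelling, pitting edema, localized tenderness) can be produced by the infection itself. The sketch below scores a hypothetical cellulitis leg with the two-level (modified) Wells DVT rule as commonly published, where a score of 2 or more means "DVT likely". The item list and field names are our own transcription and should be checked against the primary source before any clinical use.

```python
from dataclasses import dataclass

@dataclass
class WellsFindings:
    """Items of the two-level (modified) Wells DVT rule; field names are illustrative."""
    active_cancer: bool = False
    paralysis_or_recent_cast: bool = False
    bedridden_3d_or_major_surgery_12wk: bool = False
    deep_vein_tenderness: bool = False
    entire_leg_swollen: bool = False
    calf_swelling_3cm: bool = False
    pitting_edema_symptomatic_leg: bool = False
    collateral_superficial_veins: bool = False
    previous_dvt: bool = False
    alternative_dx_as_likely: bool = False

def wells_score(f: WellsFindings) -> int:
    # Each positive item adds 1 point; an alternative diagnosis at least as likely subtracts 2.
    positives = [
        f.active_cancer, f.paralysis_or_recent_cast, f.bedridden_3d_or_major_surgery_12wk,
        f.deep_vein_tenderness, f.entire_leg_swollen, f.calf_swelling_3cm,
        f.pitting_edema_symptomatic_leg, f.collateral_superficial_veins, f.previous_dvt,
    ]
    return sum(positives) - (2 if f.alternative_dx_as_likely else 0)

def dvt_likely(score: int) -> bool:
    # Two-level interpretation: 2 or more points = "DVT likely".
    return score >= 2

# Hypothetical cellulitis leg: tenderness, calf swelling, and edema caused by the infection.
leg = dict(deep_vein_tenderness=True, calf_swelling_3cm=True, pitting_edema_symptomatic_leg=True)

for alt in (False, True):
    s = wells_score(WellsFindings(**leg, alternative_dx_as_likely=alt))
    print(f"alternative diagnosis as likely={alt}: score {s}, DVT likely={dvt_likely(s)}")
```

The same leg scores 3 ("likely") or 1 ("unlikely") depending only on whether the clinician judges cellulitis at least as likely as DVT, which illustrates how much the result in this setting hinges on that single subjective item.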

WHEN MIGHT ULTRASOUND BE HELPFUL?

Investigators have described possible DVT risk factors in patients with cellulitis, but definitive associations are lacking because too few patients have been studied.8,9 The most consistently identified DVT risk factor is a history of venous thromboembolism (VTE). In a retrospective analysis of patients with cellulitis, Afzal et al.6 found that 5.5% of the 66.8% who underwent ultrasound testing had concurrent DVT. The authors performed univariate analyses of 15 potential risk factors, including active malignancy, oral contraceptive pill use, recent hospitalization, and surgery. DVT was more common among patients with a history of VTE (odds ratio [OR], 5.7; 95% confidence interval [CI], 2.3-13.7), calf swelling (OR, 4.5; 95% CI, 1.3-15.8), cerebrovascular accident (CVA) (OR, 3.5; 95% CI, 1.2-10.1), or hypertension (OR, 3.5; 95% CI, 0.98-12.2), although the interval for hypertension includes 1 and is therefore not statistically significant. Given the wide confidence intervals, the paucity of studies, and the lack of definitive data in the setting of cellulitis, clinicians may want to consider the risk factors established in larger studies in other settings: known immobility (OR, <2); thrombophilia, congestive heart failure (CHF), and CVA with hemiparesis (OR, 2-9); and trauma and recent surgery (OR, >10).10
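Univariate odds ratios like those above are typically computed from a 2×2 table, with the 95% CI obtained on the log scale (Woolf's method). The sketch below shows that calculation and a check for whether an interval excludes 1. The counts are made up for illustration and are not data from Afzal et al.; the function names are hypothetical.

```python
import math

def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96) -> tuple[float, float, float]:
    """Odds ratio and Woolf (log-scale) 95% CI for a 2x2 table.

    a: exposed with DVT, b: exposed without DVT,
    c: unexposed with DVT, d: unexposed without DVT (all counts must be > 0).
    """
    or_ = (a * d) / (b * c)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(OR)
    lo = math.exp(math.log(or_) - z * se)
    hi = math.exp(math.log(or_) + z * se)
    return or_, lo, hi

def excludes_null(lo: float, hi: float) -> bool:
    # An interval containing 1 is compatible with no association at the 5% level.
    return lo > 1 or hi < 1

# Hypothetical counts (NOT from Afzal et al.) for a small cohort of scanned patients.
or_, lo, hi = odds_ratio_ci(a=6, b=30, c=8, d=200)
print(f"OR {or_:.1f} (95% CI {lo:.1f}-{hi:.1f}); excludes 1: {excludes_null(lo, hi)}")

# The hypertension interval quoted in the text, checked the same way.
print(excludes_null(0.98, 12.2))  # False
```

With single-digit event counts, the standard error is dominated by the 1/a and 1/c terms, which is why small cellulitis cohorts produce the wide intervals described above.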

WHAT YOU SHOULD DO INSTEAD

As the incidence of concurrent VTE in patients with cellulitis is low, the essential step is to make a clear diagnosis of cellulitis based on its established signs and symptoms. A 2-center study of 145 patients found that general medicine and emergency medicine physicians diagnosed cellulitis accurately 72% of the time, with evaluation by dermatologists and infectious disease specialists used as the gold standard. Only 5% of the misdiagnosed patients had DVT; stasis dermatitis was the most common alternative diagnosis. A thorough history may elicit risk factors consistent with cellulitis, such as a recent injury that broke the skin. On examination, cellulitis should be suspected in patients with fever and localized pain, redness, swelling, and warmth (the cardinal signs dolor, rubor, tumor, and calor). An injury or entry site and leukocytosis also support the diagnosis. Sharply demarcated margins of erythema strongly suggest erysipelas.11 Other physical findings (eg, laceration, purulent drainage, lymphangitic spread, fluctuant mass) are also consistent with cellulitis.

The patient’s history is also essential in determining whether any DVT risk factors are present. A past medical history of VTE or CVA, or a recent history of surgery, immobility, or trauma, should alert the clinician to the possibility of DVT. A family history of VTE also increases the likelihood of DVT. Acute shortness of breath or chest pain accompanying lower extremity findings suggestive of DVT should raise concern for concurrent PE.

If the classic features of cellulitis are present, empiric antibiotics should be started. Routine ultrasound testing of all patients with cellulitis is of low value. However, because the incidence of DVT in this population is low but not negligible, patients with VTE risk factors should be targeted for testing. Studies in the setting of cellulitis offer little guidance on which specific risk factors should prompt further testing. Given this limitation, we suggest that clinicians incorporate into their decision making the well-established VTE risk factors identified in large populations studied in other settings, such as the postoperative period. Specifically, clinicians should consider ultrasound testing for patients with cellulitis and a prior history of VTE, immobility, thrombophilia, CHF, CVA with hemiparesis, trauma, or recent surgery.10-12 Ultrasound should also be considered for patients whose cellulitis does not improve or whose localized symptoms worsen despite antibiotic therapy.
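The testing strategy in the preceding paragraph reduces to a simple rule: consider ultrasound only when a listed VTE risk factor is present or the cellulitis fails to improve on antibiotics. The Python below is a hypothetical encoding of that suggestion for illustration only; the risk-factor labels and names are our own, and it is not a validated clinical decision tool.

```python
from dataclasses import dataclass, field

# Risk factors listed in the text as prompts to consider ultrasound (labels are hypothetical).
VTE_RISK_FACTORS = frozenset({
    "prior_vte", "immobility", "thrombophilia", "chf",
    "cva_with_hemiparesis", "trauma", "recent_surgery",
})

@dataclass
class CellulitisPatient:
    risk_factors: set[str] = field(default_factory=set)
    no_improvement_on_antibiotics: bool = False  # not improving, or worsening, on therapy

def consider_ultrasound(p: CellulitisPatient) -> bool:
    """True when the suggested criteria favor considering compression ultrasound."""
    unknown = p.risk_factors - VTE_RISK_FACTORS
    if unknown:
        raise ValueError(f"unrecognized risk factor labels: {sorted(unknown)}")
    return bool(p.risk_factors) or p.no_improvement_on_antibiotics

# The case patient, assuming no listed risk factors and antibiotic response not yet known.
print(consider_ultrasound(CellulitisPatient()))  # False
print(consider_ultrasound(CellulitisPatient({"prior_vte"})))  # True
```

Rejecting unknown labels keeps a misspelled risk factor from silently counting as "no risk factor".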

RECOMMENDATIONS

- Do not routinely perform ultrasound to rule out concurrent DVT in cases of cellulitis.

- Consider compression ultrasound for patients with a history of VTE, immobility, thrombophilia, CHF, CVA with hemiparesis, trauma, or recent surgery, and for patients who do not respond to antibiotics.

- In cases of cellulitis, avoid using the Wells score, alone or with D-dimer testing, as it likely overestimates DVT risk.

CONCLUSION

The current evidence shows that, for most patients with cellulitis, routine ultrasound testing for DVT is unnecessary. Ultrasound should be considered for patients with potent VTE risk factors. If symptoms do not improve, or if they worsen despite use of antibiotics, clinicians should be alert to potential anchoring bias and consider DVT. The Wells clinical prediction rule overestimates the incidence of DVT in cellulitis and has little value in this setting.

Disclosure

Nothing to report.

Do you think this is a low-value practice? Is this truly a “Thing We Do for No Reason”? Let us know what you do in your practice. Join the conversation online on Twitter (#TWDFNR) and Facebook, and propose ideas for other “Things We Do for No Reason” topics by emailing [email protected].

1. Heit JA. The epidemiology of venous thromboembolism in the community: implications for prevention and management. J Thromb Thrombolysis. 2006;21(1):23-29.

2. Maze MJ, Pithie A, Dawes T, Chambers ST. An audit of venous duplex ultrasonography in patients with lower limb cellulitis. N Z Med J. 2011;124(1329):53-56.

3. Gunderson CG, Chang JJ. Overuse of compression ultrasound for patients with lower extremity cellulitis. Thromb Res. 2014;134(4):846-850.

4. Gunderson CG, Chang JJ. Risk of deep vein thrombosis in patients with cellulitis and erysipelas: a systematic review and meta-analysis. Thromb Res. 2013;132(3):336-340.

5. Extremity ultrasound (nonvascular) cost and procedure information. http://www.newchoicehealth.com/procedures/extremity-ultrasound-nonvascular. Accessed February 15, 2016.

6. Afzal MZ, Saleh MM, Razvi S, Hashmi H, Lampen R. Utility of lower extremity Doppler in patients with lower extremity cellulitis: a need to change the practice? South Med J. 2015;108(7):439-444.

7. Goodacre S, Sutton AJ, Sampson FC. Meta-analysis: the value of clinical assessment in the diagnosis of deep venous thrombosis. Ann Intern Med. 2005;143(2):129-139.

8. Maze MJ, Skea S, Pithie A, Metcalf S, Pearson JF, Chambers ST. Prevalence of concurrent deep vein thrombosis in patients with lower limb cellulitis: a prospective cohort study. BMC Infect Dis. 2013;13:141.

9. Bersier D, Bounameaux H. Cellulitis and deep vein thrombosis: a controversial association. J Thromb Haemost. 2003;1(4):867-868.

10. Anderson FA Jr, Spencer FA. Risk factors for venous thromboembolism. Circulation. 2003;107(23 suppl 1):I9-I16.

11. Rabuka CE, Azoulay LY, Kahn SR. Predictors of a positive duplex scan in patients with a clinical presentation compatible with deep vein thrombosis or cellulitis. Can J Infect Dis. 2003;14(4):210-214.

12. Samama MM. An epidemiologic study of risk factors for deep vein thrombosis in medical outpatients: the Sirius Study. Arch Intern Med. 2000;160(22):3415-3420.

The “Things We Do for No Reason” series reviews practices which have become common parts of hospital care but which may provide little value to our patients. Practices reviewed in the TWDFNR series do not represent “black and white” conclusions or clinical practice standards, but are meant as a starting place for research and active discussions among hospitalists and patients. We invite you to be part of that discussion. https://www.choosingwisely.org/

Because of overlapping clinical manifestations, clinicians often order ultrasound to rule out deep vein thrombosis (DVT) in cases of cellulitis. Ultrasound testing is performed for 16% to 73% of patients diagnosed with cellulitis. Although testing is common, the pooled incidence of DVT is low (3.1%). Few data elucidate which patients with cellulitis are more likely to have concurrent DVT and require further testing. The Wells clinical prediction rule with

CASE REPORT

A 50-year-old man presented to the emergency department with a 3-day-old cut on his anterior right shin. Associated redness, warmth, pain, and swelling had progressed. The patient had no history of prior DVT or pulmonary embolism (PE). His temperature was 38.5°C, and his white blood cell count of 18,000. On review of systems, he denied shortness of breath and chest pain. He was diagnosed with cellulitis and administered intravenous fluids and cefazolin. The clinician wondered whether to perform lower extremity ultrasound to rule out concurrent DVT.

WHY YOU MIGHT THINK ULTRASOUND IS HELPFUL IN RULING OUT DVT IN CELLULITIS

Lower extremity cellulitis, a common infection of the skin and subcutaneous tissues, is characterized by unilateral erythema, pain, warmth, and swelling. The infection usually follows a skin breach that allows bacteria to enter. DVT may present similarly, and symptoms can include mild leukocytosis and elevated temperature. Because of the clinical similarities, clinicians often order compression ultrasound of the extremity to rule out concurrent DVT in cellulitis. Further impetus for testing stems from fear of the potential complications of untreated DVT, including post-thrombotic syndrome, chronic venous insufficiency, and venous ulceration. A subsequent PE can be fatal, or can cause significant morbidity, including chronic VTE with associated pulmonary hypertension. An estimated quarter of all PEs present as sudden death.1

WHY ULTRASOUND IS NOT HELPFUL IN THIS SETTING

Studies have shown that ultrasound is ordered for 16% to 73% of patients with a cellulitis diagnosis.2,3 Although testing is commonly performed, a meta-analysis of 9 studies of cellulitis patients who underwent ultrasound testing for concurrent DVT revealed a low pooled incidence of total DVT (3.1%) and proximal DVT (2.1%).4 Maze et al.2 retrospectively reviewed 1515 cellulitis cases (identified by International Classification of Diseases, Ninth Revision codes) at a single center in New Zealand over 3 years. Of the 1515 patients, 240 (16%) had ultrasound performed, and only 3 (1.3%) were found to have DVT. Two of the 3 had active malignancy, and the third had injected battery acid into the area. In a 5-year retrospective cohort study at a Veterans Administration hospital in Connecticut, Gunderson and Chang3 reviewed the cases of 183 patients with cellulitis and found ultrasound testing commonly performed (73% of cases) to assess for DVT. Only 1 patient (<1%) was diagnosed with new DVT in the ipsilateral leg, and acute DVT was diagnosed in the contralateral leg of 2 other patients. Overall, these studies indicate the incidence of concurrent DVT in cellulitis is low, regardless of the frequency of ultrasound testing.

Although the cost of a single ultrasound test is not prohibitive, annual total costs hospital-wide and nationally are large. In the United States, the charge for a unilateral duplex ultrasound of the extremity ranges from $260 to $1300, and there is an additional charge for interpretation by a radiologist.5 In a retrospective study spanning 3.5 years and involving 2 community hospitals in Michigan, an estimated $290,000 was spent on ultrasound tests defined as unnecessary for patients with cellulitis.6 A limitation of the study was defining a test as unnecessary based on its result being negative.

DOES WELLS SCORE WITH D-DIMER HELP DEFINE A LOW-RISK POPULATION?

The Wells clinical prediction rule is commonly used to assess the pretest probability of DVT in patients presenting with unilateral leg symptoms. The Wells score is often combined with

WHEN MIGHT ULTRASOUND

Investigators have described possible DVT risk factors in patients with cellulitis, but definitive associations are lacking because of the insufficient number of patients studied.8,9 The most consistently identified DVT risk factor is history of previous thromboembolism. In a retrospective analysis of patients with cellulitis, Afzal et al.6 found that, of the 66.8% who underwent ultrasound testing, 5.5% were identified as having concurrent DVT. The authors performed univariate analyses of 15 potential risk factors, including active malignancy, oral contraceptive pill use, recent hospitalization, and surgery. A higher incidence of DVT was found for patients with history of VTE (odds ratio [OR], 5.7; 95% confidence interval [CI], 2.3-13.7), calf swelling (OR, 4.5; 95% CI, 1.3-15.8), CVA (OR, 3.5; 95% CI, 1.2-10.1), or hypertension (OR, 3.5; 95% CI, 0.98-12.2). Given the wide confidence intervals, paucity of studies, and lack of definitive data in the setting of cellulitis, clinicians may want to consider the risk factors established in larger trials in other settings, including known immobility (OR, <2); thrombophilia, CHF, and CVA with hemiparesis (OR, 2-9); and trauma and recent surgery (OR, >10).10

WHAT YOU SHOULD DO INSTEAD

As the incidence of concurrent VTE in patients with cellulitis is low, the essential step is to make a clear diagnosis of cellulitis based on its established signs and symptoms. A 2-center trial of 145 patients found that cellulitis was diagnosed accurately by general medicine and emergency medicine physicians 72% of the time, with evaluation by dermatologists and infectious disease specialists used as the gold standard. Only 5% of the misdiagnosed patients were diagnosed with DVT; stasis dermatitis was the most common alternative diagnosis. Taking a thorough history may elicit risk factors consistent with cellulitis, such as a recent injury with a break in the skin. On examination, cellulitis should be suspected for patients with fever and localized pain, redness, swelling, and warmth—the cardinal signs of dolor, rubor, tumor, and calor. An injury or entry site and leukocytosis also support the diagnosis of cellulitis. Distinct margins of erythema on the skin are highly suspicious for erysipelas.11 Other physical findings (eg, laceration, purulent drainage, lymphangitic spread, fluctuating mass) also are consistent with a diagnosis of cellulitis.

The patient’s history is also essential in determining whether any DVT risk factors are present. Past medical history of VTE or CVA, or recent history of surgery, immobility, or trauma, should alert the clinician to the possibility of DVT. Family history of VTE increases the likelihood of DVT. Acute shortness of breath or chest pain in the setting of concerning lower extremity findings for DVT should raise concern for DVT and concurrent PE.

If the classic features of cellulitis are present, empiric antibiotics should be initiated. Routine ultrasound testing for all patients with cellulitis is of low value. However, as the incidence of DVT in this population is not negligible, those with VTE risk factors should be targeted for testing. Studies in the setting of cellulitis provide little guidance regarding specific risk factors that can be used to determine who should undergo further testing. Given this limitation, we suggest that clinicians incorporate into their decision making the well-established VTE risk factors identified for large populations studied in other settings, such as the postoperative period. Specifically, clinicians should consider ultrasound testing for patients with cellulitis and prior history of VTE; immobility; thrombophilia, CHF, and CVA with hemiparesis; or trauma and recent surgery.10-12 Ultrasound should also be considered for patients with cellulitis that does not improve and for patients whose localized symptoms worsen despite use of antibiotics.

RECOMMENDATIONS

Do not routinely perform ultrasound to rule out concurrent DVT in cases of cellulitis.

Consider compression ultrasound if there is a history of VTE; immobility; thrombophilia, CHF, and CVA with hemiparesis; or trauma and recent surgery. Also consider it for patients who do not respond to antibiotics.

- In cases of cellulitis, avoid use of the Wells score alone or with

D -dimer testing, as it likely overestimates the DVT risk.

CONCLUSION

The current evidence shows that, for most patients with cellulitis, routine ultrasound testing for DVT is unnecessary. Ultrasound should be considered for patients with potent VTE risk factors. If symptoms do not improve, or if they worsen despite use of antibiotics, clinicians should be alert to potential anchoring bias and consider DVT. The Wells clinical prediction rule overestimates the incidence of DVT in cellulitis and has little value in this setting.

Disclosure

Nothing to report.

Do you think this is a low-value practice? Is this truly a “Thing We Do for No Reason”? Let us know what you do in your practice and propose ideas for other “Things We Do for No Reason” topics. Please join in the conversation online at Twitter (#TWDFNR)/Facebook and don’t forget to “Like It” on Facebook or retweet it on Twitter. We invite you to propose ideas for other “Things We Do for No Reason” topics by emailing [email protected].

The “Things We Do for No Reason” series reviews practices which have become common parts of hospital care but which may provide little value to our patients. Practices reviewed in the TWDFNR series do not represent “black and white” conclusions or clinical practice standards, but are meant as a starting place for research and active discussions among hospitalists and patients. We invite you to be part of that discussion. https://www.choosingwisely.org/

Because of overlapping clinical manifestations, clinicians often order ultrasound to rule out deep vein thrombosis (DVT) in cases of cellulitis. Ultrasound testing is performed for 16% to 73% of patients diagnosed with cellulitis. Although testing is common, the pooled incidence of DVT is low (3.1%). Few data elucidate which patients with cellulitis are more likely to have concurrent DVT and require further testing. The Wells clinical prediction rule with

CASE REPORT

A 50-year-old man presented to the emergency department with a 3-day-old cut on his anterior right shin. Associated redness, warmth, pain, and swelling had progressed. The patient had no history of prior DVT or pulmonary embolism (PE). His temperature was 38.5°C, and his white blood cell count of 18,000. On review of systems, he denied shortness of breath and chest pain. He was diagnosed with cellulitis and administered intravenous fluids and cefazolin. The clinician wondered whether to perform lower extremity ultrasound to rule out concurrent DVT.

WHY YOU MIGHT THINK ULTRASOUND IS HELPFUL IN RULING OUT DVT IN CELLULITIS

Lower extremity cellulitis, a common infection of the skin and subcutaneous tissues, is characterized by unilateral erythema, pain, warmth, and swelling. The infection usually follows a skin breach that allows bacteria to enter. DVT may present similarly, and symptoms can include mild leukocytosis and elevated temperature. Because of the clinical similarities, clinicians often order compression ultrasound of the extremity to rule out concurrent DVT in cellulitis. Further impetus for testing stems from fear of the potential complications of untreated DVT, including post-thrombotic syndrome, chronic venous insufficiency, and venous ulceration. A subsequent PE can be fatal, or can cause significant morbidity, including chronic VTE with associated pulmonary hypertension. An estimated quarter of all PEs present as sudden death.1

WHY ULTRASOUND IS NOT HELPFUL IN THIS SETTING

Studies have shown that ultrasound is ordered for 16% to 73% of patients with a cellulitis diagnosis.2,3 Although testing is commonly performed, a meta-analysis of 9 studies of cellulitis patients who underwent ultrasound testing for concurrent DVT revealed a low pooled incidence of total DVT (3.1%) and proximal DVT (2.1%).4 Maze et al.2 retrospectively reviewed 1515 cellulitis cases (identified by International Classification of Diseases, Ninth Revision codes) at a single center in New Zealand over 3 years. Of the 1515 patients, 240 (16%) had ultrasound performed, and only 3 (1.3%) were found to have DVT. Two of the 3 had active malignancy, and the third had injected battery acid into the area. In a 5-year retrospective cohort study at a Veterans Administration hospital in Connecticut, Gunderson and Chang3 reviewed the cases of 183 patients with cellulitis and found ultrasound testing commonly performed (73% of cases) to assess for DVT. Only 1 patient (<1%) was diagnosed with new DVT in the ipsilateral leg, and acute DVT was diagnosed in the contralateral leg of 2 other patients. Overall, these studies indicate the incidence of concurrent DVT in cellulitis is low, regardless of the frequency of ultrasound testing.

Although the cost of a single ultrasound test is not prohibitive, annual total costs hospital-wide and nationally are large. In the United States, the charge for a unilateral duplex ultrasound of the extremity ranges from $260 to $1300, and there is an additional charge for interpretation by a radiologist.5 In a retrospective study spanning 3.5 years and involving 2 community hospitals in Michigan, an estimated $290,000 was spent on ultrasound tests defined as unnecessary for patients with cellulitis.6 A limitation of the study was defining a test as unnecessary based on its result being negative.

DOES WELLS SCORE WITH D-DIMER HELP DEFINE A LOW-RISK POPULATION?

The Wells clinical prediction rule is commonly used to assess the pretest probability of DVT in patients presenting with unilateral leg symptoms. The Wells score is often combined with

WHEN MIGHT ULTRASOUND

Investigators have described possible DVT risk factors in patients with cellulitis, but definitive associations are lacking because of the insufficient number of patients studied.8,9 The most consistently identified DVT risk factor is history of previous thromboembolism. In a retrospective analysis of patients with cellulitis, Afzal et al.6 found that, of the 66.8% who underwent ultrasound testing, 5.5% were identified as having concurrent DVT. The authors performed univariate analyses of 15 potential risk factors, including active malignancy, oral contraceptive pill use, recent hospitalization, and surgery. A higher incidence of DVT was found for patients with history of VTE (odds ratio [OR], 5.7; 95% confidence interval [CI], 2.3-13.7), calf swelling (OR, 4.5; 95% CI, 1.3-15.8), CVA (OR, 3.5; 95% CI, 1.2-10.1), or hypertension (OR, 3.5; 95% CI, 0.98-12.2). Given the wide confidence intervals, paucity of studies, and lack of definitive data in the setting of cellulitis, clinicians may want to consider the risk factors established in larger trials in other settings, including known immobility (OR, <2); thrombophilia, CHF, and CVA with hemiparesis (OR, 2-9); and trauma and recent surgery (OR, >10).10

WHAT YOU SHOULD DO INSTEAD

As the incidence of concurrent VTE in patients with cellulitis is low, the essential step is to make a clear diagnosis of cellulitis based on its established signs and symptoms. A 2-center trial of 145 patients found that cellulitis was diagnosed accurately by general medicine and emergency medicine physicians 72% of the time, with evaluation by dermatologists and infectious disease specialists used as the gold standard. Only 5% of the misdiagnosed patients were diagnosed with DVT; stasis dermatitis was the most common alternative diagnosis. Taking a thorough history may elicit risk factors consistent with cellulitis, such as a recent injury with a break in the skin. On examination, cellulitis should be suspected for patients with fever and localized pain, redness, swelling, and warmth—the cardinal signs of dolor, rubor, tumor, and calor. An injury or entry site and leukocytosis also support the diagnosis of cellulitis. Distinct margins of erythema on the skin are highly suspicious for erysipelas.11 Other physical findings (eg, laceration, purulent drainage, lymphangitic spread, fluctuating mass) also are consistent with a diagnosis of cellulitis.

The patient’s history is also essential in determining whether any DVT risk factors are present. Past medical history of VTE or CVA, or recent history of surgery, immobility, or trauma, should alert the clinician to the possibility of DVT. Family history of VTE increases the likelihood of DVT. Acute shortness of breath or chest pain in the setting of concerning lower extremity findings for DVT should raise concern for DVT and concurrent PE.

If the classic features of cellulitis are present, empiric antibiotics should be initiated. Routine ultrasound testing for all patients with cellulitis is of low value. However, as the incidence of DVT in this population is not negligible, those with VTE risk factors should be targeted for testing. Studies in the setting of cellulitis provide little guidance regarding specific risk factors that can be used to determine who should undergo further testing. Given this limitation, we suggest that clinicians incorporate into their decision making the well-established VTE risk factors identified for large populations studied in other settings, such as the postoperative period. Specifically, clinicians should consider ultrasound testing for patients with cellulitis and prior history of VTE; immobility; thrombophilia, CHF, and CVA with hemiparesis; or trauma and recent surgery.10-12 Ultrasound should also be considered for patients with cellulitis that does not improve and for patients whose localized symptoms worsen despite use of antibiotics.

RECOMMENDATIONS

Do not routinely perform ultrasound to rule out concurrent DVT in cases of cellulitis.

Consider compression ultrasound if there is a history of VTE; immobility; thrombophilia, CHF, and CVA with hemiparesis; or trauma and recent surgery. Also consider it for patients who do not respond to antibiotics.

- In cases of cellulitis, avoid use of the Wells score alone or with

D -dimer testing, as it likely overestimates the DVT risk.

CONCLUSION

The current evidence shows that, for most patients with cellulitis, routine ultrasound testing for DVT is unnecessary. Ultrasound should be considered for patients with potent VTE risk factors. If symptoms do not improve, or if they worsen despite use of antibiotics, clinicians should be alert to potential anchoring bias and consider DVT. The Wells clinical prediction rule overestimates the incidence of DVT in cellulitis and has little value in this setting.

Disclosure

Nothing to report.

Do you think this is a low-value practice? Is this truly a “Thing We Do for No Reason”? Let us know what you do in your practice and propose ideas for other “Things We Do for No Reason” topics. Please join in the conversation online at Twitter (#TWDFNR)/Facebook and don’t forget to “Like It” on Facebook or retweet it on Twitter. We invite you to propose ideas for other “Things We Do for No Reason” topics by emailing [email protected].

1. Heit JA. The epidemiology of venous thromboembolism in the community: implications for prevention and management. J Thromb Thrombolysis. 2006;21(1):23-29.

2. Maze MJ, Pithie A, Dawes T, Chambers ST. An audit of venous duplex ultrasonography in patients with lower limb cellulitis. N Z Med J. 2011;124(1329):53-56.

3. Gunderson CG, Chang JJ. Overuse of compression ultrasound for patients with lower extremity cellulitis. Thromb Res. 2014;134(4):846-850.

4. Gunderson CG, Chang JJ. Risk of deep vein thrombosis in patients with cellulitis and erysipelas: a systematic review and meta-analysis. Thromb Res. 2013;132(3):336-340.

5. Extremity ultrasound (nonvascular) cost and procedure information. http://www.newchoicehealth.com/procedures/extremity-ultrasound-nonvascular. Accessed February 15, 2016.

6. Afzal MZ, Saleh MM, Razvi S, Hashmi H, Lampen R. Utility of lower extremity Doppler in patients with lower extremity cellulitis: a need to change the practice? South Med J. 2015;108(7):439-444.

7. Goodacre S, Sutton AJ, Sampson FC. Meta-analysis: the value of clinical assessment in the diagnosis of deep venous thrombosis. Ann Intern Med. 2005;143(2):129-139.

8. Maze MJ, Skea S, Pithie A, Metcalf S, Pearson JF, Chambers ST. Prevalence of concurrent deep vein thrombosis in patients with lower limb cellulitis: a prospective cohort study. BMC Infect Dis. 2013;13:141.

9. Bersier D, Bounameaux H. Cellulitis and deep vein thrombosis: a controversial association. J Thromb Haemost. 2003;1(4):867-868.

10. Anderson FA Jr, Spencer FA. Risk factors for venous thromboembolism. Circulation. 2003;107(23 suppl 1):I9-I16.

11. Rabuka CE, Azoulay LY, Kahn SR. Predictors of a positive duplex scan in patients with a clinical presentation compatible with deep vein thrombosis or cellulitis. Can J Infect Dis. 2003;14(4):210-214.

12. Samama MM. An epidemiologic study of risk factors for deep vein thrombosis in medical outpatients: the Sirius Study. Arch Intern Med. 2000;160(22):3415-3420.

© 2017 Society of Hospital Medicine

What are the chances?

The approach to clinical conundrums by an expert clinician is revealed through the presentation of an actual patient’s case in an approach typical of a morning report. As in patient care, sequential pieces of information are provided to the clinician, who is unfamiliar with the case. The focus is on the thought processes of both the clinical team caring for the patient and the discussant. The bolded text represents the patient’s case. Each paragraph that follows represents the discussant’s thoughts.

Two weeks after undergoing a below-knee amputation (BKA) and 10 days after being discharged to a skilled nursing facility (SNF), an 87-year-old man returned to the emergency department (ED) for evaluation of somnolence and altered mental state. In the ED, he was disoriented and unable to provide a detailed history.

The differential diagnosis for acute confusion and altered consciousness is broad. Initial possibilities include toxic-metabolic abnormalities, medication side effects, and infections. Urinary tract infection, pneumonia, and surgical-site infection should be assessed for first, as they are common causes of postoperative altered mentation. Next to be considered are subclinical seizure, ischemic stroke, and infectious encephalitis or meningitis, along with hemorrhagic stroke and subdural hematoma.

During the initial assessment, the clinician should ascertain baseline mental state, the timeline of the change in mental status, recent medication changes, any history of substance abuse, and any recent trauma, such as a fall. During the physical examination, the clinician should assess vital signs and then focus on identifying localizing neurologic deficits.

First steps in the work-up include a complete metabolic panel, complete blood cell count, urinalysis with culture, and a urine toxicology screen. If the patient has a “toxic” appearance, blood cultures should be obtained. An electrocardiogram should be used to screen for drug toxicity or evidence of cardiac ischemia. If laboratory test results do not reveal an obvious infectious or metabolic cause, a noncontrast computed tomography (CT) of the head should be obtained. In terms of early interventions, a low glucose level should be treated with thiamine and then glucose, and naloxone should be given if there is any suspicion of narcotic overdose.

More history was obtained from the patient’s records. The BKA was performed to address a nonhealing transmetatarsal amputation. Two months earlier, the transmetatarsal amputation had been performed as treatment for a diabetic forefoot ulcer with chronic osteomyelitis. The patient’s post-BKA course was uncomplicated. He was started on intravenous (IV) ertapenem on postoperative day 1, and on postoperative day 4 was discharged to the SNF to complete a 6-week course of antibiotics for osteomyelitis. Past medical history included paroxysmal atrial fibrillation, coronary artery disease, congestive heart failure (ejection fraction 40%), and type 2 diabetes mellitus. Medications given at the SNF were oxycodone, acetaminophen, cholecalciferol, melatonin, digoxin, ondansetron, furosemide, gabapentin, correctional insulin, tamsulosin, senna, docusate, warfarin, and metoprolol. While there, the patient’s family expressed concern about his diminishing “mental ability.” They reported he had been fully alert and oriented on arrival at the SNF, and living independently with his wife before the BKA. Then, a week before the ED presentation, he started becoming more somnolent and forgetful. The gabapentin and oxycodone dosages were reduced to minimize their sedative effects, but he showed no improvement. At the SNF, a somnolence work-up was not performed.

Several of the patient’s medications can contribute to altered mental state. Ertapenem can cause seizures as well as profound mental status changes, though these are more likely in the setting of poor renal function. The mental status changes were noticed about a week into the patient’s course of antibiotics, which suggests a possible temporal correlation with the initiation of ertapenem. An electroencephalogram is required to diagnose nonconvulsive seizure activity. Narcotic overdose should still be considered, despite the recent reduction in oxycodone dosage. Digoxin toxicity, though less likely when the dose is stable and there are no changes in renal function, can cause a confused state. Concurrent use of furosemide could potentiate the toxic effects of digoxin.

Non-medication-related concerns include hypoglycemia, hyperglycemia, and, given his history of atrial fibrillation, cardioembolic stroke. Although generalized confusion is not a common manifestation of stroke, a thalamic stroke can alter mental state but be easily missed if not specifically considered. Additional lab work-up should include a digoxin level and, since he is taking warfarin, a prothrombin time/international normalized ratio (PT/INR). If the initial laboratory studies and head CT do not explain the altered mental state, magnetic resonance imaging (MRI) of the brain should be performed to further assess for stroke.

On physical examination in the ED, the patient was resting comfortably with eyes closed and was arousable to voice. He obeyed commands and participated in the examination. His Glasgow Coma Scale score was 13; temperature, 36.8°C; heart rate, 80 beats per minute; respiratory rate, 16 breaths per minute; blood pressure, 90/57 mm Hg; and 100% peripheral capillary oxygen saturation while breathing ambient air. He appeared well developed. His heart rhythm was irregularly irregular, without murmurs, rubs, or gallops. Respiratory and abdominal examination findings were normal. The left BKA incision was well approximated, with no drainage, dehiscence, fluctuance, or erythema. On neurologic examination, the patient was intermittently oriented only to self. Pupils were equal, round, and reactive to light; extraocular movements were intact; face was symmetric; tongue was midline; sensation on face was equal bilaterally; and shoulder shrug was intact. Strength was 5/5 and symmetric in the elbow and hip and 5/5 in the right knee and ankle (not tested on left because of BKA). Deep tendon reflexes were 3+ and symmetrical at the biceps, brachioradialis, and triceps tendons and 3+ in the right patellar and Achilles tendons. Sensation was intact and symmetrical in the upper and lower extremities. The patient’s speech was slow and slurred, and his answers were unrelated to the questions being asked.

The patient’s mental state is best described as lethargic. As he is only intermittently oriented, he meets the criteria for delirium. He is not obtunded or comatose, and his pupils are at least reactive, not pinpoint, so narcotic overdose is less likely. Thalamic stroke remains in the differential diagnosis; despite the seemingly symmetrical sensation examination, hemisensory deficits cannot be definitively ruled out given the patient’s mental state. A rare entity such as carcinomatous meningitis or another diffuse, infiltrative neoplastic process could be causing his condition. However, because focal deficits other than abnormal speech and diffuse hyperreflexia are absent, toxic, infectious, or metabolic causes are more likely than structural abnormalities. Still possible is a medication toxicity, such as ertapenem toxicity or, less likely, digoxin toxicity. In terms of infectious possibilities, urinary tract infection could certainly present in this fashion, especially if the patient had a somewhat low neurologic reserve at baseline, and hypotension could be secondary to sepsis. Encephalitis or meningitis remains in the differential diagnosis, though the patient appears nontoxic, and therefore a bacterial etiology is very unlikely.

The patient’s hyperreflexia may be an important clue. Although the strength of his reflexes at baseline is unknown, seizures can cause transiently increased reflexes as well as a confused, lethargic mental state. Reflexes can also be increased by a drug overdose that has caused serotonin syndrome. Of the patient’s medications, only ondansetron can cause this reaction. Hyperthyroidism can cause brisk reflexes and confusion, though more typically it causes agitated confusion. A thyroid-stimulating hormone level should be added to the initial laboratory panel.

A complete blood count revealed white blood cell count 11.86 K/uL with neutrophilic predominance and immature granulocytes, hemoglobin 11.5 g/dL, and platelet count 323 K/uL. Serum sodium was 141 mEq/L, potassium 4.2 mEq/L, chloride 103 mEq/L, bicarbonate 30 mEq/L, creatinine 1.14 mg/dL (prior baseline of 0.8-1.0 mg/dL), blood urea nitrogen 26 mg/dL, blood glucose 159 mg/dL, and calcium 9.1 mg/dL. His digoxin level was 1.3 ng/mL (reference range 0.5-1.9 ng/mL) and troponin was undetectable. INR was 2.7 and partial thromboplastin time (PTT) 60 seconds. Vitamin B12 level was 674 pg/mL (reference range >180 pg/mL). A urinalysis had 1+ hyaline casts and was negative for nitrites, leukocyte esterase, blood, and bacteria. An ECG revealed atrial fibrillation with a ventricular rate of 80 beats per minute. A chest radiograph showed clear lung fields. A CT of the head without IV contrast had no evidence of an acute intracranial abnormality. In the ED, 1 liter of IV normal saline was given and blood pressure improved to 127/72 mm Hg.

The head CT does not show intracranial bleeding, and, though it is reassuring that INR is in the therapeutic range, ischemic stroke must remain in the differential diagnosis. Sepsis is less likely given that the criteria for systemic inflammatory response syndrome are not met, and hypotension was rapidly corrected with administration of IV fluids. Urinary tract infection was ruled out with the negative urinalysis. Subclinical seizures remain possible, as does medication-related or other toxicity. A medication overdose, intentional or otherwise, should also be considered.
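The statement that the SIRS criteria are not met can be checked against the ED values. The sketch below assumes the conventional adult SIRS definition: at least 2 of temperature >38°C or <36°C; heart rate >90 per minute; respiratory rate >20 per minute or PaCO2 <32 mm Hg; and white blood cell count >12 K/uL, <4 K/uL, or >10% immature (band) forms. The function names are illustrative. PaCO2 was not reported, and immature granulocytes were noted without a percentage, so those inputs default to unknown and count as not met.

```python
from typing import List, Optional


def sirs_criteria(
    temp_c: float,
    heart_rate: float,
    resp_rate: float,
    wbc_k_per_ul: float,
    paco2_mm_hg: Optional[float] = None,
    band_fraction: Optional[float] = None,
) -> List[str]:
    """Return the names of SIRS criteria met; unknown inputs count as not met."""
    met = []
    if temp_c > 38.0 or temp_c < 36.0:
        met.append("temperature")
    if heart_rate > 90:
        met.append("heart rate")
    if resp_rate > 20 or (paco2_mm_hg is not None and paco2_mm_hg < 32):
        met.append("respiration")
    if (
        wbc_k_per_ul > 12.0
        or wbc_k_per_ul < 4.0
        or (band_fraction is not None and band_fraction > 0.10)
    ):
        met.append("white blood cells")
    return met


def sirs_positive(met: List[str]) -> bool:
    """SIRS is conventionally defined as 2 or more criteria."""
    return len(met) >= 2


# ED values from the case: 36.8 C, 80/min, 16/min, WBC 11.86 K/uL.
ed = sirs_criteria(36.8, 80, 16, 11.86)
print(ed, sirs_positive(ed))  # [] False

# Even if the unreported band fraction had exceeded 10%, only 1 criterion is met.
worst = sirs_criteria(36.8, 80, 16, 11.86, band_fraction=0.15)
print(worst, sirs_positive(worst))  # ['white blood cells'] False
```

The second call shows that the conclusion does not depend on the missing differential: the white cell criterion alone cannot reach the 2-criterion threshold. Hypotension is not itself a SIRS criterion.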

The patient was admitted to the hospital. On reassessment by the inpatient team, he was oriented only to self, frequently falling asleep, and not recalling earlier conversations when aroused. His speech remained slurred and difficult to understand. Neurologic examination findings were unchanged since the ED examination. On additional cerebellar examination, he had dysmetria with finger-to-nose testing bilaterally and dysdiadochokinesia (impaired rapid alternating movements) of the left hand.

His handedness is not mentioned; the dysdiadochokinesia of the left hand may reflect the patient’s being right-handed, or may signify a focal cerebellar lesion. The cerebellum is also implicated by the bilateral dysmetria. Persistent somnolence in the absence of CT findings suggests a metabolic or infectious process. Metabolic processes that can cause bilateral cerebellar ataxia and somnolence include overdose of a drug or medication. Use of alcohol or of a medication such as phenytoin, valproic acid, or a benzodiazepine could cause these symptoms, but none was reported by the family or documented in the SNF records. Wernicke encephalopathy is rare and is not well supported by the patient’s presentation but should be considered, as it can be easily treated with thiamine. Meningoencephalitis affecting the cerebellum remains possible, but infection is less likely. Both electroencephalogram and brain MRI should be performed, with a specific interest in possible cerebellar lesions. If the MRI is unremarkable, a lumbar puncture should be performed to assess opening pressure and investigate for infectious etiologies.

MRI of the brain showed age-related volume loss and nonspecific white matter disease without acute changes. Lack of a clear explanation for the neurologic findings led to suspicion of a medication side effect. Ertapenem was stopped on admission because it has been reported to rarely cause altered mental status. IV moxifloxacin was started for the osteomyelitis. Over the next 2 days, symptoms began resolving; within 24 hours of ertapenem discontinuation, the patient was awake, alert, and talkative. On examination, he remained dysarthric but was no longer dysmetric. Within 48 hours, the dysarthria was completely resolved, and he was returned to the SNF to complete a course of IV moxifloxacin.

DISCUSSION

Among elderly patients presenting to the ED, altered mental status is a common complaint, accounting for 10% to 30% of visits.1 Medications are a common cause of altered mental status among the elderly and are responsible for 40% of delirium cases.1 The risk of adverse drug events (ADEs) rises with the number of medications prescribed.1-3 Among patients older than 60 years, the prevalence of polypharmacy (defined as taking >5 prescription medications) increased from roughly 20% in 1999 to 40% in 2012.4,5 In the ambulatory setting, the most common ADEs (25%) are central nervous system (CNS) symptoms, including dizziness, sleep disturbances, and mood changes.6 A medication effect should be suspected in any elderly patient presenting with altered mental state.

The present patient developed a constellation of neurologic symptoms after starting ertapenem, a carbapenem antibiotic; carbapenems are a class of medications that can cause CNS ADEs. Carbapenems are renally cleared, and doses must be adjusted for acute or chronic changes in kidney function. Carbapenems are associated with increased risk of seizure; the incidence of seizure with ertapenem is 0.2%.7,8 Food and Drug Administration postmarketing reports have noted ertapenem can cause somnolence and dyskinesia,9 and several case reports have described ertapenem-associated CNS side effects, including psychosis and encephalopathy.10-13 Symptoms and examination findings can include confusion, disorientation, garbled speech, dysphagia, hallucinations, miosis, myoclonus, tremor, and agitation.10-13 Although reports of dysmetria and dysdiadochokinesia are lacking, suspicion of an ADE in this case was heightened by the timing of the exposure and the absence of alternative infectious, metabolic, and vascular explanations for bilateral cerebellar dysfunction.

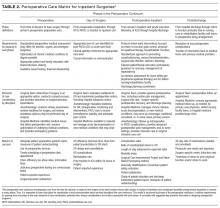

The Naranjo Adverse Drug Reaction (ADR) scale may help clinicians differentiate ADEs from other etiologies of symptoms. It uses 10 weighted questions (Table) to estimate the probability that an adverse clinical event is caused by a drug reaction.14 The present case was assigned 1 point for prior reports of neurologic ADEs associated with ertapenem, 2 for the temporal association, 1 for resolution after medication withdrawal, 2 for lack of alternative causes, and 1 for objective evidence of neurologic dysfunction—for a total of 7 points, indicating ertapenem was probably the cause of the patient’s neurologic symptoms. Of 4 prior cases in which carbapenem toxicity was suspected and the Naranjo scale was used, 3 found a probable relationship, and the fourth a highly probable one.10,12 Confusion, disorientation, hallucinations, tangential thoughts, and garbled speech were reported in the 3 probable cases of ADEs. In the highly probable case, tangential thoughts, garbled speech, and miosis were noted on examination, and these findings returned after re-exposure to ertapenem. Of note, these ADEs occurred in patients with normal and abnormal renal function, and in middle-aged and elderly patients.10,11,13
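The Naranjo tally described above can be reproduced in a few lines. This sketch assumes the item weights and cutoffs of the published scale (reference 14): each of the 10 questions is answered yes, no, or do not know; "do not know" scores 0; a total of 9 or more is classed as definite, 5 to 8 as probable, 1 to 4 as possible, and 0 or less as doubtful. The question wording is abbreviated. The items the case does not address (rechallenge, placebo, drug levels, dose response, prior exposure) are entered as "do not know", an assumption consistent with the 7-point total reported above.

```python
from enum import Enum
from typing import Sequence


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "do not know"


# (abbreviated question, points if yes, points if no); "do not know" scores 0.
NARANJO_ITEMS = [
    ("Previous conclusive reports of this reaction?", 1, 0),
    ("Event appeared after the suspected drug was given?", 2, -1),
    ("Improved when the drug was stopped or an antagonist given?", 1, 0),
    ("Reappeared when the drug was readministered?", 2, -1),
    ("Alternative causes that alone could explain the reaction?", -1, 2),
    ("Reappeared when a placebo was given?", -1, 1),
    ("Drug detected in blood or other fluids at toxic levels?", 1, 0),
    ("More severe at higher dose or less severe at lower dose?", 1, 0),
    ("Similar reaction to the same or similar drug before?", 1, 0),
    ("Confirmed by any objective evidence?", 1, 0),
]


def naranjo_score(answers: Sequence[Answer]) -> int:
    """Sum the weighted answers to the 10 Naranjo questions."""
    if len(answers) != len(NARANJO_ITEMS):
        raise ValueError("expected one answer per Naranjo question")
    total = 0
    for (_question, yes_points, no_points), answer in zip(NARANJO_ITEMS, answers):
        if answer is Answer.YES:
            total += yes_points
        elif answer is Answer.NO:
            total += no_points
    return total


def naranjo_category(score: int) -> str:
    """Map a total score to the scale's probability category."""
    if score >= 9:
        return "definite"
    if score >= 5:
        return "probable"
    if score >= 1:
        return "possible"
    return "doubtful"


Y, N, U = Answer.YES, Answer.NO, Answer.UNKNOWN
# Ertapenem case: prior reports (1), timing (2), improvement on withdrawal (1),
# no alternative cause (2), objective neurologic findings (1).
case = [Y, Y, Y, U, N, U, U, U, U, Y]
score = naranjo_score(case)
print(score, naranjo_category(score))  # 7 probable
```

Note that the weights are asymmetric: answering "no" to item 5 (alternative causes) adds 2 points, which is why excluding other explanations carries as much weight as the temporal association.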

Most medications have a long list of low-frequency and rarely reported adverse effects. The present case reminds clinicians to consider rare adverse effects, or variants of previously reported adverse effects, in a patient with unexplained symptoms. When estimating the probability that a drug is harming a patient, a validated tool such as the Naranjo scale helps answer the question, What are the chances?

KEY TEACHING POINTS

- Clinicians should include rare adverse effects of common medications in the differential diagnosis.

- The Naranjo score is a validated tool that can be used to systematically assess the probability of an adverse drug effect at the bedside.

- The presentation of ertapenem-associated neurotoxicity may include features of bilateral cerebellar dysfunction.

Disclosure

Nothing to report.

1. Inouye SK, Fearing MA, Marcantonio ER. Delirium. In: Halter JB, Ouslander JG, Tinetti ME, Studenski S, High KP, Asthana S, eds. Hazzard’s Geriatric Medicine and Gerontology. 6th ed. New York, NY: McGraw-Hill; 2009.

2. Sarkar U, López A, Maselli JH, Gonzales R. Adverse drug events in U.S. adult ambulatory medical care. Health Serv Res. 2011;46(5):1517-1533.

3. Chrischilles E, Rubenstein L, Van Gilder R, Voelker M, Wright K, Wallace R. Risk factors for adverse drug events in older adults with mobility limitations in the community setting. J Am Geriatr Soc. 2007;55(1):29-34.

4. Kaufman DW, Kelly JP, Rosenberg L, Anderson TE, Mitchell AA. Recent patterns of medication use in the ambulatory adult population of the United States: the Slone survey. JAMA. 2002;287(3):337-344.

5. Kantor ED, Rehm CD, Haas JS, Chan AT, Giovannucci EL. Trends in prescription drug use among adults in the United States from 1999-2012. JAMA. 2015;314(17):1818-1831.

6. Thomsen LA, Winterstein AG, Søndergaard B, Haugbølle LS, Melander A. Systematic review of the incidence and characteristics of preventable adverse drug events in ambulatory care. Ann Pharmacother. 2007;41(9):1411-1426.

7. Zhanel GG, Wiebe R, Dilay L, et al. Comparative review of the carbapenems. Drugs. 2007;67(7):1027-1052.

8. Cannon JP, Lee TA, Clark NM, Setlak P, Grim SA. The risk of seizures among the carbapenems: a meta-analysis. J Antimicrob Chemother. 2014;69(8):2043-2055.

9. US Food and Drug Administration. Invanz (ertapenem) injection [safety information]. http://www.fda.gov/Safety/MedWatch/SafetyInformation/ucm196605.htm. Published July 2013. Accessed July 6, 2015.

10. Oo Y, Packham D, Yau W, Munckhof WJ. Ertapenem-associated psychosis and encephalopathy. Intern Med J. 2014;44(8):817-819.

11. Wen MJ, Sung CC, Chau T, Lin SH. Acute prolonged neurotoxicity associated with recommended doses of ertapenem in 2 patients with advanced renal failure. Clin Nephrol. 2013;80(6):474-478.

12. Duquaine S, Kitchell E, Tate T, Tannen RC, Wickremasinghe IM. Central nervous system toxicity associated with ertapenem use. Ann Pharmacother. 2011;45(1):e6.

13. Kong V, Beckert L, Awunor-Renner C. A case of beta lactam-induced visual hallucination. N Z Med J. 2009;122(1298):76-77.

14. Naranjo CA, Busto U, Sellers EM, et al. A method for estimating the probability of adverse drug reactions. Clin Pharmacol Ther. 1981;30(2):239-245.

The approach to clinical conundrums by an expert clinician is revealed through the presentation of an actual patient’s case in an approach typical of a morning report. Similarly to patient care, sequential pieces of information are provided to the clinician, who is unfamiliar with the case. The focus is on the thought processes of both the clinical team caring for the patient and the discussant. The bolded text represents the patient’s case. Each paragraph that follows represents the discussant’s thoughts.

Two weeks after undergoing a below-knee amputation (BKA) and 10 days after being discharged to a skilled nursing facility (SNF), an 87-year-old man returned to the emergency department (ED) for evaluation of somnolence and altered mental state. In the ED, he was disoriented and unable to provide a detailed history.

The differential diagnosis for acute confusion and altered consciousness is broad. Initial possibilities include toxic-metabolic abnormalities, medication side effects, and infections. Urinary tract infection, pneumonia, and surgical-site infection should be assessed for first, as they are common causes of postoperative altered mentation. Next to be considered are subclinical seizure, ischemic stroke, and infectious encephalitis or meningitis, along with hemorrhagic stroke and subdural hematoma.

During initial assessment, the clinician should ascertain baseline mental state, the timeline of the change in mental status, recent medication changes, history of substance abuse, and concern about any recent trauma, such as a fall. Performing the physical examination, the clinician should assess vital signs and then focus on identifying localizing neurologic deficits.

First steps in the work-up include a complete metabolic panel, complete blood cell count, urinalysis with culture, and a urine toxicology screen. If the patient has a “toxic” appearance, blood cultures should be obtained. An electrocardiogram should be used to screen for drug toxicity or evidence of cardiac ischemia. If laboratory test results do not reveal an obvious infectious or metabolic cause, a noncontrast computed tomography (CT) of the head should be obtained. In terms of early interventions, a low glucose level should be treated with thiamine and then glucose, and naloxone should be given if there is any suspicion of narcotic overdose.

More history was obtained from the patient’s records. The BKA was performed to address a nonhealing transmetatarsal amputation. Two months earlier, the transmetatarsal amputation had been performed as treatment for a diabetic forefoot ulcer with chronic osteomyelitis. The patient’s post-BKA course was uncomplicated. He was started on intravenous (IV) ertapenem on postoperative day 1, and on postoperative day 4 was discharged to the SNF to complete a 6-week course of antibiotics for osteomyelitis. Past medical history included paroxysmal atrial fibrillation, coronary artery disease, congestive heart failure (ejection fraction 40%), and type 2 diabetes mellitus. Medications given at the SNF were oxycodone, acetaminophen, cholecalciferol, melatonin, digoxin, ondansetron, furosemide, gabapentin, correctional insulin, tamsulosin, senna, docusate, warfarin, and metoprolol. While there, the patient’s family expressed concern about his diminishing “mental ability.” They reported he had been fully alert and oriented on arrival at the SNF, and living independently with his wife before the BKA. Then, a week before the ED presentation, he started becoming more somnolent and forgetful. The gabapentin and oxycodone dosages were reduced to minimize their sedative effects, but he showed no improvement. At the SNF, a somnolence work-up was not performed.

Several of the patient’s medications can contribute to altered mental state. Ertapenem can cause seizures as well as profound mental status changes, though these are more likely in the setting of poor renal function. The mental status changes were noticed about a week into the patient’s course of antibiotics, which suggests a possible temporal correlation with the initiation of ertapenem. An electroencephalogram is required to diagnose nonconvulsive seizure activity. Narcotic overdose should still be considered, despite the recent reduction in oxycodone dosage. Digoxin toxicity, though less likely when the dose is stable and there are no changes in renal function, can cause a confused state. Concurrent use of furosemide could potentiate the toxic effects of digoxin.

Non-medication-related concerns include hypoglycemia, hyperglycemia, and, given his history of atrial fibrillation, cardioembolic stroke. Although generalized confusion is not a common manifestation of stroke, a thalamic stroke can alter mental state but be easily missed if not specifically considered. Additional lab work-up should include a digoxin level and, since he is taking warfarin, a prothrombin time/international normalized ratio (PT/INR). If the initial laboratory studies and head CT do not explain the altered mental state, magnetic resonance imaging (MRI) of the brain should be performed to further assess for stroke.

On physical examination in the ED, the patient was resting comfortably with eyes closed, and arousing to voice. He obeyed commands and participated in the examination. His Glasgow Coma Scale score was 13; temperature, 36.8°C, heart rate, 80 beats per minute; respiratory rate, 16 breaths per minute; blood pressure, 90/57 mm Hg; and 100% peripheral capillary oxygen saturation while breathing ambient air. He appeared well developed. His heart rhythm was irregularly irregular, without murmurs, rubs, or gallops. Respiratory and abdominal examination findings were normal. The left BKA incision was well approximated, with no drainage, dehiscence, fluctuance, or erythema. On neurologic examination, the patient was intermittently oriented only to self. Pupils were equal, round, and reactive to light; extraocular movements were intact; face was symmetric; tongue was midline; sensation on face was equal bilaterally; and shoulder shrug was intact. Strength was 5/5 and symmetric in the elbow and hip and 5/5 in the right knee and ankle (not tested on left because of BKA). Deep tendon reflexes were 3+ and symmetrical at the biceps, brachioradialis, and triceps tendons and 3+ in the right patellar and Achilles tendons. Sensation was intact and symmetrical in the upper and lower extremities. The patient’s speech was slow and slurred, and his answers were unrelated to the questions being asked.

The patient’s mental state is best described as lethargic. As he is only intermittently oriented, he meets the criteria for delirium. He is not obtunded or comatose, and his pupils are at least reactive, not pinpoint, so narcotic overdose is less likely. Thalamic stroke remains in the differential diagnosis; despite the seemingly symmetrical sensation examination, hemisensory deficits cannot be definitively ruled out given the patient’s mental state. A rare entity such as carcinomatosis meningitis or another diffuse, infiltrative neoplastic process could be causing his condition. However, because focal deficits other than abnormal speech and diffuse hyperreflexia are absent, toxic, infectious, or metabolic causes are more likely than structural abnormalities. Still possible is a medication toxicity, such as ertapenem toxicity or, less likely, digoxin toxicity. In terms of infectious possibilities, urinary tract infection could certainly present in this fashion, especially if the patient had a somewhat low neurologic reserve at baseline, and hypotension could be secondary to sepsis. Encephalitis or meningitis remains in the differential diagnosis, though the patient appears nontoxic, and therefore a bacterial etiology is very unlikely.

The patient’s hyperreflexia may be an important clue. Although the strength of his reflexes at baseline is unknown, seizures can cause transiently increased reflexes as well as a confused, lethargic mental state. Reflexes can also be increased by a drug overdose that has caused serotonin syndrome. Of the patient’s medications, only ondansetron can cause this reaction. Hyperthyroidism can cause brisk reflexes and confusion, though more typically it causes agitated confusion. A thyroid-stimulating hormone level should be added to the initial laboratory panel.

A complete blood count revealed white blood cell count 11.86 K/uL with neutrophilic predominance and immature granulocytes, hemoglobin 11.5 g/dL, and platelet count 323 K/uL. Serum sodium was 141 mEq/L, potassium 4.2 mEq/L, chloride 103 mEq/L, bicarbonate 30 mEq/L, creatinine 1.14 mg/dL (prior baseline of 0.8-1.0 mg/dL), blood urea nitrogen 26 mg/dL, blood glucose 159 mg/dL, and calcium 9.1 mg/dL. His digoxin level was 1.3 ng/mL (reference range 0.5-1.9 mg/mL) and troponin was undetectable. INR was 2.7 and partial thromboplastin time (PTT) 60 seconds. Vitamin B12 level was 674 pg/mL (reference range >180). A urinalysis had 1+ hyaline casts and was negative for nitrites, leukocyte esterase, blood, and bacteria. An ECG revealed atrial fibrillation with a ventricular rate of 80 beats per minute. A chest radiograph showed clear lung fields. A CT of the head without IV contrast had no evidence of an acute intracranial abnormality. In the ED, 1 liter of IV normal saline was given and blood pressure improved to 127/72 mm Hg.

The head CT does not show intracranial bleeding, and although it is reassuring that the INR is in the therapeutic range, ischemic stroke must remain in the differential diagnosis. Sepsis is less likely because the criteria for systemic inflammatory response syndrome are not met and hypotension was rapidly corrected with IV fluids. The negative urinalysis rules out urinary tract infection. Subclinical seizures remain possible, as does medication-related or other toxicity. A medication overdose, intentional or otherwise, should also be considered.

The patient was admitted to the hospital. On reassessment by the inpatient team, he was oriented only to self, frequently falling asleep, and not recalling earlier conversations when aroused. His speech remained slurred and difficult to understand. Neurologic examination findings were unchanged since the ED examination. On additional cerebellar examination, he had dysmetria with finger-to-nose testing bilaterally and dysdiadochokinesia (impaired rapid alternating movements) of the left hand.

His handedness is not mentioned; the dysdiadochokinesia of the left hand may reflect the patient’s being right-handed, or it may signify a focal cerebellar lesion. The bilateral dysmetria also implicates the cerebellum. Persistent somnolence in the absence of CT findings suggests a toxic-metabolic or infectious process. Toxic-metabolic processes that can cause bilateral cerebellar ataxia and somnolence include drug or medication overdose. Alcohol or a medication such as phenytoin, valproic acid, or a benzodiazepine could cause the symptoms in this case, but the family did not report such use, and the SNF records do not document it. Wernicke encephalopathy is rare and not well supported by the patient’s presentation, but it should be considered because it is easily treated with thiamine. Meningoencephalitis affecting the cerebellum remains possible, but infection is less likely. Both an electroencephalogram and a brain MRI should be performed, with specific attention to possible cerebellar lesions. If the MRI is unremarkable, a lumbar puncture should be performed to measure opening pressure and investigate infectious etiologies.

MRI of the brain showed age-related volume loss and nonspecific white matter disease without acute changes. Because there was no clear explanation for the neurologic findings, a medication side effect was suspected. Ertapenem was stopped on admission because it has rarely been reported to cause altered mental status, and IV moxifloxacin was started for the osteomyelitis. Over the next 2 days, the symptoms resolved: within 24 hours of ertapenem discontinuation, the patient was awake, alert, and talkative. On examination, he remained dysarthric but was no longer dysmetric. Within 48 hours, the dysarthria had completely resolved, and he was returned to the SNF to complete a course of IV moxifloxacin.

DISCUSSION

Among elderly patients presenting to the ED, altered mental status is a common complaint, accounting for 10% to 30% of visits.1 Medications are a common cause of altered mental status among the elderly and are responsible for 40% of delirium cases.1 The risk of adverse drug events (ADEs) rises with the number of medications prescribed.1-3 Among patients older than 60 years, the prevalence of polypharmacy (defined as taking >5 prescription medications) increased from roughly 20% in 1999 to 40% in 2012.4,5 Central nervous system (CNS) symptoms, including dizziness, sleep disturbances, and mood changes, are the most common ADEs in the ambulatory setting, accounting for 25% of them.6 A medication effect should be suspected in any elderly patient presenting with altered mental status.

The present patient developed a constellation of neurologic symptoms after starting ertapenem, a carbapenem antibiotic; carbapenems are a class of medications that can cause CNS ADEs. Because carbapenems are renally cleared, doses must be adjusted for acute or chronic changes in kidney function. Carbapenems are associated with an increased risk of seizure; the incidence of seizure with ertapenem is 0.2%.7,8 Food and Drug Administration postmarketing reports have noted that ertapenem can cause somnolence and dyskinesia,9 and several case reports have described ertapenem-associated CNS side effects, including psychosis and encephalopathy.10-13 Reported symptoms and examination findings include confusion, disorientation, garbled speech, dysphagia, hallucinations, miosis, myoclonus, tremor, and agitation.10-13 Although reports of dysmetria and dysdiadochokinesia are lacking, suspicion of an ADE in this case was heightened by the timing of the exposure and the absence of alternative infectious, metabolic, and vascular explanations for bilateral cerebellar dysfunction.

The Naranjo Adverse Drug Reaction (ADR) scale may help clinicians distinguish ADEs from other causes of symptoms. It uses 10 weighted questions (Table) to estimate the probability that an adverse clinical event is caused by a drug reaction.14 The present case was assigned 1 point for prior reports of neurologic ADEs associated with ertapenem, 2 for the temporal association, 1 for resolution after medication withdrawal, 2 for lack of alternative causes, and 1 for objective evidence of neurologic dysfunction, for a total of 7 points. Because a total of 5 to 8 falls in the scale’s “probable” category, this score indicates that ertapenem was the probable cause of the patient’s neurologic symptoms. Of 4 prior cases in which carbapenem toxicity was suspected and the Naranjo scale was applied, 3 found a probable relationship and the fourth a highly probable one.10,12 Confusion, disorientation, hallucinations, tangential thoughts, and garbled speech were reported in the 3 probable cases. In the highly probable case, tangential thoughts, garbled speech, and miosis were noted on examination, and these findings recurred after re-exposure to ertapenem. Of note, these ADEs occurred in patients with both normal and abnormal renal function, and in both middle-aged and elderly patients.10,11,13
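The Naranjo tally described above is simple weighted arithmetic, so it can be illustrated in a few lines. The Python sketch below is a minimal illustration, not a validated clinical tool. It assumes the item weights and cut-offs of the published scale as commonly reproduced (9 or more definite, 5 to 8 probable, 1 to 4 possible, 0 or less doubtful). The item labels are shortened paraphrases rather than the official wording, and the names `Answer`, `naranjo_score`, and `naranjo_category` are hypothetical. For the present case, it also assumes that every item not mentioned in the text was answered “do not know” (0 points).

```python
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "do not know"


# Paraphrased Naranjo items with (points if yes, points if no).
# "Do not know" always scores 0. Labels are shortened, not the official wording.
NARANJO_ITEMS = [
    ("previous conclusive reports of this reaction", 1, 0),
    ("event appeared after the suspected drug was given", 2, -1),
    ("improved after the drug was stopped or an antagonist given", 1, 0),
    ("reappeared when the drug was readministered", 2, -1),
    ("alternative causes could explain the reaction", -1, 2),
    ("reappeared when a placebo was given", -1, 1),
    ("drug detected in blood or fluids at toxic concentrations", 1, 0),
    ("worse with a higher dose or better with a lower dose", 1, 0),
    ("similar reaction on a previous exposure", 1, 0),
    ("confirmed by objective evidence", 1, 0),
]


def naranjo_score(answers: list) -> int:
    """Sum the weighted points for 10 answers given in item order."""
    if len(answers) != len(NARANJO_ITEMS):
        raise ValueError(f"expected {len(NARANJO_ITEMS)} answers, got {len(answers)}")
    total = 0
    for (_label, yes_points, no_points), answer in zip(NARANJO_ITEMS, answers):
        if answer is Answer.YES:
            total += yes_points
        elif answer is Answer.NO:
            total += no_points
        # Answer.UNKNOWN contributes 0 points.
    return total


def naranjo_category(score: int) -> str:
    """Map a total score to the scale's probability category."""
    if score >= 9:
        return "definite"
    if score >= 5:
        return "probable"
    if score >= 1:
        return "possible"
    return "doubtful"


# The present case: the five items scored in the text, with the remaining
# items ASSUMED to be "do not know" (no rechallenge, placebo, drug level,
# dose change, or prior-exposure data were reported).
Y, N, U = Answer.YES, Answer.NO, Answer.UNKNOWN
case_answers = [Y, Y, Y, U, N, U, U, U, U, Y]
case_score = naranjo_score(case_answers)
print(case_score, naranjo_category(case_score))  # 7 probable
```

Treating unreported items as “do not know” rather than “no” matters here: a “no” on rechallenge would subtract 1 point, whereas a “no” on placebo would add 1.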

Most medications have a long list of low-frequency and rarely reported adverse effects. The present case reminds clinicians to consider rare adverse effects, or variants of previously reported ones, in a patient with unexplained symptoms. Using a validated tool such as the Naranjo scale to estimate the probability that a drug is harming a patient helps answer the question, What are the chances?

KEY TEACHING POINTS

- Clinicians should include rare adverse effects of common medications in the differential diagnosis.

- The Naranjo score is a validated tool that can be used to systematically assess the probability of an adverse drug effect at the bedside.

- The presentation of ertapenem-associated neurotoxicity may include features of bilateral cerebellar dysfunction.

Disclosure

Nothing to report.

The approach to clinical conundrums by an expert clinician is revealed through the presentation of an actual patient’s case in a format typical of a morning report. As in patient care, sequential pieces of information are provided to the clinician, who is unfamiliar with the case. The focus is on the thought processes of both the clinical team caring for the patient and the discussant. The bolded text represents the patient’s case. Each paragraph that follows represents the discussant’s thoughts.

Two weeks after undergoing a below-knee amputation (BKA) and 10 days after being discharged to a skilled nursing facility (SNF), an 87-year-old man returned to the emergency department (ED) for evaluation of somnolence and altered mental state. In the ED, he was disoriented and unable to provide a detailed history.

The differential diagnosis for acute confusion and altered consciousness is broad. Initial possibilities include toxic-metabolic abnormalities, medication side effects, and infections. Urinary tract infection, pneumonia, and surgical-site infection should be assessed for first, as they are common causes of postoperative altered mentation. Next to be considered are subclinical seizure, ischemic stroke, and infectious encephalitis or meningitis, along with hemorrhagic stroke and subdural hematoma.

During the initial assessment, the clinician should ascertain the patient’s baseline mental state, the timeline of the change in mental status, recent medication changes, any history of substance abuse, and any recent trauma, such as a fall. On physical examination, the clinician should assess vital signs and then focus on identifying localizing neurologic deficits.

First steps in the work-up include a complete metabolic panel, complete blood cell count, urinalysis with culture, and a urine toxicology screen. If the patient has a “toxic” appearance, blood cultures should be obtained. An electrocardiogram should be used to screen for drug toxicity or evidence of cardiac ischemia. If laboratory test results do not reveal an obvious infectious or metabolic cause, a noncontrast computed tomography (CT) of the head should be obtained. In terms of early interventions, a low glucose level should be treated with thiamine and then glucose, and naloxone should be given if there is any suspicion of narcotic overdose.

More history was obtained from the patient’s records. The BKA was performed to address a nonhealing transmetatarsal amputation, which had been performed 2 months earlier as treatment for a diabetic forefoot ulcer with chronic osteomyelitis. The patient’s post-BKA course was uncomplicated. He was started on intravenous (IV) ertapenem on postoperative day 1 and on postoperative day 4 was discharged to the SNF to complete a 6-week course of antibiotics for osteomyelitis. Past medical history included paroxysmal atrial fibrillation, coronary artery disease, congestive heart failure (ejection fraction 40%), and type 2 diabetes mellitus. Medications given at the SNF were oxycodone, acetaminophen, cholecalciferol, melatonin, digoxin, ondansetron, furosemide, gabapentin, correctional insulin, tamsulosin, senna, docusate, warfarin, and metoprolol. While he was at the SNF, his family expressed concern about his diminishing “mental ability.” They reported that he had been living independently with his wife before the BKA and had been fully alert and oriented on arrival at the SNF. Then, a week before the ED presentation, he became increasingly somnolent and forgetful. The gabapentin and oxycodone dosages were reduced to minimize their sedative effects, but he showed no improvement. No work-up for somnolence was performed at the SNF.

Several of the patient’s medications can contribute to altered mental state. Ertapenem can cause seizures as well as profound mental status changes, though these are more likely in the setting of poor renal function. The mental status changes were noticed about a week into the patient’s course of antibiotics, which suggests a possible temporal correlation with the initiation of ertapenem. An electroencephalogram is required to diagnose nonconvulsive seizure activity. Narcotic overdose should still be considered, despite the recent reduction in oxycodone dosage. Digoxin toxicity, though less likely when the dose is stable and there are no changes in renal function, can cause a confused state. Concurrent use of furosemide could potentiate the toxic effects of digoxin.

Non-medication-related concerns include hypoglycemia, hyperglycemia, and, given his history of atrial fibrillation, cardioembolic stroke. Although generalized confusion is not a common manifestation of stroke, a thalamic stroke can alter mental state but be easily missed if not specifically considered. Additional lab work-up should include a digoxin level and, since he is taking warfarin, a prothrombin time/international normalized ratio (PT/INR). If the initial laboratory studies and head CT do not explain the altered mental state, magnetic resonance imaging (MRI) of the brain should be performed to further assess for stroke.

On physical examination in the ED, the patient was resting comfortably with eyes closed and was arousable to voice. He obeyed commands and participated in the examination. His Glasgow Coma Scale score was 13; temperature, 36.8°C; heart rate, 80 beats per minute; respiratory rate, 16 breaths per minute; blood pressure, 90/57 mm Hg; and peripheral capillary oxygen saturation, 100% while breathing ambient air. He appeared well developed. His heart rhythm was irregularly irregular, without murmurs, rubs, or gallops. Respiratory and abdominal examination findings were normal. The left BKA incision was well approximated, with no drainage, dehiscence, fluctuance, or erythema. On neurologic examination, the patient was intermittently oriented only to self. Pupils were equal, round, and reactive to light; extraocular movements were intact; face was symmetric; tongue was midline; facial sensation was equal bilaterally; and shoulder shrug was intact. Strength was 5/5 and symmetric at the elbows and hips and 5/5 at the right knee and ankle (not tested on the left because of the BKA). Deep tendon reflexes were 3+ and symmetrical at the biceps, brachioradialis, and triceps tendons and 3+ at the right patellar and Achilles tendons. Sensation was intact and symmetrical in the upper and lower extremities. The patient’s speech was slow and slurred, and his answers were unrelated to the questions being asked.
