Treatment of HCV in special populations

MAUI, HAWAII – Treatment of acute rather than chronic hepatitis C infection is well worth considering in selected circumstances, Norah Terrault, MD, asserted at the Gastroenterology Updates, IBD, Liver Disease meeting.

This is not at present guideline-recommended therapy. Current American Association for the Study of Liver Diseases/Infectious Diseases Society of America guidance states that while there is emerging data to support treatment of acute hepatitis C, the evidence isn’t yet sufficiently robust to support a particular regimen or duration. The guidelines currently recommend waiting 6 months to see if the acute infection resolves spontaneously, as happens in a minority of cases, or becomes chronic, at which point guideline-directed treatment begins. But Dr. Terrault believes persuasive evidence to back treatment of acute hepatitis C virus (HCV) infection is forthcoming, and she noted that the guidelines leave the door ajar by stating, “There are instances wherein a clinician may decide that the benefits of early treatment outweigh waiting for possible spontaneous clearance.”

Treatment of acute HCV

Dr. Terrault deems treatment of acute HCV warranted in circumstances in which there is significant danger of transmission from the acutely infected individual to others. Examples include health care providers with a needlestick HCV infection, injecting drug users, and men with acute HCV/HIV coinfection. She also treats acute HCV in patients with underlying chronic liver disease.

“Clearly, I wouldn’t want those individuals to have any worsening of their liver function, so I would treat them acutely,” explained Dr. Terrault, professor of medicine and director of the Viral Hepatitis Center at the University of California, San Francisco.

She cited as particularly impressive the results of the SWIFT-C trial presented by Suzanna Naggie, MD, of Duke University, Durham, N.C., at the 2017 AASLD annual meeting. In this modest-size, National Institutes of Health–sponsored, multicenter study of HIV-infected men with acute HCV coinfection, the sustained virologic response (SVR) rate with 8 weeks of ledipasvir/sofosbuvir (Harvoni) was 100%, regardless of their baseline HCV RNA level.

“I think this is remarkable. They cleared virus quite late and yet they went on to achieve HCV eradication. It highlights how little we really know about the treatment of individuals in this phase and that relying on HCV RNA levels may not tell the whole story. I think this is important data to suggest maybe when we treat acute hepatitis C we can use a shorter duration of treatment for that population. There are also other small studies testing 8 weeks of treatment in non–HIV-infected individuals with acute hepatitis C in which they also showed very high SVR rates,” the hepatologist said.

Copanelist Steven L. Flamm, MD, said that when he encounters a patient with acute HCV he, too, is prepared to offer treatment – he finds the available supporting evidence sufficiently compelling – but he often encounters a problem.

“Sometimes I’m blocked by insurance companies because this isn’t officially approved,” noted Dr. Flamm, professor of medicine and chief of the hepatology program at Northwestern University, Chicago.

“You’re right,” Dr. Terrault commented, “we have to make a pretty compelling argument to the insurer as to why we’re treating. But ‘treat to prevent transmission to others’ usually is successful in our hands.”

HCV in patients with end-stage renal disease

The product labeling for sofosbuvir (Sovaldi) says the drug’s safety and efficacy haven’t been established in patients with severe renal impairment or end-stage renal disease. However, a small multicenter study presented at the 2017 AASLD meeting demonstrated that 12 weeks of ledipasvir/sofosbuvir achieved a 100% SVR rate in patients with genotype 1 HCV and severe renal impairment, including some on dialysis, with no clinically meaningful change in estimated glomerular filtration rate or any signal of cardiac arrhythmia.

“The serum drug levels went up significantly, but reassuringly they saw no meaningful safety signals,” according to Dr. Terrault. “This, I think, is initial reassuring information that we were all very much waiting for.”

Still, as the AASLD/IDSA guidelines point out, ledipasvir/sofosbuvir is not a recommended option for HCV treatment in end-stage renal disease.

“In general, I think glecaprevir/pibrentasvir [Mavyret] has become the go-to drug for patients who have renal dysfunction because it’s a pangenotypic regimen, it doesn’t require use of sofosbuvir, and there’s no dose adjustment. But I would say you could encounter situations where you might want to use sofosbuvir, and for me that situation is typically those direct-acting antiviral-experienced patients who have failed other therapies and you really need to use sofosbuvir/velpatasvir/voxilaprevir [Vosevi] as your last or rescue therapy,” the hepatologist continued.

HCV in liver transplant recipients

“In the years before the direct-acting antivirals, treating transplant patients was always very challenging,” Dr. Terrault recalled. “They had very low response rates to therapy. That’s all gone away. Now we can say that liver transplant recipients who require treatment have response rates that are the same as in individuals who have not had a transplant. These patients are now being treated earlier and earlier after their transplant because you can do it safely.”

She pointed to a study presented at the 2017 AASLD meeting by Kosh Agarwal, MD, of King’s College London. It involved 79 adults with recurrent genotypes 1-4 HCV infection post–liver transplant who were treated with sofosbuvir/velpatasvir (Epclusa) for 12 weeks with a total SVR rate of 96%.

“The nice thing about sofosbuvir/velpatasvir is there are no drug-drug interactions with immunosuppressive drugs. Now it’s very easy to take care of these patients. The SVR rates are excellent,” Dr. Terrault observed.

The other combination that’s been studied specifically in liver transplant recipients, and in kidney transplant recipients as well, is glecaprevir/pibrentasvir. In the MAGELLAN-2 study of 100 such patients with genotypes 1-6 HCV, the SVR rate was 99% with no drug-related adverse events leading to discontinuation.

Persons who inject drugs

The Centers for Disease Control and Prevention and the World Health Organization want HCV eradicated by 2030. If that’s going to happen, physicians will have to become more comfortable treating the disease in people who inject drugs, a population with a high prevalence of HCV. Several studies have now shown that very high SVR rates can be achieved with direct-acting antiviral regimens as short as 8 weeks in these individuals, even if they are concurrently injecting drugs.

“There is increasing evidence that we should be doing more treatment in persons who inject drugs. Many of these individuals have very early disease and their response rates are excellent,” according to Dr. Terrault.

Moreover, their reinfection rates “are not outrageous,” she said: 1% or less in individuals who stopped injecting drugs decades prior to anti-HCV treatment, 5%-10% over the course of 3-5 years in those who continue injecting drugs after achieving SVR, and about 2% in those on methadone substitution therapy.

“These are very acceptable levels of reinfection if our goal is to move toward elimination of hepatitis C in this population,” she said.

She reported having no financial conflicts regarding her presentation.

EXPERT ANALYSIS FROM GUILD 2018

Inhaled nitrous oxide for labor analgesia: Pearls from clinical experience

Nitrous oxide, a colorless, odorless gas, has long been used for labor analgesia in many countries, including the United Kingdom, Canada, throughout Europe, Australia, and New Zealand. Recently, interest in its use in the United States has increased, since the US Food and Drug Administration (FDA) approval in 2012 of simple devices for administration of nitrous oxide in a variety of locations. Being able to offer an alternative technique, other than parenteral opioids, for women who may not wish to or who cannot have regional analgesia, and for women who have delivered and need analgesia for postdelivery repair, conveys significant benefits. Risks to its use are very low, although the quality of pain relief is inferior to that offered by regional analgesic techniques. Our experience with its use since 2014 at Brigham and Women’s Hospital in Boston, Massachusetts, corroborates that reported in the literature and leads us to continue offering inhaled nitrous oxide and advocating that others do as well.1–7 When using nitrous oxide in your labor and delivery unit, or if considering its use, keep the following points in mind.

A successful inhaled nitrous oxide program requires proper patient selection

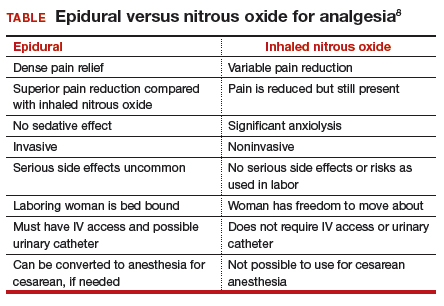

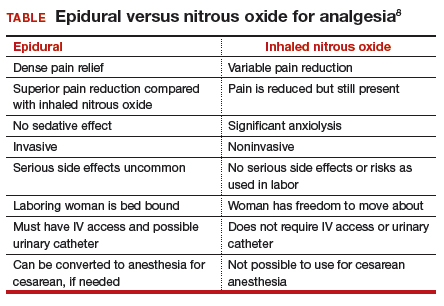

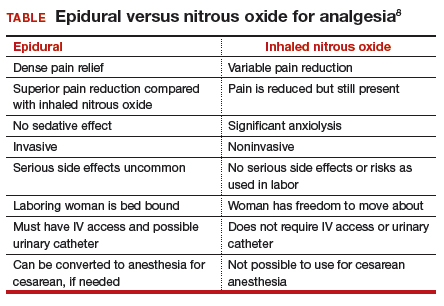

Inhaled nitrous oxide is not an epidural (TABLE).8 The pain relief is clearly inferior to that of an epidural. Inhaled nitrous oxide will not replace epidurals or even have any effect on the epidural rate at a particular institution.6 However, inhaled nitrous oxide for labor analgesia has a long track record of safety, albeit with moderate efficacy for selected patients, in many countries around the world. It is a valuable addition to the options we can offer patients:

- who are poor responders to opioid medication or who have high opioid tolerance

- with certain disorders of coagulation

- with chronic pain or anxiety

- who for other reasons need to consider alternatives or adjuncts to neuraxial analgesia.

Although it is important to be realistic regarding the expectations of analgesia quality offered by this agent,7 compared with other agents we have tried, it has fewer adverse effects, is economically reasonable, and has no proven impact on neonatal outcomes.

No significant complications with inhaled nitrous oxide have been reported

Systematic reviews did not report any significant complications to either mother or newborn.1,2 Our personal experiences corroborate this, as no complications have been associated with its frequent use at Brigham and Women’s Hospital. Reported adverse effects are mild. The incidence of nausea is 13%, dizziness is 3% to 5%, and drowsiness is 4%; these rates are hard to detect over the baseline rates of those side effects associated with labor and delivery alone.1 Many other centers have now adopted the use of this agent, with several hundred locations now offering inhaled nitrous oxide for labor analgesia in the United States.

Practical use of inhaled nitrous oxide is relatively simple

Several vendors offer portable, user-friendly, cost-effective equipment that is appropriate for labor and delivery use. All devices are structured in demand-valve modality, meaning that the patient must initiate a breath in order to open a valve that allows gas to flow. Cessation of the inspiratory effort closes the valve, thus preventing the free flow of gas into the ambient atmosphere of the room. The devices generally include a tank with nitrous oxide as well as a source of oxygen. Most devices designed for labor and delivery provide a fixed mixture of 50% nitrous oxide and 50% oxygen, with fail-safe mechanisms to allow increased oxygen delivery in the event of failure or depletion of the nitrous supply. All modern, FDA-approved devices include effective scavenging systems, such that expired gases are vented outside (generally via room suction), which prevents occupational exposure to low levels of nitrous oxide.

Inhaled nitrous oxide for labor pain must be patient controlled

An essential feature of the use of inhaled nitrous oxide for labor analgesia is that it must be considered a patient-controlled system. Patients have an option to use either a mask or a mouthpiece, according to their preferences and comfort. The patient must hold the mask or mouthpiece herself; it is neither appropriate nor safe for anyone else, such as a nurse, family member, or labor support personnel, to assist with this task.

Some coordination with the nurse is essential for optimal timing of administration. Onset of a therapeutic level of pain relief is generally 30 to 60 seconds after inhalation has begun, with rapid resolution after cessation of the inhalation. The patient should thus initiate the inspiration of the gas at the earliest signs of onset of a contraction, so as to achieve maximal analgesia at the peak of the contraction. Waiting until the peak of the contraction to initiate inhalation of the nitrous oxide will not provide effective analgesia, yet will result in sedation after the contraction has ended.

No oversight by an anesthesiologist is required

The Centers for Medicare and Medicaid Services (CMS) produced a clarification statement for definitions of “anesthesia services” (42 CFR 482.52)9 that may be offered by a hospital, based on American Society of Anesthesiologists (ASA) definitions. CMS, consistent with ASA guidelines, does not define moderate or conscious sedation as “anesthesia”; thus, direct oversight by an anesthesiologist is not required. Furthermore, the definition of “minimal sedation,” which is where 50% concentration delivery of inhaled nitrous oxide would be categorized, also does not meet this requirement by CMS.

Women who use inhaled nitrous oxide for labor pain typically are satisfied with its use

The use of analog pain scale measurements may not be appropriate in a setting where dissociation from pain might be the primary beneficial effect. Measurements of maternal satisfaction with their analgesic experience support this. The experiences at Vanderbilt University and Brigham and Women’s Hospital show that, while pain relief is limited, as reported in systematic reviews, maternal satisfaction scores for labor analgesia are not different among women who receive inhaled nitrous oxide analgesia, those who receive neuraxial analgesia, and those who transition from nitrous oxide to neuraxial analgesia. In fact, published evidence supports extraordinarily high satisfaction in women who plan to use inhaled nitrous oxide, and actually successfully do so, despite only limited degrees of pain relief.10,11 Work to identify the characteristics of women who report success with inhaled nitrous oxide use needs to be performed so that patients can be better selected and informed when making analgesic choices.

Animal research on inhaled nitrous oxide may not translate well to human neonates

A very recent task force convened by the European Society of Anaesthesiology (ESA) addressed some of the potential concerns about inhaled nitrous oxide analgesia.12 Per their report:

“the potential teratogenic effect of N2O observed in experimental models cannot be extrapolated to humans. There is a lack of evidence for an association between N2O and reproductive toxicity. The incidence of health hazards and abortion was not shown to be higher in women exposed to, or spouses of men exposed to N2O than those who were not so exposed. Moreover, the incidence of congenital malformations was not higher among women who received N2O for anaesthesia during the first trimester of pregnancy nor during anaesthesia management for cervical cerclage, nor for surgery in the first two trimesters of pregnancy.”

There is a theoretical concern of an increase in neuronal apoptosis in neonates, demonstrated in laboratory animals in anesthetic concentrations, but the human relevance of this is not clear, since the data on animal developmental neurotoxicity is generally combined with data wherein potent inhalational anesthetic agents were also used, not nitrous oxide alone.13 The analgesic doses and time of exposure of inhaled nitrous oxide administered for labor analgesia are well below those required for these changes, as subanesthetic doses are associated with minimal changes, if any, in laboratory animals.

No labor analgesic is without the potential for fetal effects, and alternative labor analgesics such as systemic opioids in higher doses also may have potential adverse effects on the fetus, such as fetal heart rate effects or early tone, alertness, and breastfeeding difficulties. The low solubility and short half-life of inhaled nitrous oxide contribute to low absorption by tissues, thus contributing to the safety of this agent. Nitrous oxide via inhalation for sedation during elective cesarean has been reported to show no adverse effects on neonatal Apgar scores.14

Modern equipment keeps occupational exposure to nitrous oxide safe

One retrospective review of women exposed to high concentrations of inhaled nitrous oxide reported reduced fertility.15 However, the only effects on fertility were seen when nitrous oxide was used in high concentrations and without scavenging equipment. Moreover, that study examined dental offices, where nitrous oxide was free flowing during procedures. That is quite a different setting from labor, where inhalation is intermittent and delivered by demand valve using modern, FDA-approved equipment with scavenging devices. Per the recent ESA task force12:

“Members of the task force agreed that, despite theoretical concerns and laboratory data, there is no evidence indicating that the use of N2O in a clinically relevant setting would increase health risk in patients or providers exposed to this drug. With the ubiquitous availability of scavenging systems in the modern operating room, the health concern for medical staff has decreased dramatically. Properly operating scavenging systems reduce N2O concentrations by more than 70%, thereby efficiently keeping ambient N2O levels well below official limits.”

The ESA task force concludes: “An extensive amount of clinical evidence indicates that N2O can be used safely for procedural pain management, for labour pain, and for anxiolysis and sedation in dentistry.”12

Two important reminders

Inhaled nitrous oxide has been a central component of the labor pain relief menu in most of the rest of the world for decades, and its safety record is impeccable. American maternity units now have extensive and growing experience with this agent. Remember 2 critical points: (1) patient selection is key, and (2) the analgesia is not like that provided by regional anesthetic techniques such as an epidural.

1. Likis FE, Andrews JC, Collins MR, et al. Nitrous oxide for the management of labor pain: a systematic review. Anesth Analg. 2014;118(1):153-167.

2. Rosen MA. Nitrous oxide for relief of labor pain: a systematic review. Am J Obstet Gynecol. 2002;186(5 suppl Nature):S110-S126.

3. Angle P, Landy CK, Charles C. Phase 1 development of an index to measure the quality of neuraxial labour analgesia: exploring the perspectives of childbearing women. Can J Anaesth. 2010;57(5):468-478.

4. Migliaccio L, Lawton R, Leeman L, Holbrook A. Initiating intrapartum nitrous oxide in an academic hospital: considerations and challenges. J Midwifery Womens Health. 2017;62(3):358-362.

5. Markley JC, Rollins MD. Non-neuraxial labor analgesia: options. Clin Obstet Gynecol. 2017;60(2):350-364.

6. Bobb LE, Farber MK, McGovern C, Camann W. Does nitrous oxide labor analgesia influence the pattern of neuraxial analgesia usage? An impact study at an academic medical center. J Clin Anesth. 2016;35:54-57.

7. Sutton CD, Butwick AJ, Riley ET, Carvalho B. Nitrous oxide for labor analgesia: utilization and predictors of conversion to neuraxial analgesia. J Clin Anesth. 2017;40:40-45.

8. Collins MR, Starr SA, Bishop JT, Baysinger CL. Nitrous oxide for labor analgesia: expanding analgesic options for women in the United States. Rev Obstet Gynecol. 2012;5(3-4):e126-e131.

9. 42 CFR 482.52 - Condition of participation: Anesthesia services. US Government Publishing Office website. https://www.gpo.gov/fdsys/granule/CFR-2011-title42-vol5/CFR-2011-title42-vol5-sec482-52. Accessed April 16, 2018.

10. Richardson MG, Lopez BM, Baysinger CL, Shotwell MS, Chestnut DH. Nitrous oxide during labor: maternal satisfaction does not depend exclusively on analgesic effectiveness. Anesth Analg. 2017;124(2):548-553.

11. Camann W. Pain, pain relief, satisfaction, and excellence in obstetric anesthesia: a surprisingly complex relationship. Anesth Analg. 2017;124(2):383-385.

12. European Society of Anaesthesiology Task Force on Use of Nitrous Oxide in Clinical Anaesthetic Practice. The current place of nitrous oxide in clinical practice: an expert opinion-based task force consensus statement of the European Society of Anaesthesiology. Eur J Anaesthesiol. 2015;32(8):517-520.

13. Rappaport B, Mellon RD, Simone A, Woodcock J. Defining safe use of anesthesia in children. N Engl J Med. 2011;364(15):1387-1390.

14. Vallejo MC, Phelps AL, Shepherd CJ, Kaul B, Mandell GL, Ramanathan S. Nitrous oxide anxiolysis for elective cesarean section. J Clin Anesth. 2005;17(7):543-548.

15. Rowland AS, Baird DD, Weinberg CR, et al. Reduced fertility among women employed as dental assistants exposed to high levels of nitrous oxide. N Engl J Med. 1992;327(14):993-997.

What’s Eating You? Ixodes Tick and Related Diseases, Part 3: Coinfection and Tick-Bite Prevention

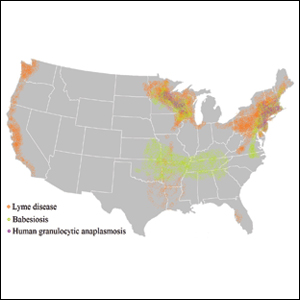

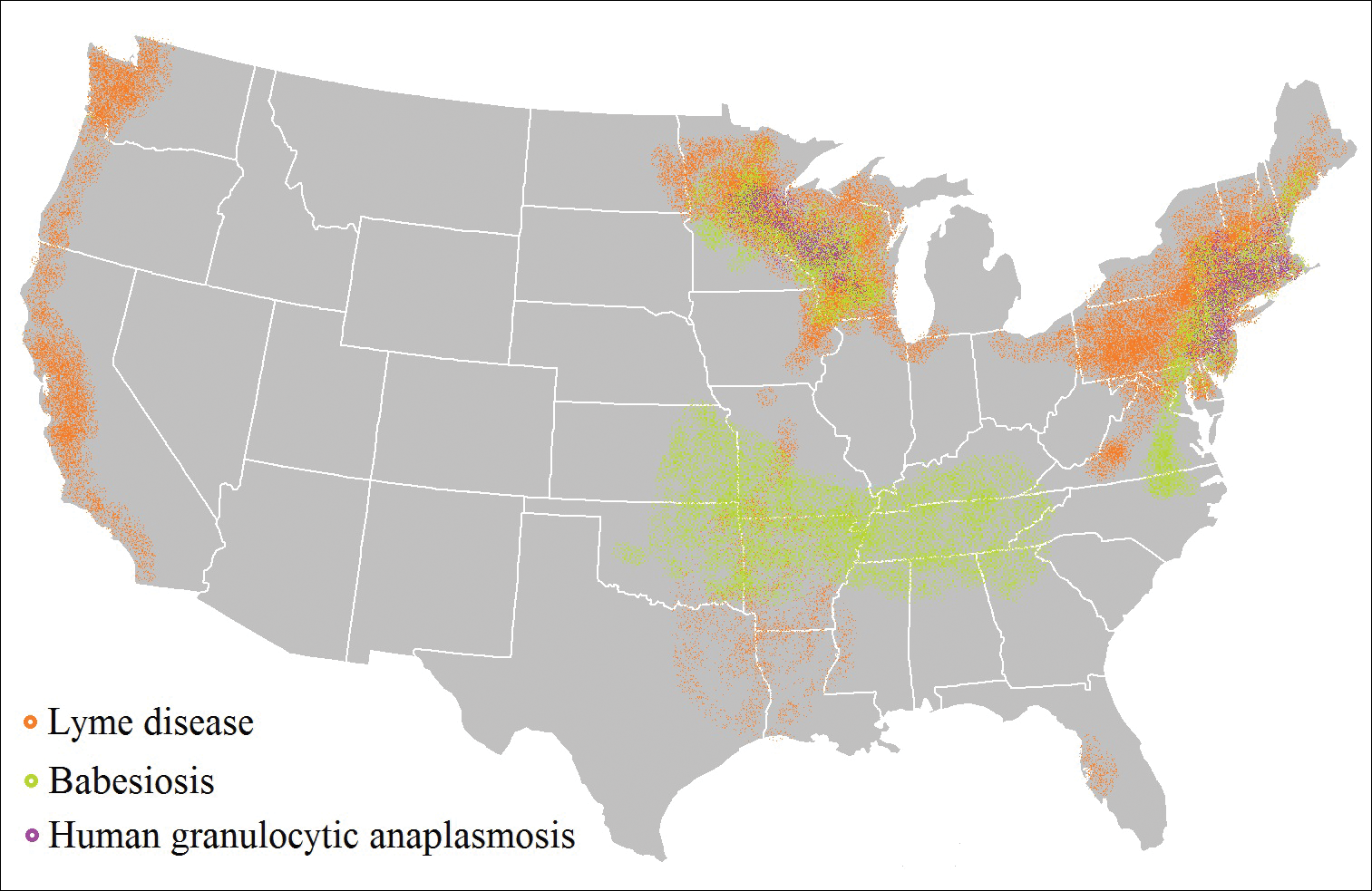

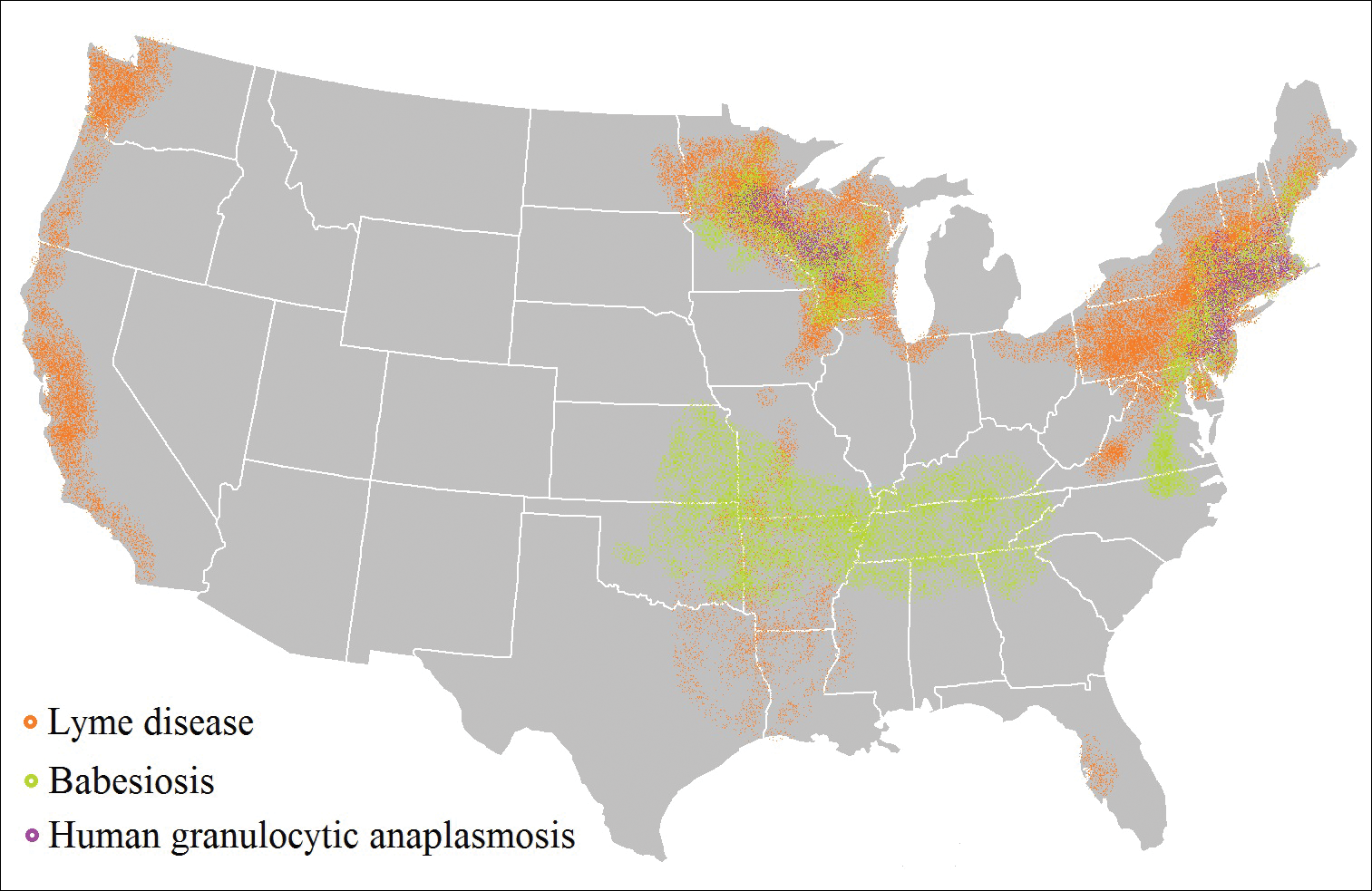

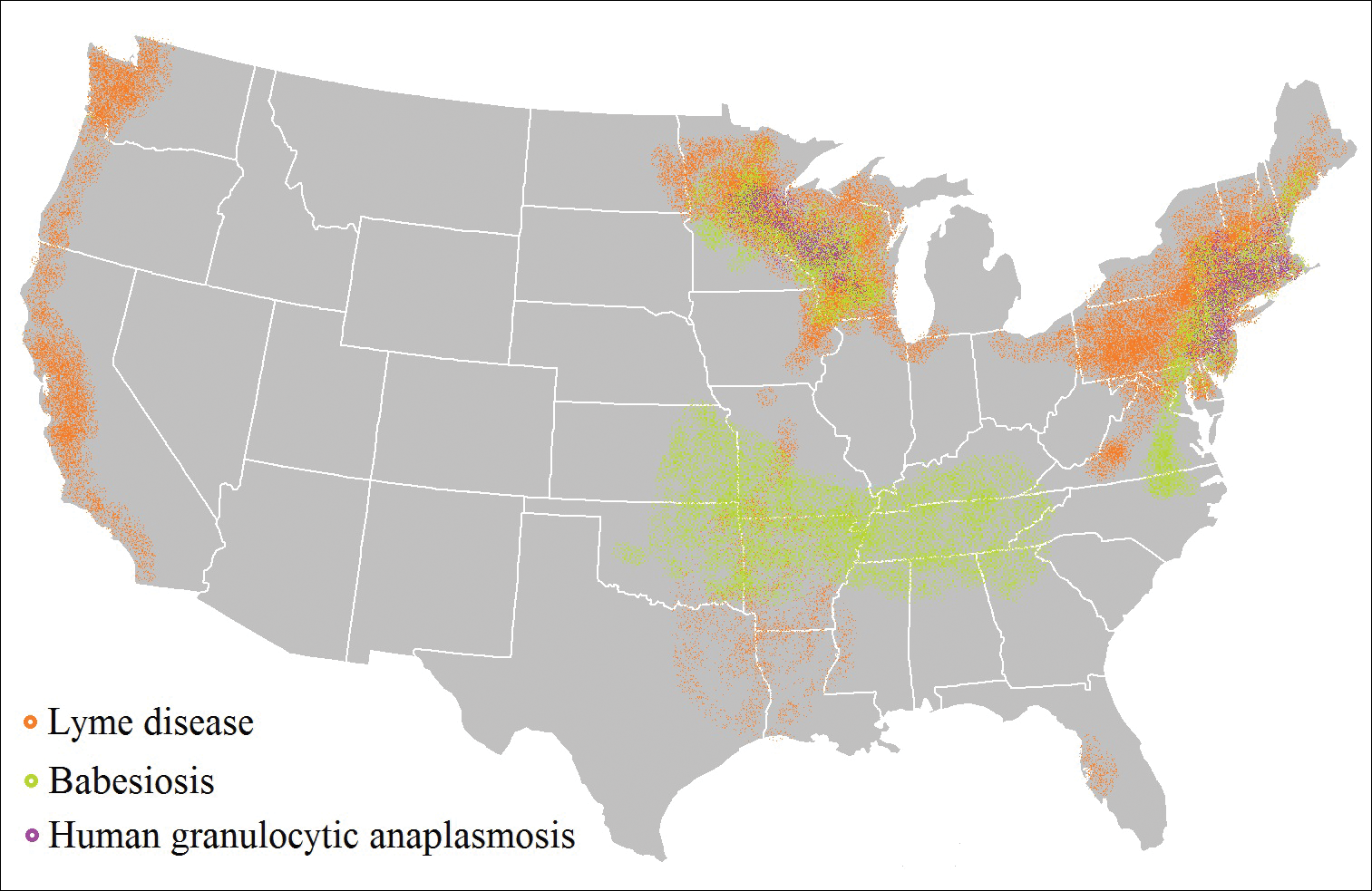

Tick-borne diseases are increasing in prevalence, likely due to climate change in combination with human movement into tick habitats.1-3 The Ixodes genus of hard ticks is a common vector for the transmission of pathogenic viruses, bacteria, parasites, and toxins. Among the resulting diseases, Lyme disease, which is caused by Borrelia burgdorferi, is the most prevalent, followed by babesiosis and then human granulocytic anaplasmosis (HGA).4 In Europe, tick-borne encephalitis is commonly encountered. More recently identified diseases transmitted by Ixodes ticks include Powassan virus and Borrelia miyamotoi infection; however, these diseases are less frequently encountered than other tick-borne diseases.5,6

As tick-borne diseases become more prevalent, the likelihood of coinfection with more than one Ixodes-transmitted pathogen is increasing.7 Therefore, it is important for physicians who practice in endemic areas to be aware of the possibility of coinfection, which can alter clinical presentation, disease severity, and treatment response in tick-borne diseases. Additionally, public education on tick-bite prevention and prompt tick removal is necessary to combat the rising prevalence of these diseases.

Coinfection

Risk of coinfection with more than one tick-borne disease is contingent on the geographic distribution of the tick species as well as the particular pathogen’s prevalence within reservoir hosts in a given area (Figure). Most coinfections involve B burgdorferi and one additional pathogen, usually Anaplasma phagocytophilum (which causes HGA) or Babesia microti (which causes babesiosis). In Europe, coinfection with tick-borne encephalitis virus may occur. There is limited evidence of human coinfection with B miyamotoi or Powassan virus, as even isolated infection with either of these pathogens is rare.

In patients with Lyme disease, as many as 35% may have concurrent babesiosis, and as many as 12% may have concurrent HGA in endemic areas (eg, northeast and northern central United States).7-9 Concurrent HGA and babesiosis in the absence of Lyme disease also has been documented.7-9 Coinfection generally increases the diversity of presenting symptoms, often obscuring the primary diagnosis. In addition, these patients may have more severe and prolonged illness.8,10,11

In endemic areas, coinfection with B burgdorferi and an additional pathogen should be suspected if a patient presents with typical symptoms of early Lyme disease, especially erythema migrans, along with (1) a combination of fever, chills, and headache; (2) prolonged viral-like illness, particularly one that persists more than 48 hours after appropriate antibiotic treatment; and (3) unexplained blood dyscrasia.7,11,12 When a patient presents with erythema migrans, it is unnecessary to test for HGA, as treatment of Lyme disease with doxycycline also is adequate for treating HGA; however, if systemic symptoms persist despite treatment, testing for babesiosis and other tick-borne illnesses should be considered, as babesiosis requires treatment with atovaquone plus azithromycin or clindamycin plus quinine.13

A complete blood count and peripheral blood smear can aid in the diagnosis of coinfection. The complete blood count may reveal leukopenia, anemia, or thrombocytopenia associated with HGA or babesiosis. The peripheral blood smear can reveal intra-erythrocytic ring forms and tetrads (the “Maltese cross” appearance) in babesiosis and intragranulocytic morulae in HGA.12 The most sensitive diagnostic tests for tick-borne diseases are organism-specific IgM and IgG serology for Lyme disease, babesiosis, and HGA, and polymerase chain reaction for babesiosis and HGA.7

Prevention Strategies

The most effective means of controlling tick-borne disease is avoiding tick bites altogether. One method is to avoid spending time in high-risk areas that may be infested with ticks, particularly low-lying brush, where ticks are likely to hide.14 For individuals traveling in environments with a high risk of tick exposure, behavioral methods of avoidance are indicated, including wearing long pants and a shirt with long sleeves, tucking the shirt into the pants, and wearing closed-toe shoes. Wearing light-colored clothing may aid in tick identification and prompt removal prior to attachment. Permethrin-impregnated clothing has been shown to decrease the likelihood of tick bites in adults working outdoors.15-17

Topical repellents also play a role in the prevention of tick-borne diseases. The most effective and safe synthetic repellents are N,N-diethyl-meta-toluamide (DEET); picaridin; p-menthane-3,8-diol; and insect repellent 3535 (IR3535; ethyl butylacetylaminopropionate).16-19 Plant-based repellents also are available, but their efficacy is strongly influenced by the surrounding environment (eg, temperature, humidity, organic matter).20-22 Individuals also may be exposed to ticks following contact with domesticated animals and pets.23,24 Tick prevention in pets with the use of ectoparasiticides should be directed by a qualified veterinarian.25

Tick Removal

Following a bite, the tick should be removed promptly to avoid transmission of pathogens. Numerous commercial and in-home methods of tick removal are available, but not all are equally effective. Detachment techniques include removal with a card or commercially available radiofrequency device, lassoing, or freezing.26,27 However, the most effective method is simple removal with tweezers. The tick should be grasped close to the skin surface and pulled upward with even pressure. Commercially available tick-removal devices have not been shown to produce better outcomes than removal of the tick with tweezers.28

Conclusion

When patients do not respond to therapy for presumed tick-borne infection, the diagnosis should be reconsidered. One important consideration is coinfection with a second organism. Prompt identification and removal of ticks can prevent disease transmission.

- McMichael C, Barnett J, McMichael AJ. An ill wind? climate change, migration, and health. Environ Health Perspect. 2012;120:646-654.

- Ostfeld RS, Brunner JL. Climate change and Ixodes tick-borne diseases of humans. Philos Trans R Soc Lond B Biol Sci. 2015;370:20140051.

- Ogden NH, Bigras-Poulin M, O’Callaghan CJ, et al. Vector seasonality, host infection dynamics and fitness of pathogens transmitted by the tick Ixodes scapularis. Parasitology. 2007;134(pt 2):209-227.

- Tickborne diseases of the United States. Centers for Disease Control and Prevention website. http://www.cdc.gov/ticks/diseases/index.html. Updated July 25, 2017. Accessed April 10, 2018.

- Hinten SR, Beckett GA, Gensheimer KF, et al. Increased recognition of Powassan encephalitis in the United States, 1999-2005. Vector Borne Zoonotic Dis. 2008;8:733-740.

- Platonov AE, Karan LS, Kolyasnikova NM, et al. Humans infected with relapsing fever spirochete Borrelia miyamotoi, Russia. Emerg Infect Dis. 2011;17:1816-1823.

- Krause PJ, McKay K, Thompson CA, et al; Deer-Associated Infection Study Group. Disease-specific diagnosis of coinfecting tickborne zoonoses: babesiosis, human granulocytic ehrlichiosis, and Lyme disease. Clin Infect Dis. 2002;34:1184-1191.

- Krause PJ, Telford SR 3rd, Spielman A, et al. Concurrent Lyme disease and babesiosis: evidence for increased severity and duration of illness. JAMA. 1996;275:1657-1660.

- Belongia EA, Reed KD, Mitchell PD, et al. Clinical and epidemiological features of early Lyme disease and human granulocytic ehrlichiosis in Wisconsin. Clin Infect Dis. 1999;29:1472-1477.

- Sweeny CJ, Ghassemi M, Agger WA, et al. Coinfection with Babesia microti and Borrelia burgdorferi in a western Wisconsin resident. Mayo Clin Proc. 1998;73:338-341.

- Nadelman RB, Horowitz HW, Hsieh TC, et al. Simultaneous human granulocytic ehrlichiosis and Lyme borreliosis. N Engl J Med. 1997;337:27-30.

- Wormser GP, Dattwyler RJ, Shapiro ED, et al. The clinical assessment, treatment, and prevention of Lyme disease, human granulocytic anaplasmosis, and babesiosis: clinical practice guidelines by the Infectious Diseases Society of America. Clin Infect Dis. 2006;43:1089-1134.

- Swanson SJ, Neitzel D, Reed DK, et al. Coinfections acquired from Ixodes ticks. Clin Microbiol Rev. 2006;19:708-727.

- Hayes EB, Piesman J. How can we prevent Lyme disease? N Engl J Med. 2003;348:2424-2430.

- Vaughn MF, Funkhouser SW, Lin FC, et al. Long-lasting permethrin impregnated uniforms: a randomized-controlled trial for tick bite prevention. Am J Prev Med. 2014;46:473-480.

- Miller NJ, Rainone EE, Dyer MC, et al. Tick bite protection with permethrin-treated summer-weight clothing. J Med Entomol. 2011;48:327-333.

- Richards SL, Balanay JAG, Harris JW. Effectiveness of permethrin-treated clothing to prevent tick exposure in foresters in the central Appalachian region of the USA. Int J Environ Health Res. 2015;25:453-462.

- Pages F, Dautel H, Duvallet G, et al. Tick repellents for human use: prevention of tick bites and tick-borne diseases. Vector Borne Zoonotic Dis. 2014;14:85-93.

- Büchel K, Bendin J, Gharbi A, et al. Repellent efficacy of DEET, icaridin, and EBAAP against Ixodes ricinus and Ixodes scapularis nymphs (Acari, Ixodidae). Ticks Tick Borne Dis. 2015;6:494-498.

- Schwantes U, Dautel H, Jung G. Prevention of infectious tick-borne diseases in humans: comparative studies of the repellency of different dodecanoic acid-formulations against Ixodes ricinus ticks (Acari: Ixodidae). Parasit Vectors. 2008;8:1-8.

- Bissinger BW, Apperson CS, Sonenshine DE, et al. Efficacy of the new repellent BioUD against three species of ixodid ticks. Exp Appl Acarol. 2009;48:239-250.

- Feaster JE, Scialdone MA, Todd RG, et al. Dihydronepetalactones deter feeding activity by mosquitoes, stable flies, and deer ticks. J Med Entomol. 2009;46:832-840.

- Jennett AL, Smith FD, Wall R. Tick infestation risk for dogs in a peri-urban park. Parasit Vectors. 2013;6:358.

- Rand PW, Smith RP Jr, Lacombe EH. Canine seroprevalence and the distribution of Ixodes dammini in an area of emerging Lyme disease. Am J Public Health. 1991;81:1331-1334.

- Baneth G, Bourdeau P, Bourdoiseau G, et al; CVBD World Forum. Vector-borne diseases—constant challenge for practicing veterinarians: recommendations from the CVBD World Forum. Parasit Vectors. 2012;5:55.

- Akin Belli A, Dervis E, Kar S, et al. Revisiting detachment techniques in human-biting ticks. J Am Acad Dermatol. 2016;75:393-397.

- Ashique KT, Kaliyadan F. Radiofrequency device for tick removal. J Am Acad Dermatol. 2015;72:155-156.

- Due C, Fox W, Medlock JM, et al. Tick bite prevention and tick removal. BMJ. 2013;347:f7123.


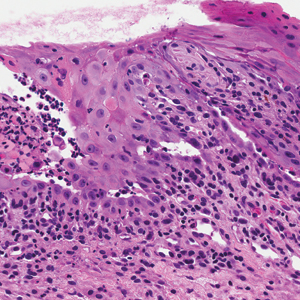

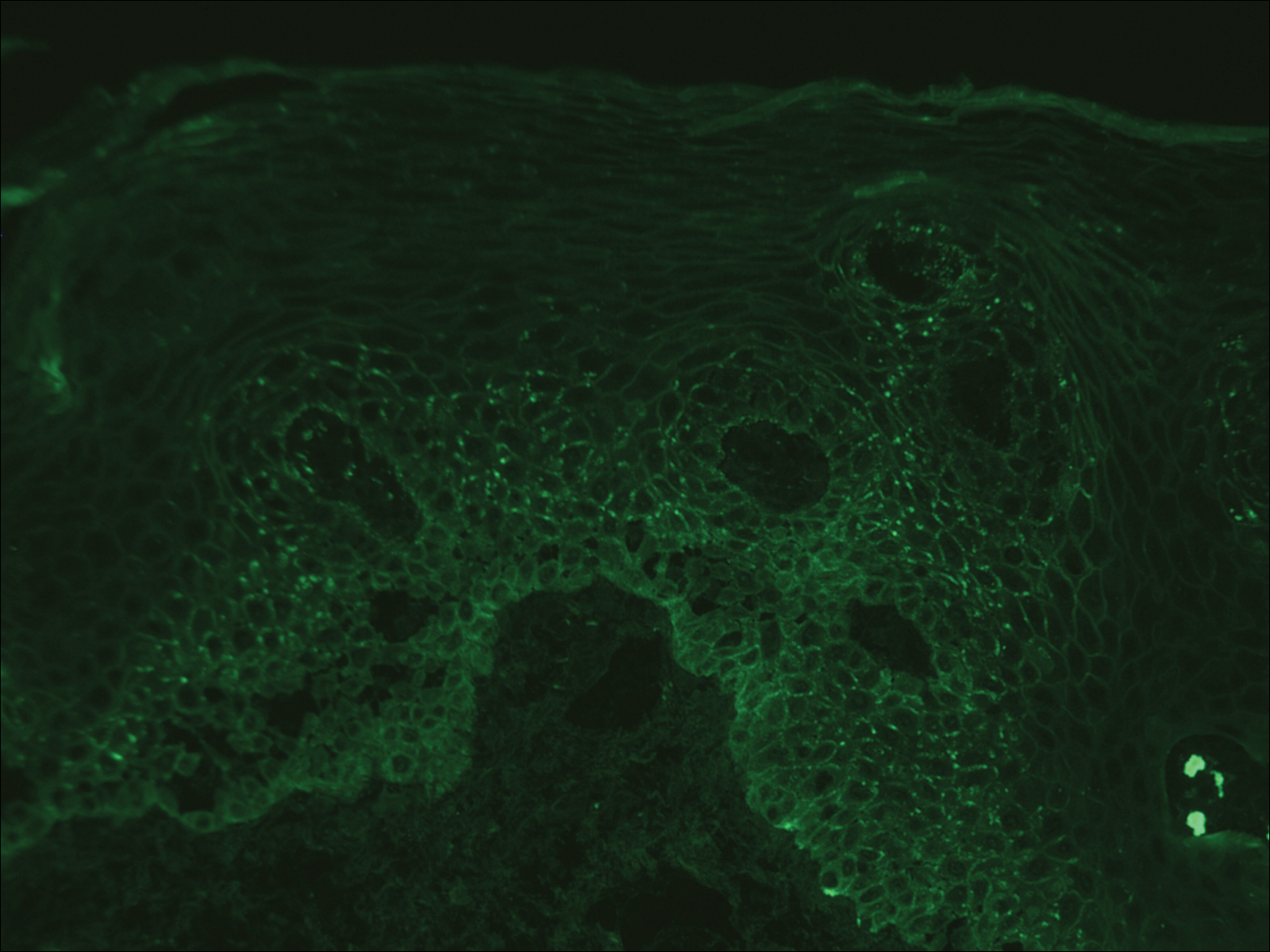

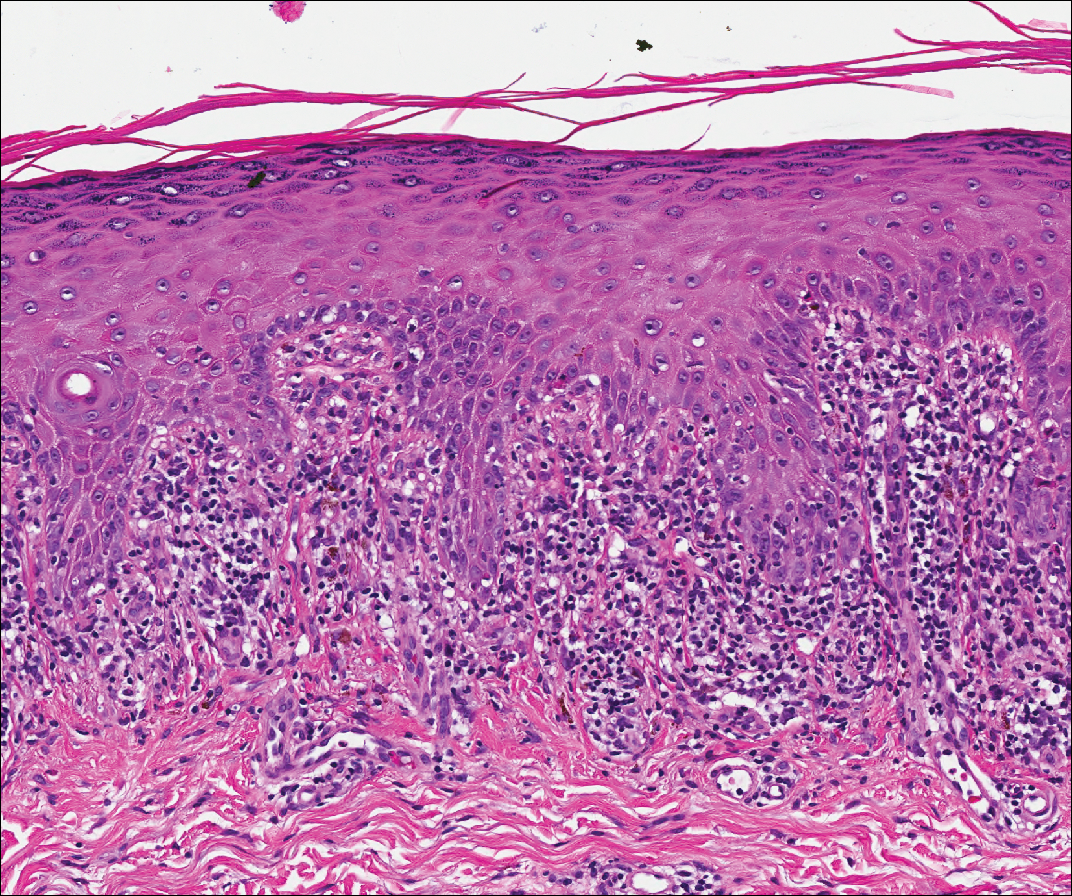


Prevention Strategies