Richard Franki is the associate editor who writes and creates graphs. He started with the company in 1987, when it was known as the International Medical News Group. In his years as a journalist, Richard has worked for Cap Cities/ABC, Disney, Harcourt, Elsevier, Quadrant, Frontline, and Internet Brands. In the 1990s, he was a contributor to the ill-fated Indications column, predecessor of Livin' on the MDedge.

Flu increases activity but not its severity

The CDC’s latest report shows that 6.8% of outpatients visiting health care providers had influenza-like illness during the week ending Feb. 8. That’s up from the previous week’s 6.6%, but the rise of 0.2 percentage points is smaller than the 0.6-point rises that occurred in each of the 2 weeks before, which could mean that activity is slowing.

That slowing, however, is not evident on this week’s map, which puts 41 states (up from 35 last week) and Puerto Rico in the red, at the highest level of activity on the CDC’s 1-10 scale, and another three states in the “high” range at levels 8 or 9, the CDC’s influenza division reported.

That leaves Nevada and Oregon at level 7; Alaska, Florida, and the District of Columbia at level 5; Idaho at level 3; and Delaware with insufficient data (it was at level 5 last week), the CDC said.

The 2019-2020 season’s high activity, fortunately, has not translated into high severity, as overall hospitalization and mortality rates remain at fairly typical levels. Hospitalization rates are elevated among children and young adults, however, and pediatric deaths are now up to 92, the CDC said, a high number for this point in the season.

The dementia height advantage, and ‘human textile’

Soylent stitches are people!

Ask anyone in advertising and they’ll tell you that branding is everything. Now, there may be a bit of self-promotion involved there. But you can’t deny that naming your product appropriately is important, and we’re going to say with some degree of confidence that “human textile” is not the greatest name in the world.

Now, we don’t want to question the team of French researchers too much. After all, according to their research published in Acta Biomaterialia, they’ve come up with quite the nifty and potentially lifesaving innovation: stitches made from human skin.

By taking sheets of human skin cells (eww) and cutting them into strips, the researchers were able to weave the strips into a sort of yarn, the advantages of which should be obvious. Patients can say goodbye to pesky issues of compatibility and adverse immune response when doctors and surgeons can stitch wounds, sew pouches, and create tubes and valves with yarn crafted from themselves.

We just can’t get past the name they chose. Human textile. The process of making them is gruesome enough already. No need to call to mind some horrific dystopian future in which cotton can no longer grow and we have to recycle humans (alive or dead, depending on how grim you’re feeling) in big industrial textile mills to craft clothing for ourselves.

It’s just too bad Charlton Heston is dead; he’d have made a great spokesperson.

Towering over dementia

Let us take a moment to pity the plight of the shorter brother. Always losing the battle of the boards in fraternal driveway basketball games. Never reaching the Pop-Tarts cruelly stashed high in the pantry by one’s taller, greedier sibling. Always being addressed during family dinners as “Frodo.”

And new research findings add to the burden borne by altitude-impaired brothers everywhere: Being the short one may boost your risk of dementia.

Danish researchers at the University of Copenhagen examined the potential role that height in young adulthood plays in later dementia risk. The planet’s taller-brother Danes (the world’s third-tallest nation) analyzed data from more than 666,000 Danish men, including more than 70,000 brothers.

They found that, for every 6 cm above average height, the risk of dementia dropped 10%. And that inverse relationship between height and dementia risk held even within families, where brothers share an environment: Being the taller brother delivered relatively more dementia protection. Even being smarter, better educated, and savvier at playing point guard didn’t erase shorter brothers’ dementia/height disadvantage.

Before you take solace in a Coors stubby, littler brothers, let’s remember the advantages shorter siblings still enjoy: Never being called “Ichabod.” Walking tall in low-ceilinged parking garages. Fitting comfortably into 911s and F-18s alike. Draining threes from anywhere in the driveway.

Oh, and clearly being Mom’s favorite.

Swinging for longevity

They say that laughter is the best medicine, but we always assumed that it applied to the people doing the laughing.

That may not be the case, according to a report presented at the American Stroke Association International Stroke Conference in Dallas.

It may be even better to get laughed at, and Adnan Qureshi, MD, of the University of Missouri, Columbia, and associates have data from the Cardiovascular Health Study of adults aged 65 years and older to prove it.

It’s all about the golf. The 384 golfers among the almost 5,900 participants had a death rate of 15.1% over the 10-year follow-up.

As for the nongolfers – the ones who make fun of golfers’ clothes and say that golf is boring, who joke about riding around in carts and hanging around with old people, who laugh because the lowest score wins, who say it’s easy to hit a little white ball that’s not even moving, who think an albatross is just a bird ... um, we seem to have gotten a bit off topic here.

Anyway, the death rate for the nongolfers in the study was a significantly higher 24.6%. So, suck on that, nongolfers, because it looks like the golfers will be having the last laugh. In plaid pants.
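For readers who want the arithmetic behind “significantly higher,” here is a minimal Python sketch comparing the two quoted death rates. The function name is our own, and the figures are crude: they use only the 15.1% and 24.6% rates above and do not adjust for age, health, or anything else that may differ between golfers and nongolfers, so they are not the investigators’ own estimate.

```python
def crude_comparison(rate_golfers: float, rate_nongolfers: float) -> tuple[float, float]:
    """Compare two death rates given in percent.

    Returns (absolute difference in percentage points, crude risk ratio).
    Unadjusted: ignores any confounders between the two groups.
    """
    difference = rate_nongolfers - rate_golfers
    ratio = rate_golfers / rate_nongolfers
    return difference, ratio


# Rates over the 10-year follow-up, as quoted above
diff, ratio = crude_comparison(15.1, 24.6)
print(f"Difference: {diff:.1f} percentage points; crude risk ratio: {ratio:.2f}")
```

The printout shows a gap of 9.5 percentage points and a crude ratio of about 0.61 for golfers relative to nongolfers.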

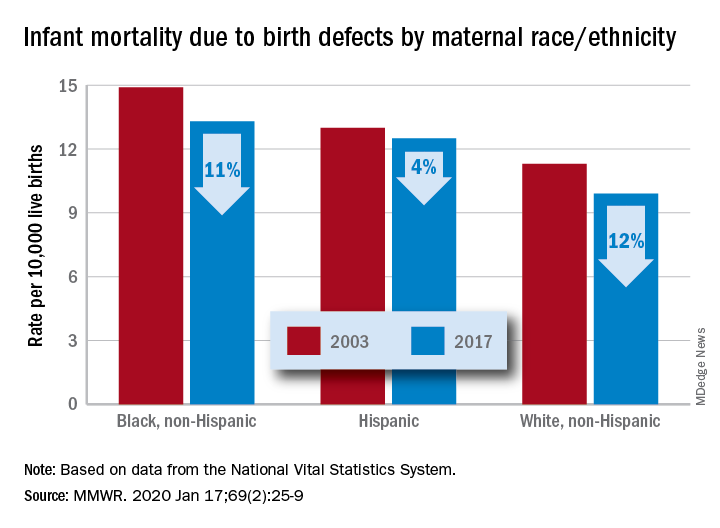

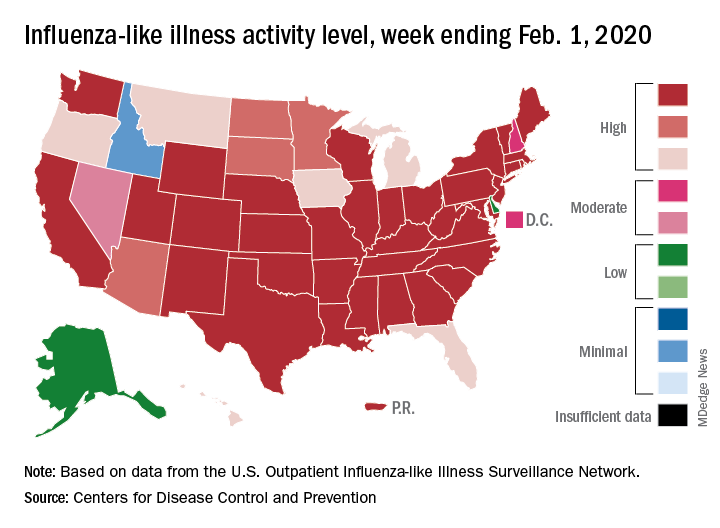

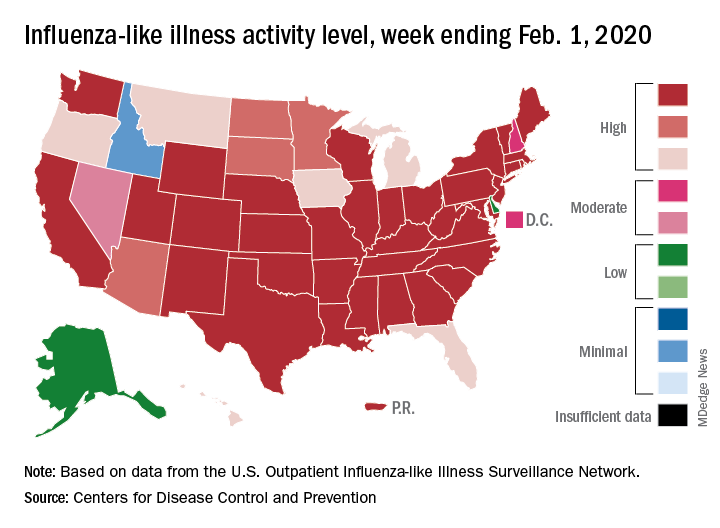

Flu activity increases for third straight week

For the second time during the 2019-2020 flu season, activity measures have climbed into noteworthy territory.

The proportion of outpatient visits for influenza-like illness (ILI) reached 7.1% in December, its highest December level since 2003, and then dropped for 2 weeks. Three weeks of increases since then, however, have brought the outpatient-visit rate to 6.7% for the week ending Feb. 1, 2020, the Centers for Disease Control and Prevention reported. The baseline rate for the United States is 2.4%.

That rate of 6.7% is already above the highest rates recorded in eight of the last nine flu seasons, and another increase could mean a second, separate trip above 7.0% in the 2019-2020 season – something that has not occurred since national tracking began in 1997, CDC data show.

Those same data also show that,

Another important measure on the rise, the proportion of respiratory specimens testing positive for influenza, reached a new high for the season, 29.8%, during the week ending Feb. 1, the CDC’s influenza division said.

Tests at clinical laboratories also show that predominance is continuing to switch from type B (45.6%) to type A (54.4%), the influenza division noted. Overall predominance for the season, however, continues to favor type B, 59.3% to 40.7%.

The percentage of deaths caused by pneumonia and influenza, which passed the epidemic threshold of 7.2% back in early January, has been trending downward for the last 3 weeks and was 7.1% as of Feb. 1, according to the influenza division.

ILI-related deaths among children remain high, with a total count of 78 for the season after another 10 deaths were reported during the week ending Feb. 1, the CDC reported. Comparable numbers for the last three seasons are 44 (2018-2019), 97 (2017-2018), and 35 (2016-2017).

The CDC estimates put the total number of ILIs at around 22 million for the season so far, leading to 210,000 hospitalizations. The agency said that it expects to release estimates of vaccine effectiveness later this month.

Funding failures: Tobacco prevention and cessation

When it comes to state funding for tobacco prevention and cessation, the American Lung Association grades on a curve. It did not help.

Each state’s annual funding for tobacco prevention and cessation was calculated and then compared with the Centers for Disease Control and Prevention’s recommended spending level. That percentage became the grade, with any level of funding at 80% or more of the CDC’s recommendation getting an A and anything below 50% getting an F, the ALA explained.

The three A’s went to Alaska – which spent $10.14 million, or 99.4% of the CDC-recommended $10.2 million – California (96.0%), and Maine (83.5%). The lowest levels of spending came from Georgia, which spent just 2.8% of the CDC’s recommendation of $106 million, and Missouri, which spent 3.0%, the ALA reported.
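The ALA’s funding grade can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names are hypothetical, and because only the A (80% or more) and F (below 50%) cutoffs are given, the intermediate B through D grades are lumped into one placeholder rather than guessed.

```python
def percent_of_recommended(spent_millions: float, recommended_millions: float) -> float:
    """State spending as a percentage of the CDC-recommended level."""
    return 100.0 * spent_millions / recommended_millions


def funding_grade(percent: float) -> str:
    """Map a funding percentage to a grade.

    Only the A (>= 80%) and F (< 50%) cutoffs are stated; the B-D
    boundaries are not given, so they share one placeholder here.
    """
    if percent >= 80.0:
        return "A"
    if percent < 50.0:
        return "F"
    return "B-D"  # placeholder: intermediate cutoffs not specified


# Alaska: $10.14 million spent vs. $10.2 million recommended
alaska = percent_of_recommended(10.14, 10.2)
print(f"Alaska: {alaska:.1f}% -> {funding_grade(alaska)}")

# Georgia: 2.8% of the recommended $106 million
georgia_spent = 0.028 * 106
print(f"Georgia: about ${georgia_spent:.2f} million -> {funding_grade(2.8)}")
```

Running it reproduces Alaska’s 99.4% and its A, and shows that Georgia’s 2.8% works out to about $2.97 million in actual spending.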

States’ grades were generally better in the four other areas of tobacco-control policy: There were 24 A’s and 9 F’s for smoke-free air laws, 1 A and 35 F’s for tobacco excise taxes, 3 A’s and 17 F’s for access to cessation treatment, and 10 A’s and 30 F’s for laws to raise the tobacco sales age to 21 years, the ALA said in the report.

Despite an overall grade of F, the federal government managed to earn some praise in that last area: “In what could only be described as unimaginable even 2 years ago, in December 2019, Congress passed bipartisan legislation to raise the minimum age of sale for tobacco products to 21,” the ALA said.

The federal government was strongly criticized on the subject of e-cigarettes. “The Trump Administration failed to prioritize public health over the tobacco industry with its Jan. 2, 2020, announcement that will leave thousands of flavored e-cigarettes on the market,” the ALA said, while concluding that the rising use of e-cigarettes in recent years “is a real-world demonstration of the failure of the U.S. Food and Drug Administration to properly oversee all tobacco products. … This failure places the lung health and lives of Americans at risk.”

The Zzzzzuper Bowl, and 4D needles

HDL 35, LDL 220, hike!

Super Bowl Sunday is, for all intents and purposes, an American national holiday. And if there’s one thing we Americans love to do on our national holidays, it’s eat. And eat. Oh, and also eat.

According to research from LetsGetChecked, about 70% of Americans who watch the Super Bowl overindulge on game day. Actually, the term “overindulge” may not be entirely adequate: On Super Bowl Sunday, the average football fan ate nearly 11,000 calories and 180 g of saturated fat. That’s more than four times the recommended daily calorie intake, and seven times the recommended saturated fat intake.

Naturally, the chief medical officer for LetsGetChecked termed this level of food consumption potentially dangerous if it becomes a regular occurrence and asked that people “question if they need to be eating quite so much.” Yeah, we think he’s being a party pooper, too.

So, just what did Joe Schmoe eat this past Sunday that has the experts all worried?

LetsGetChecked thoughtfully asked, and the list is something to be proud of: wings, pizza, fries, burgers, hot dogs, ribs, nachos, sausage, ice cream, chocolate, cake. The average fan ate all these, and more. Our personal favorite: the 2.3 portions of salad. Wouldn’t want to be too unhealthy now. Gotta have that salad to balance everything else out.

Strangely, the survey didn’t seem to ask about the presumably prodigious quantities of alcohol the average Super Bowl fan consumed. So, if anything, that 11,000 calories is an underestimation. And it really doesn’t get more American than that.

Zzzzzuper Bowl

Hardly, according to the buzzzzzz-kills [Ed. note: Why so many Zs? Author note: Wait for it ...] at the American Academy of Sleep Medicine. In a report with the sleep-inducing title “AASM Sleep Prioritization Survey Monday after the Super Bowl,” the academy pulls the sheets back on America’s somnolent post–Super Bowl secret: We’re sleep deprived.

More than one-third of the 2,003 adults alert enough to answer the AASM survey said they were more tired than usual the day after the Super Bowl. And 12% of respondents admitted that they were “extremely tired.”

Millennials were the generation most likely to meet Monday morning in an extreme stupor, followed by the few Gen X’ers who could even be bothered to cynically answer such an utterly pointless collection of survey questions. Baby boomers had already gone to bed before the academy could poll them.

AASM noted that Cleveland fans were stumped by the survey’s questions about the Super Bowl, given that the Browns are always well rested on the Monday morning after the game.

The gift that keeps on grabbing

Rutgers, you had us at “morph into new shapes.”

We read a lot of press releases here at LOTME world headquarters, but when we saw New Jersey’s state university announcing that a new 4D-printed microneedle array could “morph into new shapes,” we were hooked, so to speak.

Right now, though, you’re probably wondering what 4D printing is. We wondered that, too. It’s like 3D printing, but “with smart materials that are programmed to change shape after printing. Time is the fourth dimension that allows materials to morph into new shapes,” as senior investigator Howon Lee, PhD, and associates explained it.

Microneedles are becoming increasingly popular as a replacement for hypodermics, but their “weak adhesion to tissues is a major challenge for controlled drug delivery over the long run,” the investigators noted. To try to solve the adhesion problem, they turned to – that’s right, you guessed it – insects and parasites.

When you think about it, it does make sense. What’s better at holding onto tissue than the barbed stinger of a honeybee or the microhooks of a tapeworm?

The microneedle array that Dr. Lee and his team have come up with has backward-facing barbs that interlock with tissue when it is inserted, which improves adhesion. It was those barbs that required the whole 4D-printing approach, they explained in Advanced Functional Materials.

That sounds great, you’re probably thinking now – but we need to show you the money, right? Okay.

During testing on chicken muscle tissue, adhesion with the new microneedle was “18 times stronger than with a barbless microneedle,” they reported.

The 4D microneedle’s next stop? Its own commercial during next year’s Super Bowl, according to its new agent.

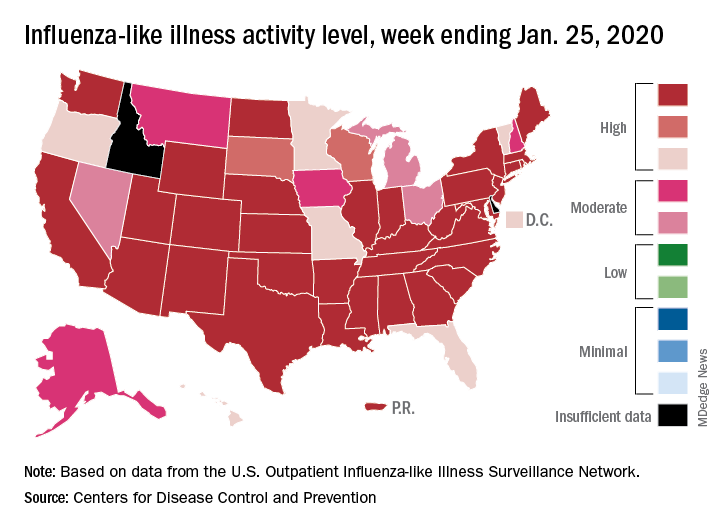

Don’t forget about the flu: 2019-2020 season is not over

Nationally, an estimated 5.7% of all outpatients visiting health care providers had influenza-like illness (ILI) for the week ending Jan. 25, which was up from 5.1% the previous week but still lower than the current seasonal high of 7.1% recorded during the week of Dec. 22-28, the CDC’s influenza division reported.

Another key indicator of influenza activity, the percentage of respiratory specimens testing positive, also remains high as it rose from 25.7% the week before to 27.7% for the week ending Jan. 25, the influenza division said. That is the highest rate of the 2019-2020 season so far, surpassing the 26.8% reached during Dec. 22-28.

Another new seasonal high involves the number of states, 33 plus Puerto Rico, at the highest level of ILI activity on the CDC’s 1-10 scale for the latest reporting week, topping the 32 jurisdictions from the last full week of December. Another eight states and the District of Columbia were in the “high” range with activity levels of 8 and 9, and no state with available data was lower than level 6, the CDC data show.

Going along with the recent 2-week increase in activity is a large increase in the number of influenza-associated pediatric deaths, which rose from 39 on Jan. 11 to the current count of 68, the CDC said. At the same point last year, there had been 36 pediatric deaths.

Other indicators of ILI severity, however, “are not high at this point in the season,” the influenza division noted. “Overall, hospitalization rates remain similar to what has been seen at this time during recent seasons, but rates among children and young adults are higher at this time than in recent seasons.” Overall pneumonia and influenza mortality is also low, the CDC added.

CDC: Opioid prescribing and use rates down since 2010

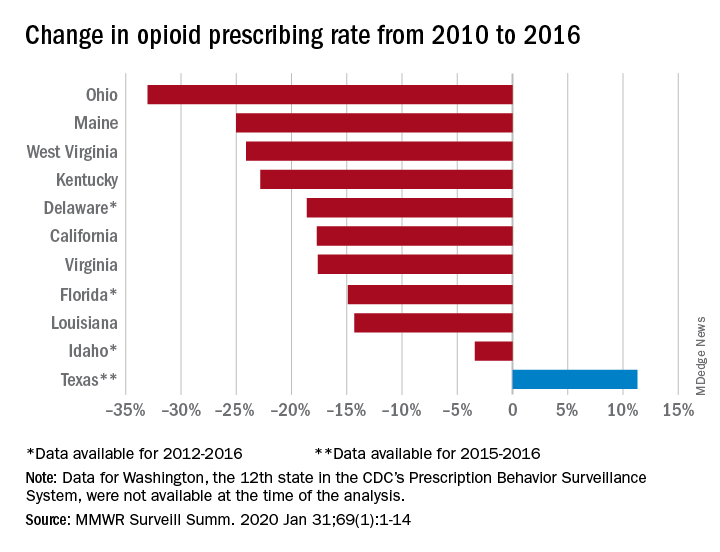

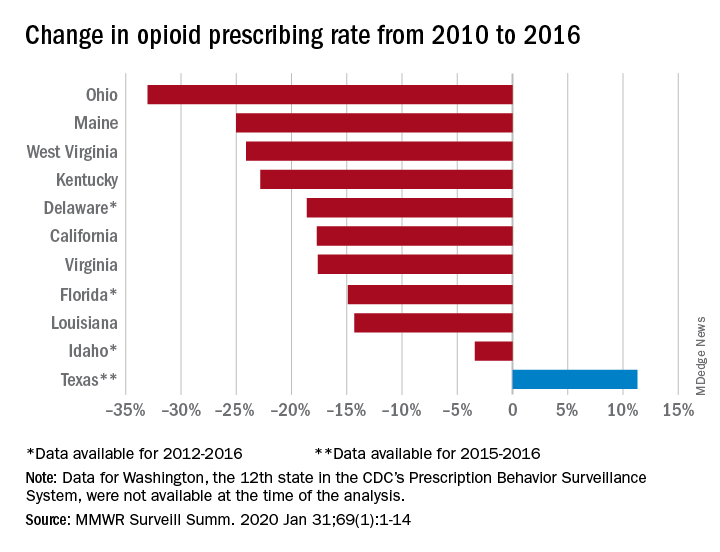

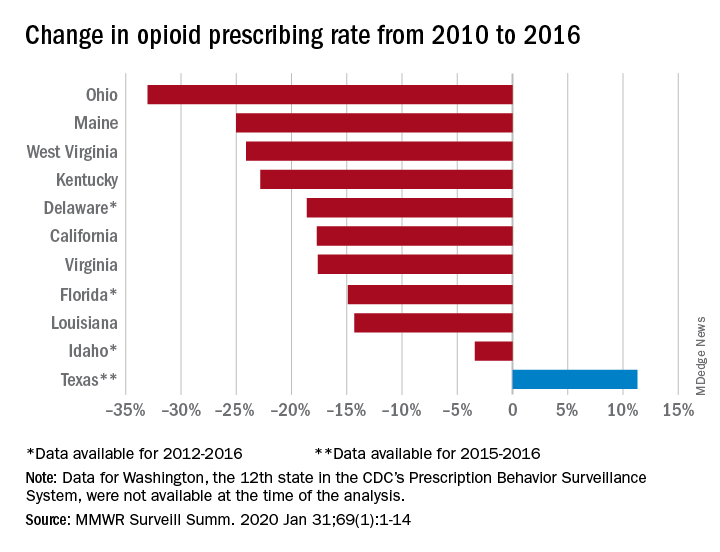

Trends in opioid prescribing and use from 2010 to 2016 offer some encouragement, but opioid-attributable deaths continued to increase over that period, according to the Centers for Disease Control and Prevention.

Prescribing rates dropped during that period, as did daily opioid dosage rates and the percentage of patients with high daily opioid dosages, Gail K. Strickler, PhD, of the Institute for Behavioral Health at Brandeis University in Waltham, Mass., and associates wrote in MMWR Surveillance Summaries.

Their analysis involved 11 of the 12 states (Washington was unable to provide data for the analysis) participating in the CDC’s Prescription Behavior Surveillance System, which uses data from the states’ prescription drug monitoring programs. The 11 states represented about 38% of the U.S. population in 2016.

The opioid prescribing rate fell in 10 of those 11 states, with declines ranging from 3.4% in Idaho to 33.0% in Ohio. Prescribing rose by 11.3% in Texas, but that state had data only for 2015 and 2016. Three other states – Delaware, Florida, and Idaho – were limited to data from 2012 to 2016, the investigators noted.

As for the other measures, all states showed declines for the mean daily opioid dosage. Texas had the smallest drop at 2.9% and Florida saw the largest, at 27.4%. All states also had reductions in the percentage of patients with high daily opioid dosage, with decreases varying from 5.7% in Idaho to 43.9% in Louisiana, Dr. Strickler and associates reported. A high daily dosage was defined as at least 90 morphine milligram equivalents for all class II-V opioid drugs.

“Despite these favorable trends ... opioid overdose deaths attributable to the most commonly prescribed opioids, the natural and semisynthetics (e.g., morphine and oxycodone), increased during 2010-2016,” they said.

It is possible that a change in mortality is lagging “behind changes in prescribing behaviors” or that “the trend in deaths related to these types of opioids has been driven by factors other than prescription opioid misuse rates, such as increasing mortality from heroin, which is frequently classified as morphine or found concomitantly with morphine postmortem, and a spike in deaths involving illicitly manufactured fentanyl combined with heroin and prescribed opioids since 2013,” the investigators suggested.

SOURCE: Strickler GK et al. MMWR Surveill Summ. 2020 Jan 31;69(1):1-14.

FROM MMWR SURVEILLANCE SUMMARIES

The scents-less life and the speaking mummy

If I only had a nose

Deaf and blind people get all the attention. Special schools, Braille, sign language, even a pinball-focused rock opera. And it is easy to see why: Those senses are kind of important when it comes to navigating the world. But what if you have to live without one of the less-cool senses? What if the nose doesn’t know?

According to research published in Clinical Otolaryngology, up to 5% of the world’s population has some sort of smell disorder, preventing them from either smelling correctly or smelling anything at all. And the effects of this on everyday life are drastic.

In a survey of 71 people with smell disorders, the researchers found that patients experience a smorgasbord of negative effects – ranging from poor hazard perception and poor sense of personal hygiene, to an inability to enjoy food and an inability to link smell to happy memories. The whiff of gingerbread on Christmas morning, the smoke of a bonfire on a summer evening – the smell-deprived miss out on them all. The negative emotions those people experience read like a recipe for your very own homemade Sith lord: sadness, regret, isolation, anxiety, anger, frustration. A path to the dark side, losing your scent is.

Speaking of fictional bad guys, this nasal-based research really could have benefited one Lord Volde ... fine, You-Know-Who. Just look at that face. That’s a man who can’t smell. You can’t tell us he wouldn’t have turned out better if only Dorothy had picked him up on the yellow brick road instead of some dumb scarecrow.

The sound of hieroglyphics

The Rosetta Stone revealed the meaning of Egyptian hieroglyphics and unlocked the ancient language of the Pharaohs for modern humans. But that mute stele said nothing about what those who uttered that ancient tongue sounded like.

Researchers at London’s Royal Holloway College may now know the answer. At least, a monosyllabic one.

The answer comes (indirectly) from Egyptian priest Nesyamun, a former resident of Thebes who worked at the temple of Karnak, but who now calls the Leeds City Museum home. Or, to be precise, Nesyamun’s 3,000-year-old mummified remains live on in Leeds. Nesyamun’s religious duties during his Karnak career likely required a smooth singing style and an accomplished speaking voice.

In a paper published in Scientific Reports, the British scientists say they’ve now heard the sound of the Egyptian priest’s long-silenced liturgical voice.

Working from CT scans of Nesyamun’s relatively well-preserved vocal-tract soft tissue, the scientists used a 3D-printed vocal tract and an electronic larynx to synthesize the actual sound of his voice.

And the result? Did the crooning priest of Karnak utter a Boris Karloffian curse upon those who had disturbed his millennia-long slumber? Did he deliver a rousing hymn of praise to Ramses XI, Egypt’s ruler during the turbulent 1070s BCE?

In fact, what emerged from Nesyamun’s synthesized throat was ... “eh.” Maybe “a,” as in “bad.”

Given the state of the priest’s tongue (shrunken) and his soft palate (missing), the researchers say those monosyllabic sounds are the best Nesyamun can muster in his present state. Other experts say actual words from the ancients are likely impossible.

Perhaps one day, science will indeed be able to synthesize whole words or sentences from other well-preserved residents of the distant past. May we all live to hear an unyielding Ramses II himself chew the scenery like his Hollywood doppelganger, Yul Brynner: “So let it be written! So let it be done!”

To beard or not to beard

People are funny, and men, who happen to be people, are no exception.

Men, you see, have these things called beards, and there are definitely more men running around with facial hair these days. A lot of women go through a lot of trouble to get rid of a lot of their hair. But men, well, we grow extra hair. Why?

That’s what Honest Amish, a maker of beard-care products, wanted to know. They commissioned OnePoll to conduct a survey of 2,000 Americans, both men and women, to learn all kinds of things about beards.

So what did they find? Facial hair confidence, that’s what. Three-quarters of men said that a beard made them feel more confident than did a bare face, and 73% said that facial hair makes a man more attractive. That number was a bit lower among women, 63% of whom said that facial hair made a man more attractive.

That doesn’t seem very funny, does it? We’re getting there.

Male respondents also were asked what they would do to get the perfect beard: 40% would be willing to spend a night in jail or give up coffee for a year, and 38% would stand in line at the DMV for an entire day. Somewhat less popular responses included giving up sex for a year (22%) – seems like a waste of all that newfound confidence – and shaving their heads (18%).

And that, we don’t mind saying, is a hair-raising conclusion.

If I only had a nose

Deaf and blind people get all the attention. Special schools, Braille, sign language, even a pinball-focused rock opera. And it is easy to see why: Those senses are kind of important when it comes to navigating the world. But what if you have to live without one of the less-cool senses? What if the nose doesn’t know?

According to research published in Clinical Otolaryngology, up to 5% of the world’s population has some sort of smell disorder, preventing them from either smelling correctly or smelling anything at all. And the effects of this on everyday life are drastic.

In a survey of 71 people with smell disorders, the researchers found that patients experience a smorgasbord of negative effects – ranging from poor hazard perception and poor sense of personal hygiene, to an inability to enjoy food and an inability to link smell to happy memories. The whiff of gingerbread on Christmas morning, the smoke of a bonfire on a summer evening – the smell-deprived miss out on them all. The negative emotions those people experience read like a recipe for your very own homemade Sith lord: sadness, regret, isolation, anxiety, anger, frustration. A path to the dark side, losing your scent is.

Speaking of fictional bad guys, this nasal-based research really could have benefited one Lord Volde ... fine, You-Know-Who. Just look at that face. That’s a man who can’t smell. You can’t tell us he wouldn’t have turned out better if only Dorothy had picked him up on the yellow brick road instead of some dumb scarecrow.

The sound of hieroglyphics

The Rosetta Stone revealed the meaning of Egyptian hieroglyphics and unlocked the ancient language of the Pharaohs for modern humans. But that mute stele said nothing about what those who uttered that ancient tongue sounded like.

Researchers at London’s Royal Holloway College may now know the answer. At least, a monosyllabic one.

The answer comes (indirectly) from Egyptian priest Nesyamun, a former resident of Thebes who worked at the temple of Karnak, but who now calls the Leeds City Museum home. Or, to be precise, Nesyamun’s 3,000-year-old mummified remains live on in Leeds. Nesyamun’s religious duties during his Karnak career likely required a smooth singing style and an accomplished speaking voice.

In a paper published in Scientific Reports, the British scientists say they’ve now heard the sound of the Egyptian priest’s long-silenced liturgical voice.

Working from CT scans of Nesyamun’s relatively well-preserved vocal-tract soft tissue, the scientists used a 3D-printed vocal tract and an electronic larynx to synthesize the actual sound of his voice.

And the result? Did the crooning priest of Karnak utter a Boris Karloffian curse upon those who had disturbed his millennia-long slumber? Did he deliver a rousing hymn of praise to Egypt’s ruler during the turbulent 1070s bce, Ramses XI?

In fact, what emerged from Nesyamun’s synthesized throat was ... “eh.” Maybe “a,” as in “bad.”

Given the state of the priest’s tongue (shrunken) and his soft palate (missing), the researchers say those monosyllabic sounds are the best Nesyamun can muster in his present state. Other experts say actual words from the ancients are likely impossible.

Perhaps one day, science will indeed be able to synthesize whole words or sentences from other well-preserved residents of the distant past. May we all live to hear an unyielding Ramses II himself chew the scenery like his Hollywood doppelganger, Yul Brynner: “So let it be written! So let it be done!”

To beard or not to beard

People are funny, and men, who happen to be people, are no exception.

Men, you see, have these things called beards, and there are definitely more men running around with facial hair these days. A lot of women go through a lot of trouble to get rid of a lot of their hair. But men, well, we grow extra hair. Why?

That’s what Honest Amish, a maker of beard-care products, wanted to know. They commissioned OnePoll to conduct a survey of 2,000 Americans, both men and women, to learn all kinds of things about beards.

So what did they find? Facial hair confidence, that’s what. Three-quarters of men said that a beard made them feel more confident than did a bare face, and 73% said that facial hair makes a man more attractive. That number was a bit lower among women, 63% of whom said that facial hair made a man more attractive.

That doesn’t seem very funny, does it? We’re getting there.

Male respondents also were asked what they would do to get the perfect beard: 40% would be willing to spend a night in jail or give up coffee for a year, and 38% would stand in line at the DMV for an entire day. Somewhat less popular responses included giving up sex for a year (22%) – seems like a waste of all that new-found confidence – and shaving their heads (18%).

And that, we don’t mind saying, is a hair-raising conclusion.

If I only had a nose

Deaf and blind people get all the attention. Special schools, Braille, sign language, even a pinball-focused rock opera. And it is easy to see why: Those senses are kind of important when it comes to navigating the world. But what if you have to live without one of the less-cool senses? What if the nose doesn’t know?

According to research published in Clinical Otolaryngology, up to 5% of the world’s population has some sort of smell disorder, preventing them from either smelling correctly or smelling anything at all. And the effects of this on everyday life are drastic.

In a survey of 71 people with smell disorders, the researchers found that patients experience a smorgasbord of negative effects, ranging from poor hazard perception and a poor sense of personal hygiene to an inability to enjoy food and to link smells to happy memories. The whiff of gingerbread on Christmas morning, the smoke of a bonfire on a summer evening: The smell-deprived miss out on them all. The negative emotions those people experience read like a recipe for your very own homemade Sith lord: sadness, regret, isolation, anxiety, anger, frustration. A path to the dark side, losing your scent is.

Speaking of fictional bad guys, this nasal-based research really could have benefited one Lord Volde ... fine, You-Know-Who. Just look at that face. That’s a man who can’t smell. You can’t tell us he wouldn’t have turned out better if only Dorothy had picked him up on the yellow brick road instead of some dumb scarecrow.

Zika virus: Birth defects rose fourfold in U.S. hardest-hit areas

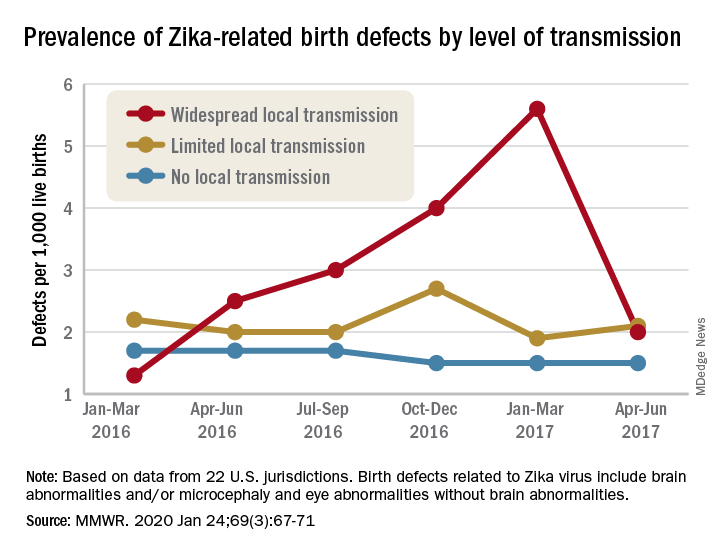

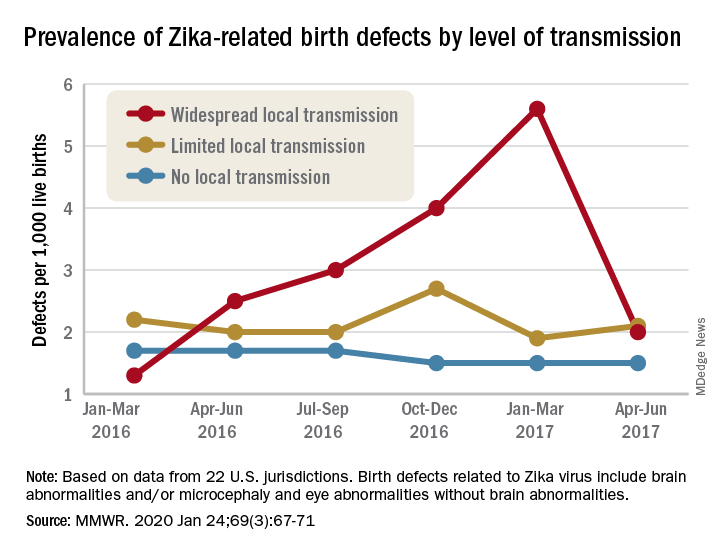

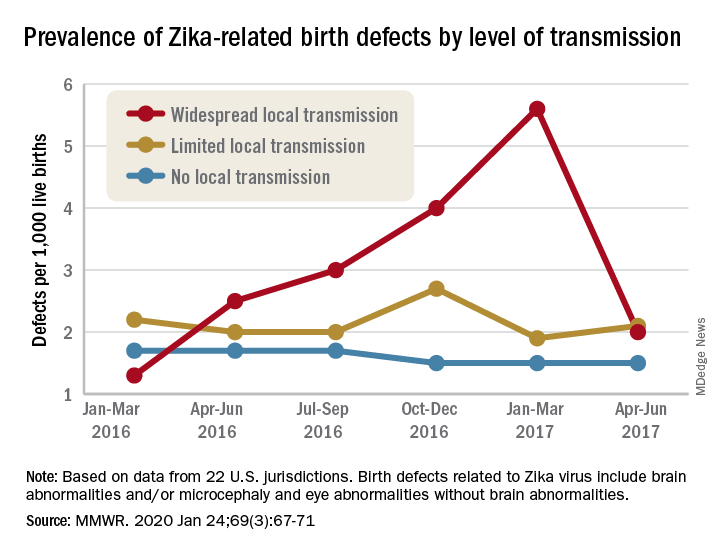

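Birth defects potentially related to Zika virus infection rose fourfold in the U.S. areas hardest hit by the outbreak, according to the Centers for Disease Control and Prevention.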

That spike in the prevalence of brain abnormalities and/or microcephaly, or of eye abnormalities without brain abnormalities, came during January through March 2017, about 6 months after the Zika outbreak’s reported peak in the jurisdictions with widespread local transmission, Puerto Rico and the U.S. Virgin Islands, wrote Ashley N. Smoots, MPH, of the CDC’s National Center on Birth Defects and Developmental Disabilities, and associates in the Morbidity and Mortality Weekly Report.

In those two territories, the prevalence of birth defects potentially related to Zika virus infection was 5.6 per 1,000 live births during January through March 2017, compared with 1.3 per 1,000 in January through March 2016, they reported.

In the southern areas of Florida and Texas, where there was limited local Zika transmission, the highest prevalence of birth defects, 2.7 per 1,000, occurred during October through December 2016 and was only slightly greater than the baseline rate of 2.2 per 1,000 in January through March 2016, the investigators reported.

Among the other 19 jurisdictions (including Illinois, Louisiana, New Jersey, South Carolina, and Virginia) involved in the analysis, the rate of Zika virus–related birth defects never rose above the 1.7 per 1,000 recorded at the start of the study period in January through March 2016, they said.
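The "fourfold" figure follows from the two quarterly prevalences reported for Puerto Rico and the U.S. Virgin Islands. A minimal Python sketch of that arithmetic, assuming the comparison intended is a simple rate ratio (peak-quarter prevalence divided by the January through March 2016 baseline); the helper name `rate_ratio` is illustrative and does not come from the report:

```python
# Rate ratios for the Zika-related birth defect prevalences quoted above
# (cases per 1,000 live births; peak quarter vs. January-March 2016 baseline).

def rate_ratio(peak: float, baseline: float) -> float:
    """Return how many times larger the peak prevalence is than the baseline."""
    if baseline <= 0:
        raise ValueError("baseline prevalence must be positive")
    return peak / baseline

# Puerto Rico and the U.S. Virgin Islands: Jan-Mar 2017 vs. Jan-Mar 2016
territories = rate_ratio(5.6, 1.3)
# Southern Florida and Texas: Oct-Dec 2016 vs. Jan-Mar 2016
fl_tx = rate_ratio(2.7, 2.2)

print(f"PR/USVI: {territories:.1f}x")  # PR/USVI: 4.3x
print(f"FL/TX:   {fl_tx:.2f}x")        # FL/TX:   1.23x
```

The same calculation puts southern Florida and Texas at roughly 1.2 times baseline, which is why that increase is described as only slight.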

“Population-based birth defects surveillance is critical for identifying infants and fetuses with birth defects potentially related to Zika virus regardless of whether Zika virus testing was conducted, especially given the high prevalence of asymptomatic disease. These data can be used to inform follow-up care and services as well as strengthen surveillance,” the investigators wrote.

SOURCE: Smoots AN et al. MMWR. 2020 Jan 24;69(3):67-71.

FROM MMWR

Infant deaths from birth defects decline, but some disparities widen

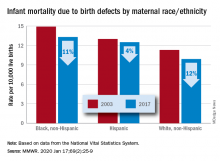

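Infant mortality attributable to birth defects (IMBD) declined in the United States from 2003 to 2017, but some disparities widened, according to the Centers for Disease Control and Prevention.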

The total rate of IMBD dropped from 12.2 cases per 10,000 live births in 2003 to 11 cases per 10,000 in 2017, with decreases occurring “across the categories of maternal race/ethnicity, infant sex, and infant age at death,” Lynn M. Almli, PhD, of the CDC’s National Center on Birth Defects and Developmental Disabilities and associates wrote in the Morbidity and Mortality Weekly Report.
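For scale, the drop from 12.2 to 11 cases per 10,000 live births is a relative decline of just under 10%. A minimal Python sketch of that percent-change calculation; the helper name `percent_change` is illustrative, not taken from the report:

```python
def percent_change(start: float, end: float) -> float:
    """Percent change from start to end; negative values mean a decline."""
    if start == 0:
        raise ValueError("start value must be nonzero")
    return (end - start) / start * 100

# Total IMBD rate, cases per 10,000 live births (2003 vs. 2017)
change = percent_change(12.2, 11.0)
print(f"{change:.1f}%")  # -9.8%
```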

Rates were down for infants of white non-Hispanic, black non-Hispanic, and Hispanic mothers, but disparities among races/ethnicities persisted or even increased. The IMBD rate for infants born to Hispanic mothers, which was 15% higher than that of infants born to white mothers in 2003, was 26% higher by 2017. The gap between infants born to black mothers and those born to white mothers widened from 32% in 2003 to 34% in 2017, the investigators reported.
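How can every group's rate fall while the relative gap grows? It happens whenever the reference group's rate falls faster. A minimal Python sketch, assuming "X% higher" means the ratio of the two groups' rates minus 1. The rates below are hypothetical, chosen only so that the excess moves from 15% to 26% as described; they are not the report's group-specific rates:

```python
def relative_excess(group_rate: float, reference_rate: float) -> float:
    """Percent by which group_rate exceeds reference_rate."""
    if reference_rate <= 0:
        raise ValueError("reference rate must be positive")
    return (group_rate / reference_rate - 1) * 100

# HYPOTHETICAL rates per 10,000 live births, for illustration only.
ref_start, ref_end = 10.0, 8.0    # reference group falls by 20%
grp_start, grp_end = 11.5, 10.08  # comparison group falls by about 12%

print(f"{relative_excess(grp_start, ref_start):.0f}%")  # 15%
print(f"{relative_excess(grp_end, ref_end):.0f}%")      # 26%
```

Both hypothetical rates decline, yet the comparison group's relative excess still rises, which matches the pattern the investigators describe.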

The disparities were even greater among subgroups of infants categorized by gestational age. From 2003 to 2017, IMBD rates dropped by 20% for infants in the youngest group (20-27 weeks), 25% for infants in the oldest group (41-44 weeks), and 29% among those born at 39-40 weeks, they said.

For moderate- and late-preterm infants, however, IMBD rates went up: Infants born at 32-33 weeks and 34-36 weeks each had an increase of 17% over the study period, Dr. Almli and associates noted, based on data from the National Vital Statistics System.

“The observed differences in IMBD rates by race/ethnicity might be influenced by access to and utilization of health care before and during pregnancy, prenatal screening, losses of pregnancies with fetal anomalies, and insurance type,” they wrote, and trends by gestational age “could be influenced by the quantity and quality of care for infants born before 30 weeks’ gestation, compared with that of those born closer to term.”

Birth defects occur in approximately 3% of all births in the United States but accounted for 20% of infant deaths during 2003-2017, the investigators wrote, suggesting that “the results from this analysis can inform future research into areas where efforts to reduce IMBD rates are needed.”

SOURCE: Almli LM et al. MMWR. 2020 Jan 17;69(2):25-9.
