
Alzheimer’s: Biomarkers, not cognition, will now define disorder

A new definition of Alzheimer’s disease based solely on biomarkers has the potential to strengthen clinical trials and change the way physicians talk to patients.

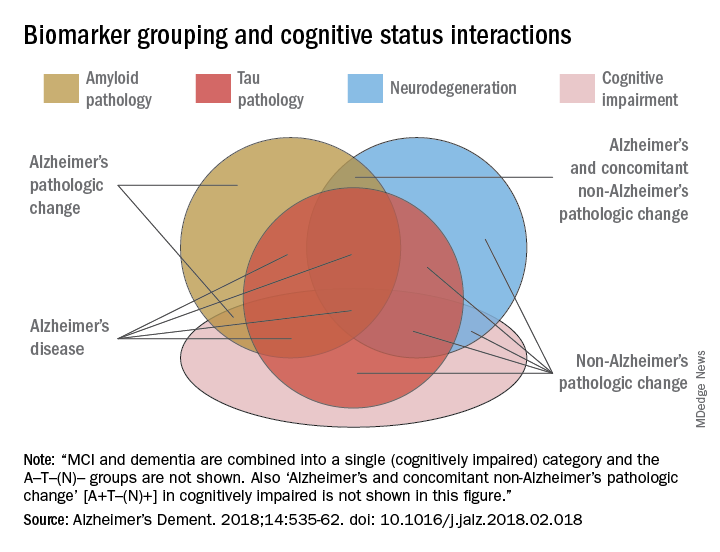

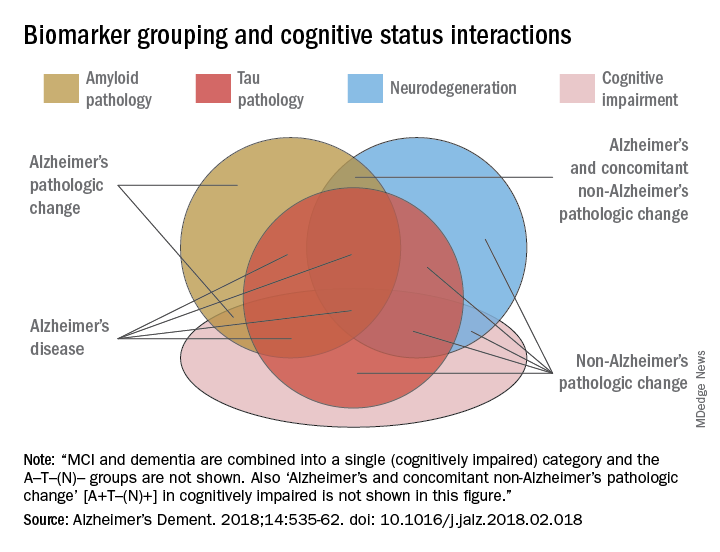

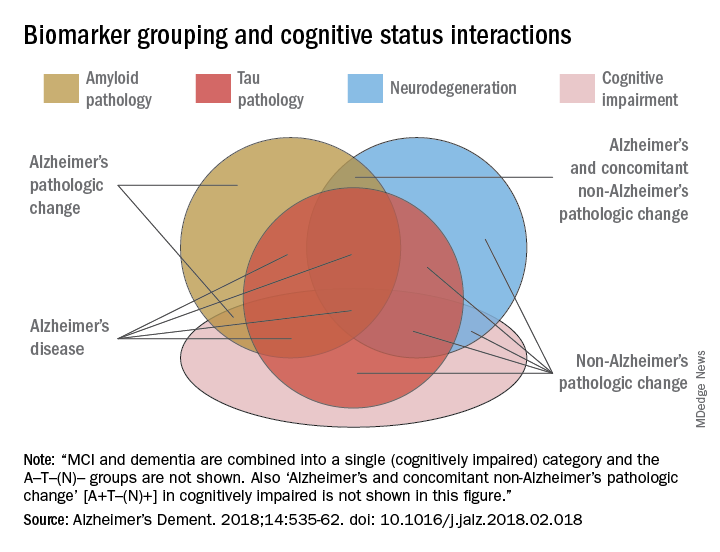

Amyloid beta (Aβ) is the key to this classification paradigm: any patient with it (A+) is on the Alzheimer’s continuum. But only those with both amyloid and tau in the brain (A+T+) receive the “Alzheimer’s disease” classification. A third biomarker, neurodegeneration, may be either present or absent in an Alzheimer’s disease profile (N+ or N-). Cognitive staging adds important details but remains secondary to the biomarker classification.

Jointly created by the National Institute on Aging (NIA) and the Alzheimer’s Association, the system, dubbed the NIA-AA Research Framework, represents a new, common language that researchers around the world may now use to generate and test Alzheimer’s hypotheses and to optimize both epidemiologic studies and interventional trials. It will be especially important as Alzheimer’s prevention trials seek to target patients who are cognitively normal yet harbor the neuropathological hallmarks of the disease.

This recasting adds Alzheimer’s to the list of biomarker-defined disorders, including hypertension, diabetes, and hyperlipidemia. It is a timely and necessary reframing, said Clifford Jack, MD, chair of the 20-member committee that created the paradigm. The framework appears in the April 10 issue of Alzheimer’s & Dementia.

“This is a fundamental change in the definition of Alzheimer’s disease,” Dr. Jack said in an interview. “We are advocating the disease be defined by its neuropathology [of plaques and tangles], which is specific to Alzheimer’s, and no longer by clinical symptoms which are not specific for any disease.”

One of the primary intents is to refine AD research cohorts, allowing cleaner stratification of patients who actually have the intended therapeutic targets, amyloid beta or tau. Without biomarker screening, up to 30% of subjects who enroll in AD drug trials don’t have the target pathologies, a situation researchers say contributes to the long string of failed Alzheimer’s drug studies.

For now, the system is intended only for research settings, said Dr. Jack, an Alzheimer’s investigator at the Mayo Clinic, Rochester, Minn. But as biomarker testing comes of age and new, less-expensive markers are discovered, the paradigm will likely be incorporated into clinical practice. The process can begin even now with a simple change in the way doctors talk to patients about Alzheimer’s, he said.

“We advocate people stop using the terms ‘probable or possible AD.’ A better term is ‘Alzheimer’s clinical syndrome.’ Without biomarkers, the clinical syndrome is the only thing you can know. What you can’t know is whether they do or don’t have Alzheimer’s disease. When I’m asked by physicians, ‘What do I tell my patients now?’ my very direct answer is ‘Tell them the truth.’ And the truth is that they have Alzheimer’s clinical syndrome and may or may not have Alzheimer’s disease.”

A reflection of evolving science

The research framework reflects advances in Alzheimer’s science that have occurred since the NIA last updated its AD diagnostic criteria in 2011. Those criteria divided the disease continuum into three phases largely based on cognitive symptoms, but they were the first to recognize a presymptomatic AD phase.

- Preclinical: Brain changes, including amyloid buildup and other nerve cell changes, may already be in progress, but significant clinical symptoms are not yet evident.

- Mild cognitive impairment (MCI): A stage marked by symptoms of memory and/or other thinking problems that are greater than normal for a person’s age and education but that do not interfere with his or her independence. MCI may or may not progress to Alzheimer’s dementia.

- Alzheimer’s dementia: The final stage of the disease in which the symptoms of Alzheimer’s, such as memory loss, word-finding difficulties, and visual/spatial problems, are significant enough to impair a person’s ability to function independently.

The next 6 years brought striking advances in understanding the biology and pathology of AD, as well as technical advances in biomarker measurement. It became possible not only to measure Aβ and tau in cerebrospinal fluid but also to see these proteins in living brains with specialized PET ligands. It also became obvious that about a third of subjects in any given AD study didn’t have the disease-defining brain plaques and tangles, the therapeutic targets of all the largest drug studies to date. None of the interventions tested in trials so far has shown a significant benefit, but “treating people for a disease they don’t have can’t possibly help the results,” Dr. Jack said.

These research observations and revolutionary biomarker advances have reshaped the way researchers think about AD. To maximize research potential and to create a global classification standard that would unify studies, NIA and the Alzheimer’s Association convened several meetings to redefine Alzheimer’s disease biologically, by pathologic brain changes as measured by biomarkers. In this paradigm, cognitive dysfunction steps aside as the primary classification driver, becoming a symptom of AD rather than its definition.

“The way AD has historically been defined is by clinical symptoms: a progressive amnestic dementia was Alzheimer’s, and if there was no progressive amnestic dementia, it wasn’t,” Dr. Jack said. “Well, it turns out that both of those statements are wrong. About 30% of people with progressive amnestic dementia have other things causing it.”

It makes much more sense, he said, to define the disease based on its unique neuropathologic signature: amyloid beta plaques and tau neurofibrillary tangles in the brain.

The three-part key: A/T(N)

The NIA-AA research framework yields eight biomarker profiles with different combinations of amyloid (A), tau (T), and neurodegeneration or neuronal injury (N).

“Different measures have different roles,” Dr. Jack and his colleagues wrote in Alzheimer’s & Dementia. “Amyloid beta biomarkers determine whether or not an individual is in the Alzheimer’s continuum. Pathologic tau biomarkers determine if someone who is in the Alzheimer’s continuum has AD, because both amyloid beta and tau are required for a neuropathologic diagnosis of the disease. Neurodegenerative/neuronal injury biomarkers and cognitive symptoms, neither of which is specific for AD, are used only to stage severity, not to define the presence of the Alzheimer’s continuum.”

The “N” category is not as cut and dried as the other biomarkers, the paper noted.

“Biomarkers in the (N) group are indicators of neurodegeneration or neuronal injury resulting from many causes; they are not specific for neurodegeneration due to AD. In any individual, the proportion of observed neurodegeneration/injury that can be attributed to AD versus other possible comorbid conditions (most of which have no extant biomarker) is unknown.”

The biomarker profiles are:

- A-T-(N)-: Normal AD biomarkers

- A+T-(N)-: Alzheimer’s pathologic change; Alzheimer’s continuum

- A+T+(N)-: Alzheimer’s disease; Alzheimer’s continuum

- A+T+(N)+: Alzheimer’s disease; Alzheimer’s continuum

- A+T-(N)+: Alzheimer’s with suspected non-Alzheimer’s pathologic change; Alzheimer’s continuum

- A-T+(N)-: Non-AD pathologic change

- A-T-(N)+: Non-AD pathologic change

- A-T+(N)+: Non-AD pathologic change

“This latter biomarker profile implies evidence of one or more neuropathologic processes other than AD and has been labeled ‘suspected non-Alzheimer’s pathophysiology,’ or SNAP,” according to the paper.
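The roles quoted above (amyloid places a person on the continuum, tau decides whether that person has AD, and (N) stages severity while also flagging suspected non-Alzheimer’s change when amyloid is present without tau) amount to a small decision procedure. The Python sketch below is a hypothetical illustration, not code published with the framework: it assumes each biomarker has already been dichotomized as normal (-) or abnormal (+) using whatever cutoff a given study applies, and the names `ATNProfile`, `shorthand`, and `categorize` are invented for this example.

```python
from itertools import product
from typing import NamedTuple


class ATNProfile(NamedTuple):
    """Dichotomized biomarker status; True means abnormal (+).

    Hypothetical data structure for illustration only.
    """

    amyloid: bool            # A: amyloid beta
    tau: bool                # T: pathologic tau
    neurodegeneration: bool  # (N): neurodegeneration/neuronal injury


def _sign(abnormal: bool) -> str:
    return "+" if abnormal else "-"


def shorthand(p: ATNProfile) -> str:
    """Render a profile in the A/T(N) shorthand, e.g. 'A+T-(N)+'."""
    return f"A{_sign(p.amyloid)}T{_sign(p.tau)}(N){_sign(p.neurodegeneration)}"


def categorize(p: ATNProfile) -> str:
    """Map a profile to its biomarker category and continuum status."""
    if not p.amyloid:
        # Amyloid-negative: not on the Alzheimer's continuum.
        if p.tau or p.neurodegeneration:
            return "Non-AD pathologic change"
        return "Normal AD biomarkers"
    # Amyloid-positive: on the Alzheimer's continuum.
    if p.tau:
        # Both amyloid and tau are required for AD; (N) only stages severity.
        return "Alzheimer's disease; Alzheimer's continuum"
    if p.neurodegeneration:
        return ("Alzheimer's with suspected non-Alzheimer's pathologic change; "
                "Alzheimer's continuum")
    return "Alzheimer's pathologic change; Alzheimer's continuum"


if __name__ == "__main__":
    # Enumerate all 2 x 2 x 2 = 8 combinations of A, T, and (N).
    for a, t, n in product((False, True), repeat=3):
        profile = ATNProfile(a, t, n)
        print(f"{shorthand(profile)}: {categorize(profile)}")
```

Running the module prints all eight combinations. In this sketch, (N) never changes the category of a tau-positive profile, which mirrors the quoted rule that tau, not neurodegeneration, decides whether someone on the continuum has AD.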

Cognitive staging further refines each person’s status. There are two clinical staging schemes in the framework. One is the familiar syndromal staging system of cognitively unimpaired, MCI, and dementia, with dementia further subdivided into mild, moderate, and severe. It can be applied to anyone with a biomarker profile.

The second, a six-stage numerical clinical staging scheme, will apply only to those who are amyloid-positive and on the Alzheimer’s continuum. Stages run from 1 (unimpaired) to 6 (severe dementia). The numeric staging does not concentrate solely on cognition but also takes into account neurobehavioral and functional symptoms. It includes a transitional stage during which measures may be within population norms but have declined relative to the individual’s past performance.

The numeric staging scheme is intended to mesh with FDA guidance for clinical trial outcomes, the committee noted.

“A useful application envisioned for this numeric cognitive staging scheme is interventional trials. Indeed, the NIA-AA numeric staging scheme is intentionally very similar to the categorical system for staging AD outlined in recent FDA guidance for industry pertaining to developing drugs for treatment of early AD … it was our belief that harmonizing this aspect of the framework with FDA guidance would enhance cross fertilization between observational and interventional studies, which in turn would facilitate conduct of interventional clinical trials early in the disease process.”

The system yields a compact shorthand for each subject’s biomarker profile. For example, an A+T-(N)+ MCI profile suggests that both Alzheimer’s and non-Alzheimer’s pathologic change may be contributing to the cognitive impairment. A numeric cognitive stage could also be added.

This biomarker profile shorthand also makes it possible to avoid traditional AD nomenclature altogether, the committee noted.

“Some investigators may prefer to not use the biomarker category terminology but instead simply report biomarker profile, i.e., A+T+(N)+ instead of ‘Alzheimer’s disease.’ An alternative is to combine the biomarker profile with a descriptive term – for example, ‘A+T+(N)+ with dementia’ instead of ‘Alzheimer’s disease with dementia’.”
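The shorthand described above, a biomarker profile followed by a syndromal stage and, for amyloid-positive individuals only, an optional numeric stage from 1 to 6, can be sketched as a small formatting function. This is a hypothetical Python illustration: the `describe` function, the `SyndromalStage` enum, and the output format (for example `"A+T-(N)+ MCI"`, with `", numeric stage N"` appended when a numeric stage is given) are assumptions made for this example, and the code does not check that the syndromal and numeric stages agree with each other.

```python
import re
from enum import Enum
from typing import Optional

# Hypothetical pattern for the ASCII shorthand, e.g. "A+T-(N)+".
ATN_PATTERN = re.compile(r"A([+-])T([+-])\(N\)([+-])")


class SyndromalStage(Enum):
    """Syndromal staging; usable with any biomarker profile."""

    COGNITIVELY_UNIMPAIRED = "cognitively unimpaired"
    MCI = "MCI"
    MILD_DEMENTIA = "mild dementia"
    MODERATE_DEMENTIA = "moderate dementia"
    SEVERE_DEMENTIA = "severe dementia"


def describe(atn: str, stage: SyndromalStage,
             numeric_stage: Optional[int] = None) -> str:
    """Combine a biomarker profile with clinical staging into one label."""
    match = ATN_PATTERN.fullmatch(atn)
    if match is None:
        raise ValueError(f"not an A/T(N) profile: {atn!r}")
    result = f"{atn} {stage.value}"
    if numeric_stage is not None:
        # Numeric staging is reserved for amyloid-positive individuals.
        if match.group(1) != "+":
            raise ValueError("numeric staging applies only when A is positive")
        if not 1 <= numeric_stage <= 6:
            raise ValueError("numeric stage must be between 1 and 6")
        result += f", numeric stage {numeric_stage}"
    return result


if __name__ == "__main__":
    print(describe("A+T-(N)+", SyndromalStage.MCI))  # A+T-(N)+ MCI
    print(describe("A+T+(N)+", SyndromalStage.MILD_DEMENTIA, numeric_stage=4))
```

Rejecting a numeric stage for amyloid-negative profiles mirrors the framework’s rule that the six-stage scheme applies only to people on the Alzheimer’s continuum, while the syndromal stage is accepted for any profile.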

Again, Dr. Jack cautioned, the paradigm is not intended for clinical use – at least not now. It relies entirely on biomarkers obtained by methods that are either invasive (lumbar puncture), unavailable outside research settings (tau scans), or very expensive when privately obtained (amyloid scans). Until this situation changes, the biomarker profile paradigm has little clinical impact.

IDEAS on the horizon

Change may be coming, however. The Alzheimer’s Association-sponsored Imaging Dementia–Evidence for Amyloid Scanning (IDEAS) study is assessing the clinical usefulness of amyloid PET scans and their impact on patient outcomes. The goal is to accumulate enough data to prove that amyloid scans are a cost-effective addition to the management of dementia patients. If federal payers agree and decide to cover amyloid scans, advocates hope that private insurers might follow suit.

An interim analysis of 4,000 scans, presented at the 2017 Alzheimer’s Association International Conference, was quite positive. Scan results changed patient management in 68% of cases, including refined dementia diagnoses; added, stopped, or switched medications; and altered patient counseling.

IDEAS uses an FDA-approved amyloid imaging agent, but no tau PET ligand has been approved, although several are under investigation. Other less-invasive and less-costly options may soon be developed, the committee noted. The search continues for a validated blood-based biomarker; candidates include neurofilament light protein, plasma amyloid beta, and plasma tau.

“In the future, less-invasive/less-expensive blood-based biomarker tests - along with genetics, clinical, and demographic information - will likely play an important screening role in selecting individuals for more-expensive/more-invasive biomarker testing. This has been the history in other biologically defined diseases such as cardiovascular disease,” Dr. Jack and his colleagues noted in the paper.

Without an effective treatment, however, much of the information conveyed by the biomarker profile paradigm remains, literally, academic, Dr. Jack said.

“If [the biomarker profile] were easy to determine and inexpensive, I imagine a lot of people would ask for it. Certainly many people would want to know, especially if they have a cognitive problem. People who have a family history, who may have Alzheimer’s pathology without the symptoms, might want to know. But the reality is that, until there’s a treatment that alters the course of this disease, finding out that you actually have Alzheimer’s is not going to enable you to change anything.”

The editors of Alzheimer’s & Dementia are seeking comment on the research framework. Letters and commentary can be submitted through June and will be considered for publication in an e-book, to be published sometime this summer, according to an accompanying editorial (https://doi.org/10.1016/j.jalz.2018.03.003).

Alzheimer’s & Dementia is the official journal of the Alzheimer’s Association. Dr. Jack has served on scientific advisory boards for Elan/Janssen AI, Bristol-Myers Squibb, Eli Lilly, GE Healthcare, Siemens, and Eisai; received research support from Baxter International and Allon Therapeutics; and holds stock in Johnson & Johnson. Disclosures for other committee members are available online.

SOURCE: Jack CR et al. Alzheimer’s Dement. 2018;14:535-62. doi: 10.1016/j.jalz.2018.02.018.

The biologically defined amyloid beta–tau–neuronal damage (ATN) framework is a logical and modern approach to Alzheimer’s disease (AD) diagnosis. It is hard to argue that more data are bad. Having such data on every patient would certainly be a luxury, but, with a few notable exceptions, this will most frequently occur within clinical trials.

While having this information does provide a biological basis for diagnosis, it does not account for non-AD contributions to the patient’s symptoms. Non-AD pathologies are found at autopsy in more than half of all AD patients, and they also can influence clinical trial outcomes.

It also seems a bit ironic that the only meaningful manifestation of AD is now essentially left out of the diagnostic framework or relegated to nothing more than an adjective. Yet having a head full of amyloid means little if a person does not express symptoms (and vice versa), and we know that not all people progress in the same way.

In the future, genomic and exposomic profiles may provide an even-more-nuanced picture, but further work is needed before that becomes a clinical reality. For now, the ATN biomarker framework represents the state of the art, though not an end.

Richard J. Caselli, MD, is professor of neurology at the Mayo Clinic Arizona in Scottsdale. He is also associate director and clinical core director of the Arizona Alzheimer’s Disease Center. He has no relevant disclosures.

The biologically defined amyloid beta–tau–neuronal damage (ATN) framework is a logical and modern approach to Alzheimer’s disease (AD) diagnosis. It is hard to argue that more data are bad. Having such data on every patient would certainly be a luxury, but, with a few notable exceptions, the context in which this will most frequently occur is within the context of clinical trials.

While having this information does provide a biological basis for diagnosis, it does not account for non-AD contributions to the patient’s symptoms, which are found in more than half of all AD patients at autopsy; these non-AD pathologies also can influence clinical trial outcomes.

It also seems a bit ironic that the only meaningful manifestation of AD is now essentially left out of the diagnostic framework or relegated to nothing more than an adjective. Yet having a head full of amyloid means little if a person does not express symptoms (and vice versa), and we know that all people do not progress in the same way.

In the future, genomic and exposomic profiles may provide an even-more-nuanced picture, but further work is needed before that becomes a clinical reality. For now, the ATN biomarker framework represents the state of the art, though not an end.

Richard J. Caselli, MD, is professor of neurology at the Mayo Clinic Arizona in Scottsdale. He is also associate director and clinical core director of the Arizona Alzheimer’s Disease Center. He has no relevant disclosures.

The biologically defined amyloid beta–tau–neuronal damage (ATN) framework is a logical and modern approach to Alzheimer’s disease (AD) diagnosis. It is hard to argue that more data are bad. Having such data on every patient would certainly be a luxury, but, with a few notable exceptions, the context in which this will most frequently occur is within the context of clinical trials.

While having this information does provide a biological basis for diagnosis, it does not account for non-AD contributions to the patient’s symptoms, which are found in more than half of all AD patients at autopsy; these non-AD pathologies also can influence clinical trial outcomes.

It also seems a bit ironic that the only meaningful manifestation of AD is now essentially left out of the diagnostic framework or relegated to nothing more than an adjective. Yet having a head full of amyloid means little if a person does not express symptoms (and vice versa), and we know that all people do not progress in the same way.

In the future, genomic and exposomic profiles may provide an even-more-nuanced picture, but further work is needed before that becomes a clinical reality. For now, the ATN biomarker framework represents the state of the art, though not an end.

Richard J. Caselli, MD, is professor of neurology at the Mayo Clinic Arizona in Scottsdale. He is also associate director and clinical core director of the Arizona Alzheimer’s Disease Center. He has no relevant disclosures.

A new definition of Alzheimer’s disease based solely on biomarkers has the potential to strengthen clinical trials and change the way physicians talk to patients.

AB is the key to this classification paradigm – any patient with it (A+) is on the Alzheimer’s continuum. But only those with both amyloid and tau in the brain (A+T+) receive the “Alzheimer’s disease” classification. A third biomarker, neurodegeneration, may be either present or absent for an Alzheimer’s disease profile (N+ or N-). Cognitive staging adds important details, but remains secondary to the biomarker classification.

Jointly created by National Institute on Aging and the Alzheimer’s Association, the system – dubbed the NIA-AA Research Framework – represents a new, common language that researchers around the world may now use to generate and test Alzheimer’s hypotheses, and to optimize both epidemiologic studies and interventional trials. It will be especially important as Alzheimer’s prevention trials seek to target patients who are cognitively normal, yet harbor the neuropathological hallmarks of the disease.

This recasting adds Alzheimer’s to the list of biomarker-defined disorders, including hypertension, diabetes, and hyperlipidemia. It is a timely and necessary reframing, said Clifford Jack, MD, chair of the 20-member committee that created the paradigm. It appears in the April 10 issue of Alzheimer’s & Dementia.

“This is a fundamental change in the definition of Alzheimer’s disease,” Dr. Jack said in an interview. “We are advocating the disease be defined by its neuropathology [of plaques and tangles], which is specific to Alzheimer’s, and no longer by clinical symptoms which are not specific for any disease.”

One of the primary intents is to refine AD research cohorts, allowing pure stratification of patients who actually have the intended therapeutic targets of amyloid beta or tau. Without biomarker screening, up to 30% of subjects who enroll in AD drug trials don’t have the target pathologies – a situation researchers say contributes to the long string of failed Alzheimer’s drug studies.

For now, the system is intended only for research settings said Dr. Jack, an Alzheimer’s investigator at the Mayo Clinic, Rochester, Minn. But as biomarker testing comes of age and new less-expensive markers are discovered, the paradigm will likely be incorporated into clinical practice. The process can begin even now with a simple change in the way doctors talk to patients about Alzheimer’s, he said in an interview.

“We advocate people stop using the terms ‘probable or possible AD.’ A better term is ‘Alzheimer’s clinical syndrome.’ Without biomarkers, the clinical syndrome is the only thing you can know. What you can’t know is whether they do or don’t have Alzheimer’s disease. When I’m asked by physicians, ‘What do I tell my patients now?’ my very direct answer is ‘Tell them the truth.’ And the truth is that they have Alzheimer’s clinical syndrome and may or may not have Alzheimer’s disease.”

A reflection of evolving science

The research framework reflects advances in Alzheimer’s science that have occurred since the NIA last updated it AD diagnostic criteria in 2011. Those criteria divided the disease continuum into three phases largely based on cognitive symptoms, but were the first to recognize a presymptomatic AD phase.

- Preclinical: Brain changes, including amyloid buildup and other nerve cell changes already may be in progress but significant clinical symptoms are not yet evident.

- Mild cognitive impairment (MCI): A stage marked by symptoms of memory and/or other thinking problems that are greater than normal for a person’s age and education but that do not interfere with his or her independence. MCI may or may not progress to Alzheimer’s dementia.

- Alzheimer’s dementia: The final stage of the disease in which the symptoms of Alzheimer’s, such as memory loss, word-finding difficulties, and visual/spatial problems, are significant enough to impair a person’s ability to function independently.

The next 6 years brought striking advances in understanding the biology and pathology of AD, as well as technical advances in biomarker measurements. It became possible not only to measure AB and tau in cerebrospinal fluid but also to see these proteins in living brains with specialized PET ligands. It also became obvious that about a third of subjects in any given AD study didn’t have the disease-defining brain plaques and tangles – the therapeutic targets of all the largest drug studies to date. And while it’s clear that none of the interventions that have been through trials have exerted a significant benefit yet, “Treating people for a disease they don’t have can’t possibly help the results,” Dr. Jack said.

These research observations and revolutionary biomarker advances have reshaped the way researchers think about AD. To maximize research potential and to create a global classification standard that would unify studies as well, NIA and the Alzheimer’s Association convened several meetings to redefine Alzheimer’s disease biologically, by pathologic brain changes as measured by biomarkers. In this paradigm, cognitive dysfunction steps aside as the primary classification driver, becoming a symptom of AD rather than its definition.

“The way AD has historically been defined is by clinical symptoms: a progressive amnestic dementia was Alzheimer’s, and if there was no progressive amnestic dementia, it wasn’t,” Dr. Jack said. “Well, it turns out that both of those statements are wrong. About 30% of people with progressive amnestic dementia have other things causing it.”

It makes much more sense, he said, to define the disease based on its unique neuropathologic signature: amyloid beta plaques and tau neurofibrillary tangles in the brain.

The three-part key: A/T(N)

The NIA-AA research framework yields eight biomarker profiles with different combinations of amyloid (A), tau (T), and neuropathologic damage (N).

“Different measures have different roles,” Dr. Jack and his colleagues wrote in Alzheimer’s & Dementia. “Amyloid beta biomarkers determine whether or not an individual is in the Alzheimer’s continuum. Pathologic tau biomarkers determine if someone who is in the Alzheimer’s continuum has AD, because both amyloid beta and tau are required for a neuropathologic diagnosis of the disease. Neurodegenerative/neuronal injury biomarkers and cognitive symptoms, neither of which is specific for AD, are used only to stage severity not to define the presence of the Alzheimer’s continuum.”

The “N” category is not as cut and dried at the other biomarkers, the paper noted.

“Biomarkers in the (N) group are indicators of neurodegeneration or neuronal injury resulting from many causes; they are not specific for neurodegeneration due to AD. In any individual, the proportion of observed neurodegeneration/injury that can be attributed to AD versus other possible comorbid conditions (most of which have no extant biomarker) is unknown.”

The biomarker profiles are:

- A-T-(N): Normal AD biomarkers

- A+T-(N): Alzheimer’s pathologic change; Alzheimer’s continuum

- A+T+(N): Alzheimer’s disease; Alzheimer’s continuum

- A+T-(N)+: Alzheimer’s with suspected non Alzheimer’s pathologic change; Alzheimer’s continuum

- A-T+(N)-: Non-AD pathologic change

- A-T-(N)+: Non-AD pathologic change

- A-T+(N)+: Non-AD pathologic change

“This latter biomarker profile implies evidence of one or more neuropathologic processes other than AD and has been labeled ‘suspected non-Alzheimer’s pathophysiology, or SNAP,” according to the paper.

Cognitive staging further refines each person’s status. There are two clinical staging schemes in the framework. One is the familiar syndromal staging system of cognitively unimpaired, MCI, and dementia, which can be subdivided into mild, moderate, and severe. This can be applied to anyone with a biomarker profile.

The second, a six-stage numerical clinical staging scheme, will apply only to those who are amyloid-positive and on the Alzheimer’s continuum. Stages run from 1 (unimpaired) to 6 (severe dementia). The numeric staging does not concentrate solely on cognition but also takes into account neurobehavioral and functional symptoms. It includes a transitional stage during which measures may be within population norms but have declined relative to the individual’s past performance.

The numeric staging scheme is intended to mesh with FDA guidance for clinical trials outcomes, the committee noted.

“A useful application envisioned for this numeric cognitive staging scheme is interventional trials. Indeed, the NIA-AA numeric staging scheme is intentionally very similar to the categorical system for staging AD outlined in recent FDA guidance for industry pertaining to developing drugs for treatment of early AD … it was our belief that harmonizing this aspect of the framework with FDA guidance would enhance cross fertilization between observational and interventional studies, which in turn would facilitate conduct of interventional clinical trials early in the disease process.”

The entire system yields a shorthand biomarker profile entirely unique to each subject. For example an A+T-(N)+ MCI profile suggests that both Alzheimer’s and non-Alzheimer’s pathologic change may be contributing to the cognitive impairment. A cognitive staging number could also be added.

This biomarker profile introduces the option of completely avoiding traditional AD nomenclature, the committee noted.

“Some investigators may prefer to not use the biomarker category terminology but instead simply report biomarker profile, i.e., A+T+(N)+ instead of ‘Alzheimer’s disease.’ An alternative is to combine the biomarker profile with a descriptive term – for example, ‘A+T+(N)+ with dementia’ instead of ‘Alzheimer’s disease with dementia’.”

Again, Dr. Jack cautioned, the paradigm is not intended for clinical use – at least not now. It relies entirely on biomarkers obtained by methods that are either invasive (lumbar puncture), unavailable outside research settings (tau scans), or very expensive when privately obtained (amyloid scans). Until this situation changes, the biomarker profile paradigm has little clinical impact.

IDEAS on the horizon

Change may be coming, however. The Alzheimer’s Association-sponsored Imaging Dementia–Evidence for Amyloid Scanning (IDEAS) study is assessing the clinical usefulness of amyloid PET scans and their impact on patient outcomes. The goal is to accumulate enough data to prove that amyloid scans are a cost-effective addition to the management of dementia patients. If federal payers agree and decide to cover amyloid scans, advocates hope that private insurers might follow suit.

An interim analysis of 4,000 scans, presented at the 2017 Alzheimer’s Association International Conference, was quite positive. Scan results changed patient management in 68% of cases, including refining dementia diagnoses, adding, stopping, or switching medications, and altering patient counseling.

IDEAS uses an FDA-approved amyloid imaging agent. But although several are under investigation, there are no approved tau PET ligands. However, other less-invasive and less-costly options may soon be developed, the committee noted. The search continues for a validated blood-based biomarker, including neurofilament light protein, plasma amyloid beta, and plasma tau.

“In the future, less-invasive/less-expensive blood-based biomarker tests - along with genetics, clinical, and demographic information - will likely play an important screening role in selecting individuals for more-expensive/more-invasive biomarker testing. This has been the history in other biologically defined diseases such as cardiovascular disease,” Dr. Jack and his colleagues noted in the paper.

In any case, however, without an effective treatment, much of the information conveyed by the biomarker profile paradigm remains, literally, academic, Dr. Jack said.

“If [the biomarker profile] were easy to determine and inexpensive, I imagine a lot of people would ask for it. Certainly many people would want to know, especially if they have a cognitive problem. People who have a family history, who may have Alzheimer’s pathology without the symptoms, might want to know. But the reality is that, until there’s a treatment that alters the course of this disease, finding out that you actually have Alzheimer’s is not going to enable you to change anything.”

The editors of Alzheimer’s & Dementia are seeking comment on the research framework. Letters and commentary can be submitted through June and will be considered for publication in an e-book, to be published sometime this summer, according to an accompanying editorial (https://doi.org/10/1016/j.jalz.2018.03.003).

Alzheimer’s & Dementia is the official journal of the Alzheimer’s Association. Dr. Jack has served on scientific advisory boards for Elan/Janssen AI, Bristol-Meyers Squibb, Eli Lilly, GE Healthcare, Siemens, and Eisai; received research support from Baxter International, Allon Therapeutics; and holds stock in Johnson & Johnson. Disclosures for other committee members can be found here.

SOURCE: Jack CR et al. Alzheimer’s Dement. 2018;14:535-62. doi: 10.1016/j.jalz.2018.02.018.

FROM ALZHEIMER’S & DEMENTIA

Simvastatin, atorvastatin cut mortality risk for sepsis patients

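Simvastatin and atorvastatin were associated with a significantly lower risk of death among patients with sepsis, a large health care database review has determined.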

Among almost 53,000 sepsis patients, those who had been taking simvastatin were 28% less likely to die within 30 days of a sepsis admission than were patients not taking a statin. Atorvastatin conferred a similar significant survival benefit, reducing the risk of death by 22%, Chien-Chang Lee, MD, and his colleagues wrote in the April issue of the journal CHEST®.

In addition to their lipid-lowering effects, statins exert a direct antimicrobial effect, Dr. Lee and his colleagues asserted.

“Of note, simvastatin was shown by several reports to have the most potent antibacterial activity,” targeting both methicillin-resistant and methicillin-sensitive Staphylococcus aureus, as well as other gram-negative and gram-positive bacteria.

Dr. Lee and his colleagues extracted mortality and statin prescription data from the Taiwan National Health Insurance Database for 2000 to 2011. They looked at 30- and 90-day mortality in 52,737 patients who developed sepsis; the statins of interest were atorvastatin, simvastatin, and rosuvastatin. To be included, patients had to have been taking the medication for at least 30 days before sepsis onset; patients taking more than one statin were excluded from the analysis.

Patients were a mean of 69 years old. About half had a lower respiratory tract infection; the remainder had abdominal, biliary tract, urinary tract, skin, or orthopedic infections. There were no significant differences in comorbidities or in other medications taken among the three statin groups or the nonusers.

Of the entire cohort, 17% died by 30 days and nearly 23% by 90 days. Compared with those who had never received a statin, statin users were 12% less likely to die by 30 days (hazard ratio, 0.88). Mortality at 90 days was also lower among statin users than among nonusers (HR, 0.93).

Simvastatin demonstrated the greatest benefit, with a 28% decreased risk of 30-day mortality (HR, 0.72). Atorvastatin followed, with a 22% risk reduction (HR, 0.78). Rosuvastatin was associated with a nonsignificant 13% risk reduction.
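The percentage reductions quoted in this and the preceding paragraph follow directly from the hazard ratios as (1 - HR) x 100. Strictly speaking, that is a relative reduction in the hazard of death during follow-up, not in the absolute probability of dying. A minimal Python sketch of the conversion, using only the hazard ratios reported above (the function name is illustrative, not from the study):

```python
def percent_reduction(hazard_ratio: float) -> float:
    """Relative reduction in hazard implied by a hazard ratio, in percent (1 decimal)."""
    if hazard_ratio <= 0:
        raise ValueError("hazard ratio must be positive")
    # An HR below 1 means a lower hazard of death than the comparison group.
    return round((1.0 - hazard_ratio) * 100.0, 1)


# Hazard ratios versus statin nonusers, as reported in the article.
reported = {
    "any statin, 30-day": 0.88,
    "any statin, 90-day": 0.93,
    "simvastatin, 30-day": 0.72,
    "atorvastatin, 30-day": 0.78,
}

for label, hr in reported.items():
    print(f"{label}: HR {hr:.2f} -> {percent_reduction(hr):.1f}% lower hazard")
```

The 90-day figure (about 7%) is not stated in the article; it is simply what the reported HR of 0.93 implies.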

The authors then compared mortality among propensity score-matched subgroups of 536 simvastatin users, 536 atorvastatin users, and 536 rosuvastatin users. Compared with rosuvastatin, simvastatin was associated with a 23% reduction in 30-day mortality risk (HR, 0.77) and atorvastatin with a 21% reduction (HR, 0.79).

Statins’ antimicrobial properties probably stem in part from their inhibition of the 3-hydroxy-3-methylglutaryl-coenzyme A (HMG-CoA) reductase pathway, Dr. Lee and his colleagues noted. In addition to being vital for cholesterol synthesis, this pathway “also contributes to the production of isoprenoids and lipid compounds that are essential for cell signaling and structure in the pathogen. Secondly, the chemical property of different types of statins may affect their targeting to bacteria. The lipophilic properties of simvastatin or atorvastatin may allow better binding to bacteria cell walls than the hydrophilic properties of rosuvastatin.”

The study was funded by the Taiwan National Science Foundation and Taiwan National Ministry of Science and Technology. Dr. Lee had no financial conflicts.

The statin-sepsis mortality link will probably never be definitively proven, but the study by Lee and colleagues gives us the best data so far on this intriguing connection, Steven Q. Simpson, MD, and Joel D. Mermis, MD, wrote in an accompanying editorial.

“It is unlikely that prospective randomized trials of statins for prevention of sepsis mortality will ever be undertaken, owing to the sheer number of patients that would require randomization in order to have adequate numbers who actually develop sepsis,” they wrote. “We believe that the next best thing to randomization and a prospective trial is exactly what the authors have done – identify a cohort, track them through time, even if nonconcurrently, and match cases to controls by propensity matching on important clinical characteristics.”

Nevertheless, the two said, “This brings us to one aspect of the study that leaves open a window for some doubt.”

Lee et al. extracted their data from a large national insurance claims database. Such systems “are commonly believed to overestimate sepsis incidence,” Dr. Simpson and Dr. Mermis wrote. A U.S. study bore this out, they said. “That study showed that in the U.S. in 2014, there were approximately 1.7 million cases of sepsis in a population of 330 million, for an annual incidence rate of five sepsis cases per 1,000 patient-years.”

However, a “quick calculation” of the Taiwan data suggests that the annual sepsis caseload is about 5,200 per year in a population of 23 million at risk – an annual incidence of only 0.2 cases per 1,000 patient-years.
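The editorialists’ “quick calculation” is annual cases divided by the population at risk, scaled to 1,000 person-years. A minimal Python sketch reproducing it from the figures quoted above; the function name is illustrative, and it assumes, as the editorial’s arithmetic appears to, that the whole population counts as at risk for one year:

```python
def incidence_per_1000(cases_per_year: float, population: float) -> float:
    """Annual incidence per 1,000 person-years, assuming the whole population is at risk for one year."""
    return cases_per_year / population * 1000.0


us_rate = incidence_per_1000(1_700_000, 330_000_000)  # U.S., 2014
taiwan_rate = incidence_per_1000(5_200, 23_000_000)   # Taiwan database, editorial's approximate caseload

print(f"U.S.:   {us_rate:.2f} per 1,000 person-years")
print(f"Taiwan: {taiwan_rate:.2f} per 1,000 person-years")
print(f"Ratio:  {us_rate / taiwan_rate:.0f}-fold")
```

The U.S. rate comes out near 5.2 (the editorial’s “five”) and the Taiwan rate near 0.23 (“0.2”), a gap of roughly 23-fold, somewhat more than one order of magnitude.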

“This represents an order of magnitude difference in sepsis incidence between the U.S. and Taiwan, providing some issues to ponder. Does Taiwan indeed have a lower incidence of sepsis by that much? If so, is the lower incidence related to genetics, environment, health care access, or other factors?

“Although Lee et al. have provided us with data of the highest quality that we can likely hope for, the book may not be quite closed, yet.”

Dr. Mermis and Dr. Simpson are pulmonologists at the University of Kansas, Kansas City. They made their comments in an editorial published in the April issue of CHEST® (Mermis JD and Simpson SQ. CHEST. 2018 April. doi: 10.1016/j.chest.2017.12.004.)

FROM CHEST

Key clinical point: Simvastatin and atorvastatin were associated with decreased mortality risk among sepsis patients.

Major finding: Compared with those not taking the drugs, those taking simvastatin were 28% less likely to die by 30 days, and those taking atorvastatin were 22% less likely.

Study details: The database study comprised almost 53,000 sepsis cases over 11 years.

Disclosures: The study was funded by the Taiwan National Science Foundation and Taiwan National Ministry of Science and Technology. Dr. Lee had no financial conflicts.

Source: Lee C-C et al. CHEST. 2018 April;153(4):769-70.

AbbVie, Samsung Bioepis settle suits with delayed U.S. entry for adalimumab biosimilar

A new adalimumab biosimilar will become available in the European Union later this year, but a court settlement will keep Samsung Bioepis’ competitor off U.S. shelves until 2023.

Under the settlement, AbbVie, which manufactures adalimumab (Humira), will grant Samsung Bioepis and its partner, Biogen, a nonexclusive license to the intellectual property relating to the antibody. Samsung Bioepis’ version, dubbed SB5 (Imraldi), will enter global markets in a staggered fashion, according to an AbbVie press statement. In most countries in the European Union, the license period will begin on Oct. 16, 2018. In the United States, Samsung Bioepis’ license period will begin on June 30, 2023, according to the AbbVie statement.

Biogen and Samsung Bioepis hailed the settlement as a victory, but Imraldi won’t be the first Humira biosimilar to break into the U.S. market. Last September, AbbVie settled a similar suit with Amgen, granting patent licenses for the global use and sale of Amgen’s anti–tumor necrosis factor–alpha antibody, Amgevita/Amjevita. Amgen expects to launch Amgevita in Europe on Oct. 16, 2018, and Amjevita in the United States on Jan. 31, 2023. Samsung Bioepis’ U.S. license date will not be accelerated upon Amgen’s entry.

Ian Henshaw, Biogen’s global head of biosimilars, said the deal further strengthens the company’s European biosimilars reach.

“Biogen is a leader in the emerging field of biosimilars through Samsung Bioepis, our joint venture with Samsung BioLogics,” Mr. Henshaw said in a press statement. “Biogen already markets two biosimilars in Europe and the planned introduction of Imraldi on Oct. 16 could potentially expand patient choice by offering physicians more options to meet the needs of patients while delivering significant savings to healthcare systems.”

AbbVie focused on the settlement as a global recognition of its leadership role in developing the anti-TNF-alpha antibody.

“The Samsung Bioepis settlement reflects the strength and breadth of AbbVie’s intellectual property,” Laura Schumacher, the company’s general counsel, said in the AbbVie statement. “We continue to believe biosimilars will play an important role in our healthcare system, but we also believe it is important to protect our investment in innovation. This agreement accomplishes both objectives.”

Once its biosimilar is launched, Samsung Bioepis will pay royalties to AbbVie for licensing AbbVie’s adalimumab patents. As with the prior Amgen resolution, AbbVie will not make any payments to Samsung Bioepis. “All litigation pending between the parties, as well as all litigation with Samsung Bioepis’ European partner, Biogen, will be dismissed. The precise terms of the agreements are confidential,” the AbbVie statement said.

The settlement brings to a close a flurry of lawsuits Samsung Bioepis filed against AbbVie in 2017.

Federal budget grants $1.8 billion to Alzheimer’s and dementia research

Congress has appropriated an additional $414 million for research into Alzheimer’s disease and other dementias – the full increase requested by the National Institutes of Health for fiscal year 2018. The boost brings the total AD funding available this year to $1.8 billion.

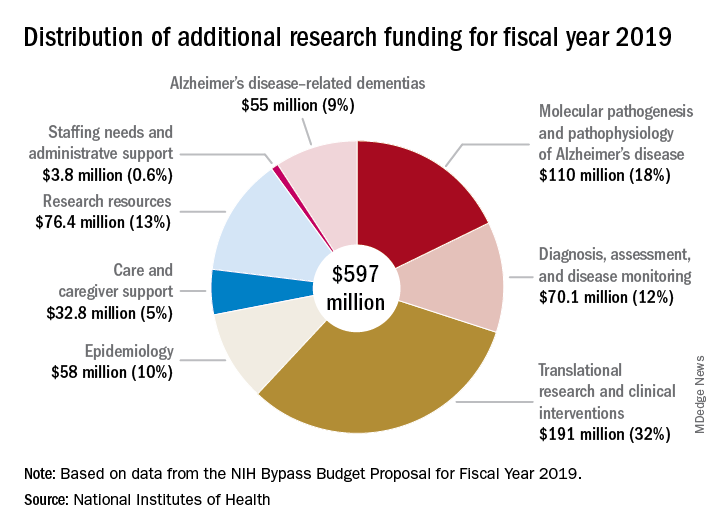

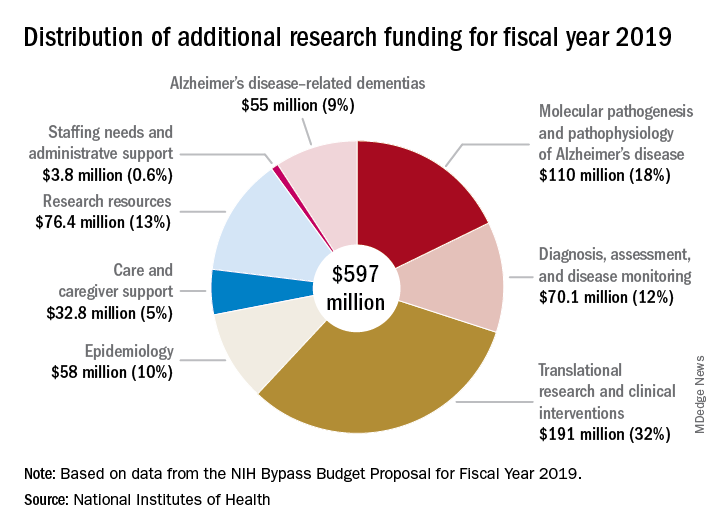

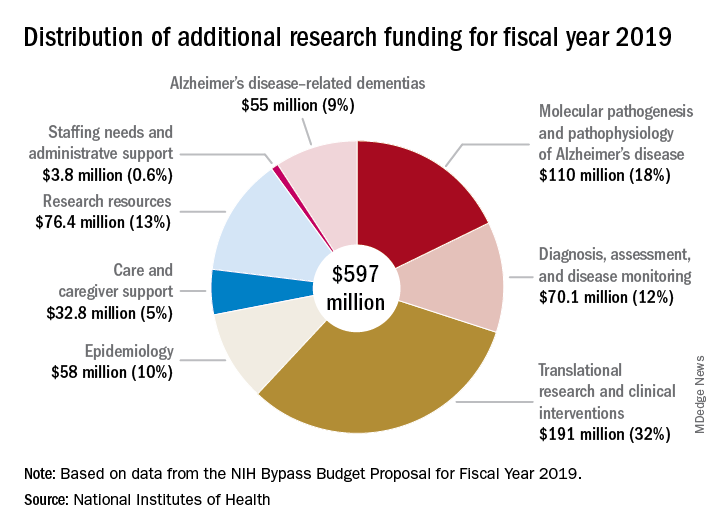

Bolstered by the National Plan to Address Alzheimer’s Disease, which sets a goal of preventing and effectively treating AD by 2025, the NIH is aiming higher still. Its draft FY2019 request for AD asks for another $597 million; if approved, nearly $2.4 billion could be available for AD research as soon as next October.

“For the third consecutive fiscal year, Congress has approved the Alzheimer’s Association’s appeal for a historic funding increase for Alzheimer’s and dementia research at the NIH,” Alzheimer’s Association president Harry Johns said in a press statement. “This decision demonstrates that Congress is deeply committed to providing the Alzheimer’s and dementia science community with the resources needed to move research forward.”

Several members of Congress championed the AD funding request, including Roy Blunt (R-Mo.), Patty Murray (D-Wash.), Nita Lowey (D-N.Y.), and Tom Cole (R-Okla.).

That momentum is on pace to continue into FY2019, according to Robert Egge, chief public policy officer and executive vice president of governmental affairs for the Alzheimer’s Association. The NIH Bypass Budget, an estimate of the additional funding needed to reach the 2025 goal, requests a further $597 million for AD and other dementias.

“This is what we need to fund scientific projects that are meritorious and ready to go,” Mr. Egge said in an interview. “Congress has the assurance that this request is scientifically driven and that NIH is already thinking about how the money will be used.”

Although it is a record for AD research, this year’s $1.8 billion appropriation is a fraction of what some other costly diseases receive. By comparison, the budget granted the National Cancer Institute $5.7 billion for its research programs.

“Compared to what the cost of the disease brings to Americans in terms of Medicare, Medicaid, and out of pocket expenses, it’s not that much,” Mr. Egge said. “But we have the opportunity to use this money to change these huge numbers that we’re facing.”

A 2013 report by the RAND Corporation found that, although Alzheimer’s affects fewer people than cancer or heart disease, the cost of treating and caring for people with Alzheimer’s far outstrips spending in either of those categories. The findings are perhaps even more striking because the report counted only costs attributable to Alzheimer’s itself, not the cost of treating comorbid illnesses.

Long-term care was a key driver of total cost in 2013 and still accounts for the bulk of expenses today, Mr. Egge said. Transitions between care settings, such as from home to nursing home to hospital, are very expensive, he noted. And although the RAND report did not include them, comorbid illnesses in patients with Alzheimer’s are an enormous drain on resources. “Diabetes is just one example. It costs 80% more to manage diabetes in a patient with AD than in one without AD.”

The Alzheimer’s Association’s Facts and Figures report notes that average per-person Medicare payments in 2017 were more than three times higher for beneficiaries with AD than for those without the disease ($48,028 vs. $13,705). Numbers like these are what it takes to set aside partisan bickering and grapple with tough decisions, Mr. Egge said.

“The fiscal argument is one thing that really impressed Congress. They do know how worried Americans are about this disease and how tough it is on families, but the growing fiscal impact has really focused them on addressing it.”
