CDC: Forty percent of cancers linked to overweight or obesity

Being overweight or obese significantly increased the risk of developing at least 13 types of cancer, according to a report by the Centers for Disease Control and Prevention.

Now that a larger proportion of the American population is overweight or obese, the rates of obesity-related cancers have increased. Between 2005 and 2014, the rate of obesity-related cancers, excluding colorectal cancer, increased by 7%. Over the same period, non–obesity-related cancers declined, according to C. Brooke Steele, DO, of the CDC’s Division of Cancer Prevention and Control, and her associates (MMWR Morb Mortal Wkly Rep. 2017 Oct 3;66[39]:1052-8).

They found that 631,604 people were diagnosed with an overweight- or obesity-related cancer in 2014, 40% of the nearly 1.6 million cancers diagnosed that year. The burden was greater among older adults (aged 50 years or older) than among younger people, with two-thirds of cases occurring in people aged 50-74 years.

Women were much more likely than men to have an overweight- or obesity-related cancer, with an incidence rate of 218.1 per 100,000 population, compared with 115.0 per 100,000 in men. Female-specific cancers, such as endometrial, ovarian, and postmenopausal breast cancers, contributed to this difference; they accounted for 42% (268,091) of overweight- and obesity-related cancers.
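As a quick arithmetic check on the CDC figures, the short Python sketch below recomputes the total number of diagnoses implied by the 40% share, the share of female-specific cancers, and the women-to-men incidence rate ratio. Because the 40% figure is itself rounded, the implied total is only approximate.

```python
# Figures reported in the CDC analysis of 2014 U.S. cancer diagnoses.
obesity_related_cases = 631_604
reported_share = 0.40            # "40% of nearly 1.6 million" diagnoses (rounded)
female_specific_cases = 268_091  # endometrial, ovarian, postmenopausal breast
rate_women = 218.1               # incidence per 100,000 population
rate_men = 115.0                 # incidence per 100,000 population

# Total diagnoses implied by the reported 40% share.
implied_total = obesity_related_cases / reported_share

# Share of obesity-related cancers that were female-specific.
share_female_specific = female_specific_cases / obesity_related_cases

# How many times higher the incidence was in women than in men.
rate_ratio = rate_women / rate_men

print(f"Implied total diagnoses: {implied_total:,.0f}")
print(f"Female-specific share: {share_female_specific:.0%}")
print(f"Women-to-men rate ratio: {rate_ratio:.2f}")
```

The implied total, about 1.58 million, agrees with the report's "nearly 1.6 million," the female-specific share rounds to the reported 42%, and incidence in women was roughly 1.9 times that in men.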

Researchers found that, between 2005 and 2014, the overall incidence of overweight- and obesity-related cancers (including colorectal cancer) decreased by 2%, colorectal cancer decreased by 23%, and cancers unrelated to body weight decreased by 13%. The decline in colorectal cancer was most likely due in part to screening tests, which can detect precancerous polyps and lead to their removal.

“A majority of American adults weigh more than recommended – and being overweight or obese puts people at higher risk for a number of cancers – so these findings are a cause for concern,” CDC Director Brenda Fitzgerald, MD, said in a statement. “By getting to and keeping a healthy weight, we all can play a role in cancer prevention.”

The researchers had no conflicts of interest to report.

FROM MORBIDITY AND MORTALITY WEEKLY REPORT

Key clinical point:

Major finding: In the United States, more than 631,000 patients received cancer diagnoses related to overweight or obesity, representing 40% of nearly 1.6 million cancer diagnoses in 2014.

Data source: An analysis of United States Cancer Statistics data from 2005 to 2014.

Disclosures: The researchers had no conflicts of interest to report.

FDA approves third indication for onabotulinumtoxinA

The Food and Drug Administration has approved onabotulinumtoxinA, marketed as Botox Cosmetic by Allergan, for a third indication: the temporary improvement in the appearance of “moderate to severe forehead lines associated with frontalis muscle activity” in adults, according to the manufacturer.

The company announced the latest approval in a press release on October 3.

The Food and Drug Administration has approved onabotulinumtoxinA, marketed as Botox Cosmetic by Allergan, for a third indication: the temporary improvement in the appearance of “moderate to severe forehead lines associated with frontalis muscle activity” in adults, according to the manufacturer.

The company announced the latest approval in a press release on October 3.

The Food and Drug Administration has approved onabotulinumtoxinA, marketed as Botox Cosmetic by Allergan, for a third indication: the temporary improvement in the appearance of “moderate to severe forehead lines associated with frontalis muscle activity” in adults, according to the manufacturer.

The company announced the latest approval in a press release on October 3.

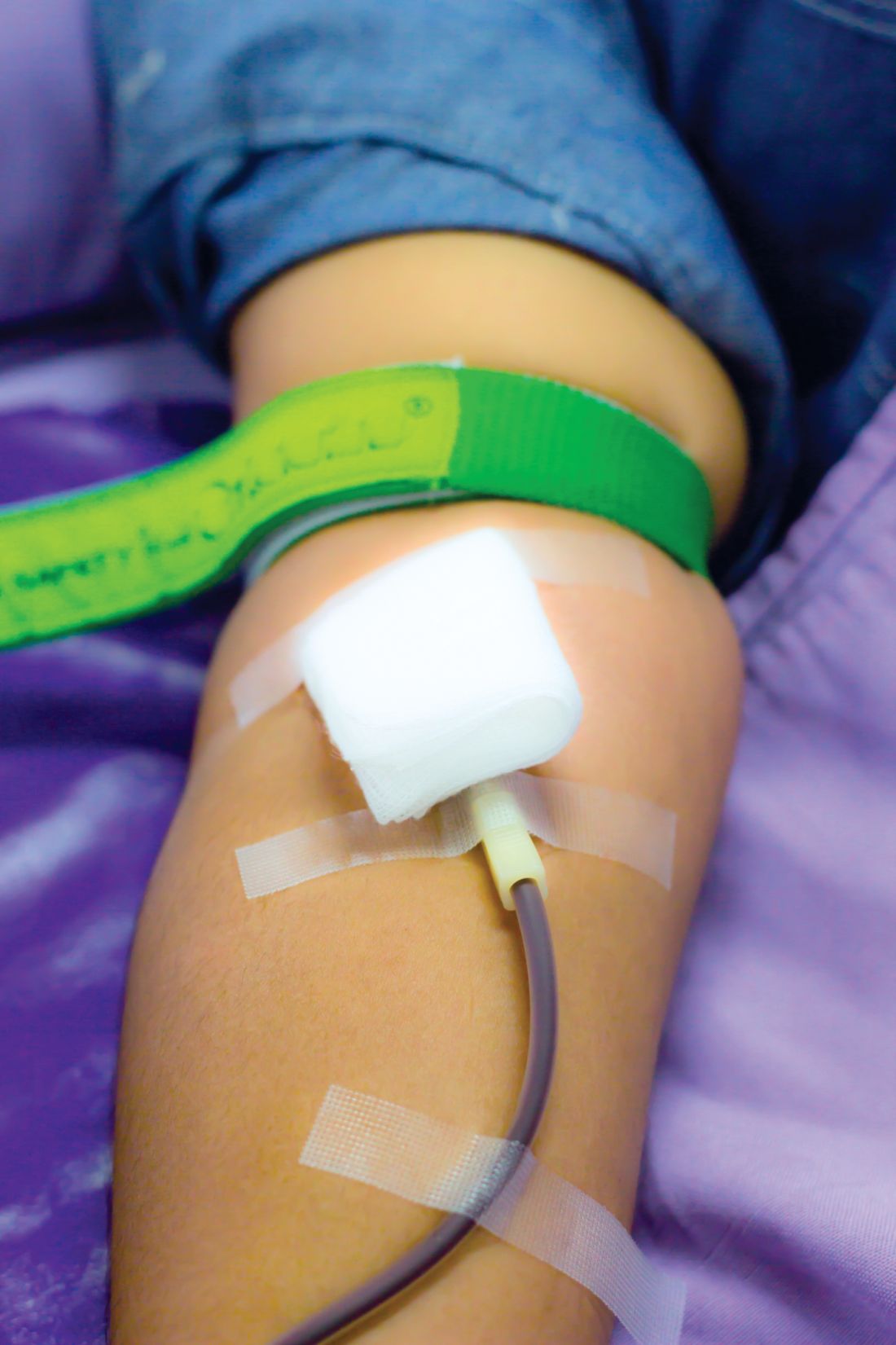

Blood donation intervals of 8 weeks safe for most donors

Reducing the interval between blood donations from every 12 weeks to every 8 weeks increases the number of units collected with few ill effects on donor quality of life other than symptoms related to iron deficiency, according to results reported by the INTERVAL Trial Group.

In the United States, men and women can donate blood every 8 weeks; the Food and Drug Administration and the AABB (formerly the American Association of Blood Banks) have considered lengthening the 8-week minimum inter-donation interval to reduce the risk of iron deficiency. The current practice in the United Kingdom is to allow men to donate every 12 weeks and women every 16 weeks, the authors noted.

The INTERVAL study examined hemoglobin levels, ferritin levels, self-reported symptoms, and the number of blood donations over a 2-year period among 22,466 men randomly assigned to 8-, 10-, or 12-week donation intervals and 22,797 women randomly assigned to 12-, 14-, or 16-week intervals.

Men in the 8- and 10-week interval groups donated significantly more blood per donor than did those in the 12-week group, contributing about 1.69 and 0.79 more units, respectively. Similarly, women in the 12- and 14-week interval groups contributed approximately 0.84 and 0.46 more units, respectively, than did women in the 16-week group.
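Because each arm fixes a minimum gap between donations, the interval caps how many donations are possible during the 2-year study. The sketch below computes that theoretical ceiling, assuming for illustration only that 2 years equals 104 weeks and that the first donation falls at week 0 (the helper name `max_donations` is hypothetical). Actual donation counts in the trial were lower, since donors do not return at every opportunity and some are deferred.

```python
import math

STUDY_WEEKS = 104  # assumption: a 2-year study treated as 104 weeks


def max_donations(interval_weeks: int, window_weeks: int = STUDY_WEEKS) -> int:
    """Count donations at weeks 0, k, 2k, ... that fall strictly inside the window."""
    return math.ceil(window_weeks / interval_weeks)


# Interval arms as described for the INTERVAL trial.
arms = {"men": [8, 10, 12], "women": [12, 14, 16]}

for sex, intervals in arms.items():
    for k in intervals:
        print(f"{sex}, {k}-week interval: at most {max_donations(k)} donations")
```

On these assumptions, moving men from a 12-week to an 8-week interval raises the ceiling from 9 to 13 donations, which is why shorter intervals translate into more units collected per donor.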

Hemoglobin and ferritin concentrations stayed relatively stable throughout the study in men and women in all donation interval groups. The baseline hemoglobin and ferritin averages were 149.7 g/L and 44.9 mcg/L in men, and 133.9 g/L and 24.6 mcg/L in women, respectively. Ultimately, mean hemoglobin level decreased by 1%-2%. Ferritin level decreased by 15%-30%, indicating that it is a more accurate marker of iron depletion, the study authors wrote. The report was published online in The Lancet.

The researchers also analyzed self-reported symptoms associated with blood donation, collected through donor follow-up surveys sent at 6, 12, and 18 months. At the end of the 2-year study, donors completed a series of cognitive function tests and a physical activity questionnaire. Higher donation frequency was associated with more symptoms of iron deficiency, such as tiredness, restless legs, and dizziness. This effect was more pronounced in men.

About 10% of participants were allowed to donate despite having baseline hemoglobin concentrations below the minimum regulatory threshold, the authors said, adding that the screening method used in the United Kingdom to assess eligibility to donate needs to be reviewed.

“In response to these findings, we have started the COMPARE study (ISRCTN90871183) to provide a systematic, within-person comparison of the relative merits of different haemoglobin screening methods,” they wrote.

The study was funded by National Health Service Blood and Transplant and the National Institute for Health Research Blood and Transplant Research Unit in Donor Health and Genomics (NIHR BTRU-2014-10024).

Donating blood serves an important function in medicine, but blood donation practices do not consider the effect of donation on the donors. Most donors give about 500 mL of blood, or about 10% of total blood volume. The ratio of iron to red blood cells is roughly 1:1, meaning that about 1 mg of iron is lost for each milliliter of red blood cells drawn. Because women have only about 250 mg of iron reserves and men about 1,000 mg, many frequent donors are at risk of developing iron deficiency.
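A rough worked example of the editorial's point, under stated assumptions: it uses the commonly cited figure of about 1 mg of iron per milliliter of red blood cells and an illustrative hematocrit of 0.42 (neither value comes from the study), together with the ~500-mL donation volume and the iron reserves cited by the editorialists. The function name `iron_lost_per_donation` is hypothetical.

```python
DONATION_ML = 500          # typical whole-blood donation volume cited in the editorial
HEMATOCRIT = 0.42          # assumption: illustrative red-cell fraction of whole blood
IRON_MG_PER_ML_RBC = 1.0   # assumption: ~1 mg of iron per mL of red blood cells

# Iron reserves cited in the editorial, in mg.
RESERVES_MG = {"women": 250, "men": 1000}


def iron_lost_per_donation(volume_ml: float = DONATION_ML,
                           hct: float = HEMATOCRIT,
                           mg_per_ml_rbc: float = IRON_MG_PER_ML_RBC) -> float:
    """Iron removed by one donation = red-cell volume x iron per mL of red cells."""
    return volume_ml * hct * mg_per_ml_rbc


loss = iron_lost_per_donation()
print(f"Iron lost per donation: ~{loss:.0f} mg")

for group, reserve in RESERVES_MG.items():
    # Ignores iron absorbed from the diet between donations.
    print(f"{group}: reserves equal ~{reserve / loss:.1f} donations' worth of iron")
```

On these assumptions, a single donation removes roughly 210 mg of iron, close to the entire 250-mg reserve cited for women, which illustrates why frequent donation can deplete iron stores unless iron is replenished between donations.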

This study found that the total number of units collected increased with shorter inter-donation intervals, while the secondary safety outcomes of hemoglobin and ferritin levels remained similar between the short- and long-interval groups. Although overall health did not decline, donors with short inter-donation intervals reported symptoms consistent with iron deficiency, including dizziness, tiredness, and restless legs. Researchers found that “about 25% of men and women at the most frequent inter-donation interval had iron deficiency and a third had at least one deferral for low hemoglobin.”

Iron deficiency can be mitigated by iron supplements or by lengthening the time between donations, but this does not fully correct the issue. Some blood centers have already introduced ferritin screening and lengthened the inter-donation interval for donors found to have low ferritin concentrations. Given the advances in automated laboratory testing, information technology, and the high compliance of blood donors, individualized approaches for prevention of iron deficiency could be feasible.

Alan E. Mast, MD, is the medical director for the Blood Center of Wisconsin and an associate professor of pathology and cell biology at the Medical College of Wisconsin, Milwaukee. Edward L. Murphy, MD, is resident professor of laboratory medicine at University of California, San Francisco, and an affiliate of the Blood Systems Research Institute. They made their comments in an editorial that accompanied the study.

FROM THE LANCET

Key clinical point:

Major finding: Reducing inter-donation intervals increases blood collections by 33% in men and 24% in women.

Data source: Parallel group, pragmatic, randomized trial of 22,466 men and 22,797 women from the 25 donor centers of National Health Service Blood and Transplant.

Disclosures: The study was funded by National Health Service Blood and Transplant and the National Institute for Health Research Blood and Transplant Research Unit in Donor Health and Genomics.

FDA approves higher dose brigatinib tablet for advanced ALK+ NSCLC

The Food and Drug Administration has approved 180-mg brigatinib (Alunbrig) tablets for treatment of anaplastic lymphoma kinase–positive (ALK+) metastatic non–small cell lung cancer (NSCLC), expanding on previously available 30-mg tablets.*

“With the approval of a 180-mg tablet, Alunbrig has become the only ALK inhibitor available as a one tablet per day dose that can be taken with or without food,” Ryan Cohlhepp, PharmD, vice president, U.S. Commercial, at Takeda Oncology, said in a press release.

Approval of the regimen was based on objective response rates in the ongoing, two-arm, open-label, multicenter phase 2 ALTA trial, which enrolled 222 patients with metastatic or locally advanced ALK+ NSCLC whose disease had progressed on crizotinib. Patients were randomized to oral brigatinib at either 90 mg once daily or 180 mg once daily after a 7-day lead-in at 90 mg once daily. In the 180-mg arm, 53% of patients had an objective response, compared with 48% in the 90-mg arm.

Serious adverse reactions occurred in 40% of the patients in the 180-mg arm, compared with 38% in the 90-mg arm. The most common serious adverse reactions were pneumonia and interstitial lung disease/pneumonitis. Fatal adverse reactions occurred in 3.7% of the patients, caused by pneumonia (two patients), sudden death, dyspnea, respiratory failure, pulmonary embolism, bacterial meningitis, and urosepsis (one patient each).

The ALTA trial is ongoing, and updated results will be presented at the World Conference on Lung Cancer in Yokohama, Japan, on Oct. 15-18, the company said in the press release.

*Correction, 10/4/17: An earlier version of this article misstated the previously available tablet sizes.

The Food and Drug Administration has approved 180 mg brigatinib (Alunbrig) tablets for treatment of anaplastic lymphoma kinase–positive (ALK+) metastatic non–small cell lung cancer (NSCLC), expanding on previously available 30-mg tablets.*

“With the approval of a 180-mg tablet, Alunbrig has become the only ALK inhibitor available as a one tablet per day dose that can be taken with or without food,” Ryan Cohlhepp, PharmD, vice president, U.S. Commercial, at Takeda Oncology said in a press release.

Approval of the regimen was based on objective response in the ongoing, two-arm, open-label, multicenter phase 2 ALTA trial, which enrolled 222 patients with metastatic or locally advanced ALK+ NSCLC who had progressed on crizotinib. Patients were randomized to brigatinib orally either 90 mg once daily or 180 mg once daily following a 7-day lead-in at 90 mg once daily. Of those in the 180-mg arm, 53% had an objective response, compared with 48% in the 90-mg arm.

Adverse reactions occurred in 40% of the patients in the 180-mg arm, compared with 38% in the 90-mg arm. The most common serious adverse reactions were pneumonia and interstitial lung disease/pneumonitis. Fatal adverse reactions occurred in 3.7% of the patients, caused by pneumonia (two patients), sudden death, dyspnea, respiratory failure, pulmonary embolism, bacterial meningitis and urosepsis (one patient each).

The ALTA trial is ongoing, and updated results will be presented at the World Conference on Lung Cancer in Yokohama, Japan, on Oct. 15-18, the company said in the press release.

*Correction, 10/4/17: An earlier version of this article misstated the previously available tablet sizes.

The Food and Drug Administration has approved 180 mg brigatinib (Alunbrig) tablets for treatment of anaplastic lymphoma kinase–positive (ALK+) metastatic non–small cell lung cancer (NSCLC), expanding on previously available 30-mg tablets.*

“With the approval of a 180-mg tablet, Alunbrig has become the only ALK inhibitor available as a one tablet per day dose that can be taken with or without food,” Ryan Cohlhepp, PharmD, vice president, U.S. Commercial, at Takeda Oncology said in a press release.

Approval of the regimen was based on objective response in the ongoing, two-arm, open-label, multicenter phase 2 ALTA trial, which enrolled 222 patients with metastatic or locally advanced ALK+ NSCLC who had progressed on crizotinib. Patients were randomized to brigatinib orally either 90 mg once daily or 180 mg once daily following a 7-day lead-in at 90 mg once daily. Of those in the 180-mg arm, 53% had an objective response, compared with 48% in the 90-mg arm.

Adverse reactions occurred in 40% of the patients in the 180-mg arm, compared with 38% in the 90-mg arm. The most common serious adverse reactions were pneumonia and interstitial lung disease/pneumonitis. Fatal adverse reactions occurred in 3.7% of the patients, caused by pneumonia (two patients), sudden death, dyspnea, respiratory failure, pulmonary embolism, bacterial meningitis and urosepsis (one patient each).

The ALTA trial is ongoing, and updated results will be presented at the World Conference on Lung Cancer in Yokohama, Japan, on Oct. 15-18, the company said in the press release.

*Correction, 10/4/17: An earlier version of this article misstated the previously available tablet sizes.

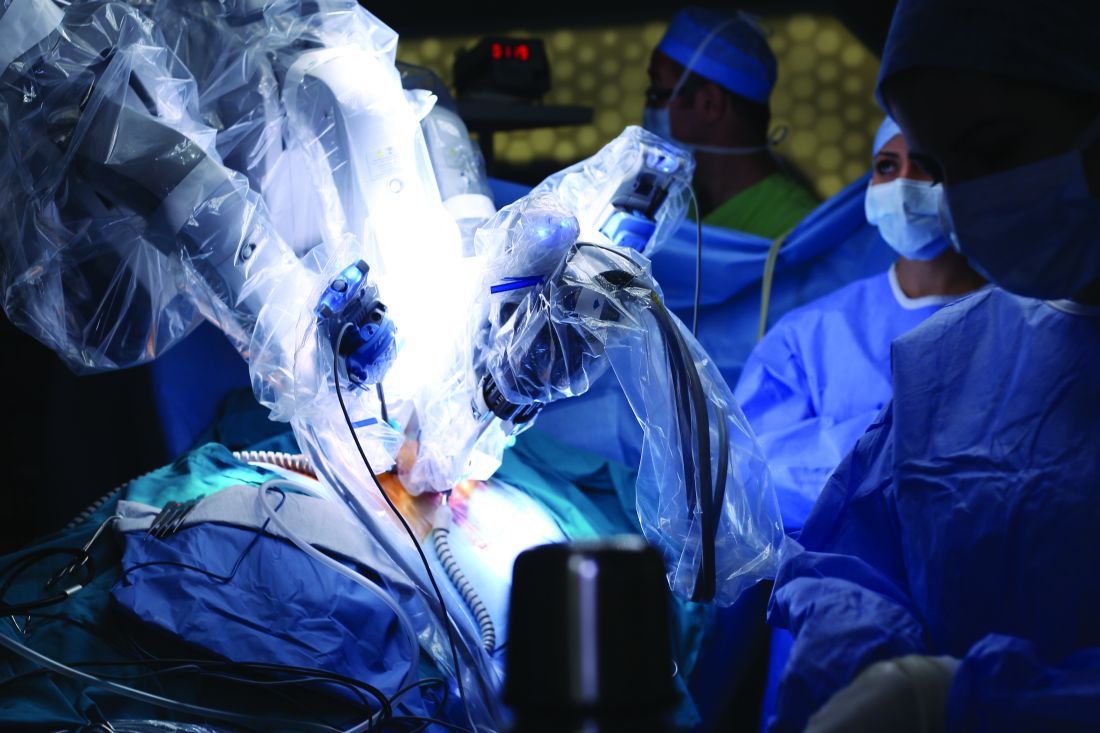

Robotic surgery technologies have unique learning curves for trainees

Training surgeons to use robotic technology involves a learning curve, and a literature review has found that each robotic technology has its own learning curve profile, with implications for the time and number of procedures needed to achieve competence.

Giorgio Mazzon, MD, of the Institute of Urology at University College Hospital, London, and his colleagues reviewed the literature on training surgeons in the use of a variety of technologies for urological procedures. They analyzed learning curves for virtual reality robotic simulators, robot-assisted laparoscopic radical prostatectomy (RALP), robot-assisted radical cystectomy (RARC), and robot-assisted partial nephrectomy (RAPN) (Curr Urol Rep. 2017 Sep 23;18:89).

RARC learning curves are more rapid than those for RALP, but this may be because most surgeons perform RALP before learning RARC. For RARC, it is estimated that 21 procedures are needed for operating time to plateau and 30 for adequate lymph node yield and a positive surgical margin rate below 5% (Eur Urol. 2010 Aug;58[2]:197-202).

Safety and competence in RAPN are usually defined by operating time, warm ischemic time, positive surgical margin rate, and complication rate. RAPN has been reported to be safely performed after completion of 25-30 cases (Eur Urol. 2010 Jul;58[1]:127-32).

The results of the review “should inform trainers and trainees on what outcomes are expected at a given stage of training,” according to the investigators.

They reported no relevant financial disclosures.

FROM CURRENT UROLOGY REPORTS

Key clinical point:

Major finding: For virtual reality training programs, recommendations for achieving safety and competence range from 4 to 12 hours of simulator training.

Data source: Review of studies of learning curves in robotic urological surgery.

Disclosures: The researchers reported no financial disclosures.

Deep brain stimulation shows promise in treating Tourette syndrome

Deep brain stimulation (DBS) showed promise in improving tic severity in patients with Tourette syndrome in a prospective, open-label registry study, but the treatment was associated with adverse events in a substantial number of patients.

“The first-year results of this multinational electronic collaboration strengthen the notion that DBS could be a potential surgical treatment for select patients with Tourette syndrome,” Daniel Martinez-Ramirez, MD, of the University of Florida, Gainesville, and his colleagues wrote in their study published online Jan. 16 in JAMA Neurology. “Practitioners should be aware of the high number of stimulation-related adverse events and that these are likely reversible.”

The research team studied data from 185 patients with medically refractory Tourette syndrome in 10 countries who had undergone DBS implantation between Jan. 1, 2012, and Dec. 31, 2016, and found that DBS significantly improved both motor and phonic tic scores on the Yale Global Tic Severity Scale. Mean motor tic scores improved 38.2% from baseline at 6 months and 38.5% at 12 months. Phonic tic scores showed a similar effect, improving 44.2% and 42.7% from baseline at 6 and 12 months, respectively. The slight differences between the 6- and 12-month scores for both motor and phonic tics were not statistically significant.

Despite the improvement in Yale Global Tic Severity Scale scores, adverse events were common among patients in the study. Of 158 patients, 56 (35.4%) reported a total of 160 adverse events during the first year of follow-up. Of the reported events, 48 (30.8%) were stimulation related, 6 (3.8%) were surgery related, and 2 (1.3%) were device related. The most common adverse events were paresthesias, reported by 13 patients (8.2%), and dysarthria, reported by 10 patients (6.3%). These events were not permanent and did not involve any major complications.

The authors noted several limitations of using data from a multinational registry and database. Because surgical and treatment techniques may differ by location, pooling information from different sites may have affected the results, as may the registry's lack of standardized inclusion criteria.

Despite the study’s limitations and the adverse events observed, DBS still appeared effective in improving motor and phonic tics in patients with Tourette syndrome. Based on these results, Dr. Martinez-Ramirez and his associates outlined next steps for DBS research.

“Larger numbers of patients will need to receive DBS implants across multiple targets, and comparison of center-to-center outcomes could help refine the therapy,” they wrote. “Publishing multiyear outcomes to a public website (https://tourettedeepbrainstimulationregistry.ese.ufhealth.org) will improve access to information, improve data sharing, and, we hope, contribute to improvement in outcomes.”

Two investigators reported receiving grants from various pharmaceutical companies, government agencies, and foundations. Both also served as consultants to major pharmaceutical companies. All other researchers had no relevant financial conflicts to disclose.

SOURCE: Martinez-Ramirez D et al. JAMA Neurol. 2018 Jan 16. doi: 10.1001/jamaneurol.2017.4317.

FROM JAMA NEUROLOGY

Key clinical point: Deep brain stimulation improved motor and phonic tics in Tourette syndrome patients but may come with a substantial number of reversible adverse events.

Major finding: At a 6-month follow-up, motor and phonic Yale Global Tic Severity Scale scores improved 38.2% and 44.2%, respectively.

Study details: Analysis of 185 patients with medically refractory Tourette syndrome who underwent deep brain stimulation implantation between Jan. 1, 2012, and Dec. 31, 2016, at 31 sites in 10 countries.

Disclosures: Two investigators reported receiving grants from various pharmaceutical companies, government agencies, and foundations. Both also served as consultants to major pharmaceutical companies. All other researchers reported no relevant financial disclosures.

Source: Martinez-Ramirez D et al. JAMA Neurol. 2018 Jan 16. doi: 10.1001/jamaneurol.2017.4317.

Swedish study finds lower risk of psoriasis in bariatric surgery patients

Obese patients who undergo bariatric surgery have a lower risk of later developing psoriasis, according to results of a nonrandomized, longitudinal intervention trial.

Cristina Maglio, MD, of the University of Gothenburg, Sweden, and her associates found that over a 26-year follow-up period, the adjusted hazard ratio (HR) of developing psoriasis was 0.65 (95% confidence interval [CI], 0.47-0.89; P = .008) for patients who underwent bariatric surgery, compared with those who received conventional, nonsurgical obesity treatment. During follow-up, psoriasis developed in 3.6% of the 1,991 patients in the surgery group and in 5.1% of the 2,018 control patients.

In contrast, the difference in risk of psoriatic arthritis (PsA), which develops in up to one-third of patients with psoriasis, was not statistically significant (HR, 0.77; 95% CI, 0.43-1.37; P = .287). PsA developed in 1% of the surgery group and 1.3% of the control group.

To assess how surgery affected the development of psoriasis or PsA, the researchers compared a surgery group with a control group. In the control group, 2,018 patients received standard obesity treatment, which included recommendations on eating behavior, food selection, and physical activity. The 1,991 patients in the surgery group underwent gastric banding (375), vertical banded gastroplasty (1,354), or gastric bypass (262). Patients were evaluated at baseline and at 6 months, then again at 1, 2, 3, 4, 6, 8, 10, 15, and 20 years. At each follow-up, all participants, regardless of group, were examined and given health questionnaires. The study endpoint was the first diagnosis of either psoriasis or PsA. Body mass index decreased significantly in the surgery group, compared with virtually no change in the control group.

Vertical banded gastroplasty significantly lowered the incidence of psoriasis, compared with usual treatment. However, with gastric banding as the reference, vertical banded gastroplasty (HR, 0.80; 95% CI, 0.46-1.39; P = .418) and gastric bypass (HR, 0.71; 95% CI, 0.29-1.71; P = .439) had similar effects on psoriasis prevention.

The researchers also identified several risk factors that significantly increased the risk of developing psoriasis. Smoking (HR, 1.75; 95% CI, 1.26-2.42; P = .001), a known risk factor in the development of psoriasis, and the length of time a patient had been obese (HR, 1.28; 95% CI, 1.05-1.55; P = .014) were found to be independently associated with an increased risk of psoriasis.

As part of their risk analysis, Dr. Maglio and her colleagues examined interactions between bariatric surgery and baseline risk factors such as BMI and obesity duration, and found no significant interactions. Patients who were older at baseline had a slightly better response to surgery, with a lower incidence of psoriasis than younger patients, but the difference was not statistically significant.

“The preventive role of bariatric surgery on the risk of psoriasis has been recently highlighted by a retrospective Danish study (JAMA Surg. 2017 Apr 1;152[4]:344-9),” noted Dr. Maglio and her colleagues. “However, we lent strength to the previous results by confirming this association in a large prospective intervention trial designed to examine the effect of bariatric surgery on obesity-related comorbidities in comparison with usual obesity care.”

This study was funded in part by the National Institute of Diabetes and Digestive and Kidney Diseases, the Swedish Rheumatism Association, the Swedish Research Council, the University of Gothenburg, and the Swedish federal government. Dr. Anna Rudin reported that part of her salary at Sahlgrenska University is supported by a grant from AstraZeneca. Dr. Lena M.S. Carlsson has received lecture fees from AstraZeneca, Johnson & Johnson, and Merck Sharp and Dohme. Dr. Maglio and Dr. Markku Peltonen had no relevant financial disclosures.

SOURCE: Maglio C et al. Obesity. 2017 Dec;25[12]:2068-73.

FROM OBESITY

Key clinical point:

Major finding: Obese patients who underwent bariatric surgery had a lower incidence of psoriasis over a 26-year period (HR, 0.65; 95% CI, 0.47-0.89; P = .008), compared with usual care.

Study details: Swedish Obese Subjects study, a longitudinal, nonrandomized intervention trial comprising 1,991 surgery group patients and 2,018 control patients.

Disclosures: This study was funded in part by the National Institute of Diabetes and Digestive and Kidney Diseases, the Swedish Rheumatism Association, the Swedish Research Council, the University of Gothenburg, and the Swedish federal government. Dr. Anna Rudin reported that part of her salary at Sahlgrenska University is supported by a grant from AstraZeneca. Dr. Lena M.S. Carlsson has received lecture fees from AstraZeneca, Johnson & Johnson, and Merck Sharp and Dohme. Dr. Maglio and Dr. Markku Peltonen had no relevant financial disclosures.

Source: Maglio C et al. Obesity. 2017 Dec;25[12]:2068-73.

Robot-assisted prostatectomy provides better early outcomes

Robot-assisted radical prostatectomy shows better early postoperative outcomes than does laparoscopic radical prostatectomy, but the differences between the two surgical approaches disappeared by the 6-month follow-up.

Dr. Hiroyuki Koike and his colleagues at Wakayama (Japan) Medical University Hospital conducted a study of two groups of patients treated for localized prostate cancer. One group of 229 patients underwent laparoscopic radical prostatectomy (LRP) between July 2007 and July 2013. The other group of 115 patients had robot-assisted radical prostatectomy (RARP) between December 2012 and August 2014 (J Robot Surg. 2017;11[3]:325-31).

The patients were given health-related quality of life self-assessment surveys before surgery and at 3, 6, and 12 months after surgery. A generic questionnaire, the eight-item Short-Form Health Survey, was used to assess a physical component summary (PCS) and a mental component summary (MCS). The Expanded Prostate Cancer Index Composite, which covers four domains – urinary, sexual, bowel, and hormonal – was used as a disease-specific measure. Response rates in both the LRP and RARP groups exceeded 80% at each follow-up interval.

“The RARP group showed significantly better scores in urinary summary and all urinary subscales at postoperative 3-month follow-up. However, these differences disappeared at postoperative 6 and 12-month follow-up,” the investigators wrote. At 3 months, LRP significantly underperformed RARP on the urinary summary score, with scores of 63.3 vs. 75.8, respectively. The bowel function score was also higher for RARP than for LRP (96.9 vs. 92.9). Sexual function results followed a similar pattern, with RARP and LRP scores of 2.8 vs. 0, respectively.

The general measures of the PCS and MCS also favored RARP. At the 3-month follow-up, PCS (51.3 vs. 48.1) and MCS (50 vs. 47.8) scores were higher for RARP, compared with LRP.

“It is unclear why our superiority of urinary function in RARP was observed only in early period. However, we can speculate several reasons for better urinary function in RARP group. First, we were able to treat the apex area more delicately with RARP. Second, some of the new techniques which we employed after the introduction of RARP could influence the urinary continence recovery,” the investigators wrote.

The authors had no relevant financial disclosures.

Robot-assisted radical prostatectomy shows better early postoperative outcomes than does laparoscopic radical prostatectomy, but the differences between the two surgical approaches disappeared by the 6-month follow-up.

Dr. Hiroyuki Koike and his colleagues at Wakayama (Japan) Medical University Hospital conducted a study of two groups of patients treated for localized prostate cancer. One group of 229 patients underwent laparoscopic radical prostatectomy (LRP) between July 2007 and July 2013. The other group of 115 patients had robot-assisted radical prostatectomy (RARP) between December 2012 and August 2014 (J Robot Surg. 2017;11[3]:325-31).

The patients were given health-related quality of life self-assessment surveys prior to surgery and at 3, 6, and 12 months post surgery. In addition, a generic questionnaire, the eight-item Short-Form Health Survey, was used to assess a physical component summary (PCS) and a mental component summary (MCS). The Expanded Prostate Cancer Index of Prostate, which covers four domains – urinary, sexual, bowel, and hormonal – was used as a disease-specific measure, and the response rates for both LRP and RARP at each follow-up interval were over 80%.

“The RARP group showed significantly better scores in urinary summary and all urinary subscales at postoperative 3-month follow-up. However, these differences disappeared at postoperative 6 and 12-month follow-up,” the investigators wrote. For the urinary summary score, LRP significantly underperformed, compared with RARP, with scores of 63.3 vs. 75.8, respectively, after 3 months. In addition, the bowel function score was superior for RARP, compared with LRP, at 96.9 vs. 92.9, respectively. Sexual function results were similar, with RARP and LRP scores of 2.8 vs. 0.

The general measures of the PCS and MCS also favored RARP. At the 3-month follow-up, PCS (51.3 vs. 48.1) and MCS (50 vs. 47.8) scores were higher for RARP, compared with LRP.

“It is unclear why our superiority of urinary function in RARP was observed only in early period. However, we can speculate several reasons for better urinary function in RARP group. First, we were able to treat the apex area more delicately with RARP. Second, some of the new techniques which we employed after the introduction of RARP could influence the urinary continence recovery,” the investigators wrote.

The authors had no relevant financial disclosures.

Robot-assisted radical prostatectomy shows better early postoperative outcomes than does laparoscopic radical prostatectomy, but the differences between the two surgical approaches disappeared by the 6-month follow-up.

FROM JOURNAL OF ROBOTIC SURGERY

Key clinical point:

Major finding: At 3 months, quality-of-life scores in all urinary categories were higher after robot-assisted radical prostatectomy than after laparoscopic radical prostatectomy.

Data source: Postop survey results from patients with localized prostate cancer who underwent laparoscopic radical prostatectomy (n = 229) or robot-assisted radical prostatectomy (n = 115).

Disclosures: The investigators had no financial disclosures to report.

Biotin interference a concern in hormonal assays

Biotin supplementation showed signs of interference with biotinylated assays in a crossover trial.

The proposed benefits of biotin (vitamin B7), including stimulating hair growth and assisting in the treatment of various forms of diabetes, have made it a popular supplement. Supplementation typically raises blood biotin well above normal levels, and these high levels have been shown to cause inaccurate results in biotinylated assays, according to Danni Li, PhD, of the advanced research and diagnostic laboratory at the University of Minnesota, Minneapolis, and her colleagues.

They analyzed the results of both biotinylated and nonbiotinylated assays in six participants (two women and four men) who took 10 mg/day of a biotin supplement for 7 days. Two blood samples were drawn from each participant: one before biotin supplementation began, as a baseline, and one after the 7 days of supplementation. The assays measured nine hormones and two nonhormone analytes on multiple diagnostic systems; in total, 37 immunoassays were run on each sample (JAMA. 2017;318[12]:1150-60. doi: 10.1001/jama.2017.13705).

Two immunoassay techniques were used. Sandwich immunoassays were used to measure TSH, parathyroid hormone (PTH), prolactin, N-terminal pro-brain natriuretic peptide (NT-proBNP), PSA, and ferritin, and competitive immunoassays were used to measure total T4, total T3, free T4, free T3, and 25-hydroxyvitamin D (25-OHD). Among the competitive assays, falsely high results were reported for three assays run on the Roche cobas e602 and one run on the Siemens Dimension Vista 1500.

Among the sandwich immunoassays, TSH, PTH, and NT-proBNP results were falsely decreased relative to baseline on the Roche cobas e602 and OCD Vitros 5600 analyzers. Most of the falsely low results came from the OCD Vitros, which showed significant changes from baseline: TSH decreased by 1.67 mIU/L (94%), PTH by 25.8 pg/mL (61%), and NT-proBNP by more than 13.9 pg/mL. On the Roche cobas e602, TSH was falsely decreased by 0.72 mIU/L (37%) compared with baseline.

Biotin did not interfere with every biotinylated assay; interference was observed in 9 of the 23 (39%) biotinylated assays. Specifically, 4 of 15 (27%) sandwich immunoassays gave falsely decreased results, while 5 of 8 (63%) competitive binding assays gave falsely increased results.

“Among the 23 biotinylated assays studied, biotin interference was of greatest clinical significance in the OCD Vitros TSH assay, where falsely decreased TSH concentrations (to less than 0.15 mU/L) could have resulted in misdiagnosis of thyrotoxicosis in otherwise euthyroid individuals,” according to Dr. Li and her associates. “Likewise, falsely decreased OCD Vitros NT-proBNP, to lower than assay detection limits, could possibly result in failure to identify congestive heart failure.”

One investigator received funding and nonfinancial support from Siemens Healthcare Diagnostics, one reported receiving financial support from Abbott Laboratories, and another reported receiving personal fees from Roche and Abbott Laboratories. Dr. Li and the other researchers had no relevant financial disclosures.

FROM JAMA

Key clinical point:

Major finding: 9 of 23 (39%) biotinylated assays showed signs of interference from biotin ingestion.

Data source: Nonrandomized crossover trial of six participants at an academic medical center.

Disclosures: One investigator received funding and nonfinancial support from Siemens Healthcare Diagnostics, one reported receiving financial support from Abbott Laboratories, and another reported receiving personal fees from Roche and Abbott Laboratories. Dr. Li and the other researchers had no relevant financial disclosures.

Diagnostic laparoscopy pinpoints postop abdominal pain in bariatric patients

The etiology of chronic pain after bariatric surgery can be difficult to pinpoint, but diagnostic laparoscopy can detect causes in about half of patients, findings from a small study have shown.

In an investigation conducted by Mohammed Alsulaimy, MD, a surgeon at the Bariatric and Metabolic Institute at the Cleveland Clinic, and his colleagues, 35 patients underwent diagnostic laparoscopy (DL) to identify the causes of their chronic abdominal pain after bariatric surgery. To be included, patients had to have abdominal pain lasting longer than 30 days after their bariatric procedure, a negative CT scan of the abdomen and pelvis, an abdominal ultrasound negative for gallstones, and an upper GI endoscopy with no abnormalities. Researchers collected patient data including age, gender, body weight, body mass index, type of previous bariatric procedure, and time between surgery and onset of pain.

The results of DL were either positive (pathology or injury detected) or negative (no disease or injury detected).