User login

Why Are Prion Diseases on the Rise?

This transcript has been edited for clarity.

In 1986, in Britain, cattle started dying.

The condition, quickly nicknamed “mad cow disease,” was clearly infectious, but the particular pathogen was difficult to identify. By 1993, 120,000 cattle in Britain were identified as being infected. As yet, no human cases had occurred and the UK government insisted that cattle were a dead-end host for the pathogen. By the mid-1990s, however, multiple human cases, attributable to ingestion of meat and organs from infected cattle, were discovered. In humans, variant Creutzfeldt-Jakob disease (CJD) was a media sensation — a nearly uniformly fatal, untreatable condition with a rapid onset of dementia, mobility issues characterized by jerky movements, and autopsy reports finding that the brain itself had turned into a spongy mess.

The United States banned UK beef imports in 1996 and only lifted the ban in 2020.

The disease was made all the more mysterious because the pathogen involved was not a bacterium, parasite, or virus, but a protein — or a proteinaceous infectious particle, shortened to “prion.”

Prions are misfolded proteins that aggregate in cells — in this case, in nerve cells. But what makes prions different from other misfolded proteins is that the misfolded protein catalyzes the conversion of its non-misfolded counterpart into the misfolded configuration. It creates a chain reaction, leading to rapid accumulation of misfolded proteins and cell death.

And, like a time bomb, we all have prion protein inside us. In its normally folded state, the function of prion protein remains unclear — knockout mice do okay without it — but it is also highly conserved across mammalian species, so it probably does something worthwhile, perhaps protecting nerve fibers.

Far more common than humans contracting mad cow disease is the condition known as sporadic CJD, responsible for 85% of all cases of prion-induced brain disease. The cause of sporadic CJD is unknown.

But one thing is known: Cases are increasing.

I don’t want you to freak out; we are not in the midst of a CJD epidemic. But it’s been a while since I’ve seen people discussing the condition — which remains as horrible as it was in the 1990s — and a new research letter appearing in JAMA Neurology brought it back to the top of my mind.

Researchers, led by Matthew Crane at Hopkins, used the CDC’s WONDER cause-of-death database, which pulls diagnoses from death certificates. Normally, I’m not a fan of using death certificates for cause-of-death analyses, but in this case I’ll give it a pass. Assuming that the diagnosis of CJD is made, it would be really unlikely for it not to appear on a death certificate.

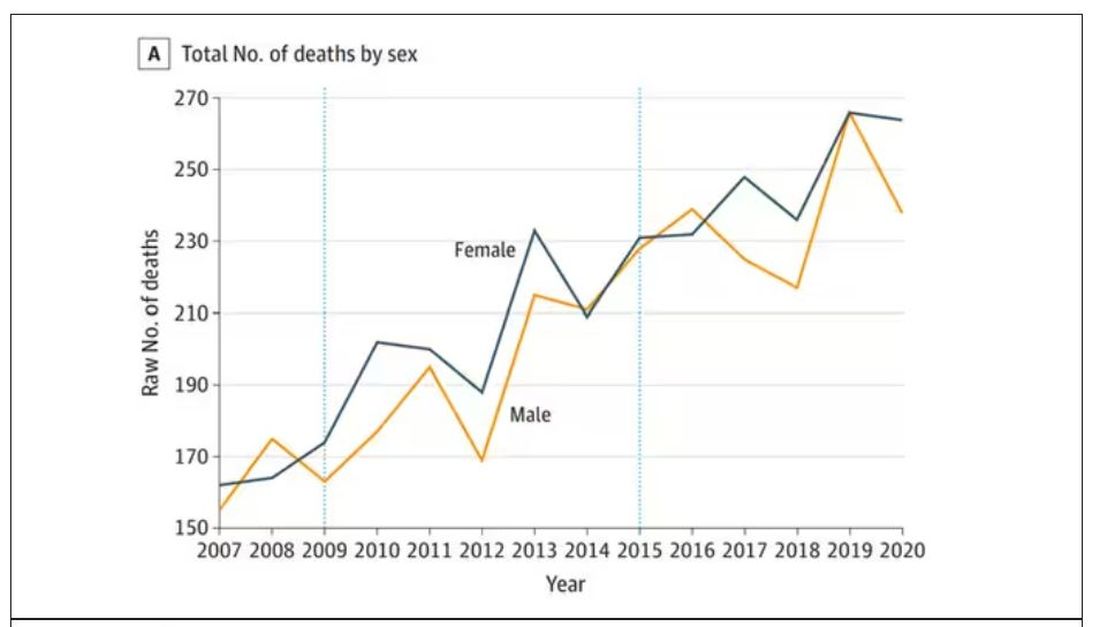

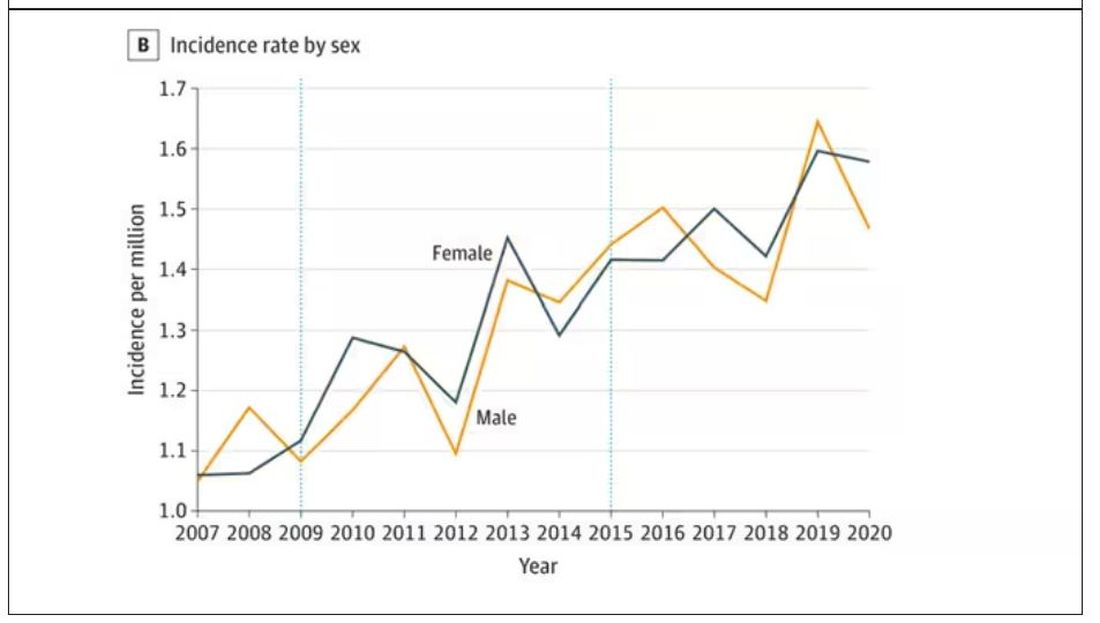

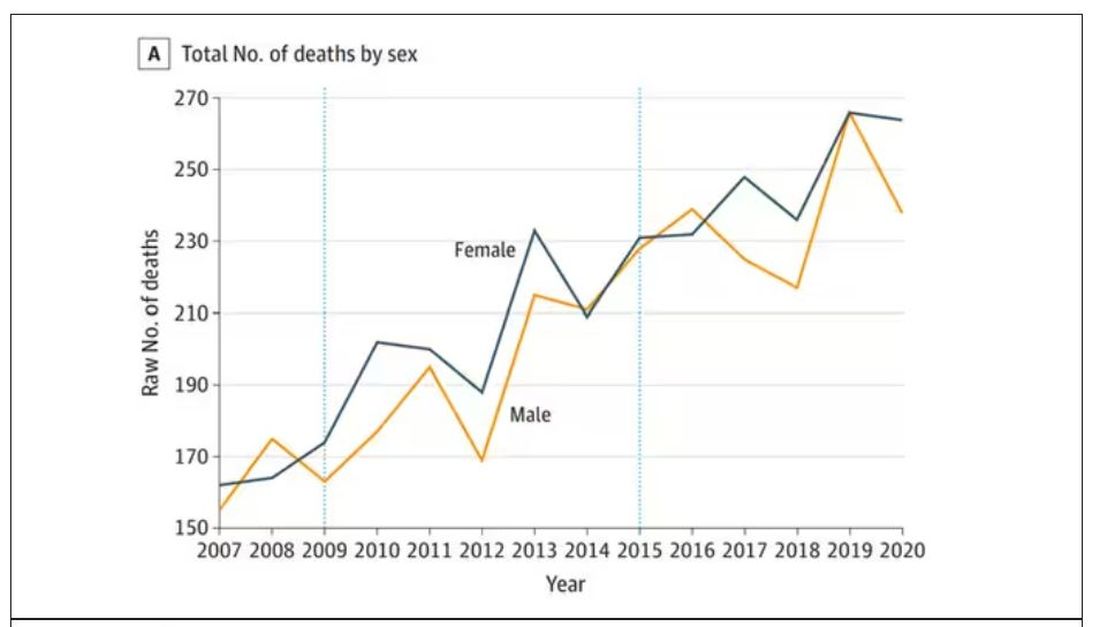

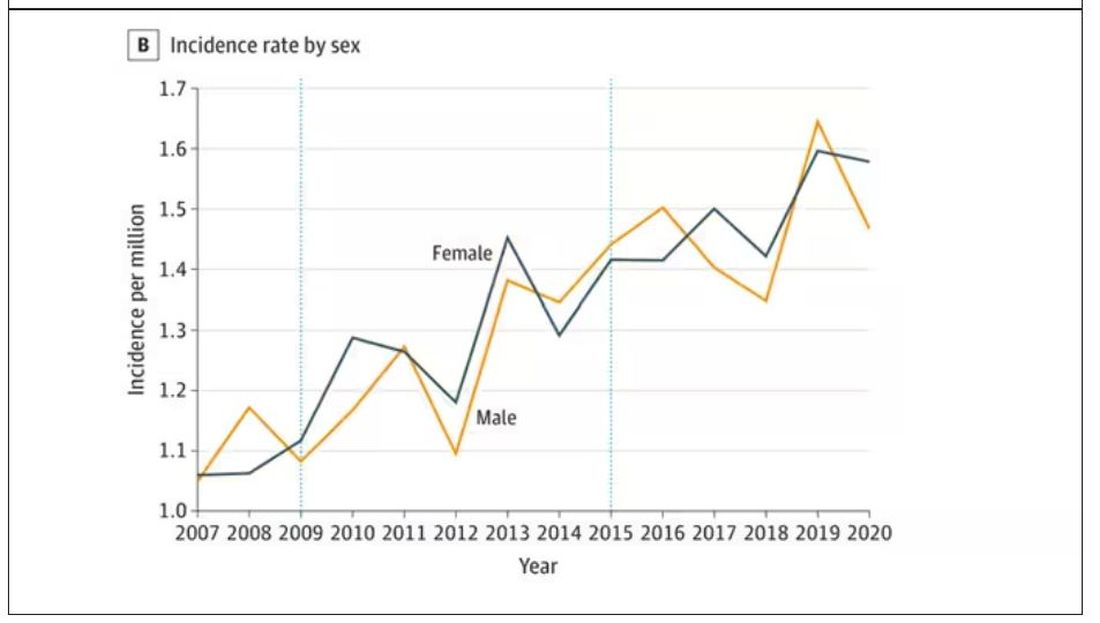

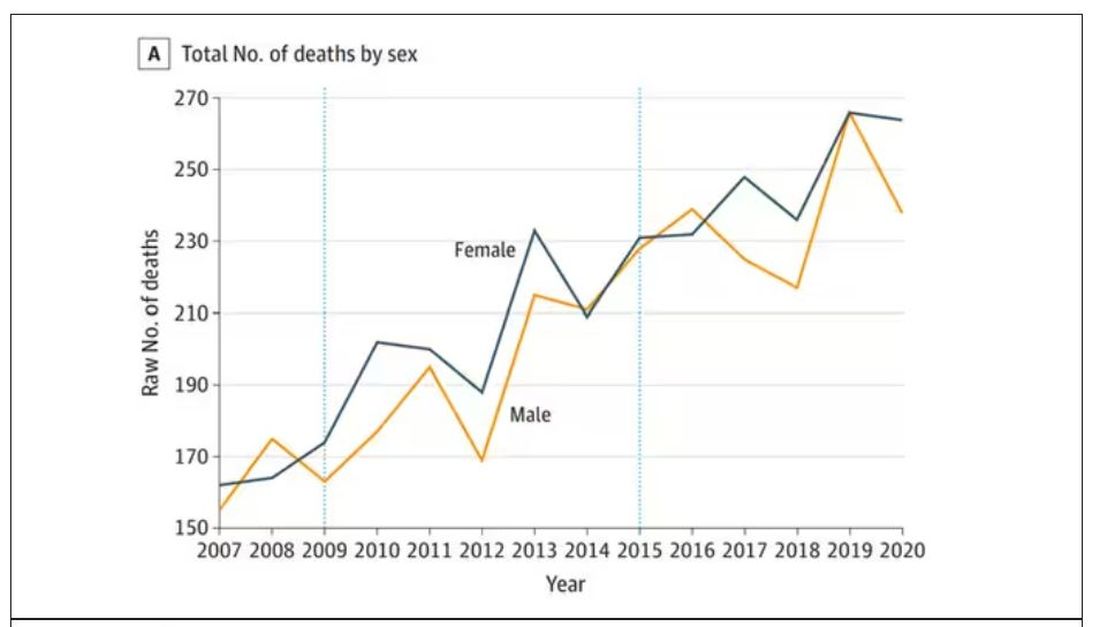

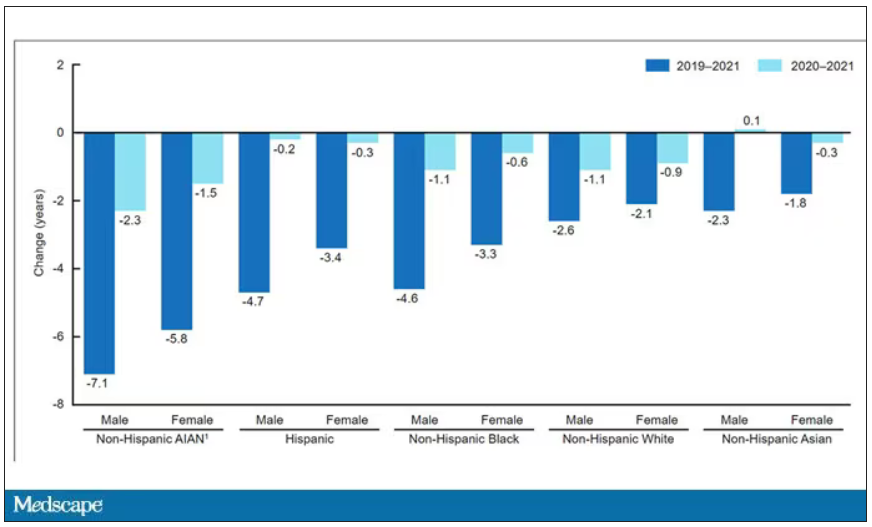

The main findings are seen here.

Note that we can’t tell whether these are sporadic CJD cases or variant CJD cases or even familial CJD cases; however, unless there has been a dramatic change in epidemiology, the vast majority of these will be sporadic.

The question is, why are there more cases?

Whenever this type of question comes up with any disease, there are basically three possibilities:

First, there may be an increase in the susceptible, or at-risk, population. In this case, we know that older people are at higher risk of developing sporadic CJD, and over time, the population has aged. To be fair, the authors adjusted for this and still saw an increase, though it was attenuated.

Second, we might be better at diagnosing the condition. A lot has happened since the mid-1990s, when the diagnosis was based more or less on symptoms. The advent of more sophisticated MRI protocols as well as a new diagnostic test called “real-time quaking-induced conversion testing” may mean we are just better at detecting people with this disease.

Third (and most concerning), a new exposure has occurred. What that exposure might be, where it might come from, is anyone’s guess. It’s hard to do broad-scale epidemiology on very rare diseases.

But given these findings, it seems that a bit more surveillance for this rare but devastating condition is well merited.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. His science communication work can be found in the Huffington Post, on NPR, and here on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn’t, is available now.

F. Perry Wilson, MD, MSCE, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

In 1986, in Britain, cattle started dying.

The condition, quickly nicknamed “mad cow disease,” was clearly infectious, but the particular pathogen was difficult to identify. By 1993, 120,000 cattle in Britain were identified as being infected. As yet, no human cases had occurred and the UK government insisted that cattle were a dead-end host for the pathogen. By the mid-1990s, however, multiple human cases, attributable to ingestion of meat and organs from infected cattle, were discovered. In humans, variant Creutzfeldt-Jakob disease (CJD) was a media sensation — a nearly uniformly fatal, untreatable condition with a rapid onset of dementia, mobility issues characterized by jerky movements, and autopsy reports finding that the brain itself had turned into a spongy mess.

The United States banned UK beef imports in 1996 and only lifted the ban in 2020.

The disease was made all the more mysterious because the pathogen involved was not a bacterium, parasite, or virus, but a protein — or a proteinaceous infectious particle, shortened to “prion.”

Prions are misfolded proteins that aggregate in cells — in this case, in nerve cells. But what makes prions different from other misfolded proteins is that the misfolded protein catalyzes the conversion of its non-misfolded counterpart into the misfolded configuration. It creates a chain reaction, leading to rapid accumulation of misfolded proteins and cell death.

And, like a time bomb, we all have prion protein inside us. In its normally folded state, the function of prion protein remains unclear — knockout mice do okay without it — but it is also highly conserved across mammalian species, so it probably does something worthwhile, perhaps protecting nerve fibers.

Far more common than humans contracting mad cow disease is the condition known as sporadic CJD, responsible for 85% of all cases of prion-induced brain disease. The cause of sporadic CJD is unknown.

But one thing is known: Cases are increasing.

I don’t want you to freak out; we are not in the midst of a CJD epidemic. But it’s been a while since I’ve seen people discussing the condition — which remains as horrible as it was in the 1990s — and a new research letter appearing in JAMA Neurology brought it back to the top of my mind.

Researchers, led by Matthew Crane at Hopkins, used the CDC’s WONDER cause-of-death database, which pulls diagnoses from death certificates. Normally, I’m not a fan of using death certificates for cause-of-death analyses, but in this case I’ll give it a pass. Assuming that the diagnosis of CJD is made, it would be really unlikely for it not to appear on a death certificate.

The main findings are seen here.

Note that we can’t tell whether these are sporadic CJD cases or variant CJD cases or even familial CJD cases; however, unless there has been a dramatic change in epidemiology, the vast majority of these will be sporadic.

The question is, why are there more cases?

Whenever this type of question comes up with any disease, there are basically three possibilities:

First, there may be an increase in the susceptible, or at-risk, population. In this case, we know that older people are at higher risk of developing sporadic CJD, and over time, the population has aged. To be fair, the authors adjusted for this and still saw an increase, though it was attenuated.

Second, we might be better at diagnosing the condition. A lot has happened since the mid-1990s, when the diagnosis was based more or less on symptoms. The advent of more sophisticated MRI protocols as well as a new diagnostic test called “real-time quaking-induced conversion testing” may mean we are just better at detecting people with this disease.

Third (and most concerning), a new exposure has occurred. What that exposure might be, where it might come from, is anyone’s guess. It’s hard to do broad-scale epidemiology on very rare diseases.

But given these findings, it seems that a bit more surveillance for this rare but devastating condition is well merited.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. His science communication work can be found in the Huffington Post, on NPR, and here on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn’t, is available now.

F. Perry Wilson, MD, MSCE, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

In 1986, in Britain, cattle started dying.

The condition, quickly nicknamed “mad cow disease,” was clearly infectious, but the particular pathogen was difficult to identify. By 1993, 120,000 cattle in Britain were identified as being infected. As yet, no human cases had occurred and the UK government insisted that cattle were a dead-end host for the pathogen. By the mid-1990s, however, multiple human cases, attributable to ingestion of meat and organs from infected cattle, were discovered. In humans, variant Creutzfeldt-Jakob disease (CJD) was a media sensation — a nearly uniformly fatal, untreatable condition with a rapid onset of dementia, mobility issues characterized by jerky movements, and autopsy reports finding that the brain itself had turned into a spongy mess.

The United States banned UK beef imports in 1996 and only lifted the ban in 2020.

The disease was made all the more mysterious because the pathogen involved was not a bacterium, parasite, or virus, but a protein — or a proteinaceous infectious particle, shortened to “prion.”

Prions are misfolded proteins that aggregate in cells — in this case, in nerve cells. But what makes prions different from other misfolded proteins is that the misfolded protein catalyzes the conversion of its non-misfolded counterpart into the misfolded configuration. It creates a chain reaction, leading to rapid accumulation of misfolded proteins and cell death.

And, like a time bomb, we all have prion protein inside us. In its normally folded state, the function of prion protein remains unclear — knockout mice do okay without it — but it is also highly conserved across mammalian species, so it probably does something worthwhile, perhaps protecting nerve fibers.

Far more common than humans contracting mad cow disease is the condition known as sporadic CJD, responsible for 85% of all cases of prion-induced brain disease. The cause of sporadic CJD is unknown.

But one thing is known: Cases are increasing.

I don’t want you to freak out; we are not in the midst of a CJD epidemic. But it’s been a while since I’ve seen people discussing the condition — which remains as horrible as it was in the 1990s — and a new research letter appearing in JAMA Neurology brought it back to the top of my mind.

Researchers, led by Matthew Crane at Hopkins, used the CDC’s WONDER cause-of-death database, which pulls diagnoses from death certificates. Normally, I’m not a fan of using death certificates for cause-of-death analyses, but in this case I’ll give it a pass. Assuming that the diagnosis of CJD is made, it would be really unlikely for it not to appear on a death certificate.

The main findings are seen here.

Note that we can’t tell whether these are sporadic CJD cases or variant CJD cases or even familial CJD cases; however, unless there has been a dramatic change in epidemiology, the vast majority of these will be sporadic.

The question is, why are there more cases?

Whenever this type of question comes up with any disease, there are basically three possibilities:

First, there may be an increase in the susceptible, or at-risk, population. In this case, we know that older people are at higher risk of developing sporadic CJD, and over time, the population has aged. To be fair, the authors adjusted for this and still saw an increase, though it was attenuated.

Second, we might be better at diagnosing the condition. A lot has happened since the mid-1990s, when the diagnosis was based more or less on symptoms. The advent of more sophisticated MRI protocols as well as a new diagnostic test called “real-time quaking-induced conversion testing” may mean we are just better at detecting people with this disease.

Third (and most concerning), a new exposure has occurred. What that exposure might be, where it might come from, is anyone’s guess. It’s hard to do broad-scale epidemiology on very rare diseases.

But given these findings, it seems that a bit more surveillance for this rare but devastating condition is well merited.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. His science communication work can be found in the Huffington Post, on NPR, and here on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn’t, is available now.

F. Perry Wilson, MD, MSCE, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Are you sure your patient is alive?

This transcript has been edited for clarity.

Much of my research focuses on what is known as clinical decision support — prompts and messages to providers to help them make good decisions for their patients. I know that these things can be annoying, which is exactly why I study them — to figure out which ones actually help.

When I got started on this about 10 years ago, we were learning a lot about how best to message providers about their patients. My team had developed a simple alert for acute kidney injury (AKI). We knew that providers often missed the diagnosis, so maybe letting them know would improve patient outcomes.

As we tested the alert, we got feedback, and I have kept an email from an ICU doctor from those early days. It read:

Dear Dr. Wilson: Thank you for the automated alert informing me that my patient had AKI. Regrettably, the alert fired about an hour after the patient had died. I feel that the information is less than actionable at this time.

Our early system had neglected to add a conditional flag ensuring that the patient was still alive at the time it sent the alert message. A small oversight, but one that had very large implications. Future studies would show that “false positive” alerts like this seriously degrade physician confidence in the system. And why wouldn’t they?

Not knowing the vital status of a patient can have major consequences.

Health systems send messages to their patients all the time: reminders of appointments, reminders for preventive care, reminders for vaccinations, and so on.

But what if the patient being reminded has died? It’s a waste of resources, of course, but more than that, it can be painful for their families and reflects poorly on the health care system. Of all the people who should know whether someone is alive or dead, shouldn’t their doctor be at the top of the list?

A new study in JAMA Internal Medicine quantifies this very phenomenon.

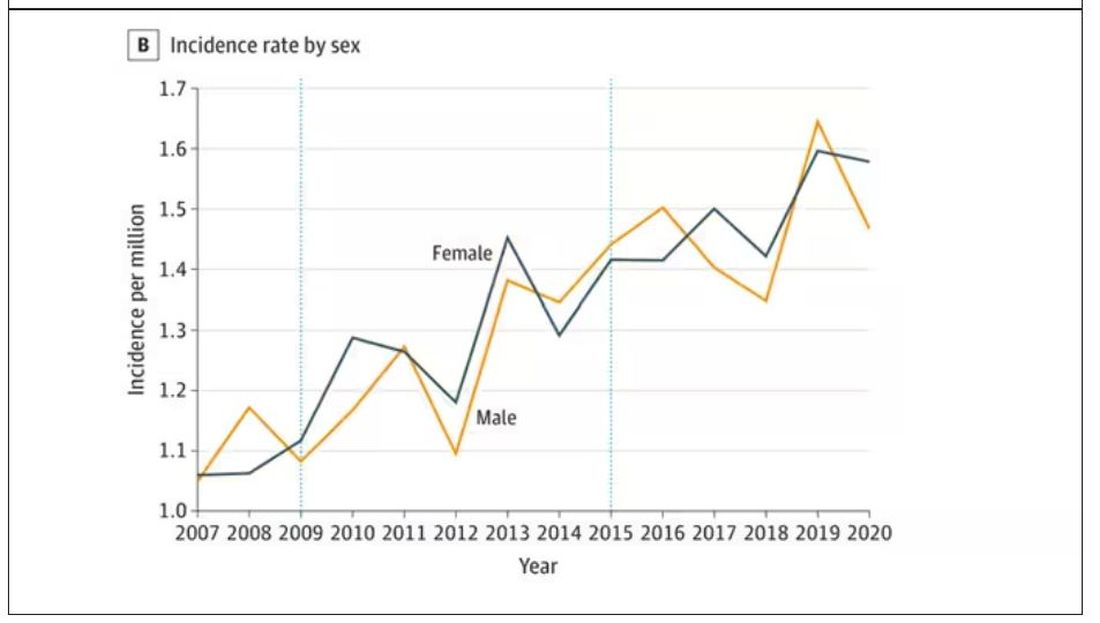

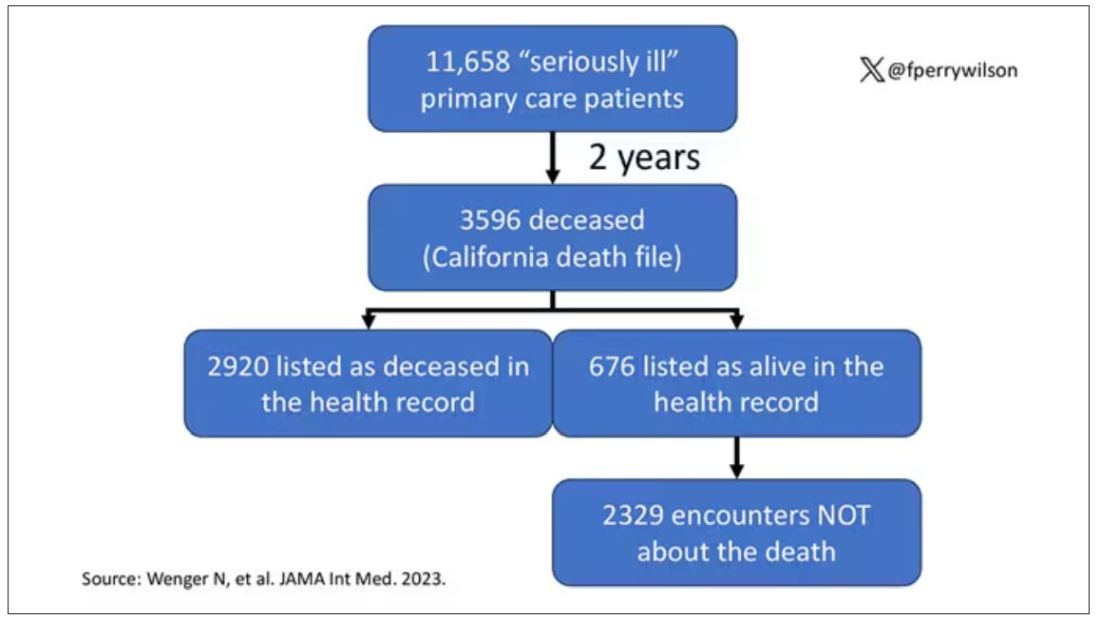

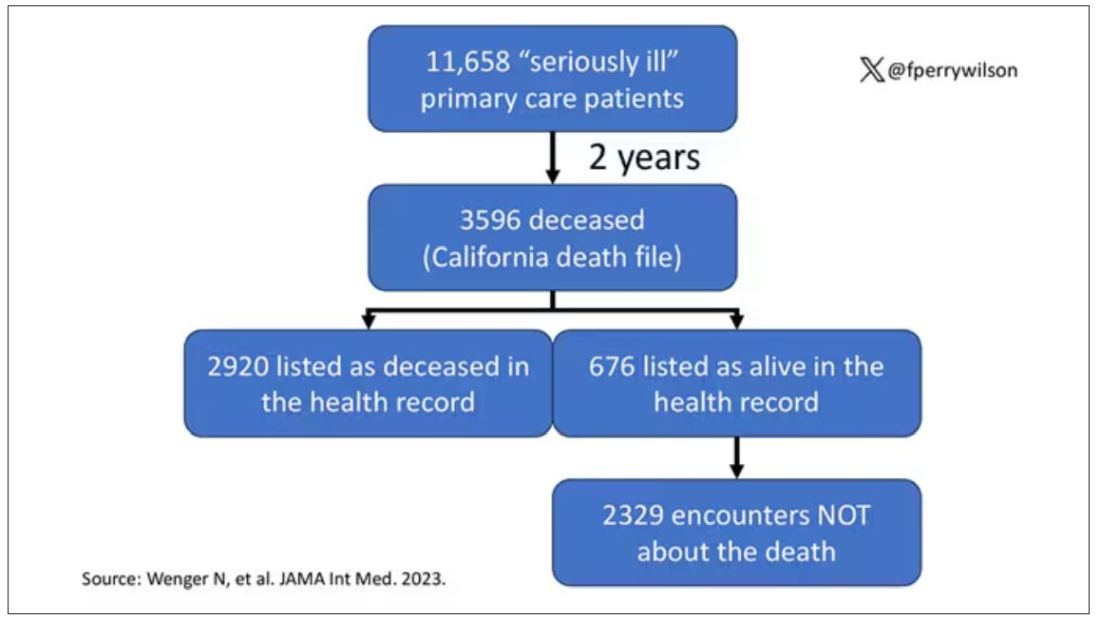

Researchers examined 11,658 primary care patients in their health system who met the criteria of being “seriously ill” and followed them for 2 years. During that period of time, 25% were recorded as deceased in the electronic health record. But 30.8% had died. That left 676 patients who had died, but were not known to have died, left in the system.

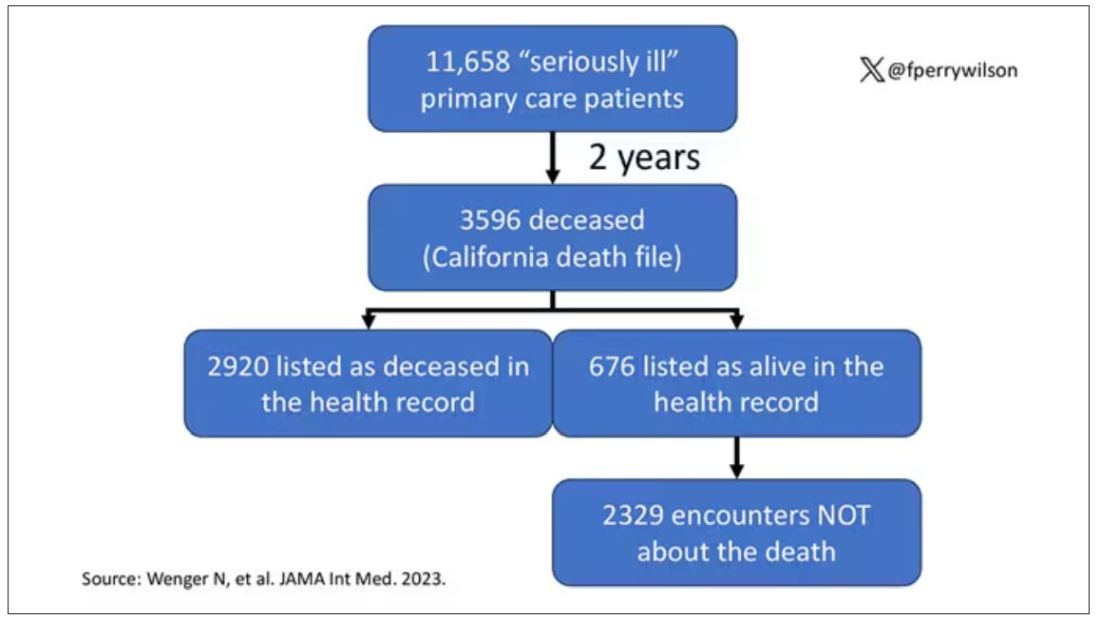

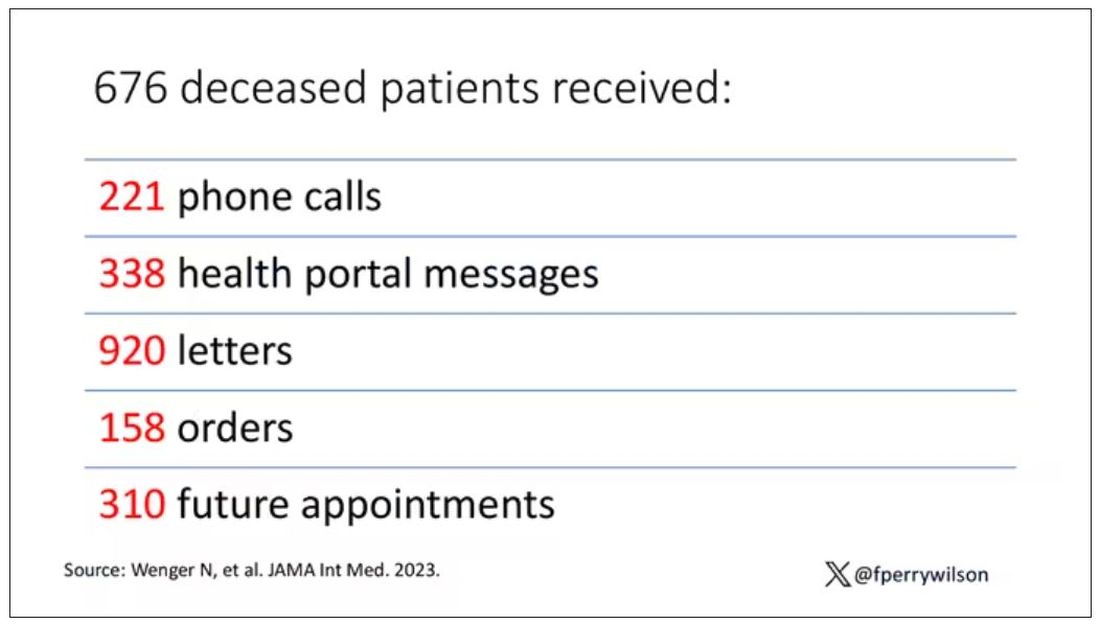

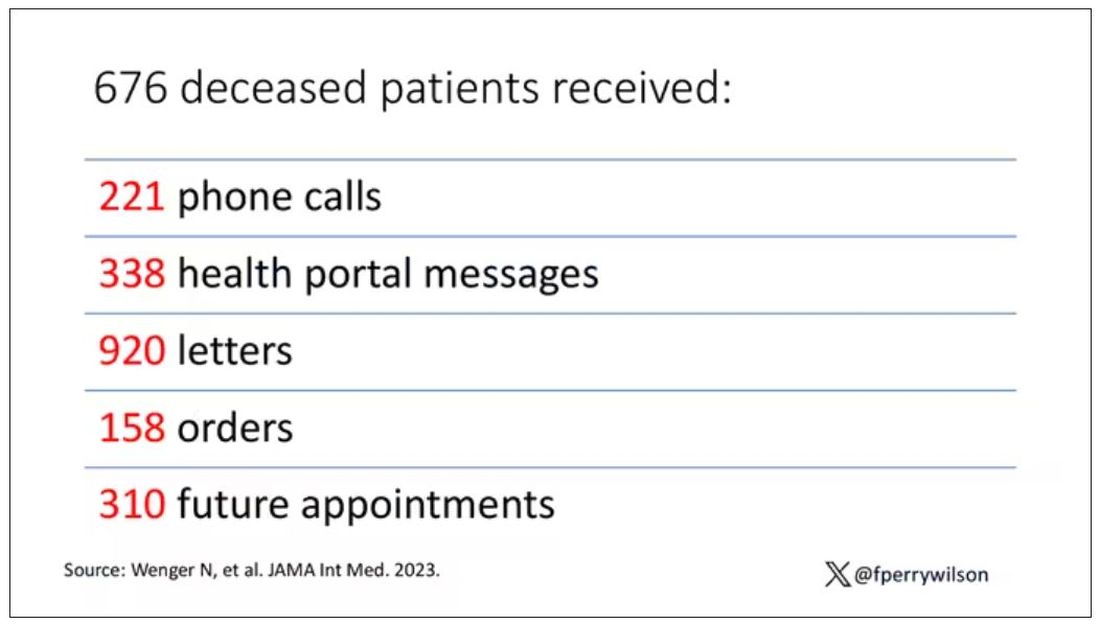

And those 676 were not left to rest in peace. They received 221 telephone and 338 health portal messages not related to death, and 920 letters reminding them about unmet primary care metrics like flu shots and cancer screening. Orders were entered into the health record for things like vaccines and routine screenings for 158 patients, and 310 future appointments — destined to be no-shows — were still on the books. One can only imagine the frustration of families checking their mail and finding yet another letter reminding their deceased loved one to get a mammogram.

How did the researchers figure out who had died? It turns out it’s not that hard. California keeps a record of all deaths in the state; they simply had to search it. Like all state death records, they tend to lag a bit so it’s not clinically terribly useful, but it works. California and most other states also have a very accurate and up-to-date death file which can only be used by law enforcement to investigate criminal activity and fraud; health care is left in the lurch.

Nationwide, there is the real-time fact of death service, supported by the National Association for Public Health Statistics and Information Systems. This allows employers to verify, in real time, whether the person applying for a job is alive. Healthcare systems are not allowed to use it.

Let’s also remember that very few people die in this country without some health care agency knowing about it and recording it. But sharing of medical information is so poor in the United States that your patient could die in a hospital one city away from you and you might not find out until you’re calling them to see why they missed a scheduled follow-up appointment.

These events — the embarrassing lack of knowledge about the very vital status of our patients — highlight a huge problem with health care in our country. The fragmented health care system is terrible at data sharing, in part because of poor protocols, in part because of unfounded concerns about patient privacy, and in part because of a tendency to hoard data that might be valuable in the future. It has to stop. We need to know how our patients are doing even when they are not sitting in front of us. When it comes to life and death, the knowledge is out there; we just can’t access it. Seems like a pretty easy fix.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com .

This transcript has been edited for clarity.

Much of my research focuses on what is known as clinical decision support — prompts and messages to providers to help them make good decisions for their patients. I know that these things can be annoying, which is exactly why I study them — to figure out which ones actually help.

When I got started on this about 10 years ago, we were learning a lot about how best to message providers about their patients. My team had developed a simple alert for acute kidney injury (AKI). We knew that providers often missed the diagnosis, so maybe letting them know would improve patient outcomes.

As we tested the alert, we got feedback, and I have kept an email from an ICU doctor from those early days. It read:

Dear Dr. Wilson: Thank you for the automated alert informing me that my patient had AKI. Regrettably, the alert fired about an hour after the patient had died. I feel that the information is less than actionable at this time.

Our early system had neglected to add a conditional flag ensuring that the patient was still alive at the time it sent the alert message. A small oversight, but one that had very large implications. Future studies would show that “false positive” alerts like this seriously degrade physician confidence in the system. And why wouldn’t they?

Not knowing the vital status of a patient can have major consequences.

Health systems send messages to their patients all the time: reminders of appointments, reminders for preventive care, reminders for vaccinations, and so on.

But what if the patient being reminded has died? It’s a waste of resources, of course, but more than that, it can be painful for their families and reflects poorly on the health care system. Of all the people who should know whether someone is alive or dead, shouldn’t their doctor be at the top of the list?

A new study in JAMA Internal Medicine quantifies this very phenomenon.

Researchers examined 11,658 primary care patients in their health system who met the criteria of being “seriously ill” and followed them for 2 years. During that period of time, 25% were recorded as deceased in the electronic health record. But 30.8% had died. That left 676 patients who had died, but were not known to have died, left in the system.

And those 676 were not left to rest in peace. They received 221 telephone and 338 health portal messages not related to death, and 920 letters reminding them about unmet primary care metrics like flu shots and cancer screening. Orders were entered into the health record for things like vaccines and routine screenings for 158 patients, and 310 future appointments — destined to be no-shows — were still on the books. One can only imagine the frustration of families checking their mail and finding yet another letter reminding their deceased loved one to get a mammogram.

How did the researchers figure out who had died? It turns out it’s not that hard. California keeps a record of all deaths in the state; they simply had to search it. Like all state death records, they tend to lag a bit so it’s not clinically terribly useful, but it works. California and most other states also have a very accurate and up-to-date death file which can only be used by law enforcement to investigate criminal activity and fraud; health care is left in the lurch.

Nationwide, there is the real-time fact of death service, supported by the National Association for Public Health Statistics and Information Systems. This allows employers to verify, in real time, whether the person applying for a job is alive. Healthcare systems are not allowed to use it.

Let’s also remember that very few people die in this country without some health care agency knowing about it and recording it. But sharing of medical information is so poor in the United States that your patient could die in a hospital one city away from you and you might not find out until you’re calling them to see why they missed a scheduled follow-up appointment.

These events — the embarrassing lack of knowledge about the very vital status of our patients — highlight a huge problem with health care in our country. The fragmented health care system is terrible at data sharing, in part because of poor protocols, in part because of unfounded concerns about patient privacy, and in part because of a tendency to hoard data that might be valuable in the future. It has to stop. We need to know how our patients are doing even when they are not sitting in front of us. When it comes to life and death, the knowledge is out there; we just can’t access it. Seems like a pretty easy fix.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com .

This transcript has been edited for clarity.

Much of my research focuses on what is known as clinical decision support — prompts and messages to providers to help them make good decisions for their patients. I know that these things can be annoying, which is exactly why I study them — to figure out which ones actually help.

When I got started on this about 10 years ago, we were learning a lot about how best to message providers about their patients. My team had developed a simple alert for acute kidney injury (AKI). We knew that providers often missed the diagnosis, so maybe letting them know would improve patient outcomes.

As we tested the alert, we got feedback, and I have kept an email from an ICU doctor from those early days. It read:

Dear Dr. Wilson: Thank you for the automated alert informing me that my patient had AKI. Regrettably, the alert fired about an hour after the patient had died. I feel that the information is less than actionable at this time.

Our early system had neglected to add a conditional flag ensuring that the patient was still alive at the time it sent the alert message. A small oversight, but one that had very large implications. Future studies would show that “false positive” alerts like this seriously degrade physician confidence in the system. And why wouldn’t they?

Not knowing the vital status of a patient can have major consequences.

Health systems send messages to their patients all the time: reminders of appointments, reminders for preventive care, reminders for vaccinations, and so on.

But what if the patient being reminded has died? It’s a waste of resources, of course, but more than that, it can be painful for their families and reflects poorly on the health care system. Of all the people who should know whether someone is alive or dead, shouldn’t their doctor be at the top of the list?

A new study in JAMA Internal Medicine quantifies this very phenomenon.

Researchers examined 11,658 primary care patients in their health system who met the criteria of being “seriously ill” and followed them for 2 years. During that period of time, 25% were recorded as deceased in the electronic health record. But 30.8% had died. That left 676 patients who had died, but were not known to have died, left in the system.

And those 676 were not left to rest in peace. They received 221 telephone and 338 health portal messages not related to death, and 920 letters reminding them about unmet primary care metrics like flu shots and cancer screening. Orders were entered into the health record for things like vaccines and routine screenings for 158 patients, and 310 future appointments — destined to be no-shows — were still on the books. One can only imagine the frustration of families checking their mail and finding yet another letter reminding their deceased loved one to get a mammogram.

How did the researchers figure out who had died? It turns out it’s not that hard. California keeps a record of all deaths in the state; they simply had to search it. Like all state death records, they tend to lag a bit so it’s not clinically terribly useful, but it works. California and most other states also have a very accurate and up-to-date death file which can only be used by law enforcement to investigate criminal activity and fraud; health care is left in the lurch.

Nationwide, there is the real-time fact of death service, supported by the National Association for Public Health Statistics and Information Systems. This allows employers to verify, in real time, whether the person applying for a job is alive. Healthcare systems are not allowed to use it.

Let’s also remember that very few people die in this country without some health care agency knowing about it and recording it. But sharing of medical information is so poor in the United States that your patient could die in a hospital one city away from you and you might not find out until you’re calling them to see why they missed a scheduled follow-up appointment.

These events — the embarrassing lack of knowledge about the very vital status of our patients — highlight a huge problem with health care in our country. The fragmented health care system is terrible at data sharing, in part because of poor protocols, in part because of unfounded concerns about patient privacy, and in part because of a tendency to hoard data that might be valuable in the future. It has to stop. We need to know how our patients are doing even when they are not sitting in front of us. When it comes to life and death, the knowledge is out there; we just can’t access it. Seems like a pretty easy fix.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com .

Is air filtration the best public health intervention against respiratory viruses?

This transcript has been edited for clarity.

When it comes to the public health fight against respiratory viruses – COVID, flu, RSV, and so on – it has always struck me as strange how staunchly basically any intervention is opposed. Masking was, of course, the prototypical entrenched warfare of opposing ideologies, with advocates pointing to studies suggesting the efficacy of masking to prevent transmission and advocating for broad masking recommendations, and detractors citing studies that suggested masks were ineffective and characterizing masking policies as fascist overreach. I’ll admit that I was always perplexed by this a bit, as that particular intervention seemed so benign – a bit annoying, I guess, but not crazy.

I have come to appreciate what I call status quo bias, which is the tendency to reject any policy, advice, or intervention that would force you, as an individual, to change your usual behavior. We just don’t like to do that. It has made me think that the most successful public health interventions might be the ones that take the individual out of the loop. And air quality control seems an ideal fit here. Here is a potential intervention where you, the individual, have to do precisely nothing. The status quo is preserved. We just, you know, have cleaner indoor air.

But even the suggestion of air treatment systems as a bulwark against respiratory virus transmission has been met with not just skepticism but cynicism, and perhaps even defeatism. It seems that there are those out there who think there really is nothing we can do. Sickness is interpreted in a Calvinistic framework: You become ill because it is your pre-destiny. But maybe air treatment could actually work. It seems like it might, if a new paper from PLOS One is to be believed.

What we’re talking about is a study titled “Bipolar Ionization Rapidly Inactivates Real-World, Airborne Concentrations of Infective Respiratory Viruses” – a highly controlled, laboratory-based analysis of a bipolar ionization system which seems to rapidly reduce viral counts in the air.

The proposed mechanism of action is pretty simple. The ionization system – which, don’t worry, has been shown not to produce ozone – spits out positively and negatively charged particles, which float around the test chamber, designed to look like a pretty standard room that you might find in an office or a school.

Virus is then injected into the chamber through an aerosolization machine, to achieve concentrations on the order of what you might get standing within 6 feet or so of someone actively infected with COVID while they are breathing and talking.

The idea is that those ions stick to the virus particles, similar to how a balloon sticks to the wall after you rub it on your hair, and that tends to cause them to clump together and settle on surfaces more rapidly, and thus get farther away from their ports of entry to the human system: nose, mouth, and eyes. But the ions may also interfere with viruses’ ability to bind to cellular receptors, even in the air.

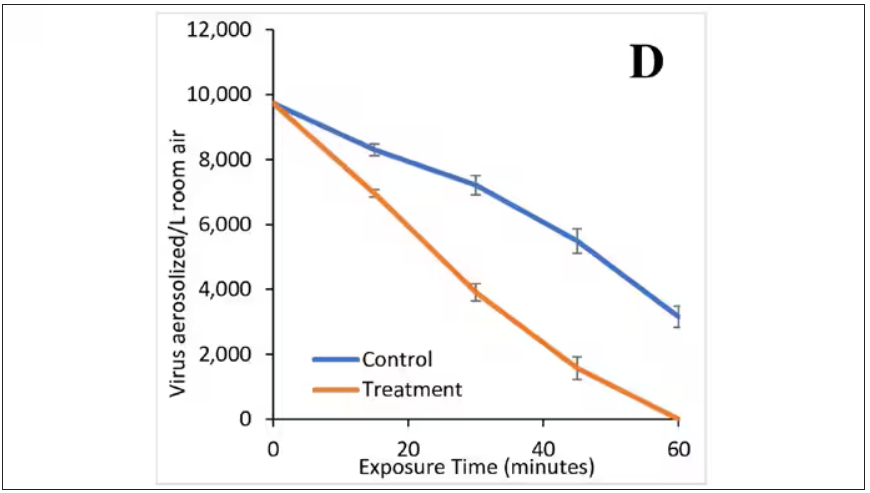

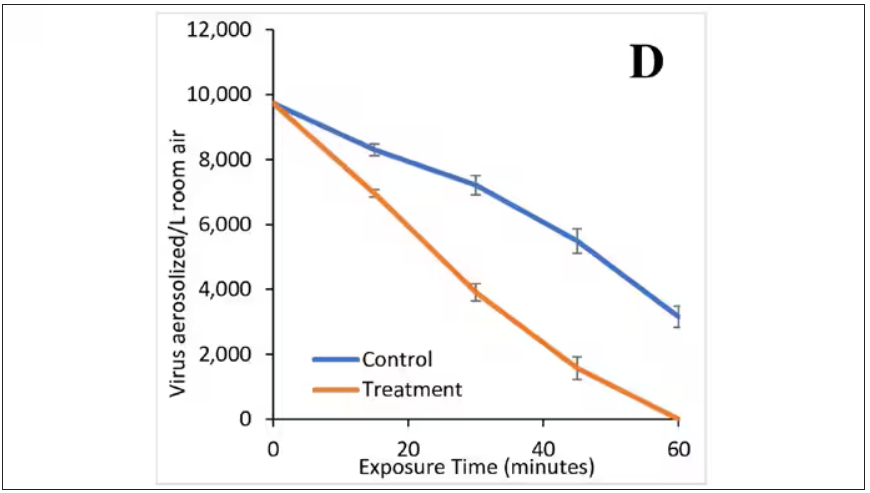

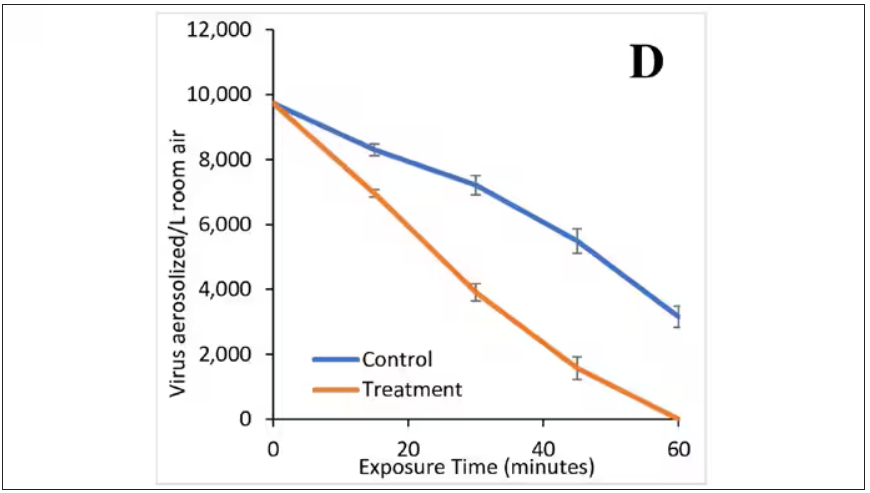

To quantify viral infectivity, the researchers used a biological system. Basically, you take air samples and expose a petri dish of cells to them and see how many cells die. Fewer cells dying, less infective. Under control conditions, you can see that virus infectivity does decrease over time. Time zero here is the end of a SARS-CoV-2 aerosolization.

This may simply reflect the fact that virus particles settle out of the air. But As you can see, within about an hour, you have almost no infective virus detectable. That’s fairly impressive.

Now, I’m not saying that this is a panacea, but it is certainly worth considering the use of technologies like these if we are going to revamp the infrastructure of our offices and schools. And, of course, it would be nice to see this tested in a rigorous clinical trial with actual infected people, not cells, as the outcome. But I continue to be encouraged by interventions like this which, to be honest, ask very little of us as individuals. Maybe it’s time we accept the things, or people, that we cannot change.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

When it comes to the public health fight against respiratory viruses – COVID, flu, RSV, and so on – it has always struck me as strange how staunchly basically any intervention is opposed. Masking was, of course, the prototypical entrenched warfare of opposing ideologies, with advocates pointing to studies suggesting the efficacy of masking to prevent transmission and advocating for broad masking recommendations, and detractors citing studies that suggested masks were ineffective and characterizing masking policies as fascist overreach. I’ll admit that I was always perplexed by this a bit, as that particular intervention seemed so benign – a bit annoying, I guess, but not crazy.

I have come to appreciate what I call status quo bias, which is the tendency to reject any policy, advice, or intervention that would force you, as an individual, to change your usual behavior. We just don’t like to do that. It has made me think that the most successful public health interventions might be the ones that take the individual out of the loop. And air quality control seems an ideal fit here. Here is a potential intervention where you, the individual, have to do precisely nothing. The status quo is preserved. We just, you know, have cleaner indoor air.

But even the suggestion of air treatment systems as a bulwark against respiratory virus transmission has been met with not just skepticism but cynicism, and perhaps even defeatism. It seems that there are those out there who think there really is nothing we can do. Sickness is interpreted in a Calvinistic framework: You become ill because it is your pre-destiny. But maybe air treatment could actually work. It seems like it might, if a new paper from PLOS One is to be believed.

What we’re talking about is a study titled “Bipolar Ionization Rapidly Inactivates Real-World, Airborne Concentrations of Infective Respiratory Viruses” – a highly controlled, laboratory-based analysis of a bipolar ionization system which seems to rapidly reduce viral counts in the air.

The proposed mechanism of action is pretty simple. The ionization system – which, don’t worry, has been shown not to produce ozone – spits out positively and negatively charged particles, which float around the test chamber, designed to look like a pretty standard room that you might find in an office or a school.

Virus is then injected into the chamber through an aerosolization machine, to achieve concentrations on the order of what you might get standing within 6 feet or so of someone actively infected with COVID while they are breathing and talking.

The idea is that those ions stick to the virus particles, similar to how a balloon sticks to the wall after you rub it on your hair, and that tends to cause them to clump together and settle on surfaces more rapidly, and thus get farther away from their ports of entry to the human system: nose, mouth, and eyes. But the ions may also interfere with viruses’ ability to bind to cellular receptors, even in the air.

To quantify viral infectivity, the researchers used a biological system. Basically, you take air samples and expose a petri dish of cells to them and see how many cells die. Fewer cells dying, less infective. Under control conditions, you can see that virus infectivity does decrease over time. Time zero here is the end of a SARS-CoV-2 aerosolization.

This may simply reflect the fact that virus particles settle out of the air. But As you can see, within about an hour, you have almost no infective virus detectable. That’s fairly impressive.

Now, I’m not saying that this is a panacea, but it is certainly worth considering the use of technologies like these if we are going to revamp the infrastructure of our offices and schools. And, of course, it would be nice to see this tested in a rigorous clinical trial with actual infected people, not cells, as the outcome. But I continue to be encouraged by interventions like this which, to be honest, ask very little of us as individuals. Maybe it’s time we accept the things, or people, that we cannot change.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

When it comes to the public health fight against respiratory viruses – COVID, flu, RSV, and so on – it has always struck me as strange how staunchly basically any intervention is opposed. Masking was, of course, the prototypical entrenched warfare of opposing ideologies, with advocates pointing to studies suggesting the efficacy of masking to prevent transmission and advocating for broad masking recommendations, and detractors citing studies that suggested masks were ineffective and characterizing masking policies as fascist overreach. I’ll admit that I was always perplexed by this a bit, as that particular intervention seemed so benign – a bit annoying, I guess, but not crazy.

I have come to appreciate what I call status quo bias, which is the tendency to reject any policy, advice, or intervention that would force you, as an individual, to change your usual behavior. We just don’t like to do that. It has made me think that the most successful public health interventions might be the ones that take the individual out of the loop. And air quality control seems an ideal fit here. Here is a potential intervention where you, the individual, have to do precisely nothing. The status quo is preserved. We just, you know, have cleaner indoor air.

But even the suggestion of air treatment systems as a bulwark against respiratory virus transmission has been met with not just skepticism but cynicism, and perhaps even defeatism. It seems that there are those out there who think there really is nothing we can do. Sickness is interpreted in a Calvinistic framework: You become ill because it is your pre-destiny. But maybe air treatment could actually work. It seems like it might, if a new paper from PLOS One is to be believed.

What we’re talking about is a study titled “Bipolar Ionization Rapidly Inactivates Real-World, Airborne Concentrations of Infective Respiratory Viruses” – a highly controlled, laboratory-based analysis of a bipolar ionization system which seems to rapidly reduce viral counts in the air.

The proposed mechanism of action is pretty simple. The ionization system – which, don’t worry, has been shown not to produce ozone – spits out positively and negatively charged particles, which float around the test chamber, designed to look like a pretty standard room that you might find in an office or a school.

Virus is then injected into the chamber through an aerosolization machine, to achieve concentrations on the order of what you might get standing within 6 feet or so of someone actively infected with COVID while they are breathing and talking.

The idea is that those ions stick to the virus particles, similar to how a balloon sticks to the wall after you rub it on your hair, and that tends to cause them to clump together and settle on surfaces more rapidly, and thus get farther away from their ports of entry to the human system: nose, mouth, and eyes. But the ions may also interfere with viruses’ ability to bind to cellular receptors, even in the air.

To quantify viral infectivity, the researchers used a biological system. Basically, you take air samples and expose a petri dish of cells to them and see how many cells die. Fewer cells dying, less infective. Under control conditions, you can see that virus infectivity does decrease over time. Time zero here is the end of a SARS-CoV-2 aerosolization.

This may simply reflect the fact that virus particles settle out of the air. But As you can see, within about an hour, you have almost no infective virus detectable. That’s fairly impressive.

Now, I’m not saying that this is a panacea, but it is certainly worth considering the use of technologies like these if we are going to revamp the infrastructure of our offices and schools. And, of course, it would be nice to see this tested in a rigorous clinical trial with actual infected people, not cells, as the outcome. But I continue to be encouraged by interventions like this which, to be honest, ask very little of us as individuals. Maybe it’s time we accept the things, or people, that we cannot change.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

Headache after drinking red wine? This could be why

This transcript has been edited for clarity.

Robert Louis Stevenson famously said, “Wine is bottled poetry.” And I think it works quite well. I’ve had wines that are simple, elegant, and unpretentious like Emily Dickinson, and passionate and mysterious like Pablo Neruda. And I’ve had wines that are more analogous to the limerick you might read scrawled on a rest-stop bathroom wall. Those ones give me headaches.

– and apparently it’s not just the alcohol.

Headaches are common, and headaches after drinking alcohol are particularly common. An interesting epidemiologic phenomenon, not yet adequately explained, is why red wine is associated with more headache than other forms of alcohol. There have been many studies fingering many suspects, from sulfites to tannins to various phenolic compounds, but none have really provided a concrete explanation for what might be going on.

A new hypothesis came to the fore on Nov. 20 in the journal Scientific Reports:

To understand the idea, first a reminder of what happens when you drink alcohol, physiologically.

Alcohol is metabolized by the enzyme alcohol dehydrogenase in the gut and then in the liver. That turns it into acetaldehyde, a toxic metabolite. In most of us, aldehyde dehydrogenase (ALDH) quickly metabolizes acetaldehyde to the inert acetate, which can be safely excreted.

I say “most of us” because some populations, particularly those with East Asian ancestry, have a mutation in the ALDH gene which can lead to accumulation of toxic acetaldehyde with alcohol consumption – leading to facial flushing, nausea, and headache.

We can also inhibit the enzyme medically. That’s what the drug disulfiram, also known as Antabuse, does. It doesn’t prevent you from wanting to drink; it makes the consequences of drinking incredibly aversive.

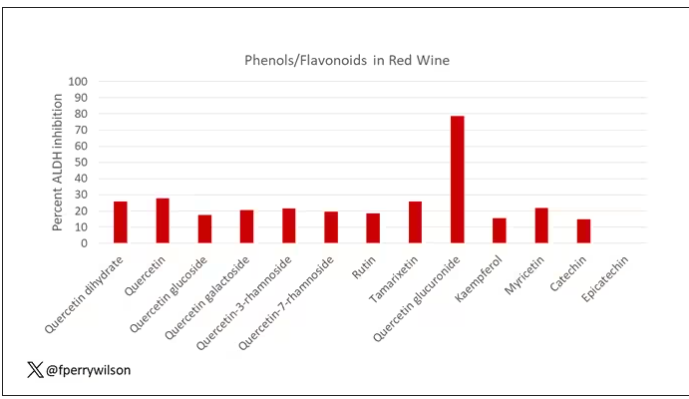

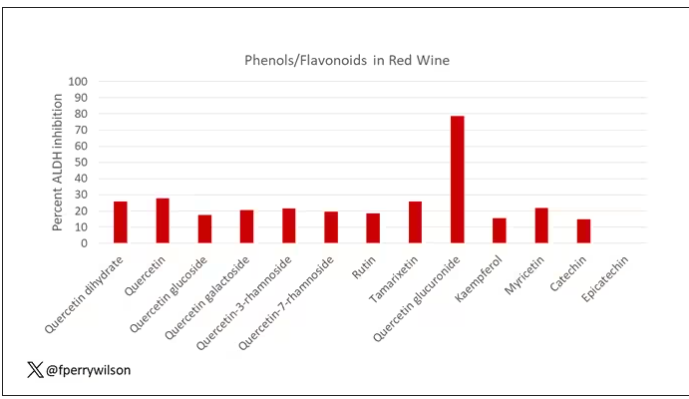

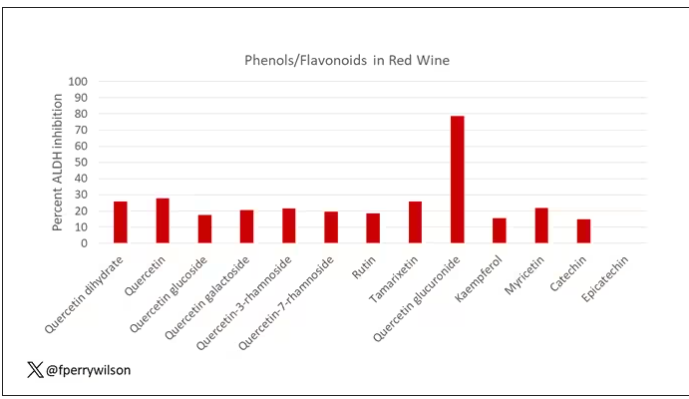

The researchers focused in on the aldehyde dehydrogenase enzyme and conducted a screening study. Are there any compounds in red wine that naturally inhibit ALDH?

The results pointed squarely at quercetin, and particularly its metabolite quercetin glucuronide, which, at 20 micromolar concentrations, inhibited about 80% of ALDH activity.

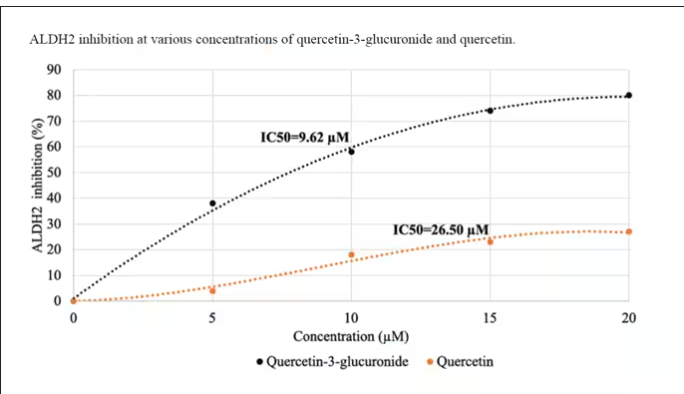

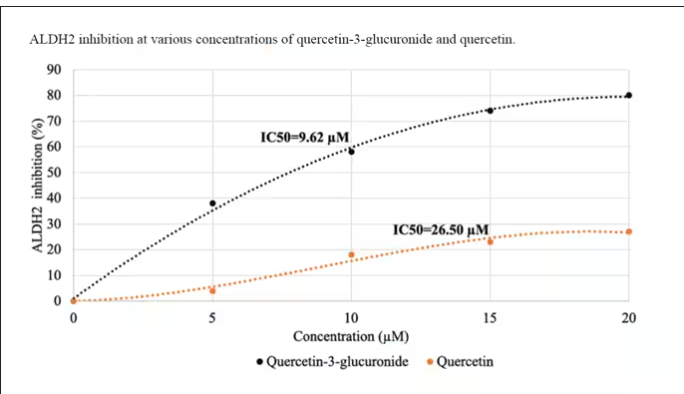

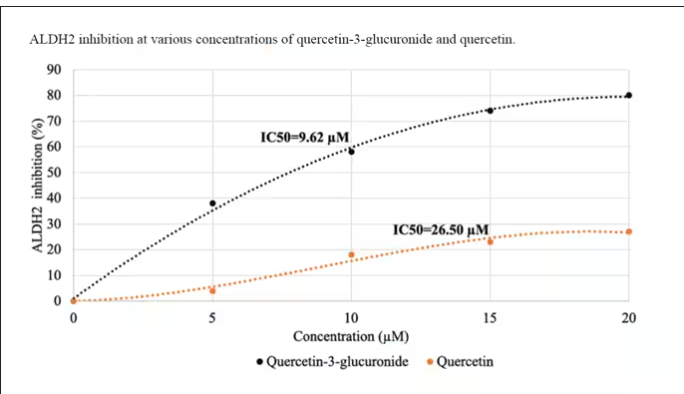

Quercetin is a flavonoid – a compound that gives color to a variety of vegetables and fruits, including grapes. In a test tube, it is an antioxidant, which is enough evidence to spawn a small quercetin-as-supplement industry, but there is no convincing evidence that it is medically useful. The authors then examined the concentration of quercetin glucuronide to achieve various inhibitions of ALDH, as you can see in this graph here.

By about 10 micromolar, we see a decent amount of inhibition. Disulfiram is about 10 times more potent than that, but then again, you don’t drink three glasses of disulfiram with Thanksgiving dinner.

This is where this study stops. But it obviously tells us very little about what might be happening in the human body. For that, we need to ask the question: Can we get our quercetin levels to 10 micromolar? Is that remotely achievable?

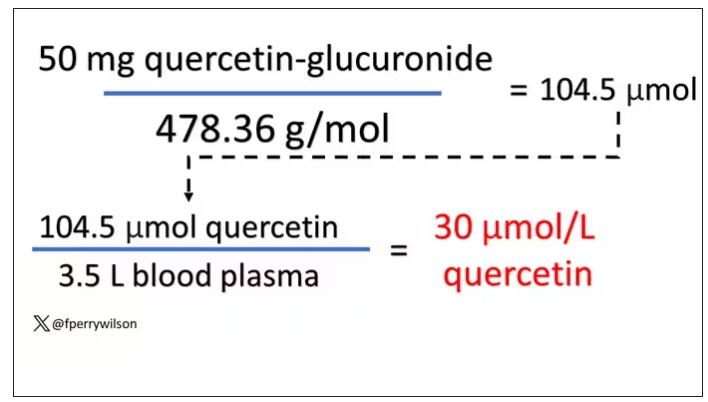

Let’s start with how much quercetin there is in red wine. Like all things wine, it varies, but this study examining Australian wines found mean concentrations of 11 mg/L. The highest value I saw was close to 50 mg/L.

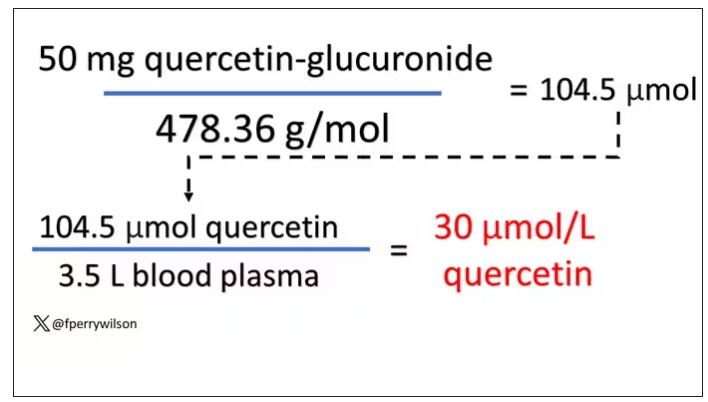

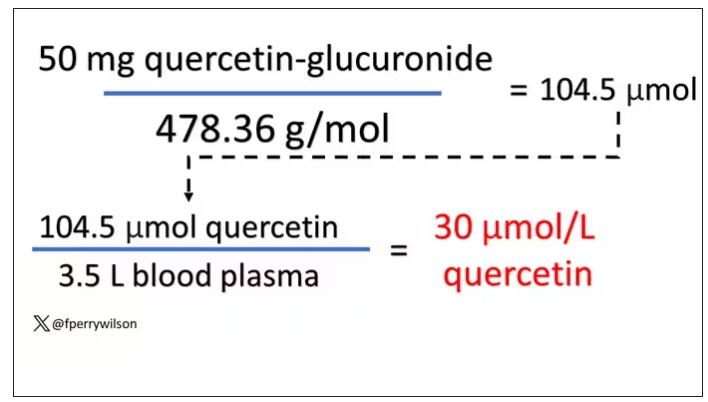

So let’s do some math. To make the numbers easy, let’s say you drank a liter of Australian wine, taking in 50 mg of quercetin glucuronide.

How much of that gets into your bloodstream? Some studies suggest a bioavailability of less than 1%, which basically means none and should probably put the quercetin hypothesis to bed. But there is some variation here too; it seems to depend on the form of quercetin you ingest.

Let’s say all 50 mg gets into your bloodstream. What blood concentration would that lead to? Well, I’ll keep the stoichiometry in the graphics and just say that if we assume that the volume of distribution of the compound is restricted to plasma alone, then you could achieve similar concentrations to what was done in petri dishes during this study.

Of course, if quercetin is really the culprit behind red wine headache, I have some questions: Why aren’t the Amazon reviews of quercetin supplements chock full of warnings not to take them with alcohol? And other foods have way higher quercetin concentration than wine, but you don’t hear people warning not to take your red onions with alcohol, or your capers, or lingonberries.

There’s some more work to be done here – most importantly, some human studies. Let’s give people wine with different amounts of quercetin and see what happens. Sign me up. Seriously.

As for Thanksgiving, it’s worth noting that cranberries have a lot of quercetin in them. So between the cranberry sauce, the Beaujolais, and your uncle ranting about the contrails again, the probability of headache is pretty darn high. Stay safe out there, and Happy Thanksgiving.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

Robert Louis Stevenson famously said, “Wine is bottled poetry.” And I think it works quite well. I’ve had wines that are simple, elegant, and unpretentious like Emily Dickinson, and passionate and mysterious like Pablo Neruda. And I’ve had wines that are more analogous to the limerick you might read scrawled on a rest-stop bathroom wall. Those ones give me headaches.

– and apparently it’s not just the alcohol.

Headaches are common, and headaches after drinking alcohol are particularly common. An interesting epidemiologic phenomenon, not yet adequately explained, is why red wine is associated with more headache than other forms of alcohol. There have been many studies fingering many suspects, from sulfites to tannins to various phenolic compounds, but none have really provided a concrete explanation for what might be going on.

A new hypothesis came to the fore on Nov. 20 in the journal Scientific Reports:

To understand the idea, first a reminder of what happens when you drink alcohol, physiologically.

Alcohol is metabolized by the enzyme alcohol dehydrogenase in the gut and then in the liver. That turns it into acetaldehyde, a toxic metabolite. In most of us, aldehyde dehydrogenase (ALDH) quickly metabolizes acetaldehyde to the inert acetate, which can be safely excreted.

I say “most of us” because some populations, particularly those with East Asian ancestry, have a mutation in the ALDH gene which can lead to accumulation of toxic acetaldehyde with alcohol consumption – leading to facial flushing, nausea, and headache.

We can also inhibit the enzyme medically. That’s what the drug disulfiram, also known as Antabuse, does. It doesn’t prevent you from wanting to drink; it makes the consequences of drinking incredibly aversive.

The researchers focused in on the aldehyde dehydrogenase enzyme and conducted a screening study. Are there any compounds in red wine that naturally inhibit ALDH?

The results pointed squarely at quercetin, and particularly its metabolite quercetin glucuronide, which, at 20 micromolar concentrations, inhibited about 80% of ALDH activity.

Quercetin is a flavonoid – a compound that gives color to a variety of vegetables and fruits, including grapes. In a test tube, it is an antioxidant, which is enough evidence to spawn a small quercetin-as-supplement industry, but there is no convincing evidence that it is medically useful. The authors then examined the concentration of quercetin glucuronide to achieve various inhibitions of ALDH, as you can see in this graph here.

By about 10 micromolar, we see a decent amount of inhibition. Disulfiram is about 10 times more potent than that, but then again, you don’t drink three glasses of disulfiram with Thanksgiving dinner.

This is where this study stops. But it obviously tells us very little about what might be happening in the human body. For that, we need to ask the question: Can we get our quercetin levels to 10 micromolar? Is that remotely achievable?

Let’s start with how much quercetin there is in red wine. Like all things wine, it varies, but this study examining Australian wines found mean concentrations of 11 mg/L. The highest value I saw was close to 50 mg/L.

So let’s do some math. To make the numbers easy, let’s say you drank a liter of Australian wine, taking in 50 mg of quercetin glucuronide.

How much of that gets into your bloodstream? Some studies suggest a bioavailability of less than 1%, which basically means none and should probably put the quercetin hypothesis to bed. But there is some variation here too; it seems to depend on the form of quercetin you ingest.

Let’s say all 50 mg gets into your bloodstream. What blood concentration would that lead to? Well, I’ll keep the stoichiometry in the graphics and just say that if we assume that the volume of distribution of the compound is restricted to plasma alone, then you could achieve similar concentrations to what was done in petri dishes during this study.

Of course, if quercetin is really the culprit behind red wine headache, I have some questions: Why aren’t the Amazon reviews of quercetin supplements chock full of warnings not to take them with alcohol? And other foods have way higher quercetin concentration than wine, but you don’t hear people warning not to take your red onions with alcohol, or your capers, or lingonberries.

There’s some more work to be done here – most importantly, some human studies. Let’s give people wine with different amounts of quercetin and see what happens. Sign me up. Seriously.

As for Thanksgiving, it’s worth noting that cranberries have a lot of quercetin in them. So between the cranberry sauce, the Beaujolais, and your uncle ranting about the contrails again, the probability of headache is pretty darn high. Stay safe out there, and Happy Thanksgiving.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

Robert Louis Stevenson famously said, “Wine is bottled poetry.” And I think it works quite well. I’ve had wines that are simple, elegant, and unpretentious like Emily Dickinson, and passionate and mysterious like Pablo Neruda. And I’ve had wines that are more analogous to the limerick you might read scrawled on a rest-stop bathroom wall. Those ones give me headaches.

– and apparently it’s not just the alcohol.

Headaches are common, and headaches after drinking alcohol are particularly common. An interesting epidemiologic phenomenon, not yet adequately explained, is why red wine is associated with more headache than other forms of alcohol. There have been many studies fingering many suspects, from sulfites to tannins to various phenolic compounds, but none have really provided a concrete explanation for what might be going on.

A new hypothesis came to the fore on Nov. 20 in the journal Scientific Reports:

To understand the idea, first a reminder of what happens when you drink alcohol, physiologically.

Alcohol is metabolized by the enzyme alcohol dehydrogenase in the gut and then in the liver. That turns it into acetaldehyde, a toxic metabolite. In most of us, aldehyde dehydrogenase (ALDH) quickly metabolizes acetaldehyde to the inert acetate, which can be safely excreted.

I say “most of us” because some populations, particularly those with East Asian ancestry, have a mutation in the ALDH gene which can lead to accumulation of toxic acetaldehyde with alcohol consumption – leading to facial flushing, nausea, and headache.

We can also inhibit the enzyme medically. That’s what the drug disulfiram, also known as Antabuse, does. It doesn’t prevent you from wanting to drink; it makes the consequences of drinking incredibly aversive.

The researchers focused in on the aldehyde dehydrogenase enzyme and conducted a screening study. Are there any compounds in red wine that naturally inhibit ALDH?

The results pointed squarely at quercetin, and particularly its metabolite quercetin glucuronide, which, at 20 micromolar concentrations, inhibited about 80% of ALDH activity.

Quercetin is a flavonoid – a compound that gives color to a variety of vegetables and fruits, including grapes. In a test tube, it is an antioxidant, which is enough evidence to spawn a small quercetin-as-supplement industry, but there is no convincing evidence that it is medically useful. The authors then examined the concentration of quercetin glucuronide to achieve various inhibitions of ALDH, as you can see in this graph here.

By about 10 micromolar, we see a decent amount of inhibition. Disulfiram is about 10 times more potent than that, but then again, you don’t drink three glasses of disulfiram with Thanksgiving dinner.

This is where this study stops. But it obviously tells us very little about what might be happening in the human body. For that, we need to ask the question: Can we get our quercetin levels to 10 micromolar? Is that remotely achievable?

Let’s start with how much quercetin there is in red wine. Like all things wine, it varies, but this study examining Australian wines found mean concentrations of 11 mg/L. The highest value I saw was close to 50 mg/L.

So let’s do some math. To make the numbers easy, let’s say you drank a liter of Australian wine, taking in 50 mg of quercetin glucuronide.

How much of that gets into your bloodstream? Some studies suggest a bioavailability of less than 1%, which basically means none and should probably put the quercetin hypothesis to bed. But there is some variation here too; it seems to depend on the form of quercetin you ingest.

Let’s say all 50 mg gets into your bloodstream. What blood concentration would that lead to? Well, I’ll keep the stoichiometry in the graphics and just say that if we assume that the volume of distribution of the compound is restricted to plasma alone, then you could achieve similar concentrations to what was done in petri dishes during this study.

Of course, if quercetin is really the culprit behind red wine headache, I have some questions: Why aren’t the Amazon reviews of quercetin supplements chock full of warnings not to take them with alcohol? And other foods have way higher quercetin concentration than wine, but you don’t hear people warning not to take your red onions with alcohol, or your capers, or lingonberries.

There’s some more work to be done here – most importantly, some human studies. Let’s give people wine with different amounts of quercetin and see what happens. Sign me up. Seriously.

As for Thanksgiving, it’s worth noting that cranberries have a lot of quercetin in them. So between the cranberry sauce, the Beaujolais, and your uncle ranting about the contrails again, the probability of headache is pretty darn high. Stay safe out there, and Happy Thanksgiving.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

The future of medicine is RNA

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I’m Dr F. Perry Wilson of the Yale School of Medicine.

Every once in a while, medicine changes in a fundamental way, and we may not realize it while it’s happening. I wasn’t around in 1928 when Fleming discovered penicillin; or in 1953 when Watson, Crick, and Franklin characterized the double-helical structure of DNA.

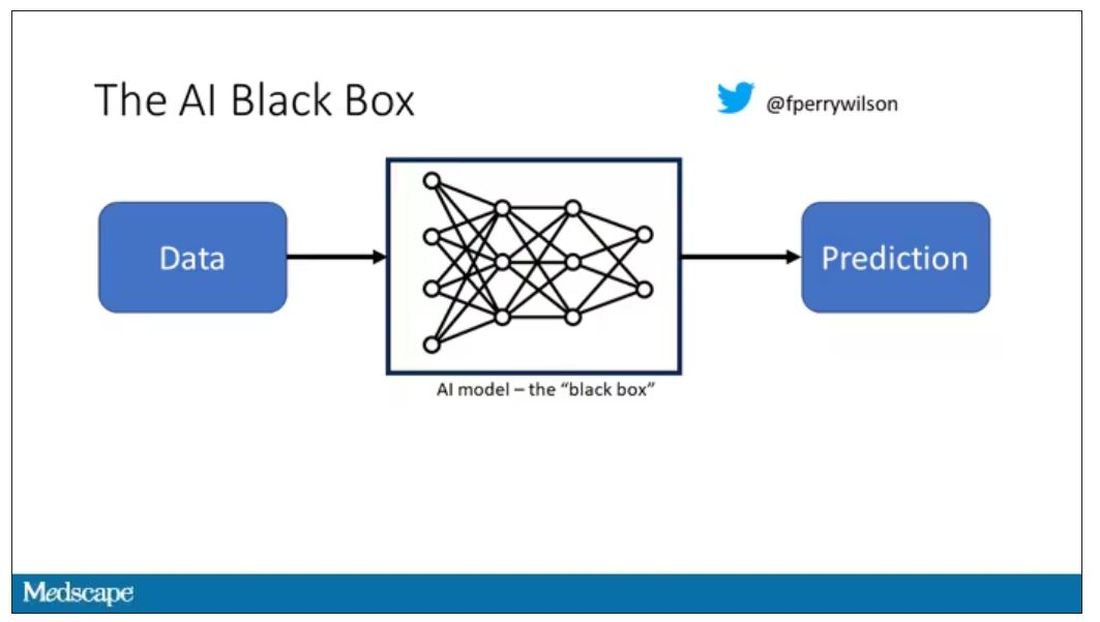

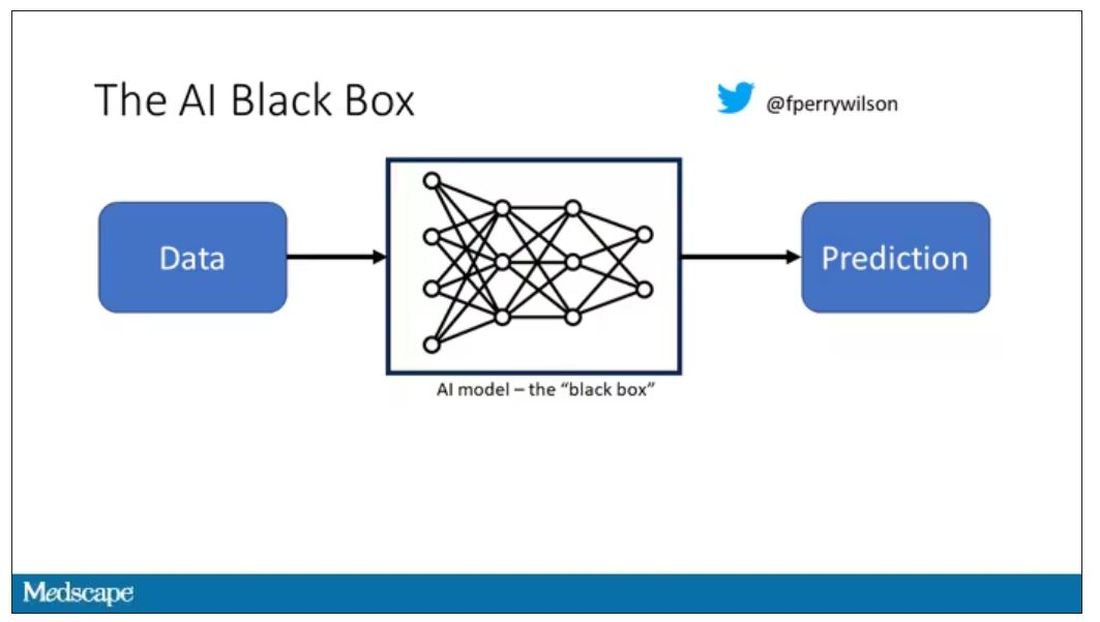

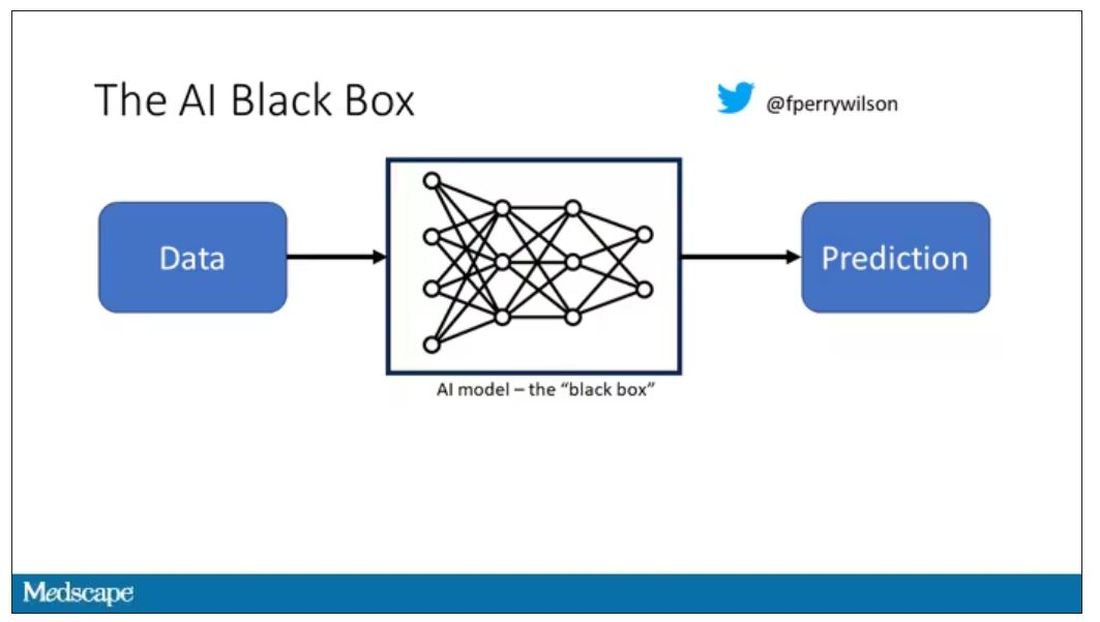

But looking at medicine today, there are essentially two places where I think we will see, in retrospect, that we were at a fundamental turning point. One is artificial intelligence, which gets so much attention and hype that I will simply say yes, this will change things, stay tuned.

The other is a bit more obscure, but I suspect it may be just as impactful. That other thing is

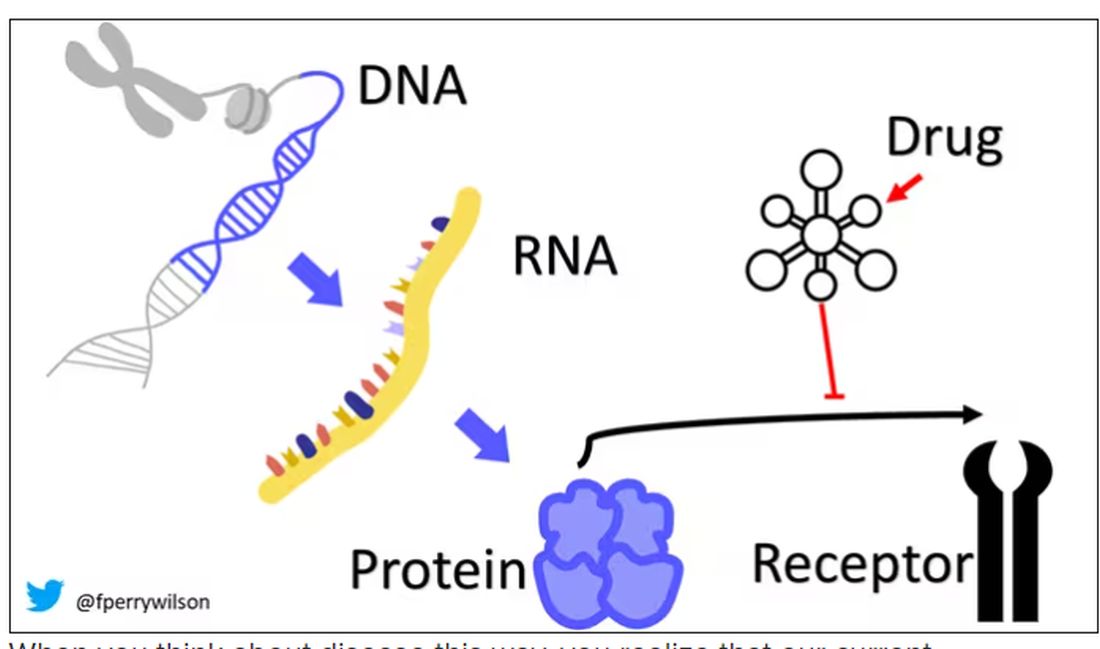

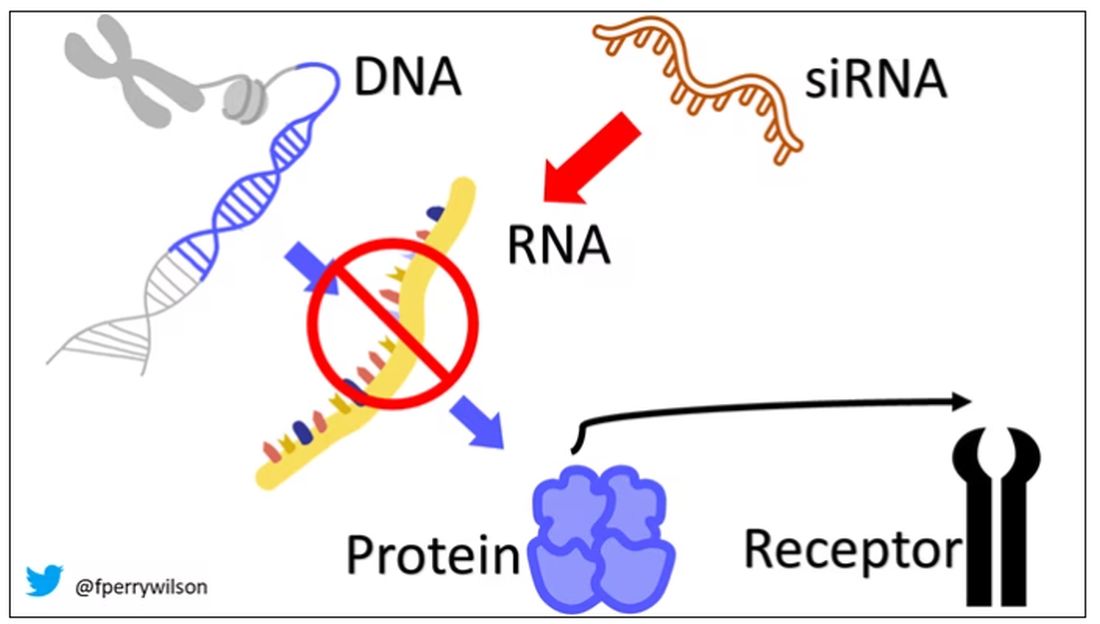

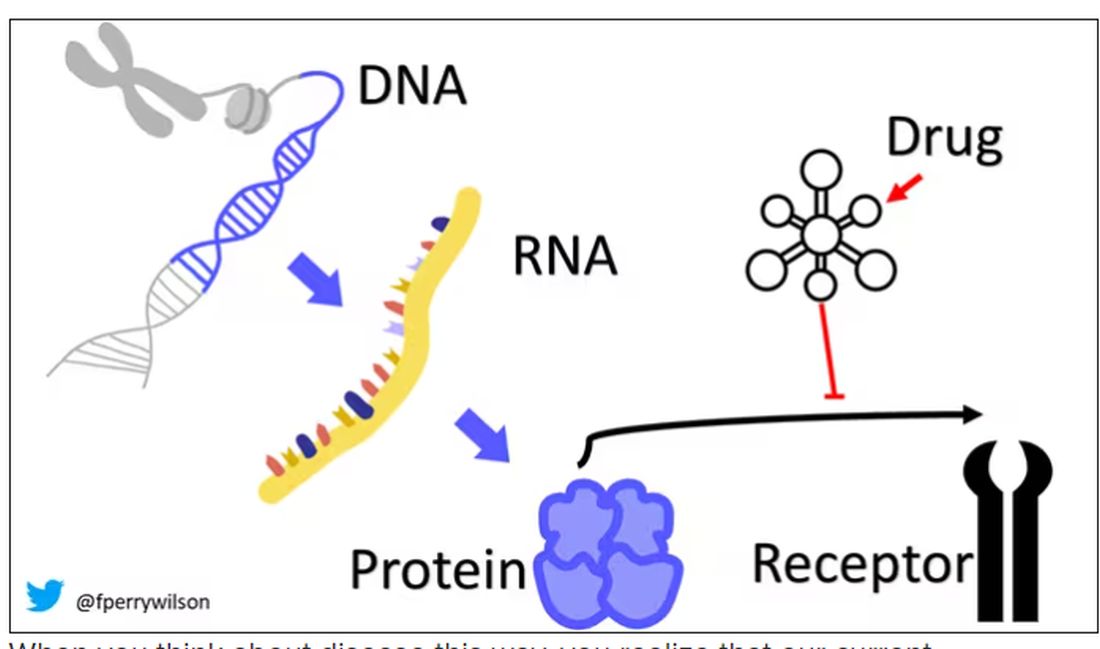

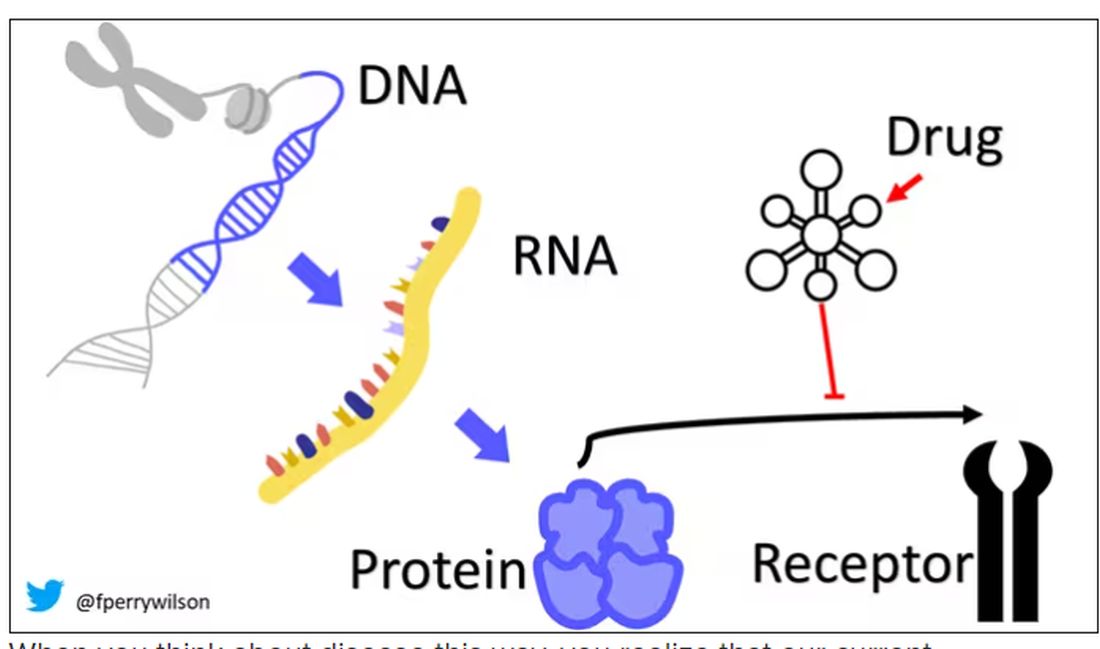

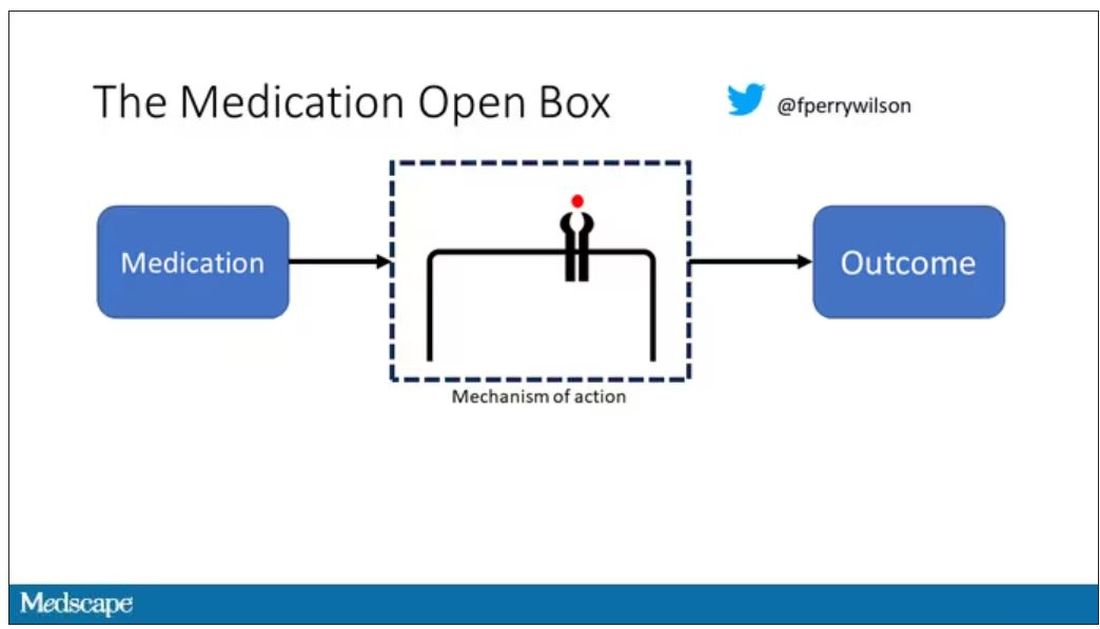

I want to start with the idea that many diseases are, fundamentally, a problem of proteins. In some cases, like hypercholesterolemia, the body produces too much protein; in others, like hemophilia, too little.

When you think about disease this way, you realize that our current medications take effect late in the disease game. We have these molecules that try to block a protein from its receptor, prevent a protein from cleaving another protein, or increase the rate that a protein is broken down. It’s all distal to the fundamental problem: the production of the bad protein in the first place.

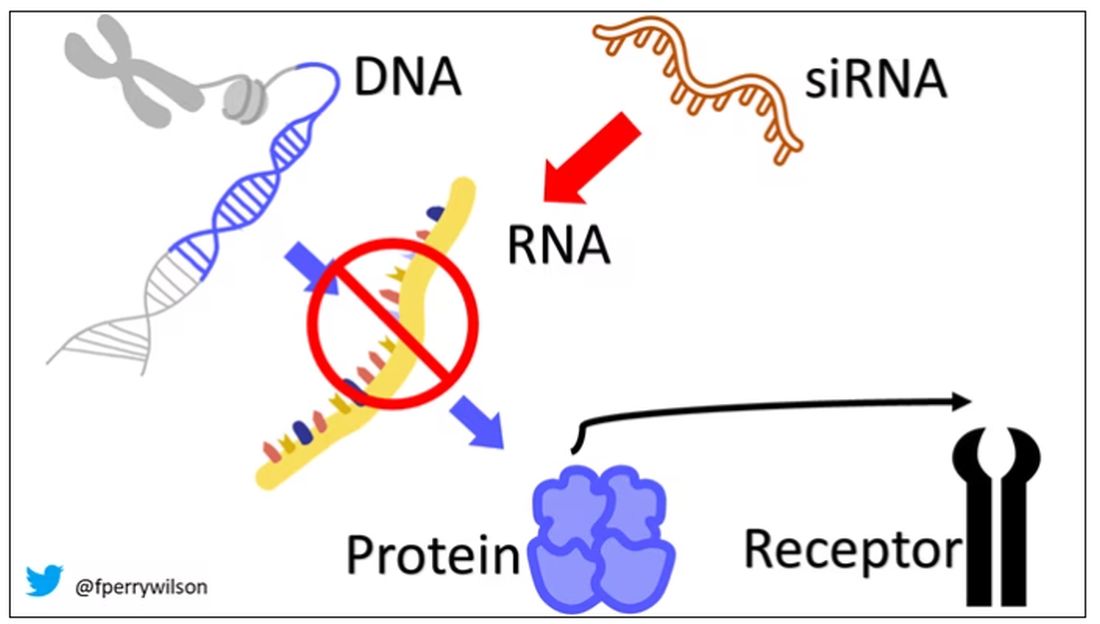

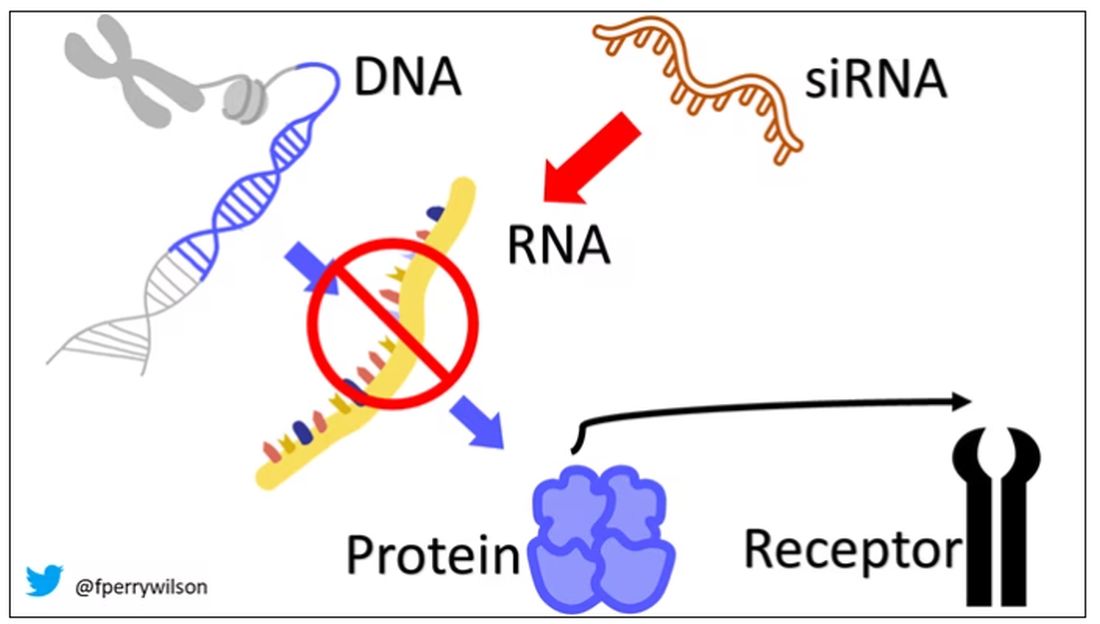

Enter small inhibitory RNAs, or siRNAs for short, discovered in 1998 by Andrew Fire and Craig Mello at UMass Worcester. The two won the Nobel prize in medicine just 8 years later; that’s a really short time, highlighting just how important this discovery was. In contrast, Karikó and Weissman won the Nobel for mRNA vaccines this year, after inventing them 18 years ago.

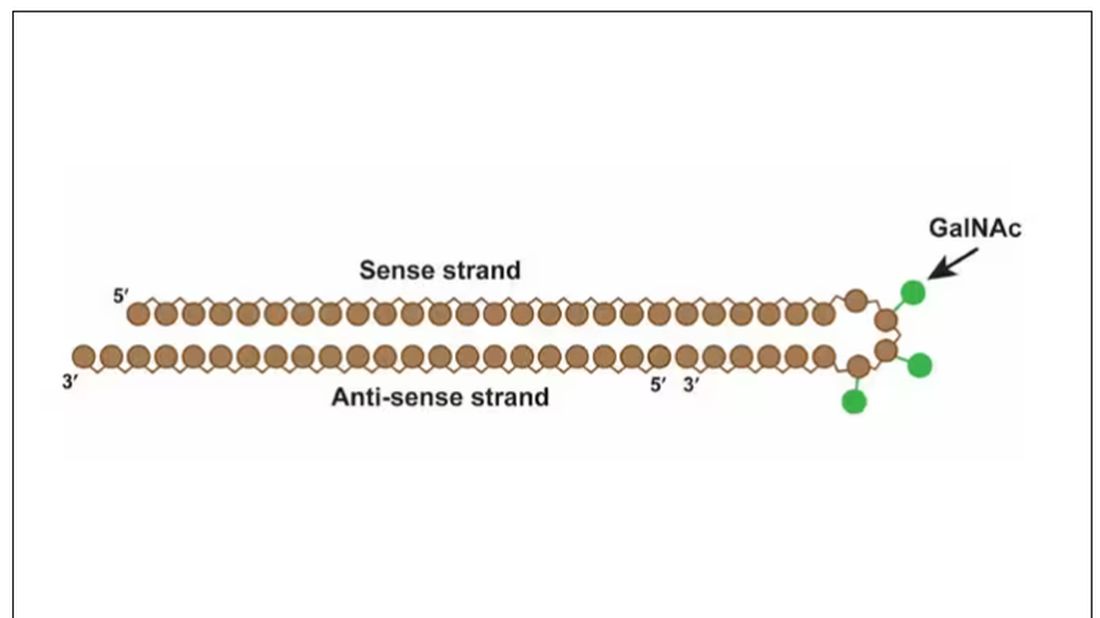

siRNAs are the body’s way of targeting proteins for destruction before they are ever created. About 20 base pairs long, siRNAs seek out a complementary target mRNA, attach to it, and call in a group of proteins to destroy it. With the target mRNA gone, no protein can be created.

You see where this is going, right? How does high cholesterol kill you? Proteins. How does Staphylococcus aureus kill you? Proteins. Even viruses can’t replicate if their RNA is prevented from being turned into proteins.

So, how do we use siRNAs? A new paper appearing in JAMA describes a fairly impressive use case.

The background here is that higher levels of lipoprotein(a), an LDL-like protein, are associated with cardiovascular disease, heart attack, and stroke. But unfortunately, statins really don’t have any effect on lipoprotein(a) levels. Neither does diet. Your lipoprotein(a) level seems to be more or less hard-coded genetically.

So, what if we stop the genetic machinery from working? Enter lepodisiran, a drug from Eli Lilly. Unlike so many other medications, which are usually found in nature, purified, and synthesized, lepodisiran was created from scratch. It’s not hard. Thanks to the Human Genome Project, we know the genetic code for lipoprotein(a), so inventing an siRNA to target it specifically is trivial. That’s one of the key features of siRNA – you don’t have to find a chemical that binds strongly to some protein receptor, and worry about the off-target effects and all that nonsense. You just pick a protein you want to suppress and you suppress it.

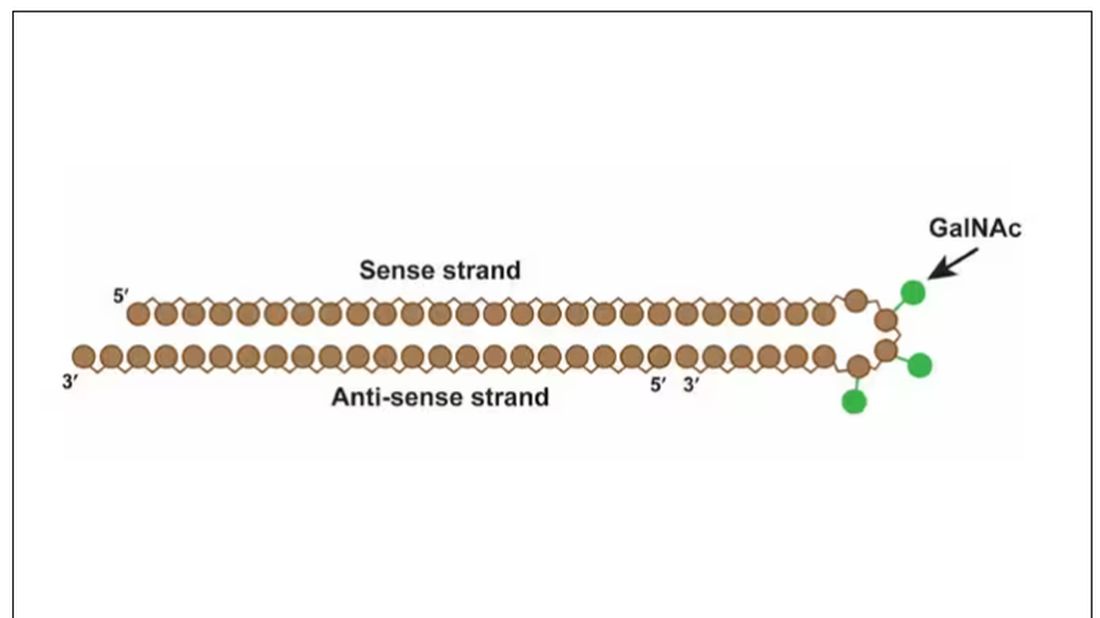

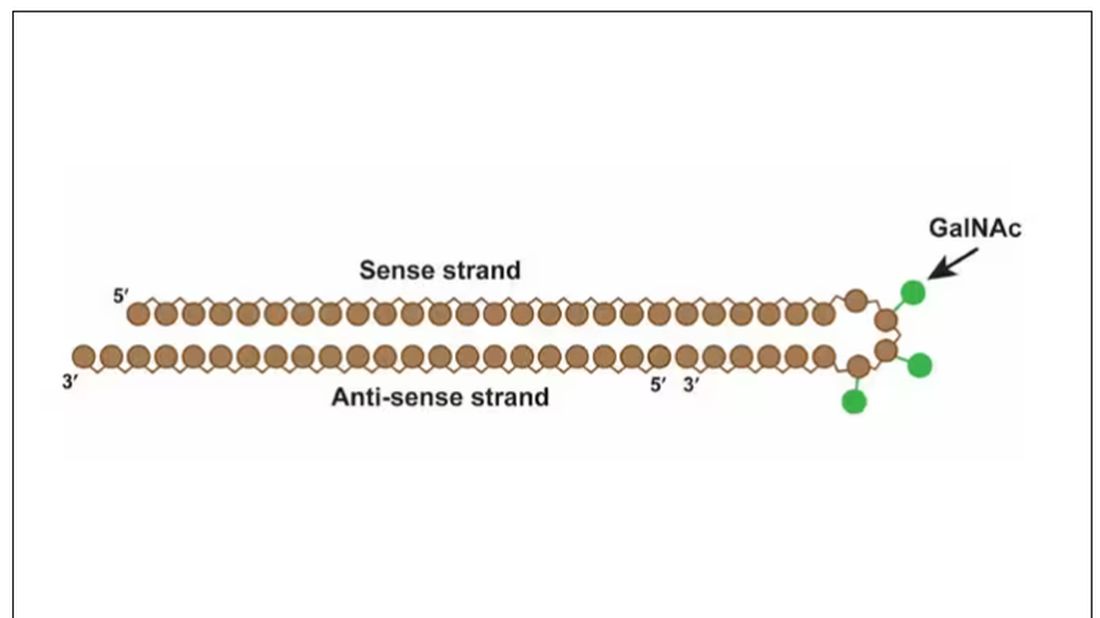

Okay, it’s not that simple. siRNA is broken down very quickly by the body, so it needs to be targeted to the organ of interest – in this case, the liver, since that is where lipoprotein(a) is synthesized. Lepodisiran is targeted to the liver by this special targeting label here.

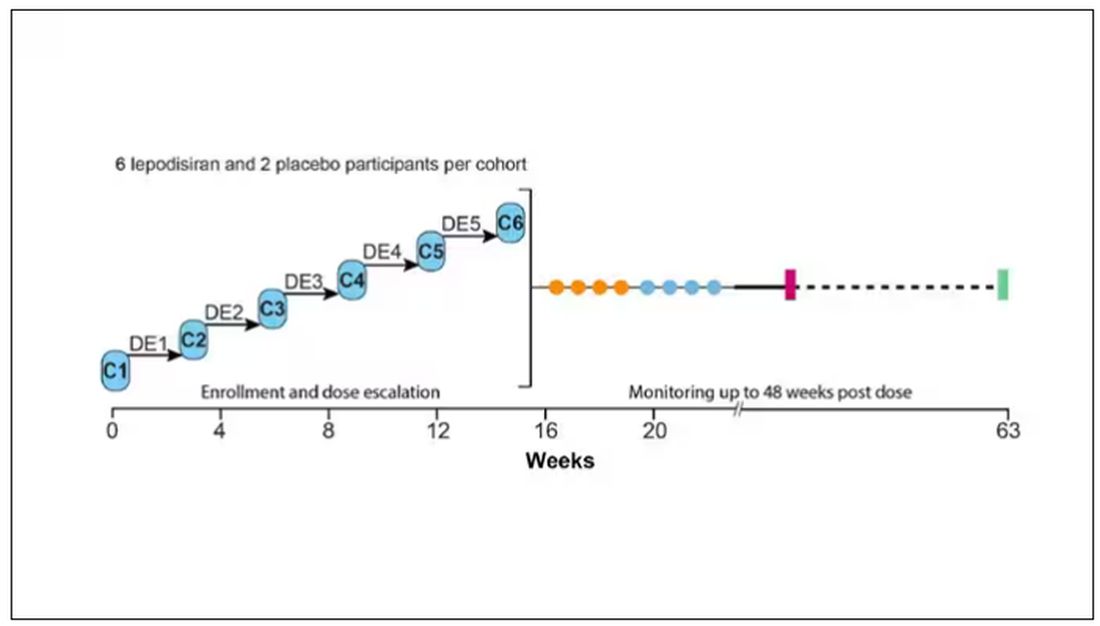

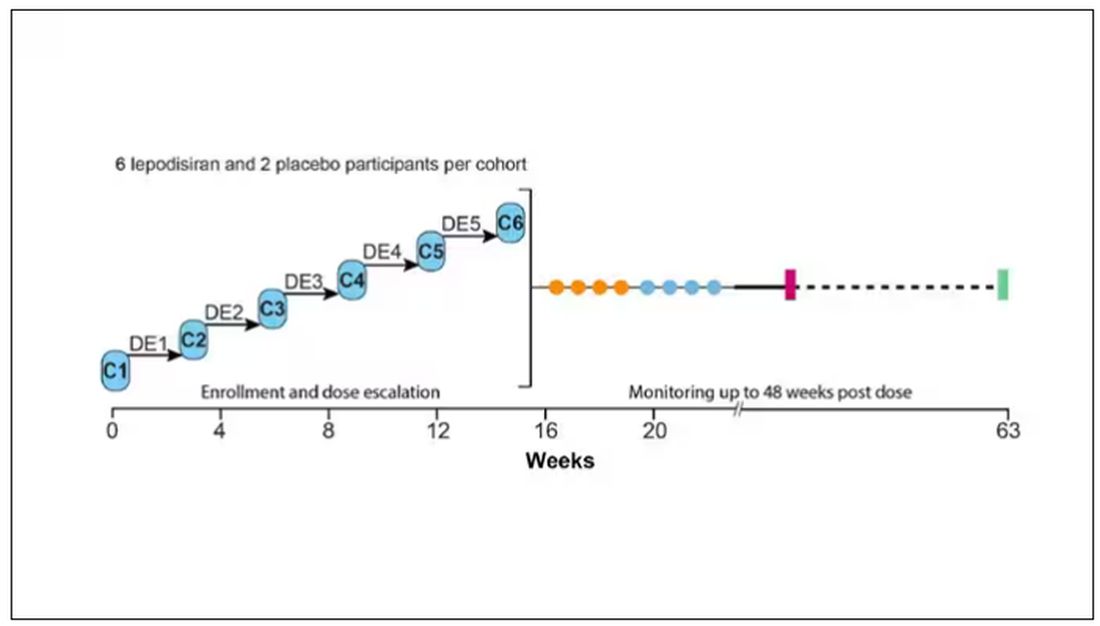

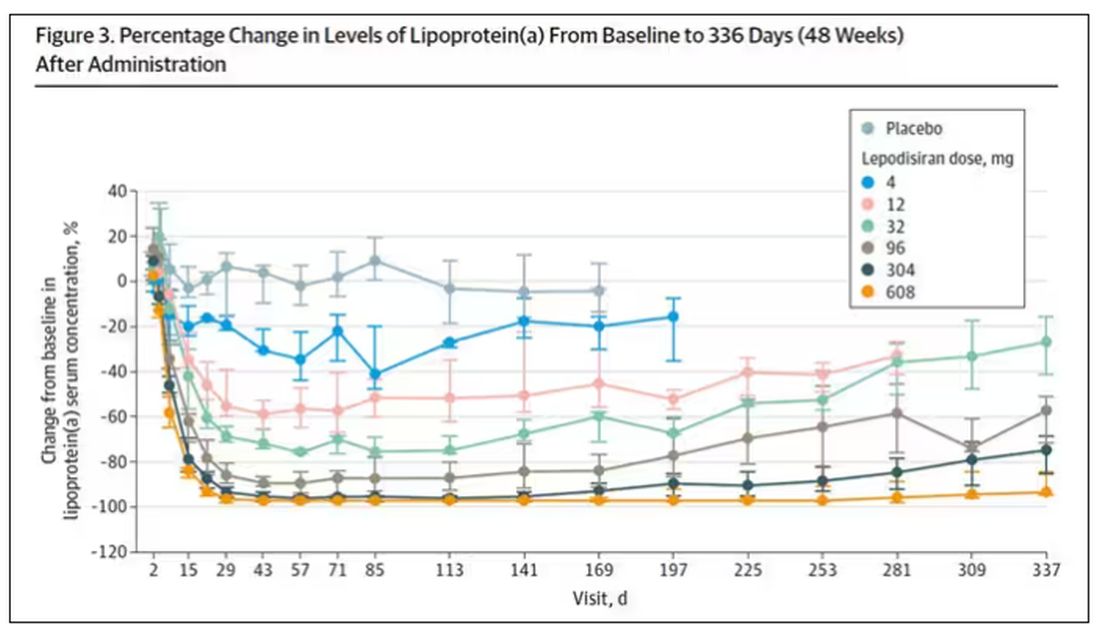

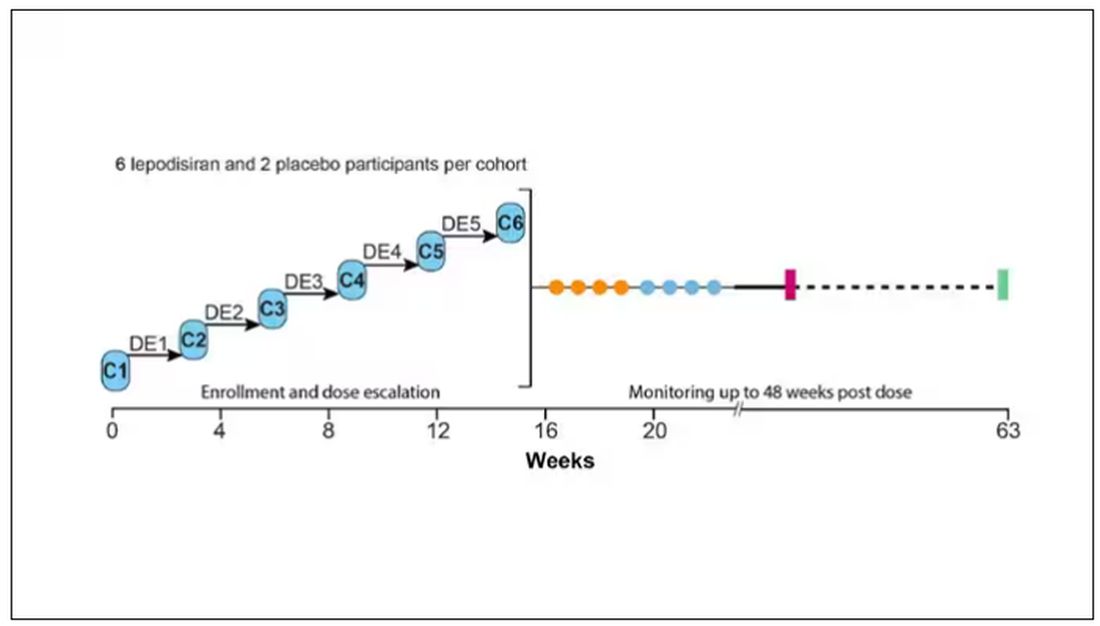

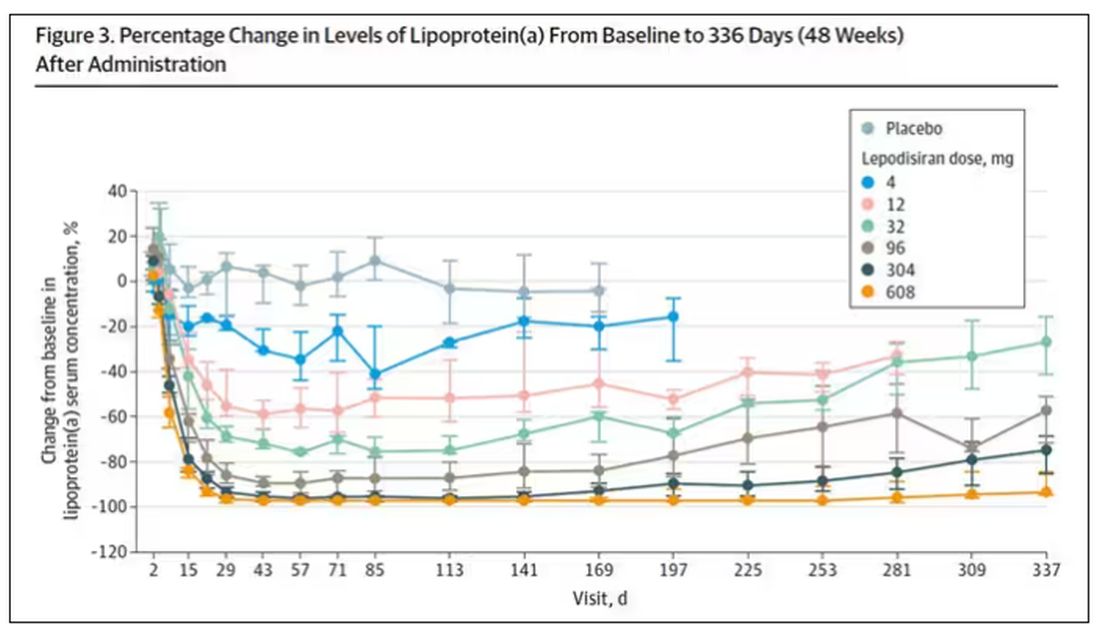

The report is a standard dose-escalation trial. Six patients, all with elevated lipoprotein(a) levels, were started with a 4-mg dose (two additional individuals got placebo). They were intensely monitored, spending 3 days in a research unit for multiple blood draws followed by weekly, and then biweekly outpatient visits. Once they had done well, the next group of six people received a higher dose (two more got placebo), and the process was repeated – six times total – until the highest dose, 608 mg, was reached.

This is an injection, of course; siRNA wouldn’t withstand the harshness of the digestive system. And it’s only one injection. You can see from the blood concentration curves that within about 48 hours, circulating lepodisiran was not detectable.

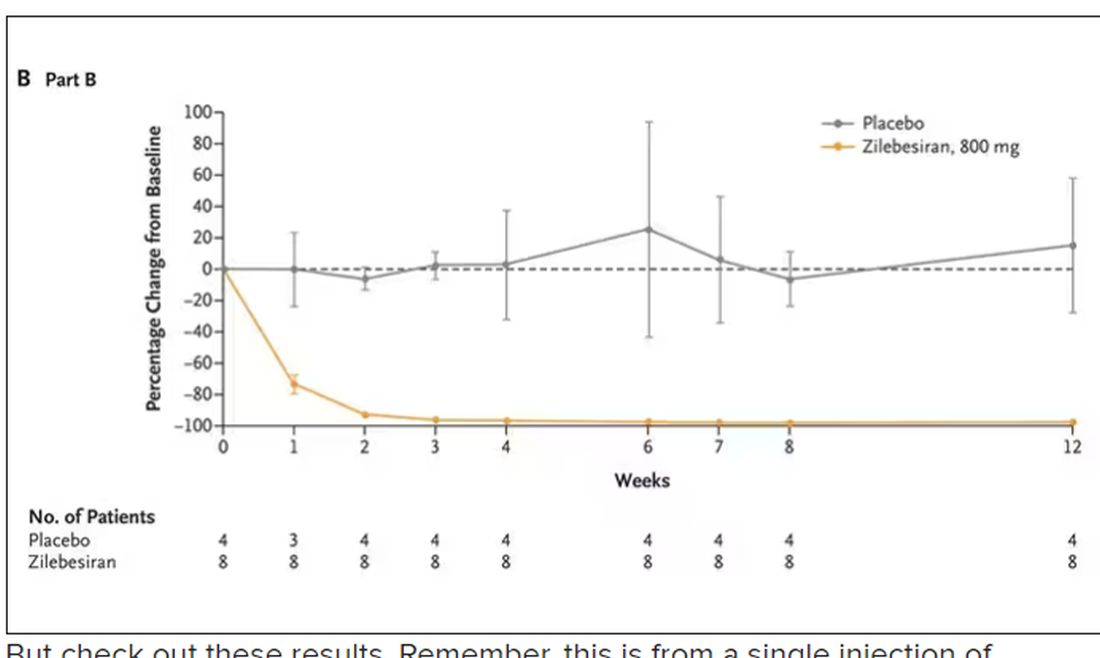

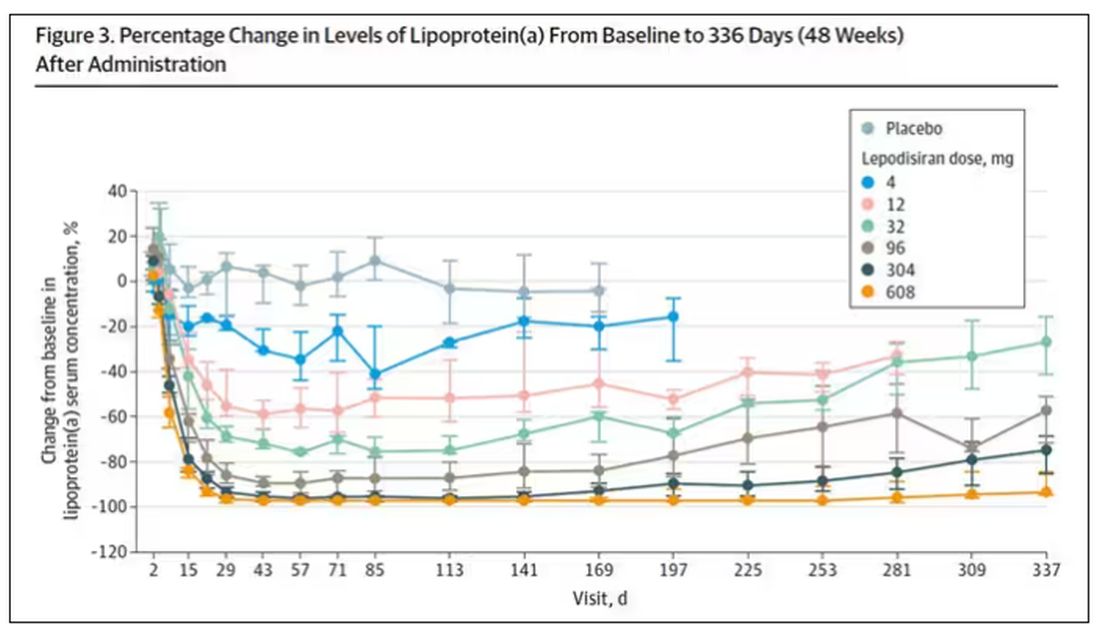

But check out these results. Remember, this is from a single injection of lepodisiran.

Lipoprotein(a) levels start to drop within a week of administration, and they stay down. In the higher-dose groups, levels are nearly undetectable a year after that injection.

It was this graph that made me sit back and think that there might be something new under the sun. A single injection that can suppress protein synthesis for an entire year? If it really works, it changes the game.

Of course, this study wasn’t powered to look at important outcomes like heart attacks and strokes. It was primarily designed to assess safety, and the drug was pretty well tolerated, with similar rates of adverse events in the drug and placebo groups.

As crazy as it sounds, the real concern here might be that this drug is too good; is it safe to drop your lipoprotein(a) levels to zero for a year? I don’t know. But lower doses don’t have quite as strong an effect.

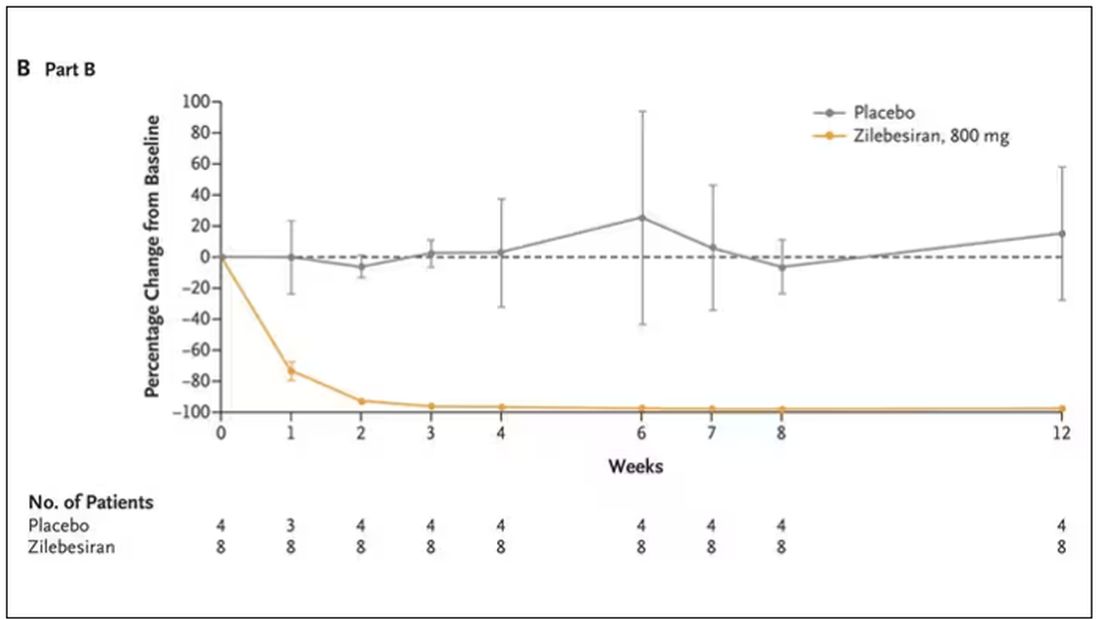

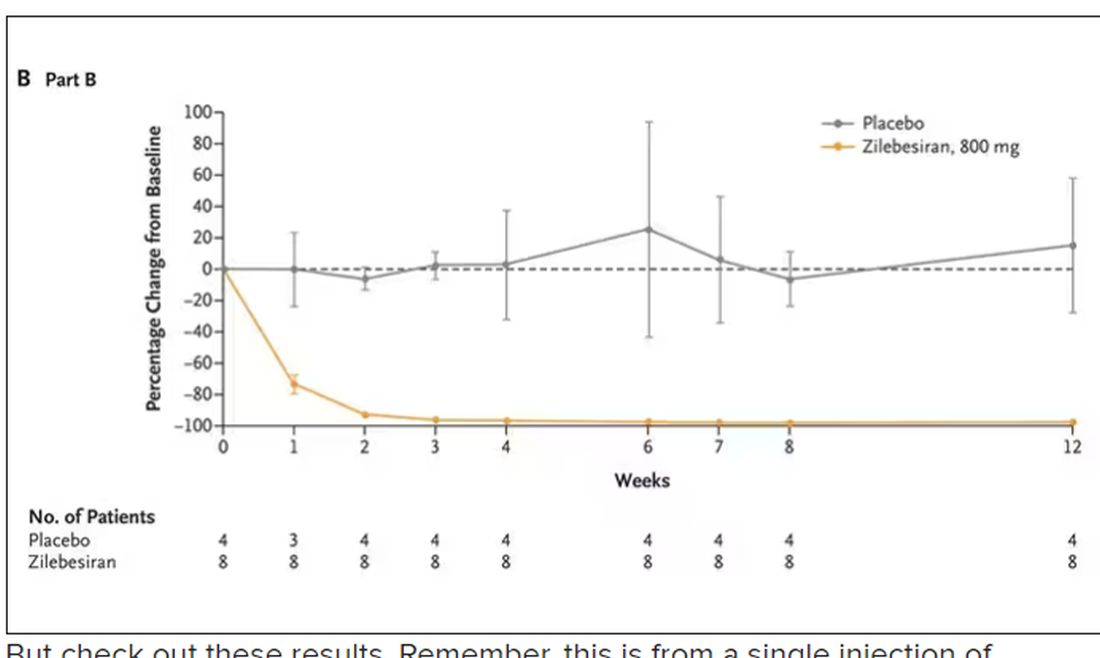

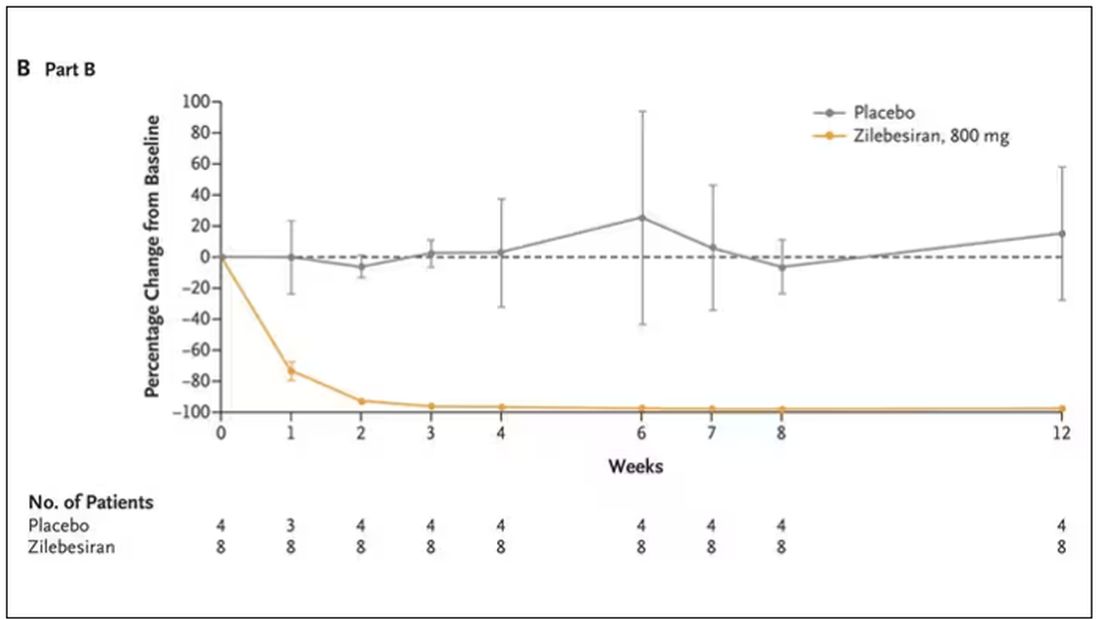

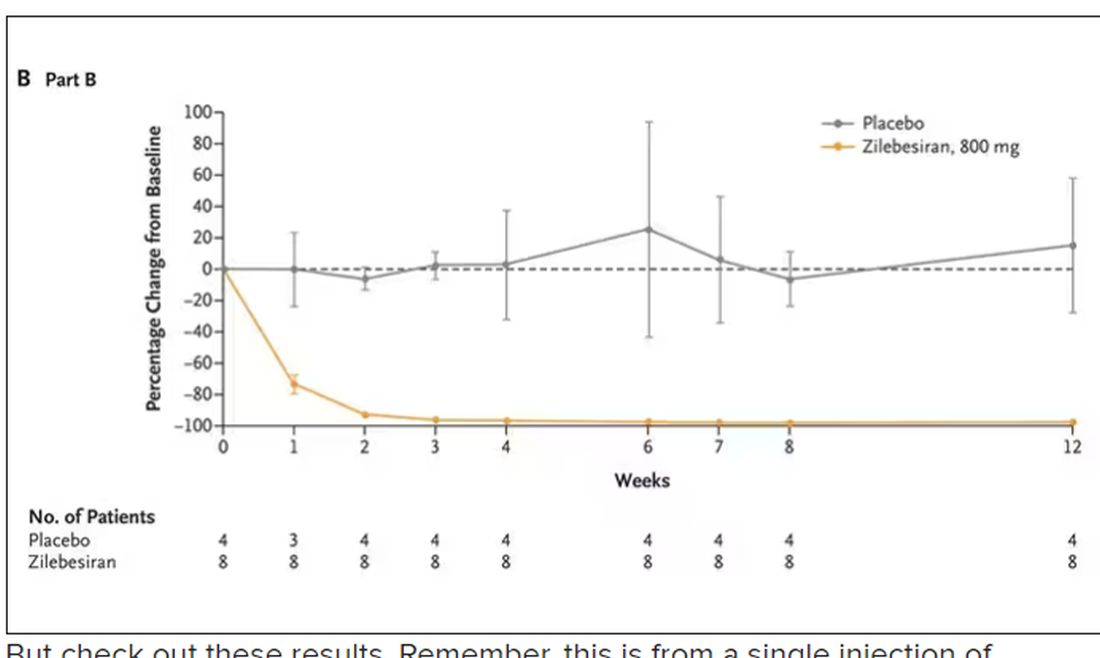

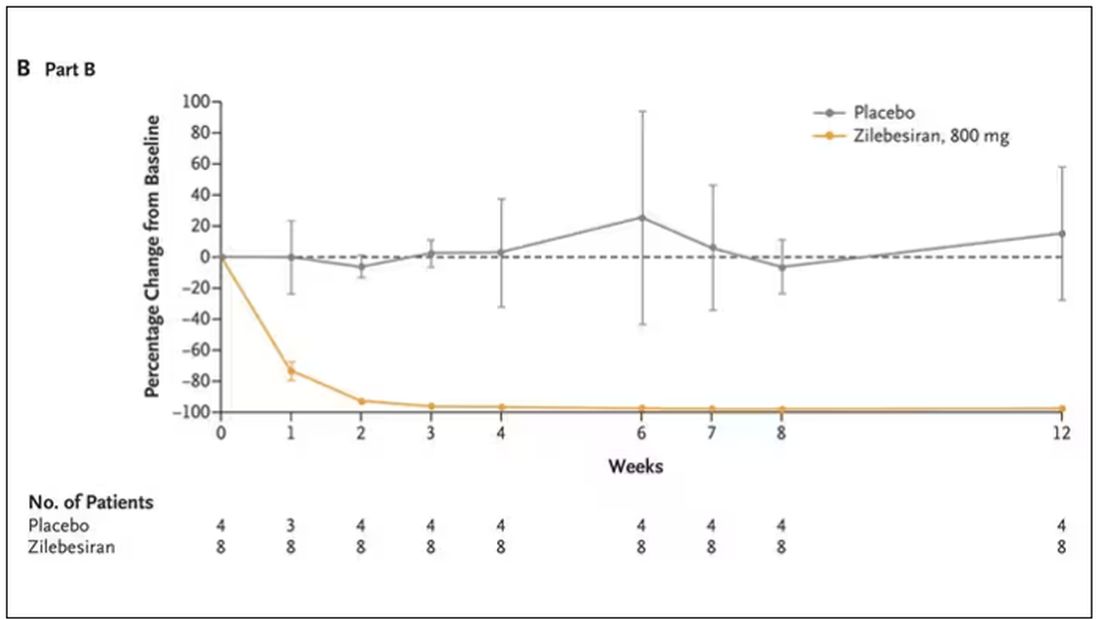

Trust me, these drugs are going to change things. They already are. In July, The New England Journal of Medicine published a study of zilebesiran, an siRNA that inhibits the production of angiotensinogen, to control blood pressure. Similar story: One injection led to a basically complete suppression of angiotensinogen and a sustained decrease in blood pressure.

I’m not exaggerating when I say that there may come a time when you go to your doctor once a year, get your RNA shots, and don’t have to take any other medication from that point on. And that time may be, like, 5 years from now. It’s wild.

Seems to me that that rapid Nobel Prize was very well deserved.

Dr. F. Perry Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships. This transcript has been edited for clarity.

A version of this article appeared on Medscape.com.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I’m Dr F. Perry Wilson of the Yale School of Medicine.

Every once in a while, medicine changes in a fundamental way, and we may not realize it while it’s happening. I wasn’t around in 1928 when Fleming discovered penicillin; or in 1953 when Watson, Crick, and Franklin characterized the double-helical structure of DNA.

But looking at medicine today, there are essentially two places where I think we will see, in retrospect, that we were at a fundamental turning point. One is artificial intelligence, which gets so much attention and hype that I will simply say yes, this will change things, stay tuned.

The other is a bit more obscure, but I suspect it may be just as impactful. That other thing is

I want to start with the idea that many diseases are, fundamentally, a problem of proteins. In some cases, like hypercholesterolemia, the body produces too much protein; in others, like hemophilia, too little.

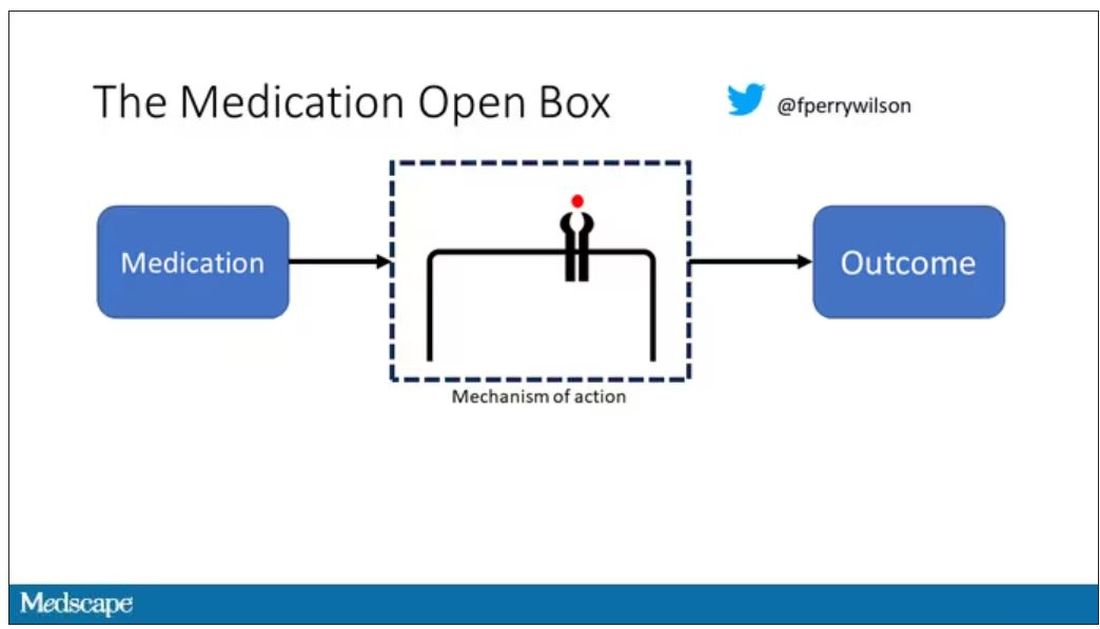

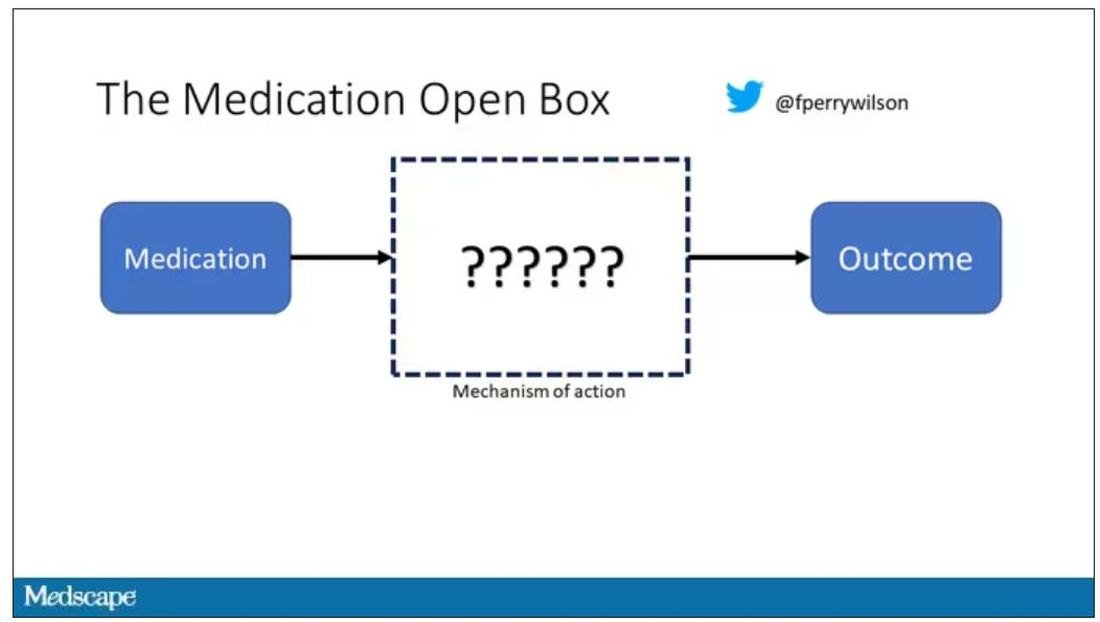

When you think about disease this way, you realize that our current medications take effect late in the disease game. We have these molecules that try to block a protein from its receptor, prevent a protein from cleaving another protein, or increase the rate that a protein is broken down. It’s all distal to the fundamental problem: the production of the bad protein in the first place.

Enter small inhibitory RNAs, or siRNAs for short, discovered in 1998 by Andrew Fire and Craig Mello at UMass Worcester. The two won the Nobel prize in medicine just 8 years later; that’s a really short time, highlighting just how important this discovery was. In contrast, Karikó and Weissman won the Nobel for mRNA vaccines this year, after inventing them 18 years ago.

siRNAs are the body’s way of targeting proteins for destruction before they are ever created. About 20 base pairs long, siRNAs seek out a complementary target mRNA, attach to it, and call in a group of proteins to destroy it. With the target mRNA gone, no protein can be created.

You see where this is going, right? How does high cholesterol kill you? Proteins. How does Staphylococcus aureus kill you? Proteins. Even viruses can’t replicate if their RNA is prevented from being turned into proteins.

So, how do we use siRNAs? A new paper appearing in JAMA describes a fairly impressive use case.

The background here is that higher levels of lipoprotein(a), an LDL-like protein, are associated with cardiovascular disease, heart attack, and stroke. But unfortunately, statins really don’t have any effect on lipoprotein(a) levels. Neither does diet. Your lipoprotein(a) level seems to be more or less hard-coded genetically.

So, what if we stop the genetic machinery from working? Enter lepodisiran, a drug from Eli Lilly. Unlike so many other medications, which are usually found in nature, purified, and synthesized, lepodisiran was created from scratch. It’s not hard. Thanks to the Human Genome Project, we know the genetic code for lipoprotein(a), so inventing an siRNA to target it specifically is trivial. That’s one of the key features of siRNA – you don’t have to find a chemical that binds strongly to some protein receptor, and worry about the off-target effects and all that nonsense. You just pick a protein you want to suppress and you suppress it.

Okay, it’s not that simple. siRNA is broken down very quickly by the body, so it needs to be targeted to the organ of interest – in this case, the liver, since that is where lipoprotein(a) is synthesized. Lepodisiran is targeted to the liver by this special targeting label here.

The report is a standard dose-escalation trial. Six patients, all with elevated lipoprotein(a) levels, were started with a 4-mg dose (two additional individuals got placebo). They were intensely monitored, spending 3 days in a research unit for multiple blood draws followed by weekly, and then biweekly outpatient visits. Once they had done well, the next group of six people received a higher dose (two more got placebo), and the process was repeated – six times total – until the highest dose, 608 mg, was reached.

This is an injection, of course; siRNA wouldn’t withstand the harshness of the digestive system. And it’s only one injection. You can see from the blood concentration curves that within about 48 hours, circulating lepodisiran was not detectable.

But check out these results. Remember, this is from a single injection of lepodisiran.

Lipoprotein(a) levels start to drop within a week of administration, and they stay down. In the higher-dose groups, levels are nearly undetectable a year after that injection.

It was this graph that made me sit back and think that there might be something new under the sun. A single injection that can suppress protein synthesis for an entire year? If it really works, it changes the game.

Of course, this study wasn’t powered to look at important outcomes like heart attacks and strokes. It was primarily designed to assess safety, and the drug was pretty well tolerated, with similar rates of adverse events in the drug and placebo groups.

As crazy as it sounds, the real concern here might be that this drug is too good; is it safe to drop your lipoprotein(a) levels to zero for a year? I don’t know. But lower doses don’t have quite as strong an effect.

Trust me, these drugs are going to change things. They already are. In July, The New England Journal of Medicine published a study of zilebesiran, an siRNA that inhibits the production of angiotensinogen, to control blood pressure. Similar story: One injection led to a basically complete suppression of angiotensinogen and a sustained decrease in blood pressure.

I’m not exaggerating when I say that there may come a time when you go to your doctor once a year, get your RNA shots, and don’t have to take any other medication from that point on. And that time may be, like, 5 years from now. It’s wild.

Seems to me that that rapid Nobel Prize was very well deserved.

Dr. F. Perry Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships. This transcript has been edited for clarity.

A version of this article appeared on Medscape.com.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I’m Dr F. Perry Wilson of the Yale School of Medicine.

Every once in a while, medicine changes in a fundamental way, and we may not realize it while it’s happening. I wasn’t around in 1928 when Fleming discovered penicillin; or in 1953 when Watson, Crick, and Franklin characterized the double-helical structure of DNA.

But looking at medicine today, there are essentially two places where I think we will see, in retrospect, that we were at a fundamental turning point. One is artificial intelligence, which gets so much attention and hype that I will simply say yes, this will change things, stay tuned.

The other is a bit more obscure, but I suspect it may be just as impactful. That other thing is

I want to start with the idea that many diseases are, fundamentally, a problem of proteins. In some cases, like hypercholesterolemia, the body produces too much protein; in others, like hemophilia, too little.

When you think about disease this way, you realize that our current medications take effect late in the disease game. We have these molecules that try to block a protein from its receptor, prevent a protein from cleaving another protein, or increase the rate that a protein is broken down. It’s all distal to the fundamental problem: the production of the bad protein in the first place.

Enter small inhibitory RNAs, or siRNAs for short, discovered in 1998 by Andrew Fire and Craig Mello at UMass Worcester. The two won the Nobel prize in medicine just 8 years later; that’s a really short time, highlighting just how important this discovery was. In contrast, Karikó and Weissman won the Nobel for mRNA vaccines this year, after inventing them 18 years ago.

siRNAs are the body’s way of targeting proteins for destruction before they are ever created. About 20 base pairs long, siRNAs seek out a complementary target mRNA, attach to it, and call in a group of proteins to destroy it. With the target mRNA gone, no protein can be created.

You see where this is going, right? How does high cholesterol kill you? Proteins. How does Staphylococcus aureus kill you? Proteins. Even viruses can’t replicate if their RNA is prevented from being turned into proteins.

So, how do we use siRNAs? A new paper appearing in JAMA describes a fairly impressive use case.

The background here is that higher levels of lipoprotein(a), an LDL-like protein, are associated with cardiovascular disease, heart attack, and stroke. But unfortunately, statins really don’t have any effect on lipoprotein(a) levels. Neither does diet. Your lipoprotein(a) level seems to be more or less hard-coded genetically.

So, what if we stop the genetic machinery from working? Enter lepodisiran, a drug from Eli Lilly. Unlike so many other medications, which are usually found in nature, purified, and synthesized, lepodisiran was created from scratch. It’s not hard. Thanks to the Human Genome Project, we know the genetic code for lipoprotein(a), so inventing an siRNA to target it specifically is trivial. That’s one of the key features of siRNA – you don’t have to find a chemical that binds strongly to some protein receptor, and worry about the off-target effects and all that nonsense. You just pick a protein you want to suppress and you suppress it.

Okay, it’s not that simple. siRNA is broken down very quickly by the body, so it needs to be targeted to the organ of interest – in this case, the liver, since that is where lipoprotein(a) is synthesized. Lepodisiran is targeted to the liver by this special targeting label here.

The report is a standard dose-escalation trial. Six patients, all with elevated lipoprotein(a) levels, were started with a 4-mg dose (two additional individuals got placebo). They were intensely monitored, spending 3 days in a research unit for multiple blood draws followed by weekly, and then biweekly outpatient visits. Once they had done well, the next group of six people received a higher dose (two more got placebo), and the process was repeated – six times total – until the highest dose, 608 mg, was reached.

This is an injection, of course; siRNA wouldn’t withstand the harshness of the digestive system. And it’s only one injection. You can see from the blood concentration curves that within about 48 hours, circulating lepodisiran was not detectable.

But check out these results. Remember, this is from a single injection of lepodisiran.

Lipoprotein(a) levels start to drop within a week of administration, and they stay down. In the higher-dose groups, levels are nearly undetectable a year after that injection.

It was this graph that made me sit back and think that there might be something new under the sun. A single injection that can suppress protein synthesis for an entire year? If it really works, it changes the game.

Of course, this study wasn’t powered to look at important outcomes like heart attacks and strokes. It was primarily designed to assess safety, and the drug was pretty well tolerated, with similar rates of adverse events in the drug and placebo groups.

As crazy as it sounds, the real concern here might be that this drug is too good; is it safe to drop your lipoprotein(a) levels to zero for a year? I don’t know. But lower doses don’t have quite as strong an effect.

Trust me, these drugs are going to change things. They already are. In July, The New England Journal of Medicine published a study of zilebesiran, an siRNA that inhibits the production of angiotensinogen, to control blood pressure. Similar story: One injection led to a basically complete suppression of angiotensinogen and a sustained decrease in blood pressure.

I’m not exaggerating when I say that there may come a time when you go to your doctor once a year, get your RNA shots, and don’t have to take any other medication from that point on. And that time may be, like, 5 years from now. It’s wild.

Seems to me that that rapid Nobel Prize was very well deserved.

Dr. F. Perry Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships. This transcript has been edited for clarity.

A version of this article appeared on Medscape.com.

Even one night in the ED raises risk for death

This transcript has been edited for clarity.

As a consulting nephrologist, I go all over the hospital. Medicine floors, surgical floors, the ICU – I’ve even done consults in the operating room. And more and more, I do consults in the emergency department.

The reason I am doing more consults in the ED is not because the ED docs are getting gun shy with creatinine increases; it’s because patients are staying for extended periods in the ED despite being formally admitted to the hospital. It’s a phenomenon known as boarding, because there are simply not enough beds. You know the scene if you have ever been to a busy hospital: The ED is full to breaking, with patients on stretchers in hallways. It can often feel more like a warzone than a place for healing.

This is a huge problem.

The Joint Commission specifies that admitted patients should spend no more than 4 hours in the ED waiting for a bed in the hospital.

That is, based on what I’ve seen, hugely ambitious. But I should point out that I work in a hospital that runs near capacity all the time, and studies – from some of my Yale colleagues, actually – have shown that once hospital capacity exceeds 85%, boarding rates skyrocket.

I want to discuss some of the causes of extended boarding and some solutions. But before that, I should prove to you that this really matters, and for that we are going to dig in to a new study which suggests that ED boarding kills.

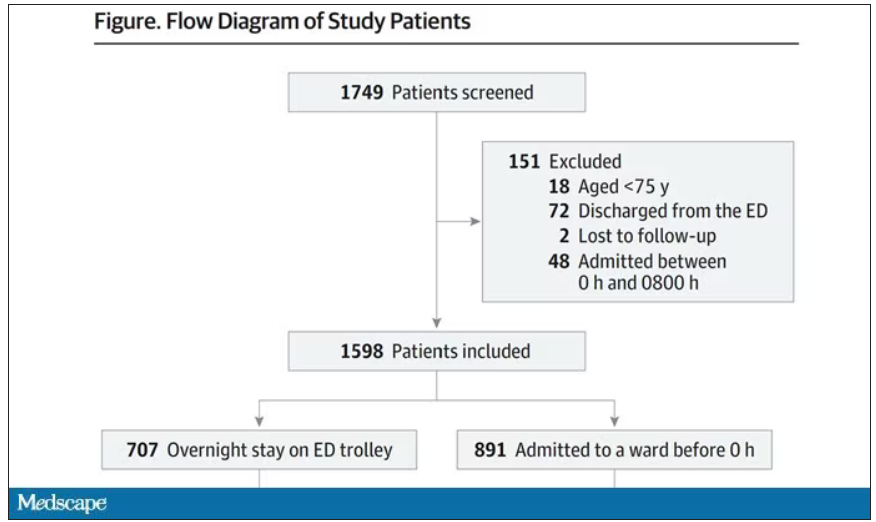

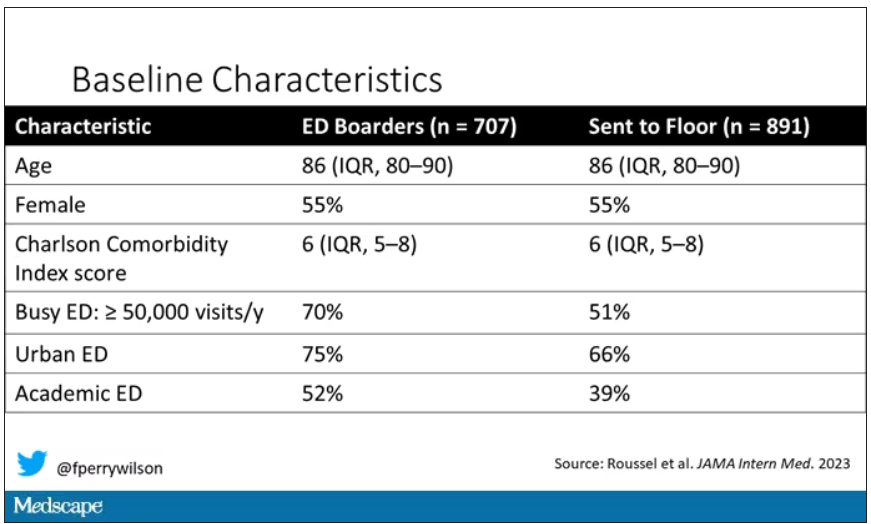

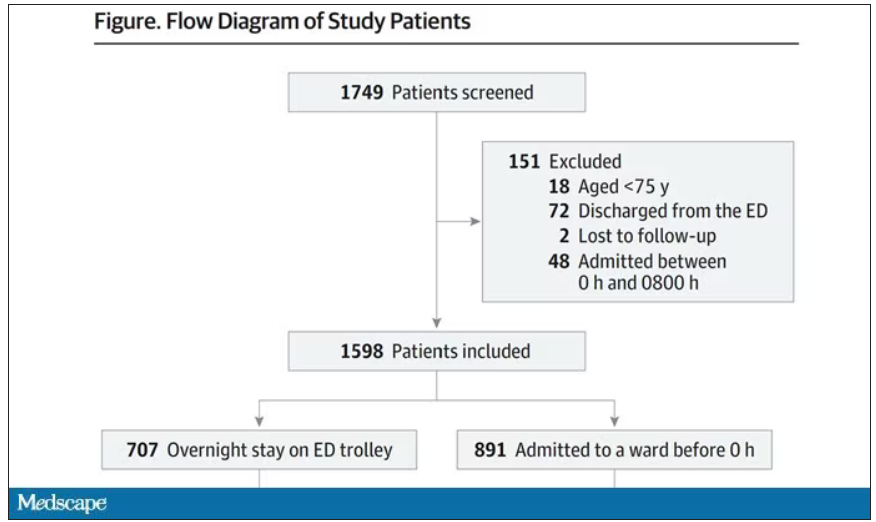

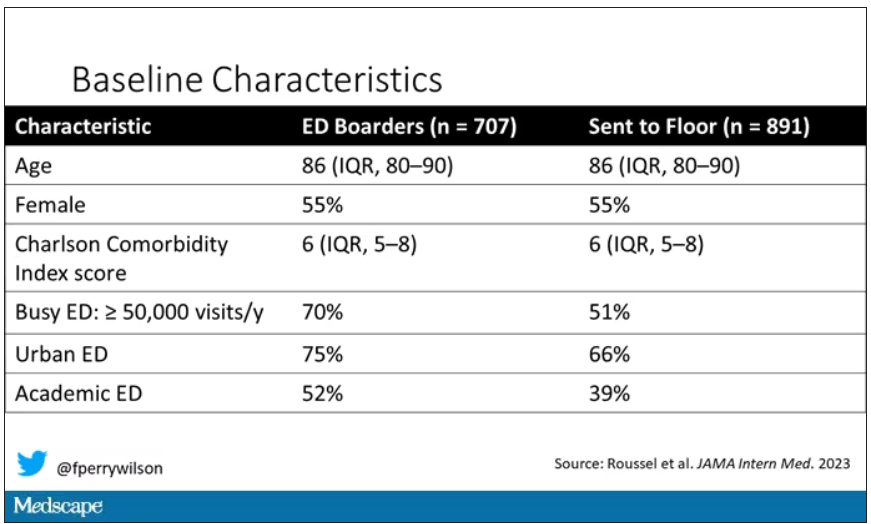

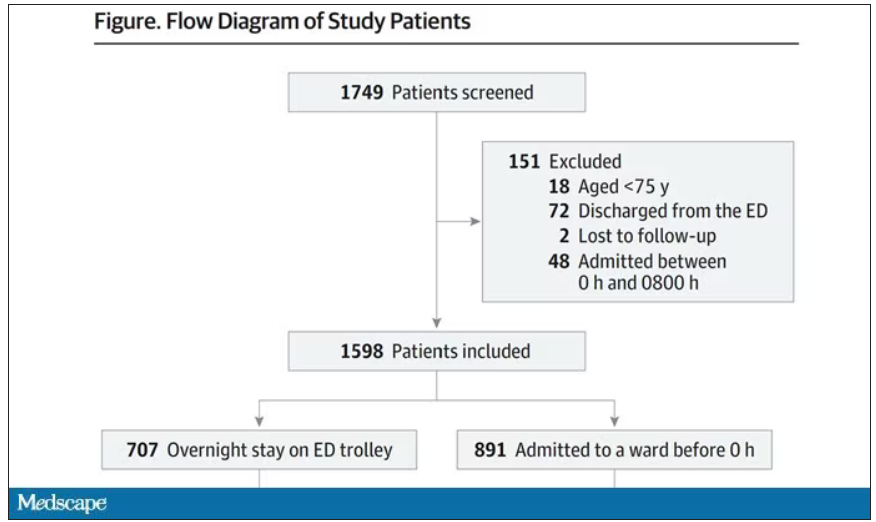

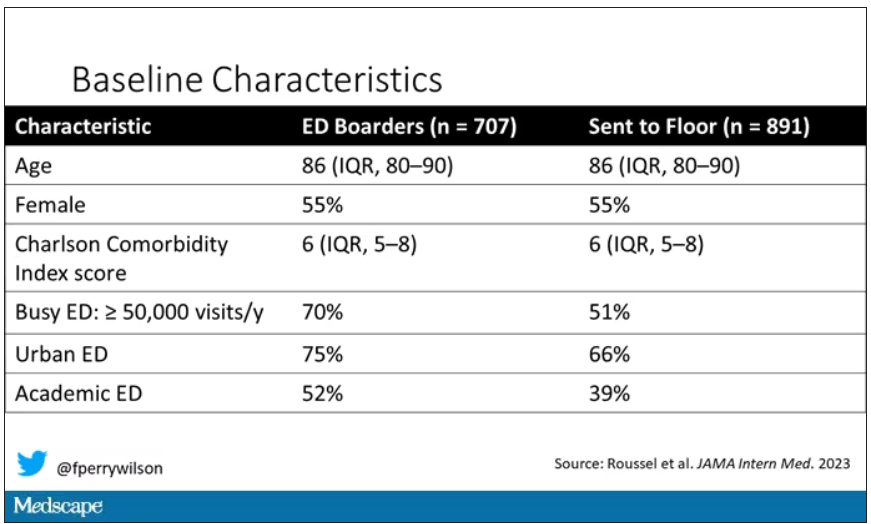

To put some hard numbers to the boarding problem, we turn to this paper out of France, appearing in JAMA Internal Medicine.

This is a unique study design. Basically, on a single day – Dec. 12, 2022 – researchers fanned out across France to 97 EDs and started counting patients. The study focused on those older than age 75 who were admitted to a hospital ward from the ED. The researchers then defined two groups: those who were sent up to the hospital floor before midnight, and those who spent at least from midnight until 8 AM in the ED (basically, people forced to sleep in the ED for a night). The middle-ground people who were sent up between midnight and 8 AM were excluded.