Possible mortality risk seen with tramadol in osteoarthritis

Tramadol appears to be associated with higher mortality risk among older patients with osteoarthritis when compared against common NSAIDs, according to findings from a study published online March 12 in JAMA.

The findings from the retrospective cohort study are worth noting despite their susceptibility to confounding by indication because “tramadol is a weak opioid agonist and has been considered a potential alternative to NSAIDs and traditional opioids because of its assumed relatively lower risk of serious cardiovascular and gastrointestinal adverse effects than NSAIDs, as well as a lower risk of addiction and respiratory depression compared with other opioids,” wrote Chao Zeng, MD, PhD, of Xiangya Hospital of Central South University, Changsha, China, and his coauthors.

The investigators analyzed data from a combined total of 88,902 individuals aged 50 years and older with knee, hip, or hand osteoarthritis who were seen during 2000-2015 and had visits recorded in the United Kingdom’s The Health Improvement Network (THIN) electronic medical records database. Participants were matched on sociodemographic and lifestyle factors, as well as osteoarthritis duration, comorbidities, other prescriptions, and health care utilization prior to the index date of the study.

Over 1 year of follow-up, researchers saw a 71% higher risk of all-cause mortality in patients taking tramadol than that seen in those taking naproxen, 88% higher than in those taking diclofenac, 70% higher than in those taking celecoxib, and about twice as high as in patients taking etoricoxib.

However, there was no significant difference in risk of all-cause mortality between tramadol and codeine, the researchers found.

The authors suggested that tramadol may have adverse effects on the neurologic system by inhibiting central serotonin and norepinephrine uptake, which could potentially lead to serotonin syndrome. They also speculated that it could increase the risk of postoperative delirium, cause fatal poisoning or respiratory depression if taken in conjunction with alcohol or other drugs, or increase the risk of hypoglycemia, hyponatremia, fractures, or falls.

The numbers of deaths from cardiovascular, gastrointestinal, infection, cancer, and respiratory diseases were all higher in the tramadol group, compared with patients taking NSAIDs, but the differences were not statistically significant because of the relatively small number of deaths, the authors said.

Overall, 44,451 patients were taking tramadol, 12,397 were taking naproxen, 6,512 were taking diclofenac, 5,674 were taking celecoxib, 2,946 were taking etoricoxib, and 16,922 were taking codeine.
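
The reported cohort sizes can be cross-checked with simple arithmetic. The short Python sketch below uses only the figures quoted above; the variable names are illustrative, and reading the equal totals as one comparator patient per tramadol patient is an inference from the numbers, not something the article states.

```python
# Cohort sizes as quoted in the article.
tramadol = 44_451
comparators = {
    "naproxen": 12_397,
    "diclofenac": 6_512,
    "celecoxib": 5_674,
    "etoricoxib": 2_946,
    "codeine": 16_922,
}

# Sum of the five comparator cohorts.
comparator_total = sum(comparators.values())

# Tramadol patients plus all comparator patients.
combined_total = tramadol + comparator_total

print(comparator_total)  # 44451, the same as the tramadol count
print(combined_total)    # 88902, the combined total reported for the study
```

The comparator cohorts add up to exactly the number of tramadol patients, and the two groups together give the 88,902 individuals cited for the analysis.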

Patients in the tramadol cohort were generally older and had a higher body mass index, a longer duration of osteoarthritis, a higher prevalence of comorbidities, higher health care utilization, and more prescriptions of other medications.

The authors noted that, while the patients from each medication cohort were matched on propensity score, the results were still susceptible to confounding by indication and should be interpreted with caution.

The study was supported by grants from the National Institute of Arthritis and Musculoskeletal and Skin Diseases and the National Natural Science Foundation of China. One author declared funding from the National Institute on Drug Abuse during the conduct of the study and grants from Optum Labs outside the study. No other conflicts of interest were declared.

SOURCE: Zeng C et al. JAMA. 2019;321:969-82.

FROM JAMA

Coagulation pathway may play role in IBD

Writing in Science Translational Medicine, researchers presented the findings of a transcriptome analysis of 1,800 intestinal biopsies from individuals with IBD across 14 different cohorts.

Their analysis revealed that the coagulation gene pathway is altered in a number of patients with active IBD and, in particular, among patients whose disease does not respond to anti–tumor necrosis factor (anti-TNF) therapy.

“Clinical studies have established that patients with IBD are at substantially increased risk for thrombotic events and those with active disease have abnormal blood coagulation parameters, but the function and mechanism remain unclear,” wrote Gerard E. Kaiko, PhD, from the University of Newcastle in Callaghan, Australia, and coauthors.

The analysis highlighted a particular component of the coagulation pathway – SERPINE1, which codes for the protein plasminogen activator inhibitor–1 (PAI-1) – whose expression was increased in colon biopsies taken from actively inflamed areas of disease, compared with biopsies of uninflamed areas, biopsies from patients in remission, or biopsies from individuals without IBD.

The increased expression of SERPINE1/PAI-1 was mostly within epithelial cells, which the authors said supported the hypothesis that the gene is a key player in the inflammation/epithelium interface in the disease.

Researchers also found that SERPINE1 expression correlated with disease severity, and it was consistently higher in patients who had failed to respond to anti-TNF therapy. They suggested that SERPINE1/PAI-1 activity could potentially address an unmet clinical need for objective measures of disease activity and function as a way to predict response to biologic therapy.

“Although biologic therapies with anti-TNF are now a mainstay for IBD therapy, up to 40% of patients are nonresponsive, and patients lose responsiveness over time,” they wrote. “Furthermore, because more therapeutic options become available in IBD, a predictive biomarker is needed for personalized treatment.”

The authors further explored the role of SERPINE1/PAI-1 in an experimental mouse model of IBD. They found that colonic expression of the gene was around sixfold higher in mice with chemically induced colonic injury and inflammation, compared with untreated controls.

Researchers noted that PAI-1’s function is to bind and inhibit the activity of tissue plasminogen activator, which is a protein involved in the breakdown of blood clots and is coded by the gene PLAT.

They screened for which cytokine pathways might regulate PAI-1, PLAT, and tissue plasminogen activator, and they found that, while none increased SERPINE1 expression, interleukin-17A did appear to increase the expression of PLAT, which raises the possibility that IL-17A could counteract the effects of PAI-1.

The study also found that, in the colon biopsies from individuals with active disease, there was an imbalance in the ratio of PAI-1 to tissue plasminogen activator such that these biopsies showed lower levels of active tissue plasminogen activator.

“Therefore, the potentially protective mechanism of elevation of tPA [tissue plasminogen activator] does not occur properly in patients with IBD,” they wrote.

The next step was to see whether inhibiting the activity of SERPINE1 had any effect. In a mouse model of chemically induced colitis, the authors saw that treatment with a SERPINE1 inhibitor was associated with reduced weight change, less mucosal damage, and reduced signs of inflammation, compared with untreated mice.

The study was supported by the Crohn’s & Colitis Foundation. Three authors were supported by grants from the National Health & Medical Research Council, one by the Cancer Institute NSW, one by an Alpha Omega Alpha – Carolyn L. Kuckein Student Research Fellowship, and two by the National Institutes of Health. Four authors have a patent pending related to PAI-1. Two authors declared advisory board positions with pharmaceutical companies, including the manufacturer of a product used in the study. Three authors are employees of Janssen R&D.

SOURCE: Kaiko GE et al. Sci. Transl. Med. 2019. doi: 10.1126/scitranslmed.aat0852.

FROM SCIENCE TRANSLATIONAL MEDICINE

Fluorouracil beats other actinic keratosis treatments in head-to-head trial

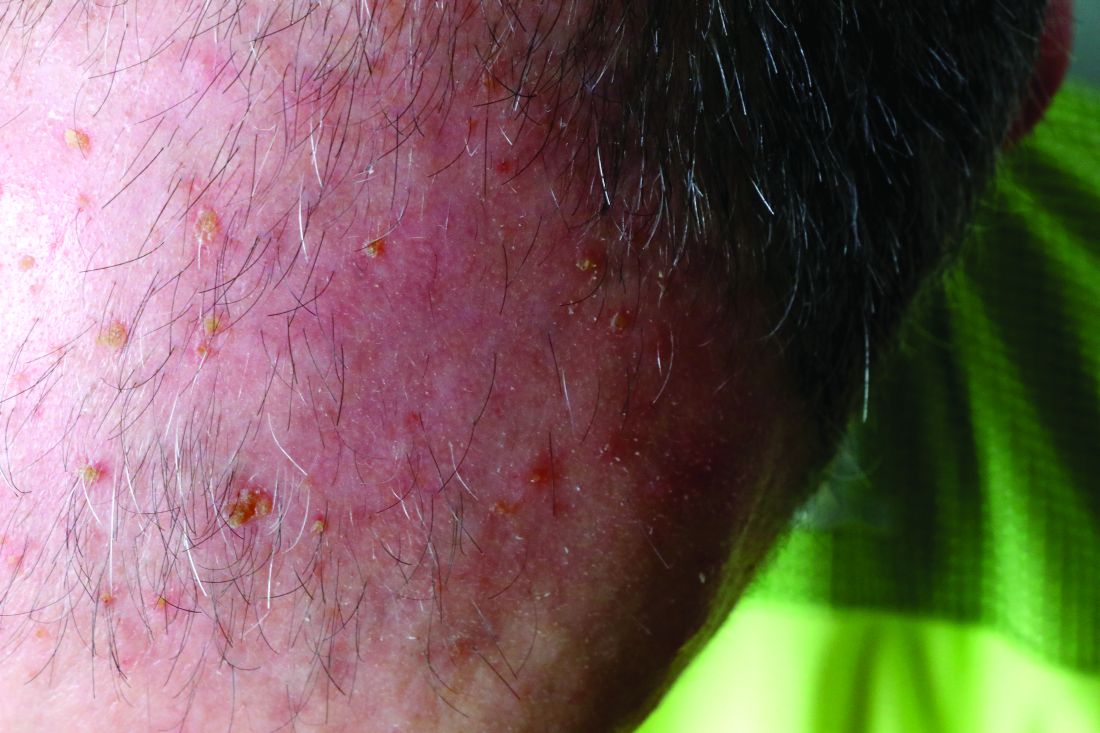

A head-to-head comparison of four commonly used field-directed treatments for actinic keratosis (AK) found that 5% fluorouracil cream was the most effective at reducing the size of lesions.

In a study published in the March 7 issue of the New England Journal of Medicine, researchers reported the outcomes of a multicenter, single-blind trial in 602 patients with five or more AK lesions in one continuous area on the head measuring 25-100 cm². Patients were randomized to treatment with either 5% fluorouracil cream, 5% imiquimod cream, methyl aminolevulinate photodynamic therapy (MAL-PDT), or 0.015% ingenol mebutate gel.

Overall, 74.7% of patients who received fluorouracil cream achieved treatment success – defined as at least a 75% reduction in lesion size at 12 months after the end of treatment – compared with 53.9% of patients treated with imiquimod, 37.7% of those treated with MAL-PDT, and 28.9% of those treated with ingenol mebutate. The differences between fluorouracil and the other treatments were significant.

Maud H.E. Jansen, MD, and Janneke P.H.M. Kessels, MD, of the department of dermatology at Maastricht (the Netherlands) University Medical Center and their coauthors pointed out that, while there was plenty of literature about different AK treatments, there were few head-to-head comparisons and many studies were underpowered or had different outcome measures.

Even when the analysis was restricted to patients with grade I or II lesions, fluorouracil remained the most effective treatment, with 75.3% of patients achieving treatment success, compared with 52.6% with imiquimod, 38.7% with MAL-PDT, and 30.2% with ingenol mebutate.

There were not enough patients with more severe grade III lesions to enable a separate analysis of their outcomes; 49 (7.9%) of patients in the study had at least one grade III lesion.

The authors noted that many previous studies had excluded patients with grade III lesions. “Exclusion of patients with grade III lesions was associated with slightly higher rates of success in the fluorouracil, MAL-PDT, and ingenol mebutate groups than the rates in the unrestricted analysis,” they wrote. The inclusion of patients with grade III AK lesions in this trial made it “more representative of patients seen in daily practice,” they added.

Treatment failure – less than 75% clearance of actinic keratosis at 3 months after the final treatment – was seen after one treatment cycle in 14.8% of patients treated with fluorouracil, 37.2% of patients on imiquimod, 34.6% of patients given photodynamic therapy, and 47.8% of patients on ingenol mebutate therapy.

All these patients were offered a second treatment cycle, but those treated with imiquimod, PDT, and ingenol mebutate were less likely to undergo a second treatment.

The authors said that the higher proportion of patients in the fluorouracil group who were willing to undergo a second round of therapy suggests they may have experienced less discomfort and inconvenience with the therapy to begin with, compared with those treated with the other regimens.

Full adherence to treatment was more common in the ingenol mebutate (98.7% of patients) and MAL-PDT (96.8%) groups, compared with the fluorouracil (88.7%) and imiquimod (88.2%) groups. However, patients in the fluorouracil group reported greater levels of patient satisfaction and improvements in health-related quality of life than did patients in the other treatment arms of the study.

No serious adverse events were reported with any of the treatments, and no patients stopped treatment because of adverse events. However, reports of moderate or severe crusts were highest among patients treated with imiquimod, and moderate to severe vesicles or bullae were highest among those treated with ingenol mebutate. Severe pain and severe burning sensation were significantly more common among those treated with MAL-PDT.

While the study had some limitations, the results “could affect treatment choices in both dermatology and primary care,” the authors wrote, pointing out how common AKs are in practice, accounting for 5 million dermatology visits in the United States every year. When considering treatment costs, “fluorouracil is also the most attractive option,” they added. “It is expected that a substantial cost reduction could be achieved with more uniformity in care and the choice for effective therapy.”

The study was supported by the Netherlands Organization for Health Research and Development. Five of the 11 authors declared conference costs, advisory board fees, or trial supplies from private industry, including from manufacturers of some of the products in the study. The remaining authors had no disclosures.

SOURCE: Jansen M et al. N Engl J Med. 2019;380:935-46.

A head-to-head comparison of four commonly used field-directed treatments for actinic keratosis (AK) found that 5% fluorouracil cream was the most effective at reducing the size of lesions.

In a study published in the March 7 issue of the New England Journal of Medicine, researchers reported the outcomes of a multicenter, single-blind trial in 602 patients with five or more AK lesions in one continuous area on the head measuring 25-100 cm2. Patients were randomized to treatment with either 5% fluorouracil cream, 5% imiquimod cream, methyl aminolevulinate photodynamic therapy (MAL-PDT), or 0.015% ingenol mebutate gel.

Overall, 74.7% of patients who received fluorouracil cream achieved treatment success – defined as at least a 75% reduction in lesion size at 12 months after the end of treatment – compared with 53.9% of patients treated with imiquimod, 37.7% of those treated with MAL-PDT, and 28.9% of those treated with ingenol mebutate. The differences between fluorouracil and the other treatments was significant.

Maud H.E. Jansen, MD, and Janneke P.H.M. Kessels, MD, of the department of dermatology at Maastricht (the Netherlands) University Medical Center and their coauthors pointed out that, while there was plenty of literature about different AK treatments, there were few head-to-head comparisons and many studies were underpowered or had different outcome measures.

Even when the analysis was restricted to patients with grade I or II lesions, fluorouracil remained the most effective treatment, with 75.3% of patients achieving treatment success, compared with 52.6% with imiquimod, 38.7% with MAL-PDT, and 30.2% with ingenol mebutate.

There were not enough patients with more severe grade III lesions to enable a separate analysis of their outcomes; 49 (7.9%) of patients in the study had at least one grade III lesion.

The authors noted that many previous studies had excluded patients with grade III lesions. “Exclusion of patients with grade III lesions was associated with slightly higher rates of success in the fluorouracil, MAL-PDT, and ingenol mebutate groups than the rates in the unrestricted analysis,” they wrote. The inclusion of patients with grade III AK lesions in this trial made it “more representative of patients seen in daily practice,” they added.

Treatment failure – less than 75% clearance of actinic keratosis at 3 months after the final treatment – was seen after one treatment cycle in 14.8% of patients treated with fluorouracil, 37.2% of patients on imiquimod, 34.6% of patients given photodynamic therapy, and 47.8% of patients on ingenol mebutate therapy.

All these patients were offered a second treatment cycle, but those treated with imiquimod, PDT, and ingenol mebutate were less likely to undergo a second treatment.

The authors suggested that the higher proportion of patients in the fluorouracil group who were willing to undergo a second round of therapy suggests they may have experienced less discomfort and inconvenience with the therapy to begin with, compared with those treated with the other regimens.

Full adherence to treatment was more common in the ingenol mebutate (98.7% of patients) and MAL-PDT (96.8%) groups, compared with the fluorouracil (88.7%) and imiquimod (88.2%) groups. However, patients in the fluorouracil group reported greater levels of patient satisfaction and improvements in health-related quality of life than did patients in the other treatment arms of the study.

No serious adverse events were reported with any of the treatments, and no patients stopped treatment because of adverse events. However, reports of moderate or severe crusts were highest among patients treated with imiquimod, and moderate to severe vesicles or bullae were highest among those treated with ingenol mebutate. Severe pain and severe burning sensation were significantly more common among those treated with MAL-PDT.

While the study had some limitations, the results “could affect treatment choices in both dermatology and primary care,” the authors wrote, pointing out how common AKs are in practice, accounting for 5 million dermatology visits in the United States every year. When considering treatment costs, “fluorouracil is also the most attractive option,” they added. “It is expected that a substantial cost reduction could be achieved with more uniformity in care and the choice for effective therapy.”

The study was supported by the Netherlands Organization for Health Research and Development. Five of the 11 authors declared conference costs, advisory board fees, or trial supplies from private industry, including from manufacturers of some of the products in the study. The remaining authors had no disclosures.

SOURCE: Jansen M et al. N Engl J Med. 2019;380:935-46.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Behavioral intervention improves physical activity in patients with diabetes

A behavioral intervention that involves regular counseling sessions could help patients with type 2 diabetes increase their levels of physical activity and decrease their amount of sedentary time, according to findings from a prospective, randomized trial of 300 physically inactive patients with type 2 diabetes.

“The primary strength of this study is the application of an intervention targeting both physical activity and sedentary time across all settings (e.g., leisure, transportation, household, and occupation), based on theoretical grounds and using several behavior-change techniques,” wrote Stefano Balducci, MD, of Sapienza University in Rome and his colleagues. The findings were published in JAMA.

Half the participants were randomized to an intervention that involved one individual theoretical counseling session with a diabetologist and eight biweekly theoretical and practical counseling sessions with an exercise specialist each year for 3 years. The other half received standard care in the form of recommendations from their general physician about increasing physical activity and decreasing sedentary time. Both groups also received the same general treatment regimen according to guidelines.

The findings showed significant increases in volume of physical activity, light-intensity physical activity, and moderate to vigorous physical activity in the intervention group during the first 4 months of the trial. Those increases also were greater than the increases seen in the usual care group. Patients in the intervention group also showed greater decreases in sedentary time, compared with those in the control group during the same time.

After 4 months, the increases in physical activity in the intervention group plateaued but remained stable until 2 years. After that, the levels of activity declined but still remained significantly higher than at baseline. The level of sedentary time also increased after 2 years but was still lower than at baseline.

By the end of the study, the intervention group accumulated 13.8 metabolic equivalent hours/week of physical activity volume, compared with 10.5 hours in the control group; 18.9 minutes/day of moderate to vigorous intensity physical activity, compared with 12.5 minutes in the control group; and 4.6 hours/day of light-intensity physical activity, compared with 3.8 hours in the control group. In regard to sedentary time, the intervention group accumulated 10.9 hours/day, compared with 11.7 hours in the control group. All differences were statistically significant.

“The present findings support the need for interventions targeting all domains of behavior to obtain substantial lifestyle changes, not limited to moderate- to vigorous-intensity physical activity, which has little effect on sedentary time,” Dr. Balducci and his coauthors wrote. “This concept is consistent with a 2018 report showing that physical activity, sedentary time, and cardiorespiratory fitness are all important for cardiometabolic health.”

For the secondary outcomes of cardiorespiratory fitness and lower-body strength, the authors saw significant improvements in the intervention group, whereas the control group showed a worsening in those outcomes. The intervention group also showed significant improvements in fasting plasma glucose level, systolic blood pressure, total coronary heart disease 10-year risk score, and fatal coronary heart disease 10-year risk score. They also had significantly greater improvements than did the control group in total stroke risk score, hemoglobin A1c, fasting plasma glucose levels, and coronary heart disease risk.

Outside of the sessions, there were 41 adverse events in the intervention group, compared with 59 in the control group. During the sessions, participants in the intervention group experienced mild hypoglycemia (8 episodes), tachycardia/arrhythmia (3), and musculoskeletal injury or discomfort (19).

One of the limitations highlighted by the authors was that the benefits of their strategy could vary in other cohorts because of differences in climatic, socioeconomic, or cultural settings.

The study was supported by the Metabolic Fitness Association. Three authors declared grants and personal fees from pharmaceutical companies, and one author was an employee of Technogym. No other conflicts of interest were declared.

SOURCE: Balducci S et al. JAMA. 2019;321:880-90.

FROM JAMA

Intervention raises pediatric patient awareness of teratogenic rheumatology medications

While many medications in rheumatology are known or suspected to be teratogenic, there are no standards on educating rheumatology patients about this risk or on screening for pregnancy, wrote Ashley M. Cooper, MD, and her colleagues at Children’s Mercy Kansas City, Mo. Their report is in the April issue of Pediatrics.

“This is problematic in pediatric rheumatology because nearly half of adolescents report a history of sexual intercourse, but only 57% report condom use and 27% another form of contraception,” they wrote, pointing out that the United States also has one of the highest rates of teen pregnancy among industrialized nations; 80% are unplanned.

The intervention consisted of a number of approaches. Posters listing teratogenic medications were put in exam rooms, physicians received education about the Food and Drug Administration–mandated mycophenolate Risk Evaluation and Mitigation Strategy (REMS), and scripts were developed for staff to inform patients of teratogenic risks with medications, as well as prompts for referral to birth control clinics.

Researchers also implemented a standardized EHR template to allow clinical staff to review medication-specific teaching points and document the discussion with the patient. There also was a previsit planning process to review everything before the clinic day began, and identify patients for education or pregnancy screening.

After 8 months of the intervention – during which 1,366 eligible patient encounters occurred in rheumatology patients aged 10 years and older – researchers saw a significant increase in those encounters where teratogen education was recorded, from 25% before the intervention to 80% at 23 months after it. Pregnancy screening also increased among eligible patients, from 20% preintervention to 83% at 23 months after the first intervention.

Three patients became pregnant during the intervention period; all of the pregnancies were picked up by the screening process. In all cases, the teratogenic medication was immediately stopped; one patient underwent elective termination, one delivered a healthy term infant, and one patient was lost to follow-up. Two of the three had received education during the previous year.

“The strategies used in this project have implications that reach beyond rheumatology because teratogenic medications are commonly prescribed by other subspecialties,” the authors wrote.

Dr. Cooper and her associates noted that an original previsit checklist was later abandoned because it was viewed as being too much of a time imposition on staff at the beginning of a busy clinic day.

“The new process had higher staff buy-in because it was used to address multiple quality improvement projects and hospital requirements, some linked to provider remuneration,” they wrote.

No funding or conflicts of interest were declared.

SOURCE: Cooper AM et al. Pediatrics 2019; 143(4):e20180803. doi: 10.1542/peds.2018-0803.

FROM PEDIATRICS

Key clinical point: Intervention improves awareness of teratogenic rheumatology medications among pediatric patients, as well as pregnancy screening.

Major finding: Teratogen education increased from 25% before the intervention to 80% at 23 months after it. Pregnancy screening also increased among eligible patients, from 20% preintervention to 83% at 23 months after the first intervention.

Study details: Single-center trial of 1,366 patient encounters.

Disclosures: No funding or conflicts of interest were declared.

Source: Cooper AM et al. Pediatrics 2019;143(4):e20180803. doi: 10.1542/peds.2018-0803.

Better epilepsy outcomes with stereoelectroencephalography versus subdural electrode

Robotic stereoelectroencephalography for the localization of seizures in epilepsy is associated with less procedural morbidity, such as infection and hemorrhage, and better outcomes, compared with subdural electrode implantation.

A paper published in JAMA Neurology detailed the results of a retrospective cohort study of the outcomes of 239 patients with medically intractable epilepsy who underwent a total of 260 procedures: 121 stereoelectroencephalography (SEEG) implantations and 139 subdural electrode (SDE) implantations.

The authors noted a significant difference between the two groups in complication rates. There were seven symptomatic hemorrhagic sequelae and three infections related to SDE implantation, and in one of these cases the patient suffered long-term neurologic consequences.

In contrast, there were no symptomatic complications in the SEEG cohort, although two patients were found to have small asymptomatic subdural hematomas that were spotted on CT scans.

Patients in the implantation group also received significantly more narcotics and had a much higher rate of perioperative blood transfusions (13.7% vs. 0.8%, P less than .001), compared with those in the SEEG group.

Robotic stereoelectroencephalography for the localization of seizures in epilepsy is associated with less procedural morbidity, such as infection and hemorrhage, and better outcomes, compared with subdural electrode implantation.

A paper published in JAMA Neurology detailed the results of a retrospective cohort study of the outcomes of 260 implantation procedures in 239 patients with medically intractable epilepsy: 121 stereoelectroencephalography (SEEG) procedures and 139 subdural electrode (SDE) implantations.

The authors noted a significant difference between the two groups in complication rates. There were seven symptomatic hemorrhagic sequelae and three infections related to SDE implantation, and in one of these cases the patient suffered long-term neurologic consequences.

In contrast, there were no symptomatic complications in the SEEG cohort, although two patients were found to have small asymptomatic subdural hematomas that were spotted on CT scans.

Patients in the SDE group also received significantly more narcotics and had a much higher rate of perioperative blood transfusions than those in the SEEG group (13.7% vs. 0.8%, P < .001).

The study also looked at epilepsy outcomes in the two groups. Significantly more of the patients who had subdural electrodes underwent resection or ablative surgery with laser interstitial thermal therapy, compared with the patients who had SEEG (91.4% vs. 74.4%, P < .001).

“Thus, the SEEG and SDE cohorts were significantly different in the proportions of cases that were lesional, suggesting that these modalities were used to evaluate somewhat different populations, although the same group of physicians at the same center managed and referred these cases,” wrote Nitin Tandon, MD, from the University of Texas, Houston, and his coauthors. “However, this shift in the patient pool would be expected to bias outcomes against SEEG, because these patients generally have less favorable outcomes.”

Yet the authors saw that a significantly greater proportion of the SEEG patients were free of disabling seizures, compared with the SDE implant group at 6 months (83.9% vs. 66.1%) and 1 year (76% vs. 54.6%) after resection.

To account for the difference between the two groups in the proportion of cases that were lesional, the authors conducted a subgroup analysis of patients with abnormalities on imaging. Again, they saw that a significantly greater proportion of the SEEG patients achieved good outcomes at 6 months and 1 year, compared with the SDE group.

While subdural electrodes have long been the standard approach for delineating epileptogenic zones, the authors wrote that SEEG offers improved coverage and precise targeting of deeper structures. “In addition, the ability of the SEEG method to map distributed epileptic networks involved in epileptic activity has been hypothesized to be responsible for improved outcomes in patients with epilepsy that is difficult to localize.”

They also commented that, in their institution, they saw much greater patient tolerance for SEEG and slightly better outcomes in those patients who underwent resection or ablation.

One author reported a position with a company specializing in outpatient clinical neurophysiological testing services. No other conflicts of interest were reported.

SOURCE: Tandon N et al. JAMA Neurol. 2019 Mar 4. doi: 10.1001/jamaneurol.2019.0098.

FROM JAMA NEUROLOGY

Key clinical point: Stereoelectroencephalography in epilepsy is associated with fewer complications than subdural electrode implantation.

Major finding: Subdural electrode implantation is associated with significantly more hemorrhagic complications and infections than stereoelectroencephalography.

Study details: A retrospective cohort study in 239 patients with medically intractable epilepsy.

Disclosures: One author reported a position with a company specializing in outpatient clinical neurophysiological testing services. No other conflicts of interest were reported.

Source: Tandon N et al. JAMA Neurol. 2019 Mar 4. doi: 10.1001/jamaneurol.2019.0098.

No survival benefit from systematic lymphadenectomy in ovarian cancer

Lymphadenectomy in women with advanced ovarian cancer and normal lymph nodes does not appear to improve overall or progression-free survival, according to a randomized trial of 647 women with newly diagnosed advanced ovarian cancer who were undergoing macroscopically complete resection.

The women were randomized during the resection to either undergo systematic pelvic and para-aortic lymphadenectomy or no lymphadenectomy. The study excluded women with obvious node involvement.

Median overall survival was similar between the two groups: 65.5 months in the lymphadenectomy group and 69.2 months in the no-lymphadenectomy group (hazard ratio, 1.06; P = .65). There was also no significant difference between the two groups in median progression-free survival, which was 25.5 months in both.

While overall quality of life was similar between the two groups, there were some significant points of difference. Patients who underwent lymphadenectomy had significantly longer surgical times and greater median blood loss, which in turn led to higher rates of blood transfusion and postoperative admission to intensive care.

The 60-day mortality rates were also significantly higher among the lymphadenectomy group – 3.1% vs. 0.9% (P = .049) – as was the rate of repeat laparotomies for complications (12.4% vs. 6.5%, P = .01), mainly due to bowel leakage or fistula.

While systematic pelvic and para-aortic lymphadenectomy is often used in patients with advanced ovarian cancer, there is limited evidence in its favor from randomized clinical trials, wrote Philipp Harter, MD, of the department of gynecology and gynecologic oncology at Kliniken Essen-Mitte, Germany, and his coauthors. The report is in the New England Journal of Medicine.

“In this trial, patients with advanced ovarian cancer who underwent macroscopically complete resection did not benefit from systematic lymphadenectomy,” the authors wrote. “In contrast, lymphadenectomy resulted in treatment burden and harm to patients.”

The research group also tried to account for the level of surgical experience in each of the 52 centers involved in the study, and found no difference in treatment outcomes between high-recruiting centers and low-recruiting centers. All the centers also had to demonstrate their proficiency with the lymphadenectomy procedure before participating in the study.

“Accordingly, the quality of surgery and the numbers of resected lymph nodes were higher than in previous gynecologic oncologic clinical trials analyzing this issue,” they wrote.

The study was supported by the Deutsche Forschungsgemeinschaft and the Austrian Science Fund. Six authors declared a range of fees and support from the pharmaceutical industry.

SOURCE: Harter P et al. N Engl J Med. 2019 Feb 27. doi: 10.1056/NEJMoa1808424.

Pelvic and aortic lymph nodes can often contain microscopic ovarian cancer metastases even when they appear normal, so there has been some debate as to whether these should be systematically removed during primary surgery to eliminate this potential sanctuary for cancer cells.

While a number of previous studies have suggested a survival benefit, there were concerns about potential confounders that may have influenced those findings. This study avoids many of the criticisms leveled at previous trials, for example by ensuring surgical center quality, by excluding women with obvious node involvement, and by conducting the lymphadenectomy only after complete macroscopic resection.

The findings are consistent with the notion that the most frequent cause of ovarian cancer-related illness and death is the inability to control intra-abdominal disease.

Dr. Eric L. Eisenhauer is from Massachusetts General Hospital in Boston and Dr. Dennis S. Chi is from Memorial Sloan Kettering Cancer Center in New York. These comments are adapted from their accompanying editorial (N Engl J Med. 2019 Feb 27. doi: 10.1056/NEJMe1900044). Both authors declared financial and other support, including advisory board positions, from private industry.
