Laser treatment cut the fat on flank and abs

KISSIMMEE, FLA. – Single noninvasive treatments with a prototype 1,060-nm diode laser led to statistically significant, visually discernible reductions of adipose tissue covering the abdomen and flanks in two prospective multicenter studies.

“The treatment is well tolerated, with only mild and transient side effects,” said Dr. Bruce Katz of Mount Sinai School of Medicine in New York, who presented the results of the flank study at the annual meeting of the American Society for Laser Medicine and Surgery.

Most patients were “satisfied” or “very satisfied” with the results, added Dr. Sean Doherty, a plastic surgeon in group practice in Boston, who presented the results of the abdominal study.

Noninvasive fat reduction procedures rose 43% in the past year, said Dr. Doherty. The 1,060-nm diode laser damages adipocytes in targeted areas, eventually resulting in apoptosis, he added. Sessions in both studies lasted 25 minutes, with irradiance at 0.9-1.4 W/cm² and treatment areas of 48-144 cm² for the flanks and 192-288 cm² for the abdomen, the investigators said.

The abdominal study included 34 patients, and the flank study included 42 patients. Investigators treated only one side for each patient in the flank study, reserving the other as a “sham” or control, Dr. Katz said. A blinded review of photographs taken before and 12 weeks after the procedures served as the primary endpoint in both studies. Assessors correctly identified an average of 95% of “before” photographs of the abdomen and 90.3% of those of the flank, Dr. Doherty and Dr. Katz reported.

The secondary endpoint was change in thickness of the adipose layer at 12 weeks post procedure, as measured by ultrasound. The average reduction was 3.1 mm for the abdomen (standard deviation, 1.7 mm) and 2.6 mm for the flank (standard deviation not reported; P < .001 for both), the researchers said. In the abdominal study, 85% of patients said they were “satisfied” or “extremely satisfied” with the results, while 6% were “slightly satisfied,” Dr. Doherty said. In the flank study, 86% of patients said they were “satisfied” or “extremely satisfied,” while 10% were “slightly satisfied.”

Mean pain scores were 3.7 out of 10 for the abdominal procedure and 4.0 out of 10 for the flank procedure. The most common side effect was mild to moderate tenderness that usually subsided within about 2 weeks of treatment. Other side effects included localized firmness, edema, and ecchymosis. No patients had serious adverse effects, the researchers said.

Most of the patients were approximately 45-49 years of age. Those in the abdominal study had an average body mass index of 25.5 kg/m², while those in the flank study averaged 26.4 kg/m², the researchers reported. Most of the patients in both studies were women (94% of abdominal patients and 86% of flank patients).

The average weight gain at 12 weeks for the abdominal study was 0.1 pounds (range, –5.2 to 5.6 pounds), while patients in the flank study gained an average of 0.8 pounds (range, –0.7 to 13 pounds), the researchers noted. Six patients in the flank study gained more than 5 pounds, and one gained more than 37 pounds and was therefore excluded from the efficacy analysis, Dr. Katz noted.

The researchers did not report funding sources for the studies. They disclosed advisory and financial relationships with Allergan, Merz Pharmaceuticals, Cynosure, Valeant, Syneron, and several other pharmaceutical companies.

AT LASER 2015

Key clinical point: One 25-minute noninvasive treatment with a 1,060-nm laser significantly reduced abdominal and flank fat.

Major finding: At 12 weeks, blinded assessors correctly chose 95% and 90.3% of “before” (compared with “after”) photographs of the abdomen and flank, respectively.

Data source: Two prospective multicenter studies of 34 patients who underwent abdominal treatment and 42 patients who underwent flank treatment.

Disclosures: Dr. Katz and Dr. Doherty did not report funding sources for the studies. They disclosed advisory and financial relationships with Allergan, Merz Pharmaceuticals, Cynosure, Valeant, Syneron, and several other pharmaceutical companies.

Copeptin levels predicted diabetes insipidus after pituitary surgery

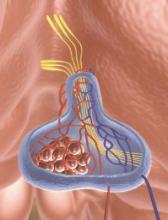

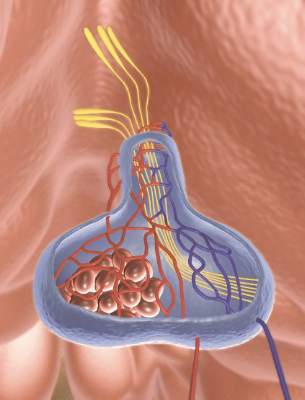

Copeptin levels of less than 2.5 pmol/L reliably identified diabetes insipidus after pituitary surgery, while levels of more than 30 pmol/L ruled out the condition, investigators reported online in the Journal of Clinical Endocrinology and Metabolism.

“Copeptin represents a new, early, and reliable single marker for postoperative diabetes insipidus in the post–pituitary surgery setting, where no such marker currently is known,” said Dr. Bettina Winzeler at University Hospital of Basel (Switzerland) and her associates. Postoperative measures of copeptin levels might help clinicians to differentiate patients who need closer postsurgical inpatient observation from those who can safely be discharged.

About 16%-34% of patients who undergo surgery in the sellar region develop central diabetes insipidus, which, without prompt rehydration, can cause severe hypernatremia, Dr. Winzeler and her associates noted. Risk factors for postoperative diabetes insipidus are not useful markers; however, copeptin, a surrogate of arginine vasopressin, is stable and can be measured quickly and reliably.

The researchers hypothesized that patients with postoperative diabetes insipidus would not have the markedly elevated copeptin levels that surgical stress normally triggers when posterior pituitary function is adequate. To test that theory, they measured daily postoperative copeptin levels for 205 consecutive patients who underwent surgeries for sellar lesions or tumors near the hypothalamus or pituitary gland (J. Clin. Endocrinol. Metab. 2015 Apr. 29 [doi:10.1210/jc.2014-4527]).

One day after surgery, 22 (81.5%) of the 27 patients whose copeptin levels were less than 2.5 pmol/L had diabetes insipidus (positive predictive value, 81%; specificity, 97%), said the researchers. In contrast, 1 of 40 (2.5%) patients with copeptin levels greater than 30 pmol/L had diabetes insipidus (negative predictive value, 95%; sensitivity, 94%). Patients with diabetes insipidus also had significantly lower median and interquartile (25th-75th percentile) copeptin levels, compared with other patients (median for patients with diabetes insipidus, 2.9 pmol/L; interquartile range [IQR], 1.9-7.9 pmol/L; median for other patients, 10.8 pmol/L; IQR, 5.2-30.4 pmol/L; P less than .001). In the multivariate analysis, low postoperative copeptin levels remained associated with diabetes insipidus after adjusting for age, gender, body mass index, tumor size and type of surgery, history of surgery or radiotherapy, cerebrospinal fluid leakage, and serum sodium concentration, the investigators reported (odds ratio, 1.62; 95% confidence interval, 1.25-2.10; P less than .001).
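As a rough check on the proportions quoted above, the following Python sketch recomputes them from the raw counts given in the text (22 of 27 patients and 1 of 40 patients). The function name `percent` is an illustrative assumption and does not come from the study. The reported specificity, sensitivity, and negative predictive value are not re-derived here, because the article does not give the full set of counts needed to do so.

```python
def percent(events: int, total: int) -> float:
    """Return events/total as a percentage, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * events / total, 1)


# Counts as quoted in the article for day 1 after surgery.
low_copeptin_with_di = percent(22, 27)   # copeptin below 2.5 pmol/L
high_copeptin_with_di = percent(1, 40)   # copeptin above 30 pmol/L

print(f"Low copeptin, diabetes insipidus: {low_copeptin_with_di}%")
print(f"High copeptin, diabetes insipidus: {high_copeptin_with_di}%")
```

The two values correspond to the 81.5% and 2.5% figures cited by the researchers.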

The study did not use standardized diagnostic criteria for diabetes insipidus, and postoperative blood samples were obtained as part of daily care and not at fixed time points, Dr. Winzeler and her associates noted. “Absence of standardized sampling for copeptin measurement at a defined early time point presumably decreased copeptin accuracy in this study, rendering our findings conservative,” they cautioned.

The Swiss National Foundation partially funded the research. Three coauthors reported having received speaker honoraria from Thermo Scientific Biomarkers, which develops and makes the copeptin assay.

Key clinical point: Low copeptin levels reliably predicted diabetes insipidus after pituitary surgery.

Major finding: A day after surgery, 81.5% of patients with copeptin levels less than 2.5 pmol/L had diabetes insipidus, compared with 2.5% of patients with levels greater than 30 pmol/L.

Data source: Multicenter, prospective, observational cohort study of 205 consecutive pituitary surgery patients.

Disclosures: The Swiss National Foundation partially funded the research. Three coauthors reported having received speaker honoraria from Thermo Scientific Biomarkers, which develops and makes the copeptin assay.

Albuterol costs soared after CFC inhaler ban

Privately insured patients with asthma faced an 81% rise in out-of-pocket costs for albuterol and used slightly less of the medication after the Food and Drug Administration banned chlorofluorocarbon-based inhalers, researchers reported online in JAMA Internal Medicine.

But the ban did not appear to affect rates of hospitalization or emergency department or outpatient visits for asthma, said Dr. Anupam Jena of Massachusetts General Hospital in Boston and his associates. “The impact of the FDA policy on individuals without insurance who faced greater increases in out-of-pocket costs warrants further exploration,” the researchers emphasized.

Concerns about ozone depletion led the FDA in 2005 to announce a ban on CFC inhalers that became effective at the end of 2008. Patients were left with pricier branded hydrofluoroalkane albuterol inhalers, Dr. Jena and his associates noted (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1665]).

To investigate the economic and clinical effects of this shift, the researchers analyzed private insurance data from 2004 to 2010 on 109,428 adults and 37,281 children with asthma.

The average out-of-pocket cost of an albuterol prescription rose from $13.60 (95% confidence interval, $13.40-$13.70) in 2004 to $25.00 (95% CI, $24.80-$25.20) in 2008, just after the ban went into effect, the researchers reported. By 2010, the average cost of a prescription had dropped to $21.00 (95% CI, $20.80-$21.20). “Steep declines in use of generic CFC inhalers occurred after the fourth quarter of 2006 and were almost fully offset by increases in use of hydrofluoroalkane inhalers,” added the researchers. Furthermore, every $10 increase in out-of-pocket albuterol prescription costs was tied to about a 0.92 percentage point decline in use of the inhalers in adults (95% CI, −1.39 to −0.44; P < .001) and a 0.54 percentage point decline in children (95% CI, −0.84 to −0.24; P = .001), they said. Usage did not vary significantly between adults and children or among patients with persistent or nonpersistent asthma, they added.
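For readers who want to see the size of these price changes in relative terms, the Python sketch below computes simple percent changes from the rounded average costs quoted above. The helper `percent_change` is a hypothetical name used only for illustration. Because it works from rounded, unadjusted averages, its output need not match the percentage figures the researchers derived from their own analysis.

```python
def percent_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old, to one decimal."""
    if old == 0:
        raise ValueError("old value must be nonzero")
    return round(100 * (new - old) / old, 1)


# Average out-of-pocket cost per albuterol prescription, as quoted in the article.
cost_2004 = 13.60
cost_2008 = 25.00
cost_2010 = 21.00

print(f"2004 to 2008: {percent_change(cost_2004, cost_2008)}%")
print(f"2004 to 2010: {percent_change(cost_2004, cost_2010)}%")
```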

The National Institutes of Health, National Institute on Aging, and University of Minnesota funded the study. The investigators declared no relevant conflicts of interest.

The 2008 FDA ban on albuterol inhalers containing chlorofluorocarbons was questioned at the time because the CFCs emitted from inhalers have an insignificant effect on ozone and because of the anticipated costs of transitioning to hydrofluoroalkane inhalers for patients with respiratory disease.

Whether banning chlorofluorocarbon inhalers will lead to any improvement in the environment is unclear. It is clear that the ban has increased health care costs and improved the bottom line of pharmaceutical companies that are making hydrofluoroalkane-based inhalers. Although albuterol inhalers have been in use for more than 30 years, pharmaceutical companies have used the chlorofluorocarbon ban as an opportunity to raise the price on inhalers from approximately $13 for a generic formulation to more than $50 today. … In this unique situation, it would have made more sense to not ban chlorofluorocarbon inhalers until hydrofluoroalkane inhalers were available in generic formulations. This would have balanced the best interests of society and the best interests of individuals with respiratory disease, allowing the FDA to protect the environment without making inhalers expensive and unaffordable for many.

Joseph Ross, M.D., M.H.S., is at the Yale University School of Medicine in New Haven, Conn., and disclosed FDA research funding related to medical device surveillance and clinical trial data sharing. Rita Redberg, M.D., M.Sc., is at the University of California, San Francisco, and made no relevant disclosures. These comments are based on their accompanying editorial (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1696]).

The 2008 FDA ban on albuterol inhalers containing chlorofluorocarbons was questioned at the time because the CFCs emitted from inhalers have an insignificant effect on ozone and because of the anticipated costs of transitioning to hydrofluoroalkane inhalers for patients with respiratory disease.

Whether banning chlorofluorocarbon inhalers will lead to any improvement in the environment is unclear. It is clear that the ban has increased health care costs and improved the bottom line of pharmaceutical companies that are making hydrofluoroalkane-based inhalers. Although albuterol inhalers have been in use for more than 30 years, pharmaceutical companies have used the chlorofluorocarbon ban as an opportunity to raise the price on inhalers from approximately $13 for a generic formulation to more than $50 today. … In this unique situation, it would have made more sense to not ban chlorofluorocarbon inhalers until hydrofluoroalkane inhalers were available in generic formulations. This would have balanced the best interests of society and the best interests of individuals with respiratory disease, allowing the FDA to protect the environment without making inhalers expensive and unaffordable for many.

Joseph Ross, M.D., M.H.S. is at the Yale University School of Medicine in New Haven, Conn., and disclosed FDA research funding related to medical device surveillance and clinical trial data sharing. Rita Redberg, M.D., M.Sc., is at the University of California, San Francisco, and made no relevant disclosures. These comments are based on their accompanying editorial (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1696]).

The 2008 FDA ban on albuterol inhalers containing chlorofluorocarbons was questioned at the time because the CFCs emitted from inhalers have an insignificant effect on ozone and because of the anticipated costs of transitioning to hydrofluoroalkane inhalers for patients with respiratory disease.

Whether banning chlorofluorocarbon inhalers will lead to any improvement in the environment is unclear. It is clear that the ban has increased health care costs and improved the bottom line of pharmaceutical companies that are making hydrofluoroalkane-based inhalers. Although albuterol inhalers have been in use for more than 30 years, pharmaceutical companies have used the chlorofluorocarbon ban as an opportunity to raise the price on inhalers from approximately $13 for a generic formulation to more than $50 today. … In this unique situation, it would have made more sense to not ban chlorofluorocarbon inhalers until hydrofluoroalkane inhalers were available in generic formulations. This would have balanced the best interests of society and the best interests of individuals with respiratory disease, allowing the FDA to protect the environment without making inhalers expensive and unaffordable for many.

Joseph Ross, M.D., M.H.S. is at the Yale University School of Medicine in New Haven, Conn., and disclosed FDA research funding related to medical device surveillance and clinical trial data sharing. Rita Redberg, M.D., M.Sc., is at the University of California, San Francisco, and made no relevant disclosures. These comments are based on their accompanying editorial (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1696]).

Privately insured patients with asthma faced an 81% rise in out-of-pocket costs for albuterol and used slightly less of the medication after the Food and Drug Administration banned chlorofluorocarbon-based inhalers, researchers reported online in JAMA Internal Medicine.

But the ban did not appear to affect rates of hospitalization or emergency department or outpatient visits for asthma, said Dr. Anupam Jena of Massachusetts General Hospital in Boston and his associates. “The impact of the FDA policy on individuals without insurance who faced greater increases in out-of-pocket costs warrants further exploration,” the researchers emphasized.

Concerns about ozone depletion led the FDA in 2005 to announce a ban on CFC inhalers that became effective at the end of 2008. Patients were left with pricier branded hydrofluoroalkane albuterol inhalers, Dr. Jena and his associates noted (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1665]).

To investigate the economic and clinical effects of this shift, the researchers analyzed private insurance data from 2004 to 2010 on 109,428 adults and 37,281 children with asthma.

The average out-of-pocket cost of an albuterol prescription rose from $13.60 (95% confidence interval, $13.40-$13.70) in 2004 to $25.00 (95% CI, $24.80-$25.20) in 2008, just after the ban went into effect, the researchers reported. By 2010, the average cost of a prescription had dropped to $21.00 (95% CI, $20.80-$21.20). “Steep declines in use of generic CFC inhalers occurred after the fourth quarter of 2006 and were almost fully offset by increases in use of hydrofluoroalkane inhalers,” added the researchers. Furthermore, every $10 increase in out-of-pocket albuterol prescription costs was tied to about a 0.92 percentage point decline in use of the inhalers (95% CI, −1.39 to −0.44; P < .001) in adults, and a 0.54 percentage point in children (95% CI, −0.84 to −0.24; P = .001), they said. Usage did not vary significantly between adults and children or among patients with persistent or nonpersistent asthma, they added.

The National Institutes of Health, National Institute on Aging, and University of Minnesota funded the study. The investigators declared no relevant conflicts of interest.

Privately insured patients with asthma faced an 81% rise in out-of-pocket costs for albuterol and used slightly less of the medication after the Food and Drug Administration banned chlorofluorocarbon-based inhalers, researchers reported online in JAMA Internal Medicine.

But the ban did not appear to affect rates of hospitalization or emergency department or outpatient visits for asthma, said Dr. Anupam Jena of Massachusetts General Hospital in Boston and his associates. “The impact of the FDA policy on individuals without insurance who faced greater increases in out-of-pocket costs warrants further exploration,” the researchers emphasized.

Concerns about ozone depletion led the FDA in 2005 to announce a ban on CFC inhalers that became effective at the end of 2008. Patients were left with pricier branded hydrofluoroalkane albuterol inhalers, Dr. Jena and his associates noted (JAMA Intern. Med. 2015 May 11 [doi:10.1001/jamainternmed.2015.1665]).

To investigate the economic and clinical effects of this shift, the researchers analyzed private insurance data from 2004 to 2010 on 109,428 adults and 37,281 children with asthma.

The average out-of-pocket cost of an albuterol prescription rose from $13.60 (95% confidence interval, $13.40-$13.70) in 2004 to $25.00 (95% CI, $24.80-$25.20) in 2008, just after the ban went into effect, the researchers reported. By 2010, the average cost of a prescription had dropped to $21.00 (95% CI, $20.80-$21.20). “Steep declines in use of generic CFC inhalers occurred after the fourth quarter of 2006 and were almost fully offset by increases in use of hydrofluoroalkane inhalers,” added the researchers. Furthermore, every $10 increase in out-of-pocket albuterol prescription costs was tied to about a 0.92 percentage point decline in use of the inhalers (95% CI, −1.39 to −0.44; P < .001) in adults, and a 0.54 percentage point decline in children (95% CI, −0.84 to −0.24; P = .001), they said. Usage did not vary significantly between adults and children or among patients with persistent or nonpersistent asthma, they added.

The National Institutes of Health, National Institute on Aging, and University of Minnesota funded the study. The investigators declared no relevant conflicts of interest.

FROM JAMA INTERNAL MEDICINE

Key clinical point: The FDA ban on chlorofluorocarbon-based albuterol inhalers sharply increased out-of-pocket costs and slightly decreased inhaler usage.

Major finding: Average out-of-pocket cost of albuterol inhalers rose by 50% after the ban was passed.

Data source: Analysis of private insurance data from 109,428 adults and 37,281 children with asthma from 2004 through 2010.

Disclosures: The National Institutes of Health, National Institute on Aging, and University of Minnesota funded the study. The investigators declared no relevant conflicts of interest.

HCV Spike in Four Appalachian States Tied to Drug Abuse

Acute hepatitis C virus infections more than tripled among young people in Kentucky, Tennessee, Virginia, and West Virginia between 2006 and 2012, investigators reported online May 8 in Morbidity and Mortality Weekly Report.

“The increase in acute HCV infections in central Appalachia is highly correlated with the region’s epidemic of prescription opioid abuse and facilitated by an upsurge in the number of persons who inject drugs,” said Dr. Jon Zibbell at the Centers for Disease Control and Prevention and his associates.

Nationally, acute HCV infections have risen most steeply in states east of the Mississippi. To further explore the trend, the researchers examined HCV case data from the National Notifiable Disease Surveillance System, and data on 217,789 admissions to substance abuse treatment centers related to opioid or injection drug abuse (MMWR 2015;64:453-8).

Confirmed HCV cases among individuals aged 30 years and younger rose by 364% in the four Appalachian states during 2006-2012, the investigators found. “The increasing incidence among nonurban residents was at least double that of urban residents each year,” they said. Among patients with known risk factors for HCV infection, 73% reported injection drug use.

During the same time, treatment admissions for opioid dependency among individuals aged 12-29 years rose by 21% in the four states, and self-reported injection drug use rose by more than 12%, the researchers said. “Evidence-based strategies as well as integrated-service provision are urgently needed in drug treatment programs to ensure patients are tested for HCV, and persons found to be HCV infected are linked to care and receive appropriate treatment,” they concluded. “These efforts will require further collaboration among federal partners and state and local health departments to better address the syndemic of opioid abuse and HCV infection.”

The investigators declared no funding sources or financial conflicts of interest.

Key clinical point: Hepatitis C virus infections more than tripled among young people in Kentucky, Tennessee, Virginia, and West Virginia, and were strongly tied to rises in opioid and injection drug abuse.

Major finding: From 2006 to 2012, the number of acute HCV infections increased by 364% among individuals aged 30 years or less.

Data source: Analysis of HCV case data from the National Notifiable Disease Surveillance System and of substance abuse admissions data from the Treatment Episode Data Set.

Disclosures: The investigators reported no funding sources or financial conflicts of interest.

Reserve thrombophilia testing for select subgroups

Clinicians should avoid routinely screening venous thromboembolism patients for thrombophilias, and should instead weigh the risks of recurrent thrombosis against the chances of bleeding with prolonged anticoagulation, according to a review article published in the April issue of the Journal of Vascular Surgery: Venous and Lymphatic Disorders.

“These laboratory tests are costly, and surprisingly, there is little evidence showing that testing leads to improved clinical outcomes,” said Dr. Elna Masuda at Straub Clinic and Hospital, Honolulu, and her associates. “Until data from well-designed, controlled trials are available comparing different durations of anticoagulation with specific thrombophilic states, treatment should be based on clinical risk factors and less on laboratory abnormalities.”

More than half of patients with an initial venous thromboembolism (VTE) episode had a positive thrombophilia screen in one study (Ann. Intern. Med. 2001;135:367-73), the reviewers noted. Testing, however, usually does not affect clinical management or prevent VTE recurrence, and it can cost more than $3,000 per patient, they said.

For these reasons, the American Society of Hematology, the National Institute for Health and Care Excellence, and the Society for Vascular Medicine discourage screening after an initial VTE episode if patients have a known cause or transient risk factor for thrombosis.

Testing also is unlikely to benefit patients with first-time unprovoked (or idiopathic) VTE, patients with a permanent risk factor for thrombosis such as cancer, or patients with arterial thrombosis or thrombosis of the retina or upper arm veins, Dr. Masuda and her associates said. And because recurrent VTE generally merits long-term anticoagulation, affected patients should be screened only if they are considering stopping treatment and test results could inform that decision, they added (J. Vasc. Surg. Venous Lymphat. Disord. 2015;3:228-35).

Some subgroups of patients, however, could benefit from targeted thrombophilia testing. The reviewers recommended antiphospholipid antibody testing if patients have a history of several unexplained pregnancy losses or another reason to suspect antiphospholipid syndrome. Patients with heparin resistance should be tested for antithrombin deficiency, and patients with warfarin necrosis or neonatal purpura fulminans should be tested for protein C and S deficiencies, they added.

Clinicians also should consider screening women with a personal or family history of VTE if they are pregnant and are considering anticoagulation or are considering oral contraceptives or hormone replacement therapy, Dr. Masuda and her associates said.

Screening such patients remains controversial, but it could help guide anticoagulation therapy before and after delivery or might help patients decide whether or not to take exogenous hormones. “In the subgroup of those pregnant or planning pregnancy, history of prior VTE and strong family history of thrombosis are two factors that appear to play a clinically important role in identifying those who may benefit from screening,” they concluded.

Patients who want to pursue testing need to understand that management is mainly based on clinical risk and that test results usually will not change treatment recommendations, the reviewers also emphasized. “If testing will change management, it may be appropriate to proceed,” they added. “If long-term anticoagulation is preferred on the basis of positive test results, the risk of bleeding should be considered.”

The researchers reported no funding sources. Dr. Masuda reported having served on the speakers bureau for Janssen Pharmaceuticals.

Clinical utility of thrombophilia testing is determined by the cost-benefit ratio to each patient. The extent of testing can be quite variable, and the cost varies widely. Testing can range from factor V Leiden and homocysteine levels to lupus anticoagulant and an isolated factor, or it can include panels of both fibrinolytic and thrombotic pathways as well as genetic testing. Duration of therapy and risk of recurrence can be influenced by the results. The real cost of underestimating the risk of recurrence lies in the sequelae of recurrent thrombosis, such as the increased risk of valvular damage or obstruction, pulmonary embolism, and the development of the postthrombotic syndrome.

Even patients who have a provoked thrombus have been shown to have an increased incidence of thrombophilia. A positive test result can impact the patient’s treatment or potentially prevent events in families who have an unrecognized thrombophilic issue. Those outcomes matter to the patient and the family. In the past we ligated the saphenofemoral junction for patients with an isolated superficial vein thrombosis encroaching on the junction, only to find out that many of these patients had an underlying, undiagnosed thrombophilia and had progressed to deep vein thrombosis.

Knowledge helps select appropriate therapies and potentially prevents life-threatening complications to the patient and their family members. Many people who have an underlying thrombophilia have a minor change in their baseline that then starts a cascade to promote a thrombotic event. Knowledge is power and testing to help identify risk is clinically warranted.

Treatments are based on risk factor assessment, which includes laboratory analysis, residual thrombus, and clinical risk. Understanding the fibrinolytic balance may further explain why some patients recanalize completely while other patients never recanalize and have a significant amount of residual thrombus.

Once a thrombophilia has been identified, family members can be tested for a specific defect, potentially avoiding any thrombotic events and preventing miscarriages in those of reproductive years. Further testing and identification of subgroups are needed to clearly define those who would benefit most. This will only be accomplished with further testing. Research can identify additional defects that will help treating physicians to further understand the thrombotic and fibrinolytic pathways. Management decisions need to be based on evidence. Some of these factors were unknown 20 years ago.

Dr. Joann Lohr is an associate program director at the Good Samaritan Hospital vascular surgery residency program and director of the John J. Cranley Vascular Laboratory at Good Samaritan Hospital, both in Cincinnati. She had no relevant financial disclosures.

FROM THE JOURNAL OF VASCULAR SURGERY: VENOUS AND LYMPHATIC DISORDERS

Key clinical point: Clinicians should reserve thrombophilia testing for select subgroups of patients with venous thromboembolism.

Major finding: Consider select thrombophilia testing for patients with suspected antiphospholipid syndrome, heparin resistance, warfarin necrosis, neonatal purpura fulminans, or mesenteric or cerebral deep venous thrombosis. Also consider screening women with a personal or family history of thrombosis who are or want to become pregnant or are considering oral contraceptives or hormone replacement therapy.

Data source: Review of 40 original research and review articles.

Disclosures: The researchers reported no funding sources. Dr. Masuda reported having served on the speakers bureau for Janssen Pharmaceuticals.

Capsule colonoscopy improved, but limitations persist

When compared with conventional colonoscopy, capsule colonoscopy had a sensitivity of 88% and a specificity of 82% for detecting adenomas of at least 6 mm in asymptomatic subjects, a multicenter prospective study showed.

But the capsule detected only 29% of subjects who had sessile serrated polyps of at least 6 mm, and required more extensive bowel preparation than did conventional colonoscopy, Dr. Douglas K. Rex at Indiana University Hospital in Indianapolis and his associates reported in the May issue of Gastroenterology (2015 [doi:10.1053/j.gastro.2015.01.025]). “Given these considerations ... colonoscopy remains the gold standard for the detection of colorectal polyps,” said the researchers. “The capsule is a good test for the detection of patients with conventional adenomas 6 mm or larger in size and appears to be an appropriate imaging choice for patients who cannot undergo colonoscopy or had incomplete colonoscopy.”

Capsule endoscopy is useful for small-bowel imaging, but adapting the technology for colorectal studies has been difficult. A first-generation capsule detected only 74% of advanced adenomas in one prospective multicenter trial, the researchers noted. Since then, the PillCam COLON 2 capsule has been updated with motion detection, variable frame speed, and a wider angle view, they said. In smaller studies, it detected up to 89% of subjects with polyps of at least 6 mm, but its specificity was as low as 64%.

To further investigate the technology, researchers at 16 centers in the United States and Israel compared the second-generation capsule with conventional colonoscopy in an average-risk screening population of 884 asymptomatic subjects. Endoscopists were blinded as to technique, but performed unblinded follow-up colonoscopies in subjects who were positive on capsule but negative on conventional colonoscopy.

The capsule’s sensitivity was 81% (95% confidence interval, 77%-84%) and its specificity was 93% (91%-95%) for detecting subjects who had polyps of at least 6 mm, the researchers reported. Sensitivity was 80% (74%-86%) and specificity was 97% (96%-98%) for detecting subjects with polyps of at least 10 mm. For conventional adenomas of at least 6 mm, sensitivity was 88% (82%-93%) and specificity was 82% (80%-83%), and for adenomas of 10 mm or larger, sensitivity was 92% (82%-97%) and specificity was 95% (94%-95%).
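For readers less familiar with these measures, the following is a minimal sketch of how per-subject sensitivity and specificity are derived from a two-by-two comparison against the reference test (here, conventional colonoscopy). The function name and all counts are hypothetical and chosen only for illustration; they are not the study's data.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from two-by-two counts.

    tp: reference-positive subjects the test also flagged
    fn: reference-positive subjects the test missed
    tn: reference-negative subjects the test also cleared
    fp: reference-negative subjects the test flagged
    """
    sensitivity = tp / (tp + fn)  # share of true cases detected
    specificity = tn / (tn + fp)  # share of non-cases correctly cleared
    return sensitivity, specificity


# Hypothetical counts, NOT taken from the study
sens, spec = sensitivity_specificity(tp=40, fn=10, tn=180, fp=20)
print(f"sensitivity={sens:.0%}, specificity={spec:.0%}")
```

Because both measures are computed against the reference test, any lesion the reference misses counts against the test being evaluated.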

“Lesions in the serrated class were not detected well by the capsule in this study, compared with conventional adenomas,” the researchers reported. Prior studies of the capsule did not look for serrated lesions, and in the current study sensitivities were only 29% and 33% for serrated lesions of at least 6 mm and at least 10 mm, respectively.

The software used to measure polyps during capsule colonoscopy had a 40% error range when tested on balls of known size, the researchers said. Therefore, they applied a strict location-matching rule within the colon, but a liberal size rule that required measurements only to fall within 50% of one another to be considered a match. “Any set of matching rules for polyps detected by the capsule and colonoscopy might operate to increase or decrease the calculated sensitivity of the capsule incorrectly,” they added. Also, the adenoma detection rate for conventional colonoscopy was only 39%, and some polyps that were clearly seen on capsule were not visualized on regular colonoscopy. “In these cases, a polyp that should be a true positive for the capsule was counted as false,” they added. “Both colonoscopy and capsule are inferior for localization, compared with CT colonography. Inaccurate localization by one or both tests in this study could have reduced the sensitivity of the capsule.”
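The matching approach described above can be illustrated with a short sketch. The article does not spell out the exact rules, so two assumptions are made here: "within 50% of one another" is read as a difference of no more than half the larger measurement, and the "strict" location rule is modeled as requiring the same colon segment. The function names and dictionary fields are hypothetical.

```python
def sizes_match(capsule_mm, colonoscopy_mm, tolerance=0.5):
    """Liberal size rule (assumed reading): the two measurements may
    differ by at most `tolerance` times the larger of the two."""
    larger = max(capsule_mm, colonoscopy_mm)
    return abs(capsule_mm - colonoscopy_mm) <= tolerance * larger


def polyps_match(capsule, colonoscopy):
    """Strict location rule (assumed: same segment) plus the size rule."""
    same_segment = capsule["segment"] == colonoscopy["segment"]
    return same_segment and sizes_match(capsule["size_mm"],
                                        colonoscopy["size_mm"])


# Hypothetical findings for illustration only
capsule_polyp = {"segment": "sigmoid", "size_mm": 6}
colonoscopy_polyp = {"segment": "sigmoid", "size_mm": 10}
print(polyps_match(capsule_polyp, colonoscopy_polyp))
```

Under these assumptions, tightening the tolerance or loosening the location rule would change which findings count as matches, which is the sensitivity to matching rules the researchers describe.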

The investigators also had to exclude 77 patients because of inadequate cleansing and short transit times related to using sodium phosphate as a boost, they noted. The Food and Drug Administration label for the capsule is expected to reflect those limitations, they added.

Given Imaging funded the study and paid consulting or other fees to Dr. Rex and six coauthors. The other authors reported having no relevant conflicts of interest.

In the United States, colonoscopy is the primary screening test for colorectal cancer. However, because of issues with colonoscopy uptake, costs, and the small but finite risk of complications, the concept of a relatively noninvasive structural examination of the colon that can detect colorectal neoplasia is appealing to both patients and physicians.

Although capsule colonoscopy has emerged as a potential noninvasive tool for examining the entire colon, there are limited data on its accuracy for detecting conventional adenomas or sessile serrated polyps, particularly in an average-risk screening population.

In the May issue of Gastroenterology, Dr. Rex and colleagues report their results from a large, multicenter, prospective study evaluating the new second-generation capsule colonoscopy (PillCam COLON 2, Given Imaging) for detecting colorectal neoplasia in an average-risk screening population. Using optical colonoscopy as the reference standard, the capsule colonoscopy performed well for detecting conventional adenomas 6 mm or larger with a sensitivity and specificity of 88% and 82%, respectively.

In addition, the sensitivity and specificity of capsule colonoscopy for conventional adenomas 10 mm or larger were 92% and 95%, respectively. However, despite the high performance characteristics for detection of conventional adenomas, the capsule colonoscopy had limited accuracy for detecting sessile serrated polyps 10 mm or larger, with a sensitivity of 33%. Furthermore, nearly 10% of the enrolled subjects were excluded from the analysis due to poor bowel preparation and rapid transit time.

These issues aside, the Rex study provides important information about alternative screening modalities for detection of colorectal neoplasia, particularly for gastroenterologists who may be hesitant or unwilling to perform an optical colonoscopy in high-risk patients with significant co-morbidities or in patients who had an incomplete colonoscopy.

Dr. Jeffrey Lee, MAS, is an assistant clinical professor of medicine, division of gastroenterology, University of California, San Francisco. He has no conflicts of interest.
