Glucosuria Is Not Always Due to Diabetes

Familial renal glucosuria is an uncommon, rarely documented condition wherein, in the absence of other renal or endocrine conditions and with a normal serum glucose level, glucosuria persists due to an isolated defect in the nephron’s proximal tubule. In these patients, the body’s physiologic function appears to mimic that of sodium-glucose cotransporter-2 (SGLT2)-inhibiting medications, with the glucose cotransporter selectively impaired in a way that promotes renal excretion of glucose. This has implications for the patient’s prospective development of hyperglycemic diseases, urinary tract infections (UTIs), and potentially even cardiovascular disease. Though it is a generally asymptomatic condition, it is one that seasoned clinicians should investigate given the future impacts and considerations required for their patients.

Case Presentation

Mr. A was a 28-year-old male with no medical history and no prescription medication use who presented to the nephrology clinic at Eglin Air Force Base, Florida, in June 2019 for a workup of asymptomatic glucosuria. The condition was discovered in October 2015 at his initial presentation at Eglin Air Force Base, when his primary care physician was evaluating him for an acute, benign headache with fever and chills. A routine urinalysis performed at that visit showed a urine glucose of 500 mg/dL (2+). He was directed to the emergency department for further evaluation, where the results were reproduced.

On further laboratory testing in October 2015, his blood glucose was normal at 75 mg/dL, and his hemoglobin A1c was 5.5%. On repeat urinalysis 2 weeks later, his urinary glucose was again 500 mg/dL (2+). Each time, the elevated urinary glucose was the only abnormal finding: There was no concurrent hematuria, proteinuria, or ketonuria. The patient reported no associated symptoms, including nausea, vomiting, abdominal pain, dysuria, polyuria, and increased thirst. He was not taking any prescription medications, including SGLT2 inhibitors. His presenting headache and fever resolved with supportive care and were considered unrelated to the glucosuria that prompted his additional workup.

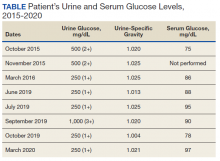

A diagnostic evaluation ensued from 2015 to 2020, including follow-up urinalyses, metabolic panels, complete blood counts, urine protein electrophoresis (UPEP), urine creatinine, urine electrolytes, 25-OH vitamin D level, κ/λ light chain panel, and serum protein electrophoresis (SPEP). Apart from the minor findings noted here, the results of the diagnostic workup were normal throughout his evaluation. In 2020, his 25-OH vitamin D level was borderline low at 29.4 ng/mL. His κ/λ ratio was normal at 1.65, and his serum albumin on protein electrophoresis was marginally elevated at 4.74 g/dL, but his SPEP and UPEP were normal, as were his urine protein and total gamma globulin levels, and no monoclonal gamma spike was noted on pathology review. Serum uric acid and urine phosphorus were both normal. His serum creatinine and electrolytes were all within normal limits. Over the 5 years of intermittent monitoring, the maximum amount of glucosuria was 1,000 mg/dL (3+) and the minimum was 250 mg/dL (1+). There was a gap in monitoring from March 2016 until June 2019 because the patient received care from offsite health care providers; specific laboratory values were not shared, but the providers’ notes documented persistent glucosuria (Table).

Analysis

Building the initial differential diagnosis for this patient began with confirming that he had isolated glucosuria, and not glucosuria secondary to elevated serum glucose. Additionally, conditions related to generalized proximal tubule dysfunction, acute or chronic impaired renal function, and neoplasms, including multiple myeloma (MM), were eliminated because this patient did not have the other specific findings associated with these conditions.

Proximal tubulopathies, including proximal renal tubular acidosis (RTA) (type 2) and Fanconi syndrome, were initially leading diagnoses in this patient. Isolated proximal RTA (type 2) is uncommon and pathophysiologically involves reduced proximal tubular reabsorption of bicarbonate, resulting in low serum bicarbonate and metabolic acidosis. Patients with isolated proximal RTA (type 2) typically present in infancy with failure to thrive, tachypnea, recurrent vomiting, and feeding difficulties. These symptoms do not match our patient’s clinical presentation. Fanconi syndrome involves a specific disruption in the proximal tubular apical sodium uptake mechanism affecting the transmembrane sodium gradient and the sodium-potassium-ATPase pump. Fanconi syndrome, therefore, would present not only with glucosuria but also classically with proteinuria, hypophosphatemia, hypokalemia, and a hyperchloremic metabolic acidosis.

Chronic or acute renal disease may present with glucosuria, but one would expect additional findings including elevated serum creatinine, elevated urinary creatinine, 25-OH vitamin D deficiency, or anemia of chronic disease. Other potential diagnoses included MM and similar neoplasms. MM would present with glucosuria accompanied by proteinuria, an elevated κ/λ light chain ratio, an elevated SPEP, and concern for lytic bone lesions, which were not present. A related disorder, monoclonal gammopathy of renal significance (MGRS), akin to monoclonal gammopathy of undetermined significance (MGUS), presents with proteinuria and evidence of renal injury. While this patient had a marginally elevated κ/λ light chain ratio, the remainder of his SPEP and UPEP were normal, and evaluation by a hematologist/oncologist and pathology review of laboratory findings confirmed no additional evidence for MM, including no monoclonal γ spike. Because his normal serum creatinine and glomerular filtration rate showed no evidence of renal injury, MGRS was eliminated from the differential as it did not meet the International Myeloma Working Group diagnostic criteria.1 The elevated κ/λ ratio with normal renal function is attributed to polyclonal immunoglobulin elevation, which may occur more commonly with uncomplicated acute viral illnesses.

Diagnosis

The differential homed in on a targeted defect in the proximal tubular SGLT2 transporter as the final diagnosis causing isolated glucosuria. Familial renal glucosuria (FRG), a condition caused by a mutation in the SLC5A2 gene that codes for SGLT2, has been identified in the literature as causing cases with presentations nearly identical to that of this patient.2,3 This condition is often found in otherwise healthy, asymptomatic patients in whom isolated glucosuria was identified on routine urinalysis testing.

Because the condition is asymptomatic and has been documented mainly in isolated case reports, specific data pertaining to its prevalence are not available. Case studies of other affected individuals have not specifically noted adverse effects (AEs), such as UTIs or hypotension.2,3 The patient was referred for genetic testing for this gene mutation; however, he was unable to obtain the test due to lack of insurance coverage. Mr. A has no other family members who have been evaluated for or identified as having this condition. Despite the name, FRG has an unknown inheritance pattern and is attributed to a variety of missense mutations in the SLC5A2 gene.4,5

Discussion

The SGLT2 transporter, whose gene is believed to be mutated in this patient, has recently become well known. The inhibition of the SGLT2 transport protein has become an important tool in the management of type 2 diabetes mellitus (T2DM) independent of the insulin pathway. In healthy individuals, SGLT2 in the proximal convoluted tubule of the kidney reabsorbs the majority (98%) of filtered glucose, and the remaining glucose is reabsorbed by SGLT1 in the more distal portion of the proximal tubule.4,6 The normal renal threshold for glucose reabsorption in a patient with a normal glomerular filtration rate is equivalent to a serum glucose concentration of 180 mg/dL, and is even higher in patients with T2DM due to upregulation of SGLT2. SGLT2 inhibitors, such as canagliflozin, dapagliflozin, and empagliflozin, selectively inhibit this cotransporter, reducing the threshold to between 40 and 120 mg/dL, thereby significantly increasing the renal excretion of glucose.4 The patient’s presumed mutation and clinical presentation are consistent with a naturally occurring mimicry of this drug class’s mechanism of action (Figure).
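The threshold reasoning above can be illustrated with a short sketch. This is a deliberately simplified, all-or-nothing model that assumes glucose appears in the urine only when serum glucose exceeds the renal threshold; it ignores the gradual transition seen in real kidneys and is not a clinical tool. The threshold values and the patient's serum glucose of 75 mg/dL come from this report, while the function name `glucose_spills_into_urine` is hypothetical and introduced only for illustration.

```python
# Simplified illustration only: real renal glucose handling is not a sharp cutoff.
NORMAL_THRESHOLD_MG_DL = 180.0                # normal renal threshold cited in the text
SGLT2_INHIBITED_RANGE_MG_DL = (40.0, 120.0)   # threshold range under SGLT2 inhibition, per the text


def glucose_spills_into_urine(serum_glucose_mg_dl: float,
                              renal_threshold_mg_dl: float) -> bool:
    """Return True when serum glucose exceeds the renal threshold (assumed cutoff model)."""
    return serum_glucose_mg_dl > renal_threshold_mg_dl


patient_serum_glucose = 75.0  # the patient's measured blood glucose in mg/dL

# With a normal threshold, a serum glucose of 75 mg/dL should not produce glucosuria.
print(glucose_spills_into_urine(patient_serum_glucose, NORMAL_THRESHOLD_MG_DL))

# With a threshold at the low or high end of the SGLT2-inhibited range.
low, high = SGLT2_INHIBITED_RANGE_MG_DL
print(glucose_spills_into_urine(patient_serum_glucose, low))
print(glucose_spills_into_urine(patient_serum_glucose, high))
```

Under this assumed model, a normal serum glucose is compatible with glucosuria only if the renal threshold is lowered well below 180 mg/dL, which is the pattern expected with impaired SGLT2 function. The patient's actual renal threshold was not measured in this report.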

Arguably, one of the more significant benefits of this new class of oral antihyperglycemics, aside from glycemic control that is noninferior to that of other first-line agents, is the added metabolic benefit. To date, SGLT2 inhibitors have been found to decrease blood pressure in all studies of the medications and to promote moderate weight loss.7 SGLT2 inhibitors have not only demonstrated significant cardiovascular (CV) benefits, linked with the aforementioned metabolic benefits, but also have reduced hospitalizations for heart failure in patients with and without T2DM.7 The EMPA-REG OUTCOME trial showed a 38% relative risk reduction in CV death with empagliflozin vs placebo.4,8 However, it is unknown whether patients with the SLC5A2 mutation derive CV benefits akin to those of the SGLT2-inhibiting medications, and this question is worthy of study via long-term follow-up of similar patients.

By selectively impairing SGLT2 function, the SLC5A2 mutation that causes FRG effectively makes this patient’s natural physiology mimic that of these new oral antihyperglycemic medications. Patients with FRG should be counseled regarding this condition and the implications it has for their overall health. At this time, there is no formal recommendation for short-term or long-term management of patients with FRG; observation and routine preventive care monitoring based on US Preventive Services Task Force screening recommendations apply to this population as they do to the general population.

This condition is not known to be associated with hypotension or hypoglycemia, and to some extent, it can be theorized that patients with this condition may have inherent protection against the development of hyperglycemia.4 Akin to patients on SGLT2 inhibitors, these patients may be at an increased risk of UTIs and genital infections, including mycotic infections, due to a glucose-related imbalance in the normal flora of the urinary tract.9 Other serious AEs of SGLT2 inhibitors, such as diabetic ketoacidosis, osteoporosis and related fractures, and acute pancreatitis, should be discussed with FRG patients, though they are unlikely to be at increased risk for these conditions in the setting of normal serum glucose and electrolyte levels. Notably, the osteoporosis risk is small, depends on other risk factors in the individual patient’s medical history, and has been associated exclusively with canagliflozin. If a patient with FRG develops T2DM after diagnosis, it is imperative that they inform physicians of their condition, because SGLT2-inhibiting drugs will be ineffective in this subset of patients, necessitating increased clinical judgment in selecting an appropriate antihyperglycemic agent in this population.

Conclusions

FRG is an uncommon diagnosis of exclusion that presents with isolated glucosuria in the setting of normal serum glucose. Patients are generally asymptomatic, with the glucosuria found on a urinalysis completed for other reasons, and they may or may not have a family history of similar findings. The condition is of particular interest given that the underlying SLC5A2 mutation mimics the effect of SGLT2 inhibitors used for T2DM. More monitoring of patients with this condition will be required to document its long-term implications, including development of further renal disease, T2DM, or CV disease.

1. Rajkumar SV, Dimopoulos MA, Palumbo A, et al. International Myeloma Working Group updated criteria for the diagnosis of multiple myeloma. Lancet Oncol. 2014;15(12). doi:10.1016/s1470-2045(14)70442-5

2. Calado J, Sznajer Y, Metzger D, et al. Twenty-one additional cases of familial renal glucosuria: absence of genetic heterogeneity, high prevalence of private mutations and further evidence of volume depletion. Nephrol Dial Transplant. 2008;23(12):3874-3879. doi:10.1093/ndt/gfn386

3. Kim KM, Kwon SK, Kim HY. A case of isolated glycosuria mediated by an SLC5A2 gene mutation and characterized by postprandial heavy glycosuria without salt wasting. Electrolyte Blood Press. 2016;14(2):35-37. doi:10.5049/EBP.2016.14.2.35

4. Hsia DS, Grove O, Cefalu WT. An update on sodium-glucose co-transporter-2 inhibitors for the treatment of diabetes mellitus. Curr Opin Endocrinol Diabetes Obes. 2017;24(1):73-79. doi:10.1097/MED.0000000000000311

5. Kleta R. Renal glucosuria due to SGLT2 mutations. Mol Genet Metab. 2004;82(1):56-58. doi:10.1016/j.ymgme.2004.01.018

6. Neumiller JJ. Empagliflozin: a new sodium-glucose co-transporter 2 (SGLT2) inhibitor for the treatment of type 2 diabetes. Drugs Context. 2014;3:212262. doi:10.7573/dic.212262

7. Raz I, Cernea S, Cahn A. SGLT2 inhibitors for primary prevention of cardiovascular events. J Diabetes. 2020;12(1):5-7. doi:10.1111/1753-0407.13004

8. Zinman B, Wanner C, Lachin JM, et al. Empagliflozin, cardiovascular outcomes, and mortality in type 2 diabetes. N Engl J Med. 2015;373(22):2117-2128. doi:10.1056/nejmoa1504720

9. McGill JB, Subramanian S. Safety of sodium-glucose cotransporter 2 inhibitors. Am J Cardiol. 2019;124(suppl 1):S45-S52. doi:10.1016/j.amjcard.2019.10.029

Familial renal glucosuria is an uncommon, rarely documented condition wherein the absence of other renal or endocrine conditions and with a normal serum glucose level, glucosuria persists due to an isolated defect in the nephron’s proximal tubule. Seemingly, in these patients, the body’s physiologic function mimics that of sodiumglucose cotransporter-2 (SGLT2)-inhibiting medications with the glucose cotransporter being selectively targeted for promoting renal excretion of glucose. This has implications for the patient’s prospective development of hyperglycemic diseases, urinary tract infections (UTIs), and potentially even cardiovascular disease. Though it is a generally asymptomatic condition, it is one that seasoned clinicians should investigate given the future impacts and considerations required for their patients.

Case Presentation

Mr. A was a 28-year-old male with no medical history nor prescription medication use who presented to the nephrology clinic at Eglin Air Force Base, Florida, in June 2019 for a workup of asymptomatic glucosuria. The condition was discovered on a routine urinalysis in October 2015 at the initial presentation at Eglin Air Force Base, when the patient was being evaluated by his primary care physician for acute, benign headache with fever and chills. Urinalysis testing was performed in October 2015 and resulted in a urine glucose of 500 mg/dL (2+). He was directed to the emergency department for further evaluation, reciprocating the results.

On further laboratory testing in October 2015, his blood glucose was normal at 75 mg/dL; hemoglobin A1c was 5.5%. On repeat urinalysis 2 weeks later, his urinary glucose was found to be 500 mg/dL (2+). Each time, the elevated urinary glucose was the only abnormal finding: There was no concurrent hematuria, proteinuria, or ketonuria. The patient reported he had no associated symptoms, including nausea, vomiting, abdominal pain, dysuria, polyuria, and increased thirst. He was not taking any prescription medications, including SGLT2 inhibitors. His presenting headache and fever resolved with supportive care and was considered unrelated to his additional workup.

A diagnostic evaluation ensued from 2015 to 2020, including follow-up urinalyses, metabolic panels, complete blood counts, urine protein electrophoresis (UPEP), urine creatinine, urine electrolytes, 25-OH vitamin D level, κ/λ light chain panel, and serum protein electrophoresis (SPEP). The results of all diagnostic workup throughout the entirety of his evaluation were found to be normal. In 2020, his 25-OH vitamin D level was borderline low at 29.4 ng/mL. His κ/λ ratio was normal at 1.65, and his serum albumin protein electrophoresis was 4.74 g/dL, marginally elevated, but his SPEP and UPEP were normal, as were urine protein levels, total gamma globulin, and no monoclonal gamma spike noted on pathology review. Serum uric acid, and urine phosphorous were both normal. His serum creatinine and electrolytes were all within normal limits. Over the 5 years of intermittent monitoring, the maximum amount of glucosuria was 1,000 mg/dL (3+) and the minimum was 250 mg/dL (1+). There was a gap of monitoring from March 2016 until June 2019 due to the patient receiving care from offsite health care providers without shared documentation of specific laboratory values, but notes documenting persistent glucosuria (Table).

Analysis

Building the initial differential diagnosis for this patient began with confirming that he had isolated glucosuria, and not glucosuria secondary to elevated serum glucose. Additionally, conditions related to generalized proximal tubule dysfunction, acute or chronic impaired renal function, and neoplasms, including multiple myeloma (MM), were eliminated because this patient did not have the other specific findings associated with these conditions.

Proximal tubulopathies, including proximal renal tubular acidosis (type 2) and Fanconi syndrome, was initially a leading diagnosis in this patient. Isolated proximal renal tubular acidosis (RTA) (type 2) is uncommon and pathophysiologically involves reduced proximal tubular reabsorption of bicarbonate, resulting in low serum bicarbonate and metabolic acidosis. Patients with isolated proximal RTA (type 2) typically present in infancy with failure to thrive, tachypnea, recurrent vomiting, and feeding difficulties. These symptoms do not meet our patient’s clinical presentation. Fanconi syndrome involves a specific disruption in the proximal tubular apical sodium uptake mechanism affecting the transmembrane sodium gradient and the sodium-potassium- ATPase pump. Fanconi syndrome, therefore, would not only present with glucosuria, but also classically with proteinuria, hypophosphatemia, hypokalemia, and a hyperchloremic metabolic acidosis.

Chronic or acute renal disease may present with glucosuria, but one would expect additional findings including elevated serum creatinine, elevated urinary creatinine, 25-OH vitamin D deficiency, or anemia of chronic disease. Other potential diagnoses included MM and similar neoplasms. MM also would present with glucosuria with proteinuria, an elevated κ/λ light chain ratio, and an elevated SPEP and concern for bone lytic lesions, which were not present. A related disorder, monoclonal gammopathy of renal significance (MGRS), akin to monoclonal gammopathy of unknown significance (MGUS), presents with proteinuria with evidence of renal injury. While this patient had a marginally elevated κ/λ light chain ratio, the remainder of his SPEP and UPEP were normal, and evaluation by a hematologist/ oncologist and pathology review of laboratory findings confirmed no additional evidence for MM, including no monoclonal γ spike. With no evidence of renal injury with a normal serum creatinine and glomerular filtration rate, MGRS was eliminated from the differential as it did not meet the International Myeloma Working Group diagnostic criteria.1 The elevated κ/λ ratio with normal renal function is attributed to polyclonal immunoglobulin elevation, which may occur more commonly with uncomplicated acute viral illnesses.

Diagnosis

The differential homed in on a targeted defect in the proximal tubular SGLT2 gene as the final diagnosis causing isolated glucosuria. Familial renal glucosuria (FRG), a condition caused by a mutation in the SLC5A2 gene that codes for the SGLT2 has been identified in the literature as causing cases with nearly identical presentations to this patient.2,3 This condition is often found in otherwise healthy, asymptomatic patients in whom isolated glucosuria was identified on routine urinalysis testing.

Due to isolated case reports sharing this finding and the asymptomatic nature of the condition, specific data pertaining to its prevalence are not available. Case studies of other affected individuals have not noted adverse effects (AEs), such as UTIs or hypotension specifically.2,3 The patient was referred for genetic testing for this gene mutation; however, he was unable to obtain the test due to lack of insurance coverage. Mr. A has no other family members that have been evaluated for or identified as having this condition. Despite the name, FRG has an unknown inheritance pattern and is attributed to a variety of missense mutations in the SLC5A2 gene.4,5

Discussion

The SGLT2 gene believed to be mutated in this patient has recently become wellknown. The inhibition of the SGLT2 transport protein has become an important tool in the management of type 2 diabetes mellitus (T2DM) independent of the insulin pathway. The SGLT2 in the proximal convoluted tubule of the kidney reabsorbs the majority, 98%, of the renal glucose for reabsorption, and the remaining glucose is reabsorbed by the SGLT2 gene in the more distal portion of the proximal tubule in healthy individuals.4,6 The normal renal threshold for glucose reabsorption in a patient with a normal glomerular filtration rate is equivalent to a serum glucose concentration of 180 mg/dL, even higher in patients with T2DM due to upregulation of the SGLT2 inhibitors. SGLT2 inhibitors, such as canagliflozin, dapagliflozin, and empagliflozin, selectively inhibit this cotransporter, reducing the threshold from 40 to 120 mg/dL, thereby significantly increasing the renal excretion of glucose.4 The patient’s mutation in question and clinical presentation aligned with a naturally occurring mimicry of this drug’s mechanism of action (Figure).

Arguably, one of the more significant benefits to using this new class of oral antihyperglycemics, aside from the noninferior glycemic control compared with that of other first-line agents, is the added metabolic benefit. To date, SGLT2 inhibitors have been found to decrease blood pressure in all studies of the medications and promote moderate weight loss.7 SGLT2 inhibitors have not only demonstrated significant cardiovascular (CV) benefits, linked with the aforementioned metabolic benefits, but also have reduced hospitalizations for heart failure in patients with T2DM and those without.7 The EMPA-REG OUTCOME trial showed a 38% relative risk reduction in CV events in empagliflozin vs placebo.4,8 However, it is unknown whether patients with the SLC5A2 mutation also benefit from these CV benefits akin to the SGLT2 inhibiting medications, and it is and worthy of studying via longterm follow-up with patients similar to this.

This SLC5A2 mutation causing FRG selectively inhibiting SGLT2 function effectively causes this patient’s natural physiology to mimic that of these new oral antihyperglycemic medications. Patients with FRG should be counseled regarding this condition and the implications it has on their overall health. At this time, there is no formal recommendation for short-term or longterm management of patients with FRG; observation and routine preventive care monitoring based on US Preventive Services Task Force screening recommendations apply to this population in line with the general population.

This condition is not known to be associated with hypotension or hypoglycemia, and to some extent, it can be theorized that patients with this condition may have inherent protection of development of hyperglycemia. 4 Akin to patients on SGLT2 inhibitors, these patients may be at an increased risk of UTIs and genital infections, including mycotic infections due to glycemic-related imbalance in the normal flora of the urinary tract.9 Other serious AEs of SGLT2 inhibitors, such as diabetic ketoacidosis, osteoporosis and related fractures, and acute pancreatitis, should be shared with FRG patients, though they are unlikely to be at increased risk for this condition in the setting of normal serum glucose and electrolyte levels. Notably, the osteoporosis risk is small, and specific other risk factors pertinent to individual patient’s medical history, and canagliflozin exclusively. If a patient with FRG develops T2DM after diagnosis, it is imperative that they inform physicians of their condition, because SGLT2-inhibiting drugs will be ineffective in this subset of patients, necessitating increased clinical judgment in selecting an appropriate antihyperglycemic agent in this population.

Conclusions

FRG is an uncommon diagnosis of exclusion that presents with isolated glucosuria in the setting of normal serum glucose. The patient generally presents asymptomatically with a urinalysis completed for other reasons, and the patient may or may not have a family history of similar findings. The condition is of particular interest given that its SGLT2 mutation mimics the effect of SGLT2 inhibitors used for T2DM. More monitoring of patients with this condition will be required for documentation regarding long-term implications, including development of further renal disease, T2DM, or CV disease.

Familial renal glucosuria is an uncommon, rarely documented condition wherein the absence of other renal or endocrine conditions and with a normal serum glucose level, glucosuria persists due to an isolated defect in the nephron’s proximal tubule. Seemingly, in these patients, the body’s physiologic function mimics that of sodiumglucose cotransporter-2 (SGLT2)-inhibiting medications with the glucose cotransporter being selectively targeted for promoting renal excretion of glucose. This has implications for the patient’s prospective development of hyperglycemic diseases, urinary tract infections (UTIs), and potentially even cardiovascular disease. Though it is a generally asymptomatic condition, it is one that seasoned clinicians should investigate given the future impacts and considerations required for their patients.

Case Presentation

Mr. A was a 28-year-old male with no medical history nor prescription medication use who presented to the nephrology clinic at Eglin Air Force Base, Florida, in June 2019 for a workup of asymptomatic glucosuria. The condition was discovered on a routine urinalysis in October 2015 at the initial presentation at Eglin Air Force Base, when the patient was being evaluated by his primary care physician for acute, benign headache with fever and chills. Urinalysis testing was performed in October 2015 and resulted in a urine glucose of 500 mg/dL (2+). He was directed to the emergency department for further evaluation, reciprocating the results.

On further laboratory testing in October 2015, his blood glucose was normal at 75 mg/dL; hemoglobin A1c was 5.5%. On repeat urinalysis 2 weeks later, his urinary glucose was found to be 500 mg/dL (2+). Each time, the elevated urinary glucose was the only abnormal finding: There was no concurrent hematuria, proteinuria, or ketonuria. The patient reported he had no associated symptoms, including nausea, vomiting, abdominal pain, dysuria, polyuria, and increased thirst. He was not taking any prescription medications, including SGLT2 inhibitors. His presenting headache and fever resolved with supportive care and was considered unrelated to his additional workup.

A diagnostic evaluation ensued from 2015 to 2020, including follow-up urinalyses, metabolic panels, complete blood counts, urine protein electrophoresis (UPEP), urine creatinine, urine electrolytes, 25-OH vitamin D level, κ/λ light chain panel, and serum protein electrophoresis (SPEP). The results of all diagnostic workup throughout the entirety of his evaluation were found to be normal. In 2020, his 25-OH vitamin D level was borderline low at 29.4 ng/mL. His κ/λ ratio was normal at 1.65, and his serum albumin protein electrophoresis was 4.74 g/dL, marginally elevated, but his SPEP and UPEP were normal, as were urine protein levels, total gamma globulin, and no monoclonal gamma spike noted on pathology review. Serum uric acid, and urine phosphorous were both normal. His serum creatinine and electrolytes were all within normal limits. Over the 5 years of intermittent monitoring, the maximum amount of glucosuria was 1,000 mg/dL (3+) and the minimum was 250 mg/dL (1+). There was a gap of monitoring from March 2016 until June 2019 due to the patient receiving care from offsite health care providers without shared documentation of specific laboratory values, but notes documenting persistent glucosuria (Table).

Analysis

Building the initial differential diagnosis for this patient began with confirming that he had isolated glucosuria, and not glucosuria secondary to elevated serum glucose. Additionally, conditions related to generalized proximal tubule dysfunction, acute or chronic impaired renal function, and neoplasms, including multiple myeloma (MM), were eliminated because this patient did not have the other specific findings associated with these conditions.

Proximal tubulopathies, including proximal renal tubular acidosis (type 2) and Fanconi syndrome, was initially a leading diagnosis in this patient. Isolated proximal renal tubular acidosis (RTA) (type 2) is uncommon and pathophysiologically involves reduced proximal tubular reabsorption of bicarbonate, resulting in low serum bicarbonate and metabolic acidosis. Patients with isolated proximal RTA (type 2) typically present in infancy with failure to thrive, tachypnea, recurrent vomiting, and feeding difficulties. These symptoms do not meet our patient’s clinical presentation. Fanconi syndrome involves a specific disruption in the proximal tubular apical sodium uptake mechanism affecting the transmembrane sodium gradient and the sodium-potassium- ATPase pump. Fanconi syndrome, therefore, would not only present with glucosuria, but also classically with proteinuria, hypophosphatemia, hypokalemia, and a hyperchloremic metabolic acidosis.

Chronic or acute renal disease may present with glucosuria, but one would expect additional findings including elevated serum creatinine, elevated urinary creatinine, 25-OH vitamin D deficiency, or anemia of chronic disease. Other potential diagnoses included MM and similar neoplasms. MM also would present with glucosuria with proteinuria, an elevated κ/λ light chain ratio, and an elevated SPEP and concern for bone lytic lesions, which were not present. A related disorder, monoclonal gammopathy of renal significance (MGRS), akin to monoclonal gammopathy of unknown significance (MGUS), presents with proteinuria with evidence of renal injury. While this patient had a marginally elevated κ/λ light chain ratio, the remainder of his SPEP and UPEP were normal, and evaluation by a hematologist/ oncologist and pathology review of laboratory findings confirmed no additional evidence for MM, including no monoclonal γ spike. With no evidence of renal injury with a normal serum creatinine and glomerular filtration rate, MGRS was eliminated from the differential as it did not meet the International Myeloma Working Group diagnostic criteria.1 The elevated κ/λ ratio with normal renal function is attributed to polyclonal immunoglobulin elevation, which may occur more commonly with uncomplicated acute viral illnesses.

Diagnosis

The differential homed in on a targeted defect in the proximal tubular SGLT2 gene as the final diagnosis causing isolated glucosuria. Familial renal glucosuria (FRG), a condition caused by a mutation in the SLC5A2 gene that codes for the SGLT2 has been identified in the literature as causing cases with nearly identical presentations to this patient.2,3 This condition is often found in otherwise healthy, asymptomatic patients in whom isolated glucosuria was identified on routine urinalysis testing.

Because only isolated case reports share this finding and the condition is asymptomatic, specific data pertaining to its prevalence are not available. Case studies of other affected individuals have not specifically noted adverse effects (AEs), such as UTIs or hypotension.2,3 The patient was referred for genetic testing for this gene mutation; however, he was unable to obtain the test due to lack of insurance coverage. Mr. A has no other family members who have been evaluated for or identified as having this condition. Despite the name, FRG has an unknown inheritance pattern and is attributed to a variety of missense mutations in the SLC5A2 gene.4,5

Discussion

The gene encoding SGLT2, believed to be mutated in this patient, has recently become well known. The inhibition of the SGLT2 transport protein has become an important tool in the management of type 2 diabetes mellitus (T2DM) independent of the insulin pathway. The SGLT2 in the proximal convoluted tubule of the kidney reabsorbs the majority, 98%, of filtered glucose, and the remaining glucose is reabsorbed by SGLT1 in the more distal portion of the proximal tubule in healthy individuals.4,6 The normal renal threshold for glucose reabsorption in a patient with a normal glomerular filtration rate is equivalent to a serum glucose concentration of 180 mg/dL and is even higher in patients with T2DM due to upregulation of SGLT2. SGLT2 inhibitors, such as canagliflozin, dapagliflozin, and empagliflozin, selectively inhibit this cotransporter, reducing the threshold to 40 to 120 mg/dL, thereby significantly increasing the renal excretion of glucose.4 The patient’s presumed mutation and clinical presentation aligned with a naturally occurring mimicry of this drug class’s mechanism of action (Figure).

Arguably, one of the more significant benefits of this new class of oral antihyperglycemics, aside from glycemic control noninferior to that of other first-line agents, is the added metabolic benefit. To date, SGLT2 inhibitors have been found to decrease blood pressure in all studies of the medications and to promote moderate weight loss.7 SGLT2 inhibitors have not only demonstrated significant cardiovascular (CV) benefits, linked with the aforementioned metabolic benefits, but also have reduced hospitalizations for heart failure in patients with and without T2DM.7 The EMPA-REG OUTCOME trial showed a 38% relative risk reduction in CV events with empagliflozin vs placebo.4,8 However, it is unknown whether patients with the SLC5A2 mutation derive CV benefits akin to those of the SGLT2-inhibiting medications, a question worthy of study via long-term follow-up of patients similar to this one.

By selectively impairing SGLT2 function, the SLC5A2 mutation that causes FRG effectively makes this patient’s natural physiology mimic the action of these new oral antihyperglycemic medications. Patients with FRG should be counseled regarding this condition and the implications it has for their overall health. At this time, there is no formal recommendation for short-term or long-term management of patients with FRG; observation and routine preventive care monitoring based on US Preventive Services Task Force screening recommendations apply to this population as they do to the general population.

This condition is not known to be associated with hypotension or hypoglycemia, and to some extent, it can be theorized that patients with this condition may have inherent protection against the development of hyperglycemia.4 Akin to patients on SGLT2 inhibitors, these patients may be at an increased risk of UTIs and genital infections, including mycotic infections, due to a glucose-related imbalance in the normal flora of the urinary tract.9 Other serious AEs of SGLT2 inhibitors, such as diabetic ketoacidosis, osteoporosis and related fractures, and acute pancreatitis, should be shared with FRG patients, though they are unlikely to be at increased risk for these conditions in the setting of normal serum glucose and electrolyte levels. Notably, the osteoporosis risk is small, depends on other risk factors specific to the individual patient’s medical history, and is associated exclusively with canagliflozin. If a patient with FRG develops T2DM after diagnosis, it is imperative that they inform physicians of their condition, because SGLT2-inhibiting drugs will be ineffective in this subset of patients, necessitating increased clinical judgment in selecting an appropriate antihyperglycemic agent in this population.

Conclusions

FRG is an uncommon diagnosis of exclusion that presents with isolated glucosuria in the setting of normal serum glucose. The patient generally presents asymptomatically with a urinalysis completed for other reasons, and the patient may or may not have a family history of similar findings. The condition is of particular interest given that its SGLT2 mutation mimics the effect of SGLT2 inhibitors used for T2DM. More monitoring of patients with this condition will be required for documentation regarding long-term implications, including development of further renal disease, T2DM, or CV disease.

1. Rajkumar SV, Dimopoulos MA, Palumbo A, et al. International Myeloma Working Group updated criteria for the diagnosis of multiple myeloma. Lancet Oncol. 2014;15(12). doi:10.1016/s1470-2045(14)70442-5

2. Calado J, Sznajer Y, Metzger D, et al. Twenty-one additional cases of familial renal glucosuria: absence of genetic heterogeneity, high prevalence of private mutations and further evidence of volume depletion. Nephrol Dial Transplant. 2008;23(12):3874-3879. doi:10.1093/ndt/gfn386

3. Kim KM, Kwon SK, Kim HY. A case of isolated glycosuria mediated by an SLC5A2 gene mutation and characterized by postprandial heavy glycosuria without salt wasting. Electrolyte Blood Press. 2016;14(2):35-37. doi:10.5049/EBP.2016.14.2.35

4. Hsia DS, Grove O, Cefalu WT. An update on sodium-glucose co-transporter-2 inhibitors for the treatment of diabetes mellitus. Curr Opin Endocrinol Diabetes Obes. 2017;24(1):73-79. doi:10.1097/MED.0000000000000311

5. Kleta R. Renal glucosuria due to SGLT2 mutations. Mol Genet Metab. 2004;82(1):56-58. doi:10.1016/j.ymgme.2004.01.018

6. Neumiller JJ. Empagliflozin: a new sodium-glucose co-transporter 2 (SGLT2) inhibitor for the treatment of type 2 diabetes. Drugs Context. 2014;3:212262. doi:10.7573/dic.212262

7. Raz I, Cernea S, Cahn A. SGLT2 inhibitors for primary prevention of cardiovascular events. J Diabetes. 2020;12(1):5-7. doi:10.1111/1753-0407.13004

8. Zinman B, Wanner C, Lachin JM, et al. Empagliflozin, cardiovascular outcomes, and mortality in type 2 diabetes. N Engl J Med. 2015;373(22):2117-2128. doi:10.1056/nejmoa1504720

9. McGill JB, Subramanian S. Safety of sodium-glucose cotransporter 2 inhibitors. Am J Cardiol. 2019;124(suppl 1):S45-S52. doi:10.1016/j.amjcard.2019.10.029

Management of Do Not Resuscitate Orders Before Invasive Procedures

In January 2017, the US Department of Veterans Affairs (VA), led by the National Center for Ethics in Health Care, created the Life-Sustaining Treatment Decisions Initiative (LSTDI). The VA gradually implemented the LSTDI in its facilities nationwide. In a format similar to the standardized form of portable medical orders, provider orders for life-sustaining treatments (POLST), the initiative promotes discussions with veterans and encourages but does not require health care professionals (HCPs) to complete a template for documentation (life-sustaining treatment [LST] note) of a patient’s preferences.1 The HCP enters a code status into the electronic health record (EHR), creating a portable and durable note and order.

With a new durable code status, the HCPs performing invasive procedures (eg, colonoscopies, coronary catheterization, or percutaneous biopsies) need to acknowledge and can potentially rescind a do not resuscitate (DNR) order. Although the risk of cardiac arrest or intubation is low, all invasive procedures carry these risks to some degree.2,3 Some HCPs advocate the automatic discontinuation of DNR orders before any procedure, but multiple professional societies recommend that patients be included in these discussions to honor their wishes.4-7 Although no procedures at the VA require the suspension of a DNR status, it is important to establish which life-sustaining measures are acceptable to patients.

As part of the informed consent process, proceduralists (HCPs who perform a procedure) should discuss the option of temporary suspension of DNR in the periprocedural period and document the outcome of this discussion (eg, rescinded DNR, acknowledgment of continued DNR status). These discussions need to be documented clearly to ensure accurate communication with other HCPs, particularly those caring for the patient postprocedure. Without the documentation, the risk that the patient’s wishes will not be honored is high.8 Code status is usually addressed before intubation for general anesthesia; however, nonsurgical procedures have a lower likelihood of DNR acknowledgment.

This study aimed to examine and improve the rate of acknowledgment of DNR status before nonsurgical procedures. We hypothesized that the rate of DNR acknowledgment before nonsurgical invasive procedures is low and that the rate can be raised with an intervention designed to educate proceduralists and to improve and simplify this documentation.9

Methods

This was a single-center, before/after quasi-experimental study. The study was considered clinical operations, and institutional review board approval was unnecessary.

A retrospective chart review was performed of patients who underwent an inpatient or outpatient nonsurgical invasive procedure at the Minneapolis VA Medical Center in Minnesota. The preintervention period was defined as the first 6 months after implementation of the LSTDI, from May 8, 2018, to October 31, 2018. The intervention was presented in December 2018 and January 2019. The postintervention period was from February 1, 2019, to April 30, 2019.

Patients who underwent a nonsurgical invasive procedure were reviewed in 3 procedural areas: gastroenterology, cardiology, and interventional radiology. These areas were chosen based on high patient volumes and the need for rapid patient turnover. An invasive procedure was defined as any procedure requiring patient consent. Those patients who had a completed LST note and who had a DNR order were recorded.

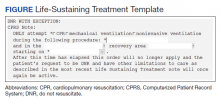

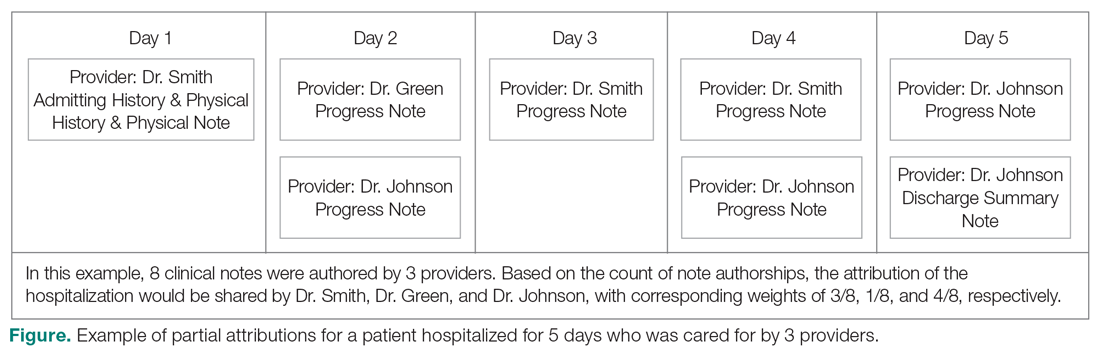

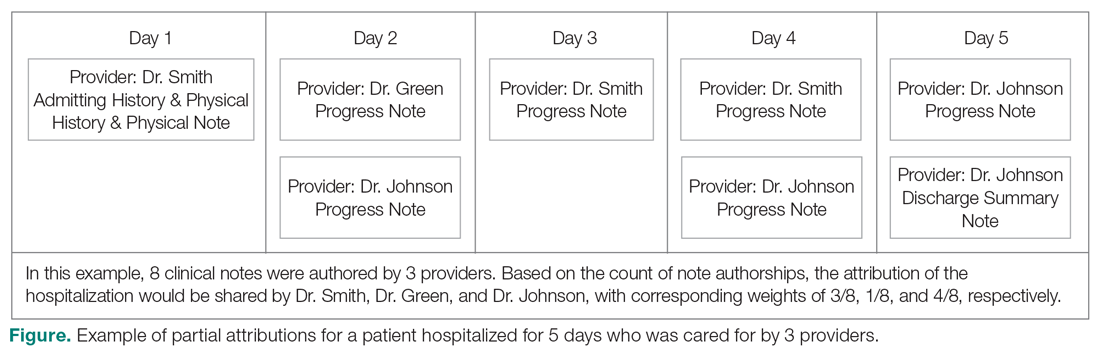

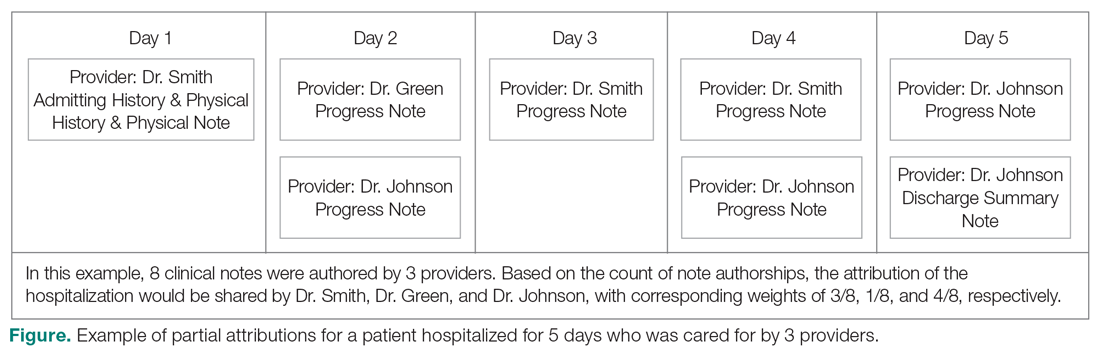

The intervention was composed of 2 elements. The first was an addendum to the LST note, which temporarily suspended resuscitation orders (Figure). We developed the addendum based on templates and orders in use before LSTDI implementation. Physicians from the procedural areas reviewed the addendum and provided feedback, and the facility chief of staff provided approval. The second was an educational presentation to proceduralists in each procedural area. The presentation included a brief introduction to the LSTDI, where to find an LST note and code status, the importance of addressing code status, and a description of the addendum. The proceduralists were advised to use the addendum only after discussion with the patient and obtaining verbal consent for DNR suspension. If the patient elected to remain DNR, proceduralists were encouraged to document the conversation acknowledging the DNR.

Outcomes

The primary outcome of the study was proceduralist acknowledgment of DNR status before nonsurgical invasive procedures. DNR status was considered acknowledged if the proceduralist provided any type of documentation.

Statistical Analysis

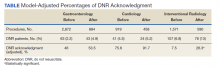

Model-predicted percentages of DNR acknowledgment are reported from a logistic regression model that included procedural area, time (before vs after), and the interaction between these 2 variables. The simple main effects comparing before vs after within each procedural area, based on post hoc contrasts of the interaction term, also are shown.

Results

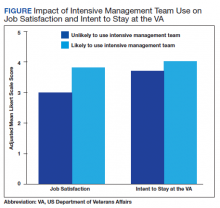

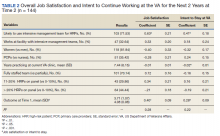

During the first 6 months following LSTDI implementation (the preintervention phase), 5,362 invasive procedures were performed in gastroenterology, interventional radiology, and cardiology. A total of 211 procedures were performed on patients who had a prior LST note indicating DNR. Of those, 68 (32.2%) had documentation acknowledging their DNR status. The educational presentation was given to each of the 3 departments with about 75% faculty attendance in each department. After the intervention, 1,932 invasive procedures were performed, identifying 143 LST notes with a DNR status. Sixty-five (45.5%) had documentation of a discussion regarding their DNR status.
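The raw percentages above can be reproduced directly from the reported counts. The sketch below is illustrative only: it recomputes the unadjusted acknowledgment rates and, as an assumption for illustration, applies a simple two-proportion z-test. This is not the analysis used in the study, which relied on a logistic regression model with an interaction term, so its result should not be expected to match the model-adjusted values. The function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Unadjusted two-sided z-test for a difference between two proportions.

    Illustrative only; the study itself used logistic regression.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # pooled proportion under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return p1, p2, z, p_value


# Counts reported in the Results: acknowledged / procedures on patients with DNR
pre_rate, post_rate, z, p_value = two_proportion_z_test(68, 211, 65, 143)

print(f"Preintervention acknowledgment:  {pre_rate:.1%}")
print(f"Postintervention acknowledgment: {post_rate:.1%}")
print(f"Unadjusted z = {z:.2f}, two-sided P = {p_value:.3f}")
```

The first two printed lines correspond to the 32.2% and 45.5% reported in the text; the z statistic and P value are a crude check that ignores differences between procedural areas.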

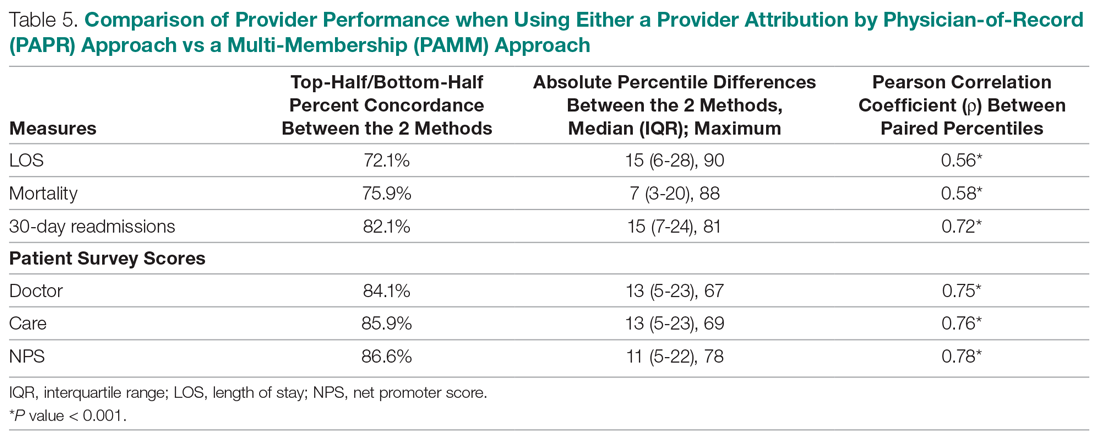

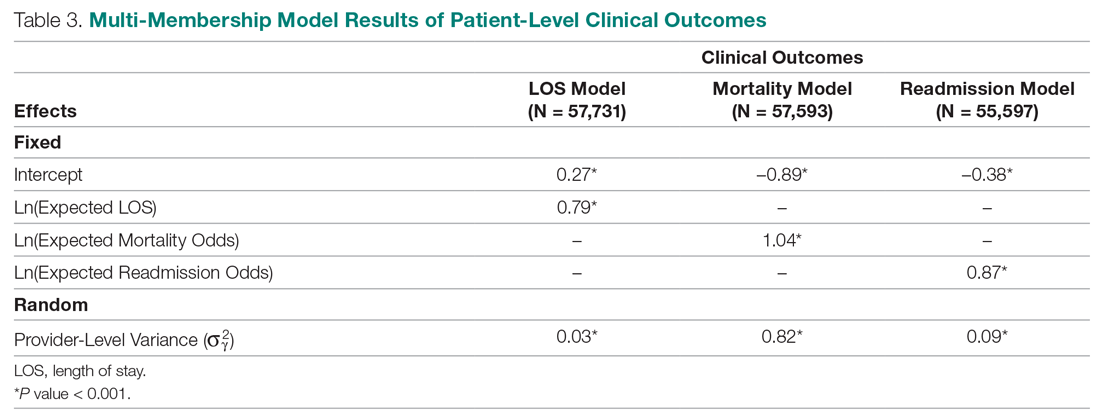

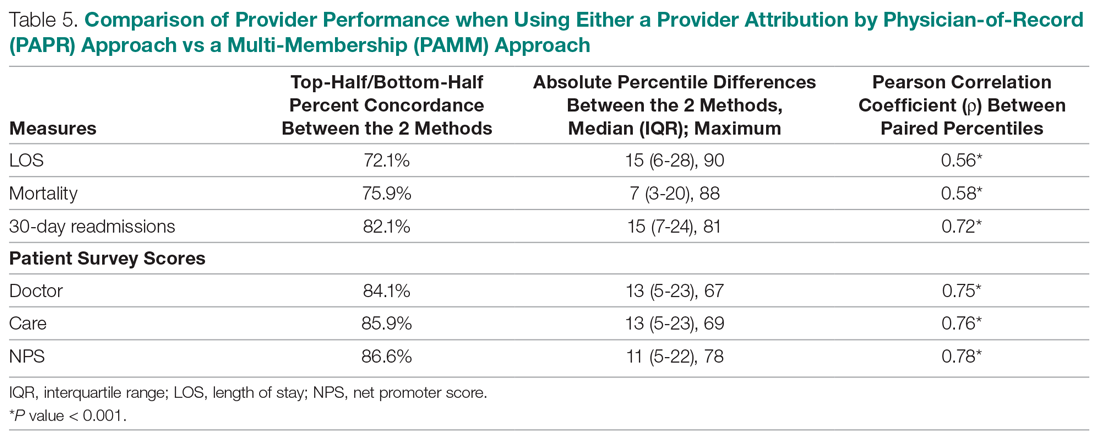

The interaction between procedural area and time (before, after) was examined. Of the 3 procedural areas, only interventional radiology had a significant difference before vs after, 7.5% vs 26.3%, respectively (P = .01). Model-adjusted percentages before vs after for cardiology were 75.6% vs 91.7% (P = .12) and for gastroenterology were 46% vs 53.5% (P = .40) (Table). When all 3 procedural areas were combined, there was a significant improvement in the overall percentage of DNR acknowledgment postintervention from 38.6% to 61.1% (P = .01).

Discussion

With the LSTDI, DNR orders remain in place and are valid in the inpatient and outpatient setting until reversed by the patient. This creates new challenges for proceduralists. Before our intervention, only about one-third of proceduralists recognized DNR status before procedures. This low rate of preprocedural DNR acknowledgment is not unique to the VA. A pilot study assessing the rate of documentation of code status discussions in patients undergoing venting gastrostomy tube placement for malignant bowel obstruction showed documentation in only 22% of cases before the procedure.10 Another simulation-based study of anesthesiologists showed that only 57% of subjects addressed resuscitation before starting the procedure.11

Despite the low initial rates of DNR acknowledgment, our intervention successfully improved these rates, although with variation between procedural areas. Prior studies looking at improving adherence to guidelines have shown the benefit of physician education.12,13 Improving code status acknowledgment before an invasive procedure not only involves increasing awareness of a preexisting code status, but also developing a system to incorporate the documentation process efficiently into the procedural workflow and ensuring that providers are aware of the appropriate process. Although the largest improvement was in interventional radiology, many patients postintervention still did not have their DNR orders acknowledged. Confusion is created when the patient is cared for by a different HCP or when the resuscitation team is called during a cardiac arrest. Cardiopulmonary resuscitation may be started or withheld incorrectly if the patient’s most recent wishes for resuscitation are unclear.14

Outside of using education to raise awareness, other improvements could utilize informatics solutions, such as developing an alert on opening a patient chart if a DNR status exists (such as a pop-up screen) or adding code status as an item to a preprocedural checklist. Similar to our study, previous studies also have found that a systematic approach with guidelines and templates improved rates of documentation of code status and DNR decisions.15,16 A large proportion of the LST notes and procedures done on patients with a DNR in our study occurred in the inpatient setting without any involvement of the primary care provider in the discussion. Having an automated way to alert the primary care provider that a new LST note has been completed may be helpful in guiding future care. Future work could identify additional systematic methods to increase acknowledgment of DNR.

Limitations

Our single-center results may not be generalizable. Although the interaction between procedural area and time was tested, it is possible that improvement in DNR acknowledgment was attributable to secular trends and not the intervention. Other limitations included the decreased generalizability of a VA health care initiative and its unique electronic health record, incomplete attendance rates at our educational sessions, and a lack of patient-centered outcomes.

Conclusions

A templated addendum combined with targeted staff education improved the percentage of DNR acknowledgments before nonsurgical invasive procedures, an important step in establishing patient preferences for life-sustaining treatment in procedures with potential complications. Further research is needed to assess whether these improvements also lead to improved patient-centered outcomes.

Acknowledgments

The authors would like to acknowledge the invaluable help of Dr. Kathryn Rice and Dr. Anne Melzer for their guidance in the manuscript revision process.

1. Physician Orders for Life-Sustaining Treatment Paradigm. Honoring the wishes of those with serious illness and frailty. Accessed January 11, 2021.

2. Arepally A, Oechsle D, Kirkwood S, Savader S. Safety of conscious sedation in interventional radiology. Cardiovasc Intervent Radiol. 2001;24(3):185-190. doi:10.1007/s002700002549

3. Arrowsmith J, Gertsman B, Fleischer D, Benjamin S. Results from the American Society for Gastrointestinal Endoscopy/U.S. Food and Drug Administration collaborative study on complication rates and drug use during gastrointestinal endoscopy. Gastrointest Endosc. 1991;37(4):421-427. doi:10.1016/s0016-5107(91)70773-6

4. Burkle C, Swetz K, Armstrong M, Keegan M. Patient and doctor attitudes and beliefs concerning perioperative do not resuscitate orders: anesthesiologists’ growing compliance with patient autonomy and self-determination guidelines. BMC Anesthesiol. 2013;13:2. doi:10.1186/1471-2253-13-2

5. American College of Surgeons. Statement on advance directives by patients: “do not resuscitate” in the operating room. Published January 3, 2014. Accessed January 11, 2021. https://bulletin.facs.org/2014/01/statement-on-advance-directives-by-patients-do-not-resuscitate-in-the-operating-room

6. Association of periOperative Registered Nurses. AORN position statement on perioperative care of patients with do-not-resuscitate or allow-natural death orders. Reaffirmed February 2020. Accessed June 16, 2020. https://www.aorn.org/guidelines/clinical-resources/position-statements

7. Bastron DR. Ethical guidelines for the anesthesia care of patients with do-not-resuscitate orders or other directives that limit treatment. Published 1996. Accessed January 11, 2021. https://pubs.asahq.org/anesthesiology/article/85/5/1190/35862/Ethical-Concerns-in-Anesthetic-Care-for-Patients

8. Baxter L, Hancox J, King B, Powell A, Tolley T. Stop! Patients receiving CPR despite valid DNACPR documentation. Eur J Palliat Care. 2018;23(3):125-127.

9. Agency for Healthcare Research and Quality. Practice facilitation handbook, module 10: academic detailing as a quality improvement tool. Last reviewed May 2013. Accessed January 11, 2021. https://www.ahrq.gov/ncepcr/tools/pf-handbook/mod10.html

10. Urman R, Lilley E, Changala M, Lindvall C, Hepner D, Bader A. A pilot study to evaluate compliance with guidelines for preprocedural reconsideration of code status limitations. J Palliat Med. 2018;21(8):1152-1156. doi:10.1089/jpm.2017.0601

11. Waisel D, Simon R, Truog R, Baboolal H, Raemer D. Anesthesiologist management of perioperative do-not-resuscitate orders: a simulation-based experiment. Simul Healthc. 2009;4(2):70-76. doi:10.1097/SIH.0b013e31819e137b

12. Lozano P, Finkelstein J, Carey V, et al. A multisite randomized trial of the effects of physician education and organizational change in chronic-asthma care. Arch Pediatr Adolesc Med. 2004;158(9):875-883. doi:10.1001/archpedi.158.9.875

13. Brunström M, Ng N, Dahlström J, et al. Association of physician education and feedback on hypertension management with patient blood pressure and hypertension control. JAMA Netw Open. 2020;3(1):e1918625. doi:10.1001/jamanetworkopen.2019.18625

14. Wong J, Duane P, Ingraham N. A case series of patients who were do not resuscitate but underwent cardiopulmonary resuscitation. Resuscitation. 2020;146:145-146. doi:10.1016/j.resuscitation.2019.11.020

15. Mittelberger J, Lo B, Martin D, Uhlmann R. Impact of a procedure-specific do not resuscitate order form on documentation of do not resuscitate orders. Arch Intern Med. 1993;153(2):228-232.

16. Neubauer M, Taniguchi C, Hoverman J. Improving incidence of code status documentation through process and discipline. J Oncol Pract. 2015;11(2):e263-266. doi:10.1200/JOP.2014.001438

In January 2017, the US Department of Veterans Affairs (VA), led by the National Center of Ethics in Health Care, created the Life-Sustaining Treatment Decisions Initiative (LSTDI). The VA gradually implemented the LSTDI in its facilities nationwide. In a format similar to the standardized form of portable medical orders, provider orders for life-sustaining treatments (POLST), the initiative promotes discussions with veterans and encourages but does not require health care professionals (HCPs) to complete a template for documentation (life-sustaining treatment [LST] note) of a patient’s preferences.1 The HCP enters a code status into the electronic health record (EHR), creating a portable and durable note and order.

With a new durable code status, the HCPs performing these procedures (eg, colonoscopies, coronary catheterization, or percutaneous biopsies) need to acknowledge and can potentially rescind a do not resuscitate (DNR) order. Although the risk of cardiac arrest or intubation is low, all invasive procedures carry these risks to some degree.2,3 Some HCPs advocate the automatic discontinuation of DNR orders before any procedure, but multiple professional societies recommend that patients be included in these discussions to honor their wishes.4-7 Although no procedures at the VA require the suspension of a DNR status, it is important to establish which life-sustaining measures are acceptable to patients.

As part of the informed consent process, proceduralists (HCPs who perform a procedure) should discuss the option of temporary suspension of DNR in the periprocedural period and document the outcome of this discussion (eg, rescinded DNR, acknowledgment of continued DNR status). These discussions need to be documented clearly to ensure accurate communication with other HCPs, particularly those caring for the patient postprocedure. Without the documentation, the risk that the patient’s wishes will not be honored is high.8 Code status is usually addressed before intubation of general anesthesia; however, nonsurgical procedures have a lower likelihood of DNR acknowledgment.

This study aimed to examine and improve the rate of acknowledgment of DNR status before nonsurgical procedures. We hypothesized that the rate of DNR acknowledgment before nonsurgical invasive procedures is low; and the rate can be raised with an intervention designed to educate proceduralists and improve and simplify this documentation.9

Methods

This was a single center, before/after quasi-experimental study. The study was considered clinical operations and institutional review board approval was unnecessary.

A retrospective chart review was performed of patients who underwent an inpatient or outpatient, nonsurgical invasive procedure at the Minneapolis VA Medical Center in Minnesota. The preintervention period was defined as the first 6 months after implementation of the LSTDI between May 8, 2018 and October 31, 2018. The intervention was presented in December 2018 and January 2019. The postintervention period was from February 1, 2019 to April 30, 2019.

Patients who underwent a nonsurgical invasive procedure were reviewed in 3 procedural areas. These areas were chosen based on high patient volumes and the need for rapid patient turnover, including gastroenterology, cardiology, and interventional radiology. An invasive procedure was defined as any procedure requiring patient consent. Those patients who had a completed LST note and who had a DNR order were recorded.

The intervention was composed of 2 elements: (1) an addendum to the LST note, which temporarily suspended resuscitation orders (Figure). We developed the addendum based on templates and orders in use before LSTDI implementation. Physicians from the procedural areas reviewed the addendum and provided feedback and the facility chief-of-staff provided approval. Part 2 was an educational presentation to proceduralists in each procedural area. The presentation included a brief introduction to the LSTDI, where to find a life-sustaining treatment note, code status, the importance of addressing code status, and a description of the addendum. The proceduralists were advised to use the addendum only after discussion with the patient and obtaining verbal consent for DNR suspension. If the patient elected to remain DNR, proceduralists were encouraged to document the conversation acknowledging the DNR.

Outcomes

The primary outcome of the study was proceduralist acknowledgment of DNR status before nonsurgical invasive procedures. DNR status was considered acknowledged if the proceduralist provided any type of documentation.

Statistical Analysis

Model predicted percentages of DNR acknowledgment are reported from a logistic regression model with both procedural area, time (before vs after) and the interaction between these 2 variables in the model. The simple main effects comparing before vs after within the procedural area based on post hoc contrasts of the interaction term also are shown.

Results

During the first 6 months following LSTDI implementation (the preintervention phase), 5,362 invasive procedures were performed in gastroenterology, interventional radiology, and cardiology. A total of 211 procedures were performed on patients who had a prior LST note indicating DNR. Of those, 68 (32.2%) had documentation acknowledging their DNR status. The educational presentation was given to each of the 3 departments with about 75% faculty attendance in each department. After the intervention, 1,932 invasive procedures were performed, identifying 143 LST notes with a DNR status. Sixty-five (45.5%) had documentation of a discussion regarding their DNR status.

The interaction between procedural areas and time (before, after) was examined. Of the 3 procedural areas, only interventional radiology had significant differences before vs after, 7.5% vs 26.3%, respectively (P = .01). Model-adjusted percentages before vs after for cardiology were 75.6% vs 91.7% (P = .12) and for gastroenterology were 46% vs 53.5% (P = .40) (Table). When all 3 procedural areas were combined, there was a significant improvement in the overall percentage of DNR acknowledgment postintervention from 38.6% to 61.1.% (P = .01).

Discussion

With the LSTDI, DNR orders remain in place and are valid in the inpatient and outpatient setting until reversed by the patient. This creates new challenges for proceduralists. Before our intervention, only about one-third of proceduralists’ recognized DNR status before procedures. This low rate of preprocedural DNR acknowledgments is not unique to the VA. A pilot study assessing rate of documentation of code status discussions in patients undergoing venting gastrostomy tube for malignant bowel obstruction showed documentation in only 22% of cases before the procedure.10 Another simulation-based study of anesthesiologist showed only 57% of subjects addressed resuscitation before starting the procedure.11

In January 2017, the US Department of Veterans Affairs (VA), led by the National Center for Ethics in Health Care, created the Life-Sustaining Treatment Decisions Initiative (LSTDI). The VA gradually implemented the LSTDI in its facilities nationwide. In a format similar to provider orders for life-sustaining treatments (POLST), the standardized form of portable medical orders, the initiative promotes discussions with veterans and encourages, but does not require, health care professionals (HCPs) to complete a template for documentation (life-sustaining treatment [LST] note) of a patient’s preferences.1 The HCP enters a code status into the electronic health record (EHR), creating a portable and durable note and order.

With a new durable code status, HCPs performing invasive procedures (eg, colonoscopies, coronary catheterization, or percutaneous biopsies) need to acknowledge and can potentially rescind a do not resuscitate (DNR) order. Although the risk of cardiac arrest or intubation is low, all invasive procedures carry these risks to some degree.2,3 Some HCPs advocate the automatic discontinuation of DNR orders before any procedure, but multiple professional societies recommend that patients be included in these discussions to honor their wishes.4-7 Although no procedures at the VA require the suspension of a DNR status, it is important to establish which life-sustaining measures are acceptable to patients.

As part of the informed consent process, proceduralists (HCPs who perform a procedure) should discuss the option of temporary suspension of DNR in the periprocedural period and document the outcome of this discussion (eg, rescinded DNR, acknowledgment of continued DNR status). These discussions need to be documented clearly to ensure accurate communication with other HCPs, particularly those caring for the patient postprocedure. Without the documentation, the risk that the patient’s wishes will not be honored is high.8 Code status is usually addressed before intubation for general anesthesia; however, nonsurgical procedures have a lower likelihood of DNR acknowledgment.

This study aimed to examine and improve the rate of acknowledgment of DNR status before nonsurgical procedures. We hypothesized that the rate of DNR acknowledgment before nonsurgical invasive procedures is low and that the rate can be raised with an intervention designed to educate proceduralists and to improve and simplify this documentation.9

Methods

This was a single-center, before/after quasi-experimental study. The study was considered clinical operations, and institutional review board approval was unnecessary.

A retrospective chart review was performed of patients who underwent an inpatient or outpatient, nonsurgical invasive procedure at the Minneapolis VA Medical Center in Minnesota. The preintervention period was defined as the first 6 months after implementation of the LSTDI, from May 8, 2018, to October 31, 2018. The intervention was presented in December 2018 and January 2019. The postintervention period was from February 1, 2019, to April 30, 2019.

Patients who underwent a nonsurgical invasive procedure were reviewed in 3 procedural areas: gastroenterology, cardiology, and interventional radiology. These areas were chosen based on high patient volumes and the need for rapid patient turnover. An invasive procedure was defined as any procedure requiring patient consent. Those patients who had a completed LST note and who had a DNR order were recorded.

The intervention was composed of 2 elements. The first was an addendum to the LST note that temporarily suspended resuscitation orders (Figure). We developed the addendum based on templates and orders in use before LSTDI implementation. Physicians from the procedural areas reviewed the addendum and provided feedback, and the facility chief of staff provided approval. The second was an educational presentation to proceduralists in each procedural area. The presentation included a brief introduction to the LSTDI, where to find an LST note and the code status, the importance of addressing code status, and a description of the addendum. The proceduralists were advised to use the addendum only after discussion with the patient and obtaining verbal consent for DNR suspension. If the patient elected to remain DNR, proceduralists were encouraged to document the conversation acknowledging the DNR.

Outcomes

The primary outcome of the study was proceduralist acknowledgment of DNR status before nonsurgical invasive procedures. DNR status was considered acknowledged if the proceduralist provided any type of documentation.

Statistical Analysis

Model-predicted percentages of DNR acknowledgment are reported from a logistic regression model that included procedural area, time (before vs after), and the interaction between these 2 variables. The simple main effects comparing before vs after within each procedural area, based on post hoc contrasts of the interaction term, also are shown.
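For readers less familiar with interaction models, one way such a model could be written is sketched below. The indicator coding and the choice of gastroenterology as the reference area are assumptions made here for illustration; the text does not state how the variables were coded.

```latex
% Illustrative parameterization only; the coding scheme is assumed, not reported.
% T = 1 for the postintervention period, 0 for the preintervention period.
% A_card and A_IR are indicators for cardiology and interventional radiology
% (gastroenterology is the assumed reference area).
\[
\mathrm{logit}\,\Pr(\text{DNR acknowledged})
  = \beta_0 + \beta_1 A_{\mathrm{card}} + \beta_2 A_{\mathrm{IR}} + \beta_3 T
  + \beta_4 \,(A_{\mathrm{card}} \times T) + \beta_5 \,(A_{\mathrm{IR}} \times T)
\]
% Before-vs-after log odds ratio within each area (the simple main effects):
%   gastroenterology:          \beta_3
%   cardiology:                \beta_3 + \beta_4
%   interventional radiology:  \beta_3 + \beta_5
```

Under this coding, the interaction coefficients capture how much the before-vs-after change in each area differs from the change in the reference area.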

Results

During the first 6 months following LSTDI implementation (the preintervention phase), 5,362 invasive procedures were performed in gastroenterology, interventional radiology, and cardiology. A total of 211 procedures were performed on patients who had a prior LST note indicating DNR. Of those, 68 (32.2%) had documentation acknowledging their DNR status. The educational presentation was given to each of the 3 departments with about 75% faculty attendance in each department. After the intervention, 1,932 invasive procedures were performed, of which 143 were performed on patients with an LST note indicating DNR status. Of those, 65 (45.5%) had documentation of a discussion regarding their DNR status.
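The unadjusted percentages in this paragraph can be reproduced directly from the reported counts. The short Python sketch below does so and also computes a crude odds ratio. The odds ratio is an illustration added here, not a result reported by the authors, and the function names are hypothetical. These raw figures are distinct from the model-adjusted percentages reported for the combined procedural areas.

```python
def acknowledgment_rate(acknowledged: int, total: int) -> float:
    """Percentage of DNR procedures with documented acknowledgment."""
    return 100.0 * acknowledged / total


def unadjusted_odds_ratio(ack_post: int, n_post: int,
                          ack_pre: int, n_pre: int) -> float:
    """Crude post-vs-pre odds ratio; ignores procedural area (illustrative only)."""
    odds_post = ack_post / (n_post - ack_post)
    odds_pre = ack_pre / (n_pre - ack_pre)
    return odds_post / odds_pre


# Counts taken from the text: (acknowledged, procedures on patients with DNR)
PRE = (68, 211)
POST = (65, 143)

pre_rate = acknowledgment_rate(*PRE)
post_rate = acknowledgment_rate(*POST)

print(f"Preintervention acknowledgment:  {pre_rate:.1f}%")   # 32.2%
print(f"Postintervention acknowledgment: {post_rate:.1f}%")  # 45.5%
print(f"Unadjusted odds ratio: {unadjusted_odds_ratio(*POST, *PRE):.2f}")
```

Because this calculation pools all 3 procedural areas, it does not account for differences in case mix between periods, which is what the interaction model is intended to address.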

The interaction between procedural areas and time (before, after) was examined. Of the 3 procedural areas, only interventional radiology had significant differences before vs after, 7.5% vs 26.3%, respectively (P = .01). Model-adjusted percentages before vs after for cardiology were 75.6% vs 91.7% (P = .12) and for gastroenterology were 46% vs 53.5% (P = .40) (Table). When all 3 procedural areas were combined, there was a significant improvement in the overall percentage of DNR acknowledgment postintervention from 38.6% to 61.1% (P = .01).

Discussion

With the LSTDI, DNR orders remain in place and are valid in the inpatient and outpatient setting until reversed by the patient. This creates new challenges for proceduralists. Before our intervention, proceduralists acknowledged DNR status before only about one-third of procedures. This low rate of preprocedural DNR acknowledgment is not unique to the VA. A pilot study assessing the rate of documentation of code status discussions in patients undergoing venting gastrostomy tube placement for malignant bowel obstruction showed documentation in only 22% of cases before the procedure.10 Another simulation-based study of anesthesiologists showed only 57% of subjects addressed resuscitation before starting the procedure.11

Despite the low initial rates of DNR acknowledgment, our intervention successfully improved these rates, although with variation between procedural areas. Prior studies looking at improving adherence to guidelines have shown the benefit of physician education.12,13 Improving code status acknowledgment before an invasive procedure not only involves increasing awareness of a preexisting code status, but also developing a system to incorporate the documentation process efficiently into the procedural workflow and ensuring that providers are aware of the appropriate process. Although the largest improvement was in interventional radiology, many patients postintervention still did not have their DNR orders acknowledged. Confusion is created when the patient is cared for by a different HCP or when the resuscitation team is called during a cardiac arrest. Cardiopulmonary resuscitation may be started or withheld incorrectly if the patient’s most recent wishes for resuscitation are unclear.14

Outside of using education to raise awareness, other improvements could utilize informatics solutions, such as developing an alert on opening a patient chart if a DNR status exists (such as a pop-up screen) or adding code status as an item to a preprocedural checklist. Similar to our study, previous studies also have found that a systematic approach with guidelines and templates improved rates of documentation of code status and DNR decisions.15,16 A large proportion of the LST notes and procedures done on patients with a DNR in our study occurred in the inpatient setting without any involvement of the primary care provider in the discussion. Having an automated way to alert the primary care provider that a new LST note has been completed may be helpful in guiding future care. Future work could identify additional systematic methods to increase acknowledgment of DNR.

Limitations

Our single-center results may not be generalizable. Although the interaction between procedural area and time was tested, it is possible that improvement in DNR acknowledgment was attributable to secular trends and not the intervention. Other limitations included the decreased generalizability of a VA health care initiative and its unique electronic health record, incomplete attendance rates at our educational sessions, and a lack of patient-centered outcomes.

Conclusions

A templated addendum combined with targeted staff education improved the percentage of DNR acknowledgments before nonsurgical invasive procedures, an important step in establishing patient preferences for life-sustaining treatment in procedures with potential complications. Further research is needed to assess whether these improvements also lead to improved patient-centered outcomes.

Acknowledgments

The authors would like to acknowledge the invaluable help of Dr. Kathryn Rice and Dr. Anne Melzer for their guidance in the manuscript revision process.

1. Physician Orders for Life-Sustaining Treatment Paradigm. Honoring the wishes of those with serious illness and frailty. Accessed January 11, 2021.

2. Arepally A, Oechsle D, Kirkwood S, Savader S. Safety of conscious sedation in interventional radiology. Cardiovasc Intervent Radiol. 2001;24(3):185-190. doi:10.1007/s002700002549

3. Arrowsmith J, Gertsman B, Fleischer D, Benjamin S. Results from the American Society for Gastrointestinal Endoscopy/U.S. Food and Drug Administration collaborative study on complication rates and drug use during gastrointestinal endoscopy. Gastrointest Endosc. 1991;37(4):421-427. doi:10.1016/s0016-5107(91)70773-6

4. Burkle C, Swetz K, Armstrong M, Keegan M. Patient and doctor attitudes and beliefs concerning perioperative do not resuscitate orders: anesthesiologists’ growing compliance with patient autonomy and self-determination guidelines. BMC Anesthesiol. 2013;13:2. doi:10.1186/1471-2253-13-2

5. American College of Surgeons. Statement on advance directives by patients: “do not resuscitate” in the operating room. Published January 3, 2014. Accessed January 11, 2021. https://bulletin.facs.org/2014/01/statement-on-advance-directives-by-patients-do-not-resuscitate-in-the-operating-room

6. Association of periOperative Registered Nurses. AORN position statement on perioperative care of patients with do-not-resuscitate or allow-natural death orders. Reaffirmed February 2020. Accessed June 16, 2020. https://www.aorn.org/guidelines/clinical-resources/position-statements

7. Bastron DR. Ethical guidelines for the anesthesia care of patients with do-not-resuscitate orders or other directives that limit treatment. Published 1996. Accessed January 11, 2021. https://pubs.asahq.org/anesthesiology/article/85/5/1190/35862/Ethical-Concerns-in-Anesthetic-Care-for-Patients

8. Baxter L, Hancox J, King B, Powell A, Tolley T. Stop! Patients receiving CPR despite valid DNACPR documentation. Eur J Palliat Care. 2018;23(3):125-127.

9. Agency for Healthcare Research and Quality. Practice facilitation handbook, module 10: academic detailing as a quality improvement tool. Last reviewed May 2013. Accessed January 11, 2021. https://www.ahrq.gov/ncepcr/tools/pf-handbook/mod10.html

10. Urman R, Lilley E, Changala M, Lindvall C, Hepner D, Bader A. A pilot study to evaluate compliance with guidelines for preprocedural reconsideration of code status limitations. J Palliat Med. 2018;21(8):1152-1156. doi:10.1089/jpm.2017.0601

11. Waisel D, Simon R, Truog R, Baboolal H, Raemer D. Anesthesiologist management of perioperative do-not-resuscitate orders: a simulation-based experiment. Simul Healthc. 2009;4(2):70-76. doi:10.1097/SIH.0b013e31819e137b

12. Lozano P, Finkelstein J, Carey V, et al. A multisite randomized trial of the effects of physician education and organizational change in chronic-asthma care. Arch Pediatr Adolesc Med. 2004;158(9):875-883. doi:10.1001/archpedi.158.9.875

13. Brunström M, Ng N, Dahlström J, et al. Association of physician education and feedback on hypertension management with patient blood pressure and hypertension control. JAMA Netw Open. 2020;3(1):e1918625. doi:10.1001/jamanetworkopen.2019.18625

14. Wong J, Duane P, Ingraham N. A case series of patients who were do not resuscitate but underwent cardiopulmonary resuscitation. Resuscitation. 2020;146:145-146. doi:10.1016/j.resuscitation.2019.11.020

15. Mittelberger J, Lo B, Martin D, Uhlmann R. Impact of a procedure-specific do not resuscitate order form on documentation of do not resuscitate orders. Arch Intern Med. 1993;153(2):228-232.

16. Neubauer M, Taniguchi C, Hoverman J. Improving incidence of code status documentation through process and discipline. J Oncol Pract. 2015;11(2):e263-e266. doi:10.1200/JOP.2014.001438
