
Risk of Intestinal Necrosis With Sodium Polystyrene Sulfonate: A Systematic Review and Meta-analysis

Sodium polystyrene sulfonate (SPS) was first approved in the United States in 1958 and is a commonly prescribed medication for hyperkalemia.1 SPS works by exchanging potassium for sodium in the colonic lumen, thereby promoting potassium loss in the stool. However, reports of severe gastrointestinal side effects, particularly intestinal necrosis, have persisted since the 1970s,2 leading some authors to recommend against the use of SPS.3,4 In 2009, the US Food and Drug Administration (FDA) warned against concomitant administration of sorbitol, which had been implicated in some studies.4,5 Concern about gastrointestinal side effects has also led to the development and FDA approval of two new cation-exchange resins for the treatment of hyperkalemia.6 A prior systematic review of the literature found 30 separate case reports or case series including a total of 58 patients who were treated with SPS and developed severe gastrointestinal side effects.7 Because the included studies were all case reports or case series and therefore lacked comparison groups, it could not be determined whether SPS had a causal role in these side effects, and the authors could conclude only that there was a "possible" association. In contrast to case reports, several large cohort studies published more recently report the risk of severe gastrointestinal adverse events associated with SPS compared with controls.8-10 While some of these studies found an increased risk, others did not. Given this uncertainty, we undertook a systematic review of studies that report the incidence of severe gastrointestinal side effects with SPS compared with controls.

METHODS

Data Sources and Search Strategy

A systematic search of the literature was conducted by a medical librarian using the Cochrane Library, Embase, Medline, Google Scholar, PubMed, Scopus, and Web of Science Core Collection databases to find relevant articles published from database inception to October 4, 2020. The search was peer reviewed by a second medical librarian using the Peer Review of Electronic Search Strategies (PRESS) guideline.11 Databases were searched using a combination of controlled vocabulary and free-text terms for "SPS" and "bowel necrosis." Details of the full search strategy are listed in Appendix A. References from all databases were imported into an EndNote X9 library, duplicates were removed, and the remaining references were uploaded into Covidence.

Data Extraction and Quality Assessment

We used a standardized form to extract data, including author, year, country, study design, setting, number of patients, SPS formulation, dosing, exposure, sorbitol content, outcomes of intestinal necrosis and the composite of severe gastrointestinal adverse events, and the time from SPS exposure to outcome occurrence. Two reviewers (JLH and AER) independently assessed the methodological quality of included studies using the Risk of Bias in Non-randomized Studies of Interventions (ROBINS-I) tool for observational studies13 and the Revised Cochrane risk of bias (RoB 2) tool for randomized controlled trials (RCTs).14 Additionally, two reviewers (JLH and CGG) graded the overall strength of evidence using the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system.15 Disagreements were resolved by consensus.

Data Synthesis and Analysis

The proportion of patients with intestinal necrosis in the SPS and control groups was compared using random effects meta-analysis with the restricted maximum likelihood method.16 For the two studies that reported hazard ratios (HRs), meta-analysis was performed after log transformation of the HRs and their CIs. One study that performed survival analysis presented data both for the full study duration (up to 11 years) and for up to 1 year after exposure.9 We used the data up to 1 year after exposure because we believed later events were more likely to be due to chance than to SPS exposure. For studies with zero events, we used the treatment-arm continuity correction, which has been reported to be preferable to the standard fixed continuity correction.17 We also performed two sensitivity analyses: one omitting the studies with zero events and one using risk difference as the effect measure. The prevalence of intestinal ischemia among patients treated with SPS was pooled using the DerSimonian and Laird18 random effects model with the Freeman-Tukey19 double arcsine transformation. Heterogeneity was estimated using the I² statistic; I² values of 25%, 50%, and 75% were considered low, moderate, and high heterogeneity, respectively.20 Meta-regression and tests for small-study effects were not performed because of the small number of included studies.21 In addition to the random effects meta-analysis, we calculated the 90% prediction interval for the pooled effect of intestinal ischemia in future studies.22 Statistical analyses were performed using the meta and metaprop commands in Stata/IC, version 16.1 (StataCorp).
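To make the input side of these analyses concrete, the Python sketch below shows one way to turn each study's data into a log-scale effect and variance for inverse-variance pooling: a log OR from 2×2 counts, with a treatment-arm continuity correction applied when any cell is zero, and a log HR recovered from a reported HR and 95% CI. It assumes the formulation of Sweeting et al17 in which the two arm-specific corrections are proportional to the reciprocal of the opposite arm's size and sum to 1, and it assumes reported CIs were symmetric on the log scale. The function names and example counts are hypothetical; this is not the Stata code used for the analyses.

```python
import math

Z975 = 1.959963984540054  # standard normal quantile for a two-sided 95% CI


def log_or_tacc(a, n_t, c, n_c, total=1.0):
    """Log odds ratio and its variance for one study's 2x2 table.

    a of n_t patients had the event in the SPS arm; c of n_c in the control arm.
    If any cell is zero, a treatment-arm continuity correction is added:
    each arm's correction is proportional to the reciprocal of the OPPOSITE
    arm's size, and the two corrections sum to `total` (assumed 1, which
    reduces to the familiar 0.5 per cell when the arms are equal in size).
    """
    b, d = n_t - a, n_c - c  # non-events in each arm
    if 0 in (a, b, c, d):
        r = n_c / n_t                # control-to-SPS arm size ratio
        k_t = total / (r + 1)        # added to both SPS-arm cells
        k_c = total * r / (r + 1)    # added to both control-arm cells
        a, b = a + k_t, b + k_t
        c, d = c + k_c, d + k_c
    log_or = math.log((a * d) / (b * c))
    var = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, var


def log_hr_from_ci(hr, lower, upper, z=Z975):
    """Log HR and its variance recovered from a reported HR and 95% CI.

    Assumes the CI was symmetric on the log scale, so the standard error
    is the width of the log-scale CI divided by 2 * z.
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return math.log(hr), se ** 2


# Hypothetical inputs, for illustration only
print(log_or_tacc(0, 100, 0, 300))  # double-zero study with unequal arms
print(log_hr_from_ci(2.00, 0.45, 8.78))
```

Under this correction, a study with no events in either arm contributes an OR of exactly 1 regardless of arm sizes, and an HR of 2.00 with a 95% CI of 0.45 to 8.78 corresponds to a log-scale standard error of about 0.76.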

RESULTS

Selected Studies

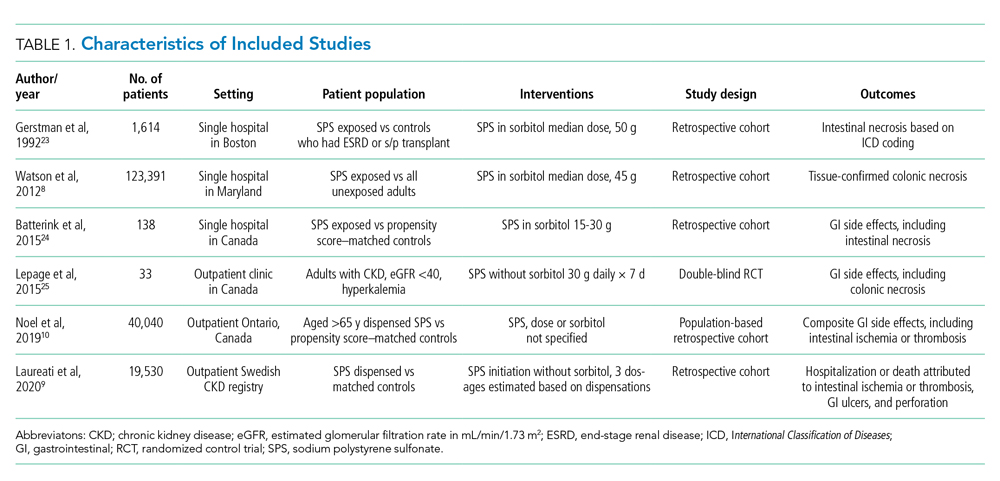

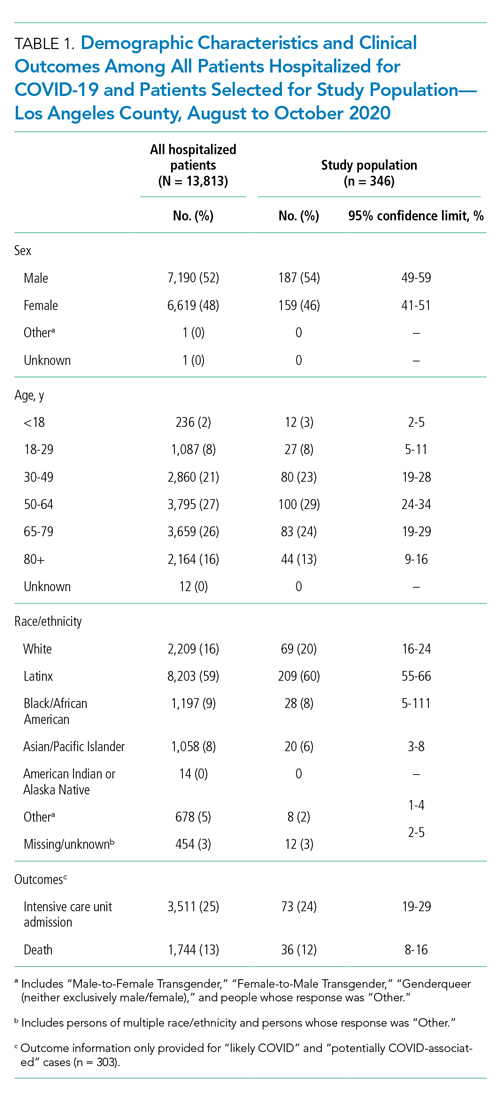

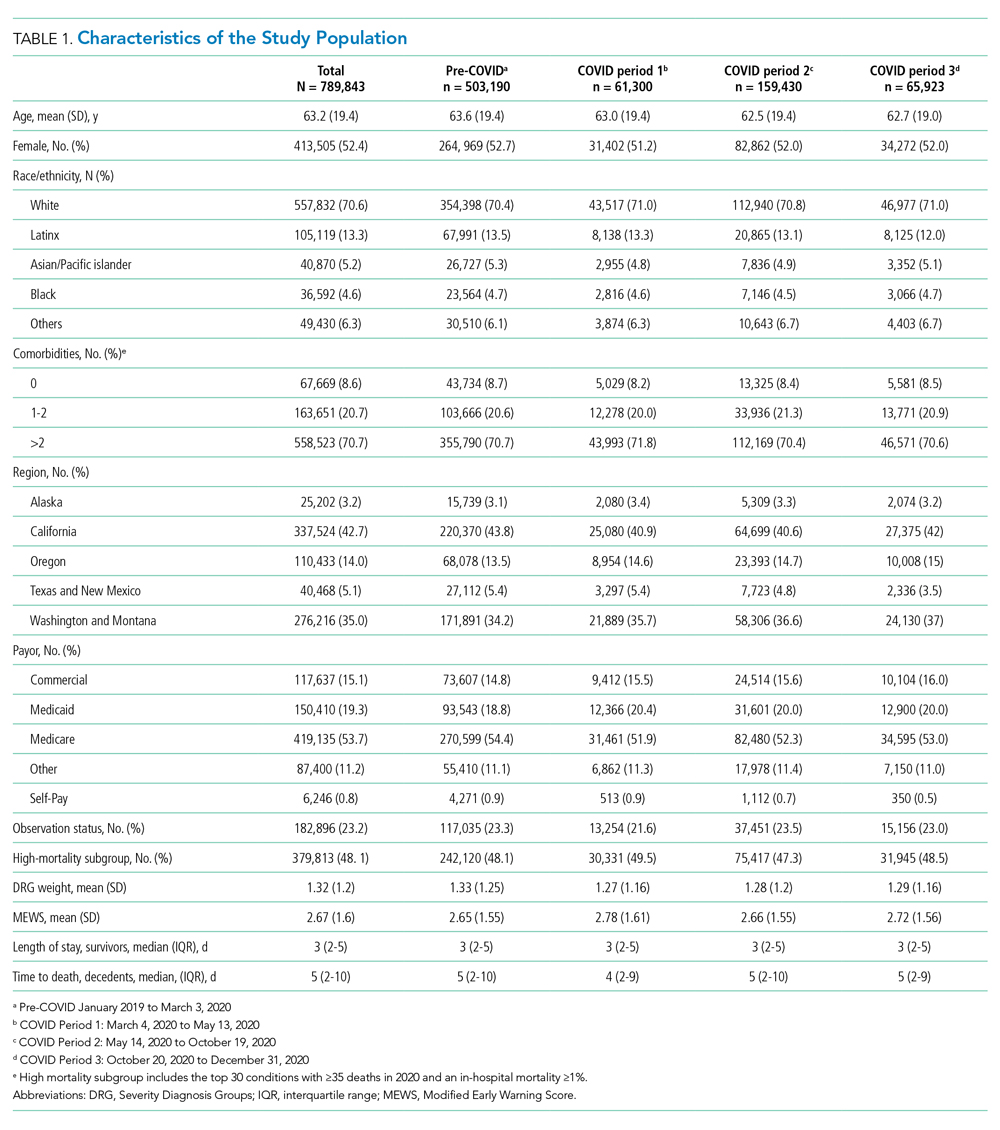

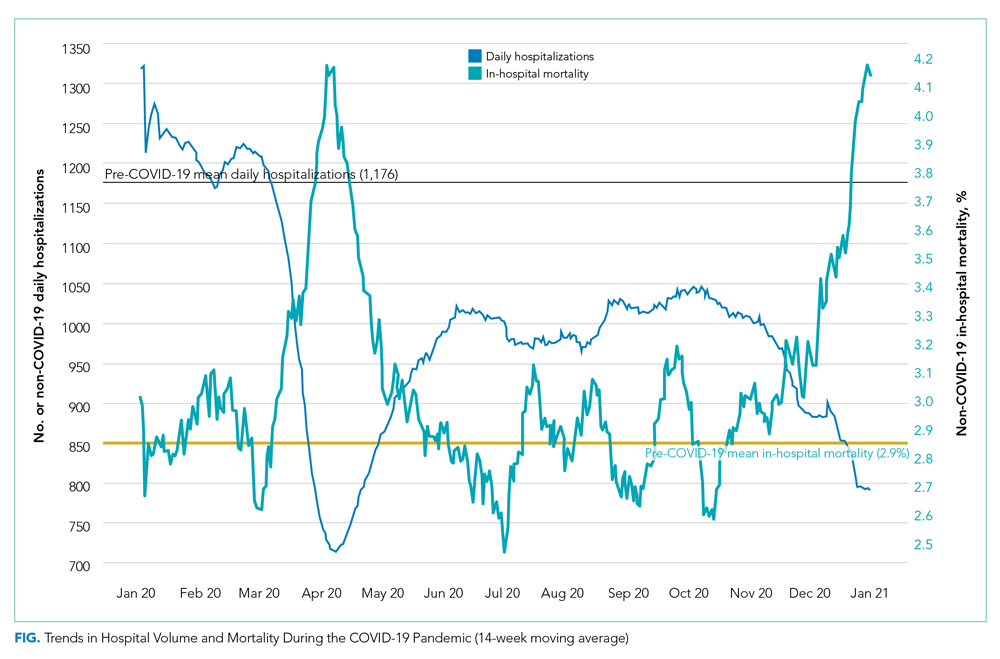

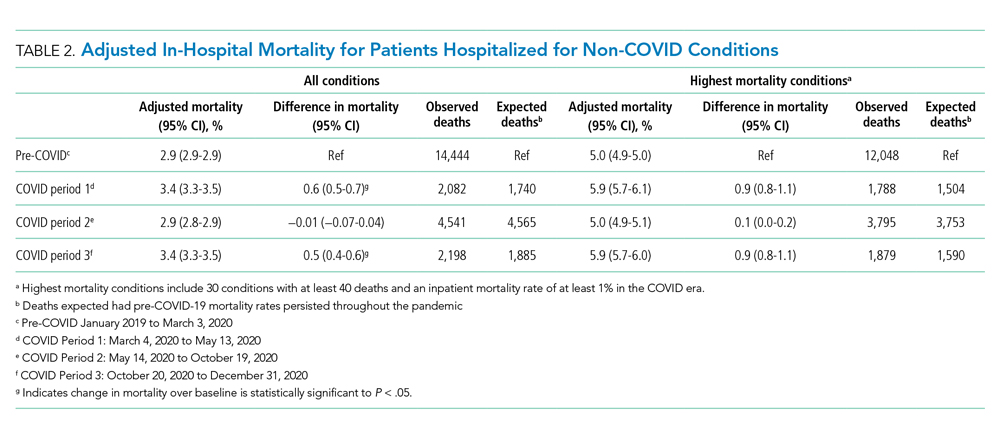

The electronic search yielded 806 unique articles, of which 791 were excluded based on title and abstract, leaving 15 articles for full-text review (Appendix B). Appendix C describes the nine studies that were excluded, including the reason for exclusion. Table 1 describes the characteristics of the six studies that met inclusion criteria. Studies were published between 1992 and 2020. Three studies were from Canada,10,24,25 two from the United States,8,23 and one from Sweden.9 Three studies were conducted in an outpatient setting,9,10,25 and three were described as inpatient studies.8,23,24 SPS preparations included sorbitol in three studies,8,23,24 were not specified in one study,10 and did not include sorbitol in two studies.9,25 SPS dosing varied widely, with median doses of 15 to 30 g in three studies,9,24,25 45 to 50 g in two studies,8,23 and unspecified in one study.10 Duration of exposure typically ranged from 1 to 7 days but was not consistently described. For example, two of the studies did not report duration of exposure,8,10 and a third study reported a single dispensation of 450 g in 41% of patients, with the remaining 59% averaging three dispensations within the first year.9 Sample size ranged from 33 to 123,391 patients. Most patients were male, and mean ages ranged from 44 to 78 years. Two studies limited participation to patients with chronic kidney disease (CKD), defined as a glomerular filtration rate (GFR) below 40 in one study24 and as CKD stage 4 or 5 or dialysis in the other.9 Two studies specifically limited participation to patients with potassium levels of 5.0 to 5.9 mmol/L.24,25 All six studies reported outcomes for intestinal necrosis, and four reported composite outcomes for major adverse gastrointestinal events.9,10,24,25

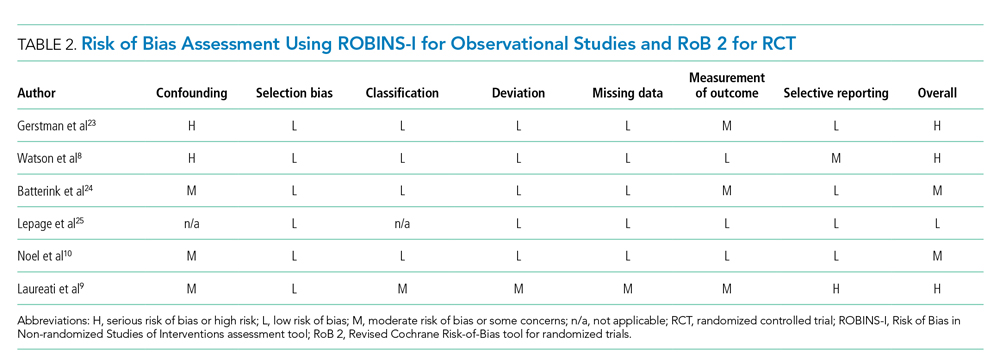

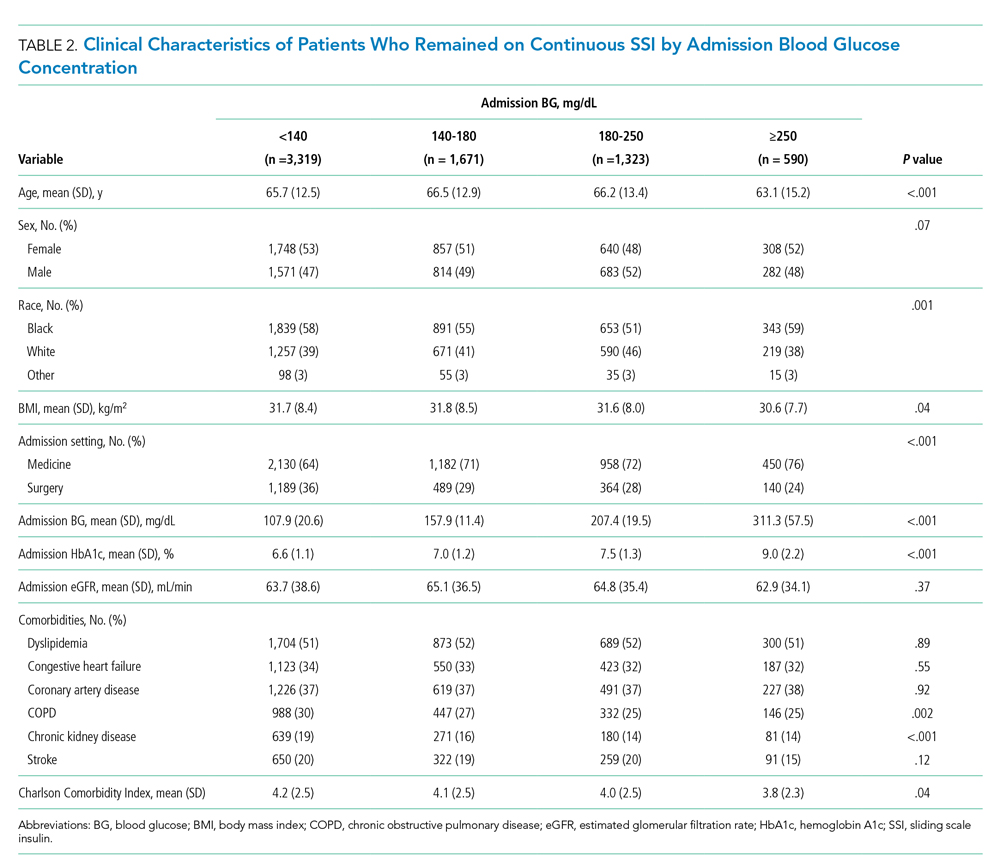

Table 2 describes the assessment of risk of bias using the ROBINS-I tool for the five retrospective observational studies and the RoB 2 tool for the one RCT.13,14 Three studies were rated as having a serious risk of bias, and the remainder had a moderate risk of bias or some concerns. Two studies were judged to have a serious risk of bias because of potential confounding.8,23 To be judged at low or moderate risk, studies needed to measure and control for potential risk factors for intestinal ischemia, such as age, diabetes, vascular disease, and heart failure.26,27 One study had a serious risk of bias for selective reporting because the published abstract of the study used a different analysis and reported results that contradicted those of the full publication.9,28 An additional source of bias that did not fit the ROBINS-I domains is that the two studies that used survival analysis chose outcome windows longer than the period in which adverse events from SPS would be expected to become evident: one study used 30 days and the other up to a maximum of 11 years from the time of exposure.9,10

Quantitative Outcomes

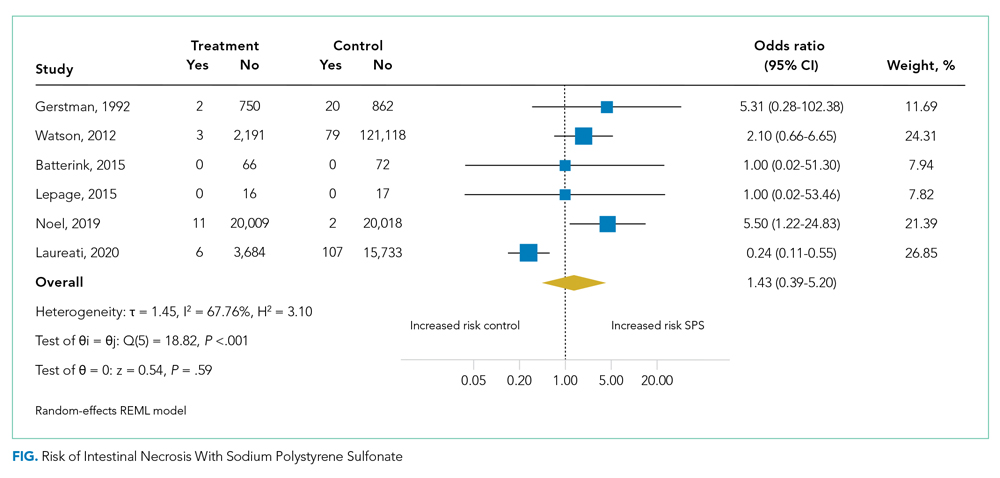

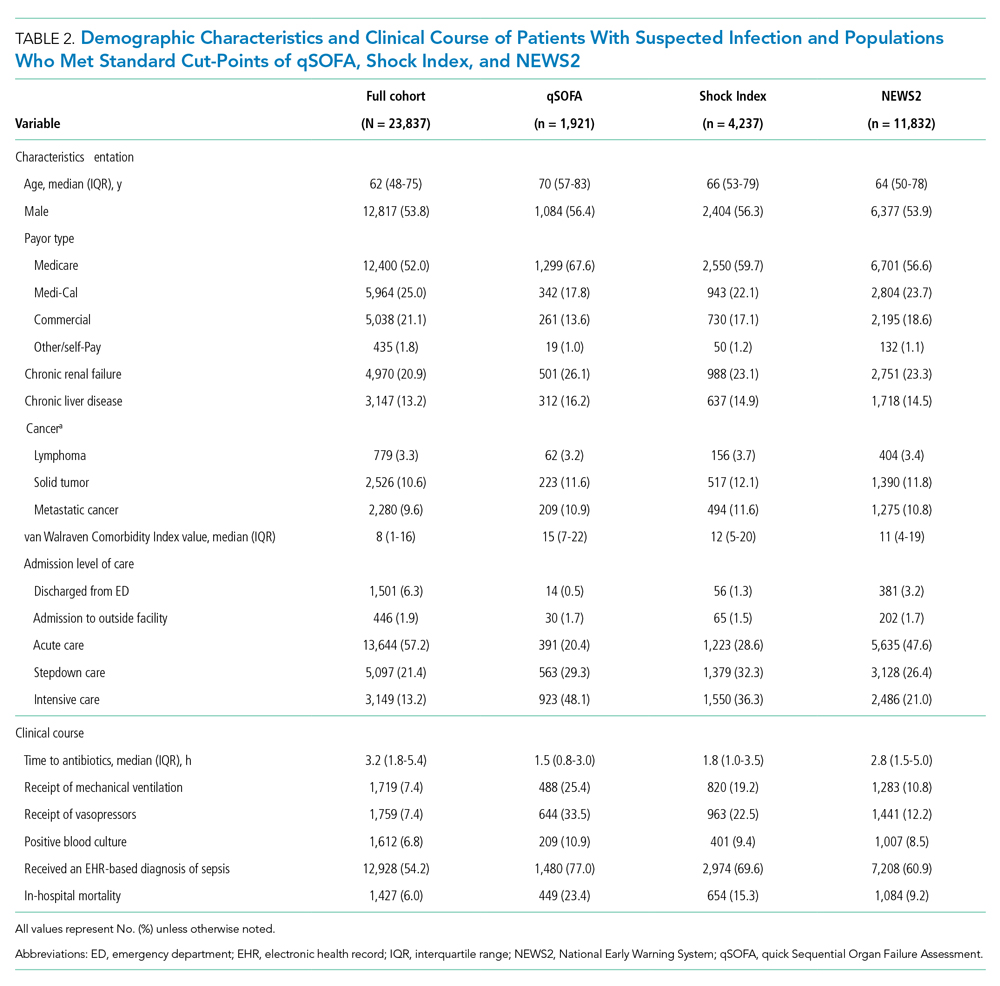

Six studies, including 26,716 patients treated with SPS as well as controls, reported the proportion of patients who developed intestinal necrosis. The Figure shows the individual study and pooled results for intestinal necrosis. The pooled prevalence of intestinal ischemia in patients treated with SPS was 0.1% (95% CI, 0.03%-0.17%). The pooled odds ratio (OR) of intestinal necrosis was 1.43 (95% CI, 0.39-5.20), and the 90% prediction interval for future studies was 0.08 to 26.6. Two studies reported rates of intestinal necrosis using survival analysis; the pooled HR from these studies was 2.00 (95% CI, 0.45-8.78). Two studies performed survival analysis for a composite outcome of severe gastrointestinal adverse events; the pooled HR for these two studies was 1.46 (95% CI, 1.01-2.11).
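The pooled prevalence above relies on the Freeman-Tukey double arcsine transformation, which stabilizes the variance of proportions close to 0%. The Python sketch below illustrates that pipeline under stated assumptions: DerSimonian-Laird pooling on the transformed scale and back-transformation with Miller's (1978) inverse using the harmonic mean sample size, a common choice in meta-analysis software. The function names and event counts are hypothetical, so the output is not the estimate reported here.

```python
import math


def ft_transform(x, n):
    """Freeman-Tukey double arcsine of x events in n patients, with its variance."""
    t = math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)


def ft_back(t, n):
    """Miller's inverse of the double arcsine; n is typically the harmonic mean size."""
    t_min = math.asin(math.sqrt(1 / (n + 1)))                # value for 0 events
    t_max = math.asin(math.sqrt(n / (n + 1))) + math.pi / 2  # value for n events
    if t <= t_min:
        return 0.0
    if t >= t_max:
        return 1.0
    s = math.sin(t)
    radicand = max(0.0, 1 - (s + (s - 1 / s) / n) ** 2)
    return 0.5 * (1 - math.copysign(1.0, math.cos(t)) * math.sqrt(radicand))


def dl_pool(y, v):
    """DerSimonian-Laird random-effects mean and its variance."""
    w = [1 / vi for vi in v]
    sw = sum(w)
    y_fe = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - y_fe) ** 2 for wi, yi in zip(w, y))
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (vi + tau2) for vi in v]
    return sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re), 1 / sum(w_re)


def pooled_prevalence(events, totals, z=1.959963984540054):
    """Pooled proportion with 95% CI, back-transformed to the 0-1 scale."""
    y, v = zip(*(ft_transform(x, n) for x, n in zip(events, totals)))
    mu, var = dl_pool(list(y), list(v))
    n_harm = len(totals) / sum(1 / n for n in totals)  # harmonic mean size
    half = z * math.sqrt(var)
    return tuple(ft_back(t, n_harm) for t in (mu, mu - half, mu + half))


# Hypothetical counts of intestinal necrosis among SPS-treated patients
print(pooled_prevalence([2, 0, 0, 5], [120, 40, 35, 2500]))
```

Working on the transformed scale keeps zero-event studies in the analysis without a continuity correction, and the back-transformation returns an estimate and CI bounded by 0 and 1.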

For the meta-analysis of intestinal necrosis, we found moderate-to-high statistical heterogeneity (Q = 18.82; P < .01; I² = 67.8%). In sensitivity analyses removing one study at a time, heterogeneity was unaffected except when the study by Laureati et al9 was removed, which resolved it (Q = 1.7; P = .8; I² = 0%). The pooled effect for intestinal necrosis also became statistically significant after removing Laureati et al (OR, 2.87; 95% CI, 1.24-6.63).9 We also performed two additional analyses: a subgroup analysis comparing studies that involved the concomitant use of sorbitol8,23,24 with studies that did not,9,25 and an analysis omitting studies with zero events. Studies that included sorbitol found higher rates of intestinal necrosis (OR, 2.26; 95% CI, 0.80-6.38; I² = 0%) than studies that did not include sorbitol (OR, 0.25; 95% CI, 0.11-0.57; I² = 0%; test of group difference, P < .01). Removing the three studies with zero events resulted in a similar overall effect (OR, 1.30; 95% CI, 0.21-8.19). Finally, a meta-analysis using risk difference instead of ORs found a non–statistically significant difference in the rate of intestinal necrosis favoring the control group (risk difference, −0.00033; 95% CI, −0.0022 to 0.0015; I² = 84.6%).
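The heterogeneity statistics, prediction interval, and leave-one-out check described above can be sketched in Python as follows. This minimal sketch assumes DerSimonian-Laird estimation of the between-study variance (τ²), a Q-based I², and the Higgins et al22 prediction interval with k − 2 degrees of freedom. The OR analysis reported here used restricted maximum likelihood, whose τ² (and any I² derived from it) can differ, so the sketch will not reproduce the reported values. The caller supplies the t critical value (about 2.132 for a 90% interval with six studies), and the example log ORs and variances are hypothetical.

```python
import math


def random_effects(y, v):
    """DerSimonian-Laird random-effects summary of log effects y with variances v."""
    k = len(y)
    w = [1 / vi for vi in v]                                # inverse-variance weights
    sw = sum(w)
    y_fe = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - y_fe) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0           # share of variation beyond chance
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    return {"mu": mu, "se": math.sqrt(1 / sum(w_re)), "tau2": tau2, "q": q, "i2": i2}


def prediction_interval(res, t_crit):
    """Interval for the effect in a new study (log scale); t_crit uses k - 2 df."""
    half = t_crit * math.sqrt(res["tau2"] + res["se"] ** 2)
    return res["mu"] - half, res["mu"] + half


def leave_one_out(y, v):
    """Re-pool omitting each study in turn; returns (index, pooled OR, I2)."""
    results = []
    for i in range(len(y)):
        res = random_effects(y[:i] + y[i + 1:], v[:i] + v[i + 1:])
        results.append((i, math.exp(res["mu"]), res["i2"]))
    return results


# Hypothetical log ORs and variances for six studies
log_or = [0.9, 1.2, 0.0, 0.7, -1.4, 0.5]
var = [0.40, 0.55, 2.60, 0.30, 0.10, 1.90]
res = random_effects(log_or, var)
lo, hi = prediction_interval(res, t_crit=2.132)  # approx. t(0.95, df = 4)
print(f"OR {math.exp(res['mu']):.2f}, I2 {100 * res['i2']:.1f}%, "
      f"90% PI {math.exp(lo):.2f}-{math.exp(hi):.2f}")
for idx, pooled_or, i2 in leave_one_out(log_or, var):
    print(idx, round(pooled_or, 2), round(100 * i2, 1))
```

Because the prediction interval adds τ² to the squared standard error of the pooled estimate, it is always at least as wide as the CI, which is why a pooled OR with a moderately wide CI can still have a very wide prediction interval.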

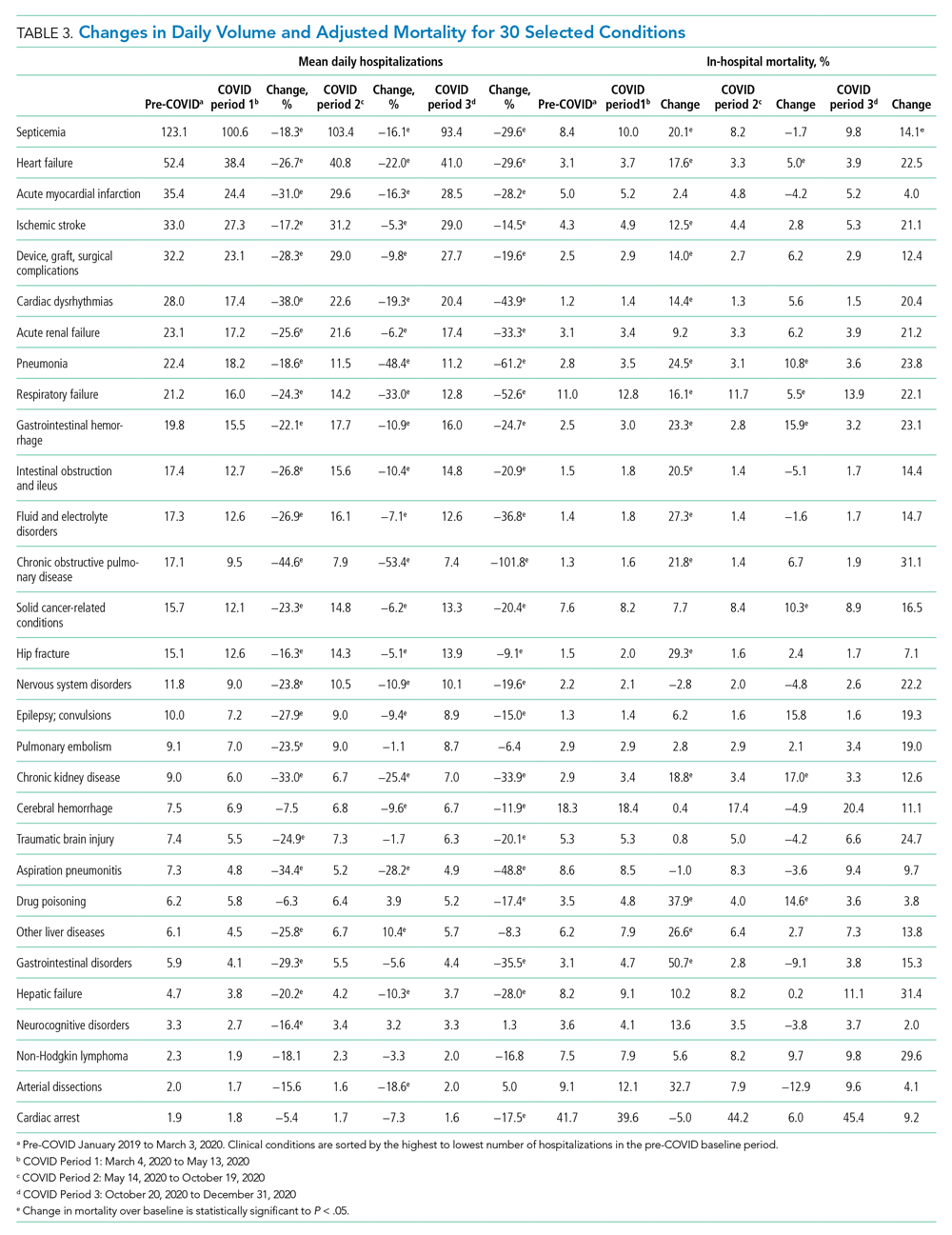

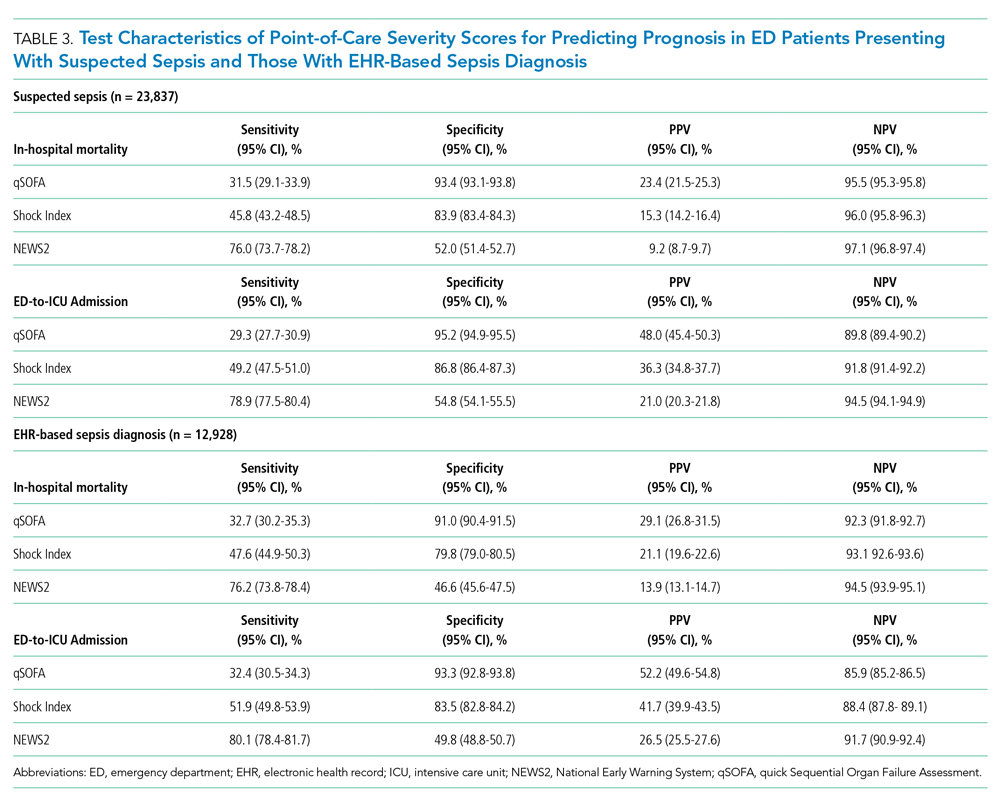

Table 3 summarizes our review findings and presents the overall strength of evidence, which was very low. Per GRADE criteria,15,29 strength of evidence for observational studies starts at low and may then be modified by risk of bias, inconsistency, indirectness, imprecision, effect size, and direction of confounding. For each of the three meta-analyses in the present study, studies at serious risk of bias contributed more than half of the total weight. Strength of evidence was also downgraded for imprecision because of the low number of events and the resulting wide CIs.

DISCUSSION

In total, we found six studies that reported rates of intestinal necrosis or severe gastrointestinal adverse events with SPS use compared with controls. The pooled rate of intestinal necrosis was not significantly higher for patients exposed to SPS when analyzed either as the proportion of patients with events or as HRs. The pooled rate for a composite outcome of severe gastrointestinal side effects was significantly higher (HR, 1.46; 95% CI, 1.01-2.11). The overall strength of evidence for the association of SPS with either intestinal necrosis or the composite outcome was found to be very low because of risk of bias and imprecision.

In some ways, our results emphasize the difficulty of showing a causal link between a medication and a possible rare adverse event. The first included study to assess the risk of intestinal necrosis after SPS exposure compared with controls found only two events in the SPS group and none in the control arm.23 Two other included studies were small and reported no events in either arm.24,25 The first large study to assess the risk of intestinal ischemia included more than 2,000 patients treated with SPS and more than 100,000 controls but found no difference in risk.8 The next large study did find an increased risk of both intestinal necrosis (incidence rate, 6.82 per 1,000 person-years compared with 1.22 per 1,000 person-years for controls) and a composite outcome (incidence rate, 22.97 per 1,000 person-years compared with 11.01 per 1,000 person-years for controls), but its time-to-event analysis included events occurring up to 30 days after SPS treatment.10 A prior review of case reports of SPS and intestinal necrosis found a median of 2 days between SPS treatment and symptom onset.7 The authors probably would not have had enough events to compare rates meaningfully had they limited the analysis to events within 7 days of SPS treatment, but events occurring more than a week after exposure are unlikely to be due to SPS. The final study to assess the association of SPS with intestinal necrosis actually found higher rates of intestinal necrosis in the control group when analyzed as the proportion of patients with events, but it reported a higher rate of a composite outcome of severe gastrointestinal adverse events, defined by nine separate International Classification of Diseases codes and occurring up to 11 years after SPS exposure.9 This study was limited by evidence of selective reporting and was funded by the manufacturers of an alternative cation-exchange medication.
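The incidence rates quoted above for the study by Noel et al10 can be turned into crude rate ratios and rate differences with simple arithmetic. The Python sketch below does this; the function name is hypothetical, and the results are unadjusted comparisons of the published rates, not that study's own adjusted estimates.

```python
def rate_comparison(rate_sps, rate_control):
    """Crude rate ratio and rate difference for rates per 1,000 person-years."""
    return rate_sps / rate_control, rate_sps - rate_control


# Incidence rates per 1,000 person-years, as quoted in the text for Noel et al
necrosis_rr, necrosis_rd = rate_comparison(6.82, 1.22)
composite_rr, composite_rd = rate_comparison(22.97, 11.01)
print(f"Intestinal necrosis: rate ratio {necrosis_rr:.2f}, "
      f"{necrosis_rd:.2f} extra events per 1,000 person-years")
print(f"Composite outcome: rate ratio {composite_rr:.2f}, "
      f"{composite_rd:.2f} extra events per 1,000 person-years")
```

These rates give a crude rate ratio of about 5.6 for intestinal necrosis and about 2.1 for the composite outcome.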

Based on our review of the literature, it is unclear whether SPS causes intestinal ischemia. The pooled results for intestinal ischemia, analyzed either as the proportion of patients with events or with survival analysis, did not show a statistically significant increase in risk. Because most o

A cost-utility analysis by Little et al26 of SPS vs potential alternatives such as patiromer, in patients receiving chronic renin-angiotensin-aldosterone system inhibitor (RAAS-I) therapy with a history of hyperkalemia or CKD, concluded that SPS remained the cost-effective option as long as the incidence of colonic necrosis was 19.9% or less; our systematic review found an incidence of 0.1% (95% CI, 0.03%-0.17%). In Little et al's analysis, the incremental cost-effectiveness ratio was an astronomical $26,088,369 per quality-adjusted life-year gained.
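The incremental cost-effectiveness ratio (ICER) is the difference in cost between two strategies divided by the difference in quality-adjusted life-years (QALYs). The minimal Python sketch below uses hypothetical costs and QALYs (not values from Little et al26) to show why a very small QALY gain produces a very large ICER; the function name is illustrative only.

```python
def icer(cost_new, cost_ref, qaly_new, qaly_ref):
    """Incremental cost per QALY gained by a new strategy vs a reference strategy."""
    delta_qaly = qaly_new - qaly_ref
    if delta_qaly == 0:
        raise ValueError("strategies have equal QALYs; the ICER is undefined")
    return (cost_new - cost_ref) / delta_qaly


# Hypothetical: an alternative costing $10,000 more that adds 0.001 QALY
print(f"${icer(12_000, 2_000, 5.001, 5.000):,.0f} per QALY gained")
```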

A limitation of our review is the heterogeneity of the included studies, which varied in setting (inpatient or outpatient), formulation and regimen (dose, frequency, and whether sorbitol was used), and interval from exposure to outcome measurement, which ranged from 7 days to 1 year. On sensitivity analysis, statistical heterogeneity was resolved by removing the study by Laureati et al.9 This study was notably different from the others because it included events occurring up to 1 year after exposure to SPS, which may have diluted any true effect with later events unrelated to SPS. We did not exclude this study post hoc because doing so would introduce bias; however, because the overall result becomes statistically significant without this study, our overall conclusion should be interpreted with caution.30 It is possible that future well-conducted studies may still find an effect of SPS on intestinal necrosis. Similarly, the finding that studies using SPS coformulated with sorbitol had a statistically significantly higher risk of intestinal necrosis than studies without sorbitol should be interpreted with caution, because the study by Laureati et al9 was included among the studies without sorbitol.
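The dilution argument can be made concrete under a deliberately simple, hypothetical model: suppose SPS multiplies the baseline event rate by a factor RR only during the first few days after exposure and has no effect afterward, with a constant baseline rate and equal follow-up in both groups. The rate ratio observed over a longer window is then a time-weighted average, 1 + (RR − 1) × (early days / window days). The Python sketch below, with illustrative numbers not drawn from any included study, shows how quickly a large early effect is diluted.

```python
def diluted_rate_ratio(rr_early, early_days, window_days):
    """Rate ratio observed over window_days when SPS raises the event rate
    by rr_early only during the first early_days (hypothetical model with a
    constant baseline rate and equal follow-up in both groups)."""
    if not 0 < early_days <= window_days:
        raise ValueError("need 0 < early_days <= window_days")
    return 1 + (rr_early - 1) * early_days / window_days


# Illustrative: a fivefold excess confined to the first 7 days
for window in (7, 30, 365):
    print(window, round(diluted_rate_ratio(5.0, 7, window), 3))
```

With these inputs, a fivefold excess in the first week appears as a rate ratio of roughly 1.9 over 30 days and roughly 1.08 over 1 year.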

CONCLUSIONS

Based on our r

This work was presented at the Society of General Internal Medicine and Society of Hospital Medicine 2021 annual conferences.

1. Labriola L, Jadoul M. Sodium polystyrene sulfonate: still news after 60 years on the market. Nephrol Dial Transplant. 2020;35(9):1455-1458. https://doi.org/10.1093/ndt/gfaa004

2. Arvanitakis C, Malek G, Uehling D, Morrissey JF. Colonic complications after renal transplantation. Gastroenterology. 1973;64(4):533-538.

3. Parks M, Grady D. Sodium polystyrene sulfonate for hyperkalemia. JAMA Intern Med. 2019;179(8):1023-1024. https://doi.org/10.1001/jamainternmed.2019.1291

4. Sterns RH, Rojas M, Bernstein P, Chennupati S. Ion-exchange resins for the treatment of hyperkalemia: are they safe and effective? J Am Soc Nephrol. 2010;21(5):733-735. https://doi.org/10.1681/ASN.2010010079

5. Lillemoe KD, Romolo JL, Hamilton SR, Pennington LR, Burdick JF, Williams GM. Intestinal necrosis due to sodium polystyrene (Kayexalate) in sorbitol enemas: clinical and experimental support for the hypothesis. Surgery. 1987;101(3):267-272.

6. Sterns RH, Grieff M, Bernstein PL. Treatment of hyperkalemia: something old, something new. Kidney Int. 2016;89(3):546-554. https://doi.org/10.1016/j.kint.2015.11.018

7. Harel Z, Harel S, Shah PS, Wald R, Perl J, Bell CM. Gastrointestinal adverse events with sodium polystyrene sulfonate (Kayexalate) use: a systematic review. Am J Med. 2013;126(3):264.e9-264.e24. https://doi.org/10.1016/j.amjmed.2012.08.016

8. Watson MA, Baker TP, Nguyen A, et al. Association of prescription of oral sodium polystyrene sulfonate with sorbitol in an inpatient setting with colonic necrosis: a retrospective cohort study. Am J Kidney Dis. 2012;60(3):409-416. https://doi.org/10.1053/j.ajkd.2012.04.023

9. Laureati P, Xu Y, Trevisan M, et al. Initiation of sodium polystyrene sulphonate and the risk of gastrointestinal adverse events in advanced chronic kidney disease: a nationwide study. Nephrol Dial Transplant. 2020;35(9):1518-1526. https://doi.org/10.1093/ndt/gfz150

10. Noel JA, Bota SE, Petrcich W, et al. Risk of hospitalization for serious adverse gastrointestinal events associated with sodium polystyrene sulfonate use in patients of advanced age. JAMA Intern Med. 2019;179(8):1025-1033. https://doi.org/10.1001/jamainternmed.2019.0631

11. McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS Peer Review of Electronic Search Strategies: 2015 guideline statement. J Clin Epidemiol. 2016;75:40-46. https://doi.org/10.1016/j.jclinepi.2016.01.021

12. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med. 2009;151(4):W65-94. https://doi.org/10.7326/0003-4819-151-4-200908180-00136

13. Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919

14. Sterne JAC, Savović J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898. https://doi.org/10.1136/bmj.l4898

15. Guyatt G, Oxman AD, Akl EA, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64(4):383-394. https://doi.org/10.1016/j.jclinepi.2010.04.026

16. Raudenbush SW. Analyzing effect sizes: random-effects models. In: Cooper H, Hedges LV, Valentine JC, eds. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. Russell Sage Foundation; 2009:295-316.

17. Sweeting MJ, Sutton AJ, Lambert PC. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Stat Med. 2004;23(9):1351-1375. https://doi.org/10.1002/sim.1761

18. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177-188. https://doi.org/10.1016/0197-2456(86)90046-2

19. Freeman MF, Tukey JW. Transformations related to the angular and the square root. Ann Math Statist. 1950;21(4):607-611. https://doi.org/10.1214/aoms/1177729756

20. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327(7414):557-560. https://doi.org/10.1136/bmj.327.7414.557

21. Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane; 2020. www.training.cochrane.org/handbook

22. Higgins JPT, Thompson SG, Spiegelhalter DJ. A re-evaluation of random-effects meta-analysis. J R Stat Soc Ser A Stat Soc. 2009;172(1):137-159. https://doi.org/10.1111/j.1467-985X.2008.00552.x

23. Gerstman BB, Kirkman R, Platt R. Intestinal necrosis associated with postoperative orally administered sodium polystyrene sulfonate in sorbitol. Am J Kidney Dis. 1992;20(2):159-161. https://doi.org/10.1016/s0272-6386(12)80544-0

24. Batterink J, Lin J, Au-Yeung SHM, Cessford T. Effectiveness of sodium polystyrene sulfonate for short-term treatment of hyperkalemia. Can J Hosp Pharm. 2015;68(4):296-303. https://doi.org/10.4212/cjhp.v68i4.1469

25. Lepage L, Dufour AC, Doiron J, et al. Randomized clinical trial of sodium polystyrene sulfonate for the treatment of mild hyperkalemia in CKD. Clin J Am Soc Nephrol. 2015;10(12):2136-2142. https://doi.org/10.2215/CJN.03640415

26. Little DJ, Nee R, Abbott KC, Watson MA, Yuan CM. Cost-utility analysis of sodium polystyrene sulfonate vs. potential alternatives for chronic hyperkalemia. Clin Nephrol. 2014;81(4):259-268. https://doi.org/10.5414/cn108103

27. Cubiella Fernández J, Núñez Calvo L, González Vázquez E, et al. Risk factors associated with the development of ischemic colitis. World J Gastroenterol. 2010;16(36):4564-4569. https://doi.org/10.3748/wjg.v16.i36.4564

28. Laureati P, Evans M, Trevisan M, et al. Sodium polystyrene sulfonate, practice patterns and associated adverse event risk; a nationwide analysis from the Swedish Renal Register [abstract]. Nephrol Dial Transplant. 2019;34(suppl 1):i94. https://doi.org/10.1093/ndt/gfz106.FP151

29. Santesso N, Carrasco-Labra A, Langendam M, et al. Improving GRADE evidence tables part 3: detailed guidance for explanatory footnotes supports creating and understanding GRADE certainty in the evidence judgments. J Clin Epidemiol. 2016;74:28-39. https://doi.org/10.1016/j.jclinepi.2015.12.006

30. Deeks JJ, Higgins JPT, Altman DG. Analysing data and undertaking meta-analyses. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane; 2020. www.training.cochrane.org/handbook

Sodium polystyrene sulfonate (SPS) was first approved in the United States in 1958 and is a commonly prescribed medication for hyperkalemia.1 SPS works by exchanging potassium for sodium in the colonic lumen, thereby promoting potassium loss in the stool. However, reports of severe gastrointestinal side effects, particularly intestinal necrosis, have been persistent since the 1970s,2 leading some authors to recommend against the use of SPS.3,4 In 2009, the US Food and Drug Administration (FDA) warned against concomitant sorbitol administration, which was implicated in some studies.4,5 The concern about gastrointestinal side effects has also led to the development and FDA approval of two new cation-exchange resins for treatment of hyperkalemia.6 A prior systematic review of the literature found 30 separate case reports or case series including a total of 58 patients who were treated with SPS and developed severe gastrointestinal side effects.7 Because the included studies were all case reports or case series and therefore did not include comparison groups, it could not be determined whether SPS had a causal role in gastrointestinal side effects, and the authors could only conclude that there was a “possible” association. In contrast to case reports, several large cohort studies have been published more recently and report the risk of severe gastrointestinal adverse events associated with SPS compared with controls.8-10 While some studies found an increased risk, others have not. Given this uncertainty, we undertook a systematic review of studies that report the incidence of severe gastrointestinal side effects with SPS compared with controls.

METHODS

Data Sources and Search Strategy

A systematic search of the literature was conducted by a medical librarian using the Cochrane Library, Embase, Medline, Google Scholar, PubMed, Scopus, and Web of Science Core Collection databases to find relevant articles published from database inception to October 4, 2020. The search was peer reviewed by a second medical librarian using Peer Review of Electronic Search Strategies (PRESS).11 Databases were searched using a combination of controlled vocabulary and free-text terms for “SPS” and “bowel necrosis.” Details of the full search strategy are listed in Appendix A. References from all databases were imported into an EndNote X9 library, duplicates removed, and then uploaded into Coviden

Data Extraction and Quality Assessment

We used a standardized form to extract data, which included author, year, country, study design, setting, number of patients, SPS formulation, dosing, exposure, sorbitol content, outcomes of intestinal necrosis and the composite severe gastrointestinal adverse events, and the duration of time from SPS exposure to outcome occurrence. Two reviewers (JLH and AER) independently assessed the methodological quality of included studies using the Risk of Bias in Non-randomized Studies of Interventions (ROBINS-I) tool for observational studies13 and the Revised Cochrane risk of bias (RoB 2) tool for randomized controlled trials (RCTs).14 Additionally, two reviewers (JLH and CGG) graded overall strength of evidence based on the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system.15 Disagreement was resolved by consensus.

Data Synthesis and Analysis

The proportion of patients with intestinal necrosis was compared using random effects meta-analysis using the restricted maximum likelihood method.16 For the two studies that reported hazard ratios (HRs), meta-analysis was performed after log transformation of the HRs and CIs. One study that performed survival analysis presented data for both the duration of the study (up to 11 years) and up to 1 year after exposure.9 We used the data up to 1 year after exposure because we believed later events were more likely to be due to chance than exposure to SPS. For studies with zero events, we used the treat ment-arm continuity correction, which has been reported to be preferable to the standard fixed-correction factor.17 We also performed two sensitivity analyses, including omitting the studies with zero events and performing meta-analysis using risk difference. The prevalence of intestinal ischemia was pooled using the DerSimonian and Laird18 random effects model with Freeman-Tukey19 double arcsine transformation. Heterogeneity was estimated using the I² statistic. I² values of 25%, 50%, and 75% were considered low, moderate, and high heterogeneity, respectively.20 Meta-regression and tests for small-study effects were not performed because of the small number of included studies.21 In addition to random effects meta-analysis, we calculated the 90% predicted interval for future studies for the pooled effect of intestinal ischemia.22 Statistical analysis was performed using meta and metaprop commands in Stata/IC, version 16.1 (StataCorp).

RESULTS

Selected Studies

The electronic search yielded 806 unique articles, of which 791 were excluded based on title and abstract, leaving 15 articles for full-text review (Appendix B). Appendix C describes the nine studies that were excluded, including the reason for exclusion. Table 1 describes the characteristics of the six studies that met study inclusion criteria. Studies were published between 1992 and 2020. Three studies were from Canada,10,24,25 two from the United States,8,23 and one from Sweden.9 Three studies occurred in an outpatient setting,9,10,25 and three were described as inpatient studies.8,23,24 SPS preparations included sorbitol in three studies,8,23,24 were not specified in one study,10 and were not included in two studies.9,25 SPS dosing varied widely, with median doses of 15 to 30 g in three studies,9,24,25 45 to 50 g in two studies,8,23 and unspecified in one study.10 Duration of exposure typically ranged from 1 to 7 days but was not consistently described. For example, two of the studies did not report duration of exposure,8,10 and a third study reported a single dispensation of 450 g in 41% of patients, with the remaining 59% averaging three dispensations within the first year.9 Sample size ranged from 33 to 123,391 patients. Most patients were male, and mean ages ranged from 44 to 78 years. Two studies limited participation to those with chronic kidney disease (CKD) with glomerular filtration rate (GFR) <4024 or CKD stage 4 or 5 or dialysis.9 Two studies specifically limited participation to patients with potassium levels of 5.0 to 5.9 mmol/L.24,25 All six studies reported outcomes for intestinal necrosis, and four reported composite outcomes for major adverse gastrointestinal events.9,10,24,25

Table 2 describes the assessment of risk of bias using the ROBINS-I tool for the five retrospective observational studies and the RoB 2 tool for the one RCT.13,14 Three studies were rated as having serious risk of bias, with the remainder having a moderate risk of bias or some concerns. Two studies were judged as having a serious risk of bias because of potential confounding.8,23 To be judged low or moderate risk, studies needed to measure and control for potential risk factors for intestinal ischemia, such as age, diabetes, vascular disease, and heart failure.26,27 One study also had serious risk of bias for selective reporting because the published abstract of the study used a different analysis and had contradictory results from the published study.9,28 An additional area of risk of bias that did not fit into the ROBINS-I tool is that the two studies that used survival analysis chose durations for the outcome that were longer than would be expected for adverse events from SPS to be evident. One study chose 30 days and the other up to a maximum of 11 years from the time of exposure.9,10

Quantitative Outcomes

Six studies including 26,716 patients treated with SPS and controls reported the proportion of patients who developed intestinal necrosis. The Figure shows the individual study and pooled results for intestinal necrosis. The prevalence of intestinal ischemia in patients treated with SPS was 0.1% (95% CI, 0.03%-0.17%). The pooled odds ratio (OR) of intestinal necrosis was 1.43 (95% CI, 0.39-5.20). The 90% predicted interval for future studies was 0.08 to 26.6. Two studies reported rates of intestinal necrosis using survival analysis. The pooled HR from these studies was 2.00 (95% CI, 0.45-8.78). Two studies performed survival analysis for a composite outcome of severe gastrointestinal adverse events. The pooled HR for these two studies was 1.46 (95% CI, 1.01-2.11).

For the meta-analysis of intestinal necrosis, we found moderate-high statistical significance (Q = 18.82; P < .01; I² = 67.8%). Sensitivity analysis removing each study did not affect heterogeneity, with the exception of removing the study by Laureati et al,9 which resolved the heterogeneity (Q = 1.7, P = .8, I² = 0%). The pooled effect for intestinal necrosis also became statistically significant after removing Laureati et al (OR, 2.87; 95% CI, 1.24-6.63).9 We also performed two subgroup analyses, including studies that involved the concomitant use of sorbitol8,23,24 compared with studies that did not9,25 and subgroup analysis removing studies with zero events. Studies that included sorbitol found higher rates of intestinal necrosis (OR, 2.26; 95% CI, 0.80-6.38; I² = 0%) compared with studies that did not include sorbitol (OR, 0.25; 95% CI, 0.11-0.57; I² = 0%; test of group difference, P < .01). Removing the three studies with zero events resulted in a similar overall effect (OR, 1.30; 95% CI, 0.21-8.19). Finally, a meta-analysis using risk difference instead of ORs found a non–statistically significant difference in rate of intestinal necrosis favoring the control group (risk difference, −0.00033; 95% CI, −0.0022 to 0.0015; I² = 84.6%).

Table 3 summarizes our review findings and presents overall strength of evidence. Overall strength of evidence was found to be very low. Per GRADE criteria,15,29 strength of evidence for observational studies starts at low and may then be modified by the presence of bias, inconsistency, indirectness, imprecision, effect size, and direction of confounding. In the case of the three meta-analyses in the present study, risk of bias was serious for more than half of the study weights. Strength of evidence was also downrated for imprecision because of the low number of events and resultant wide CIs.

DISCUSSION

In total, we found six studies that reported rates of intestinal necrosis or severe gastrointestinal adverse events with SPS use compared with controls. The pooled rate of intestinal necrosis was not significantly higher for patients exposed to SPS when analyzed either as the proportion of patients with events or as HRs. The pooled rate for a composite outcome of severe gastrointestinal side effects was significantly higher (HR, 1.46; 95% CI, 1.01-2.11). The overall strength of evidence for the association of SPS with either intestinal necrosis or the composite outcome was found to be very low because of risk of bias and imprecision.

In some ways, our results emphasize the difficulty of showing a causal link between a medication and a possible rare adverse event. The earliest included study to assess the risk of intestinal necrosis after exposure to SPS compared with controls found only two events in the SPS group and none in the control arm.23 Two additional studies were small and did not report any events in either arm.24,25 The first large study to assess the risk of intestinal ischemia included more than 2,000 patients treated with SPS and more than 100,000 controls but found no difference in risk.8 The next large study did find an increased risk of both intestinal necrosis (incidence rate, 6.82 per 1,000 person-years compared with 1.22 per 1,000 person-years for controls) and a composite outcome (incidence rate, 22.97 per 1,000 person-years compared with 11.01 per 1,000 person-years for controls), but its time-to-event analysis included events up to 30 days after treatment with SPS.10 A prior review of case reports of SPS and intestinal necrosis found a median of 2 days between SPS treatment and symptom onset.7 The authors probably would not have had sufficient events to compare rates meaningfully had they limited the analysis to events within 7 days of SPS treatment, but events occurring more than a week after exposure are unlikely to be due to SPS. The final study to assess the association of SPS with intestinal necrosis actually found higher rates of intestinal necrosis in the control group when analyzed as proportions with events. However, it reported a higher rate in the SPS group of a composite outcome of severe gastrointestinal adverse events. That composite comprised nine separate International Classification of Diseases codes and included events occurring up to 11 years after SPS exposure.9 This study was limited by evidence of selective reporting and was funded by the manufacturers of an alternative cation-exchange medication.
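Zero cells make the OR undefined unless a continuity correction is added. The earliest study's absence of events in the control arm is one example. The sketch below implements the treatment-arm continuity correction of Sweeting et al17 as we understand it. Each arm's correction is proportional to the reciprocal of the opposite arm's size, and the two corrections sum to 1, so each arm receives 0.5 when the arms are equal. The class and function names are hypothetical. The group sizes in the usage example are invented for illustration, because the earliest study's denominators are not given in this section.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Event counts and group sizes for one study (hypothetical container)."""
    events_sps: int
    n_sps: int
    events_ctrl: int
    n_ctrl: int


def treatment_arm_correction(t: TwoByTwo) -> tuple[float, float]:
    """Corrections for the SPS and control arms.

    n_sps / (n_sps + n_ctrl) is proportional to 1 / n_ctrl (the opposite arm),
    and the two corrections sum to 1.
    """
    total = t.n_sps + t.n_ctrl
    return t.n_sps / total, t.n_ctrl / total


def log_odds_ratio(t: TwoByTwo) -> tuple[float, float]:
    """Log OR (SPS vs control) and its Woolf standard error.

    The continuity correction is applied only when a cell is zero.
    """
    a, c = float(t.events_sps), float(t.events_ctrl)
    b, d = t.n_sps - a, t.n_ctrl - c
    if 0 in (a, b, c, d):
        k_sps, k_ctrl = treatment_arm_correction(t)
        a, b = a + k_sps, b + k_sps
        c, d = c + k_ctrl, d + k_ctrl
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


if __name__ == "__main__":
    # Hypothetical denominators: 2 of 100 SPS patients vs 0 of 200 controls.
    study = TwoByTwo(events_sps=2, n_sps=100, events_ctrl=0, n_ctrl=200)
    print(treatment_arm_correction(study))
    log_or, se = log_odds_ratio(study)
    print(f"OR = {math.exp(log_or):.2f} (SE of log OR = {se:.2f})")
```

Compared with adding 0.5 to every cell, this correction adds less to the smaller arm. Sweeting et al17 report that the conventional fixed correction can bias estimates when arms are unbalanced, as they often are in SPS cohorts with large control groups.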

Based on our review of the literature, it remains unclear whether SPS causes intestinal ischemia. The pooled results for intestinal ischemia, analyzed either as the proportion of patients with events or with survival analysis, did not show a statistically significant increase in risk. Because most o

A cost-utility analysis by Little et al26 compared SPS with potential alternatives such as patiromer in patients receiving chronic renin-angiotensin-aldosterone system inhibitor (RAAS-I) therapy with a history of hyperkalemia or CKD. It concluded that SPS remained the cost-effective option as long as the incidence of colonic necrosis was 19.9% or less; our systematic review found an incidence of 0.1% (95% CI, 0.03%-0.17%). In Little's analysis, the incremental cost-effectiveness ratio was an astronomical $26,088,369 per quality-adjusted life-year gained.
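To make this comparison concrete, the sketch below shows how an incremental cost-effectiveness ratio is defined: additional cost divided by additional quality-adjusted life-years (QALYs). It also checks the pooled incidence from this review, using the upper 95% confidence limit, against the 19.9% threshold reported by Little et al.26 The cost and QALY inputs are hypothetical placeholders, not values from Little's model.

```python
def icer(cost_new: float, qaly_new: float, cost_ref: float, qaly_ref: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per QALY gained."""
    delta_qaly = qaly_new - qaly_ref
    if delta_qaly == 0:
        raise ValueError("ICER is undefined when the two strategies yield equal QALYs")
    return (cost_new - cost_ref) / delta_qaly


# Threshold from Little et al and upper 95% limit of the pooled incidence in this review.
NECROSIS_THRESHOLD = 0.199
POOLED_INCIDENCE_UPPER = 0.0017

if __name__ == "__main__":
    # Hypothetical inputs: an alternative costing $5,000 more for 0.001 extra QALYs.
    print(f"ICER = ${icer(5000.0, 1.001, 0.0, 1.0):,.0f} per QALY gained")
    margin = NECROSIS_THRESHOLD / POOLED_INCIDENCE_UPPER
    print(f"Threshold is about {margin:.0f} times the upper confidence limit")
```

The example illustrates why a very small QALY gain produces a very large ICER. Even the upper confidence limit of the pooled incidence lies two orders of magnitude below the threshold.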

One limitation of our review is the heterogeneity of the included studies. They varied in setting (inpatient or outpatient), SPS regimen (dose, frequency, and whether sorbitol was used), and the interval from exposure to outcome measurement, which ranged from 7 days to 1 year. On sensitivity analysis, statistical heterogeneity was resolved by removing the study by Laureati et al.9 This study differed notably from the others because it included events occurring up to 1 year after exposure to SPS, so any true effect may have been diluted by later events unrelated to SPS. We did not exclude this study post hoc because doing so would introduce bias; however, because the overall result becomes statistically significant without this study, our overall conclusion should be interpreted with caution.30 Future well-conducted studies may still find an effect of SPS on intestinal necrosis. Similarly, the finding that studies of SPS coformulated with sorbitol had a significantly higher risk of intestinal necrosis than studies without sorbitol should be interpreted with caution, because the study by Laureati et al9 was among the studies without sorbitol.
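The leave-one-out sensitivity analysis discussed above can be illustrated with a minimal Python sketch. It re-pools the remaining studies after omitting each one in turn. The review pooled ORs with restricted maximum likelihood; here the DerSimonian-Laird estimator18 is substituted as a simpler closed-form stand-in. The function names are hypothetical, and the input values are invented, not the included studies' data.

```python
import math


def dersimonian_laird(y: list[float], v: list[float]) -> tuple[float, float, float]:
    """DerSimonian-Laird random-effects pooling.

    y: study effects on the log scale (for example, log ORs).
    v: their within-study variances.
    Returns (pooled effect, standard error, tau^2).
    """
    w = [1 / vi for vi in v]
    sw = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    c = sw - sum(wi**2 for wi in w) / sw
    # Method-of-moments estimate of tau^2, truncated at zero.
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    return mu, math.sqrt(1 / sum(w_re)), tau2


def leave_one_out(y: list[float], v: list[float]) -> list[tuple[float, float, float]]:
    """Re-pool the data k times, omitting one study each time."""
    return [dersimonian_laird(y[:i] + y[i + 1:], v[:i] + v[i + 1:]) for i in range(len(y))]


if __name__ == "__main__":
    # Hypothetical log ORs and variances: three similar studies and one
    # precise study pointing the other way.
    y = [math.log(ratio) for ratio in (2.5, 3.0, 2.0, 0.25)]
    v = [0.5, 0.8, 0.6, 0.1]
    mu, se, tau2 = dersimonian_laird(y, v)
    print(f"all studies: OR = {math.exp(mu):.2f}, tau^2 = {tau2:.2f}")
    for i, (mu_i, se_i, tau2_i) in enumerate(leave_one_out(y, v)):
        lo, hi = math.exp(mu_i - 1.96 * se_i), math.exp(mu_i + 1.96 * se_i)
        print(f"omit study {i + 1}: OR = {math.exp(mu_i):.2f} ({lo:.2f}-{hi:.2f}), tau^2 = {tau2_i:.2f}")
```

In data shaped like this, omitting the single discordant, heavily weighted study removes most of the between-study variance and shifts the pooled OR. That pattern resembles the one reported when Laureati et al was removed.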

CONCLUSIONS

Based on our r

This work was presented at the Society of General Internal Medicine and Society of Hospital Medicine 2021 annual conferences.

1. Labriola L, Jadoul M. Sodium polystyrene sulfonate: still news after 60 years on the market. Nephrol Dial Transplant. 2020;35(9):1455-1458. https://doi.org/10.1093/ndt/gfaa004

2. Arvanitakis C, Malek G, Uehling D, Morrissey JF. Colonic complications after renal transplantation. Gastroenterology. 1973;64(4):533-538.

3. Parks M, Grady D. Sodium polystyrene sulfonate for hyperkalemia. JAMA Intern Med. 2019;179(8):1023-1024. https://doi.org/10.1001/jamainternmed.2019.1291

4. Sterns RH, Rojas M, Bernstein P, Chennupati S. Ion-exchange resins for the treatment of hyperkalemia: are they safe and effective? J Am Soc Nephrol. 2010;21(5):733-735. https://doi.org/10.1681/ASN.2010010079

5. Lillemoe KD, Romolo JL, Hamilton SR, Pennington LR, Burdick JF, Williams GM. Intestinal necrosis due to sodium polystyrene (Kayexalate) in sorbitol enemas: clinical and experimental support for the hypothesis. Surgery. 1987;101(3):267-272.

6. Sterns RH, Grieff M, Bernstein PL. Treatment of hyperkalemia: something old, something new. Kidney Int. 2016;89(3):546-554. https://doi.org/10.1016/j.kint.2015.11.018

7. Harel Z, Harel S, Shah PS, Wald R, Perl J, Bell CM. Gastrointestinal adverse events with sodium polystyrene sulfonate (Kayexalate) use: a systematic review. Am J Med. 2013;126(3):264.e9-24. https://doi.org/10.1016/j.amjmed.2012.08.016

8. Watson MA, Baker TP, Nguyen A, et al. Association of prescription of oral sodium polystyrene sulfonate with sorbitol in an inpatient setting with colonic necrosis: a retrospective cohort study. Am J Kidney Dis. 2012;60(3):409-416. https://doi.org/10.1053/j.ajkd.2012.04.023

9. Laureati P, Xu Y, Trevisan M, et al. Initiation of sodium polystyrene sulphonate and the risk of gastrointestinal adverse events in advanced chronic kidney disease: a nationwide study. Nephrol Dial Transplant. 2020;35(9):1518-1526. https://doi.org/10.1093/ndt/gfz150

10. Noel JA, Bota SE, Petrcich W, et al. Risk of hospitalization for serious adverse gastrointestinal events associated with sodium polystyrene sulfonate use in patients of advanced age. JAMA Intern Med. 2019;179(8):1025-1033. https://doi.org/10.1001/jamainternmed.2019.0631

11. McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS Peer Review of Electronic Search Strategies: 2015 guideline statement. J Clin Epidemiol. 2016;75:40-46. https://doi.org/10.1016/j.jclinepi.2016.01.021

12. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med. 2009;151(4):W65-94. https://doi.org/10.7326/0003-4819-151-4-200908180-00136

13. Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919

14. Sterne JAC, Savovic J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898. https://doi.org/10.1136/bmj.l4898

15. Guyatt G, Oxman AD, Akl EA, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64(4):383-394. https://doi.org/10.1016/j.jclinepi.2010.04.026

16. Raudenbush SW. Analyzing effect sizes: random-effects models. In: Cooper H, Hedges LV, Valentine JC, eds. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. Russell Sage Foundation; 2009:295-316.

17. Sweeting MJ, Sutton AJ, Lambert PC. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Stat Med. 2004;23(9):1351-1375. https://doi.org/10.1002/sim.1761

18. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177-188. https://doi.org/10.1016/0197-2456(86)90046-2

19. Freeman MF, Tukey JW. Transformations related to the angular and the square root. Ann Math Statist. 1950;21(4):607-611. https://doi.org/10.1214/aoms/1177729756

20. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327(7414):557-560. https://doi.org/10.1136/bmj.327.7414.557

21. Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane, 2020. www.training.cochrane.org/handbook

22. Higgins JPT, Thompson SG, Spiegelhalter DJ. A re-evaluation of random-effects meta-analysis. J R Stat Soc Ser A Stat Soc. 2009;172(1):137-159. https://doi.org/10.1111/j.1467-985X.2008.00552.x

23. Gerstman BB, Kirkman R, Platt R. Intestinal necrosis associated with postoperative orally administered sodium polystyrene sulfonate in sorbitol. Am J Kidney Dis. 1992;20(2):159-161. https://doi.org/10.1016/s0272-6386(12)80544-0

24. Batterink J, Lin J, Au-Yeung SHM, Cessford T. Effectiveness of sodium polystyrene sulfonate for short-term treatment of hyperkalemia. Can J Hosp Pharm. 2015;68(4):296-303. https://doi.org/10.4212/cjhp.v68i4.1469

25. Lepage L, Dufour AC, Doiron J, et al. Randomized clinical trial of sodium polystyrene sulfonate for the treatment of mild hyperkalemia in CKD. Clin J Am Soc Nephrol. 2015;10(12):2136-2142. https://doi.org/10.2215/CJN.03640415

26. Little DJ, Nee R, Abbott KC, Watson MA, Yuan CM. Cost-utility analysis of sodium polystyrene sulfonate vs. potential alternatives for chronic hyperkalemia. Clin Nephrol. 2014;81(4):259-268. https://doi.org/10.5414/cn108103

27. Cubiella Fernández J, Núñez Calvo L, González Vázquez E, et al. Risk factors associated with the development of ischemic colitis. World J Gastroenterol. 2010;16(36):4564-4569. https://doi.org/10.3748/wjg.v16.i36.4564

28. Laureati P, Evans M, Trevisan M, et al. Sodium polystyrene sulfonate, practice patterns and associated adverse event risk; a nationwide analysis from the Swedish Renal Register [abstract]. Nephrol Dial Transplant. 2019;34(suppl 1):i94. https://doi.org/10.1093/ndt/gfz106.FP151

29. Santesso N, Carrasco-Labra A, Langendam M, et al. Improving GRADE evidence tables part 3: detailed guidance for explanatory footnotes supports creating and understanding GRADE certainty in the evidence judgments. J Clin Epidemiol. 2016;74:28-39. https://doi.org/10.1016/j.jclinepi.2015.12.006

30. Deeks JJ, Higgins JPT, Altman DG. Analysing data and undertaking meta-analyses. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane, 2020. www.training.cochrane.org/handbook

1. Labriola L, Jadoul M. Sodium polystyrene sulfonate: still news after 60 years on the market. Nephrol Dial Transplant. 2020;35(9):1455-1458. https://doi.org/10.1093/ndt/gfaa004

2. Arvanitakis C, Malek G, Uehling D, Morrissey JF. Colonic complications after renal transplantation. Gastroenterology. 1973;64(4):533-538.

3. Parks M, Grady D. Sodium polystyrene sulfonate for hyperkalemia. JAMA Intern Med. 2019;179(8):1023-1024. https://doi.org/10.1001/jamainternmed.2019.1291

4. Sterns RH, Rojas M, Bernstein P, Chennupati S. Ion-exchange resins for the treatment of hyperkalemia: are they safe and effective? J Am Soc Nephrol. 2010;21(5):733-735. https://doi.org/10.1681/ASN.2010010079

5. Lillemoe KD, Romolo JL, Hamilton SR, Pennington LR, Burdick JF, Williams GM. Intestinal necrosis due to sodium polystyrene (Kayexalate) in sorbitol enemas: clinical and experimental support for the hypothesis. Surgery. 1987;101(3):267-272.

6. Sterns RH, Grieff M, Bernstein PL. Treatment of hyperkalemia: something old, something new. Kidney Int. 2016;89(3):546-554. https://doi.org/10.1016/j.kint.2015.11.018

7. Harel Z, Harel S, Shah PS, Wald R, Perl J, Bell CM. Gastrointestinal adverse events with sodium polystyrene sulfonate (Kayexalate) use: a systematic review. Am J Med. 2013;126(3):264.e269-24. https://doi.org/10.1016/j.amjmed.2012.08.016

8. Watson MA, Baker TP, Nguyen A, et al. Association of prescription of oral sodium polystyrene sulfonate with sorbitol in an inpatient setting with colonic necrosis: a retrospective cohort study. Am J Kidney Dis. 2012;60(3):409-416. https://doi.org/10.1053/j.ajkd.2012.04.023

9. Laureati P, Xu Y, Trevisan M, et al. Initiation of sodium polystyrene sulphonate and the risk of gastrointestinal adverse events in advanced chronic kidney disease: a nationwide study. Nephrol Dial Transplant. 2020;35(9):1518-1526. https://doi.org/10.1093/ndt/gfz150

10. Noel JA, Bota SE, Petrcich W, et al. Risk of hospitalization for serious adverse gastrointestinal events associated with sodium polystyrene sulfonate use in patients of advanced age. JAMA Intern Med. 2019;179(8):1025-1033. https://doi.org/10.1001/jamainternmed.2019.0631

11. McGowan J, Sampson M, Salzwedel DM, Cogo E, Foerster V, Lefebvre C. PRESS Peer Review of Electronic Search Strategies: 2015 guideline statement. J Clin Epidemiol. 2016;75:40-46. https://doi.org/10.1016/j.jclinepi.2016.01.021

12. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med. 2009;151(4):W65-94. https://doi.org/10.7326/0003-4819-151-4-200908180-00136

13. Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355:i4919. https://doi.org/10.1136/bmj.i4919

14. Sterne JAC, Savovic J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ. 2019;366:l4898. https://doi.org/10.1136/bmj.l4898

15. Guyatt G, Oxman AD, Akl EA, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64(4):383-394. https://doi.org/10.1016/j.jclinepi.2010.04.026

16. Raudenbush SW. Analyzing effect sizes: random-effects models. In: Cooper H, Hedges LV, Valentine JC, eds. The Handbook of Research Synthesis and Meta-Analysis. 2nd ed. Russel Sage Foundation; 2009:295-316.

17. Sweeting MJ, Sutton AJ, Lambert PC. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Stat Med. 2004;23(9):1351-1375. https://doi.org/10.1002/sim.1761

18. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177-188. https://doi.org/10.1016/0197-2456(86)90046-2

19. Freeman MF, Tukey JW. Transformations related to the angular and the square root. Ann Math Statist. 1950;21(4):607-611. https://doi.org/10.1214/aoms/1177729756

20. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327(7414):557-560. https://doi.org/10.1136/bmj.327.7414.557

21. Higgins JPT, Chandler TJ, Cumptson M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane, 2020. www.training.cochrane.org/handbook

22. Higgins JPT, Thompson SG, Spiegelhalter DJ. A re-evaluation of random-effects meta-analysis. J R Stat Soc Ser A Stat Soc. Jan 2009;172(1):137-159. https://doi.org/10.1111/j.1467-985X.2008.00552.x

23. Gerstman BB, Kirkman R, Platt R. Intestinal necrosis associated with postoperative orally administered sodium polystyrene sulfonate in sorbitol. Am J Kidney Dis. 1992;20(2):159-161. https://doi.org/10.1016/s0272-6386(12)80544-0

24. Batterink J, Lin J, Au-Yeung SHM, Cessford T. Effectiveness of sodium polystyrene sulfonate for short-term treatment of hyperkalemia. Can J Hosp Pharm. 2015;68(4):296-303. https://doi.org/10.4212/cjhp.v68i4.1469

25. Lepage L, Dufour AC, Doiron J, et al. Randomized clinical trial of sodium polystyrene sulfonate for the treatment of mild hyperkalemia in CKD. Clin J Am Soc Nephrol. 2015;10(12):2136-2142. https://doi.org/10.2215/CJN.03640415

26. Little DJ, Nee R, Abbott KC, Watson MA, Yuan CM. Cost-utility analysis of sodium polystyrene sulfonate vs. potential alternatives for chronic hyperkalemia. Clin Nephrol. 2014;81(4):259-268. https://doi.org/10.5414/cn108103

27. Cubiella Fernández J, Núñez Calvo L, González Vázquez E, et al. Risk factors associated with the development of ischemic colitis. World J Gastroenterol. 2010;16(36):4564-4569. https://doi.org/10.3748/wjg.v16.i36.4564

28. Laureati P, Evans M, Trevisan M, et al. Sodium polystyrene sulfonate, practice patterns and associated adverse event risk; a nationwide analysis from the Swedish Renal Register [abstract]. Nephrol Dial Transplant. 2019;34(suppl 1):i94. https://doi.org/10.1093/ndt/gfz106.FP151

29. Santesso N, Carrasco-Labra A, Langendam M, et al. Improving GRADE evidence tables part 3: detailed guidance for explanatory footnotes supports creating and understanding GRADE certainty in the evidence judgments. J Clin Epidemiol. 2016;74:28-39. https://doi.org/10.1016/j.jclinepi.2015.12.006

30. Deeks JJ, Higgins JPT, Altman DG. Analysing data and undertaking meta-analyses. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, eds. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Cochrane, 2020. www.training.cochrane.org/handbook

© 2021 Society of Hospital Medicine

Clinical Progress Note: E-cigarette, or Vaping, Product Use-Associated Lung Injury

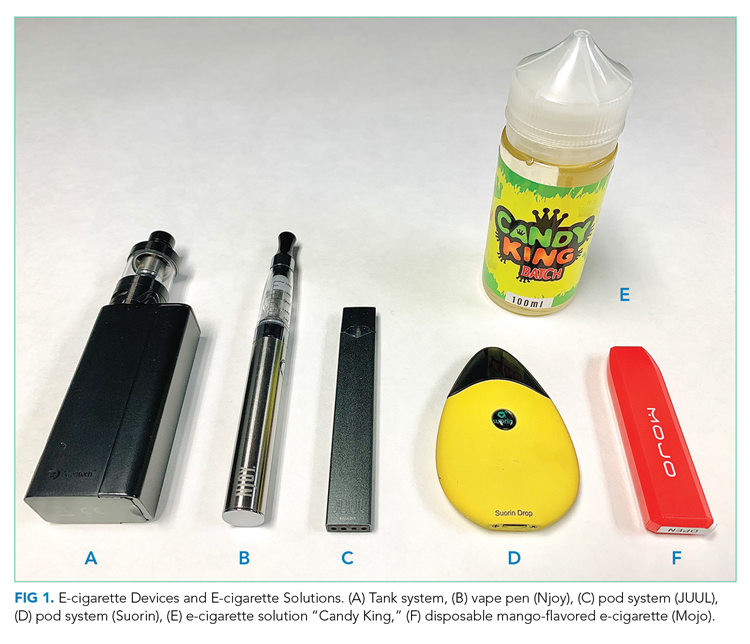

E-cigarettes are handheld devices used to aerosolize a liquid that commonly contains nicotine, flavorings, and propylene glycol and/or vegetable glycerin. These products vary widely in design and style (Figure 1), from disposable “cigalikes” to vape pens, mods, tanks, and pod systems such as JUUL, and their recognition, use, sales, and variety have increased dramatically.1 Beyond the known risks of e-cigarette use, such as nicotine addiction among youth and progression to cigarette smoking, there is evidence of a wide range of health concerns, including pulmonary and cardiovascular effects, immune dysfunction, and carcinogenesis.1 The emergence of patients with severe lung injury in the summer of 2019 highlighted the harmful health effects specific to these tobacco products.2 The condition was ultimately named EVALI (e-cigarette, or vaping, product use-associated lung injury), and 2,807 hospitalized patients and 68 deaths have been reported to the Centers for Disease Control and Prevention (CDC).2,3 This clinical progress note reviews the epidemiology and clinical course of EVALI and strategies to distinguish it from other illnesses. The review is particularly timely given the emergence of, and surges in, COVID-19 cases.4

SEARCH STRATEGY

Because the first reports of patients with e-cigarette–associated lung injury appeared in the summer of 2019 and the CDC defined EVALI in the fall of 2019, a PubMed search was performed for studies published from June 2019 to June 2020 using the search terms “EVALI” or “e-cigarette–associated lung injury.” In addition, the authors reviewed the CDC and US Food and Drug Administration (FDA) websites and publicly available presentations on EVALI. Articles discussing COVID-19 and EVALI that the authors became aware of were also included. This update is intended for hospitalists as well as researchers and public health advocates.

DEFINING EVALI

Standard diagnostic criteria do not yet exist, and EVALI remains a diagnosis of exclusion. For epidemiologic (and not diagnostic) purposes, however, the CDC developed the following definitions.3 A confirmed EVALI case must meet all of the following criteria:

- Vaping or dabbing within 90 days prior to symptoms. Vaping refers to using e-cigarettes, while dabbing denotes inhaling concentrated tetrahydrocannabinol (THC) products, also known as wax, shatter, or oil

- Pulmonary infiltrates on chest X-ray (CXR) or ground-glass opacities on computed tomography (CT) scan

- Absence of pulmonary infection (including negative respiratory viral panel and influenza testing)

- Negative respiratory infectious disease testing, as clinically indicated

- No evidence in the medical record to suggest an alternative diagnosis

The criteria for a probable EVALI case are similar, except that an infection may be identified but thought not to be the sole cause of lung injury, or the minimum criteria to rule out infection may not be met.
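The surveillance definitions above amount to a small decision rule. The Python sketch below encodes our reading of that rule for illustration only. Several choices are assumptions and not part of the CDC text: the field names, treating the 90-day window as inclusive, and collapsing the viral panel, influenza, and other indicated tests into a single "testing done" flag. Like the definitions themselves, it is meant for epidemiologic classification and is not a diagnostic tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SurveillanceFindings:
    """Hypothetical record of the facts the CDC case definitions ask about."""
    days_from_last_use_to_symptoms: Optional[int]  # vaping or dabbing; None = no reported use
    compatible_imaging: bool     # infiltrates on CXR or ground-glass opacities on CT
    rule_out_testing_done: bool  # viral panel, influenza, and other indicated tests completed
    infection_identified: bool   # any of that testing came back positive
    infection_sole_cause: bool   # clinical judgment; only meaningful if an infection is found
    alternative_diagnosis: bool  # medical record suggests another diagnosis


def classify_evali_case(f: SurveillanceFindings) -> str:
    """Return 'confirmed', 'probable', or 'not a case' (epidemiologic use only)."""
    # Requirements shared by confirmed and probable cases (90-day window assumed inclusive).
    recent_use = (
        f.days_from_last_use_to_symptoms is not None
        and f.days_from_last_use_to_symptoms <= 90
    )
    if not recent_use or not f.compatible_imaging or f.alternative_diagnosis:
        return "not a case"

    # Probable: an infection is found but is not thought to be the sole cause.
    if f.infection_identified:
        return "not a case" if f.infection_sole_cause else "probable"

    # Confirmed: the rule-out testing was completed and all of it was negative.
    if f.rule_out_testing_done:
        return "confirmed"

    # Probable: no infection found, but the minimum rule-out testing was not met.
    return "probable"


if __name__ == "__main__":
    example = SurveillanceFindings(
        days_from_last_use_to_symptoms=30,
        compatible_imaging=True,
        rule_out_testing_done=True,
        infection_identified=False,
        infection_sole_cause=False,
        alternative_diagnosis=False,
    )
    print(classify_evali_case(example))  # prints "confirmed"
```

The function checks the requirements shared by both case types in a single early return. That structure makes it easier to see that confirmed and probable cases differ only in how infection has been handled.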

EPIDEMIOLOGY AND DEMOGRAPHICS

Although cases have been reported in all 50 states, the District of Columbia, and two US territories, geographic heterogeneity has been observed.3 Hospital admissions for EVALI reported to the CDC peaked in mid-September 2019 and declined through February 2020.3,8 Although the CDC is no longer reporting weekly numbers, cases continue to be reported in the literature, and current numbers are unclear.4,9,10 The decrease in cases since the peak is thought to be due to increased public awareness of the dangers associated with vaping (particularly with THC-containing products), law enforcement actions, and removal of vitamin E acetate from products.3,8

Risk factors associated with EVALI include younger age, male sex, and use of THC products.5,6 The median age of hospitalized patients diagnosed with EVALI is 24 years, with patients ranging from 13 to 85 years old.3 Overall, 66% of patients with EVALI were male, 82% reported use of a THC-containing product, and 57% reported use of a nicotine-containing product. Approximately 14% of patients reported exclusive nicotine use.3

Nearly half (44%) of hospitalized patients with EVALI reported to the CDC required intensive care.7 The 68 patients with fatal cases reported to the CDC were older, with a median age of 51 years (range, 15-75 years), and had higher rates of preexisting conditions, including obesity, asthma, cardiac disease, chronic obstructive pulmonary disease, and mental health disorders.7

HISTORICAL FEATURES

Patients with EVALI may initially present with a variety of respiratory, gastrointestinal, and constitutional symptoms (including fever, muscle aches, and fatigue).11 For this reason, clinicians should universally ask about vaping or dabbing as part of an exposure history, taking care to ensure confidentiality, especially in the adolescent or youth population.12 If the patient reports use, clinicians should document the types of devices, how they were obtained and used, the ingredients in the e-cigarette solution (e-liquid), and the presence of additives or flavorings.3,5,9,12 Patients may not volunteer this history, which can delay the diagnosis of EVALI.9,12 Although the CDC surveillance definition includes vaping within 90 days,3 the likelihood of EVALI decreases as time since last use increases, and use more than 1 month before symptom onset is unlikely to be related.11

PHYSICAL EXAM AND LABORATORY STUDIES

Physical assessment of a patient with EVALI may be notable for fever, tachypnea, hypoxemia, or tachycardia; rales may be present, but the exam is often otherwise unrevealing.5,11,12 Laboratory studies may show a mild leukocytosis with neutrophilic predominance and elevated inflammatory markers, including erythrocyte sedimentation rate and C-reactive protein. Procalcitonin may be normal or mildly increased, and, rarely, impaired renal function, hyponatremia, and mild transaminitis may also be present.5,7 As EVALI remains a diagnosis of exclusion, an infectious workup must be completed, which should include evaluation of respiratory viruses and influenza, as well as SARS-CoV-2 testing.11,12

IMAGING AND ADVANCED DIAGNOSTICS

CXR may show bilateral consolidative opacities.11 If the CXR is normal but EVALI is suspected, a CT scan can be considered for diagnostic purposes. Ground-glass opacities are often present on CT imaging (Figure 2), occasionally with subpleural sparing, although these findings are nonspecific. Less frequently, pneumomediastinum, pleural effusion, or pneumothorax may occur.6,11

Finally, bronchoscopy may be used to exclude other diagnoses if less invasive measures are not conclusive; pulmonary lipid-laden macrophages are associated with EVALI but are nonspecific.5 Cytology and/or biopsy can be used to eliminate other diagnoses but cannot confirm a diagnosis of EVALI.5

DIFFERENTIAL DIAGNOSIS

Hospitalists care for many patients with respiratory symptoms, particularly in the midst of the COVID-19 pandemic and influenza season. Common infectious etiologies that may present similarly include COVID-19, community-acquired pneumonia, influenza, and other viral respiratory illnesses. Hospitalists may rely on microbiologic testing to rule out these causes. If there is a history of vaping or dabbing and this testing is negative, EVALI should be considered more strongly. Recent case reports describe patients with EVALI who were presumed to have COVID-19 despite negative SARS-CoV-2 testing, resulting in delayed diagnosis.4,9 Two small case series suggest that leukocytosis, subpleural sparing on CT scan, vitamin E acetate or macrophages in bronchoalveolar lavage (BAL) fluid, and rapid improvement with steroids may favor a diagnosis of EVALI over COVID-19.4,10

Consultation with pulmonary, infectious disease, and toxicology specialists may be beneficial when the diagnosis remains unclear. Specific patient characteristics should guide additional evaluation, including consideration of less common diagnoses. For example, patients in certain geographic areas may need testing for endemic fungi, adolescents with recurrent respiratory illnesses may benefit from evaluation for structural lung disease or immunodeficiencies, and patients with impaired immune function need evaluation for Pneumocystis jirovecii infection.5 Diagnostic and treatment algorithms have been developed by the CDC, and Kalininskiy et al11 have also proposed a clinical algorithm.12,13

TREATMENT AND CLINICAL COURSE

Empiric treatment for typical infectious pathogens is often provided until evaluation is complete.11,12 Although no randomized clinical trials exist, the CDC and other treatment algorithms recommend supportive care and abstinence from vaping.11-13 Although there are limited data regarding dose and duration, case reports have noted clinical improvement with corticosteroids.6,11-13 Use of steroids can be considered in consultation with a pulmonologist based on the clinical picture, including severity of illness, coexisting infections, and comorbidities.6,11-13 Overall, the clinical course for hospitalized patients with EVALI is variable, but the majority improve with supportive therapy.11,12

Substance use and mental health screening should be performed during hospitalization, as appropriate social support and tobacco use treatment are essential components of care.13 The FDA and CDC recommend that people not use THC-containing e-cigarette or vaping products, particularly those obtained from informal sources. These agencies also recommend that youth, pregnant women, and all nonsmoking adults abstain from the use of any e-cigarette products.3 Resources for patients who use tobacco include the nationally available quit line, 1-800-QUIT-NOW, and Smokefree.gov. The CDC discharge readiness checklist also recommends follow-up with a primary care provider within 48 hours of discharge and a visit with a pulmonologist within 4 weeks, with the goal of improving management through earlier follow-up.13 Hospitalists should report confirmed or presumed cases to their local or state health department. Accurate diagnostic coding also helps track and care for patients with EVALI. As of April 1, 2020, a new International Classification of Diseases, 10th Revision (ICD-10) code established by the World Health Organization, U07.0, is available for vaping-related injury.14

FUTURE RESEARCH

As EVALI has only recently been described, further research on its prevention, etiology, pathophysiology, treatment, and outcomes is needed. Although the precise pathophysiology of EVALI remains unknown, vitamin E acetate, a diluent used in some THC-containing e-cigarette solutions, was detected in the BAL fluid of 48 of 51 patients with EVALI (94%) in one study.15 However, available evidence is not sufficient to rule out other toxins found in e-cigarette solutions.3 Longitudinal studies should follow patients with EVALI, with an emphasis on sustained tobacco use treatment, as the long-term effects of e-cigarette use remain unknown. Furthermore, although corticosteroids are often used, no clinical trials have assessed their efficacy, dose, or duration. Finally, because the CDC is no longer reporting cases, continued epidemiologic studies are necessary.

CONCLUSIONS AND IMPLICATIONS FOR CLINICAL CARE

EVALI, first reported in August 2019, is associated with vaping and e-cigarette use and may present with respiratory, gastrointestinal, and constitutional symptoms similar to those of COVID-19. Healthcare teams should universally screen patients for tobacco, vaping, and e-cigarette use. Most patients with EVALI improve with supportive care and abstinence from vaping and e-cigarettes. Tobacco cessation treatment, including access to pharmacotherapy and counseling, is critical for patients with EVALI. Additional treatment may include steroids in consultation with subspecialists. The pathophysiology and long-term effects of EVALI remain unclear. Hospitalists should continue to report cases to their local or state health department and use the ICD-10 code for EVALI.

1. Walley SC, Wilson KM, Winickoff JP, Groner J. A public health crisis: electronic cigarettes, vape, and JUUL. Pediatrics. 2019;143(6):e20182741. https://doi.org/10.1542/peds.2018-2741

2. Davidson K, Brancato A, Heetderks P, et al. Outbreak of electronic-cigarette-associated acute lipoid pneumonia—North Carolina, July-August 2019. MMWR Morb Mortal Wkly Rep. 2019;68(36):784-786. https://doi.org/10.15585/mmwr.mm6836e1

3. Centers for Disease Control and Prevention. Outbreak of lung injury associated with the use of e-cigarette, or vaping, products. Updated February 25, 2020. Accessed June 5, 2020. https://www.cdc.gov/tobacco/basic_information/e-cigarettes/severe-lung-disease.html

4. Callahan SJ, Harris D, Collingridge DS, et al. Diagnosing EVALI in the time of COVID-19. Chest. 2020;158(5):2034-2037. https://doi.org/10.1016/j.chest.2020.06.029

5. Aberegg SK, Maddock SD, Blagev DP, Callahan SJ. Diagnosis of EVALI: general approach and the role of bronchoscopy. Chest. 2020;158(2):820-827. https://doi.org/10.1016/j.chest.2020.02.018

6. Layden JE, Ghinai I, Pray I, et al. Pulmonary illness related to e-cigarette use in Illinois and Wisconsin—final report. N Engl J Med. 2020;382(10):903-916. https://doi.org/10.1056/NEJMoa1911614

7. Werner AK, Koumans EH, Chatham-Stephens K, et al. Hospitalizations and deaths associated with EVALI. N Engl J Med. 2020;382(17):1589-1598. https://doi.org/10.1056/NEJMoa1915314