Pediatric Hospitalist Workload and Sustainability in University-Based Programs: Results from a National Interview-Based Survey

Pediatric hospital medicine (PHM) has grown tremendously since Wachter first described the specialty in 1996.1 Evidence of this growth is seen most markedly at the annual Pediatric Hospitalist Meeting, which has experienced an increase in attendance from 700 in 2013 to over 1,200 in 2017.2 Although the exact number of pediatric hospitalists in the United States is unknown, the American Academy of Pediatrics Section on Hospital Medicine (AAP SOHM) estimates that approximately 3,000-5,000 pediatric hospitalists currently practice in the country (personal communication).

As PHM programs have grown, variability has been reported in the roles, responsibilities, and workload among practitioners. Gosdin et al.3 reported large ranges and standard deviations in workload among full-time equivalents (FTEs) in academic PHM programs. However, this study’s ability to account for important nuances in program description was limited given that its data were obtained from an online survey.

Program variability, particularly regarding clinical hours and overall clinical burden (eg, in-house hours, census caps, and weekend coverage), is concerning given the well-reported increase in physician burnout.4,5 Benchmarking data regarding the overall workload of pediatric hospitalists can offer nationally recognized guidance to assist program leaders in building successful programs. With this goal in mind, we sought to obtain data on university-based PHM programs to describe the current average workload for a 1.0 clinical FTE pediatric hospitalist and to assess the perceptions of program directors regarding the sustainability of the current workload.

METHODS

Study Design and Population

To obtain data with sufficient detail to compare programs, the authors, all of whom are practicing pediatric hospitalists at university-based programs, conducted structured interviews of PHM leaders in the United States. Given the absence of a single database for all PHM programs in the United States, the clinical division/program leaders of university-based programs were invited to participate through a post (with 2 reminders) to the AAP SOHM Listserv for PHM Division Leaders in May of 2017. To encourage participation, respondents were promised a summary of aggregate data. The study was exempted by the IRB of the University of Chicago.

Interview Content and Administration

The authors designed an 18-question structured interview regarding the current state of staffing in university-based PHM programs, with a focus on current descriptions of FTE, patient volume, and workload. Utilizing prior surveys3 as a basis, the authors iteratively determined the questions essential to understanding the programs’ current staffing models and ideal models. Considering the diversity of program models, interviews allowed for the clarification of questions and answers. A question regarding employment models was included to determine whether hospitalists were university-employed, hospital-employed, or a hybrid of the 2 modes of employment. The interview was also designed to establish a common language for work metrics (hours per year) for comparative purposes and to assess the perceived sustainability of the workload. Questions were provided in advance to provide respondents with sufficient time to collect data, thus increasing the accuracy of estimates. Respondents were asked, “Do you or your hospitalists have concerns about the sustainability of the model?” Sustainability was intentionally undefined to prevent limiting respondent perspective. For clarification, however, a follow-up comment that included examples was provided: “Faculty departures, reduction in total effort, and/or significant burn out.” The authors piloted the interview protocol by interviewing the division leaders of their own programs, and revisions were made based on feedback on feasibility and clarity. Finally, the AAP SOHM Subcommittee on Division Leaders provided feedback, which was incorporated.

Each author then interviewed 10-12 leaders (or their designees) during May and June of 2017. Answers were recorded in REDCap, an online survey and database tool that contains largely numeric data fields and has 1 field for narrative comments.

Data Analysis

Descriptive statistics were used to summarize interview responses, including median values with interquartile range. Data were compared between programs with models that were self-identified as either sustainable or unsustainable, with P-values for categorical variables from the χ2 test or Fisher’s exact test and for continuous variables from the Wilcoxon rank-sum test.
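As an illustration of the summary statistics and the categorical comparison described above, the following is a minimal sketch using only the Python standard library. It is not the authors' analysis code; the function names, the weekend values, and the 2x2 counts are hypothetical and chosen purely for demonstration. The quartile convention (inclusive) is also an assumption, because the source does not state which method was used.

```python
from math import comb
from statistics import median, quantiles


def median_iqr(values):
    """Return (median, Q1, Q3); the inclusive quartile method is an assumption."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q1, q3


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test P-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1, n = a + b, c + d, a + c, a + b + c + d

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum every table that is no more likely than the observed one.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Hypothetical weekends per year for a handful of programs (not study data).
weekends = [10, 12, 13, 15, 15, 16, 20, 21, 24]
print("median, Q1, Q3:", median_iqr(weekends))

# Hypothetical counts: rows = employment model, columns = unsustainable / sustainable.
print("Fisher P-value:", fisher_exact_two_sided(9, 5, 6, 13))
```

Fisher's exact test is typically preferred over the χ2 test when expected cell counts are small, which is plausible in a sample of this size.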

The Spearman correlation coefficient was used to evaluate the association between average protected time (defined as the percent of funded time for nonclinical roles) and percentage working full-time clinical effort. It was also used to evaluate the association of hours per year per 1.0 FTE and total weekends per year per 1.0 FTE with perceived sustainability. Linear regression was used to determine whether associations differed between groups identifying as sustainable versus unsustainable.
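For readers unfamiliar with the Spearman coefficient, it is the Pearson correlation computed on ranks, with tied values sharing their average rank. The sketch below shows that definition in plain Python; the helper names and the sample values are hypothetical and are not taken from the study data.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical program-level values (not study data).
protected_time_pct = [10, 15, 20, 20, 25, 30]
full_time_clinical_pct = [60, 50, 35, 30, 20, 10]
print("Spearman rho:", spearman(protected_time_pct, full_time_clinical_pct))
```

Because it depends only on ranks, the coefficient is insensitive to outlying programs with unusually high or low values, which suits skewed workload data.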

RESULTS

Participation and Program Characteristics

Administration

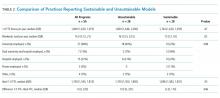

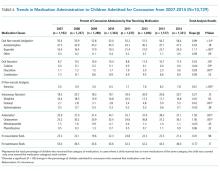

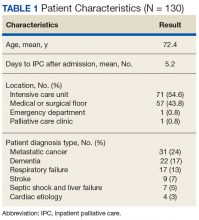

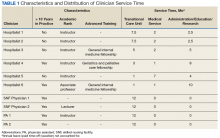

A wide variation was reported in the clinical time expected of a 1.0 FTE hospitalist. Clinical time for 1.0 FTE was defined as the amount of clinical service a full-time hospitalist is expected to complete in 12 months (Table 1). The median hours worked per year were 1800 (interquartile range [IQR] 1620, 1975; mean 1796). The median number of weekends worked per year was 15.0 (IQR 12.5, 21; mean 16.8). Only 30% of pediatric hospitalists were full-time clinicians, whereas the rest had protected time for nonclinical duties. The average amount of protected time was 20% per full-time hospitalist.

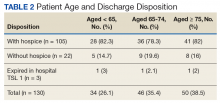

Sustainability and Ideal FTE

Half of the division leaders reported that they or their hospitalists have concerns about the sustainability of the current workload. Programs perceived as sustainable required significantly fewer weekends per year (13 vs. 16, P < .02; Table 2) than those perceived as unsustainable. University-employed programs were more likely to be perceived as unsustainable (64% unsustainable vs. 32% unsustainable, P < .048), whereas programs with other employment models were more likely to be perceived as sustainable (Table 2).

DISCUSSION

This study updates what has been previously reported about the structure and characteristics of university-based pediatric hospitalist programs.3 It also deepens our understanding of a relatively new field and the evolution of clinical coverage models. This evolution has been impacted by decreased resident work hours, increased patient complexity and acuity,6 and a broadened focus on care coordination and communication,7 while attempting to build and sustain a high-quality workforce.

This study is the first to use an interview-based method to determine the current PHM workload and to focus exclusively on university-based programs. Compared with the study by Gosdin et al,3 our study, which utilized interviews instead of surveys, was able to clarify questions and obtain workload data with a common language of hours per year. This approach allowed interviewees to incorporate subtleties, such as clinical vs. total FTE, in their responses. Our study found a slightly narrower range of clinical hours per year and extended the understanding of nonclinical duties by finding that university-based hospitalists have an average of 20% protected time from clinical duties.

In this study, we also explored the perceived sustainability of current clinical models and the ideal clinical model in hours per year. Half of respondents felt their current model was unsustainable. This result suggests that the field must continue to mitigate attrition and burnout.

Interestingly, the total number of clinical hours did not significantly differ in programs perceived to be unsustainable. Instead, a higher number of weekends worked and university employment were associated with lack of sustainability. We hypothesize that weekends have a disproportionate impact on work-life balance as compared with total hours, and that employment by a university may be a proxy for the increased academic and teaching demands of hospitalists without protected time. Future studies may better elucidate these findings and inform programmatic efforts to address sustainability.

Given that PHM is a relatively young field, considering the evolution of our clinical work model within the context of pediatric emergency medicine (PEM), a field that faces similar challenges in overnight and weekend staffing requirements, may be helpful. Gorelick et al.8 reported that total clinical work hours in PEM (combined academic and nonacademic programs) have decreased from 35.3 hours per week in 1998 to 26.7 in 2013. Extrapolated to an annual position with 5 weeks of PTO/CME, these numbers imply that the average PEM attending physician works 1254 clinical hours per year. This figure is markedly lower than the average 1800 clinical work hours for PHM found in our study.
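The extrapolation above can be reproduced with simple arithmetic. The sketch below assumes a 52-week year, so 5 weeks of PTO/CME leaves 47 working weeks; 26.7 hours per week over 47 weeks gives about 1254.9 hours, consistent with the figure of 1254 quoted in the text. The function name is hypothetical, and the 1998 annual figure is computed here only for comparison and is not reported in the source.

```python
WEEKS_PER_YEAR = 52  # assumption: the source does not state the year length used


def annual_clinical_hours(hours_per_week, weeks_off):
    """Annualize weekly clinical hours after subtracting weeks of PTO/CME."""
    return hours_per_week * (WEEKS_PER_YEAR - weeks_off)


pem_2013 = annual_clinical_hours(26.7, weeks_off=5)
pem_1998 = annual_clinical_hours(35.3, weeks_off=5)  # not reported in the source
phm_median = 1800  # median hours per year for 1.0 FTE from this study

print(f"PEM 2013: {pem_2013:.1f} hours per year")
print(f"PEM 1998: {pem_1998:.1f} hours per year")
print(f"PEM 2013 as a share of PHM: {pem_2013 / phm_median:.0%}")
```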

Although total hours trend lower in PEM, the authors noted continued challenges in sustainability, with an estimated half of all PEM respondents indicating a plan to reduce hours or leave the field in the next 5 years and endorsing symptoms of burnout.8 These findings from PEM may motivate PHM leaders to be more aggressive in adjusting work models toward sustainability in the future.

Our study has several limitations. We utilized a convenience sampling approach that requires the voluntary participation of division directors. Although we had robust interest from respondents representing all major geographic areas, the respondent pool might conceivably over-represent those most interested in understanding and/or changing PHM clinical models. Overall, our sample size was smaller than that achieved by a survey approach. Nevertheless, this limitation was offset by controlling respondent type and clarifying questions, thus improving the quality of our obtained data.

CONCLUSION

This interview-based study of PHM directors describes the current state of clinical work models for university-based hospitalists. University-based PHM programs have similar mean and median total clinical hours per year. However, these hours are higher than those considered ideal by PHM directors, and many are concerned about the sustainability of current work models. Notably, programs that are university-employed or have higher weekends worked per year are more likely to be perceived as unsustainable. Future studies should explore differences between programs with sustainable work models and those with high levels of attrition and burnout.

Disclosures

The authors have no other conflicts to report.

Funding

A grant from the American Academy of Pediatrics Section on Hospital Medicine funded this study through the Subcommittee on Division and Program Leaders.

1. Wachter RM, Goldman L. The emerging role of “hospitalists” in the American health care system. N Engl J Med. 1996;335(7):514-517. DOI: 10.1056/NEJM199608153350713.

2. Chang W. Record Attendance, Key Issues Highlight Pediatric Hospital Medicine’s 10th Anniversary.

3. Gosdin C, Simmons J, Yau C, Sucharew H, Carlson D, Paciorkowski N. Survey of academic pediatric hospitalist programs in the US: organizational, administrative, and financial factors. J Hosp Med. 2013;8(6):285-291. DOI: 10.1002/jhm.2020.

4. Hinami K, Whelan CT, Wolosin RJ, Miller JA, Wetterneck TB. Worklife and satisfaction of hospitalists: toward flourishing careers. J Gen Intern Med. 2011;27(1):28-36. DOI: 10.1007/s11606-011-1780-z.

5. Hinami K, Whelan CT, Miller JA, Wolosin RJ, Wetterneck TB. Job characteristics, satisfaction, and burnout across hospitalist practice models. J Hosp Med. 2012;7(5):402-410. DOI: 10.1002/jhm.1907.

6. Barrett DJ, McGuinness GA, Cunha CA, et al. Pediatric hospital medicine: a proposed new subspecialty. Pediatrics. 2017;139(3):1-9. DOI: 10.1542/peds.2016-1823.

7. Cawley P, Deitelzweig S, Flores L, et al. The key principles and characteristics of an effective hospital medicine group: an assessment guide for hospitals and hospitalists. J Hosp Med. 2014;9(2):123-128. DOI: 10.1002/jhm.2119.

8. Gorelick MH, Schremmer R, Ruch-Ross H, Radabaugh C, Selbst S. Current workforce characteristics and burnout in pediatric emergency medicine. Acad Emerg Med. 2016;23(1):48-54. DOI: 10.1111/acem.12845.

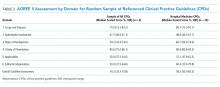

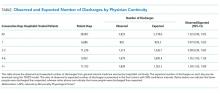

Half of the division leaders reported that they or their hospitalists have concerns about the sustainability of the current workload. Programs perceived as sustainable required significantly fewer weekends per year (13 vs. 16, P < .02; Table 2) than those perceived as unsustainable. University-employed programs were more likely to be perceived as unsustainable (64% unsustainable vs. 32% unsustainable, P < .048), whereas programs with other employment models were more likely to be perceived as sustainable (Table 2).

DISCUSSION

This study updates what has been previously reported about the structure and characteristics of university-based pediatric hospitalist programs.3 It also deepens our understanding of a relatively new field and the evolution of clinical coverage models. This evolution has been impacted by decreased resident work hours, increased patient complexity and acuity,6 and a broadened focus on care coordination and communication,7 while attempting to build and sustain a high-quality workforce.

This study is the first to use an interview-based method to determine the current PHM workload and to focus exclusively on university-based programs. Compared with the study by Gosdin et al,3 our study, which utilized interviews instead of surveys, was able to clarify questions and obtain workload data with a common language of hours per year. This approach allowed interviewees to incorporate subtleties, such as clinical vs. total FTE, in their responses. Our study found a slightly narrower range of clinical hours per year and extended the understanding of nonclinical duties by finding that university-based hospitalists have an average of 20% protected time from clinical duties.

In this study, we also explored the perceived sustainability of current clinical models and the ideal clinical model in hours per year. Half of respondents felt their current model was unsustainable. This result suggested that the field must continue to mitigate attrition and burnout.

Interestingly, the total number of clinical hours did not significantly differ in programs perceived to be unsustainable. Instead, a higher number of weekends worked and university employment were associated with lack of sustainability. We hypothesize that weekends have a disproportionate impact on work-life balance as compared with total hours, and that employment by a university may be a proxy for the increased academic and teaching demands of hospitalists without protected time. Future studies may better elucidate these findings and inform programmatic efforts to address sustainability.

Given that PHM is a relatively young field, considering the evolution of our clinical work model within the context of pediatric emergency medicine (PEM), a field that faces similar challenges in overnight and weekend staffing requirements, may be helpful. Gorelick et al.8 reported that total clinical work hours in PEM (combined academic and nonacademic programs) has decreased from 35.3 hours per week in 1998 to 26.7 in 2013. Extrapolating these numbers to an annual position with 5 weeks PTO/CME, the average PEM attending physician works 1254 clinical hours. These numbers demonstrate a marked difference compared with the average 1800 clinical work hours for PHM found in our study.

Although total hours trend lower in PEM, the authors noted continued challenges in sustainability with an estimated half of all PEM respondents indicating a plan to reduce hours or leave the field in the next 5 years and endorsing symptoms of burnout.6 These findings from PEM may motivate PHM leaders to be more aggressive in adjusting work models toward sustainability in the future.

Our study has several limitations. We utilized a convenience sampling approach that requires the voluntary participation of division directors. Although we had robust interest from respondents representing all major geographic areas, the respondent pool might conceivably over-represent those most interested in understanding and/or changing PHM clinical models. Overall, our sample size was smaller than that achieved by a survey approach. Nevertheless, this limitation was offset by controlling respondent type and clarifying questions, thus improving the quality of our obtained data.

CONCLUSION

This interview-based study of PHM directors describes the current state of clinical work models for university-based hospitalists. University-based PHM programs have similar mean and median total clinical hours per year. However, these hours are higher than those considered ideal by PHM directors, and many are concerned about the sustainability of current work models. Notably, programs that are university-employed or have higher weekends worked per year are more likely to be perceived as unsustainable. Future studies should explore differences between programs with sustainable work models and those with high levels of attrition and burnout.

Disclosures

The authors have no other conflicts to report.

Funding

A grant from the American Academy of Pediatrics Section on Hospital Medicine funded this study through the Subcommittee on Division and Program Leaders.

Pediatric hospital medicine (PHM) has grown tremendously since Wachter first described the specialty in 1996.1 Evidence of this growth is seen most markedly at the annual Pediatric Hospitalist Meeting, which has experienced an increase in attendance from 700 in 2013 to over 1,200 in 20172. Although the exact number of pediatric hospitalists in the United States is unknown, the American Academy of Pediatrics Section on Hospital Medicine (AAP SOHM) estimates that approximately 3,000-5,000 pediatric hospitalists currently practice in the country (personal communication).

As PHM programs have grown, variability has been reported in the roles, responsibilities, and workload among practitioners. Gosdin et al.3 reported large ranges and standard deviations in workload among full-time equivalents (FTEs) in academic PHM programs. However, this study’s ability to account for important nuances in program description was limited given that its data were obtained from an online survey.

Program variability, particularly regarding clinical hours and overall clinical burden (eg, in-house hours, census caps, and weekend coverage), is concerning given the well-reported increase in physician burn-out.4,5 Benchmarking data regarding the overall workload of pediatric hospitalists can offer nationally recognized guidance to assist program leaders in building successful programs. With this goal in mind, we sought to obtain data on university-based PHM programs to describe the current average workload for a 1.0 clinical FTE pediatric hospitalist and to assess the perceptions of program directors regarding the sustainability of the current workload.

METHODS

Study Design and Population

To obtain data with sufficient detail to compare programs, the authors, all of whom are practicing pediatric hospitalists at university-based programs, conducted structured interviews of PHM leaders in the United States. Given the absence of a single database for all PHM programs in the United States, the clinical division/program leaders of university-based programs were invited to participate through a post (with 2 reminders) to the AAP SOHM Listserv for PHM Division Leaders in May of 2017. To encourage participation, respondents were promised a summary of aggregate data. The study was exempted by the IRB of the University of Chicago.

Interview Content and Administration

The authors designed an 18-question structured interview regarding the current state of staffing in university-based PHM programs, with a focus on current descriptions of FTE, patient volume, and workload. Utilizing prior surveys3 as a basis, the authors iteratively determined the questions essential to understanding the programs’ current staffing models and ideal models. Considering the diversity of program models, interviews allowed for the clarification of questions and answers. A question regarding employment models was included to determine whether hospitalists were university-employed, hospital-employed, or a hybrid of the 2 modes of employment. The interview was also designed to establish a common language for work metrics (hours per year) for comparative purposes and to assess the perceived sustainability of the workload. Questions were provided in advance to provide respondents with sufficient time to collect data, thus increasing the accuracy of estimates. Respondents were asked, “Do you or your hospitalists have concerns about the sustainability of the model?” Sustainability was intentionally undefined to prevent limiting respondent perspective. For clarification, however, a follow-up comment that included examples was provided: “Faculty departures, reduction in total effort, and/or significant burn out.” The authors piloted the interview protocol by interviewing the division leaders of their own programs, and revisions were made based on feedback on feasibility and clarity. Finally, the AAP SOHM Subcommittee on Division Leaders provided feedback, which was incorporated.

Each author then interviewed 10-12 leaders (or designee) during May and June of 2017. Answers were recorded in REDCAP, an online survey and database tool that contains largely numeric data fields and has 1 field for narrative comments.

Data Analysis

Descriptive statistics were used to summarize interview responses, including median values with interquartile range. Data were compared between programs with models that were self-identified as either sustainable or unsustainable, with P-values in categorical variables from χ2-test or Fischer’s exact test and in continuous variables from Wilcoxon rank-sum test.

Spearman correlation coefficient was used to evaluate the association between average protected time (defined as the percent of funded time for nonclinical roles) and percentage working full-time clinical effort. It was also used to evaluate hours per year per 1.0 FTE and total weekends per year per 1.0 FTE and perceived sustainability. Linear regression was used to determine whether associations differed between groups identifying as sustainable versus unsustainable.

RESULTS

Participation and Program Characteristics

Administration

A wide variation was reported in the clinical time expected of a 1.0 FTE hospitalist. Clinical time for 1.0 FTE was defined as the amount of clinical service a full-time hospitalist is expected to complete in 12 months (Table 1). The median hours worked per year were 1800 (Interquartile range [IQR] 1620,1975; mean 1796). The median number of weekends worked per year was 15.0 (IQR 12.5, 21; mean 16.8). Only 30% of pediatric hospitalists were full-time clinicians, whereas the rest had protected time for nonclinical duties. The average amount of protected time was 20% per full-time hospitalist.

Sustainability and Ideal FTE

Half of the division leaders reported that they or their hospitalists have concerns about the sustainability of the current workload. Programs perceived as sustainable required significantly fewer weekends per year (13 vs. 16, P < .02; Table 2) than those perceived as unsustainable. University-employed programs were more likely to be perceived as unsustainable (64% unsustainable vs. 32% unsustainable, P < .048), whereas programs with other employment models were more likely to be perceived as sustainable (Table 2).

DISCUSSION

This study updates what has been previously reported about the structure and characteristics of university-based pediatric hospitalist programs.3 It also deepens our understanding of a relatively new field and the evolution of clinical coverage models. This evolution has been impacted by decreased resident work hours, increased patient complexity and acuity,6 and a broadened focus on care coordination and communication,7 while attempting to build and sustain a high-quality workforce.

This study is the first to use an interview-based method to determine the current PHM workload and to focus exclusively on university-based programs. Compared with the study by Gosdin et al,3 our study, which utilized interviews instead of surveys, was able to clarify questions and obtain workload data with a common language of hours per year. This approach allowed interviewees to incorporate subtleties, such as clinical vs. total FTE, in their responses. Our study found a slightly narrower range of clinical hours per year and extended the understanding of nonclinical duties by finding that university-based hospitalists have an average of 20% protected time from clinical duties.

In this study, we also explored the perceived sustainability of current clinical models and the ideal clinical model in hours per year. Half of respondents felt their current model was unsustainable. This result suggested that the field must continue to mitigate attrition and burnout.

Interestingly, the total number of clinical hours did not significantly differ in programs perceived to be unsustainable. Instead, a higher number of weekends worked and university employment were associated with lack of sustainability. We hypothesize that weekends have a disproportionate impact on work-life balance as compared with total hours, and that employment by a university may be a proxy for the increased academic and teaching demands of hospitalists without protected time. Future studies may better elucidate these findings and inform programmatic efforts to address sustainability.

Given that PHM is a relatively young field, it may be helpful to consider the evolution of our clinical work model within the context of pediatric emergency medicine (PEM), a field that faces similar challenges in overnight and weekend staffing requirements. Gorelick et al.8 reported that total clinical work hours in PEM (combined academic and nonacademic programs) have decreased from 35.3 hours per week in 1998 to 26.7 in 2013. Extrapolating these numbers to an annual position with 5 weeks PTO/CME, the average PEM attending physician works 1254 clinical hours per year. These numbers demonstrate a marked difference compared with the average 1800 clinical work hours per year for PHM found in our study.
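
The extrapolation above can be reproduced with a short sketch. It assumes a 52-week year with 5 weeks of PTO/CME (47 working weeks); the function and variable names are illustrative only. Under that assumption the 2013 figure works out to roughly 1254.9 hours, which the text reports as 1254.

```python
WEEKS_PER_YEAR = 52
WEEKS_OFF = 5  # PTO/CME weeks, as assumed in the text


def annual_clinical_hours(hours_per_week, weeks_off=WEEKS_OFF):
    """Scale a weekly clinical workload to a yearly total."""
    return hours_per_week * (WEEKS_PER_YEAR - weeks_off)


pem_1998 = annual_clinical_hours(35.3)  # weekly hours reported for 1998
pem_2013 = annual_clinical_hours(26.7)  # weekly hours reported for 2013
phm_mean = 1800                         # mean annual PHM hours from this study

print(f"PEM 1998: {pem_1998:.1f} h/yr")
print(f"PEM 2013: {pem_2013:.1f} h/yr")
print(f"PHM minus PEM (2013): {phm_mean - pem_2013:.1f} h/yr")
```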

Although total hours trend lower in PEM, the authors noted continued challenges in sustainability with an estimated half of all PEM respondents indicating a plan to reduce hours or leave the field in the next 5 years and endorsing symptoms of burnout.8 These findings from PEM may motivate PHM leaders to be more aggressive in adjusting work models toward sustainability in the future.

Our study has several limitations. We utilized a convenience sampling approach that requires the voluntary participation of division directors. Although we had robust interest from respondents representing all major geographic areas, the respondent pool might conceivably over-represent those most interested in understanding and/or changing PHM clinical models. Overall, our sample size was smaller than that achieved by a survey approach. Nevertheless, this limitation was offset by controlling respondent type and clarifying questions, thus improving the quality of our obtained data.

CONCLUSION

This interview-based study of PHM directors describes the current state of clinical work models for university-based hospitalists. University-based PHM programs have similar mean and median total clinical hours per year. However, these hours are higher than those considered ideal by PHM directors, and many are concerned about the sustainability of current work models. Notably, programs whose hospitalists are university-employed or work more weekends per year are more likely to be perceived as unsustainable. Future studies should explore differences between programs with sustainable work models and those with high levels of attrition and burnout.

Disclosures

The authors have no other conflicts to report.

Funding

A grant from the American Academy of Pediatrics Section on Hospital Medicine funded this study through the Subcommittee on Division and Program Leaders.

1. Wachter RM, Goldman L. The emerging role of “hospitalists” in the American health care system. N Engl J Med. 1996;335(7):514-517. DOI: 10.1056/NEJM199608153350713.

2. Chang W. Record Attendance, Key Issues Highlight Pediatric Hospital Medicine’s 10th Anniversary.

3. Gosdin C, Simmons J, Yau C, Sucharew H, Carlson D, Paciorkowski N. Survey of academic pediatric hospitalist programs in the US: organizational, administrative, and financial factors. J Hosp Med. 2013;8(6):285-291. DOI: 10.1002/jhm.2020.

4. Hinami K, Whelan CT, Wolosin RJ, Miller JA, Wetterneck TB. Worklife and satisfaction of hospitalists: toward flourishing careers. J Gen Intern Med. 2011;27(1):28-36. DOI: 10.1007/s11606-011-1780-z.

5. Hinami K, Whelan CT, Miller JA, Wolosin RJ, Wetterneck TB. Job characteristics, satisfaction, and burnout across hospitalist practice models. J Hosp Med. 2012;7(5):402-410. DOI: 10.1002/jhm.1907.

6. Barrett DJ, McGuinness GA, Cunha CA, et al. Pediatric hospital medicine: a proposed new subspecialty. Pediatrics. 2017;139(3):1-9. DOI: 10.1542/peds.2016-1823.

7. Cawley P, Deitelzweig S, Flores L, et al. The key principles and characteristics of an effective hospital medicine group: an assessment guide for hospitals and hospitalists. J Hosp Med. 2014;9(2):123-128. DOI: 10.1002/jhm.2119.

8. Gorelick MH, Schremmer R, Ruch-Ross H, Radabaugh C, Selbst S. Current workforce characteristics and burnout in pediatric emergency medicine. Acad Emerg Med. 2016;23(1):48-54. DOI: 10.1111/acem.12845.

© 2018 Society of Hospital Medicine

Cardiac Troponins in Low-Risk Pulmonary Embolism Patients: A Systematic Review and Meta-Analysis

Hospital stays for pulmonary embolism (PE) represent a significant cost burden to the United States healthcare system.1 The mean total hospitalization cost for treating a patient with PE ranges widely from $8,764 to $37,006, with an average reported length of stay between 4 and 5 days.2,3 This cost range is attributed to many factors, including type of PE, therapy-induced bleeding risk requiring close monitoring, comorbidities, and social determinants of health. Given that patients with low-risk PE represent the majority of cases, changes in approaches to care for this population can significantly impact the overall healthcare costs for PE. The European Society of Cardiology (ESC) guidelines incorporate well-validated risk scores, known as the pulmonary embolism severity index (PESI) and the simplified PESI (sPESI) score, and diagnostic test recommendations, including troponin testing, echocardiography, and computed tomography, to evaluate patients with PE at varying risk for mortality.4 In these guidelines, the risk stratification algorithm for patients with a low PESI score or an sPESI score of zero does not include checking for the presence of troponin. In reality, practicing hospitalists frequently find that patients receiving a workup in the emergency department for suspected PE undergo troponin testing. The ESC guidelines categorize patients with a low-risk score on PESI/sPESI who subsequently have a positive troponin status as intermediate low-risk and suggest consideration of hospitalization. The guidelines recommend that patients with positive cardiac biomarkers undergo assessment of right ventricular function through echocardiogram or computed tomography analysis. Moreover, the guidelines support early discharge or ambulatory treatment for low-risk patients who have a negative troponin status.4

The American College of Chest Physicians (ACCP) guidelines on venous thromboembolism (VTE) recommend that cardiac biomarkers should not be measured routinely in all patients with PE and that positive troponin status should discourage physicians from pursuing ambulatory treatment.5 Therefore, ambiguity lies within both guidelines with regard to how hospitalists should interpret a positive troponin status in patients with low risk, which in turn may lead to unnecessary hospitalizations and further imaging. This systematic review and meta-analysis aims to provide clarity, both about gaps in literature and about how practicing hospitalists should interpret troponins in patients with low-risk PE.

METHODS

Data Sources and Searches

This systematic review and meta-analysis was performed in accordance with established methods and the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) guidelines. We searched MEDLINE, SCOPUS, and Cochrane Controlled Trial Registry databases for studies published from inception to December 2016 by using the following key words: pulmonary embolism AND PESI OR “pulmonary embolism severity index.” Only articles written in English were included. The full articles of potentially eligible studies were reviewed, and articles published only in abstract form were excluded.

Study Selection

Two investigators independently assessed the abstract of each article, and the full article was assessed if it fulfilled the following criteria: (1) the publication must be original; (2) inclusion of objectively diagnosed, hemodynamically stable patients (normotensive patients) with acute PE in the inpatient or outpatient setting; (3) inclusion of patients >19 years old; (4) use of the PESI or sPESI model to stratify patients into a low-risk group irrespective of any evidence of right ventricular dysfunction; and (5) testing of cardiac troponin levels (TnI-troponin I, TnT-troponin T, or hs-TnI/TnT-high sensitivity troponin I/T) in patients. Study design, sample size, duration of follow-up, type of troponin used, definition of hemodynamic stability, and specific type of outcome measured (endpoint) did not affect the study eligibility.

Data Extraction and Risk of Bias Assessment

Statistical Analysis

Data were summarized by using 30-day all-cause mortality only because it is the most consistent endpoint reported by all of the included studies. For each study, 30-day all-cause mortality was analyzed across the 2 troponin groups, and the results were summarized in terms of positive predictive value (PPV), negative predictive value (NPV), positive likelihood ratio (PLR), negative likelihood ratio (NLR), and odds ratio (OR). To quantify the uncertainty in the LRs and ORs, we calculated 95% confidence intervals (CI).
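
As an illustration of how these summary measures relate to a single study's 2×2 table (troponin status by 30-day death), the following sketch computes them from cell counts. The counts are hypothetical and are not taken from any included study; the class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: float  # troponin-positive, died within 30 days
    fp: float  # troponin-positive, survived
    fn: float  # troponin-negative, died within 30 days
    tn: float  # troponin-negative, survived


def prognostic_summary(t):
    """Return PPV, NPV, PLR, NLR, and OR for one 2x2 table."""
    sensitivity = t.tp / (t.tp + t.fn)
    specificity = t.tn / (t.tn + t.fp)
    return {
        "PPV": t.tp / (t.tp + t.fp),
        "NPV": t.tn / (t.tn + t.fn),
        "PLR": sensitivity / (1 - specificity),
        "NLR": (1 - sensitivity) / specificity,
        "OR": (t.tp * t.tn) / (t.fp * t.fn),
    }


# Hypothetical counts, chosen only to show the calculation.
example = TwoByTwo(tp=4, fp=46, fn=2, tn=148)
summary = prognostic_summary(example)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

Note that the OR equals PLR divided by NLR, so the three measures are not independent summaries of the same table.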

Overall measures of PPV, NPV, PLR, and NLR were calculated on the pooled collection of data from the studies. LRs are one of the best measures of diagnostic accuracy; therefore, we defined the degree of change in the probability of the outcome based on simple estimations that were reported by McGee.6 These estimations are independent of pretest probability and include the following: a PLR of 5.0 increases the probability of the outcome by about 30%, whereas an NLR of 0.20 decreases the probability of the outcome by about 30%. To identify reasonable performance, we defined a PLR > 5 as a moderate to high increase in probability and an NLR < 0.20 as a moderate to high decrease in probability.6
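
For readers who want to see how a likelihood ratio shifts probability, the sketch below applies the exact odds-based conversion (posttest odds = pretest odds × LR) at a few assumed pretest probabilities. The pretest values are illustrative, not study data. The exact shift varies somewhat with the pretest probability, so the 30% figure is best read as a bedside approximation.

```python
def posttest_probability(pretest, lr):
    """Convert a pretest probability to a posttest probability via odds."""
    pretest_odds = pretest / (1 - pretest)
    posttest_odds = pretest_odds * lr
    return posttest_odds / (1 + posttest_odds)


# Assumed pretest probabilities, for illustration only.
for pretest in (0.10, 0.30, 0.50, 0.70):
    up = posttest_probability(pretest, 5.0)     # PLR threshold from the text
    down = posttest_probability(pretest, 0.20)  # NLR threshold from the text
    print(
        f"pretest {pretest:.0%}: LR 5 -> {up:.1%} "
        f"({(up - pretest) * 100:+.1f} points), "
        f"LR 0.2 -> {down:.1%} ({(down - pretest) * 100:+.1f} points)"
    )
```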

The overall association between 30-day all-cause mortality and troponin classification among patients with low-risk PE was assessed using a mixed effects logistic regression model. The model included a random intercept to account for the correlation among the measurements for patients within a study. The exponentiated regression coefficient for troponin classification is the OR for 30-day all-cause mortality, comparing troponin-positive patients to troponin-negative patients. The OR is reported with a 95% CI and a P value. A continuity correction (correction = 0.5) was applied to zero cells. Heterogeneity was measured using Cochran's Q statistic and the Higgins I² statistic.
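
The continuity correction and the heterogeneity statistics can be sketched as follows. This is not the mixed effects logistic regression used in the analysis; it substitutes a simpler fixed-effect, inverse-variance pooling of per-study log ORs so that Cochran's Q and I² can be shown in a few lines. Adding 0.5 to every cell of a table that contains a zero is a common convention and is an assumption here. All counts are hypothetical.

```python
import math


def log_or_with_correction(a, b, c, d, correction=0.5):
    """Log OR and its standard error for one study.

    a, b: deaths and survivors among troponin-positive patients
    c, d: deaths and survivors among troponin-negative patients
    If any cell is zero, `correction` is added to all four cells
    (an assumed convention; the source may have handled this differently).
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + correction for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


def heterogeneity(effects, ses):
    """Fixed-effect pooled estimate, Cochran's Q, and I² (percent)."""
    weights = [1 / se**2 for se in ses]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i2


# Hypothetical studies: (deaths+, survivors+, deaths-, survivors-)
studies = [(3, 40, 1, 90), (1, 30, 0, 70), (2, 50, 1, 110)]
results = [log_or_with_correction(*s) for s in studies]
pooled, q, i2 = heterogeneity([r[0] for r in results], [r[1] for r in results])
print(f"pooled OR (fixed effect): {math.exp(pooled):.2f}")
print(f"Cochran's Q: {q:.2f}, I2: {i2:.1f}%")
```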

RESULTS

Search Results

Figure 1 represents the PRISMA flow diagram for literature search and selection process to identify eligible studies for inclusion.

Study Characteristics

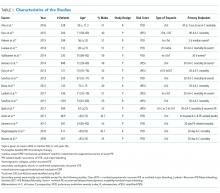

The abstracts of 117 articles were initially identified using the search strategy described above. Of these, 18 articles were deemed appropriate for review based on the criteria outlined in “Study Selection.” The full-text articles of the selected studies were obtained. Upon further evaluation, we identified 16 articles (Figure 1) eligible for the systematic review. Two studies were excluded because they did not provide the number of study participants that met the primary endpoints. The included studies were published from 2009–2016 (Table 1). For patients with low-risk PE, the number of patients with right ventricle dysfunction was either difficult to determine or not reported in all the studies.

Regarding study design, 11 studies were described as prospective cohorts and the remaining 5 studies were identified as retrospective (Table 1). Seven studies stratified participants’ risk of mortality by using sPESI, and 8 studies employed the PESI score. A total of 6952 participants diagnosed with PE were obtained, and 2662 (38%) were recognized as being low-risk based on either the PESI or sPESI. The sample sizes of the individual studies ranged from 121 to 1,291. The studies used either hs-cTnT, hs-cTnI, cTnT, cTnI, or a combination of hs-cTnT and cTnI or cTnT for troponin assay. Most studies used a pre-defined cut-off value to determine positive or negative troponin status.

Thirteen studies reported 30-day event rate as one of the primary endpoints. The 3 other studies included 90-day all-cause mortality, and 2 of them included in-hospital events. Secondary event rates were only reported in 4 studies and consisted of nonfatal PE, nonfatal major bleeding, and PE-related mortality.

Our systematic review revealed that 5 of the 16 studies used either hemodynamic decompensation, cardiopulmonary resuscitation, mechanical ventilation, or a combination of any of these parameters as part of their primary or secondary endpoint. However, none of the studies specified the number of patients that reached any of these endpoints. Furthermore, 10 of the 16 studies did not specify 30-day PE-related mortality outcomes. The most common endpoint was 30-day all-cause mortality, and only 7 studies reported outcomes with positive or negative troponin status.

Outcome Data of All Studies

A total of 2662 participants were categorized as being low risk based on the PESI or sPESI risk score. The pooled rate of PE-related mortality (specified and inferred) was 5 (0.46%) from 6 studies (1,093 patients), in which only 2 studies specified PE-related mortality as the primary endpoint (Vanni [2011]19 and Jimenez [2011]20). The pooled rate of 30-day all-cause mortality was 24 (1.3%) from 12 studies (1882 patients). In 14 studies (2163 patients), the rates of recurrence of PE and major bleeding were 3 (0.14%) and 6 (0.28%), respectively.

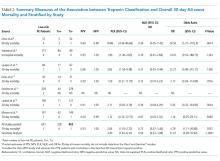

Outcomes of Studies with Corresponding Troponin+ and Troponin –

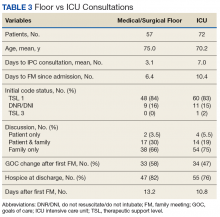

Seven studies used positive or negative troponin status as an endpoint to assess low-risk participants (Table 2). However, only 5 studies were included in the final meta-analysis because some data were missing in the Sanchez14 study and the Ozsu8 study’s mortality endpoint was more than 30 days. The risk of bias within the studies was evaluated, and for most studies, the quality was of moderate degree (Supplementary Table 1). Table 2 shows the results for the overall pooled data stratified by study. In the pooled data, 463 (67%) patients tested negative for troponin and 228 (33%) tested positive. The overall mortality (from sensitivity analysis) including in-hospital, 30-day, and 90-day mortalities was 1.2%. The NPVs for all individual studies and the overall NPV are 1 or approximately 1. The PPVs, overall and by study, were low, ranging from 0 to 0.60. The PLRs and NLRs were not estimated for an outcome within an individual study if none of the patients experienced the outcome. When outcomes were only observed among troponin-negative patients, such as in the study of Moores (2009)22, which used 30-day all-cause mortality, the PLR had a value of zero. When outcomes were only observed among troponin-positive patients, as for 30-day all-cause mortality in the Hakemi (2015)9, Lauque (2014)10, and Lankeit (2011)16 studies, the NLR had a value of zero. For zero cells, a continuity correction of 0.5 was applied. The pooled likelihood ratios (LRs) for all-cause mortality were positive LR 2.04 (95% CI, 1.53 to 2.72) and negative LR 0.72 (95% CI, 0.37 to 1.40). The OR for all-cause mortality was 4.79 (95% CI, 1.11 to 20.68; P = .0357).
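
As a consistency check on the reported OR, the sketch below back-calculates the standard error and a two-sided P value from the OR and its 95% CI. It assumes a Wald-type interval that is symmetric on the log-odds scale, which is an assumption about how the interval was constructed; the function name is illustrative only. Under that assumption the recovered P value is close to the reported .0357.

```python
import math


def wald_p_from_ci(estimate, lower, upper, z_crit=1.959964):
    """Recover SE, z, and two-sided P from a ratio estimate and its 95% CI.

    Assumes the CI is exp(log(estimate) +/- z_crit * SE).
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(estimate) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return se, z, p


# Reported OR and 95% CI for 30-day all-cause mortality.
se, z, p = wald_p_from_ci(4.79, 1.11, 20.68)
print(f"SE(log OR) = {se:.3f}, z = {z:.2f}, P = {p:.4f}")

# The reported troponin split is also internally consistent.
negative, positive = 463, 228
total = negative + positive
print(f"negative: {negative / total:.0%}, positive: {positive / total:.0%}")
```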

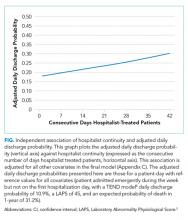

A forest plot was created to visualize the PLR from each study included in the main analysis (Figure 2).

A sensitivity analysis among troponin-positive patients was conducted using 90-day all-cause mortality outcome from the study of Ozsu8 (2015) and the 2 all-cause mortality outcomes from the study of Sanchez14 (2013). The pooled estimates from the 30-day all-cause mortality differed slightly from those previously reported. The PLR increased to 3.40 (95% CI 1.81 to 6.37), and the NLR decreased to 0.59 (95% CI 0.33 to 1.08).

DISCUSSION

In this meta-analysis of 5 studies, which included 691 patients with low-risk PESI or sPESI scores, those who tested positive for troponin had nearly a fivefold increase in the odds of 30-day all-cause mortality compared with patients who tested negative. However, the clinical significance of this association is unclear given that the CI is quite wide and mortality could be associated with PE or with other causes. Similar results were reported by other meta-analyses that consisted of patients with normotensive PE.23-25 To our knowledge, the present meta-analysis is the first to report outcomes in patients with low-risk PE stratified by the presence of cardiac troponin.

A published paper on simplifying the clinical interpretation of LRs states that a positive LR of greater than 5 and a negative LR of less than 0.20 provide dependable evidence regarding reasonable prognostic performance.6 In our analysis, the positive LR was less than 5 and the negative LR’s CI included one. These results suggest a small statistical probability that a patient with a low PESI/sPESI score and a positive troponin status would benefit from inpatient monitoring; simultaneously, a negative troponin does not necessarily translate to safe outpatient therapy, based on our statistical analysis. Previous studies also reported nonextreme positive LRs.23,24 We therefore conclude that low-risk PE patients with positive troponins may be eligible for safe ambulatory treatment or early discharge. However, the outcome of interest (mortality) occurred in only 6 of the 228 patients who had positive troponin status. The majority of deaths were reported by Hakemi et al.9 in their retrospective cohort study; as such, drawing conclusions is difficult. Furthermore, the low 30-day all-cause mortality rate of 2.6% in the positive troponin group may have been affected by close monitoring of the patients, who commonly received hemodynamic and oxygen support. Based on these factors, our conclusion is relatively weak, and we cannot recommend a change in practice compared to existing guidelines. In general, additional prospective research is needed to determine whether patients with low-risk PE who test positive for troponin can receive care safely outside the hospital or, rather, require hospitalization similar to patients with intermediate-high risk PE.

We identified a number of other limitations in our analysis. First, aside from the relatively small number of pertinent studies in the literature, most of the studies are of low-moderate quality. Second, the troponin classification in various studies was not conducted using the same assay, and the cut-off value determining positive versus negative results in each case may have differed. These differences may have created some ambiguity or misclassification when the data were pooled together. Third, although the mixed effects logistic regression model controls for some of the variations among patients enrolled in different studies, significant differences exist in terms of patient characteristics or the protocol for follow-up care. This aspect was unaccounted for in this analysis. Lastly, pooled outcome events could not be retrieved from all of the included studies, which may have resulted in a misrepresentation of the true outcomes.

The ESC guidelines suggest avoiding cardiac biomarker testing in patients with low-risk PE because this practice does not have therapeutic implications. Moreover, ESC and ACCP guidelines both state that a positive cardiac biomarker should discourage treatment out of the hospital. The ACCP guidelines further encourage testing of cardiac biomarkers and/or evaluating right ventricular function via echocardiography when uncertainty exists regarding whether patients may require close in-hospital monitoring or not. Although no resounding evidence suggests that troponins have therapeutic implications in patients with low-risk PE, the current guidelines and our meta-analysis cannot offer an overwhelmingly convincing recommendation about whether or not patients with low-risk PE and positive cardiac biomarkers are best treated in the ambulatory or inpatient setting. Such patients may benefit from monitoring in an observation unit (eg, less than 24 or 48 hours), rather than requiring a full admission to the hospital. Nevertheless, our analysis shows that making this determination will require prospective studies that will utilize cardiac troponin status in predicting PE-related events, such as arrhythmia, acute respiratory failure, and hemodynamic decompensation, rather than all-cause mortality.

Until further studies, hospitalists should integrate the use of cardiac troponin and other clinical data, including those available from patient history, physical exam, and other laboratory testing, in determining whether or not to admit, observe, or discharge patients with low-risk PE. As the current guidelines recommend, we support consideration of right ventricular function assessment, via echocardiogram or computed tomography, in patients with positive cardiac troponins even when their PESI/sPESI score is low.

ACKNOWLEDGMENTS

The authors would like to thank Megan Therese Smith, PhD and Lishi Zhang, MS for their contribution in providing a comprehensive statistical analysis of this meta-analysis.

Disclosures

The authors declare no conflicts of interest in the work under consideration for publication. Abdullah Mahayni and Mukti Patel, MD also declared no conflicts of interest with regard to the relevant financial activities outside the submitted work. Omar Darwish, DO and Alpesh Amin, MD also declared no relevant financial activities outside the submitted work; they are speakers for Bristol-Myers Squibb and Pfizer regarding the anticoagulant apixaban for treatment of venous thromboembolism and atrial fibrillation.

1. Grosse SD, Nelson RE, Nyarko KA, Richardson LC, Raskob GE. The economic burden of incident venous thromboembolism in the United States: A review of estimated attributable healthcare costs. Thromb Res. 2016;137:3-10.

2. Fanikos J, Rao A, Seger AC, Carter D, Piazza G, Goldhaber SZ. Hospital Costs of Acute Pulmonary Embolism. Am J Med. 2013;126(2):127-132.

3. LaMori JC, Shoheiber O, Mody SH, Bookart BK. Inpatient Resource Use and Cost Burden of Deep Vein Thrombosis and Pulmonary Embolism in the United States. Clin Ther. 2015;37(1):62-70.

4. Konstantinides S, Torbicki A, Agnelli G, Danchin N, Fitzmaurice D, Galié N, et al. 2014 ESC Guidelines on the diagnosis and management of acute pulmonary embolism. The Task Force for the Diagnosis and Management of Acute Pulmonary Embolism of the European Society of Cardiology (ESC). Eur Heart J. 2014;35(43):3033-3080.

5. Kearon C, Akl EA, Ornelas J, Blaivas A, Jimenez D, Bounameaux H, et al. Antithrombotic Therapy for VTE Disease: CHEST Guideline and Expert Panel Report. Chest. 2016;149(2):315-352.

6. McGee S. Simplifying Likelihood Ratios. J Gen Intern Med. 2002;17(8):647-650.

7. Ahn S, Lee Y, Kim WY, Lim KS, Lee J. Prognostic Value of Treatment Setting in Patients With Cancer Having Pulmonary Embolism: Comparison With the Pulmonary Embolism Severity Index. Clin Appl Thromb Hemost. 2016;23(6):615-621.

8. Ozsu S, Bektas H, Abul Y, Ozlu T, Örem A. Value of Cardiac Troponin and sPESI in Treatment of Pulmonary Thromboembolism at Outpatient Setting. Lung. 2015;193(4):559-565.

9. Hakemi EU, Alyousef T, Dang G, Hakmei J, Doukky R. The prognostic value of undetectable highly sensitive cardiac troponin I in patients with acute pulmonary embolism. Chest. 2015;147(3):685-694.

10. Lauque D, Maupas-Schwalm F, Bounes V, et al. Predictive Value of the Heart‐type Fatty Acid–binding Protein and the Pulmonary Embolism Severity Index in Patients With Acute Pulmonary Embolism in the Emergency Department. Acad Emerg Med. 2014;21(10):1143-1150.

11. Vuilleumier N, Limacher A, Méan M, Choffat J, Lescuyer P, Bounameaux H, et al. Cardiac biomarkers and clinical scores for risk stratification in elderly patients with non‐high‐risk pulmonary embolism. J Intern Med. 2014;277(6):707-716.

12. Jiménez D, Kopecna D, Tapson V, et al. Derivation and validation of multimarker prognostication for normotensive patients with acute symptomatic pulmonary embolism. Am J Respir Crit Care Med. 2014;189(6):718-726.

13. Ozsu S, Abul Y, Orem A, et al. Predictive value of troponins and simplified pulmonary embolism severity index in patients with normotensive pulmonary embolism. Multidiscip Respir Med. 2013;8(1):34.

14. Sanchez O, Trinquart L, Planquette B, et al. Echocardiography and pulmonary embolism severity index have independent prognostic roles in pulmonary embolism. Eur Respir J. 2013;42(3):681-688.

15. Barra SN, Paiva L, Providéncia R, Fernandes A, Nascimento J, Marques AL. LR–PED Rule: Low Risk Pulmonary Embolism Decision Rule–A new decision score for low risk Pulmonary Embolism. Thromb Res. 2012;130(3):327-333.

16. Lankeit M, Jiménez D, Kostrubiec M, et al. Predictive Value of the High-Sensitivity Troponin T Assay and the Simplified Pulmonary Embolism Severity Index in Hemodynamically Stable Patients With Acute Pulmonary Embolism: A Prospective Validation Study. Circulation. 2011;124(24):2716-2724.

17. Sánchez D, De Miguel J, Sam A, et al. The effects of cause of death classification on prognostic assessment of patients with pulmonary embolism. J Thromb Haemost. 2011;9(11):2201-2207.

18. Spirk D, Aujesky D, Husmann M, et al. Cardiac troponin testing and the simplified Pulmonary Embolism Severity Index. J Thromb Haemost. 2011;105(05):978-984.

19. Vanni S, Nazerian P, Pepe G, et al. Comparison of two prognostic models for acute pulmonary embolism: clinical vs. right ventricular dysfunction‐guided approach. J Thromb Haemost. 2011;9(10):1916-1923.

20. Jiménez D, Aujesky D, Moores L, et al. Combinations of prognostic tools for identification of high-risk normotensive patients with acute symptomatic pulmonary embolism. Thorax. 2011;66(1):75-81.

21. Singanayagam A, Scally C, Al-Khairalla MZ, et al. Are biomarkers additive to pulmonary embolism severity index for severity assessment in normotensive patients with acute pulmonary embolism? QJM. 2010;104(2):125-131.

22. Moores L, Aujesky D, Jimenez D, et al. Pulmonary Embolism Severity Index and troponin testing for the selection of low‐risk patients with acute symptomatic pulmonary embolism. J Thromb Haemost. 2009;8(3):517-522.

23. Bajaj A, Rathor P, Sehgal V, et al. Prognostic Value of Biomarkers in Acute Non-massive Pulmonary Embolism; A Systematic Review and Meta-Analysis. Lung. 2015;193(5):639-651.

24. Jiménez D, Uresandi F, Otero R, et al. Troponin-based risk stratification of patients with acute nonmassive pulmonary embolism; a systematic review and metaanalysis. Chest. 2009;136(4):974-982.

25. Becattini C, Vedovati MC, Agnelli G. Prognostic Value of Troponins in Acute Pulmonary Embolism: A Meta-Analysis. Circulation. 2007;116(4):427-433.

Hospital stays for pulmonary embolism (PE) represent a significant cost burden to the United States healthcare system.1 The mean total hospitalization costs for treating a patient with PE ranges widely from $8,764 to $37,006, with an average reported length of stay between 4 and 5 days.2,3 This cost range is attributed to many factors, including type of PE, therapy-induced bleeding risk requiring close monitoring, comorbidities, and social determinants of health. Given that patients with low-risk PE represent the majority of the cases, changes in approaches to care for this population can significantly impact the overall healthcare costs for PE. The European Society of Cardiology (ESC) guidelines incorporate well-validated risk scores, known as the pulmonary embolism severity index (PESI) and the simplified PESI (sPESI) score, and diagnostic test recommendations, including troponin test, echocardiography, and computed tomography, to evaluate patients with PE at varying risk for mortality.4 In these guidelines, the risk stratification algorithm for patients with a low PESI score or a sPESI score of zero does not include checking for the presence of troponin. In reality, practicing hospitalists frequently find that patients receiving a workup in the emergency department for suspected PE undergo troponin test. The ESC guidelines categorize patients with a low-risk score on PESI/sPESI, who subsequently have a positive troponin status, as intermediate low-risk and suggest consideration of hospitalization. The guidelines recommend patients with positive cardiac biomarkers to undergo assessment of right ventricular function through echocardiogram or computed tomography analysis. Moreover, the guidelines support early discharge or ambulatory treatment for low-risk patients who have a negative troponin status.4

The American College of Chest Physicians (ACCP) guidelines on venous thromboembolism (VTE) recommend that cardiac biomarkers should not be measured routinely in all patients with PE and that positive troponin status should discourage physicians from pursuing ambulatory treatment.5 Therefore, ambiguity lies within both guidelines with regard to how hospitalists should interpret a positive troponin status in patients with low risk, which in turn may lead to unnecessary hospitalizations and further imaging. This systematic review and meta-analysis aims to provide clarity, both about gaps in literature and about how practicing hospitalists should interpret troponins in patients with low-risk PE.

METHODS

Data Sources and Searches

This systematic review and meta-analysis was performed in accordance with the established methods and Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) guidelines. We searched MEDLINE, SCOPUS, and Cochrane Controlled Trial Registry databases for studies published from inception to December 2016 by using the following key words: pulmonary embolism AND PESI OR “pulmonary embolism severity index.” Only articles written in English language were included. The full articles of potentially eligible studies were reviewed, and articles published only in abstract form were excluded.

Study Selection

Two investigators independently assessed the abstract of each article, and the full article was assessed if it fulfilled the following criteria: (1) the publication must be original; (2) inclusion of objectively diagnosed, hemodynamically stable patients (normotensive patients) with acute PE in the inpatient or outpatient setting; (3) inclusion of patients>19 years old; (4) use of the PESI or sPESI model to stratify patients into a low-risk group irrespective of any evidence of right ventricular dysfunction; and (5) testing of cardiac troponin levels (TnI-troponin I, TnT-troponin T, or hs-TnI/TnT-high sensitivity troponin I/T) in patients. Study design, sample size, duration of follow-up, type of troponin used, definition of hemodynamic stability, and specific type of outcome measured (endpoint) did not affect the study eligibility.

Data Extraction and Risk of Bias Assessment

Statistical Analysis

Data were summarized by using 30-day all-cause mortality only because it is the most consistent endpoint reported by all of the included studies. For each study, 30-day all-cause mortality was analyzed across the 2 troponin groups, and the results were summarized in terms of positive predictive value (PPV), negative predictive value (NPV), positive likelihood ratio (PLR), negative likelihood ratio (NLR), and odds ratio (OR). To quantify the uncertainty in the LRs and ORs, we calculated 95% confidence intervals (CI).
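The summary measures named above can all be derived from a single 2x2 table of troponin status against 30-day death. The following is a minimal sketch, not the authors' code: the function name `diagnostic_summary`, the cell counts, and the use of log-scale (Woolf-type) standard errors for the 95% CIs are assumptions made for illustration, and the formulas require all four cells to be nonzero.

```python
import math


def diagnostic_summary(tp, fp, fn, tn, z=1.96):
    """Summarize a 2x2 table of troponin status versus 30-day death.

    tp: troponin-positive patients who died
    fp: troponin-positive patients who survived
    fn: troponin-negative patients who died
    tn: troponin-negative patients who survived
    All four cells must be nonzero for the log-scale intervals below.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)

    ppv = tp / (tp + fp)          # probability of death given positive troponin
    npv = tn / (tn + fn)          # probability of survival given negative troponin
    plr = sensitivity / (1 - specificity)
    nlr = (1 - sensitivity) / specificity
    odds_ratio = (tp * tn) / (fp * fn)

    # Standard errors of the log-transformed estimates.
    se_log_plr = math.sqrt(1 / tp - 1 / (tp + fn) + 1 / fp - 1 / (fp + tn))
    se_log_nlr = math.sqrt(1 / fn - 1 / (tp + fn) + 1 / tn - 1 / (fp + tn))
    se_log_or = math.sqrt(1 / tp + 1 / fp + 1 / fn + 1 / tn)

    def ci(estimate, se):
        # Symmetric interval on the log scale, back-transformed.
        return (estimate * math.exp(-z * se), estimate * math.exp(z * se))

    return {
        "PPV": ppv,
        "NPV": npv,
        "PLR": (plr, ci(plr, se_log_plr)),
        "NLR": (nlr, ci(nlr, se_log_nlr)),
        "OR": (odds_ratio, ci(odds_ratio, se_log_or)),
    }


# Hypothetical counts, NOT taken from any included study.
summary = diagnostic_summary(tp=4, fp=96, fn=2, tn=198)
for name, value in summary.items():
    print(name, value)
```

With rare outcomes such as these, the NPV is close to 1 almost by construction, which is why the likelihood ratios carry more of the interpretive weight.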

Overall measures of PPV, NPV, PLR, and NLR were calculated on the pooled collection of data from the studies. LRs are among the best measures of diagnostic accuracy; therefore, we defined the degree of probability of disease based on simple estimations that were reported by McGee.6 These estimations are independent of pretest probability and include the following: a PLR of 5.0 increases the probability of the outcome by about 30%, whereas an NLR of 0.20 decreases the probability of the outcome by about 30%. To identify reasonable performance, we defined a PLR > 5 as a moderate to high increase in probability and an NLR < 0.20 as a moderate to high decrease in probability.6
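The 30% figures are a bedside approximation of the exact conversion from pretest to post-test probability through odds. As an illustrative sketch (the function name `post_test_probability` and the pretest values are assumptions, not part of the study), the exact calculation can be written as:

```python
def post_test_probability(pretest_prob, likelihood_ratio):
    """Convert a pretest probability to a post-test probability via odds."""
    pretest_odds = pretest_prob / (1 - pretest_prob)
    post_test_odds = pretest_odds * likelihood_ratio
    return post_test_odds / (1 + post_test_odds)


# Hypothetical pretest probabilities chosen only to illustrate the arithmetic.
for pretest in (0.50, 0.02):
    after_positive = post_test_probability(pretest, 5.0)
    after_negative = post_test_probability(pretest, 0.20)
    print(f"pretest {pretest:.2f}: LR 5.0 -> {after_positive:.3f}, "
          f"LR 0.20 -> {after_negative:.3f}")
```

At a pretest probability of 50%, an LR of 5.0 moves the probability by about 33 percentage points, close to the rule of thumb; at a very low pretest probability the absolute change is much smaller, so the approximation works best in the mid-range.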

The overall association between 30-day all-cause mortality and troponin classification among patients with low-risk PE was assessed using a mixed effects logistic regression model. The model included a random intercept to account for the correlation among the measurements for patients within a study. The exponentiated regression coefficient for troponin classification is the OR for 30-day all-cause mortality, comparing troponin-positive patients to troponin-negative patients. OR is reported with a 95% CI and a P value. A continuity correction (correction = 0.5) was applied to zero cells. Heterogeneity was measured using the Cochran Q statistic and the Higgins I² statistic.
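The continuity correction and the heterogeneity statistics can be illustrated with a short sketch. It is not the authors' mixed effects model: it uses a simple fixed-effect, inverse-variance pooling of per-study log ORs solely to compute Q and I². The function names, the convention of adding 0.5 to every cell of a table that contains a zero, and the study tables are all assumptions for illustration.

```python
import math


def log_or_and_variance(a, b, c, d, correction=0.5):
    """Log odds ratio and its variance for one study.

    a, b: deaths and survivors among troponin-positive patients
    c, d: deaths and survivors among troponin-negative patients
    Assumed convention: if any cell is zero, add the correction to all cells.
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + correction for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def heterogeneity(tables):
    """Return (pooled log OR, Cochran Q, Higgins I-squared in percent)."""
    effects = [log_or_and_variance(*t) for t in tables]
    weights = [1 / var for _, var in effects]
    pooled = sum(w * y for w, (y, _) in zip(weights, effects)) / sum(weights)
    # Q: weighted squared deviations of study effects from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, (y, _) in zip(weights, effects))
    df = len(tables) - 1
    # I-squared: share of variability beyond chance, truncated at zero.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i_squared


# Hypothetical study tables (a, b, c, d), NOT data from the included studies.
tables = [(2, 40, 1, 90), (0, 30, 1, 60), (3, 50, 0, 110)]
pooled_log_or, q_stat, i2 = heterogeneity(tables)
print(f"pooled OR = {math.exp(pooled_log_or):.2f}, Q = {q_stat:.2f}, I2 = {i2:.1f}%")
```

With few studies and very few events, Q has little power to detect heterogeneity, so a low I² in this setting should be read cautiously.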

RESULTS

Search Results

Figure 1 represents the PRISMA flow diagram for literature search and selection process to identify eligible studies for inclusion.

Study Characteristics

The abstracts of 117 articles were initially identified using the search strategy described above. Of these, 18 articles were deemed appropriate for review based on the criteria outlined in “Study Selection.” The full-text articles of the selected studies were obtained. Upon further evaluation, we identified 16 articles (Figure 1) eligible for the systematic review. Two studies were excluded because they did not provide the number of study participants who met the primary endpoints. The included studies were published from 2009 to 2016 (Table 1). For patients with low-risk PE, the number of patients with right ventricle dysfunction was either difficult to determine or not reported in all the studies.

Regarding study design, 11 studies were described as prospective cohorts and the remaining 5 studies were identified as retrospective (Table 1). Seven studies stratified participants’ risk of mortality by using sPESI, and 8 studies employed the PESI score. A total of 6952 participants diagnosed with PE were obtained, and 2662 (38%) were recognized as being low-risk based on either the PESI or sPESI. The sample sizes of the individual studies ranged from 121 to 1,291. The studies used either hs-cTnT, hs-cTnI, cTnT, cTnI, or a combination of hs-cTnT and cTnI or cTnT for troponin assay. Most studies used a pre-defined cut-off value to determine positive or negative troponin status.

Thirteen studies reported 30-day event rate as one of the primary endpoints. The 3 other studies included 90-day all-cause mortality, and 2 of them included in-hospital events. Secondary event rates were only reported in 4 studies and consisted of nonfatal PE, nonfatal major bleeding, and PE-related mortality.

Our systematic review revealed that 5 of the 16 studies used either hemodynamic decompensation, cardiopulmonary resuscitation, mechanical ventilation, or a combination of any of these parameters as part of their primary or secondary endpoint. However, none of the studies specified the number of patients that reached any of these endpoints. Furthermore, 10 of the 16 studies did not specify 30-day PE-related mortality outcomes. The most common endpoint was 30-day all-cause mortality, and only 7 studies reported outcomes with positive or negative troponin status.

Outcome Data of All Studies

A total of 2662 participants were categorized as being low risk based on the PESI or sPESI risk score. The pooled rate of PE-related mortality (specified and inferred) was 5 (0.46%) from 6 studies (1,093 patients), in which only 2 studies specified PE-related mortality as the primary endpoint (Vanni [2011]19 and Jimenez [2011]20). The pooled rate of 30-day all-cause mortality was 24 (1.3%) from 12 studies (1882 patients). In 14 studies (2163 patients), the rates of recurrence of PE and major bleeding were 3 (0.14%) and 6 (0.28%), respectively.
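The percentages above can be reproduced directly from the reported counts. This is a small arithmetic check, assuming each pooled rate is simply total events divided by total patients across the contributing studies; the helper name `pooled_rate_percent` is hypothetical.

```python
def pooled_rate_percent(events, patients):
    """Crude pooled rate: total events over total patients, as a percentage."""
    return 100 * events / patients


# Counts as reported in the text: (events, patients).
reported = {
    "PE-related mortality": (5, 1093),
    "30-day all-cause mortality": (24, 1882),
    "Recurrent PE": (3, 2163),
    "Major bleeding": (6, 2163),
}

for label, (events, patients) in reported.items():
    rate = pooled_rate_percent(events, patients)
    print(f"{label}: {events}/{patients} = {rate:.2f}%")
```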

Outcomes of Studies with Corresponding Troponin+ and Troponin−

Seven studies used positive or negative troponin status as an endpoint to assess low-risk participants (Table 2). However, only 5 studies were included in the final meta-analysis because some data were missing in the Sanchez14 study and the Ozsu8 study’s mortality endpoint was more than 30 days. The risk of bias within the studies was evaluated, and for most studies, the quality was of moderate degree (Supplementary Table 1). Table 2 shows the results for the overall pooled data stratified by study. In the pooled data, 463 (67%) patients tested negative for troponin and 228 (33%) tested positive. The overall mortality (from sensitivity analysis) including in-hospital, 30-day, and 90-day mortalities was 1.2%. The NPVs for all individual studies and the overall NPV were 1 or approximately 1. The PPVs, both overall and by study, were low, ranging from 0 to 0.60. The PLRs and NLRs were not estimated for an outcome within an individual study if none of the patients experienced the outcome. When outcomes were only observed among troponin-negative patients, such as in the study of Moores (2009)22, which used 30-day all-cause mortality, the PLR had a value of zero. When outcomes were only observed among troponin-positive patients, as for 30-day all-cause mortality in the Hakemi (2015)9, Lauque (2014)10, and Lankeit (2011)16 studies, the NLR had a value of zero. For zero cells, a continuity correction of 0.5 was applied. The pooled likelihood ratios (LRs) for all-cause mortality were positive LR 2.04 (95% CI, 1.53 to 2.72) and negative LR 0.72 (95% CI, 0.37 to 1.40). The OR for all-cause mortality was 4.79 (95% CI, 1.11 to 20.68; P = .0357).

A forest plot was created to visualize the PLR from each study included in the main analysis (Figure 2).

A sensitivity analysis among troponin-positive patients was conducted using 90-day all-cause mortality outcome from the study of Ozsu8 (2015) and the 2 all-cause mortality outcomes from the study of Sanchez14 (2013). The pooled estimates from the 30-day all-cause mortality differed slightly from those previously reported. The PLR increased to 3.40 (95% CI 1.81 to 6.37), and the NLR decreased to 0.59 (95% CI 0.33 to 1.08).

DISCUSSION

In this meta-analysis of 5 studies, which included 691 patients with low-risk PESI or sPESI scores, those who tested positive for troponin had nearly fivefold higher odds of 30-day all-cause mortality than patients who tested negative. However, the clinical significance of this association is unclear given that the CI is quite wide and mortality could be associated with PE versus other causes. Similar results were reported by other meta-analyses that consisted of patients with normotensive PE.23-25 To our knowledge, the present meta-analysis is the first to report outcomes in patients with low-risk PE stratified by the presence of cardiac troponin.

A published paper on simplifying the clinical interpretation of LRs states that a positive LR of greater than 5 and a negative LR of less than 0.20 provide dependable evidence regarding reasonable prognostic performance.6 In our analysis, the positive LR was less than 5 and the negative LR’s CI included one. These results suggest a small statistical probability that a patient with a low PESI/sPESI score and a positive troponin status would benefit from inpatient monitoring; simultaneously, a negative troponin does not necessarily translate to safe outpatient therapy, based on our statistical analysis. Previous studies also reported nonextreme positive LRs.23,24 We therefore conclude that low-risk PE patients with positive troponins may be eligible for safe ambulatory treatment or early discharge. However, the outcome of interest (mortality) occurred in only 6 of the 228 patients who had positive troponin status. The majority of deaths were reported by Hakemi et al.9 in their retrospective cohort study; as such, drawing conclusions is difficult. Furthermore, the low 30-day all-cause mortality rate of 2.6% in the positive troponin group may have been affected by close monitoring of the patients, who commonly received hemodynamic and oxygen support. Based on these factors, our conclusion is relatively weak, and we cannot recommend a change in practice compared to existing guidelines. In general, additional prospective research is needed to determine whether patients with low-risk PE who test positive for troponin can receive care safely outside the hospital or, rather, require hospitalization similar to patients with intermediate-high risk PE.

We identified a number of other limitations in our analysis. First, aside from the relatively small number of pertinent studies in the literature, most of the studies are of low-moderate quality. Second, the troponin classification in various studies was not conducted using the same assay, and the cut-off value determining positive versus negative results in each case may have differed. These differences may have created some ambiguity or misclassification when the data were pooled together. Third, although the mixed effects logistic regression model controls for some of the variations among patients enrolled in different studies, significant differences exist in terms of patient characteristics or the protocol for follow-up care. This aspect was unaccounted for in this analysis. Lastly, pooled outcome events could not be retrieved from all of the included studies, which may have resulted in a misrepresentation of the true outcomes.

The ESC guidelines suggest avoiding cardiac biomarker testing in patients with low-risk PE because this practice does not have therapeutic implications. Moreover, ESC and ACCP guidelines both state that a positive cardiac biomarker should discourage treatment out of the hospital. The ACCP guidelines further encourage testing of cardiac biomarkers and/or evaluating right ventricular function via echocardiography when uncertainty exists regarding whether patients may require close in-hospital monitoring or not. Although no resounding evidence suggests that troponins have therapeutic implications in patients with low-risk PE, the current guidelines and our meta-analysis cannot offer an overwhelmingly convincing recommendation about whether or not patients with low-risk PE and positive cardiac biomarkers are best treated in the ambulatory or inpatient setting. Such patients may benefit from monitoring in an observation unit (eg, less than 24 or 48 hours), rather than requiring a full admission to the hospital. Nevertheless, our analysis shows that making this determination will require prospective studies that will utilize cardiac troponin status in predicting PE-related events, such as arrhythmia, acute respiratory failure, and hemodynamic decompensation, rather than all-cause mortality.

Until further studies are available, hospitalists should integrate the use of cardiac troponin and other clinical data, including those available from patient history, physical exam, and other laboratory testing, in determining whether to admit, observe, or discharge patients with low-risk PE. As the current guidelines recommend, we support consideration of right ventricular function assessment, via echocardiogram or computed tomography, in patients with positive cardiac troponins even when their PESI/sPESI score is low.

ACKNOWLEDGMENTS

The authors would like to thank Megan Therese Smith, PhD and Lishi Zhang, MS for their contribution in providing a comprehensive statistical analysis of this meta-analysis.

Disclosures

The authors declare no conflicts of interest in the work under consideration for publication. Abdullah Mahayni and Mukti Patel, MD also declared no conflicts of interest with regard to the relevant financial activities outside the submitted work. Omar Darwish, DO and Alpesh Amin, MD also declared no relevant financial activities outside the submitted work; they are speakers for Bristol-Myers Squibb and Pfizer regarding the anticoagulant apixaban for treatment of venous thromboembolism and atrial fibrillation.