Hearing loss strongly tied to increased dementia risk

Moderate to severe hearing loss is strongly tied to increased dementia risk in older adults, new national data show. Investigators also found that even mild hearing loss was associated with increased dementia risk, although the association was not statistically significant, and that hearing aid use was tied to a 32% decrease in dementia prevalence.

“Every 10-decibel increase in hearing loss was associated with 16% greater prevalence of dementia, such that prevalence of dementia in older adults with moderate or greater hearing loss was 61% higher than prevalence in those with normal hearing,” said lead investigator Alison Huang, PhD, senior research associate in epidemiology at Johns Hopkins Bloomberg School of Public Health and core faculty in the Cochlear Center for Hearing and Public Health, Baltimore.

The findings were published online in JAMA.

Dose dependent effect

For their study, researchers analyzed data on 2,413 community-dwelling participants in the National Health and Aging Trends Study, a nationally representative, continuous panel study of U.S. Medicare beneficiaries aged 65 and older.

Data were collected during in-home interviews, setting the study apart from previous work that relied on data collected in clinical settings, Dr. Huang said.

“This study was able to capture more vulnerable populations, such as the oldest old and older adults with disabilities, typically excluded from prior epidemiologic studies of the hearing loss–dementia association that use clinic-based data collection, which only captures people who have the ability and means to get to clinics,” Dr. Huang said.

Weighted hearing loss prevalence was 36.7% for mild and 29.8% for moderate to severe hearing loss, and weighted prevalence of dementia was 10.3%.

Those with moderate to severe hearing loss were 61% more likely to have dementia than were those with normal hearing (prevalence ratio, 1.61; 95% confidence interval [CI], 1.09-2.38).

Dementia prevalence increased with increasing severity of hearing loss: Normal hearing: 6.19% (95% CI, 4.31-8.80); mild hearing loss: 8.93% (95% CI, 6.99-11.34); moderate to severe hearing loss: 16.52% (95% CI, 13.81-19.64). But only moderate to severe hearing loss showed a statistically significant association with dementia (P = .02).

Dementia prevalence increased 16% per 10-decibel increase in hearing loss (prevalence ratio, 1.16; P < .001).
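The per-10-decibel figure can be rescaled to other differences in hearing level. The sketch below is an illustration built on an assumption: it treats the association as log-linear, so that each additional decibel multiplies prevalence by the same factor. The article does not state that the model has this form. Under that assumption, a 20-decibel difference corresponds to a ratio of 1.16² ≈ 1.35 and a 30-decibel difference to 1.16³ ≈ 1.56. The function name `scale_prevalence_ratio` is hypothetical. The numbers describe an association, not a causal effect.

```python
import math


def scale_prevalence_ratio(pr_per_unit: float, unit_db: float, delta_db: float) -> float:
    """Rescale a prevalence ratio reported per `unit_db` decibels to a
    `delta_db`-decibel difference.

    Assumption (not from the article): log(prevalence ratio) grows linearly
    with decibels, i.e. every decibel multiplies prevalence by the same factor.
    """
    log_pr_per_db = math.log(pr_per_unit) / unit_db  # effect per single decibel on the log scale
    return math.exp(log_pr_per_db * delta_db)        # back-transform for the requested difference


# Reported figure: prevalence ratio 1.16 per 10 dB of hearing loss
for delta in (10, 20, 30):
    pr = scale_prevalence_ratio(1.16, 10, delta)
    print(f"{delta} dB worse hearing -> prevalence ratio {pr:.2f} "
          f"({(pr - 1) * 100:.0f}% higher prevalence)")
```

The log-linear form is a convenient approximation. It may not hold at the extremes of the hearing range, so rescaled values are best read as rough illustrations.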

Among the 853 individuals in the study with moderate to severe hearing loss, those who used hearing aids (n = 414) had a 32% lower prevalence of dementia than those who did not use hearing aids (prevalence ratio, 0.68; 95% CI, 0.47-1.00). Similar findings published in JAMA Neurology have suggested that hearing aids may reduce dementia risk.
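A prevalence ratio converts to a percentage difference as (ratio - 1) × 100. That is how 1.61 becomes "61% higher" and 0.68 becomes "32% lower." The sketch below applies that conversion to the two reported ratios and their 95% confidence intervals. It also checks whether each interval excludes 1.0, the no-difference value. The helper names `percent_difference` and `excludes_null` are hypothetical. The published limits are rounded to two decimals, so an upper limit printed as 1.00 only indicates that the hearing aid estimate sits at the edge of statistical significance.

```python
def percent_difference(pr: float) -> float:
    """Convert a prevalence ratio into a percentage difference in prevalence
    versus the comparison group (positive = higher, negative = lower)."""
    return (pr - 1.0) * 100.0


def excludes_null(ci_low: float, ci_high: float) -> bool:
    """True if a ratio's confidence interval lies entirely on one side of 1.0.
    An interval that reaches 1.0 does not exclude 'no difference'."""
    return ci_high < 1.0 or ci_low > 1.0


# Figures as reported in the article: (prevalence ratio, lower CI, upper CI)
estimates = {
    "moderate to severe vs normal hearing": (1.61, 1.09, 2.38),
    "hearing aid users vs nonusers": (0.68, 0.47, 1.00),
}

for label, (pr, lo, hi) in estimates.items():
    print(f"{label}: {percent_difference(pr):+.0f}% "
          f"(95% CI {percent_difference(lo):+.0f}% to {percent_difference(hi):+.0f}%), "
          f"interval excludes 1.0: {excludes_null(lo, hi)}")
```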

“With this study, we were able to refine our understanding of the strength of the hearing loss–dementia association in a study more representative of older adults in the United States,” said Dr. Huang.

Robust association

Commenting on the findings, Justin S. Golub, MD, associate professor in the department of otolaryngology–head and neck surgery at Columbia University, New York, said the study supports earlier research and suggests a “robust” association between hearing loss and dementia.

“The particular advantage of this study was that it was high quality and nationally representative,” Dr. Golub said. “It is also among a smaller set of studies that have shown hearing aid use to be associated with lower risk of dementia.”

Although the finding was not statistically significant, researchers did see a higher prevalence of dementia among people with only mild hearing loss, and clinicians should take note, said Dr. Golub, who was not involved with this study.

“We would expect the relationship between mild hearing loss and dementia to be weaker than severe hearing loss and dementia and, as a result, it might take more participants to show an association among the mild group,” Dr. Golub said.

“Even though this particular study did not specifically find a relationship between mild hearing loss and dementia, I would still recommend people to start treating their hearing loss when it is early,” Dr. Golub added.

The study was funded by the National Institute on Aging. Dr. Golub reports no relevant financial relationships. Full disclosures for study authors are included in the original article.

A version of this article first appeared on Medscape.com.

Early retirement and the terrible, horrible, no good, very bad cognitive decline

The ‘scheme’ in the name should have been a clue

Retirement. The shiny reward for a lifetime’s worth of working and saving. We’re all literally working to get there, some of us to get there early, but new research suggests that early retirement isn’t the relaxing finish line we dream about, cognitively speaking.

Researchers at Binghamton (N.Y.) University set out to examine just how retirement plans affect cognitive performance. They started off with China’s New Rural Pension Scheme (scheme probably has a less negative connotation in Chinese), a plan that financially aids the growing rural retirement-age population in the country. Then they looked at data from the China Health and Retirement Longitudinal Study, which tests cognition with a focus on episodic memory and aspects of mental intactness.

What they found was the opposite of what you would expect out of retirees with nothing but time on their hands.

The pension program, which had been in place for almost a decade, led to worse performance on delayed recall, especially among women, supporting “the mental retirement hypothesis that decreased mental activity results in worsening cognitive skills,” the investigators said in a written statement.

There also was a drop in social engagement, with lower rates of volunteering and social interaction than among people who didn’t receive the pension. Some behaviors, like regular alcohol consumption, did improve over the previous year, as did overall health, but “the adverse effects of early retirement on mental and social engagement significantly outweigh the program’s protective effect on various health behaviors,” Plamen Nikolov, PhD, said of his research.

So if you’re looking to retire early, don’t skimp on the crosswords and the bingo nights. Stay busy in a good way. Your brain will thank you.

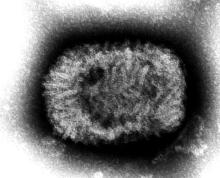

Indiana Jones and the First Smallpox Ancestor

Smallpox was, not that long ago, one of the most devastating diseases known to humanity, killing 300 million people in the 20th century alone. Eradicating it has to be one of medicine’s crowning achievements. Now it can only be found in museums, which is where it belongs.

Here’s the thing with smallpox, though: For all it did to us, we know frustratingly little about where it came from. Until very recently, the best available genetic evidence placed its emergence in the 17th century, which clashes with historical data. You know what that means, right? It’s time to dig out the fedora and whip, cue the music, and delve into a recently published study spanning continents in search of the mythical smallpox origin story.

We pick up in 2020, when genetic evidence definitively showed smallpox in a Viking burial site, moving the disease’s emergence a thousand years earlier. Which is all well and good, but there’s solid visual evidence that Egyptian pharaohs were dying of smallpox, as their bodies show the signature scarring. Historians were pretty sure smallpox went back about 4,000 years, but there was no genetic material to prove it.

Since there aren’t any 4,000-year-old smallpox germs lying around, the researchers chose to attack the problem another way – by burning down a Venetian catacomb, er, conducting an analysis of historical smallpox genetics to find the virus’s origin. By analyzing the genomes of various strains from different periods, they were able to trace the variola virus back to a common ancestor. Some of the genetic components in the Viking-age sample, for example, persisted until the 18th century.

Armed with this information, the scientists determined that the first smallpox ancestor emerged about 3,800 years ago. That’s very close to the historians’ estimate for the disease’s emergence. Proof at last of smallpox’s truly ancient origin. One might even say the researchers chose wisely.

The only hall of fame that really matters

LOTME loves the holiday season – the food, the gifts, the radio stations that play nothing but Christmas music – but for us the most wonderful time of the year comes just a bit later. No, it’s not our annual Golden Globes slap bet. Nope, not even the “excitement” of the College Football Playoff National Championship. It’s time for the National Inventors Hall of Fame to announce its latest inductees, and we could hardly sleep last night after putting cookies out for Thomas Edison. Fasten your seatbelts!

- Robert G. Bryant is a NASA chemist who developed Langley Research Center-Soluble Imide (yes, that’s the actual name), a polymer used as an insulation material for leads in implantable cardiac resynchronization therapy devices.

- Rory Cooper is a biomedical engineer who was paralyzed in a bicycle accident. His work has improved manual and electric wheelchairs and advanced the health, mobility, and social inclusion of people with disabilities and older adults. He is also the first NIHF inductee named Rory.

- Katalin Karikó, a biochemist, and Drew Weissman, an immunologist, “discovered how to enable messenger ribonucleic acid (mRNA) to enter cells without triggering the body’s immune system,” NIHF said, and that laid the foundation for the mRNA COVID-19 vaccines developed by Pfizer-BioNTech and Moderna. That, of course, gave new life to the antivax movement, which has provided so much LOTME fodder over the years.

- Angela Hartley Brodie was a biochemist who discovered and developed a class of drugs called aromatase inhibitors, which can stop the production of hormones that fuel cancer cell growth and are used to treat breast cancer in 500,000 women worldwide each year.

We can’t mention all of the inductees for 2023 (our editor made that very clear), but we would like to offer a special shout-out to brothers Cyril (the first Cyril in the NIHF, by the way) and Louis Keller, who invented the world’s first compact loader, which eventually became the Bobcat skid-steer loader. Not really medical, you’re probably thinking, but we’re sure that someone, somewhere, at some time, used one to build a hospital, landscape a hospital, or clean up after the demolition of a hospital.

Frail ADHF patients benefit more from early rehab

Patients with acute decompensated heart failure who were frail at baseline improved more with targeted, early physical rehabilitation than those who were prefrail, a new analysis of the REHAB-HF study suggests.

“The robust response to the intervention by frail patients exceeded our expectations,” Gordon R. Reeves, MD, PT, of Novant Health Heart and Vascular Institute, Charlotte, N.C., told this news organization. “The effect size from improvement in physical function among frail patients was very large, with at least four times the minimal meaningful improvement, based on the Short Physical Performance Battery (SPPB).”

Furthermore, baseline frailty status modified the effect of the REHAB-HF intervention: the improvement in SPPB was 2.6-fold larger among frail than among prefrail patients.

However, Dr. Reeves noted, “We need to further evaluate safety and efficacy as it relates to adverse clinical events. Specifically, we observed a numerically higher number of deaths with the REHAB-HF intervention, which warrants further investigation before the intervention is implemented in clinical practice.”

The study was published online in JAMA Cardiology.

Interpret with caution

Dr. Reeves and colleagues conducted a prespecified secondary analysis of the previously published Rehabilitation Therapy in Older Acute Heart Failure Patients (REHAB-HF) trial, a multicenter randomized controlled trial showing that a 3-month early, transitional, tailored, multidomain physical rehabilitation intervention improved physical function and quality of life (QoL), compared with usual care. The secondary analysis aimed to evaluate whether baseline frailty altered the benefits of the intervention or was associated with risk of adverse outcomes.

According to Dr. Reeves, REHAB-HF differs from more traditional cardiac rehab programs in several ways.

- The intervention targets patients with acute HF, including HF with preserved ejection fraction (HFpEF). By contrast, Medicare policy limits standard cardiac rehabilitation in HF to patients with chronic HF with reduced ejection fraction (HFrEF) who have been stable for 6 weeks or longer after a recent hospitalization.

- It addresses multiple physical function domains, including balance, mobility, functional strength, and endurance. Standard cardiac rehab is primarily focused on endurance training, which can result in injuries and falls if deficits in balance, mobility, and strength are not addressed first.

- It is delivered one-on-one rather than in a group setting, primarily by physical therapists who are experts in the rehabilitation of medically complex patients.

- It is transitional: it begins in the hospital, continues in the outpatient setting, and then moves to the home, where it includes a home assessment.

For the analysis, the Fried phenotype model was used to assess baseline frailty across five domains: unintentional weight loss during the past year; self-reported exhaustion; weak grip strength; slowness, as assessed by gait speed; and low physical activity, as assessed by the Short Form-12 Physical Composite Score.

At the baseline visit, patients were categorized as frail if they met three or more of these criteria. They were categorized as prefrail if they met one or two criteria and as nonfrail if they met none of the criteria. Because of the small number of nonfrail participants, the analysis included only frail and prefrail participants.
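The categorization rule above comes down to counting how many of the five Fried criteria a patient meets. A minimal Python sketch, assuming each criterion has already been scored as present or absent (the cutoffs for weight loss, grip strength, gait speed, and activity are not given here). The criterion names and the `fried_category` function are hypothetical labels, not code from the REHAB-HF investigators:

```python
# Hypothetical labels for the five Fried phenotype criteria listed in the text.
FRIED_CRITERIA = (
    "unintentional_weight_loss",
    "self_reported_exhaustion",
    "weak_grip_strength",
    "slow_gait_speed",
    "low_physical_activity",
)


def fried_category(criteria_met: set[str]) -> str:
    """Classify baseline frailty from the set of Fried criteria a patient meets."""
    unknown = criteria_met - set(FRIED_CRITERIA)
    if unknown:
        # Reject misspelled or unexpected names instead of silently undercounting.
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    count = len(criteria_met)
    if count >= 3:
        return "frail"      # three or more criteria
    if count >= 1:
        return "prefrail"   # one or two criteria
    return "nonfrail"       # no criteria


print(fried_category({"self_reported_exhaustion", "slow_gait_speed", "weak_grip_strength"}))  # frail
print(fried_category({"low_physical_activity"}))  # prefrail
print(fried_category(set()))  # nonfrail
```

Passing a set rather than a count keeps the record of which criteria were met, which matters when two patients share a category but differ in their deficits.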

The analysis included 337 participants (mean age, 72 years; 54% women; 50% Black). At baseline, 57% were frail, and 43% were prefrail.

A significant interaction was seen between baseline frailty and the intervention for the primary trial endpoint of overall SPPB score, with a 2.6-fold larger improvement in SPPB among frail than among prefrail patients (intervention effect, 2.1 vs. 0.8 points).
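The 2.6-fold figure is simply the ratio of the two intervention effects on SPPB, 2.1 and 0.8 points. The Python lines below reproduce that arithmetic. The second calculation, relating the frail-group effect to Dr. Reeves's "at least four times the minimal meaningful improvement," uses an assumed threshold of 0.5 SPPB point; that value is commonly cited in the geriatrics literature but is not stated in this article, so treat it as illustrative only:

```python
# Intervention effects on SPPB score (points), as reported in the secondary analysis.
FRAIL_EFFECT = 2.1
PREFRAIL_EFFECT = 0.8

fold_difference = FRAIL_EFFECT / PREFRAIL_EFFECT
print(f"{fold_difference:.3f}")  # 2.625, reported as 2.6-fold

# Assumption, not from the article: a minimal meaningful SPPB change of 0.5 point.
ASSUMED_MIN_MEANINGFUL_CHANGE = 0.5
multiple = FRAIL_EFFECT / ASSUMED_MIN_MEANINGFUL_CHANGE
print(f"{multiple:.1f}")  # 4.2, consistent with "at least four times"
```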

Trends favored a larger intervention effect size, with significant improvement among frail versus prefrail participants for 6-minute walk distance, QoL, and the geriatric depression score.

“However, we must interpret these findings with caution,” the authors write. “The REHAB-HF trial was not adequately powered to determine the effect of the intervention on clinical events.” This limitation and the numerically higher number of deaths “underscore the need for additional research, including prospective clinical trials, investigating the effect of physical function interventions on clinical events among frail patients with HF.”

To address this need, the researchers recently launched a larger clinical trial, REHAB-HFpEF, which is powered to assess the impact of the intervention on clinical events, according to Dr. Reeves. “As the name implies,” he said, “this trial is focused on older patients recently hospitalized with HFpEF, who, [compared with those with HFrEF], also showed a more robust response to the intervention, with worse physical function and very high prevalence of frailty near the time of hospital discharge.”

‘Never too old or sick to benefit’

Jonathan H. Whiteson, MD, vice chair of clinical operations and medical director of cardiac and pulmonary rehabilitation at NYU Langone Health’s Rusk Rehabilitation, said, “We have seen in clinical practice and in other (non–heart failure) clinical areas that frail older patients do improve proportionally more than younger and less frail patients with rehabilitation programs. Encouragingly, this very much supports the practice that patients are never too old or too sick to benefit from an individualized multidisciplinary rehabilitation program.”

However, he noted, “patients had to be independent with basic activities of daily living to be included in the study,” so many frail older patients with heart failure who are not independent were excluded. It was also unclear whether patients who received postacute care at a rehabilitation facility before going home were included in the trial.

Furthermore, he said, outcomes over 1 to 5 years are needed to understand the long-term impact of the intervention.

On the other hand, he added, the fact that about half of the participants were Black and about half were women is a “tremendous strength.”

“Repeating this study in population groups at high risk for frailty with different diagnoses, such as chronic lung diseases, interstitial lung diseases, chronic kidney disease, and rheumatologic disorders will further support the value of rehabilitation in improving patient health, function, quality of life, and reducing rehospitalizations and health care costs,” Dr. Whiteson concluded.

The study was supported by grants from the National Key R&D program. The authors have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Some BP meds tied to significantly lower risk for dementia, Alzheimer’s

Antihypertensive medications that stimulate rather than inhibit type 2 and 4 angiotensin II receptors may lower the rate of dementia among new users of these medications, new research suggests.

Results from a cohort study of more than 57,000 older Medicare beneficiaries showed that the initiation of antihypertensives that stimulate the receptors was linked to a 16% lower risk for incident Alzheimer’s disease and related dementia (ADRD) and an 18% lower risk for vascular dementia compared with those that inhibit the receptors.

“Achieving appropriate blood pressure control is essential for maximizing brain health, and this promising research suggests certain antihypertensives could yield brain benefit compared to others,” lead study author Zachary A. Marcum, PharmD, PhD, associate professor, University of Washington School of Pharmacy, Seattle, told this news organization.

The findings were published online in JAMA Network Open.

Medicare beneficiaries

Previous observational studies showed that antihypertensive medications that stimulate type 2 and 4 angiotensin II receptors, in comparison with those that don’t, were associated with lower rates of dementia. However, those studies included individuals with prevalent hypertension and were relatively small.

The new retrospective cohort study included a random sample of 57,773 Medicare beneficiaries aged at least 65 years with new-onset hypertension. The mean age of participants was 73.8 years, 62.9% were women, and 86.9% were White.

Over the course of the study, some participants filled at least one prescription for an antihypertensive that stimulates type 2 and 4 angiotensin II receptors, such as angiotensin II receptor type 1 blockers, dihydropyridine calcium channel blockers, and thiazide diuretics.

Other participants filled a prescription for an antihypertensive that inhibits type 2 and 4 angiotensin II receptors, such as angiotensin-converting enzyme (ACE) inhibitors, beta-blockers, and nondihydropyridine calcium channel blockers.

“All these medications lower blood pressure, but they do it in different ways,” said Dr. Marcum.

The researchers were interested in the varying activity of these drugs at the type 2 and 4 angiotensin II receptors.

For each 30-day interval, they categorized beneficiaries into four groups: a stimulating medication group (n = 4,879) of individuals taking only stimulating antihypertensives; an inhibiting medication group (n = 10,303) of individuals taking only inhibiting antihypertensives; a mixed group (n = 2,179) of individuals taking a combination of the two types; and a nonuser group (n = 40,413) of individuals who were not using either type of drug.
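The interval-by-interval grouping can be sketched as a small classification function. This Python example is a hypothetical illustration, not the investigators' code. It assumes each 30-day interval is summarized as the set of antihypertensive classes a beneficiary had on hand, treats the stimulating and inhibiting groups as exclusive (matching the "exclusively stimulates" wording in the results), and recognizes only the example drug classes named in the article, so any other class is ignored:

```python
# Example drug classes named in the article (hypothetical short labels).
# arb = angiotensin II receptor type 1 blocker; ccb = calcium channel blocker.
STIMULATING = {"arb", "dihydropyridine_ccb", "thiazide"}
INHIBITING = {"ace_inhibitor", "beta_blocker", "nondihydropyridine_ccb"}


def classify_interval(classes_on_hand: set[str]) -> str:
    """Assign one 30-day interval to stimulating, inhibiting, mixed, or nonuser."""
    uses_stimulating = bool(classes_on_hand & STIMULATING)
    uses_inhibiting = bool(classes_on_hand & INHIBITING)
    if uses_stimulating and uses_inhibiting:
        return "mixed"
    if uses_stimulating:
        return "stimulating"
    if uses_inhibiting:
        return "inhibiting"
    return "nonuser"  # no recognized antihypertensive class in this interval


# One hypothetical beneficiary followed over four consecutive intervals.
history = [set(), {"thiazide"}, {"thiazide", "beta_blocker"}, {"beta_blocker"}]
print([classify_interval(interval) for interval in history])
# ['nonuser', 'stimulating', 'mixed', 'inhibiting']
```

Because the category is recomputed for every interval, one beneficiary can move between groups during follow-up, which is what makes the exposure time-varying.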

The primary outcome was time to first occurrence of ADRD. The secondary outcome was time to first occurrence of vascular dementia.

Researchers controlled for cardiovascular risk factors and sociodemographic characteristics, such as age, sex, race/ethnicity, and receipt of low-income subsidy.

Unanswered questions

After adjustments, results showed that initiation of an antihypertensive medication regimen that exclusively stimulates, rather than inhibits, type 2 and 4 angiotensin II receptors was associated with a 16% lower risk for incident ADRD over a follow-up of just under 7 years (hazard ratio, 0.84; 95% confidence interval, 0.79-0.90; P < .001).

The mixed regimen was also associated with a statistically significant reduction in ADRD risk compared with the inhibiting medications (P = .001).

As for vascular dementia, use of stimulating vs. inhibiting medications was associated with an 18% lower risk (HR, 0.82; 95% CI, 0.69-0.96; P = .02).
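The percentages in these results come straight from the hazard ratios: a hazard ratio below 1 translates into a relative reduction of (1 − HR) × 100%. Strictly, that is a lower hazard (event rate) over follow-up, which news reports conventionally shorten to "lower risk." A small Python check, with `percent_lower` as an illustrative helper name:

```python
def percent_lower(hazard_ratio: float) -> int:
    """Relative reduction in hazard implied by a hazard ratio, rounded to a whole percent."""
    return round((1 - hazard_ratio) * 100)


# ADRD: HR 0.84 (95% CI, 0.79-0.90); vascular dementia: HR 0.82 (95% CI, 0.69-0.96)
for label, hr, ci_low, ci_high in [
    ("ADRD", 0.84, 0.79, 0.90),
    ("vascular dementia", 0.82, 0.69, 0.96),
]:
    # The upper CI bound gives the smallest reduction compatible with the data,
    # the lower CI bound the largest.
    print(f"{label}: {percent_lower(hr)}% lower "
          f"(range {percent_lower(ci_high)}%-{percent_lower(ci_low)}%)")
# ADRD: 16% lower (range 10%-21%)
# vascular dementia: 18% lower (range 4%-31%)
```

The wider interval for vascular dementia reflects fewer events for that outcome, so its 18% estimate is less precise than the 16% estimate for ADRD.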

Again, use of the mixed regimen was associated with reduced risk of vascular dementia compared with the inhibiting medications (P = .03).

A variety of potential mechanisms might explain the superiority of stimulating agents when it comes to dementia risk, said Dr. Marcum. These could include, for example, increased blood flow to the brain and reduced amyloid accumulation.

“But more mechanistic work is needed as well as evaluation of dose responses, because that’s not something we looked at in this study,” Dr. Marcum said. “There are still a lot of unanswered questions.”

Stimulators instead of inhibitors?

The results of the current analysis come on the heels of some previous work showing the benefits of lowering blood pressure. For example, the Systolic Blood Pressure Intervention Trial (SPRINT) showed that targeting a systolic blood pressure below 120 mm Hg significantly reduced the risk for heart disease, stroke, and death from these diseases.

But in contrast to previous research, the current study included only beneficiaries with incident hypertension and new use of antihypertensive medications, and it adjusted for time-varying confounding.

Prescribing stimulating instead of inhibiting treatments could make a difference at the population level, Dr. Marcum noted.

“If we could shift the prescribing a little bit from inhibiting to stimulating, that could possibly reduce dementia risk,” he said.

However, “we’re not suggesting [that all patients] have their regimen switched,” he added.

That’s because inhibiting medications still have an important place in the antihypertensive treatment armamentarium, Dr. Marcum noted. For example, beta-blockers are used after a heart attack.

As well, factors such as cost and side effects should be taken into consideration when prescribing an antihypertensive drug.

The new results could be used to set up a comparison in a future randomized controlled trial that would provide the strongest evidence for estimating causal effects of treatments, said Dr. Marcum.

‘More convincing’

Carlos G. Santos-Gallego, MD, Icahn School of Medicine at Mount Sinai, New York, said the study is “more convincing” than previous related research, as it has a larger sample size and a longer follow-up.

“And the exquisite statistical analysis gives more robustness, more solidity, to the hypothesis that drugs that stimulate type 2 and 4 angiotensin II receptors might be protective for dementia,” said Dr. Santos-Gallego, who was not involved with the research.

However, he noted that the retrospective study had some limitations, including the underdiagnosis of dementia. “The diagnosis of dementia is, honestly, very poorly done in the clinical setting,” he said.

As well, the study could be subject to “confounding by indication,” Dr. Santos-Gallego said. “There could be a third variable, another confounding factor, that’s responsible both for the dementia and for the prescription of these drugs,” he added.

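For example, he noted that comorbidities such as atrial fibrillation, myocardial infarction, and heart failure might increase the risk of dementia. These same conditions can also shape which antihypertensive a patient receives, as with beta-blockers after a heart attack.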

He agreed with the investigators that a randomized clinical trial would address these limitations. “All comorbidities would be equally shared” in the randomized groups, and all participants would be given “a specific test for dementia at the same time,” Dr. Santos-Gallego said.

Still, he noted that the new results are in keeping with hypertension guidelines that recommend stimulating drugs.

“This trial definitely shows that the current hypertension guidelines are good treatment for our patients, not only to control blood pressure and not only to prevent infarction [and] to prevent stroke but also to prevent dementia,” said Dr. Santos-Gallego.

Also commenting for this news organization, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, said the new data provide “clarity” on why previous research had differing results on the effect of antihypertensives on cognition.

Among the caveats of this new analysis is that “it’s unclear if the demographics in this study are fully representative of Medicare beneficiaries,” said Dr. Snyder.

She, too, said a clinical trial is important “to understand if there is a preventative and/or treatment potential in the medications that stimulate type 2 and 4 angiotensin II receptors.”

The study received funding from the National Institute on Aging. Dr. Marcum and Dr. Santos-Gallego have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Antihypertensive medications that stimulate rather than inhibit type 2 and 4 angiotensin II receptors can lower the rate of dementia among new users of these medications, new research suggests.

Results from a cohort study of more than 57,000 older Medicare beneficiaries showed that the initiation of antihypertensives that stimulate the receptors was linked to a 16% lower risk for incident Alzheimer’s disease and related dementia (ADRD) and an 18% lower risk for vascular dementia compared with those that inhibit the receptors.

“Achieving appropriate blood pressure control is essential for maximizing brain health, and this promising research suggests certain antihypertensives could yield brain benefit compared to others,” lead study author Zachary A. Marcum, PharmD, PhD, associate professor, University of Washington School of Pharmacy, Seattle, told this news organization.

The findings were published online in JAMA Network Open.

Medicare beneficiaries

Previous observational studies showed that antihypertensive medications that stimulate type 2 and 4 angiotensin II receptors, in comparison with those that don’t, were associated with lower rates of dementia. However, those studies included individuals with prevalent hypertension and were relatively small.

The new retrospective cohort study included a random sample of 57,773 Medicare beneficiaries aged at least 65 years with new-onset hypertension. The mean age of participants was 73.8 years, 62.9% were women, and 86.9% were White.

Over the course of the study, some participants filled at least one prescription for a stimulating angiotensin II receptor type 2 and 4, such as angiotensin II receptor type 1 blockers, dihydropyridine calcium channel blockers, and thiazide diuretics.

Others participants filled a prescription for an inhibiting type 2 and 4 angiotensin II receptors, including angiotensin-converting enzyme (ACE) inhibitors, beta-blockers, and nondihydropyridine calcium channel blockers.

“All these medications lower blood pressure, but they do it in different ways,” said Dr. Marcum.

The researchers were interested in the varying activity of these drugs at the type 2 and 4 angiotensin II receptors.

For each 30-day interval, they categorized beneficiaries into four groups: a stimulating medication group (n = 4,879) consisting of individuals mostly taking stimulating antihypertensives; an inhibiting medication group (n = 10,303) that mostly included individuals prescribed this type of antihypertensive; a mixed group (n = 2,179) that included a combination of the first two classifications; and a nonuser group (n = 40,413) of individuals who were not using either type of drug.

The primary outcome was time to first occurrence of ADRD. The secondary outcome was time to first occurrence of vascular dementia.

Researchers controlled for cardiovascular risk factors and sociodemographic characteristics, such as age, sex, race/ethnicity, and receipt of low-income subsidy.

Unanswered questions

After adjustments, results showed that initiation of an antihypertensive medication regimen that exclusively stimulates, rather than inhibits, type 2 and 4 angiotensin II receptors was associated with a 16% lower risk for incident ADRD over a follow-up of just under 7 years (hazard ratio, 0.84; 95% confidence interval, 0.79-0.90; P < .001).

The mixed regimen was also associated with statistically significant (P = .001) reduced odds of ADRD compared with the inhibiting medications.

As for vascular dementia, use of stimulating vs. inhibiting medications was associated with an 18% lower risk (HR, 0.82; 95% CI, 0.69-0.96; P = .02).

Again, use of the mixed regimen was associated with reduced risk of vascular dementia compared with the inhibiting medications (P = .03).

A variety of potential mechanisms might explain the superiority of stimulating agents when it comes to dementia risk, said Dr. Marcum. These could include, for example, increased blood flow to the brain and reduced amyloid.

“But more mechanistic work is needed as well as evaluation of dose responses, because that’s not something we looked at in this study,” Dr. Marcum said. “There are still a lot of unanswered questions.”

Stimulators instead of inhibitors?

The results of the current analysis come on the heels of some previous work showing the benefits of lowering blood pressure. For example, the Systolic Blood Pressure Intervention Trial (SPRINT) showed that targeting a systolic blood pressure below 120 mm Hg significantly reduces risk for heart disease, stroke, and death from these diseases.

But in contrast to previous research, the current study included only beneficiaries with incident hypertension and new use of antihypertensive medications, and it adjusted for time-varying confounding.

Prescribing stimulating instead of inhibiting treatments could make a difference at the population level, Dr. Marcum noted.

“If we could shift the prescribing a little bit from inhibiting to stimulating, that could possibly reduce dementia risk,” he said.

However, “we’re not suggesting [that all patients] have their regimen switched,” he added.

That’s because inhibiting medications still have an important place in the antihypertensive treatment armamentarium, Dr. Marcum noted. For example, beta-blockers are used after a heart attack.

As well, factors such as cost and side effects should be taken into consideration when prescribing an antihypertensive drug.

The new results could be used to set up a comparison in a future randomized controlled trial that would provide the strongest evidence for estimating causal effects of treatments, said Dr. Marcum.

‘More convincing’

Carlos G. Santos-Gallego, MD, Icahn School of Medicine at Mount Sinai, New York, said the study is “more convincing” than previous related research, as it has a larger sample size and a longer follow-up.

“And the exquisite statistical analysis gives more robustness, more solidity, to the hypothesis that drugs that stimulate type 2 and 4 angiotensin II receptors might be protective for dementia,” said Dr. Santos-Gallego, who was not involved with the research.

However, he noted that the retrospective study had some limitations, including the underdiagnosis of dementia. “The diagnosis of dementia is, honestly, very poorly done in the clinical setting,” he said.

As well, the study could be subject to “confounding by indication,” Dr. Santos-Gallego said. “There could be a third variable, another confounding factor, that’s responsible both for the dementia and for the prescription of these drugs,” he added.

For example, he noted that comorbidities such as atrial fibrillation, myocardial infarction, and heart failure might increase the risk of dementia.

He agreed with the investigators that a randomized clinical trial would address these limitations. “All comorbidities would be equally shared” in the randomized groups, and all participants would be given “a specific test for dementia at the same time,” Dr. Santos-Gallego said.

Still, he noted that the new results are in keeping with hypertension guidelines that recommend stimulating drugs.

“This trial definitely shows that the current hypertension guidelines are good treatment for our patients, not only to control blood pressure and not only to prevent infarction [and] to prevent stroke but also to prevent dementia,” said Dr. Santos-Gallego.

Also commenting for this news organization, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, said the new data provide “clarity” on why previous research had differing results on the effect of antihypertensives on cognition.

Among the caveats of this new analysis is that “it’s unclear if the demographics in this study are fully representative of Medicare beneficiaries,” said Dr. Snyder.

She, too, said a clinical trial is important “to understand if there is a preventative and/or treatment potential in the medications that stimulate type 2 and 4 angiotensin II receptors.”

The study received funding from the National Institute on Aging. Dr. Marcum and Dr. Santos-Gallego have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Hearing loss strongly tied to increased dementia risk

Investigators also found that even mild hearing loss was associated with increased dementia risk, although the association was not statistically significant, and that hearing aid use was tied to a 32% decrease in dementia prevalence.

“Every 10-decibel increase in hearing loss was associated with 16% greater prevalence of dementia, such that prevalence of dementia in older adults with moderate or greater hearing loss was 61% higher than prevalence in those with normal hearing,” lead investigator Alison Huang, PhD, senior research associate in epidemiology at Johns Hopkins Bloomberg School of Public Health and core faculty in the Cochlear Center for Hearing and Public Health, Baltimore, Md., told this news organization.
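Suppose the 16%-per-10-dB figure comes from a model in which the log of the prevalence ratio rises linearly with hearing level. That is an assumption, because the article does not describe the model. Under it, the effect compounds multiplicatively rather than adding up: 20 dB corresponds to 1.16 squared, about 1.35, not 1.32. The hypothetical helper below illustrates that scaling. The 61% figure is a separate comparison of hearing-loss categories and is not derived from this formula.

```python
def ratio_for_db_increase(db_increase: float, ratio_per_10db: float = 1.16) -> float:
    """Prevalence ratio implied by a given increase in hearing level (dB).

    Assumes a log-linear model: each additional 10 dB multiplies prevalence
    by ratio_per_10db, so effects compound rather than add.
    """
    return ratio_per_10db ** (db_increase / 10.0)


for db in (10, 20, 30):
    r = ratio_for_db_increase(db)
    print(f"+{db} dB: prevalence ratio {r:.2f} ({(r - 1) * 100:.0f}% higher)")
```

Under this assumption, a 20-dB increase corresponds to about 35% higher prevalence and a 30-dB increase to about 56%.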

The findings were published online in JAMA.

Dose-dependent effect

For the study, researchers analyzed data on 2,413 community-dwelling participants in the National Health and Aging Trends Study, a nationally representative, continuous panel study of U.S. Medicare beneficiaries aged 65 and older.

The study's data were collected during in-home interviews, which sets it apart from previous work that relied on data collected in clinical settings, Dr. Huang said.

“This study was able to capture more vulnerable populations, such as the oldest old and older adults with disabilities, typically excluded from prior epidemiologic studies of the hearing loss–dementia association that use clinic-based data collection, which only captures people who have the ability and means to get to clinics,” Dr. Huang said.

Weighted hearing loss prevalence was 36.7% for mild and 29.8% for moderate to severe hearing loss, and weighted prevalence of dementia was 10.3%.
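A weighted prevalence gives each participant a survey weight so that the sample reflects the population it was drawn from, rather than counting everyone equally. The sketch below shows the basic weighted proportion using made-up weights and outcomes. These are hypothetical values, not study data, and the sketch omits the design-based variance estimation a real survey analysis would need. It also computes the share with normal hearing implied by the figures above. That calculation assumes normal, mild, and moderate to severe hearing loss are the only three categories: 100 - 36.7 - 29.8 = 33.5%.

```python
def weighted_prevalence(outcomes: list[int], weights: list[float]) -> float:
    """Weighted share of participants with the outcome: sum(w*y) / sum(w).

    outcomes: 1 if the participant has the condition, else 0.
    weights:  survey weight for each participant (hypothetical values here).
    """
    if len(outcomes) != len(weights) or not weights:
        raise ValueError("need one weight per outcome and at least one participant")
    return sum(w * y for w, y in zip(weights, outcomes)) / sum(weights)


# Toy example (hypothetical data): unweighted, 2 of 4 have the condition (50%),
# but the heavily weighted first participant pulls the estimate upward.
toy_outcomes = [1, 0, 0, 1]
toy_weights = [2.0, 1.0, 1.0, 0.5]
toy_estimate = weighted_prevalence(toy_outcomes, toy_weights)  # 2.5 / 4.5

# Implied weighted share with normal hearing, assuming three exhaustive categories.
mild_pct, moderate_severe_pct = 36.7, 29.8
normal_pct = 100.0 - mild_pct - moderate_severe_pct

print(f"toy weighted prevalence: {toy_estimate:.1%}")
print(f"implied normal hearing: {normal_pct:.1f}%")
```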

Those with moderate to severe hearing loss were 61% more likely to have dementia than those with normal hearing (prevalence ratio, 1.61; 95% confidence interval, 1.09-2.38).