Reorganizing a Hospital Ward

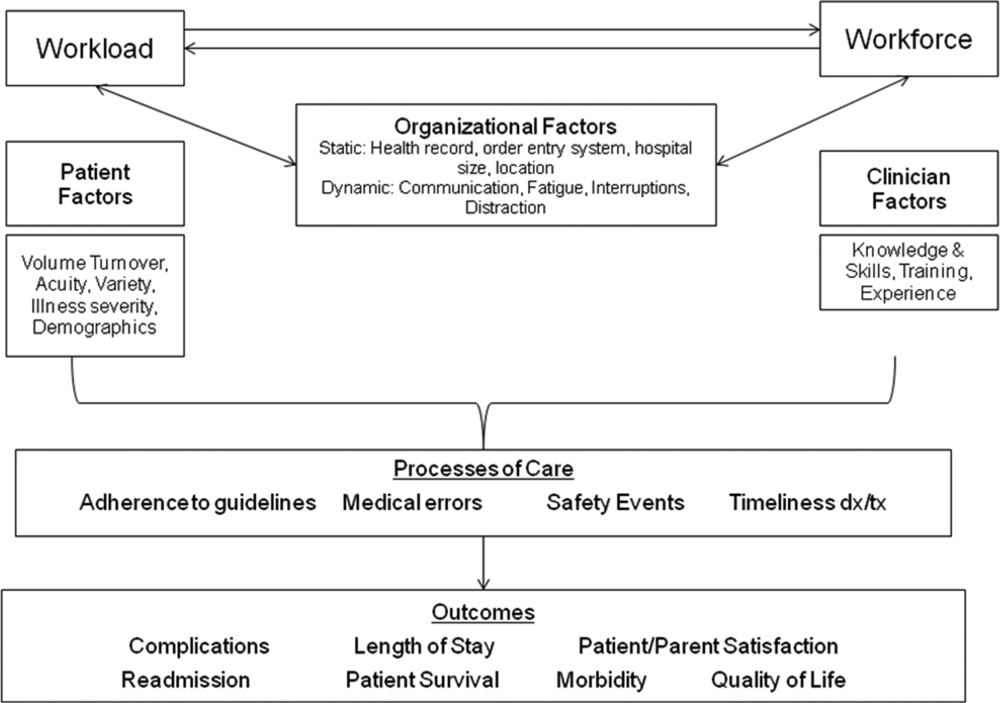

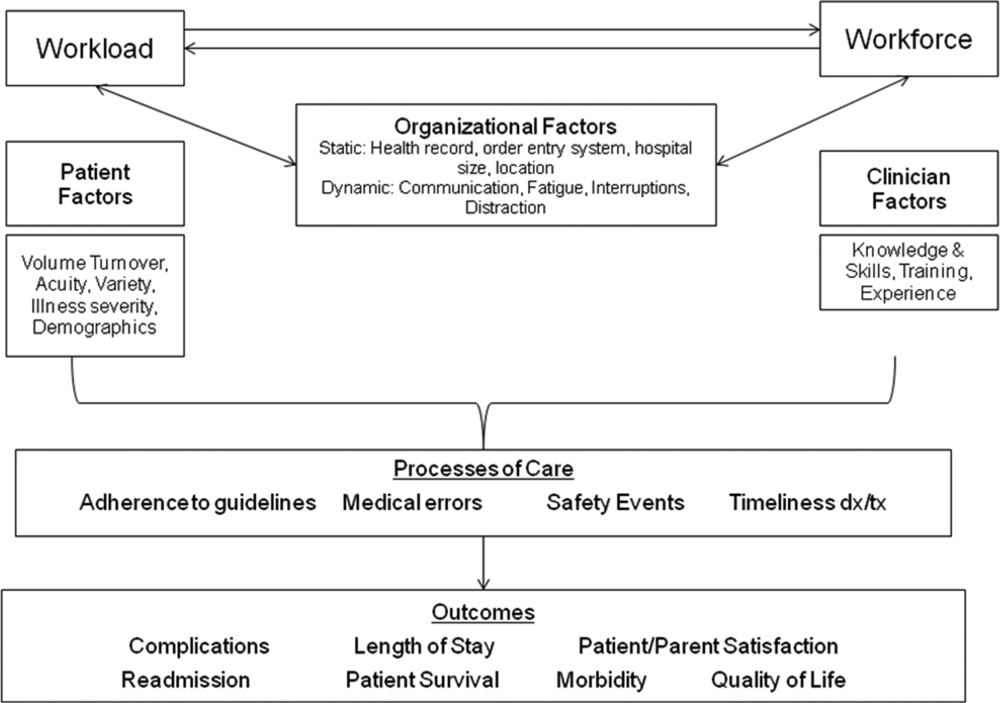

In 2001, the Institute of Medicine called for a major redesign of the US healthcare system, describing the chasm between the quality of care Americans receive and the quality of healthcare they deserve.[1] The healthcare community recognizes its ongoing quality and value gaps, but progress has been limited by outdated care models, fragmented organizational structures, and insufficient advances in system design.[2] Many healthcare organizations are searching for new care delivery models capable of producing greater value.

A major constraint in hospitals is the persistence of underperforming frontline clinical care teams.[3] Physicians typically travel from 1 unit or patient to the next in unpredictable patterns, resulting in missed opportunities to share perspectives and coordinate care with nurses, discharge planning personnel, pharmacists, therapists, and patients. This geographic fragmentation almost certainly contributes to interprofessional silos and hierarchies, nonspecific care plans, and failure to initiate or intensify therapy when indicated.[4] Modern hospital units could benefit from having a standard care model that synchronizes frontline professionals into teams routinely coordinating and progressing a shared plan of care.

EFFECTIVE CLINICAL MICROSYSTEMS REFLECTED IN THE DESIGN OF THE ACCOUNTABLE CARE UNIT

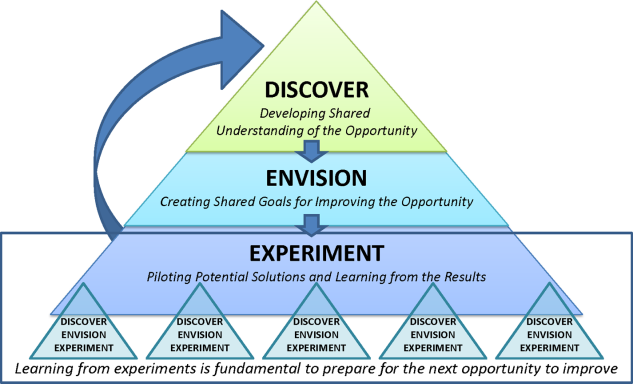

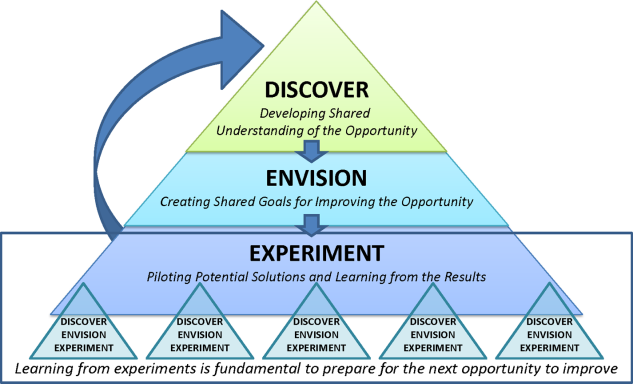

High‐value healthcare organizations deliberately design clinical microsystems.[5] An effective clinical microsystem combines several traits: (1) a small group of people who work together in a defined setting on a regular basis to provide care, (2) linked care processes and a shared information environment that includes individuals who receive that care, (3) performance outcomes, and (4) set service and care aims.[6] For the accountable care unit (ACU) to reflect the traits of an effective clinical microsystem, we designed it with analogous features: (1) unit‐based teams, (2) structured interdisciplinary bedside rounds (SIBR), (3) unit‐level performance reporting, and (4) unit‐level nurse and physician coleadership. We launched the ACU on September 1, 2010, in a high‐acuity 24‐bed medical unit at Emory University Hospital, a 579‐bed tertiary academic medical center. Herein we provide a brief report of our experience implementing and refining the ACU over a 4‐year period to help others gauge its feasibility and sustainability.

FEATURES OF AN ACU

Unit‐Based Teams

Design

Geographic alignment fosters mutual respect, cohesiveness, communication, timeliness, and face‐to‐face problem solving,[7, 8] and has been linked to improved patient satisfaction, decreased length of stay, and reductions in morbidity and mortality.[9, 10, 11] At our hospital, though, patients newly admitted or transferred to the hospital medicine service had traditionally been distributed to physician teams without regard to geography, typically based on physician call schedules or on the custom of balancing patient volumes across colleagues. These practices geographically dispersed our teams: physicians regularly had to travel to 5 to 8 different units each day to see 10 to 18 patients, and nurses could perceive a parade of different physician teams arriving on and leaving the unit at unpredictable times. To align physicians temporally and spatially with unit‐based staff, specific physician teams were assigned to the ACU.

Implementation

The first step in implementing unit‐based teams was to identify the smallest number of physician teams that could be assigned to the ACU. Two internal medicine resident teams are assigned to care for all medical patients on the unit. Each resident team consists of 1 hospital medicine attending physician, 1 internal medicine resident, 3 interns (2 covering the day shift and 1 covering overnight every other night), and up to 2 medical students. The 2 teams alternate 24‐hour call, and the on‐call team admits every patient arriving on the unit. For patients arriving between 6 pm and 7 am, the on‐call overnight intern admits the patients and hands over care to the team in the morning. The on‐call team becomes aware of an incoming patient once the patient has been assigned a bed on the home unit. Several patients per day may arrive as transfers from a medical or surgical intensive care unit, but most arrive as emergency room or direct admissions. On any given day, it is acceptable and typical for a team to have several patients off the ACU. No specific changes were made to nurse staffing; the unit continued to have 1 nurse unit manager, 1 charge nurse per shift, and a nurse‐to‐patient ratio of 1 to 4.
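The call rules above amount to a small assignment protocol. The Python sketch below encodes them under stated assumptions. The article does not say when the 24‐hour call day turns over or which team was on call on a given date, so the 7 am turnover and the reference date are hypothetical parameters. All function names are likewise illustrative.

```python
from datetime import date, datetime, time, timedelta

# Hypothetical parameters: the article does not state when the 24-hour call
# day turns over or which team was on call on any particular date.
CALL_DAY_TURNOVER = time(7, 0)     # assumed: call day runs 7 am to 7 am
REFERENCE_DAY = date(2010, 9, 1)   # assumed: team 1 on call on this date
OVERNIGHT_START = time(18, 0)      # 6 pm, from the text
OVERNIGHT_END = time(7, 0)         # 7 am, from the text


def call_day(arrival: datetime) -> date:
    """Return the call day an arrival belongs to; arrivals before the
    turnover time count toward the previous day's call."""
    if arrival.time() < CALL_DAY_TURNOVER:
        return arrival.date() - timedelta(days=1)
    return arrival.date()


def on_call_team(arrival: datetime) -> int:
    """Teams 1 and 2 alternate 24-hour call, so the parity of the day offset decides."""
    offset = (call_day(arrival) - REFERENCE_DAY).days
    return 1 if offset % 2 == 0 else 2


def admitting_clinician(arrival: datetime) -> str:
    """Arrivals from 6 pm to 7 am are admitted by the on-call overnight intern."""
    t = arrival.time()
    if t >= OVERNIGHT_START or t < OVERNIGHT_END:
        return "on-call overnight intern"
    return "on-call day team"


def assign(arrival: datetime) -> tuple[int, str]:
    """Map a unit arrival to (responsible team, admitting clinician)."""
    return on_call_team(arrival), admitting_clinician(arrival)
```

Under these assumptions, a 3 am arrival on September 2 is attributed to the previous day's on‐call team and its overnight intern. Keeping the turnover time and reference date as named constants makes it easy to swap in a real call schedule.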

Results

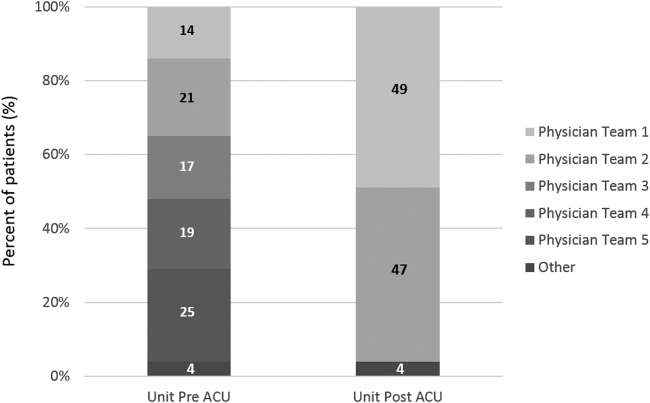

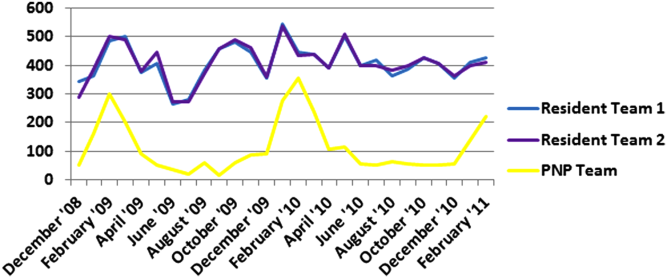

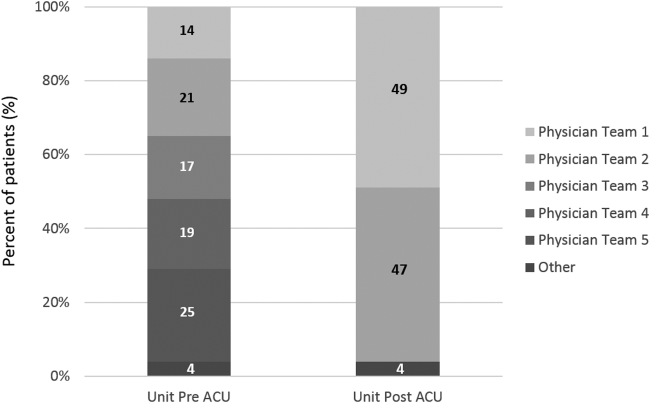

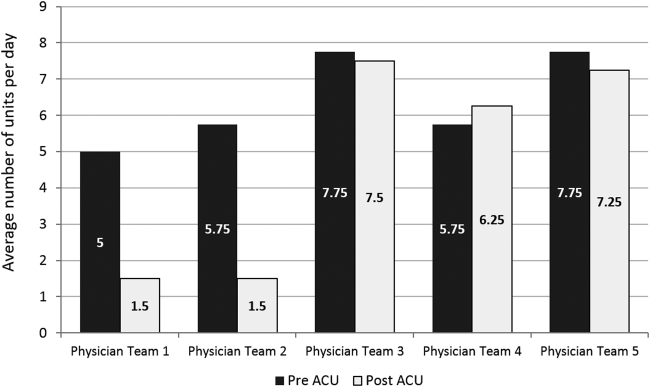

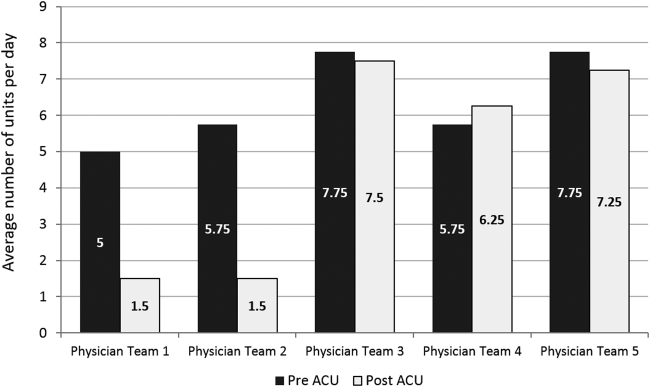

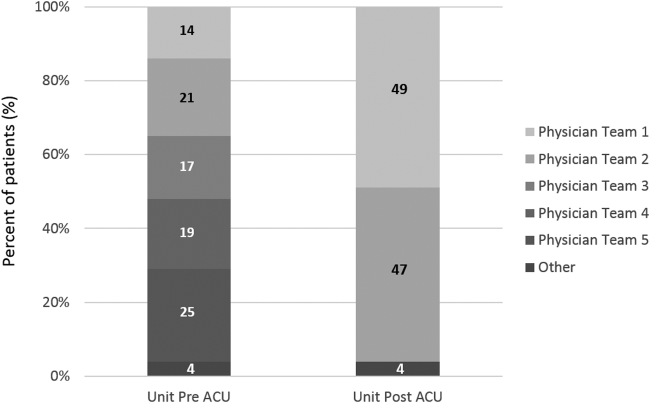

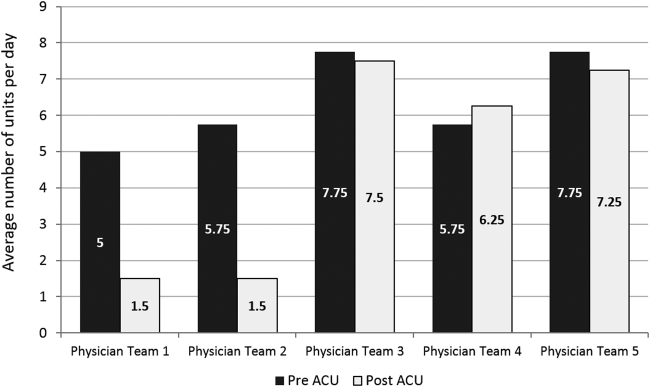

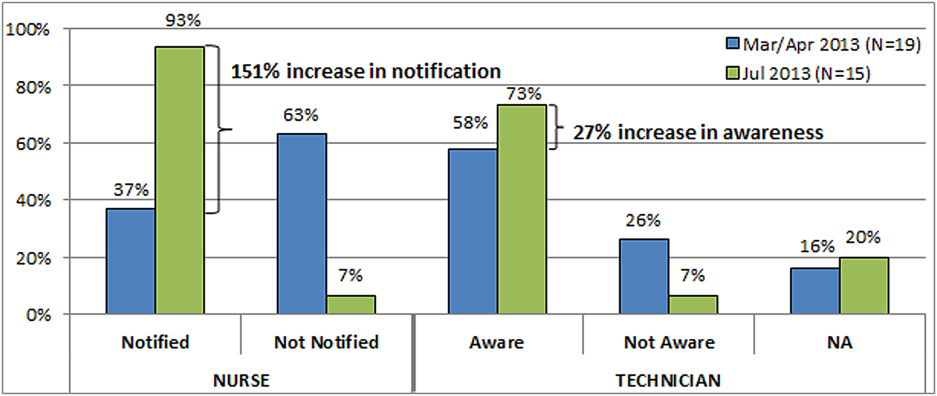

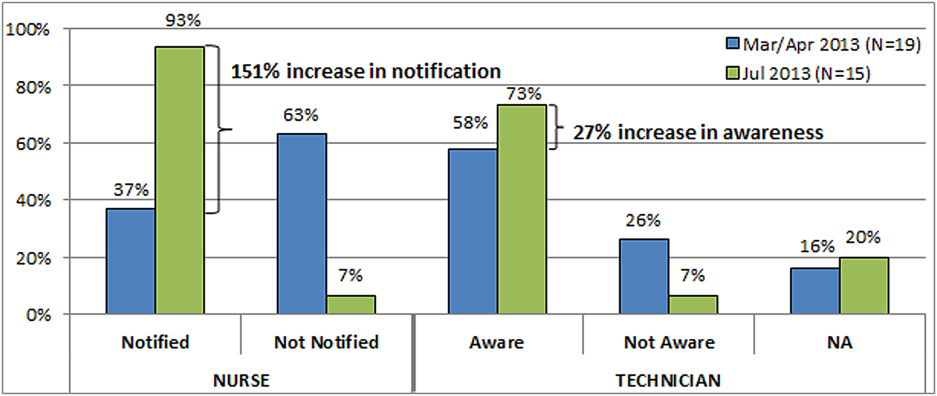

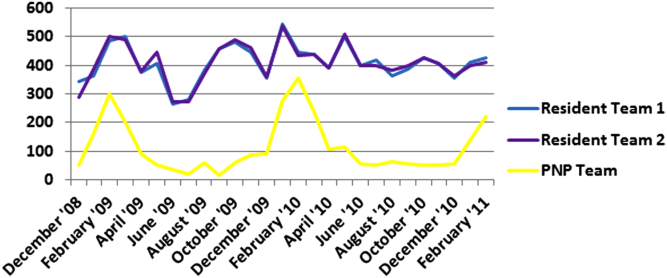

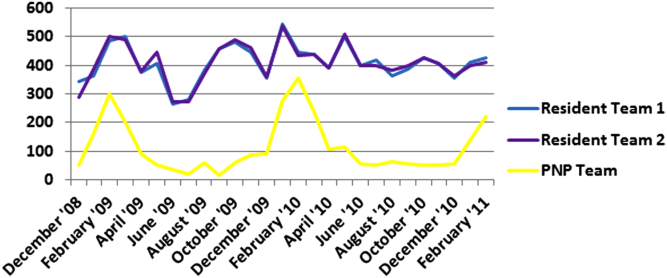

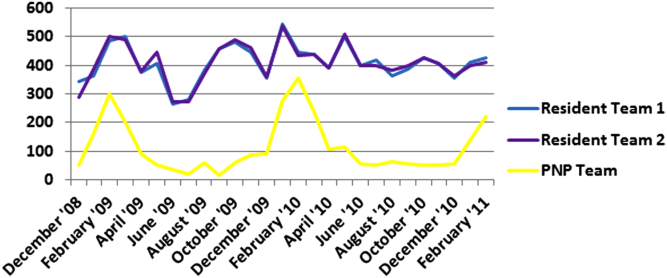

Geographic patient assignment has been successful (Figure 1). Before the ACU was implemented, more than 5 different hospital medicine physician teams cared for patients on the unit, and no single team cared for more than 25% of them. In the ACU, all medical patients are assigned to 1 of the 2 unit‐based physician teams (physician teams 1 and 2), and these patients regularly account for more than 95% of all patients on the unit. Over the 4 years, these 2 ACU teams have had an average of 12.9 total patient encounters per day (compared with 11.8 in the year before the ACU, when these teams were not unit based). On any given day, more than 90% of the 2 unit‐based teams' patients are on the ACU. In contrast, 3 attending‐only hospital medicine teams (physician teams 3, 4, and 5) are still dispersed over 6 to 8 units every day (Figure 2), primarily because of high hospital occupancy and a relative scarcity of units eligible to become dedicated hospital medicine units.
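The concentration figures reported above can be computed from a daily census. Three measures are involved:

- the share of a unit's patients cared for by unit‐based teams;
- the share of each team's patients located on the unit;
- the number of units each team must visit.

A minimal sketch follows. It assumes the census is available as hypothetical (team, unit) pairs. The function names and the toy data are illustrative only and are not the authors' tooling or results.

```python
from collections import defaultdict

# Each census entry is a hypothetical (physician_team, unit) pair for one patient.
Census = list[tuple[int, str]]


def share_of_unit_under_unit_teams(census: Census, unit: str, unit_teams: set[int]) -> float:
    """Fraction of patients located on `unit` who are cared for by a unit-based team."""
    teams_on_unit = [team for team, loc in census if loc == unit]
    if not teams_on_unit:
        return 0.0
    return sum(team in unit_teams for team in teams_on_unit) / len(teams_on_unit)


def share_of_team_on_unit(census: Census, team: int, unit: str) -> float:
    """Fraction of `team`'s patients who are located on `unit`."""
    locations = [loc for t, loc in census if t == team]
    if not locations:
        return 0.0
    return sum(loc == unit for loc in locations) / len(locations)


def units_per_team(census: Census) -> dict[int, int]:
    """Number of distinct units each team must visit, a simple dispersion measure."""
    units: dict[int, set[str]] = defaultdict(set)
    for team, loc in census:
        units[team].add(loc)
    return {team: len(locs) for team, locs in units.items()}


# Toy census for illustration only (not data from the article).
census = [(1, "ACU"), (1, "ACU"), (1, "ACU"), (1, "5A"),
          (2, "ACU"), (2, "ACU"),
          (3, "ACU"), (3, "5A"), (3, "6B"), (3, "7C")]
```

Tracking these measures daily, rather than as a one‐time snapshot, would show whether geographic assignment holds up when hospital occupancy rises.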

Effects of the Change

Through unit‐based teams, the ACU achieves the first trait of an effective clinical microsystem. Although an evaluation of the cultural gains is beyond the scope of this article, the logistical advantages are self‐evident. When the fewest necessary physician teams oversee care for nearly all patients on 1 unit, and those teams simultaneously have nearly all of their patients on that unit, it becomes possible to schedule interdisciplinary teamwork activities, such as SIBR, that would not otherwise be feasible.

Structured Interdisciplinary Bedside Rounds

Design

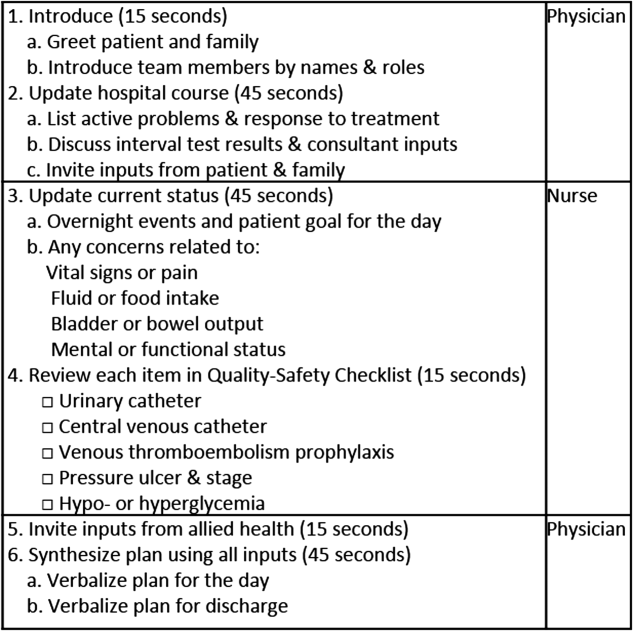

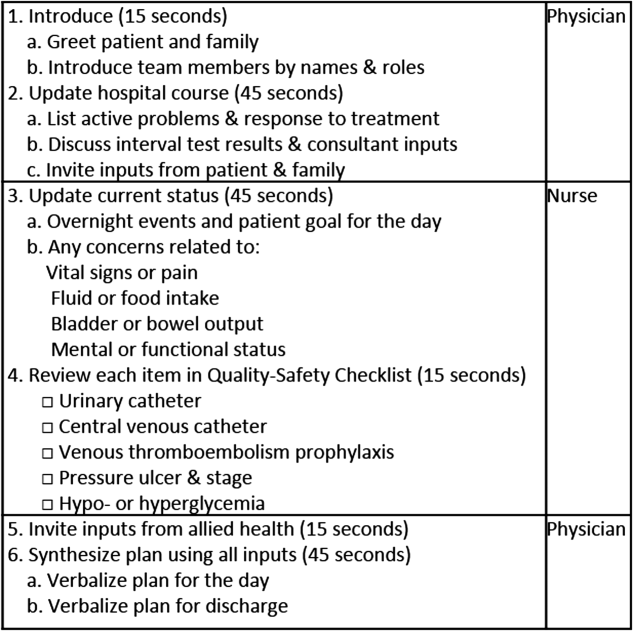

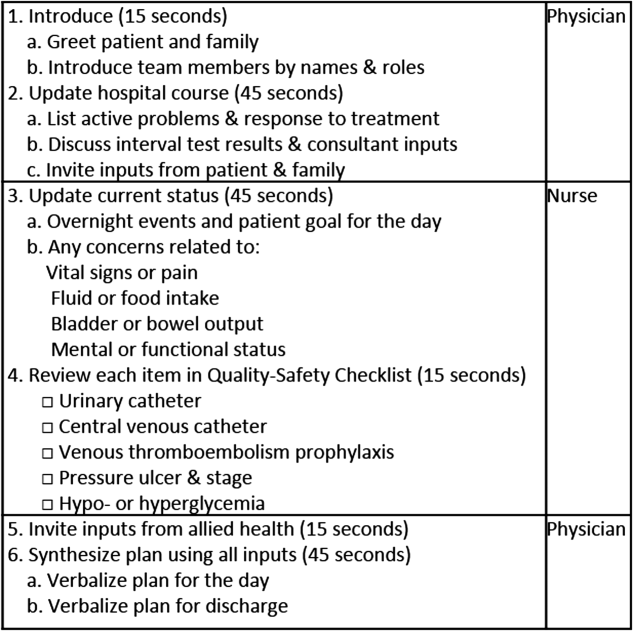

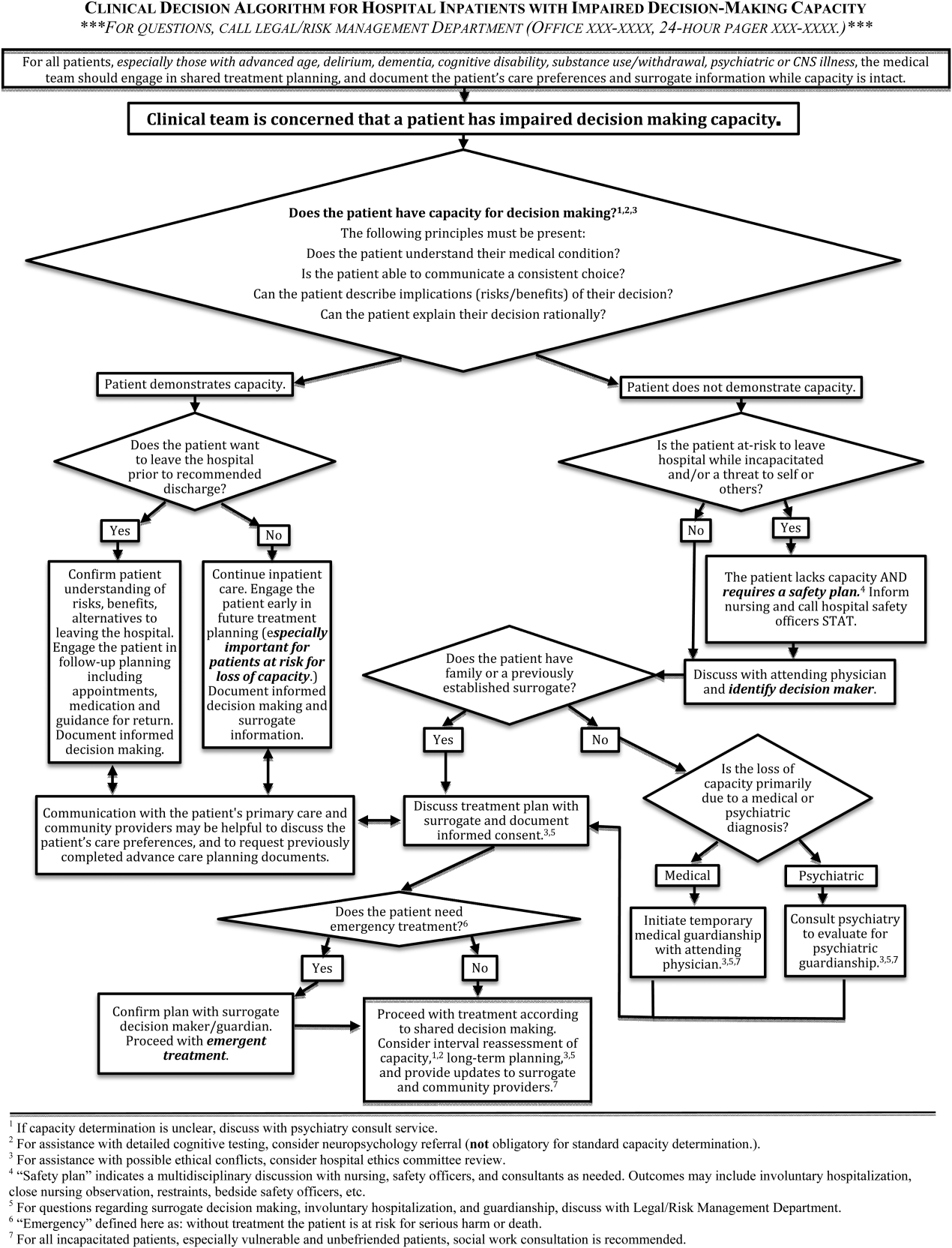

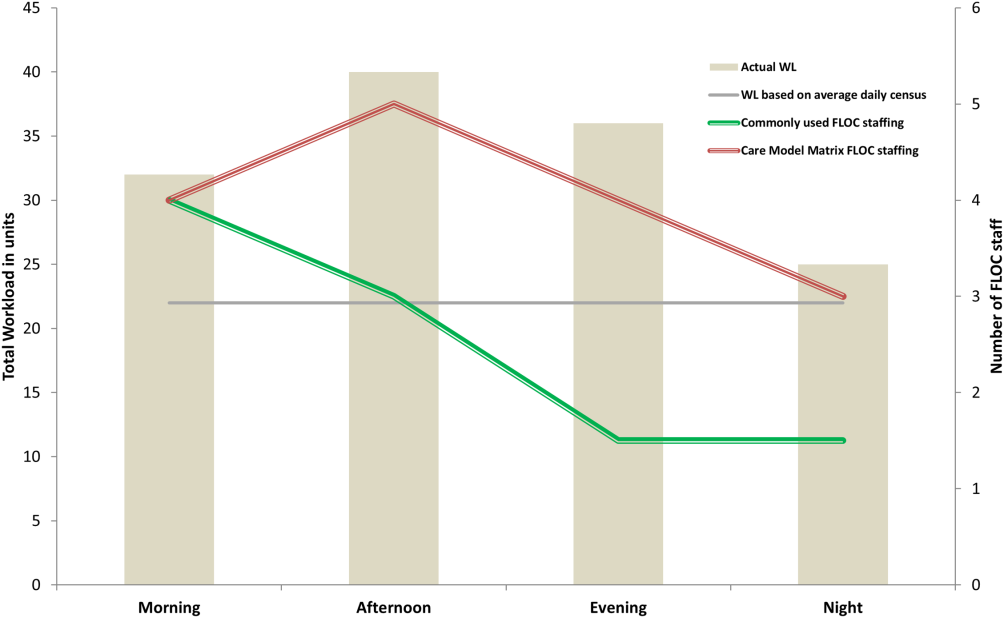

To reflect the second trait of an effective clinical microsystem, a hospital unit should routinely combine best practices for communication, including daily goals sheets,[12] safety checklists,[13] and multidisciplinary rounds.[14, 15] The ACU achieves this through SIBR, a patient‐ and family‐centered, team‐based approach to rounds. SIBR brings the nurse, physician, and available allied health professionals to the patient's bedside every day to exchange perspectives in a standard format, cross‐check information with the patient, family, and one another, and articulate a clear plan for the day. Before the SIBR hour starts, physicians and nurses have already performed independent patient assessments through usual activities such as handover, chart review, patient interviews, and physical examinations. Participants in SIBR are expected to give or receive inputs according to the standard SIBR communication protocol (Figure 3), review a quality‐safety checklist together, and ensure the plan of care is verbalized. Including the patient and family allows all parties to hear and be heard, cross‐check information for accuracy, and hold each person accountable for contributions.[16, 17]

Implementation

Each ACU staff member receives orientation to the SIBR communication protocol and is expected to be prepared and punctual for the midmorning start times. The charge nurse serves as the SIBR rounds manager, ensuring that physicians waste no time searching for the next nurse and that each team's eligible patients are seen within the SIBR hour. For each patient, SIBR begins when the nurse and physician are both present at the bedside. The intern begins by introducing team members and then reviews the patient's active problem list, response to treatment, and interval test results or consultant inputs. The nurse then relays the patient's goal for the day, overnight events, and nursing concerns, and reviews the quality‐safety checklist. Finally, the intern invites allied health professionals to share inputs that might affect medical decision making or discharge planning before synthesizing all inputs into a shared plan for the day.
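The bedside sequence described above can be written as an ordered checklist. This is one way a rounds manager or preceptor might audit an encounter. The sketch paraphrases the prose in this section; it is not a transcription of the official SIBR protocol in Figure 3. The step labels, role names, and the `audit` and `can_start` helpers are hypothetical.

```python
# Steps paraphrased from the prose; the official SIBR protocol (Figure 3)
# may word or order them differently. Role and step labels are hypothetical.
SIBR_SEQUENCE: list[tuple[str, str]] = [
    ("intern", "introduce team members"),
    ("intern", "active problem list"),
    ("intern", "response to treatment"),
    ("intern", "interval test results or consultant inputs"),
    ("nurse", "goal for the day"),
    ("nurse", "overnight events"),
    ("nurse", "nursing concerns"),
    ("nurse", "quality-safety checklist"),
    ("intern", "invite allied health inputs"),
    ("intern", "synthesize shared plan for the day"),
]


def can_start(present_roles: set[str]) -> bool:
    """SIBR for a patient starts only once a nurse and a physician are at the bedside."""
    return {"nurse", "intern"} <= present_roles


def audit(observed: list[tuple[str, str]]) -> tuple[list[tuple[str, str]], bool]:
    """Compare observed steps with SIBR_SEQUENCE.

    Returns (missing_steps, in_order). in_order is True when the recognized
    steps that did occur follow the standard relative order.
    """
    position = {step: i for i, step in enumerate(SIBR_SEQUENCE)}
    seen = [position[step] for step in observed if step in position]
    missing = [step for step in SIBR_SEQUENCE if step not in observed]
    return missing, seen == sorted(seen)
```

Separating "missing" from "out of order" mirrors the difference between skipping an input and giving it at the wrong point in the conversation.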

Throughout SIBR, the patient and family are encouraged to ask questions or correct misinformation. Newcomers to SIBR often assume that inviting patient input will disrupt efficiency. We have found, however, that teams readily learn to manage this risk, for instance by discerning the core question among multiple seemingly disparate ones, or by volunteering to return after the SIBR hour to explore a complex issue.

Results

Since the launch of the ACU on September 1, 2010, SIBR has been embedded as a routine on the unit, conducted every day by both physician teams and the nursing staff. Patients not yet evaluated by the entire physician team, typically those who arrived on the unit overnight, are not considered eligible for SIBR. For patients who opt out for personal reasons, or who are away from the unit for a procedure or test, SIBR occurs without the patient so the rest of the team can still exchange inputs and formulate a plan of care. A visitor to the unit sees SIBR start punctually at 9 am and 10 am for successive teams, with each team completing SIBR on its eligible patients in under 60 minutes.
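The eligibility rules above reduce to a short decision function. A minimal sketch follows, assuming the unit records 3 yes/no facts per patient. The `PatientStatus` fields and the `SibrMode` labels are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class SibrMode(Enum):
    NOT_ELIGIBLE = "not yet evaluated by the entire physician team"
    WITHOUT_PATIENT = "team exchanges inputs and plans without the patient"
    AT_BEDSIDE = "bedside SIBR with the patient and family"


@dataclass
class PatientStatus:
    # Hypothetical fields standing in for whatever the unit actually tracks.
    evaluated_by_full_team: bool
    opted_out: bool = False
    off_unit: bool = False  # away for a procedure or test


def sibr_mode(p: PatientStatus) -> SibrMode:
    """Apply the eligibility rules in the order described in the text."""
    if not p.evaluated_by_full_team:
        return SibrMode.NOT_ELIGIBLE
    if p.opted_out or p.off_unit:
        return SibrMode.WITHOUT_PATIENT
    return SibrMode.AT_BEDSIDE
```

The order of the checks matters: a patient who has not yet been evaluated is ineligible even if they are also off the unit.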

Effects of the Change

The second trait of an effective clinical microsystem is achieved through SIBR, which provides a routine forum for staff to share information with each other and with the patient. By practicing SIBR every workday, staff have multiple routine opportunities to experience an environment reflective of high‐performing frontline units.[18] We found that, as with other competencies, performance in SIBR followed a bell curve. For this reason, by the start of the third year we added SIBR certification. This skills training program evaluates permanent and rotating staff through an in vivo observed structured clinical exam, typically with a charge nurse or physician as preceptor. A nurse, medical student, intern, or resident achieves SIBR certification by demonstrating a series of specific high‐performance SIBR behaviors in 5 of 6 consecutive patients. In the first 2 years of this voluntary certification program, all daytime nursing staff and rotating interns achieved this demonstration of interdisciplinary teamwork competence.
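The 5‐of‐6 certification rule can be checked with a sliding window over a learner's observed encounters. The sketch below makes 2 assumptions:

- any 6 consecutive observed patients may count, not only the first 6;
- a patient counts as a pass only when every required behavior is shown.

The article does not list the behaviors themselves, so `required` is left as a caller‐supplied set. Both helper names are hypothetical.

```python
def encounter_passes(observed: set[str], required: set[str]) -> bool:
    """An observed patient counts as a pass if every required behavior was shown."""
    return required <= observed


def meets_certification(passes: list[bool], needed: int = 5, window: int = 6) -> bool:
    """True if some run of `window` consecutive observed patients contains at
    least `needed` passing encounters (5 of 6 by default)."""
    if len(passes) < window:
        return False
    count = sum(passes[:window])
    if count >= needed:
        return True
    # Slide the window one patient at a time, updating the count incrementally.
    for i in range(window, len(passes)):
        count += passes[i] - passes[i - window]
        if count >= needed:
            return True
    return False
```

If the program instead requires the first 6 observed patients to meet the threshold, only the initial window check is needed.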

Unit‐Level Performance Reporting

Design

Hospital outcomes are determined on the clinical frontline. To be effective at managing unit outcomes, performance reports must be made available to unit leadership and staff.[5, 16] However, many hospitals still report performance at the level of the facility or service line. This limits the relevance of reports for the people who directly determine outcomes.

Implementation

For the first year, a data analyst was available to prepare and distribute unit‐level performance reports to unit leaders quarterly, including rates of in‐hospital mortality, bloodstream infections, patient satisfaction, length of stay, and 30‐day readmissions. Preparing these reports was labor intensive, requiring the analyst to acquire raw data from multiple sources and to build the reports manually.
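Because the first‐year reports were assembled manually, a small script that aggregates patient‐level records by unit illustrates what automating part of the work could look like. The sketch assumes a hypothetical `Stay` record already joined from the source systems. It computes only crude per‐patient rates and mean length of stay. It omits patient satisfaction, infection denominators such as device days, and risk adjustment, and it is not the analyst's actual method.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Stay:
    # Hypothetical patient-level record; real sources and fields will differ.
    unit: str
    los_days: float
    died_in_hospital: bool
    readmitted_30d: bool


def unit_report(stays: list[Stay]) -> dict[str, dict[str, float]]:
    """Group stays by unit and compute crude unit-level rates."""
    by_unit: dict[str, list[Stay]] = defaultdict(list)
    for s in stays:
        by_unit[s.unit].append(s)
    report = {}
    for unit, group in by_unit.items():
        n = len(group)
        report[unit] = {
            "patients": n,
            "mortality_rate": sum(s.died_in_hospital for s in group) / n,
            "readmission_30d_rate": sum(s.readmitted_30d for s in group) / n,
            "mean_los_days": mean(s.los_days for s in group),
        }
    return report


# Toy records for illustration only (not data from the article).
stays = [Stay("ACU", 4.0, False, False),
         Stay("ACU", 6.0, True, False),
         Stay("5A", 3.0, False, True)]
```

Even this crude version shifts the analyst's effort from building each report to validating the joined data.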

Results

In an analysis comparing outcomes for every patient spending at least 1 night on the unit in the year before and the year after implementation, we observed reductions in in‐hospital mortality and length of stay. Unadjusted in‐hospital mortality decreased from 2.3% to 1.1% (P=0.004), with no significant change in referrals to hospice (5.4% to 4.5%, P=0.176), and length of stay decreased from 5.0 to 4.5 days (P=0.001).[19] A complete report of these findings, including an analysis of concurrent control groups, is beyond the scope of this article. Here we highlight an effect that the reduction in in‐hospital mortality had on ACU leadership and staff.
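To put the reported figures in perspective, the arithmetic below derives absolute and relative changes from the before and after values above. These derived percentages are our own arithmetic, not figures reported by the authors. They inherit every limitation of the unadjusted before‐and‐after comparison, and the hospice change was not statistically significant. In round numbers:

- mortality falls by 1.2 percentage points, about a 52% relative reduction;
- length of stay falls by 0.5 days, a 10% reduction.

```python
def change(before: float, after: float) -> tuple[float, float]:
    """Return (absolute change, relative change as a fraction of `before`)."""
    absolute = after - before
    return absolute, absolute / before


# Values copied from the text; units are given in each label.
reported = {
    "in-hospital mortality (%)": (2.3, 1.1),
    "hospice referral (%)": (5.4, 4.5),
    "length of stay (days)": (5.0, 4.5),
}

for name, (before, after) in reported.items():
    absolute, relative = change(before, after)
    print(f"{name}: {absolute:+.1f} absolute, {relative:+.0%} relative")
```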

Effects of the Change

Noting the apparent mortality reduction, ACU leadership encouraged permanent staff and rotating trainees to regard an unexpected death as a never event. The concept is perhaps self‐evident, but before the ACU we had never been organized to reflect on it or to use routines to act on it. The unit defined an unexpected death as one in which the patient was not actively receiving comfort measures. The coleaders raised this at the monthly meet and greet, where ACU leadership brings the permanent staff and new rotating trainees together to introduce themselves by first name. They proposed that any unexpected death in the month ahead could represent a failure to:

- recognize or respond to deterioration;
- consider an alternative or undertreated process;
- transfer the patient to a higher level of care;
- deliver more timely and appropriate end‐of‐life care.

It is our impression that this introspection was extraordinarily meaningful and would not have occurred without unit‐based teams, unit‐level performance data, and ACU leadership learning to use this framing.

Unit‐Level Nurse and Physician Coleadership

Design

Effective leadership is a major driver of successful clinical microsystems.[20] The ACU is designed to be co‐led by a nurse unit manager and physician medical director. The leadership pair was charged simply with developing patient‐centered teams and ensuring the staff felt connected to the values of the organization and accountable to each other and the outcomes of the unit.

Implementation

Nursing leadership and hospital executives influenced the selection of the physician medical director, which was a way for them to demonstrate support for the care model. Over the first 4 years, the physician medical director position has been afforded a 10% to 20% reduction in clinical duties to fulfill the charge. The leadership pair sets expectations for the ACU's code of conduct, standard operating procedures (eg, SIBR), and best‐practice protocols.

Results

The leadership pair tries explicitly to role model the behaviors enumerated in the ACU's relational covenant. The covenant is the product of a facilitated exercise the pair commissioned in the first year, in which the entire staff drafted and signed a list of behaviors they wished to see from each other (see Supporting Information, Appendix 1, in the online version of this article). The physician medical director, along with the charge nurses, coaches staff and trainees wishing to achieve SIBR certification. Over the 4 years, the pair has introduced best‐practice protocols for glycemic control, venous thromboembolism prophylaxis, removal of idle venous and bladder catheters, and bedside goals‐of‐care conversations.

Effects of the Change

Where there had previously been no explicit code of conduct, standard operating procedures such as SIBR, or focused efforts to optimize unit outcomes, the coleadership pair fills a management gap. These coleaders play an essential role in building momentum for the structure and processes of the ACU. The leadership pair has also become a primary resource for intraorganizational spread of the ACU model to medical and surgical wards, as well as geriatric, long‐term acute, and intensive care units.

CHALLENGES

Challenges with implementing the ACU fell into 3 primary categories: (1) performing change management required for a successful launch, (2) solving logistics of maintaining unit‐based physician teams, and (3) training physicians and nurses to perform SIBR at a high level.

For change management, the leadership pair explained the rationale of the model to all staff in sufficient detail to launch the ACU. To build momentum for ACU routines and relationships, the physician leader and the nurse unit manager were both present on the unit daily for the first 100 days. As ACU operations became routine and clinicians developed competence, these leaders gradually reduced the time they spent on the unit.

Creating and maintaining unit‐based physician teams required shared understanding and coordination between on‐call hospital medicine physicians and the bed control office. This allowed new admissions and transfers to be consistently assigned to unit‐based teams without adversely affecting patient flow. We found this challenge to be manageable once stakeholders accepted the rationale for the care model and worked out how to support it.

Building high‐performance SIBR across the unit, including competence among rotating trainees new to the model, requires the individualized assessment and feedback necessary for SIBR certification. We addressed this challenge by creating a SIBR train‐the‐trainer program: a list of observable high‐performance SIBR behaviors coupled with a short course on giving effective feedback to learners. Once the ACU had several nurse and physician SIBR trainers in the staffing mix every day, we found that the required SIBR coaching expertise was available when needed.

CONCLUSION

Improving value and reliability in hospital care may require new models of care. The ACU is a hospital care model specifically designed to organize physicians, nurses, and allied health professionals into high‐functioning, unit‐based teams. It brings together standard workflow, patient‐centered communication, quality‐safety checklists, best‐practice protocols, performance measurement, and progressive leadership. Our experience with the ACU suggests that hospital units can be reorganized as effective clinical microsystems in which a consistent group of unit professionals shares:

- time and space;
- a sense of purpose;
- a code of conduct;
- a shared mental model for teamwork;
- an interprofessional management structure;
- an important level of accountability to each other and to their patients.

Disclosures:

- Jason Stein, MD: grant support from the US Health Resources and Services Administration to support organizational implementation of the care model described; recipient of consulting fees and royalties for licensed intellectual property to support implementation of the care model described; founder and president of the nonprofit Centripital, provider of consulting services to hospital systems implementing the care model described. The terms of this arrangement have been reviewed and approved by Emory University in accordance with its conflict of interest policies.
- Liam Chadwick, PhD, and Diaz Clark, MS, RN: recipients of consulting fees through Centripital to support implementation of the care model described.
- Bryan W. Castle, MBA, RN: grant support from the US Health Resources and Services Administration to support organizational implementation of the care model described; recipient of consulting fees through Centripital to support implementation of the care model described.

The authors report no other conflicts of interest.

REFERENCES

1. Institute of Medicine, Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

2. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(3):759–769.

3. The end of the beginning: patient safety five years after “to err is human”. Health Aff (Millwood). 2004;Suppl Web Exclusives:W4‐534–545.

4. Clinical inertia. Ann Intern Med. 2001;135(9):825–834.

5. The four habits of high‐value health care organizations. N Engl J Med. 2011;365(22):2045–2047.

6. Using a Malcolm Baldrige framework to understand high‐performing clinical microsystems. Qual Saf Health Care. 2007;16(5):334–341.

7. Relational coordination among nurses and other providers: impact on the quality of patient care. J Nurs Manag. 2010;18(8):926–937.

8. Unit‐based care teams and the frequency and quality of physician‐nurse communications. Arch Pediatr Adolesc Med. 2011;165(5):424–428.

9. Reducing cardiac arrests in the acute admissions unit: a quality improvement journey. BMJ Qual Saf. 2013;22(12):1025–1031.

10. Evolving practice of hospital medicine and its impact on hospital throughput and efficiencies. J Hosp Med. 2012;7(8):649–654.

11. Improvement projects led by unit‐based teams of nurse, physician, and quality leaders reduce infections, lower costs, improve patient satisfaction, and nurse‐physician communication. AHRQ Health Care Innovations Exchange. Available at: https://innovations.ahrq.gov/profiles/improvement-projects-led-unit-based-teams-nurse-physician-and-quality-leaders-reduce. Accessed May 4, 2014.

12. The daily goals communication sheet: a simple and novel tool for improved communication and care. Jt Comm J Qual Patient Saf. 2008;34(10):608–613, 561.

13. Implementation of a mandatory checklist of protocols and objectives improves compliance with a wide range of evidence‐based intensive care unit practices. Crit Care Med. 2009;37(10):2775–2781.

14. Structured interdisciplinary rounds in a medical teaching unit: improving patient safety. Arch Intern Med. 2011;171(7):678–684.

15. Improving teamwork: impact of structured interdisciplinary rounds on a hospitalist unit. J Hosp Med. 2011;6(2):88–93.

16. Integrating patient safety into the clinical microsystem. Qual Saf Health Care. 2004;13(suppl 2):ii34–ii38.

17. Collaborative cross‐checking to enhance resilience. Cogn Tech Work. 2007;9:155–162.

18. Microsystems in health care: part 1. Learning from high‐performing front‐line clinical units. Jt Comm J Qual Improv. 2002;28(9):472–493.

19. Mortality reduction associated with structure, process, and management redesign of a hospital medicine unit. J Hosp Med. 2012;7(suppl 2):115.

20. Microsystems in health care: part 5. How leaders are leading. Jt Comm J Qual Saf. 2003;29(6):297–308.

In 2001, the Institute of Medicine called for a major redesign of the US healthcare system, describing the chasm between the quality of care Americans receive and the quality of healthcare they deserve.[1] The healthcare community recognizes its ongoing quality and value gaps, but progress has been limited by outdated care models, fragmented organizational structures, and insufficient advances in system design.[2] Many healthcare organizations are searching for new care delivery models capable of producing greater value.

A major constraint in hospitals is the persistence of underperforming frontline clinical care teams.[3] Physicians typically travel from 1 unit or patient to the next in unpredictable patterns, resulting in missed opportunities to share perspectives and coordinate care with nurses, discharge planning personnel, pharmacists, therapists, and patients. This geographic fragmentation almost certainly contributes to interprofessional silos and hierarchies, nonspecific care plans, and failure to initiate or intensify therapy when indicated.[4] Modern hospital units could benefit from having a standard care model that synchronizes frontline professionals into teams routinely coordinating and progressing a shared plan of care.

EFFECTIVE CLINICAL MICROSYSTEMS REFLECTED IN THE DESIGN OF THE ACCOUNTABLE CARE UNIT

High‐value healthcare organizations deliberately design clinical microsystems.[5] An effective clinical microsystem combines several traits: (1) a small group of people who work together in a defined setting on a regular basis to provide care, (2) linked care processes and a shared information environment that includes individuals who receive that care, (3) performance outcomes, and (4) set service and care aims.[6] For the accountable care unit (ACU) to reflect the traits of an effective clinical microsystem, we designed it with analogous features: (1) unit‐based teams, (2) structured interdisciplinary bedside rounds (SIBR), (3) unit‐level performance reporting, and (4) unit‐level nurse and physician coleadership. We launched the ACU on September 1, 2010 in a high‐acuity 24‐bed medical unit at Emory University Hospital, a 579‐bed tertiary academic medical center. Herein we provide a brief report of our experience implementing and refining the ACU over a 4‐year period to help others gauge feasibility and sustainability.

FEATURES OF AN ACU

Unit‐Based Teams

Design

Geographic alignment fosters mutual respect, cohesiveness, communication, timeliness, and face‐to‐face problem solving,[7, 8] and has been linked to improved patient satisfaction, decreased length of stay, and reductions in morbidity and mortality.[9, 10, 11] At our hospital, though, patients newly admitted or transferred to the hospital medicine service traditionally had been distributed to physician teams without regard to geography, typically based on physician call schedules or traditions of balancing patient volumes across colleagues. These traditional practices geographically dispersed our teams. Physicians would be forced regularly to travel to 5 to 8 different units each day to see 10 to 18 patients. Nurses might perceive this as a parade of different physician teams coming and going off the unit at unpredictable times. To temporally and spatially align physicians with unit‐based staff, specific physician teams were assigned to the ACU.

Implementation

The first step in implementing unit‐based teams was to identify the smallest number of physician teams that could be assigned to the ACU. Two internal medicine resident teams are assigned to care for all medical patients in the unit. Each resident team consists of 1 hospital medicine attending physician, 1 internal medicine resident, 3 interns (2 covering the day shift and 1 overnight every other night), and up to 2 medical students. The 2 teams alternate a 24‐hour call cycle where the on‐call team admits every patient arriving to the unit. For patients arriving to the unit from 6 pm to 7 am, the on‐call overnight intern admits the patients and hands over care to the team in the morning. The on‐call team becomes aware of an incoming patient once the patient has been assigned a bed in the home unit. Several patients per day may arrive on the unit as transfers from a medical or surgical intensive care unit, but most patients arrive as emergency room or direct admissions. On any given day it is acceptable and typical for a team to have several patients off the ACU. No specific changes were made to nurse staffing, with the unit continuing to have 1 nurse unit manager, 1 charge nurse per shift, and a nurse‐to‐patient ratio of 1 to 4.

Results

Geographic patient assignment has been successful (Figure 1). Prior to implementing the ACU, more than 5 different hospital medicine physician teams cared for patients on the unit, with no single team caring for more than 25% of them. In the ACU, all medical patients are assigned to 1 of the 2 unit‐based physician teams (physician teams 1 and 2), which regularly represents more than 95% of all patients on the unit. Over the 4 years, these 2 ACU teams have had an average of 12.9 total patient encounters per day (compared to 11.8 in the year before the ACU when these teams were not unit based). The 2 unit‐based teams have over 90% of their patients on the ACU daily. In contrast, 3 attending‐only hospital medicine teams (physician teams 3, 4, and 5) are still dispersed over 6 to 8 units every day (Figure 2), primarily due to high hospital occupancy and a relative scarcity of units eligible to become dedicated hospital medicine units.

Effects of the Change

Through unit‐based teams, the ACU achieves the first trait of an effective clinical microsystem. Although an evaluation of the cultural gains are beyond the scope of this article, the logistical advantages are self‐evident; having the fewest necessary physician teams overseeing care for nearly all patients in 1 unit and where those physician teams simultaneously have nearly all of their patients on that 1 unit, makes it possible to schedule interdisciplinary teamwork activities, such as SIBR, not otherwise feasible.

Structured Interdisciplinary Bedside Rounds

Design

To reflect the second trait of an effective clinical microsystem, a hospital unit should routinely combine best practices for communication, including daily goals sheets,[12] safety checklists,[13] and multidisciplinary rounds.[14, 15] ACU design achieves this through SIBR, a patient‐ and family‐centered, team‐based approach to rounds that brings the nurse, physician, and available allied health professionals to the patient's bedside every day to exchange perspectives using a standard format to cross‐check information with the patient, family, and one another, and articulate a clear plan for the day. Before the SIBR hour starts, physicians and nurses have already performed independent patient assessments through usual activities such as handover, chart review, patient interviews, and physical examinations. Participants in SIBR are expected to give or receive inputs according to the standard SIBR communication protocol (Figure 3), review a quality‐safety checklist together, and ensure the plan of care is verbalized. Including the patient and family allows all parties to hear and be heard, cross‐check information for accuracy, and hold each person accountable for contributions.[16, 17]

Implementation

Each ACU staff member receives orientation to the SIBR communication protocol and is expected to be prepared and punctual for the midmorning start times. The charge nurse serves as the SIBR rounds manager, ensuring physicians waste no time searching for the next nurse and each team's eligible patients are seen in the SIBR hour. For each patient, SIBR begins when the nurse and physician are both present at the bedside. The intern begins SIBR by introducing team members before reviewing the patient's active problem list, response to treatment, and interval test results or consultant inputs. The nurse then relays the patient's goal for the day, overnight events, nursing concerns, and reviews the quality‐safety checklist. The intern then invites allied health professionals to share inputs that might impact medical decision making or discharge planning, before synthesizing all inputs into a shared plan for the day.

Throughout SIBR, the patient and family are encouraged to ask questions or correct misinformation. Although newcomers to SIBR often imagine that inviting patient inputs will disrupt efficiency, we have found teams readily learn to manage this risk, for instance discerning the core question among multiple seemingly disparate ones, or volunteering to return after the SIBR hour to explore a complex issue.

Results

Since the launch of the ACU on September 1, 2010, SIBR has been embedded as a routine on the unit with both physician teams and the nursing staff conducting it every day. Patients not considered eligible for SIBR are those whom the entire physician team has not yet evaluated, typically patients who arrived to the unit overnight. For patients who opt out due to personal preference, or for patients away from the unit for a procedure or a test, SIBR occurs without the patient so the rest of the team can still exchange inputs and formulate a plan of care. A visitor to the unit sees SIBR start punctually at 9 am and 10 am for successive teams, with each completing SIBR on eligible patients in under 60 minutes.

Effects of the Change

The second trait of an effective clinical microsystem is achieved through SIBR's routine forum for staff to share information with each other and with the patient. Because SIBR is practiced every workday, staff have multiple routine opportunities to experience an environment reflective of high‐performing frontline units.[18] We found that, as with other competencies, SIBR performance followed a bell curve. For this reason, by the start of the third year we added a SIBR certification program: a skills training program in which permanent and rotating staff are evaluated through an in vivo observed structured clinical examination, typically with a charge nurse or physician as preceptor. A nurse, medical student, intern, or resident who demonstrates a series of specific high‐performance SIBR behaviors in 5 of 6 consecutive patients can achieve SIBR certification. In the first 2 years of this voluntary certification program, all daytime nursing staff and rotating interns have achieved certification, demonstrating interdisciplinary teamwork competence.
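The "5 of 6 consecutive patients" criterion can be expressed as a sliding‐window check over a trainee's observed patients. This is a sketch under assumptions: the text does not say whether the 6 patients must come from a single observation session, so the hypothetical `is_certified` function accepts any run of 6 consecutive observed patients, and it reduces each observation to a single pass/fail for the specified behaviors.

```python
def is_certified(observations: list[bool], window: int = 6, required: int = 5) -> bool:
    """Return True if any `window` consecutive observed patients include at least `required` passes.

    Each entry is True when the trainee showed all the specified high-performance
    SIBR behaviors for that patient (a hypothetical simplification).
    """
    if len(observations) < window:
        return False
    passes = sum(observations[:window])
    if passes >= required:
        return True
    for i in range(window, len(observations)):
        # Slide the window by one patient: add the newest observation, drop the oldest.
        passes += observations[i] - observations[i - window]
        if passes >= required:
            return True
    return False
```

A running count keeps the check linear in the number of observed patients instead of re‐summing each window.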

Unit‐Level Performance Reporting

Design

Hospital outcomes are determined on the clinical frontline. To manage unit outcomes effectively, unit leadership and staff need access to unit‐level performance reports.[5, 16] However, many hospitals still report performance at the level of the facility or service line, which limits the relevance of reports for the people who directly determine outcomes.

Implementation

For the first year, a data analyst was available to prepare and distribute unit‐level performance reports to unit leaders quarterly, including rates of in‐hospital mortality, blood stream infections, patient satisfaction, length of stay, and 30‐day readmissions. Preparation of these reports was labor intensive, requiring the analyst to acquire raw data from multiple data sources and to build the reports manually.
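A sketch of what the manual report‐building step amounts to, assuming the raw data have already been merged into one record per patient encounter. The `Encounter` fields, the calendar‐quarter grouping, and the function names are hypothetical. Blood stream infections and patient satisfaction are omitted because they would come from separate sources with their own denominators, and the 30‐day readmission rate uses patients discharged alive as its denominator, which is a common convention but an assumption here.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Encounter:
    # Hypothetical merged record: one row per patient encounter on a unit.
    unit: str
    discharge_date: date
    died_in_hospital: bool
    length_of_stay_days: float
    readmitted_within_30_days: bool


def quarter(d: date) -> str:
    """Label a date with its calendar quarter, e.g. 2011-Q1."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def unit_quarterly_report(encounters: list[Encounter]) -> dict[tuple[str, str], dict[str, float]]:
    """Group encounters by (unit, discharge quarter) and compute unit-level metrics."""
    groups: dict[tuple[str, str], list[Encounter]] = defaultdict(list)
    for e in encounters:
        groups[(e.unit, quarter(e.discharge_date))].append(e)

    report: dict[tuple[str, str], dict[str, float]] = {}
    for key, group in groups.items():
        n = len(group)
        survivors = [e for e in group if not e.died_in_hospital]
        report[key] = {
            "encounters": n,
            "in_hospital_mortality": (n - len(survivors)) / n,
            "mean_length_of_stay_days": sum(e.length_of_stay_days for e in group) / n,
            # Only patients discharged alive can be readmitted; NaN if no one survived.
            "readmission_30_day_rate": (
                sum(e.readmitted_within_30_days for e in survivors) / len(survivors)
                if survivors else float("nan")
            ),
        }
    return report
```

Once the merge step is automated, a function like this can regenerate the quarterly report without rebuilding it by hand.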

Results

In an analysis comparing outcomes for every patient spending at least 1 night on the unit in the year before and the year after implementation, we observed reductions in in‐hospital mortality and length of stay. Unadjusted in‐hospital mortality decreased from 2.3% to 1.1% (P=0.004), with no significant change in referrals to hospice (5.4% to 4.5%, P=0.176), and length of stay decreased from 5.0 to 4.5 days (P=0.001).[19] A complete report of these findings, including an analysis of concurrent control groups, is beyond the scope of this article; here we highlight the effect that the reduction in in‐hospital mortality had on ACU leadership and staff.
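For readers who want effect sizes, the reported unadjusted figures convert to absolute and relative changes as follows. These are computed from the rounded values given above, so they are approximate, and like the source figures they carry no risk adjustment:

$$
\begin{aligned}
\text{absolute mortality reduction} &= 2.3\% - 1.1\% = 1.2 \text{ percentage points} \\
\text{relative mortality reduction} &= \frac{2.3 - 1.1}{2.3} \approx 0.52 \quad (\approx 52\%) \\
\text{length-of-stay reduction} &= 5.0 - 4.5 = 0.5 \text{ days}, \qquad \frac{0.5}{5.0} = 10\%
\end{aligned}
$$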

Effects of the Change

Noting the apparent mortality reduction, ACU leadership encouraged permanent staff and rotating trainees to regard any unexpected death as a never event. Although this may seem self‐evident, before the ACU we had never been organized to reflect on that concept or to use routines to act on it. The unit defined an unexpected death as one in which the patient was not actively receiving comfort measures. At the monthly meet and greet, where ACU leadership brings the permanent staff and new rotating trainees together to introduce themselves by first name, the coleaders proposed that any unexpected death in the month ahead could represent a failure to recognize or respond to deterioration, to consider an alternative or under‐treated process, to transfer the patient to a higher level of care, or to deliver more timely and appropriate end‐of‐life care. It is our impression that this introspection was extraordinarily meaningful and would not have occurred without unit‐based teams, unit‐level performance data, and ACU leadership learning to use this rhetoric.

Unit‐Level Nurse and Physician Coleadership

Design

Effective leadership is a major driver of successful clinical microsystems.[20] The ACU is designed to be co‐led by a nurse unit manager and physician medical director. The leadership pair was charged simply with developing patient‐centered teams and ensuring the staff felt connected to the values of the organization and accountable to each other and to the outcomes of the unit.

Implementation

Nursing leadership and hospital executives influenced the selection of the physician medical director, which gave them a way to demonstrate support for the care model. Over the first 4 years, the physician medical director has been granted a 10% to 20% reduction in clinical duties to fulfill this charge. The leadership pair sets expectations for the ACU's code of conduct, standard operating procedures (eg, SIBR), and best‐practice protocols.

Results

The leadership pair explicitly tries to model the behaviors enumerated in the ACU's relational covenant, itself the product of a facilitated exercise the pair commissioned in the first year, in which the entire staff drafted and signed a document listing behaviors they wished to see from each other (see Supporting Information, Appendix 1, in the online version of this article). The physician medical director, along with the charge nurses, coaches staff and trainees seeking SIBR certification. Over the 4 years, the pair has introduced best‐practice protocols for glycemic control, venous thromboembolism prophylaxis, removal of idle venous and bladder catheters, and bedside goals‐of‐care conversations.

Effects of the Change

Where there had previously been no explicit code of conduct, standard operating procedures such as SIBR, or focused efforts to optimize unit outcomes, the coleadership pair fills a management gap. These coleaders play an essential role in building momentum for the structure and processes of the ACU. The leadership pair has also become a primary resource for intraorganizational spread of the ACU model to medical and surgical wards, as well as geriatric, long‐term acute, and intensive care units.

CHALLENGES

Challenges with implementing the ACU fell into 3 primary categories: (1) performing change management required for a successful launch, (2) solving logistics of maintaining unit‐based physician teams, and (3) training physicians and nurses to perform SIBR at a high level.

For change management, the leadership pair explained the rationale for the model to all staff in sufficient detail to launch the ACU. To build momentum for ACU routines and relationships, the physician leader and the nurse unit manager were both present on the unit daily for the first 100 days. As ACU operations became routine and clinicians developed competence, these leaders gradually reduced the time they spent on the unit.

Creating and maintaining unit‐based physician teams required shared understanding and coordination between on‐call hospital medicine physicians and the bed control office so that new admissions or transfers could be consistently assigned to unit‐based teams without adversely affecting patient flow. We found this challenge to be manageable once stakeholders accepted the rationale for the care model and worked out how to support it.

Building high‐performance SIBR across the unit, including competence among rotating trainees new to the model, requires the individualized assessment and feedback on which SIBR certification depends. We addressed this challenge by creating a SIBR train‐the‐trainer program (a list of observable high‐performance SIBR behaviors coupled with a short course on giving effective feedback to learners) and found that once the ACU had several nurse and physician SIBR trainers in the staffing mix every day, enough SIBR coaching expertise was available when needed.

CONCLUSION

Improving value and reliability in hospital care may require new models of care. The ACU is a hospital care model specifically designed to organize physicians, nurses, and allied health professionals into high‐functioning, unit‐based teams. It brings together standardized workflow, patient‐centered communication, quality‐safety checklists, best‐practice protocols, performance measurement, and progressive leadership. Our experience with the ACU suggests that hospital units can be reorganized as effective clinical microsystems in which a consistent group of unit professionals share time and space, a sense of purpose, a code of conduct, a shared mental model for teamwork, an interprofessional management structure, and a meaningful level of accountability to each other and to their patients.

Disclosures: Jason Stein, MD: grant support from the US Health Resources and Services Administration to support organizational implementation of the care model described; recipient of consulting fees and royalties for licensed intellectual property to support implementation of the care model described; founder and president of Centripital, a nonprofit provider of consulting services to hospital systems implementing the care model described. The terms of this arrangement have been reviewed and approved by Emory University in accordance with its conflict of interest policies. Liam Chadwick, PhD, and Diaz Clark, MS, RN: recipients of consulting fees through Centripital to support implementation of the care model described. Bryan W. Castle, MBA, RN: grant support from the US Health Resources and Services Administration to support organizational implementation of the care model described; recipient of consulting fees through Centripital to support implementation of the care model described. The authors report no other conflicts of interest.

1. Institute of Medicine. Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

2. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(3):759–769.

3. The end of the beginning: patient safety five years after “to err is human”. Health Aff (Millwood). 2004;Suppl Web Exclusives:W4‐534–545.

4. Clinical inertia. Ann Intern Med. 2001;135(9):825–834.

5. The four habits of high‐value health care organizations. N Engl J Med. 2011;365(22):2045–2047.

6. Using a Malcolm Baldrige framework to understand high‐performing clinical microsystems. Qual Saf Health Care. 2007;16(5):334–341.

7. Relational coordination among nurses and other providers: impact on the quality of patient care. J Nurs Manag. 2010;18(8):926–937.

8. Unit‐based care teams and the frequency and quality of physician‐nurse communications. Arch Pediatr Adolesc Med. 2011;165(5):424–428.

9. Reducing cardiac arrests in the acute admissions unit: a quality improvement journey. BMJ Qual Saf. 2013;22(12):1025–1031.

10. Evolving practice of hospital medicine and its impact on hospital throughput and efficiencies. J Hosp Med. 2012;7(8):649–654.

11. Improvement projects led by unit‐based teams of nurse, physician, and quality leaders reduce infections, lower costs, improve patient satisfaction, and nurse‐physician communication. AHRQ Health Care Innovations Exchange. Available at: https://innovations.ahrq.gov/profiles/improvement-projects-led-unit-based-teams-nurse-physician-and-quality-leaders-reduce. Accessed May 4, 2014.

12. The daily goals communication sheet: a simple and novel tool for improved communication and care. Jt Comm J Qual Patient Saf. 2008;34(10):608–613, 561.

13. Implementation of a mandatory checklist of protocols and objectives improves compliance with a wide range of evidence‐based intensive care unit practices. Crit Care Med. 2009;37(10):2775–2781.

14. Structured interdisciplinary rounds in a medical teaching unit: improving patient safety. Arch Intern Med. 2011;171(7):678–684.

15. Improving teamwork: impact of structured interdisciplinary rounds on a hospitalist unit. J Hosp Med. 2011;6(2):88–93.

16. Integrating patient safety into the clinical microsystem. Qual Saf Health Care. 2004;13(suppl 2):ii34–ii38.

17. Collaborative‐cross checking to enhance resilience. Cogn Tech Work. 2007;9:155–162.

18. Microsystems in health care: Part 1. Learning from high‐performing front‐line clinical units. Jt Comm J Qual Improv. 2002;28(9):472–493.

19. Mortality reduction associated with structure process, and management redesign of a hospital medicine unit. J Hosp Med. 2012;7(suppl 2):115.

20. Microsystems in health care: part 5. How leaders are leading. Jt Comm J Qual Saf. 2003;29(6):297–308.

- Institute of Medicine. Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- , , . The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(3):759–769.

- . The end of the beginning: patient safety five years after “to err is human”. Health Aff (Millwood). 2004;Suppl Web Exclusives:W4‐534–545.

- , , , et al. Clinical inertia. Ann Intern Med. 2001;135(9):825–834.

- . The four habits of high‐value health care organizations. N Engl J Med. 2011;365(22):2045–2047.

- , , , . Using a Malcolm Baldrige framework to understand high‐performing clinical microsystems. Qual Saf Health Care. 2007;16(5):334–341.

- , , , . Relational coordination among nurses and other providers: impact on the quality of patient care. J Nurs Manag. 2010;18(8):926–937.

- , , , et al. Unit‐based care teams and the frequency and quality of physician‐nurse communications. Arch Pediatr Adolesc Med. 2011;165(5):424–428.

- , , , et al. Reducing cardiac arrests in the acute admissions unit: a quality improvement journey. BMJ Qual Saf. 2013;22(12):1025–1031.

- , , , et al. Evolving practice of hospital medicine and its impact on hospital throughput and efficiencies. J Hosp Med. 2012;7(8):649–654.

- , . Improvement projects led by unit‐based teams of nurse, physician, and quality leaders reduce infections, lower costs, improve patient satisfaction, and nurse‐physician communication. AHRQ Health Care Innovations Exchange. Available at: https://innovations.ahrq.gov/profiles/improvement‐projects‐led‐unit‐based‐teams‐nurse‐physician‐and‐quality‐leaders‐reduce. Accessed May 4, 2014.


Hospital High‐Value Care Program

With a United States medical system that spends as much as $750 billion each year on care that does not result in improved health outcomes,[1] many policy initiatives, including the Centers for Medicare and Medicaid Services' Value‐Based Purchasing program, seek to realign hospitals' financial incentives from a focus on production to one on value (quality divided by cost).[2, 3] Professional organizations now deem resource stewardship an ethical responsibility of professionalism,[4, 5] and campaigns such as the American Board of Internal Medicine (ABIM) Foundation's Choosing Wisely effort and the American College of Physicians' High‐Value Care platform are calling on frontline clinicians to address unnecessary and wasteful services.[6, 7]

Despite these pressures and initiatives, most physicians lack the knowledge and tools necessary to prioritize the delivery of their own healthcare services according to value.[8, 9, 10] Hospital medicine physicians are unaware of the costs associated with the interventions they order,[10] and the majority of medical training programs lack curricula focused on healthcare costs,[11] creating a large gap between physicians' perceived, desired, and actual knowledge related to costs.[12] Novel frameworks and frontline physician engagement are required if clinicians are to improve the value of the care they deliver.

We describe 1 of our first steps at the University of California, San Francisco (UCSF) to promote high‐value care (HVC) delivery: the creation of an HVC program, led by clinicians and administrators, that focuses on identifying and addressing wasteful practices within our hospitalist group. The program aims to (1) use financial and clinical data to identify areas with clear evidence of waste in the hospital, (2) promote evidence‐based interventions that improve both quality of care and value, and (3) pair interventions with evidence‐based cost awareness education to drive culture change. Our experience and inaugural projects provide a model of the key features, inherent challenges, and lessons learned, which may help inform similar efforts.

METHODS

In March 2012, we launched an HVC program within our Division of Hospital Medicine at UCSF Medical Center, a 600‐bed urban academic medical center. During the 2013 academic year, our division included 45 physicians. The medicine service, composed of 8 teaching medical ward teams (1 attending, 1 resident, 2 interns, and a variable number of medical students) and 1 nonteaching medical ward team (1 attending), admitted 4700 patients that year.

Organizational Framework

The HVC program is co‐led by a UCSF hospitalist (C.M.) and the administrator of the Division of Hospital Medicine (M.N.). Team members include hospitalists, hospital medicine fellows, resident physicians, pharmacists, project coordinators, and other administrators. The team meets in person for 1 hour every month. Project teams and ad hoc subcommittee groups often convene between meetings.

Our HVC program was placed within the infrastructure, and under the leadership, of our already established quality improvement (QI) program at UCSF. Our Division of Hospital Medicine Director of Quality and Safety (M.M.) thus oversees the QI, patient safety, patient experience, and high‐value care efforts.

Most of the HVC program's costs are personnel costs. The physician leader (15% effort) is funded by the Division of Hospital Medicine, whereas the administrator is cofunded by the division and the medical center (largely through her dual roles as division administrator and service line director). An administrative assistant within the division is also assigned to help with administrative tasks. Additional data gathering and project support come from existing medical center QI infrastructure, the decision support services unit, and UCSF's new Center for Healthcare Value. Other ancillary costs for our projects have included publicity, data analytics, and information technology infrastructure. We estimate that the program costs approximately $50,000 to $75,000 annually.

Framework for Identifying Target Projects

Robust Analysis of Costs

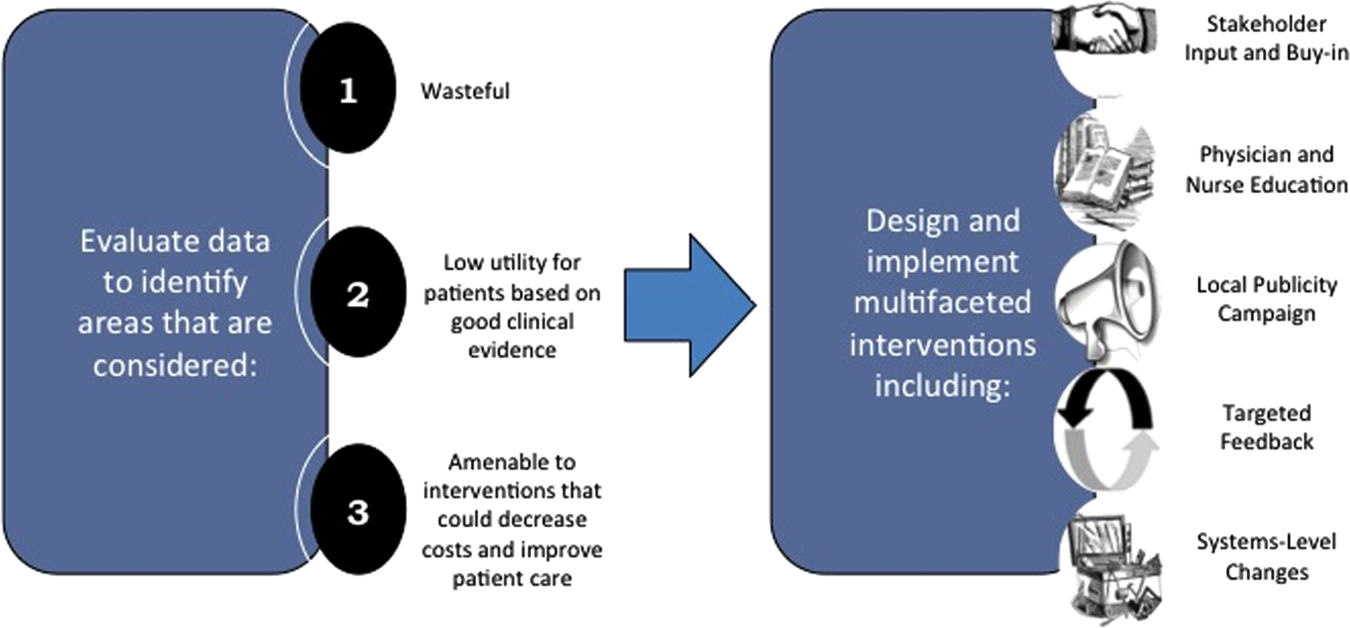

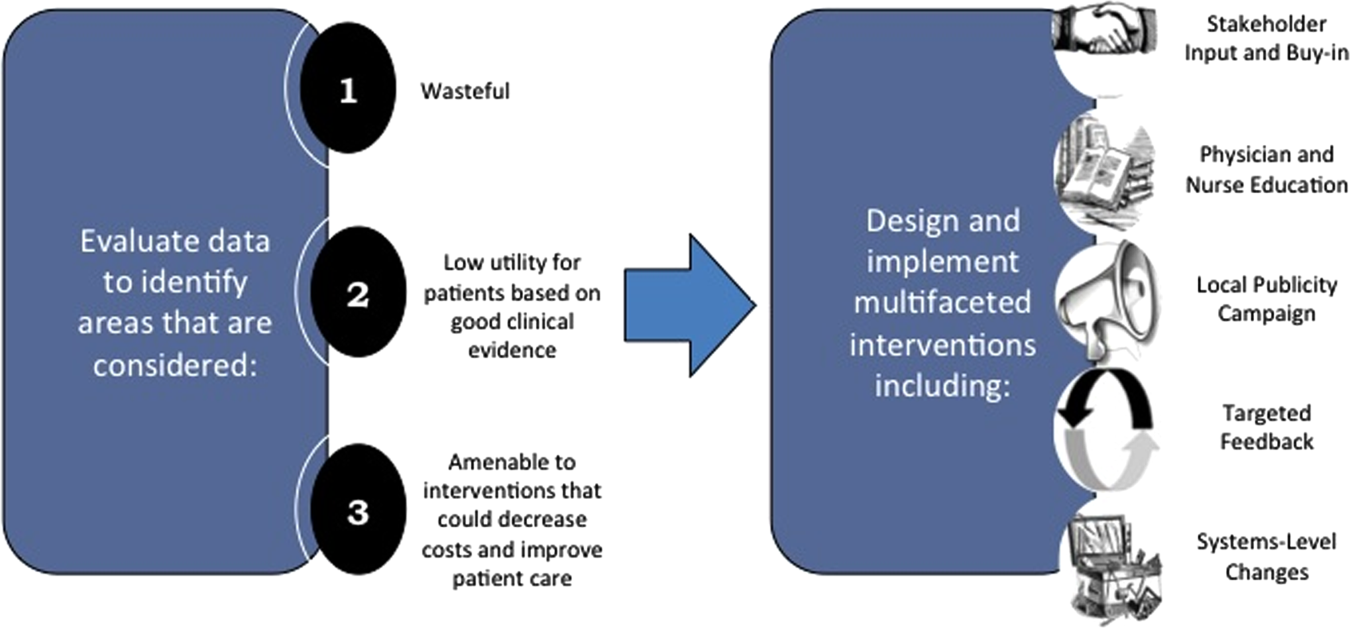

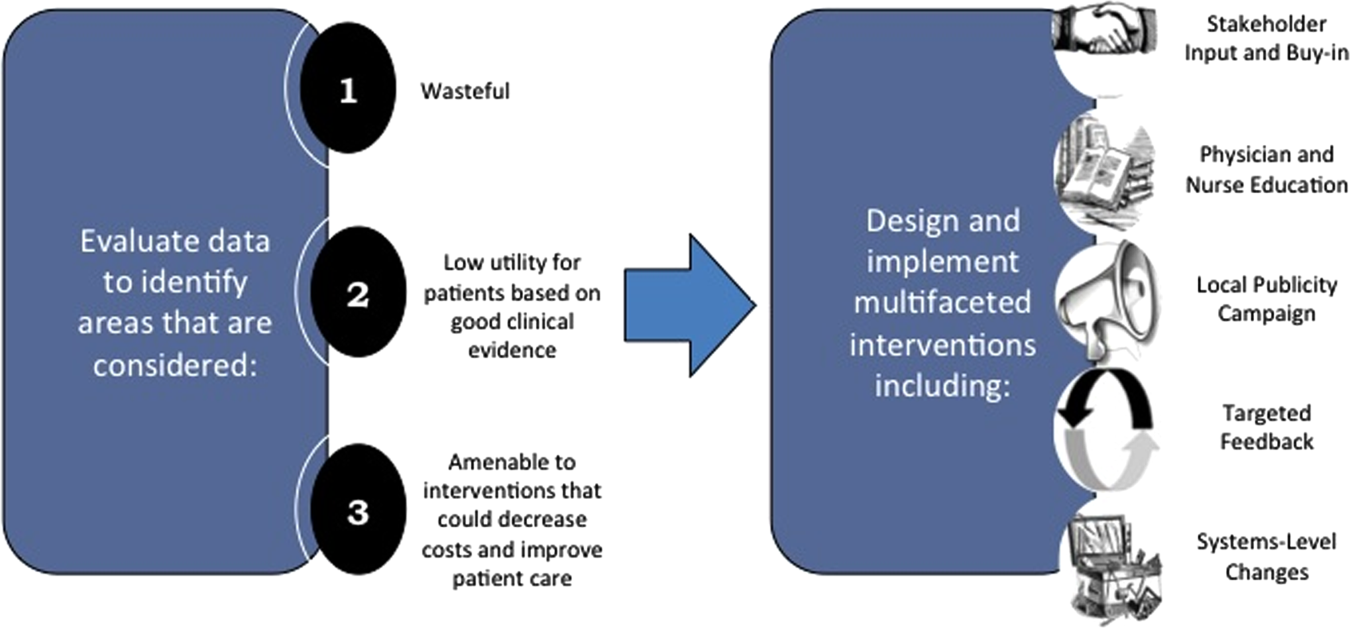

We created a framework for identifying, designing, and promoting projects specifically aimed at improving healthcare value (Figure 1). Financial data were used to identify areas with clear evidence of waste in the hospital, that is, areas of high cost with no corresponding benefit in health outcomes. We focused particularly on obtaining cost and billing data for our medical service, which revealed potential targets for improving value. For example, in 2011, the Division of Hospital Medicine spent more than $1 million in direct costs on the administration of nebulized bronchodilator therapies (nebs) to nonintensive care unit patients on the medical service.[13] These high costs, exposed by billing data, were thought to reflect potentially unnecessary testing and/or procedures. Not every area of high cost was deemed a target for intervention. For example, the use of recombinant factor VIII appeared to be a necessary expenditure (over $1 million per year) for our patients with hemophilia. Although our efforts focused on reducing waste, healthcare value can also be increased by improving the delivery of high‐value services.

Recognized Benefits in Quality of Care

The program also evaluated the likely impact of cost‐reduction efforts on the quality of care, holding each proposal to a high standard of current evidence. Although value can be improved by interventions that decrease costs while remaining quality neutral, our group chose to focus first on projects that would simultaneously improve quality and decrease costs. We felt that this win‐win strategy would help obtain buy‐in from clinicians weary of prior cost‐cutting programs. For example, we pursued interventions aimed at reducing inappropriate gastric stress ulcer prophylaxis, which had the potential both to cut costs and to reduce the risks of hospital‐acquired pneumonia and Clostridium difficile infections.[14, 15] All proposed HVC targets were vetted through a review of the literature and published guidelines. In general, our initial projects had to be strongly supported by high‐quality studies, preferably meta‐analyses or systematic reviews, that demonstrated the safety of our recommended changes. We also reviewed the literature with content experts; for example, we met with faculty pulmonologists to discuss the evidence supporting the use of inhalers instead of nebulizers in adults with obstructive pulmonary disease. The goals of our projects were chosen by our HVC committee, based on an analysis of our baseline data and the perceived potential effects of our proposed interventions.
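The two screening criteria described above (high cost without outcome benefit, and a change that is both quality‐improving and well supported by evidence) can be expressed as a simple filter. This is a minimal Python sketch, not the program's actual tooling: the `CostArea` fields, the `screen_targets` function, the $500,000 threshold, and the true/false judgments attached to each example are hypothetical, drawn loosely from the nebulizer and factor VIII examples in the text.

```python
from dataclasses import dataclass


@dataclass
class CostArea:
    """One spending category pulled from cost and billing data (hypothetical schema)."""
    name: str
    annual_direct_cost: float  # US dollars per year
    outcome_benefit: bool      # does the spending clearly improve health outcomes?
    improves_quality: bool     # would reducing it also improve quality ("win-win")?
    strong_evidence: bool      # is the change backed by high-quality studies?


def screen_targets(areas, cost_threshold):
    """Return candidate HVC targets, highest annual cost first.

    An area qualifies only if it is high cost, shows no clear outcome
    benefit, and reducing it is both quality-improving and evidence-based.
    """
    candidates = [
        a for a in areas
        if a.annual_direct_cost >= cost_threshold
        and not a.outcome_benefit
        and a.improves_quality
        and a.strong_evidence
    ]
    return sorted(candidates, key=lambda a: a.annual_direct_cost, reverse=True)


# Illustrative inputs; the flags are our reading of the text's examples, not program data.
areas = [
    CostArea("Nebulized bronchodilators, non-ICU", 1_000_000, False, True, True),
    CostArea("Recombinant factor VIII, hemophilia", 1_000_000, True, False, True),
]
targets = screen_targets(areas, cost_threshold=500_000)
print([a.name for a in targets])  # ['Nebulized bronchodilators, non-ICU']
```

Keeping cost and clinical benefit as separate checks mirrors the point that a high‐cost area such as factor VIII is not automatically a target.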

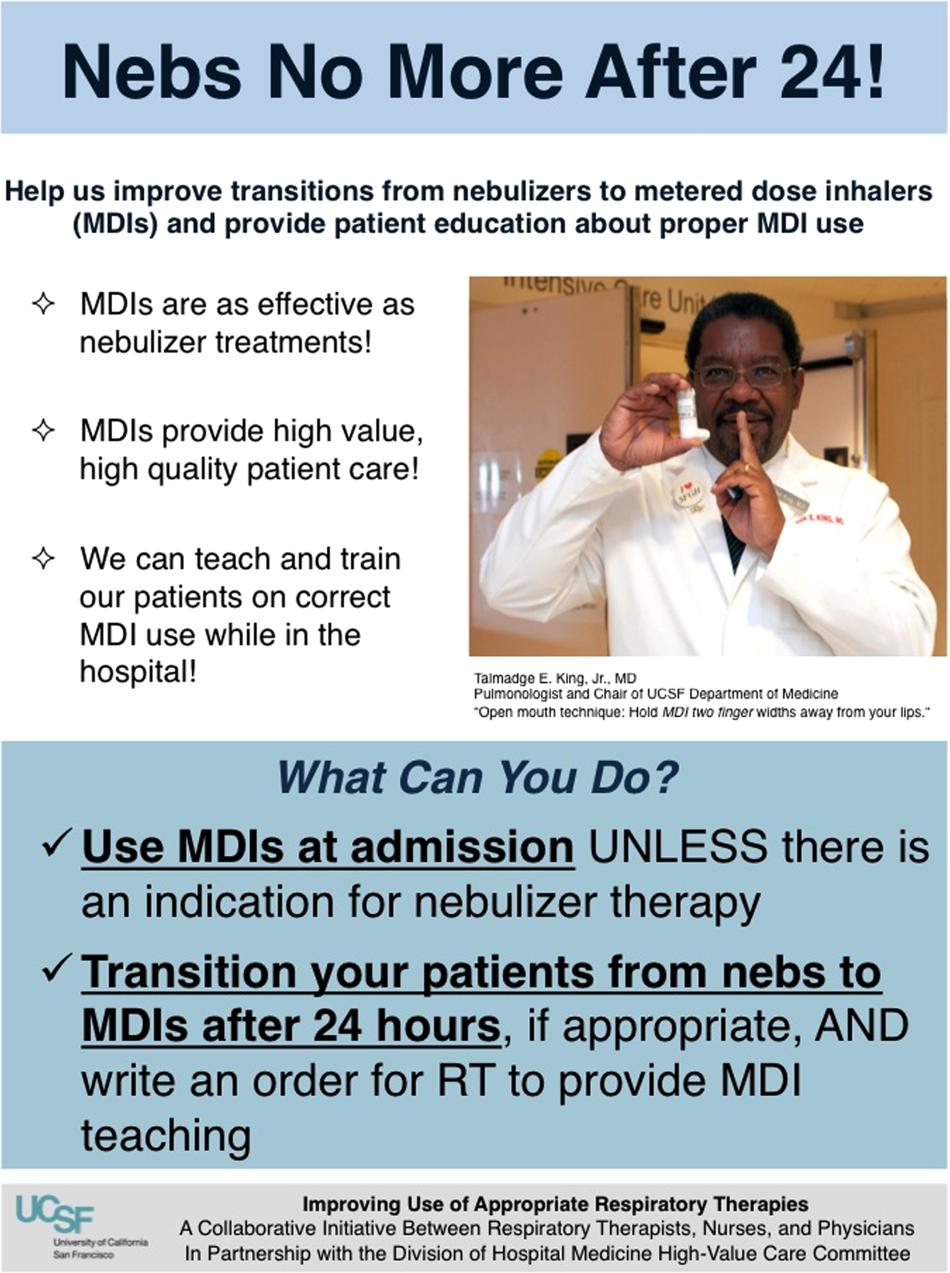

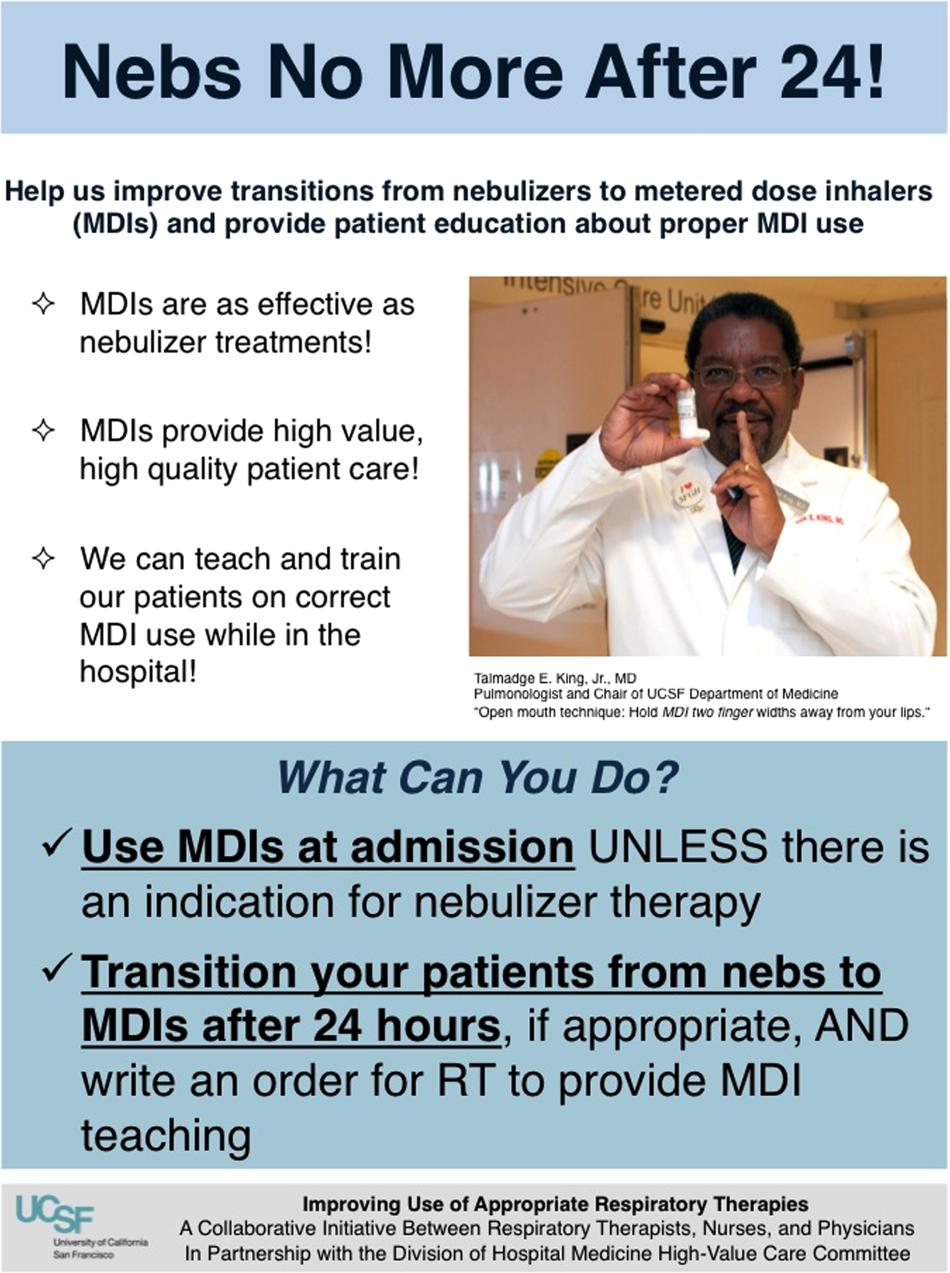

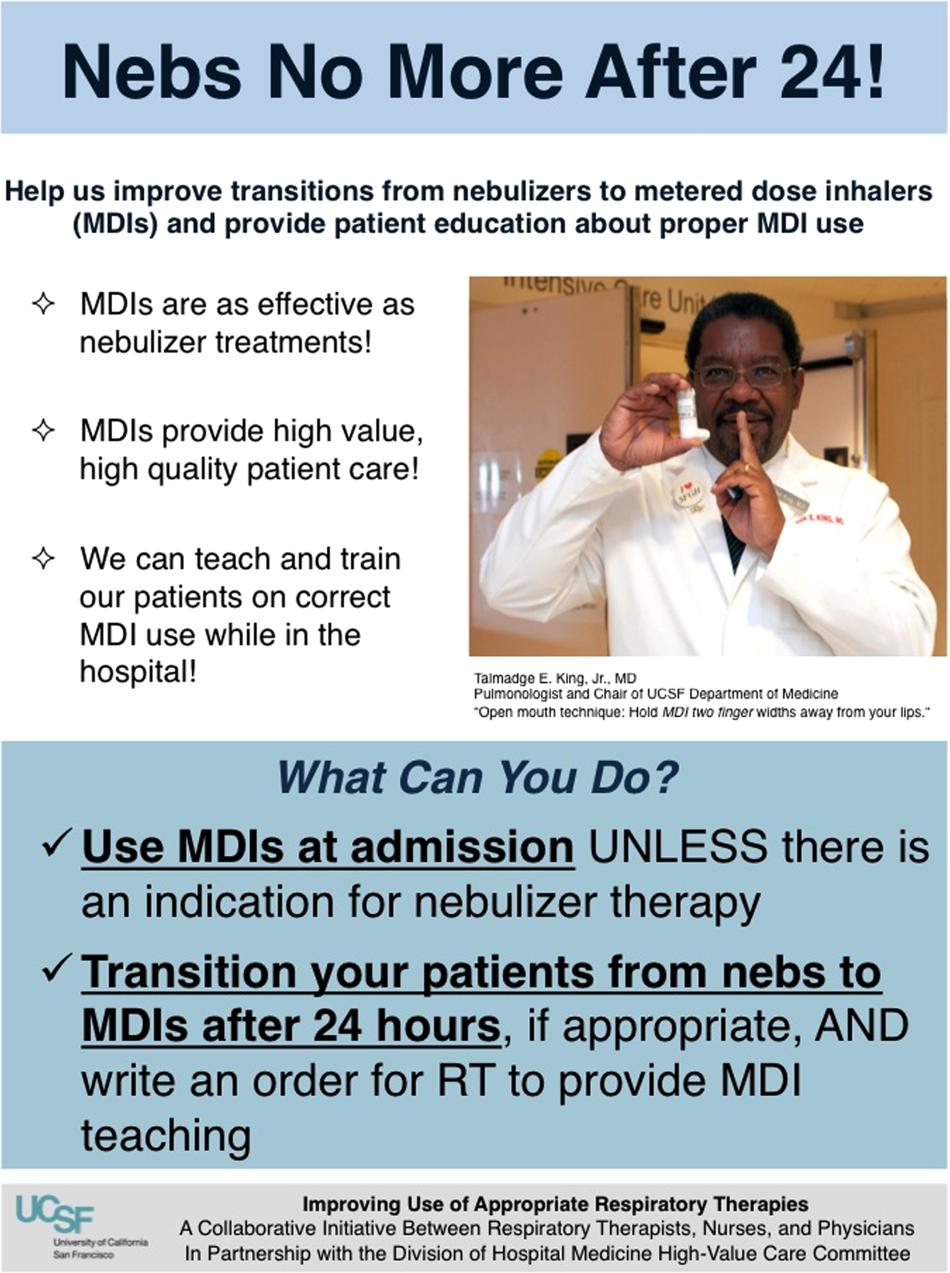

Educational Intervention

Last, we paired interventions with evidence‐based cost awareness education to drive culture change. At UCSF we have an ongoing longitudinal cost‐awareness curriculum for residents, which has been described previously.[16] We took advantage of this educational forum to address gaps in clinician knowledge related to the targeted areas. When launching the initiative to decrease unnecessary inpatient nebulizer use and improve transitions to inhalers, we used the chronic obstructive pulmonary disease case in the cost‐awareness series. Doing so allowed us both to review the evidence for the effectiveness of inhalers and to introduce our Nebs No More After 24 campaign, which sought to transition adult inpatients with obstructive pulmonary symptoms from nebs to inhalers within 24 hours of admission.[13]
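The campaign's rule (switch from nebs to inhalers within 24 hours of admission) is concrete enough to audit. The Python sketch below is a hypothetical adherence check, not a tool the program describes; the function names and the input format (an admission time plus a list of neb administration times) are assumptions.

```python
from datetime import datetime, timedelta

# "Nebs No More After 24": nebs should stop within 24 hours of admission.
NEB_WINDOW = timedelta(hours=24)


def late_nebs(admit_time, neb_times):
    """Return neb administrations given more than 24 hours after admission."""
    return [t for t in neb_times if t - admit_time > NEB_WINDOW]


def needs_inhaler_transition(admit_time, neb_times):
    """Flag a patient who is still receiving nebs after the 24-hour window."""
    return len(late_nebs(admit_time, neb_times)) > 0


# Example: admitted at 08:00; nebs given at +2 h, +10 h, and +30 h.
admit = datetime(2013, 1, 1, 8, 0)
nebs = [admit + timedelta(hours=h) for h in (2, 10, 30)]
print(needs_inhaler_transition(admit, nebs))  # True (the +30 h neb is late)
```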

Intervention Strategy

Our general approach has been to design and implement multifaceted interventions, adapted from previous QI literature (Figure 1).[17] Given the importance of frontline clinician engagement to successful project implementation,[18, 19, 20] our interventions are physician‐driven and are vetted by a large group of clinicians prior to launch. The HVC program also explicitly seeks stakeholder input, perspective, and buy‐in prior to implementation. For example, we involved respiratory therapists (RTs) in the design of the Nebs No More After 24 project, thus ensuring that the interventions fit within their workflow and align with their care‐delivery goals.

Local publicity campaigns provide education and reminders for clinicians. Posters, such as the Nebs No More After 24 poster (Figure 2), were hung in physician, nursing, and RT work areas. Pens featuring the catchphrase Nebs No More After 24 were distributed to clinicians.

In addition to presentations to residents through the UCSF cost awareness curriculum, educational presentations were also delivered to attending physicians and to other allied members of the healthcare team (eg, nurses, RTs) during regularly scheduled staff meetings.

The metrics for each project were monitored regularly, and targeted feedback was provided to clinicians. For the Nebs No More After 24 campaign, the number of nebs delivered on the target floor was presented each month to resident physicians at the cost awareness conference and to attending hospitalists in the monthly QI newsletter. This academic year, transfusion and telemetry data are being shared through the same channels.
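Monthly feedback of this kind compares each project's metric with its baseline and its goal (for example, Table 1's target of reducing unnecessary nebs by more than 15%). A minimal Python sketch of that comparison follows; the function names and the monthly counts in the example are hypothetical, not program data, and treating a reduction equal to the target as "met" is our assumption.

```python
def relative_reduction(baseline, current):
    """Fractional reduction from baseline; 0.25 means 25% lower than baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def feedback_line(label, baseline, current, goal):
    """One line of clinician feedback: current value, reduction, and goal status."""
    r = relative_reduction(baseline, current)
    # Assumption: reaching the target reduction counts as meeting the goal.
    status = "goal met" if r >= goal else "below goal"
    return (f"{label}: {current} vs baseline {baseline} "
            f"({r:.0%} reduction; target {goal:.0%}, {status})")


# Hypothetical monthly neb counts on one floor against a 15% reduction goal.
print(feedback_line("Month 3", baseline=400, current=300, goal=0.15))
# Month 3: 300 vs baseline 400 (25% reduction; target 15%, goal met)
```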

Stakeholder recruitment, education, and promotional campaigns are important to program launches, but sustaining projects over the long term may require system changes. We have pursued changes in the computerized provider order entry (CPOE) system, such as removing nebs from the admission order set and setting a default duration for certain telemetry orders. Systems‐level interventions, although more difficult to achieve, play an important role in creating enduring change when paired with educational interventions.
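A default duration flips a telemetry order from "continue until someone stops it" to "stop unless someone renews it." The Python sketch below models only that idea; the `TelemetryOrder` class, its `renew` method, and the 48‐hour default are hypothetical, since the text specifies neither the CPOE implementation nor the default duration used.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical default; the actual CPOE default is not stated in the text.
DEFAULT_TELEMETRY_DURATION = timedelta(hours=48)


@dataclass
class TelemetryOrder:
    start: datetime
    duration: timedelta = DEFAULT_TELEMETRY_DURATION

    def expires_at(self) -> datetime:
        return self.start + self.duration

    def is_active(self, now: datetime) -> bool:
        """Monitoring continues only until the default duration runs out."""
        return self.start <= now < self.expires_at()

    def renew(self, now: datetime, extra: timedelta = DEFAULT_TELEMETRY_DURATION) -> None:
        """An active clinician decision extends monitoring by `extra` from `now`."""
        self.duration = (now - self.start) + extra


start = datetime(2013, 1, 1, 8, 0)
order = TelemetryOrder(start)
print(order.is_active(start + timedelta(hours=49)))  # False: lapsed without renewal
```

The design choice is that continued monitoring requires an explicit action, whereas discontinuation happens by default.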

RESULTS

During our first 2 years, we initiated ongoing projects directed at 6 major targets (Table 1). Our flagship project, Nebs No More After 24, reduced nebulizer rates by more than 50% on a high‐acuity medical floor, as previously published.[13] We created a financial model that primarily accounted for RT time and pharmaceutical costs and estimated savings of approximately $250,000 annually on this single medical ward (see Supporting Information, Table 1, in the online version of this article).[13]
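The published model's inputs are not reproduced here, so the Python sketch below shows only the structure such a model could take when it counts RT time and drug cost per avoided neb. Every parameter name and value in the example is a hypothetical placeholder, not a figure from the program, and the result is not meant to reproduce the $250,000 estimate. For scale, the baseline row of Table 1 (about $1 million across roughly 25,000 nebs) implies an average direct cost of about $40 per neb.

```python
def annual_savings(nebs_avoided_per_year, rt_minutes_per_neb, rt_cost_per_minute,
                   drug_cost_per_neb, added_inhaler_cost_per_year=0.0):
    """Estimated yearly savings from avoided nebs (hypothetical model structure).

    Each avoided neb saves respiratory therapist time plus the drug cost;
    any added inhaler (MDI) cost is subtracted once per year.
    """
    saved_per_neb = rt_minutes_per_neb * rt_cost_per_minute + drug_cost_per_neb
    return nebs_avoided_per_year * saved_per_neb - added_inhaler_cost_per_year


# Average direct cost per neb implied by the baseline row of Table 1.
print(1_000_000 / 25_000)  # 40.0

# Placeholder inputs, chosen only to show the arithmetic.
print(annual_savings(1000, rt_minutes_per_neb=10, rt_cost_per_minute=1.0,
                     drug_cost_per_neb=5.0, added_inhaler_cost_per_year=2000))  # 13000.0
```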

| High‐Value Care Projects | Relevant Baseline Data | Goals of Project | Strategies |
|---|---|---|---|
| Nebs No More After 24: Improving appropriate use of respiratory services | The medicine service spent $1 million in direct costs on approximately 25,000 nebs for non‐ICU inpatients. | Reduce unnecessary nebs >15% over 9 months. | Removed nebs from admission order set. |
| | | Improve transitions from nebs to MDIs. | Enlisted RTs and RNs to help with MDI teaching for patients. |
| | | Improve patient self‐administration of MDIs. | Implemented an educational program for medicine physicians. |
| | | | Created local publicity: posters, flyers, and pens. |
| | | | Provided data feedback to providers. |
| | | | Next step: Introduce a CPOE‐linked intervention. |
| Improving use of stress ulcer prophylaxis (SUP) | 77% of ICU patients on acid‐suppressive therapy; 31% of these patients did not meet criteria for appropriate prophylaxis. | Reduce overuse and inappropriate use of SUP. | A team of pharmacists, nurses, and physicians developed targeted and evidence‐based UCSF guidelines on use of SUP. |
| | | | Developed and implemented a pharmacist‐led intervention to reduce inappropriate SUP in the ICUs that included reminders on admission and discharge from ICU; an education and awareness initiative for prescribers; ICU and service champions; and culture change. |
| | | | Next step: Incorporate indications in CPOE and work with ICU to incorporate appropriate GI prophylaxis as part of the standard ICU care bundle. |
| Blood utilization stewardship | 30% of transfusions on the hospital medicine service are provided to patients with a hemoglobin >8 g/dL. | Decrease units of blood transfused for a hemoglobin >8.0 g/dL by 25%. | Launched an educational campaign for attending and resident physicians. |
| | | | Monthly feedback to residents and attending physicians. |
| | | | Next step: Introduce a decision support system in the CPOE for blood transfusion orders in patients with most recent hemoglobin level >8 g/dL. |
| Improving telemetry utilization | 44% of monitored inpatients on the medical service (with length of stay >48 hours) remain on telemetry until discharge. | Decrease by 15% the number of patients (with length of stay >48 hours) who remain on telemetry until discharge. | Implemented an educational campaign for nursing groups and the medicine and cardiology housestaff. |

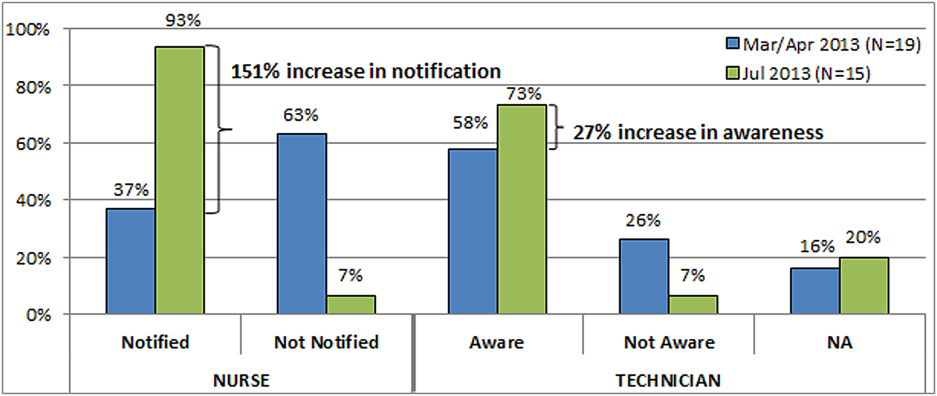

| | | | Launched a messaging campaign consisting of posters and pocket cards on appropriate telemetry use. |