Large-scale implementation of the I-PASS handover system

Title: Large-scale implementation of the I-PASS handover system at an academic medical center

Clinical Question: Can a system-wide I-PASS handover system be implemented effectively?

Background: Handovers (also referred to as “handoffs”) in patient care are ubiquitous and increasingly frequent, especially in academic medicine. Errors in handovers are associated with poor patient outcomes. I-PASS (Illness Severity, Patient Summary, Action List, Situational Awareness, Synthesis by Receiver) is a handover system thought to improve the efficiency and accuracy of handovers; however, system-wide rollout within a large academic hospital remains daunting.

Setting: Academic medical center.

Synopsis: The authors recount a 3-year system-wide I-PASS implementation at their 999-bed academic medical center. Effectiveness was measured through surveys and direct observation. Postimplementation surveys showed a generally positive response to the implementation and training processes. Direct observation over 8 months was used to assess adoption of and adherence to the handover method; results showed improvement across all components of the I-PASS model, although the synthesis component consistently scored lowest. The authors note that this is an ongoing project and plan future studies to evaluate its effect on quality and safety measures.

Bottom Line: Implementing a system-wide handover change process is achievable, but the new process must be incorporated into organizational culture to ensure continued use.

Citation: Shahian DM, McEachern K, Rossi L, et al. Large-scale implementation of the I-PASS handover system at an academic medical center. BMJ Qual Saf. 2017; doi: 10.1136/bmjqs-2016-006195.

Dr. Rankin is a hospitalist and director of the family medicine residency inpatient service at the University of New Mexico.

CCDSSs to prevent VTE

Title: Use of computerized clinical decision support systems decreases venous thromboembolic events in surgical patients

Clinical Question: Do computerized clinical decision support systems (CCDSSs) decrease the risk of venous thromboembolism (VTE) in surgical patients?

Background: VTE remains the leading preventable cause of death in hospitalized patients. Although multiple tools are available to stratify VTE risk, they are used inconsistently or incorrectly. It is unclear whether CCDSSs help prevent VTE compared with standard care.

Study Design: Retrospective systematic review and meta-analysis.

Setting: Of 188 studies initially screened, 11 were included.

Synopsis: Multiple studies relevant to the topic were reviewed; only studies that used an electronic medical record (EMR)–based tool to increase the rate of appropriate VTE prophylaxis were included. The primary outcomes were the rate of appropriate VTE prophylaxis and the rate of VTE events. A total of 156,366 patients were analyzed, of whom 104,241 (67%) received a CCDSS intervention and 52,125 (33%) received standard care (physician judgment and discretion). CCDSS use was associated with a significant increase in appropriate ordering of VTE prophylaxis (odds ratio, 2.35; 95% confidence interval, 1.78-3.10; P less than .001) and a significant decrease in the risk of VTE events (risk ratio, 0.78; 95% CI, 0.72-0.85; P less than .001). The major limitation of this study is that it did not evaluate adverse events resulting from VTE prophylaxis, such as bleeding, which may have been more frequent in the CCDSS group.
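As a quick arithmetic check, the Python sketch below recomputes each arm's share of the pooled patients from the counts quoted in the synopsis and restates the two pooled ratios as percent changes. The helper names are illustrative only and do not come from the meta-analysis. Note the distinction the comments draw: for a risk ratio, RR - 1 is a change in risk, whereas for an odds ratio, OR - 1 is a change in odds, not in risk.

```python
# Counts as quoted in the CCDSS synopsis.
total = 156_366
ccdss = 104_241
standard = 52_125


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


def percent_change_from_ratio(ratio: float) -> float:
    """Express a ratio as a percent change versus the comparator.

    For a risk ratio this is a change in risk; for an odds ratio it is a
    change in odds only.
    """
    return round(100 * (ratio - 1), 1)


# The two arms should account for every analyzed patient.
assert ccdss + standard == total

print(pct(ccdss, total))                # share of patients in the CCDSS arm
print(pct(standard, total))             # share of patients in the standard-care arm
print(percent_change_from_ratio(2.35))  # OR for appropriate prophylaxis: change in odds
print(percent_change_from_ratio(0.78))  # RR for VTE events: change in risk
```

With these counts the CCDSS arm is about 66.7% of patients and the standard-care arm about 33.3%; an RR of 0.78 corresponds to a 22% relative reduction in VTE risk, while the OR of 2.35 describes 135% higher odds of appropriate prophylaxis.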

Bottom Line: CCDSSs increase the proportion of surgical patients who are prescribed adequate VTE prophylaxis and are associated with a reduction in VTE events.

Citation: Borab ZM, Lanni MA, Tecce MG, Pannucci CJ, Fischer JP. Use of computerized clinical decision support systems to prevent venous thromboembolism in surgical patients: A systematic review and meta-analysis. JAMA Surg. 2017; doi: 10.1001/jamasurg.2017.0131.

Dr. Mayasy is assistant professor in the department of hospital medicine at the University of New Mexico.

Title: Use of computerized clinical decision support systems decreases venous thromboembolic events in surgical patients

Clinical Question: Do computerized clinical decision support systems (CCDSSs) decrease the risk of venous thromboembolism (VTE) in surgical patients?

Background: VTE remains the leading preventable cause of death in the hospital. Despite multiple tools that are available to stratify risk of VTE, they are not used uniformly or are used incorrectly. It is unclear whether CCDSSs help prevent VTE compared to standard care.

Study Design: Retrospective systematic review and meta-analysis.

Setting: 188 studies initially screened, 11 studies were included.

Synopsis: Multiple studies relevant to the topic were reviewed; only studies that used an electronic medical record (EMR)–based tool to augment the rate of appropriate prophylaxis of VTE were included. Primary outcomes assessed were rate of appropriate prophylaxis for VTE and rate of VTE events. A total of 156,366 patients were analyzed, of which 104,241 (66%) received intervention with CCDSSs and 52,125 (33%) received standard care (physician judgment and discretion). The use of CCDSSs was associated with a significant increase in the rate of appropriate ordering of prophylaxis for VTE (odds ratio, 2.35; 95% confidence interval, 1.78-3.10; P less than .001) and a significant decrease in the risk of VTE events (risk ratio, 0.78; 95% CI, 0.72-0.85; P less than .001). The major limitation of this study is that it did not evaluate the number of adverse events as a result of VTE prophylaxis, such as bleeding, which may have been significantly increased in the CCDSS group.

Bottom Line: The use of CCDSSs increases the proportion of surgical patients who are prescribed adequate prophylaxis for VTE and correlates with a reduction in VTE events.

Citation: Borab ZM, Lanni MA, Tecce MG, Pannucci CJ, Fischer JP. Use of computerized clinical decision support systems to prevent venous thromboembolism in surgical patients: A systematic review and meta-analysis. JAMA Surg. 2017; doi: 10.1001/jamasurg.2017.0131.

Dr. Mayasy is assistant professor in the department of hospital medicine at the University of New Mexico.

Title: Use of computerized clinical decision support systems decreases venous thromboembolic events in surgical patients

Clinical Question: Do computerized clinical decision support systems (CCDSSs) decrease the risk of venous thromboembolism (VTE) in surgical patients?

Background: VTE remains the leading preventable cause of death in the hospital. Despite multiple tools that are available to stratify risk of VTE, they are not used uniformly or are used incorrectly. It is unclear whether CCDSSs help prevent VTE compared to standard care.

Study Design: Retrospective systematic review and meta-analysis.

Setting: 188 studies initially screened, 11 studies were included.

Synopsis: Multiple studies relevant to the topic were reviewed; only studies that used an electronic medical record (EMR)–based tool to augment the rate of appropriate prophylaxis of VTE were included. Primary outcomes assessed were rate of appropriate prophylaxis for VTE and rate of VTE events. A total of 156,366 patients were analyzed, of which 104,241 (66%) received intervention with CCDSSs and 52,125 (33%) received standard care (physician judgment and discretion). The use of CCDSSs was associated with a significant increase in the rate of appropriate ordering of prophylaxis for VTE (odds ratio, 2.35; 95% confidence interval, 1.78-3.10; P less than .001) and a significant decrease in the risk of VTE events (risk ratio, 0.78; 95% CI, 0.72-0.85; P less than .001). The major limitation of this study is that it did not evaluate the number of adverse events as a result of VTE prophylaxis, such as bleeding, which may have been significantly increased in the CCDSS group.

Bottom Line: The use of CCDSSs increases the proportion of surgical patients who are prescribed adequate prophylaxis for VTE and correlates with a reduction in VTE events.

Citation: Borab ZM, Lanni MA, Tecce MG, Pannucci CJ, Fischer JP. Use of computerized clinical decision support systems to prevent venous thromboembolism in surgical patients: A systematic review and meta-analysis. JAMA Surg. 2017; doi: 10.1001/jamasurg.2017.0131.

Dr. Mayasy is assistant professor in the department of hospital medicine at the University of New Mexico.

Rivaroxaban or aspirin for extended treatment of VTE

TITLE: Low-dose or full-dose rivaroxaban is superior to aspirin for long-term anticoagulation

CLINICAL QUESTION: Is full- or lower-intensity rivaroxaban better than aspirin for extended treatment of venous thromboembolism (VTE)?

STUDY DESIGN: Multicenter, randomized, double-blind, phase III trial.

SETTING: 230 medical centers in 20 countries.

SYNOPSIS: A total of 3,365 patients with a history of VTE who had completed 6-12 months of initial anticoagulation therapy, and in whom continued therapy was thought to be beneficial, were enrolled. Patients were randomly assigned to daily high-dose rivaroxaban (20 mg), daily low-dose rivaroxaban (10 mg), or daily aspirin (100 mg). After a median of 351 days, symptomatic recurrent VTE or unexplained death occurred in 17 of 1,107 patients (1.5%) in the high-dose group, 13 of 1,127 patients (1.2%) in the low-dose group, and 50 of 1,131 patients (4.4%) in the aspirin group. Bleeding rates did not differ significantly among the three groups (2%-3%). The major limitations of this study are its short follow-up and its lack of power to demonstrate noninferiority of the low-dose rivaroxaban regimen compared with the high-dose regimen.
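The event counts in the synopsis can be turned into crude risks and an approximate number needed to treat (NNT) versus aspirin. The Python sketch below is a back-of-the-envelope calculation under a stated assumption: it treats each arm's events divided by patients randomized as the risk over the median 351-day follow-up and ignores censoring, so its NNT values are illustrative and are not figures reported by the trial.

```python
# Symptomatic recurrent VTE or unexplained death: (events, patients randomized),
# as quoted in the synopsis.
arms = {
    "rivaroxaban 20 mg": (17, 1_107),
    "rivaroxaban 10 mg": (13, 1_127),
    "aspirin 100 mg": (50, 1_131),
}


def risk(events: int, n: int) -> float:
    """Crude cumulative incidence: events divided by patients randomized."""
    return events / n


def nnt(control_risk: float, treated_risk: float) -> float:
    """Number needed to treat = 1 / absolute risk reduction."""
    arr = control_risk - treated_risk
    if arr <= 0:
        raise ValueError("no absolute risk reduction; NNT is undefined")
    return 1 / arr


aspirin_risk = risk(*arms["aspirin 100 mg"])

for name, (events, n) in arms.items():
    r = risk(events, n)
    line = f"{name}: {100 * r:.1f}%"
    if name != "aspirin 100 mg":
        # Crude NNT: patients treated with rivaroxaban instead of aspirin
        # to prevent one event over the follow-up period (unadjusted).
        line += f", crude NNT vs aspirin ~ {nnt(aspirin_risk, r):.0f}"
    print(line)
```

Run as written, it reproduces the 1.5%, 1.2%, and 4.4% event rates and gives crude NNTs of roughly 35 for the 20-mg dose and 31 for the 10-mg dose relative to aspirin.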

BOTTOM LINE: In patients with a history of VTE in whom prolonged anticoagulation could be beneficial, low- or high-dose rivaroxaban is superior to aspirin in preventing recurrent VTE without significantly increasing bleeding risk.

CITATION: Weitz JI, Lensing AWA, Prins MH, et al. Rivaroxaban or aspirin for extended treatment of venous thromboembolism. N Engl J Med. 2017;376:1211-22.

Dr. Mayasy is assistant professor in the department of hospital medicine at the University of New Mexico.

Corticosteroid therapy in Kawasaki disease

Clinical Question

What is the efficacy of corticosteroid therapy in Kawasaki disease?

Background

First described in Japan in 1967, Kawasaki disease (KD), or mucocutaneous lymph node syndrome, is an acute systemic vasculitis of unclear etiology that primarily affects infants and children. Of significant clinical concern, 30%-50% of untreated patients develop acute coronary artery dilatation, and about one-fourth progress to serious coronary artery abnormalities (CAA) such as aneurysm and ectasia.1,2 These patients are at higher risk of long-term complications, such as coronary artery thrombosis, myocardial infarction, and sudden death.3 KD is typically treated with a combination of intravenous immunoglobulin (IVIG) and aspirin, which reduces the risk of CAA.1 However, more than 20% of cases are resistant to this conventional therapy, and these patients have a higher risk of CAA than patients who respond.4 Corticosteroids have been suggested as therapy in KD, given their anti-inflammatory benefit in many other vasculitides, but studies to date have had conflicting results. This study was performed to comprehensively evaluate the effect of corticosteroids in KD as initial or rescue therapy (after failure to respond to IVIG).

Study design

Systematic review and meta-analysis.

Synopsis

The population of interest was children diagnosed with KD. The intervention of interest was adjunctive corticosteroid treatment, given as either initial or rescue therapy. Comparisons were made between the corticosteroid group and the conventional therapy (IVIG) group. Outcomes included the incidence of CAA (primary outcome), time to defervescence, and adverse events. IVIG resistance was defined as fever that persisted beyond, or recurred within, 24-48 hours after the initial IVIG treatment.

A total of 681 articles were initially retrieved; after exclusions, 16 comparative studies were included in the meta-analysis. These studies comprised 2,746 patients, 861 in the corticosteroid group and 1,885 in the IVIG group. Ten studies used corticosteroids as initial treatment (corticosteroids plus IVIG versus IVIG alone), and six used corticosteroids as rescue treatment after initial IVIG failure (corticosteroids versus additional IVIG). Four studies enrolled patients with KD who were predicted to be at high risk of IVIG resistance based on published scoring systems. All patients in the studies received oral aspirin.

Overall, this meta-analysis found that adding corticosteroid therapy was associated with 58% lower odds of CAA (odds ratio, 0.424; 95% confidence interval, 0.270-0.665; P less than .001). Further analysis showed that the longer the illness had lasted before corticosteroids were given, the smaller the treatment effect. In studies using corticosteroids as initial adjunctive therapy, treatment was given after 4.7 days of illness and showed an advantage over IVIG alone, whereas in studies using corticosteroids as rescue therapy, treatment was given later in the illness (7.2 days) and showed no significant benefit over additional IVIG. In patients predicted to be at high risk of IVIG resistance at baseline, adding corticosteroids to IVIG as initial therapy was associated with significantly lower odds of developing CAA than IVIG alone (a 76% reduction; OR, 0.240; 95% CI, 0.123-0.467; P less than .001). As a secondary outcome, adjunctive corticosteroid therapy was associated with quicker resolution of fever than IVIG alone (0.66 days vs. 2.18 days). There was no significant difference in adverse events between the two groups.
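The 58% and 76% figures in this paragraph equal 1 minus the pooled odds ratio, so strictly they describe reductions in the odds of CAA; the corresponding relative risk reduction depends on how common CAA is in the comparator group. The Python sketch below applies the standard conversion RR = OR / (1 - p0 + p0 × OR), where p0 is the comparator-group risk. The p0 values of 5% and 20% are hypothetical, chosen only for illustration; they are not reported in this summary.

```python
def odds_reduction_pct(odds_ratio: float) -> float:
    """Percent reduction in odds implied by an odds ratio below 1."""
    return 100 * (1 - odds_ratio)


def or_to_rr(odds_ratio: float, baseline_risk: float) -> float:
    """Convert an odds ratio to a risk ratio, given the comparator-group risk p0.

    Treated risk p1 = OR * p0 / (1 - p0 + OR * p0), so RR = p1 / p0.
    """
    if not 0 <= baseline_risk < 1:
        raise ValueError("baseline_risk must be in [0, 1)")
    return odds_ratio / (1 - baseline_risk + baseline_risk * odds_ratio)


# Pooled ORs from the synopsis: all patients, then high-risk patients.
for odds_ratio in (0.424, 0.240):
    print(f"OR {odds_ratio}: {odds_reduction_pct(odds_ratio):.0f}% lower odds")
    for p0 in (0.05, 0.20):  # HYPOTHETICAL CAA risks with IVIG alone
        rr = or_to_rr(odds_ratio, p0)
        print(f"  if IVIG-alone risk were {p0:.0%}: RR {rr:.2f}, "
              f"relative risk reduction {100 * (1 - rr):.0f}%")
```

Under these assumed baseline risks, the OR of 0.424 corresponds to relative risk reductions of roughly 56% and 52%, and the OR of 0.240 to roughly 75% and 72%. The odds reduction overstates the risk reduction more as CAA becomes more common in the comparator group.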

Although this is the most comprehensive study of corticosteroids in KD, it has some limitations. High-risk patients derived the greatest benefit, but the predictive ability of published scoring systems has not been optimized or shown to generalize to all populations. Most of the included studies were conducted in Japan, so it is uncertain whether the results apply to other regions. Also, the choice of corticosteroid and the treatment regimen varied between studies.

Bottom line

This study suggests that corticosteroids combined with IVIG as initial therapy for KD protect against CAA more effectively than conventional IVIG therapy, and that the benefit is most pronounced in high-risk patients.

Citation

Chen S, Dong Y, Kiuchi MG, et al. Coronary Artery Complication in Kawasaki Disease and the Importance of Early Intervention: A Systematic Review and Meta-analysis. JAMA Pediatr. 2016;170(12):1156-63.

References

1. Newburger JW, Takahashi M, Gerber MA, et al. Diagnosis, treatment, and long-term management of Kawasaki disease: a statement for health professionals from the Committee on Rheumatic Fever, Endocarditis and Kawasaki Disease, Council on Cardiovascular Disease in the Young, American Heart Association. Circulation. 2004;110:2747-71.

2. Daniels LB, Tjajadi MS, Walford HH, et al. Prevalence of Kawasaki disease in young adults with suspected myocardial ischemia. Circulation. 2012;125(20):2447-53.

3. Gordon JB, Kahn AM, Burns JC. When children with Kawasaki disease grow up: myocardial and vascular complications in adulthood. J Am Coll Cardiol. 2009;54(21):1911-20.

4. Tremoulet AH, Best BM, Song S, et al. Resistance to intravenous immunoglobulin in children with Kawasaki disease. J Pediatr. 2008;153(1):117-21.

Dr. Galloway is a pediatric hospitalist at Sanford Children’s Hospital in Sioux Falls, S.D., assistant professor of pediatrics at the University of South Dakota Sanford School of Medicine, and vice chief of the division of hospital pediatrics at USD SSOM and Sanford Children’s Hospital.

Clinical Question

What is the efficacy of corticosteroid therapy in Kawasaki disease?

Background

First described in 1967 in Japan, Kawasaki disease (KD), or mucocutaneous lymph node syndrome, is an acute systemic vasculitis of unclear etiology which primarily affects infants and children. Of significant clinical concern, 30%-50% of untreated patients develop acute coronary artery dilatation, and about one fourth progress to serious coronary artery abnormalities (CAA) such as aneurysm and ectasia.1,2 These patients have a higher risk of long-term complications, such as coronary artery thrombus, myocardial infarction, and sudden death.3 KD is typically treated with a combination of intravenous immunoglobulin (IVIG) and aspirin, which reduces the risk of CAA.1 However, more than 20% of cases are resistant to this conventional therapy and have higher risk of CAA than nonresistant patients.4 Corticosteroids have been suggested as therapy in KD, as the anti-inflammatory effect is useful for many other vasculitides, but studies to date have had conflicting results. This study was performed to comprehensively evaluate the effect of corticosteroids in KD as initial or rescue therapy (after failure to respond to IVIG).

Study design

Systematic review and meta-analysis.

Synopsis

The population of interest was children diagnosed with KD. The intervention of interest was treatment with adjunctive corticosteroids either as initial or rescue therapy. Comparisons were made between the corticosteroids group and the conventional therapy (IVIG) group. Outcome measurements included the incidence of CAA (primary outcome), duration until defervescence, and adverse events. IVIG resistance was defined as persistent or recurrent fever lasting (or relapsed within) 24-48 hours after the initial IVIG treatment.

A total of 681 articles were initially retrieved, and after exclusions, 16 comparative studies were enrolled for meta-analysis. In these studies, a total of 2,746 cases were involved, with 861 in the corticosteroid group and 1,885 in the IVIG group. Ten studies used corticosteroids as initial treatment (comparing this plus IVIG versus IVIG therapy alone), and six used steroids as rescue treatment after initial IVIG failure (comparing corticosteroids with additional IVIG therapy). Four studies enrolled patients with KD who were predicted to have high risk of IVIG resistance, based on published scoring systems. All patients in the studies received oral aspirin.

Overall, this meta-analysis found that adding corticosteroid therapy was associated with a relative risk reduction of 58% in CAA (odds ratio, 0.424; 95% confidence interval, 0.270-0.665; P less than .001). Further analysis showed that the longer the duration of illness prior to corticosteroids, the less of a treatment effect was noticed. The studies using steroids as initial adjunctive therapy had duration of illness 4.7 days prior to treatment, and showed an advantage, compared with IVIG alone, while studies using steroids as rescue therapy had a longer duration of illness prior to steroid therapy (7.2 days), and did not show significant benefit, compared with additional IVIG. In analyzing patients predicted to be at high risk of IVIG resistance at baseline, addition of corticosteroids with IVIG as initial therapy showed a significantly lower risk of CAA development (relative risk reduction of 76%) versus IVIG alone (OR, 0.240; 95% CI, 0.123-0.467; P less than .001). As a secondary outcome, the use of adjunctive corticosteroid therapy was associated with a quicker resolution of fever, compared with IVIG alone (0.66 days vs. 2.18 days). There was no significant difference in adverse events between the two groups.

Although this is the most comprehensive study of corticosteroids in KD, there are some limitations. High-risk patients were found to receive the greatest benefit, but the predictive ability of published scoring systems have not been optimized or generalized to all populations. Most of the studies included in this review were conducted in Japan, so it is uncertain if the results are applicable to other regions. Also, the selection of corticosteroids and treatment regimens were not consistent between studies.

Bottom line

This study suggests that corticosteroids combined with IVIG as initial therapy for KD showed a more protective effect against CAA, compared with conventional IVIG therapy, and the efficacy was more pronounced in the high-risk patient group.

Citation

Chen S, Dong Y, Kiuchi MG, et al. Coronary Artery Complication in Kawasaki Disease and the Importance of Early Intervention: A Systematic Review and Meta-analysis. JAMA Pediatr. 2016;170(12):1156-1163.

References

1. Newburger JW, Takahashi M, Gerber MA, et al. Committee on Rheumatic Fever, Endocarditis and Kawasaki Disease. Council on Cardiovascular Disease in the Young; American Heart Association; American Academy of Pediatrics: diagnosis, treatment, and long-term management of Kawasaki disease. Circulation. 2004;110:2747-71.

2. Daniels LB, Tjajadi MS, Walford HH, et al. Prevalence of Kawasaki disease in young adults with suspected myocardial ischemia. Circulation. 2012;125(20):2447-53.

3. Gordon JB, Kahn AM, Burns JC. When children with Kawasaki disease grow up: myocardial and vascular complications in adulthood. J Am Coll Cardiol. 2009;54(21):1911-20.

4. Tremoulet AH, Best BM, Song S, et al. Resistance to intravenous immunoglobulin in children with Kawasaki disease. J Pediatr. 2008;153(1):117-21.

Dr. Galloway is a pediatric hospitalist at Sanford Children’s Hospital in Sioux Falls, S.D., assistant professor of pediatrics at the University of South Dakota Sanford School of Medicine, and vice chief of the division of hospital pediatrics at USD SSOM and Sanford Children’s Hospital.

Clinical Question

What is the efficacy of corticosteroid therapy in Kawasaki disease?

Background

First described in 1967 in Japan, Kawasaki disease (KD), or mucocutaneous lymph node syndrome, is an acute systemic vasculitis of unclear etiology which primarily affects infants and children. Of significant clinical concern, 30%-50% of untreated patients develop acute coronary artery dilatation, and about one fourth progress to serious coronary artery abnormalities (CAA) such as aneurysm and ectasia.1,2 These patients have a higher risk of long-term complications, such as coronary artery thrombus, myocardial infarction, and sudden death.3 KD is typically treated with a combination of intravenous immunoglobulin (IVIG) and aspirin, which reduces the risk of CAA.1 However, more than 20% of cases are resistant to this conventional therapy and have higher risk of CAA than nonresistant patients.4 Corticosteroids have been suggested as therapy in KD, as the anti-inflammatory effect is useful for many other vasculitides, but studies to date have had conflicting results. This study was performed to comprehensively evaluate the effect of corticosteroids in KD as initial or rescue therapy (after failure to respond to IVIG).

Study design

Systematic review and meta-analysis.

Synopsis

The population of interest was children diagnosed with KD. The intervention of interest was treatment with adjunctive corticosteroids either as initial or rescue therapy. Comparisons were made between the corticosteroids group and the conventional therapy (IVIG) group. Outcome measurements included the incidence of CAA (primary outcome), duration until defervescence, and adverse events. IVIG resistance was defined as persistent or recurrent fever lasting (or relapsed within) 24-48 hours after the initial IVIG treatment.

A total of 681 articles were initially retrieved, and after exclusions, 16 comparative studies were enrolled for meta-analysis. In these studies, a total of 2,746 cases were involved, with 861 in the corticosteroid group and 1,885 in the IVIG group. Ten studies used corticosteroids as initial treatment (comparing this plus IVIG versus IVIG therapy alone), and six used steroids as rescue treatment after initial IVIG failure (comparing corticosteroids with additional IVIG therapy). Four studies enrolled patients with KD who were predicted to have high risk of IVIG resistance, based on published scoring systems. All patients in the studies received oral aspirin.

Overall, this meta-analysis found that adding corticosteroid therapy was associated with a relative risk reduction of 58% in CAA (odds ratio, 0.424; 95% confidence interval, 0.270-0.665; P less than .001). Further analysis showed that the longer the duration of illness prior to corticosteroids, the less of a treatment effect was noticed. The studies using steroids as initial adjunctive therapy had duration of illness 4.7 days prior to treatment, and showed an advantage, compared with IVIG alone, while studies using steroids as rescue therapy had a longer duration of illness prior to steroid therapy (7.2 days), and did not show significant benefit, compared with additional IVIG. In analyzing patients predicted to be at high risk of IVIG resistance at baseline, addition of corticosteroids with IVIG as initial therapy showed a significantly lower risk of CAA development (relative risk reduction of 76%) versus IVIG alone (OR, 0.240; 95% CI, 0.123-0.467; P less than .001). As a secondary outcome, the use of adjunctive corticosteroid therapy was associated with a quicker resolution of fever, compared with IVIG alone (0.66 days vs. 2.18 days). There was no significant difference in adverse events between the two groups.

Although this is the most comprehensive study of corticosteroids in KD, it has some limitations. High-risk patients derived the greatest benefit, but the predictive ability of published scoring systems has not been optimized or generalized to all populations. Most of the included studies were conducted in Japan, so it is uncertain whether the results apply to other regions. Also, the choice of corticosteroid and the treatment regimens varied between studies.

Bottom line

This study suggests that corticosteroids combined with IVIG as initial therapy for KD offer greater protection against CAA than conventional IVIG therapy, and that the benefit is more pronounced in high-risk patients.

Citation

Chen S, Dong Y, Kiuchi MG, et al. Coronary Artery Complication in Kawasaki Disease and the Importance of Early Intervention: A Systematic Review and Meta-analysis. JAMA Pediatr. 2016;170(12):1156-1163.

References

1. Newburger JW, Takahashi M, Gerber MA, et al. Committee on Rheumatic Fever, Endocarditis and Kawasaki Disease. Council on Cardiovascular Disease in the Young; American Heart Association; American Academy of Pediatrics: diagnosis, treatment, and long-term management of Kawasaki disease. Circulation. 2004;110:2747-71.

2. Daniels LB, Tjajadi MS, Walford HH, et al. Prevalence of Kawasaki disease in young adults with suspected myocardial ischemia. Circulation. 2012;125(20):2447-53.

3. Gordon JB, Kahn AM, Burns JC. When children with Kawasaki disease grow up: myocardial and vascular complications in adulthood. J Am Coll Cardiol. 2009;54(21):1911-20.

4. Tremoulet AH, Best BM, Song S, et al. Resistance to intravenous immunoglobulin in children with Kawasaki disease. J Pediatr. 2008;153(1):117-21.

Dr. Galloway is a pediatric hospitalist at Sanford Children’s Hospital in Sioux Falls, S.D., assistant professor of pediatrics at the University of South Dakota Sanford School of Medicine, and vice chief of the division of hospital pediatrics at USD SSOM and Sanford Children’s Hospital.

Hospitalizations may speed up cognitive decline in older adults

LONDON – Older adult patients who already had cognitive decline when they were admitted to a hospital often left with a significantly accelerated rate of decline, according to findings from a large longitudinal cohort study.

The study found up to a 62% acceleration of prehospital cognitive decline after any hospitalization. Urgent or emergency hospitalizations exacted the biggest toll on cognitive health, Bryan James, PhD, said at the Alzheimer’s Association International Conference.

Cognitive decline after hospitalization in older patients is common but still poorly understood, he said. “Some data suggest this could actually be seen as a public health crisis since 40% of all hospitalized patients in the U.S. are older than 65, and the risk of posthospitalization cognitive impairment rises with age.

“Given the risk to cognitive health, older patients, families, and physicians require information on when to admit to the hospital,” Dr. James said. “We wondered if those who decline rapidly after the hospital admission were already declining before. Our second question was whether elective hospital admissions are associated with the same negative cognitive outcomes as nonelective (emergent or urgent) admissions.”

To examine this, Dr. James and his colleagues used patient data from the Rush Memory and Aging Project, which provides each participant with an annual cognitive assessment consisting of 19 neuropsychological tests. They linked these data to each patient’s Medicare claims record, allowing them to assess cognitive function both before and after the index hospitalization.

The cohort comprised 930 patients who were followed for a mean of 5 years. Hospitalized patients were older (81 vs. 79 years). Most patients in both groups had at least one medical condition, such as hypertension, heart disease, diabetes, cancer, thyroid disease, head injury, or stroke. Cognition was already impaired in many of the hospitalized patients; 62% had mild cognitive impairment (MCI) and 35% had dementia. Among the nonhospitalized subjects, 49% had MCI and 24% had dementia.

Of the cohort, 66% were hospitalized at least once during follow-up. Most hospitalizations (57%) were for urgent or emergency problems; the rest were elective admissions. The main outcome was change in global cognition, the average z-score across all 19 tests, which assess working memory, episodic memory, semantic memory, visuospatial processing, and perceptual speed.
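A composite "averaged z-score" puts tests with different scales on a common footing before averaging them. The minimal Python sketch below assumes each test is standardized against a reference mean and standard deviation (here, taken from a baseline visit); the article does not say which reference the Rush investigators used, and the function names and numbers are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def reference_stats(baseline: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-test mean and SD from baseline rows (one row per participant).

    Assumes at least two participants so that stdev() is defined.
    """
    columns = list(zip(*baseline))
    return [mean(c) for c in columns], [stdev(c) for c in columns]


def global_z(row: Sequence[float], ref_means: Sequence[float],
             ref_sds: Sequence[float]) -> float:
    """Average of the per-test z-scores for one participant at one visit."""
    zs = [(x - m) / s for x, m, s in zip(row, ref_means, ref_sds)]
    return mean(zs)


if __name__ == "__main__":
    # Hypothetical raw scores on three tests for two participants.
    baseline = [[8, 16, 24], [12, 24, 36]]
    means, sds = reference_stats(baseline)
    print(global_z([12, 24, 36], means, sds))
```

In the study, each participant would have one such score per annual visit, and the change in that score over time is the outcome being modeled.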

Elective admissions were mostly planned surgeries (94%), whereas 64% of nonelective admissions involved unplanned surgery. Most elective admissions (81%) involved anesthesia, compared with 32% of nonelective admissions. About 40% of each group required a stay in the intensive care unit. Around 11% of each group had a critical illness, and about 6% of each group had a stroke, hemorrhage, or brain trauma.

A multivariate analysis examined the change in cognition across two periods: the 2 years before the index hospitalization and up to 8 years after it. As expected in an older memory-study cohort, most patients experienced cognitive decline over the study period. Nonhospitalized patients, however, continued on a smooth linear slope of decline, whereas hospitalized patients had a significant 62% increase in their rate of decline, even after controlling for age, education, comorbidities, depression, Activities of Daily Living disability, and physical activity.
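A "62% increased rate of decline" compares the slope of cognitive change after hospitalization with the slope before it. The study itself used an adjusted multivariate model; the minimal Python sketch below only illustrates the arithmetic with a simple least-squares slope for each period. The function names and the annual scores are made up for illustration and are not the study's data.

```python
from typing import Sequence


def ols_slope(times: Sequence[float], scores: Sequence[float]) -> float:
    """Ordinary least-squares slope of score on time (units per year)."""
    n = len(times)
    t_bar = sum(times) / n
    y_bar = sum(scores) / n
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, scores))
    sxx = sum((t - t_bar) ** 2 for t in times)
    return sxy / sxx


def percent_change_in_rate(pre_slope: float, post_slope: float) -> float:
    """Percentage change in the rate of decline (both slopes negative)."""
    return (post_slope / pre_slope - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical global z-scores; year 0 is the index hospitalization.
    pre = ols_slope([-2, -1, 0], [0.10, 0.05, 0.0])
    post = ols_slope([0, 1, 2, 3], [0.0, -0.081, -0.162, -0.243])
    print(round(pre, 3), round(post, 3), round(percent_change_in_rate(pre, post)))
```

A steeper (more negative) post-admission slope yields a positive percentage, which is the sense in which the decline "accelerated."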

Visuospatial processing was the only domain not significantly affected by a hospital admission. All of the memory domains, as well as perceptual speed, declined significantly faster after hospitalization than before.

The second analysis examined which type of admission was most harmful to cognitive health. It controlled for additional potential confounders, including length of stay, surgery and anesthesia, Charlson comorbidity index, critical illness, brain injury, and number of hospitalizations during follow-up.

Urgent and emergency admissions drove virtually all of the increase, Dr. James said, with a 60% increase in the rate of decline, compared with the prehospitalization rate. Patients with elective admissions showed no change from their baseline rate of decline and, in fact, followed the same slope as nonhospitalized patients. Again, the acceleration appeared in the global score, in all of the memory domains, and in perceptual speed; only visuospatial processing was unaffected.

“It’s unclear why the urgent and emergent admissions drove this finding, even after we controlled for illness and injury severity and other factors,” Dr. James said. “Obviously, we need more research in this area.”

He had no financial disclosures.

On Twitter @alz_gal

AT AAIC 2017

Key clinical point:

Major finding: Urgent or emergency admissions accelerated the rate of cognitive decline by 60%, compared with the prehospitalization rate. Elective admissions did not change the rate of cognitive decline.

Data source: The 930 patients were drawn from the Rush Memory and Aging Project.

Disclosures: The presenter had no financial disclosures.

New tool predicts antimicrobial resistance in sepsis

Use of a clinical decision tree predicted antibiotic resistance in sepsis patients infected with gram-negative bacteria, based on data from 1,618 patients.

Increasing rates of bacterial resistance have “contributed to the unwarranted empiric administration of broad-spectrum antibiotics, further promoting resistance emergence across microbial species,” said M. Cristina Vazquez Guillamet, MD, of the University of New Mexico, Albuquerque, and her colleagues (Clin Infect Dis. cix612. 2017 Jul 10. doi: 10.1093/cid/cix612).

The researchers identified adults with sepsis or septic shock caused by bloodstream infections who were treated at a single center between 2008 and 2015. They developed clinical decision trees using the CHAID (Chi-squared Automatic Interaction Detection) algorithm to analyze risk factors for resistance to three antibiotics: piperacillin-tazobactam (PT), cefepime (CE), and meropenem (ME).
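CHAID grows a tree by repeatedly choosing the predictor most strongly associated with the outcome according to a chi-squared test. The minimal Python sketch below illustrates only that split-selection step, for binary (yes/no) predictors and a binary outcome (resistant vs. susceptible). It omits CHAID's category merging, multiway splits, and full stopping rules, and it applies a plain Bonferroni correction over the number of predictors tested. The function names and toy data are hypothetical and are not the study's model or data.

```python
import math
from typing import Dict, List, Optional, Tuple


def chi_square_2x2(xs: List[int], ys: List[int]) -> float:
    """Pearson chi-squared statistic for two binary (0/1) variables."""
    n = len(xs)
    table = [[0, 0], [0, 0]]  # table[x][y] = number of patients
    for x, y in zip(xs, ys):
        table[x][y] += 1
    row_totals = [table[0][0] + table[0][1], table[1][0] + table[1][1]]
    col_totals = [table[0][0] + table[1][0], table[0][1] + table[1][1]]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:  # skip empty margins (degenerate table)
                stat += (table[i][j] - expected) ** 2 / expected
    return stat


def p_value_1df(stat: float) -> float:
    """Upper-tail p-value of a chi-squared statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2.0))


def best_split(predictors: Dict[str, List[int]], outcome: List[int],
               alpha: float = 0.05) -> Optional[Tuple[str, float]]:
    """Return the predictor with the smallest Bonferroni-adjusted p-value.

    Returns None when no predictor is significant at alpha, i.e., the
    node would not be split further.
    """
    m = len(predictors)
    best: Optional[Tuple[str, float]] = None
    for name, xs in predictors.items():
        p_adj = min(1.0, p_value_1df(chi_square_2x2(xs, outcome)) * m)
        if best is None or p_adj < best[1]:
            best = (name, p_adj)
    if best is not None and best[1] < alpha:
        return best
    return None


if __name__ == "__main__":
    # Toy data (hypothetical): 1 = yes / resistant, 0 = no / susceptible.
    resistant = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
    predictors = {
        "nursing_home": [1, 1, 1, 0, 0, 0, 0, 1, 1, 0],
        "prior_antibiotics": [1, 0, 1, 0, 1, 0, 1, 0, 0, 1],
    }
    print(best_split(predictors, resistant))
```

A full tree would apply the same step recursively within each resulting subgroup. With real data, expected cell counts as small as in this toy example would make the chi-squared approximation unreliable.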

Overall, resistance rates to PT, CE, and ME were 29%, 22%, and 9%, respectively, and 6.6% of the isolates were resistant to all three antibiotics.

Factors associated with increased resistance risk included residence in a nursing home, transfer from an outside hospital, and prior antibiotic use. Resistance to ME was associated with infection with Pseudomonas or Acinetobacter spp., the researchers noted, and resistance to PT was associated with central nervous system and central venous catheter infections.

Clinical decision trees identified both patients at low risk of resistance to PT and CE and those with a greater than 30% risk of resistance to PT, CE, or ME. “We also found good overall agreement between the accuracies of the [multivariable logistic regression] models and the decision tree analyses for predicting antibiotic resistance,” the researchers said.

The findings were limited by several factors, including the use of data from a single center and incomplete reporting of previous antibiotic exposure, the researchers noted. However, the results “provide a framework for how empiric antibiotics can be tailored according to decision tree patient clusters,” they said.

Combining user-friendly clinical decision trees and multivariable logistic regression models may offer the best opportunities for hospitals to derive local models to help with antimicrobial prescription.

The researchers had no financial conflicts to disclose.

FROM CLINICAL INFECTIOUS DISEASES

Key clinical point:

Major finding: The model found prevalence rates for resistance to piperacillin-tazobactam, cefepime, and meropenem of 28.6%, 21.8%, and 8.5%, respectively.

Data source: A review of 1,618 adults with sepsis.

Disclosures: The researchers had no financial conflicts to disclose.

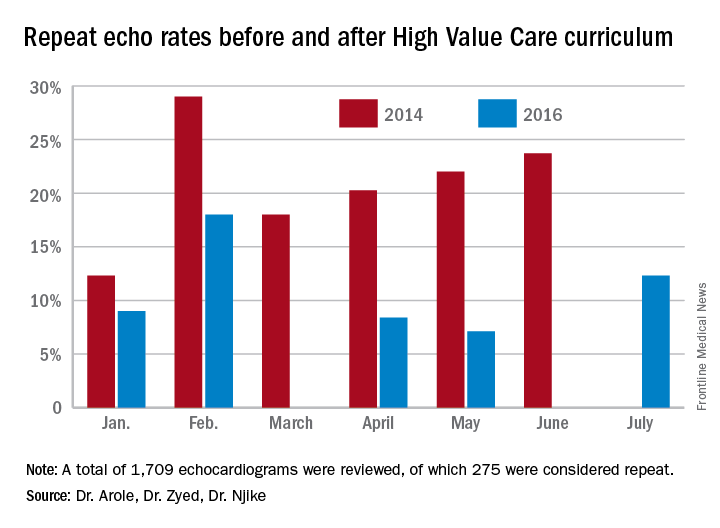

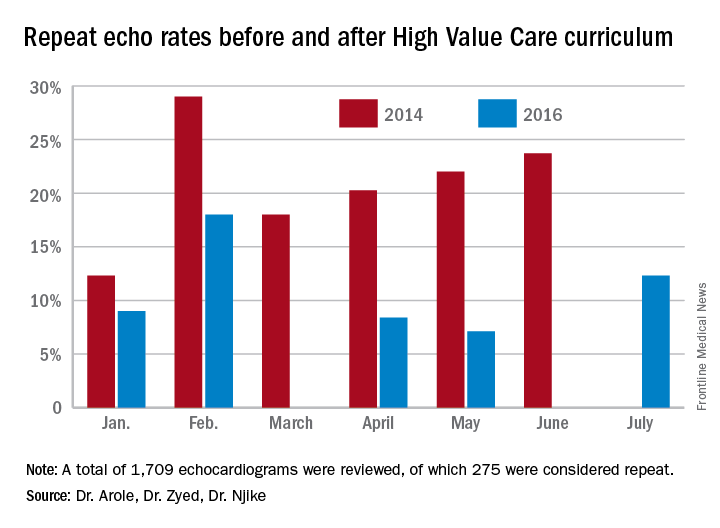

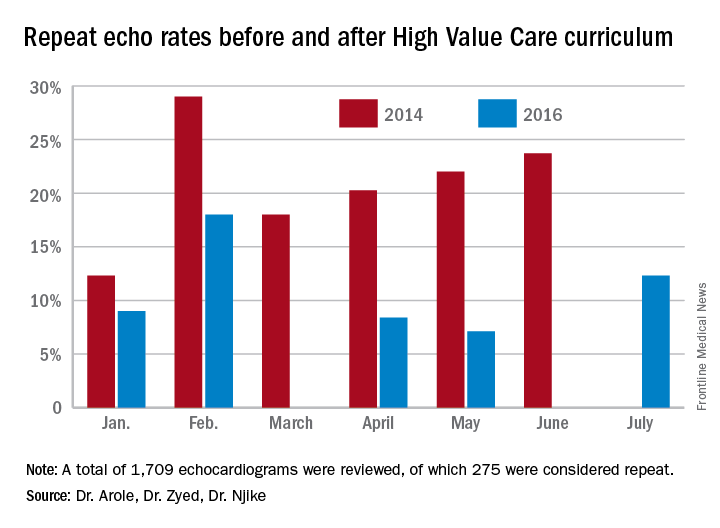

High Value Care curriculum reduced repeat echocardiogram ordering

Study Title

The impact of a High Value Care curriculum on rate of repeat of trans-thoracic echocardiogram ordering among medical residents

Background

Few data exist on the impact of a High Value Care curriculum on echocardiogram ordering practices in a residency training program. We sought to compare the rate of repeat transthoracic echocardiograms (TTEs) before and after implementation of a High Value Care curriculum.

Methods

A High Value Care curriculum was developed for the medical residents at Griffin Hospital, a community hospital, in 2015. The curriculum included a series of lectures aimed at promoting cost-conscious care while maintaining high quality. It also involved house staff in various quality improvement (QI) projects aimed at promoting high-value care.

A group of residents chose to work on an initiative to reduce repeat echocardiograms, defined as those performed within 6 months of a previous echocardiogram on the same patient. Only inpatient echocardiograms recorded in our EHR were included.

We retrospectively examined the rate of repeat echocardiograms during a 6-month period in 2014, before the High Value Care curriculum was initiated, and during a 5-month period in 2016.

Results

A total of 1,709 echocardiograms were reviewed across both time periods, of which 275 were repeats. At baseline, before implementation of the High Value Care curriculum, 908 echocardiograms were ordered, of which 21% were repeats.

After implementation of the High Value Care curriculum, 801 echocardiograms were ordered, of which only 11% were repeats. This corresponds to a 52% reduction in the rate of repeat echocardiogram ordering.

Discussion

The marked improvement in the rate of repeat echocardiograms occurred without any initiative directed specifically at echocardiogram ordering practices. During the planning phase of the proposed QI initiative to reduce repeat echocardiograms, house staff noted that their colleagues were already more selective in ordering echocardiograms because of the cost-conscious care lectures they had attended, as well as hospital-wide attention to the first resident-driven QI initiative, which aimed to reduce repetitive daily labs.

As part of that QI initiative, house staff had to provide a clear rationale for ordering daily labs. They received regular updates on their lab-ordering practices and direct feedback if they consistently failed to provide a clear rationale.

Conclusion

Our study showed a greater than 50% reduction in the ordering of repeat echocardiograms after implementation of a High Value Care curriculum in our residency training program.

The improvement was brought about by house staff's increased awareness of the need to provide high-quality care while remaining cognizant of the costs involved. The reduction in repeat echocardiograms allowed more efficient use of a limited hospital resource.

Dr. Arole is chief of hospital medicine at Griffin Hospital, Derby, Conn. Dr. Zyed is in the department of internal medicine at Griffin Hospital. Dr. Njike is with the Yale University Prevention Research Center at Griffin Hospital.

Study Title

The impact of a High Value Care curriculum on rate of repeat of trans-thoracic echocardiogram ordering among medical residents

Background

There is little data to confirm the impact of a High Value Care curriculum on echocardiogram ordering practices in a residency training program. We sought to evaluate the rate of performance of repeat transthoracic echocardiograms (TTE) before and after implementation of a High Vale Care curriculum.

Methods

A High Value Care curriculum was developed for the medical residents at Griffin Hospital, a community hospital, in 2015. The curriculum included a series of lectures aimed at promoting cost-conscious care while maintaining high quality. It also involved house staff in different quality improvement (QI) projects aimed at promoting high value care.

A group of residents decided to work on an initiative to reduce repeat echocardiograms. Repeat echocardiograms were defined as those performed within 6 months of a previous echocardiogram on the same patient. Only results in our EHR reflecting in-patient echocardiograms were utilized.

We retrospectively examined the rates of repeat echocardiograms performed in a 6 month period in 2014 before the High Vale Care curriculum was initiated. We assessed data from a 5 month period in 2016 to determine the rate of repeat electrocardiograms ordered at our institution.

Results

A total of 1,709 echocardiograms were reviewed in both time periods. Of these, 275 were considered repeat. At baseline, or before the implementation of a High Value Care curriculum, we examined 908 echocardiograms that were ordered, of which 21% were repeats.

After the implementation of a High Vale Care curriculum, 801 echocardiograms were ordered. Only 11% of these were repeats. This corresponds to a 52% reduction in the rate of repeated ordering of echocardiograms.

Discussion

The significant improvement in the rate of repeat echocardiograms was noted without any initiative directed specifically at echocardiogram ordering practices. During the planning phases of the proposed QI initiative to reduce repeat echocardiograms, house staff noted that their colleagues were already being more selective in their echocardiogram ordering practices because of the impact of the-cost conscious care lectures they had attended, as well as hospital-wide attention on the first resident-driven QI initiative that was aimed at reducing repetitive daily labs.

As part of the reducing repetitive labs QI, house staff had to provide clear rationale for why they were ordering daily labs. The received regular updates about their lab ordering practices and direct feedback if they consistently did not provide clear rationale for ordering daily labs.

Conclusion

Our study clearly showed a greater than 50% reduction in the ordering of repeat echocardiograms because of a High Value Care curriculum in our residency training program.

The improvement was brought on by increased awareness by house staff regarding provision of high quality care while being cognizant of the costs involved. The reduction in repeat echocardiograms resulted in more efficient use of a limited hospital resource.

Dr. Arole is chief of hospital medicine at Griffin Hospital, Derby, Conn. Dr. Zyed is in the department of internal medicine at Griffin Hospital. Dr. Njike is with the Yale University Prevention Research Center at Griffin Hospital.

Study Title

The impact of a High Value Care curriculum on rate of repeat of trans-thoracic echocardiogram ordering among medical residents

Background

There is little data to confirm the impact of a High Value Care curriculum on echocardiogram ordering practices in a residency training program. We sought to evaluate the rate of performance of repeat transthoracic echocardiograms (TTE) before and after implementation of a High Vale Care curriculum.

Methods

A High Value Care curriculum was developed for the medical residents at Griffin Hospital, a community hospital, in 2015. The curriculum included a series of lectures aimed at promoting cost-conscious care while maintaining high quality. It also involved house staff in different quality improvement (QI) projects aimed at promoting high value care.

A group of residents decided to work on an initiative to reduce repeat echocardiograms. Repeat echocardiograms were defined as those performed within 6 months of a previous echocardiogram on the same patient. Only inpatient echocardiograms recorded in our EHR were included.
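The repeat definition above is simple enough to express as a small classification routine. The following is a minimal Python sketch, assuming each EHR echocardiogram record can be reduced to a patient identifier and a study date; the `Echo` record, its field names, and the 183-day approximation of "6 months" are hypothetical choices for illustration, not the study's actual extraction method. The sketch only sees the records it is given, so a study whose earlier comparison echocardiogram falls outside the extracted period would not be flagged.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "within 6 months" approximated as 183 days; the study does not
# say how months were counted.
REPEAT_WINDOW = timedelta(days=183)


@dataclass(frozen=True)
class Echo:
    patient_id: str      # hypothetical field name
    performed_on: date   # hypothetical field name


def flag_repeats(echos: list[Echo]) -> list[tuple[Echo, bool]]:
    """Pair each echo with True if the same patient had an earlier echo
    no more than REPEAT_WINDOW before it."""
    last_seen: dict[str, date] = {}
    flagged = []
    # Sort by patient, then date, so each study is compared with the
    # patient's most recent earlier study.
    for echo in sorted(echos, key=lambda e: (e.patient_id, e.performed_on)):
        previous = last_seen.get(echo.patient_id)
        is_repeat = previous is not None and echo.performed_on - previous <= REPEAT_WINDOW
        flagged.append((echo, is_repeat))
        last_seen[echo.patient_id] = echo.performed_on
    return flagged


def repeat_rate(echos: list[Echo]) -> float:
    """Fraction of echocardiograms classified as repeats."""
    flagged = flag_repeats(echos)
    if not flagged:
        raise ValueError("no echocardiograms to classify")
    return sum(is_repeat for _, is_repeat in flagged) / len(flagged)


if __name__ == "__main__":
    sample = [
        Echo("A", date(2016, 1, 5)),
        Echo("A", date(2016, 3, 1)),   # 56 days after the prior study: repeat
        Echo("B", date(2016, 1, 10)),
        Echo("B", date(2016, 9, 1)),   # more than 183 days later: not a repeat
    ]
    print(repeat_rate(sample))
```

Comparing each study only with the patient's most recent earlier study is sufficient: if any earlier echocardiogram falls inside the window, the most recent one does too.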

We retrospectively examined the rate of repeat echocardiograms performed during a 6-month period in 2014, before the High Value Care curriculum was initiated. We then assessed data from a 5-month period in 2016 to determine the rate of repeat echocardiograms ordered at our institution after implementation.

Results

A total of 1,709 echocardiograms were reviewed across both time periods, of which 275 were considered repeats. At baseline, before implementation of the High Value Care curriculum, 908 echocardiograms were ordered, of which 21% were repeats.

After implementation of the High Value Care curriculum, 801 echocardiograms were ordered, of which only 11% were repeats. This corresponds to a 52% reduction in the rate of repeat echocardiogram ordering.

Discussion

The significant improvement in the rate of repeat echocardiograms occurred without any initiative directed specifically at echocardiogram ordering practices. During the planning phase of the proposed QI initiative to reduce repeat echocardiograms, house staff noted that their colleagues were already more selective in ordering echocardiograms. This reflected the impact of the cost-conscious care lectures they had attended, as well as hospital-wide attention on the first resident-driven QI initiative, which aimed to reduce repetitive daily labs.

As part of the QI initiative to reduce repetitive labs, house staff had to provide a clear rationale for ordering daily labs. They received regular updates about their lab ordering practices and direct feedback if they consistently failed to provide a clear rationale.

Conclusion

Our study showed a greater than 50% reduction in the ordering of repeat echocardiograms, which we attribute to the High Value Care curriculum in our residency training program.

The improvement was driven by greater awareness among house staff of how to provide high-quality care while remaining cognizant of the costs involved. The reduction in repeat echocardiograms resulted in more efficient use of a limited hospital resource.

Dr. Arole is chief of hospital medicine at Griffin Hospital, Derby, Conn. Dr. Zyed is in the department of internal medicine at Griffin Hospital. Dr. Njike is with the Yale University Prevention Research Center at Griffin Hospital.

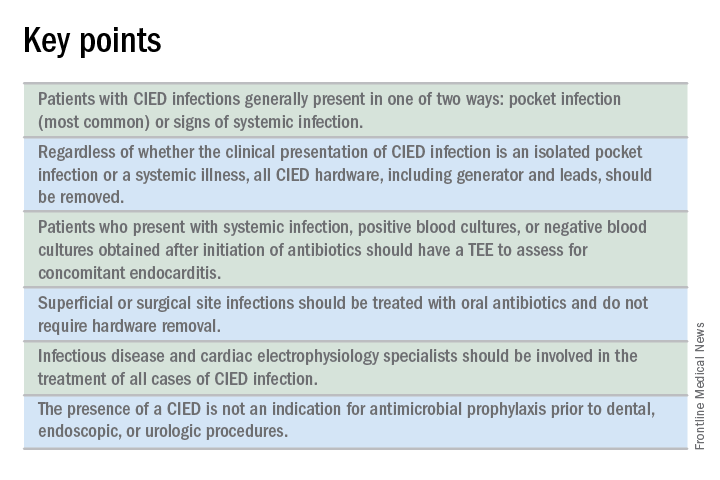

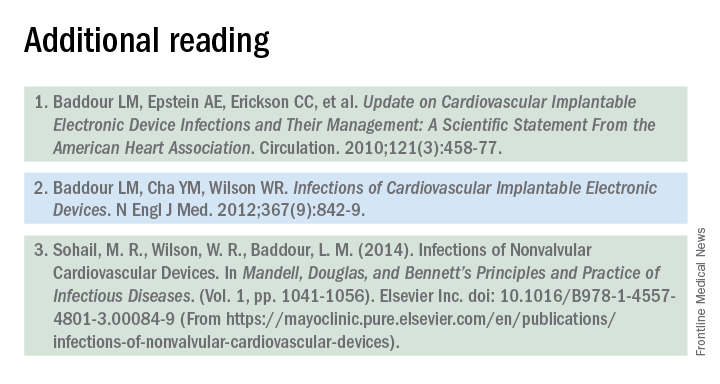

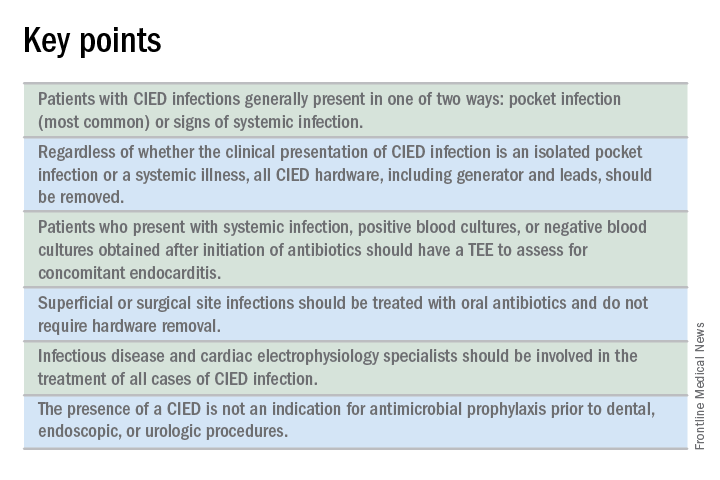

What is the best approach for managing CIED infections?

The case

A 72-year-old man with ischemic cardiomyopathy and a left-ventricular ejection fraction of 15% had a cardioverter-defibrillator implanted five years ago for primary prevention of sudden cardiac death. He was brought to the emergency department by his daughter, who noticed erythema and swelling over the generator pocket site. His temperature is 100.1° F. Vital signs are otherwise normal and stable.

What are the best initial and definitive management strategies for this patient?

When should a cardiac implantable electronic device (CIED) infection be suspected?

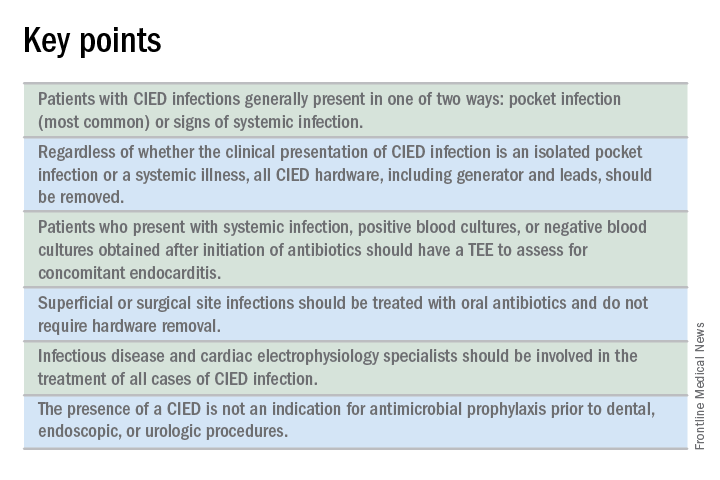

CIED infections generally present in one of two ways: as a local generator pocket infection or as a systemic illness.1 Around 70% of CIED infections present with findings at the generator pocket site, including pain, swelling, erythema, induration, and ulceration. Systemic findings range from vague constitutional symptoms to overt sepsis. Importantly, systemic signs of infection are present in only a minority of cases, so their absence does not rule out a CIED infection.1,2 Hospitalists must therefore maintain a high index of suspicion to avoid missing the diagnosis.

Unfortunately, it can be difficult to distinguish between a CIED infection and less severe postimplantation complications such as surgical site infections, superficial pocket site infections, and noninfected hematoma.2

What are the risk factors for CIED infections?

The risk factors for CIED infection fit into three broad categories: procedure, patient, and pathogen.

Procedural factors that increase risk of infection include lack of periprocedural antimicrobial prophylaxis, inexperienced physician operator, multiple revision procedures, hematoma formation at the pocket site, and increased amount of hardware.1

Patient factors center on medical comorbidities, including congestive heart failure, diabetes mellitus, chronic kidney disease, immunosuppression, and ongoing oral anticoagulation.1

The specific pathogen also plays a role in infection risk. In one cohort study of 1,524 patients with CIEDs, confirmed or suspected CIED infection was found in 54.6% of patients with Staphylococcus aureus bacteremia, compared with just 12.0% of patients with gram-negative bacillary bacteremia.3

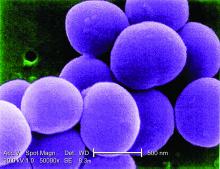

Which bacteria cause most CIED infections?

The vast majority of CIED infections are caused by gram-positive bacteria.1,4 An Italian study of 1,204 patients with CIED infection reported that the pathogens isolated from extracted leads were gram-positive bacteria in 92.5% of infections.4 Staphylococcal species are the most common pathogens: coagulase-negative staphylococci (CoNS) and Staphylococcus aureus accounted for 69% and 13.8% of all isolates, respectively. Of note, 33% of CoNS isolates and 13% of S. aureus isolates in that study were resistant to oxacillin.4
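The resistance figures above translate into a rough estimate of how many isolates an empiric regimen must cover. The sketch below is simple arithmetic on the reported percentages, assuming the oxacillin-resistance rates apply to the same isolates counted in the 69% and 13.8% shares; the constant names are illustrative, not taken from the study.

```python
# Shares of all isolates reported in the Italian cohort (ref. 4).
CONS_SHARE = 0.69
S_AUREUS_SHARE = 0.138

# Fraction of each species' isolates reported as oxacillin-resistant.
# Assumption: these rates apply to the isolates counted in the shares above.
CONS_OXA_RESISTANT = 0.33
S_AUREUS_OXA_RESISTANT = 0.13

# Oxacillin-resistant staphylococci as a share of all isolates.
resistant_staph = CONS_SHARE * CONS_OXA_RESISTANT + S_AUREUS_SHARE * S_AUREUS_OXA_RESISTANT
# All staphylococcal isolates (CoNS plus S. aureus) as a share of all isolates.
all_staph = CONS_SHARE + S_AUREUS_SHARE

print(f"Oxacillin-resistant staphylococci, share of all isolates: {resistant_staph:.1%}")
print(f"Oxacillin-resistant share among staphylococcal isolates: {resistant_staph / all_staph:.1%}")
```

Under that assumption, roughly one in four of all isolates (about 25%) and roughly three in ten staphylococcal isolates (about 30%) would be oxacillin-resistant staphylococci, consistent with the recommendation to cover oxacillin-resistant strains empirically.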

Which initial diagnostic tests should be performed?

Patients should have two sets of blood cultures drawn from two separate sites before administration of antibiotics.1 Current guidelines recommend against aspiration of a suspected infected pocket site because the sensitivity for identifying the causal pathogen is low, while the risk of introducing infection into the generator pocket is substantial.1 If the CIED is removed, pocket tissue and lead tips should be sent for Gram stain and culture.1

Do all patients require a transesophageal echocardiogram?

Guidelines recommend a transesophageal echocardiogram (TEE) if suspicion for CIED infection is high based on positive blood cultures, antibiotic initiation prior to culture collection, ongoing systemic illness, or other suggestive signs.1,2 Positive transthoracic echocardiogram findings (for example, a valve vegetation) do not obviate the need for TEE because of the possibility of other relevant complications (including endocarditis and perivalvular extension) for which TEE has a greater sensitivity.1
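The TEE criteria above amount to an "any of these" rule with one notable absence: no finding on transthoracic imaging turns TEE off. The sketch below encodes that logic in Python purely as an illustration, not as a clinical decision tool; the `SuspectedCiedInfection` record and its field names are hypothetical, and treating a positive TTE as one of the "other suggestive signs" is an assumption.

```python
from dataclasses import dataclass


@dataclass
class SuspectedCiedInfection:
    # Hypothetical fields for the criteria listed in the text.
    positive_blood_cultures: bool
    antibiotics_before_cultures: bool
    ongoing_systemic_illness: bool
    other_suggestive_signs: bool
    # Assumption: a positive TTE (e.g. a valve vegetation) counts as a suggestive sign.
    tte_positive: bool = False


def tee_recommended(case: SuspectedCiedInfection) -> bool:
    """True when any listed criterion makes suspicion for CIED infection high."""
    # There is intentionally no branch that skips TEE because TTE was already
    # positive: TEE is more sensitive for complications such as perivalvular
    # extension, so a positive TTE can only add to the case for TEE.
    return (
        case.positive_blood_cultures
        or case.antibiotics_before_cultures
        or case.ongoing_systemic_illness
        or case.other_suggestive_signs
        or case.tte_positive
    )


if __name__ == "__main__":
    print(tee_recommended(SuspectedCiedInfection(False, False, False, False, tte_positive=True)))
```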

What is the approach to antimicrobial therapy?

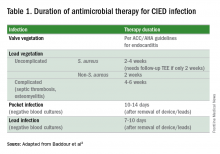

Since most infections involve staphylococcal species, initial empiric antimicrobial therapy should cover both oxacillin-susceptible and oxacillin-resistant strains; intravenous vancomycin is therefore an appropriate initial choice.1 Culture and susceptibility results should then guide definitive therapy.1 Table 1 summarizes strategies for antimicrobial selection and duration.

Should all patients undergo complete device removal?

All patients with CIED infection require complete device removal, even if the infection is suspected to be confined to the generator pocket and blood cultures remain negative.2 Patients with superficial or surgical site infections without CIED infection do not require complete device removal. Rather, those cases can be managed with a 7- to 10-day course of oral antimicrobials.1
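The removal rule depends only on whether the diagnosis is a true CIED infection, not on where the infection appears to be or whether blood cultures are positive. A minimal Python sketch of that branching follows; the `Diagnosis` enum and `device_management` function are hypothetical names, and the sketch is illustrative rather than a clinical tool.

```python
from enum import Enum, auto


class Diagnosis(Enum):
    # Hypothetical categories mirroring the distinction drawn in the text.
    CIED_INFECTION = auto()                          # pocket-limited or systemic
    SUPERFICIAL_OR_SURGICAL_SITE_INFECTION = auto()  # no CIED infection


def device_management(diagnosis: Diagnosis) -> str:
    """Return the management strategy described in the text.

    Pocket-limited involvement and negative blood cultures are deliberately
    not inputs: for a true CIED infection they do not change the answer.
    """
    if diagnosis is Diagnosis.CIED_INFECTION:
        return "complete device removal"
    return "no device removal required; 7- to 10-day course of oral antimicrobials"


if __name__ == "__main__":
    for dx in Diagnosis:
        print(dx.name, "->", device_management(dx))
```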

After device removal, what is the appropriate timing for installing a new device?

The decision to implant a replacement device is often made with input from infectious disease and cardiac electrophysiology experts. The decision must consider both infection and device-related concerns. Importantly, repeat blood cultures must be negative, and any infection in the pocket site should be controlled prior to installing a new device.2
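The two reimplantation prerequisites named above can be written as a checklist that reports what is still outstanding. In this Python sketch, `ReimplantationCheck` and `unmet_prerequisites` are hypothetical names. An empty result means only that these two necessary conditions are met, not that a new device should be implanted; that decision still rests on infectious disease and electrophysiology input and on device-related concerns, which the sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class ReimplantationCheck:
    # Hypothetical fields for the two prerequisites named in the text.
    repeat_blood_cultures_negative: bool
    pocket_infection_controlled: bool


def unmet_prerequisites(check: ReimplantationCheck) -> list[str]:
    """List the necessary (not sufficient) conditions that are not yet met."""
    unmet = []
    if not check.repeat_blood_cultures_negative:
        unmet.append("repeat blood cultures must be negative")
    if not check.pocket_infection_controlled:
        unmet.append("pocket-site infection must be controlled")
    return unmet


if __name__ == "__main__":
    print(unmet_prerequisites(ReimplantationCheck(True, False)))
```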

Should all patients with a CIED receive antimicrobial prophylaxis prior to invasive dental, urologic, or endoscopic procedures?

No. Merely having a CIED is not considered an indication for antimicrobial prophylaxis.2

Back to the case

Two sets of blood cultures are drawn, and vancomycin is started as empiric therapy. The cultures grow CoNS, and susceptibility testing shows oxacillin resistance.

TEE shows a vegetation on one of the leads but no vegetation on any of the heart valves. Cardiac electrophysiology is consulted and performs a percutaneous extraction of the entire device (generator and leads). Starting on the day of extraction, the patient undergoes two weeks of intravenous antimicrobial therapy with vancomycin. The patient, his family, and the electrophysiology team discuss the patient’s wishes, indications, and potential burdens related to device replacement.

He ultimately decides to have a replacement device installed. An infectious disease specialist is consulted to help define appropriate timing of the new device installation procedure.

Bottom line

The patient had a CIED infection, which required TEE, complete extraction of the device, and prolonged antimicrobial therapy.

Dr. Davisson is a primary care physician at Community Health Network in Indianapolis, Ind. Dr. Lockwood is a hospitalist at the Lexington VA Medical Center. Dr. Sweigart is a hospitalist at the University of Kentucky, Lexington, and the Lexington VA Medical Center.

References

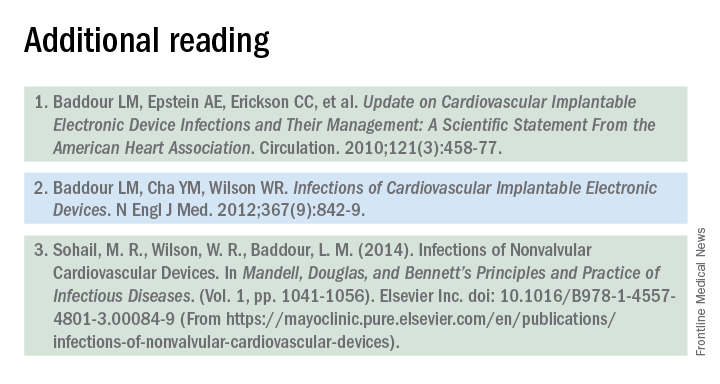

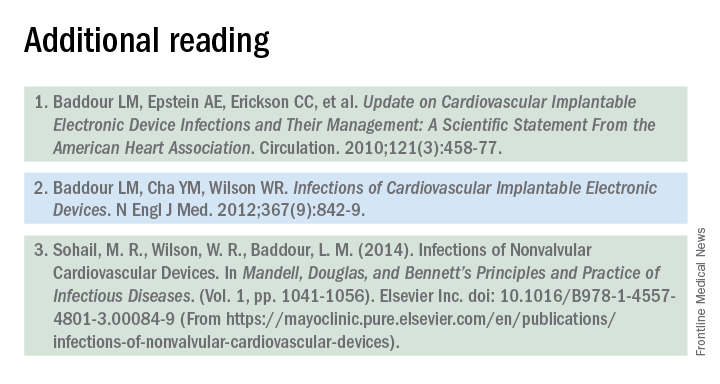

1. Baddour LM, Epstein AE, Erickson CC, et al. Update on Cardiovascular Implantable Electronic Device Infections and Their Management: A Scientific Statement From the American Heart Association. Circulation. 2010;121(3):458-77.

2. Baddour LM, Cha YM, Wilson WR. Infections of Cardiovascular Implantable Electronic Devices. N Engl J Med. 2012;367(9):842-9.

3. Uslan DZ, Sohail MR, St. Sauver JL, et al. Permanent Pacemaker and Implantable Cardioverter Defibrillator Infection: A Population-Based Study. Arch Intern Med. 2007;167(7):669-75.

4. Bongiorni MG, Tascini C, Tagliaferri E, et al. Microbiology of Cardiac Implantable Electronic Device Infections. Europace. 2012;14(9):1334-9.
