Diluted Apple Juice Versus Electrolyte Solution in Gastroenteritis

Clinical Question: Is diluted apple juice inferior to apple-flavored electrolyte oral rehydration solution in children with mild dehydration due to acute gastroenteritis?

Background: In the setting of acute gastroenteritis, teaching has classically been that the simple sugars in juice and sports drinks can worsen diarrhea and, because these drinks are not isotonic, could cause hyponatremia. For this reason, the American Academy of Pediatrics recommends an electrolyte oral rehydration solution for children with dehydration and acute gastroenteritis. These solutions are more expensive and less palatable than juices. The authors sought to determine whether diluted apple juice, compared with electrolyte oral rehydration solution, decreased the need for IV fluids, hospitalization, return visits, prolonged symptoms, or ongoing dehydration in mildly dehydrated children with acute gastroenteritis.

Study Design: Randomized single-blind non-inferiority prospective trial.

Setting: Single large tertiary-care pediatric emergency room.

Synopsis: Over five years, 3,668 patients were identified. Inclusion criteria were age >6 months or 8 kg of weight; Clinical Dehydration Scale score

Patients were challenged with small aliquots of these solutions and given ondansetron if they vomited. Upon discharge, they were sent home with 2 L of their solution. In the control arm, families were instructed to use this solution to make up for any ongoing losses. In the experimental arm, families were instructed to provide whatever fluids they would prefer. Follow-up was via phone, mail, and in-person reassessments. Patients were considered to have failed treatment if they required hospitalization, IV fluids, or a repeat unscheduled visit to a physician or experienced diarrhea lasting more than seven days or worsening dehydration on follow-up.

In the experimental arm, 16.7% of patients failed treatment (95% CI, 12.8%–21.2%) compared with 25% in the control arm (95% CI, 20.4%–30.1%; P < .001 for non-inferiority, P = .006 for superiority). Patients in the experimental arm also required IV fluids significantly less often (2.5% versus 9%), though their rate of hospitalization was not significantly lower. These differences were present primarily in children >24 months old. No difference in the frequency of diarrheal stools was found, and no episodes of significant hyponatremia occurred.
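
As a rough illustration of the effect size, the reported treatment-failure rates can be converted into an absolute risk reduction and a number needed to treat. The sketch below is not taken from the trial report: the function name `risk_summary` is hypothetical, it uses only the rounded percentages quoted above, and rounding the number needed to treat up to the next whole patient is a common convention assumed here.

```python
import math


def risk_summary(control_rate, treatment_rate):
    """Return (absolute risk reduction, relative risk reduction, NNT).

    Rates are proportions between 0 and 1. The NNT is rounded up to the
    next whole patient, which is a convention assumed for this sketch.
    """
    arr = control_rate - treatment_rate
    if arr <= 0:
        raise ValueError("treatment rate must be lower than control rate")
    rrr = arr / control_rate
    nnt = math.ceil(1 / arr)
    return arr, rrr, nnt


# Rounded treatment-failure rates quoted in the synopsis:
# 25% with electrolyte solution (control), 16.7% with diluted apple juice.
arr, rrr, nnt = risk_summary(control_rate=0.25, treatment_rate=0.167)

print(f"Absolute risk reduction: {arr * 100:.1f} percentage points")
print(f"Relative risk reduction: {rrr * 100:.1f}%")
print(f"Number needed to treat:  {nnt}")
```

Because the inputs are rounded percentages, the results are approximate and are not a substitute for the estimates and confidence intervals in the published trial.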

Bottom Line: Giving children with mild dehydration due to acute gastroenteritis diluted apple juice and preferred fluids rather than the currently recommended electrolyte oral rehydration solution leads to decreased treatment failures and decreased need for IV fluids. There was no evidence of worsened diarrhea or significant hyponatremia.

Citation: Freedman SB, Willan AR, Boutis K, Schuh S. Effect of dilute apple juice and preferred fluids vs electrolyte maintenance solution on treatment failure among children with mild gastroenteritis: a randomized clinical trial. JAMA. 2016;315(18):1966-1974. doi:10.1001/jama.2016.5352.

Dr. Stubblefield is a pediatric hospitalist at Nemours/Alfred I. duPont Hospital for Children in Wilmington, Del., and assistant professor of pediatrics at Thomas Jefferson Medical College in Philadelphia.

Underlay mesh for hernia repair yields better postop pain outcomes

Chronic pain that typically follows primary inguinal hernia repair can be significantly reduced by adopting procedures that use underlay mesh rather than overlay mesh, according to a new study published in the Journal of Surgical Research.

“Although chronic pain and discomfort is still one of the greatest problems after inguinal hernia repair due to the fact that it interferes with patients’ quality of life, there are very little data available from previous studies concerning presentation, diagnosis, and modes of treatment of this issue. In particular, the data in the literature concerning the cause of chronic pain are very limited,” wrote the authors, led by Hideyuki Takata, MD, of Nippon Medical School, Tokyo.

Dr. Takata and his coinvestigators looked at patients who underwent a mesh repair operation for primary inguinal hernia at a single institution – Nippon Medical School – between May 2011 and May 2014. All patients were aged 40 years or older, and the overwhelming majority were male. A total of 334 patients were identified, with 378 lesions among them; all patients’ operations were performed via the Lichtenstein (onlay mesh only), Ultrapro Plug (onlay and plug mesh), modified Kugel Patch (onlay and underlay mesh), or laparoscopic transabdominal preperitoneal (underlay mesh only, TAPP) surgical routes.

Forty-four patients had bilateral lesions, 152 had lesions on the right, and 138 on the left. Of the 378 lesions, 76 were repaired with Lichtenstein operations, 85 with Ultrapro Plug, 156 with modified Kugel Patch, and 61 with TAPP (J Surg Res. 2016 Aug 11. doi: 10.1016/j.jss.2016.08.027).

Patients received questionnaires at 2-3 weeks, 3 months, and 6 months after the operation to determine their pain and discomfort levels. Responses for all 378 lesions (100%) were received at the first follow-up, with questionnaires received for 229 lesions (60.5%) at the 3-month follow-up and 249 lesions (65.9%) at the 6-month follow-up. Of the lesions with responses at the 6-month follow-up, 46 had been repaired with Lichtenstein, 59 with Ultrapro Plug, 101 with modified Kugel Patch, and 43 with TAPP.

No patients reported moderate or severe pain while at rest. Mild pain at rest was reported by 11 (4.4%) of all respondents: 0 of those who received Lichtenstein, 4 (6.8%) of those who received Ultrapro Plug, 7 (6.9%) of those who received modified Kugel Patch, and 0 of those who received TAPP (P less than .01).

Pain with movement was reported in 35 (14.1%) of respondents: 6 (13.0%) of those who received Lichtenstein, 7 (11.9%) of those who received Ultrapro Plug, 20 (19.8%) of those who received modified Kugel Patch, and 2 (4.7%) of those who received TAPP (P less than .05). One respondent reported experiencing moderate pain with movement, and that individual received Ultrapro Plug (1.7%). No patients reported experiencing severe pain with movement.
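
The percentages above follow from the event counts and the number of 6-month responses in each group, which can be checked with a few lines of arithmetic. This is only a sketch for verifying the reported figures: the dictionary layout and the helper name `pct` are hypothetical, and the TAPP denominator of 43 is the value consistent with the reported 2 (4.7%) and with the 249 responses overall.

```python
# Six-month responses per procedure: number of lesions with a response (n),
# lesions with mild pain at rest, and lesions with pain on movement.
# The TAPP denominator (43) is inferred from the reported 2 (4.7%).
six_month_pain = {
    "Lichtenstein": {"n": 46, "rest": 0, "movement": 6},
    "Ultrapro Plug": {"n": 59, "rest": 4, "movement": 7},
    "Modified Kugel Patch": {"n": 101, "rest": 7, "movement": 20},
    "TAPP": {"n": 43, "rest": 0, "movement": 2},
}


def pct(events, n):
    """Percentage of events among n responses, rounded to one decimal."""
    return round(100 * events / n, 1)


total_n = sum(group["n"] for group in six_month_pain.values())
total_rest = sum(group["rest"] for group in six_month_pain.values())
total_movement = sum(group["movement"] for group in six_month_pain.values())

for name, group in six_month_pain.items():
    print(
        f"{name}: rest {pct(group['rest'], group['n'])}%, "
        f"movement {pct(group['movement'], group['n'])}%"
    )

print(
    f"All respondents (n={total_n}): rest {pct(total_rest, total_n)}%, "
    f"movement {pct(total_movement, total_n)}%"
)
```

The check reproduces only the descriptive percentages; it does not reproduce the P values, which depend on the statistical test the investigators used.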

“We conclude that the sensory nerves in the inguinal region should be kept away from the mesh to prevent the development of chronic pain and discomfort,” the investigators wrote. “Further study is required to determine the mechanism involved in the generation of chronic pain and discomfort to improve the patient’s quality of life after primary inguinal hernia repair.”

No funding source was disclosed for this study. Dr. Takata and his coauthors did not report any relevant financial disclosures.

Key clinical point:

Major finding: Rates of mild pain at rest and of pain with movement differed significantly among the four surgical procedures and were lowest in patients who received TAPP (0% and 4.7%, respectively).

Data source: Retrospective analysis of 334 primary inguinal hernia patients with 378 lesions undergoing Lichtenstein, Ultrapro Plug, modified Kugel Patch, or TAPP procedures.

Disclosures: The authors did not report any relevant financial disclosures.

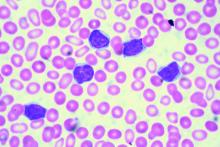

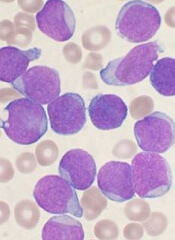

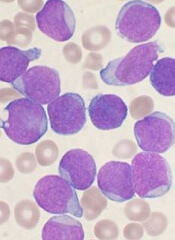

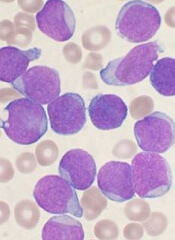

Minimal residual disease status predicts 10-year survival in CLL

Patients who have chronic lymphocytic leukemia and achieve minimal residual disease negativity have a high probability of long-term progression-free and overall survival, irrespective of the type of therapy they receive, reported Marwan Kwok, MD, of Queen Elizabeth Hospital Birmingham (England) and colleagues.

Minimal residual disease (MRD) negativity, defined as fewer than 1 chronic lymphocytic leukemia (CLL) cell detectable per 10,000 leukocytes, has been shown to independently predict clinical outcome in the front-line setting, but the long-term prognostic value of MRD status in other therapeutic settings remains unclear. “Our results demonstrate the long-term benefit of achieving MRD negativity regardless of the therapeutic setting and treatment modality, and support its use as a prognostic marker for long-term PFS (progression-free survival) and as a potential therapeutic goal in CLL,” the authors wrote (Blood. 2016 Oct 3. doi: 10.1182/blood-2016-05-714162).

The researchers retrospectively analyzed, with up to 18 years of follow-up, all 133 CLL patients at St. James’s University Hospital in Leeds, England, who achieved at least a partial response with various therapies between 1996 and 2007 and who received a bone marrow MRD assessment at the end of treatment, according to the international harmonized approach.

MRD negativity correlated with progression-free and overall survival, and the association was independent of the type and line of treatment, as well as adverse cytogenetic findings, the investigators said.

For those who achieved MRD negativity in front-line treatment, the 10-year progression-free survival was 65%, compared with 10% in patients who did not achieve MRD negativity. Overall survival at 10 years was 70% for those who achieved MRD negativity and 30% for MRD-positive patients.

The authors had no relevant financial disclosures.

FROM BLOOD

Key clinical point:

Major finding: Overall survival at 10 years was 70% for those who achieved MRD negativity and 30% for those who did not.

Data source: Retrospective, single-center study of 133 patients with chronic lymphocytic leukemia.

Disclosures: The authors had no relevant financial disclosures.

CD49d trumps novel recurrent mutations for predicting overall survival in CLL

CD49d was a strong predictor of overall survival in a cohort of 778 unselected patients with chronic lymphocytic leukemia, reported Michele Dal Bo, MD, of Centro di Riferimento Oncologico, in Aviano, Italy, and colleagues.

High CD49d expression was an independent predictor of poor overall survival in a multivariate Cox analysis (hazard ratio = 1.88, P less than .0001) and in each category of a risk stratification model. Of the biological prognosticators examined, CD49d was one of the top predictors of overall survival (variable importance = 0.0410), along with immunoglobulin heavy chain variable (IGHV) gene mutational status and TP53 abnormalities. “In this context, TP53 disruption and NOTCH1 mutations retained prognostic relevance, in keeping with their roles in CLL cell immuno-chemoresistance,” the authors wrote.

Almost half of terminal cancer patient hospitalizations deemed avoidable

Nearly half of all intensive care unit hospitalizations among terminal oncology patients in a retrospective case review were identified as potentially avoidable.

The findings suggest a need for strategies to prospectively identify patients at risk for ICU admission and to formulate interventions to improve end-of-life care, wrote Bobby Daly, MD, and colleagues at the University of Chicago. The report was published in the Journal of Oncology Practice.

The investigators reviewed 72 terminal oncology patients who received care in the ambulatory oncology practice of a 600-bed academic medical center and died in an ICU, within a week of transfer, between July 1, 2012, and June 30, 2013. Of these patients, 72% were men, 71% had solid tumor malignancies, and 51% had poor performance status (score of 2 or greater). The majority had multiple encounters with the health care system, but only 25% had a documented advance directive, they found.

During a median ICU length of stay of 4 days, 82% of patients had a central line, 81% were intubated, 44% received a feeding tube, 39% received cardiopulmonary resuscitation, 22% began hemodialysis, and 8% received chemotherapy, while 6% had an inpatient palliative care consult, the researchers noted.

Notably, 47% of the ICU hospitalizations were determined to be potentially avoidable by at least two of three reviewers – an oncologist, an intensivist, and a hospitalist – and agreement between the reviewers was fair (kappa statistic, 0.24). Factors independently associated with avoidable hospitalizations on multivariable analysis were worse performance status prior to admission (median score, 2 vs. 1), worse Charlson comorbidity score (median, 8.5 vs. 7.0), number of hospitalizations in the previous 12 months (median, 2 vs. 1), and fewer days since the last outpatient oncology clinic visit (median 21 vs. 41 days). Having chemotherapy as the most recent treatment and cancer symptoms as the reason for hospitalization were also associated with potentially avoidable hospitalization (J Oncol Pract. 2016 Sep. doi: 10.1200/jop.2016.012823).

The findings are important because increasingly aggressive care at the end of life is part of the reason for the rising cost of cancer care in the United States, which is projected to reach $158 billion by 2020, a 27% increase over 2010 costs, the investigators noted.

“Critically ill patients with cancer constitute a large percentage of ICU admissions, 25% of Medicare cancer beneficiaries receive ICU care in the last month of life, and 8% of patients with cancer die there,” they wrote.

Further, high-intensity end-of-life care has been shown in prior studies to improve neither survival nor quality of life for cancer patients.

In fact, the National Quality Forum “endorses ICU admissions in the last 30 days of life as a marker of poor-quality cancer care,” and other groups consider the proportion of patients with advanced cancer dying in the ICU as a quality-of-care metric, they said.

The current study was designed to explore the characteristics of oncology patients who expire in the ICU and the potential avoidability of their deaths there, and although the findings are limited by the single-center retrospective design and use of “subjective majority-driven medical record review,” they “serve to highlight terminal ICU hospitalization as an area of focus to improve the quality and value of cancer care,” the researchers wrote.

The findings also underscore the need for improved advance care planning, they added.

“Beyond the issues of cost and resource scarcity, these ICU deaths often create a traumatic experience for patients and families,” they wrote.

“Understanding these hospitalizations will contribute to the design of interventions aimed at avoiding unnecessary aggressive end-of-life care.”

Dr. Daly reported a leadership role with Quadrant Holdings and financial relationships with Quadrant Holdings, CVS Health, Johnson & Johnson, McKesson, and Walgreens Boots Alliance. Detailed disclosures for all authors are available with the full text of the article at jop.ascopubs.org.

Nearly half of all intensive care unit hospitalizations among terminal oncology patients in a retrospective case review were identified as potentially avoidable.

The findings suggest a need for strategies to prospectively identify patients at risk for ICU admission and to formulate interventions to improve end-of-life care, wrote Bobby Daly, MD, and colleagues at the University of Chicago. The report was published in the Journal of Oncology Practice.

Of 72 terminal oncology patients who received care in a 600-bed academic medical center’s ambulatory oncology practice and died in an ICU between July 1, 2012, and June 30, 2013, within a week of transfer, 72% were men, 71% had solid tumor malignancies, and 51% had poor performance status (score of 2 or greater). The majority had multiple encounters with the health care system, but only 25% had a documented advance directive, the investigators found.

Key clinical point:

Major finding: Almost half (47%) of the ICU hospitalizations were determined by a majority of reviewers to be potentially avoidable.

Data source: A retrospective review of 72 cases.

Disclosures: Dr. Daly reported a leadership role with Quadrant Holdings and financial relationships with Quadrant Holdings, CVS Health, Johnson & Johnson, McKesson, and Walgreens Boots Alliance. Detailed disclosures for all authors are available with the full text of the article at jop.ascopubs.org.

New Standard Announced for Antimicrobial Stewardship

Decreasing antimicrobial resistance and improving the correct use of antimicrobials is a national priority. According to CDC estimates, at least 2 million illnesses and 23,000 deaths annually are caused by antibiotic-resistant bacteria in the United States alone.

“Antimicrobial resistance is a serious global healthcare issue,” says Kelly Podgorny, DNP, MS, CPHQ, RN, project director at The Joint Commission. “If you review the scientific literature, it will indicate that we’re in crisis mode right now because of this.”

That’s why The Joint Commission recently announced a new Medication Management (MM) standard for hospitals, critical-access hospitals, and nursing care centers. This standard addresses antimicrobial stewardship and becomes effective January 1, 2017.

The Joint Commission is one of many organizations implementing plans to support the national action plan on this issue developed by the White House and signed by President Barack Obama. The purpose of The Joint Commission’s antimicrobial stewardship standard is to improve quality and patient safety and also to support, through its accreditation process, imperatives and actions at a national level.

By addressing antimicrobial stewardship, The Joint Commission’s standard covers medications beyond just antibiotics.

“Most of the organizations are focusing on antibiotics,” Podgorny says. “We broadened our perspective. The World Health Organization states that antimicrobial resistance threatens the effective prevention and treatment of an ever-increasing range of infections caused by bacteria, which would be antibiotics, but also includes parasites, viruses, and fungi.”

She emphasizes that hospitals need to have an effective antimicrobial stewardship program supported by hospital leadership. In fact, in The Joint Commission’s standard, the first element of performance requires leadership to establish antimicrobial stewardship as an organizational priority.

For hospitalists, antimicrobial stewardship should be a major issue in their daily work lives.

“The CDC states that studies indicate that 30–50% of antibiotics, and we’re just talking about antibiotics here, prescribed in hospitals are unnecessary or inappropriate,” Podgorny says.

References

1. Centers for Disease Control and Prevention. Antibiotic Resistance Threats in the United States, 2012.

2. The Joint Commission. New Antimicrobial Stewardship Standard. Accessed September 25, 2016.

Quick Byte

Improving the Bundled Payment Model

Researchers took national Medicare fee-for-service claims for the period 2011–2012 and evaluated how 30- and 90-day episode-based spending related to patient satisfaction and surgical mortality. Results showed patients who had major surgery at high-quality hospitals cost Medicare less than patients at low-quality hospitals. Post-acute care accounted for 59.5% of the difference in 30-day episode spending. Researchers concluded that efforts to increase value with bundled payment should pay attention to improving the care at low-quality hospitals and reducing unnecessary post-acute care.

Reference

- Tsai TC, Greaves F, Zheng J, Orav EJ, Zinner MJ, Jha AK. Better patient care at high-quality hospitals may save Medicare money and bolster episode-based payment models. Health Aff (Millwood). 2016;35(9):1681-1689.

Device could aid treatment decisions in ALL, other cancers

A new device might help physicians choose the optimal treatment for cancer patients, according to research published in Nature Biotechnology.

The device was designed to predict responses to treatment by measuring individual cell growth after drug exposure.

Researchers found they could predict whether a particular drug would kill leukemia or glioblastoma cells, based on how the drug affected the cells’ mass.

“We’ve developed a functional assay that can measure drug response of individual cells while maintaining viability for downstream analysis such as sequencing,” said study author Scott Manalis, PhD, of the Massachusetts Institute of Technology in Cambridge.

He and his colleagues were inspired to develop their assay, in part, by a test that has been used for decades to choose antibiotics to treat bacterial infections. The antibiotic susceptibility test involves simply taking bacteria from a patient, exposing them to a range of antibiotics, and observing whether the bacteria grow or die.

To translate that approach to cancer, the researchers needed a way to rapidly measure cell responses to drugs, and to do it with a limited number of cells.

For the past several years, Dr Manalis’s lab has been developing a device known as a suspended microchannel resonator (SMR).

According to the researchers, the SMR can measure cell masses 10 to 100 times more accurately than any other technique. This allows for the precise calculation of growth rates of single cells over short periods of time.

For this study, Dr Manalis and his colleagues used the SMR to determine whether drug susceptibility could be predicted by measuring cancer cell growth rates following drug exposure.

The team analyzed cells from patients with different subtypes of glioblastoma and B-cell acute lymphocytic leukemia (ALL) that have previously been shown to be either sensitive or resistant to specific therapies—MDM2 inhibitors for glioblastoma and BCR-ABL inhibitors for ALL.

After exposing the cancer cells to the drugs, the researchers waited about 15 hours, then measured the cells’ growth rates. Each cell was measured several times over a period of 15 to 20 minutes, providing enough data for the team to calculate the mass accumulation rate.
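As a rough illustration of how a mass accumulation rate could be derived from repeated measurements of one cell, the sketch below fits a least-squares line to mass versus time and takes the slope. The article does not describe the researchers' actual calculation, so the fitting method, the function name, the units, and the sample values are all assumptions made for this example.

```python
def mass_accumulation_rate(times_min, masses_pg):
    """Slope of the least-squares line through (time, mass) points.

    Hypothetical helper: times in minutes, masses in picograms,
    result in picograms per minute.
    """
    n = len(times_min)
    if n < 2 or n != len(masses_pg):
        raise ValueError("need at least two paired measurements")
    t_mean = sum(times_min) / n
    m_mean = sum(masses_pg) / n
    # Spread of the time points around their mean.
    sxx = sum((t - t_mean) ** 2 for t in times_min)
    if sxx == 0:
        raise ValueError("time points must not all be identical")
    # Co-variation of mass with time.
    sxy = sum((t - t_mean) * (m - m_mean) for t, m in zip(times_min, masses_pg))
    return sxy / sxx


# Invented readings for one cell measured five times over 20 minutes.
times = [0, 5, 10, 15, 20]
growing_cell = [50.0, 50.5, 51.0, 51.5, 52.0]
stalled_cell = [50.0, 50.0, 50.0, 50.0, 50.0]

growing_rate = mass_accumulation_rate(times, growing_cell)
stalled_rate = mass_accumulation_rate(times, stalled_cell)
```

Under these assumptions, a cell that keeps gaining mass yields a positive slope, while a cell whose growth has stalled yields a slope near zero, which mirrors the contrast between resistant and sensitive cells described in the study.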

They found that cells known to be susceptible to a given therapy changed the way they accumulate mass, whereas resistant cells continued to grow as if unaffected.

“We’re able to show that cells we know are sensitive to therapy respond by dramatically reducing their growth rate relative to cells that are resistant,” said study author Mark Stevens, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And because the cells are still alive, we have the opportunity to study the same cells following our measurement.”

The researchers said a major advantage of this technique is that it can be done with very small numbers of cells. In the experiments with ALL cells, the team showed they could get accurate results with a droplet of blood containing about 1000 ALL cells.

Another advantage is the speed at which small changes in cell mass can be measured, said Anthony Letai, MD, PhD, of the Dana-Farber Cancer Institute.

“This system is well suited to making rapid measurements,” said Dr Letai, who was not involved in this study. “I look forward to seeing them apply this to many more cancers and many more drugs.”

The researchers are now using their technique to test cells’ susceptibility to drugs, then isolate the cells and sequence the RNA found in them, revealing which genes are turned on.

“Now that we have a way to identify cells that are not responding to a given therapy, we are excited about isolating these cells and analyzing them to understand mechanisms of resistance,” Dr Manalis said.

In another recent paper published in Nature Biotechnology, the researchers described a higher throughput version of the SMR device that can do in 1 day the same number of measurements that took several months with the device used in this study.

This is an important step toward making the approach suitable for clinical samples, Dr Manalis said.

A new device might help physicians choose the optimal treatment for cancer patients, according to research published in Nature Biotechnology.

The device was designed to predict responses to treatment by measuring individual cell growth after drug exposure.

Researchers found they could predict whether a particular drug would kill leukemia or glioblastoma cells, based on how the drug affected the cells’ mass.

“We’ve developed a functional assay that can measure drug response of individual cells while maintaining viability for downstream analysis such as sequencing,” said study author Scott Manalis, PhD, of the Massachusetts Institute of Technology in Cambridge.

He and his colleagues were inspired to develop their assay, in part, by a test that has been used for decades to choose antibiotics to treat bacterial infections. The antibiotic susceptibility test involves simply taking bacteria from a patient, exposing them to a range of antibiotics, and observing whether the bacteria grow or die.

To translate that approach to cancer, the researchers needed a way to rapidly measure cell responses to drugs, and to do it with a limited number of cells.

For the past several years, Dr Manalis’s lab has been developing a device known as a suspended microchannel resonator (SMR).

According to the researchers, the SMR can measure cell masses 10 to 100 times more accurately than any other technique. This allows for the precise calculation of growth rates of single cells over short periods of time.

For this study, Dr Manalis and his colleagues used the SMR to determine whether drug susceptibility could be predicted by measuring cancer cell growth rates following drug exposure.

The team analyzed cells from patients with different subtypes of glioblastoma and B-cell acute lymphocytic leukemia (ALL) that have previously been shown to be either sensitive or resistant to specific therapies—MDM2 inhibitors for glioblastoma and BCR-ABL inhibitors for ALL.

After exposing the cancer cells to the drugs, the researchers waited about 15 hours, then measured the cell’s growth rates. Each cell was measured several times over a period of 15 to 20 minutes, providing enough data for the team to calculate the mass accumulation rate.

They found that cells known to be susceptible to a given therapy changed the way they accumulate mass, whereas resistant cells continued to grow as if unaffected.

“We’re able to show that cells we know are sensitive to therapy respond by dramatically reducing their growth rate relative to cells that are resistant,” said study author Mark Stevens, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And because the cells are still alive, we have the opportunity to study the same cells following our measurement.”

The researchers said a major advantage of this technique is that it can be done with very small numbers of cells. In the experiments with ALL cells, the team showed they could get accurate results with a droplet of blood containing about 1000 ALL cells.

Another advantage is the speed at which small changes in cell mass can be measured, said Anthony Letai, MD, PhD, of the Dana-Farber Cancer Institute.

“This system is well suited to making rapid measurements,” said Dr Letai, who was not involved in this study. “I look forward to seeing them apply this to many more cancers and many more drugs.”

The researchers are now using their technique to test cells’ susceptibility to drugs, then isolate the cells and sequence the RNA found in them, revealing which genes are turned on.

“Now that we have a way to identify cells that are not responding to a given therapy, we are excited about isolating these cells and analyzing them to understand mechanisms of resistance,” Dr Manalis said.

In another recent paper published in Nature Biotechnology, the researchers described a higher throughput version of the SMR device that can do in 1 day the same number of measurements that took several months with the device used in this study.

This is an important step toward making the approach suitable for clinical samples, Dr Manalis said. ![]()

A new device might help physicians choose the optimal treatment for cancer patients, according to research published in Nature Biotechnology.

The device was designed to predict responses to treatment by measuring individual cell growth after drug exposure.

Researchers found they could predict whether a particular drug would kill leukemia or glioblastoma cells, based on how the drug affected the cells’ mass.

“We’ve developed a functional assay that can measure drug response of individual cells while maintaining viability for downstream analysis such as sequencing,” said study author Scott Manalis, PhD, of the Massachusetts Institute of Technology in Cambridge.

He and his colleagues were inspired to develop their assay, in part, by a test that has been used for decades to choose antibiotics to treat bacterial infections. The antibiotic susceptibility test involves simply taking bacteria from a patient, exposing them to a range of antibiotics, and observing whether the bacteria grow or die.

To translate that approach to cancer, the researchers needed a way to rapidly measure cell responses to drugs, and to do it with a limited number of cells.

For the past several years, Dr Manalis’s lab has been developing a device known as a suspended microchannel resonator (SMR).

According to the researchers, the SMR can measure cell masses 10 to 100 times more accurately than any other technique. This allows for the precise calculation of growth rates of single cells over short periods of time.

For this study, Dr Manalis and his colleagues used the SMR to determine whether drug susceptibility could be predicted by measuring cancer cell growth rates following drug exposure.

The team analyzed cells from patients with different subtypes of glioblastoma and B-cell acute lymphocytic leukemia (ALL) that have previously been shown to be either sensitive or resistant to specific therapies—MDM2 inhibitors for glioblastoma and BCR-ABL inhibitors for ALL.

After exposing the cancer cells to the drugs, the researchers waited about 15 hours, then measured the cell’s growth rates. Each cell was measured several times over a period of 15 to 20 minutes, providing enough data for the team to calculate the mass accumulation rate.

They found that cells known to be susceptible to a given therapy changed the way they accumulate mass, whereas resistant cells continued to grow as if unaffected.

“We’re able to show that cells we know are sensitive to therapy respond by dramatically reducing their growth rate relative to cells that are resistant,” said study author Mark Stevens, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And because the cells are still alive, we have the opportunity to study the same cells following our measurement.”

The researchers said a major advantage of this technique is that it can be done with very small numbers of cells. In the experiments with ALL cells, the team showed they could get accurate results with a droplet of blood containing about 1000 ALL cells.

Another advantage is the speed at which small changes in cell mass can be measured, said Anthony Letai, MD, PhD, of the Dana-Farber Cancer Institute.

“This system is well suited to making rapid measurements,” said Dr Letai, who was not involved in this study. “I look forward to seeing them apply this to many more cancers and many more drugs.”

The researchers are now using their technique to test cells’ susceptibility to drugs, then isolate the cells and sequence the RNA found in them, revealing which genes are turned on.

“Now that we have a way to identify cells that are not responding to a given therapy, we are excited about isolating these cells and analyzing them to understand mechanisms of resistance,” Dr Manalis said.

In another recent paper published in Nature Biotechnology, the researchers described a higher throughput version of the SMR device that can do in 1 day the same number of measurements that took several months with the device used in this study.

This is an important step toward making the approach suitable for clinical samples, Dr Manalis said.

Holographic imaging and deep learning diagnose malaria

Image by Peter H. Seeberger

Scientists say they have devised a technique that can be used to diagnose malaria quickly and with clinically relevant accuracy.

The technique involves using computer deep learning and light-based, holographic scans to spot malaria-infected cells from an untouched blood sample without any help from a human.

The scientists believe this could form the basis of a fast, reliable malaria test that could be given by almost anyone, anywhere in the field.

The team described the method in PLOS ONE.

“With this technique, the path is there to be able to process thousands of cells per minute,” said study author Adam Wax, PhD, of Duke University in Durham, North Carolina.

“That’s a huge improvement to the 40 minutes it currently takes a field technician to stain, prepare, and read a slide to personally look for infection.”

The new technique is based on a technology called quantitative phase spectroscopy. As a laser sweeps through the visible spectrum of light, sensors capture how each discrete light frequency interacts with a sample of blood.

The resulting data form a holographic image that provides a wide array of information that can indicate a malaria infection.

“We identified 23 parameters that are statistically significant for spotting malaria,” said study author Han Sang Park, a doctoral student in Dr Wax’s lab.

For example, as the disease progresses, red blood cells decrease in volume, lose hemoglobin, and deform as the parasite within grows larger. This affects features such as cell volume, perimeter, shape, and center of mass.

“However, none of the parameters were reliable more than 90% of the time on their own,” Park said. “So we decided to use them all.”

“To be adopted, any new diagnostic device has to be just as reliable as a trained field worker with a microscope,” Dr Wax said. “Otherwise, even with a 90% success rate, you’d still miss more than 20 million [malaria] cases a year.”
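The arithmetic behind Dr Wax's remark can be sketched as follows. This assumes that "success rate" means the fraction of true malaria cases a test detects, which is an interpretation rather than something the article states; the function name is hypothetical.

```python
def implied_annual_cases(missed_cases: float, success_rate: float) -> float:
    """Total cases implied when a test with this success rate misses `missed_cases`."""
    if not 0 <= success_rate < 1:
        raise ValueError("success_rate must be in [0, 1)")
    # A test that detects 90% of cases misses the remaining 10%.
    return missed_cases / (1 - success_rate)


# 20 million missed cases at a 90% success rate implies roughly 200 million cases.
implied = implied_annual_cases(20_000_000, 0.90)
```

Read this way, "more than 20 million" missed cases corresponds to an annual burden of more than about 200 million cases.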

To get a more accurate reading, Dr Wax and his colleagues turned to deep learning—a method by which computers teach themselves how to distinguish between different objects.

Feeding data on healthy and diseased cells into a computer enabled the deep learning program to determine which sets of measurements at which thresholds most clearly distinguished healthy cells from diseased cells.
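To make the idea of finding "which thresholds" separate healthy from diseased cells concrete, here is a minimal sketch that searches for the best single cut-off on one measurement. It is not the deep learning method the team used, which combined all 23 parameters. It is a much simpler stand-in, and the function name and cell volumes below are invented for illustration.

```python
from typing import Sequence, Tuple


def best_threshold(values: Sequence[float], infected: Sequence[bool]) -> Tuple[float, bool, float]:
    """Return (threshold, flag_below, accuracy) for the best single cut-off.

    flag_below=True means cells with value <= threshold are called infected;
    flag_below=False means cells with value > threshold are called infected.
    """
    if len(values) != len(infected) or not values:
        raise ValueError("need equally sized, non-empty inputs")
    n = len(values)
    best = (values[0], True, 0.0)
    for cut in sorted(set(values)):
        # Correct calls when "value <= cut" is taken to mean infected.
        below_hits = sum((v <= cut) == y for v, y in zip(values, infected))
        # Flipping the rule turns every correct call into an error and vice versa.
        for flag_below, hits in ((True, below_hits), (False, n - below_hits)):
            accuracy = hits / n
            if accuracy > best[2]:
                best = (cut, flag_below, accuracy)
    return best


# Hypothetical cell volumes (arbitrary units): four healthy cells, then four infected.
volumes = [90.0, 92.0, 95.0, 88.0, 70.0, 75.0, 80.0, 91.0]
labels = [False, False, False, False, True, True, True, True]
threshold, flag_below, accuracy = best_threshold(volumes, labels)
# threshold == 80.0, flag_below is True, accuracy == 0.875
```

Even on this toy data the best single cut-off misclassifies one cell, which mirrors Park's point that no parameter was reliable enough on its own.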

When the scientists put the resulting algorithm to the test with hundreds of cells, the algorithm was able to correctly spot malaria more than 95% of the time—a number the team believes will increase as more cells are used to train the program.

The team noted that, because the technique breaks data-rich holograms down to just 23 numbers, tests can be easily transmitted in bulk. They said this is important for locations that often do not have reliable, fast internet connections.

Dr Wax and his colleagues are now looking to develop the technology into a diagnostic device through a startup company called M2 Photonics Innovations. They hope to show that a device based on this technology would be accurate and cost-efficient enough to be useful in the field.

Dr Wax has also received funding to begin exploring the use of the technique for spotting cancerous cells in blood samples.

‘Fresher’ RBCs no safer than standard RBCs, AABB says

Photo courtesy of UAB Hospital

AABB has released new guidelines on when to perform red blood cell (RBC) transfusions and the optimal duration of RBC storage.

The guidelines state that a restrictive transfusion threshold—waiting to transfuse until a patient’s hemoglobin level is 7-8 g/dL—is safe in most clinical settings.

And, for most patients, “fresh” RBCs—stored for less than 10 days—are no safer than standard-issue RBCs—stored for up to 42 days.

“One of the biggest controversies concerning transfusion therapy is whether older blood is harmful compared to fresher blood,” said guideline author Aaron Tobian, MD, PhD, of the Johns Hopkins University School of Medicine in Baltimore, Maryland.

“Now, we have information that can accurately inform guidelines about red blood cell storage duration. If data suggest no harm from the use of standard-issue blood and fresher blood would only constrain the use of a limited resource, continuing with standard practice of using older blood is appropriate. The newly released guidelines now clearly inform the community.”

The guidelines were published in JAMA alongside a related editorial.

The recommendations in the guidelines are based on an analysis of randomized clinical trials in which researchers evaluated hemoglobin thresholds for RBC transfusion (trials conducted from 1950 through May 2016) and RBC storage duration (trials conducted from 1948 through May 2016).

For transfusion thresholds, there were 31 trials including 12,587 subjects. The results of these trials suggested that restrictive transfusion thresholds (transfusing when the hemoglobin level is 7-8 g/dL) were not associated with higher rates of adverse clinical outcomes when compared to liberal thresholds (transfusing when the hemoglobin level is 9-10 g/dL).

For RBC storage duration, there were 13 trials including 5515 subjects. The results suggested that transfusing fresher blood did not improve clinical outcomes.

Transfusion threshold

The guideline authors said it is good practice, when making transfusion decisions, to consider the patient’s hemoglobin level, the overall clinical context, patient preference, and alternative therapies.

However, in general, a hemoglobin level of 7 g/dL should serve as the threshold for transfusing adult patients who are hemodynamically stable, even if they are in critical care. This is a strong recommendation based on moderate-quality evidence.

“While the recommended threshold of 7 g/dL is consistent with previous AABB guidelines, the strength of the new recommendation reflects the quality and quantity of the new data, much of which was generated since 2012,” said guideline author Jeffrey Carson, MD, of Robert Wood Johnson University Hospital in New Brunswick, New Jersey.

“Clinically, these results show that no harm will come from waiting to transfuse a patient until the hemoglobin level reaches a lower point. The restrictive approach is associated with reductions in blood use, blood conservation, and lower expenses.”

The guidelines also state that, for patients with pre-existing cardiovascular disease and those undergoing cardiac or orthopedic surgery, the threshold should be 8 g/dL. This is a strong recommendation based on moderate-quality evidence.

Neither of the aforementioned recommendations applies to patients with acute coronary syndrome, severe thrombocytopenia, or chronic transfusion-dependent anemia.
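The threshold recommendations described above can be summarized as a small lookup. This is only a sketch of the rules as reported in this article, not a clinical tool. The function and parameter names are hypothetical, and it leaves out the clinical context, patient preference, and alternative therapies that the guideline authors say should also inform the decision.

```python
from typing import Optional


def suggested_threshold_g_dl(
    hemodynamically_stable_adult: bool,
    cardiovascular_disease: bool = False,
    cardiac_or_orthopedic_surgery: bool = False,
    excluded_condition: bool = False,
) -> Optional[float]:
    """Return the hemoglobin threshold (g/dL) described in the article, or None.

    excluded_condition covers acute coronary syndrome, severe thrombocytopenia,
    and chronic transfusion-dependent anemia, where neither recommendation applies.
    """
    if excluded_condition:
        return None
    if cardiovascular_disease or cardiac_or_orthopedic_surgery:
        return 8.0
    if hemodynamically_stable_adult:
        return 7.0
    # The article gives no recommendation for other patients.
    return None


# A stable adult in critical care with no other listed factors: 7 g/dL.
general = suggested_threshold_g_dl(hemodynamically_stable_adult=True)
# A patient undergoing orthopedic surgery: 8 g/dL.
surgical = suggested_threshold_g_dl(True, cardiac_or_orthopedic_surgery=True)
```

Returning `None` rather than a default number keeps the excluded groups from being silently assigned a threshold the guidelines do not give them.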

Dr Carson said additional trials are needed to determine whether these patients benefit from transfusion at higher hemoglobin levels.

“We are about to embark on a large, international clinical trial supported by the NIH [National Institutes of Health] that will provide the evidence needed to determine the best course of action for patients who have had a heart attack,” he said.

Dr Carson and his colleagues also noted that, although the recommendations are based on the available evidence, the hemoglobin transfusion thresholds assessed may not be optimal. And the use of hemoglobin transfusion thresholds may be an imperfect surrogate for oxygen delivery.

Storage duration